Abstract

Network theory has often disregarded many-body relationships, solely focusing on pairwise interactions: neglecting them, however, can lead to misleading representations of complex systems. Hypergraphs represent a suitable framework for describing polyadic interactions. Here, we leverage the representation of hypergraphs based on the incidence matrix for extending the entropy-based approach to higher-order structures: in analogy with the Exponential Random Graphs, we introduce the Exponential Random Hypergraphs (ERHs). After exploring the asymptotic behaviour of thresholds generalising the percolation one, we apply ERHs to study real-world data. First, we generalise key network metrics to hypergraphs; then, we compute their expected value and compare it with the empirical one, in order to detect deviations from random behaviours. Our method is analytically tractable, scalable and capable of revealing structural patterns of real-world hypergraphs that differ significantly from those emerging as a consequence of simpler constraints.

Similar content being viewed by others

Introduction

Networks provide a powerful language to model interacting systems1,2. Within such a framework, the basic unit of interaction, i.e., the edge, involves two nodes, and the complexity of the structure as a whole arises from the combination of these units. Despite its many successes, network science disregards certain aspects of interacting systems, notably the possibility that more-than-two constituent units could interact at a time3. Yet, it has been increasingly shown that, for a variety of systems, interactions cannot be always decomposed into a pairwise fashion and that neglecting higher-order ones can lead to an incomplete, if not misleading, representation of them3,4,5: examples include chemical reactions involving several compounds, coordination activities within small teams of co-working people and brain activities mediated by groups of neurons. Generally speaking, thus, modelling the joint coordination of multiple entities calls for a generalisation of the traditional edge-centred framework.

While approaches focusing on the so-called simplicial complexes have been proposed6,7, an increasingly popular alternative to support a science of many-body interactions is provided by hypergraphs, as these mathematical objects allow nodes to interact in groups without posing restrictions, such as the ‘hierarchical’’ ones characterising the former ones8, which, in fact, include all the subsets of a given simplex6.

Several contributions to the definition of analytical tools for their study have already appeared9,10,11,12: while some pertain to the purely mathematical literature and have considered probabilistic hypergraphs with the aim of studying properties such as the existence of cycles, cliques, etc.13,14,15, others have adopted approaches rooted into statistical physics. Among the latter ones, some have proposed microcanonical approaches9,11 while others have focused on canonical ones10,12,16.

The present contribution aims at extending the class of entropy-based benchmarks17,18,19,20 to hypergraphs while providing a coherent framework to formally derive the canonical approaches that have been proposed so far. These models work by preserving a given set of quantities while randomising everything else, hence destroying all possible correlations between structural properties except for those that are genuinely embodied into the constraints themselves20,21,22. The versatility of such an approach allows it to be employed either in presence of full information (to quantify the level of self-organisation of a given configuration by identifying the patterns that are incompatible with simpler, structural constraints23,24,25,26,27) or in presence of partial information (to infer the missing portion of a given configuration28).

Our strategy for defining null models for hypergraphs is based on the randomisation of their incidence matrix, i.e., the (generally, rectangular) table contains information about the connectivity of nodes (the set of hyperedges they belong to) and the connectivity of hyperedges (the set of nodes they cluster). We will explicitly derive two members of this novel class of models hereby named Exponential Random Hypergraphs (ERH), i.e., the Random Hypergraph Model (RHM) (RHM, generalising the Erdös-Rényi Model) and the Hypergraph Configuration Model (HCM) (HCM, generalising the Configuration Model), and provide an analytical characterisation of their behaviour. To this aim, we will exploit the formal equivalence between the incidence matrix of a hypergraph and the biadjacency matrix of a bipartite graph10,12. Afterwards, we will employ the HCM to assess the statistical significance of a number of patterns characterising several real-world hypergraphs.

Methods

Formalism and basic quantities

A hypergraph can be defined as a pair \(H({{\mathcal{V}}},{{{\mathcal{E}}}}_{H})\) where \({{\mathcal{V}}}\) is the set of vertices and \({{{\mathcal{E}}}}_{H}\) is the set of hyperedges. Moving from the observation that the edge set \({{{\mathcal{E}}}}_{G}\) of a traditional, binary, undirected graph \(G({{\mathcal{V}}},{{{\mathcal{E}}}}_{G})\) is a subset of the power set of \({{\mathcal{V}}}\), several definitions of the hyperedge set \({{{\mathcal{E}}}}_{H}\) have been provided: the two most popular ones are those proposed in29,30, where hyperedges tie one or more vertices, and in31, where hyperedges are allowed to be empty sets as well. Hereby, we adopt the definition according to which \({{{\mathcal{E}}}}_{H}\) is a multiset of the power set of \({{\mathcal{V}}}\): since the concept of ‘multiset’’ extends the concept of ‘set’’, allowing for multiple instances of (each of) its elements, our choice implies that we are considering non-simple hypergraphs, admitting loops and parallel edges (i.e., hyperedges involving exactly the same nodes) of any size, including 0 (corresponding to empty hyperedges) and \(| {{\mathcal{V}}}| \) (corresponding to hyperedges clustering all vertices together).

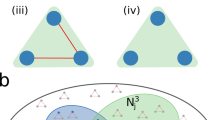

As for traditional graphs, an algebraic representation of hypergraphs can be devised as well. In analogy with the traditional case, we call the cardinality of the set of nodes \(| {{\mathcal{V}}}| \equiv N\) and the cardinality of the set of hyperedges \(| {{{\mathcal{E}}}}_{H}| \equiv L\): then, we consider the N × L table known as incidence matrix, each row of which corresponds to a node and each column of which corresponds to a hyperedge. If we indicate the incidence matrix with I, its generic entry Iiα will be 1 if vertex i belongs to hyperedge α and 0 otherwise. Notice that the number of 1s along each row can vary between 0 and L, the former case indicating an isolated node and the latter one indicating a node that belongs to each hyperedge; similarly, the number of 1s along each column can vary between 0 and N, the former case indicating an empty hyperedge and the latter one indicating a hyperedge that includes all nodes. As explicitly noticed elsewhere9,10,12, representing a hypergraph via its incidence matrix is equivalent to considering the bipartite graph defined by the sets \({{\mathcal{V}}}\) and \({{{\mathcal{E}}}}_{H}\) - more formally, the function that assigns each hypergraph to the bipartite graph associated with it is a bijection when both nodes and hyperedges are uniquely labelled9. For instance, the incidence matrix I describing the binary, undirected hypergraph shown in Fig. 1 is the following:

Black dots represent nodes, while the coloured shapes represent hyperedges. The incidence matrix describing the present hypergraph is defined by Eq. (1).

Once the incidence matrix has been defined, several quantities needed for the description of hypergraphs can be defined quite straightforwardly: for example, the ‘degree of node i’’ (hereby, degree) reads

and counts the number of hyperedges that are incident to it; analogously, the ‘degree of hyperedge α’‘ (hereby, hyperdegree) reads

and counts the number of nodes it clusters. Both the sum of degrees and that of hyperdegrees equal the total number of 1s, i.e., \({\sum }_{i=1}^{N}{k}_{i}={\sum }_{i=1}^{N}{\sum }_{\alpha =1}^{L}{I}_{i\alpha }={\sum }_{\alpha =1}^{L}{\sum }_{i=1}^{N}{I}_{i\alpha }={\sum }_{\alpha =1}^{L}{h}_{\alpha }\equiv T\). Importantly, a node degree no longer coincides with the number of its neighbours: instead, it matches the number of hyperedges it belongs to; a hyperdegree, instead, provides information about the hyperedge size. Analogously, T paves the way for the alternative definition of ‘density of connections’’ reading ρ = T/NL ≡ h/N, i.e., the ratio between the (average) number of nodes each hyperedge clusters and the total number of nodes.

Binary, undirected hypergraphs randomisation

An early attempt to define randomisation algorithms for hypergraphs within a framework closely resembling ours can be found in ref. 32. Its authors, however, have just considered hyperedges that are incident to triples of nodes—a framework that has been, later, applied to the study of the World Trade Network10.

Considering the incidence matrix has two clear advantages over the tensor-based representation employed in10,32: i) generality, because the incidence matrix allows hyperedges of any size to be handled at once; ii) compactness, because the order of the tensor I never exceeds two, hence allowing any hypergraph to be represented as a traditional, bipartite graph.

In order to extend the rich set of null models induced by graph-specific global and local constraints to hypergraphs, we first need to identify the quantities that can play this role within the novel setting. In what follows, we will consider the total number of 1s, i.e., T, the degree and the hyperdegree sequences, i.e., \({\{{k}_{i}\}}_{i = 1}^{N}\) and \({\{{h}_{\alpha }\}}_{\alpha = 1}^{L}\)—either separately or in a joint fashion; moreover, we will distinguish between microcanonical and canonical randomisation techniques.

Homogeneous benchmarks: the RHM

Microcanonical formulation

The model is defined by just one, global constraint, which, in our case, reads

Its microcanonical version extends the model by Erdös and Rényi33—also known as Random Graph Model—to hypergraphs and prescribes to count the number of incidence matrices that are compatible with a given, total number of 1s, say T*: they are

with V ≡ NL being the total number of entries of the incidence matrix I. Once the total number of configurations composing the microcanonical ensemble has been determined, a procedure to generate them is needed: in the case of the RHM, it simply boils down to reshuffling the entries of the incidence matrix, a procedure ensuring that the total number of 1s is kept fixed while any, other correlation is destroyed.

Canonical formulation

The canonical version of the RHM, instead, extends the model by Gilbert34 and rests upon the constrained maximisation of Shannon entropy, i.e.

where \(S[P]=-{\sum}_{{{\bf{I}}}\in {{\mathscr{I}}}}P({{\bf{I}}})\ln P({{\bf{I}}})\), C0 ≡ 〈C0〉 ≡ 1 sums up the normalisation condition and the remaining M − 1 constraints represent proper topological properties. The sum defining Shannon entropy runs over the set \({{\mathscr{I}}}\) of incidence matrices described in the introductory paragraph and known as canonical ensemble. Such an optimisation procedure defines the ERH framework, described by the expression

In the simplest case, the only global constraint is represented by T and leads to the expression

that can be rewritten as

with e−θ ≡ x and p ≡ x/(1 + x). The canonical ensemble, now, includes all N × L, rectangular matrices whose number of entries equal to 1 ranges from 0 to NL. According to such a model, the entries of the incidence matrix are i.i.d. Bernoulli random variables, i.e., Iiα ~ Ber(p), ∀ i, α; as a consequence, the total number of 1s, the degrees and the hyperdegrees obey Binomial distributions, being all defined as sums of i.i.d. Bernoulli random variables: specifically, T ~ Bin(NL, p), ki ~ Bin(L, p), ∀ i and hα ~ Bin(N, p), ∀ α, in turn, implying that 〈T〉RHM = NLp, \({\langle {k}_{i}\rangle }_{{{\rm{RHM}}}}=Lp\), ∀ i and \({\langle {h}_{\alpha }\rangle }_{{{\rm{RHM}}}}=Np\), ∀ α.

Parameter estimation

In order to ensure that 〈T〉RHM = T*, parameters have to be tuned opportunistically. To this aim, the likelihood maximisation principle can be invoked19: it prescribes to maximise the function \({{\mathcal{L}}}(\theta )\equiv \ln P({{{\bf{I}}}}^{* }| \theta )\) with respect to the unknown parameter that defines it. Such a recipe leads us to find

with T* = T(I*) indicating the empirical value of the constraint defining the RHM.

The RHM (also considered in12, although without providing any derivation from first principles, and in16, although without providing any recipe for the estimation of its parameter) is formally equivalent to the Bipartite Random Graph Model35. Such an identification is guaranteed by our focus on non-simple hypergraphs.

Estimation of the number of empty hyperedges

Non-simple hypergraphs admit the presence of empty as well as parallel hyperedges. As this type of structures is associated with configurations that may be regarded as problematic (since not observed in empirical data), we evaluate how frequently they appear in the ensembles induced by our benchmarks. Let us denote the number of empty hyperedges, i.e., the number of hyperedges whose hyperdegree equals zero, with \({N}_{{{\emptyset}}}\): since the hyperdegrees are i.i.d. Binomial random variables, \({N}_{{{\emptyset}}} \sim \,{\mbox{Bin}}\,(L,{p}_{{{\emptyset}}})\) where

is the probability for the generic hyperedge to be empty or, equivalently, for its hyperdegree to equal zero; the expected number of empty hyperedges reads

Let us, now, inspect the behaviour of \({p}_{{{\emptyset}}}\) the ensemble induced by the RHM as the density of 1s in the incidence matrix, i.e., p = T/NL, varies. The two regimes of interest are the dense one, defined by T → NL, and the sparse one, defined by T → 0. In the dense case, one finds

a relationship inducing \(\langle {N}_{{{\emptyset}}}\rangle {\to }^{T\to NL}0\): in words, the probability of observing empty hyperedges progressively vanishes as the density of 1s increases. Consistently, in the sparse case, one finds

a relationship inducing \(\langle {N}_{{{\emptyset}}}\rangle {\to }^{T\to 0}L\): in words, the probability of observing empty hyperedges progressively rises as the density of 1s decreases.

To evaluate the density of 1s in the incidence matrix in correspondence of which the transition from the sparse to the dense regime happens, let us consider the case N ≫ 1: more formally, this amounts to consider the asymptotic framework defined by letting N → + ∞ while posing T = O(L) - equivalently, defined by posing p = O(1/N). Since p = T/NL and h = T/L remain finite, the probability for the generic hyperedge to be empty obeys the relationship

i.e., remains finite as well: consistently, the expected number of empty hyperedges becomes

upon imposing Le−h≤1, i.e., that the expected number of empty hyperedges is at most 1, one derives what may be called filling threshold, corresponding to \({h}_{f}^{\,{\mbox{RHM}}\,}\equiv \ln L\). In words, a value

ensures that the expected number of empty hyperedges in our random hypergraph is strictly less than one. As a last observation, let us notice that evaluating \({p}_{{{\emptyset}}}\) in correspondence with the filling threshold returns the value 1/L.

A comparison with simple graphs: estimating the number of isolated nodes

A similar line of reasoning can be repeated for traditional graphs, the aim being, now, that of estimating N0, i.e., the number of isolated nodes. To this aim, let us consider the asymptotic framework defined by letting N → + ∞ while posing L = O(N) - equivalently, defined by posing q = O(1/N). Since q = 2L/N(N − 1) and k ≡ 2L/(N − 1) remains finite, the probability for the generic node i to be isolated obeys the relationship

i.e., remains finite as well: this, in turn, implies that the expected number of isolated nodes 〈N0〉 = Nq0 obeys the relationship

upon imposing Ne−k≤1, i.e., that the expected number of isolated nodes is at most 1, one derives the connectivity threshold, corresponding to \({k}_{c}^{\,{\mbox{RGM}}\,}\equiv \ln N\). In words, a value

ensures that the expected number of isolated nodes in our random graph is strictly less than one1. As a last observation, let us notice that evaluating q0 in correspondence with the connectivity threshold returns the value 1/N.

We also note that N is the only quantity playing a relevant role in the case of graphs, while an interplay between L and N can be observed in the case of hypergraphs: in both cases, however, a condition on connectivity is present, driven by the request that the objects under investigations (nodes on the one hand and hyperedges on the other) have a non-zero number of connections.

Estimation of the number of parallel hyperedges

Let us now move to considering the issue of parallel hyperedges. By definition, two, parallel hyperedges α and β are characterised by identical columns: hence, their Hamming distance, defined as the number of positions at which the corresponding symbols are different, is zero. More formally,

a sum whose generic addendum is 1 in just two cases: either Iiα = 1 and Iiβ = 0, or Iiα = 0 and Iiβ = 1. Since dαβ ~ Bin(N, 2p(1 − p)), one finds that

and that

∀ α ≠ β. Since p(1 − p) = (T/NL)(1 − T/NL), one finds that

i.e., p(1 − p) vanishes in both regimes, a result further implying that both \(P({d}_{\alpha \beta }=0){\to }^{T\to NL}1\) and \(P({d}_{\alpha \beta }=0){\to }^{T\to 0}1\) and that both \(\langle {d}_{\alpha \beta }\rangle {\to }^{T\to NL}0\) and \(\langle {d}_{\alpha \beta }\rangle {\to }^{T\to 0}0\): in words, the probability of observing parallel hyperedges progressively rises both as a consequence of having many 1s and as a consequence of having few 1s. Analogously, for the expected Hamming distance.

Let us, now, evaluate the expected Hamming distance between any two hyperedges α and β within the asymptotic framework defined by letting N → + ∞ while posing T = O(L) - equivalently, defined by posing p = O(1/N). Since p = T/NL and h ≡ T/L remains finite, one finds that

upon imposing 2h≥1, i.e., that the expected Hamming distance between any two hyperedges α and β is at least 1, one derives what may be called resolution threshold, corresponding to \({h}_{r}^{\,{\mbox{RHM}}\,}\equiv 1/2\). In words, a value \(p > {p}_{r}^{\,{\mbox{RHM}}}={h}_{r}^{{\mbox{RHM}}\,}/N=1/2N\) ensures that, on average, any two hyperedges α and β differ by at least one element.

To derive a global condition on the total number of parallel hyperedges, note that, although the overlaps between pairs of hyperedges cannot be treated as i.i.d. random variables, the expected number of parallel hyperedges can still be computed explicitly. Upon posing \({p}_{/\!/} \equiv {p}_{/\!/}^{\alpha \beta }\), it reads

Considering that \({p}_{/\!/ }{\to }^{N\to +\infty }{e}^{-2h}\) and imposing 〈N//〉≤1, i.e., that the expected number of parallel hyperedges is at most 1, one derives what may be called a multiple resolution threshold, corresponding to \({h}_{m}^{\,{\mbox{RHM}}}\equiv \ln L-\ln \sqrt{2}\lesssim {h}_{f}^{{\mbox{RHM}}\,}\). In words, a value

(also) ensures that the expected number of parallel hyperedges in our random hypergraph is strictly less than one.

Estimation of the percolation threshold

The two thresholds derived in the previous subsections emerge in consequence of the attempts to solve the problems related to the appearance of empty as well as parallel hyperedges. Remarkably, a third threshold exists: known as percolation threshold, it was first derived in ref. 12, following the definition according to which any two hyperedges are said to be connected if they share at least one node. Here, we re-derive the percolation threshold by considering the ‘hypergraph to graph’ projection (see also below): more formally, the total number of nodes shared by hyperedge α with any other hyperedge reads

its expected value being

imposing 〈σα〉 = 1 leads to find the value

which, in turn, induces the value \({h}_{p}^{\,{\mbox{RHM}}\,}\equiv \sqrt{N/L}\). In words, a value \(p > {p}_{p}^{\,{\mbox{RHM}}\,}\) ensures that any two hyperedges in our random hypergraph share, on average, at least one node.

Heterogeneous benchmarks: the HCM

Microcanonical formulation

The number of constraints can be enlarged to include the degrees, i.e., the sequence \({\{{k}_{i}\}}_{i = 1}^{N}\), and the hyperdegrees, i.e., the sequence \({\{{h}_{\alpha }\}}_{\alpha = 1}^{L}\). Although counting the number of configurations on which both sequences match their empirical values is a hard task, numerical recipes that shuffle the entries of a rectangular matrix, while preserving its marginals, exist9,36,37,38. It should be, however, noticed that, if not carefully implemented, algorithms of the kind may lead to a non-uniform exploration of the space of configurations39,40; moreover, the issue concerning the time needed to collect a sufficiently large number of configurations should be addressed even in the presence of an ergodic system. A recent proposal is that of extending the traditional Curveball algorithm to hypergraphs38.

Canonical formulation

Solving the corresponding problem in the canonical framework is, instead, straightforward. Indeed, Shannon entropy maximisation leads to

an expression that can be re-written as

with \({e}^{-{\alpha }_{i}}\equiv {x}_{i}\), ∀ i, \({e}^{-{\beta }_{\alpha }}\equiv {y}_{\alpha }\), ∀ α and piα ≡ xiyα/(1 + xiyα), ∀ i, α. According to such a model, the entries of the incidence matrix of a hypergraph are independent random variables that obey different Bernoulli distributions, i.e., Iiα ~ Ber(piα), ∀ i, α. As a consequence, both degrees and hyperdegrees obey Poisson-Binomial distributions, i.e. \({k}_{i} \sim \,{\mbox{PoissBin}}\,(L,{\{{p}_{i\alpha }\}}_{\alpha = 1}^{L})\), ∀ i and \({h}_{\alpha } \sim \,{\mbox{PoissBin}}\,(N,{\{{p}_{i\alpha }\}}_{i = 1}^{N})\), ∀ α24.

Parameter estimation

In this case, solving the likelihood maximisation problem amounts to solving the system of coupled equations

ensuring that \(\langle {k}_{i}\rangle ={k}_{i}^{* }\), ∀ i, \(\langle {h}_{\alpha }\rangle ={h}_{\alpha }^{* }\), ∀ α (and, as a consequence, 〈T〉 = T*). In case hypergraphs are sparse and in the absence of hubs

The HCM reduces to a ‘partial’’ Configuration Model24 when either the degree or the hyperdegree sequence is left unconstrained (see also Supplementary Note 1 of the Supplementary Information). The canonical ensemble of each randomisation model (Supplementary Table 1 in the Supplementary Information sums up the sets of constraints defining them) can be explicitly sampled by considering each entry of I, drawing a real number uiα ∈ U[0, 1] and posing Iiα = 1 if uiα≤piα, ∀ i, α.

The HCM is formally equivalent to the Bipartite Configuration Model35. Such an identification is guaranteed by our focus on non-simple hypergraphs.

Estimation of the number of empty hyperedges

Let us, now, consider the probability for the generic hyperedge α to be empty or, in other terms, that its hyperdegree hα is zero. Upon remembering that \({h}_{\alpha } \sim \,{\mbox{PoissBin}}\,(N,{\{{p}_{i\alpha }\}}_{i = 1}^{N})\), one finds

while the expected number of empty hyperedges, now, reads

As previously done, let us inspect the behaviour of the aforementioned quantities on the ensemble induced by the HCM as the density of 1s in the incidence matrix varies. Although it depends on (the heterogeneity of) the sets of coefficients \({\{{x}_{i}\}}_{i = 1}^{N}\) and \({\{{y}_{\alpha }\}}_{\alpha = 1}^{L}\), general conclusions can be still drawn within a simpler framework. To this aim, let us consider the functional form reading

where the vector of fitnesses \({\{{f}_{i}\}}_{i = 1}^{N}\) accounts for the heterogeneity of nodes, the vector of fitnesses \({\{{g}_{\alpha }\}}_{\alpha = 1}^{L}\) accounts for the heterogeneity of hyperedges, and z tunes the density of 1s in the incidence matrix - ‘partial’’ Configuration Models are recovered upon posing either fi = 1, ∀ i or gα = 1, ∀ α.) Within such a framework, the fitnesses of the nodes and the fitnesses of the hyperedges can be drawn from any distribution. The dense and sparse regimes are now defined by the positions z → + ∞ and z → 0, respectively. In the dense case, one finds

a relationship inducing \(\langle {N}_{{{\emptyset}}}\rangle {\to }^{z\to +\infty }0\): in words, the probability of observing empty hyperedges progressively vanishes as the density of 1s increases. Consistently, in the sparse case one finds

a relationship inducing \(\langle {N}_{{{\emptyset}}}\rangle {\to }^{z\to 0}L\): in words, the probability of observing empty hyperedges progressively rises as the density of 1s decreases.

For what concerns the filling threshold, a derivation that is similar-in-spirit to the one carried out for the case of the RHM can be sketched. Let us pose ourselves in the sparse regime: since \(1-{p}_{i\alpha }\simeq {e}^{-{p}_{i\alpha }}\), the probability for the generic hyperedge to be empty satisfies the chain of relationships

consistently, the expected number of empty hyperedges becomes

and imposing \(\langle {N}_{{{\emptyset}}}\rangle \le 1\), i.e., that the expected number of empty hyperedges is at most 1, one derives a global condition to be satisfied by the hyperdegrees. In general terms, the aforementioned condition leads to require \({e}^{-{h}_{\alpha }}=O(1/L)\), i.e., \({h}_{\alpha }=O(\ln L)\), ∀ α and \({p}_{i\alpha }=O(\ln L/N)\), ∀ i, α.

A comparison with simple graphs: estimating the number of isolated nodes

Coming to traditional graphs, the aim is now to estimate the number of isolated nodes. In this case, \(1-{p}_{ij}\simeq {e}^{-{p}_{ij}}\) and the probability for the generic node i to be isolated reads

consistently, the expected number of isolated nodes becomes

and imposing 〈N0〉≤1, i.e., that the expected number of isolated nodes is at most 1, one derives a global condition to be satisfied by the degrees. In general terms, the aforementioned condition leads to require \({e}^{-{k}_{i}}=O(1/N)\), i.e., \({k}_{i}=O(\ln N)\), ∀ i and \({p}_{ij}=O(\ln N/N)\), ∀ i < j.

Estimation of the number of parallel hyperedges

As in the case of the RHM, we consider the Hamming distance between the columns representing the two hyperedges α and β. Since, now, \({d}_{\alpha \beta } \sim \,{\mbox{PoissBin}}\,(N,{\{{q}_{i}^{\alpha \beta }\}}_{i = 1}^{N})\), where \({q}_{i}^{\alpha \beta }\equiv {p}_{i\alpha }(1-{p}_{i\beta })+{p}_{i\beta }(1-{p}_{i\alpha })\) with piα = zfigα/(1 + zfigα) and piβ = zfigβ/(1 + zfigβ), one finds that

and that

∀ α ≠ β. Since

i.e., \({q}_{i}^{\alpha \beta }\) vanishes in both regimes, one finds that both \(P({d}_{\alpha \beta }=0){\to }^{z\to +\infty }1\) and \(P({d}_{\alpha \beta }=0){\to }^{z\to 0}1\) and that both \(\langle {d}_{\alpha \beta }\rangle {\to }^{z\to +\infty }0\) and \(\langle {d}_{\alpha \beta }\rangle {\to }^{z\to 0}0\): as in the case of the RHM, the probability of observing parallel hyperedges progressively rises both as a consequence of having many 1s and as a consequence of having few 1s. Analogously, for the expected Hamming distance.

For what concerns the resolution threshold, a derivation that is similar-in-spirit to the one carried out for the case of the RHM can be sketched. Let us pose ourselves in the sparse regime and consider that \(1-{q}_{i}^{\alpha \beta }\simeq {e}^{-{q}_{i}^{\alpha \beta }}\simeq {e}^{-({p}_{i\alpha }+{p}_{i\beta })}\). As a consequence

and

Imposing 〈dαβ〉≥1, i.e., that the expected Hamming distance between any two hyperedges α and β is at least 1, amounts to require that P(dαβ = 0)≤e−1 - thus recovering the same condition holding true in the case of the RHM where, in fact, \({p}_{/\!/ }\equiv {p}_{/\!/ }^{\alpha \beta }{\to }^{N\to +\infty }{e}^{-2h}\).

The expected number of parallel hyperedges, now, reads

upon imposing 〈N//〉≤1, i.e., that the expected number of parallel hyperedges is at most 1, one derives a global condition to be satisfied by the hyperdegrees. In general terms, the aforementioned condition leads to require \({e}^{-({h}_{\alpha }+{h}_{\beta })}=O(1/{L}^{2})\), i.e., \({h}_{\alpha }=O(\ln L)\), ∀ α and \({p}_{i\alpha }=O(\ln L/N)\), ∀ i, α.

Estimation of the percolation threshold

For what concerns the percolation threshold, the expected value of the total number of nodes shared by hyperedge α with any other hyperedge, now, reads

and imposing 〈σα〉 = 1 leads to a global condition to be satisfied. In general terms, the aforementioned condition leads to require piαpiβ = O(1/NL), i.e., \({p}_{i\alpha }=O(1/\sqrt{NL})\), ∀ i, α.

Results

Hypergraphs in the dense and sparse regime

Let us start by verifying the correctness of the estimations of the filling, multiple resolution and percolation thresholds provided by our benchmarks: to this aim, we have considered the values N = 300 and L = 1000.

The RHM

Each quantity has been plotted as a function of p ∈ [10−6, 1]. The dense (sparse) regime is recovered for large (small) values of p. Each dot of Figs. 2–4 represents an average taken over an ensemble of 103 configurations explicitly sampled from the RHM and is accompanied by the corresponding 95% confidence interval, calculated via the bootstrap method41.

More in detail, the trends of \(\langle {N}_{{{\emptyset}}}\rangle /L={p}_{{{\emptyset}}}={(1-p)}^{N}{\to }^{N\to +\infty }{e}^{-h}\), i.e., the probability for the generic hyperedge to be emtpy is represented in (a) and the one of \(P({N}_{{{\emptyset}}} > 0)=1-{[1-{(1-p)}^{N}]}^{L}{\to }^{N\to +\infty }1-{(1-{e}^{-h})}^{L}\), i.e., the probability of observing at least one empty hyperedge is represented in (b). Evaluating them in correspondence of \({p}_{f}^{\,{\mbox{RHM}}}={h}_{f}^{{\mbox{RHM}}\,}/N=\ln L/N\simeq 0.023\) (vertical line) returns, respectively, the values 1/L = 10−3 and 1 − (1−1/L)L ≃ 0.6323. The dense (sparse) regime is recovered for large (small) values of p. Each dot represents an average taken over an ensemble of 103 configurations (explicitly sampled from the RHM) and is accompanied by the corresponding 95% confidence interval.

The filling threshold

Figure 2a depicts the (analytical) trend of \(\langle {N}_{{{\emptyset}}}\rangle /L={p}_{{{\emptyset}}}={(1-p)}^{N}\) (solid line): its agreement with the numerical estimations (dots) confirms the correctness of our formula.

Although the value of the filling threshold has been determined by inspecting the asymptotic behaviour of \(\langle {N}_{{{\emptyset}}}\rangle \), the quantity showing the neatest transition from the sparse to the dense regime is the probability of observing at least one empty hyperedge

where we have exploited the fact that \(P({N}_{{{\emptyset}}} > 0)\) is nothing but the complementary of the probability that no hyperedge is empty. Since \({p}_{{{\emptyset}}}{\to }^{N\to +\infty }{e}^{-h}\), evaluating such an expression in correspondence of \({h}_{f}^{\,{\mbox{RHM}}\,}=\ln L\simeq 6.907\) returns the value 1/L = 10−3 (see Fig. 2a). As a consequence, the result \(P({N}_{{{\emptyset}}} > 0){\to }^{N\to +\infty }1-{(1-{e}^{-h})}^{L}\) is numerically recovered (see Fig. 2b). In words, although the filling threshold ensures that each, single hyperedge is empty with an overall small probability, the likelihood of observing at least one, empty hyperedge is still large (i.e., ≃ 2/3): the steepness of the trend of \(P({N}_{{{\emptyset}}} > 0)\), however, suggests it to quickly vanish as the density of 1s in the incidence matrix crosses the value \({p}_{f}^{\,{\mbox{RHM}}}={h}_{f}^{{\mbox{RHM}}\,}/N=\ln L/N\simeq 0.023\).

The multiple resolution threshold

Figure 3a depicts the trend of 2〈N//〉/L(L − 1) = [1−2p(1−p)]N = p// (solid line): again, its agreement with the numerical estimations (dots) confirms the correctness of our formula.

More in detail, the trends of \(2\langle {N}_{\parallel }\rangle /L(L-1)={[1-2p(1-p)]}^{N}={p}_{\parallel }{\to }^{N\to +\infty }{e}^{-2h}\), i.e., the probability for the generic pair of hyperedges to be parallel and P(N∥ > 0), i.e., the probability of observing at least one pair of parallel hyperedges, as functions of p, are represented, respectively, in (a and b). Evaluating them in correspondence of \({p}_{m}^{\,{\mbox{RHM}}}={h}_{m}^{{\mbox{RHM}}\,}/N\lesssim \ln L/N\simeq 0.023\) (vertical line) returns, respectively, the values 1/L2 = 10−6 and ≃ 0.6. The dense (sparse) regime is recovered for large (small) values of p. Each dot represents an average taken over an ensemble of 103 configurations (explicitly sampled from the RHM) and is accompanied by the corresponding 95% confidence interval.

Evaluating \({p}_{/\!/ }{\to }^{N\to +\infty }{e}^{-2h}\) in correspondence of \({h}_{m}^{\,{\mbox{RHM}}\,}\lesssim \ln L\simeq 6.907\) returns the value 1/L2 = 10−6 (see Fig. 3a): in words, the aforementioned, critical value causes the likelihood of observing any two parallel hyperedges to be almost the square of the probability for each single hyperedge to be empty (i.e., 1/L, see the comments under Eq. (17)).

As the overlaps between pairs of hyperedges cannot be treated as i.i.d. random variables, evaluating the probability of observing at least one pair of parallel hyperedges forces us to proceed in a purely numerical fashion. Calculating P(N∥ > 0) in correspondence of \({p}_{m}^{\,{\mbox{RHM}}}={h}_{m}^{{\mbox{RHM}}\,}/N\lesssim \ln L/N\simeq 0.023\) returns the value 0.6 (see Fig. 3b).

Carrying out such an estimation in the aforementioned regime is, however, instructive as it leads to the expression \(P({N}_{/\!/ } > 0)=1-{(1-{p}_{/\!/ })}^{L(L-1)/2}=1-{\{1-{[1-2p(1-p)]}^{N}\}}^{L(L-1)/2}{\to }^{N\to +\infty }1-{[1-{e}^{-2h}]}^{L(L-1)/2}\) that, evaluated in correspondence of \({h}_{m}^{\,{\mbox{RHM}}\,}\lesssim \ln L\simeq 6.907\), returns the value \(P({N}_{/\!/ } > 0)=1-{(1-1/{L}^{2})}^{L(L-1)/2}\simeq 0.393\) - whose difference with 0.6, obtained numerically, lets us fully appreciate the role played by correlations.

The percolation threshold

Let us, now, focus on the projection of our hypergraph onto the layer of hyperedges. The generic hyperedge is isolated either because is not ‘connected’’ with any node or because is a singleton (i.e., it is ‘connected’’ with a node with which no other hyperedge is ‘connected’’): in symbols,

In order to evaluate p0 in correspondence of the percolation threshold, let us consider that L = s2N, with s2 = 10/3; one, then, finds

whose numerical value amounts to 0.631 (see Fig. 4a): in words, the value \({p}_{0}(p={p}_{p}^{\,{\mbox{RHM}}\,})\lesssim 2/3\) implies that the expected number of isolated hyperedges in the projection 〈N0〉 = Lp0 tends to 2L/3 as p tends to \({p}_{p}^{\,{\mbox{RHM}}\,}\).

More in detail, trends of 〈N0〉/L = p0, i.e., the probability for the generic hyperedge to be isolated in the projection, P(N0 > 0), i.e., the probability of observing at least one, isolated hyperedge in the projection and ∣LCC∣/N, i.e., the percentage of nodes belonging to the largest connected component (LCC), are represented as functions of p, respectively in (a–c). Evaluating the first two in correspondence of \({p}_{p}^{\,{\mbox{RHM}}}={h}_{p}^{{\mbox{RHM}}\,}/N=1/\sqrt{NL}\simeq 0.002\) (vertical line) returns, respectively, the values \({e}^{({e}^{-s}-1)/s}\simeq 0.631\) and ≃ 1. The dense (sparse) regime is recovered for large (small) values of p. Each dot represents an average taken over an ensemble of 103 configurations (explicitly sampled from the RHM) and is accompanied by the corresponding 95% confidence interval.

Pairs of hyperedges cannot be treated as independent. Let us, in fact, consider the bipartite representation of a hypergraph: as adjacent pairs of hyperedges (say α, β and β, γ) may share some neighbours (on the opposite layer), the number of common neighbours of α and β will, in general, covariate with the number of common neighbours of β and γ; therefore, evaluating the probability of observing at least one, isolated hyperedge in the projection forces us to proceed in a purely numerical fashion. Calculating P(N0 > 0) in correspondence of \({p}_{p}^{\,{\mbox{RHM}}}={h}_{p}^{{\mbox{RHM}}\,}/N=1/\sqrt{NL}\simeq 0.002\) practically returns 1 (see Fig. 4b). From the perspective of a hypergraph connectedness, the percolation threshold is ‘less strict’’ than the filling threshold, allowing for a larger number of disconnected nodes (2/3 of the total versus 1).

As before, estimating the percolation threshold in the regime where the pairs of hyperedges behave as i.i.d. Binomial random variables is instructive. In this case, projecting a bipartite network onto the layer of hyperedges amounts to connect any two of them with probability \(1-{(1-{p}^{2})}^{N}\)—i.e., the complementary of the probability \({(1-{p}^{2})}^{N}\) of not sharing any node. The number of isolated nodes, thus, obeys the relationship N0 ~ Bin(L, p0), with

being the probability for the generic hyperedge to be isolated: in words, such an expression returns the probability for the generic hyperedge to not share any node - with probability \({(1-{p}^{2})}^{N}\) - with any other hyperedge—with a probability amounting to the previous one raised to the power of L. As a consequence, evaluating p0 in correspondence with the percolation threshold returns a value tending to 1/3, a result further implying that the expected number of isolated hyperedges in the projection 〈N0〉 = Lp0 tends to the value L/3 - both letting us fully appreciate the role played by correlations.

Within such a context, the probability of observing at least one isolated hyperedge in the projection satisfies the chain of relationships \(P({N}_{0} > 0)=1-P({N}_{0}=0)=1-{(1-{p}_{0})}^{L}=1-{[1-{(1-{p}^{2})}^{NL}]}^{L}{\to }^{N\to +\infty }1-{(1-{e}^{-1})}^{L}\): as the last expression quickly converges to 1 for large values of L, the same qualitative behaviour observed before is thus recovered.

Finally, one may wonder which kind of mesoscale structure is identified by the percolation threshold: the answer is provided by Fig. 4c, showing the appearance of a large connected component - as also pointed out in12, the presence of a large connected component is inspected by projecting our hypergraph onto the layer of nodes, whose connectedness is ensured by requiring the connectedness of hyperedges.

The role of thresholds in a hypergraph evolution

Let us, now, make a couple of observations. The first one concerns the result according to which \({h}_{m}^{\,{\mbox{RHM}}}\lesssim {h}_{f}^{{\mbox{RHM}}\,}\): such a relationship suggests that, while filling a large portion of the incidence matrix is not required to observe a limited amount of parallel hyperedges (see Fig. 3b), when empty hyperedges are no longer observed, parallel hyperedges are no longer observed as well.

The second one concerns the result according to which either \({h}_{f}^{\,{\mbox{RHM}}}\le {h}_{p}^{{\mbox{RHM}}\,}\) or \({h}_{f}^{\,{\mbox{RHM}}}\ge {h}_{p}^{{\mbox{RHM}}\,}\). By progressively rising the parameter p, two, different thresholds are, thus, met: if \({h}_{f}^{\,{\mbox{RHM}}}\le {h}_{p}^{{\mbox{RHM}}\,}\), the filling threshold \({p}_{f}^{\,{\mbox{RHM}}\,}=\ln L/N\) is met before the percolation threshold \({p}_{p}^{\,{\mbox{RHM}}\,}=1/\sqrt{NL}\), i.e., hyperedges are filled before they start sharing nodes—as a consequence, singletons appear; if \({h}_{f}^{\,{\mbox{RHM}}}\ge {h}_{p}^{{\mbox{RHM}}\,}\), the percolation threshold \({p}_{p}^{\,{\mbox{RHM}}\,}=1/\sqrt{NL}\) is met before the filling threshold \({p}_{f}^{\,{\mbox{RHM}}\,}=\ln L/N\), i.e., hyperedges start sharing nodes before they are filled—as a consequence, no singleton appears before the filling threshold is crossed.

Notice that, for simple graphs, \({k}_{p}^{\,{\mbox{RGM}}}=1\le {k}_{c}^{{\mbox{RGM}}\,}=\ln N\), i.e., by progressively rising the parameter q, the percolation threshold is always met before the connectivity threshold.

The HCM

In order to carry out the numerical simulations in the case of the HCM, we have followed the procedure described in the previous sections and drawn both the fitnesses of nodes and those of hyperedges from a Pareto distribution with α = 2 - other fat-tailed distributions were considered: qualitatively, results do not change. Each quantity has been plotted as a function of ρ(z) = 〈T〉/NL ∈ [10−6, 1] where \(\langle T\rangle ={\sum }_{i=1}^{N}{\sum }_{\alpha =1}^{L}z{f}_{i}{g}_{\alpha }/(1+z{f}_{i}{g}_{\alpha })\) varies with z. The dense (sparse) regime is recovered for large (small) values of z. Each dot of Figs. 5–7 represents an average taken over an ensemble of 103 configurations explicitly sampled from the HCM and is accompanied by the corresponding 95% confidence interval.

More in detail, trends of \(\langle {N}_{{{\emptyset}}}\rangle /L=\overline{{p}_{{{\emptyset}}}}\), i.e., the probability for the generic hyperedge to be empty and \(P({N}_{{{\emptyset}}} > 0)\simeq 1-{\prod }_{\alpha =1}^{L}(1-{e}^{-{h}_{\alpha }})\), i.e., the probability of observing at least one empty hyperedge, are represented as functions of the connectance ρ, respectively in (a and b). Evaluating the latter in correspondence of the filling threshold, reading \({p}_{f}^{\,{\mbox{HCM}}\,}\simeq 0.032\) (vertical line), returns the value ≃ 0.642. The dense (sparse) regime is recovered for large (small) values of z. Each dot represents an average taken over an ensemble of 103 configurations (explicitly sampled from the HCM) and is accompanied by the corresponding 95% confidence interval.

The filling threshold

Figure 5a depicts the (analytical) trend of \(\langle {N}_{{{\emptyset}}}\rangle /L={\sum }_{\alpha =1}^{L}{p}_{{{\emptyset}}}^{\alpha }/L=\overline{{p}_{{{\emptyset}}}}\) (solid line): as in the case of the RHM, its agreement with the numerical estimations (dots) confirms the correctness of our formula. Deriving an explicit expression for the filling threshold in the case of the HCM is a rather difficult task; still, we can proceed in a purely numerical fashion and individuate the value of the density of 1s in the incidence matrix guaranteeing that the expected number of empty hyperedges (divided by L) amounts to 1 (divided by L): although its, precise, numerical value depends on the values of the fitnesses, such a threshold still lies in the right tail of the trend induced by the HCM and reads \({p}_{f}^{\,{\mbox{HCM}}\,}\simeq 0.032\) (see Fig. 5a).

Even in this case, the quantity showing the neatest transition from the sparse to the dense regime is the probability of observing at least one empty hyperedge

that, in the sparse regime, can be approximated as \(P({N}_{{{\emptyset}}} > 0)\simeq 1-{\prod }_{\alpha =1}^{L}(1-{e}^{-{h}_{\alpha }})\): as Fig. 5b shows, evaluating \(P({N}_{{{\emptyset}}} > 0)\) in correspondence of the filling threshold returns 0.642. Finally, let us explicitly notice that the value of the filling threshold is shifted on the right with respect to its homogeneous counterpart, an evidence probably due to the presence of small fitnesses that increase the probability of observing at least one, empty hyperedge, hence requiring a larger value of z to let \(P({N}_{{{\emptyset}}} > 0)\) vanish.

The multiple resolution threshold

Figure 6a depicts the (analytical) trend of \(2\langle {N}_{/\!/ }\rangle /L(L-1)=2\mathop{\sum }_{\alpha =1}^{L}\mathop{\sum }_{\begin{array}{c}\beta =1\\ \beta > \alpha \end{array}}^{L}{p}_{/\!/ }^{\alpha \beta }/L(L-1)=\overline{{p}_{/\!/ }}\) (solid line): as in the case of the RHM, its agreement with the numerical estimations (dots) confirms the correctness of our formula.

More in detail, trends of \(2\langle {N}_{\parallel }\rangle /L(L-1)=\overline{{p}_{\parallel }}\), i.e., the probability for the generic pair of hyperedges to be parallel and P(N∥ > 0), i.e., the probability of observing at least one pair of parallel hyperedges, are represented as functions of the connectance ρ, respectively, in (a and b). Evaluating the latter in correspondence with the multiple resolution threshold, reading \({p}_{m}^{\,{\mbox{HCM}}\,}\simeq 0.031\) (vertical line), returns 0.493. The dense (sparse) regime is recovered for large (small) values of z. Each dot represents an average taken over an ensemble of 103 configurations (explicitly sampled from the HCM) and is accompanied by the corresponding 95% confidence interval.

Adopting the strategy described in the previous paragraph, i.e., that of requiring that the expected number of parallel hyperedges (divided by L(L − 1)/2) amounts to 1 (divided by L(L − 1)/2), we found that \({p}_{m}^{\,{\mbox{HCM}}\,}\simeq 0.031\) (see Fig. 6a). Numerically clculating P(N∥ > 0) in correspondence of the multiple resolution threshold returns the value 0.493 (see Fig. 6b).

Let us notice that the value of the multiple resolution threshold no longer coincides with the value of the filling threshold, although it is still shifted to the right with respect to its homogeneous counterpart.

The percolation threshold

The probability for the generic hyperedge to be isolated in the projection, now, reads

that, in the sparse regime, can be approximated as \({p}_{0}^{\alpha }\simeq {\prod }_{i=1}^{N}[(1-{p}_{i\alpha })+{p}_{i\alpha }{e}^{-{k}_{i}}]\). Adopting the strategy described in the previous paragraphs, i.e., that of requiring that the expected value of the total number of nodes shared by any hyperedge with any other hyperedge amounts to 1 - in symbols, \(\overline{\langle \sigma \rangle }={\sum }_{\alpha =1}^{L}\langle {\sigma }_{\alpha }\rangle /L=1\) - we found that \({p}_{p}^{\,{\mbox{HCM}}\,}\simeq 0.001\): since \(\langle {N}_{0}\rangle =\mathop{\sum }_{\alpha =1}^{L}{p}_{0}^{\alpha }\), evaluating \(\langle {N}_{0}\rangle /L=\mathop{\sum }_{\alpha =1}^{L}{p}_{0}^{\alpha }/L=\overline{{p}_{0}}\) and P(N0 > 0) in correspondence of the percolation threshold returns respectively the values 0.766 (see Fig. 7a) and ≃ 1 (see Fig. 7b). For what concerns the hypergraph connectedness, the same conclusion drawn in the case of the RHM holds true, as the percolation threshold allows for a larger number of disconnected nodes (3/4 of the total versus 1).

More in detail, trends of \(\langle {N}_{0}\rangle /L=\overline{{p}_{0}}\), i.e., the probability for the generic hyperedge to be isolated in the projection, P(N0 > 0), i.e., the probability of observing at least one, isolated hyperedge in the projection, and ∣LCC∣/N, i.e., the percentage of nodes belonging to the largest connected component (LCC), are repesented as functions of the connectance ρ, respectively, in (a–c). Evaluating the first two in correspondence of \({p}_{p}^{\,{\mbox{HCM}}\,}\simeq 0.001\) (vertical line), returns the values ≃ 0.766 and ≃ 1. The dense (sparse) regime is recovered for large (small) values of p. Each dot represents an average taken over an ensemble of 103 configurations (explicitly sampled from the HCM) and is accompanied by the corresponding 95% confidence interval.

Analogously, the mesoscale structure individuated by the percolation threshold consists of a large connected component constituted (see Fig. 7c).

Solving the HCM on real-world hypergraphs

In order to test our benchmarks on real-world configurations, we have focused on a number of data sets taken from Austin R. Benson’s website (https://www.cs.cornell.edu/~arb/data/), i.e., the contact-primary-school, the email-Enron and the NDC-classes ones.

Although the parameters defining the HCM must be numerically determined by solving the system of equations induced by the likelihood maximisation, when the system under analysis is sparse they can be approximated as described in Supplementary Note 1 of the Supplementary Information. Such an approximation leads to the expression

that, as Supplementary Fig. 1 in the Supplementary Information shows, is quite accurate for each data set considered here—in fact, one can safely assume that \({x}_{i}\simeq {k}_{i}^{* }/\sqrt{{T}^{* }}\), ∀ i and \({h}_{\alpha }^{* }/\sqrt{{T}^{* }}\), ∀ α.

‘‘Hypergraph to graph’’ projection

The canonical formalism that we have adopted leads to factorisable distributions, i.e., distributions that can be written as a product of pair-wise probability distributions; this allows the expectation of several quantities of interest to be evaluated analytically.

Let us start by considering the matrix, introduced in4, reading

with K being the diagonal matrix whose i-th entry reads ki; according to the definition above, it induces a projection of a hypergraph onto a weighted graph, whose generic entry

returns the number of hyperedges both i and j belong to - more explicitly, \({w}_{ij}={\sum }_{\alpha =1}^{L}{I}_{i\alpha }{I}_{j\alpha }\), i ≠ j and \({w}_{ii}={\sum }_{\alpha =1}^{L}{I}_{i\alpha }{I}_{i\alpha }-{k}_{i}={\sum }_{\alpha =1}^{L}{I}_{i\alpha }-{k}_{i}={k}_{i}-{k}_{i}=0\). In other words, W represents the object most closely resembling a traditional adjacency matrix. The null models discussed so far can be employed to calculate 〈wij〉, i ≠ j that, in a perfectly general fashion, reads

as we said, the total number of hyperedges shared by node i with any other node in the hypergraph (in a sense, its ‘strength’’ - see also Fig. 8) can be computed as

whose expected value reads

Notice that while the degree of each node (i.e., the number of hyperedges that are incident to it) reads {ki} = (3, 3, 3, 3, 4, 1), the sigma of each node (i.e., the total number of hyperedges shared by it with any other node or, equivalently, the strength on the projection) reads {σi} = (8, 8, 9, 6, 10, 3) and the kappa of each node (i.e., the number of nodes it shares an hyperedge with or, equivalently, the degree on the projection) reads {κi} = (5, 4, 5, 4, 5, 3).

As further confirmed by Supplementary Fig. 1 in the Supplementary Information, the approximation provided by Eq. (58) allows us to pose \({\langle {\sigma }_{i}\rangle }_{{{\rm{HCM}}}}\simeq {k}_{i}^{* }{\sum }_{\alpha =1}^{L}{({h}_{\alpha }^{* })}^{2}/{T}^{* }\), ∀ i. Interestingly, as Fig. 9a shows, the HCM overestimates the extent to which any two nodes of the email-Enron data set overlap: in words, such a real-world hypergraph is more compartmentalised than expected.

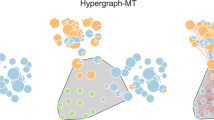

More in detail, {σi} vs. \(\{{\langle {\sigma }_{i}\rangle }_{{{\rm{HCM}}}}\}\) (a), {κi} vs. \(\{{\langle {\kappa }_{i}\rangle }_{{{\rm{HCM}}}}\}\) (b), {Yi} vs. \({\langle \left\{\right.{Y}_{i}\rangle }_{{{\rm{HCM}}}}\left\}\right.\) (c), and {CECi} vs. \(\{{\langle {{{\rm{CEC}}}}_{i}\rangle }_{{{\rm{HCM}}}}\}\) (d). The HCM overestimates the extent to which any two nodes overlap, as well as the CEC; the disparity ratio, instead, is underestimated by it. These results can be understood by considering that the HCM just constrains the degree sequences, hence inducing an ensemble where connections are ‘distributed'' more evenly than observed.

Let us, now, extend the concept of assortativity to hypergraphs. To this aim, we consider the quantity named average incident hyperedges degree, defined as

and representing the arithmetic mean of the degrees of the hyperedges including node i. An analytical approximation of its expected value can be provided as well:

Disparity ratio and degree in the projection

More information about the patterns shaping real-world hypergraphs can be obtained upon defining the ratio fij = wij/σi, i ≠ j that induces the quantity

known as disparity ratio and quantifying the (un)evenness of the distribution of the weights constituting the strength of node i over the \({\kappa }_{i}={\sum }_{\begin{array}{c}j=1\atop (j\ne i)\end{array}}^{N}{{\Theta }}[{w}_{ij}]\equiv {\sum }_{\begin{array}{c}j=1\atop j\ne i\end{array}}^{N}{a}_{ij}\) links characterising its connectivity - since aij = 1 if nodes i and j share, at least, one hyperedge, κi is the degree of node i in the projection of the hypergraph (see also Figs. 8 and 9b). Since, under the RHM, wij ~ Bin(L, p2), we find that \(\langle {a}_{ij}\rangle =1-{(1-{p}^{2})}^{L}\), i.e., the expected value of aij coincides with the probability of observing a non-zero overlap. Under the HCM, instead, \({w}_{ij} \sim \,{\mbox{PoissBin}}\,(L,{\{{p}_{i\alpha }{p}_{j\alpha }\}}_{\alpha = 1}^{L})\), hence

Let us also notice that

in case weights are equally distributed among the connections established by node i, i.e., wij = aijσi/κi, i ≠ j. Any larger value signals an excess concentration of weight in one or more links. An analytical approximation of the expected value of the disparity ratio of node i can be provided as well:

Contrary to what has been previously observed, the expected value of the disparity ratio cannot always be safely decomposed as a ratio of expected values, not even if the ‘full’’ HCM is employed. In fact, while this approximation works relatively well for the contact-primary-school data set, it does not for the email-Enron and the NDC-classes ones (see also Supplementary Fig. 3 in the Supplementary Information). For this reason, the expected value of the disparity ratio has been evaluated by explicitly sampling the ensemble of incidence matrices induced by the ‘full’ HCM. In any case, as Fig. 9c shows, such a null model underestimates the disparity ratio characterising each node of the email-Enron data set: in words, the empirical overlap between any two nodes is (much) less evenly ‘distributed’ than expected. A similar conclusion can be drawn by considering \(\langle {\kappa }_{i}\rangle ={\sum }_{i=1}^{N}\left[1-{\prod }_{\alpha =1}^{L}(1-{p}_{i\alpha }{p}_{j\alpha })\right]\): as Fig. 9b shows, the degree of the nodes in the projection tend to be significantly smaller than expected, meaning that hyperedges concentrate on fewer edges than expected. This observation is in line with recent works showing the encapsulation and ‘simpliciality’’ of real-world hypergraphs42,43.

Eigenvector centrality

Centrality measures for hypergraphs have been defined as well. An example is provided by the clique motif eigenvector centrality (CEC), defined in44 (see also Supplementary Note 3 of the Supplementary Information): CECi corresponds to the i-th entry of the Perron-Frobenius eigenvector of W. As Fig. 9d shows, the HCM underestimates the CEC as well: such a result can be understood by considering that the HCM constrains only the degree sequences, hence inducing an ensemble where connections are ‘distributed’’ more evenly than observed, an evidence letting the nodes overlap more, thus causing the entries of 〈W〉 to be overall larger and less dissimilar, as well as those of its Perron-Frobenius eigenvector.

Confusion matrix

Let us, now, consider the set of indices constituting the so-called confusion matrix (see also Supplementary Note 4 of the Supplementary Information). They are intended to quantify the capability of a given network model in reproducing microscopic properties, such as the position of 1s and 0s by explicitly comparing their empirical location with the one expected under the chosen model. They are named true positive rate (TPR), i.e., the percentage of 1s correctly recovered by a given method, whose expected value reads

specificity (SPC), i.e., the percentage of 0s correctly recovered by a given method, whose expected value reads

positive predictive value (PPV), i.e., the percentage of 1s correctly recovered by a given method with respect to the total number of 1s predicted by it, whose expected value reads

accuracy (ACC), measuring the overall performance of a given method in correctly placing both 1s and 0s, whose expected value reads

Results on the confusion matrix of a number of real-world hypergraphs reveal that the large sparsity of the latter ones makes it difficult to reproduce the TPR and the PPV (see also Supplementary Table 2 in the Supplementary Information); on the other hand, the capability of the HCM (both in its ‘full’’ and approximated version) to reproduce the density of 1s—and, as a consequence, the density of 0s—ensures the SPC to be recovered quite precisely, in turn ensuring the overall ACC of the model to be large (for an overall evaluation of the performance of the HCM in reproducing real-world hypergraphs, see also Supplementary Table 3 in Supplementary Note 5 of the Supplementary Information).

Community detection

Communities are commonly understood as densely connected groups of nodes. Representing an hypergraph via its incidence matrix allows this statement to be made more precise from a statistical perspective: in fact, the null models discussed so far can be employed to test if any two nodes share a significantly large number of hyperedges - hence can be clustered together, should this be the case. In other words, it is possible to devise a ‘validation procedure’’ that filters the projection described by the matrix W by removing the entries that do not satisfy the requirement above.

To this aim, we can adapt the recipe proposed in24 to project bipartite networks and summarised in the following. One, first, computes

for each pair of nodes; f(x) depends on the chosen null model: in case the RHM is employed, it coincides with the Binomial distribution Bin(x∣L, p); in case the HCM is employed, it coincides with the Poisson-Binomial distribution \(\,{\mbox{PoissBin}}\,(x| L,{\{{p}_{i\alpha }{p}_{j\alpha }\}}_{\alpha = 1}^{L})\). Second, one implements the FDR procedure, designed to handle multiple tests of hypothesis45: in practice, after ranking the p-values in increasing order, i.e., p value1≤p-value2≤ ⋯ ≤p-valuen, one individuates the largest integer \(\hat{i}\) satisfying the condition

where n = N(N − 1)/2 and t is the single-test significance level, set to 0.01 in the present analysis. Third, one links the (pairs of) nodes whose related p value is smaller than the aforementioned threshold.

Figure 10 shows the partitions returned by the Louvain algorithm run on the validated projections: as noticed elsewhere24,46,47, the detection of mesoscale structures is enhanced if carried out on filtered topologies.

a–c projections of the contact-primary-school, email-Enron and NDC-classes data sets onto the layer of nodes. d–f validated counterparts of the aforementioned projections: any two nodes are linked if they share a significantly large number of hyperedges. Communities have been detected by running the Louvain algorithm.

Conclusions

Our paper contributes to current research on hypergraphs by extending the constrained entropy-maximisation framework to incidence matrices, i.e., their simplest, tabular representation. Differently from the currently-available techniques9, our methodology has the advantage of being analytically tractable, scalable and versatile enough to be straightforwardly extensible to directed and/or weighted hypergraphs.

Beside leading to results whose relevance is mostly theoretical (i.e., the individuation of different regimes for higher-order structures and the estimation of the actual impact of empty and parallel hyperedges on the analysis of empirical systems), our models prove to be particularly useful when employed as benchmarks for real-world systems, i.e., for detecting patterns that are not imputable to purely random effects. Specifically, our results suggest that real-world hypergraphs are characterised by a degree of self-organisation that is absolutely non-trivial (see also Supplementary Note 5 of the Supplementary Information).

This is even more surprising when considering that our results are obtained under a benchmark such as the HCM, i.e., a null model constraining both the degree and the hyperdegree sequences: since it overestimates the extent to which any two nodes overlap—a result whose relevance becomes evident as soon as one considers the effects that higher-order structures have on spreading and cooperation processes48,49,50—our future efforts will be directed towards the analysis of benchmarks constraining non-linear quantities such as the co-occurrences between nodes and/or hyperedges.

Data availability

All the data used for the analysis are freely available at https://www.cs.cornell.edu/arb/data/.

Code availability

The authors will provide the code used for the analysis upon request.

References

Newman, M. Networks: An Introduction. (Oxford University Press, 2010).

Caldarelli, G. Scale-Free Networks: Complex Webs in Nature and Technology. (Oxford University Press, 2010)

Lambiotte, R., Rosvall, M. & Scholtes, I. From networks to optimal higher-order models of complex systems. Nat. Phys. 15, 313–320 (2019).

Battiston, F. et al. Networks beyond pairwise interactions: structure and dynamics. Phys. Rep. 874, 1–92 (2020).

Bick, C., Gross, E., Harrington, H. A. & Schaub, M. T. What are higher-order networks? SIAM Rev. 65, 686–731 (2023).

Courtney, O.T., Bianconi, G. Generalized network structures: the configuration model and the canonical ensemble of simplicial complexes. Phys. Rev. E 93, 062311 (2016).

Bianconi, G. Satistical physics of exchangeable sparse simple networks, multiplex networks and simplicial complexes. Phys. Rev. E 105, 034310 (2022).

Battiston, F. et al. The physics of higher-order interactions in complex systems. Nat. Phys. 17, 1093–1098 (2021).

Chodrow, P. S. Configuration models of random hypergraphs. J. Complex Netw. 8, 3 (2020).

Nakajima, K., Shudo, K. & Masuda, N. Randomizing Hypergraphs Preserving Degree Correlation and Local Clustering, IEEE Transactions on Network Science and Engineering, Vol. 9, 1139–1153 (2022).

Musciotto, F., Battiston, F. & Mantegna, R. N. Detecting informative higher-order interactions in statistically validated hypergraphs. Commun. Phys. 4, 218 (2021).

Barthelemy, M. Class of models for random hypergraphs. Phys. Rev. E 106, 064310 (2022).

Dudek, A., Frieze, A., Ruciński, A. & Šileikis, M. Embedding the Erdös-Rényi hypergraph into the random regular hypergraph and hamiltonicity. J. Combinatorial Theory, Ser. B 122, 719–740 (2017).

Boix-Adserà, E., Brennan, M., Bresler, G. The average-case complexity of counting cliques in Erdos-Renyi hypergraphs. SIAM J. Comput. 19–39 https://doi.org/10.1137/20M1316044 (2019).

Barrett, J., Prałat, P., Smith, A., Théberge, F. Counting Simplicial Pairs In Hypergraphs (Springer, 2024).

Wegner, A. E., Olhede, S. Atomic subgraphs and the statistical mechanics of networks. Phys. Rev. E 103, 042311 (2021).

Jaynes, E. T. Information theory and statistical mechanics. Phys. Rev. 106, 181–218 (1957).

Park, J. & Newman, M. E. J. Statistical mechanics of networks. Phys. Rev. E 70, 66117 (2004).

Garlaschelli, D. & Loffredo, M. I. Maximum likelihood: extracting unbiased information from complex networks. Phys. Rev. E 78, 1–5 (2008).

Squartini, T. & Garlaschelli, D. Analytical maximum-likelihood method to detect patterns in real networks. N. J. Phys. 13, 083001 (2011).

Cimini, G. et al. The statistical physics of real-world networks. Nat. Rev. Phys. 1, 58–71 (2018).

Squartini, T., Caldarelli, G., Cimini, G., Gabrielli, A. & Garlaschelli, D. Reconstruction methods for networks: the case of economic and financial systems. Phys. Rep. 757, 1–47 (2018).

Squartini, T., Lelyveld, I. & Garlaschelli, D. Early-warning signals of topological collapse in interbank networks. Sci. Rep. 3, 3357 (2013).

Saracco, F. et al. Inferring monopartite projections of bipartite networks: an entropy-based approach. N. J. Phys. 19, 16 (2017).

Becatti, C., Caldarelli, G., Lambiotte, R. & Saracco, F. Extracting significant signal of news consumption from social networks: the case of Twitter in Italian political elections.Palgrave Commun. 5, 1–16 (2019).

Caldarelli, G. et al. The role of bot squads in the political propaganda on Twitter. Commun. Phys. 3, 1–15 (2020).

Neal, Z. P., Domagalski, R. & Sagan, B. Comparing alternatives to the fixed degree sequence model for extracting the backbone of bipartite projections. Sci. Rep. 11, 23929 (2021).

Parisi, F., Squartini, T. & Garlaschelli, D. A faster horse on a safer trail: generalized inference for the efficient reconstruction of weighted networks. N. J. Phys. 22, 053053 (2020).

Berge, C. Graphes et Hypergraphes, 1st edn., Dunod, Paris, France. Monographies universitaires de mathématiques №37 p. 502 (1970).

Berge, C. Graphes et Hypergraphes, 2nd edn., Dunod, Paris, France. 2 édition, Dunod université, Mathématiques 604 p. 516 (1973).

Voloshin, V.I. Introduction to Graph and Hypergraph Theory, p. 287. (Nova Science Publishers, 2009).

Ghoshal, G., Zlatic, V., Caldarelli, G. & Newman, M. E. J. Random hypergraphs and their applications. Phys. Rev. E 79, 066118 (2009).

Erdös, P. & Rényi, A. On random graphs i. Publicationes Mathematicae 6, 290–297 (1959).

Gilbert, E. N. Random graphs. Ann. Math. Stat. 30, 1141–1144 (1959).

Saracco, F., Clemente, R. D., Gabrielli, A. & Squartini, T. Randomizing bipartite networks: the case of the world trade web. Sci. Rep. 5, 10595 (2015).

Strona, G., Nappo, D., Boccacci, F., Fattorini, S. & San-Miguel-Ayanz, J. A fast and unbiased procedure to randomize ecological binary matrices with fixed row and column totals. Nat. Commun. 5, 4114 (2014).

Carstens, C. J. Proof of uniform sampling of binary matrices with fixed row sums and column sums for the fast Curveball algorithm. Phys. Rev. E 91, 1–7 (2015).

Kraakman, Y.J., Stegehuis, C. Hypercurveball algorithm for sampling hypergraphs with fixed degrees (2024). https://arxiv.org/abs/2412.05100.

Coolen, A. C. C., Martino, A. D. & Annibale, A. Constrained Markovian dynamics of random graphs. J. Stat. Phys. 136, 1035–1067 (2009).

Roberts, E.S., Coolen, A.C.C. Unbiased degree-preserving randomization of directed binary networks. Phys. Rev. E 85, 046103 (2012).

Efron, B. Bootstrap methods: another look at the jackknife. Ann. Stat. 7, 1–26 (1979).

LaRock, T. & Lambiotte, R. Encapsulation structure and dynamics in hypergraphs. J. Phys. Complex. 4, 045007 (2023).

Landry, N. W., Young, J.-G. & Eikmeier, N. The simpliciality of higher-order networks. EPJ Data Sci. 13, 17 (2024).

Benson, A. R. Three hypergraph eigenvector centralities. SIAM J. Math. Data Sci. 1, 293–312 (2019).

Benjamini, Y. & Hochberg, Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B (Methodol.) 57, 289–300 (1995).

Pratelli, M., Saracco, F. & Petrocchi, M. Entropy-based detection of Twitter echo chambers. PNAS Nexus 3, 177 (2024).

Guarino, S., Mounim, A., Caldarelli, G. & Saracco, F. Verified authors shape X/Twitter discursive communities. arXiv preprint arXiv:2405.04896 (2024).

Ferraz de Arruda, G. et al. Multistability, intermittency, and hybrid transitions in social contagion models on hypergraphs. Nat. Commun. 14, 1375 (2023).

Iacopini, I., Petri, G., Baronchelli, A. & Barrat, A. Group interactions modulate critical mass dynamics in social convention. Commun. Phys. 5, 64 (2022).

St-Onge, G. et al. Influential groups for seeding and sustaining nonlinear contagion in heterogeneous hypergraphs. Commun. Phys. 5, 25 (2022).

Acknowledgements

R.L. acknowledges support from the EPSRC grants EP/V013068/1, EP/V03474X/1 and EP/Y028872/1. T.S. acknowledges support from SoBigData.it that receives funding from European Union - NextGenerationEU - National Recovery and Resilience Plan (Piano Nazionale di Ripresa e Resilienza, PNRR) - Project: ‘SoBigData.it - Strengthening the Italian RI for Social Mining and Big Data Analytics’ - Prot. IR0000013 - Avviso n. 3264 del 28/12/2021. F.S. acknowledges support from the project ‘CODE - Coupling Opinion Dynamics with Epidemics’, funded under PNRR Mission 4 ‘Education and Research’ - Component C2 - Investment 1.1 - Next Generation EU ‘Fund for National Research Programme and Projects of Significant National Interest’ PRIN 2022 PNRR, grant code P2022AKRZ9.

Author information

Authors and Affiliations

Contributions

F.S., G.P., R.L. and T.S. conceived the study. F.S. performed the analyses and T.S. wrote the first version of the manuscript. F.S., G.P., R.L. and T.S. discussed the results, revised the draft and approved the final version of the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Physics thanks the anonymous reviewers for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Saracco, F., Petri, G., Lambiotte, R. et al. Entropy-based models to randomise real-world hypergraphs. Commun Phys 8, 284 (2025). https://doi.org/10.1038/s42005-025-02182-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s42005-025-02182-2