Abstract

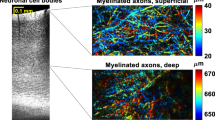

Widefield microscopy is widely used for non-invasive imaging of biological structures at subcellular resolution. When applied to a complex specimen, its image quality is degraded by sample-induced optical aberration. Adaptive optics can correct wavefront distortion and restore diffraction-limited resolution but requires wavefront sensing and corrective devices, increasing system complexity and cost. Here we describe a self-supervised machine learning algorithm, CoCoA, that performs joint wavefront estimation and three-dimensional structural information extraction from a single-input three-dimensional image stack without the need for external training datasets. We implemented CoCoA for widefield imaging of mouse brain tissues and validated its performance with direct-wavefront-sensing-based adaptive optics. Importantly, we systematically explored and quantitatively characterized the limiting factors of CoCoA’s performance. Using CoCoA, we demonstrated in vivo widefield mouse brain imaging using machine learning-based adaptive optics. Incorporating coordinate-based neural representations and a forward physics model, the self-supervised scheme of CoCoA should be applicable to microscopy modalities in general.
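To make the idea of a forward physics model concrete, the following is a minimal sketch and not the CoCoA implementation (which is available in the authors' repository). It assumes a single two-dimensional plane rather than a three-dimensional stack, scalar Fourier optics, a wavefront described by five Noll-normalized Zernike modes, and a plain array in place of a coordinate-based neural representation. All function names (`pupil_grid`, `zernike_modes`, `psf_from_coeffs`, `forward_image`, `data_loss`) are hypothetical. A self-supervised scheme of this kind would adjust the structure estimate and the Zernike coefficients together until the simulated image matches the measurement.

```python
import numpy as np


def pupil_grid(n=64, radius_frac=0.5):
    """Polar coordinates on an n x n pupil grid; the aperture is the disk rho <= 1."""
    ax = np.linspace(-1.0, 1.0, n, endpoint=False) / radius_frac
    x, y = np.meshgrid(ax, ax)
    rho = np.hypot(x, y)
    theta = np.arctan2(y, x)
    return rho, theta, (rho <= 1.0)


def zernike_modes(rho, theta):
    """Five low-order Noll-normalized Zernike modes, stacked along axis 0."""
    return np.stack([
        np.sqrt(6) * rho**2 * np.sin(2 * theta),              # oblique astigmatism
        np.sqrt(3) * (2 * rho**2 - 1),                        # defocus
        np.sqrt(6) * rho**2 * np.cos(2 * theta),              # vertical astigmatism
        np.sqrt(8) * (3 * rho**3 - 2 * rho) * np.sin(theta),  # vertical coma
        np.sqrt(8) * (3 * rho**3 - 2 * rho) * np.cos(theta),  # horizontal coma
    ])


def psf_from_coeffs(coeffs, n=64):
    """Intensity PSF for a pupil phase given by Zernike coefficients (radians)."""
    rho, theta, aperture = pupil_grid(n)
    phase = np.tensordot(np.asarray(coeffs, dtype=float),
                         zernike_modes(rho, theta), axes=1)
    pupil = aperture * np.exp(1j * phase)
    field = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(pupil)))
    psf = np.abs(field) ** 2
    return psf / psf.sum()  # normalize so the PSF conserves total intensity


def forward_image(structure, psf):
    """Simulated image: circular convolution of the structure with the PSF."""
    otf = np.fft.fft2(np.fft.ifftshift(psf))
    return np.real(np.fft.ifft2(np.fft.fft2(structure) * otf))


def data_loss(structure, coeffs, measured):
    """Mean squared mismatch between the simulated and the measured image."""
    simulated = forward_image(structure, psf_from_coeffs(coeffs, measured.shape[0]))
    return float(np.mean((simulated - measured) ** 2))


if __name__ == "__main__":
    true_coeffs = [0.0, 0.0, 1.0, 0.5, 0.0]  # illustrative values only
    structure = np.zeros((64, 64))
    structure[20, 30] = 1.0
    structure[40, 25] = 2.0
    measured = forward_image(structure, psf_from_coeffs(true_coeffs))
    print("loss at true coefficients:", data_loss(structure, true_coeffs, measured))
    print("loss assuming no aberration:", data_loss(structure, np.zeros(5), measured))
```

In this sketch the data term is the only objective. A practical method would also need regularization and a differentiable framework so that gradients can flow to both the structure and the wavefront parameters.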

Data availability

The data used for the results in the paper, for example, fixed mouse brain slice (Fig. 2) and mouse brain in vivo (Fig. 5), are available at https://github.com/iksungk/CoCoA (ref. 61). Due to repository storage limitations, please email the corresponding authors (I.K. and Q.Z.) for access to the rest of the data for both the paper and supplementary material.

Code availability

Code is publicly available at https://github.com/iksungk/CoCoA (ref. 61).

Change history

17 March 2025

A Correction to this paper has been published: https://doi.org/10.1038/s42256-025-01022-w

References

Ji, N. Adaptive optical fluorescence microscopy. Nat. Methods 14, 374–380 (2017).

Hampson, K. M. et al. Adaptive optics for high-resolution imaging. Nat. Rev. Methods Primers 1, 68 (2021).

Zhang, Q. et al. Adaptive optics for optical microscopy [invited]. Biomed. Opt. Express 14, 1732 (2023).

Rueckel, M., Mack-Bucher, J. A. & Denk, W. Adaptive wavefront correction in two-photon microscopy using coherence-gated wavefront sensing. Proc. Natl Acad. Sci. USA 103, 17137–17142 (2006).

Cha, J. W., Ballesta, J. & So, P. T. C. Shack-Hartmann wavefront-sensor-based adaptive optics system for multiphoton microscopy. J. Biomed. Opt. 15, 046022 (2010).

Aviles-Espinosa, R. et al. Measurement and correction of in vivo sample aberrations employing a nonlinear guide-star in two-photon excited fluorescence microscopy. Biomed. Opt. Express 2, 3135 (2011).

Azucena, O. et al. Adaptive optics wide-field microscopy using direct wavefront sensing. Opt. Lett. 36, 825–827 (2011).

Wang, K. et al. Rapid adaptive optical recovery of optimal resolution over large volumes. Nat. Methods 11, 625–628 (2014).

Wang, K. et al. Direct wavefront sensing for high-resolution in vivo imaging in scattering tissue. Nat. Commun. 6, 7276 (2015).

Paine, S. W. & Fienup, J. R. Machine learning for improved image-based wavefront sensing. Opt. Lett. 43, 1235 (2018).

Asensio Ramos, A., De La Cruz Rodríguez, J. & Pastor Yabar, A. Real-time, multiframe, blind deconvolution of solar images. Astron. Astrophys. 620, A73 (2018).

Nishizaki, Y. et al. Deep learning wavefront sensing. Opt. Express 27, 240 (2019).

Andersen, T., Owner-Petersen, M. & Enmark, A. Neural networks for image-based wavefront sensing for astronomy. Opt. Lett. 44, 4618 (2019).

Saha, D. et al. Practical sensorless aberration estimation for 3D microscopy with deep learning. Opt. Express 28, 29044 (2020).

Wu, Y., Guo, Y., Bao, H. & Rao, C. Sub-millisecond phase retrieval for phase-diversity wavefront sensor. Sensors 20, 4877 (2020).

Allan, G., Kang, I., Douglas, E. S., Barbastathis, G. & Cahoy, K. Deep residual learning for low-order wavefront sensing in high-contrast imaging systems. Opt. Express 28, 26267 (2020).

Yanny, K., Monakhova, K., Shuai, R. W. & Waller, L. Deep learning for fast spatially varying deconvolution. Optica 9, 96 (2022).

Hu, Q. et al. Universal adaptive optics for microscopy through embedded neural network control. Light: Sci. Appl. 12, 270 (2023).

Lehtinen, J. et al. Noise2Noise: learning image restoration without clean data. In Proc. 35th International Conference on Machine Learning Vol. 80 (eds Dy, J. & Krause, A.) 2965–2974 (PMLR, 2018).

Krull, A., Buchholz, T.-O. & Jug, F. Noise2Void - learning denoising from single noisy images. In Proc. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2124–2132 (IEEE, 2019); https://doi.org/10.1109/CVPR.2019.00223

Platisa, J. et al. High-speed low-light in vivo two-photon voltage imaging of large neuronal populations. Nat. Methods 20, 1095–1103 (2023).

Li, X. et al. Real-time denoising enables high-sensitivity fluorescence time-lapse imaging beyond the shot-noise limit. Nat. Biotechnol. https://doi.org/10.1038/s41587-022-01450-8 (2022).

Eom, M. et al. Statistically unbiased prediction enables accurate denoising of voltage imaging data. Nat. Methods 20, 1581–1592 (2023).

Ren, D., Zhang, K., Wang, Q., Hu, Q. & Zuo, W. Neural blind deconvolution using deep priors. In Proc. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 3338–3347 (IEEE, 2020); https://doi.org/10.1109/CVPR42600.2020.00340

Wang, F. et al. Phase imaging with an untrained neural network. Light: Sci. Appl. 9, 77 (2020).

Bostan, E., Heckel, R., Chen, M., Kellman, M. & Waller, L. Deep phase decoder: self-calibrating phase microscopy with an untrained deep neural network. Optica 7, 559 (2020).

Kang, I. et al. Simultaneous spectral recovery and CMOS micro-LED holography with an untrained deep neural network. Optica 9, 1149 (2022).

Zhou, K. C. & Horstmeyer, R. Diffraction tomography with a deep image prior. Opt. Express 28, 12872 (2020).

Sun, Y., Liu, J., Xie, M., Wohlberg, B. & Kamilov, U. CoIL: coordinate-based internal learning for tomographic imaging. IEEE Trans. Comput. Imaging 7, 1400–1412 (2021).

Liu, R., Sun, Y., Zhu, J., Tian, L. & Kamilov, U. Recovery of continuous 3D refractive index maps from discrete intensity-only measurements using neural fields. Nat. Mach. Intell. 4, 781–791 (2022).

Kang, I. et al. Accelerated deep self-supervised ptycho-laminography for three-dimensional nanoscale imaging of integrated circuits. Optica 10, 1000–1008 (2023).

Chan, T. F. & Wong, C.-K. Total variation blind deconvolution. IEEE Trans. Image Process. 7, 370–375 (1998).

Levin, A., Weiss, Y., Durand, F. & Freeman, W. T. Understanding and evaluating blind deconvolution algorithms. In Proc. 2009 IEEE Conference on Computer Vision and Pattern Recognition 1964–1971 (IEEE, 2009); https://doi.org/10.1109/CVPR.2009.5206815

Perrone, D. & Favaro, P. Total variation blind deconvolution: the devil is in the details. In Proc. 2014 IEEE Conference on Computer Vision and Pattern Recognition 2909–2916 (IEEE, 2014); https://doi.org/10.1109/CVPR.2014.372

Jin, M., Roth, S. & Favaro, P. in Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science Vol. 11211 (eds Ferrari, V. et al.) 694–711 (Springer, 2018).

Hornik, K., Stinchcombe, M. & White, H. Multilayer feedforward networks are universal approximators. Neural Netw. 2, 359–366 (1989).

Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 2, 303–314 (1989).

Tewari, A. et al. Advances in neural rendering. In ACM SIGGRAPH 2021 Courses, 1–320 (Association for Computing Machinery, 2021).

Tancik, M. et al. in Advances in Neural Information Processing Systems Vol. 33 (eds Larochelle, H. et al.) 7537–7547 (Curran Associates, 2020).

Mildenhall, B. et al. NeRF: representing scenes as neural radiance fields for view synthesis. Commun. ACM 65, 99–106 (2022).

Perdigao, L., Shemilt, L. A. & Nord, N. rosalindfranklininstitute/RedLionfish v.0.9. Zenodo https://doi.org/10.5281/zenodo.7688291 (2023).

Richardson, W. H. Bayesian-based iterative method of image restoration. J. Opt. Soc. Am. 62, 55 (1972).

Lucy, L. B. An iterative technique for the rectification of observed distributions. Astron. J. 79, 745 (1974).

Sitzmann, V. et al. Scene representation networks: continuous 3D-structure-aware neural scene representations. In Proc. 33rd International Conference on Neural Information Processing Systems Vol. 32 (eds Wallach, H. et al.) 1121–1132 (Curran Associates, 2019).

Martel, J. N. P. et al. ACORN: adaptive coordinate networks for neural scene representation. ACM Trans. Graph. 40, 1–13 (2021).

Zhao, H., Gallo, O., Frosio, I. & Kautz, J. Loss functions for image restoration with neural networks. IEEE Trans. Comput. Imaging 3, 47–57 (2017).

Kang, I., Zhang, F. & Barbastathis, G. Phase extraction neural network (PhENN) with coherent modulation imaging (CMI) for phase retrieval at low photon counts. Opt. Express 28, 21578 (2020).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. Preprint at https://doi.org/10.48550/arXiv.1412.6980 (2017).

Paszke, A. et al. PyTorch: an imperative style, high-performance deep learning library. In Proc. 33rd International Conference on Neural Information Processing Systems (eds Wallach, H. M. et al.) 721 (Curran Associates, 2019).

Turcotte, R., Liang, Y. & Ji, N. Adaptive optical versus spherical aberration corrections for in vivo brain imaging. Biomed. Opt. Express 8, 3891–3902 (2017).

Kolouri, S., Park, S. R., Thorpe, M., Slepcev, D. & Rohde, G. K. Optimal mass transport: signal processing and machine-learning applications. IEEE Signal Process Mag. 34, 43–59 (2017).

Villani, C. Topics in Optimal Transportation Vol. 58 (American Mathematical Society, 2021).

Turcotte, R. et al. Dynamic super-resolution structured illumination imaging in the living brain. Proc. Natl Acad. Sci. USA 116, 9586–9591 (2019).

Li, Z. et al. Fast widefield imaging of neuronal structure and function with optical sectioning in vivo. Sci. Adv. 6, eaaz3870 (2020).

Zhang, Q., Pan, D. & Ji, N. High-resolution in vivo optical-sectioning widefield microendoscopy. Optica 7, 1287 (2020).

Zhao, Z. et al. Two-photon synthetic aperture microscopy for minimally invasive fast 3D imaging of native subcellular behaviors in deep tissue. Cell 186, 2475–2491.e22 (2023).

Wu, J. et al. Iterative tomography with digital adaptive optics permits hour-long intravital observation of 3D subcellular dynamics at millisecond scale. Cell 184, 3318–3332.e17 (2021).

Gerchberg, R. W. A practical algorithm for the determination of phase from image and diffraction plane pictures. Optik 35, 237–246 (1972).

Flamary, R. et al. POT: Python optimal transport. J. Mach. Learn. Res. 22, 1–8 (2021).

Holmes, T. J. et al. in Handbook of Biological Confocal Microscopy (ed. Pawley, J. B.) 389–402 (Springer, 1995).

Kang, I., Zhang, Q., Yu, S. & Ji, N. iksungk/CoCoA: Github CoCoA WF 1.0.0. Zenodo https://doi.org/10.5281/zenodo.10655781 (2024).

Acknowledgements

This work was supported by the Weill Neurohub (N.J.) and National Institutes of Health (U01NS118300) (I.K., Q.Z. and N.J.).

Author information

Contributions

I.K. and Q.Z. conceived of the project. N.J. supervised the project. I.K., Q.Z. and N.J. designed experiments. I.K. developed the CoCoA method with input from S.X.Y. Q.Z. prepared samples. Q.Z. and I.K. acquired data and prepared figures. I.K., Q.Z. and N.J. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Xi Chen and Jiamin Wu for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Note, Figs. 1–15 and Tables 1 and 2.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kang, I., Zhang, Q., Yu, S.X. et al. Coordinate-based neural representations for computational adaptive optics in widefield microscopy. Nat Mach Intell 6, 714–725 (2024). https://doi.org/10.1038/s42256-024-00853-3

DOI: https://doi.org/10.1038/s42256-024-00853-3

This article is cited by

- Materials and device strategies to enhance spatiotemporal resolution in bioelectronics. Nature Reviews Materials (2025)

- Single-shot reconstruction of three-dimensional morphology of biological cells in digital holographic microscopy using a physics-driven neural network. Nature Communications (2025)

- Fourier-based three-dimensional multistage transformer for aberration correction in multicellular specimens. Nature Methods (2025)

- Non-invasive and noise-robust light focusing using confocal wavefront shaping. Nature Communications (2024)