Abstract

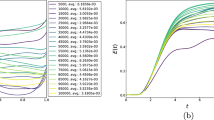

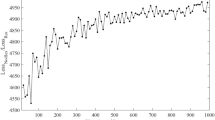

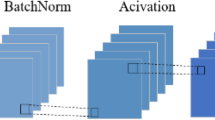

Neural networks suffer from spectral bias and have difficulty representing the high-frequency components of a function, whereas relaxation methods resolve high frequencies efficiently but stall at moderate to low frequencies. We exploit the complementary weaknesses of the two approaches by combining them to develop a fast numerical solver of partial differential equations (PDEs) at scale. Specifically, we propose HINTS, a hybrid, iterative, numerical and transferable solver that integrates a Deep Operator Network (DeepONet) with standard relaxation methods, leading to parallel efficiency and algorithmic scalability for a wide class of PDEs that are not tractable with existing monolithic solvers. HINTS balances the convergence behaviour across the spectrum of eigenmodes by utilizing the spectral bias of DeepONet, resulting in a uniform convergence rate and hence exceptional performance of the hybrid solver overall. Moreover, HINTS applies to large-scale, multidimensional systems; it is flexible with regard to discretizations, computational domain and boundary conditions; and it can also be used to precondition Krylov methods.
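The interplay described above can be illustrated with a minimal sketch on a 1D Poisson problem. This is not the authors' implementation. The trained DeepONet is replaced by a hypothetical stand-in, `surrogate_correction`, which approximately inverts the operator on the lowest few sine modes only and so mimics a spectrally biased network. The alternation schedule (`period`), the number of captured modes (`n_modes`) and the surrogate's relative error (`rel_error`) are illustrative assumptions.

```python
import math

def apply_poisson(u, h):
    """Apply the 1D finite-difference operator for -u'' with zero Dirichlet ends."""
    n = len(u)
    out = []
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        out.append((2.0 * u[i] - left - right) / (h * h))
    return out

def residual(u, f, h):
    return [fi - ai for fi, ai in zip(f, apply_poisson(u, h))]

def norm(v):
    return math.sqrt(sum(x * x for x in v))

def jacobi_step(u, f, h, omega=2.0 / 3.0):
    """One damped Jacobi sweep: damps high-frequency error quickly, low-frequency slowly."""
    r = residual(u, f, h)
    return [ui + omega * (h * h / 2.0) * ri for ui, ri in zip(u, r)]

def surrogate_correction(r, h, n_modes=8, rel_error=0.05):
    """Hypothetical stand-in for a trained DeepONet (an assumption of this sketch).

    It maps a residual to a correction that is accurate, up to rel_error, on the
    lowest n_modes discrete sine modes and zero on all higher modes.
    """
    n = len(r)
    corr = [0.0] * n
    for k in range(1, n_modes + 1):
        mode = [math.sin(k * math.pi * (j + 1) * h) for j in range(n)]
        # Projection coefficient; the discrete sine modes have squared norm 1 / (2h).
        coeff = 2.0 * h * sum(m * ri for m, ri in zip(mode, r))
        eigenvalue = (4.0 / (h * h)) * math.sin(0.5 * k * math.pi * h) ** 2
        scale = (1.0 - rel_error) * coeff / eigenvalue
        for j in range(n):
            corr[j] += scale * mode[j]
    return corr

def solve(f, h, n_iters, period=None):
    """Run n_iters iterations; if period is set, every period-th step uses the surrogate."""
    u = [0.0] * len(f)
    for it in range(1, n_iters + 1):
        if period and it % period == 0:
            corr = surrogate_correction(residual(u, f, h), h)
            u = [ui + ci for ui, ci in zip(u, corr)]
        else:
            u = jacobi_step(u, f, h)
    return norm(residual(u, f, h))

# Right-hand side mixing one low-frequency and one high-frequency mode.
n = 63
h = 1.0 / (n + 1)
f = [math.sin(math.pi * (j + 1) * h) + math.sin(40 * math.pi * (j + 1) * h)
     for j in range(n)]

res_jacobi = solve(f, h, n_iters=200)             # relaxation only
res_hybrid = solve(f, h, n_iters=200, period=10)  # relaxation plus surrogate
print("Jacobi only residual norm:", res_jacobi)
print("Hybrid residual norm:", res_hybrid)
```

In this toy setting the relaxation sweeps remove the high-frequency mode within a few iterations, while the low-frequency mode barely decays unless the surrogate step is interleaved. A real DeepONet would be trained offline and would not have access to exact eigenmodes.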

Data availability

The datasets used in the paper are generated using the provided code (see Supplementary Section 2.1 for parametric details). The dataset for generating the large-scale results (Helmholtz-annular cylinder example) has been uploaded to the open-source Zenodo repository and can be freely accessed at https://doi.org/10.5281/zenodo.10904349 (ref. 92).

Code availability

The code used for generating all numerical experiments is publicly available via GitHub at https://github.com/kopanicakova/HINTS_precond (ref. 80) and Zenodo at https://doi.org/10.5281/zenodo.13321073 (ref. 93).

References

Chapra, S. C. et al. Numerical Methods for Engineers Vol. 1221 (McGraw-Hill, 2011).

Mathews, J. H. Numerical Methods for Mathematics, Science and Engineering Vol. 10 (Prentice-Hall International, 1992).

Bodenheimer, P. Numerical Methods in Astrophysics: An Introduction (CRC Press, 2006).

Feit, M., Fleck Jr, J. & Steiger, A. Solution of the Schrödinger equation by a spectral method. J. Comput. Phys. 47, 412–433 (1982).

Patera, A. T. A spectral element method for fluid dynamics: laminar flow in a channel expansion. J. Comput. Phys. 54, 468–488 (1984).

Kim, J., Moin, P. & Moser, R. Turbulence statistics in fully developed channel flow at low Reynolds number. J. Fluid Mech. 177, 133–166 (1987).

Cockburn, B., Karniadakis, G. E. & Shu, C.-W. Discontinuous Galerkin Methods: Theory, Computation and Applications Vol. 11 (Springer, 2012).

Hughes, T. J. The Finite Element Method: Linear Static and Dynamic Finite Element Analysis (Courier Corporation, 2012).

Simo, J. C. & Hughes, T. J. Computational Inelasticity Vol. 7 (Springer, 2006).

Hughes, T. J., Cottrell, J. A. & Bazilevs, Y. Isogeometric analysis: CAD, finite elements, NURBS, exact geometry and mesh refinement. Comput. Methods Appl. Mech. Eng. 194, 4135–4195 (2005).

Jing, L. & Hudson, J. Numerical methods in rock mechanics. Int. J. Rock Mech. Mining Sci. 39, 409–427 (2002).

Rappaz, M., Bellet, M., Deville, M. O. & Snyder, R. Numerical Modeling in Materials Science and Engineering (Springer, 2003).

Kong, J. A., Tsang, L., Ding, K.-H. & Ao, C. O. Scattering of Electromagnetic Waves: Numerical Simulations (John Wiley & Sons, 2004).

Strikwerda, J. C. Finite Difference Schemes and Partial Differential Equations (SIAM, 2004).

Bathe, K.-J. Finite Element Procedures (Klaus-Jurgen Bathe, 2006).

Karniadakis, G. E. & Sherwin, S. Spectral/HP Element Methods for Computational Fluid Dynamics (Oxford Univ. Press, 2005).

Burden, R. L., Faires, J. D. & Burden, A. M. Numerical Analysis (Cengage Learning, 2015).

Van der Vorst, H. A. Iterative Krylov Methods for Large Linear Systems Vol. 13 (Cambridge Univ. Press, 2003).

Mathew, T. Domain Decomposition Methods for the Numerical Solution of Partial Differential Equations Vol. 61 (Springer, 2008).

Xu, J. Iterative methods by space decomposition and subspace correction. SIAM Rev. 34, 581–613 (1992).

Greenbaum, A. Iterative Methods for Solving Linear Systems (SIAM, 1997).

Olshanskii, M. A. & Tyrtyshnikov, E. E. Iterative Methods for Linear Systems: Theory and Applications (SIAM, 2014).

Saad, Y. Iterative Methods for Sparse Linear Systems (SIAM, 2003).

Knoll, D. A. & Keyes, D. E. Jacobian-free Newton–Krylov methods: a survey of approaches and applications. J. Comput. Phys. 193, 357–397 (2004).

Ciaramella, G. & Gander, M. J. Iterative Methods and Preconditioners for Systems of Linear Equations (SIAM, 2022).

Gander, M. J., Lunet, T., Ruprecht, D. & Speck, R. A unified analysis framework for iterative parallel-in-time algorithms. SIAM J. Sci. Comput. 45, A2275–A2303 (2023).

Briggs, W. L., Henson, V. E. & McCormick, S. F. A Multigrid Tutorial (SIAM, 2000).

Hackbusch, W. Multi-Grid Methods and Applications Vol. 4 (Springer, 2013).

Bramble, J. H. Multigrid Methods (Chapman and Hall, CRC, 2019).

Shapira, Y. Matrix-Based Multigrid: Theory and Applications (Springer, 2008).

AlOnazi, A., Markomanolis, G. S. & Keyes, D. Asynchronous task-based parallelization of algebraic multigrid. In Proc. Platform for Advanced Scientific Computing Conference 1–11 (ACM, 2017).

Berger-Vergiat, L., Waisman, H., Hiriyur, B., Tuminaro, R. & Keyes, D. Inexact Schwarz-algebraic multigrid preconditioners for crack problems modeled by extended finite element methods. Int. J. Num. Methods Eng. 90, 311–328 (2012).

Hiriyur, B., Tuminaro, R. S., Waisman, H., Boman, E. G. & Keyes, D. A quasi-algebraic multigrid approach to fracture problems based on extended finite elements. SIAM J. Sci. Comput. 34, A603–A626 (2012).

Bramble, J. H., Pasciak, J. E. & Xu, J. Parallel multilevel preconditioners. Math. Comput. 55, 1–22 (1990).

Lu, L., Jin, P., Pang, G., Zhang, Z. & Karniadakis, G. E. Learning nonlinear operators via DeepONet based on the universal approximation theorem of operators. Nat. Mach. Intell. 3, 218–229 (2021).

Li, Z. et al. Fourier neural operator for parametric partial differential equations. Preprint at https://arxiv.org/abs/2010.08895 (2020).

Kissas, G. et al. Learning operators with coupled attention. J. Mach. Learn. Res. 23, 215 (2022).

Patel, R. G., Trask, N. A., Wood, M. A. & Cyr, E. C. A physics-informed operator regression framework for extracting data-driven continuum models. Comput. Methods Appl. Mech. Eng. 373, 113500 (2021).

Chen, T. & Chen, H. Universal approximation to nonlinear operators by neural networks with arbitrary activation functions and its application to dynamical systems. IEEE Trans. Neural Netw. 6, 911–917 (1995).

Lu, L. et al. A comprehensive and fair comparison of two neural operators (with practical extensions) based on FAIR data. Comput. Methods Appl. Mech. Eng. 393, 114778 (2022).

Lin, C. et al. Operator learning for predicting multiscale bubble growth dynamics. J. Chem. Phys. 154, 104118 (2021).

Cai, S., Wang, Z., Lu, L., Zaki, T. A. & Karniadakis, G. E. DeepM&Mnet: inferring the electroconvection multiphysics fields based on operator approximation by neural networks. J. Comput. Phys. 436, 110296 (2021).

Di Leoni, P. C., Lu, L., Meneveau, C., Karniadakis, G. & Zaki, T. A. DeepONet prediction of linear instability waves in high-speed boundary layers. J. Comp. Phys. 474, 111793 (2023).

Goswami, S., Yin, M., Yu, Y. & Karniadakis, G. E. A physics-informed variational DeepONet for predicting crack path in quasi-brittle materials. Comput. Methods Appl. Mech. Eng. 391, 114587 (2022).

Yin, M. et al. Simulating progressive intramural damage leading to aortic dissection using DeepONet: an operator–regression neural network. J. R. Soc. Interface 19, 20210670 (2022).

Yin, M., Zhang, E., Yu, Y. & Karniadakis, G. E. Interfacing finite elements with deep neural operators for fast multiscale modeling of mechanics problems. Comput. Methods Appl. Mech. Eng. 402, 115027 (2022).

Oommen, V., Shukla, K., Goswami, S., Dingreville, R. & Karniadakis, G. E. Learning two-phase microstructure evolution using neural operators and autoencoder architectures. npj Comput. Mater. 8, 1–13 (2022).

Goswami, S., Kontolati, K., Shields, M. D. & Karniadakis, G. E. Deep transfer learning for partial differential equations under conditional shift with DeepONet. Nat. Mach. Intell. 4, 1155–1164 (2022).

Zhang, E., Spronck, B., Humphrey, J. D. & Karniadakis, G. E. G2Φnet: relating genotype and biomechanical phenotype of tissues with deep learning. PLoS Comput. Biol. https://doi.org/10.1371/journal.pcbi.1010660 (2022).

Raissi, M., Perdikaris, P. & Karniadakis, G. E. Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 378, 686–707 (2019).

Raissi, M., Yazdani, A. & Karniadakis, G. E. Hidden fluid mechanics: learning velocity and pressure fields from flow visualizations. Science 367, 1026–1030 (2020).

Cai, S., Mao, Z., Wang, Z., Yin, M. & Karniadakis, G. E. Physics-informed neural networks (PINNs) for fluid mechanics: a review. Acta Mechanica Sinica 37, 1727–1738 (2022).

Zhang, E., Dao, M., Karniadakis, G. E. & Suresh, S. Analyses of internal structures and defects in materials using physics-informed neural networks. Sci. Adv. 8, eabk0644 (2022).

Zhang, E., Yin, M. & Karniadakis, G. E. Physics-informed neural networks for nonhomogeneous material identification in elasticity imaging. Preprint at https://arxiv.org/abs/2009.04525 (2020).

Daneker, M., Zhang, Z., Karniadakis, G. E. & Lu, L. in Computational Modeling of Signaling Networks (ed. Nguyn, L.) Vol. 2634, 87–105 (Springer, 2022).

Chen, Y., Lu, L., Karniadakis, G. E. & Dal Negro, L. Physics-informed neural networks for inverse problems in nano-optics and metamaterials. Opt. Express 28, 11618–11633 (2020).

Wang, S., Wang, H. & Perdikaris, P. Learning the solution operator of parametric partial differential equations with physics-informed DeepONets. Sci. Adv. 7, eabi8605 (2021).

Sirignano, J. & Spiliopoulos, K. DGM: a deep learning algorithm for solving partial differential equations. J. Comput. Phys. 375, 1339–1364 (2018).

Pfaff, T., Fortunato, M., Sanchez-Gonzalez, A. & Battaglia, P. W. Learning mesh-based simulation with graph networks. Preprint at https://arxiv.org/abs/2010.03409 (2021).

Xue, T., Beatson, A., Adriaenssens, S. & Adams, R. Amortized finite element analysis for fast pde-constrained optimization. In International Conference on Machine Learning 10638–10647 (PMLR, 2020).

Kochkov, D. et al. Machine learning–accelerated computational fluid dynamics. Proc. Natl Acad. Sci. USA 118, e2101784118 (2021).

Long, Z., Lu, Y., Ma, X. & Dong, B. PDE-Net: Learning PDEs from data. In International Conference on Machine Learning 3208–3216 (PMLR, 2018).

Kahana, A., Turkel, E., Dekel, S. & Givoli, D. Obstacle segmentation based on the wave equation and deep learning. J. Comput. Phys. 413, 109458 (2020).

Ovadia, O., Kahana, A., Turkel, E. & Dekel, S. Beyond the Courant–Friedrichs–Lewy condition: numerical methods for the wave problem using deep learning. J. Comput. Phys. 442, 110493 (2021).

Tompson, J., Schlachter, K., Sprechmann, P. & Perlin, K. Accelerating Eulerian fluid simulation with convolutional networks. In International Conference on Machine Learning 3424–3433 (PMLR, 2017).

Um, K., Brand, R., Fei, Y. R., Holl, P. & Thuerey, N. Solver-in-the-loop: learning from differentiable physics to interact with iterative PDE-solvers. In 34th Conference on Neural Information Processing Systems Vol. 33, 6111–6122 (NeurIPS, 2020).

Hsieh, J.-T., Zhao, S., Eismann, S., Mirabella, L. & Ermon, S. Learning neural PDE solvers with convergence guarantees. Preprint at https://arxiv.org/abs/1906.01200 (2019).

He, J. & Xu, J. MgNet: a unified framework of multigrid and convolutional neural network. Sci. China Math. 62, 1331–1354 (2019).

Chen, Y., Dong, B. & Xu, J. Meta-MgNet: meta multigrid networks for solving parameterized partial differential equations. J. Comput. Phys. 455, 110996 (2022).

Huang, J., Wang, H. & Yang, H. Int-deep: a deep learning initialized iterative method for nonlinear problems. J. Comput. Phys. 419, 109675 (2020).

Zhang, Z., Wang, Y., Jimack, P. K. & Wang, H. MeshingNet: a new mesh generation method based on deep learning. In International Conference on Computational Science 186–198 (Springer, 2020).

Kato, H., Ushiku, Y. & Harada, T. Neural 3D mesh renderer. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 3907–3916 (IEEE, 2018).

Bar-Sinai, Y., Hoyer, S., Hickey, J. & Brenner, M. P. Learning data-driven discretizations for partial differential equations. Proc. Natl. Acad. Sci. USA 116, 15344–15349 (2019).

Luz, I., Galun, M., Maron, H., Basri, R. & Yavneh, I. Learning algebraic multigrid using graph neural networks. In International Conference on Machine Learning 6489–6499 (PMLR, 2020).

Greenfeld, D., Galun, M., Basri, R., Yavneh, I. & Kimmel, R. Learning to optimize multigrid PDE solvers. In International Conference on Machine Learning 2415–2423 (PMLR, 2019).

Azulay, Y. & Treister, E. Multigrid-augmented deep learning preconditioners for the Helmholtz equation. J. Sci. Comput. 45, S127–S151 (2022).

Elman, H. C., Ernst, O. G. & O’Leary, D. P. A multigrid method enhanced by Krylov subspace iteration for discrete Helmholtz equations. SIAM J. Sci. Comput. 23, 1291–1315 (2001).

Balay, S. et al. PETSc Users Manual (PETSc, 2019).

Kopaničáková, A. & Karniadakis, G. E. DeepONet based preconditioning strategies for solving parametric linear systems of equations. Preprint at https://arxiv.org/abs/2401.02016 (2024).

Zhang, E. et al. kopanicakova/HINTS_precond. GitHub https://github.com/kopanicakova/HINTS_precond (2024).

Saad, Y. & Schultz, M. H. GMRES: a generalized minimal residual algorithm for solving nonsymmetric linear systems. SIAM J. Sci. Stat. Comput. 7, 856–869 (1986).

Saad, Y. A flexible inner-outer preconditioned GMRES algorithm. SIAM J. Sci. Comput. 14, 461–469 (1993).

Falgout, R. D. & Yang, U. M. hypre: A library of high performance preconditioners. In International Conference on Computational Science 632–641 (Springer, 2002).

Olson, L. N. & Schroder, J. B. Smoothed aggregation for Helmholtz problems. Num. Linear Algebra with Appl. 17, 361–386 (2010).

Rathgeber, F. et al. Firedrake: automating the finite element method by composing abstractions. ACM Trans. Math. Software 43, 24 (2016).

Farrell, P. E., Piggott, M. D., Pain, C. C., Gorman, G. J. & Wilson, C. R. Conservative interpolation between unstructured meshes via supermesh construction. Comput. Methods Appl. Mech. Eng. 198, 2632–2642 (2009).

Krause, R. & Zulian, P. A parallel approach to the variational transfer of discrete fields between arbitrarily distributed unstructured finite element meshes. SIAM J. Sci. Comput. 38, C307–C333 (2016).

Kontolati, K., Goswami, S., Karniadakis, G. E. & Shields, M. D. Learning nonlinear operators in latent spaces for real-time predictions of complex dynamics in physical systems. Nat. Commun. 15, 5101 (2024).

Ovadia, O., Turkel, E., Kahana, A. & Karniadakis, G. E. Ditto: diffusion-inspired temporal transformer operator. Preprint at https://arxiv.org/abs/2307.09072 (2023).

Kahana, A. et al. On the geometry transferability of the hybrid iterative numerical solver for differential equations. Comput. Mech. 72, 471–484 (2023).

Rahaman, N. et al. On the spectral bias of neural networks. In International Conference on Machine Learning 5301–5310 (PMLR, 2019).

Kopaničáková, A. NonNestedHelmholtz3DAnnularCylinde. Zenodo https://doi.org/10.5281/zenodo.10904349 (2024).

Kopaničáková, A. kopanicakova/HINTS_precond: v0.0.3. Zenodo https://doi.org/10.5281/zenodo.13321073 (2024).

Acknowledgements

This work is supported by the DOE PhILMs (grant no. DE-SC0019453) and MURI-AFOSR (grant no. FA9550-20-1-0358) projects. G.E.K. is supported by the ONR Vannevar Bush Faculty Fellowship (grant no. N00014-22-1-2795). A. Kopaničáková acknowledges the support of the Swiss National Science Foundation (SNF) through the ‘Multilevel training of DeepONets—multiscale and multiphysics applications’ project (grant no. 206745).

Author information

Contributions

G.E.K., J.P. and E.T. designed the study and supervised the project. E.Z. and A. Kahana developed the method, implemented the computer code and performed computations. A. Kopaničáková developed the PETSc HINTS code, extended the HINTS methodology to preconditioning settings, and designed and performed large-scale experiments. All authors analysed the results and contributed to the writing and revising of the manuscript.

Ethics declarations

Competing interests

G.E.K. holds a small equity in Analytica, a private startup company developing AI software products for engineering. He provides technical advice on the direction of machine learning to Analytica. Analytica has licensed IP from his research related to Physics-Informed Neural Networks. A. Kahana and G.E.K. are the founders of Phinyx AI, a private startup company developing AI software products for engineering. The remaining authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Stefano Markidis and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Supplementary information

The Supplementary Information provides comprehensive algorithmic details, additional numerical experiments and an in-depth description of the experiments presented in the main text.

About this article

Cite this article

Zhang, E., Kahana, A., Kopaničáková, A. et al. Blending neural operators and relaxation methods in PDE numerical solvers. Nat Mach Intell 6, 1303–1313 (2024). https://doi.org/10.1038/s42256-024-00910-x

DOI: https://doi.org/10.1038/s42256-024-00910-x
