Abstract

Automated detection of tumour lesions on positron emission tomography–computed tomography (PET/CT) image data is a clinically relevant but highly challenging task. Progress in this field has been hampered in the past owing to the lack of publicly available annotated data and limited availability of platforms for inter-institutional collaboration. Here we describe the results of the autoPET challenge, a biomedical image analysis challenge aimed to motivate research in the field of automated PET/CT image analysis. The challenge task was the automated segmentation of metabolically active tumour lesions on whole-body 18F-fluorodeoxyglucose PET/CT. Challenge participants had access to a large publicly available annotated PET/CT dataset for algorithm training. All algorithms submitted to the final challenge phase were based on deep learning methods, mostly using three-dimensional U-Net architectures. Submitted algorithms were evaluated on a private test set composed of 150 PET/CT studies from two institutions. An ensemble model of the highest-ranking algorithms achieved favourable performance compared with individual algorithms. Algorithm performance was dependent on the quality and quantity of data and on algorithm design choices, such as tailored post-processing of predicted segmentations. Future iterations of this challenge will focus on generalization and clinical translation.

This is a preview of subscription content, access via your institution

Access options

Access Nature and 54 other Nature Portfolio journals

Get Nature+, our best-value online-access subscription

$32.99 / 30 days

cancel any time

Subscribe to this journal

Receive 12 digital issues and online access to articles

$119.00 per year

only $9.92 per issue

Buy this article

- Purchase on SpringerLink

- Instant access to the full article PDF.

USD 39.95

Prices may be subject to local taxes which are calculated during checkout

Similar content being viewed by others

Data availability

The training data used in this challenge, including manual annotations of tumour lesions, are publicly available on TCIA11 (https://www.cancerimagingarchive.net/collection/fdg-pet-ct-lesions/, https://doi.org/10.7937/gkr0-xv29). Test data cannot be shared publicly as they will be part of the private test dataset of future iterations of the autoPET challenge. Analyses using the private test data can be performed if they do not interfere with the challenge execution (after completion of the autoPET challenge series). Data can be requested by contacting the challenge organizing team as listed on https://autopet.grand-challenge.org/Organization/.

Code availability

All code used for data processing and performance analysis as part of this challenge, including a trained baseline model, is publicly available via GitHub at https://github.com/lab-midas/autoPET (ref. 37) under the MIT licence.

References

Antonelli, M. et al. The Medical Segmentation Decathlon. Nat. Commun. 13, 4128 (2022).

Menze, B. H. et al. The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Trans. Med. Imaging 34, 1993–2024 (2015).

Halabi, S. S. et al. The RSNA Pediatric Bone Age Machine Learning Challenge. Radiology 290, 498–503 (2019).

Weisman, A. J. et al. Comparison of 11 automated PET segmentation methods in lymphoma. Phys. Med. Biol. 65, 235019 (2020).

Groendahl, A. R. et al. A comparison of fully automatic segmentation of tumors and involved nodes in PET/CT of head and neck cancers. Phys. Med. Biol. https://doi.org/10.1088/1361-6560/abe553 (2021).

Capobianco, N. et al. Deep-learning 18F-FDG uptake classification enables total metabolic tumor volume estimation in diffuse large B-cell lymphoma. J. Nucl. Med. 62, 30–36 (2021).

Oreiller, V. et al. Head and neck tumor segmentation in PET/CT: the HECKTOR challenge. Med. Image Anal. 77, 102336 (2022).

Chardin, D. et al. Baseline metabolic tumor volume as a strong predictive and prognostic biomarker in patients with non-small cell lung cancer treated with PD1 inhibitors: a prospective study. J. Immunother. Cancer 8, e000645 (2020).

Bradley, J. et al. Impact of FDG-PET on radiation therapy volume delineation in non-small-cell lung cancer. Int. J. Radiat. Oncol. Biol. Phys. 59, 78–86 (2004).

Unterrainer, M. et al. Recent advances of PET imaging in clinical radiation oncology. Radiat. Oncol. 15, 88 (2020).

Gatidis, S. & Kuestner, T. FDG-PET-CT-Lesions—A whole-body FDG-PET/CT dataset with manually annotated tumor lesions. TCIA https://www.cancerimagingarchive.net/collection/fdg-pet-ct-lesions/ (2022).

Gatidis, S. et al. A whole-body FDG-PET/CT dataset with manually annotated tumor lesions. Sci. Data 9, 601 (2022).

Maier-Hein, L. et al. BIAS: transparent reporting of biomedical image analysis challenges. Med. Image Anal. 66, 101796 (2020).

Ma, J. et al. Loss odyssey in medical image segmentation. Med. Image Anal. 71, 102035 (2021).

Lin, T.-Y., Goyal, P., Girshick, R., He, K. & Dollár, P. Focal loss for dense object detection. Preprint at https://ui.adsabs.harvard.edu/abs/2017arXiv170802002L (2017).

Wu, Z., Shen, C. & van den Hengel, A. Bridging category-level and instance-level semantic image segmentation. Preprint at https://ui.adsabs.harvard.edu/abs/2016arXiv160506885W (2016).

Berman, M., Rannen Triki, A. & Blaschko, M. B. The Lovász-Softmax loss: a tractable surrogate for the optimization of the intersection-over-union measure in neural networks. Preprint at https://ui.adsabs.harvard.edu/abs/2017arXiv170508790B (2017).

Sadegh Mohseni Salehi, S., Erdogmus, D. & Gholipour, A. Tversky loss function for image segmentation using 3D fully convolutional deep networks. Preprint at https://ui.adsabs.harvard.edu/abs/2017arXiv170605721S (2017).

Isensee, F., Jaeger, P. F., Kohl, S. A. A., Petersen, J. & Maier-Hein, K. H. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 18, 203–211 (2021).

Cardoso, M. J. et al. MONAI: an open-source framework for deep learning in healthcare. Preprint at https://ui.adsabs.harvard.edu/abs/2022arXiv221102701C (2022).

Barrington, S. F. & Meignan, M. Time to prepare for risk adaptation in lymphoma by standardizing measurement of metabolic tumor burden. J. Nucl. Med. 60, 1096–1102 (2019).

Erickson, N. et al. AutoGluon-Tabular: robust and accurate AutoML for structured data. Preprint at https://ui.adsabs.harvard.edu/abs/2003.06505 (2020).

Dagogo-Jack, I. & Shaw, A. T. Tumour heterogeneity and resistance to cancer therapies. Nat. Rev. Clin. Oncol. 15, 81–94 (2018).

Gatidis, S., Küstner, T., Ingrisch, M., Fabritius, M. & Cyran, C. Automated lesion segmentation in whole-body FDG-PET/CT. Zenodo https://zenodo.org/records/7845727 (2022).

Rosenfeld, A. & Pfaltz, J. L. Sequential operations in digital picture processing. J. ACM 13, 471–494 (1966).

Maier-Hein, L. et al. Metrics reloaded: recommendations for image analysis validation. Nat. Methods 21, 195–212 (2024).

Ye, J. et al. Exploring Vanilla U-Net for lesion segmentation from whole-body FDG-PET/CT scans. Preprint at https://ui.adsabs.harvard.edu/abs/2022arXiv221007490Y (2022).

Peng, Y., Kim, J., Feng, D. & Bi, L. Automatic tumor segmentation via false positive reduction network for whole-body multi-modal PET/CT images. Preprint at https://ui.adsabs.harvard.edu/abs/2022arXiv220907705P (2022).

Ma, J. & Wang, B. nnU-Net for automated lesion segmentation in whole-body FDG-PET/CT. GitHub https://github.com/JunMa11/PETCTSeg/blob/main/technical_report.pdf (2022).

Zhang, J., Huang, Y., Zhang, Z. & Shi, Y. Whole-body lesion segmentation in 18F-FDG PET/CT. Preprint at https://ui.adsabs.harvard.edu/abs/2022arXiv220907851Z (2022).

Heiliger, L. et al. AutoPET challenge: combining nn-Unet with Swin UNETR augmented by maximum intensity projection classifier. Preprint at https://ui.adsabs.harvard.edu/abs/2022arXiv220901112H (2022).

Sibille, L., Zhan, X. & Xiang, L. Whole-body tumor segmentation of 18F-FDG PET/CT using a cascaded and ensembled convolutional neural networks. Preprint at https://ui.adsabs.harvard.edu/abs/2022arXiv221008068S (2022).

Bendazzoli, S. & Astaraki, M. PriorNet: lesion segmentation in PET-CT including prior tumor appearance information. Preprint at https://ui.adsabs.harvard.edu/abs/2022arXiv221002203B (2022).

Wiesenfarth, M. et al. Methods and open-source toolkit for analyzing and visualizing challenge results. Sci. Rep. 11, 2369 (2021).

Ross, T. et al. Beyond rankings: learning (more) from algorithm validation. Med. Image Anal. 86, 102765 (2023).

Sundar, L. K. S. et al. Fully automated, semantic segmentation of whole-body 18F-FDG PET/CT images based on data-centric artificial intelligence. J. Nucl. Med. 63, 1941–1948 (2022).

Gatidis, S. & Küstner, T. AutoPET Challenge 2022 code repository. Zenodo https://doi.org/10.5281/zenodo.13119561 (2020).

Acknowledgements

This project was partly supported by the Leuze Foundation, Owen/Teck, Germany (S.G.). This project was conducted under Germany’s Excellence Strategy–EXC-Number 2064/1–390727645 and EXC 2180/1-390900677 (S.G. and T.K.). This study is part of the doctoral thesis of Alexandra Kubičkova.

Author information

Authors and Affiliations

Contributions

S. Gatidis, M.F., S. Gu, M.P.F., M.I., C.C.C. and T.K.: organization of the challenge, preparation of training and test data, contribution of software, data analysis, and drafting of the paper. K.N. and C.L.F.: scientific and clinical consultation during challenge preparation, data analysis, and critical revision of the paper. J.Y., J.H., Y.P., L.B., J.M., B.W., J.Z., Y.H., L.H., Z.M., J.K., R.S., J.E., L.S., L.X., S.B. and M.A.: members of the best-performing participating teams, contribution of software, participation in drafting and critical revision of the paper.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing financial or non-financial interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Masatoshi Hotta and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Quantitative comparison of training data (top) and test data (bottom).

Training data were drawn from UKT (blue), test data partially from UKT (blue) and partially from LMU (green). As shown in the histogram plots, the distribution of age, mean Standardized Uptake Value (SUV) of lesions and Metabolic Tumor Volume (MTV) of lesions was similar between training and test data and between the two data sources (UKT and LMU).

Extended Data Fig. 2 Example of a complete dataset.

Computed tomography (CT) volumes, CT-based organ segmentation masks and Fluorodeoxyglucose Positron Emission Tomography (FDG-PET/CT) volumes (or subsets of these volumes) were used as input data. Metabolically active tumor lesions were segmented manually (colored in blue) and automatically (colored in red). This particular dataset is drawn from the UKT test set and shows excellent alignment between manual lesion segmentation and automated lesion segmentation using an nnU-Net baseline with CT and FDG-PET as input.

Extended Data Fig. 3 Results of ranking robustness analysis using a bootstrap approach.

The median rank order is largely concordant with the final challenge result indicating overall robustness of the challenge outcome regarding sampling from the challenge dataset.

Extended Data Fig. 4 Association of PET/CT image properties with evaluation metrics.

Left column: Mixed effects analysis revealed a positive association between the Dice score and the mean lesion volume (p = 0.0002) and the Dice score and the lesion count (p = 0.008). Middle column: Mixed effects analysis revealed a positive association between the BMI and the false positive volume (p = 0.006) and a negative association between the lesion count and the false positive volume (p = 0.008). Right Column: Mixed effect analysis revealed a negative association between mean lesion volume and false negative volume (p = 0.01). These findings indicate that the detection of small lesions was challenging for submitted algorithms and that patient-related factors such as BMI may have an impact on overall algorithm performance. Mixed effects analysis was performed in Python using the Statsmodels module (Version 0.14.0) based on a two-tailed Wald test. P-values are corrected for multiple testing (Bonferroni correction).

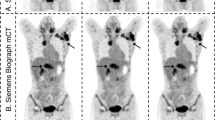

Extended Data Fig. 5 Typical PET image examples of patients with high BMI (left) and low BMI (right).

Arrows I and II demonstrate metabolically active tumor lesions (blue = manual segmentation, red = automated segmentation using the nnU-Net basline). Arrow II demonstrates typical false-positive automated segmentation of the myocardium.

Extended Data Fig. 6 Typical PET image examples of studies with small (left) and high (right) average lesion volume (ground truth segmentation in blue, automated segmentation in red).

Arrows (I) indicate small lesions that were missed by automated segmentation using the nnU-Net baseline. Overall, smaller lesions tended to be missed by submitted algorithms more often than larger lesions.

Extended Data Fig. 7 Algorithm performance of final leaderboard submissions with respect to the metrics (from top to bottom) overlapping Dice score, normalized surface dice, panoptic quality metric and per lesion sensitivity.

Overall, the team ranking with respect to these scores varied but was similar to the challenge leaderboard ranking. Importantly, the ensemble model again performs favorably for these metrics compared to individual algorithms.

Extended Data Fig. 8 Per lesion sensitivity depending on lesion volume and lesion tracer uptake (mean SUV).

Lesions were grouped by size (left) or mean SUV (right) into inter-decile ranges and the respective sensitivities were computed across all final leaderboard algorithms. Smaller lesions and lesions with very low tracer uptake were more often missed compared to larger lesions and lesions with higher tracer uptake. A total number of 680 distinct lesions were present (68 lesions per decile interval). Box plots represent the mean (horizontal line) and inter-quartile range (IQR) (boxes), as well as the range (whiskers) of datapoints; outliers (outside 1.5 times IQR of the upper or lower quartiles) are represented as diamonds.

Extended Data Fig. 9 Inter-reader Dice score versus best performing algorithm (ensemble) Dice score on a subset of challenge samples.

Overall, quantitative metrics suggest similar performance of the second reader compared to the ensemble model. FP volume = false positive volume, FN volume = false negative volume, NSD = Normalized Surface Dice.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Gatidis, S., Früh, M., Fabritius, M.P. et al. Results from the autoPET challenge on fully automated lesion segmentation in oncologic PET/CT imaging. Nat Mach Intell 6, 1396–1405 (2024). https://doi.org/10.1038/s42256-024-00912-9

Received:

Accepted:

Published:

Version of record:

Issue date:

DOI: https://doi.org/10.1038/s42256-024-00912-9

This article is cited by

-

Fully automated volumetric assessment of tumor burden using artificial intelligence on 68Ga-PSMA-11 PET predicts survival after 177Lu-PSMA therapy in metastatic Castration-resistant prostate cancer

European Journal of Nuclear Medicine and Molecular Imaging (2026)

-

Global and regional accuracy of deep learning-based tumor segmentation from whole-body [18F]fluorodeoxyglucose PET/CT images

EJNMMI Research (2025)

-

Whole-Body [18F]FDG-PET/CT Imaging of Healthy Controls: Test/Retest Data for Systemic, Multi-Organ Analysis

Scientific Data (2025)

-

Artificial intelligence-enabled opportunistic identification of immune checkpoint inhibitor-related adverse events using [18F]FDG PET/CT

European Journal of Nuclear Medicine and Molecular Imaging (2025)

-

Artificial intelligence & nuclear medicine: an emerging partnership

Clinical and Translational Imaging (2025)