Abstract

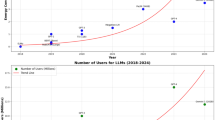

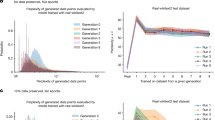

Data compression is a fundamental technology that enables efficient storage and transmission of information. However, traditional compression methods are approaching their theoretical limits after 80 years of research and development. At the same time, large artificial intelligence models have emerged, which, trained on vast amounts of data, are able to ‘understand’ various semantics. Intuitively, semantics conveys the meaning of data concisely, so large models hold the potential to revolutionize compression technology. Here we present LMCompress, a new method that leverages large models to compress data. LMCompress shatters all previous lossless compression records on four media types: text, images, video and audio. It halves the compression rates of JPEG-XL for images, FLAC for audio and H.264 for video, and it achieves nearly one-third of the compression rates of zpaq for text. Our results demonstrate that the better a model understands the data, the more effectively it can compress it, suggesting a deep connection between understanding and compression.
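The abstract's closing claim, that a model which understands data better compresses it better, rests on a standard information-theoretic fact (Shannon, ref. 6). If a model assigns probability p(x_i | x_<i) to each next symbol, an arithmetic coder driven by that model can encode the whole input in about the sum of -log2 p(x_i | x_<i) bits, within roughly two bits. The Python sketch below illustrates only that principle and is not LMCompress. The three byte-level predictors (`uniform_model`, `order0_model`, `order1_model`) are hypothetical toy stand-ins for a large model. The code computes ideal code lengths instead of running a real arithmetic coder. "Compression rate" is taken to mean compressed size divided by original size, so lower is better.

```python
import math
from collections import Counter
from typing import Callable, Sequence

# Hypothetical interface: a "model" maps the bytes seen so far to a
# probability for each of the 256 possible next byte values.
Model = Callable[[bytes], Sequence[float]]


def ideal_code_length_bits(data: bytes, model: Model) -> float:
    """Sum of -log2 p(x_i | x_<i) over the input.

    An arithmetic coder driven by `model` gets within about two bits of
    this total, so it serves as the compressed size in bits.
    """
    bits = 0.0
    for i, byte in enumerate(data):
        p = model(data[:i])[byte]
        bits -= math.log2(p)
    return bits


def compression_rate(data: bytes, model: Model) -> float:
    """Compressed size divided by original size (lower is better)."""
    return ideal_code_length_bits(data, model) / (8 * len(data))


def uniform_model(context: bytes) -> list[float]:
    # Understands nothing: every byte value is equally likely (8 bits/byte).
    return [1 / 256] * 256


def order0_model(context: bytes) -> list[float]:
    # Learns how often each byte occurs, with add-one (Laplace) smoothing.
    counts = Counter(context)
    total = len(context) + 256
    return [(counts[b] + 1) / total for b in range(256)]


def order1_model(context: bytes) -> list[float]:
    # Also conditions on the previous byte: counts which bytes followed it.
    if not context:
        return uniform_model(context)
    prev = context[-1]
    followers = Counter(
        context[j + 1] for j in range(len(context) - 1) if context[j] == prev
    )
    total = sum(followers.values()) + 256
    return [(followers[b] + 1) / total for b in range(256)]


if __name__ == "__main__":
    text = b"ab" * 100
    for name, model in [("uniform", uniform_model),
                        ("order-0", order0_model),
                        ("order-1", order1_model)]:
        print(f"{name:8s} rate = {compression_rate(text, model):.3f}")
```

On the repetitive input `b"ab" * 100`, the uniform model gives a rate of exactly 1.0, and the order-1 model, which learns that `a` is always followed by `b` and vice versa, gives the lowest rate of the three. Swapping in a stronger predictor changes nothing else in the pipeline, which is the sense in which better prediction translates directly into better compression.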


Data availability

ILSVRC is available at https://www.image-net.org/challenges/LSVRC/2012/index.php. CLIC is available at https://clic.compression.cc/2019/. LibriSpeech is available at www.openslr.org/12. LJSpeech is available at https://keithito.com/LJ-Speech-Dataset. Mozilla Common Voice 11 is available at https://huggingface.co/datasets/mozilla-foundation/common_voice_11_0. VoxPopuli is available at https://huggingface.co/datasets/facebook/voxpopuli. MeDAL is available at https://github.com/McGill-NLP/medal. Eurlex is available at https://huggingface.co/datasets/pile-of-law/pile-of-law. CIPR SIF is available at https://media.xiph.org/video/derf/.

Code availability

Our code is available via Code Ocean at https://doi.org/10.24433/CO.9735997.v1 (ref. 28).

Change history

09 May 2025

In the version of the article initially published, Xingwu Liu was listed with two affiliations. This has now been corrected to a single affiliation (School of Mathematical Sciences, Dalian University of Technology, Dalian, China) in the HTML and PDF versions of the article.

References

1. Pavlov, I. 7-Zip. www.7-zip.org/a/lzma-specification.7z (2024).

2. Xiph.Org Foundation. FLAC: free lossless audio codec. Xiph.org https://xiph.org/flac/features.html (2023).

3. Boutell, T. RFC 2083: PNG (Portable Network Graphics) specification version 1.0. W3C https://www.w3.org/TR/REC-png-961001 (1997).

4. Richardson, I. E. The H.264 Advanced Video Compression Standard 2nd edn (Wiley, 2010).

5. High efficiency video coding (HEVC) - ITU-T Recommendation H.265. ITU https://www.itu.int/rec/T-REC-H.265 (2013).

6. Shannon, C. E. A mathematical theory of communication. Bell Syst. Tech. J. 27, 379–423 (1948).

7. Solomonoff, R. A formal theory of inductive inference. Inform. Control 7, 1–22 (1964).

8. Grau-Moya, J. et al. Learning universal predictors. In Proc. 41st International Conference on Machine Learning (eds Salakhutdinov, R. et al.) 16178–16205 (PMLR, 2024).

9. Huang, C., Xie, Y., Jiang, Z., Lin, J. & Li, M. Approximating human-like few-shot learning with GPT-based compression. Preprint at https://arxiv.org/abs/2308.06942 (2023).

10. Deletang, G. et al. Language modeling is compression. In Proc. Twelfth International Conference on Learning Representations (eds Chaudhuri, S. et al.) (ICLR, 2024).

11. Bellard, F. NNCP v2: lossless data compression with transformer. Preprint at Fabrice Bellard https://bellard.org/nncp/nncp_v2.pdf (2021).

12. Chen, M. et al. Generative pretraining from pixels. In Proc. International Conference on Machine Learning (eds Daumé III, H. et al.) 1691–1703 (PMLR, 2020).

13. Wu, S. et al. Beyond language models: byte models are digital world simulators. Preprint at https://arxiv.org/abs/2402.19155 (2024).

14. Jiang, Z., Wang, R., Bu, D. & Li, M. A theory of human-like few-shot learning. Preprint at https://arxiv.org/abs/2301.01047 (2023).

15. Russakovsky, O. et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 115, 211–252 (2015).

16. Challenge on Learned Image Compression (CLIC). https://archive.compression.cc/2019/challenge/ (2019).

17. Xiph.org video test media. Xiph.org https://media.xiph.org/video/derf/ (accessed 2024).

18. Panayotov, V., Chen, G., Povey, D. & Khudanpur, S. LibriSpeech: an ASR corpus based on public domain audio books. In Proc. 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (IEEE, 2015).

19. Ito, K. & Johnson, L. The LJ Speech Dataset. https://keithito.com/LJ-Speech-Dataset/ (2017).

20. Ardila, R. et al. Common Voice: a massively-multilingual speech corpus. In Proc. 12th Conference on Language Resources and Evaluation (eds Calzolari, N. et al.) 4211–4215 (LREC, 2020).

21. Wang, C. et al. VoxPopuli: a large-scale multilingual speech corpus for representation learning, semi-supervised learning and interpretation. In Proc. 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers) (eds Zong, C., Xia, F., Li, W. & Navigli, R.) 993–1003 (Association for Computational Linguistics, 2021).

22. Wen, Z., Lu, X. H. & Reddy, S. MeDAL: medical abbreviation disambiguation dataset for natural language understanding pretraining. In Proc. 3rd Clinical Natural Language Processing Workshop (eds Rumshisky, A. et al.) 130–135 (Association for Computational Linguistics, 2020).

23. Henderson, P. et al. Pile of law: learning responsible data filtering from the law and a 256GB open-source legal dataset. Adv. Neural Inf. Process. Syst. 35, 29217–29234 (2022).

24. Satellite Communications and their Role in Enabling 6G. Technical Report (GSOA, 2014); https://gsoasatellite.com/wp-content/uploads/6G-Paper-GSOA.pdf

25. Li, M. & Vitányi, P. An Introduction to Kolmogorov Complexity and Its Applications (Springer, 2019).

26. Niedermayer, M., Rice, D. & Martinez, J. FFV1 Video Coding Format Version 4. Internet-Draft draft-ietf-cellar-ffv1-v4-22. Internet Engineering Task Force https://datatracker.ietf.org/doc/draft-ietf-cellar-ffv1-v4/22/ (2024).

27. Information technology - JPEG 2000 image coding system: Motion JPEG 2000 - Part 3 (ISO, 2007); https://www.iso.org/standard/41570.html

28. Li, Z. & Wang, X. Understanding is compression: v.0.1.0. Code Ocean https://doi.org/10.24433/CO.9735997.v1 (2024).

Acknowledgements

This work is partially supported by the National Key R&D Program of China grant no. 2022YFA1304603 (to M.L.); Proteomic Navigator of the Human Body Project (to M.L.); Canada’s NSERC OGP0046506 (to M.L. and C.W.); Canada Research Chair Program (to C.W.); National Natural Science Foundation of China grant no. 62072433 (to X.L.), grant no. 62088102 (to W.G. and M.L.) and grant no. 62025101 (to W.G. and M.L.); and Kechuang Yongjiang 2035 key technology breakthrough plan of Zhejiang Ningbo grant no. 2024Z119 (to C.H.). We thank N. Zhang and P. Vitányi for discussions on Solomonoff induction. We thank C. Huang, Y. Xie, Z. Jiang, R. Wang and P. Guo for their discussions and related work in refs. 9 and 14.

Author information


Contributions

M.L. conceived the presented idea. M.L., X.L., C.H., Q.Y. and W.G. developed the theory and supervised the findings of this work. Z.L., C.H., X.W., H.H. and C.W. performed the computations and carried out the experiments. Z.L., C.H., X.W., H.H., C.W., D.B., X.L. and M.L. wrote the paper. All authors discussed the results and contributed to the final paper.


Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review


Nature Machine Intelligence thanks Ziv Goldfeld, Jan Voges and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information


Supplementary information about experiments and Supplementary Tables 1–10.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, Z., Huang, C., Wang, X. et al. Lossless data compression by large models. Nat Mach Intell 7, 794–799 (2025). https://doi.org/10.1038/s42256-025-01033-7


DOI: https://doi.org/10.1038/s42256-025-01033-7