Abstract

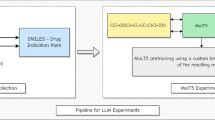

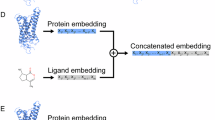

Understanding and designing biomolecules, such as proteins and small molecules, is central to advancing drug discovery, synthetic biology and enzyme engineering. Recent breakthroughs in artificial intelligence have revolutionized biomolecular research, achieving remarkable accuracy in biomolecular prediction and design. However, a critical gap remains between artificial intelligence’s computational capabilities and researchers’ intuitive goals, particularly in using natural language to bridge complex tasks with human intentions. Large language models have shown potential to interpret human intentions, yet their application to biomolecular research remains nascent due to challenges including specialized knowledge requirements, multimodal data integration, and semantic alignment between natural language and biomolecules. To address these limitations, we present InstructBioMol, a large language model designed to bridge natural language and biomolecules through a comprehensive any-to-any alignment of natural language, molecules and proteins. The model accepts multimodal biomolecules as input and lets researchers articulate design goals in natural language, returning biomolecular outputs that meet precise biological needs. Experimental results demonstrate that InstructBioMol can understand and design biomolecules following human instructions. In particular, it can generate drug molecules with a 10% improvement in binding affinity and design enzymes that achieve an enzyme–substrate pair prediction score of 70.4. These results highlight its potential to transform real-world biomolecular research.

Data availability

The dataset used in this study is available via Zenodo at https://doi.org/10.5281/zenodo.15303508 (ref. 81).
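For readers who want to locate the deposit programmatically, the following is a minimal sketch of turning the Zenodo DOI above into record URLs. It assumes Zenodo's usual convention that the numeric suffix of a `10.5281/zenodo.<id>` DOI is the record ID, and that the landing page and REST endpoint follow the `zenodo.org/records/<id>` and `zenodo.org/api/records/<id>` patterns. The helper names `zenodo_record_id` and `zenodo_urls` are hypothetical, and no file names are assumed because the article does not list them.

```python
import re

# Matches the numeric record ID at the end of a Zenodo DOI or doi.org URL.
ZENODO_DOI = re.compile(r"10\.5281/zenodo\.(\d+)$")


def zenodo_record_id(doi_or_url: str) -> str:
    """Extract the numeric record ID from a Zenodo DOI or doi.org URL."""
    match = ZENODO_DOI.search(doi_or_url.strip())
    if match is None:
        raise ValueError(f"not a Zenodo DOI: {doi_or_url!r}")
    return match.group(1)


def zenodo_urls(doi_or_url: str) -> dict:
    """Build the (assumed) landing-page and API URLs for a Zenodo record."""
    record_id = zenodo_record_id(doi_or_url)
    return {
        "landing_page": f"https://zenodo.org/records/{record_id}",
        "api_record": f"https://zenodo.org/api/records/{record_id}",
    }


if __name__ == "__main__":
    urls = zenodo_urls("https://doi.org/10.5281/zenodo.15303508")
    print(urls["landing_page"])
    print(urls["api_record"])
```

The sketch only builds URLs and performs no network access; the record's actual file listing should be checked on the landing page before downloading anything.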

Code availability

The source code of this study is available via GitHub at https://github.com/HICAI-ZJU/InstructBioMol and via Zenodo at https://doi.org/10.5281/zenodo.15335654 (ref. 82).

References

Kim, J., Park, S., Min, D. & Kim, W. Comprehensive survey of recent drug discovery using deep learning. Int. J. Mol. Sci. 22, 9983 (2021).

Volk, M. J. et al. Biosystems design by machine learning. ACS Synth. Biol. 9, 1514–1533 (2020).

Mazurenko, S., Prokop, Z. & Damborsky, J. Machine learning in enzyme engineering. ACS Catal. 10, 1210–1223 (2019).

Abramson, J. et al. Accurate structure prediction of biomolecular interactions with AlphaFold 3. Nature 630, 493–500 (2024).

Krishna, R. et al. Generalized biomolecular modeling and design with RoseTTAFold All-Atom. Science 384, eadl2528 (2024).

Zhou, C. et al. A comprehensive survey on pretrained foundation models: a history from BERT to ChatGPT. Int. J. Mach. Learn. Cybern. https://doi.org/10.1007/s13042-024-02443-6 (2024).

Touvron, H. et al. Llama 2: open foundation and fine-tuned chat models. Preprint at https://arxiv.org/abs/2307.09288 (2023).

OpenAI. GPT-4 technical report. Preprint at https://arxiv.org/abs/2303.08774 (2023).

Zhang, Q. et al. Scientific large language models: a survey on biological & chemical domains. ACM Comput. Surv. 57, 161 (2025).

Weininger, D. SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. J. Chem. Inf. Comput. Sci. 28, 31–36 (1988).

Krenn, M., Häse, F., Nigam, A., Friederich, P. & Aspuru-Guzik, A. Self-referencing embedded strings (SELFIES): a 100% robust molecular string representation. Mach. Learn. Sci. Technol. 1, 045024 (2020).

Pearson, W. R. in Computer Analysis of Sequence Data: Part I 307–331 (Humana Press, 1994).

Jumper, J. et al. Highly accurate protein structure prediction with AlphaFold. Nature 596, 583–589 (2021).

Ouyang, L. et al. Training language models to follow instructions with human feedback. In Advances in Neural Information Processing Systems 27730–27744 (Curran Associates, 2022).

Edwards, C. et al. Translation between molecules and natural language. In Proc. 2022 Conference on Empirical Methods in Natural Language Processing 375–413 (Association for Computational Linguistics, 2022).

Wang, Z. et al. InstructProtein: aligning human and protein language via knowledge instruction. In Proc. 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) 1114–1136 (Association for Computational Linguistics, 2024).

Pei, Q. et al. BioT5: enriching cross-modal integration in biology with chemical knowledge and natural language associations. In Proc. 2023 Conference on Empirical Methods in Natural Language Processing 1102–1123 (Association for Computational Linguistics, 2023).

Fang, Y. et al. Mol-Instructions: a large-scale biomolecular instruction dataset for large language models. In The Twelfth International Conference on Learning Representations (ICLR, 2024).

Pei, Q. et al. BioT5+: towards generalized biological understanding with IUPAC integration and multi-task tuning. In ACL (Findings) 1216–1240 (Association for Computational Linguistics, 2024).

Luo, Y. et al. BioMedGPT: open multimodal generative pre-trained transformer for biomedicine. Preprint at https://arxiv.org/abs/2308.09442 (2023).

Liu, S. et al. Conversational drug editing using retrieval and domain feedback. In The Twelfth International Conference on Learning Representations (ICLR, 2024).

Kroll, A., Ranjan, S., Engqvist, M. K. M. & Lercher, M. J. A general model to predict small molecule substrates of enzymes based on machine and deep learning. Nat. Commun. 14, 2787 (2023).

Vaswani, A. et al. Attention is all you need. In 31st Conference on Neural Information Processing Systems (NIPS 2017) https://papers.nips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf (2017).

Hastings, J. et al. ChEBI in 2016: improved services and an expanding collection of metabolites. Nucleic Acids Res. 44, D1214–D1219 (2016).

Li, J. et al. Empowering molecule discovery for molecule-caption translation with large language models: a ChatGPT perspective. IEEE Trans. Knowl. Data Eng. https://doi.ieeecomputersociety.org/10.1109/TKDE.2024.3393356 (2024).

Zhao, Z. et al. ChemDFM: dialogue foundation model for chemistry. Preprint at https://arxiv.org/abs/2401.14818 (2024).

Cao, H., Liu, Z., Lu, X., Yao, Y. & Li, Y. InstructMol: multi-modal integration for building a versatile and reliable molecular assistant in drug discovery. In Proc. 31st International Conference on Computational Linguistics 354–379 (Association for Computational Linguistics, 2025).

Liu, Z. et al. ProtT3: protein-to-text generation for text-based protein understanding. In Proc. 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) 5949–5966 (Association for Computational Linguistics, 2024).

Liu, S. et al. A text-guided protein design framework. Nat. Mach. Intell. 7, 580–591 (2025).

Anderson, A. C. The process of structure-based drug design. Chem. Biol. 10, 787–797 (2003).

Peng, X. et al. Pocket2Mol: efficient molecular sampling based on 3D protein pockets. In Proc. Machine Learning Research 17644–17655 (PMLR, 2022).

Luo, S., Guan, J., Ma, J. & Peng, J. A 3D generative model for structure-based drug design. In Advances in Neural Information Processing Systems 6229–6239 (Curran Associates, 2021).

Guan, J. et al. 3D equivariant diffusion for target-aware molecule generation and affinity prediction. In The Eleventh International Conference on Learning Representations https://openreview.net/pdf?id=kJqXEPXMsE0 (ICLR, 2023).

Li, Y. et al. DrugGPT: a GPT-based strategy for designing potential ligands targeting specific proteins. Preprint at bioRxiv https://doi.org/10.1101/2023.06.29.543848 (2023).

Bar-Even, A. et al. The moderately efficient enzyme: evolutionary and physicochemical trends shaping enzyme parameters. Biochemistry 50, 4402–4410 (2011).

Hu, E. J. et al. LoRA: low-rank adaptation of large language models. In International Conference on Learning Representations https://openreview.net/pdf?id=nZeVKeeFYf9 (ICLR, 2022).

Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O. & Dahl, G. E. Neural message passing for quantum chemistry. In Proc. Machine Learning Research 1263–1272 (PMLR, 2017).

Zhou, G. et al. Uni-Mol: a universal 3D molecular representation learning framework. In The Eleventh International Conference on Learning Representations https://openreview.net/pdf?id=6K2RM6wVqKu (ICLR, 2023).

Lin, Z. et al. Evolutionary-scale prediction of atomic-level protein structure with a language model. Science 379, 1123–1130 (2023).

Xu, K., Hu, W., Leskovec, J. & Jegelka, S. How powerful are graph neural networks? In International Conference on Learning Representations https://openreview.net/pdf?id=ryGs6iA5Km (ICLR, 2019).

Hu, W. et al. Strategies for pre-training graph neural networks. In International Conference on Learning Representations https://openreview.net/pdf?id=HJlWWJSFDH (2020).

Wang, Y. et al. Geometric transformer with interatomic positional encoding. In Advances in Neural Information Processing Systems 55981–55994 (Curran Associates, 2023).

Su, J. et al. SaProt: protein language modeling with structure-aware vocabulary. In The Twelfth International Conference on Learning Representations https://openreview.net/pdf?id=6MRm3G4NiU (ICLR, 2024).

Zhang, Z., Liu, Q., Wang, H., Lu, C. & Lee, C. K. Motif-based graph self-supervised learning for molecular property prediction. In Advances in Neural Information Processing Systems 15870–15882 (Curran Associates, 2021).

Li, H. et al. A knowledge-guided pre-training framework for improving molecular representation learning. Nat. Commun. 14, 7568 (2023).

Grant, C. E., Bailey, T. L. & Noble, W. S. FIMO: scanning for occurrences of a given motif. Bioinformatics 27, 1017–1018 (2011).

Rogers, D. & Hahn, M. Extended-connectivity fingerprints. J. Chem. Inf. Model. 50, 742–754 (2010).

Boeckmann, B. et al. The SWISS-PROT protein knowledgebase and its supplement TrEMBL in 2003. Nucleic Acids Res. 31, 365–370 (2003).

Radford, A. et al. Improving language understanding by generative pre-training. OpenAI https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (2018).

Kim, S. et al. PubChem substance and compound databases. Nucleic Acids Res. 44, D1202–D1213 (2016).

Suzek, B. E., Huang, H., McGarvey, P., Mazumder, R. & Wu, C. H. UniRef: comprehensive and non-redundant UniProt reference clusters. Bioinformatics 23, 1282–1288 (2007).

White, J. PubMed 2.0. Med. Ref. Serv. Q. 39, 382–387 (2020).

Sever, R. et al. bioRxiv: the preprint server for biology. Preprint at bioRxiv https://doi.org/10.1101/833400 (2019).

Mudrak, B. et al. Five years of ChemRxiv: where we are and where we go from here. Angew. Chem. Int. Ed. 62, e202215847 (2023).

McNaught, A. D. et al. Compendium of Chemical Terminology Vol. 1669 (Blackwell Science Oxford, 1997).

The UniProt Consortium. UniProt: the universal protein knowledgebase. Nucleic Acids Res. 46, 2699–2699 (2018).

Gilson, M. K. et al. BindingDB in 2015: a public database for medicinal chemistry, computational chemistry and systems pharmacology. Nucleic Acids Res. 44, D1045–D1053 (2016).

Uludoğan, G., Ozkirimli, E., Ulgen, K. O., Karalí, N. & Özgür, A. Exploiting pretrained biochemical language models for targeted drug design. Bioinformatics 38, ii155–ii161 (2022).

Bansal, P. et al. Rhea, the reaction knowledgebase in 2022. Nucleic Acids Res. 50, D693–D700 (2022).

Landrum, G. RDKit: a software suite for cheminformatics, computational chemistry, and predictive modeling. Greg Landrum 8, 5281 (2013).

Riniker, S. & Landrum, G. A. Better informed distance geometry: using what we know to improve conformation generation. J. Chem. Inf. Model. 55, 2562–2574 (2015).

Halgren, T. A. Merck molecular force field. I. Basis, form, scope, parameterization, and performance of MMFF94. J. Comput. Chem. 17, 490–519 (1996).

Varadi, M. et al. AlphaFold protein structure database: massively expanding the structural coverage of protein-sequence space with high-accuracy models. Nucleic Acids Res. 50, D439–D444 (2022).

Paszke, A. et al. PyTorch: an imperative style, high-performance deep learning library. In 33rd Conference on Neural Information Processing Systems (NeurIPS 2019) https://papers.nips.cc/paper_files/paper/2019/file/bdbca288fee7f92f2bfa9f7012727740-Paper.pdf (NeurIPS, 2019).

Rajbhandari, S., Rasley, J., Ruwase, O. & He, Y. ZeRO: memory optimizations toward training trillion parameter models. In SC20: International Conference for High Performance Computing, Networking, Storage and Analysis 1–16 (IEEE, 2020).

Papineni, K., Roukos, S., Ward, T. & Zhu, W.-J. Bleu: a method for automatic evaluation of machine translation. In Proc. 40th Annual Meeting of the Association for Computational Linguistics 311–318 (ACL, 2002).

Lin, C. Y. in Text Summarization Branches Out 74–81 (2004).

Banerjee, S. & Lavie, A. METEOR: an automatic metric for MT evaluation with improved correlation with human judgments. In Proc. ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization 65–72 (Association for Computational Linguistics, 2005).

Miller, F. P., Vandome, A. F. & McBrewster, J. Levenshtein Distance: Information Theory, Computer Science, String (Computer Science), String Metric, Damerau–Levenshtein Distance, Spell Checker, Hamming Distance (Alpha Press, 2009).

Durant, J. L., Leland, B. A., Henry, D. R. & Nourse, J. G. Reoptimization of MDL keys for use in drug discovery. J. Chem. Inf. Comput. Sci. 42, 1273–1280 (2002).

Schneider, N., Sayle, R. A. & Landrum, G. A. Get your atoms in order: an open-source implementation of a novel and robust molecular canonicalization algorithm. J. Chem. Inf. Model. 55, 2111–2120 (2015).

Bajusz, D., Rácz, A. & Héberger, K. Why is Tanimoto index an appropriate choice for fingerprint-based similarity calculations? J. Cheminform. 7, 20 (2015).

Preuer, K., Renz, P., Unterthiner, T., Hochreiter, S. & Klambauer, G. Fréchet ChemNet distance: a metric for generative models for molecules in drug discovery. J. Chem. Inf. Model. 58, 1736–1741 (2018).

Smith, T. F. & Waterman, M. S. Identification of common molecular subsequences. J. Mol. Biol. 147, 195–197 (1981).

Henikoff, S. & Henikoff, J. G. Amino acid substitution matrices from protein blocks. Proc. Natl Acad. Sci. USA 89, 10915–10919 (1992).

Qu, Y. et al. MolCRAFT: structure-based drug design in continuous parameter space. In Proc. Forty-first International Conference on Machine Learning https://openreview.net/pdf?id=KaAQu5rNU1 (2024).

Corso, G. et al. Deep confident steps to new pockets: strategies for docking generalization. In International Conference on Learning Representations https://openreview.net/pdf?id=UfBIxpTK10 (ICLR, 2024).

Alhossary, A., Handoko, S. D., Mu, Y. & Kwoh, C.-K. Fast, accurate, and reliable molecular docking with QuickVina 2. Bioinformatics 31, 2214–2216 (2015).

Bickerton, G. R., Paolini, G. V., Besnard, J., Muresan, S. & Hopkins, A. L. Quantifying the chemical beauty of drugs. Nat. Chem. 4, 90–98 (2012).

Ertl, P. & Schuffenhauer, A. Estimation of synthetic accessibility score of drug-like molecules based on molecular complexity and fragment contributions. J. Cheminform. 1, 8 (2009).

Zhuang, X. Dataset for the paper ‘advancing biomolecule understanding and design following human instructions’. Zenodo https://doi.org/10.5281/zenodo.15303508 (2025).

Zhuang, X. HICAI-ZJU/InstructBioMol: version 1.0.0. Zenodo https://doi.org/10.5281/zenodo.15335654 (2025).

Probst, D. & Reymond, J.-L. SmilesDrawer: parsing and drawing SMILES-encoded molecular structures using client-side JavaScript. J. Chem. Inf. Model. 58, 1–7 (2018).

Schrödinger, LLC. The PyMOL molecular graphics system, version 3.0. (2024).

Acknowledgements

This work is funded by NSFCU23B2055 (H.C.), NSFC2302433 (Q.Z.), NSFCU23A20496 (Q.Z.), the Fundamental Research Funds for the Central Universities (226-2023-00138, H.C.), Zhejiang Provincial ‘Jianbing’ ‘Lingyan’ Research and Development Program of China (2025C01097, K.D. and Q.Z.), Zhejiang Provincial Natural Science Foundation of China (LQ24F020007, Q.Z.) and Hangzhou West Lake Pearl Project Leading Innovative Youth Team Project (TD2023017, K.D.).

Author information

Contributions

X.Z., K.D., Q.Z. and H.C. conceived the study. X.Z. developed the method, implemented the code and conducted the experiments. T.L. participated in benchmarking some baseline models. X.Z., Y.J., X.L. and Z.X. contributed to the dataset collection. K.D., Z.W., M.Q., K.F., J.W., Q.Z. and H.C. provided critical suggestions on the methodology and experiments. All authors wrote the paper, reviewed it and approved the final paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Martin Min and Hongyu Guo for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhuang, X., Ding, K., Lyu, T. et al. Advancing biomolecular understanding and design following human instructions. Nat Mach Intell 7, 1154–1167 (2025). https://doi.org/10.1038/s42256-025-01064-0

DOI: https://doi.org/10.1038/s42256-025-01064-0
