Abstract

Unlike human-engineered systems, such as aeroplanes, for which the role and dependencies of each component are well understood, the inner workings of artificial intelligence models remain largely opaque, which hinders verifiability and undermines trust. Current approaches to neural network interpretability, including input attribution methods, probe-based analysis and activation visualization techniques, typically provide limited insights about the role of individual components or require extensive manual interpretation that cannot scale with model complexity. This paper introduces SemanticLens, a universal explanation method for neural networks that maps hidden knowledge encoded by components (for example, individual neurons) into the semantically structured, multimodal space of a foundation model such as CLIP. In this space, unique operations become possible, including (1) textual searches to identify neurons encoding specific concepts, (2) systematic analysis and comparison of model representations, (3) automated labelling of neurons and explanation of their functional roles, and (4) audits to validate decision-making against requirements. Fully scalable and operating without human input, SemanticLens is shown to be effective for debugging and validation, summarizing model knowledge, aligning reasoning with expectations (for example, adherence to the ABCDE rule in melanoma classification) and detecting components tied to spurious correlations and their associated training data. By enabling component-level understanding and validation, the proposed approach helps mitigate the opacity that limits confidence in artificial intelligence systems compared to traditional engineered systems, enabling more reliable deployment in critical applications.

Main

Technical systems designed by humans are constructed step by step, with each component serving a specific, well-understood function. For instance, the wings and wheels of an aeroplane have clear roles, and an edge-detection algorithm applies defined signal-processing steps like high-pass filtering. Such a construction by synthesis not only helps one to understand the overall behaviour of the system but also simplifies safety validations. By contrast, neural networks are developed holistically through optimization, often using datasets of unprecedented scale. Although this process yields models with impressive capabilities that increasingly outperform engineered systems, it has a principal drawback: it does not provide a semantic description of the function of each neuron. Especially in high-stakes applications such as medicine or autonomous driving, the sole reliance on the output of the black-box artificial intelligence (AI) model is often unacceptable, as faulty or Clever Hans-type behaviours1,2,3 may go unnoticed but have serious consequences. Recent regulations, such as the EU AI Act and the earlier US President’s Executive Order on AI, underline the need for transparency and conformity assessment. What is urgently needed, therefore, is the ability to understand and validate the inner workings and individual components of AI models4,5, as we do for human-engineered systems.

Despite progress in fields such as explainable AI6,7 and mechanistic interpretability8, the automated explanation and validation of model components at scale remains infeasible. Current approaches are limited in several ways. First, they often strongly depend on human intervention9, for example, the manual investigation of individual components10,11 or predictions12, which prevents them from being scaled to large modern architectures and datasets. Second, current explanatory methods focus mostly on isolated aspects of the model behaviour and lack a holistic perspective, that is, they do not enlighten the relations between the input data, the uncovered behaviour of specific network components and the final network prediction. It is, for example, not enough to measure only that specific components detect certain input patterns (‘concepts’)13,14; it is also essential to understand how these components are used15,16,17,18 and where these patterns originate within the training dataset19. Further, existing tools are suited for assessing whether neural networks detect predefined, human-expected input patterns20, but are unable to identify components that encode unexpected or non-interpretable concepts. This limitation restricts our ability to uncover new decision strategies that the model may have developed autonomously, and it may lead to unfaithful explanations, as the interactions between expected and unexpected encodings can influence model behaviour in non-trivial ways. Finally, methods that can ensure compliance with legal and real-world requirements are scarce21,22. Holistic approaches are needed to quantify which parts of a model align with expectations and which do not, thereby revealing spurious and potentially harmful components along with related training data.

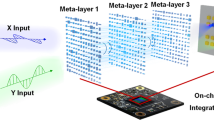

To overcome these limitations, we propose SemanticLens, which introduces the core principle of embedding individual components of an AI model into the semantically structured, multimodal space of a foundation model such as CLIP23. The embedding of components is achieved through two key mappings, as illustrated in Fig. 1a:

1. Components → concept examples: For each component of model \({\mathcal{M}}\), we collect a set of data samples \({\mathcal{E}}\) (for example, highly activating image patches) representing the ‘concept’ of a component, that is, input patterns that it activates upon. Components can be neurons, feature space directions or weights, with neurons being the focus in our experiments.

2. Concept examples → semantic space: We embed each set of examples \({\mathcal{E}}\) into the semantic space \({\mathcal{S}}\) of a multimodal foundation model \({\mathcal{F}}\) such as CLIP23. As a result, each single component of model \({\mathcal{M}}\) is represented by a vector ϑ \(\in {\mathcal{S}}\) in the semantic space of model \({\mathcal{F}}\).

In addition, we compute component-specific attributions15 to identify components and circuits relevant for individual model predictions, forming a third mapping:

3. Prediction → components: Relevance scores \({\mathcal{R}}\) quantify the contributions of model components to individual predictions \({\bf{y}}={\mathcal{M}}({\bf{x}})\) on data points x.

a, To turn the incomprehensible latent feature space (hidden knowledge) into an understandable representation, we leverage a foundation model \({\mathcal{F}}\) that serves as a semantic expert. Concretely, for each component of the analysed model \({\mathcal{M}}\), (1) concept examples \({\mathcal{E}}\) are extracted from the dataset, representing samples that induce high stimuli (they activate the component) and (2) embedded in the latent space of the foundation model resulting in a semantic representation ϑ. Further, (3) relevance scores \({\mathcal{R}}\) for all components are collected, which illustrate their role in decision-making. b, This understandable model representation (set of ϑ’s, potentially linked to \({\mathcal{E}}\)’s and \({\mathcal{R}}\)’s) enables one to systematically search, describe, structure and compare the internal knowledge of AI models. Further, it allows one to audit the alignment to human expectation and opens ways in which we can evaluate and optimize human-interpretability. Credit: image in a, Unsplash.

Within SemanticLens, the foundation model \({\mathcal{F}}\) is assumed to act as a ‘semantic expert’ for the data domain under consideration. It effectively represents the model \({\mathcal{M}}\) as a comparable and searchable vector database, that is, as a set of vectors ϑ (one per component), potentially linked to sets of \({\mathcal{E}}\) and \({\mathcal{R}}\).
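The first two mappings can be illustrated with a short sketch. This is a minimal illustration under stated assumptions, not the authors' implementation: the activations of one layer are assumed to be available as an array of shape (samples, neurons), `sample_embeddings` is a hypothetical stand-in for precomputed foundation-model embeddings of the same samples, and ϑ is assumed to be the normalized mean of the embeddings of the top-k activating samples (the actual aggregation is specified in ‘Methods’).

```python
import numpy as np

def top_k_examples(activations, k):
    """Return, per neuron, the indices of its k most activating samples (the set E)."""
    # activations has shape (n_samples, n_neurons); sort each column in descending order.
    order = np.argsort(-activations, axis=0)
    return order[:k].T  # shape (n_neurons, k)

def l2_normalize(x):
    """Scale vectors along the last axis to unit length."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def semantic_embeddings(activations, sample_embeddings, k=3):
    """Map each neuron to one vector theta in the semantic space.

    Assumption: theta is the normalized mean of the normalized
    foundation-model embeddings of the neuron's concept examples.
    """
    idx = top_k_examples(activations, k)          # (n_neurons, k)
    emb = l2_normalize(sample_embeddings)[idx]    # (n_neurons, k, d)
    return l2_normalize(emb.mean(axis=1))         # (n_neurons, d)

# Toy data: 50 samples, 4 neurons, 8-dimensional stand-in embeddings.
rng = np.random.default_rng(0)
acts = rng.random((50, 4))
sample_emb = rng.normal(size=(50, 8))
theta = semantic_embeddings(acts, sample_emb, k=5)
```

The result is one unit-length vector per neuron, which is the form needed to treat the model as a searchable vector database.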

This embedding-based framework addresses previous limitations by integrating several interpretability perspectives, thereby enabling large-scale analyses of encoded concepts: where they reside within the architecture, how they influence outputs and how they relate to input data. Furthermore, SemanticLens supports automated probing that evaluates the alignment between model behaviour and user-defined expectations to uncover unexpected or spurious concepts. Finally, advanced capabilities are enabled, such as textual searches, cross-model concept comparison and targeted alignment audits.

The core functionalities of SemanticLens for analysing a model \({\mathcal{M}}\) include:

Searching for concepts encoded by the model across modalities (for example, text or images) and efficiently identifying relevant components and associated data samples (‘Search capability’ and Supplementary Note C).

Describing the concepts learned by the model at scale and highlighting which concepts are present, missing or misused during inference (‘Describing what knowledge exists and how it is used’ and Supplementary Note D).

Comparing learned concepts across models, various architectures or training procedures (‘Identifying common and unique knowledge’ and Supplementary Note E).

Auditing the alignment between learned concepts and human-defined expectations to detect spurious correlations (‘Auditing concept alignment with expected reasoning’, ‘Towards robust and safe medical models’ and Supplementary Note F).

Evaluating the human-interpretability of network components in terms of ‘clarity’, ‘polysemanticity’ and ‘redundancy’ (‘Evaluating human-interpretability of model components’ and Supplementary Note G).

A detailed overview of related work and our contributions is provided in ‘Understanding the inner knowledge of AI models’. Example questions that SemanticLens can address are listed in Table 1, with the corresponding methodological steps illustrated as workflows in Supplementary Note H.4.

Results

We begin in ‘Understanding the inner knowledge of AI models’ by demonstrating how we can understand the internal knowledge of AI models by searching and describing the semantic space. ‘Auditing concept alignment with expected reasoning’ describes how these functionalities provide the basis for effectively auditing the alignment of the reasoning of the model with respect to human expectation. We demonstrate how to spot flaws in medical models and improve robustness and safety in ‘Towards robust and safe medical models’. Last, in ‘Evaluating human-interpretability of model components’, computable measures for the human-interpretability of model components are introduced, enabling one to rate and improve interpretability at scale.

The different sets of experiments reported in this paper were conducted on a variety of models, including convolutional neural networks with the ResNet24 and VGG25 architectures as well as different vision transformers (ViTs)26. Additionally, we used two large vision datasets, namely ImageNet27 and ISIC 201928, along with several foundation models, including Mobile-CLIP29, DINOv2 (ref. 30) and WhyLesionCLIP31. Further details about the experimental setting can be found in Supplementary Note B. More analyses are reported in Supplementary Notes C to G.

Understanding the inner knowledge of AI models

In the following, SemanticLens is used to systematically analyse the knowledge (learned concepts) encoded by neurons of ResNet50v2 trained on the ImageNet classification task27. The individual neurons are embedded as vectors ϑ into the multimodal and semantically organized space of the Mobile-CLIP foundation model29, as illustrated in Fig. 1 and described in ‘Methods’.

Search capability

The first capability of SemanticLens that we demonstrate is its search capability, which allows one to quickly browse through all neurons of the ResNet50v2 model and identify encoded concepts that a user is interested in, such as potential biases (for example, gender or racial), data artefacts (for example, watermarks) or specific knowledge. The search is based on a (cosine) similarity comparison between a probing vector ϑprobe, representing the concept we are looking for (for example, the concept ‘person’), and the set of embedded neurons ϑ’s of the ResNet model. The shared vision/text embedding space of Mobile-CLIP enables one to query concepts using either textual descriptions (the word ‘person’) or visual examples (an image of a person). In the following experiments, we focus on text-based probing. Details of the construction of probing vectors and the retrieval process can be found in ‘Methods’.
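The retrieval step described above amounts to a nearest-neighbour search under cosine similarity. The following is a minimal sketch, assuming the neuron embeddings ϑ and the probing vector are already available as arrays; the toy vectors and the helper names `cosine_similarity` and `search` are illustrative and not taken from the paper.

```python
import numpy as np

def cosine_similarity(probe, thetas):
    """Cosine similarity between one probing vector and every neuron embedding."""
    probe = probe / np.linalg.norm(probe)
    thetas = thetas / np.linalg.norm(thetas, axis=1, keepdims=True)
    return thetas @ probe

def search(probe, thetas, top_k=2):
    """Return (neuron index, similarity) pairs, most similar first."""
    sims = cosine_similarity(probe, thetas)
    best = np.argsort(-sims)[:top_k]
    return [(int(i), float(sims[i])) for i in best]

# Toy semantic space with four embedded neurons.
thetas = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0],
                   [0.7, 0.7, 0.0],
                   [0.0, 0.0, 1.0]])
# Hypothetical stand-in for the text embedding of a query such as 'person'.
probe = np.array([1.0, 0.2, 0.0])
hits = search(probe, thetas, top_k=2)
```

Because text and image queries share one embedding space, the same function would serve both modalities; only the way the probing vector is produced differs.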

As illustrated in Fig. 2a, the neurons in the ResNet50v2 model that encode for person-related concepts can be identified. The two embedded neurons that most closely resemble the text-based probing vector represent different, non-obvious and potentially discriminative aspects of a person, such as a hijab (neuron 1216) and dark skin (neuron 1454). This is, in principle, a valid strategy for representing different object subgroups sharing certain visual features by specialized neurons. However, if these sensitive attribute-encoding neurons are used for other purposes, for example, if the dark skin-related neuron is used in the classification of ‘steel drum’ (Fig. 3b), this may hint at potential fairness issues.

a, Using search-engine-like queries, one can probe for knowledge referring to, for example, (racial) biases, data artefacts or specific knowledge of interest (here done with the text modality). b, A low-dimensional UMAP projection of the semantic embeddings provides a structured overview of the knowledge in the model. Each point corresponds to the encoded concept of a model component. By searching for human-defined concepts, we can add descriptions to all parts of the semantic space. c, Having grouped the knowledge into concepts, attribution graphs reveal where concepts are encoded in the model and how they are used (and interconnected) for inference. When predicting ‘ox’, we learn that ox-cart-related background concepts are used. Importantly, we can also identify relevant knowledge that could not be labelled and should be manually inspected by the user. d, The set of unexpected concepts includes the ‘Indian person’, ‘palm tree’ and ‘watermark’ concepts, which correlate in the dataset with ‘ox’. We can find other affected output classes, for example, ‘butcher shop’, ‘scale’ and ‘ricksha’ for the ‘Indian person’ concept. Watermarks depicted in images of panels a and d were manually overlaid to simulate artefacts. Credit: images from Pexels and Unsplash.

a, (1) In the first step, a set of valid and spurious concepts is defined with text descriptions, for example, ‘curved horns’ or ‘palm tree’ for ‘ox’ detection. (2) Afterwards, we evaluate the alignment of each model component (defined in ‘Auditing concept alignment’) with spurious (y axis) and valid (x axis) concepts, to determine if the concepts it encodes are primarily valid, spurious, a combination of both or neither. The size of each dot in the chart represents the importance of a component for ‘ox’ detections. We learn that ResNet50v2 relies on the ‘Indian person’, ‘palm tree’ and ‘cart’ concepts. (3) Last, we can test our model and try to distinguish the ‘ox’ output logits on ‘ox’ images (from the test dataset) and diffusion-based images with spurious features only. When several spurious features are present, as for ‘Indian person pulling a cart under palm trees’, the model outputs become more difficult to separate, indicated by a lower AUC score. b, When auditing the alignment of ResNet to valid concepts for 26 ImageNet classes, we find that in all cases, spurious or background concepts are used. For each class, we show a violin plot depicting the distribution of alignment scores for the top n = 20 most relevant components. Special markers indicate the median, minimum and maximum alignment. The radius of each dot reflects the highest relevance of a component on the test set. Credit: images in a, Unsplash; b, Pexels.

We also query the model for the concept ‘watermark’ through text-based probing. The retrieved neurons encode watermarks and other text superimposed on an image. Such data artefacts may become part of the prediction strategy of the model, known as shortcut learning12,32 or the Clever Hans phenomenon15, and massively undermine its trustworthiness (the model predicts correctly but for the wrong reason33). Although previous works have unmasked such watermark-encoding neurons more or less by chance15,34, SemanticLens allows one to intentionally query the model for the presence of such neurons.

In addition to searching for bias- or artefact-related neurons, we can also query the model for specific knowledge, for example, the concept ‘bioluminescence’. The results show that this concept has been learned by the ResNet50v2 model. Such specific knowledge queries can help ensure that the model has learned all the relevant concepts needed to solve a task, as demonstrated in the ABCDE rule for melanoma detection in ‘Auditing concept alignment with expected reasoning’. Notably, SemanticLens not only allows one to query the model for specific concepts but also to identify the output classes for which concepts are used and the respective (training) data, as shown in Fig. 2d. More examples, comparisons between models and details are provided in Supplementary Note C.

Describing what knowledge exists and how it is used

Another feature of SemanticLens is its ability to describe and systematically analyse what concepts the model has learned and how they are used. Figure 2b provides an overview of the internally encoded concepts in the ResNet50v2 model (by penultimate-layer neurons) as a Uniform Manifold Approximation and Projection (UMAP) projection of the semantic embeddings ϑ. Here, for example, a text-based search for ‘animal’ results in aligned embeddings on the left (indicated in red), whereas transport-related embeddings are in the centre (blue). Even more insights can be gained when systematically searching and annotating semantic embeddings, as described in the following.

Labelling and categorizing knowledge

To structure the learned knowledge systematically, we assign a text-form concept label (from a user-defined set) to a neuron embedding if its alignment exceeds the alignment with a baseline, which is an empty text label. The labelled embeddings can then be grouped according to their annotation. For example, all embeddings matching ‘dog’ are grouped together, which reduces the complexity, especially if there are many neurons with similar semantic embeddings. For example, the results for ResNet indicate that the model has over a hundred neurons related to dog, as illustrated in Fig. 2b, which shows the overall top-aligned label from the expected set for clusters of semantic embeddings ϑ. Further details (including labels) and examples are provided in Supplementary Notes D.1 and D.2, respectively.
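The labelling rule can be sketched as follows. This is a hedged illustration: alignment is assumed to be plain cosine similarity, each neuron is assumed to receive its single best-aligned label, and the toy vectors stand in for foundation-model text embeddings (including the embedding of the empty text used as the baseline).

```python
import numpy as np
from collections import defaultdict

def unit(x):
    """Scale vectors along the last axis to unit length."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def assign_labels(thetas, label_embs, baseline_emb, labels):
    """Give each neuron its best-aligned label, or None when that alignment
    does not exceed the alignment with the empty-text baseline."""
    sims = unit(thetas) @ unit(label_embs).T    # (n_neurons, n_labels)
    base = unit(thetas) @ unit(baseline_emb)    # (n_neurons,)
    best = sims.argmax(axis=1)
    return [labels[j] if sims[n, j] > base[n] else None
            for n, j in enumerate(best)]

def group_by_label(assigned):
    """Group neuron indices by their assigned label (None = unlabelled)."""
    groups = defaultdict(list)
    for neuron, label in enumerate(assigned):
        groups[label].append(neuron)
    return dict(groups)

# Toy example: three neurons, two candidate labels, one baseline vector.
thetas = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
label_embs = np.array([[0.9, 0.1, 0.0], [0.1, 0.9, 0.0]])
baseline_emb = np.array([0.3, 0.3, 0.3])   # stand-in for the empty-text embedding
assigned = assign_labels(thetas, label_embs, baseline_emb, ["dog", "car"])
groups = group_by_label(assigned)
```

Neurons that end up under `None` are the candidates for manual inspection, since none of the user-defined labels describes them better than the empty prompt.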

It is possible to ‘dissect’14 the knowledge in a model at different levels of complexity, ranging from broad categories such as ‘objects’ and ‘animals’ to more fine-grained concepts such as ‘bicycle’ or ‘elephant’. For instance, in Supplementary Note D, we categorize the model components relevant to the ‘ox’ class into ‘breeds’ like ‘water buffalo’, ‘work’-related concepts such as ‘ploughing’, and ‘physical attributes’ such as ‘horns’. Importantly, labelling not only facilitates the assessment of what the model has learned but also identifies gaps in its knowledge, that is, cases where no neuron aligns with a user-defined concept. In the studied ResNet model, for instance, no neuron encodes the ox breeds ‘Angus’ or ‘Hereford’, indicating areas where further training data could enhance model performance. Notably, the faithfulness of labels is important35, which is evaluated in Supplementary Note D.4.

Understanding how knowledge is used

Understanding how the model uses learned knowledge is as crucial as knowing what knowledge exists. For instance, although ‘wheels’ can be a valid concept for detecting sports cars, it should not be relevant for detecting an ox; for ResNet, however, such relevance is measurable. Figure 2c shows the attribution graph for the class ‘ox’, constructed by computing conditioned attribution scores15. The graph reveals associations between neuron groups with the same concept label. For example, next to the ‘wheels’ concept, the graph contains another highly relevant ‘long fur’ concept encoded by neuron 179 in layer 3, which in turn relies on a ‘grass’ concept in the preceding layer, indicating that neuron 179 encodes long-furred animals on green grass. Attribution graphs, thus, not only describe which concepts are used and how but also enhance our understanding of subgraphs (‘circuits’) within the model. A complete graph is detailed in Supplementary Note D.5.

The link among knowledge, data and predictions

Notably, some components do not align with any of the predefined concept labels, yielding embedding similarities that were equal to or lower than those obtained using an empty text prompt. As shown in Fig. 2d, manual inspection of these unexpected concepts reveals associations with ‘Indian person’, ‘palm tree’ and ‘watermark’, traced to neurons 179, 1560 and 800 in layer 3, respectively. All three concepts correspond to spurious correlations in the dataset, for example, farmers using an ox to plough a field, palm trees in the background or overlaid watermarks, where the training data responsible can be generally identified by retrieving highly activating samples \({\mathcal{E}}\). The same neurons are also relevant to other ImageNet classes: ‘butcher shop’, ‘scale’, and ‘rickshaw’ for ‘Indian person’; ‘thatch’, ‘bell cote’ and ‘swim trunk’ for ‘palm tree’; and ‘Lakeland terrier’, ‘bulletproof vest’ and ‘safe’ for ‘watermark’. By inherently connecting data, model components and predictions, SemanticLens is an effective and actionable tool for model debugging, as further described in ‘Towards robust and safe medical models’.

Identifying common and unique knowledge

So far, we have investigated a single model in semantic space. However, this space allows the embedding and comparing of several models with different architectures, layers or model parts. Thus, the influence on learned concepts when changing the network architecture or training hyperparameters, such as the training duration, can be studied.

In Supplementary Note E, SemanticLens is used to compare two ResNet50 models trained on ImageNet. One (ResNet50v2) was trained more extensively, which results in higher test accuracy. As illustrated in Supplementary Fig. E.1, both models share common concepts, for example, bird-related ones. However, whereas the better-trained ResNet50v2 learns more class-specific concepts, like the unique fur texture of the Komondor dog breed, ResNet50 tends to learn more abstract, shared concepts. For instance, ResNet50 detects a Komondor using a mop-like concept also used for class ‘mop’, whereas ResNet50v2 learns a concept specific to ‘Komondor’. This is in line with works that study the generalization of neural networks after long training regimes, which observed that latent model components become more structured and class-specific36. We further provide quantitative comparisons through network dissection in Supplementary Note D.3. Alternatively, SemanticLens allows one to compare models quantitatively without access to concept labels by evaluating the similarity between the knowledge in the models. In Supplementary Note E, we discuss the alignment of various pretrained neural networks across layers and architectures.

Auditing concept alignment with expected reasoning

The analyses introduced in ‘Understanding the inner knowledge of AI models’ enable the quantification of the alignment of a model with human expectations by measuring its reliance on valid, spurious or unexpected concepts. The steps of an alignment audit, outlined in Fig. 3a, include (1) defining concepts, (2) evaluating concept alignment and (3) testing model behaviour.

Defining a set of expected concepts

First, a set of valid and spurious concepts is defined and compared against the concepts actually used by the model. For illustration, we revisit the ox example. Valid concepts include ‘curved horns’, ‘wide muzzle’ and ‘large muscular body’, as shown in Fig. 3a (left). On the other hand, we are also aware of spurious correlations, such as ‘palm tree’, ‘Indian person’ and ‘watermark’. Notably, all these concepts can be defined within the modality of the data domain of the model (with example images) or, as demonstrated here, simply with text prompts when using a multimodal foundation model to compute embeddings.

Evaluating alignment to valid and spurious concepts

The alignment of the knowledge in a model with user-defined spurious or valid concepts is visualized in the scatter plot in Fig. 3a (middle) when detecting ‘ox’. Concretely, we calculate the maximum alignment between an embedding ϑ and all probing embeddings ϑprobe within a set (valid or spurious), with mathematical formulations detailed in ‘Auditing concept alignment’. Each dot in the plot represents a neuron in the penultimate layer, with its size indicating its highest importance (shown in parentheses) during inference on the test set.

Several spurious concepts, such as ‘palm tree’, ‘Indian person’ and ‘cart’, are identified besides valid concepts such as ‘short’, ‘rough fur’ and ‘curved horns’. Notably, neurons that do not align to any user-defined concept can be manually inspected, as illustrated in Fig. 2d, and incorporated into the set of spurious or valid concepts. As discussed for a VGG model in Supplementary Note F, lower overall alignment scores can also result for neurons that encode for highly abstract concepts or that exhibit ‘polysemantic’ behaviour, encoding several semantics simultaneously.
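The per-component audit scores behind such a scatter plot can be sketched as follows. This is a minimal illustration that assumes alignment is plain cosine similarity (the exact formulation is given in ‘Auditing concept alignment’); the function names, the toy vectors and the relevance values are hypothetical.

```python
import numpy as np

def unit(x):
    """Scale vectors along the last axis to unit length."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def set_alignment(thetas, probes):
    """Maximum cosine similarity of each neuron embedding to any probe in a set."""
    return (unit(thetas) @ unit(probes).T).max(axis=1)

def audit(thetas, valid_probes, spurious_probes, relevance, top_n=20):
    """Report valid and spurious alignment for the top_n most relevant neurons."""
    order = np.argsort(-relevance)[:top_n]
    a_valid = set_alignment(thetas[order], valid_probes)
    a_spur = set_alignment(thetas[order], spurious_probes)
    return [{"neuron": int(i), "valid": float(v), "spurious": float(s),
             "relevance": float(relevance[i])}
            for i, v, s in zip(order, a_valid, a_spur)]

# Toy example in a 2-D semantic space.
thetas = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
valid_probes = np.array([[1.0, 0.0]])      # stand-in for e.g. 'curved horns'
spurious_probes = np.array([[0.0, 1.0]])   # stand-in for e.g. 'palm tree'
relevance = np.array([0.5, 0.3, 0.2])      # hypothetical highest relevance per neuron
report = audit(thetas, valid_probes, spurious_probes, relevance, top_n=2)
```

Each entry corresponds to one dot in the scatter plot: the two alignment values give its position and the relevance gives its size.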

Testing models for spurious behaviour

Although SemanticLens enables the quantification of the reliance of a model on valid or spurious features (for example, by sharing spuriously aligned components), it is equally important to assess the actual impact of identified spurious features on inference. Here we use a model test evaluating the separability of two sets of outputs37: one generated from images containing valid features (associated with the ‘ox’ class) and the other from images with spurious features, as illustrated in Fig. 3a (right). When testing the model on images (generated with Stable Diffusion) for a single concept (‘Indian person’, ‘palm tree’ or ‘cart’), the model output logits for ‘ox’ are clearly distinguishable from those attained from ‘ox’ images, achieving area under the curve (AUC) scores above 0.98. However, when several spurious features are presented simultaneously and we test the model on images combining all three concepts, the ‘ox’ output logits are further amplified. Specifically, the ‘ox’ class ranks among the top five predictions in over half of the spurious samples, resulting in an AUC of 0.91, as further detailed in Supplementary Note F.
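The separability test can be expressed as a rank-based AUC over two sets of logits. The sketch below uses the standard pairwise (Mann-Whitney) formulation; the logit values are made up for illustration and are not results from the paper.

```python
import numpy as np

def auc(pos_scores, neg_scores):
    """Probability that a random positive score exceeds a random negative score.

    Ties count as 0.5. An AUC of 1.0 means the two sets are perfectly separable.
    """
    pos = np.asarray(pos_scores, dtype=float)[:, None]
    neg = np.asarray(neg_scores, dtype=float)[None, :]
    return float(((pos > neg) + 0.5 * (pos == neg)).mean())

# Hypothetical 'ox' logits on real 'ox' images and on spurious-only images.
ox_logits = [9.1, 8.4, 7.9, 10.2]
spurious_logits = [2.0, 3.5, 8.0, 1.2]
score = auc(ox_logits, spurious_logits)
```

A lower score indicates that images containing only spurious features produce ‘ox’ logits that are harder to tell apart from those of genuine ‘ox’ images.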

Problematic concept reliance everywhere

The previous example highlights the presence of unexpected spurious correlations, such as the association of palm trees with ‘ox’. Expanding on this, we evaluate the alignment of model components with valid concepts across 26 other ImageNet classes, including ‘shovel’, ‘steel drum’ and ‘screwdriver’. Figure 3b presents the resulting highest alignment scores with a valid concept for neurons, where size again indicates relevance for the respective class. Notably, no class shows complete alignment of all relevant model components with valid concepts. In every case, spurious or background features are relevant, including ‘snow’ for ‘shovel’, ‘Afro-American person’ for ‘steel drum’, and ‘child’ for ‘screwdriver’. A comprehensive overview of the concepts used by the model is provided in Supplementary Note F.

Unaligned models are often challenging to interpret

Analysing popular pretrained models on ImageNet reveals substantial variation in alignment with valid expected concepts, often due to learned concepts that are neither clearly valid nor spurious, as shown for VGG-16 in Supplementary Note F. For instance, VGG-16 contains several polysemantic neurons that perform several roles in decision-making, which generally reduces alignment. More performant and wider models tend to have more specialized and monosemantic neurons, as quantified in ‘Evaluating human-interpretability of model components’, and exhibit higher alignment scores, as shown in Supplementary Note F, thus highlighting the link between interpretability and auditability. After assessing the issue of hidden concepts across components in Supplementary Note F.1.4 for ResNet50v2, we suggest that they have a minimal impact on audit faithfulness.

Towards robust and safe medical models

A popular medical use case for AI is melanoma detection in dermoscopic images, as shown in Fig. 4a. In the following, we demonstrate how to use SemanticLens to debug a VGG-16 model that is trained to discern melanoma from other irregular or benign (referred to as ‘other’) cases in a public benchmark dataset28,38,39.

a, The ABCDE rule is a popular guide to visual melanoma clues. We expect models to learn several concepts corresponding to the ABCDE rule, as well as other melanoma-unrelated indications (such as regular border) or spurious concepts, including hairs or a band-aid. b, In a semantic space visualized with a UMAP projection, we can identify valid concepts, such as blue-white veil for ‘melanoma’, but also spurious ones such as red skin or ruler. c, When investigating the importance of concepts, red skin or band-aid concepts are strongly used for the ‘other’ (non-melanoma) class. Ruler concepts are used with slightly higher relevance for ‘melanoma’. d, We can improve the safety and robustness of our model either by changing the model and removing spurious components or by retraining it on augmented data. Whereas both approaches lead to improved clean performance, the influence of artefacts is only substantially reduced through retraining. Images in a, b and d are adapted with permission from ref. 28 under a Creative Commons license CC BY 4.0 and ref. 39 under a Creative Commons license CC BY 4.0.

ABCDE rule for melanoma detection

Dermatologists have created guidelines for visual melanoma detection, such as the ABCDE rule, short for asymmetry, border, colour, diameter and evolving40. We will use SemanticLens to evaluate whether the model captures corresponding concepts, such as ‘asymmetric lesion’ (A), ‘ragged border’ (B), ‘blue-white veil’ (C), ‘large lesion’ (D) and ‘crusty surface’ (E). In addition, we also define concepts for benign conditions, other skin diseases and known spurious correlations41,42, including ‘hairs’, ‘band-aids’, ‘red-hued skin’, ‘rulers’, ‘vignetting’ and ‘skin markings’. Refer to Supplementary Note F.2.1 for a full list of concepts.

Finding bugs in medical models

We embed neurons in the last convolutional layer in VGG into the semantic space of a CLIP model trained on skin lesion data31 and probe for concepts using textual embeddings. As shown in Fig. 4b, the semantic embeddings are structured, aligning to concepts related to ‘irregular’ at the top (red), ‘melanoma’ at bottom left (blue) and ‘regular’ at bottom right (green). Relevant melanoma indicators, such as ‘blue-white veil’ and ‘irregular streaks’, emerge, alongside benign features like ‘regular border’. However, several spurious components are also detected: neuron 403 encodes for measurement scale bars, 508 detects ‘blue band-aids’ and 272 responds to red skin.

To quantify how concepts are used by the model, we computed their highest importance for predicting the ‘melanoma’ or ‘other’ class on the test set, as shown in Fig. 4c. Alarmingly, spurious concepts are highly relevant: ‘red skin’ and ‘blue band-aid’ are strongly used for ‘other’, whereas ‘measurement scale bar’ is slightly more strongly used for ‘melanoma’.

Model correction and evaluation

SemanticLens allows one to identify model components and associated data, which can be used to reduce the influence of background features, such as red skin, plasters and rulers. To debug the model43, we apply two approaches: (1) pruning 40 identified spurious neurons without retraining and (2) retraining on a cleaned and augmented dataset. For retraining, we remove training samples containing artefacts, identified by studying the highly activating samples of our labelled components. We further overlay hand-crafted artefacts onto training images to desensitize the model, as illustrated in Fig. 4d (left).
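As a minimal illustration of approach (1), the sketch below silences a set of flagged neurons by zeroing their activations at inference time. This is an assumption about how pruning could be realized, not a description of the implementation used here; the function name `prune_channels` and the toy activation values are hypothetical.

```python
def prune_channels(activations, spurious_ids):
    """Return a copy of one layer's activations with the flagged channels set to zero.

    activations  -- list of per-channel activation values for a single sample
    spurious_ids -- indices of channels identified as spurious (hypothetical input)
    """
    drop = set(spurious_ids)
    return [0.0 if k in drop else value for k, value in enumerate(activations)]


# Toy layer with four channels; channels 1 and 3 are assumed to be spurious.
layer_output = [0.3, 1.2, 0.0, 2.5]
print(prune_channels(layer_output, spurious_ids=[1, 3]))
```

In a deep learning framework the same effect would typically be obtained by masking the layer output or zeroing the corresponding weights; all remaining components stay unchanged, which is why no retraining is involved.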

The results in Fig. 4d (right) show that both strategies, pruning and retraining, lead to increased accuracy on a clean test set (without artefact samples), especially for melanoma (from 71.4% to 72.8%). We further modify the data by artificially inserting artefacts: cropping out rulers and plasters from real test samples and overlaying them onto clean test samples, as done in ref. 34. Additionally, for red skin, we introduce a reddish hue, as detailed in Supplementary Note F.2.3. Interestingly, the pruned model remains highly sensitive to artefacts, with the test accuracy for non-melanoma samples still dropping by over 20% when adding red colour. Although computationally more expensive, only retraining leads to a strong reduction in artefact sensitivity. Further details and discussions are provided in Supplementary Note F.2.3.

Evaluating human-interpretability of model components

Deciphering the meaning of concept examples \({\mathcal{E}}\) can be challenging, especially when neurons are polysemantic and encode for several semantics. We introduce a set of easily computable measures that assess how ‘clear’, ‘similar’ and ‘polysemantic’ concepts are perceived by humans, as inferred from their concept examples \({\mathcal{E}}\). Additionally, we introduce a measure to quantify the ‘redundancies’ present within a set of concepts. All measures are based on evaluating similarities of concept examples \({\mathcal{E}}\) in semantic space \({\mathcal{S}}\), with mathematical definitions provided in ‘Human-interpretability measures for concepts’.

Alignment of interpretability measures with human perception

Aiming to assess human-interpretability, we first evaluate the alignment between human judgements and our proposed measures (similarity, clarity and polysemanticity) through user studies. Overall, 218 participants were recruited through Amazon Mechanical Turk for 15-min tasks in which they evaluated concept examples from the ImageNet object detection task. For each interpretability measure, we designed an independent study consisting of both qualitative and quantitative experiments. Further details regarding the study design, the models used and the data-filtering procedures can be found in Supplementary Note G.1.

We find high alignment between our measures and human perception, with correlation scores above 0.74 (Fig. 5a), consistent with recent works using textual concept examples44. Regarding concept similarity, human-alignment varies across foundation models, namely DINOv2 (ref. 30; unimodal), CLIP-OpenAI23, CLIP-LAION45 and the most recent CLIP-Mobile29 (specific variants are reported in Supplementary Note G.1). Our results indicate that more recent and more performant CLIP models are also more aligned with human perception. Other hyperparameter choices, such as the similarity measure used, are compared in ‘Methods’. In an odd-one-out task, where participants identified outlier concepts, our similarity measures are often better than those of the participants, indicating that computational measures can be more reliable than humans. However, Amazon Mechanical Turk participants may prioritize speed over accuracy, potentially affecting performance.

a–c, ‘Clarity’ refers to how clear and easy it is to understand the common theme of concept examples. Polysemanticity indicates whether several distinct semantics are present in the concept examples. Similarity refers to the similarity of concepts. Redundancy describes the degree of redundancy in a set of concepts. a, Our computable measures align with human perception in user studies, resulting in correlation scores above 0.74. Generally, more recent and performant foundation models have higher correlation scores. For each scatter plot, we report the number n of data points. Each point corresponds to a study question and its average perceived user rating. b, Interpretability differs strongly for common pretrained models. Usually, ViTs or smaller and less performant convolutional models show lower interpretability. c, We can optimize model interpretability with respect to hyperparameter choices, such as dropout or activation sparsity regularization, during training. Although dropout leads to more redundancies besides improved clarity of concepts, applying a sparsity loss improves interpretability overall. Green arrows indicate a positive effect on interpretability, grey arrows a neutral effect and red arrows a negative effect.

Rating and improving interpretability

The difficulty of understanding the role of components in pretrained models can vary strongly, as observed in the sections ‘Understanding the inner knowledge of AI models’ and ‘Search capability’. This is confirmed by evaluating various popular neural networks trained on ImageNet using our introduced measures for the penultimate-layer neurons, as illustrated in Fig. 5b. Larger and broader models, like ResNet101, show higher degrees of redundancy, as more neurons per layer allow redundancies to increase, for example, to increase robustness. By contrast, narrow models, such as ResNet18, have a smaller neural basis, potentially leading to superimposed signals and higher polysemanticity46.

The convolution-based ResNet architecture shows higher concept clarity compared to transformer-based ViTs. The nonlinearities in the rectified linear units (ReLUs) in ResNet allow them to associate a high neuronal activation with a specific active input pattern. ViTs often refrain from using ReLUs, which enables them to superimpose signals throughout model components, ultimately leading to high polysemanticity47. Recent efforts to improve large language model interpretability are addressing this by introducing sparse autoencoders (SAEs) with ReLUs to enhance interpretability48. Additionally, more extensively trained models, such as ResNet50v2, show clearer, more interpretable components than ResNet50, indicating that training parameters may influence latent interpretability. We explore this further below.

Dropout regularization reduces overfitting by randomly setting a fraction of component activations to zero during training, which prevents a high reliance on a few features. Our results shown in Fig. 5c indicate that VGG-13 model components become more redundant but also clearer when dropout is applied during training on a subset of ImageNet (standard errors given by grey error bars for eight runs each). It can be expected that more redundancies form, as redundancies improve robustness when components are pruned. On the other hand, neurons are measured to become more class-specific and thus clearer. Notably, different architectures respond differently, with ResNet34 and ResNet50 less impacted. Qualitative examples of concepts, detailed training procedures and results are provided in Supplementary Fig. G.2.

L1 sparsity regularization on neuron activations, as common for SAEs, improves interpretability, resulting in neurons that are more specific, less polysemantic and less semantically redundant. We further investigate the effect of task complexity, number of training epochs and data augmentation on latent interpretability in Supplementary Note G.2.

Discussion

With SemanticLens, we propose to transfer the components of large machine learning models into a semantic representation, thus enabling a holistic understanding and evaluation of their inner workings. This transfer is made possible through recent foundation models that serve as domain experts and take the human out of interpretation loops that would be cognitively infeasible due to the vast number of components in modern deep neural networks. Especially useful are multimodal foundation models that allow users to search, annotate and label network components with textual descriptions. Foundation models are constantly being improved and becoming more efficient and applicable in scarcer data domains, such as medical data, or other data modalities, including audio and video49,50.

These unique capabilities offered by SemanticLens allow one to comprehensively audit the internal components of AI models. A multitude of spurious behaviours by popular pretrained models are hereby revealed, which emphasizes the need to understand every part of a model to ensure fairness, safety and robustness during application. To understand and audit models, we depend on the interpretability of the model components themselves. Although some models demonstrate higher interpretability, progress is still needed to develop truly interpretable models, especially regarding recent transformer architectures. However, post hoc architecture modifications or training regularizations are promising, continuing endeavours for achieving high interpretability in modern architectures. Our introduced human-interpretability measures are an effective tool for optimizing and understanding model architecture choices without relying on expensive user studies for evaluation. There are still many other hyperparameters that we leave for future work, including training with pretrained models, adversarial training, weight decay regularization and SAEs.

Ensuring trust and safety in AI systems necessitates the verification of internal components, as is the case in traditional engineered systems, such as aeroplanes. Achieving this requires holistic interpretability approaches like SemanticLens. Such methods enable one to understand and quantify the validity of latent components, thus improving interpretability and reducing potential spurious behaviours. However, several challenges remain for post hoc component-level explainable AI approaches, such as SemanticLens. These include the need to adopt meaningful evaluation metrics51, to adapt to generative models52 and to address the limitations of ‘post hoc’ versus ‘ante hoc’ interpretability53. Thus, there are ample opportunities for innovation in the next generation of explainable AI research54.

Methods

SemanticLens is based on encoding each component of a neural network and its associated concept as a single, universal semantic vector. As demonstrated in ‘Results’, this encoding enables a comprehensive analysis of the model, including multimodal search, textual labelling, concept comparisons across networks, alignment audits and interpretability evaluations. In the following, we provide methodological details, define key terms and discuss underlying assumptions.

Components of neural networks

Throughout ‘Results’ we view individual neurons or filters as the atomic components of machine learning models. However, components can be defined in different ways. More generally, a model component refers to any unit within a machine learning model, such as a neuron, convolutional filter or weight, that contributes to the computations by the model. It can also include directions in activations17, like those extracted through concept activation vectors20 or SAEs48. Notably, SemanticLens is applicable to any component representations as long as their role can be described with concept examples.

Describing the role of components through concept examples

In this work, the term ‘concept’ is defined as a structured pattern or combination of input features that can be associated with a specific model component. Concepts can be human-interpretable and represent semantic constructs like ‘dog’, ‘red’ or ‘striped pattern’. They are often defined through modalities like text or images. However, concepts may also be abstract or non-interpretable, and their role in model inference may not be easily understood from a human perspective.

To describe the role of a component and its associated concept, highly activating data samples are retrieved from the (training) database. As the concept represented by a component can occur in only a small part of a large input sample, we compute component-specific input attribution maps15 to identify the relevant part of the input and crop each data sample to exclude input features with less than 1% of the highest attribution value, as illustrated in Supplementary Fig. H.1a. Component-specific attributions are not available yet for ViTs, so instead, we use upsampled spatial maps, as discussed in Supplementary Note H. Concept examples for neuron k in layer ℓ are, thus, retrieved as

where the latent activations at layer ℓ ∈ {1, …, n} with \({k}_{\ell }\in {{\mathbb{N}}}^{+}\) neurons are given by \({{\mathcal{M}}}^{\ell }:X\to {{\mathbb{R}}}^{{k}_{\ell }}\), ‘crop’ denotes the cropping operation and \({{\rm{top}}}_{m}\) selects the m maximally activating samples of dataset \({\mathcal{D}}\subset X\).
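The retrieval step described above can be sketched in a few lines. The sketch assumes that each sample has already been reduced to one scalar activation for the neuron of interest and that a component-specific attribution map is available per sample; images and maps are plain nested lists, and the helper names (`top_m_indices`, `crop_box`, `concept_examples`) are hypothetical. Cropping to a bounding box around all pixels that reach 1% of the peak attribution is one possible reading of the cropping operation.

```python
def top_m_indices(activations, m):
    """Indices of the m samples with the highest activation for one neuron."""
    order = sorted(range(len(activations)), key=lambda i: activations[i], reverse=True)
    return order[:m]


def crop_box(attribution, rel_threshold=0.01):
    """End-exclusive bounding box (row0, row1, col0, col1) around all pixels whose
    attribution reaches rel_threshold times the highest attribution value.
    Assumes the highest attribution value is positive."""
    peak = max(max(row) for row in attribution)
    cutoff = rel_threshold * peak
    rows = [r for r, row in enumerate(attribution) if any(v >= cutoff for v in row)]
    cols = [c for c in range(len(attribution[0]))
            if any(row[c] >= cutoff for row in attribution)]
    return rows[0], rows[-1] + 1, cols[0], cols[-1] + 1


def concept_examples(images, activations, attributions, m):
    """Crop the m most activating samples to their relevant region."""
    examples = []
    for i in top_m_indices(activations, m):
        r0, r1, c0, c1 = crop_box(attributions[i])
        examples.append([row[c0:c1] for row in images[i][r0:r1]])
    return examples
```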

Transformation into a semantic space

In the second step, SemanticLens generates a universal semantic representation for each model component based on the concept examples. To do this, we employ a foundation model \({\mathcal{F}}\) that is assumed to serve as a semantic expert of the data domain and operates on the set of concept examples \({{\mathcal{E}}}_{k}\). For domains with sparse data, foundation models should be assessed for their ability to capture relevant semantics to ensure effectiveness, as demonstrated by the OpenCLIP benchmark55, or by concept labelling evaluations, such as in Supplementary Note D.4.

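As illustrated in Fig. 1a for step 2, we obtain the semantic representation of the kth neuron in layer ℓ as a single vector \({{\boldsymbol{\vartheta }}}_{k}\) in the latent space \({\mathcal{S}}\) of foundation model \({\mathcal{F}}\) (index ℓ omitted for clarity):

\({{\boldsymbol{\vartheta }}}_{k}=\frac{1}{| {{\mathcal{E}}}_{k}| }\sum _{{\bf{x}}\in {{\mathcal{E}}}_{k}}{\mathcal{F}}({\bf{x}})\in {\mathcal{S}}\qquad (2)\)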

Computing the mean over individual feature vectors \({\{{\mathcal{F}}({\bf{x}})\}}_{{\bf{x}}\in {{\mathcal{E}}}_{k}}\) (as also proposed in ref. 56) is usually more meaningful than using individual vectors (for example, for labelling as in Supplementary Note D). Averaging embeddings can be viewed as a smoothing operation, where noisy background signals are reduced, resulting in a better representation of the overall semantic meaning. Setting \(| {{\mathcal{E}}}_{k}| =30\) results in converged ϑk throughout ImageNet experiments, as detailed in Supplementary Note D.
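A minimal sketch of this averaging step is given below. The foundation model is represented by a hypothetical callable `embed` that maps one concept example to a feature vector (a plain list of floats); in practice this would be, for example, the image encoder of a CLIP model.

```python
def semantic_embedding(concept_examples, embed):
    """Mean of the foundation-model feature vectors of all concept examples.

    concept_examples -- iterable of (cropped) concept examples for one component
    embed            -- hypothetical callable returning a feature vector per example
    """
    features = [embed(x) for x in concept_examples]
    n = len(features)
    # zip(*features) iterates over embedding dimensions (columns).
    return [sum(column) / n for column in zip(*features)]


# Toy usage with an identity "encoder": two examples pointing in different directions.
theta = semantic_embedding([[1.0, 0.0], [0.0, 1.0]], embed=lambda x: x)
print(theta)
```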

From semantic space to model, predictions and data

The semantic space representation is inherently connected to the model components, which are themselves linked to model predictions and the data, as illustrated in Supplementary Fig. H.1a,c. We can, thus, identify all neurons that correspond to a concept (through search, as detailed in ‘Concept search, labelling and comparison’), filter this selection to those relevant for a decision output (through component-specific relevance scores \({\mathcal{R}}\) per data point; see ‘Explaining concept use through component-level relevance’ and step 3 in Fig. 1a) and, last, identify all data (\({\mathcal{E}}\)) that highly activate the corresponding group of model components.

Concept search, labelling and comparison

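As semantic embeddings ϑ are elements in a vector space, we measure similarity s directly with cosine similarity, which is also the design choice of CLIP (ref. 23):

\(s({{\boldsymbol{\vartheta }}}_{i},{{\boldsymbol{\vartheta }}}_{j})={s}_{\cos }({{\boldsymbol{\vartheta }}}_{i},{{\boldsymbol{\vartheta }}}_{j})=\frac{\langle {{\boldsymbol{\vartheta }}}_{i},{{\boldsymbol{\vartheta }}}_{j}\rangle }{\parallel {{\boldsymbol{\vartheta }}}_{i}\parallel \,\parallel {{\boldsymbol{\vartheta }}}_{j}\parallel }\qquad (3)\)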

Search: Given a set of semantic embeddings of model components \({{\mathcal{V}}}_{{\mathcal{M}}}=\{{{\boldsymbol{\vartheta }}}_{1},\ldots ,{{\boldsymbol{\vartheta }}}_{k}\}\) and an extra probing embedding ϑprobe representing a sought-after concept, we can now search for model components encoding the concept with

where we additionally subtract the similarity to a ‘null’ embedding ϑ<> representing background (noise) present in the concept examples if available. For text, for example, it is common to subtract the embedding of the empty template to remove its influence18, leading to more faithful labelling, as described in Supplementary Note D.4.
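The search operation can be sketched as a ranking of components by null-corrected cosine similarity. The sketch below is an illustration under assumptions: embeddings are plain lists of floats, the function names and the `top_k` parameter are hypothetical, and returning a ranked list (rather than a single best match) is a design choice of this example.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def search(component_embeddings, probe, null=None, top_k=3):
    """Rank components by similarity to the probe, optionally corrected by a null embedding.

    Returns a list of (component index, score), best match first.
    """
    scored = []
    for k, theta in enumerate(component_embeddings):
        score = cosine(probe, theta)
        if null is not None:
            score -= cosine(null, theta)  # remove background/template similarity
        scored.append((k, score))
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Toy usage: three components in a two-dimensional semantic space.
components = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(search(components, probe=[1.0, 0.0], null=[0.0, 1.0]))
```

With a text-capable foundation model, `probe` would be the embedding of a prompt such as ‘ruler’ and `null` the embedding of the empty prompt template.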

Label: To assign a textual label to a model component describing its encoded concept, a set of l predefined concept labels is embedded, resulting in \({{\mathcal{V}}}_{{\rm{probe}}}:= \{{{\boldsymbol{\vartheta }}}_{1}^{{\rm{probe}}},\ldots ,{{\boldsymbol{\vartheta }}}_{l}^{{\rm{probe}}}\}\subset {{\mathbb{R}}}^{d}\). Analogously to equation (4), each component is assigned the most aligned label from the predefined set, or none if the similarity falls below a certain threshold.
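Labelling can be sketched analogously: each component receives the label whose embedding scores highest, or no label if the best score stays below a threshold. The threshold value, the function names and the dictionary-based interface are assumptions made for this illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def assign_labels(component_embeddings, label_embeddings, null=None, threshold=0.0):
    """Map each component index to its best-matching label, or None below the threshold.

    label_embeddings -- dict from label text to its embedding (hypothetical interface)
    """
    assigned = {}
    for k, theta in enumerate(component_embeddings):
        best_label, best_score = None, -math.inf
        for label, probe in label_embeddings.items():
            score = cosine(probe, theta)
            if null is not None:
                score -= cosine(null, theta)
            if score > best_score:
                best_label, best_score = label, score
        assigned[k] = best_label if best_score >= threshold else None
    return assigned


labels = {"ruler": [1.0, 0.0], "hair": [0.0, 1.0]}
print(assign_labels([[1.0, 0.1], [1.0, 1.0]], labels, threshold=0.9))
```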

Compare: Two models \({\mathcal{N}}\) and \({\mathcal{M}}\) may be quantitatively compared by considering the number of components that were assigned to concept labels, as introduced by NetDissect14, or by measuring set similarity \({S}_{{{\mathcal{V}}}_{{\mathcal{M}}}\to {{\mathcal{V}}}_{{\mathcal{N}}}}\) based on the average maximal pairwise similarity:

which quantifies the degree to which the concepts encoded in model \({\mathcal{M}}\) are also encoded in model \({\mathcal{N}}\). Notably, the interpretability measures detailed in ‘Human-interpretability measures for concepts’ are another way to perform comparisons.
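The set similarity based on the average maximal pairwise similarity can be sketched as follows; the function names are hypothetical and embeddings are plain lists of floats. Note that the measure is directional: comparing model M to model N generally gives a different value than comparing N to M.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def set_similarity(embeddings_m, embeddings_n):
    """Average, over components of model M, of the best cosine match in model N."""
    best_matches = [max(cosine(theta, other) for other in embeddings_n)
                    for theta in embeddings_m]
    return sum(best_matches) / len(best_matches)


model_m = [[1.0, 0.0]]
model_n = [[1.0, 0.0], [0.0, 1.0]]
print(set_similarity(model_m, model_n))  # every concept of M is found in N
print(set_similarity(model_n, model_m))  # only half of N's concepts are found in M
```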

Explaining concept use through component-level relevance

So far, we have focused on understanding the relationship between inputs and components, that is, identifying input patterns that activate specific components. Together with the previously introduced functionalities, such as search, describe and compare, this provides insights into what a model can perceive. However, it is equally important to understand how components influence higher-layer components or output predictions.

To do this, we assign relevance scores \({\mathcal{R}}\) to components using feature attribution methods based on (modified) gradient backpropagation, such as LRP57, following the approach in ref. 15. These methods provide not only input attributions (for example, heat maps) but also relevance scores for all latent components (including neurons or directions in latent feature spaces) in a single explanatory step17. To simplify an explanation, attribution scores for components that were assigned the same label can be aggregated, as, for example, done in Fig. 2c.
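The aggregation of attribution scores over components that share a label can be illustrated with the following sketch. It assumes that per-component relevance scores for one prediction have already been computed by an attribution method; the dictionary-based interface, the function name and the toy values are hypothetical.

```python
from collections import defaultdict


def aggregate_by_label(relevance, labels, default="unlabelled"):
    """Sum component relevance scores per concept label.

    relevance -- dict from component index to relevance score for one prediction
    labels    -- dict from component index to assigned concept label
    """
    totals = defaultdict(float)
    for component, score in relevance.items():
        totals[labels.get(component, default)] += score
    return dict(totals)


# Toy values: two components labelled 'ruler', one 'band-aid', one without a label.
relevance = {403: 0.5, 17: 0.25, 508: -0.125, 99: 0.125}
labels = {403: "ruler", 17: "ruler", 508: "band-aid"}
print(aggregate_by_label(relevance, labels))
```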

This enables one to analyse how individual components or groups thereof contribute to specific predictions at the instance level or globally through aggregation across samples. Furthermore, attribution scores can be computed with respect to intermediate-layer component activations rather than final logits, which enables the construction of attribution graphs (as in Fig. 2c) that reveal the hierarchical structure and concept flow within the model.

Auditing concept alignment

As outlined in ‘Auditing concept alignment with expected reasoning’, it is important to measure how well the concepts used by a model are aligned with the expected behaviour. To compute concept alignment, we require a set of model embeddings \({{\mathcal{V}}}_{{\mathcal{M}}}\) and a set of expected valid and spurious semantic embeddings \({{\mathcal{V}}}_{{\rm{valid}}}\) and \({{\mathcal{V}}}_{{\rm{spur}}}\), respectively. For each model component k, we then compute the alignment scores:

Additionally, it is important to take into account how the components are used. We, thus, propose to retrieve the relevance of each model component during inference, for example, the relevance for predictions of a specific class. Optimally, all relevant components are aligned to valid concepts only, that is, \({a}_{{\rm{valid}}} > 0\) and \({a}_{{\rm{spur}}} < 0\). A high spurious alignment score \({a}_{{\rm{spur}}} > 0\) indicates potentially harmful model behaviour. Neurons that align to neither should be examined more closely, as they represent unexpected concepts.
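The audit logic can be sketched as below. The sketch assumes that each alignment score is the highest null-corrected cosine similarity between a component embedding and any embedding in the respective reference set; this form is an assumption chosen to be consistent with the search operation and with scores that can become negative, and it may differ in detail from the definition used here. All function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def alignment(theta, references, null):
    """Highest null-corrected similarity between theta and any reference embedding
    (assumed form of the alignment score)."""
    return max(cosine(theta, ref) - cosine(theta, null) for ref in references)


def audit(theta, valid, spurious, null):
    """Return (a_valid, a_spur, verdict) for one component embedding."""
    a_valid = alignment(theta, valid, null)
    a_spur = alignment(theta, spurious, null)
    if a_spur > 0:
        verdict = "spurious"      # potentially harmful behaviour
    elif a_valid > 0:
        verdict = "valid"
    else:
        verdict = "unexpected"    # aligned to neither set, inspect manually
    return a_valid, a_spur, verdict


valid, spurious, null = [[1.0, 0.0, 0.0]], [[0.0, 1.0, 0.0]], [0.0, 0.0, 1.0]
for theta in ([1.0, 0.0, 0.1], [0.0, 1.0, 0.1], [0.0, 0.0, 1.0]):
    print(audit(theta, valid, spurious, null))
```

In a full audit, the verdicts would be combined with the relevance of each component for the class of interest, so that only components that are actually used for a decision are reported.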

Note that we assume that the components comprehensively capture the relevant concepts used by the model. However, concepts may be distributed across several components. Isolating them (for example, through SAEs) is an active field of research58. Our analysis in Supplementary Note F.1.4 on 100 spurious concepts37 indicates that the neural basis of the examined ResNet50v2 model provides faithful results, with SAEs enhancing faithfulness and their combination proving even more effective.

Human-interpretability measures for concepts

We now introduce measures to capture the human-interpretability of concepts.

Concept clarity

The clarity measure aims to represent how easy it is to understand the role of a model component, that is, how easy it is to grasp the common theme of concept examples. Intuitively, clarity is low when there are many distracting (background) elements in the concept examples. Further, clarity is low when a concept is very abstract and many, at first glance, unrelated elements are shown throughout examples. Inspired by refs. 44,59,60, we compute semantic similarities in the set of concept examples as a measure of clarity. Cosine similarity serves here as a measure of how semantically similar two samples are in the latent space of the foundation model used. For the overall clarity score of neuron k, we compute the average pairwise semantic similarity \({s}_{\cos }\) (equation (3)) of the individual feature vectors \({V}_{k}={\left\{{{\bf{v}}}_{k,i}\right\}}_{i}\):

where the last expression is a formulation that is computationally less expensive and circumvents the need to compute large similarity matrices.
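The following sketch illustrates both ways of computing the average pairwise cosine similarity. The fast variant uses the identity that, for n unit vectors, the squared norm of their sum equals n plus the sum of all pairwise similarities with i ≠ j; this is one algebraically equivalent formulation and not necessarily the exact expression used here. Function names are hypothetical.

```python
import math
from itertools import combinations


def normalize(v):
    """Scale a vector (list of floats) to unit length."""
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def clarity_pairwise(vectors):
    """Average cosine similarity over all pairs of distinct feature vectors."""
    unit = [normalize(v) for v in vectors]
    sims = [sum(a * b for a, b in zip(u, w)) for u, w in combinations(unit, 2)]
    return sum(sims) / len(sims)


def clarity_fast(vectors):
    """Same value without forming the similarity matrix:
    (||sum_i u_i||^2 - n) / (n * (n - 1)) for unit vectors u_i."""
    unit = [normalize(v) for v in vectors]
    n = len(unit)
    summed = [sum(column) for column in zip(*unit)]
    squared_norm = sum(s * s for s in summed)
    return (squared_norm - n) / (n * (n - 1))


features = [[1.0, 0.0], [1.0, 1.0], [0.0, 2.0]]
print(clarity_pairwise(features), clarity_fast(features))
```

The fast variant needs a single pass over the n feature vectors, whereas the pairwise variant evaluates n(n − 1)/2 similarities.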

Concept similarity and redundancy

The semantic representation allows one to conduct comparisons across arbitrary sets of neurons without being restricted to neurons from identical layers or model architectures. In particular, it allows us to assess the degree of similarity between the concepts of two neurons k and j, which we define as

through cosine similarity. Based on similarity, we can further assess the degree of redundancy across the concepts of m neurons with the ϑ set \({\mathcal{V}}=\{{{\boldsymbol{\vartheta }}}_{1},\ldots ,{{\boldsymbol{\vartheta }}}_{m}\}\), which we define as

Notably, semantic redundancy might not imply functional redundancy. Even though two semantics are similar, they might correspond to different input features. For example, the stripes of a zebra or striped boarfish are semantically similar but might be functionally very different for a model that discerns both animals.
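A minimal sketch of a redundancy score is given below. It assumes that redundancy is measured as the average, over all neurons, of the highest similarity to any other neuron in the set; this form is an assumption for illustration, and the function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def redundancy(embeddings):
    """Average over neurons of the maximal similarity to any *other* neuron
    (assumed definition; requires at least two embeddings)."""
    best = []
    for i, theta in enumerate(embeddings):
        best.append(max(cosine(theta, other)
                        for j, other in enumerate(embeddings) if j != i))
    return sum(best) / len(best)


# Two neurons encode the same concept, a third one is unrelated.
print(redundancy([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]))
```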

Concept polysemanticity

A neuron is considered polysemantic if several semantic directions exist in the concept example set. Formally, we define a neuron as polysemantic if subsets of \({{\mathcal{E}}}_{k}\) can be identified that provide diverging ϑ’s. The polysemanticity measure is defined as

where \({V}_{k}^{\;(i)}\subseteq {V}_{k}\) for i = 1, …, h is a subset of the embedded concept examples, generated by an off-the-shelf clustering method, where we use h = 2 throughout the experiments. Alternatively, as proposed by ref. 59, polysemanticity can be measured as an increase in the clarity of each set of concept examples, which, however, performs worse in the user study evaluation as detailed in Supplementary Note G.1.
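One way to turn this description into a computation is sketched below. It assumes that cluster assignments are provided by any off-the-shelf clustering method and scores polysemanticity as one minus the average cosine similarity between the cluster-wise mean embeddings; this concrete form is an assumption for illustration and may differ from the definition used here. Function names are hypothetical.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def mean_vector(vectors):
    """Element-wise mean of a list of equally sized vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def polysemanticity(vectors, cluster_ids):
    """1 - average similarity between cluster-wise mean embeddings (assumed form).

    vectors     -- embedded concept examples of one neuron
    cluster_ids -- cluster assignment per vector, e.g. from k-means with h = 2
    """
    clusters = {}
    for vector, cluster in zip(vectors, cluster_ids):
        clusters.setdefault(cluster, []).append(vector)
    means = [mean_vector(members) for members in clusters.values()]
    sims = [cosine(u, w) for u, w in combinations(means, 2)]
    return 1.0 - sum(sims) / len(sims)


# Two clearly separated semantics versus a single shared direction.
print(polysemanticity([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]], [0, 0, 1, 1]))
print(polysemanticity([[1.0, 0.0], [2.0, 0.0]], [0, 1]))
```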

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

The datasets used in this study are publicly available from third-party sources. The user study, as well as the experiments described in the sections ‘Understanding the inner knowledge of AI models’, ‘Auditing concept alignment with expected reasoning’ and ‘Evaluating human-interpretability of model components’ were conducted using the ImageNet Large Scale Visual Recognition Challenge 2012 dataset27. This dataset is available from the official ImageNet website image-net.org, where specific download instructions and terms of use can be found. The medical use case was demonstrated with the ISIC 2019 dataset28,38,39, which can be acquired from the official ISIC Challenge website (challenge.isic-archive.com). The Broden14 and the Paco61 datasets are required for the extended experiments described in Supplementary Note D. The Broden dataset is available at github.com/CSAILVision/NetDissect-Lite and the Paco dataset at github.com/facebookresearch/paco. More information about the data and models used in the experiments can be found in Supplementary Note B.

Code availability

We provide an open-source toolbox for the scientific community written in Python and based on PyTorch, Zennit-CRP and Zennit. The GitHub repository containing our implementations of SemanticLens is publicly available via https://github.com/jim-berend/semanticlens (ref. 62). All experiments were conducted with Python v.3.10.12, zennit-crp v.0.6, Zennit v.0.4.6 and PyTorch v.2.2.2.

References

Lapuschkin, S. et al. Unmasking Clever Hans predictors and assessing what machines really learn. Nat. Commun. 10, 1096 (2019).

Kauffmann, J. et al. Explainable AI reveals Clever Hans effects in unsupervised learning models. Nat. Mach. Intell. 7, 412–422 (2025).

Borys, K. et al. Explainable AI in medical imaging: an overview for clinical practitioners–beyond saliency-based XAI approaches. Eur. J. Radiol. 162, 110786 (2023).

Tegmark, M. & Omohundro, S. Provably safe systems: the only path to controllable AGI. Preprint at https://arxiv.org/abs/2309.01933 (2023).

Hernández-Orallo, J. The Measure of All Minds: Evaluating Natural and Artificial Intelligence (Cambridge Univ. Press, 2017).

Samek, W., Montavon, G., Vedaldi, A., Hansen, L. K. & Müller, K.-R. (eds) Explainable AI: Interpreting, Explaining and Visualizing Deep Learning (Springer, 2019).

Gunning, D. et al. XAI–explainable artificial intelligence. Sci. Robot. 4, 7120 (2019).

Bricken, T. et al. Towards monosemanticity: decomposing language models with dictionary learning. Transformer Circuits Thread https://transformer-circuits.pub/2023/monosemantic-features (2023).

Miller, T. Explanation in artificial intelligence: insights from the social sciences. Artif. Intell. 267, 1–38 (2019).

Bereska, L. & Gavves, S. Mechanistic interpretability for AI safety-a review. Trans. Mach. Learn. Res. https://openreview.net/forum?id=ePUVetPKu6 (2024).

Ramaswamy, V. V., Kim, S. S., Fong, R. & Russakovsky, O. Overlooked factors in concept-based explanations: dataset choice, concept learnability, and human capability. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 10932–10941 (IEEE, 2023).

Friedrich, F., Stammer, W., Schramowski, P. & Kersting, K. A typology for exploring the mitigation of shortcut behaviour. Nat. Mach. Intell. 5, 319–330 (2023).

Nguyen, A., Yosinski, J. & Clune, J. in Explainable AI: Interpreting, Explaining and Visualizing Deep Learning (eds Samek, W. et al.) 55–76 (Springer, 2019).

Bau, D., Zhou, B., Khosla, A., Oliva, A. & Torralba, A. Network dissection: quantifying interpretability of deep visual representations. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 6541–6549 (IEEE, 2017).

Achtibat, R. et al. From attribution maps to human-understandable explanations through concept relevance propagation. Nat. Mach. Intell. 5, 1006–1019 (2023).

Fel, T. et al. Craft: concept recursive activation factorization for explainability. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2711–2721 (IEEE, 2023).

Fel, T. et al. A holistic approach to unifying automatic concept extraction and concept importance estimation. In Proc. Advances in Neural Information Processing Systems, Vol. 37 (eds Oh, A. et al.) 54805–54818 (Curran Associates, 2024).

Ahn, Y. H., Kim, H. B. & Kim, S. T. WWW: a unified framework for explaining what where and why of neural networks by interpretation of neuron concepts. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 10968–10977 (IEEE, 2024).

Koh, P. W. & Liang, P. Understanding black-box predictions via influence functions. In Proc. International Conference on Machine Learning (eds Precup, D. et al.) 1885–1894 (PMLR, 2017).

Kim, B. et al. Interpretability beyond feature attribution: quantitative testing with concept activation vectors (TCAV). In Proc. International Conference on Machine Learning (eds Dy, J. G. et al.) 2668–2677 (PMLR, 2018).

Li, Y. & Goel, S. Making it possible for the auditing of AI: a systematic review of AI audits and AI auditability. Inf. Syst. Front. 27, 1121–1151 (2025).

Anwar, U. et al. Foundational challenges in assuring alignment and safety of large language models. Trans. Mach. Learn. Res. https://openreview.net/forum?id=oVTkOs8Pka (2024).

Radford, A. et al. Learning transferable visual models from natural language supervision. In Proc. International Conference on Machine Learning (eds Meila, M. et al.) 8748–8763 (PMLR, 2021).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 770–778 (IEEE, 2016).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proc. International Conference on Learning Representations (eds Bengio, Y. et al.) (OpenReview.net, 2015).

Dosovitskiy, A. et al. An image is worth 16 × 16 words: transformers for image recognition at scale. In Proc. International Conference on Learning Representations (OpenReview.net, 2021).

Deng, J. et al. ImageNet: a large-scale hierarchical image database. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 248–255 (IEEE, 2009).

Tschandl, P., Rosendahl, C. & Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 5, 180161 (2018).

Vasu, P. K. A., Pouransari, H., Faghri, F., Vemulapalli, R. & Tuzel, O. Mobileclip: fast image-text models through multi-modal reinforced training. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 15963–15974 (IEEE, 2024).

Oquab, M. et al. DINOv2: learning robust visual features without supervision. Trans. Mach. Learn. Res. https://openreview.net/forum?id=a68SUt6zFt (2024).

Yang, Y. et al. A textbook remedy for domain shifts: knowledge priors for medical image analysis. In Proc. Advances in Neural Information Processing Systems, Vol. 37 (eds Globerson, A. et al.) 90683–90713 (Curran Associates, 2024).

Kuhn, L., Sadiya, S., Schlotterer, J., Seifert, C. & Roig, G. Efficient unsupervised shortcut learning detection and mitigation in transformers. Preprint at https://arxiv.org/abs/2501.00942 (2025).

Stammer, W., Schramowski, P. & Kersting, K. Right for the right concept: revising neuro-symbolic concepts by interacting with their explanations. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 3619–3629 (IEEE, 2021).

Pahde, F., Dreyer, M., Samek, W. & Lapuschkin, S. Reveal to revise: an explainable AI life cycle for iterative bias correction of deep models. In Proc. International Conference on Medical Image Computing and Computer-Assisted Intervention (eds Greenspan, H. et al.) 596–606 (Springer, 2023).

Kopf, L. et al. Cosy: evaluating textual explanations of neurons. In Proc. Advances in Neural Information Processing Systems, Vol. 37 (eds Globerson, A. et al.) 34656–34685 (Curran Associates, 2024).

Liu, Z. et al. Towards understanding grokking: an effective theory of representation learning. In Proc. Advances in Neural Information Processing Systems, Vol. 35 (eds Koyejo, S. et al.) 34651–34663 (Curran Associates, 2022).

Neuhaus, Y., Augustin, M., Boreiko, V. & Hein, M. Spurious features everywhere-large-scale detection of harmful spurious features in ImageNet. In Proc. IEEE International Conference on Computer Vision 20235–20246 (IEEE, 2023).

Codella, N. C. et al. Skin lesion analysis toward melanoma detection. In Proc. IEEE 15th International Symposium on Biomedical Imaging 168–172 (IEEE, 2018).

Hernández-Pérez, C. et al. Bcn20000: dermoscopic lesions in the wild. Sci. Data 11, 641 (2024).

Duarte, A. F. et al. Clinical ABCDE rule for early melanoma detection. Eur. J. Dermatol. 31, 771–778 (2021).

Cassidy, B., Kendrick, C., Brodzicki, A., Jaworek-Korjakowska, J. & Yap, M. H. Analysis of the ISIC image datasets: usage, benchmarks and recommendations. Med. Image Anal. 75, 102305 (2022).

Kim, C. et al. Transparent medical image AI via an image-text foundation model grounded in medical literature. Nat. Med. 30, 1154–1165 (2024).

Spinner, T., Fürst, D. & El-Assady, M. iNNspector: visual, interactive deep model debugging. Preprint at https://arxiv.org/abs/2407.17998 (2024).

Li, M. et al. Evaluating readability and faithfulness of concept-based explanations. In Proc. 2024 Conference on Empirical Methods in Natural Language Processing (eds Al-Onaizan, Y. et al.) 607–625 (ACL, 2024).

Schuhmann, C. et al. LAION-5B: an open large-scale dataset for training next generation image-text models. In Proc. Advances in Neural Information Processing Systems, Vol. 35 (eds Koyejo, S. et al.) 25278–25294 (Curran Associates, 2022).

Elhage, N. et al. Toy models of superposition. Transformer Circuits Thread https://transformer-circuits.pub/2022/toy_model/index.html (2022).

Scherlis, A., Sachan, K., Jermyn, A. S., Benton, J. & Shlegeris, B. Polysemanticity and capacity in neural networks. Preprint at https://arxiv.org/abs/2210.01892 (2022).

Huben, R., Cunningham, H., Smith, L. R., Ewart, A. & Sharkey, L. Sparse autoencoders find highly interpretable features in language models. In Proc. International Conference on Learning Representations (OpenReview.net, 2023).

Wu, Y. et al. Large-scale contrastive language-audio pretraining with feature fusion and keyword-to-caption augmentation. In Proc. IEEE International Conference on Acoustics, Speech and Signal Processing 1–5 (IEEE, 2023).

Xu, H. et al. VideoCLIP: contrastive pre-training for zero-shot video-text understanding. In Proc. 2021 Conference on Empirical Methods in Natural Language Processing (eds Moens, M.-F. et al.) 6787–6800 (ACL, 2021).

Nauta, M. et al. From anecdotal evidence to quantitative evaluation methods: a systematic review on evaluating explainable AI. ACM Comput. Surv. 55, 295 (2023).

Amara, K., Sevastjanova, R. & El-Assady, M. Challenges and opportunities in text generation explainability. In Proc. World Conference on Explainable Artificial Intelligence (eds Longo, L. et al.) 244–264 (Springer, 2024).

Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 1, 206–215 (2019).

Longo, L. et al. Explainable artificial intelligence (XAI) 2.0: a manifesto of open challenges and interdisciplinary research directions. Inf. Fusion 106, 102301 (2024).

Ilharco, G. et al. OpenCLIP. Zenodo https://doi.org/10.5281/zenodo.5143773 (2021).

Park, H. et al. Concept evolution in deep learning training: a unified interpretation framework and discoveries. In Proc. 32nd ACM International Conference on Information and Knowledge Management (eds Frommholz, I. et al.) 2044–2054 (ACM, 2023).

Bach, S. et al. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS ONE 10, e0130140 (2015).

Sharkey, L. et al. Open problems in mechanistic interpretability. Preprint at https://arxiv.org/abs/2501.16496 (2025).

Dreyer, M., Purelku, E., Vielhaben, J., Samek, W. & Lapuschkin, S. PURE: turning polysemantic neurons into pure features by identifying relevant circuits. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) 8212–8217 (IEEE, 2024).

Kalibhat, N. et al. Identifying interpretable subspaces in image representations. In Proc. International Conference on Machine Learning, Vol. 202 (eds Krause, A. et al.) 15623–15638 (PMLR, 2023).

Ramanathan, V. et al. PACO: parts and attributes of common objects. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition 7141–7151 (IEEE, 2023).

Berend, J. & Dreyer, M. SemanticLens software. Zenodo https://zenodo.org/records/15422641 (2025).

Acknowledgements

We thank O. Hein for fruitful discussions and for developing a public demo of SemanticLens, available at https://semanticlens.hhi-research-insights.eu.

Funding

Open access funding provided by Fraunhofer-Gesellschaft zur Förderung der angewandten Forschung e.V.

Author information

Contributions

M.D., J.B., W.S., S.L., T.W. and J.V. were responsible for the conceptualization and methodology. M.D., J.B., W.S., J.V., S.L. and T.L. designed the experiments. J.B., M.D. and T.L. analysed the data and produced the software. W.S., S.L. and T.W. were responsible for supervision and funding acquisition. All authors participated in writing the original draft and revising the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Jaesik Choi and Ribana Roscher for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary information including related work, experimental details, Supplementary Figs. C.1–H.1 and Tables A.1–H.1.

Supplementary Code 1

Code for reproducing the experimental results.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dreyer, M., Berend, J., Labarta, T. et al. Mechanistic understanding and validation of large AI models with SemanticLens. Nat Mach Intell 7, 1572–1585 (2025). https://doi.org/10.1038/s42256-025-01084-w

DOI: https://doi.org/10.1038/s42256-025-01084-w