Abstract

Background

Measuring clinicians’ experiences of providing care and their well-being (e.g., job satisfaction, fulfillment) offers insight into how the practice environment affects them, which can support workforce retention, patient safety, and care quality. However, this requires valid measurement instruments. This systematic review identified validated self-reported questionnaires designed to assess clinician well-being and its influencing factors.

Methods

Psychometric studies in English or French on measurement instruments addressing factors that influence clinician well-being, as proposed by the National Academy of Medicine, were included. Studies published between 2013 and 2023 were retrieved in December 2023 by searching these databases: CINAHL, Embase, HaPI, MEDLINE, PsycINFO, Mental Measurements Yearbook, and APA PsycTests. Study selection was completed by two independent reviewers. Results were summarized narratively and in tables and figures. The quality of psychometric studies was assessed by the number of measurement properties addressed. The review protocol was registered with INPLASY® (202410047).

Results

Of the 10,441 records identified, 136 studies are included. The most represented countries are the USA (27.2%), Spain (11.0%), Canada (5.9%), and Australia (5.9%). Most studies (55.9%) focus on instruments intended for clinicians regardless of profession. Among profession-specific instruments (44.1%), nurses and physicians are the main targets. The most common domains are (1) ‘Learning/practice environment’ (38.2%), (2) ‘Healthcare responsibilities’ (21.3%), and (3) ‘Organizational factors’ (19.1%). The most frequently addressed measurement properties are (1) internal consistency (88.2%), (2) structural validity (75.7%), and (3) content validity (68.4%).

Conclusions

Many tools for measuring clinician well-being exist, but few are fully validated. The results of this review provide a foundation to support ongoing psychometric evaluation and cross-cultural adaptation.

Plain Language Summary

Society and healthcare services are evolving rapidly, requiring clinicians to constantly adapt and placing them under continuous pressure. To maintain safe, high-quality patient care, it is essential to investigate the factors that influence clinicians’ well-being at work. This requires valid tools to measure clinician well-being. We conducted a literature review to identify the tools currently available worldwide. Our results show that most of these tools are in English and that the largest share of studies comes from the United States. Moreover, a large proportion of tools focus on physicians and nurses. Because healthcare organization varies between countries, it is important to have valid tools adapted to each country’s cultural context and language. We therefore identify a need for cross-cultural adaptation of these tools to multiple languages and care settings. Additionally, there should be profession-specific tools for various healthcare providers (e.g., pharmacists, dentists, physiotherapists), not only for physicians and nurses. Improving these tools will enable better assessment of clinician well-being, which will benefit clinicians and the patients they treat.


Introduction

Clinicians, including physicians, nurses, and allied healthcare professionals who directly provide care to patients1, face work that generates substantial stress and imposes a heavy cognitive and emotional burden2. The aging population, the shortage of clinicians3, and events such as the COVID-19 pandemic have repeatedly strained the health care system4. Under chronic strain, there is little room for recovery and growth, and clinicians are in constant survival mode5. Notably, more than 100,000 nurses left the profession in the United States between 2021 and 2023, the highest attrition rate since the 1970s6.

To ensure the continuity and quality of care for the population, it is essential to recognize and respect the needs of clinicians, fostering a supportive work environment that promotes their well-being and professional satisfaction2. In 2007, the Institute for Healthcare Improvement (IHI) introduced the Triple Aim framework to optimize the health system by enhancing patient experience, improving population health, and reducing per capita healthcare costs7. The Triple Aim evolved into the Quadruple Aim in 2014 to include clinician well-being8, which is considered essential for achieving the other three aims and enhancing the quality of care8. The Quadruple Aim subsequently became the Quintuple Aim in 2021 with the addition of health equity9,10.

In recent years, considerable attention has focused on improving patient health outcomes and experience through Patient-Reported Outcome Measures (PROMs) and Patient-Reported Experience Measures (PREMs)11,12,13,14,15. However, less attention has been given to Clinician-Reported Experience Measures (CREMs), instruments designed to capture clinicians’ experience of providing care16, which are essential for measuring and achieving the Quintuple Aim in the context of a learning health system10,17 (Fig. 1). In published research, clinician experience is often contextualized within specific events or care settings (e.g., experience of telepsychiatry, organizational changes, implementation of new interventions)18. Few authors have defined what constitutes clinician well-being as a whole, using a holistic perspective that integrates multiple dimensions of work experience. For example, clinician well-being encompasses job satisfaction, the search for meaning in one’s work, engagement at work, and professional fulfillment19,20. Among the very few frameworks specifically proposed to define the domains of clinician well-being and identify its influencing factors, the National Academy of Medicine (NAM) framework21 stands out as particularly relevant. It offers a comprehensive perspective by addressing key domains and influencing factors of clinician well-being (organizational factors, learning/practice environment, healthcare responsibilities, society & culture, rules and regulations, skills and abilities, and personal factors). For example, personal factors include psychological health (e.g., burnout) as well as social support, work-life integration, level of engagement, and personal values.
With its seven domains, the NAM framework21 is consistent with the two principal schools of thought in the definition of well-being, namely hedonic well-being22 and eudaimonic well-being23, as reflected in concepts such as autonomy, the search for meaning, mental health, emotional responses, and others22,23. Thus, its holistic approach encompasses multiple dimensions of work experience and well-being, and it clearly outlines factors that are important for healthcare employers and organizations to consider.

To accurately reflect clinical settings and provide insights into organizational change, it is crucial to validly measure clinician well-being and its influencing factors, as this has the potential to influence workforce retention, patient safety, and care quality24,25. However, valid measurement instruments (self-administered questionnaires) must first be available. To date, various systematic reviews and syntheses have examined clinician-reported experience measures available in the literature18,26,27. The systematic review by Wang et al.26 covered 13 instruments measuring quality of life at work that were designed for use by clinicians26. The systematic review by Jarden et al.27 focused on the well-being of workers (including, but not limited to, clinicians) and concluded that while several instruments are available to measure well-being (n = 18), the methodological quality of their development and validation was generally inadequate27. However, the authors did not use a specific framework to delineate the concept of clinician well-being. As a result, some references that did not explicitly include the keyword “well-being” but assessed related concepts, such as burnout or workplace climate, may have been overlooked. A rapid review by Pervaz Iqbal et al.18 focused on clinicians’ experience of providing care18. The authors emphasized defining clinician experience and identified instruments, but did not specifically focus their search strategy on instruments. Notably, neither of these last two reviews searched the Cumulative Index to Nursing and Allied Health Literature (CINAHL) database, a key resource for literature on occupational well-being, including stress and burnout among the nursing and allied health workforce28.
According to the findings of Pervaz Iqbal et al.18, further work is needed to study clinician experience in a holistic sense, and to understand this experience on a continuum rather than in the context of episodes of care or organizational change18.

Guided by Peter Drucker’s assertion that “what gets measured gets managed”29, this systematic review aims to contribute to a culture of constructive measurement of clinician well-being, founded on the belief that meaningful improvement begins with understanding the starting point. The objective of this systematic review was therefore to identify validated self-reported measurement instruments designed to assess clinicians’ well-being and its influencing factors. Our methodology is distinguished by its use of the well-established clinician well-being framework proposed by the National Academy of Medicine (2018)21, which allowed a structured and comprehensive analysis of the instruments in relation to key factors influencing clinician well-being.

This systematic review is the first to comprehensively identify validated self-reported instruments designed to assess clinician well-being and its influencing factors. It reveals a large number of instruments and constructs, limited assessment of measurement properties per study, underrepresentation of certain factors from the National Academy of Medicine’s framework, and a focus on nurses and physicians. Few instruments are validated in other populations, only one study includes sex or gender subgroup analyses, and most tools are available only in English.

Methods

This work is reported according to the Guideline for reporting systematic reviews of outcome measurement instruments (PRISMA-COSMIN)30, and followed the COSMIN methodology for systematic reviews of patient-reported outcome measures31. The study was registered in the International Platform for Registering Systematic Reviews and Meta-Analysis Protocols (INPLASY202410047) and can be accessed at: https://inplasy.com/inplasy-2024-1-0047/. The conduct of this review did not require ethics approval or informed consent from human participants.

Eligibility criteria

To be included in the systematic review, peer-reviewed journal articles had to be original investigations reporting on the measurement properties of self-administered measurement instruments (questionnaires) aimed at assessing the well-being of clinicians (including nurses, physicians, and allied healthcare professionals who directly provide care to patients1). Psychometric studies had to be published in English or in French in order to reflect the bilingual context of Canada and our team’s working environment. Included studies also had to report at least one measurement property as defined by the COnsensus-based Standards for the selection of health Measurement Instruments (COSMIN) Initiative32,33: (1) content validity, (2) structural validity, (3) internal consistency, (4) cross-cultural validity, (5) reliability (test-retest), (6) measurement error, (7) criterion validity, (8) hypothesis testing for construct validity, (9) responsiveness. Accordingly, eligible studies included those that either developed a new instrument and evaluated one or more of its psychometric properties, or validated an existing instrument in a new context or population while assessing one or more of its psychometric properties.

Applying the selection criteria without a framework to determine whether or not an instrument is eligible could have led to ambiguity. Therefore, to delineate which constructs to include or exclude, factors influencing clinician well-being proposed by the National Academy of Medicine (2018)21 were used as the study framework. The seven groups of factors include: (1) organizational factors, (2) learning/practice environment, (3) healthcare responsibilities, (4) society & culture, and (5) rules and regulations as ‘external factors’, and (6) skills and abilities and (7) personal factors as ‘individual factors’21.

Psychometric studies on generic mental health instruments, which may also be applicable to patients, were not targeted as we focused on instruments specifically sensitive to the unique context of healthcare work. Instruments aiming to measure non-clinical staff only (e.g., administrative staff, managers, auxiliary staff), or professionals who were still in training only (e.g., medical residents, interns) were excluded. Also, very specific instruments covering clinician experiences of treating specific diseases or attitudes towards specific treatments were excluded.

Information sources and search strategy

Peer-reviewed journal articles published between 2013 and 2023 were identified on December 15th 2023 by searching the following computerized databases: CINAHL (EBSCOhost), Embase (Ovid), HaPI (Ovid), MEDLINE (Ovid), PsycINFO (Ovid), and Mental Measurements Yearbook (Ovid). The APA PsycTests (APA PsycNet) database, which contains descriptions of psychological tests and measures, was also consulted. The search strategy (Supplementary Methods) was developed by the research team in collaboration with an experienced medical librarian and included several synonyms for: (1) clinicians (including general keywords for healthcare staff and specific keywords for physicians, nurses, pharmacists, midwives, and social workers), (2) well-being [keywords inspired by the work of Pervaz Iqbal et al. (2020)18 and by the National Academy of Medicine (2018) framework of well-being and its influencing factors21], and (3) measurement properties of instruments.

Although they were not used as exclusion criteria when screening the records, three filters were applied during the electronic search to keep the review feasible: (1) studies published in the past 10 years (2013–2023), to ensure that the review reflected the current state of knowledge and to streamline screening (this filter reduced the number of results by nearly 50% in some databases, yet still left 10,441 records to review); (2) studies from Organisation for Economic Co-operation and Development (OECD) countries34, to support comparability with the Canadian context in which this review was conducted; and (3) primary care settings, as they involve a broad range of clinicians and represent the frontline of patient care.

All records were imported into the citation management software EndNote®, which facilitated the initial removal of duplicates. Records were then exported to the Rayyan® web and mobile systematic review application, where a second round of duplicates was removed.

Study selection

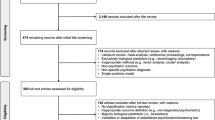

The screening and selection process was carried out by trained reviewers with expertise in primary care nursing (AB), social work (CA), quantitative research (MG-P), biostatistics (HLNN), and physical rehabilitation (LF), under the direction of the principal investigator with expertise in epidemiology and validation of instruments (AL). Using Rayyan®, two reviewers independently screened the titles and abstracts of all records retrieved from the electronic databases. At the end of this step, conflicts were resolved by consensus and discussion with a third reviewer. All records selected during title-abstract screening were then assessed in full text for inclusion by two independent reviewers (with discussion with a third reviewer if needed). Since the APA PsycTests database search results are presented as instrument summaries citing peer-reviewed articles, rather than as individual peer-reviewed journal articles themselves, and since the results of this database are not directly exportable into EndNote®/Rayyan®, records management was performed manually and in parallel by two independent reviewers and is presented as an add-on within the systematic review flow diagram (Fig. 2). Peer-reviewed articles were retrieved for all included instrument summaries.

* Since the APA PsycTests database search results are operationalized in the form of instrument short reviews rather than individual peer-reviewed journal articles, and since the results of this database are not directly exportable into EndNote®/Rayyan®, records management was performed manually in parallel and is presented as an add-on within the systematic review flow diagram. The database led to the retrieval of 149 instrument records, among which 55 were kept based on the abstracts (by two independent authors who reached consensus on any conflicts). Those 55 instruments were covered in 51 articles, 43 of which were not already retrieved in the main electronic search.

Original articles not retrieved by the search but encountered during the preparation of this systematic review could also be added (n = 1 in our case).

Data extraction process

The standardized extraction form used in this study was adapted from extraction tables proposed by COSMIN35. Due to the very large number of instruments retrieved, only the items most relevant to the objectives of the present review were retained for feasibility reasons (see ‘Data items’ section). The extraction tool was pilot-tested, and an explanatory video was developed to help reviewers. Detailed definitions of each variable to be extracted were used by the reviewers to standardize data collection. Data were extracted by one reviewer and then verified by a second reviewer. Conflicts were resolved by consensus and discussion with a third reviewer. On occasion, data had to be confirmed with the authors of the eligible articles (e.g., the language of the instrument was not always explicitly specified, and sometimes the instrument appeared in English in the article even though it was used in a country where English is not an official language).

Data items

For each eligible study, the following information was retrieved: author(s); date of publication; name of the instrument; data collection country; instrument language; whether it was a cross-cultural adaptation (since the review was conducted by a Canadian team, attention was given to instruments available in French); measured construct and total number of items; subscales and number of items; target population of the instrument; psychometric study population and size; study setting; and whether previous studies had been published about the instrument, with the URL of the first article. For each instrument, information on the following COSMIN measurement properties32,33 was extracted where applicable: (1) content validity (degree to which the content of an instrument is an adequate reflection of the construct to be measured); (2) structural validity (degree to which the scores are an adequate reflection of the dimensionality of the construct to be measured); (3) internal consistency (degree of interrelatedness among the items); (4) cross-cultural validity (degree to which the performance of the items of a translated or culturally adapted instrument is an adequate reflection of the performance of the items of the original version of the instrument); (5) reliability (extent to which scores for participants who have not changed are the same for repeated measurement under several conditions over time, i.e., test-retest; interrater reliability was not applicable as this review focused on self-reported measures); (6) measurement error (systematic and random error of a participant’s score that is not attributed to true changes in the construct to be measured); (7) criterion validity (degree to which the scores are an adequate reflection of a ‘gold standard’); (8) hypothesis testing for construct validity (degree to which the scores are consistent with hypotheses, i.e., relationships to scores of other instruments, or differences between relevant groups); and (9) responsiveness (ability to detect change over time in the construct to be measured). When available, measurement properties were extracted by sex, gender identity, or other subgroups.
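Internal consistency was the most frequently reported property in this review. It is commonly quantified with Cronbach's alpha, although the review does not prescribe a particular statistic. As an illustration only, the Python sketch below computes alpha from a respondents × items score matrix using the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The function name and the toy scores are hypothetical and do not come from any included study.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (hypothetical helper).

    scores[r][i] is respondent r's answer to item i.
    Sample variances (n - 1 denominator) are used consistently,
    so the ratio of variances is unaffected by that choice.
    """
    n_items = len(scores[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item across respondents
    item_vars = [variance([row[i] for row in scores]) for i in range(n_items)]
    # Variance of each respondent's total (summed) score
    total_var = variance([sum(row) for row in scores])
    return n_items / (n_items - 1) * (1 - sum(item_vars) / total_var)


# Toy data: 5 respondents answering a hypothetical 3-item scale (1-5 Likert)
toy = [
    [4, 5, 4],
    [2, 2, 3],
    [3, 3, 3],
    [5, 4, 5],
    [1, 2, 1],
]
print(round(cronbach_alpha(toy), 3))  # → 0.945
```

Alpha rises when items covary strongly relative to their individual variances. It indexes the interrelatedness of items only, which is why internal consistency alone says nothing about the other COSMIN properties listed above.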

Risk of bias

Although we had planned (INPLASY202410047) to use the COSMIN Risk of Bias checklist for systematic reviews33, given the inclusion of 136 articles and the considerable investment already required for data extraction, we streamlined the quality assessment process. We thus focused on the number of COSMIN measurement properties addressed by each psychometric study, striking a balance between thoroughness and feasibility. In other words, our appraisal identified which measurement properties were evaluated across studies, providing an overview of comprehensiveness, but did not include a formal evaluation of the methodological quality, risk of bias, sample size adequacy, or statistical rigor of the psychometric studies.
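The streamlined appraisal amounts to recording, for each study, which of the nine COSMIN properties it reports, and then counting. The minimal Python sketch below assumes each study is coded as a set of property labels. The labels, study IDs, and data are hypothetical, not the review's extraction data. It shows how per-study counts and per-property percentages, of the kind reported in the Results, can be derived.

```python
from collections import Counter

# The nine COSMIN measurement properties considered in this review
COSMIN_PROPERTIES = (
    "content validity", "structural validity", "internal consistency",
    "cross-cultural validity", "reliability", "measurement error",
    "criterion validity", "hypothesis testing", "responsiveness",
)


def tally(studies: dict[str, set[str]]):
    """Return per-study property counts and per-property percentages of studies."""
    for study_id, props in studies.items():
        unknown = props - set(COSMIN_PROPERTIES)
        if unknown:
            raise ValueError(f"{study_id}: unknown properties {unknown}")
    # Number of distinct properties each study addressed
    per_study = {sid: len(props) for sid, props in studies.items()}
    # How many studies addressed each property
    counts = Counter(p for props in studies.values() for p in props)
    n = len(studies)
    per_property = {p: round(100 * counts[p] / n, 1) for p in COSMIN_PROPERTIES}
    return per_study, per_property


# Hypothetical coding of three studies
example = {
    "study_A": {"internal consistency", "structural validity", "content validity"},
    "study_B": {"internal consistency"},
    "study_C": {"internal consistency", "reliability", "hypothesis testing",
                "structural validity"},
}
per_study, per_property = tally(example)
fewer_than_four = sum(1 for k in per_study.values() if k < 4)
print(per_study)  # {'study_A': 3, 'study_B': 1, 'study_C': 4}
print(per_property["internal consistency"], fewer_than_four)  # 100.0 2
```

Coding each study as a set prevents a property from being counted twice within one study. As with the review's own appraisal, the tally captures comprehensiveness only and carries no judgment about the methodological quality of each assessment.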

Analysis and synthesis of results

The study selection process was described using a PRISMA flow diagram36. For each psychometric study, the extracted data items were described narratively and summarized in two tables: (1) the characteristics of the instruments and (2) their measurement properties. Each instrument was classified according to the National Academy of Medicine Framework21 factors covered by the questionnaire. The classification was conducted in parallel with data extraction by one reviewer, revised by a second reviewer, and underwent final verification and standardization by the corresponding author. Examples of constructs classified in each group of factors are presented in Table 1. Frequencies regarding the language of the instruments, target clinicians, and groups of factors covered in the included studies were depicted in bar charts. The number of measurement properties addressed by each psychometric study was also presented in a table.

Results

Study selection

The study selection flow diagram is shown in Fig. 2. After removal of duplicate records, a total of 10,441 records were identified, but most were excluded based on the title or abstract. Of the 245 full-text articles reviewed, 136 studies were retained for the review. Six studies covered two instruments, and two studies covered three instruments, yielding a total of 146 instruments included in the review.

Included studies

Characteristics of the included studies are presented in Supplementary Data 1. The studies emanated from over 40 countries, with the USA (n = 37; 27.2%), Spain (n = 15; 11.0%), Canada (n = 8; 5.9%), Australia (n = 8; 5.9%), the Netherlands (n = 7; 5.1%), and Japan (n = 6; 4.4%) being the most represented. As shown in Fig. 3, more than half of the studies validated instruments in English (n = 78; 57.4%); only five (3.7%) focused on instruments in French. Cross-cultural adaptation was at the core of 25.0% (n = 34) of the studies reviewed. The majority of the studies (n = 76; 55.9%) focused on ‘versatile’ instruments intended to be administered to more than one type of clinician. Of these, 24 studies also included non-clinical staff. Although ‘versatile’ instruments were intended for use among various types of clinicians, nurses and physicians still predominated in the study samples. Among the profession-specific instruments (n = 60; 44.1%), the most targeted clinicians were nurses (22.8%), followed by physicians (14.0%) and pharmacists (5.1%) (Fig. 4). More than half of the studies (n = 71; 52.2%) dealt with newly developed instruments that had not been previously published.

Of the seven groups of factors from the National Academy of Medicine framework21 (organizational factors, learning/practice environment, healthcare responsibilities, society & culture, rules and regulations, skills and abilities, and personal factors), the three most covered were: (1) ‘Learning/practice environment’ (38.2%), (2) ‘Healthcare responsibilities’ (21.3%), and (3) ‘Organizational factors’ (19.1%) (Fig. 5). Only 7 studies focused on ‘Society and culture’ measures, and 4 studies focused on measures of ‘Rules and regulations’.

Measurement properties of instruments included in this review are detailed in Supplementary Data 2. Comprehensiveness of psychometric studies is depicted in Supplementary Data 3. In order of frequency, reported measurement properties were: (1) Internal consistency (88.2% of studies), (2) Structural validity (75.7%), (3) Content validity (68.4%), (4) Hypothesis testing for construct validity (27.9%), (5) Test-retest reliability (25.7%), (6) Criterion validity (11.0%), and (7) Responsiveness (2.9%). None reported on measurement error. No study covered all seven above-mentioned measurement properties; most (n = 93; 68.4%) covered fewer than four. In total, 92.7% of the studies assessed at least one aspect of instrument reliability, 96.3% assessed at least one aspect of validity, and 89.0% evaluated both reliability and validity. Stratification of measurement properties across sex subgroups was reported in only 1 study (0.7%); none considered gender. Across the 136 included studies, versions and lengths for a given instrument could vary.

Discussion

To our knowledge, this study is the first systematic review identifying, in a comprehensive manner, validated self-reported instruments designed to assess clinician well-being and its influencing factors. Several key findings emerged, including the large number of instruments and constructs assessed, the limited number of measurement properties assessed in individual studies, the underrepresentation of certain factors from the National Academy of Medicine21 framework, and the underrepresentation of instruments validated for populations other than nurses and physicians. Furthermore, only one study appeared to have conducted subgroup analyses by sex or gender, and most validated instruments remain available exclusively in English.

There were multiple studies, but few comprehensive psychometric evaluations. Using non-validated instruments to measure clinician well-being and its influencing factors in clinical settings and research risks inaccurate assessments, which may lead to ineffective interventions. The international COSMIN recommendations published in 2010 and updated in 201832,33 underline the importance of reporting results on content validity, structural validity (e.g., factor analysis), internal consistency, cross-cultural validity, reliability (e.g., test-retest), measurement error, criterion validity, hypothesis testing for construct validity, and responsiveness. Yet three-quarters of the studies identified in our review did not address test-retest reliability, probably because assessing this type of reliability requires a longitudinal design with repeated administrations. Additionally, there is a need to better understand why responsiveness (addressed in 2.9% of studies) and measurement error (absent from all studies) were so rarely covered. If this reflects a lack of awareness and education, efforts could begin as early as graduate courses and should also attract the attention of journal peer reviewers. The incomplete psychometric coverage of most individual studies identified in our review underscores the importance of further improving the quality of validation studies in this area of research. However, establishing the usability of an instrument often requires several psychometric studies and publications to cover the full range of relevant measurement properties. In our analysis, given the variety of versions and lengths of the same instrument, it was challenging to map findings by instrument rather than by study. It is also worth noting that many questionnaires seem to have originated from spin-off analyses rather than from dedicated psychometric research initiatives.
This may partly explain the frequent use of methodological shortcuts aimed primarily at achieving publication (e.g., restricting to internal consistency only).

Regarding promising versatile instruments for research, our study provides a detailed compendium for researchers interested in selecting clinician well-being instruments. Most of the instruments we identified are what we have termed ‘versatile’, in that they were designed for a diverse range of clinicians and, in some cases, non-clinical staff (e.g., administrative staff, managers, support personnel such as housekeeping). In fact, according to patient partners on the team, “sometimes the receptionist ‘provides care’ just as much”. Versatile tools have the potential to reduce the number of instruments needed to measure the Quintuple Aim and to ensure a certain level of comparability between settings and studies. For example, Grandes et al.37 developed the Organizational Readiness for Knowledge Translation questionnaire, a 59-item tool designed for both clinical and non-clinical staff37. It can therefore be used across all employees within a department or service, assessing important aspects of workplace well-being such as motivation, leadership, and organizational support37. Gascon et al.38 developed the Areas of Worklife Scale, a 29-item questionnaire designed to evaluate six critical domains of work life: workload, control, reward, community, fairness, and values38. The instrument developed by Harrison et al.25, the 18-item Clinician Experience Measure (CEM), is also particularly valuable in this context, as it provides a comprehensive overview of clinician experience25. Since our electronic search, the CEM has been updated to a 10-item format16.

When looking at profession-specific instruments, there was an underrepresentation of validated instruments for populations other than physicians and nurses. Although generic instruments present some advantages, one may still prefer to use more discriminant measures that capture the specificities of each profession. For example, physiotherapists are more prone to developing musculoskeletal issues due to the nature of their work39, while social workers are at high risk for secondary traumatic stress40.

Some ultra-brief instruments for managers and clinical settings were identified. In the research context, users often prioritize validity over conciseness, choosing tools with many items that have been thoroughly validated, such as the 22-item Maslach Burnout Inventory-Human Services Survey (MBI-HSS)41. In clinical practice, however, very short screeners may be preferred. We therefore examined ultra-brief tools with six items or fewer (only three studies were identified in our review, all in English). Two research teams tested the performance of a single item to measure burnout (compared with the Maslach Burnout Inventory): “Overall, based on your definition of burnout, how would you rate your level of burnout?”, with response options 1 = “I enjoy my work. I have no symptoms of burnout”; 2 = “Occasionally I am under stress, and I don’t always have as much energy as I once did, but I don’t feel burned out”; 3 = “I am definitely burning out and have one or more symptoms of burnout, such as physical and emotional exhaustion”; 4 = “The symptoms of burnout that I’m experiencing won’t go away. I think about frustration at work a lot”; and 5 = “I feel completely burned out and often wonder if I can go on. I am at the point where I may need some changes or may need to seek some sort of help”42,43. They concluded that this single-item measure of burnout could serve as a reliable substitute42,43. Waddimba et al.44 validated 3-item and 6-item scales to assess physicians’ perceived autonomy support from the health plans contracting with them. Given the wording of the questions, we believe that these two ultra-brief instruments could be adapted to broader healthcare settings and various types of clinicians, and should be the subject of future psychometric studies. In summary, where relevant to the users’ inquiry, the above-mentioned ultra-brief instruments are feasible to implement in clinical practice, where their results can have a direct impact on patient care.
When selecting instruments, stakeholders should consistently balance conciseness with methodological rigor to ensure data quality, so that the resulting interventions are both relevant and scientifically robust across diverse clinical contexts.
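As an illustration of how the single-item burnout measure described above might be scored in practice, the following Python sketch encodes the five response options and dichotomizes them. The cutoff (a score of 3 or higher counted as burnout), the function names, and the example responses are hypothetical assumptions made for illustration, not taken from the cited studies; any threshold should be checked against the original validation studies before use.

```python
# Hypothetical sketch: scoring the five-level single-item burnout measure.
# ASSUMPTION: a score of 3 or higher is treated as "burnout"; this cutoff is
# illustrative and should be checked against the original validation studies.

# Abbreviated labels for the five response options (1 = no symptoms,
# 5 = completely burned out); the full wording is given in the text.
RESPONSE_LABELS = {
    1: "enjoy my work, no symptoms of burnout",
    2: "occasionally under stress, not burned out",
    3: "definitely burning out",
    4: "symptoms won't go away",
    5: "completely burned out",
}

BURNOUT_CUTOFF = 3  # assumed dichotomization threshold (hypothetical)


def is_burned_out(score: int, cutoff: int = BURNOUT_CUTOFF) -> bool:
    """Dichotomize one response: True if the score is at or above the cutoff."""
    if score not in RESPONSE_LABELS:
        raise ValueError(f"score must be an integer from 1 to 5, got {score!r}")
    return score >= cutoff


def burnout_prevalence(scores: list[int], cutoff: int = BURNOUT_CUTOFF) -> float:
    """Proportion of respondents classified as burned out."""
    if not scores:
        raise ValueError("no responses to score")
    flagged = sum(is_burned_out(s, cutoff) for s in scores)
    return flagged / len(scores)


if __name__ == "__main__":
    # Made-up responses, for illustration only.
    responses = [1, 2, 2, 3, 4, 2, 5, 1, 3, 2]
    print(f"Prevalence: {burnout_prevalence(responses):.0%}")
    # Check how sensitive the estimate is to the assumed cutoff.
    for c in (3, 4):
        print(c, burnout_prevalence(responses, cutoff=c))
```

Keeping the cutoff as a parameter makes it easy to check how sensitive prevalence estimates are to the chosen threshold.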

Some constructs were underrepresented, while others predominated. The factors most addressed by instruments were ‘Learning/practice environment’, ‘Healthcare responsibilities’, and ‘Organizational factors’, which seems consistent with the implementation of Lean Management practices45 in a variety of healthcare settings such as emergency departments, pharmacy services, and ambulatory care clinics46. This management approach rests on the premise that greater quality and efficiency can be achieved through a continuous improvement process aimed at eliminating inefficiencies and maximizing value-added activities45. Lean Management practices could therefore affect both clinician and health system performance47. It is thus plausible that professional practice, responsibilities, and organizational factors are the factors most targeted by instruments because they are closely tied to job performance. Similarly, healthcare organizations can assess physician well-being to monitor health system performance48.

Rules and regulations were among the least reported factors related to well-being in the instruments available in the literature. This is concerning, as these macro-level factors can influence various aspects of work, such as professionals’ scope of practice and the development and acceptance of clinical practice guidelines49.

Where Do Equity, Diversity, and Inclusion Fit Into All of This? The Quintuple Aim Framework underscores the importance of measuring and improving equity to enhance the quality of care and achieve better health outcomes for all populations10. Only 7 studies (covering 5 distinct instruments) addressing stigma or cultural competency50,51,52,53,54,55,56 were retrieved in our review; they were classified in the ‘Society & culture’ group of factors influencing clinician well-being. None of these were specific to Indigenous Peoples, despite the clear priority given to their inclusion in the Canadian health system57. One instrument of particular interest identified by our search strategy was the Greek Cultural Competence Assessment Tool (Ccatool) published by Vasiliou et al.55. This questionnaire measures the extent to which community nurses can properly address the care needs of people from differing cultural backgrounds and includes 40 items across four sub-scales: cultural awareness, cultural knowledge, cultural sensitivity, and cultural practice55. As it is available only in English, Greek, and Spanish55,58,59, its cross-cultural adaptation to the Canadian context, along with shortening and further validation, could be a promising avenue for future research. Other cultural competency measurement tools not captured in the present review should nevertheless be examined60. Notably, none of the reviewed instruments appeared to address the experience of clinicians immigrating to a new healthcare culture, a situation that warrants further exploration.

Given that the importance of considering sex and gender in health research is now well established61,62, it is imperative that instruments used to assess clinician well-being and its influencing factors be shown to be equally valid for men and women. However, statistical stratification by sex or gender was absent from nearly all studies; only one study verified that the instrument’s validity was equally acceptable across groups defined by sex assigned at birth.
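A minimal Python sketch of the kind of subgroup check described above: Cronbach’s alpha, a common index of internal consistency, computed separately for each sex group using alpha = k/(k − 1) × (1 − Σ item variances / variance of total scores). The function names, group labels, and data are hypothetical. Comparing alpha across groups is only a descriptive first step; showing that an instrument is equally valid across groups generally calls for formal measurement-invariance testing (e.g., multi-group confirmatory factor analysis), which this sketch does not implement.

```python
# Hypothetical sketch: internal consistency (Cronbach's alpha) by subgroup.
# A descriptive first check only; it is NOT a test of measurement invariance.
from statistics import pvariance


def cronbach_alpha(rows: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix (one row per respondent).

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    Population variances are used in both numerator and denominator, so the
    (n - 1) / n scaling cancels and the result matches the sample-variance form.
    """
    if len(rows) < 2:
        raise ValueError("need at least 2 respondents")
    k = len(rows[0])
    if k < 2 or any(len(r) != k for r in rows):
        raise ValueError("need at least 2 items and equal-length rows")
    item_vars = [pvariance([r[j] for r in rows]) for j in range(k)]
    total_var = pvariance([sum(r) for r in rows])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def alpha_by_group(rows: list[list[float]], groups: list[str]) -> dict[str, float]:
    """Compute alpha separately within each group label (e.g., sex at birth)."""
    if len(rows) != len(groups):
        raise ValueError("rows and groups must have the same length")
    return {
        g: cronbach_alpha([r for r, lab in zip(rows, groups) if lab == g])
        for g in sorted(set(groups))
    }


if __name__ == "__main__":
    # Made-up 2-item responses, for illustration only.
    rows = [[1, 1], [2, 2], [3, 3], [1, 1], [2, 3], [3, 2]]
    groups = ["female", "female", "female", "male", "male", "male"]
    for group, alpha in alpha_by_group(rows, groups).items():
        print(f"{group}: alpha = {alpha:.2f}")
```

A marked difference in alpha between groups would flag an instrument for closer psychometric scrutiny, but similar values do not by themselves establish equivalent validity.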

Canada’s bilingual context is worth discussing. Canada has two official languages: English and French. A total of 22% of the Canadian population speaks French as their first official language, and 11.2% (4,087,895 individuals in 2021) can speak French but not English63. In Québec, where French is the sole official language, 84.1% of the population speaks French as their first official language and 47% can speak French but not English63. Large Francophone communities are also present in New Brunswick and Ontario63. Access to instruments cross-culturally validated for French-Canadian respondents is essential for researchers and healthcare settings in Canada. However, our systematic review identified only 5 studies focusing on the validity of tools in French64,65,66,67,68 (3 of which were specifically French-Canadian versions64,65,66). These tools address team climate (‘Learning/practice environment’ factor)64, the functioning of family health teams (‘Organizational factors’)65, and the delivery of community-based primary health care (‘Learning/practice environment’ and ‘Healthcare responsibilities’ factors)66. There is therefore an urgent need to develop and/or adapt instruments for assessing the well-being of French-Canadian clinicians. The next step should be prioritization exercises to determine which constructs and tools to emphasize in such cross-cultural validations.

A few methodological remarks are worth emphasizing. The lack of uniformity in the terminology used to describe psychometric properties sometimes made it difficult to understand the methodology applied in the studies included in this systematic review, and several resulting disagreements had to be resolved with the psychometric measurement experts on our team. Adopting a recognized taxonomy, such as that of COSMIN31, is recommended to streamline this process. Publishing study protocols is also an effective way to gather feedback from the scientific community.

During the screening process, we observed that a large proportion of records reported the use of validated instruments but were not psychometric studies reporting measurement properties. This finding underscores the challenge of developing sensitive search strategies to identify validated tools. Screening also revealed that a considerable number of surveys on clinician well-being are conducted without validated instruments. It is thus essential to promote the use of reliable and validated instruments in this field to ensure scientific rigour and the quality of results.

Despite the numerous strengths of this review, certain limitations must be acknowledged. First, our review focused on ‘how to measure’ rather than ‘what to measure’; the latter is a highly relevant question but lies beyond the scope of our work and is not discussed in the present paper. Second, the search strategy was restricted to articles published in the past 10 years to provide a contemporary analysis of the available instruments, aligned with the realities of today’s health systems. Given the fast-paced evolution of health systems3, it is worth considering whether instruments that have not been validated in the past 10 years still accurately reflect the current realities of healthcare settings worldwide.

The large number of included articles made it infeasible to provide thorough descriptions and quality appraisals of each instrument using the COSMIN Risk of Bias checklist for systematic reviews33, which is very comprehensive and relatively time-consuming to complete. Counting the number of measurement properties addressed by each psychometric study is a useful descriptive approach to summarize which psychometric aspects are reported, but on its own it is not a full critical appraisal of study quality: it does not capture how well these properties were assessed, the methodological rigor, the risk of bias, the adequacy of the sample size, or the quality of the statistical methods used. This choice was the subject of considerable deliberation, particularly as we aimed to publish our findings within a reasonable timeframe and the students trained in this methodology who were involved in the review were reaching the end of their availability. Once the target construct is clearly defined, future users of the measurement tools identified in this review should conduct a thorough and rigorous appraisal of the quality of the psychometric studies underpinning their choice.
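The descriptive tally mentioned above can be sketched in a few lines of Python, assuming each included study is represented as a set of the COSMIN measurement properties it reports. The study identifiers, data structure, and function name are hypothetical. As noted, the resulting counts describe which properties were reported, not how well they were assessed.

```python
# Hypothetical sketch: descriptive tally of reported measurement properties.
# Counts show WHICH properties a study reports, not how well they were assessed.
from collections import Counter

# The nine measurement properties in the COSMIN taxonomy.
COSMIN_PROPERTIES = (
    "content validity",
    "structural validity",
    "internal consistency",
    "cross-cultural validity/measurement invariance",
    "reliability",
    "measurement error",
    "criterion validity",
    "hypotheses testing for construct validity",
    "responsiveness",
)


def tally(studies: dict[str, set[str]]) -> tuple[dict[str, int], dict[str, float]]:
    """Return (number of properties per study, share of studies reporting each property)."""
    if not studies:
        raise ValueError("no studies to tally")
    unknown = set().union(*studies.values()) - set(COSMIN_PROPERTIES)
    if unknown:
        raise ValueError(f"unrecognized properties: {sorted(unknown)}")
    per_study = {sid: len(props) for sid, props in studies.items()}
    counts = Counter(p for props in studies.values() for p in props)
    share = {p: counts[p] / len(studies) for p in COSMIN_PROPERTIES}
    return per_study, share


if __name__ == "__main__":
    # Made-up study records, for illustration only.
    studies = {
        "study_A": {"internal consistency", "structural validity", "content validity"},
        "study_B": {"internal consistency", "reliability"},
        "study_C": {"internal consistency", "structural validity"},
    }
    per_study, share = tally(studies)
    print(per_study)
    for prop, s in share.items():
        if s > 0:
            print(f"{prop}: {s:.1%}")
```

Validating property names against a fixed list helps keep terminology consistent across reviewers, which echoes the terminology issues discussed earlier.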

A strength of our study is the adoption of the holistic National Academy of Medicine (2018) framework21, which can support a wide range of purposes for users of measurement instruments. Those interested in a more narrowly defined construct of well-being are advised to use instruments that specifically target distinct categories of factors. Still, the concept of clinician well-being was challenging to define during the initial screening stages, even with the framework. This led to several screening conflicts and required multiple discussions among the reviewers to standardize the classification, which calls for further reflection on the framework. Finally, as in any review, we cannot exclude the possibility of publication bias69 (i.e., instruments with poor psychometric properties may not have been published).

In conclusion, despite growing interest in self-reported measurement instruments for assessing clinician well-being and its influencing factors18,26,27, the present study revealed gaps in the literature, including the lack of comprehensive psychometric evaluations and limited assessment of measurement properties such as responsiveness and measurement error. Additionally, validation studies of instruments for populations other than physicians and nurses are underrepresented, and sex- and gender-based subgroup analyses are lacking. These findings underscore the need for more rigorous psychometric studies that follow established standards, such as the COSMIN recommendations31. Efforts should focus on developing versatile, culturally adapted instruments that are inclusive of diverse clinician groups. Attention to equity, diversity, and inclusion is crucial, as many tools fail to address these values. This review provides a foundation for future research to improve the measurement of clinician well-being and its influencing factors, with the aim of fostering learning, more effective interventions, and better health outcomes.

Data availability

Complete search strategies used in the various electronic databases are available in Supplementary Methods. Supplementary Data 1 presents the included psychometric studies (listed in alphabetical order by the last name of the first author), and Supplementary Data 2 details the measurement properties covered in the included studies. Comprehensiveness of psychometric studies is depicted in Supplementary Data 3. The Excel dataset containing the numerical results for Figs. 3, 4, and 5 is provided as Supplementary Data 4.

References

Patient Engagement Action Team. Engaging Patients in Patient Safety – A Canadian Guide - Glossary of Terms, <https://www.healthcareexcellence.ca/en/resources/engaging-patients-in-patient-safety-a-canadian-guide/glossary-of-terms/> (2019).

Søvold, L. E. et al. Prioritizing the mental health and well-being of healthcare workers: An urgent global public health priority. Front. Public Health 9 https://doi.org/10.3389/fpubh.2021.679397 (2021).

Figueroa, C. A., Harrison, R., Chauhan, A. & Meyer, L. Priorities and challenges for health leadership and workforce management globally: A rapid review. BMC Health Serv. Res. 19, 239 (2019).

Fteropoulli, T. et al. Beyond the physical risk: Psychosocial impact and coping in healthcare professionals during the COVID‐19 pandemic. J. Clin. Nurs. https://doi.org/10.1111/jocn.15938 (2021).

Ehrlich, H., McKenney, M. & Elkbuli, A. Protecting our healthcare workers during the COVID-19 pandemic. Am. J. Emerg. Med. 38, 1527–1528 (2020).

Richemond, D. The effect of the COVID-19 pandemic on nurse retention. Open J. Soc. Sci. 12, 98–117 (2024).

Institute for Healthcare Improvement. Triple Aim and Population Health, <https://www.ihi.org/improvement-areas/improvement-area-triple-aim-and-population-health> (2024).

Bodenheimer, T. & Sinsky, C. From triple to quadruple aim: care of the patient requires care of the provider. Ann. Fam. Med. 12, 573–576 (2014).

Nundy, S., Cooper, L. A. & Mate, K. S. The quintuple aim for health care improvement: A new imperative to advance health equity. JAMA 327, 521–522 (2022).

Unité de soutien SSA Québec. Système de santé apprenant, <https://ssaquebec.ca/lunite/systeme-de-sante-apprenant/> (2024).

Bull, C., Byrnes, J., Hettiarachchi, R. & Downes, M. A systematic review of the validity and reliability of patient‐reported experience measures. Health Serv. Res. 54, 1023–1035 (2019).

Churruca, K. et al. Patient‐reported outcome measures (PROMs): a review of generic and condition‐specific measures and a discussion of trends and issues. Health Expectations 24, 1015–1024 (2021).

Gilmore, K. J., Corazza, I., Coletta, L. & Allin, S. The uses of patient reported experience measures in health systems: a systematic narrative review. Health Policy 128, 1–10 (2023).

Pennucci, F., De Rosis, S. & Nuti, S. Can the jointly collection of PROMs and PREMs improve integrated care? The changing process of the assessment system for the hearth failure path in Tuscany Region. Int. J. Integr. Care 19 https://doi.org/10.5334/ijic.s3421 (2019).

Black, N. Patient reported outcome measures could help transform healthcare. BMJ 346, f167 (2013).

Harrison, R. et al. Measuring clinician experience in value-based healthcare initiatives: A 10-item core clinician experience measure (CEM-10). Aust. Health Rev. 48, 160–166 (2024).

Itchhaporia, D. The evolution of the quintuple aim: health equity, health outcomes, and the economy. J. Am. Coll. Cardiol. 78, 2262–2264 (2021).

Pervaz Iqbal, M. et al. Clinicians’ experience of providing care: a rapid review. BMC Health Serv. Res. 20, 952 (2020).

Danna, K. & Griffin, R. W. Health and well-being in the workplace: A review and synthesis of the literature. J. Manag. 25, 357–384 (1999).

National Academy of Medicine. National Plan for Health Workforce Well-Being. (The National Academies Press, Washington, DC, 2024).

Brigham, T. C. et al. A journey to construct an all-encompassing conceptual model of factors affecting clinician well-being and resilience. (National Academy of Sciences, Washington, DC, 2018).

Diener, E., Suh, E. M., Lucas, R. E. & Smith, H. L. Subjective well-being: Three decades of progress. Psychol. Bull. 125, 276 (1999).

Ryff, C. D. & Singer, B. H. Know thyself and become what you are: A eudaimonic approach to psychological well-being. J. Happiness Stud. 9, 13–39 (2008).

New South Wales Health. Leading Better Value Care, https://www.health.nsw.gov.au/Value/lbvc/Pages/default.aspx (2019).

Harrison, R. et al. Evaluating clinician experience in value-based health care: the development and validation of the Clinician Experience Measure (CEM). BMC Health Serv. Res. 22, 1484 (2022).

Wang, L., Touré, M. & Poder, T. G. Measuring quality of life at work for healthcare and social services workers: A systematic review of available instruments. Health Care Sci. 2, 173–193 (2023).

Jarden, R. J., Siegert, R. J., Koziol-McLain, J., Bujalka, H. & Sandham, M. H. Wellbeing measures for workers: a systematic review and methodological quality appraisal. Front. Public Health 11, 1053179 (2023).

EBSCO. Cumulative Index to Nursing and Allied Health Literature (CINAHL), <https://www.ebsco.com/products/research-databases/cinahl-database> (2024).

Drucker, P. The practice of management. 424. (Harper & Row, 1954).

Elsman, E. B. M. et al. Guideline for reporting systematic reviews of outcome measurement instruments (OMIs): PRISMA-COSMIN for OMIs 2024. Qual. Life Res. 33, 2029–2046 (2024).

Mokkink, L. B., Elsman, E. B. M. & Terwee, C. B. COSMIN guideline for systematic reviews of patient-reported outcome measures version 2.0. Qual. Life Res. 33, 2929–2939 (2024).

Mokkink, L. B. et al. The COSMIN study reached international consensus on taxonomy, terminology, and definitions of measurement properties for health-related patient-reported outcomes. J. Clin. Epidemiol. 63, 737–745 (2010).

Mokkink, L. B. et al. COSMIN risk of bias checklist for systematic reviews of patient-reported outcome measures. Qual. Life Res. 27, 1171–1179 (2018).

Organisation for Economic Co-operation and Development (OECD). Members and partners, <https://www.oecd.org/en/about/members-partners.html> (2024).

Mokkink, L. B. et al. COSMIN methodology for systematic reviews of patient-reported outcome measures (PROMs) - User manual. (Amsterdam, Netherlands, 2018).

Moher, D. et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst. Rev. 4, 1 (2015).

Grandes, G., Bully, P., Martinez, C. & Gagnon, M.-P. Validity and reliability of the Spanish version of the Organizational Readiness for Knowledge Translation (OR4KT) questionnaire. Implement. Sci. 12, 1–11 (2017).

Gascon, S. et al. A factor confirmation and convergent validity of the “Areas of Work-life Scale” (AWS) to Spanish translation. Health Qual. Life Outcomes 11, 63 (2013).

Gorce, P. & Jacquier-Bret, J. Global prevalence of musculoskeletal disorders among physiotherapists: a systematic review and meta-analysis. BMC Musculoskelet. Disord. 24, 265 (2023).

Caringi, J. C. et al. Secondary traumatic stress and licensed clinical social workers. Traumatology 23, 186 (2017).

Maslach, C. et al. Maslach Burnout Inventory. Mind Garden, Inc., <https://www.mindgarden.com/117-maslach-burnout-inventory-mbi> (2016).

Dolan, E. D. et al. Using a single item to measure burnout in primary care staff: A psychometric evaluation. J. Gen. Intern. Med. 30, 582–587 (2015).

Knox, M., Willard-Grace, R., Huang, B. & Grumbach, K. Maslach burnout inventory and a self-defined, single-item burnout measure produce different clinician and staff burnout estimates. J. Gen. Intern. Med. 33, 1344–1351 (2018).

Waddimba, A. C., Mohr, D. C., Beckman, H. B. & Meterko, M. M. Physicians’ perceptions of autonomy support during transition to value-based reimbursement: A multi-center psychometric evaluation of six-item and three-item measures. PLoS One 15, e0230907 (2020).

Womack, J. P., Jones, D. T. & Roos, D. The machine that changed the world: The story of lean production--Toyota’s secret weapon in the global car wars that is now revolutionizing world industry. (Simon and Schuster, 2007).

Mahmoud, Z. Hospital Management in the Anthropocene: an international examination of Lean-based management control systems and alienation of nurses in operating theatres, Université de Nantes (FR); Macquarie University (Sydney), (2020).

D’Andreamatteo, A., Ianni, L., Lega, F. & Sargiacomo, M. Lean in healthcare: A comprehensive review. Health Policy 119, 1197–1209 (2015).

Brady, K. J. S., Kazis, L. E., Sheldrick, R. C., Ni, P. & Trockel, M. T. Selecting physician well-being measures to assess health system performance and screen for distress: Conceptual and methodological considerations. Curr. Probl. Pediatr. Adolesc. Health Care 49, 100662 (2019).

Smith, T., McNeil, K., Mitchell, R., Boyle, B. & Ries, N. A study of macro-, meso- and micro-barriers and enablers affecting extended scopes of practice: the case of rural nurse practitioners in Australia. BMC Nurs. 18, 14 (2019).

Almeida, B., Samouco, A., Grilo, F., Pimenta, S. & Moreira, A. M. Prescribing stigma in mental disorders: A comparative study of Portuguese psychiatrists and general practitioners. Int. J. Soc. Psychiatry 68, 708–717 (2022).

Bidell, M. P. The lesbian, gay, bisexual, and transgender development of clinical skills scale (LGBT-DOCSS): Establishing a new interdisciplinary self-assessment for health providers. J. Homosexuality 64, 1432–1460 (2017).

Rojas Vistorte, A. O. et al. Adaptation to Brazilian Portuguese and Latin-American Spanish and psychometric properties of the Mental Illness Clinicians’ Attitudes Scale (MICA v4). Trends Psychiatry Psychother. 45, e20210291 (2023).

Sapag, J. C. et al. Validation of the opening minds scale and patterns of stigma in Chilean primary health care. PLoS ONE 14, e0221825 (2019).

van der Maas, M. et al. Examining the application of the opening minds survey in the community health centre setting. Can. J. Psychiatry 63, 30–36 (2018).

Vasiliou, M., Kouta, C. & Raftopoulos, V. The use of the cultural competence assessment tool (Ccatool) in community nurses: The pilot study and test-retest reliability. Int. J. Caring Sci. 6, 44–52 (2013).

Modgill, G., Patten, S. B., Knaak, S., Kassam, A. & Szeto, A. C. Opening minds stigma scale for health care providers (OMS-HC): Examination of psychometric properties and responsiveness. BMC Psychiatry 14, 120 (2014).

Canadian Institute for Health Information (CIHI). Culturally safe health care for First Nations, Inuit and Métis Peoples, <https://www.cihi.ca/en/taking-the-pulse-measuring-shared-priorities-for-canadian-health-care-2024/culturally-safe-health-care-for-first-nations-inuit-and-metis-peoples> (2024).

Vázquez-Sánchez, M. Á. et al. Spanish adaptation and validation of the Cultural Competence Assessment Tool (CCATool) for undergraduate nursing students. Int. Nurs. Rev. 70, 43–49 (2023).

Papadopoulos, R., Tilki, M. & Lees, S. Promoting cultural competence in health care through a research based intervention in the UK. Diversity Health Soc. Care 1, 107–115 (2004).

Osmancevic, S., Schoberer, D., Lohrmann, C. & Großschädl, F. Psychometric properties of instruments used to measure the cultural competence of nurses: A systematic review. Int. J. Nurs. Stud. 113, 103789 (2021).

Rich-Edwards, J. W., Kaiser, U. B., Chen, G. L., Manson, J. E. & Goldstein, J. M. Sex and gender differences research design for basic, clinical, and population studies: essentials for investigators. Endocr. Rev. 39, 424–439 (2018).

Tannenbaum, C., Greaves, L. & Graham, I. D. Why sex and gender matter in implementation research. BMC Med. Res. Methodol. 16, 145 (2016).

Gouvernement du Canada. Portraits des langues officielles au Canada, <https://www.clo-ocol.gc.ca/fr/outils-ressources/portraits-langues-officielles-au-canada> (2024).

Beaulieu, M.-D. et al. The team climate inventory as a measure of primary care teams’ processes: Validation of the French version. Healthc. Policy 9, 40–54 (2014).

Farmanova, E., Grenier, J. & Chomienne, M. H. Pilot testing of a questionnaire for the evaluation of mental health services in family health team clinics in Ontario. Healthc. Q. 16, 61–67 (2013).

Kosteniuk, J. G. et al. Exploratory factor analysis and reliability of the Primary Health Care Engagement (PHCE) Scale in rural and remote nurses: findings from a national survey. Prim. Health Care Res. Dev. 18, 608–622 (2017).

Shih, C. et al. Patient safety culture for health professionals in primary care: French adaptation of the MOSPSC questionnaire (Medical Office Survey on Patient Safety Culture). Rev. Epidemiol. Sante Publique 70, 51–58 (2022).

Sicsic, J., Le Vaillant, M. & Franc, C. Building a composite score of general practitioners’ intrinsic motivation: A comparison of methods. Int. J. Qual. Health Care 26, 167–173 (2014).

Drucker, A. M., Fleming, P. & Chan, A.-W. Research techniques made simple: Assessing risk of bias in systematic reviews. J. Investig. Dermatol. 136, e109–e114 (2016).

Acknowledgements

We would like to thank medical librarian Ms. Genevieve Gore (McGill University) who helped with the development of the electronic search strategies. We would also like to extend our gratitude to Ashkan Baradaran for his valuable contributions to the planning of this project. This study was supported by the Unité de soutien au système de santé apprenant (SSA) Québec and University of Quebec in Abitibi-Témiscamingue’s Institutional Research Chair in Chronic Pain Epidemiology.

Author information

Contributions

P.L.B., T.A.B., and A.L. secured funding and designed the work. A.L. directed the work. C.A., A.B., M.G.-P., H.L.N.N., L.F., and A.L. participated as reviewers in the systematic review process. All authors (C.A., A.B., M.G.-P., H.L.N.N., L.F., P.L.B., M.-D.P., S.L., T.A.B., S.D.L., A.L.), including patient partners, contributed substantially to the interpretation of data. C.A. and A.L. co-drafted the manuscript. All authors (C.A., A.B., M.G.-P., H.L.N.N., L.F., P.L.B., M.-D.P., S.L., T.A.B., S.D.L., A.L.) critically revised it for important intellectual content, approved the version to be published, and agreed to be accountable for all aspects of the work.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Medicine thanks Simone Willis and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Audet, C., Bernier, A., Godbout-Parent, M. et al. Assessment of clinician well-being and the factors that influence it using validated questionnaires: a systematic review. Commun Med 5, 343 (2025). https://doi.org/10.1038/s43856-025-01069-1

DOI: https://doi.org/10.1038/s43856-025-01069-1