Abstract

Terrestrial insect–machine hybrid systems have long been proposed for complex terrains such as post-disaster search and rescue (SAR) missions. Previous work developed human detection methods and autonomous navigation algorithms for these tasks, but the navigation relied on external localization. The next challenge is exploring unknown regions using only onboard sensors. Here, we propose a three-phase exploration strategy: Phase I rapidly searches for targets, Phase II approaches them to gather more information, and Phase III reliably classifies the target. To enable outdoor use, we introduce an IMU-based localization algorithm that estimates position and orientation from the insect’s gait. Experiments show that this system achieves a 2D position error of ≤1 m (≈5% of the traveled distance) without external tracking. Demonstrations in indoor (4.8 × 6.6 m²) and outdoor (3.5 × 6.0 m²) arenas confirm the strategy’s feasibility and accuracy, bringing the insect–machine hybrid system closer to practical deployment.


Introduction

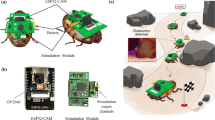

Terrestrial insect-machine hybrid systems have been developed for navigating complex terrain, such as post-disaster search-and-rescue missions1,2,3. These hybrid systems combine insects as mobile platforms with miniature electronic backpacks mounted on their bodies (Fig. 1a). They can be controlled to perform various tasks, such as autonomous navigation or human detection2,4. Their locomotion is regulated by electrically stimulating the insects’ muscular, sensory, and neural systems4,5,6,7,8. The power consumed for this locomotion control is negligible (a few tenths of a milliwatt)5,9,10, leaving energy for other essential tasks, such as localization. Importantly, these systems can exploit vital features of the insects, such as their skillful and robust locomotion and their vast collection of natural sensors/receptors11,12.

a The hybrid system, made of a living Madagascar hissing cockroach and an electronic backpack. b The backpack and its essential components. c The process consists of three stages: (1) locating the target through a stochastic exploration strategy, (2) approaching it using thermal information as a guide, and (3) classifying it using a machine learning (ML) model. The trajectory of the hybrid system is shown as a blue line, and its position as a highlighted green circle.

The navigation capabilities of terrestrial hybrid systems have advanced significantly. Initially, path-following navigation was demonstrated under manual control. Automatic systems were then introduced to support studies of the insects’ behaviors, demonstrate backpack functionalities, or carry out simple demonstrations (e.g., miniature object transportation)4,13,14. Practical applications, such as autonomous navigation in unknown environments, have also been studied2. Instead of employing obstacle detection sensors (e.g., a camera15), a navigation program named Predictive Feedback was introduced. This program integrated the insects’ innate obstacle-negotiation abilities with artificial control rules to direct them through unknown and obstructed terrains2. An infrared (IR) image-based human detection algorithm was also introduced for these hybrid systems2. Building on these advancements, the next step is to develop an exploration strategy that enables these hybrid systems to explore unknown environments proactively and to locate and classify sources of interest, such as humans. A simple demonstration of such exploration was achieved with acoustic sources16. However, its operating range was short (~1 m), and the source localization was not executed onboard, which wasted power on data transmission and limited its practicality.

An exploration strategy can generally be divided into three sequential phases. In Phase I, the hybrid system rapidly acquires knowledge about the assigned environment17. In Phase II, this knowledge is used to estimate the target’s position, and the hybrid system navigates toward it to gain more information about the target. Once the hybrid system is close to the target, Phase III is dedicated to target classification, in which the gathered information is used to identify or confirm the target. This structured approach enables the hybrid system to search for and classify targets efficiently in unknown environments.

Existing literature provides the means to perform Phase III with the IR image-based human detection algorithm2,18. The IR image could also be used to execute Phase II. For example, blob detection techniques from image processing can use the image’s thermal information to locate hot regions within it19,20. These regions may contain spatial information about the target, allowing its position to be estimated20. Robotics studies report numerous source localization techniques for finding such thermal information quickly, which would help the hybrid system carry out Phase I efficiently; random and gradient-based searches are two examples21,22. Despite their simplicity, random searches have been reported to locate unknown targets effectively21. They are also well suited to hybrid systems whose main sensor is an IR camera, because they do not require environmental gradient measurements.

While navigation techniques have advanced significantly, accurate localization in complex terrains remains an unresolved challenge, particularly where traditional systems are ineffective. Autonomous navigation requires accurate knowledge of the position and orientation of the insect-machine hybrid system. Traditionally, this information has been obtained from external tracking systems such as VICON, which use cameras surrounding the subject to determine its location and orientation2,23. Such systems are effective indoors but unsuitable outdoors, especially in the unpredictable terrains encountered during real-life Urban Search and Rescue (USAR) missions. An onboard localization solution that operates independently of external infrastructure is therefore needed. Accurate localization is essential both for navigation and for reporting the positions of discovered victims. However, developing a suitable positioning system for hybrid systems faces significant constraints on size, power consumption, and computational capacity. The Global Positioning System (GPS) works effectively outdoors but is unreliable indoors24,25. Similarly, traditional indoor positioning systems (IPS) rely on cameras and struggle in the low-visibility conditions of collapsed structures26,27,28. Because the payload of an insect platform is usually limited to about its own body weight (several grams, mostly taken up by the controller IC and battery), a bulky, heavy localization system such as LIDAR is out of the question29,30,31. An alternative approach is to use lightweight, low-power microelectromechanical systems (MEMS) inertial measurement units (IMUs). However, most low-power IMU positioning systems are designed for pedestrian dead reckoning. These systems rely on specific characteristics of the human walking gait, such as distinct stance and swing phases, which do not apply to insect walking32,33,34. A recent study by Cole et al. used machine learning for insect localization; however, the method operates in post-processing and is not yet ready for onboard use35.

Building on the considerations above, this study presents a feasible exploration strategy for terrestrial hybrid systems (Fig. 1). The hybrid systems used in this study were built from hissing cockroaches (Gromphadorhina portentosa, Fig. 1a) and equipped with the IR image-based human detection algorithm2, which was employed to execute Phase III of the exploration strategy. To execute Phase II, a blob detection technique was integrated with the Predictive Feedback navigation to form a Thermal Source-Based Navigation Algorithm. Experiments were set up to evaluate this algorithm’s performance and to determine the criteria for switching between the three phases. Phase I was first developed in simulation, using a Robot Operating System (ROS) environment to emulate terrestrial insects’ natural and controlled motions. This simulation was used to compare candidate stochastic exploration strategies, and Lévy walk was selected as the most suitable one for developing Phase I. All three phases were implemented onboard and demonstrated in an unknown environment, verifying their practicality (Supplementary Video 1). Furthermore, this study introduces an algorithm that estimates the insect’s position using a low-power MEMS IMU. The algorithm capitalizes on the insect’s body vibrations during locomotion: variations in acceleration, which reflect the insect’s step frequency and speed, are used directly to estimate its linear speed, and the position is then obtained by time integration. This IMU-based positioning system allows the exploration strategy to operate outdoors (Supplementary Video 2). Limitations and future work are also discussed. The exploration strategy, coupled with the IMU-based positioning method, is one of the first solutions to meet all hardware requirements while providing adequate localization accuracy for insects. This advancement brings the hybrid system closer to practical application in real-life Search and Rescue (SAR) missions.

Results

Localization methods of hybrid system with IMU (Inertial Measurement Unit)

Previous research featured a hybrid robot navigation method that relied on position and orientation data from an external optical tracking system to guide the hybrid system2. Such dependency limits the system’s applicability in real-world scenarios. In this study, an onboard IMU-based localization method was developed for the navigation algorithm, enabling the hybrid system to operate autonomously in realistic search and rescue environments without support from external tracking devices.

Because the hybrid system targets search-and-rescue applications, localization techniques based on the Global Positioning System (GPS) are unsuitable: GPS signals may be unavailable under rubble. Vision-based or range-finder-based methods such as LIDAR are likewise not applicable in dust-filled rubble36,37,38. An inertial navigation system using an IMU is therefore the most reasonable method for under-rubble localization. However, MEMS IMUs are noisy, so integrating their acceleration to obtain velocity and position accumulates large drift. The size and sensitivity of the IMU and the control backpack also restrict which algorithms can be used for localization. Algorithms such as step counting34,39 are not applicable to the readings obtained when the backpack is mounted on the insect’s back. Instead of directly counting steps and estimating step length, a simplified positioning method suited to insects’ walking gait was proposed. During locomotion, the cockroach’s body shakes at a frequency similar to that of its walking gait, 3 to 9 Hz40. The acceleration recorded during the cockroach’s natural walking reflects this tendency, with most of the signal’s frequency content below 10 Hz (Fig. 2).
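The frequency claim can be checked on a recorded acceleration trace with a discrete Fourier transform. The sketch below is illustrative only: the 100 Hz sampling rate, the synthetic 6 Hz signal, and the function names are our assumptions, not the authors’ processing pipeline.

```python
import numpy as np

def dominant_frequency(accel, fs):
    """Frequency (Hz) of the largest non-DC spectral peak of a 1D signal."""
    x = np.asarray(accel, dtype=float)
    x = x - x.mean()                        # remove the DC offset
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    spectrum[0] = 0.0                       # ignore the DC bin
    return float(freqs[np.argmax(spectrum)])

def energy_fraction_below(accel, fs, cutoff_hz=10.0):
    """Share of spectral energy (after mean removal) below cutoff_hz."""
    x = np.asarray(accel, dtype=float)
    x = x - x.mean()
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    return float(power[freqs < cutoff_hz].sum() / power.sum())

# Synthetic example (hypothetical): 5 s at 100 Hz, 6 Hz body oscillation plus noise
fs = 100.0
t = np.arange(0, 5, 1 / fs)
rng = np.random.default_rng(0)
accel = 0.05 * np.sin(2 * np.pi * 6.0 * t) + 0.01 * rng.standard_normal(t.size)
print(dominant_frequency(accel, fs), energy_fraction_below(accel, fs))
```

On real data, the same two numbers would indicate whether the body-shaking frequency falls in the 3 to 9 Hz gait band and how much of the signal lies below 10 Hz.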

a, b 2D positions estimated from the IMU compared with the true positions provided by an accurate position tracking system. c Error propagation during cockroach walking. The error-bar scale corresponds to 5% of the traveled distance. Estimated positions are shown in blue and true positions in red.

Although the absolute acceleration magnitude was unreliable, changes in acceleration captured the cockroach’s body shaking, a movement readily detected by the accelerometer. Because the walking gait drives the cockroach’s movement, its linear speed depends on its step frequency or, equivalently, on its body shaking. Hence, an algorithm that uses acceleration variance to estimate the speed, and thereby the position, of the hybrid system is proposed:

Where Acceleration is the gravity-subtracted acceleration recorded by the IMU, Vlinear is the linear speed of the insect-machine system, K is a gain factor that depends only on the insect-machine configuration and the insect’s natural properties, and [Vx Vy Vz], [Ox Oy Oz], and [X Y Z] are the velocity, orientation, and position vectors of the insect-machine system in the global frame, respectively. Because the algorithm estimates the system’s speed directly at discrete time points rather than by integrating acceleration, velocity random walk and drift are avoided or reduced. The gain K needs to be calibrated only once for each system-environment combination, by letting the cockroach run freely for 5 s. The position of the cockroach calculated onboard from the IMU data was verified by comparison with the position recorded by the external optical tracking system (Fig. 3). Throughout the test, the error of the IMU-based position estimate remained below 1 m and grew at approximately 5% of the traveled distance.
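The update equations themselves are not reproduced in this text, so the following is only one plausible reading of the description, not the authors’ formula. It assumes that (i) speed is K times the variance of the gravity-subtracted acceleration magnitude over a short window, (ii) velocity is that speed along the heading given by the IMU yaw (2D only), (iii) position is the time integral of velocity, and (iv) K is calibrated by a least-squares fit against ground-truth speeds from the 5 s free run. The function names and the windowing are hypothetical.

```python
import numpy as np

def window_variance(accel_window):
    """Variance of gravity-subtracted acceleration magnitudes in one window
    (rows = samples, columns = x, y, z)."""
    mags = np.linalg.norm(np.asarray(accel_window, dtype=float), axis=1)
    return float(np.var(mags))

def calibrate_gain(variances, true_speeds):
    """Least-squares K for v ≈ K * var (hypothetical calibration procedure)."""
    s = np.asarray(variances, dtype=float)
    v = np.asarray(true_speeds, dtype=float)
    return float(np.dot(s, v) / np.dot(s, s))

def dead_reckon(variances, yaws_rad, K, dt, start=(0.0, 0.0)):
    """2D positions from per-window speed K*var along the IMU yaw heading."""
    x, y = start
    path = [(x, y)]
    for var, yaw in zip(variances, yaws_rad):
        speed = K * var                    # speed estimated directly, no acceleration integration
        x += speed * np.cos(yaw) * dt      # velocity = speed along heading, integrated once
        y += speed * np.sin(yaw) * dt
        path.append((x, y))
    return np.array(path)

K = calibrate_gain([0.5, 1.0, 2.0], [0.01, 0.02, 0.04])
path = dead_reckon([1.0] * 10, [0.0] * 10, K=K, dt=0.1)
print(K, path[-1])
```

The key design point mirrored here is that only one integration (velocity to position) is performed, so acceleration noise does not compound through a second integration.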

a–c 3D positions estimated from the IMU compared with the true positions provided by the accurate position tracking system. d Error propagation during cockroach walking. The error-bar scale corresponds to 10% of the traveled distance, twice that used for the 2D positioning error. The empty data point is due to a missing marker in the external optical tracking system. Estimated positions are shown in blue and true positions in red.

Performance of the thermal source-based navigation algorithm

With the external optical tracking system

The human detection algorithm is effective when the thermal image from the camera contains enough information about the subject. The current IR camera allows people to be identified with high accuracy at distances of 0.5 m to 1.5 m, but the model’s reliability decreases as the distance between the camera and the subject increases. An alternative is to identify the direction of the heat source in the IR image and then steer the hybrid system toward it to obtain more information about the subject. This algorithm must also be simple enough to run onboard in real time.
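The direction-finding step can be illustrated on a 32 × 32 thermal frame (the resolution and 90° FoV stated in Methods). As a plain stand-in for the blob detection used in this work, the sketch below thresholds pixels to the 28 °C to 38 °C range, keeps the largest 4-connected region, and converts its centroid column to a horizontal bearing with a linear pixel-to-angle approximation. The thresholding approach, the linear mapping, and the right-positive sign convention are assumptions.

```python
from collections import deque
import numpy as np

def largest_hot_blob(temp, t_min=28.0, t_max=38.0):
    """(row, col) centroid of the largest 4-connected region of pixels whose
    temperature lies in [t_min, t_max], or None if no pixel qualifies."""
    temp = np.asarray(temp, dtype=float)
    mask = (temp >= t_min) & (temp <= t_max)
    seen = np.zeros(mask.shape, dtype=bool)
    rows, cols = mask.shape
    best = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0, c0] or seen[r0, c0]:
                continue
            blob, queue = [], deque([(r0, c0)])
            seen[r0, c0] = True
            while queue:                                   # breadth-first flood fill
                r, c = queue.popleft()
                blob.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols and mask[rr, cc] and not seen[rr, cc]:
                        seen[rr, cc] = True
                        queue.append((rr, cc))
            if len(blob) > len(best):
                best = blob
    if not best:
        return None
    pts = np.array(best, dtype=float)
    return float(pts[:, 0].mean()), float(pts[:, 1].mean())

def bearing_deg(col, width=32, fov_deg=90.0):
    """Horizontal angle of a column from the optical axis; positive = right (assumed)."""
    return (col - (width - 1) / 2.0) * fov_deg / width

frame = np.full((32, 32), 25.0)        # ambient background
frame[10:13, 24:27] = 33.0             # hypothetical warm target
center = largest_hot_blob(frame)
print(center, bearing_deg(center[1]))
```

A single pass over 1,024 pixels with a flood fill keeps the cost small, in line with the requirement that the step run onboard in real time.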

The Thermal Source-Based Navigation Algorithm successfully navigated the insect toward the target object in 28 out of 30 trials (93.3%, N = 3 insects). The average navigation time was 111.5 s, and the average linear speed of the insect was 3.7 cm/s. The target’s position was generally estimated three times per navigation. The average distance between the insect and the target decreased from 4 m to approximately 2.5 m and 1.0 m after the insect arrived at the first and second estimated destinations, respectively, indicating successful position estimation (Fig. 4a). As navigation progressed, the thermal information, measured as the number of pixels in the IR image representing the target, increased as the hybrid system approached, confirming the algorithm’s effectiveness in Phase II.

a The increase in thermal information (pixels within 28 °C to 38 °C) and the decrease in the insect’s distance to the oven show that the insect is steered toward the oven without knowledge of its position. Data are shown as mean. b Typical navigation trajectory (blue curve) using the optical tracking system for localization. The algorithm is activated once the oven falls within the IR camera’s field of view (FoV). The orange circles (radius 0.2 m) denote the destination arrival regions. c Typical navigation trajectory (blue curve) using the onboard IMU-based localization system. The algorithm is triggered once the oven enters the IR camera’s FoV. If the thermal source leaves the camera’s FoV during movement, the algorithm uses the yaw angle from the IMU to steer the hybrid system and realign its orientation so that the thermal source is tracked consistently.

The two failed trials occurred because the hybrid system exceeded the time allocated to reach the oven. The insect was turning rapidly under stimulation when the IR camera captured the oven, so its orientation at the moment of capture differed from its orientation when the thermal image was processed. As a result, an incorrect target direction was assigned. After reaching this first target, the hybrid system failed to capture a new thermal image of the oven, which prevented the algorithm from assigning the next target.

Overshooting was another challenge, occurring in 27 of the 28 successful trials (Fig. 4b): the third estimated destination often lay behind the target because of the fixed approaching step size (L). This issue could be mitigated by reducing the step size or adjusting it adaptively based on thermal information. However, such adjustments may increase power consumption, because the IR camera would have to operate more frequently.

An alternative solution is to switch promptly from Phase II to Phase III once the thermal information is sufficient for reliable classification. As introduced, reliable classification requires at least 4.9% of the IR image’s pixels to fall within 28 °C to 38 °C. With this criterion used for phase switching, Phase III could start as soon as the insect arrived at the second destination (27 of 28 trials, ~96.4%).

Utilizing an onboard IMU-based localization system

In the previous section, position and orientation data from an external optical tracking system were used to navigate the hybrid system toward a heat source, the oven. The process was guided by the distance between the oven and the hybrid system and by the predetermined approaching step L. The blob detection algorithm was activated only three times to determine the heat source’s direction and set temporary targets, which minimized processing delays. However, errors in determining the heat source’s direction while the hybrid system rotated could misdirect it, and the fixed step size L often caused it to overshoot the target.
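The temporary-target step can be sketched as placing a waypoint a distance L along the estimated heat-source direction and treating the 0.2 m circle from Fig. 4b as the arrival region. The value L = 1.5 m below is hypothetical; it is merely consistent with the reported reductions in distance from 4 m to about 2.5 m and 1.0 m, and it also shows why a fixed step overshoots. Function names and angle conventions are assumptions.

```python
import math

def next_destination(x, y, yaw_rad, bearing_right_rad, step_l):
    """Temporary target step_l metres along the heat-source direction.
    yaw is counter-clockwise positive; bearing_right_rad is the source's angle
    to the right of the heading (both conventions assumed)."""
    heading = yaw_rad - bearing_right_rad
    return x + step_l * math.cos(heading), y + step_l * math.sin(heading)

def arrived(x, y, tx, ty, radius=0.2):
    """True inside the 0.2 m arrival circle used in Fig. 4b."""
    return math.hypot(tx - x, ty - y) <= radius

# Hypothetical illustration: target 4 m straight ahead, fixed L = 1.5 m
L = 1.5
pos = (0.0, 0.0)
for k in range(3):
    pos = next_destination(*pos, yaw_rad=0.0, bearing_right_rad=0.0, step_l=L)
    # negative "distance left" means the destination lies behind the target
    print(k + 1, "distance left:", round(4.0 - pos[0], 2))
```

With a fixed L, the third destination lands 0.5 m past a target that started 4 m away, which matches the overshooting pattern described for Fig. 4b.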

To address these issues, we refined the Thermal Source-Based Navigation Algorithm to use the IMU’s yaw angle for orientation correction. Blob detection could therefore run continuously to track the heat source as the hybrid system walked during Phase II. Whenever the heat source moves beyond the camera’s FoV, the algorithm uses orientation feedback from the IMU to turn the hybrid system back and recapture the heat source. This adjustment raised the success rate to 100%, from 93.3% with the external optical tracking system. With the onboard IMU-based localization system, the average navigation time increased to 124.7 s and the average linear speed to 5.0 cm/s, that is, an 11.8% longer navigation time and a 35.1% higher speed than the external tracking-based version of the algorithm (111.5 s and 3.7 cm/s). Both changes are attributable to the upgraded algorithm, which continuously tracks the heat source and uses the IMU’s orientation data to fine-tune the hybrid system’s movement throughout Phase II; this requires frequent electrical stimulation to realign the IR camera’s field of view with the oven (Fig. 4c).

These improvements underscore the efficacy of the onboard IMU-based localization system within the Thermal Source-Based Navigation Algorithm, enabling the hybrid system to navigate more effectively toward heat sources in Phase II.

Enhancing stochastic exploration strategies through IMU integration

Selection of the stochastic exploration strategy

The stochastic exploration strategies were compared in terms of coverage rate and target detection performance. The naturally walking hybrid system exhibited the slowest coverage rate, likely because of its strong wall-following tendency (Fig. 5a, c). Fixed Length performed better, reaching 61% coverage after 24 h (Fig. 5b). However, its emphasis on local searches resulted in a slower coverage rate than the strategies favoring global search (Fig. 5a, c).

a–c Their performance in covering empty space. Brownian Walk, Uniform Distribution, and Lévy Walk provide the fastest coverage rates. Under natural walk, the insect spends much time near the walls. Fixed Length provides a slow coverage rate because it favors searching local regions. b–d Their performance in searching for an unknown target. Lévy Walk slightly outperforms Brownian Walk and Uniform Distribution through its ratio between local and distant searches. e The blue dot and cyan line denote the target and the robot’s trajectory, respectively. Data are shown as mean ± standard deviation.

The remaining three strategies all achieved a final coverage of more than 90%. Brownian Walk favored distant explorations and showed the fastest increase in coverage, whereas Lévy Walk balanced local and distant searches. Although its coverage rate was slower than that of Brownian Walk (Fig. 5a, c), Lévy Walk slightly outperformed both Uniform Distribution and Brownian Walk in locating the unknown target, with an average search time of 221 min, ~13% faster than the other two strategies (Fig. 5d).

Although Lévy Walk’s advantage was modest in this study, its combination of local searches with distant jumps improved the chance of detecting the target quickly (Fig. 5e). As a trade-off, this local search characteristic contributed to a small number of failed cases (Fig. 5d). Unlike in other studies21,41, where Lévy Walk was clearly superior for sparsely distributed targets in vast spaces, its performance declined slightly in this bounded environment42.
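A Lévy walk draws step lengths from a heavy-tailed power law, so most steps are short (local search) while occasional steps are long (distant jumps). The sketch below samples such steps by inverse-transform sampling of p(l) ∝ l^(-μ) for l ≥ l_min, with a uniformly random heading, and clamps new targets to a rectangular arena. The exponent μ = 2, l_min = 0.1 m, the clamping, and the function names are hypothetical choices, not the parameters used in the study.

```python
import math
import random

def levy_step(rng, l_min=0.1, mu=2.0, l_max=None):
    """Step length from p(l) ∝ l**(-mu), l >= l_min (inverse-transform sampling),
    and a uniformly random heading in radians."""
    u = rng.random()                                   # u in [0, 1), so 1 - u > 0
    length = l_min * (1.0 - u) ** (-1.0 / (mu - 1.0))
    if l_max is not None:
        length = min(length, l_max)                    # truncate for a bounded arena
    heading = rng.uniform(-math.pi, math.pi)
    return length, heading

def next_levy_target(rng, x, y, bounds, **kwargs):
    """New exploration point from (x, y), clamped to bounds = (xmin, xmax, ymin, ymax)."""
    length, heading = levy_step(rng, **kwargs)
    xmin, xmax, ymin, ymax = bounds
    tx = min(max(x + length * math.cos(heading), xmin), xmax)
    ty = min(max(y + length * math.sin(heading), ymin), ymax)
    return tx, ty

rng = random.Random(0)
lengths = sorted(levy_step(rng)[0] for _ in range(10_000))
print("median:", lengths[len(lengths) // 2], "max:", lengths[-1])
```

For μ = 2 the median step is 2 × l_min, while the maximum is typically orders of magnitude larger; in a bounded arena, clamping truncates those jumps, which is one way to see why the Lévy advantage shrinks there.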

Nevertheless, given its slight edge in search time and overall efficiency, Lévy Walk was chosen as the stochastic exploration strategy for Phase I. During this phase, the hybrid system regularly gathers information about its surroundings using the onboard infrared camera.

Stochastic exploration strategy with IMU

An IMU was integrated into the backpack to provide orientation and position data (Fig. 6a), eliminating the previous reliance on the external optical tracking system. The experimental area was partitioned into 600 squares of 10 × 10 cm, and each square was deemed covered once the hybrid system crossed it. Three hybrid systems were used in 9 trials (N = 3, n = 9). The average coverage within the first 10 min was 22.8%, with a standard deviation of 5.5% (Fig. 6c). A key characteristic of this strategy is its Lévy Walk exploration pattern, which alternates periods of focus on nearby targets with long moves toward distant locations, thereby improving search efficiency by reducing revisits to previously explored space (Fig. 6b). In addition, the onboard IR camera can detect heat sources up to 4.2 m away, which substantially extends the range covered during the initial exploration phase (Phase I).
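The coverage metric can be sketched as the fraction of 10 × 10 cm cells that a trajectory passes through. The code assumes a 2.0 × 3.0 m arena (20 × 30 = 600 cells, matching the stated cell count but not a stated arena size) and samples each trajectory segment at quarter-cell spacing, so the result is an approximation; both choices are illustrative.

```python
import math

def coverage(path, width_m, height_m, cell_m=0.1):
    """Approximate fraction of cell_m x cell_m cells crossed by a polyline path."""
    nx, ny = round(width_m / cell_m), round(height_m / cell_m)
    visited = set()
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        # sample each segment at quarter-cell spacing so crossed cells are rarely skipped
        n = max(1, math.ceil(math.hypot(x1 - x0, y1 - y0) / (cell_m / 4)))
        for k in range(n + 1):
            x = x0 + (x1 - x0) * k / n
            y = y0 + (y1 - y0) * k / n
            i, j = int(x // cell_m), int(y // cell_m)
            if 0 <= i < nx and 0 <= j < ny:
                visited.add((i, j))
    return len(visited) / (nx * ny)

# Hypothetical 2.0 m x 3.0 m arena -> 20 x 30 = 600 cells of 10 x 10 cm
print(coverage([(0.05, 0.05), (1.95, 0.05)], 2.0, 3.0))   # one full row of 20 cells out of 600
```

Applied to an IMU-estimated trajectory, this metric inherits the localization error, so coverage near the arena edges is only as accurate as the position estimate.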

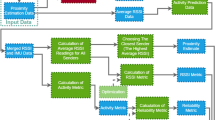

a The process begins with a Lévy walk-based target assignment that generates new exploration points and assigns specific target coordinates. The method calculates the orientation angle (θ) and the distance (D) to the target using the yaw angle and position data obtained from the gait-adaptive IMU. Whether to accelerate, turn left, or turn right is decided by comparing θ with a threshold angle (θt) of 20° and D with a threshold distance (Dt) of 10 cm. The process repeats, directing the hybrid system toward the arrival zone defined by Dt and then selecting a new target, so that the hybrid system explores the specified area. b Exploration path of the hybrid system using the Lévy walk strategy over 10 min, achieving an area coverage of approximately 18.3%. The yellow dot marks the starting point, the blue line depicts the path taken by the hybrid system, and white indicates the area searched. c Incremental area coverage over 10 min, demonstrating exploration efficiency (N = 3 hybrid systems, n = 9 trials). Data are shown as mean ± standard deviation.
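The decision rule in panel a can be written as a small function. θ is taken here as the signed angle from the current yaw to the target, wrapped to [-180°, 180°), with positive meaning the target lies counter-clockwise (to the left); this sign convention and the function name are assumptions, while θt = 20° and Dt = 10 cm come from the caption. Per Methods, a left turn would be produced by stimulating the right cercus, and acceleration by stimulating both cerci.

```python
import math

THETA_T_DEG = 20.0   # threshold angle θt from the caption
D_T_M = 0.10         # threshold distance Dt from the caption (10 cm)

def steering_command(x, y, yaw_rad, tx, ty):
    """Return 'arrived', 'left', 'right', or 'forward' for target (tx, ty)."""
    dx, dy = tx - x, ty - y
    if math.hypot(dx, dy) <= D_T_M:
        return "arrived"                  # inside the arrival zone: pick a new Lévy target
    # signed angle from current heading to target, wrapped to [-180, 180)
    theta = math.degrees(math.atan2(dy, dx) - yaw_rad)
    theta = (theta + 180.0) % 360.0 - 180.0
    if theta > THETA_T_DEG:
        return "left"                     # target counter-clockwise of heading (assumed)
    if theta < -THETA_T_DEG:
        return "right"
    return "forward"                      # roughly aligned: accelerate

print(steering_command(0.0, 0.0, 0.0, 0.0, 1.0))
```

The ±20° dead band means the insect is stimulated to turn only when it is clearly misaligned, which limits how often turning stimulation is applied.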

Design and demonstration of the exploration strategy

As previously mentioned, the exploration strategy has three phases: Phase I uses the Lévy Walk technique, Phase II uses Thermal Source-Based Navigation, and Phase III uses the IR image-based human detection algorithm. Thermal data from the IR image can serve as the trigger for Phase III, which requires at least 4.9% of the pixels (approximately 50 pixels) to fall within 28 °C to 38 °C. The same information can also trigger the start of Phase II. Blob detection is reliable at distances of 4.2 m or less, with an accuracy above 86.9% (Fig. 7a). Hence, a thermal information level of 0.8% (about 8 pixels), corresponding to this effective working range, can serve as the threshold for transitioning from Phase I to Phase II (Fig. 7a).
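The phase-switching rule reduces to counting pixels in the 28 °C to 38 °C band of the 32 × 32 frame. The sketch uses the pixel counts given in the text (about 8 and 50 of 1,024 pixels) rather than the rounded percentages, since 0.8% of 1,024 is 8.2; the function names and the inclusive band limits are assumptions.

```python
import numpy as np

PHASE_II_PIXELS = 8     # ≈0.8% of a 32 x 32 frame ("equivalent to 8 pixels")
PHASE_III_PIXELS = 50   # ≈4.9% of a 32 x 32 frame ("approximately 50 pixels")

def hot_pixel_count(temp, t_min=28.0, t_max=38.0):
    """Number of pixels whose temperature lies in [t_min, t_max]."""
    temp = np.asarray(temp, dtype=float)
    return int(np.count_nonzero((temp >= t_min) & (temp <= t_max)))

def select_phase(temp):
    """1 = stochastic exploration, 2 = thermal navigation, 3 = human classification."""
    n = hot_pixel_count(temp)
    if n >= PHASE_III_PIXELS:
        return 3
    if n >= PHASE_II_PIXELS:
        return 2
    return 1

frame = np.full((32, 32), 25.0)
frame.flat[:12] = 31.0          # 12 pixels in the band
print(select_phase(frame))
```

Because the same count drives both transitions, a single inexpensive pass over the image decides the phase on every capture.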

a The accuracy of the blob detection technique decreases as the distance between the IR camera and the target increases. 4.2 m (*) could be selected as the maximum distance for acceptable accuracy; the corresponding thermal information (28 °C to 38 °C) is 0.8%. Data are shown as mean. b IR images and their detected blobs (red dots) at different distances. The square boxes indicate the image’s hottest region and are used to evaluate the blobs’ accuracy. c Flowchart of the exploration strategy for both indoor and outdoor applications. The Indoor Exploration Strategy uses the thermal information within a specific temperature range of the IR image to trigger transitions between the three phases. An Outdoor Extension Adjustment module adapts this strategy to outdoor environments by analyzing pixels within the human temperature range near the thermal blob’s center. In addition, if a heat source is detected but then leaves the camera’s field of view as the hybrid system moves, the algorithm turns the hybrid system back to recapture the heat source. If it repeatedly fails to find the heat source, the algorithm reverts to Phase I (Stochastic Exploration) and resumes searching for thermal sources outdoors.

The efficacy of the exploration strategy was demonstrated indoors, where the hybrid system detected and recognized a human without any prior information about the person’s location (Supplementary Video 1). This trial took place in an enclosed environment at an ambient temperature of around 25 °C. Outdoor trials at night, however, posed additional difficulties because the ambient temperature fluctuated between 28 °C and 29 °C. These fluctuations, together with heat emitted by lighting and air-conditioning units, can cause errors in the Thermal Source-Based Navigation Algorithm.

To address these challenges, we refined our approach to distinguish human heat signatures from other environmental heat sources. For example, lights and air-conditioning units have temperatures above the typical range of human body temperatures, which often spans 29.3 °C to 34.9 °C. To ensure correct phase transitions, we therefore used a temperature range of 29 °C to 35 °C. Ambient temperature fluctuations add further complexity because their range overlaps with human temperatures. However, because warm air is spatially dispersed, it can be distinguished from a concentrated human heat signature. By examining the distribution of pixel temperatures around the center of the identified thermal source, we developed a more dependable detection procedure: if the IR image contains more than 25 pixels within the human temperature range, with at least 12 of those pixels concentrated in a 5 × 5-pixel area at the center, the algorithm navigates the hybrid system toward that target (Fig. 7c). As a trade-off, this modification reduced the effective range of blob detection from 4.2 m to 1.8 m (Fig. 7a).
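The outdoor check can be sketched as two pixel counts: more than 25 pixels anywhere in the 29 °C to 35 °C band, and at least 12 of them inside the 5 × 5 window centered on the thermal blob. The window is clipped at the image border, the blob center is assumed to be supplied as integer pixel indices, and the function name is hypothetical. The second example mimics dispersed warm air, which passes the global count but fails the central one.

```python
import numpy as np

def human_like(temp, center, t_min=29.0, t_max=35.0, min_total=25, min_center=12, half=2):
    """True if the frame has > min_total pixels in [t_min, t_max] and at least
    min_center of them fall in the (2*half+1)^2 window around center (row, col)."""
    temp = np.asarray(temp, dtype=float)
    mask = (temp >= t_min) & (temp <= t_max)
    if np.count_nonzero(mask) <= min_total:          # "more than 25 pixels"
        return False
    r, c = center
    r0, r1 = max(r - half, 0), min(r + half + 1, temp.shape[0])
    c0, c1 = max(c - half, 0), min(c + half + 1, temp.shape[1])
    return int(np.count_nonzero(mask[r0:r1, c0:c1])) >= min_center

person = np.full((32, 32), 25.0)
person[10:16, 10:16] = 32.0                          # compact warm region
warm_air = np.full((32, 32), 25.0)
warm_air[::4, ::4] = 30.0                            # scattered warm pixels
print(human_like(person, (12, 12)), human_like(warm_air, (12, 12)))
```

The central-density test is what trades range for robustness: a distant person covers fewer than 12 pixels of the window, which is consistent with the reduced effective range.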

Occasionally, transient changes in the ambient temperature initially satisfy the detection criterion but fail subsequent checks. When the Thermal Source-Based Navigation Algorithm cannot re-identify the thermal source, it relies on the IMU’s yaw angle to adjust the orientation of the hybrid system. For instance, if the hybrid system initially turns left and fails to detect the heat source, the algorithm commands it to turn right to recapture the thermal source. If a full 90° turn to the right does not find the source, the hybrid system performs a comparable left turn of up to 90°. If the target is still not found, the algorithm disregards the prior detection and returns to Phase I, and the hybrid system resumes its search (Fig. 7c).
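The recapture maneuver can be sketched as a yaw sweep: first opposite to the last turn, up to 90°, then in the other direction, up to 90°, with a return value of None signaling a fallback to Phase I. Measuring both sweeps from the yaw at the moment the source was lost, the 10° step, the counter-clockwise-positive yaw convention, and the `source_visible` callback (a hypothetical stand-in for a fresh IR capture plus detection check) are all assumptions.

```python
def recapture(yaw_lost_deg, last_turn, source_visible, step_deg=10.0, max_deg=90.0):
    """Sweep yaw to re-find a lost heat source.

    Sweeps up to max_deg opposite to last_turn, then up to max_deg the other way,
    both measured from yaw_lost_deg (counter-clockwise positive, assumed).
    Returns the yaw where the source is seen again, or None to fall back to Phase I.
    """
    first = -1.0 if last_turn == "left" else 1.0     # sweep opposite to the last turn (left = +yaw)
    for direction in (first, -first):
        angle = step_deg
        while angle <= max_deg + 1e-9:
            yaw = yaw_lost_deg + direction * angle
            if source_visible(yaw):                  # hypothetical capture + detection check
                return yaw
            angle += step_deg
    return None

print(recapture(0.0, "left", lambda yaw: abs(yaw - 60.0) < 5.0))
```

A transient warm-air patch would typically fail every check in both sweeps, so the function returns None and the search restarts in Phase I.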

In the outdoor demonstrations, the enhanced algorithm successfully rejected interference from non-human heat sources such as lights and air-conditioning units. The system encountered warm air three times; each instance initially met the detection criteria but vanished in subsequent captures. After attempts to recapture these fleeting thermal sources failed, the algorithm disregarded the detections and returned to Phase I. The search continued until the hybrid system located a human seated at an intersection, at a distance of approximately 1.7 m, demonstrating the efficacy of the adaptive approach in complex environmental conditions. The improved exploration strategy thus explored an outdoor setting and identified a human without any prior knowledge of the person’s position (Supplementary Video 2).

Discussion

This study presents a comprehensive exploration strategy for terrestrial insect-machine hybrid systems, integrating stochastic search algorithms, thermal source-based navigation, and IMU-based localization. The experimental results demonstrate the effectiveness of the strategy in autonomously locating human targets without prior knowledge of their positions, both indoors and outdoors. Notably, the onboard IMU-based localization system enables robust operation in outdoor environments, eliminating the need for external tracking systems.

Regarding the IMU-based localization module, error could accumulate from misalignment between the IMU orientation and the cockroach’s actual direction of motion caused by posture changes, as well as from false movement detection. When cockroaches encounter an obstacle, they tend to raise their bodies to probe it and attempt to climb over it as a negotiation strategy, which increases the pitch angle of the IMU-mounted PCB. The algorithm registered this as walking, causing positioning errors. Even when the climbing attempt was abandoned because the obstacle was too high, the accumulated error continued to increase, especially in the positive Z direction.

Crucially, the entire exploration technique devised in this work was executed on the onboard device (Fig. 7c). The blob detection technique (an image-processing task) and the human detection (a machine-learning model) required little Flash memory, roughly 1% (~23.2 kB), and occupied 64% (~163.5 kB) of SRAM. The onboard IMU-based localization method additionally consumes ~0.2% (~4.6 kB) of Flash memory and 5% (~12.9 kB) of SRAM. The remaining memory leaves room for additional features, such as the filter method. The computational times of blob detection, human detection, and IMU-based localization were approximately 350 ms, 95 ms, and 10 ms, respectively. These processing times are short enough for real-time operation, given that the navigated insect moves at around 5.0 cm/s. Compared with previous research, this technique offers clear improvements. For example, the range for localizing the target reaches up to 4.2 m in Phase II, a substantial improvement over the 1 m range previously achieved with acoustic information16. In addition, processing the data onboard reduces the power consumed by data transmission. For example, capturing infrared (IR) images required roughly 24 mW, about half the power needed to stream the auditory data16.
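As a rough check of the real-time claim, the sketch below assumes (our assumption; the text does not say whether the stages overlap) that blob detection, human detection, and IMU-based localization run back to back once per IR frame, and computes how far an insect moving at ~5.0 cm/s travels during one such pass. The stage labels are illustrative.

```python
# Rough real-time check: how far does the insect move during one processing pass?
# Assumption: the three stages run sequentially once per IR frame.
LATENCY_MS = {
    "blob_detection": 350.0,    # reported, ms
    "human_detection": 95.0,    # reported, ms
    "imu_localization": 10.0,   # reported, ms
}
INSECT_SPEED_CM_S = 5.0         # reported speed of the navigated insect
FRAME_PERIOD_S = 1.0            # IR camera runs at 1 Hz in the demonstrations


def displacement_per_pass_cm(latencies_ms, speed_cm_s):
    """Distance (cm) covered while all stages execute once."""
    total_s = sum(latencies_ms.values()) / 1000.0
    return speed_cm_s * total_s


drift_cm = displacement_per_pass_cm(LATENCY_MS, INSECT_SPEED_CM_S)
per_frame_cm = INSECT_SPEED_CM_S * FRAME_PERIOD_S
print(f"processing pass: {drift_cm:.1f} cm, one IR frame period: {per_frame_cm:.1f} cm")
```

Under these assumptions the insect moves about 2.3 cm per pass, less than half of the ~5 cm it covers between consecutive 1 Hz frames, so processing does not limit the update rate.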

Taken together, these advancements represent a significant step toward practical applications of insect-machine hybrid systems in real-world scenarios, such as search and rescue missions in complex terrains. Future work will focus on enhancing the localization accuracy, expanding the system’s range of operation, and testing it under more diverse environmental conditions.

Methods

Hybrid system and its onboard human detection algorithm

This study rebuilt the insect-machine hybrid systems described in ref. 2 (Fig. 1a, b). Madagascar hissing cockroaches (Gromphadorhina portentosa, ~6 g, 6 cm) were selected as the insect platform (Fig. 1a). The insect's locomotion was controlled via electrical stimulation of its cerci2: stimulating a single cercus induced a turn in the opposite direction, whereas stimulating both cerci simultaneously induced acceleration. The backpack was adopted from ref. 2 (Fig. 1b). It featured wireless communication (CC1352, TI, 48 MHz, 352 kB of Flash, 8 kB of SRAM), an IR camera with 32 × 32 pixels (90° × 90° FoV, Heimann Sensor GmbH), and four stimulation channels (Fig. 1b).

The Steve & Leif Super Sticky Double-Sided Tissue Tape was used to attach the backpack to a mounting plate. The mounting plate (10 × 10 mm²) was fabricated using a 3D printer and secured to the cockroach’s second and third thoracic segments using the same double-sided tape. To enhance adhesion, we used sandpaper to remove the thin, smooth cuticle from the contact area of these thoracic segments and make the surface rougher.

The hybrid robot is powered by a rechargeable lithium polymer (LiPo) battery with a nominal voltage of 3.7 V and a capacity of 120 mAh, equivalent to 444 mWh. The power consumption of the backpack is estimated at 64.84 mW (20% duty cycle for Bluetooth transmission and 100% duty cycle for the other main components). Assuming 90% efficiency for the regulator circuit, the system can operate for over 6 h on a full charge.
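The runtime figure follows from simple energy bookkeeping. The sketch below reproduces it from the quoted values; like the estimate above, it treats the 64.84 mW load as constant.

```python
def battery_energy_mwh(nominal_v, capacity_mah):
    """Stored energy (mWh) = nominal voltage (V) x capacity (mAh)."""
    return nominal_v * capacity_mah


def runtime_h(energy_mwh, load_mw, regulator_efficiency):
    """Hours of operation at a constant load behind a lossy regulator."""
    return energy_mwh * regulator_efficiency / load_mw


energy = battery_energy_mwh(3.7, 120.0)      # 3.7 V LiPo, 120 mAh
hours = runtime_h(energy, 64.84, 0.90)       # 64.84 mW backpack, 90 % regulator
print(f"{energy:.0f} mWh -> {hours:.2f} h")
```

This gives roughly 6.2 h, consistent with the stated operating time of over 6 h.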

Onboard human detection algorithm

We used the IR camera (32 × 32 resolution) to capture thermal images of warm objects. A Histogram of Oriented Gradients (HOG) feature descriptor and a Support Vector Machine (SVM) classifier were used to detect humans. The HOG method computes the magnitude and direction of pixel gradients in the IR images to create feature vectors representing the shape and appearance of detected objects. A HOG cell size of 4 × 4 pixels was chosen to balance computational efficiency and accuracy. A linear SVM was selected for its low computational overhead and sufficient accuracy for the onboard system. The model was developed and validated in MATLAB®, and the optimized model parameters were implemented on the backpack's microcontroller.
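A simplified HOG descriptor with 4 × 4 cells and a linear SVM decision function is sketched below for a 32 × 32 thermal frame. It is not the authors' MATLAB model: it omits the overlapping-block normalization of standard HOG (a single global L2 normalization is used instead), assumes 9 unsigned orientation bins, and leaves the SVM weights and bias as hypothetical placeholders, since the trained parameters are not given here.

```python
import math


def hog_features(img, cell=4, bins=9):
    """Simplified HOG for a small thermal frame (list of rows of floats).

    Unsigned gradient orientations (0-180 deg) are accumulated into `bins`
    magnitude-weighted histograms per cell x cell group of pixels; the whole
    vector is then L2-normalized once (standard HOG normalizes overlapping
    blocks of cells instead).
    """
    h, w = len(img), len(img[0])

    def px(r, c):  # replicate the border pixels
        return img[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    feats = []
    for r0 in range(0, h, cell):
        for c0 in range(0, w, cell):
            hist = [0.0] * bins
            for r in range(r0, r0 + cell):
                for c in range(c0, c0 + cell):
                    gx = px(r, c + 1) - px(r, c - 1)   # central differences
                    gy = px(r + 1, c) - px(r - 1, c)
                    mag = math.hypot(gx, gy)
                    ang = math.degrees(math.atan2(gy, gx)) % 180.0
                    hist[int(ang / (180.0 / bins)) % bins] += mag
            feats.extend(hist)
    norm = math.sqrt(sum(f * f for f in feats)) + 1e-6
    return [f / norm for f in feats]


def linear_svm_score(feats, weights, bias):
    """Linear SVM decision value w.x + b; a positive score means "human"."""
    return sum(f * wt for f, wt in zip(feats, weights)) + bias


frame = [[25.0] * 32 for _ in range(32)]     # uniform 25 degC background
for r in range(8, 24):
    for c in range(12, 20):
        frame[r][c] = 34.0                    # a warm, upright region
x = hog_features(frame)
print(len(x))  # 8 x 8 cells x 9 bins = 576
```

The small cell count keeps the feature vector short (576 values here), which is what makes a linear classifier cheap enough for a microcontroller.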

Despite the low-resolution IR camera, the human detection algorithm was reported to distinguish humans from everyday thermal objects (e.g., laptops, ovens) with a success rate of up to 90% at distances from 0.5 to 1.5 m, i.e., when at least 4.9% of the image pixels contained temperatures typical of a human body (28 °C to 38 °C)2.
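On a 32 × 32 frame, 4.9% corresponds to about 50 of 1024 pixels; since 50/1024 ≈ 4.88%, at least 51 pixels are needed. The sketch below expresses this criterion as a pixel-fraction test. Treating the 28–38 °C band as inclusive and counting whole pixels are assumptions; the text does not specify boundary handling.

```python
HUMAN_BAND_C = (28.0, 38.0)     # body-temperature band quoted in the text
RELIABLE_FRACTION = 0.049       # >= 4.9 % of the frame for reliable classification


def human_band_fraction(frame, band=HUMAN_BAND_C):
    """Fraction of pixels whose temperature lies inside the body-temperature band."""
    lo, hi = band
    pixels = [t for row in frame for t in row]
    return sum(lo <= t <= hi for t in pixels) / len(pixels)


def in_reliable_range(frame):
    """True when enough of the frame is at human temperature to classify reliably."""
    return human_band_fraction(frame) >= RELIABLE_FRACTION
```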

Design of the thermal source-based navigation algorithm

In Phase II, this algorithm estimates the target's position and then navigates toward it (Supplementary Fig. 1). The target's position is estimated as follows. First, a blob detection technique locates the target's coordinate (u, v) in the IR image (Supplementary Fig. 1a)19. After applying a 3 × 3 median filter, the technique smooths the image with a Gaussian filter and then nominates hot spots (potential targets) with a 3 × 3 Laplacian filter19 (Supplementary Fig. 1a). Three Gaussian filters were used: 33 × 33 (σ = 5), 27 × 27 (σ = 4), and 21 × 21 (σ = 3). Among the nominated spots, the one with the lowest Laplacian output was selected as the coordinate (u, v).
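The pipeline above (3 × 3 median, Gaussian smoothing at three scales, 3 × 3 Laplacian, lowest response wins) can be sketched in plain Python. Border replication and the σ² scale normalization used to compare responses across the three scales are assumptions; the text does not say how borders or cross-scale comparisons are handled. A bright blob produces a strongly negative Laplacian at its center, which is why the minimum is taken.

```python
import math

SCALES = [(33, 5.0), (27, 4.0), (21, 3.0)]   # (kernel size, sigma) from the text


def _clamp(i, n):
    return min(max(i, 0), n - 1)


def median3(img):
    """3 x 3 median filter with replicated borders."""
    h, w = len(img), len(img[0])
    return [[sorted(img[_clamp(r + dr, h)][_clamp(c + dc, w)]
                    for dr in (-1, 0, 1) for dc in (-1, 0, 1))[4]
             for c in range(w)] for r in range(h)]


def gaussian_blur(img, size, sigma):
    """Separable Gaussian blur (size x size kernel) with replicated borders."""
    half = size // 2
    k = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-half, half + 1)]
    s = sum(k)
    k = [v / s for v in k]
    h, w = len(img), len(img[0])
    tmp = [[sum(k[j] * img[r][_clamp(c + j - half, w)] for j in range(size))
            for c in range(w)] for r in range(h)]
    return [[sum(k[j] * tmp[_clamp(r + j - half, h)][c] for j in range(size))
             for c in range(w)] for r in range(h)]


def laplacian3(img):
    """4-neighbour 3 x 3 Laplacian with replicated borders."""
    h, w = len(img), len(img[0])
    g = lambda r, c: img[_clamp(r, h)][_clamp(c, w)]
    return [[g(r - 1, c) + g(r + 1, c) + g(r, c - 1) + g(r, c + 1) - 4.0 * g(r, c)
             for c in range(w)] for r in range(h)]


def detect_blob(img):
    """Return (u, v) = (column, row), 0-based, of the strongest hot blob."""
    smoothed = median3(img)
    best = None
    for size, sigma in SCALES:
        lap = laplacian3(gaussian_blur(smoothed, size, sigma))
        for r, row in enumerate(lap):
            for c, val in enumerate(row):
                score = sigma * sigma * val   # sigma^2 normalization (assumption)
                if best is None or score < best[0]:
                    best = (score, c, r)
    return best[1], best[2]
```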

The thermal source-based navigation algorithm aims to estimate the position of a heat source and navigate the hybrid system toward it. Two cases were developed: (1) using an external optical tracking system (VICON) and (2) using an onboard IMU-based localization system. Both cases follow the same overall approach but differ in how position and orientation data are acquired and utilized (Supplementary Fig. 2).

External optical tracking system for thermal source navigation

The blob detection algorithm first locates the heat source's coordinate (u, v) in the thermal image. This coordinate is mapped to an angular position α (deg) relative to the IR camera's horizontal field of view (Eq. 1) (Supplementary Fig. 1b). The angle α is then combined with the insect's pose (XI, YI, θI) and the approaching step L (m) to estimate the target's position (XT, YT) (Eqs. 2–4) (Supplementary Fig. 1b). L was tuned to 1.5 m based on the reliable working range of the human detection algorithm2.
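Eqs. 1–4 are not reproduced in this text. Assuming a linear mapping from image column to bearing across the 90° horizontal FoV, 1-based columns (1–32, matching the pixel ranges used for steering in the IMU-based case), bearings positive to the right, and a counter-clockwise heading θI, one plausible form of the target estimate is sketched here. It is an illustration under those assumptions, not necessarily the authors' exact formulation.

```python
import math

FOV_DEG = 90.0     # horizontal field of view of the IR camera
WIDTH_PX = 32      # horizontal resolution


def pixel_to_angle(u, width=WIDTH_PX, fov=FOV_DEG):
    """Bearing (deg) of 1-based image column u, positive to the right (assumed linear)."""
    centre = (width + 1) / 2.0           # 16.5 for a 32-pixel row
    return (u - centre) * fov / width


def estimate_target(x_i, y_i, theta_i_deg, u, step_m=1.5):
    """Point step_m ahead of the insect along the bearing of column u.

    theta_i_deg is counter-clockwise positive, so a target to the right
    (positive bearing) lies at a smaller heading.
    """
    heading = math.radians(theta_i_deg - pixel_to_angle(u))
    return x_i + step_m * math.cos(heading), y_i + step_m * math.sin(heading)
```

With L = 1.5 m, a blob at the image center yields a point 1.5 m straight ahead; a blob at the right edge (u = 32) is about 43.6° to the right.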

When the hybrid system reaches the estimated destination, a new thermal image is captured for either a new estimation or classification (Phase III). If the heat source falls outside the IR camera's field of view, a recovery strategy is initiated: the system makes additional movements between two destinations with an approaching step of ΔL = 0.2 m to recapture the heat source and adjust its orientation (Supplementary Fig. 2a).
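The switching between the three phases, including the outdoor behavior in which transient warm-air detections were abandoned after failed recapture attempts, can be sketched as a small state machine. The thresholds come from the text (more than 0.2% of pixels at 28–38 °C to start an estimate, at least 4.9% for reliable classification); the number of recapture attempts and the return to Phase I after a negative classification are assumptions.

```python
from enum import Enum


class Phase(Enum):
    EXPLORE = 1    # Phase I: stochastic search
    APPROACH = 2   # Phase II: thermal source-based navigation
    CLASSIFY = 3   # Phase III: human classification


TRIGGER_FRACTION = 0.002    # > 0.2 % of pixels in 28-38 degC starts an approach
CLASSIFY_FRACTION = 0.049   # >= 4.9 %: within the classifier's reliable range
MAX_RECAPTURE = 3           # hypothetical number of recovery attempts


def next_phase(phase, fraction, misses, is_human=False):
    """Advance the phase after one IR frame.

    fraction: share of pixels in the body-temperature band.
    misses:   consecutive Phase II frames without the heat source.
    is_human: classifier verdict, only used in Phase III.
    Returns (new_phase, new_misses).
    """
    if phase is Phase.EXPLORE:
        return (Phase.APPROACH, 0) if fraction > TRIGGER_FRACTION else (phase, 0)
    if phase is Phase.APPROACH:
        if fraction >= CLASSIFY_FRACTION:
            return Phase.CLASSIFY, 0
        if fraction > TRIGGER_FRACTION:
            return phase, 0                  # source still visible: keep approaching
        misses += 1                          # source lost: recovery move, then retry
        if misses >= MAX_RECAPTURE:
            return Phase.EXPLORE, 0          # transient source: give up
        return phase, misses
    # Phase III: stop on a human, otherwise resume exploring (assumption)
    return (phase, 0) if is_human else (Phase.EXPLORE, 0)
```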

Onboard IMU-based localization system for thermal source navigation

In this case, the hybrid system uses yaw angles derived from the IMU to calculate angular displacement and adjust its orientation. The heat source's coordinate (u, v) is determined as described earlier. Navigation decisions are then made according to the blob's horizontal position in the thermal image (u-axis): the system turns left if the blob lies within pixels 1 to 10, turns right if it lies within pixels 23 to 32, and accelerates if it lies within pixels 11 to 22 (Supplementary Fig. 1d). Using the yaw angle (θI) from the gait-adaptive IMU, we calculate the angle through which the system rotated during navigation. When the heat source is outside the IR FoV, this rotation angle is compared with the target's angular position (α) to estimate the remaining angular displacement, enabling the algorithm to adjust the hybrid system's orientation and recapture the heat source during movement (Supplementary Fig. 2b).
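The steering rule and the yaw-based recapture step can be sketched as follows. Column indices are 1-based as in the text; the sign conventions (target bearing α positive to the right, IMU yaw counter-clockwise positive) and the function names are assumptions made for illustration.

```python
def steering_command(u):
    """Map the blob's 1-based image column to a stimulation command."""
    if not 1 <= u <= 32:
        raise ValueError("column must be in 1..32")
    if u <= 10:
        return "left"
    if u >= 23:
        return "right"
    return "forward"            # columns 11-22: accelerate straight ahead


def wrap_deg(angle):
    """Wrap an angle to [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def residual_bearing(alpha_right_deg, yaw_before_deg, yaw_after_deg):
    """Bearing still to be turned after a manoeuvre, positive to the right.

    alpha_right_deg: target bearing when it was last seen (right-positive).
    Yaw is counter-clockwise positive, so a right turn lowers the yaw.
    """
    turned_right = -wrap_deg(yaw_after_deg - yaw_before_deg)
    return wrap_deg(alpha_right_deg - turned_right)
```

For example, if the target was last seen 30° to the right and the yaw moved from 10° to 350° (a 20° right turn across the wrap-around), about 10° of turning remains.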

Evaluation of the thermal source-based navigation algorithm

Two experiments were conducted. In the first experiment, the algorithm was evaluated using a batch of 3 hybrid systems, which it navigated toward a thermal target, i.e., an oven (28–50 °C), placed 4 m from their initial location (Supplementary Fig. 1c). The oven's position was unknown to the algorithm. A navigation was recorded as successful when the insect reached the target, i.e., came within 0.5 m of the oven, within 180 s; otherwise, it was recorded as failed. The experimental arena was 4.8 × 6.6 m². The ambient temperature was set at 25 °C. The hybrid system's initial orientation was randomized. The target's position was estimated when more than 0.2% of the IR image contained temperatures within 28–38 °C. For external localization, the insect's position was monitored with an external optical tracking system (VICON®, 10 cameras, 100 fps, Supplementary Fig. 1c), and ten trials were conducted for each hybrid system (n = 30). For self-localization, the hybrid system's orientation was determined with an integrated IMU (100 Hz, Supplementary Fig. 1e), and this part of the experiment comprised 9 trials (n = 9).
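The success criterion of the first experiment can be expressed as a check over a tracked trajectory. The time-ordered (t, x, y) sample format is a hypothetical stand-in for the VICON or onboard position logs.

```python
import math


def trial_succeeded(trajectory, target, radius_m=0.5, time_limit_s=180.0):
    """True if the insect comes within radius_m of the target within the time limit.

    trajectory: time-ordered iterable of (t_s, x_m, y_m) samples (hypothetical format).
    """
    tx, ty = target
    for t, x, y in trajectory:
        if t > time_limit_s:
            break
        if math.hypot(x - tx, y - ty) < radius_m:
            return True
    return False


def success_rate(trials):
    """trials: list of (trajectory, target) pairs."""
    outcomes = [trial_succeeded(traj, tgt) for traj, tgt in trials]
    return sum(outcomes) / len(outcomes)
```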

The second experiment established the criteria for switching between the three phases. Several sets of IR images containing a human were collected at different distances (Fig. 7a, b). The IR camera was placed on the floor at a height of 20 mm, approximating the insect's height2. The ambient temperature was set at 25 °C. A human was positioned at different locations inside the camera's FoV (Fig. 7b). The distance between the human and the camera was varied from 0.6 m to 5.4 m in steps of 0.6 m. At each distance, at least 200 images were captured and processed with the blob detection technique to extract the human's coordinate (Fig. 7b). Blob detection was judged successful if this coordinate lay inside the hottest region of the image, which was obtained with the segmentation algorithm proposed in ref. 18.
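The distance sweep and the per-distance success criterion can be written compactly. The hottest-region mask is assumed to come from a separate segmentation step (ref. 18) and is represented here as a hypothetical boolean grid indexed [row][column].

```python
# Distances of the sweep: 0.6 m to 5.4 m in 0.6 m steps (nine positions).
DISTANCES_M = [round(0.6 * k, 1) for k in range(1, 10)]


def blob_success_rate(blob_coords, hottest_masks):
    """Share of images whose blob coordinate (u, v) lies inside the hottest region.

    blob_coords:   list of (u, v) = (column, row), 0-based.
    hottest_masks: list of boolean grids, mask[row][column] (hypothetical format).
    """
    hits = sum(bool(mask[v][u]) for (u, v), mask in zip(blob_coords, hottest_masks))
    return hits / len(blob_coords)
```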

Development of the ROS Simulation Environment

As introduced, a ROS simulation environment was built. ARGoS and its foot-bot model were selected as the simulation engine and robot platform, respectively (Supplementary Fig. 3c)43. Three motions were programmed for the robot (forward motion, directional turning, and standing still), representing a 2D model of living insect locomotion (Gromphadorhina portentosa, Blattella germanica)44,45. The robot emulated two insect statuses: natural walking and walking under navigation. The insect's linear and angular speeds (v, ω) depended on the status (Supplementary Table 1). The insect's natural walk was modeled as a finite-state machine that switched between walking in open space and following walls (Supplementary Fig. 2b, Supplementary Table 1)44,45.

- In open space, the insect switched between moving and standing still with probability Pstop45. The stopping duration, Tstop (s), was drawn from a normal distribution (Supplementary Table 1)45.

- Each move consisted of a straight walk of L cm followed by an instantaneous turn of α deg, drawn from normal and von Mises distributions, respectively44,45,46 (Supplementary Fig. 1a). The insect tended to repeat its previous turning direction with probability Ppersist (Supplementary Table 1)45.

- The insect started following a wall when it was within 2 cm of the wall45. Wall-following motion consisted of walking a distance of L cm or stopping for Tstop s, selected via Pstop45.

- The insect left the wall with probability Pexit (Supplementary Table 1)45. The wall-departing angle β followed a log-normal distribution (Supplementary Fig. 2a, Supplementary Table 1)44.
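The natural-walk model in the list above can be sketched as a two-state machine (open space and wall following). All numerical parameters below are hypothetical placeholders; the actual values are in Supplementary Table 1. The wall-proximity test (within 2 cm) is left to the caller, and the toy driver that flags every seventh step as near a wall exists only to exercise both states.

```python
import math
import random

# Hypothetical placeholder values; the real parameters are in Supplementary Table 1.
PARAMS = {
    "p_stop": 0.2,                                 # stop instead of moving
    "t_stop_mu": 2.0, "t_stop_sd": 0.5,            # Tstop ~ Normal (s)
    "step_mu": 5.0, "step_sd": 1.0,                # straight-walk length L ~ Normal (cm)
    "turn_kappa": 2.0,                             # von Mises concentration of the turn
    "p_persist": 0.7,                              # repeat previous turning direction
    "p_exit": 0.1,                                 # leave the wall
    "exit_mu": math.log(30.0), "exit_sigma": 0.5,  # beta ~ log-normal (deg)
}


def natural_walk_step(state, near_wall, last_sign, rng, p=PARAMS):
    """One decision of the open-space / wall-following state machine.

    state: "open" or "wall"; near_wall: caller's test (within 2 cm of a wall);
    last_sign: +1 / -1, previous turning direction.
    Returns (new_state, event, turning_sign).
    """
    if state == "open" and near_wall:
        state = "wall"                                   # start wall following
    if state == "wall" and rng.random() < p["p_exit"]:
        beta = rng.lognormvariate(p["exit_mu"], p["exit_sigma"])
        return "open", {"action": "depart", "angle_deg": beta}, last_sign
    if rng.random() < p["p_stop"]:
        t_stop = max(0.0, rng.gauss(p["t_stop_mu"], p["t_stop_sd"]))
        return state, {"action": "stop", "duration_s": t_stop}, last_sign
    length = max(0.0, rng.gauss(p["step_mu"], p["step_sd"]))
    if state == "wall":                                  # walk along the wall, no turn
        return state, {"action": "walk", "length_cm": length}, last_sign
    raw = rng.vonmisesvariate(0.0, p["turn_kappa"])      # radians in [0, 2*pi)
    magnitude = abs(math.degrees((raw + math.pi) % (2 * math.pi) - math.pi))
    sign = last_sign if rng.random() < p["p_persist"] else -last_sign
    event = {"action": "walk", "length_cm": length, "turn_deg": sign * magnitude}
    return state, event, sign


def simulate_natural_walk(n_steps, seed=0, wall_every=7):
    """Toy driver: every `wall_every`-th step is flagged as near a wall."""
    rng = random.Random(seed)
    state, sign, log = "open", 1, []
    for k in range(n_steps):
        state, event, sign = natural_walk_step(state, k % wall_every == 0, sign, rng)
        log.append((state, event, sign))
    return log
```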

The Predictive Feedback Navigation Program and the insect's locomotory reaction to cercal stimulation were embedded in the simulation environment to emulate controlled insects2. The reaction was measured experimentally with N = 4 insects and n = 80 trials (Supplementary Table 1).

Simulation and evaluation of stochastic exploration strategies

In Phase I, the stochastic exploration strategy provides the hybrid system with successive destinations; after each arrival, a new destination is generated. The strategy should distribute these destinations efficiently to maximize the information acquired. Four stochastic exploration strategies were simulated. In all of them, the orientation of the destination relative to the insect was drawn uniformly from 0° to 360°21; the strategies differed in how they set the distance to the destination.

- Strategy 1: The distance was fixed at 0.5 m, the minimal effective working range of the human detection algorithm2. This strategy was named “Fixed Length.”

- Strategy 2: The distance was drawn from a Lévy distribution, which mostly generates short distances and occasionally a very long one; this is commonly discussed as an efficient stochastic exploration strategy21,41. The generation procedure followed ref. 21. This strategy was named “Levy Walk.” The minimum distance was set to 0.5 m.

- Strategy 3: The distance was drawn uniformly from 0.5 m to 20 m; this strategy was named “Uniform Distribution.”

- Strategy 4: The strategy emulated Brownian motion47, i.e., the hybrid system changed its destination whenever it encountered a wall. In a bounded terrain, this was achieved by fixing the distance to the terrain's longest length (30 m in this study). This strategy was named “Brownian Walk.”
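The four distance rules can be sketched as a single destination generator. The Lévy sampler is a stand-in: a Pareto-tailed step length with a hypothetical exponent μ = 2, drawn by inverse-transform sampling, which is not necessarily the procedure of ref. 21. The heading is drawn uniformly over 360°, as stated.

```python
import math
import random

D_MIN = 0.5           # m, minimum distance (all strategies)
D_MAX_UNIFORM = 20.0  # m, upper bound of the uniform strategy
D_BROWNIAN = 30.0     # m, longest length of the terrain
LEVY_MU = 2.0         # hypothetical power-law exponent for the Levy stand-in


def next_destination(strategy, x, y, rng):
    """Return (x_dest, y_dest, distance) for one Phase I destination."""
    heading = rng.uniform(0.0, 2.0 * math.pi)            # uniform over 360 deg
    if strategy == "fixed":
        d = D_MIN
    elif strategy == "levy":
        # P(D > d) = (d / D_MIN) ** -(mu - 1); inverse transform with U in (0, 1]
        d = D_MIN * (1.0 - rng.random()) ** (-1.0 / (LEVY_MU - 1.0))
    elif strategy == "uniform":
        d = rng.uniform(D_MIN, D_MAX_UNIFORM)
    elif strategy == "brownian":
        d = D_BROWNIAN                                   # walls interrupt the walk
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return x + d * math.cos(heading), y + d * math.sin(heading), d
```

In the simulation, a Brownian destination usually lies beyond a wall, so the wall encounter, not the 30 m distance, effectively ends each leg.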

These strategies represent different search mechanisms: Fixed Length and Brownian Walk favor local and global exploration, respectively, whereas Levy Walk and Uniform Distribution may offer a more balanced approach. The insect's natural motion was also simulated. The simulated arena was 20 × 20 m² (Supplementary Fig. 2c), and the hybrid system's initial coordinate was (−9.5, −9.5) m (Supplementary Fig. 2c). Each simulation lasted 24 h. Two scenarios were simulated, with and without a search target, and the strategies were compared by coverage percentage and search time. The hybrid system was equipped with a target detection sensor with a circular working range of 0.5 m radius. When the system came within 10 cm of a wall because its destination lay beyond it, it was first directed away from the wall, and a new destination was then generated. The target's coordinate was randomly selected as (4.0, −4.5) m (Supplementary Fig. 2c).
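Coverage percentage and search time can be computed from a simulated trajectory as sketched below. The 0.1 m grid resolution, the arena being centered on the origin (consistent with the start at (−9.5, −9.5) m and the target at (4.0, −4.5) m), and the (t, x, y) sample format are assumptions; the text does not describe how coverage was discretized.

```python
import math

ARENA = (-10.0, 10.0)   # 20 x 20 m arena centered on the origin (assumption)
CELL = 0.1              # hypothetical grid resolution (m)
SENSOR_R = 0.5          # detection radius (m)


def coverage_fraction(points, arena=ARENA, cell=CELL, r=SENSOR_R):
    """Fraction of grid cells whose centers fell within the sensor radius."""
    lo, hi = arena
    n = int(round((hi - lo) / cell))
    covered = set()
    for px, py in points:
        i0 = max(0, int(math.floor((px - r - lo) / cell)))
        i1 = min(n - 1, int(math.floor((px + r - lo) / cell)))
        j0 = max(0, int(math.floor((py - r - lo) / cell)))
        j1 = min(n - 1, int(math.floor((py + r - lo) / cell)))
        for i in range(i0, i1 + 1):
            cx = lo + (i + 0.5) * cell
            for j in range(j0, j1 + 1):
                cy = lo + (j + 0.5) * cell
                if (cx - px) ** 2 + (cy - py) ** 2 <= r * r:
                    covered.add((i, j))
    return len(covered) / (n * n)


def search_time(samples, target, r=SENSOR_R):
    """First time t at which the target is within the sensor radius, else None."""
    tx, ty = target
    for t, x, y in samples:
        if math.hypot(x - tx, y - ty) <= r:
            return t
    return None
```

A single stationary sample covers about π × 0.5² ≈ 0.79 m², i.e., about 0.2% of the 400 m² arena.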

Demonstration setup

Indoor demonstration

The exploration strategy was implemented onboard to search for a seated human inside a 4.8 × 6.6 m² region. The hybrid system was initially placed in the region's left corner, facing away from the human, who was in the top-right corner. The hybrid system had no knowledge of the human's location. The ambient temperature was 25 °C. The external optical tracking system (VICON®) monitored the hybrid system's position. The IR camera operated at a frame rate of 1 Hz.

Outdoor demonstration

For the outdoor demonstration, which utilized a gait-adaptive IMU for localization, the experiment took place on the pavement surrounding our building, covering an area of 3.5 × 6.0 m². A human was seated at an intersection within the pavement area, making them invisible to the hybrid system’s camera unless it approached this intersection, located ~3 m from the starting point. The ambient temperature was 28 °C. The hybrid system was equipped with a backpack-mounted IMU, providing yaw angle and position data, and the IR camera continued to operate at 1 Hz (Fig. 1c).

IMU data collection of free-walking insect

The acceleration and heading measured by the IMU on a free-walking insect were recorded at 100 Hz. Cockroaches were allowed to walk freely in an 8 × 12 m arena. The outputs of the IMU's Digital Motion Processor (3D accelerations and quaternions) were logged to onboard flash memory during free walking to obtain the highest logging rate the system supports. The data were retrieved after the experiment for post-processing.
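The heading used throughout is derived from the logged quaternions. A standard conversion from a unit quaternion to ZYX (yaw, pitch, roll) Euler angles is sketched below. The (w, x, y, z) component order and the axis convention are assumptions; they depend on how the sensor is configured and mounted. The output ranges (±180° for yaw and roll, ±90° for pitch) match the full-scale ranges used in the calibration procedure.

```python
import math


def quaternion_to_euler_deg(w, x, y, z):
    """ZYX Euler angles (yaw, pitch, roll) in degrees from a unit quaternion."""
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    s = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))   # clamp against rounding
    pitch = math.asin(s)
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    return tuple(math.degrees(a) for a in (yaw, pitch, roll))
```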

IMU-based localization system for hybrid system in 2D and 3D

First, the controller backpack was placed on the arena with its body-fixed coordinate system aligned with the coordinate system of the external optical tracking system (the reference coordinate system). The backpack was then rotated around the z-, y-, and x-axes so that yaw, roll, and pitch each varied over their full range (−180° to 180° for yaw and roll, and −90° to 90° for pitch). The output Euler angles were compared with the accurate orientation from the optical tracking system to ensure that their difference did not exceed 3.6° (1% of the 360° full-scale range). If the difference exceeded this threshold, the backpack was restarted and recalibrated. Next, the backpack was attached to the mounting plate on the insect's back using double-sided tape. The cockroach was then placed at the origin of the reference coordinate system and held in place briefly. Afterward, the experimenter released the cockroach to walk freely for approximately 5 s. The distances traveled, as calculated by the onboard IMU-based localization system and by the external optical tracking system, were compared to recalibrate the gain k using the equation:

where \(K_{\mathrm{seed}}\) was set to 3.5.

The recalibration was required once for each cockroach and would be used for all subsequent experiments with that cockroach.
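The gain equation is not reproduced in this text. Purely as an illustration, the sketch assumes that the IMU-estimated distance scales linearly with k, giving k = K_seed · d_ref / d_IMU; this form and the function names are assumptions, not the authors' implementation. The 3.6° orientation check is included with angle wrapping, so that, e.g., 179° and −179° count as 2° apart.

```python
K_SEED = 3.5           # seed gain quoted in the text
ANGLE_TOL_DEG = 3.6    # 1 % of a 360 deg full-scale range


def angle_error_deg(a_deg, b_deg):
    """Absolute angular difference with wrap-around (e.g. 179 vs -179 -> 2)."""
    return abs((a_deg - b_deg + 180.0) % 360.0 - 180.0)


def orientation_check(imu_euler_deg, ref_euler_deg, tol_deg=ANGLE_TOL_DEG):
    """True if every IMU Euler angle is within tol_deg of the tracker reference."""
    return all(angle_error_deg(a, b) <= tol_deg
               for a, b in zip(imu_euler_deg, ref_euler_deg))


def recalibrated_gain(d_imu_m, d_ref_m, k_seed=K_SEED):
    """ASSUMED linear form: k = k_seed * d_ref / d_imu (not the paper's equation)."""
    if d_imu_m <= 0.0:
        raise ValueError("IMU-estimated distance must be positive")
    return k_seed * d_ref_m / d_imu_m
```

If, for instance, the IMU underestimated a 1.2 m walk as 1.0 m with the seed gain, this assumed rule would raise k from 3.5 to 4.2.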

Once the gain k had been calibrated for a cockroach, it was updated wirelessly on the backpack controller. The body-fixed and reference coordinate systems were realigned, and the cockroach was allowed to walk freely around the arena. When the cockroach approached the boundary of the optical tracking area, the experimenter redirected it by lightly touching its antennae, to minimize loss of reference position data. The insect's positions were calculated onboard and streamed over BLE 5.1 to the central station GUI for comparison with the reference positions.

For the 3D investigation, cockroaches carrying backpacks were guided to walk on a foam slope inclined at 7.9°. The experimenter directed the insects along a predetermined path and ensured that they did not fall off the surface. The backpack controller calculated the insects' positions and sent them in real time over BLE 5.1 to the central station GUI for comparison with the reference positions.
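To see how pitch enters a 3D estimate, the sketch below shows a generic dead-reckoning update, not the authors' gait-adaptive algorithm: it assumes per-step distance increments are already available and splits each one into horizontal and vertical parts using yaw and pitch. On the 7.9° slope, 1 m walked along the surface corresponds to about 0.14 m of height gain. The same update also illustrates the Z drift noted in the Discussion: any distance increment wrongly counted while the body is pitched nose-up adds to Z.

```python
import math


def dead_reckon_3d(increments):
    """Accumulate (distance_m, yaw_deg, pitch_deg) increments into (x, y, z)."""
    x = y = z = 0.0
    for d, yaw, pitch in increments:
        yaw_r, pitch_r = math.radians(yaw), math.radians(pitch)
        horizontal = d * math.cos(pitch_r)     # projection onto the floor plane
        x += horizontal * math.cos(yaw_r)
        y += horizontal * math.sin(yaw_r)
        z += d * math.sin(pitch_r)             # climbing component
    return x, y, z
```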

Data availability

The data were uploaded to the Figshare platform: https://figshare.com/s/7e6fb7cd43cad75dc663.

Code availability

The code (MATLAB) was uploaded to the Figshare platform: https://figshare.com/s/7e6fb7cd43cad75dc663.

References

Bozkurt, A., Lobaton, E. & Sichitiu, M. A biobotic distributed sensor network for under-rubble search and rescue. Computer 49, 38–46 (2016).

Tran-Ngoc, P. T. et al. Intelligent insect–computer hybrid robot: installing innate obstacle negotiation and onboard human detection onto hybrid system. Adv. Intell. Syst. 2200319 (2023).

Drew, L. This cyborg cockroach could be the future of earthquake search and rescue. Nature (2023).

Whitmire, E., Latif, T. & Bozkurt, A. In 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). 1470–1473 (IEEE, 2013).

Cao, F. et al. A Biological Micro Actuator: Graded and Closed-Loop Control of Insect Leg Motion by Electrical Stimulation of Muscles. PLOS ONE 9, e105389 (2014).

Sanchez, C. et al. Locomotion control of hybrid cockroach robots. Journal of The Royal Society Interface 12, 20141363 (2015).

Doan, T. T. V., Li, Y., Cao, F. & Sato, H. Cyborg beetle: thrust control of free flying beetle via a miniature wireless neuromuscular stimulator. In 2015 28th IEEE International Conference on Micro Electro Mechanical Systems (MEMS). 1048–1050 (IEEE, 2015).

Nguyen, H. D., Dung, V. T., Sato, H. & Vo-Doan, T. T. Efficient autonomous navigation for terrestrial insect-machine hybrid systems. Sensors Actuators B: Chem. 376, 132988 (2023).

Hoover, A. M., Steltz, E. & Fearing, R. S. In 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems. 26–33 (IEEE, 2008).

Birkmeyer, P., Peterson, K. & Fearing, R. S. DASH: A dynamic 16g hexapedal robot. In 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems. 2683–2689 (IEEE, 2009).

Dickinson, M. H. et al. How animals move: an integrative view. Science 288, 100–106 (2000).

Baba, Y., Tsukada, A. & Comer, C. M. Collision avoidance by running insects: antennal guidance in cockroaches. J. Exp. Biol. 213, 2294–2302 (2010).

Latif, T., Whitmire, E., Novak, T. & Bozkurt, A. Towards fenceless boundaries for solar powered insect biobots. In 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. 1670–1673 (IEEE, EMBC, 2014).

Tsukuda, Y. et al. Calmbots: Exploring possibilities of multiple insects with on-hand devices and flexible controls as creation interfaces. In CHI Conference on Human Factors in Computing Systems Extended Abstracts. 1–13 (2022).

Ruan, X., Ren, D., Zhu, X. & Huang, J. Mobile robot navigation based on deep reinforcement learning. In 2019 Chinese control and decision conference (CCDC). 6174–6178 (IEEE, 2019).

Latif, T., Whitmire, E., Novak, T. & Bozkurt, A. Sound localization sensors for search and rescue biobots. IEEE Sensors J. 16, 3444–3453 (2016).

Calitoiu, D. In 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications. 1–6 (IEEE, 2009).

Doulamis, N., Agrafiotis, P., Athanasiou, G. & Amditis, A. Human object detection using very low resolution thermal cameras for urban search and rescue. In ACM International Conference Proceeding Series, Association for Computing Machinery. 311–318. https://doi.org/10.1145/3056540.3076201.

Lindeberg, T. Detecting salient blob-like image structures and their scales with a scale-space primal sketch: a method for focus-of-attention. Int. J. Comput. Vision 11, 283–318 (1993).

Lindeberg, T. Feature detection with automatic scale selection. Int. J. Comput. Vision 30, 79–116 (1998).

Sutantyo, D. K., Kernbach, S., Levi, P. & Nepomnyashchikh, V. A. In 2010 IEEE Safety Security and Rescue Robotics. 1–6 (IEEE, 2010).

Hutchinson, M., Ladosz, P., Liu, C. & Chen, W.-H. In 2019 International Conference on Robotics and Automation (ICRA). 7720–7726 (IEEE, 2019).

Nguyen, H. D., Tan, P., Sato, H. & Doan, T. T. V. In 2019 IEEE International Conference on Cyborg and Bionic Systems (CBS). 11–16 (IEEE, 2019).

Kaplan, E. D. & Hegarty, C. Understanding GPS/GNSS: principles and applications. (Artech House, 2017).

Nirjon, S. et al. COIN-GPS: Indoor localization from direct GPS receiving. In Proc. 12th annual international conference on Mobile systems, applications, and services. 301–314 (Association for Computing Machinery, New York, NY, 2014).

Wang, C. et al. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). 109–116 (IEEE, 2017).

Tsai, P. S., Hu, N. T. & Chen, J. Y. In 2014 Tenth International Conference on Intelligent Information Hiding and Multimedia Signal Processing. 978–981 (IEEE, 2014).

Al-Kaff, A., Meng, Q., Martín, D., de la Escalera, A. & Armingol, J. M. In 2016 IEEE intelligent vehicles symposium (IV). 92–97 (IEEE, 2016).

Qian, K., Ma, X., Fang, F., Dai, X. & Zhou, B. Mobile robot self-localization in unstructured environments based on observation localizability estimation with low-cost laser range-finder and RGB-D sensors. Int. J. Adv. Robot. Syst. 13, 1729881416670902 (2016).

Duque Domingo, J., Cerrada, C., Valero, E. & Cerrada, J. A. An improved indoor positioning system using RGB-D cameras and wireless networks for use in complex environments. Sensors 17, 2391 (2017).

Raharijaona, T. et al. Local positioning system using flickering infrared leds. Sensors 17, 2518 (2017).

Díez, L. E., Bahillo, A., Otegui, J. & Otim, T. Step length estimation methods based on inertial sensors: a review. IEEE Sensors J. 18, 6908–6926 (2018).

Wu, Y., Zhu, H.-B., Du, Q.-X. & Tang, S.-M. A survey of the research status of pedestrian dead reckoning systems based on inertial sensors. Int. J. Automation Comput. 16, 65–83 (2019).

Hou, X. & Bergmann, J. Pedestrian dead reckoning with wearable sensors: a systematic review. IEEE Sensors J. 21, 143–152 (2020).

Cole, J., Bozkurt, A. & Lobaton, E. Localization of biobotic insects using low-cost inertial measurement units. Sensors 20, 4486 (2020).

Li, M. & Mourikis, A. I. High-precision, consistent EKF-based visual-inertial odometry. Int. J. Robot. Res. 32, 690–711 (2013).

Leutenegger, S., Lynen, S., Bosse, M., Siegwart, R. & Furgale, P. Keyframe-based visual–inertial odometry using nonlinear optimization. Int. J. Robot. Res. 34, 314–334 (2015).

Kuutti, S. et al. A survey of the state-of-the-art localization techniques and their potentials for autonomous vehicle applications. IEEE Internet Things J. 5, 829–846 (2018).

Jimenez, A. R., Seco, F., Prieto, C. & Guevara, J. In 2009 IEEE International Symposium on Intelligent Signal Processing. 37–42 (IEEE, 2009).

Bender, J. A. et al. Kinematic and behavioral evidence for a distinction between trotting and ambling gaits in the cockroach Blaberus discoidalis. J. Exp. Biol. 214, 2057–2064 (2011).

Katada, Y., Hasegawa, S., Yamashita, K., Okazaki, N. & Ohkura, K. Swarm crawler robots using lévy flight for targets exploration in large environments. Robotics 11, 76 (2022).

Khaluf, Y., Havermaet, S. V. & Simoens, P. Collective Lévy walk for efficient exploration in unknown environments. In International Conference on Artificial Intelligence: Methodology, Systems, and Applications: 18th International Conference. 260–264 (Springer, 2018).

Pinciroli, C. et al. ARGoS: a modular, parallel, multi-engine simulator for multi-robot systems. Swarm Intell. 6, 271–295 (2012).

Jeanson, R. et al. A model of animal movements in a bounded space. J. Theor. Biol. 225, 443–451 (2003).

Dirafzoon, A. et al. Biobotic motion and behavior analysis in response to directional neurostimulation. In 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). 2457–2461 (IEEE, 2017).

Daltorio, K. A. Obstacle Navigation Decision-Making: Modeling Insect Behavior for Robot Autonomy (Case Western Reserve University, 2013).

Einstein, A. Über die von der molekularkinetischen Theorie der Wärme geforderte Bewegung von in ruhenden Flüssigkeiten suspendierten Teilchen (English translation: on the movement of small particles suspended in a stationary liquid demanded by the molecular-kinetic theory of heat). Annalen der Physik 4, 549–560 (1905).

Acknowledgements

The authors thank Mr. Ho Yow Min, Mr. Ying Da Tan, Mr. Terence Goh at KLASS Engineering & Solutions Pte. Ltd, Mr. Cheng Wee Kiang, Mr. Ong Ka Hing, Ms. Rui Huan AW at Home Team Science & Technology Agency (HTX), and distinguished officers at Singapore Civil Defence Force (SCDF) for their helpful comments and advice, and Ms. Kerh Geok Hong Wendy and Mr. Roger Tan Kay Chia for their support. A part of this work was supported by KLASS Engineering & Solutions Pte. Ltd (NTU REF 2019-1585).

Author information


Contributions

H.S., P.T.T.N., H.D.N., and D.L.L. conceived and designed the research. H.D.N., D.L.L., and P.T.T.N. developed hardware and software for the backpack. P.T.T.N., H.D.N., and B.S.C. developed the thermal source-based navigation algorithm. H.D.N. and P.T.T.N. developed the stochastic algorithm. P.T.T.N. and H.D.N. developed the exploration strategy. D.L.L. developed the IMU-based localization. P.T.T.N. and R.L. prepared the hybrid systems. P.T.T.N., H.D.N., D.L.L., and R.L. conducted the experiments and analysis. P.T.T.N., H.D.N., D.L.L., B.S.C., and H.S. wrote and edited the manuscript. H.S. supervised the research. All authors read and edited the paper.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tran-Ngoc, P.T., Nguyen, H.D., Le, D.L. et al. Gait-adaptive IMU-enhanced exploration strategy for autonomous search and rescue with insect-machine hybrid system. npj Robot 3, 20 (2025). https://doi.org/10.1038/s44182-025-00037-0
