Abstract

Awe is a complex emotion that encompasses conflicting affective feelings inherent to its key appraisals, but it has been studied as either a positive or a negative emotion, leaving its ambivalent nature underexplored. To address whether and how awe’s ambivalent affect is represented behaviorally and neurally, we conducted a study using virtual reality (VR) and electroencephalography (EEG; N = 43). Behaviorally, subjective ratings of awe intensity for VR clips were accurately predicted by the duration and intensity of ambivalent feelings. In the electrophysiological analysis, using deep representational learning and decoding analyses, we identified a latent neural-feeling space for each participant that shared valence representations across individuals and stimuli. Within these spaces, ambivalent feelings were represented as spatially distinct from positive and negative ones, with large individual differences in their separation. Notably, this variability significantly predicted subjective awe ratings. Lastly, hidden Markov modeling revealed that multiple band powers, particularly in frontoparietal channels, were significantly associated with the differentiation of valence states during awe-inducing VR watching. Our findings consistently highlight the salience of ambivalent affect in the subjective experience of awe at both behavioral and neural levels. This work provides a nuanced framework for understanding the complexity of human emotions, with implications for affective neuroscience.


Introduction

Awe is an extraordinary emotion felt when encountering exceptional objects, such as vast landscapes, masterpieces of art, or sublime lives. This emotion encourages a shift away from egocentric thinking, leading individuals to expand their mental schemas to fully process these remarkable subjects1,2. Consequently, awe has long been regarded as a “life-changing” emotion that helps people persevere through adversity3,4. Even in daily life, awe fosters a broader perspective that can enhance subjective well-being5,6, promote prosocial behavior7,8, and increase resilience9,10. Thus, awe offers a unique lens through which to explore the complexity of human emotion and its broader implications for life.

To understand the unique emotional qualities of awe, it is important to first define several emotion-related terms (e.g., ‘emotion’, ‘feeling’, ‘affect’). Since the definitions of these terms depend on the underlying theory of emotion, we adopt somatic marker theory11,12 as our conceptual framework. Somatic marker theory offers a holistic viewpoint that explains how physiological responses to external stimuli are represented in the brain and how affective feelings emerge from these processes, without underestimating the role of cognitive appraisals13. In this respect, the theory provides a promising foundation for linking the key appraisals of awe (i.e., perceived vastness and need for accommodation) to the physiological dynamics they shape and the resulting affective profiles (e.g., ref. 14). Within this framework, ‘emotion’ is defined as a set of physiological reactions evoked by external sensory inputs that do not necessarily entail conscious experience, whereas ‘feeling’ refers to the conscious awareness that originates from the interoceptive evaluation of these bodily signals11. Meanwhile, ‘affect’ is understood as a broader concept, defined by the dimensions of valence (i.e., pleasant, ‘positive’, or unpleasant, ‘negative’) and arousal (i.e., intense or mild)15,16,17, that encompasses both emotion and feeling. Although recent perspectives have proposed viewing awe as an altered state of consciousness rather than as an emotion or feeling18,19, in this paper we conceptualize awe as an emotion and feeling imbued with an affective dimension.

Awe’s distinctive affective profile is closely tied to its two key appraisals: ‘perceived vastness’ and ‘need for accommodation’1. Perceived vastness refers to encountering something physically or conceptually massive beyond one’s current mental structure, and is typically associated with positive feelings (e.g., admiration, elevation, aesthetic pleasure)1. In contrast, the need for accommodation involves adjusting existing mental structures to resolve the cognitive dissonance triggered by such stimuli, and is more often linked to negative feelings (e.g., confusion, obscurity)1. Indeed, an empirical survey has shown that scores on perceived vastness and need for accommodation correlate negatively and positively, respectively, with negative feelings during the experience of awe20. Based on this conceptual model, we hypothesize that the inherent duality of awe’s core appraisals may give awe an ambivalent affective character, defined by the simultaneous presence of both positive and negative affect21,22,23. Recent studies support this view, suggesting that awe is better characterized as a mixed emotion than as a purely positive or negative one24,25.

However, affective science has faced challenges in investigating awe’s ambivalent qualities, frequently categorizing awe by its dominant affective component into ‘positive’ and ‘threat’ awe (e.g., refs. 7,26). This framework has also influenced neuroimaging studies, which have primarily explored distinct neural correlates for each subtype rather than the integration of these dual valences (e.g., refs. 27,28).

Research on the ambivalent affect of awe at behavioral and neural levels remains constrained by methodological and theoretical challenges. Methodological issues arise in both evoking awe and measuring its valence. Standardized affective stimuli, such as the International Affective Picture System (IAPS), have shown limited validity and reliability in eliciting complex emotions like awe29,30. Moreover, conventional unidimensional valence scales (e.g., the bipolar continuum model) cannot capture the simultaneous coexistence of positive and negative feelings inherent to ambivalent affect. Psychometric research has shown that positive and negative valences are not always inversely correlated, and that multi-dimensional valence scales fit affective variance better31,32. Furthermore, neuroscience research on rodent models reveals that the brain’s affective circuits do not encode valence in a unidimensional manner33. Human neuroimaging studies also suggest that positive and negative valence systems, while sometimes overlapping, may operate independently in certain circuits34,35,36. Consequently, experimental frameworks that can effectively induce awe and accurately measure the ambivalent affect it evokes are needed.

A theoretical challenge in understanding the neural basis of ambivalent affect has been the debate over whether distinct neural systems for ambivalent affect exist. Constructivist theory argues that the valence of core affect has an irreducible bipolar, unidimensional structure15. On this basis, it proposes that a particular feeling cannot be both pleasing and displeasing at the same time, and predicts that there is no specialized neural representation for ambivalent emotions encompassing both37. Conversely, more recent perspectives recognize the possibility of neural circuits specifically responsible for ambivalent affect38,39. For example, the hierarchical model of ambivalent affect hypothesizes that competing bodily signals encoded in the brainstem nuclei are combined with emotion-related knowledge and memories in the orbitofrontal and anterior cingulate cortices, producing a global ambivalent representation in the insular cortex39. However, whether a distinct neural system for ambivalent affect exists, and whether it manifests cortical-level patterns separate from those of positive and negative affect, remains an open empirical question.

To address these methodological and theoretical challenges, this study combines virtual reality (VR), electroencephalography (EEG), multi-dimensional valence scales, and deep representational learning to examine awe’s ambivalent nature at behavioral and neural levels. Owing to its immersive characteristics, VR has been shown to elicit more reliable and intense awe in controlled environments than conventional static stimuli40,41,42,43. Furthermore, its compatibility with EEG recording makes it possible to capture fine-grained physiological dynamics of affective feelings during naturalistic awe. Indeed, the insular cortex, a potential key node in the hierarchical model of ambivalent affect, has been shown to integrate bodily signals into a global affective representation within approximately 125 ms44,45, underscoring the need for measurements with high temporal resolution.

To test whether ambivalent affect displays a distinct neural representation, we leverage CEBRA46, a contrastive learning-based nonlinear dimensionality reduction approach. Using valence self-reports synchronized to EEG signals as an auxiliary variable, CEBRA learns a ‘latent neural-feeling space’ at the individual level by pulling together EEG samples with the same valence category while maximizing the separation between samples with different valence labels. This approach is particularly useful for testing hypotheses about the neural correlates of ambivalent affect: if EEG samples recorded during self-reported ambivalent feelings do not form a distinct cluster and instead disperse broadly between the positive and negative valence clusters, this would support the constructivist view of ambivalent affect as merely a fluctuation between opposing valences. Conversely, a distinct neural pattern for self-reported ambivalent feelings would reveal latent representations of ambivalent affect that traditional feature engineering approaches (e.g., frontal alpha asymmetry) have not detected. Given the nonlinear relationship between brain signals and valence33,47,48, as well as the heterogeneity in affective neural representations across individuals and sensory modalities49,50,51, learning personalized latent neural-feeling spaces is well suited to capturing both individual-specific affective representations and their commonalities across individuals and stimuli.

This study aims to determine whether awe, in its behavioral and electrophysiological manifestations, is better explained as an ambivalent affect that simultaneously encompasses both positive and negative affect. To this end, we explore four questions: (1) Is self-reported awe intensity in the VR setting more strongly associated with the intensity and duration of ambivalent feelings than with those of purely positive or negative feelings? (2) Do ambivalent feelings during awe have distinct neural representations that are significantly separated from positive and negative ones? (3) If so, how does the geometric structure of these neural representations of ambivalent feelings explain self-reported awe intensity? (4) Which EEG features contribute most to differentiating valence representations in the brain? By addressing these questions, we aim to propose a more integrative framework for understanding awe’s ambivalent nature and discuss its potential implications for affective neuroscience.

Methods

Participants

We recruited 50 healthy young adult Koreans enrolled in psychology courses at Seoul National University for this study. Participants were excluded if they met any of the following criteria: (1) currently taking psychiatric medication, (2) history of psychiatric treatment, (3) left-handedness, (4) vestibular neuritis or balance disorder, (5) uncorrected visual acuity below 0.2, (6) non-Korean native speaker, and (7) consumption of alcohol or use of hair rinse within 24 hours before the experiment. After exclusions, data from 43 individuals were included in the analyses (20 males and 23 females; age M = 20.2 years, SD = 1.7 years). Seven participants were excluded because of technical issues (N = 3), discontinuation due to motion sickness (N = 2), and lack of fidelity in online valence ratings (i.e., accuracy below 90% in the keypress training session; see ‘Procedures’ section; N = 2). Since previous studies have not reported the effect size of behavioral and neural representations of ambivalent affect in predicting awe, an a priori power analysis was not feasible. Instead, we determined our sample size based on recent EEG studies that investigated emotional responses during movie watching52,53,54,55, ensuring comparability in experimental design and statistical power. Participants provided written informed consent before the experiment, and all procedures were approved by the Institutional Review Board of Seoul National University. This study was not pre-registered. See Fig. 1a for the overall sampling procedure.

Fig. 1: a Sampling flow. b Experimental framework. c Conceptual scheme of CEBRA-based learning of latent neural-feeling spaces. CEBRA performs nonlinear dimensionality reduction using contrastive learning, which pulls EEG signals with the same valence type as close together as possible while maximizing the separation between signals with different valences. This method is applied to each individual’s EEG data and keypress valence sequences for each video to train a personalized latent neural-feeling space. In the ‘aligned’ condition of the pairwise decoding task, canonical correlation analysis (CCA) is applied to the embeddings of two individuals (or clips) to align their latent embeddings and test the shared representational structure. d Framework of the pairwise decoding tasks, ‘across participants’ and ‘across clips’.

Experimental paradigm

VR clip design

We collaborated with a professional filmmaker to design four audio-integrated 360° immersive videos using Unreal Engine (version 5.03). Each video lasted 120 seconds. Three of the videos were designed to evoke awe: Space (SP), City (CI), and Mountain (MO), while the fourth, Park (PA), served as a control stimulus designed to exclude any awe-related factors. To investigate whether ambivalence is consistently observed across awe-related contexts, we varied the clip themes, the VR cues for the two awe-related appraisal dimensions (perceived vastness and need for accommodation), and the perceptual features across the awe-inducing videos (see Supplementary Note 1 and Supplementary Fig. 1 for detailed descriptions of stimulus design and validation). We synchronized visual content with ambient sounds using open-source audio samples from Freesound (https://freesound.org) and GarageBand (version 10.4.6).

To validate awe elicitation, we conducted a preliminary survey with 27 independent young adult Koreans (22 males and five females; age M = 20.3 years, SD = 1.9 years), who rated awe intensity using the Awe Experience Scale (AWE-S)20 after watching each clip in VR. Two-sided paired t-tests were conducted to test differences in AWE-S scores. Participants reported significantly higher AWE-S scores for the three awe clips than for the control clip, with large effect sizes (SP vs. PA: t(26) = 12.815, PFDR = 8 × 10−13, d = 2.466; CI vs. PA: t(26) = 11.393, PFDR = 6 × 10−12, d = 2.193; MO vs. PA: t(26) = 5.984, PFDR = 1 × 10−6, d = 1.520).
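A minimal sketch of the stimulus-validation statistics, assuming the effect size is Cohen’s d_z (mean paired difference divided by the SD of the differences) and that FDR correction uses the Benjamini-Hochberg procedure; the text does not state either choice. For reference, 12.815/√27 ≈ 2.466 for SP vs. PA, which matches d_z = t/√n, but this does not confirm the authors’ formula. The function names and the simulated scores are illustrative only, not study data.

```python
import numpy as np
from scipy import stats


def paired_comparison(awe_scores, control_scores):
    """Two-sided paired t-test and Cohen's d_z for one awe clip vs. the control clip."""
    a = np.asarray(awe_scores, dtype=float)
    b = np.asarray(control_scores, dtype=float)
    diff = a - b
    t, p = stats.ttest_rel(a, b)  # two-sided by default
    d_z = diff.mean() / diff.std(ddof=1)  # mean difference / SD of differences
    return float(t), float(p), float(d_z)


def benjamini_hochberg(pvals):
    """Benjamini-Hochberg FDR-adjusted P-values (step-up procedure)."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    ranked = p[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity: each adjusted value is the minimum over all larger ranks.
    ranked = np.minimum.accumulate(ranked[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.clip(ranked, 0.0, 1.0)
    return adjusted


# Usage with simulated AWE-S scores for 27 raters (illustrative, not study data).
rng = np.random.default_rng(0)
control = rng.normal(3.0, 1.0, 27)
results = [paired_comparison(control + rng.normal(shift, 0.8, 27), control)
           for shift in (1.5, 1.2, 0.6)]  # three awe clips vs. control
p_fdr = benjamini_hochberg([r[1] for r in results])
```

Because t = d_z·√n for a paired design, reporting d_z adds no information beyond t and n; it is shown here only because it reproduces the SP vs. PA value.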

Baseline self-report

Before the experiment, participants provided information on their biological sex, age, baseline mood states, and dispositional tendency to experience awe in daily life. Self-identified gender and race/ethnicity were not collected. These variables were collected to control for their potential confounding effects in predicting AWE-S ratings for each clip. Baseline mood states were assessed using the Korean version of the Positive and Negative Affect Schedule (PANAS) validated in ref. 56. No participant’s baseline mood scores fell outside 1.5 × the interquartile range (positive affect: M = 33.581, SD = 6.284; negative affect: M = 23.140, SD = 6.331). Trait awe was measured using the awe-related items of the Dispositional Positive Emotions Scale (DPES)57 translated into Korean (M = 29.628, SD = 6.626). The Korean-translated DPES items showed acceptable reliability (Cronbach’s alpha = 0.827).
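The two screening statistics above can be sketched as follows, assuming Tukey’s fences (Q1 − 1.5·IQR, Q3 + 1.5·IQR) with NumPy’s default linear-interpolation quantiles and the standard Cronbach’s alpha formula, α = k/(k − 1)·(1 − Σ item variances / variance of total score). The quantile method and function names are assumptions, not details given in the text.

```python
import numpy as np


def outside_tukey_fences(scores, k=1.5):
    """Boolean mask of scores below Q1 - k*IQR or above Q3 + k*IQR."""
    x = np.asarray(scores, dtype=float)
    q1, q3 = np.percentile(x, [25, 75])  # linear interpolation (NumPy default)
    iqr = q3 - q1
    return (x < q1 - k * iqr) | (x > q3 + k * iqr)


def cronbach_alpha(items):
    """Cronbach's alpha for a respondents x items score matrix."""
    X = np.asarray(items, dtype=float)
    k = X.shape[1]
    item_var = X.var(axis=0, ddof=1).sum()   # sum of per-item variances
    total_var = X.sum(axis=1).var(ddof=1)    # variance of summed scale scores
    return k / (k - 1) * (1 - item_var / total_var)
```

Applied to PANAS totals, an all-False mask from `outside_tukey_fences` corresponds to the statement that no participant showed an exceptional baseline mood.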

Procedures

Participants sat on a sofa in a noise-isolated room and wore an Enobio 20 EEG device (Neuroelectrics) and a Quest 2 VR headset (Oculus). After checking EEG signal quality (see ‘EEG data acquisition’ section), the experiment proceeded as follows: baseline EEG recording, keypress training, VR watching task, post-trial measurement, and a break (see Fig. 1b for overall experimental procedures).

Firstly, participants’ resting EEG signals were recorded for 120 seconds with their eyes closed (baseline EEG recording). These baseline signals were used to normalize the signals recorded during the movie-watching trials. Secondly, participants practiced real-time keypress reporting of their feelings (keypress training). Here, they were explicitly asked to report their feelings in real time via keypress, following this auditory instruction:

“While watching the video, please report your affective feeling by pressing a number pad: ‘1 (positive)’ for pleasing feeling, ‘2 (ambivalent)’ for mixed feeling encompassing both pleasing and displeasing feelings at the same time, and ‘3 (negative)’ for displeasing feelings. If you do not feel any feelings, please do not press anything. If a specific feeling persists, continue to press and hold the corresponding key. It is important to report your subjective feelings rather than which feelings the video intends to elicit”.

Participants practiced this for 60 seconds with 30 Korean sentences describing affective responses, to prevent confusion about which key to press. Individuals whose accuracy in this step was below 90% were excluded from the main analysis (N = 2). Participants then watched the four VR clips in a pseudorandom order, reporting their feelings via online keypress (VR watching task).

After each trial, they reported awe intensity, overall valence, arousal, and motion sickness using controllers (post-trial measurement). Awe intensity was measured by the Korean-translated AWE-S, valence by Evaluative Space Grid58, arousal by a conventional 9-point Likert scale59, and motion sickness by a single 7-point Likert scale item. The Korean-translated AWE-S demonstrated acceptable reliability (Cronbach’s alpha = 0.928). Participants took a 30-second break with their eyes closed after each trial (break).

EEG data acquisition

We recorded EEG signals using 19 dry electrodes (AF3, AF4, F7, F3, Fz, F4, F8, FC5, FC6, C3, Cz, C4, P7, P3, Pz, P4, P8, O1, and O2) with a Neuroelectrics Enobio 20. Ground and reference electrodes were attached to the right earlobe. The software embedded in the Enobio system rated signal quality at three levels: ‘good’, ‘medium’, and ‘bad’. We ensured that no electrode displayed a bad signal before starting acquisition. We adopted the automated EEGLAB-based preprocessing pipeline (EEGLAB version 2023.1) validated in ref. 60. EEG signals for each trial were time-locked to the onset of the video, excluding the last three seconds to avoid end-of-task effects (e.g., loss of attention). High-pass filtering (0.5 Hz cutoff) and Artifact Subspace Reconstruction were performed. Unlike the original pipeline, we used interpolation rather than excluding time windows with poor signal quality, to maintain consistent recording lengths across participants and trials. We conducted independent component analysis-based artifact rejection to remove components classified as noise (e.g., eye or head movements) with over 90% probability. The preprocessed signal for each trial was normalized by subtracting the average resting signal value for each channel.

After preprocessing, we performed a short-time Fourier transform (STFT) to calculate the spectral power of five frequency bands for each channel: delta (1–4 Hz), theta (4–8 Hz), alpha (8–14 Hz), beta (14–31 Hz), and gamma (31–49 Hz). For the STFT, a Hanning window with a 500-sample window size (i.e., 1000 ms) and a 250-sample hop size (i.e., 500 ms) was applied. Participants’ feeling keypresses were embedded as event markers in the EEG signals, assigning each EEG sample to one of four valence categories: neutral, positive, negative, or ambivalent. The valence label of each 500-sample STFT window was defined as the mode of the valence labels of its samples. The STFT was performed using the SciPy package61.
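A sketch of the feature-extraction step with `scipy.signal.stft`, under stated assumptions: a 500 Hz sampling rate (inferred from 500 samples = 1000 ms), half-open band edges [low, high), no boundary padding so that each STFT window maps exactly onto samples [250j, 250j + 500), and hypothetical integer valence codes (0 neutral, 1 positive, 2 ambivalent, 3 negative). Ties in the window mode fall to the lower code, which is also an assumption.

```python
import numpy as np
from scipy.signal import stft

FS = 500  # Hz, inferred from "500-sample window size (i.e., 1000 ms)"
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 14),
         "beta": (14, 31), "gamma": (31, 49)}  # half-open [low, high) edges assumed


def band_powers(eeg, fs=FS, nperseg=500, hop=250):
    """eeg: channels x samples -> channels x bands x windows spectral power."""
    f, _, Z = stft(eeg, fs=fs, window="hann", nperseg=nperseg,
                   noverlap=nperseg - hop, boundary=None, padded=False)
    power = np.abs(Z) ** 2  # channels x frequencies x windows
    per_band = [power[:, (f >= lo) & (f < hi), :].mean(axis=1)
                for lo, hi in BANDS.values()]
    return np.stack(per_band, axis=1)


def window_labels(labels, nperseg=500, hop=250, n_classes=4):
    """Mode of per-sample valence codes within each STFT window (ties -> lower code)."""
    windows = np.lib.stride_tricks.sliding_window_view(np.asarray(labels), nperseg)[::hop]
    return np.array([np.bincount(w, minlength=n_classes).argmax() for w in windows])
```

With `boundary=None` and `padded=False`, both functions yield (N − 500)//250 + 1 windows for N samples, so band powers and labels stay aligned one-to-one.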

Behavioral analysis

Using data from the 43 participants with acceptable behavioral data (see Fig. 1a), we conducted the following analyses to assess the association between AWE-S ratings and 14 behavioral features measured before, during, and after each trial: sex, age, PANAS positive and negative affect scores, and DPES awe trait score (before the trial); duration of positive, ambivalent, negative, and neutral feelings (during the trial); and arousal, motion sickness score, and intensity of positive, ambivalent, and negative feelings (after the trial). The duration of each valence type was calculated as the proportion of each clip’s running time during which the corresponding key was pressed. Valence intensity was calculated from the Evaluative Space Grid responses: positivity (x-axis value), negativity (y-axis value), and ambivalence (the minimum of positivity and negativity, following previous literature25,62,63).
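The duration and intensity features can be sketched as below, assuming keypresses are stored as one hypothetical integer code per EEG sample (0 neutral/no key, 1 positive, 2 ambivalent, 3 negative), so that the fraction of samples equals the fraction of running time at a fixed sampling rate. The ambivalence rule min(positivity, negativity) is taken from the text; the names are illustrative.

```python
import numpy as np

# Hypothetical per-sample keypress codes (not specified in the text).
CODES = {"neutral": 0, "positive": 1, "ambivalent": 2, "negative": 3}


def valence_durations(keypress):
    """Fraction of the clip's samples spent in each valence state."""
    k = np.asarray(keypress)
    return {name: float(np.mean(k == code)) for name, code in CODES.items()}


def esg_intensities(positivity, negativity):
    """Evaluative Space Grid scores; ambivalence is min(positivity, negativity)."""
    return {"positive": positivity, "negative": negativity,
            "ambivalent": min(positivity, negativity)}
```

The min rule means that a response is scored as ambivalent only to the extent that both axes are elevated; a high-positive, zero-negative response yields zero ambivalence.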

Firstly, we performed two-sided paired t-tests to examine differences in AWE-S scores, the duration and intensity of each valence type, and arousal between each of the three awe clips and the control clip at PFDR < 0.05. Next, to evaluate the explanatory power of the 14 metrics for AWE-S ratings, we fit a linear mixed-effects model for each regressor, with random intercepts for participants and clips (i.e., 14 models in total), using the lmerTest package64. Normality assumptions were examined using the DHARMa package65; Kolmogorov-Smirnov tests confirmed that the distribution of residuals did not significantly deviate from normality (all Ps > 0.05). Last, to account for potential nonlinear interactions among predictors, we conducted machine learning-based multivariate predictive modeling of AWE-S ratings with the 14 behavioral variables. Using the h2o package66, we split the dataset into training and test sets at a 4:1 ratio and conducted 5-fold cross-validation. Of the 22 models constructed in this process, we selected the gradient boosting machine (GBM), which had the lowest fold-averaged test RMSE, as the best model. Its predictive performance was compared with that of a baseline ridge linear regression model. To identify the most influential features, we calculated variable importance and Shapley values for each variable in the best model.
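The study used h2o’s AutoML; the sketch below is only a NumPy stand-in for the ridge baseline and the fold-averaged RMSE criterion, not h2o’s implementation. The penalty value, random fold assignment and function names are assumptions, and categorical predictors such as sex would need numeric coding before use.

```python
import numpy as np


def ridge_fit(X, y, alpha=1.0):
    """Closed-form ridge regression with an unpenalized intercept."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    return w, y_mean - x_mean @ w


def cv_rmse(X, y, alpha=1.0, n_folds=5, seed=0):
    """Fold-averaged RMSE of ridge regression under K-fold cross-validation."""
    idx = np.random.default_rng(seed).permutation(len(y))
    rmses = []
    for fold in np.array_split(idx, n_folds):
        train = np.setdiff1d(idx, fold)  # all indices not in the held-out fold
        w, b = ridge_fit(X[train], y[train], alpha)
        resid = y[fold] - (X[fold] @ w + b)
        rmses.append(np.sqrt(np.mean(resid ** 2)))
    return float(np.mean(rmses))
```

Comparing `cv_rmse` of this baseline with the same fold-averaged RMSE of a nonlinear model mirrors the selection criterion described above.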

Electrophysiological analysis

Of the 43 participants included in the behavioral analysis, we excluded 16 from the electrophysiological analysis due to poor-quality EEG signals on visual inspection (N = 6) or a lack of ambivalent keypresses in at least one awe-inducing trial (i.e., reporting ambivalent feelings for less than 5% of the total duration across all videos; N = 10). These criteria rested on the premise that consistent reporting of ambivalent feelings across stimuli provides a clearer signal for assessing their neural correlates, which was necessary for investigating the latent neural representation of ambivalent feelings during awe. Included and excluded participants did not differ significantly in key demographic measures or in AWE-S ratings for any awe-evoking clip at PFDR < 0.05, suggesting that this selection is unlikely to have introduced sampling bias (see Supplementary Table 1). As a result, data from 27 participants were used in the following electrophysiological analyses.

Learning a latent neural-feeling space for each participant and clip

The first objective of the electrophysiological analysis was to investigate whether ambivalent feelings during awe display distinct neural representations and to evaluate their relationship with subjective awe ratings. To this end, we identified a latent neural-feeling space for each participant-clip pair by applying CEBRA46 to the 27 participants’ temporally aligned STFT EEG features and valence keypresses in the three awe clips (i.e., 27 participants × 3 clips). CEBRA can extract latent neural-feeling spaces because it uses contrastive learning-based dimensionality reduction to find latent embeddings of the neural signals, guided by the self-reported valence dynamics, that maximize the attraction between EEG samples with the same valence label and the repulsion between samples with different labels (see Fig. 1c for a conceptual scheme of the CEBRA analysis).
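To make the attraction/repulsion idea concrete, here is a simplified, deterministic variant of the InfoNCE objective on the unit hypersphere, in which every other sample with the same valence label serves as a positive and all other samples form the denominator (as in supervised contrastive learning). This is an illustration, not CEBRA’s implementation, which samples positives and negatives per batch; the temperature value is an assumption.

```python
import numpy as np
from scipy.special import logsumexp


def supervised_infonce(embeddings, labels, temperature=0.1):
    """Mean -log softmax score of same-label pairs under cosine similarity."""
    z = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)  # unit hypersphere
    sim = (z @ z.T) / temperature  # cosine similarity scaled by temperature
    labels = np.asarray(labels)
    n = len(labels)
    losses = []
    for i in range(n):
        others = np.arange(n) != i
        log_denom = logsumexp(sim[i, others])  # every non-self sample is a candidate
        positives = others & (labels == labels[i])  # same valence label -> attract
        losses.extend(log_denom - sim[i, positives])  # -log softmax of each positive
    return float(np.mean(losses))
```

The loss is lowest when same-label samples point in the same direction and different-label samples point away from them, which is the geometry the latent neural-feeling space is trained toward.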

As the optimal dimensionality of the latent neural-feeling spaces was unknown, we fitted CEBRA models for each participant-clip pair with dimensionalities ranging from one to nine (see ‘Dimensionality selection for latent neural-feeling spaces’ section for the selection process). The following hyperparameters were applied: batch_size = length of the STFT EEG features, model_architecture = ‘offset-10 model’, number_of_hidden_units = 38, learning_rate = 0.001, number_of_iterations = 500, and hybrid = False.
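A sketch of how the reported hyperparameters might map onto keyword arguments of `cebra.CEBRA`. The mapping is an assumption: we take ‘offset-10 model’ to be CEBRA’s `"offset10-model"` identifier, number_of_hidden_units to be `num_hidden_units`, and number_of_iterations to be `max_iterations`; all other arguments (e.g., temperature, conditional) are left at library defaults, which the text does not report. The grid builder runs without CEBRA installed; `fit_embedding` is a hypothetical helper that imports it lazily.

```python
# Reported hyperparameters mapped to assumed cebra.CEBRA keyword names.
BASE_PARAMS = dict(
    model_architecture="offset10-model",
    num_hidden_units=38,
    learning_rate=0.001,
    max_iterations=500,
    hybrid=False,
)


def cebra_param_grid(n_windows, dims=range(1, 10)):
    """One parameter set per candidate dimensionality (1-9), full-batch training."""
    return [dict(BASE_PARAMS, batch_size=n_windows, output_dimension=d) for d in dims]


def fit_embedding(features, valence_codes, params):
    """Hypothetical helper: fit one participant-clip model and return its embedding."""
    import cebra  # imported lazily so the grid can be built without CEBRA installed
    model = cebra.CEBRA(**params)
    model.fit(features, valence_codes)  # features: windows x (19 channels * 5 bands)
    return model.transform(features)
```

Setting `batch_size` to the number of STFT windows makes every training step use the whole clip, matching “batch_size = length of the STFT EEG features”.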

Decoding feeling dynamics with latent neural-feeling spaces

We performed pairwise decoding analyses to test whether the personalized latent neural-feeling spaces contain information that generalizes across individuals and clips. To this end, pairwise decoding was conducted in a two tasks (‘across participants’ and ‘across clips’) × three conditions (‘random’, ‘not-aligned’, and ‘aligned’) design (see Fig. 1d for schematic descriptions of the decoding tasks).

For the ‘across participants’ task, a k-nearest neighbor (kNN) classifier trained on participant A’s embeddings in the latent neural-feeling space and the corresponding keypress valence labels was used to predict the valence keypresses from participant B’s embeddings for the same clip. For the ‘across clips’ task, a kNN classifier trained on clip X’s embeddings and valence sequences was used to predict the valence labels of clip Y’s embeddings within the same participant.

Decoding performance was evaluated under three conditions: (1) a baseline null test with a classifier trained on randomly shuffled valence labels (‘random’), (2) prediction using personalized latent neural-feeling embeddings without any alignment between train and test sets (‘not-aligned’), and (3) prediction using latent neural-feeling embeddings aligned between train and test sets (‘aligned’). Neural alignment enables the exploration of commonalities among individual latent neural spaces67,68. To align train and test embeddings, we applied canonical correlation analysis (CCA); the canonical components of the train and test sets, with as many components as the dimensionality of each latent embedding, were used as ‘aligned embeddings’.
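A minimal SVD-based CCA alignment sketch, assuming that train and test embeddings are paired row by row by STFT window (each clip lasts 120 s, so window counts match) and that both have full column rank. The canonical variates are returned with unit norm rather than unit variance, a uniform rescaling that does not change kNN neighbor rankings. The function name is illustrative.

```python
import numpy as np


def cca_align(train_emb, test_emb, n_components=None):
    """Project two time-matched embeddings onto their canonical variates."""
    A = train_emb - train_emb.mean(axis=0)
    B = test_emb - test_emb.mean(axis=0)
    k = n_components or min(A.shape[1], B.shape[1])
    Ua, _, _ = np.linalg.svd(A, full_matrices=False)  # orthonormal basis of A's column space
    Ub, _, _ = np.linalg.svd(B, full_matrices=False)  # orthonormal basis of B's column space
    U, corr, Vt = np.linalg.svd(Ua.T @ Ub)  # singular values = canonical correlations
    return Ua @ U[:, :k], Ub @ Vt.T[:, :k], corr[:k]
```

If the test embedding is an invertible linear transform of the train embedding, all canonical correlations equal 1 and the two aligned embeddings coincide, which is the ideal case for the ‘aligned’ condition.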

In all decoding analyses, six participants who reported all four valence labels for at least 5% of the total duration across three awe-inducing clips were selected to facilitate the multi-class classification. Following the conventional heuristic, neighborhood parameters of all kNN classifiers were fixed at 15 (i.e., the nearest odd number to the square root of the input embedding length). Considering the imbalance in valence keypress labels, prediction performance was evaluated using the weighted F1 scores.
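The classifier and metric can be sketched in NumPy as below, assuming Euclidean distance for the kNN (the text does not specify the metric) and support-weighted F1 over the classes present in the true labels. As a consistency check on the heuristic: assuming 117 s of data at 500 Hz and non-padded windows, each clip yields 233 windows, and the odd integer nearest to √233 ≈ 15.3 is 15. All function names are illustrative.

```python
import numpy as np


def nearest_odd_sqrt(n):
    """Odd integer nearest to sqrt(n), the heuristic neighborhood size for kNN."""
    r = np.sqrt(n)
    lo = int(np.floor(r))
    lo = lo if lo % 2 == 1 else lo - 1
    return lo if (r - lo) <= (lo + 2 - r) else lo + 2


def knn_predict(train_x, train_y, test_x, k=15):
    """Majority vote among the k nearest training samples (Euclidean distance)."""
    d = np.linalg.norm(test_x[:, None, :] - train_x[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    votes = np.asarray(train_y)[nearest]
    return np.array([np.bincount(v).argmax() for v in votes])


def weighted_f1(y_true, y_pred):
    """Per-class F1 averaged with weights proportional to class support in y_true."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    score = 0.0
    for c in np.unique(y_true):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
        score += f1 * np.mean(y_true == c)  # weight = class frequency
    return float(score)
```

Weighting by support keeps rare labels (e.g., brief ambivalent episodes) from dominating the score, whereas a macro average would weight every valence equally.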

Last, we tested differences in weighted F1 scores between CEBRA-based and PCA/FAA-based embeddings for each of the three conditions in each decoding task. We also examined differences in weighted F1 scores across conditions for the CEBRA-based embeddings. Because F1 scores were calculated for each participant pair, violating the assumption of sample independence, we fit two linear mixed models, with condition and embedding type as the respective regressors, to explain the variance in weighted F1 scores. Using the emmeans package69, FDR-corrected post-hoc tests were then conducted on the fitted models.

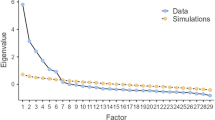

Dimensionality selection for latent neural-feeling spaces

We selected the optimal dimensionality of the latent neural-feeling spaces based on two assumptions: (1) the space has the same dimensionality across individuals and clips, and (2) despite having fewer dimensions, the space achieves decoding performance across individuals and clips comparable to that of higher-dimensional neural-feeling spaces.

The second assumption was based on the idea that generalizability, as reflected in pairwise decoding performance, should be the criterion for determining the optimal number of dimensions in dimensionality reduction techniques70, while acknowledging that such predictive performance may be overestimated as the number of dimensions increases70,71. We calculated the average weighted F1 score of each dimensionality in the ‘aligned’ condition and performed hierarchical clustering analysis to group dimensionalities with similar predictive performance. We chose the number of clusters, between 2 and 9, that yielded the highest silhouette coefficient, and selected the lowest dimension in the highest-performing cluster. Dimensions 6, 7, 8, and 9 formed the high-performance cluster for the ‘across participants’ task, and dimensions 7, 8, and 9 for the ‘across clips’ task. Thus, embeddings in the 7D neural-feeling space, the lowest dimensionality in the high-performance clusters of both tasks, were used in the following analyses.
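A sketch of the selection rule, assuming average linkage on the scalar mean F1 scores (the linkage method is not reported) and a hand-rolled silhouette with absolute-difference distances. Candidate cluster counts stop at one less than the number of dimensionalities, because the silhouette coefficient is undefined when every point forms its own cluster. The F1 values in the check below are made up for illustration.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage


def silhouette_1d(values, labels):
    """Mean silhouette coefficient for scalar values; singleton clusters score 0."""
    v = np.asarray(values, dtype=float)
    lab = np.asarray(labels)
    clusters = np.unique(lab)
    if clusters.size < 2:
        return -1.0  # undefined for a single cluster; rank it last
    d = np.abs(v[:, None] - v[None, :])
    s = np.zeros(v.size)
    for i in range(v.size):
        same = lab == lab[i]
        same[i] = False
        if not same.any():
            continue
        a = d[i, same].mean()  # mean distance within own cluster
        b = min(d[i, lab == c].mean() for c in clusters if c != lab[i])  # nearest other cluster
        s[i] = (b - a) / max(a, b)
    return float(s.mean())


def select_dimension(dims, mean_f1, method="average"):
    """Lowest dimensionality in the best-scoring cluster of mean F1 values."""
    dims = list(dims)
    scores = np.asarray(mean_f1, dtype=float)
    Z = linkage(scores[:, None], method=method)
    best_k = max(range(2, len(scores)),
                 key=lambda k: silhouette_1d(scores, fcluster(Z, t=k, criterion="maxclust")))
    labels = fcluster(Z, t=best_k, criterion="maxclust")
    top = max(np.unique(labels), key=lambda c: scores[labels == c].mean())
    return min(d for d, lab in zip(dims, labels) if lab == top)
```

Running the rule separately per task and then taking the lowest dimension shared by both high-performance clusters reproduces the choice of 7D described above.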

As a control analysis, we compared the decoding performance of frontal alpha asymmetry (FAA; a traditional handcrafted valence feature), principal component analysis embeddings (PCA; a conventional dimensionality reduction method), and the CEBRA-derived 7D embeddings for each of the six conditions (see Supplementary Note 2 for a detailed description of how FAA values and optimal PCA embeddings were extracted).
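For orientation, a sketch of FAA in its commonly used form, ln(right alpha power) − ln(left alpha power), applied per STFT window. The electrode pair (F4/F3) and the band ordering are assumptions; the exact procedure is given only in Supplementary Note 2.

```python
import numpy as np

# Channel order as listed in 'EEG data acquisition'; band order delta..gamma assumed.
CHANNELS = ["AF3", "AF4", "F7", "F3", "Fz", "F4", "F8", "FC5", "FC6", "C3",
            "Cz", "C4", "P7", "P3", "Pz", "P4", "P8", "O1", "O2"]
ALPHA = 2  # index of the alpha band in a delta/theta/alpha/beta/gamma ordering


def frontal_alpha_asymmetry(band_power, right="F4", left="F3"):
    """ln(right alpha) - ln(left alpha) per window; band_power is channels x bands x windows."""
    right_alpha = band_power[CHANNELS.index(right), ALPHA, :]
    left_alpha = band_power[CHANNELS.index(left), ALPHA, :]
    return np.log(right_alpha) - np.log(left_alpha)
```

Because alpha power is usually read as inversely related to cortical activity, positive values are conventionally interpreted as relatively greater left frontal activity.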

Assessing statistical significance of latent valence representations

From the 27 participants’ personalized 7D neural-feeling embeddings for the three awe clips, we evaluated how well CEBRA identified valence representations in the latent neural spaces. To this end, we used the silhouette coefficient of each valence category in the latent space as a summary metric of its neural representation. Because CEBRA’s contrastive learning optimizes the latent space to maximize separation between valence labels, a higher silhouette coefficient indicates that the representation of a given valence is more distinctly identifiable. Although the InfoNCE loss is the default performance metric for contrastive learning methods such as CEBRA, it is not normalized to a fixed scale and cannot be computed separately for each valence type, so we used silhouette coefficients instead.

We computed the average silhouette coefficient for each valence category. Since silhouette coefficients can be overestimated for clusters in a latent space constructed in a supervised manner, as with CEBRA, we assessed their statistical significance through a permutation test. We randomly shuffled the valence keypresses and retrained the CEBRA model with the identical hyperparameter set. The average silhouette coefficient of EEG samples for each valence group was calculated from this pseudo-embedding, and repeating this procedure 1,000 times yielded its null distribution. The P-value was defined as the proportion of pseudo-embeddings with a score higher than the observed silhouette coefficient. Thus, in each participant’s latent space for each movie clip, the silhouette coefficients of the neutral, positive, negative, and ambivalent neural representations and their statistical significance were assessed separately. All P-values were FDR corrected.
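A sketch of the per-valence silhouette coefficient with cosine distance and of the permutation P-value exactly as defined above (the share of null scores strictly higher than the observed one; a common alternative adds 1 to numerator and denominator). Producing the null scores requires retraining CEBRA on shuffled labels, which is not shown; the function names are illustrative.

```python
import numpy as np


def cosine_distances(z):
    """Pairwise cosine distances between the rows of z."""
    u = z / np.linalg.norm(z, axis=1, keepdims=True)
    return 1.0 - u @ u.T


def silhouette_by_label(z, labels):
    """Mean silhouette coefficient (cosine distance) for each valence label."""
    labels = np.asarray(labels)
    d = cosine_distances(z)
    classes = np.unique(labels)
    s = np.zeros(len(labels))
    for i, li in enumerate(labels):
        same = labels == li
        same[i] = False
        if not same.any():
            continue  # singleton: silhouette defined as 0
        a = d[i, same].mean()  # cohesion within own valence cluster
        b = min(d[i, labels == c].mean() for c in classes if c != li)  # nearest other cluster
        s[i] = (b - a) / max(a, b) if max(a, b) > 0 else 0.0
    return {c: float(s[labels == c].mean()) for c in classes}


def permutation_p(observed, null_scores):
    """Share of label-shuffled (retrained) scores strictly higher than the observed score."""
    return float(np.mean(np.asarray(null_scores) > observed))
```

Because the null embeddings are retrained on shuffled labels, the supervised inflation of silhouette values is present in both the observed and the null scores, which is why the comparison is informative.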

Quantifying ‘cortical distinctiveness’ of valence in the brain

To quantify the distinctiveness of ambivalence-related neural representations, we defined a metric termed the ‘cortical distinctiveness’ of valence cluster k, \(\phi_k\), as the average cosine distance between the reference cluster k and each of the other clusters. We used cosine distance rather than other conventional metrics (e.g., Euclidean distance) because latent CEBRA embeddings are distributed on a hypersphere rather than in Euclidean space. Our primary cluster distance was the average cluster distance (i.e., the mean cosine distance between every point in cluster k and every point in the other cluster). In addition to the ambivalent-labeled embeddings, we computed cortical distinctiveness values for the other valence groups. As a sensitivity check, we also calculated cluster distances using the medoid distance approach, which measures the cosine distance between the medoid samples of two clusters; the medoid of a cluster is the sample with the smallest average cosine distance to the other samples in that cluster. Consequently, when a participant reported \(n\) valence categories for a specific clip via keypress, a total of \(2n\) cortical distinctiveness values (\(n\) per distance method) were computed from that space. To assess the association between these metrics and subjective awe intensity, we fitted linear mixed models and AutoML-based multivariate predictive models using the same approach as in the self-report analysis (see ‘Behavioral analysis’ section).
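A sketch of \(\phi_k\) under both distance methods, following the definitions in the paragraph above: \(\phi_k\) is the mean, over all other valence clusters, of the cluster-to-cluster cosine distance, where that distance is either the mean over all cross-cluster point pairs (‘average’) or the distance between the two medoids (‘medoid’). The function names are illustrative.

```python
import numpy as np


def _cos_dist(a, b):
    """Cosine distances between rows of a and rows of b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return 1.0 - a @ b.T


def medoid(points):
    """Sample with the smallest mean cosine distance to the other samples of its cluster."""
    return points[_cos_dist(points, points).mean(axis=1).argmin()]


def cortical_distinctiveness(z, labels, ref, method="average"):
    """phi_ref: mean cosine distance between cluster `ref` and each other valence cluster."""
    labels = np.asarray(labels)
    ref_pts = z[labels == ref]
    dists = []
    for c in np.unique(labels):
        if c == ref:
            continue
        other = z[labels == c]
        if method == "average":  # mean over all cross-cluster point pairs
            dists.append(_cos_dist(ref_pts, other).mean())
        else:  # "medoid": distance between the two cluster medoids
            dists.append(_cos_dist(medoid(ref_pts)[None], medoid(other)[None])[0, 0])
    return float(np.mean(dists))
```

Calling this once per reported valence and per method gives the \(2n\) values per participant-clip space mentioned above.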

Hidden Markov modeling (HMM)

Lastly, we employed HMM to identify EEG features related to distinguishing between four different valence types during awe-inducing movie watching. HMM was used to infer hidden neural states from EEG signals, and we hypothesized that if the state transitions of a specific EEG feature significantly aligned with self-reported valence transitions in the valence keypress, that feature would encode distinct representations for each valence type.

To test this, we fitted a separate HMM to each participant’s EEG features for each video and compared its state transitions with that participant’s self-reported valence keypresses. The analysis was conducted on six participants who reported all valence labels for at least 5% of the total runtime across the three awe-inducing clips. The number of HMM states was informed by the number of transitions observed in the self-reported valence keypresses. We defined a neural transition as a “match” if its timepoint fell within ±3 seconds of a self-reported valence transition. The match rate was then calculated as the proportion of neural transitions that were matched, serving as a measure of how well EEG state transitions tracked subjective valence shifts.
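The match-rate definition can be sketched as follows. The ±3 s window comes from the text. Three details are assumptions: the window boundary is treated as inclusive, both the HMM state sequence and the keypress sequence are taken to be sampled at a common rate `sfreq`, and the helper names are hypothetical.

```python
import numpy as np


def transition_times(states, sfreq):
    """Times (s) at which a discrete state sequence changes value.
    sfreq is an assumed common sampling rate for HMM states and keypresses."""
    states = np.asarray(states)
    idx = np.flatnonzero(states[1:] != states[:-1]) + 1
    return idx / sfreq


def match_rate(neural_tp, reported_tp, tolerance=3.0):
    """Proportion of neural (HMM) transitions lying within +/- tolerance seconds
    of at least one self-reported valence transition (inclusive boundary assumed)."""
    neural = np.asarray(neural_tp, dtype=float)
    reported = np.asarray(reported_tp, dtype=float)
    if neural.size == 0:
        return float("nan")  # undefined without neural transitions
    if reported.size == 0:
        return 0.0  # nothing to match against
    gaps = np.abs(neural[:, None] - reported[None, :])  # all pairwise time gaps
    return float(np.mean(gaps.min(axis=1) <= tolerance))
```

The denominator is the number of neural transitions, as the text specifies. A model that places many spurious boundaries is therefore penalized, while missed self-reported transitions do not lower the score.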

To assess the statistical significance of these match rates, we ran a permutation test with 1,000 iterations. Given the substantial variability in the number of valence transitions reported across movies (SP: min = 2, max = 17; CI: min = 0, max = 19; MO: min = 3, max = 49), we computed P-values from the mean difference between observed and null match rates rather than from raw match rates (see Supplementary Note 3 for details). This approach, informed by previous literature72, accounts for inter-subject differences in the frequency of valence transitions, so that the observed neural-affective alignment is not biased by individual variability.
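The mean-difference statistic, as described in the Fig. 6 caption, can be sketched as follows. How the null match rates themselves are generated is given in Supplementary Note 3 and is not reproduced here. The function therefore assumes they are supplied as an array with one row per participant-clip pair and one column per permutation.

```python
import numpy as np


def mean_difference_pvalue(observed, null):
    """observed: (n_pairs,) observed match rates, one per participant-clip pair.
    null: (n_pairs, n_perm) null match rates for the same pairs (generation not shown).
    Each pair is centred on its own null mean, so pairs with many or few
    transitions contribute on a comparable scale."""
    observed = np.asarray(observed, dtype=float)
    null = np.asarray(null, dtype=float)
    null_mean = null.mean(axis=1, keepdims=True)
    obs_stat = float(np.mean(observed - null_mean[:, 0]))  # averaged over pairs
    null_stats = (null - null_mean).mean(axis=0)  # one value per permutation
    p = float(np.mean(null_stats > obs_stat))  # proportion of null samples above observed
    return obs_stat, p
```

Centring on each pair's own null mean removes differences in chance-level match rates. Such differences arise because a clip with many reported transitions is easier to match by chance than one with few.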

The analysis was conducted at two levels: frequency band and channel. At the frequency-band level, we aggregated the features of all 19 EEG channels for each frequency band when fitting the HMM, allowing us to examine valence-related distinctions in frequency-specific neural dynamics. At the channel level, we grouped the features of all five frequency bands within each channel, enabling us to identify which brain regions exhibit valence-specific state transitions and how their frequency components contribute to affective differentiation. This two-tiered approach provides a more comprehensive understanding of how neural state transitions track affective shifts during awe. All HMM analyses were performed using the BrainIAK package73 (version 0.12).
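The two feature groupings can be sketched as follows. The sketch assumes the band powers are stored as a `(n_times, 19, 5)` array (time × channel × band), with bands ordered as listed in the Results. This layout and the helper names are hypothetical, and the HMM fitting call itself is omitted.

```python
import numpy as np

# Band order as listed in the Results; the array layout is an assumption.
BANDS = ["delta", "theta", "alpha", "beta", "gamma"]


def band_level_features(power, band):
    """power: (n_times, 19, 5) band-power array (time x channel x band).
    Returns (n_times, 19): every channel's power in one frequency band."""
    return power[:, :, BANDS.index(band)]


def channel_level_features(power, channel_idx):
    """Returns (n_times, 5): all five band powers of one channel."""
    return power[:, channel_idx, :]
```

Each returned matrix is a time-by-feature array. One HMM would be fitted per matrix, giving five band-level models and 19 channel-level models per participant and clip.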

Bayes factor estimation to test confounding effects

We tested three potential sources of confounding throughout the study. First, we examined whether unpleasantness due to motion sickness during viewing could explain participants’ AWE-S scores or the duration and intensity of ambivalent feelings. Second, we tested whether the silhouette score of embedding clusters labeled as ambivalent in each individual’s latent neural-feeling space was confounded by the cluster size. Third, we tested whether AWE-S ratings for each clip could be alternatively explained by the frontal alpha asymmetry (FAA) metric instead of cortical distinctiveness.

For all tests, we used linear mixed models with each suspected confounder as a fixed effect and random intercepts for participants and clips. To assess the fixed effects, we used both frequentist inference and Bayes factor analysis, the latter to evaluate support for the null hypothesis (i.e., that the confounder has no explanatory power). The Bayes factor in favor of the null model (BF01) was estimated using the BIC approximation via the BayesFactor package74, comparing each full model with a corresponding null model containing only the intercepts.
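The BIC approximation can be sketched with the standard relation \(\mathrm{BF}_{01} \approx \exp((\mathrm{BIC}_{\mathrm{full}} - \mathrm{BIC}_{\mathrm{null}})/2)\). This is a generic illustration, not the BayesFactor package's implementation. For fixed-effect comparisons, the log-likelihoods are assumed to come from maximum-likelihood (not REML) fits.

```python
import math


def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion: k ln(n) - 2 ln L (ML fit assumed)."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood


def bf01_from_bic(bic_full, bic_null):
    """BIC approximation to the Bayes factor favoring the null model."""
    return math.exp((bic_full - bic_null) / 2.0)
```

Under this approximation, the BF01 of 3.940 reported for motion sickness and AWE-S corresponds to the full model's BIC exceeding the null model's by about \(2\ln 3.940 \approx 2.74\).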

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Results

Awe is associated with longer and stronger ambivalent feelings, but not with univalent ones

We first explored participants’ feeling dynamics reported by keypress. The feeling dynamics showed idiosyncratic temporal patterns (see Fig. 2a), but they were also significantly associated with visual and acoustic features known to predict perceivers’ affective responses (e.g., color hue75,76, brightness77, and loudness78; see Supplementary Table 2). These results validate our real-time feeling rating paradigm, showing that individual feeling ratings are systematically linked to affect-related sensory inputs despite their temporal idiosyncrasy.

a feeling dynamics self-reported by real-time keypress from 43 participants while watching each VR stimulus. b AWE-S ratings evaluated post-trial for each clip, reflecting the intensity of awe. c the duration (left) and intensity (right) of ambivalent feelings for each clip, comparing responses between awe-inducing (purple) and control (gray) clips. Results are based on 43 participants’ self-reports for all VR clips. All statistical differences were tested with paired two-sided t-tests. *PFDR < 0.05; **PFDR < 0.01; ***PFDR < 0.001.

Next, we performed a manipulation check to test whether awe-inducing clips were associated with higher awe and ambivalence-related metrics. Participants reported significantly higher AWE-S ratings for all awe clips than for the control (SP vs. PA: t(42) = 12.043, 95% CI = [1.333, 1.869], PFDR = 1 × 10−14, d = 1.837; CI vs. PA: t(42) = 9.789, 95% CI = [1.041, 1.581], PFDR = 3 × 10−12, d = 1.493; MO vs. PA: t(42) = 9.000, 95% CI = [0.940, 1.483], PFDR = 2 × 10−11, d = 1.373; see Fig. 2b). The three awe clips also led to significantly longer and stronger ambivalent feelings than the control (SP vs. PA: tduration(42) = 3.685, 95% CIduration = [0.068, 0.232], Pduration/FDR = 0.001, dduration = 0.562, tintensity(42) = 2.957, 95% CIintensity = [0.126, 0.665], Pintensity/FDR = 0.005, dintensity = 0.451; CI vs. PA: tduration(42) = 3.372, 95% CIduration = [0.058, 0.230], Pduration/FDR = 0.002, dduration = 0.514, tintensity(42) = 2.946, 95% CIintensity = [0.132, 0.705], Pintensity/FDR = 0.005, dintensity = 0.449; MO vs. PA: tduration(42) = 5.179, 95% CIduration = [0.128, 0.292], Pduration/FDR = 2 × 10−5, dduration = 0.790, tintensity(42) = 5.118, 95% CIintensity = [0.451, 1.038], Pintensity/FDR = 2 × 10−5, dintensity = 0.780; see Fig. 2c). In contrast, behavioral metrics of positive and negative feelings did not differ consistently between conditions, except for the duration of positive feelings and arousal (see Supplementary Table 3).
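The reported effect sizes appear consistent with Cohen's \(d_z\) for paired samples. This is an inference from the numbers, not something the text states. The statistic satisfies \(d_z = t/\sqrt{n}\), so for example \(12.043/\sqrt{43} \approx 1.837\) and \(5.179/\sqrt{43} \approx 0.790\). Small third-decimal discrepancies (e.g., 9.000 vs. 1.373) can arise because t is itself rounded. The sketch below illustrates the computation under that assumption and omits the confidence-interval and FDR steps.

```python
import numpy as np


def paired_t_dz(x, y):
    """Paired t statistic and Cohen's d_z (mean difference / SD of differences)."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    n = diff.size
    sd = diff.std(ddof=1)  # sample SD of the paired differences
    t = diff.mean() / (sd / np.sqrt(n))
    dz = diff.mean() / sd  # equals t / sqrt(n) by construction
    return float(t), float(dz)
```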

These results suggest that awe is more closely related to ambivalent feelings than to purely pleasant or unpleasant ones.

Ambivalent feelings emerge as salient predictors of awe

We tested the predictive power of ambivalence-related metrics on the subjective intensity of awe. Before assessing the predictive relationship between ambivalent feelings and awe, we first tested whether awe’s two appraisal dimensions, vastness and need for accommodation, are related to mutually opposing feelings. To this end, we fitted mixed-effects linear regression models predicting each clip’s self-reported intensity of positive and negative affect, using the averaged AWE-S scores for the ‘vastness’ and ‘accommodation’ factors as regressors. The vastness appraisal showed a significant positive fixed effect on positive affect (\(\beta\) = 0.247, P = 2 × 10−4, 95% CI = [0.122, 0.371]) but no significant fixed effect on negative affect (\(\beta\) = 0.007, P = 0.907, 95% CI = [−0.118, 0.133]). In contrast, the accommodation appraisal showed a significant positive fixed effect on negative affect (\(\beta\) = 0.234, P = 0.002, 95% CI = [0.086, 0.382]) and a significant negative fixed effect on positive affect (\(\beta\) = −0.394, P = 4 × 10−7, 95% CI = [−0.541, −0.248]). These findings support our conceptual model, suggesting that each appraisal is linked to an opposite affect. On this view, awe is better characterized as an ambivalent feeling than as a univalent one.

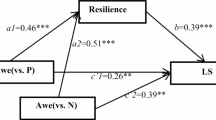

Next, we evaluated whether awe is better characterized by ambivalent affect than by univalent affect. The linear mixed-effects model analysis revealed that only the duration and intensity of ambivalent feelings and the intensity of positive feelings showed significant fixed effects (duration of ambivalent feelings: \(\beta\) = 0.565, P = 0.039, 95% CI = [0.033, 1.097]; intensity of ambivalent feelings: \(\beta\) = 0.220, P = 0.001, 95% CI = [0.090, 0.351]; intensity of positive feelings: \(\beta\) = 0.094, P = 0.035, 95% CI = [0.007, 0.180]; see Fig. 3a). This implies that the intensity of awe is primarily explained by the self-reported metrics of ambivalent feelings.

a beta coefficients of each behavioral metric in the linear mixed-effects models. Error bars denote 95% confidence intervals of the fixed-effect estimates. Purple bars show the estimates of ambivalence-related features. Bolded statistics indicate statistically significant results at P < 0.05. b predictive performance of the AutoML-based best model (GBM) and a linear ridge regression model (GLM). c variable importance values of behavioral metrics in the GBM-based prediction. Importance values were scaled from 0 to 1 relative to the value for the duration of ambivalent feelings. Purple bars show the importance of ambivalence-related features. d Shapley values of behavioral metrics in the GBM-based prediction. Results are based on 43 participants’ self-reports for all VR clips.

In the machine learning-based multivariate predictive modeling, our best model, a gradient boosting machine (GBM), showed better predictive performance than a linear ridge regression model (see Fig. 3b). In this model, the duration of ambivalent feelings had a higher variable importance value than any other behavioral metric (see Fig. 3c). Unexpectedly, arousal and the PANAS positive affect score (i.e., baseline positive mood) exhibited higher variable importance values than the intensity of ambivalent feelings (see Fig. 3c). In terms of Shapley values, both the duration and intensity of ambivalent feelings showed high predictive contributions, and arousal also consistently showed prominent importance (see Fig. 3d).

Last, we tested whether motion sickness, a common discomfort in VR environments, may have confounded the relationship between awe and ambivalent feelings. To do so, we fitted linear mixed-effects models predicting the intensity and duration of ambivalent feelings and AWE-S scores, with motion sickness scores as a regressor. Motion sickness scores did not significantly predict AWE-S scores or the intensity and duration of ambivalent feelings (AWE-S: \(\beta\) = 0.041, P = 0.243, 95% CI = [−0.028, 0.111], BF01 = 3.940; duration of ambivalent feeling: \(\beta\) = 0.009, P = 0.331, 95% CI = [−0.009, 0.027], BF01 = 13.690; intensity of ambivalent feeling: \(\beta\) = 0.048, P = 0.208, 95% CI = [−0.027, 0.123], BF01 = 2.910). This suggests that the predictive relationship between ambivalent feelings and awe ratings was not confounded by motion sickness.

Our univariate and multivariate predictive analyses provide compelling evidence that ambivalent feelings are significantly related to intense awe. They also suggest that heightened arousal and baseline positive mood contribute to intense awe, possibly through interactions with ambivalent feelings.

Aligned latent neural-feeling spaces share geometric structures of valence representation across individuals and stimuli

To evaluate the generalizability and explore the optimal dimensionality of the personalized latent neural-feeling spaces identified by CEBRA, we performed pairwise decoding analyses. Because the optimal dimensionality was unknown, we searched 1- to 9-dimensional latent embeddings for the lowest dimensionality whose decoding performance was comparable to that of higher-dimensional embeddings (see ‘Methods’ section). Hierarchical clustering analysis revealed that 6-, 7-, 8-, and 9-dimensional embeddings decoded better than embeddings of other dimensionalities in the ‘across participants’ task, and 7-, 8-, and 9-dimensional embeddings did so in the ‘across clips’ task (see Fig. 4a). Because 7D was the lowest dimensionality in the better-performing cluster for both tasks, we chose it as the optimal dimensionality of the latent neural-feeling spaces. Applying the same framework to PCA-derived spaces, our baseline feature space for decoding tasks, identified 6D as their optimal dimensionality (see Supplementary Fig. 2). We therefore used the decoding performance of the 6D PCA embedding and of the FAA value (i.e., unidimensional) as comparative baselines for the 7D CEBRA embedding.
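The selection rule can be sketched as follows, under assumptions not stated in the text. The sketch clusters the mean decoding score of each dimensionality into two groups with single linkage, which for one-dimensional values reduces to cutting at the largest gap between sorted scores. It then takes the smallest dimensionality that falls in the top cluster of every task. The actual linkage method and clustering inputs may differ.

```python
import numpy as np


def top_cluster_dims(mean_scores):
    """mean_scores: dict {dimensionality: mean weighted F1}.
    Two-cluster single-linkage split (assumed); in 1-D this is a cut at the
    largest gap between sorted scores. Returns the higher-scoring cluster."""
    dims = np.array(sorted(mean_scores, key=mean_scores.get))
    vals = np.array([mean_scores[d] for d in dims])
    cut = int(np.argmax(np.diff(vals))) + 1  # first index above the largest gap
    return set(dims[cut:].tolist())


def select_dimensionality(scores_by_task):
    """Smallest dimensionality in the top cluster of every decoding task."""
    common = set.intersection(*(top_cluster_dims(s) for s in scores_by_task.values()))
    return min(common)
```

Consider illustrative scores (not the study's data) in which 6-9D form the top cluster across participants and 7-9D form it across clips. For that pattern the rule returns 7, matching the choice described above.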

a dimensionality selection for CEBRA-driven latent neural-feeling spaces. Hierarchical clustering analysis grouped the dimensionalities into two clusters based on their decoding performance in the aligned condition. Purple markers indicate dimensionalities in the better-performing cluster. b decoding performance of latent embeddings derived from CEBRA, PCA, and FAA across three conditions. Black asterisks show decoding performance differences between analytic frameworks in each condition, while purple asterisks indicate how CEBRA embeddings perform differently across conditions. Error bars represent 95% confidence intervals of weighted F1 scores from pairwise decoding analysis. For ‘across participants’ tasks, a total of 90 decoding scores (i.e., all possible participant pairings × number of clips) were used, while for ‘across clips’ tasks, a total of 36 scores (i.e., all possible clip pairings × number of participants) were included, as shown in the heatmap on the right of each plot. All statistical differences were tested with post hoc tests of linear mixed models including condition or embedding type as a regressor. Results are based on six participants’ data in three awe-inducing trials. *PFDR < 0.05; **PFDR < 0.01; ***PFDR < 0.001.

In the ‘across participants’ decoding task, CEBRA-based embeddings predicted others’ feeling dynamics in the same clip significantly better than random chance regardless of their alignment (aligned vs. random: t(718) = 7.829, 95% CI = [0.080, 0.133], PFDR = 5 × 10−14, d = 0.674; not-aligned vs. random: t(718) = 3.297, 95% CI = [0.018, 0.072], PFDR = 0.001, d = 0.284; see Fig. 4b). Nevertheless, aligned embeddings significantly outperformed not-aligned ones (aligned vs. not-aligned: t(718) = 4.532, 95% CI = [0.035, 0.088], PFDR = 1 × 10−5, d = 0.390). CEBRA embeddings also predicted more accurately than PCA- and FAA-based embeddings in the aligned and not-aligned conditions, but not in the random condition (see Fig. 4b and Supplementary Table 4 for detailed statistics).

In the ‘across clips’ task, only aligned CEBRA embeddings predicted feeling dynamics across clips significantly better than random (aligned vs. random: t(286) = 2.882, 95% CI = [0.017, 0.089], PFDR = 0.007, d = 0.392; not-aligned vs. random: t(286) = 0.014, 95% CI = [−0.036, 0.036], PFDR = 0.989, d = 0.002; see Fig. 4b). Aligned embeddings also outperformed not-aligned ones (aligned vs. not-aligned: t(286) = 2.868, 95% CI = [0.017, 0.088], PFDR = 0.007, d = 0.390). In the aligned condition, CEBRA embeddings predicted feeling dynamics more accurately than PCA- and FAA-based embeddings, whereas this advantage was absent in the random and not-aligned conditions (see Fig. 4b and Supplementary Table 4 for detailed statistics).
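The pairwise scheme (train on one participant or clip, test on another, score with weighted F1) can be sketched as follows. The decoder is an assumption: a nearest-centroid classifier on the unit hypersphere stands in for whichever decoder the Methods specify. The embedding alignment step is not reproduced.

```python
import numpy as np


def _unit(Z):
    return Z / np.linalg.norm(Z, axis=1, keepdims=True)


def fit_centroids(Z, y):
    """Nearest-centroid decoder on the unit hypersphere (an assumed stand-in)."""
    y = np.asarray(y)
    classes = np.unique(y)
    centroids = np.stack([_unit(Z[y == c]).mean(axis=0) for c in classes])
    return classes, _unit(centroids)


def predict(model, Z):
    classes, centroids = model
    return classes[np.argmax(_unit(Z) @ centroids.T, axis=1)]  # highest cosine similarity


def weighted_f1(y_true, y_pred):
    """Support-weighted mean of per-class F1 scores."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    classes, support = np.unique(y_true, return_counts=True)
    total = 0.0
    for c, n in zip(classes, support):
        tp = np.sum((y_true == c) & (y_pred == c))
        fp = np.sum((y_true != c) & (y_pred == c))
        denom = 2 * tp + fp + (n - tp)  # n - tp = false negatives
        total += n * (2 * tp / denom if denom else 0.0)
    return float(total / support.sum())


def pairwise_decoding(embeddings, labels):
    """Train on each source, test on every other target; returns {(src, tgt): F1}."""
    scores = {}
    for src in embeddings:
        model = fit_centroids(embeddings[src], labels[src])
        for tgt in embeddings:
            if tgt != src:
                scores[(src, tgt)] = weighted_f1(labels[tgt], predict(model, embeddings[tgt]))
    return scores
```

Iterating over ordered pairs yields 6 × 5 = 30 participant pairings per clip, consistent with the 90 ‘across participants’ scores in the Fig. 4 caption. Likewise, 3 × 2 = 6 clip pairings per participant give the 36 ‘across clips’ scores.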

These findings suggest that aligned CEBRA-based latent neural-feeling spaces capture a shared structure of valence representations, including ambivalent feelings, across individuals and stimuli, which could not be fully identified by conventional approaches such as PCA or FAA.

The more distinctively ambivalent feelings are represented in the cortices, the more saliently individuals report awe

We observed that the silhouette coefficients of ambivalent clusters in the latent neural-feeling spaces varied widely across participants (see Fig. 5a). In contrast, positive and negative valence-related embeddings showed relatively consistent, significant silhouette coefficients (see Supplementary Fig. 3). Specifically, among all participant-video pairs, 70.0% and 77.9% showed significant silhouette coefficients for positive and negative feelings, respectively, whereas only 58.0% did so for ambivalent feelings. This individual variability in ambivalence-related representations suggests that the integration of positive and negative affect during awe may manifest differently across individuals. To check whether cluster size confounded the statistical significance of the silhouette coefficients, we tested whether the number of ambivalence-labeled EEG samples in the cluster (i.e., the duration of ambivalent feelings) predicted the P-values of the silhouette coefficients. The cluster size of ambivalence-labeled embeddings did not show a significant beta coefficient (\(\beta\) = 0.374, P = 0.066, 95% CI = [−0.019, 0.768], BF01 = 1.570). This suggests that the observed variability in silhouette coefficients for ambivalence may not be merely due to differences in the duration of reported ambivalent feelings.

a silhouette coefficients of the ambivalent-feeling cluster in each participant-clip’s latent neural-feeling space. Color intensity indicates the statistical significance of the silhouette coefficients. b concept of ‘cortical distinctiveness’ for the reference cluster k, \({\phi }_{k}\). Cortical distinctiveness is defined as the average of the cosine distances between the reference cluster and the others, \({\phi }_{k}=\frac{1}{N}{\sum }_{i=1}^{N}{d}_{\cos }({c}_{k},{c}_{i})\). In the equation, \(N\) is the number of other clusters, \({c}_{k}\) is the reference cluster, \({c}_{i}\) is the i-th of the other clusters, and \({d}_{\cos }\) is the cosine distance. As a sensitivity check, we calculated \({d}_{\cos }\) using both average and medoid distance indices. c explanatory power of each valence cluster’s cortical distinctiveness value in the linear mixed-effects models. Error bars denote 95% confidence intervals of the fixed-effect estimates. Purple bars show the estimates of ambivalence-related features. Bolded statistics indicate statistically significant results at P < 0.05. d performance gain observed when the cortical distinctiveness of ambivalent feelings was added to the behavioral prediction model. e variable importance values of the cortical distinctiveness of ambivalent feelings and other behavioral features in the GBM-based prediction. Importance values were scaled from 0 to 1 relative to the value for the cortical distinctiveness of ambivalent feelings. Purple bars show the importance of ambivalence-related features. Results are based on 27 participants’ data in three awe-inducing trials.

We then tested whether individual differences in the neural representation of ambivalent feelings could account for the variance in awe ratings. To quantify how distinct the representation of ambivalent affect is from the patterns of purely positive or negative feelings, we calculated the ‘cortical distinctiveness’ metric based on cosine distance. Specifically, this metric for valence label k, \({\phi }_{k}\), indicates how distinguishable the representations in cluster k are from those of the other valence clusters. It is computed as the average cosine distance to those clusters (see Fig. 5b and ‘Methods’ section). We calculated the cortical distinctiveness value of each reported valence category for each participant-clip pair. We then constructed four linear mixed-effects models, each including the \(\phi\) value of one valence cluster as a fixed effect and random intercepts for participants and clips.

We observed that \({\phi }_{{ambivalent}}\) significantly predicted awe intensity (\(\beta\) = 0.860, 95% CI = [0.241, 1.478], P = 0.008; see Fig. 5c), but the other valence types’ \(\phi\) values did not. The predictive power of \({\phi }_{{ambivalent}}\) persisted even when using medoid cluster distance for calculating this metric (\(\beta\) = 0.697, 95% CI = [0.152, 1.241], P = 0.014; see Fig. 5c), demonstrating the robustness of the findings to the specific method of calculating cortical distinctiveness. As a control analysis, we conducted the same analysis with time-averaged FAA values and found that FAA did not predict awe ratings significantly (\(\beta\) = 0.000, 95% CI = [−0.004, 0.003], P = 0.852, BF01 = 8.780), highlighting the specificity of the relationship between awe and the neural representation of ambivalence.

In machine learning-based predictive modeling, adding \({\phi }_{{ambivalent}}\) to a model predicting awe ratings from 14 behavioral variables improved the \({R}^{2}\) value by 15.7% (see Fig. 5d). This supports a meaningful contribution of the distinctiveness of ambivalence representations to subjective awe ratings. Furthermore, \({\phi }_{{ambivalent}}\) showed higher variable importance than the behavioral metrics in the predictive model (see Fig. 5e). This suggests that the cortical distinctiveness of neural representations of ambivalent feelings may be a key factor underlying individual differences in awe ratings. Adding \({\phi }_{{ambivalent}}\) to the behavioral predictive model also increased the variable importance of the DPES trait awe score, which had low importance in the model without \({\phi }_{{ambivalent}}\).

These results indicate that individual differences in the distinctiveness of the cortical representation of ambivalent feelings account for awe intensity, together with its interplay with baseline psychological factors such as trait awe and positive mood.

Power in multiple frequency bands and in frontoparietal channels distinguishes affective states during awe

Finally, to identify which EEG features exhibit distinct patterns corresponding to each valent state during awe, we applied hidden Markov modeling (HMM) as an interpretable complement to the black-box CEBRA model. We calculated the match rate between neural transition timepoints identified by the HMM and self-reported feeling transition timepoints from valence keypresses. This allowed us to investigate which features’ temporal dynamics consistently aligned with feeling transitions (see ‘Methods’ for details).

First, at the band-frequency level, power in all frequency bands except for beta showed significant match rates with feeling transitions across all channels (delta: match rate = 64.9%, P = 0.025; theta: match rate = 64.5%, P = 0.025; alpha: match rate = 65.4%, P = 0.037; beta: match rate = 64.2%, P = 0.069; gamma: match rate = 64.0%, P = 0.047; see Fig. 6a).

a band-frequency level results. The black horizontal bars represent the mean difference between the observed match rate with feeling dynamics and the mean of null match rates, averaged across participants and clip stimuli. The null distribution consists of the mean differences between each null match rate and its corresponding null distribution mean, also averaged across participants and video types (see ‘Methods’ for details). The P-value is defined as the proportion of null samples with a mean difference greater than the observed match rate’s mean difference. Features shaded in purple indicate significant match rates at P < 0.05. b channel level results. Results are based on data from six participants across three awe-inducing trials. *P < 0.05; **P < 0.01; ***P < 0.001.

Second, at the channel level, AF4, FC5, Fz, and Pz channels exhibited significant match rates with feeling transitions across all frequency bands (AF4: match rate = 34.8%, P = 0.014; FC5: match rate = 32.9%, P = 0.024; Fz: match rate = 35.5%, P = 0.002; Pz: match rate = 33.6%, P = 0.006; see Fig. 6b and Supplementary Table 5 for statistics of the other channels).

Overall, the band-level analysis revealed that affective state transitions were broadly reflected in oscillatory power across multiple frequency bands, while the channel-level analysis identified significant neural transitions in frontal and parietal channels. These results suggest that affective transitions are encoded both through widespread frequency bandpowers and localized cortical dynamics.

Discussion

Our study reveals that awe is primarily characterized as an ambivalent affect. This challenges traditional unidimensional models of valence as accounts of complex human emotions and feelings. By combining immersive VR, EEG, a multidimensional valence scale, and deep representational learning, we demonstrate that self-reported awe intensity is accurately predicted by ambivalence-related behavioral and neural features. In particular, the duration and intensity of ambivalent feelings were among the most important predictors of awe intensity in our multivariate predictive model, while univalent feeling metrics contributed less. In the personalized latent neural-feeling space identified through a deep dimensionality reduction technique, neural representations of ambivalent feelings exhibited geometric patterns distinct from those of positive and negative feelings. The degree of this distinction, the ‘cortical distinctiveness’ of ambivalent feelings, outperformed behavioral metrics of ambivalence in predicting awe intensity. Differentiation among valent states was associated mainly with power features across multiple frequency bands in frontal and parietal EEG channels. Our findings establish a direct link between the neural signature of blended feelings and the phenomenological aspects of awe, offering insights into long-standing debates about the neural systems of ambivalent affect in affective neuroscience. This approach not only illuminates the intricate nature of awe but also provides a comprehensive framework for understanding and quantifying the neural basis of complex human emotions beyond awe.

Our finding that the duration and intensity of ambivalent feelings accurately predict subjective awe intensity supports the hypothesis that awe is inherently an ambivalent emotion. This finding aligns with previous studies in psychology. For example, despite some cultural differences, both Western and Asian populations rated various awe-inducing stimuli as having stronger ambivalence than those evoking happiness or fear24,25,79. This consistency across cultures and stimuli suggests that the ambivalent affect of awe may be a fundamental feature of this emotion.

Our analysis of ambivalence-related neural representations during awe revealed that aligned embeddings of personalized latent neural-feeling spaces significantly decoded feeling dynamics across participants and stimuli, outperforming traditional methods such as PCA and FAA. The advantage over FAA is consistent with its known limitations as a valence index80,81,82,83. The advantage over PCA is consistent with CEBRA going beyond PCA’s linear and unsupervised approach. This result implies that deep representational learning techniques can better capture generalizable patterns of valence representation by incorporating nonlinear relationships between brain signals and affective valence33,47,48 and the heterogeneity of valence representation across individuals and stimuli49,50,51. Moreover, this personalized model fitting allowed us to explore individual differences in valence representation and their relationship with awe.

Our investigation of individual variability in ambivalence representation and awe offers an opportunity to reconcile conflicting perspectives on the neural correlates of ambivalent affect. Our latent neural space analysis revealed distinct neural representations for ambivalent feelings, consistent with studies indicating that ambivalent affect has unique neural patterns in cortical regions72,84. This finding seems to directly challenge the constructivist view that ambivalent affect lacks a specific neural system. Nevertheless, the idiosyncrasy we found in these neural representations may integrate the opposing arguments into a single framework. We observed individual variability in how cohesive the neural representations of ambivalent feelings are in the latent neural space. Paradoxically, this supports the constructivist perspective that psychological factors (e.g., attentional focus or emotional granularity) shape how mixed affect is experienced and processed85. Although we did not explicitly test the source of this variability in this study, our findings potentially bridge these divergent views in affective neuroscience, demonstrating the nuanced nature of ambivalence processing in the brain.

Another key finding of our study is that the cortical distinctiveness of ambivalent feelings was a stronger predictor of awe than behavioral indexes such as the intensity or duration of ambivalent feelings. Since this metric quantifies the geometric relationship between neural representations of ambivalent feelings and other affective states, our results reveal a multilayered relationship between ambivalence and awe that would be invisible to traditional analyses focused on activation magnitude within specific regions or networks. One possible interpretation is that the meaning-making process inherent to awe – particularly facilitated by self-distancing86,87 – may be reflected in the heightened cortical distinctiveness of ambivalent feelings. Indeed, self-distancing has been identified as a core cognitive appraisal that integrates conflicting affects to generate unique meaning across diverse contexts, including daily emotional experiences, art appreciation, and resilience from adversity88,89,90,91. Through self-distancing, the coexisting positive and negative feelings elicited by the perceived vastness and need for accommodation inherent to awe would converge into a distinct affective feeling that cannot be reduced to a sum of positive and negative feelings (i.e., exhibiting qualitatively distinct neural representations).

Our speculation that self-distancing links the cortical distinctiveness of ambivalent feelings to the subjective experience of awe can be extended into a hypothesis about how awe results in two distinct self-related consequences: self-diminishment and self-transcendence. Self-diminishment refers to a perceived reduction in the size or significance of the self19,92,93. Self-transcendence denotes a shift of attention away from the self that, unlike self-diminishment, can foster active ‘authentic self-pursuit’ through connection with something greater86,94. A recent integrative framework suggests that these experiences are not mutually exclusive despite their differences: awe may simultaneously trigger self-diminishment while encouraging self-transcendence86. In this view, self-distancing is the core appraisal mechanism that enables both consequences. It reduces self-importance while broadening awareness of the self’s wider context, providing a more expansive, non-egocentric perspective. Thus, the ambivalent feeling of awe via self-distancing may underlie awe’s psychological benefits, a proposition that calls for further empirical testing.

Hidden Markov model analyses suggest that the temporal dynamics of electrophysiological power across various frequency bands are closely associated with differentiating the valence of affective feelings self-reported during awe. Previous EEG studies that conducted frequency-level analyses support our finding that a broad range of frequency bands is systematically involved in affective introspection and subsequent valence differentiation, processes likely engaged by our keypress-based valence report paradigm. Audiovisual inputs during movie viewing may contribute to the formation of an initial affective tone, with saliency detection95,96,97 and early sensory–affective transformation77,98 likely mediated by delta oscillations. Subsequently, during the evaluation of this emotional tone and the formation of valence, theta waves may facilitate the retrieval of emotional memories and knowledge95,99,100,101,102. Meanwhile, alpha waves may support efficient introspection by gating attention to suppress extraneous sensory inputs and memories95,103,104,105. The distinct valence representation patterns shaped by these processes may be reflected in state transitions of gamma waves. These transitions likely reflect the integration of dispersed affective signals into a global valence representation106,107,108. Beta oscillations play a primary role in motor planning109,110,111 and showed a non-significant relationship with valence differentiation in our study, so the link between beta activity and valence differentiation requires further examination.

At the channel level, our analysis further revealed that the correspondence between neural state transitions and valence transitions was significant only in frontal and parietal channels. This finding is consistent with numerous fMRI studies indicating that valence representation is predominantly localized in the ventromedial prefrontal cortex (vmPFC). For example, an fMRI study demonstrated that the temporal dynamics of affective states during drama viewing closely resembled state transitions specifically in the vmPFC112. In addition to encoding affect dynamics, the vmPFC has been shown to exhibit consistent activity patterns during periods in which participants reported ambivalent feelings while watching a film72. Its engagement in ambivalent feelings may be partially explained by well-controlled experiments suggesting that the prefrontal cortex, together with regions such as the anterior cingulate cortex and the insular cortex, plays a role in resolving and integrating conflicting affective information113,114,115.
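The exact statistic behind "correspondence between neural state transitions and valence transitions" is defined in the Methods and is not reproduced here. As a hypothetical illustration of the general idea, one could count how many valence change points are matched by a neural state change within a small tolerance window. That hit rate could then be compared with a null distribution obtained by circularly shifting the neural state sequence, which preserves state durations but breaks their alignment with the reports. The function names, the tolerance `tol`, the number of shifts, and the example sequences are all assumptions.

```python
import random
from typing import List, Sequence, Tuple


def change_points(labels: Sequence) -> List[int]:
    """Indices t at which labels[t] differs from labels[t - 1]."""
    return [t for t in range(1, len(labels)) if labels[t] != labels[t - 1]]


def hit_rate(valence: Sequence, states: Sequence[int], tol: int) -> float:
    """Fraction of valence transitions matched by a neural state transition within +/- tol samples."""
    v_cps = change_points(valence)
    n_cps = set(change_points(states))
    if not v_cps:
        return 0.0
    hits = sum(any(t + d in n_cps for d in range(-tol, tol + 1)) for t in v_cps)
    return hits / len(v_cps)


def shift_test(valence: Sequence, states: Sequence[int], tol: int = 1,
               n_shifts: int = 500, seed: int = 0) -> Tuple[float, float]:
    """Observed hit rate and a one-sided p-value against circularly shifted state sequences."""
    rng = random.Random(seed)
    observed = hit_rate(valence, states, tol)
    n = len(states)
    n_extreme = 0
    for _ in range(n_shifts):
        k = rng.randrange(1, n)  # non-zero shift: same state durations, broken alignment
        # note: the wrap-around can introduce one artificial change point
        shifted = list(states[k:]) + list(states[:k])
        if hit_rate(valence, shifted, tol) >= observed:
            n_extreme += 1
    # add-one correction keeps the p-value strictly positive
    return observed, (n_extreme + 1) / (n_shifts + 1)


# Hypothetical example: three valence transitions, each mirrored by a neural state change.
valence = ["pos"] * 10 + ["amb"] * 15 + ["neg"] * 15 + ["pos"] * 20
states = [0] * 10 + [1] * 15 + [2] * 15 + [0] * 20
obs, p = shift_test(valence, states, tol=1)
print(f"hit rate = {obs:.2f}, p = {p:.3f}")
```

Running such a test separately for each channel's decoded state sequence would yield a channel-level map of where neural transitions track valence transitions. That is the kind of result summarized above, though the study's own procedure may differ.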

Our findings regarding the parietal channels, in turn, support existing evidence for the parietal cortex’s involvement in valence processing. Specifically, the temporo-parietal junction (TPJ) has been shown to represent the continuously reported polarity and ambivalence of emotions during film viewing in a gradient manner84. In addition, voxels within the parietal cortex have been shown to be crucial for classifying neural representations of various emotion categories along the valence dimension116. Taken together, our HMM results suggest that band power features across multiple frequency bands, particularly in frontal and parietal channels, are primarily involved in the real-time differentiation of affective state representations, including the ambivalent affect of awe.

Drawing on previous studies of the interactions among emotion regulation, ambivalent affect, and awe, we tentatively propose that variations in individual emotion regulation strategies may explain two observations: the marked individual differences in both the behavioral metrics and the neural representations of ambivalent feelings, and the predictive power of these representations for subjective awe. Indeed, ambivalent feelings are thought to arise from reappraisal39,117, in which initial affective responses are re-evaluated through the retrieval of memories or knowledge associated with the opposite valence. Although we did not directly measure emotion regulation strategies in this study, previous studies show that reappraisal is positively correlated with awe ratings for recalled memories118,119. Studies on affect dynamics also show that emotion regulation strategies correlate with the duration of negative affect91,120,121, but not with its intensity122. These findings raise the intriguing possibility that the extended duration of ambivalent feelings we observed during awe, and its notable predictive power for awe ratings, might originate from the engagement of implicit reappraisal processes. To explore this hypothesis, future research could employ cognitive task analysis or think-aloud protocols during VR movie watching to assess how reappraisal strategies influence ambivalent feelings and awe at both the behavioral and neural levels.
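The distinction drawn above between the duration and the intensity of an affective state can be made concrete for keypress-based reports. The sketch below computes the total duration, number of episodes, and mean episode length for a target label. It assumes a report trace sampled at a fixed rate `fs`, in which each sample carries one label such as `"pos"`, `"neg"`, `"amb"`, or `"none"`. These labels, the sampling rate, and the function name are hypothetical, not the study's coding scheme. Intensity would have to come from a separate rating and is not modelled here.

```python
from itertools import groupby
from typing import Dict, Sequence


def episode_summary(labels: Sequence[str], fs: float, target: str = "amb") -> Dict[str, float]:
    """Total duration (s), episode count, and mean episode length (s) of `target` reports.

    `labels` is a hypothetical per-sample report trace sampled at `fs` Hz.
    """
    # run length (in samples) of every uninterrupted stretch of `target`
    runs = [sum(1 for _ in grp) for lab, grp in groupby(labels) if lab == target]
    total_s = sum(runs) / fs
    n_episodes = len(runs)
    mean_s = total_s / n_episodes if n_episodes else 0.0
    return {"total_s": total_s, "n_episodes": n_episodes, "mean_episode_s": mean_s}


trace = ["pos"] * 20 + ["amb"] * 30 + ["neg"] * 10 + ["amb"] * 10
print(episode_summary(trace, fs=10.0))
# {'total_s': 4.0, 'n_episodes': 2, 'mean_episode_s': 2.0}
```

Separating total duration from episode count helps distinguish a few long ambivalent episodes from many brief ones. Both patterns could plausibly relate differently to reappraisal.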

In addition to ambivalent feelings, our behavioral analyses underscored the importance of baseline positive mood and dispositional awe in predicting individual awe ratings. Specifically, PANAS positive mood remained a robust predictor of awe ratings both before and after accounting for the cortical distinctiveness of ambivalent feelings. Moreover, the DPES awe trait score emerged as the second most significant predictor once cortical distinctiveness was included in the model. This suggests that individual differences in awe responses to the same stimulus may be partially explained by personality traits. For example, trait openness has been consistently associated with an increased likelihood of experiencing positive moods123, awe30,124,125, and mixed emotions126 in daily life. Our study did not directly examine personality factors, as this lies beyond its primary scope of delineating the affective valence of awe. Future research should nonetheless systematically explore how personality traits influence awe responses in virtual reality contexts, particularly the roles played by the duration and intensity of ambivalent feelings. Furthermore, an important open question is how individual personality traits modulate neural representations of awe, such as the cortical distinctiveness of ambivalent feelings.
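The "before and after accounting for" comparison above follows the logic of a hierarchical (nested) regression. A baseline model with mood and trait predictors is fitted first, the neural predictor is then added, and the change in R² and in the standardized coefficients is inspected. The sketch below implements ordinary least squares on z-scored variables in plain Python, so the coefficients are standardized betas and no intercept is needed. It is an illustration under assumptions only: the predictor names (`panas_pos`, `dpes_awe`, `distinctiveness`) are hypothetical placeholders, and the study's actual model specification (e.g. random effects or additional covariates) may differ.

```python
import math
from typing import Dict, List, Sequence, Tuple


def zscore(xs: Sequence[float]) -> List[float]:
    """Standardize to mean 0 and unit (sample) standard deviation."""
    m = sum(xs) / len(xs)
    sd = math.sqrt(sum((x - m) ** 2 for x in xs) / (len(xs) - 1))
    return [(x - m) / sd for x in xs]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def std_ols(y: Sequence[float], preds: Dict[str, Sequence[float]]) -> Tuple[Dict[str, float], float]:
    """Standardized betas and R^2 (all variables z-scored, so the intercept is zero)."""
    names = list(preds)
    zy = zscore(y)
    cols = [zscore(preds[k]) for k in names]
    n, p = len(zy), len(cols)
    # normal equations: (X^T X) beta = X^T y
    xtx = [[sum(cols[i][t] * cols[j][t] for t in range(n)) for j in range(p)] for i in range(p)]
    xty = [sum(cols[i][t] * zy[t] for t in range(n)) for i in range(p)]
    beta = solve(xtx, xty)
    resid = [zy[t] - sum(beta[i] * cols[i][t] for i in range(p)) for t in range(n)]
    r2 = 1.0 - sum(e * e for e in resid) / sum(v * v for v in zy)
    return dict(zip(names, beta)), r2


# Usage with hypothetical per-participant vectors of equal length:
# base, r2_base = std_ols(awe, {"panas_pos": panas_pos, "dpes_awe": dpes_awe})
# full, r2_full = std_ols(awe, {"panas_pos": panas_pos, "dpes_awe": dpes_awe,
#                               "distinctiveness": distinctiveness})
# delta_r2 = r2_full - r2_base
```

Comparing `base` and `full` shows whether a predictor such as positive mood keeps its standardized weight once the neural variable is added. `delta_r2` quantifies how much additional variance that variable explains.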

Limitations

Our study has limitations that warrant further investigation. First, our sample consisted of East Asian participants, who may report more ambivalent feelings toward awe-evoking stimuli than Western participants24,79. Future studies should investigate cross-cultural differences in the neural representation of ambivalent feelings during awe. Second, collecting real-time valence ratings through keypresses might have inadvertently influenced participants’ emotion generation127,128, although recent studies have validated such methods in naturalistic affective science research72,84,129,130. Last, our contrastive learning approach might have overlooked neural systems shared by opposing valences, such as the amygdala, insula, and medial prefrontal cortex, which are engaged in both positive and negative affect36,37. Future work should therefore elucidate how these regions contribute to integrating oppositely valenced signals.
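The concern about contrastive learning follows from the shape of a typical contrastive objective. The sketch below uses an InfoNCE-style loss as a generic stand-in: the study's actual objective, similarity measure, and hyperparameters are not reproduced here. In such a loss, each anchor embedding is pulled toward a positive sample and pushed away from negative samples. If samples are paired by valence, the representation is therefore rewarded for separating opposing valences rather than for preserving features they share. The loss is computed for a single anchor with cosine similarity and an assumed temperature `tau`, and the toy vectors are hypothetical.

```python
import math
from typing import Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce(anchor: Sequence[float], positive: Sequence[float],
             negatives: Sequence[Sequence[float]], tau: float = 0.1) -> float:
    """-log softmax probability of the positive among {positive} + negatives."""
    logits = [cosine(anchor, positive) / tau] + [cosine(anchor, n) / tau for n in negatives]
    m = max(logits)  # subtract the max for a numerically stable log-sum-exp
    log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_denom - logits[0]


anchor = [1.0, 0.0]
positive = [1.0, 0.0]
separated = info_nce(anchor, positive, negatives=[[-1.0, 0.0]])
overlapping = info_nce(anchor, positive, negatives=[[0.9, 0.1]])
print(separated < overlapping)  # True
```

A negative that shares direction with the anchor raises the loss, so minimizing this objective pushes such samples apart. Neural features common to positive and negative affect may therefore be de-emphasized in the learned space, which is the limitation noted above.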

Conclusion

In conclusion, our study provides insights into the ambivalent affect of awe through a comprehensive approach combining VR, EEG, and deep representational learning. By demonstrating the link between ambivalent feelings, their distinct cortical representation, and the subjective intensity of awe, our findings advance affective neuroscience and contribute to a more nuanced understanding of this complex and powerful emotion. Beyond awe, this work lays the groundwork for further research into the neurocomputational mechanisms underpinning mixed emotions and their relationship to factors such as emotion regulation and personality traits.

Data availability

The four VR video stimuli used in this study are available from the first author, Jinwoo Lee (adem1997@snu.ac.kr), upon request accompanied by a written statement that the stimuli will be used for research purposes only. All preprocessed self-report and EEG data analyzed in this paper are freely available through our Open Science Framework repository: https://osf.io/g7k6c.

Code availability

The code to reproduce all results from this paper is available in our GitHub repository: https://github.com/jinw00-lee/ambivalent-awe.

References

Keltner, D. & Haidt, J. Approaching awe, a moral, spiritual, and aesthetic emotion. Cogn. Emot. 17, 297–314 (2003).

Shiota, M. N., Keltner, D. & Mossman, A. The nature of awe: Elicitors, appraisals, and effects on self-concept. Cogn. Emot. 21, 944–963 (2007).

Schneider, K. J. Awakening to awe: Personal stories of profound transformation (Rowman & Littlefield, 2009).

Keltner, D. Awe: the transformative power of everyday wonder (Random House, 2023).

Bai, Y. et al. Awe, the diminished self, and collective engagement: Universals and cultural variations in the small self. J. Pers. Soc. Psychol. 113, 185 (2017).

Rudd, M., Vohs, K. D. & Aaker, J. Awe expands people’s perception of time, alters decision making, and enhances well-being. Psychol. Sci. 23, 1130–1136 (2012).

Piff, P. K. et al. Awe, the small self, and prosocial behavior. J. Pers. Soc. Psychol. 108, 883 (2015).

Seo, M., Yang, S. & Laurent, S. M. No one is an island: Awe encourages global citizenship identification. Emotion 23, 601 (2023).

Anderson, C. L., Monroy, M. & Keltner, D. Awe in nature heals: Evidence from military veterans, at-risk youth, and college students. Emotion 18, 1195 (2018).

Yin, Y. et al. Awe fosters positive attitudes toward solitude. Nat. Mental Health 1–11 (2024).

Damasio, A. & Carvalho, G. B. The nature of feelings: evolutionary and neurobiological origins. Nat. Rev. Neurosci. 14, 143–152 (2013).

Damasio, A. R. The somatic marker hypothesis and the possible functions of the prefrontal cortex. Philos. Trans. R. Soc. Lond. Ser. B: Biol. Sci. 351, 1413–1420 (1996).

Damasio, A. R. Emotions and feelings. In Feelings and emotions: The Amsterdam symposium (Cambridge University Press, 2004).

Takano, R. & Nomura, M. A closer look at the time course of bodily responses to awe experiences. Sci. Rep. 13, 22506 (2023).

Barrett, L. F. & Bliss‐Moreau, E. Affect as a psychological primitive. Adv. Exp. Soc. Psychol. 41, 167–218 (2009).

Feldman, M., Bliss-Moreau, E. & Lindquist, K. The neurobiology of interoception and affect. Trends Cogn. Sci. (2024).

Russell, J. A. Core affect and the psychological construction of emotion. Psychol. Rev. 110, 145 (2003).

Yaden, D. B. et al. The overview effect: awe and self-transcendent experience in space flight. Psychol. Conscious.: Theory, Res., Pract. 3, 1 (2016).

Yaden, D. B. et al. The varieties of self-transcendent experience. Rev. Gen. Psychol. 21, 143–160 (2017).

Yaden, D. B. et al. The development of the Awe Experience Scale (AWE-S): A multifactorial measure for a complex emotion. J. Posit. Psychol. 14, 474–488 (2019).

Larsen, J. T., McGraw, A. P. & Cacioppo, J. T. Can people feel happy and sad at the same time? J. Pers. Soc. Psychol. 81, 684 (2001).

Williams, P. & Aaker, J. L. Can mixed emotions peacefully coexist? J. Consum. Res. 28, 636–649 (2002).

Watson, D. & Stanton, K. Emotion blends and mixed emotions in the hierarchical structure of affect. Emot. Rev. 9, 99–104 (2017).

Stellar, J. E. et al. Culture and awe: understanding awe as a mixed emotion. Affect. Sci. 5, 160–170 (2024).

Chaudhury, S. H., Garg, N. & Jiang, Z. The curious case of threat-awe: A theoretical and empirical reconceptualization. Emotion 22, 1653 (2022).

Gordon, A. M. et al. The dark side of the sublime: Distinguishing a threat-based variant of awe. J. Pers. Soc. Psychol. 113, 310 (2017).

Takano, R. & Nomura, M. Neural representations of awe: Distinguishing common and distinct neural mechanisms. Emotion 22, 669 (2022).

Guan, F. et al. The neural correlate difference between positive and negative awe. Front. Hum. Neurosci. 13, 206 (2019).

Chirico, A. et al. The potential of virtual reality for the investigation of awe. Front. Psychol. 7, 223153 (2016).

Silvia, P. J. et al. Openness to experience and awe in response to nature and music: personality and profound aesthetic experiences. Psychol. Aesthet. Creat. Arts 9, 376 (2015).

An, S. et al. Two sides of emotion: Exploring positivity and negativity in six basic emotions across cultures. Front. Psychol. 8, 253368 (2017).

Briesemeister, B. B., Kuchinke, L. & Jacobs, A. M. Emotional valence: A bipolar continuum or two independent dimensions? Sage Open 2, 2158244012466558 (2012).

Berridge, K. C. Affective valence in the brain: modules or modes? Nat. Rev. Neurosci. 20, 225–234 (2019).

Lammel, S. et al. Input-specific control of reward and aversion in the ventral tegmental area. Nature 491, 212–217 (2012).

Norman, G. J. et al. Current emotion research in psychophysiology: The neurobiology of evaluative bivalence. Emot. Rev. 3, 349–359 (2011).

Lee, S. A. et al. Brain representations of affective valence and intensity in sustained pleasure and pain. Proc. Natl Acad. Sci. 121, e2310433121 (2024).

Lindquist, K. A. et al. The brain basis of positive and negative affect: evidence from a meta-analysis of the human neuroimaging literature. Cereb. Cortex 26, 1910–1922 (2016).

Man, V. et al. Hierarchical brain systems support multiple representations of valence and mixed affect. Emot. Rev. 9, 124–132 (2017).

Vaccaro, A. G., Kaplan, J. T. & Damasio, A. Bittersweet: the neuroscience of ambivalent affect. Perspect. Psychol. Sci. 15, 1187–1199 (2020).

Chirico, A. et al. Effectiveness of immersive videos in inducing awe: an experimental study. Sci. Rep. 7, 1218 (2017).

Chirico, A. et al. Designing awe in virtual reality: An experimental study. Front. Psychol. 8, 293522 (2018).

Quesnel, D. & Riecke, B. E. Are you awed yet? How virtual reality gives us awe and goose bumps. Front. Psychol. 9, 403078 (2018).

Kahn, A. S. & Cargile, A. C. Immersive and interactive awe: Evoking awe via presence in virtual reality and online videos to prompt prosocial behavior. Hum. Commun. Res. 47, 387–417 (2021).

Picard, F. & Craig, A. Ecstatic epileptic seizures: a potential window on the neural basis for human self-awareness. Epilepsy Behav. 16, 539–546 (2009).

Wittmann, M. The inner sense of time: how the brain creates a representation of duration. Nat. Rev. Neurosci. 14, 217–223 (2013).

Schneider, S., Lee, J. H. & Mathis, M. W. Learnable latent embeddings for joint behavioural and neural analysis. Nature 617, 360–368 (2023).

Viinikainen, M. et al. Nonlinear relationship between emotional valence and brain activity: evidence of separate negative and positive valence dimensions. Hum. Brain Mapp. 31, 1030–1040 (2010).

Aftanas, L. I. et al. Non-linear dynamic complexity of the human EEG during evoked emotions. Int. J. Psychophysiol. 28, 63–76 (1998).