Abstract

Personalized, smartphone-based coaching improves physical activity but relies on static, human-crafted messages. We introduce My Heart Counts (MHC)-Coach, a large language model fine-tuned on the Transtheoretical Model of Change. MHC-Coach generates messages tailored to an individual’s psychology (their “stage of change”), providing personalized support to foster long-term physical activity behavior change. To evaluate MHC-Coach’s efficacy, 632 participants compared human-expert and MHC-Coach interventions encouraging physical activity. Among messages matched to an individual’s stage of change, 68.0% (N = 430) preferred MHC-Coach-generated messages (P < 0.001). Blinded behavioral science experts (N = 2) rated MHC-Coach messages higher than human-expert messages for perceived effectiveness (4.4 vs. 2.8) and Transtheoretical Model alignment (4.1 vs. 3.5) on a 5-point Likert scale. This work demonstrates how language models can operationalize behavioral science frameworks for personalized health coaching, showing the potential for promoting long-term physical activity and reducing cardiovascular disease risk at scale.

Similar content being viewed by others

Introduction

Physical inactivity is one of the strongest risk factors for nearly all chronic diseases of aging1,2. Despite the well-documented benefits of regular physical activity, a majority of adults in the United States remain sedentary3. Mean daily steps is a commonly used proxy for physical activity, with even modest, short-term increases (+500 steps) associated with cardiovascular benefits4. However, recent large-scale studies show that the average American achieves only 4700 daily steps, well below the recommended threshold for health benefits4,5. These findings emphasize the need for scalable interventions that increase physical activity and support long-term adherence to ensure sustained health benefits over time.

Personalized coaching has consistently demonstrated efficacy in increasing physical activity by addressing individuals’ unique needs, motivations, and barriers6,7,8. Yet, sustaining long-term behavior change is a complex and dynamic process shaped by multiple interacting factors. Behavioral science offers structured, evidence-based frameworks for personalization to the individual by addressing the psychological, social, and environmental factors that influence behavior change9. One well-established framework is the Transtheoretical Model (TTM)10, which focuses on the stages of change individuals undergo when adopting new healthy behaviors. The TTM categorizes individuals into five distinct stages of change: pre-contemplation, contemplation, preparation, action, and maintenance10. The TTM has proven effective in guiding behavior change for chronic disease prevention, with tailored interventions at each stage demonstrating higher efficacy in increasing physical activity11. Because the model’s interventions evolve with users as they progress through the stages of change (from precontemplation to maintenance) it supports both short- and long-term improvements in physical activity10. Moreover, coaching strategies grounded in the TTM framework have fostered sustained physical activity across diverse populations12,13,14. Despite its success in fostering behavior change, TTM-based coaching has largely relied on manual creation and delivery, limiting scalability. Digital health tools, such as smartphone apps and wearables, offer a promising avenue for expanding access to TTM-based interventions.

Digital health tools, particularly smartphone applications and wearable devices such as smartwatches, have been employed to encourage physical activity15,16. Interventions using these digital health tools have demonstrated a positive effect on physical activity, with a recent meta-analysis reporting an average increase of 1,850 steps per day when digital interventions include personalized feedback and notifications17. While these findings highlight the potential of digital interventions, existing tools are currently limited in that they focus on short-term behavior change, with a lack of attention on sustained engagement and long-term impact. As a result, their effects on chronic disease prevention and treatment, such as cardiovascular disease, remain unknown. Moreover, while several digital trials are being conducted to assess the efficacy of TTM-based personalized coaching, they remain constrained by using preprogrammed, static messages designed by human experts and localized, center-based recruitment, limiting their scalability and adaptability18,19.

In response to the challenge of balancing scalability and personalization in digital health, recent research has begun leveraging large language models (LLMs) to deliver personalized health coaching at scale. By enabling the automated generation of tailored, high-quality messages, LLMs make it feasible to reach large, diverse populations without requiring manual input for each user. Recent studies have explored the use of various off-the-shelf LLMs, including GPT-4, LamDa, and LLaMA-2-7B-chat to answer health-related questions and facilitate goal-setting conversations through chat interfaces. Messages generated through structured prompting techniques were consistently rated as more behaviorally aligned, empathetic, and actionable than standard outputs. For example, Jörke et al. demonstrated that GPT-4 could simulate motivational interviewing and goal-setting with expert-level fluency using template-based prompts incorporating user data (e.g., activity levels, goals, barriers)20. Hegde et al. used a chat-based format in which LaMDA responded to user-described behavioral challenges framed by capability, opportunity, and motivation, and messages reranked by a BERT-based model were rated as more motivational than unrevised outputs21. Ong et al. directly compared LLaMA-2-7B-chat and human-generated messages in the sleep domain, finding that users rated the LLM responses as similarly empathetic and helpful22.

Despite these advances, current LLM-based systems remain reactive, responding through multi-turn conversations only when users initiate interaction, rather than proactively delivering timely and motivationally tailored messages informed by behavioral psychology. Proactive paradigms such as Just-in-Time Adaptive Interventions (JITAIs)23, context-aware recommender systems24, and ecological momentary interventions25 have shown promise in improving engagement and adherence. However, many of these systems rely on rules-based triggers from physiological or environmental sensors and fail to account for a user’s evolving motivational profile. Hence, there remains a critical need to integrate behavioral science frameworks with LLMs to enable a new class of interventions that draw on the architectural logic of proactive paradigms to deliver adaptive support by grounding messages in users’ evolving motivational profiles.

We pursued this integration by building upon the My Heart Counts (MHC) Cardiovascular Health Study, a large-scale digital health platform designed to promote physical activity. The My Heart Counts Cardiovascular Health Study was launched in 2015 as one of the first smartphone applications using Apple’s ResearchKit. On launch, it became the fastest-recruiting medical study of all time with >40,000 participants in the first two weeks26,27,28,29,30,31. MHC is a smartphone-based observational study designed to gather frequent, remote data on fitness, activity, and sleep30. It has since enabled several digital trials, including a personalized intervention that increased step counts using handcrafted messages tailored to individual activity levels31. However, that intervention was limited in both scale and duration, focusing only on short-term behavioral change over a 7-day period.

To address the lack of proactive, scalable, and psychologically grounded personalization to support sustained behavior change in digital health, we developed MHC-Coach, a fine-tuned LLM32 trained on TTM behavioral science principles33 and domain-specific knowledge in physical activity and cardiovascular health. MHC-Coach autonomously generates personalized, text-based coaching messages to increase physical activity, evolving with users’ changing needs, motivations, and readiness for behavior change. To assess this approach, we conduct three evaluations. First, unlike prior LLM physical activity coaching studies that relied on small evaluator groups (5 to 46 participants)20,21,34,35,36,37,38,39,40,41,42,43, we conducted a large-scale survey (N = 632) with participants of the MHC study, comparing their preference for MHC-Coach messages vs. traditional human expert-designed messages. Second, behavioral science experts systematically rated MHC-Coach and expert messages based on their motivational effectiveness and alignment with behavioral science principles. Finally, we compared the linguistic and stylistic features of messages generated by MHC-Coach, the unmodified LLaMA3-70B model, retrieval-augmented generation (RAG)44, few-shot prompting45, and human experts to better understand how each method expresses motivation and behavioral specificity. Together, these evaluations assess the potential of integrating behavioral science frameworks with LLM-driven coaching to improve personalization, enhance motivational quality, and enable scalable, long-term behavior change.

Results

Qualitative evaluation of fine-tuned MHC-Coach and Base LLaMA Model

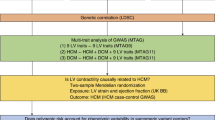

MHC-Coach was developed by fine-tuning LLaMA 3-70B on a dataset of 3268 human expert and peer-generated messages written in response to stage-specific scenarios33, a domain-specific corpus on health and exercise to provide background information on the importance of physical activity in preventing cardiovascular disease1,10,46,47,48,49,50,51,52,53, and detailed information about the TTM model and characteristics of individuals in each stage of change10,54 (see Methods and Fig. 1). The combined fine-tuning dataset contained 215,553 tokens.

To assess the impact of this fine-tuning approach, we qualitatively compared MHC-Coach outputs to those of the base LLaMA 3-70B model, evaluating their integration of behavioral science principles and ability to generate stage-specific motivational messages (see Fig. 2). For example, when asked to produce a motivation message for a user in the contemplation stage (i.e., those beginning to think about how to change their physical activity behavior), the base LLM generated: “Take the first step towards a healthier you! Schedule exercise into your daily routine and start feeling the benefits in just a few weeks.” While this message encourages users to plan their exercise, it is overly general. It does not specify the type of exercise, the duration, or when to start, which makes it harder for users to visualize or act on the suggestion.

Key differences include specificity in behavior recommendations and alignment with behavioral science principles, such as addressing ambivalence in the Contemplation stage and sustaining motivation through variety in the Action stage. Icons attributed in Fig. 1 legend.

In contrast, MHC-Coach generated the following message: “Ready to feel great? Schedule 10-minute daily walks into your calendar & reap energy, mood, & health benefits. Start Monday!” This version demonstrates a significant improvement in actionable specificity by recommending “10-minute daily walks” and incorporating practical planning advice, such as integrating the activity “into your calendar.” The use of immediacy (“Start Monday!”) gives a clear starting point, while the explicit mention of benefits such as “energy, mood, & health benefits” creates a stronger connection between the action and its immediate outcomes. By combining actionable specificity, real-life planning, and outcome clarity, the fine-tuned message effectively integrates TTM behavioral science principles, making it more likely to motivate users to take action. Figure 2 illustrates these improvements with annotated examples from the contemplation and action stages of the TTM.

Comparative evaluation of MHC-Coach and human expert messages

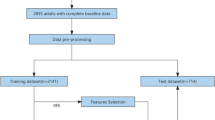

Survey invitations were sent via email to 62,616 users of the MHC smartphone app who had previously consented to re-contact. Surveys were conducted using the Qualtrics platform, and only participants who completed the entire survey were included in the analysis. Among the 1004 initial survey respondents, 853 met the inclusion criteria of being at least 18 years old and English-speaking. Of these 853 eligible participants, 632 provided complete responses and were included in the final analysis (see Fig. 3). The survey respondents included 79.4% males and 19.8% females, with the majority of participants (50.7%) aged 60+ years. The racial/ethnic distribution was predominantly White (80.8%), with smaller proportions identifying as Asian (6.1%), Hispanic/Latino (4.3%), and Black/African American (1.6%). Demographic characteristics of survey participants are summarized in Table 1.

Of 65,179 My Heart Counts participants who indicated openness to being contacted for future research, 62,616 were emailed the survey. A total of 1004 participants responded, of which 853 met the inclusion criteria (English speakers aged ≥18 years), and 632 of the eligible participants completed the entire survey and were included in the final analysis.

To evaluate the efficacy of MHC-Coach in generating motivational messages, we surveyed participants with a series of forced-choice A/B questions. Figure 4 illustrates the overall survey flow along with example questions. All participants received five forced-choice A/B questions comparing general motivational messages that were not tailored to a specific stage of change, with 85.4% (N = 540) expressing a preference for the general MHC-Coach-generated messages and 14.6% (N = 92) preferring the human expert-designed messages. Across the general motivational messages, there was a significant preference for the MHC-Coach-generated messages (Chi-squared P < 0.001, see Table 2 and Fig. 5).

Users answered demographic questions and were asked to choose the prompt that they preferred on evaluation of 10 total message pairs, including 5 generally motivational messages and 5 pairs tailored to each user’s specific stage of change. TTM Stage of change was assigned based on the question shown in the bottom right, and the red text maps each response option to a corresponding stage of change.

a Preferences of 632 surveyed users of the MyHeart Counts platform indicate a higher preference for LLM-generated messages across all stages of change. b Behavioral science experts rated the perceived effectiveness of messages on a 5-point Likert scale, with the fine-tuned LLM consistently outperforming human expert-generated messages. c Behavioral science experts evaluated how well each message aligned with the corresponding stage of change, with the fine-tuned LLM again achieving higher scores in most categories. Vertical lines for each bar represent the standard deviation. The “human expert” icon is by insdesign from The Noun Project, used under CC BY 3.0. The remaining icons are attributed in the Fig. 1 legend.

Users were then stratified to a specific TTM stage of change and received three forced-choice A/B questions on which message they preferred: an MHC-Coach-generated or a human expert-written coaching message, tailored to their individual stage of change. Across the stage-matched messages, 68.0% (N = 430) of users showed a preference for the MHC-Coach-generated messages, and 32.0% (N = 202) preferred the human expert messages (Chi-squared P < 0.001, see Table 2). This preference was significant and consistent across all stages of change in a sensitivity analysis, with sample sizes ranging from N = 37 for the pre-contemplation stage to N = 318 for the maintenance stage. Detailed breakdowns of preferences across specific stages of change, along with associated statistical results, are presented in Table 2 and Fig. 5.

Notably, female participants and those under 50 showed significantly stronger preferences for LLM-generated messages (P < 0.001 for both), with effect sizes in the small-to-moderate range (Cramér’s V = 0.069 and 0.086, respectively). A post hoc power analysis using the observed preference rate of 68% (vs. 50% under the null) and sample size (N = 632) yielded a power estimate greater than 0.999.

Two behavioral science experts reviewed and rated the MHC-Coach-generated messages and human expert-written messages (see Fig. 5). Across all stages of change, the average perceived effectiveness rating was 2.8 for the human-written messages and 4.4 for the MHC-Coach-generated messages on a 5-point Likert scale. Similarly, the average rating for how well the messages matched the requested TTM stage of change was 3.5 for the human-written messages and 4.1 for the MHC-Coach-generated messages. The most pronounced difference was observed in the pre-contemplation stage, where the human-written messages received an effectiveness rating of 2.2 and a match rating of 2.2, compared to the MHC-Coach-generated messages, which scored 4.3 for effectiveness and 3.1 for the stage alignment. A detailed breakdown of these ratings across all stages of change is presented in Fig. 5.

Linguistic and stylistic feature analysis across generation methods

To further compare the outputs of MHC-Coach with other generation strategies and expert-written messages, we analyzed seven linguistic and stylistic features that are commonly associated with effective behavior change communication: word count, sentiment, action-verb frequency, temporal reference, lexical diversity (type–token ratio), exclamation usage, and readability55,56,57,58. For each feature, values were averaged across 20 messages generated by each method, four messages for each of the five stages of change. These descriptive comparisons revealed notable differences in stylistic tendencies across generation types (See Table 3). For example, fine-tuned messages exhibited the highest action-verb count per message (mean = 5.2) and the most temporal anchoring (75% of messages), while expert messages had the lowest use of exclamation marks (0.15 per message) and relatively high readability (Flesch score = 68.26). Representative examples from each method are shown in Supplementary Table 1.

To assess whether these observed differences were statistically significant, we conducted Kruskal–Wallis tests across generation methods for each feature. Generation method had a significant effect on word count (H(4) = 60.65, p < 0.001), action-verb frequency (H(4) = 36.55, p < 0.001), temporal reference (H(4) = 16.58, p = 0.011), and exclamation usage (H(4) = 54.65, p < 0.001). No significant differences were observed for sentiment (p = 0.069), lexical diversity (p = 0.282), or readability (p = 0.098). Full results are reported in Table 4.

Discussion

Digital health offers the potential of a paradigm shift in scalability and individualization for physical activity coaching59. Here, we developed and tested a tool, MHC-Coach, with substantial potential to address the gap in personalization via fine-tuning of a large language model to incorporate an evidence-based and validated psychological framework for behavior change. We show that MHC-Coach can provide autonomous, proactive, and personalized text-based coaching interventions, based on a user’s underlying behavioral psychology. Finally, we showed that MHC-Coach-generated messages were judged preferable by both behavioral science experts and participants in the My Heart Counts Cardiovascular Health study, as compared to human expert-written messages.

A key aspect of this work is demonstrating how fine-tuning LLMs with behavioral science principles enhances their ability to support behavior change. Generic LLMs, despite their fluency and coherence, lack an inherent understanding of psychological motivation, resulting in responses that may be readable but not effective for long-term engagement. By embedding the TTM framework into an LLM’s latent space, the MHC-Coach model delivers structured, stage-specific coaching tailored to users’ behavioral readiness, surpassing both the base LLM and the human expert messages it was trained on. Fine-tuning also appears to more effectively internalize behavior change principles than prompting-based methods like RAG or few-shot learning, yielding messages with more consistent motivational features. Despite being crafted by experienced behavior-change professionals and academics who could draw on diverse motivational strategies, the expert messages were still outperformed by MHC-Coach’s TTM-grounded outputs, highlighting the advantage of structured, theory-driven messaging. Building on this behavioral backbone, future versions of MHC-Coach can layer personalization using real-time activity data, contextual signals, and language preferences60,61,62. Pairing MHC-Coach with emerging techniques like agentic systems63, memory-augmented architectures64, and reinforcement learning from user feedback65 could enable deeper integration of these datastreams, enabling ever-more-personalized and proactive health coaching at scale.

We further note that our study is unique amongst studies of LLM and health behavior for its scale and recruitment approach, reaching and engaging 632 MHC users seeking health interventions in the final analysis. This contrasts with the majority of preceding LLM-based physical activity coaching studies, which relied on small, pre-selected groups of fewer than 46 annotators20,21,34,35,36,37,38,39,40,41,42,43. We have collected user preference data and have made it openly available for further development of LLM-based health coaching leveraging the TTM framework (see Methods). Additionally, while there has been growing development of LLM applications in healthcare focused on clinical decision support, this work addresses preventive, behavior-focused coaching that can complement such systems66,67.

A few limitations of our study should be noted. First, our cohort reflects the user characteristics of the original My Heart Counts Cardiovascular Health Study and may not fully capture the diversity of the broader United States population26,27,28,29,30,31. The survey respondents skewed older than the general MHC cohort29, with half of the participants over the age of 60, which may limit generalizability to younger groups. Moreover, MHC-Coach-generated messages were in English and hence restricted participation to English speakers. Research is ongoing to assess the efficacy of MHC-Coach-generated messages in non-English speakers, of whom there are significant numbers within the United States. The expert evaluation included only two raters, limiting interrater diversity, and was intended as a supplementary analysis to triangulate findings from the larger user preference study. While our findings offer promising signals of motivational alignment, future work is needed to evaluate downstream behavioral and cardiovascular impacts. Finally, while MHC-Coach was fine-tuned on scientific health data to enhance accuracy, we did not conduct a systematic evaluation of hallucinations. This represents an important area for future work, especially as models begin to incorporate patient-specific information or deliver more targeted health advice68,69.

Concerning implementation, our fine-tuning approach is model-agnostic and compatible with open-source LLMs of varying sizes, enabling flexible, on-device deployment without reliance on external infrastructure. This further allows for stronger data privacy with user data kept local and enabling organizational control over data use70. Additionally, unlike prompting-based methods, fine-tuning eliminated the need for runtime context engineering, simplifying integration and lowering latency.

In summary, MHC-Coach demonstrates the promise of integrating behavioral science frameworks with LLMs to deliver scalable, stage-specific health coaching. In contrast to generic LLMs, MHC-Coach goes beyond providing information about behavioral science and exercise; it operationalizes these principles in the language it generates, ensuring that coaching messages align with motivation-sensitive, stage-specific interventions. This study builds a foundation for future efforts, which include integrating MHC-Coach into the My Heart Counts smartphone application, expanding coaching to multilingual and culturally diverse settings, incorporating real-time activity data for automated TTM stage classification, and evaluating long-term adherence and cardiovascular health outcomes through longitudinal studies.

Methods

MHC-Coach message generation

A fine-tuning approach was selected for MHC-Coach as it supports low-latency, single-turn interactions without the runtime overhead of multi-step prompting, making it well-suited for mobile-health deployment.

Data

Our fine-tuning dataset consists of 3,268 motivational messages grounded in the TTM framework, designed to promote exercise behavior change across the five stages of change. These messages were collected through a separate study conducted in 201733. In that study, 377 messages were authored by 25 human experts, including fitness coaches, behavioral coaches, and researchers in health psychology who were recruited through direct outreach. The experts were selected based on their applied or academic experience in exercise promotion and behavior change. The remaining messages were written by a larger group of peer contributors recruited via Amazon Mechanical Turk. Although not explicitly trained in the TTM for the study, they were presented with scenarios of individuals in different stages of behavioral change and instructed to create messages that they believed would be most motivating for each. As such, they were free to draw on any behavioral strategy or approach from their professional experience, not limited to the TTM alone71.

In addition to these human-designed messages, we incorporated content from ten manuscripts that were chosen to capture the seminal Transtheoretical-Model theory, reflect consensus guideline statements on exercise and cardiovascular risk, and provide diverse empirical perspectives, ranging from longitudinal cohort data to global surveillance and equity analyses on physical-activity measurement and outcomes. We limited the set to ten manuscripts1,10,46,47,48,49,50,51,53,54 to maintain a focused, coherent corpus, a common practice in domain-specific fine-tuning that helps the model internalize key constructs without diluting them72. These manuscripts thus enriched the training corpus with evidence-based insights linking physical activity, behavior change, and cardiovascular outcomes.

The combined fine-tuning dataset, consisting of human-written messages, content extracted from research papers, and detailed information about the TTM model and characteristics of users in each phase10,54, contained a total of 215,553 tokens. This dataset was used to fine-tune MHC-Coach, enabling it to generate contextually relevant and personalized messages for behavior change in physical activity (see Fig. 1). By integrating expert-designed messages with research-based content, we aimed to ensure accurate health information aligned with clinical and behavioral science guidelines, while grounding the language in evidence-based psychology tailored to each TTM stage.

Language model

LLaMA 3-70B32 was selected as the primary LLM architecture in this study due to its strong instruction-following abilities, open-source weights and model architecture, and a favorable balance between model size and performance for mobile health deployment. It has also demonstrated competitive performance on reasoning and language understanding tasks32, along with recent findings showing its responses to health-related questions matched or exceeded those of human coaches in quality, empathy, and accuracy, further supporting its suitability for health coaching applications22.

LLaMA 3-70B is a decoder-only transformer mode with >70 billion parameters, optimized for diverse tasks such as reasoning, instruction following, and dialog generation. For our experiments, we utilized LLaMA 3-70B with the following hyperparameters: temperature of 0.8 for response diversity, maximum token length of 800, and top-p (nucleus sampling) set to 0.9.

Fine-tuning

We used an instruction-tuning73 variant to fine-tune LLaMA 3-70B on our dataset. Given the unstructured nature of the research papers and the need to align expert messages with the appropriate stage of change, we developed a custom template to guide the fine-tuning process. We structured the input data using a custom template designed to train the model to generate stage-specific motivational messages. Each training example consisted of three key components: (1) a prompt section, describing the user’s stage of change and requesting a tailored motivational message, (2) a stage section label specifying the user’s stage of change, and (3) a completion section, containing the human-designed motivational message as the target response. To ensure clarity and consistency, a designated token was used to separate messages (see Fig. 1). To provide additional context, we appended unstructured text from manuscripts detailing the impact of regular exercise on cardiovascular health1,10,46,47,48,49,50,51,53,54, along with a detailed explanation of the TTM and the characteristics of individuals at each stage of change10,54.

We used Low-Rank Adaptation74 for fine-tuning, a technique that updates pre-trained models. This approach enables efficient fine-tuning by adding trainable low-rank matrices as additional parameters, while keeping the original model weights frozen. We performed a parameter search over learning rates (5e-5, 1e-5), LoRA rank (16, 32) with corresponding LoRA alpha values set to twice the rank (32, 64), and weight decay (0, 0.01), testing each configuration over one epoch. The final configuration consisted of a learning rate of 1e-5, a LoRA rank of 32 with alpha set to 64, LoRA applied across all linear layers without dropout, a cosine learning rate scheduler, a warmup ratio of 0.03, and a batch size of 8 over 5 epochs. Training was performed on 8×A100 80GB GPUs.

Large-scale user study design and participant recruitment

Ethical approval for the study was obtained from Stanford University’s Research Compliance Office (IRB-75836) and was conducted in accordance with the Declaration of Helsinki. Participants provided informed consent to join the My Heart Counts Cardiovascular Health Study, including agreement to be re-contacted for future research. Participation in the follow-up survey was voluntary.

Participant recruitment and eligibility criteria

The My Heart Counts (MHC) smartphone application was first launched in March 2015 via the Apple App Store for United States, United Kingdom, and Hong Kong residents26,27,28,29,30,31. Individuals aged 18 years or older who could read and understand English were eligible to participate in the My Heart Counts Cardiovascular Health Study.

Survey invitations were sent via email to MHC users who consented to being contacted for future research. Survey responses were collected using the Qualtrics survey platform. Respondents who did not complete the full survey were excluded from the analysis, as complete responses were required for group-level analyses. Of the initial 1,004 respondents, 632 met the inclusion criteria, completed the entire survey, and were retained for the final analyses (see Fig. 3).

Survey design

Throughout the survey, participants were shown pairs of messages, one human expert-designed message and an MHC-Coach-generated message, in a blinded fashion and without knowledge that the study involved the TTM. For each message pair, they made a forced A/B choice, selecting the message they felt was more empathetic, realistic, actionable, and encouraging. The human expert-designed messages used for all subsequent comparisons were sourced from the same crowdsourcing dataset used to fine-tune the model, involving 25 human experts in fitness, behavior, and health psychology33. To match the 20-word length requested in model inputs, expert messages were randomly sampled from the top 50% of messages by word count, improving length comparability while preserving sufficient sample size. All participants were shown the same set of message pairs. This design allowed us to directly evaluate whether the model could reproduce the set of expert-quality messages it was trained on. Examples of both human expert-crafted and MHC-Coach-generated messages that users compared are provided in Supplementary Table 2.

Participants performed two sets of comparisons, one with generally motivating messages and one with messages that were tailored to their stage of change. The first set of questions asked participants to choose their preferred coaching message in an A/B forced-choice set of five questions featuring general motivational messages. These messages were created by a human expert33 or generated by MHC-Coach with the sole objective of encouraging regular physical activity, independent of the stage of change.

Participants were then stratified into one of five stages of change using an evidence-based TTM stage-identification question, which assessed the duration and consistency of their regular exercise habits75. This question, illustrated in Fig. 4, defined regular exercise as planned physical activities (e.g., brisk walking, jogging, swimming) performed 3–5 times per week for 20–60 min to improve fitness. Regular exercise was defined as planned physical activities—such as brisk walking, aerobics, jogging, bicycling, swimming, or rowing—performed 3 to 5 times per week for 20–60 min per session to improve physical fitness75. After categorization, participants answered five questions comparing messages tailored to their specific stage of change. In this section, both the MHC-Coach-generated and human expert messages were stage-aligned: expert messages were selected from the dataset based on the stage-specific scenarios used during data collection33, while MHC-Coach was prompted with the corresponding stage label and descriptive context (see Fig. 1).

Statistical analysis

The Chi-squared test was used to statistically evaluate differences in participants’ preferences for human expert-designed versus MHC-Coach-generated messages, under the null hypothesis that preferences for both types of messages were equal. Analyses were conducted across the five specific stages of change (pre-contemplation, contemplation, preparation, action, and maintenance) and for generic motivational messages. To account for the six comparisons performed (five stage-specific tests and one generic message test), a Bonferroni correction was applied to control the family-wise error rate, adjusting the significance threshold from α = 0.05 to αadjusted = 0.0083 (0.05/6). Participants were classified as preferring MHC-Coach if they selected a majority of MHC-Coach-generated messages, and as preferring the human expert if they selected a majority of human expert-generated messages in A/B forced-choice questions in analyses of the general motivational and TTM stage-specific question sets. To test the robustness of our findings, we conducted sensitivity analyses stratified by gender (male, female) and age group (above and below 50 years), using chi-squared tests with Cramér’s V76 to estimate effect sizes. All P-values were two-sided and Holm-adjusted for multiple comparisons. Finally, a post hoc power analysis was performed using the observed effect size and sample size.

Expert evaluation of coaching messages

We engaged two expert reviewers with active research in applying the TTM to independently evaluate and rate both the fine-tuned MHC-Coach-generated messages and the expert-written messages (selection procedure described in Methods Section 2) in a blinded fashion33. The evaluators were blinded as to whether a given message was MHC-Coach- or expert-generated. They assessed each message on a 5-point Likert scale across two criteria: (1) the perceived effectiveness of the message in promoting increased physical activity and (2) the degree to which the message aligned with the recipient’s current stage of change.

Linguistic and stylistic features across generation methods

To compare generation strategies suitable for proactive, single-turn coaching, we created a set of twenty messages (four for each of the five TTM stages) using five techniques: base LLaMA 3-70B model, MHC-Coach, few-shot prompting73, retrieval-augmented generation (RAG)44, and human experts.

Pretrained baseline

The unmodified LLaMA 3-70B-chat model was prompted with a brief TTM stage description and a request to generate a 20-word motivational message.

Few-shot prompting

We first inserted a brief system instruction: “You are a health coach trained in the Transtheoretical Model of Behavior Change.” This was followed by the stage-specific task prompt used in the baseline condition and then three demonstration messages matched to that stage. These exemplars were drawn from the expert corpus33 but were withheld from the subset of expert messages used for quantitative comparisons. This single concatenated prompt was provided to the unmodified LLaMA 3-70B model for each stage.

Retrieval-augmented generation

We embedded the full fine-tuning corpus, consisting of 3268 stage-labeled expert messages from the crowdsourcing study33 and the exercise-science excerpts as outlined earlier in the data portion of the Methods, using the BAAI/bge-small-en-v1.5 encoder and indexed it with Llama-Index (version 0.10.38). At inference time, the index returned the two 1000-token segments with the highest cosine similarity to the stage-specific query. These retrieved segments were prepended to the 20-word prompt used for the baseline condition, and the full context was then passed to the unmodified LLaMA-3-70B model for generation.

Expert messages

Twenty expert-written messages were randomly sampled from the crowdsourced corpus33 (selection procedure described in Methods Section 2).

Prompts used for each message generation strategy are provided in Supplementary Table 1.

Corpus and preprocessing

We performed a systematic comparison of the linguistic and stylistic properties of the messages produced in each generation technique, as described in the data portion of the Methods. Each of the five generation methods produced 20 messages, yielding a corpus of 100 messages.

All analyses were performed in Python 3.1. Linguistic annotation, including part-of-speech tagging and dependency parsing, was performed with spaCy (version 3.7.2)77. Sentiment analysis used VADER (version 0.3.3)78, and readability scores were computed using Textstat (version 0.7.3)79.

Feature definition and extraction

The selected features reflect complementary dimensions of message quality, including length, lexical richness, emotional tone, behavioral focus, temporal specificity, and readability. Each feature is defined below, along with the method used for its extraction.

Word count

Calculates the length of each message, using spaCy (version 3.7.2, en_core_web_sm model) by counting alphabetic tokens only, excluding numerals and punctuation.

Compound sentiment score

Assesses the overall emotional tone of a message, using the VADER sentiment analysis tool (version 0.3.3). VADER outputs a composite polarity score ranging from –1 (strongly negative) to +1 (strongly positive).

Action-verb frequency

Measures the number of syntactically central actions in a message. Action verbs were dentified using spaCy by selecting tokens with part-of-speech tag VERB, and excluding those with auxiliary or copular dependency roles. This excludes verbs serving grammatical support functions (e.g., is, have), focusing on core predicates expressing meaningful actions.

Temporal reference

Indicates whether the message is temporally anchored. This binary feature was assigned a value of 1 if spaCy recognized a DATE or TIME entity, or if the text included predefined temporal keywords (e.g., today, tomorrow, routine). Messages without such markers were assigned a value of 0.

Type–token ratio

Measures lexical diversity, calculated as the ratio of unique lowercase alphabetic tokens to the total number of such tokens in the message. Tokenization was performed using spaCy.

Exclamation count

Captures emphatic punctuation usage, quantified by the number of exclamation marks in each message.

Flesch Reading-Ease Xscore

Estimates message readability using the Textstat 0.7.3 library, where higher scores correspond to simpler, more accessible text80.

For descriptive comparisons across generation methods, each of the seven features was averaged across the 20 messages produced by a given technique.

Statistical comparison across methods

To complement the descriptive comparisons, we conducted a statistical test to assess whether feature distributions differed significantly across generation methods. For each feature, we first tested the normality of per-message values within each method using the Shapiro–Wilk test81. When distributions met the assumption of normality, we applied one-way ANOVA; otherwise, we used the non-parametric Kruskal–Wallis test. All tests examined the main effect of the generation method on individual feature distributions. All statistical analyses were conducted in Python using the SciPy library (version 1.13)82.

Data availability

The fine-tuning corpus used to train MHC-Coach included 3,268 stage-specific motivational messages collected in a separate 2017 study33. These messages were authored by human experts and crowd contributors and are not publicly available. Researchers interested in accessing this dataset may contact the author of the original study. Additional fine-tuning content included ten research papers1,10,46,47,48,49,50,51,53,54 focused on behavior change and exercise. Seven were open-access publications, and the remaining three were accessed through our institution’s academic subscription. The full texts were used solely for academic research under fair use provisions and are not redistributed or publicly released.The health coaching data that was collected and analyzed during the survey study is available on GitHub (https://github.com/SriyaM/MHC-Coach). The dataset has been anonymized to ensure participant privacy, and no personally identifiable information is included. The repository includes code for data processing and customization to enable further analyses. The set of messages and participant responses from the large-scale survey study are available within the GitHub repository in the preference_survey folder. The set of messages generated across all five techniques (MHC-Coach, base LLaMA, few-shot prompting, RAG, and expert) and used for the linguistic and stylistic analysis is available in the linguistic_comparison folder.

Code availability

The code used to generate RAG messages and to conduct the quantitative linguistic analysis comparing all five generation techniques (MHC-Coach, base LLaMA, few-shot prompting, RAG, and human expert) is available on GitHub (https://github.com/SriyaM/MHC-Coach) in the linguistic_comparison folder. In addition, the fine-tuned MHC-Coach model, including weights and an inference example, is openly hosted on the Hugging Face Hub (https://huggingface.co/SriyaM/MHC-Coach). Both the data and code are provided under the MIT License, allowing users to modify, reuse, and redistribute the materials in accordance with the license terms. All software versions, key hyperparameters, and generation settings used in the study are detailed in the Methods.

References

Gibbs, B. Physical activity as a critical component of first-line treatment for elevated blood pressure or cholesterol: who, what, and how?: a scientific statement from the American Heart Association. Hypertension 78, e26–e37 (2021).

Booth, F. W., Roberts, C. K., Thyfault, J. P., Ruegsegger, G. N. & Toedebusch, R. G. Role of inactivity in chronic diseases: Evolutionary insight and pathophysiological mechanisms. Physiol. Rev. 97, 1351–1402 (2017).

Nazik, E. A. & Kramarow, C. Physical activity among adults aged 18 and over: United States, 2020. Centers for Disease Control and Prevention (2020).

Hall, K. S. et al. Systematic review of the prospective association of daily step counts with risk of mortality, cardiovascular disease, and dysglycemia. Int. J. Behav. Nutr. Phys. Act. 17, 78 (2020).

Tudor-Locke, C. et al. How many steps/day are enough? For older adults and special populations. Int. J. Behav. Nutr. Phys. Act. 8, 80 (2011).

Monteiro-Guerra, F., Rivera-Romero, O., Fernandez-Luque, L. & Caulfield, B. Personalization in real-time physical activity coaching using mobile applications: A scoping review. IEEE J. Biomed. Health Inform. 24, 1738–1751 (2020).

Bäccman, C., Bergkvist, L. & Wästlund, E. Personalized coaching via texting for behavior change to understand a healthy lifestyle intervention in a naturalistic setting: Mixed methods study. JMIR https://doi.org/10.2196/preprints.47312 (2023).

Brink, S. M., Wortelboer, H. M., Emmelot, C. H., Visscher, T. L. S. & van Wietmarschen, H. A. Developing a personalized integrative obesity-coaching program: A systems health perspective. Int. J. Environ. Res. Public Health 19, 882 (2022).

Sucala, M., Ezeanochie, N. P., Cole-Lewis, H. & Turgiss, J. An iterative, interdisciplinary, collaborative framework for developing and evaluating digital behavior change interventions. Transl. Behav. Med. 10, 1538–1548 (2020).

Prochaska, J. O. & Velicer, W. F. The transtheoretical model of health behavior change. Am. J. Health Promot. 12, 38–48 (1997).

Hashemzadeh, M., Rahimi, A., Zare-Farashbandi, F., Alavi-Naeini, A. M. & Daei, A. Transtheoretical model of health behavioral change: A systematic review. Iran. J. Nurs. Midwifery Res. 24, 83–90 (2019).

Liu, K. T., Kueh, Y. C., Arifin, W. N., Kim, Y. & Kuan, G. Application of the transtheoretical model on behavioral changes, and amount of physical activity among university’s students. Front. Psychol. 9, 2402 (2018).

Pirzadeh, A., Mostafavi, F., Ghofranipour, F. & Feizi, A. Applying transtheoretical model to promote physical activities among women. Iran. J. Psychiatry Behav. Sci. 9, e1580 (2015).

Jiménez-Zazo, F., Romero-Blanco, C., Castro-Lemus, N., Dorado-Suárez, A. & Aznar, S. Transtheoretical Model for physical activity in older adults: Systematic review. Int. J. Environ. Res. Pub. Health 17, 9262 (2020).

Longhini, J. et al. Wearable devices to improve physical activity and reduce sedentary behaviour: An umbrella review. Sports Med. Open 10, 9 (2024).

Harries, T. et al. Effectiveness of a smartphone app in increasing physical activity amongst male adults: a randomised controlled trial. BMC Pub. Health 16, 925 (2016).

Laranjo, L. et al. Do smartphone applications and activity trackers increase physical activity in adults? Systematic review, meta-analysis and metaregression. Br. J. Sports Med. 55, 422–432 (2021).

Mohd Saad, N., Mohamad, M. & Mat Ruzlin, A. N. Web-based intervention to act for weight loss in adults with type 2 diabetes with obesity (Chance2Act): Protocol for a nonrandomized controlled trial. JMIR Res. Protoc. 13, e48313 (2024).

Takahashi, A. et al. Effectiveness of a lifestyle improvement support app in combination with a wearable device in Japanese people with type 2 diabetes mellitus: STEP-DM study. Diab. Ther. 15, 1187–1199 (2024).

Jörke, M. et al. GPTCoach: Towards LLM-based physical activity coaching. in Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems 1–46 (ACM, New York, NY, USA, 2025). https://doi.org/10.1145/3706598.3713819.

Hegde, N. et al. Infusing behavior science into large language models for activity coaching. PLOS Digit. Health 3, e0000431 (2024).

Ong, Q. C. et al. Advancing health coaching: A comparative study of large language model and health coaches. Artif. Intell. Med. 157, 103004 (2024).

Nahum-Shani, I. et al. Just-in-time adaptive interventions (JITAIs) in mobile health: Key components and design principles for ongoing health behavior support. Ann. Behav. Med. 52, 446–462 (2018).

van Dantzig, S., Bulut, M., Krans, M., van der Lans, A. & de Ruyter, B. Enhancing physical activity through context-aware coaching. in Proceedings of the 12th EAI International Conference on Pervasive Computing Technologies for Healthcare 187–190 (ACM, New York, NY, USA, 2018). https://doi.org/10.1145/3240925.3240928.

Dao, K. P. et al. Smartphone-delivered ecological momentary interventions based on ecological momentary assessments to promote health behaviors: Systematic review and adapted checklist for reporting ecological momentary assessment and intervention studies. JMIR MHealth UHealth 9, e22890 (2021).

Johnson, A. et al. Mobile health study incorporating novel fitness test. J. Cardiovasc. Transl. Res. 16, 569–580 (2023).

Shcherbina, A. et al. The effect of digital physical activity interventions on daily step count: a randomised controlled crossover substudy of the MyHeart Counts Cardiovascular Health Study. Lancet Digit. Health 1, e344–e352 (2019).

Kim, D. S. et al. Unlocking insights: Clinical associations from the largest 6-minute walk test collection via the my Heart Counts Cardiovascular Health Study, a fully digital smartphone platform. Prog. Cardiovasc. Dis. https://doi.org/10.1016/j.pcad.2025.01.010 (2024).

Hershman, S. G. et al. Physical activity, sleep and cardiovascular health data for 50,000 individuals from the MyHeart Counts Study. Sci. Data 6, 24 (2019).

McConnell, M. V. et al. Feasibility of obtaining measures of lifestyle from a smartphone app: The MyHeart Counts cardiovascular health study. JAMA Cardiol. 2, 67–76 (2017).

Javed, A. et al. Personalized digital behaviour interventions increase short-term physical activity: a randomized control crossover trial substudy of the MyHeart Counts Cardiovascular Health Study. Eur. Heart J. Digit. Health 4, 411–419 (2023).

Grattafiori, A. et al. The Llama 3 herd of models. arXiv [cs.AI] (2024).

De Vries, R. A. J., Zaga, C., Bayer, F., Drossaert, C. H. C., Truong, K. P. & Evers, V. Experts get me started, peers keep me going: Comparing crowd- versus expert-designed motivational text messages for exercise behavior change. Proc. 11th EAI Int. Conf. Pervasive Computing Technologies for Healthcare, 155–162. https://doi.org/10.1145/3154862.3154875 (2017).

Meywirth, S., Janson, A. & Söllner, M. Personalized coaching for lifestyle behavior change through large language models: A qualitative study. Proc. Hawaii Int. Conf. System Sciences (HICSS), 3379–3388 (2025).

Lim, S., Schmälzle, R. & Bente, G. Artificial social influence: Rapport-building, LLM-based embodied conversational agents for health coaching. Proc. CONNECT Workshop, 24th ACM Int. Conf. on Intelligent Virtual Agents (IVA 2024).

Huang, Z. et al. Comparing large language model AI and human-generated coaching messages for behavioral weight loss. J. Technol. Behav. Sci. https://doi.org/10.1007/s41347-025-00491-5 (2025).

Løvås, S.E. AI chatbots in health: Implementing an LLM-based solution to promote physical activity. Master’s thesis, UiT The Arctic (University of Norway 2024).

Song, H. et al. Investigating the relationship between physical activity and tailored behavior change messaging: Connecting contextual bandit with large language models. arXiv preprint arXiv:2506.07275 [cs.LG].

Mitchell, E. G. et al. T2 coach: A qualitative study of an automated health coach for diabetes self-management. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems 1–17 (ACM, New York, NY, USA, 2025). https://doi.org/10.1145/3706598.3714404.

Haag, D. et al. The last JITAI? Exploring large language models for issuing just-in-time adaptive interventions: Fostering physical activity in a prospective cardiac rehabilitation setting. in Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems 1–18 (ACM, New York, NY, USA, 2025). https://doi.org/10.1145/3706598.3713307.

Akrimi, S., Schwensfeier, L., Düking, P., Kreutz, T. & Brinkmann, C. ChatGPT-4o-generated exercise plans for patients with type 2 diabetes mellitus-assessment of their safety and other quality criteria by coaching experts. Sports 13, 92 (2025).

Moore, R., Al-Tamimi, A.-K. & Freeman, E. Investigating the potential of a conversational agent (Phyllis) to support adolescent health and overcome barriers to physical activity: Co-design study. JMIR Form. Res. 8, e51571 (2024).

Fang, C. M. et al. PhysioLLM: Supporting personalized health insights with wearables and large language models. arXiv [cs.HC] (2024).

Lewis, P. et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. arXiv [cs.CL] (2020).

Brown, T. B. et al. Language Models are Few-Shot Learners. arXiv [cs.CL] (2020).

Gonzalez-Jaramillo, N. et al. Systematic review of physical activity trajectories and mortality in patients with coronary artery disease. J. Am. Coll. Cardiol. 79, 1690–1700 (2022).

Ding, D. et al. Towards better evidence-informed global action: lessons learnt from the Lancet series and recent developments in physical activity and public health. Br. J. Sports Med. 54, 462–468 (2020).

Kraus, W. E. et al. Daily step counts for measuring physical activity exposure and its relation to health. Med. Sci. Sports Exerc. 51, 1206–1212 (2019).

Hawes, A. M. et al. Disentangling race, poverty, and place in disparities in physical activity. Int. J. Environ. Res. Public Health 16, 1193 (2019).

Rippe, J. M. Physical activity and health: Key findings from the physical activity guidelines for Americans 2018. in Lifestyle Medicine, Fourth Edition 200–208 (CRC Press, Boca Raton, 2024). https://doi.org/10.1201/9781003227793-20.

Althoff, T. et al. Large-scale physical activity data reveal worldwide activity inequality. Nature 547, 336–339 (2017).

Carlson, S. A., Fulton, J. E., Pratt, M., Yang, Z. & Adams, E. K. Inadequate physical activity and health care expenditures in the United States. Prog. Cardiovasc. Dis. 57, 315–323 (2015).

Myers, J. et al. Physical activity and cardiorespiratory fitness as major markers of cardiovascular risk: their independent and interwoven importance to health status. Prog. Cardiovasc. Dis. 57, 306–314 (2015).

Velicer, W. F., Prochaska, J. O., Fava, J. L., Norman, G. J. & Redding, C. A. Detailed overview of the transtheoretical model. Homeostasis 38, 216–233 (1998).

Hawkins, R. P., Kreuter, M., Resnicow, K., Fishbein, M. & Dijkstra, A. Understanding tailoring in communicating about health. Health Educ. Res. 23, 454–466 (2008).

Ferrer, R. A. & Mendes, W. B. Emotion, health decision making, and health behaviour. Psychol. Health 33, 1–16 (2018).

Kreuter, M. W. & Farrell, D. W. Tailoring Health Messages. (Routledge, London, England, 2017).

Mohamadlo, A. et al. Analysis and review of the literatures in the field of health literacy. JMIS 6, 58–72 (2020).

Kim, D. S., Eltahir, A. A., Ngo, S. & Rodriguez, F. Bridging the gap: How accounting for social determinants of health can improve digital health equity in cardiovascular medicine. Curr. Atheroscler. Rep. 27, 9 (2024).

Choi, Y. J., Lee, M. & Lee, S. Toward a multilingual conversational agent: Challenges and expectations of code-mixing multilingual users. in Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems vol. 85 1–17 (ACM, New York, NY, USA, 2023).

Chatterjee, A., Pahari, N., Prinz, A. & Riegler, M. AI and semantic ontology for personalized activity eCoaching in healthy lifestyle recommendations: a meta-heuristic approach. BMC Med. Inform. Decis. Mak. 23, 278 (2023).

Mandyam, A., Jörke, M., Denton, W., Engelhardt, B. E. & Brunskill, E. Adaptive interventions with user-defined goals for health behavior change. arXiv [cs.LG] (2023).

Park, J. S. et al. Generative agents: Interactive simulacra of human behavior. arXiv [cs.HC] (2023).

Wang, W. et al. Augmenting language models with long-term Memory. arXiv [cs.CL] (2023).

Ouyang, L. et al. Training language models to follow instructions with human feedback. arXiv [cs.CL] (2022).

Gaber, F. et al. Evaluating large language model workflows in clinical decision support for triage and referral and diagnosis. NPJ Digit. Med. 8, 263 (2025).

Ting, D. S. W. et al. Development and testing of a novel Large Language Model-based Clinical Decision Support Systems for medication safety in 12 clinical specialties. Res. Sq. https://doi.org/10.21203/rs.3.rs-4023142/v1 (2024).

Huang, L. et al. A survey on hallucination in large language models: Principles, taxonomy, challenges, and open questions. ACM Trans. Inf. Syst. https://doi.org/10.1145/3703155 (2024).

Ahmad, M., Yaramic, I. & Roy, T.D. Creating trustworthy LLMs: Dealing with hallucinations in healthcare AI. https://doi.org/10.20944/preprints202310.1662.v1 (2023).

Wiest, I. C. et al. Privacy-preserving large language models for structured medical information retrieval. NPJ Digit. Med. 7, 257 (2024).

de Vries, R. A. J., Truong, K. P., Kwint, S., Drossaert, C. H. C. & Evers, V. Crowd-designed motivation. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems (ACM, New York, NY, USA, 2016). https://doi.org/10.1145/2858036.2858229.

Sun, J. et al. Dial-insight: Fine-tuning large language models with high-quality domain-specific data preventing capability collapse. arXiv [cs.CL] (2024).

Wei, J. et al. Finetuned language models are zero-shot learners. arXiv [cs.CL] (2021).

Hu, E. J. et al. LoRA: Low-Rank Adaptation of large language models. arXiv [cs.CL] (2021).

Marcus, B. H., Selby, V. C., Niaura, R. S. & Rossi, J. S. Self-efficacy and the stages of exercise behavior change. Res. Q. Exerc. Sport 63, 60–66 (1992).

Cramer, H. Mathematical Methods of Statistics (PMS-9), Volume 9. (Princeton University Press, Princeton, NJ, 1999).

spaCy · Industrial-strength Natural Language Processing in Python. https://spacy.io.

Hutto, C. J. & Gilbert, E. VADER: A Parsimonious Rule-based Model for Sentiment Analysis of Social Media Text. Proc. Int. AAAI Conf. Web Soc. Media 8, 216–225 (2014).

Textstat: :memo: Python Package to Calculate Readability Statistics of a Text Object - Paragraphs, Sentences, Articles. (Github).

Flesch, R. A new readability yardstick. J. Appl. Psychol. 32, 221–233 (1948).

Shapiro, S. S. & Wilk, M. B. An analysis of variance test for normality (complete samples). Biometrika 52, 591–611 (1965).

Virtanen, P. et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020).

Acknowledgements

D.S.K. is supported by the Wu-Tsai Human Performance Alliance as a Clinician-Scientist Fellow, the Stanford Center for Digital Health as a Digital Health Scholar, the Pilot Grant from the Stanford Center for Digital Health, the Robert A. Winn Excellence in Clinical Trials Career Development Award, NIH 1L30HL170306, the American Heart Association Career Development Award (25CDA1436622), and the American Diabetes Association (ADA) Pathway to Stop Diabetes Initiator Award (7-25-INI-11). F.R. was funded by grants from the NIH National Heart, Lung, and Blood Institute (R01HL168188; R01HL167974, R01HL169345), the American Heart Association/Harold Amos Medical Faculty Development Program, and the Doris Duke Foundation (Grant #2022051). E.L. is supported by NIH grants K24AR075060, K12TR004930, and R01AR082109. A.C.K. is supported by NIH grants 5R01AG07149002 and 1R44AG071211, and a seed grant from the Stanford Institute for Human-Centered Artificial Intelligence. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Authors and Affiliations

Contributions

D.S.K. and E.A.A. led the overall study design. S.M. fine-tuned the model, generated messages using five different techniques, and conducted the quantitative comparisons and data analysis from the large-scale survey study. A.J. managed participant recruitment and developed the survey for the large-scale user study. M.O. contributed to the behavioral science framing, including the design of stage classification questions and survey development. M.R.-P. conducted initial message review. P.S., E.L., A.C.K., F.R., A.T., and R.D. provided ongoing consultation on behavioral science, mobile health app requirements, and inclusive digital health research design, which informed the overall approach and interpretation. All authors reviewed and approved the final manuscript.

Corresponding authors

Ethics declarations

Competing interests

D.S.K. reports grant support from Amgen and the Bristol Myers Squibb Foundation (via the Robert A. Winn Excellence in Clinical Trials Career Development Award). F.R. reports equity from Carta Healthcare and HealthPals, and consulting fees from HealthPals, Novartis, Novo Nordisk, Esperion Therapeutics, Movano Health, Kento Health, Inclusive Health, Edwards, Arrowhead Pharmaceuticals, HeartFlow, and iRhythm outside the submitted work. E.A.A. reports advisory board fees from SequenceBio, Foresite Labs, Pacific Biosciences, and Versant Ventures. E.A.A. has ownership interest in Personalis, Deepcell, Svexa, Candela, Parameter Health, Saturnus Bio, outside the submitted work. E.A.A. is a non-executive director of AstraZeneca and Svexa. E.A.A. receives collaborative research support from Illumina, Pacific Biosciences, Oxford Nanopore, Cache, Cellsonics, outside the submitted work. The remainder of the authors report no potential conflicts of interest. S.M., A.J., M.O., N.S., A.T., R.D., C.M.M., A.L., M.R.-P., P.S., E.L., and A.C.K. declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Mantena, S., Johnson, A., Oppezzo, M. et al. Fine-tuning LLMs in behavioral psychology for scalable health coaching. npj Cardiovasc Health 2, 48 (2025). https://doi.org/10.1038/s44325-025-00083-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s44325-025-00083-5