Abstract

Additive manufacturing (AM) provides agile and flexible manufacturing in modern supply chains; however, there are concerns that AM may be vulnerable to inferior materials, poor process control, or counterfeit parts. There is a need for new measurement technologies capable of monitoring AM suppliers and certifying the quality and authenticity of AM parts and materials. This study demonstrates a method to predict the source of AM parts from part photographs using a deep learning model. A total of 9192 parts were produced from 21 printers, with three unique designs and four AM processes. A 2D photo scanner captures high-resolution images of each part; these images train a deep-learning model to predict the specific printer that produced each part. A novel analytical framework is developed for analyzing the high-resolution image data. This framework achieves >98% prediction accuracy in identifying the origin of 1050 parts. The model can authenticate the source of parts without cooperation from the manufacturer, potentially enabling applications in part authentication and detecting changes in materials or production processes. The research demonstrates the potential for high-resolution image data to be used for deep learning in manufacturing and shows that image-based source identification can monitor the quality and authenticity of AM parts.


Introduction

Identifying the source of a manufactured part is essential for a variety of important manufacturing tasks such as process control and optimization1, identifying the root cause of defects and other problems2, evaluating and managing contract manufacturers in a supply chain3, and ensuring that a manufactured part is authentic4. Existing solutions for manufacturing source identification typically use part labels or trackers, such as paper labels affixed to a part or container of parts, radio frequency identification tags5, or markings fabricated directly into the material of a part, for example, using laser engraving6. Unfortunately, these solutions are easily disrupted because labels can be copied, removed, or disassociated from the parts. Tracking and authenticating manufactured components is extremely challenging because modern manufacturing supply chains use networks of contract manufacturers to perform manufacturing operations such as part fabrication, post-processing, and assembly7. The supplier network may consist of four or more layers before physical goods arrive at the original equipment manufacturer (OEM). These extended supply chains are opaque: in most cases the OEM does not know who supplies its suppliers. Distributed supply chains pose risks for certain types of manufacturing. Additive manufacturing (AM) may be particularly vulnerable to supply chain risks, owing to the potential for AM to support distributed manufacturing, and the deep links between feedstock quality, process controls, and resulting part shape and composition. Thus, there is an urgent need to develop technologies that are capable of managing AM suppliers and validating AM materials, processes, and parts8,9,10,11. To address these challenges, this paper proposes a new concept for source identification of additively manufactured components using high-resolution photographs and supervised deep-learning models.

Several approaches have been proposed for tracking AM parts, using either visible part labels or covert tags. While the most common practice in the industry is to use printed labels that accompany the part along with detailed logs and an auditable paper trail, labels integrated with the part design are becoming more common12. Such integrated labels may be applied to the part through laser engraving or designed directly into the computer-aided design (CAD) model such that the label is monolithically integrated with the overall shape of the part. There is a growing body of published research exploring concepts for covert labels, such as intrinsic tags13,14 or physically unclonable functions (PUFs)15, for label-free part tracking and part authentication. The digital 3D model of a part may include hidden watermarks embedded by a printer13. While intrinsic part tags are promising, this approach requires the cooperation of the manufacturer and has inherent vulnerabilities to disruption. A relatively unexplored solution for part authentication and anti-counterfeiting is source identification, which determines part origin from the physical or chemical attributes of the part. The unique part attributes that link a part to its origin may be referred to as a manufacturing fingerprint.

Source identification has been studied for printed documents for the purposes of document authentication and anti-counterfeiting. Methods for source identification of printed documents typically identify patterns or artifacts inherent to the document printing process16. For example, text printed by a given document printer may exhibit unique geometric distortions17 and local texture patterns18. The 3D profile of printed features can also be used to identify different cartridges from the same laser printer19. Such techniques have been extended to images collected from a smartphone, where lettering differences and printer-specific noise can predict the source printer using neural networks20. Similar methods can detect handwriting forgery21. Across these and other examples in the literature, it has been clearly shown that physical documents have unique characteristics that can be detected in images and that can be used to identify the specific source of printed material for forensic applications.

While there is a large body of work on source identification for physical documents, there has been significantly less published research focusing on source identification for AM, despite common characteristics between document printing and AM. A few publications have focused on source identification for fused deposition modeling (FDM), which produces a clearly identifiable texture on the part surface22,23,24. These studies, however, are limited to small numbers of printers and parts and focus exclusively on consumer-grade desktop printers25,26. Meanwhile, AM is growing into an industrially relevant manufacturing technology, with many examples of scalable manufacturing of high-quality end-use parts in real-world applications27,28,29. It has not yet been shown whether source identification is possible for AM processes and equipment used for scalable production and end-use applications. It is also unknown whether source identification can be applied to diverse AM materials and part designs or whether it is possible to identify more granular information, such as the location at which a part was printed in a build tray.

A key issue for manufacturing source identification is the method for determining the manufacturing fingerprint. Previous publications on AM source identification mostly rely on a human expert to determine specific textural features analyzed using computational geometry23 or even manual analysis24. A few studies have shown how deep learning can analyze images of FDM layer texture to predict printer of origin26 or process parameters25. Deep learning methods are promising because they may identify patterns that are not detectable by humans. Notably, FDM parts have obvious patterns in their layer textures that can be distinguished by humans. However, it has not yet been demonstrated whether deep learning can be used for AM source identification when the part textural features are not detectable by humans. Thus, there is a need to understand whether the source of such parts can be identified and, if so, whether deep learning has an advantage over other methods for AM source identification.

This paper reports source identification of AM parts using deep learning, performing a task that is not possible for humans. To demonstrate generalizability and scalability, we produced 9192 parts from 21 sources, with three unique designs and six materials. The study focuses on scalable AM processes used in industry for production and end-use applications, with four types of AM equipment and seven different models of printers. We develop a novel analytical framework based on deep learning that achieves >98% prediction accuracy in source identification. The same model can identify the manufacturing process, material, or location in the machine at which the part was made.

Results

Overview

Figure 1 shows the workflow for fingerprinting the manufacturing attributes of an AM part using optical images. First, parts are produced in a factory with selected manufacturing attributes, including the specific machine, type of additive process, and material. The manufactured parts are then imaged on a high-resolution document scanner that can quickly capture images with a 5.3 µm pixel size. Deep learning models are trained to learn the abstract relationship between part image and manufacturing attributes using the scanned image as the model input and the manufacturing attributes as the outputs. The trained model can analyze the optical fingerprint to accurately predict the manufacturing attributes of previously unseen parts.

Design of experiments and manufacturing

Figure 2 shows a summary of the parts manufactured for this study. A large and diverse set of parts was designed and additively manufactured on 21 unique machines across four different manufacturing processes and materials. Figure 2a shows three different part designs: the connector, the plug, and the lattice. The designs are inspired by real-world mechanical components used in industrial applications, with diverse geometric features of different sizes. Each part is uniquely serialized for tracking purposes. Each design fits within a volume of 27 mm × 19 mm × 8 mm, and the parts can be arranged into grids on the printer build trays. The material color was black across all the processes to maintain a consistent appearance during imaging. Figure 2b summarizes the dataset, which consists of four additive processes: digital light synthesis30 (DLS), Multi Jet Fusion31 (MJF), stereolithography32 (SLA), and FDM33. The DLS parts were manufactured in RPU 70 material on either the Carbon M2 or the large-format Carbon L2. The Carbon M2 parts were printed at six different contract manufacturers, described as Suppliers 1 through 6. The MJF parts were manufactured in PA12 on the HP Jet Fusion 4200 or 5200. PA12 parts come out of the printer a light grey color and were dyed black in a heated bath by the manufacturer. SLA parts were manufactured in Formlabs Black material on Formlabs 3B+ printers. FDM parts were manufactured in ABS on the Stratasys Fortus 450mc or the large-format Stratasys Fortus 900mc. The parts were produced by six commercial contract manufacturers using industrial processes and quality systems. Each build consisted of a uniform grid of parts sized to the available build area: DLS printers produced 36 parts per build, MJF 108, SLA 24, and FDM 176. Each build contains parts of a single design.

Part imaging

Parts were scanned on a high-resolution flatbed scanner (Epson Perfection V39) with 4800-dpi resolution resulting in 5.3 μm pixels. Each part was scanned twice: once on the large face and once on the side with the serial number. Exposure and color settings were the same for all scans. Parts were scanned in batches of 21 to increase data collection throughput, and the part location on the scanner was randomized.
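As a quick check of the imaging numbers, the sketch below converts the 4800 dpi scanner setting to a pixel size and estimates the pixel dimensions of a part image. It uses the 27 mm × 19 mm design envelope from the previous section as an upper bound on the scanned face, which is an assumption (the actual scanned faces are smaller); the function names are illustrative only.

```python
MM_PER_INCH = 25.4


def pixel_size_um(dpi: float) -> float:
    """Edge length of one scanner pixel, in micrometres."""
    return MM_PER_INCH * 1000.0 / dpi


def pixels_across(length_mm: float, dpi: float) -> int:
    """Number of pixels spanning a feature of the given length."""
    return round(length_mm / MM_PER_INCH * dpi)


if __name__ == "__main__":
    dpi = 4800
    # Upper-bound image size, assuming the 27 mm x 19 mm design envelope is scanned.
    w_px, h_px = pixels_across(27, dpi), pixels_across(19, dpi)
    print(f"pixel size: {pixel_size_um(dpi):.2f} um")  # 5.29 um, i.e. ~5.3 um
    print(f"envelope image: {w_px} x {h_px} px ({w_px * h_px / 1e6:.1f} MP)")
    print(f"ratio to one 448 x 448 ROI: {w_px * h_px / 448**2:.0f}x")
```

The last ratio (roughly 90 ROI-sized tiles per envelope-sized image) is one way to see why full scans are too large to feed to a standard image model at native resolution.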

Figure 3 shows scanned images of the connector design for the four AM processes and two machines for each process. There are clear differences between the different AM processes, owing to the different materials, process physics, and machine architectures. FDM shows patterns associated with the nozzle and filament; MJF shows roughness from the powder; DLS and SLA show surface textures from the pixelated light source. The observed surface finish and texture patterns are normal and expected for these processes and materials30,34,35,36. The different parts show small but noticeable differences in geometric accuracy, especially visible in the accuracy of sharp interior corners. The images show some dust particles as well as image artifacts arising from the scanner limitations. The different processes can be easily distinguished from these images; however, it is not possible to distinguish between different machines by eye.

Fingerprint model architecture

Figure 4 shows the machine learning framework for fingerprinting. First, image preprocessing downscales the high-resolution images to make the input smaller for the image models. The degree of image downscaling is a tunable parameter: more downscaling presents the model with a larger observed area at the expense of lower resolution. The images are normalized to achieve grayscale pixel values with zero mean and a standard deviation of one. The parts are then randomly assigned to training and testing partitions without replacement, so that no part appears in both partitions and there is no data leakage. During training, the model randomly samples a 448 × 448 pixel region of interest (ROI) from each part image, applying an average pixel value threshold to ensure that the ROI includes mostly the part and not the background. The input size of 448 × 448 pixels is twice the edge length of the 224 × 224 pixel inputs commonly used with the ImageNet37 data set. Higher input resolution improves accuracy in complex ML tasks38. The ROI serves as the model’s training input, with a manufacturing attribute, such as the process, machine, or material of the part, as the target label for the image. This process repeats for 200 training iterations, or epochs, with the model training on each training part once per epoch. Because the ROI is randomly resampled for each training image in each epoch, the model typically sees a different region of each part in every epoch.

Part scans are preprocessed using downscaling and pixel normalization. Data is split randomly into testing and training sets. During training, the model learns the relationship between a random region of interest (ROI) sampled from part images and the manufacturing attributes of the part. During testing, the model predicts manufacturing attributes by evaluating multiple ROIs from the same part image and applying a voting procedure.
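The preprocessing and ROI sampling steps can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: block averaging stands in for the interpolation-based downscaling, normalization is applied per image, and the background test assumes the black part is darker than the scanner background, so an ROI is accepted when its mean normalized value is at most a chosen threshold. All function names and the threshold value are hypothetical.

```python
import numpy as np


def downscale(image: np.ndarray, factor: int) -> np.ndarray:
    """Reduce resolution by averaging non-overlapping factor x factor blocks
    (a stand-in for interpolation-based downscaling)."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    h, w = image.shape
    h2, w2 = h // factor, w // factor
    blocks = image[: h2 * factor, : w2 * factor].astype(np.float64)
    return blocks.reshape(h2, factor, w2, factor).mean(axis=(1, 3))


def normalize(image: np.ndarray) -> np.ndarray:
    """Shift and scale grayscale values to zero mean and unit standard deviation."""
    std = image.std()
    return (image - image.mean()) / (std if std > 0 else 1.0)


def sample_roi(image, size, max_mean, rng, max_tries=1000):
    """Draw a random size x size ROI whose mean value is at most max_mean.

    Assumes the part is darker than the background, so a low mean indicates
    an ROI that covers mostly part rather than background.
    """
    h, w = image.shape
    if size > h or size > w:
        raise ValueError("ROI larger than image")
    for _ in range(max_tries):
        r = int(rng.integers(0, h - size + 1))
        c = int(rng.integers(0, w - size + 1))
        roi = image[r : r + size, c : c + size]
        if roi.mean() <= max_mean:
            return roi
    raise RuntimeError("no ROI met the threshold; relax max_mean")


# Synthetic scan: dark "part" on the left half, light background on the right.
img = np.full((1000, 1000), 0.9)
img[:, :500] = 0.1
x = normalize(downscale(img, 2))
roi = sample_roi(x, 100, max_mean=-0.5, rng=np.random.default_rng(0))
```

In the synthetic example the part pixels normalize to -1 and the background to +1, so a threshold of -0.5 accepts only ROIs that are at least 75% part.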

After training, the model predicts the manufacturing attributes of the testing set. For each testing part, multiple ROIs are sampled from the testing image and used as input to the model in a single testing batch. For each ROI, the model outputs a softmax vector, a probability distribution over the possible classes of the manufacturing attribute. The probabilities for each ROI are aggregated in a voting scheme, resulting in a prediction for each testing part.
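The voting step can be sketched as below. The text does not specify how ROI probabilities are aggregated, so this example assumes two common options: soft voting, which averages the softmax vectors across ROIs, and hard (majority) voting on each ROI's top class. In practice the logits would come from the trained network; here they are supplied directly, and the function names are hypothetical.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def vote(roi_logits: np.ndarray, mode: str = "soft") -> int:
    """Combine per-ROI logits (n_rois x n_classes) into one class for the part."""
    probs = softmax(roi_logits)
    if mode == "soft":
        # Average the probability vectors, then take the most probable class.
        return int(np.argmax(probs.mean(axis=0)))
    if mode == "hard":
        # Each ROI casts one vote for its top class; ties go to the lowest index.
        counts = np.bincount(probs.argmax(axis=1), minlength=probs.shape[1])
        return int(np.argmax(counts))
    raise ValueError(f"unknown mode: {mode}")


# One confident ROI for class 0 versus two weakly confident ROIs for class 1.
logits = np.array([[10.0, 0.0, 0.0],
                   [0.0, 0.1, 0.0],
                   [0.0, 0.1, 0.0]])
print(vote(logits, "soft"), vote(logits, "hard"))
```

The example shows why the choice matters: a single confident ROI can outweigh two uncertain ones under soft voting but not under majority voting.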

Fingerprint model results

Figure 5 shows the fingerprint model results for a testing set of 21 machines. The confusion matrix shows the predicted and true printer for all parts. The diagonal of the confusion matrix shows accurate predictions, with incorrect predictions highlighted in gray. To fingerprint 21 machines, 2100 parts were used for training and 1050 for testing, with each machine contributing 100 training parts and 50 testing parts. The parts were randomly selected, and the set of testing parts was not seen by the model during training. Of the 1050 testing parts, only 16 are misclassified, and the errors are limited to confusion between different machines of the same process. The model applies an EfficientNetV239 architecture and achieved an accuracy of 98.5%; however, comparable accuracies greater than 95% were also achieved with various transformer- and convolution-based architectures. Of the 21 machines, 12 were classified with no errors, five with one error, one with two errors, and three with three errors. This iteration of the model did not use downscaling: the ROIs were 448 × 448 pixels, corresponding to 2.4 × 2.4 mm patches at a 5.3 μm pixel size. The testing vote used 16 random ROIs sampled from each part image. The results of Fig. 5 are for one model; however, we consistently achieve similarly high accuracy when shuffling the part images into different training and testing splits.
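The per-machine split and the reported accuracy can be outlined as follows. The split assigns whole parts, so both scans of a part stay in the same partition, and draws 100 training and 50 testing parts per machine. The confusion matrix is synthetic: it is built only from the per-machine error counts stated above, with each machine's errors placed arbitrarily in a neighboring column. Function names are illustrative.

```python
import numpy as np


def stratified_part_split(machine_of_part, n_train, n_test, seed=0):
    """Split part IDs so that each machine contributes n_train training and
    n_test testing parts; all images of a part share its partition."""
    rng = np.random.default_rng(seed)
    parts_by_machine = {}
    for part, machine in machine_of_part.items():
        parts_by_machine.setdefault(machine, []).append(part)
    train, test = [], []
    for machine in sorted(parts_by_machine):
        parts = sorted(parts_by_machine[machine])
        if len(parts) < n_train + n_test:
            raise ValueError(f"machine {machine} has too few parts")
        chosen = rng.permutation(parts)[: n_train + n_test].tolist()
        train.extend(chosen[:n_train])
        test.extend(chosen[n_train:])
    return train, test


def accuracy(confusion) -> float:
    """Fraction of parts on the diagonal of a confusion matrix."""
    confusion = np.asarray(confusion)
    return float(np.trace(confusion) / confusion.sum())


# Synthetic confusion matrix from the stated error counts (error placement is arbitrary).
errors = [0] * 12 + [1] * 5 + [2] * 1 + [3] * 3
cm = np.zeros((21, 21), dtype=int)
for i, e in enumerate(errors):
    cm[i, i] = 50 - e
    cm[i, (i + 1) % 21] += e
print(f"{accuracy(cm):.3f}")  # 0.985
```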

For some applications, the fingerprinting technology may be most useful if focusing on only one manufacturing process. Table 1 summarizes the fingerprinting results for DLS parts only. The model perfectly predicts material composition from three materials: rigid polyurethane (RPU), additive epoxy (EPX), and urethane methacrylate (UMA). All the parts are black; however, there are small differences in color and surface texture that are identifiable by a human expert. The DLS parts were made by six different suppliers, and the model predicts the supplier with 98.7% accuracy. The model also detects the specific location in the printer at which each part was made. The DLS parts were arranged in a uniform grid on the build tray in one of 36 different locations. The model predicts the part location on the build tray with 82.1% accuracy. Most misclassified positions occurred in adjacent positions on the build tray, such that the model predicts location or adjacent location with 98.1% accuracy. The parts were spaced with a center-to-center distance of ~5 cm; thus, the model learns the position of the parts on the build tray with an accuracy of ~5 cm. The model is also capable of determining which parts were made in the same build. Here, “build” refers to a set of parts made at the same time in a single machine, material, and build tray. Across the DLS parts, there were 147 unique builds. Using the same architecture as described above, a new model was trained to identify parts made together in the same build. The model achieves 86.8% accuracy in predicting the correct build of origin. Thus, even when considering a single manufacturing process, the fingerprint model demonstrates the ability to identify detailed manufacturing attributes without the need for extensive part labelling and tracking. A further description of the material, build location, and build fingerprinting can be found in the Supplementary Information.
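The "location or adjacent location" metric can be made concrete with a short sketch. It assumes, hypothetically, that the 36 DLS positions form a 6 × 6 grid indexed row by row and that "adjacent" includes diagonal neighbors (a Chebyshev distance of one grid step); the text states neither convention. With ~5 cm spacing, one grid step corresponds to roughly 5 cm on the build tray.

```python
def location_accuracy(true_idx, pred_idx, n_cols=6):
    """Return (exact, within-one-step) accuracy for grid-position predictions.

    Positions are integer indices laid out row by row on an n_cols-wide grid
    (a hypothetical layout); the step distance is the Chebyshev distance, so
    diagonal neighbors count as adjacent.
    """
    if len(true_idx) != len(pred_idx) or len(true_idx) == 0:
        raise ValueError("need equal-length, non-empty index lists")
    exact = within_one = 0
    for t, p in zip(true_idx, pred_idx):
        (tr, tc), (pr, pc) = divmod(t, n_cols), divmod(p, n_cols)
        steps = max(abs(tr - pr), abs(tc - pc))
        exact += steps == 0
        within_one += steps <= 1
    n = len(true_idx)
    return exact / n, within_one / n


# Position 7 is (row 1, col 1); position 8 is its right-hand neighbor.
print(location_accuracy([0, 7, 35], [0, 8, 0]))
```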

Image resolution and sampled area

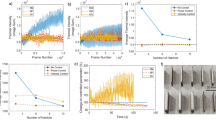

We investigated how the image resolution and sampled area affect the prediction accuracy. Figure 6a shows the relationship between image size, sampled area, and resolution. The image is downscaled by up to a factor of 24 by using pixel interpolation to transfer information to a lower resolution. The ROI sets the size of the sampled area by selecting a fixed number of pixels, and bilinear upsampling then increases the image size to 448 × 448 pixels to ensure compatibility with the model architecture. Figure 6b shows model accuracy over a range of resolutions and sampled areas. To investigate sample size, models with an EfficientNetV2 architecture were trained for each process using a fixed sample size to identify three printers. The sample size must be larger than ~0.1 mm for the model to outperform random guessing. As the sample size increases, model accuracy sharply improves as critical features become visible with adequate resolution. However, when the sample size becomes too large, covering almost the entire part, accuracy decreases because the resolution is insufficient to capture the critical feature details needed for fingerprinting. DLS parts are accurately identified with sample sizes as small as ~200 µm. The other processes require larger sample sizes to capture their unique features: MJF and SLA require a sample size of 1 mm to exceed 90% accuracy, and FDM parts require a sample size of 3 mm. At the largest sample sizes, model performance decreases for DLS, MJF, and SLA as critical identification features are lost due to inadequate resolution; however, FDM parts, with their large deposition features, maintain high accuracy.

a The sampling framework selects an ROI from the part image, which is defined by the sample size and the pixel size. b Model accuracy as a function of the sample size for the four different processes and three machines per process. Random guessing results in an accuracy of 33.3% shown by the dashed line.
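The relationship between downscaling factor, ROI size in pixels, pixel size, and sample size is simple arithmetic, and the upsampling step can be written as a separable bilinear interpolation. The sketch below assumes the 5.3 µm native pixel size and 448-pixel model input from the text. It uses an align-corners bilinear convention, which may differ from the interpolation the authors used, and the function names are hypothetical.

```python
import numpy as np

NATIVE_PIXEL_UM = 5.3  # scanner pixel size reported in the text
MODEL_INPUT = 448      # model input edge length reported in the text


def roi_geometry(downscale_factor, roi_pixels):
    """Effective pixel size (um) and sample size (mm) of a square ROI cut
    from an image downscaled by an integer factor."""
    pixel_um = NATIVE_PIXEL_UM * downscale_factor
    return pixel_um, roi_pixels * pixel_um / 1000.0


def bilinear_resize(img, out_h=MODEL_INPUT, out_w=MODEL_INPUT):
    """Resize a 2D array with bilinear interpolation (align-corners style)."""
    in_h, in_w = img.shape
    ys = np.linspace(0, in_h - 1, out_h)
    xs = np.linspace(0, in_w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None]  # vertical interpolation weights
    wx = (xs - x0)[None, :]  # horizontal interpolation weights
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


# At the maximum downscaling (factor 24), a 100-pixel ROI covers ~12.7 mm
# with ~127 um pixels and is then upsampled to the model input size.
pixel_um, sample_mm = roi_geometry(24, 100)
roi = np.random.default_rng(0).normal(size=(100, 100))
print(pixel_um, sample_mm, bilinear_resize(roi).shape)
```

Note that upsampling restores the input size the model expects but adds no detail: the effective resolution is still set by the downscaled pixel size.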

Finally, we explore how sample size and image resolution affect prediction accuracy and consider how the method might be extended to other types of image sensors. Figure 7 shows the model prediction accuracy for FDM parts as a function of sample size and pixel size. The models are the same as those shown in Fig. 6 and include additional models with inputs covering more of the sample size and pixel size parameter space. Points represent trained models, with point color indicating model accuracy. Example ROIs are shown for one FDM part, showing both a constant pixel size and a constant sample size. As the sample size increases, the model performance also improves across a range of resolutions. At smaller pixel sizes, the models exceed 95% accuracy with smaller sample sizes that still capture critical features. The models trained on images from the document scanner demonstrate accurate fingerprinting with pixel sizes between 5.3 μm and 63.6 μm and sample sizes between 2 mm and 20 mm due to the large manufacturing fingerprint features of the FDM process. The results for the other three processes, which are similar to FDM but with different viable pixel sizes and sample size ranges, can be found in the Supplementary Information.

Points show model accuracy for document scanner images, indicating high accuracy for a variety of pixel and ROI sizes but degraded performance as sample size decreases. Example ROIs labeled in the plot are shown for the same FDM part; the top row shows four images with constant pixel size, and the bottom row shows four images with constant sample size. Contour lines show the imaging capability of various image sensors, indicating the achievable pixel size at a specific sample size based on the device specifications. Lens-based imaging methods have contour lines with a trade-off between pixel size and sample size, while scanners have horizontal lines at the highest possible resolution without any trade-off with sample size. Labels A–G on the plot refer to example images showing ROI size and resolution.

The contour lines in Fig. 7 show the resolution and sample size capabilities of various imaging methods. The pixel size and image size differ between types of image sensors, presenting trade-offs in the selection of an image sensor. For a static lens-based image sensor such as the Zeiss Smart Zoom40, iPhone 15 main camera41, or a DSLR42 with a macro lens, an increase in sample size requires a corresponding increase in pixel size, and hence lower resolution. Scanners such as the Creaform Handyscan43, Keyence IM 700044, and the Alicona Infinite Focus45 maintain a consistent pixel size and resolution while moving over the ROI. Many of the imaging capability contours intersect the region of high-performing document scanner models, suggesting that fingerprinting may generalize to other imaging methods. Achieving a prediction accuracy greater than 90% for FDM parts requires a sample size larger than 2 mm and a pixel size smaller than 64 μm, which is possible with all the imaging devices considered.
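The contour-line reasoning can be expressed as a toy feasibility check. In this sketch, a lens-based sensor that must capture the whole sample in one frame has a pixel size of at least the sample size divided by its pixel count (and never below an optical floor), while a scanning device keeps a fixed pixel size. A configuration is accepted when it falls inside the FDM envelope stated above (sample size over 2 mm, pixel size under 64 µm). The sensor parameters are hypothetical placeholders, not the specifications of the devices named in the text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sensor:
    name: str
    pixels_across: Optional[int]  # None models a scanning device with no field-of-view limit
    min_pixel_um: float           # finest pixel size the device can deliver (hypothetical)

    def pixel_size_um(self, sample_mm: float) -> float:
        """Pixel size achievable when the whole sample must fit in view."""
        if self.pixels_across is None:
            return self.min_pixel_um
        return max(self.min_pixel_um, sample_mm * 1000.0 / self.pixels_across)


def meets_fdm_envelope(sensor: Sensor, sample_mm: float,
                       min_sample_mm: float = 2.0, max_pixel_um: float = 64.0) -> bool:
    """True if the sensor can image this sample size inside the FDM envelope."""
    return sample_mm > min_sample_mm and sensor.pixel_size_um(sample_mm) < max_pixel_um


camera = Sensor("hypothetical 4000-px camera", 4000, 1.0)
scanner = Sensor("hypothetical scanner", None, 5.3)
for s in (1.5, 10.0, 300.0):
    print(s, meets_fdm_envelope(camera, s), meets_fdm_envelope(scanner, s))
```

Sweeping `sample_mm` for each sensor traces the kind of contour shown in Fig. 7: a rising line for the lens-based device and a flat line for the scanner.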

Discussion

This paper introduces a deep learning method that predicts the attributes of an additively manufactured part from a photograph. Across 1050 parts made on 21 printers, the model predicts the machine that produced each part with 98.5% accuracy. Even higher accuracy is achieved for identifying processes or materials. The model is trained on a novel data set of 9192 parts imaged on a document scanner. We developed a novel ML model strategy suitable for fingerprinting that analyzes high-resolution images of part surface texture using image downscaling, random sampling, and a voting scheme. The model generalizes across four AM processes and can identify the machine that made an individual part from images in which humans cannot discern machine-specific differences.

The source identification uses part images that clearly exhibit textural features that result from process physics; however, the model learns only from training images and does not depend upon process parameters, material properties, or information about the part design. The model architecture is adaptable and can learn identifiable features to predict other manufacturing attributes, including the process that produced a part and the material of the part, with 100% accuracy. Additionally, the architecture can predict the specific build that produced a part and the position of the part on the build tray with over 80% accuracy. Previous publications have explored image-based analysis to determine manufacturing attributes using specific geometric measurements of known textural features24,46. Unlike previous publications that require foreknowledge of the surface texture, this paper uses deep learning methods that detect patterns that may or may not be observed by humans and that require no foreknowledge about the part. The present approach allows the fingerprint method to be applied to AM processes that do not have obvious and detectable features. For example, the MJF parts have no machine-specific patterns that are detectable by humans, but nevertheless, the part images can be attributed to their machine of origin with 98.0% accuracy.

The data-driven approach to source identification provides insights into how process physics creates differences at the part level. The model accurately predicts manufacturing attributes over a wide range of image resolutions and ROIs, with different features being learned for each process. For Carbon DLS, a small region of the part just 200 μm × 200 μm is adequate for fingerprinting (Fig. 6). At this scale, surface texture created by the interaction of projected light and resin feedstock dominates the field of view. It is unlikely that the model is observing larger-scale features such as edge shapes or dimensional accuracy because these features are not observable in most images. FDM machines require larger ROIs for accurate source identification, indicating that unique features occur at a somewhat larger scale than for Carbon printers. FDM images are dominated by features that occur from the nozzle and filament, which are identifiable even with the image significantly downscaled. Each process has a usable range of ROI sizes: below it, the sampled surface area is too small for fingerprinting, and above it, the part is not imaged at adequate resolution, so the fine manufacturing fingerprint features lose fidelity.

The fingerprint technology may be useful for manufacturing quality control and root cause problem-solving. This study focuses on parts made using scalable processes and industrial printers in real factories that make industrial parts for end-use applications. Industrial manufacturing employs sophisticated quality control systems that can find defective parts and identify the root cause of those defects, such that processes can be improved2. Modern quality control systems seek to maintain the full manufacturing history of a part47 because the part history can help to determine the root cause of manufacturing problems and rectify those problems48. Consider, for example, a part in a factory or a warehouse that is found to be defective. If the part is missing a label or tag, or if the tag is incorrect, it would not be possible to identify the manufacturing source and fix the root cause of the problem. The fingerprint technology can predict the machine or factory of origin and other manufacturing attributes in situations where the tag is missing or incorrect, or if the part history is otherwise unknown.

The fingerprint technology may also be used to monitor and manage manufacturing supply chains. Ensuring the authenticity of parts is critical for applications such as commercial and military aviation49,50 where inferior parts and premature failure could lead to physical damage or loss of life. Some alternative methods, such as intrinsic part labels or PUFs, can ensure part authenticity; however, such methods require trust and cooperation throughout multiple levels of a supply chain. For some products, manufacturers must guarantee the country of origin to comply with import restrictions or tariff requirements, and there exist many examples of malicious actors attempting to circumvent these restrictions51,52. Many industrial customers require suppliers to obtain permission before making changes in the factory. However, it can be difficult to detect unauthorized process changes without an in-person factory audit. The fingerprint model can determine if a supplier uses the correct process, machine, and material and can do so independently without requiring the cooperation of the supplier.

Fingerprinting may be able to support critical industries for which there are significant consequences from incorrect or counterfeit parts. Some industries, such as aviation and medicine, require 100% part inspection and part traceability from the factory to the application. Counterfeit parts in aviation supply chains have led to significant economic impacts49 and even loss of life53. Other industries, such as nuclear energy and defense electronics, also have significant consequences when an incorrect part or material is inserted into the supply chain. Counterfeit components54 and record tampering55 have led to increased inspection and shutdown of nuclear power plants56. Integrated circuits have been targets of counterfeiting and unreported recycling, creating risks to the end user, including the potential for early failure57,58. Fingerprint source identification can help to secure supply chains by confirming the valid origin and providing assurance that parts are authentic. Future work could extend this concept to anomaly detection that could flag suspicious and outlying parts for additional protection against counterfeit parts.

Source identification and forensic analysis using machine learning have been explored in a variety of applications outside of manufacturing. For example, forensic analysis of image data has successfully determined the printer that produced a document, the artist that produced a painting59, or the camera that captured an image. Image source forensics using deep learning can identify the camera that captured a digital image for purposes of authentication and forgery prevention. Convolutional neural networks and unsupervised learning have extracted imperceptible differences, such as sensor pattern noise, to accurately predict the camera or smartphone that captured an image60,61. The fingerprint model of the present work uses a deep learning feature extractor to identify small differences in AM parts for manufacturing source prediction. These identifiable manufacturing fingerprint features may be captured using various imaging techniques.

This work uses deep learning to solve a problem in manufacturing using concepts and methods that were developed for tasks completely unrelated to manufacturing. ML methods adopted for manufacturing must address specific needs and requirements that may not be present in other application domains. For example, manufacturing often uses high-resolution data streams from physical sensors as well as image and video data. Processing these inputs requires specialized ML architectures to avoid high computational costs or extensive downscaling, which could lose critical details needed for insights62. These data sets may have information at multiple length and time scales. For instance, in the present work, the high-resolution images contain both fine-grain information as well as global information about the overall part shape and tolerances. Unlike other large-scale machine learning applications such as satellite image analysis63 and autonomous driving64 that focus on a small section of the data input, manufacturing ML tasks require full high-resolution images to capture surface information and geometric dimensions. The fingerprint model leverages high-resolution large-scale data through randomized cropping and voting, where many sections of the parts are analyzed in high detail, and the results are combined to generate an accurate prediction. Manufacturing data also presents a fine-grained classification problem in which small inter-class variation challenges existing ML practices65.

The fingerprint technology is generalizable to many different situations, as shown by extensive testing on a large and diverse data set of 9192 parts. We were able to build accurate classifiers using a variety of ML architectures, including convolutional neural nets39,66,67, transformers68, and fine-grained image classification models69. The models may achieve high-accuracy predictions after training with as few as 100 parts. From these results, we hypothesize that the method can be extended to additional tasks such as fingerprinting continuous manufacturing processes, predicting and controlling process parameters, detecting anomalies, or assisting measurements of dimensional accuracy. Future work could explore these opportunities and their integration into quality control or manufacturing execution systems.

Future research could also focus on further development of the ML methods. The fingerprinting succeeded with common ML backbones, modified to handle high-resolution images through random sampling and multi-patch voting. There is an opportunity to develop ML architectures specialized for the fingerprint task and for the evaluation of high-resolution images of surface texture. Future work could also extend these methods to semi-supervised or unsupervised models. Unsupervised models train without manually labeled data, reducing the effort required to extend the fingerprint model to new machines and processes. They would also enable applications beyond the work presented here; for example, an unsupervised model could determine the number of machines used in a supplier's factory by evaluating only photographs of parts made in that factory, without any other knowledge of the supplier's operations. Unsupervised models could also perform anomaly detection to identify counterfeit parts from an unknown source or to detect deviations in a supplier's manufacturing process.

This paper provides three contributions to manufacturing science and technology. First, we discovered that AM equipment imparts unique, machine-specific patterns to the surface texture of every part that comes out of a machine. The machine-specific texture was confirmed for four AM processes and 21 different machines. Machine-specific textures may also exist in other AM processes and even in other types of manufacturing. Second, a deep learning model can identify this surface texture signature. The model can also recognize the manufacturing process, the material, the specific build in which a part was printed, and the part's location within that build. Most of the fingerprint tasks demonstrated here cannot be performed by a human. Third, we developed machine learning methods for interpreting high-resolution images of additively manufactured parts and their surface textures. The method is compatible with a variety of machine learning backbone architectures and makes highly accurate predictions when tuned for high-resolution images with enhancements such as random sampling of resized image patches and voting. The work opens many opportunities for future research on deep learning for manufacturing science and technology.

Methods

Part tracking

A total of 9192 parts were designed and manufactured for this study. Each part had a unique six-digit serial number that was used to track its attributes in a database. For every part, the database recorded the machine and specific build that produced it, the material, its location on the build tray, and its location on the scanner where it was imaged. Each serial number was added to the 3D CAD model as a 0.3 mm deboss on the side of the part. The serial numbers used a limited character set in the OCR-B font to ensure readability across the various additive processes. The serial numbers were also randomized to prevent systematic effects on the parts; in addition, no model was ever trained on images showing the serial number.

Part sampling and model training strategy

Each model used a subset of the manufactured parts for testing and training. Balancing the data set by using the same number of training parts for each printer ensured that each printer was equally represented in the model, preventing biased predictions. The testing set was also balanced with the same number of parts for each machine, ensuring accuracy metrics reflect the performance of the model across all machines. The smallest number of parts for a machine was 216 due to manufacturing constraints, creating an upper bound for the number of parts usable in testing and training. For each machine, 150 parts were randomly sampled for training and testing, allocating 100 for training and 50 for testing. Training and testing parts were always kept separate throughout the training and testing process to prevent data leakage. Training with more than 100 parts per machine did not improve accuracy but greatly increased the computational cost. A study of the data efficiency of the models can be found in the Supplementary Information.
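The balanced per-machine sampling described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the released code: the function name `balanced_split`, the `(serial, machine)` input format, and the fixed random seed are hypothetical choices made for the example.

```python
import random
from collections import defaultdict


def balanced_split(parts, n_train=100, n_test=50, seed=0):
    """Sample the same number of train/test parts from every machine.

    parts: iterable of (serial, machine_id) pairs (hypothetical format).
    Returns two disjoint lists of (serial, machine_id) pairs.
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    by_machine = defaultdict(list)
    for serial, machine in parts:
        by_machine[machine].append(serial)

    train, test = [], []
    for machine in sorted(by_machine):
        serials = by_machine[machine]
        if len(serials) < n_train + n_test:
            raise ValueError(f"machine {machine} has only {len(serials)} parts")
        # Draw 150 distinct parts once, then cut them into train and test,
        # so no part can appear in both sets (no data leakage).
        chosen = rng.sample(serials, n_train + n_test)
        train += [(s, machine) for s in chosen[:n_train]]
        test += [(s, machine) for s in chosen[n_train:]]
    return train, test
```

With 21 machines this yields 2100 training parts and 21 × 50 = 1050 testing parts, which matches the 1050 test parts reported in the abstract.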

Model development

Effectively processing high-resolution part images greatly improves the performance of the fingerprint model. Initially, images were downscaled to 224 × 224 pixels for compatibility with off-the-shelf models pretrained on ImageNet37. However, downscaling removed visible details, resulting in a fingerprinting accuracy below 80%. To allow the model to see higher-resolution information, full-resolution images were divided into six uniform patches, each resized to 224 × 224, which improved accuracy to over 90%. Increasing the resolution of the input images from 224 × 224 to 448 × 448 further increased accuracy by providing the models with more detailed input data. Larger input images increased the computational cost and required training models from scratch without pretrained weights. However, the larger input size allowed the models to process more image information, which improved accuracy to over 95%. Finally, the randomized cropping strategy increased the depth and complexity of data augmentation during training and enabled the model to evaluate multiple patches through voting, which increased accuracy to over 98%. A further discussion of the model development and the processing of high-resolution data can be found in the Supplementary Information.
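The six-patch step can be illustrated with a short NumPy sketch. The text states only that images were divided into "six uniform patches"; the 2 × 3 grid, the name `grid_patches`, and nearest-neighbour resizing are assumptions. Nearest-neighbour sampling is used here only to keep the example dependency-free; a production pipeline would more likely use an area or bilinear filter.

```python
import numpy as np


def grid_patches(image: np.ndarray, rows: int = 2, cols: int = 3,
                 out_size: int = 224) -> np.ndarray:
    """Split an image (H x W or H x W x C) into rows*cols equal tiles and
    resize each tile to out_size x out_size by nearest-neighbour sampling.

    Assumption: a 2 x 3 grid. Remainder pixels at the right and bottom
    edges (when H or W is not divisible) are dropped.
    """
    h, w = image.shape[:2]
    ph, pw = h // rows, w // cols  # tile height and width in pixels
    # For each output pixel, the source row/column inside a tile.
    yi = np.arange(out_size) * ph // out_size
    xi = np.arange(out_size) * pw // out_size
    patches = []
    for r in range(rows):
        for c in range(cols):
            tile = image[r * ph:(r + 1) * ph, c * pw:(c + 1) * pw]
            # Broadcasted index arrays select an out_size x out_size grid;
            # any trailing channel axis is carried through unchanged.
            patches.append(tile[yi[:, None], xi[None, :]])
    return np.stack(patches)  # (rows*cols, out_size, out_size[, C])
```

Each of the six resized patches then becomes an independent 224 × 224 input to the pretrained backbone.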

Fingerprint models were trained for 200 epochs on an RTX 6000 Ada graphics card with 48 GB of VRAM. Hyperparameter tuning was performed with random parameter sweeps that adjusted downscaling, backbone architecture, learning rate, and weight decay. The EfficientNetV2 model achieved 98.5% accuracy in fingerprinting 21 printers using a learning rate of 0.001 with exponential decay at a rate of 0.99. The model also used a LAMB optimizer with a weight decay rate of 0.005. The parameter sweep was performed on four identical workstations, allowing broad exploration of the parameter space with more than 5000 models and 10,000 hours of GPU time during development. During training, the model used a batch size of 16 and transformed full-resolution images with random horizontal and vertical reflections followed by the down-sampling cropping transformation. An adaptive threshold was applied to the mean pixel value of each randomized crop to ensure that patches contained the part rather than the background of the part image. Randomized crops whose mean pixel value did not exceed the threshold were automatically resampled. A description of the model parameter sweep can be found in the Supplementary Information.
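The augmentation and crop-rejection logic can be sketched as below. This is a hypothetical illustration, not the authors' transform: the 0.5 reflection probability, the 896-pixel crop downsampled to 448 × 448, nearest-neighbour downsampling, and taking the "adaptive" threshold as the mean of the whole image (with the part brighter than the background) are all assumptions, since the text does not give these details.

```python
import numpy as np


def random_part_crop(image, rng, crop=896, out=448, max_tries=100):
    """Randomly reflect a full-resolution image, then return one crop,
    downsampled to out x out, whose mean exceeds a per-image threshold.

    Assumptions (not from the paper): each reflection applied with
    probability 0.5; crop=896 (2x downsampling to 448); nearest-neighbour
    downsampling; threshold = mean of the whole image, part brighter
    than background.
    """
    if rng.random() < 0.5:
        image = image[:, ::-1]  # horizontal reflection
    if rng.random() < 0.5:
        image = image[::-1, :]  # vertical reflection
    threshold = image.mean()  # adapts to each image's exposure and part size
    h, w = image.shape[:2]
    idx = np.arange(out) * crop // out  # downsampling indices within a crop
    for _ in range(max_tries):
        y = rng.integers(0, h - crop + 1)
        x = rng.integers(0, w - crop + 1)
        patch = image[y:y + crop, x:x + crop]
        if patch.mean() > threshold:  # crop mostly shows the part: keep it
            return patch[idx[:, None], idx[None, :]]
        # Otherwise the crop is mostly background, so draw a new one.
    raise RuntimeError("no crop exceeded the brightness threshold")
```

Tying the threshold to each image's own statistics is one way to let a single check work across parts of different size and brightness; a fixed global threshold would be simpler but would need retuning whenever lighting or part geometry changes.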

Model feature extraction selection and alternative architectures

We evaluated alternative architectures by using different learning models as the feature extraction module. Table 2 lists the five architectures explored39,66,67,68,69, along with the number of model parameters and the accuracy each achieved in predicting the manufacturing source of parts from 21 machines. EfficientNetV2 achieves the best accuracy and the fastest training time, owing to its comparatively small number of parameters and efficient layer design. In general, architectures with sufficient parameters perform fingerprinting with accuracies that differ by only a few correctly classified parts. The compatibility of the fingerprint model with various deep learning architectures improves application flexibility. By selecting an appropriate backbone, models could be tailored to the quantity of training data, the inference-time constraints of in-line inspection, and the model-size requirements of edge devices with limited computational resources.

Voting method

For each testing part, a set of random patches is extracted from the image. The number of patches is an adjustable parameter; 16 patches were used for the fingerprint models. The 16 patches for each part are evaluated by the model as a single testing batch, generating a SoftMax classification output for each patch. The output of the model for an input patch \(z\) is \(p=f(z)\in \mathbb{R}^{n}\), where the components of \(p\) are the probabilities that the patch belongs to each of the \(n\) attribute classes, and \(f(\cdot)\) denotes the SoftMax output of the model. The SoftMax outputs are summed over all patches for each class, \(\bar{p}_{i}=\sum_{j=1}^{m}p_{i,j}\), where \(p_{i,j}\) is the output for class \(i\) from patch \(j\) out of \(m\) patches. The attribute prediction is \(\hat{y}=\arg\max_{i}\bar{p}_{i}\) for \(i=1,\ldots,n\), where \(\arg\max(\cdot)\) returns the index of the largest summed SoftMax output \(\bar{p}_{i}\); \(\hat{y}\) is the fingerprint model prediction. The voting procedure leverages multiple sections of the high-resolution image in each prediction and uses the SoftMax outputs to weight model confidence in the vote. A study of model accuracy as a function of the number of testing patches sampled from each part can be found in the Supplementary Information.
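The summed-SoftMax vote can be sketched in a few lines of NumPy. The function names are illustrative, and `hard_vote` is a majority-vote baseline included only for contrast; it is not part of the described method. Classes are indexed from 0, as is conventional in Python, rather than from 1 as in the equations.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise SoftMax, shifted by the row maximum for numerical stability."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def soft_vote(probs: np.ndarray) -> int:
    """probs: (m, n) SoftMax outputs, one row per patch.
    Returns argmax_i of p_bar_i = sum over patches j of p_{i,j}."""
    p_bar = probs.sum(axis=0)
    return int(np.argmax(p_bar))


def hard_vote(probs: np.ndarray) -> int:
    """Majority vote over per-patch argmax labels (comparison only)."""
    labels = probs.argmax(axis=1)
    return int(np.bincount(labels, minlength=probs.shape[1]).argmax())


# Three patches, three classes: two patches weakly favour class 0,
# one patch strongly favours class 1.
probs = np.array([[0.40, 0.35, 0.25],
                  [0.40, 0.35, 0.25],
                  [0.05, 0.90, 0.05]])
print(soft_vote(probs), hard_vote(probs))  # -> 1 0
```

The toy example shows how summing probabilities differs from counting votes: the summed scores are 0.85, 1.60, and 0.55, so one high-confidence patch for class 1 outweighs two low-confidence patches for class 0. In the fingerprint setting, `probs` would have shape (16, 21) for 16 patches and 21 machines.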

Data availability

The code and data sets supporting the findings of the study are openly available on GitHub at https://github.com/wpklab/fingerprinting.

References

Kim, H., Lin, Y. & Tseng, T.-L. B. A review on quality control in additive manufacturing. Rapid Prototyp. J. 24, 645–669 (2018).

Vo, B., Kongar, E. & Suarez-Barraza, M. F. Root-cause problem solving in an industry 4.0 context. IEEE Eng. Manag. Rev. 48, 48–56 (2020).

Handley, S. & Gray, J. Managing quality in a heterogeneous contract manufacturing environment. Decision Sci. 46, 1011–1048 (2015).

Gupta, N., Tiwari, A., Bukkapatnam, S. T. S. & Karri, R. Additive manufacturing cyber-physical system: supply chain cybersecurity and risks. IEEE Access 8, 47322–47333 (2020).

Segura Velandia, D. M., Kaur, N., Whittow, W. G., Conway, P. P. & West, A. A. Towards industrial internet of things: Crankshaft monitoring, traceability and tracking using RFID. Robot. Comput. Integr. Manuf. 41, 66–77 (2016).

Ha, B.-H. et al. Fast and direct engraving of iridescent lettering on original product surface using laser interference to prevent counterfeiting. Optics Lasers Eng. 107, 214–220 (2018).

Manyika, J. Manufacturing the future: the next era of global growth and innovation. (McKinsey Global Institute, 2012).

Seifi, M. et al. Progress towards metal additive manufacturing standardization to support qualification and certification. JOM 69, 439–455 (2017).

McGregor, D. J., Bimrose, M. V., Tawfick, S. & King, W. P. Large batch metrology on internal features of additively manufactured parts using X-ray computed tomography. J. Mater. Process. Technol. 306, 117605 (2022).

Everton, S. K., Hirsch, M., Stravroulakis, P., Leach, R. K. & Clare, A. T. Review of in-situ process monitoring and in-situ metrology for metal additive manufacturing. Mater. Des. 95, 431–445 (2016).

Brenken, B., Barocio, E., Favaloro, A., Kunc, V. & Pipes, R. B. Development and validation of extrusion deposition additive manufacturing process simulations. Addit. Manuf. 25, 218–226 (2019).

Frankel, A. Advancing additive #5: part serialization enables a traceable digital thread. Siemens https://blogs.sw.siemens.com/additive/aa-e5/ (2020).

Delmotte, A., Tanaka, K., Kubo, H., Funatomi, T. & Mukaigawa, Y. Blind watermarking for 3-D printed objects by locally modifying layer thickness. IEEE Trans. Multimedia 22, 2780–2791 (2020).

Salas, D. et al. Embedding hidden information in additively manufactured metals via magnetic property grading for traceability. Addit. Manuf. 60, 103261 (2022).

Wigger, B., Koinzer, I., Meissner, T., Barth, M. & Zimmermann, A. Robust and fast part traceability in a production chain exploiting inherent, individual surface patterns. Robot. Comput. Integr. Manuf. 63, 101925 (2020).

Tsai, M.-J. & Yuadi, I. Digital forensics of microscopic images for printed source identification. Multimed. Tools Appl. 77, 8729–8758 (2018).

Wu, Y., Kong, X., You, X. G. & Guo, Y. Printer forensics based on page document’s geometric distortion. In: 2009 16th IEEE International Conference on Image Processing (ICIP) 2909–2912 (IEEE, Cairo, Egypt, 2009). https://doi.org/10.1109/ICIP.2009.5413420.

Joshi, S. & Khanna, N. Single classifier-based passive system for source printer classification using local texture features. IEEE Trans. Inform. Forensic Secur. 13, 1603–1614 (2018).

Jiang, Z. et al. Prediction of laser printers and cartridges based on three-dimensional profiles via discrimination analysis. Forensic Sci. Int. 363, 112186 (2024).

Joshi, S., Saxena, S. & Khanna, N. Source printer identification from document images acquired using smartphone. J. Inform. Secur. Appl. 84, 103804 (2024).

Nandanwar, L. et al. A conformable moments-based deep learning system for forged handwriting detection. IEEE Trans. Neural Netw. Learn. Syst. 35, 5407–5420 (2024).

Reddy, V. et al. Study on surface texture of fused deposition modeling. Procedia Manuf. 25, 389–396 (2018).

Li, Z. et al. PrinTracker: fingerprinting 3D printers using commodity scanners. In: Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security 1306–1323 (ACM, Toronto, Canada, 2018). https://doi.org/10.1145/3243734.3243735.

Aronson, A., Elyashiv, A., Cohen, Y. & Wiesner, S. A novel method for linking between a 3D printer and printed objects using toolmark comparison techniques. J. Forensic Sci. 66, 2405–2412 (2021).

Shim, B. S., Choe, J. H. & Hou, J.-U. Source identification of 3D printer based on layered texture encoders. IEEE Trans. Multimedia 25, 8240–8252 (2023).

Shim, B. S., Shin, Y. S., Park, S. W. & Hou, J.-U. SI3DP: source identification challenges and benchmark for consumer-level 3D printer forensics. In: Proceedings of the 29th ACM International Conference on Multimedia 1721–1729 (ACM, Virtual Event, China, 2021). https://doi.org/10.1145/3474085.3475316.

Blakey-Milner, B. et al. Metal additive manufacturing in aerospace: a review. Mater. Des. 209, 110008 (2021).

Aimar, A., Palermo, A. & Innocenti, B. The role of 3D printing in medical applications: a state of the art. J. Healthcare Eng. 2019, 1–10 (2019).

Wester, C. BMW: the road to one million 3D printed parts. Addinor https://addinor.eu/articles/bmw-road-mass-production-3d-printing/ (2018).

Tumbleston, J. R. et al. Continuous liquid interface production of 3D objects. Science 347, 1349–1352 (2015).

HP Multi Jet Fusion technology: a disruptive 3D printing technology for a new era of manufacturing. (2017).

Guide to Stereolithography (SLA) 3D printing. Formlabs https://formlabs.com/blog/ultimate-guide-to-stereolithography-sla-3d-printing/.

Wratkowski, R. et al. A characterization of the repeatability and performance of Stratasys fused deposition modeling (FDM) systems. (2024).

Kim, M. K., Lee, I. H. & Kim, H.-C. Effect of fabrication parameters on surface roughness of FDM parts. Int. J. Precis. Eng. Manuf. 19, 137–142 (2018).

Sagbas, B., Gümüş, B. E., Kahraman, Y. & Dowling, D. P. Impact of print bed build location on the dimensional accuracy and surface quality of parts printed by multi-jet fusion. J. Manuf. Processes 70, 290–299 (2021).

Onuh, S. O. & Hon, K. K. B. Optimising build parameters for improved surface finish in stereolithography. Int. J. Mach. Tools Manuf. 38, 329–342 (1998).

Deng, J. et al. ImageNet: a large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition 248–255 (IEEE, Miami, FL, 2009). https://doi.org/10.1109/CVPR.2009.5206848.

Sabottke, C. F. & Spieler, B. M. The effect of image resolution on deep learning in radiography. Radiol. Artif. Intell. 2, e190015 (2020).

Tan, M. & Le, Q. V. EfficientNetV2: smaller models and faster training. arXiv at http://arxiv.org/abs/2104.00298 (2021).

Carl Zeiss Industrial Metrology. Smartzoom 5 - Technical Specifications. Carl Zeiss Industrial Metrology. https://www.zeiss.com/metrology/us/systems/industrial-microscopy/light-and-digital-microscopes/smartzoom-5.html (2024).

Apple Inc. iPhone 15 - Tech Specs. https://www.apple.com/iphone-15/specs/ (2023).

Canon Inc. EOS Rebel T7 Specifications. Canon. https://downloads.canon.com/nw/camera/products/eos/rebel-t7/specifications/canon-eos-rebel-t7-specifications-chart.pdf (2018).

Creaform. HandySCAN 3D | BLACK Series Technical Specifications. https://www.creaform3d.com/en/portable-3d-scanner-handyscan-3d/technical-specifications (2023).

Keyence Corporation. Image Dimension Measurement System IM-7500. https://www.keyence.com/landing/measure-sys/pr_im-7000.jsp (2021).

Bruker Alicona. Infinite Focus G6. https://www.alicona.com/en/products/infinitefocus (2022).

Kundu, P., Luo, X., Qin, Y., Cai, Y. & Liu, Z. A machine learning-based framework for automatic identification of process and product fingerprints for smart manufacturing systems. J. Manuf. Processes 73, 128–138 (2022).

Yang, H. et al. Six-sigma quality management of additive manufacturing. Proc. IEEE 109, 347–376 (2021).

Rodriguez Delgadillo, R., Medini, K. & Wuest, T. A DMAIC framework to improve quality and sustainability in additive manufacturing—a case study. Sustainability 14, 581 (2022).

Final report and recommendations to prevent unapproved parts in the aviation supply chain. (2024).

Horvath, B. Not all parts are created equal. The impact of counterfeit parts in the Air Force supply chain. (Air War College, 2017).

Romer, K., Guo, J., Messick, M. & Peaslee, E. The trade fraud detective. Planet Money http://www.npr.org/2024/08/23/1197961495/the-trade-fraud-detective (2024).

Kellenberg, D. & Levinson, A. Misreporting trade: tariff evasion, corruption, and auditing standards. Rev. Int. Econ. 27, 106–129 (2019).

Cohen, R. S. An F-16 pilot died when his ejection seat failed. Was it counterfeit? Air Force Times (2022).

Identifying counterfeit Square D circuit breakers. (2008).

AREVA has informed ASN of irregularities concerning components manufactured in its Creusot forge plant. (2016).

Hobbs, C., Naser, Z., Salisbury, D. & Tzinieris, S. Nuclear security implications of counterfeit, fraudulent and suspect items entering the nuclear supply chain. In: IAEA International Conference on Nuclear Security (ICONS 2024) (2024).

Guin, U. et al. Counterfeit integrated circuits: a rising threat in the global semiconductor supply chain. Proc. IEEE 102, 1207–1228 (2014).

DiMase, D., Collier, Z. A., Carlson, J., Gray, R. B. & Linkov, I. Traceability and risk analysis strategies for addressing counterfeit electronics in supply chains for complex systems. Risk Anal. 36, 1834–1843 (2016).

Li, J., Yao, L., Hendriks, E. & Wang, J. Z. Rhythmic brushstrokes distinguish van Gogh from his contemporaries: findings via automated brushstroke extraction. IEEE Trans. Pattern Anal. Mach. Intell. 34, 1159–1176 (2012).

Li, C.-T. Unsupervised classification of digital images using enhanced sensor pattern noise. In: Proceedings of 2010 IEEE International Symposium on Circuits and Systems 3429–3432 (IEEE, Paris, France, 2010). https://doi.org/10.1109/ISCAS.2010.5537850.

Tuama, A., Comby, F. & Chaumont, M. Camera model identification with the use of deep convolutional neural networks. In: 2016 IEEE International Workshop on Information Forensics and Security (WIFS) 1–6 (IEEE, Abu Dhabi, United Arab Emirates, 2016). https://doi.org/10.1109/WIFS.2016.7823908.

Bakhtiarnia, A., Zhang, Q. & Iosifidis, A. Efficient high-resolution deep learning: a survey. ACM Comput. Surv. 56, 1–35 (2024).

Wang, Y. et al. Remote sensing image super-resolution and object detection: Benchmark and state of the art. Expert Syst. Appl. 197, 116793 (2022).

Thavamani, C., Li, M., Cebron, N. & Ramanan, D. FOVEA: foveated image magnification for autonomous navigation. arXiv at http://arxiv.org/abs/2108.12102 (2021).

Wei, X.-S. et al. Fine-grained image analysis with deep learning: a survey. arXiv at https://doi.org/10.48550/ARXIV.2111.06119 (2021).

Yu, W., Zhou, P., Yan, S. & Wang, X. InceptionNeXt: when inception meets ConvNeXt. arXiv at http://arxiv.org/abs/2303.16900 (2023).

Woo, S. et al. ConvNeXt V2: co-designing and scaling convnets with masked autoencoders. arXiv at http://arxiv.org/abs/2301.00808 (2023).

Dosovitskiy, A. et al. An image is worth 16x16 words: transformers for image recognition at scale. arXiv at http://arxiv.org/abs/2010.11929 (2021).

Yu, W. et al. MetaFormer is actually what you need for vision. arXiv at http://arxiv.org/abs/2111.11418 (2022).

Acknowledgements

The authors acknowledge no funding source for this research.

Author information

Contributions

M.B., S.T., and W.K. developed the study concept. All authors contributed to experiment design, methodology, and data interpretation. M.B. developed the software and curated the data. M.B., S.T., and W.K. wrote the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Bimrose, M.V., McGregor, D.J., Wood, C. et al. Additive manufacturing source identification from photographs using deep learning. npj Adv. Manuf. 2, 20 (2025). https://doi.org/10.1038/s44334-025-00031-2

DOI: https://doi.org/10.1038/s44334-025-00031-2