Abstract

Life cycle assessment (LCA) is a method that evaluates the environmental impact of products, technologies or services across their life cycles. LCA data record the inputs and outputs of products and processes over their lifespan. However, owing to inconsistent methodologies and limited compatibility across tools and formats, LCA data face challenges related to quality and interoperability. In this Review, we discuss approaches for LCA data harmonization. A unified LCA data system can help to streamline data management and sharing, and better support decision-making. Through collaborative efforts and the integration of artificial intelligence and blockchain technologies, it might also be possible to create a more integrated LCA data system. For instance, open-source tools can contribute to easier access and transparency in LCA data. Establishing unified rules, methods and infrastructures to develop interoperable databases can help to achieve broader and more effective LCAs.

Key points

- Standardizing data methodologies is crucial for enhancing the reliability and comparability of life cycle assessment (LCA), and better informing environmental policies and strategies.
- Adopting open-source tools in LCA can aid access to complex data analysis and enable a broader range of stakeholders to participate in decision-making.
- Collaborative efforts across industries, academia and policy domains are essential for overcoming data silos and improving the overall utility of LCA data.
- Integrating artificial intelligence and blockchain technologies into LCA practices can lead to more efficient data management systems, making it easier for stakeholders to track and reduce their environmental footprints.
- A unified LCA data framework needs to be prioritized to optimize investments and resources.


Introduction

The environmental impact of a product, technology or service, from raw material extraction to recycling or disposal, can be assessed using life cycle assessment (LCA)1,2. LCA can help to guide sustainable consumption and production by providing a system-wide evaluation of environmental impacts across sectors3,4. For instance, in the automotive industry, LCAs of electric vehicles compared with conventional internal combustion engine vehicles provide insights into the overall benefits and trade-offs, considering factors such as battery production, energy source for electricity, and vehicle lifespan5,6. In the renewable energy sector, LCAs of solar panels or wind turbines assess the energy output and the environmental costs associated with their production, use and disposal7,8,9. LCA can help to ensure that efforts to implement clean and low-carbon technologies lead to reduced environmental burdens and carbon footprints10.

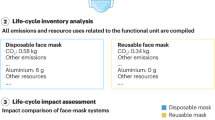

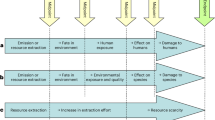

Generally, the LCA framework includes four main steps (Fig. 1). The first step, goal and scope definition, establishes the purpose of the assessment, the intended application and the target audience, together with the system boundaries, functional unit and key methodological assumptions. In the second step, the life cycle inventory (LCI) analysis, data are collected and calculated to quantify the inputs and outputs of the different processes within the life cycle of a product. The environmental impacts associated with the inputs and outputs identified in the LCI are then calculated during the life cycle impact assessment (LCIA) step. This third step involves classification (assigning LCI results to impact categories), characterization (assessing the magnitude of potential impacts), and, optionally, normalization, grouping and weighting. The final interpretation phase identifies issues, draws conclusions and provides recommendations.

Fig. 1: The core life cycle assessment (LCA) framework, as defined by ISO 14040/14044, consists of four iterative phases: goal and scope definition, life cycle inventory analysis, life cycle impact assessment and interpretation. The outcomes of an LCA support diverse applications including product development, strategic planning, policymaking and marketing, emphasizing its role in facilitating sustainable decision-making across sectors.
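The classification, characterization and optional normalization steps of the LCIA phase can be illustrated with a minimal sketch. Each LCI result that has a characterization factor for an impact category is multiplied by that factor, and the products are summed per category. All flow names, amounts, factors and normalization references below are hypothetical placeholders and are not taken from any LCIA method.

```python
from collections import defaultdict

# Hypothetical LCI result: elementary flow -> amount per functional unit (kg).
inventory = {"carbon dioxide": 12.0, "methane": 0.05, "sulfur dioxide": 0.02}

# Hypothetical characterization factors: (impact category, flow) -> factor.
# Classification is implicit: a flow contributes to a category only if a
# factor exists for that (category, flow) pair.
factors = {
    ("climate change", "carbon dioxide"): 1.0,
    ("climate change", "methane"): 30.0,
    ("acidification", "sulfur dioxide"): 1.0,
}

# Hypothetical normalization references (same unit as the category score).
references = {"climate change": 8000.0, "acidification": 50.0}


def characterize(inventory, factors):
    """Return the impact score per category: sum of amount * factor."""
    scores = defaultdict(float)
    for (category, flow), factor in factors.items():
        scores[category] += inventory.get(flow, 0.0) * factor
    return dict(scores)


def normalize(scores, references):
    """Divide each category score by its reference value (optional step)."""
    return {c: s / references[c] for c, s in scores.items() if c in references}


scores = characterize(inventory, factors)
normalized = normalize(scores, references)
for category in sorted(scores):
    print(f"{category}: score={scores[category]:.4f}, normalized={normalized[category]:.6f}")
```

Keeping factors keyed by category and flow makes it visible when a flow in the inventory has no factor at all, which is one way omissions arise when flow names differ between databases.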

Although LCA is a useful tool for decision-making, its application in practice is not without challenges11,12. In particular, the effectiveness of LCA is contingent on the quality and availability of data. Data unavailability, inaccuracy and inconsistency can substantially affect the usefulness of LCA results, leading to potentially flawed decision-making13,14. The complexity of collecting comprehensive and reliable data across global supply chains for a solid LCA poses a notable challenge, as does the need for standardized data formats, system boundaries and units to ensure comparability and integrity in assessments15,16.

In this Review, we examine challenges and opportunities in LCA data development. We discuss prevalent issues and inconsistencies that affect LCA data, including data traceability, format incompatibilities and the absence of interoperability across different systems. Subsequently, we examine the need for common rules, infrastructure and toolsets designed to overcome these hurdles, with the standardization of data methodologies, enhancement of transparency and the promotion of open-source tools emerging as critical steps in forging a more coherent and accessible LCA data ecosystem. Finally, we discuss the role of artificial intelligence and blockchain technologies in LCA data development in terms of data management, security and sharing.

The role of data in LCA

Data used in LCA generally fall into two levels. The first level is unit process data, which include inputs (resources, materials and energy) and outputs (product, byproducts, emissions and waste) of producing a unit of a product in a process17. The second level is LCI data, corresponding to the total amount of inputs and outputs for the life cycle, complete or partial, of a product18. LCI data are calculated based on unit process data of the processes included in the life cycle19. We hereafter use LCA data to represent both unit process data and LCI data, and specify when necessary.
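The relationship between unit process data and LCI data can be sketched as follows: each unit process is scaled by the amount of its product that the life cycle requires, and the scaled elementary flows are summed. This is a simplified illustration that assumes an acyclic supply chain; database-scale calculations generally need to handle loops between processes, which this recursion does not. The process names and numbers are hypothetical.

```python
# Hypothetical unit process data, each normalized to 1 unit of its product.
# "inputs" are product flows supplied by other processes;
# "emissions" are elementary flows released to the environment.
unit_processes = {
    "panel": {"inputs": {"silicon": 2.0, "electricity": 5.0}, "emissions": {"CO2": 0.5}},
    "silicon": {"inputs": {"electricity": 10.0}, "emissions": {"CO2": 1.0}},
    "electricity": {"inputs": {}, "emissions": {"CO2": 0.4, "SO2": 0.001}},
}


def life_cycle_inventory(product, amount, processes):
    """Aggregate elementary flows for `amount` of `product` (acyclic chains only)."""
    totals = {}
    process = processes[product]
    # Direct emissions of this process, scaled to the required amount.
    for flow, per_unit in process["emissions"].items():
        totals[flow] = totals.get(flow, 0.0) + per_unit * amount
    # Emissions of upstream processes, scaled by the required input amounts.
    for upstream, per_unit in process["inputs"].items():
        upstream_totals = life_cycle_inventory(upstream, per_unit * amount, processes)
        for flow, value in upstream_totals.items():
            totals[flow] = totals.get(flow, 0.0) + value
    return totals


lci = life_cycle_inventory("panel", 1.0, unit_processes)
for flow in sorted(lci):
    print(f"{flow}: {lci[flow]:.3f}")
```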

Based on their data collection methods, LCA data can be categorized into primary data and secondary data20,21. Primary data are collected and measured directly from processes, making them more accurate and specific22. They include raw process-specific data, supplier and distributor data, and information regarding the use phase of the product. By contrast, secondary data are obtained from pre-existing sources such as standardized LCI databases and are typically used to fill gaps where primary data are unavailable. Secondary data provide industry-average parameters, such as energy consumption, material use and pollutant emissions23. To ensure the robustness of an LCA model, secondary data should be representative in terms of time, space and technology, and should reflect current practices24.

Data development

The development of LCA data requires careful planning, collection and validation to ensure accuracy and reliability25. The process typically begins with the definition of the goal, which sets the direction for the scope, system boundaries, data quality requirements and methodological framework of the assessment. Subsequently, the scope is defined, including the system boundaries, functional units and data quality requirements, which provides the basis for data collection and analysis26,27 (Fig. 2).

Fig. 2: The complete workflow of the development of a life cycle assessment (LCA) database includes preparation, collection, processing and validation. It begins with goal and scope definition, followed by data gathering from measurements, reports, literature and existing databases. Data are then harmonized through normalization and aggregation, validated against peer datasets and accompanied by uncertainty analyses to ensure transparent and traceable outputs.

Once the scope is established, data collection follows to gather inputs and outputs of each process within the defined system boundaries28,29. Data can be sourced from direct measurements, industry reports, scientific literature and LCA databases, among others30,31. The quality and representativeness of the data are critical, as they directly influence the accuracy and applicability of the LCA results32,33. For each data point, the source, temporal–spatial information, collection method and any assumptions made must be documented to ensure transparency and traceability.
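One possible way to make the documentation requirement for each data point checkable is to store the provenance fields alongside the value and flag records in which they are missing. The field names and the choice of required fields below are illustrative assumptions, not a schema used by any particular LCA database.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DataPoint:
    """A single inventory value with the provenance fields named in the text."""
    flow: str
    amount: float
    unit: str
    source: str = ""                      # e.g. report, measurement campaign, database
    reference_year: Optional[int] = None  # temporal information
    location: str = ""                    # spatial information
    collection_method: str = ""           # e.g. "direct measurement", "literature"
    assumptions: List[str] = field(default_factory=list)


# Hypothetical rule: these fields must be filled for a record to be traceable.
# Assumptions may legitimately be empty, so they are not required here.
REQUIRED = ("source", "reference_year", "location", "collection_method")


def missing_documentation(point):
    """Return the names of required provenance fields that are empty."""
    return [name for name in REQUIRED if getattr(point, name) in ("", None)]


documented = DataPoint(
    flow="electricity", amount=5.0, unit="kWh",
    source="plant metering report", reference_year=2022,
    location="FR", collection_method="direct measurement",
)
undocumented = DataPoint(flow="electricity", amount=5.0, unit="kWh",
                         source="industry report", location="FR")

print(missing_documentation(documented))
print(missing_documentation(undocumented))
```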

After data collection, the next step is data processing and validation. This stage involves converting raw data into a format that can be used in LCA calculations, often requiring normalization, valuation and, sometimes, estimation when relevant data are unavailable34. Once processed, the data are validated through peer review and comparison with other datasets to ensure that the data align with industry standards and reflect real-world conditions35. An essential part of validation involves uncertainty and sensitivity analyses, which help in addressing variability and limitations in LCA data17,18. For example, Monte Carlo simulations can quantify the uncertainty of LCA parameters based on probability distributions and repeated sampling, providing confidence intervals for the results and strengthening the statistical robustness of decision-making36. Sensitivity analysis complements uncertainty analysis by evaluating how variations in specific input parameters influence the overall results, enhancing the reliability and interpretability of the evaluation37.
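The two analyses described above can be sketched with the Python standard library. The example propagates parameter uncertainty through a toy footprint model by repeated random sampling, reports an empirical 95% interval of the simulated results, and then runs a one-at-a-time sensitivity analysis. The model, the parameter values and the assumption of independent normal distributions are all hypothetical.

```python
import random
import statistics


def footprint(electricity_kwh, grid_factor, transport_tkm, transport_factor):
    """Hypothetical two-term carbon footprint model (kg CO2-eq)."""
    return electricity_kwh * grid_factor + transport_tkm * transport_factor


# Hypothetical parameters: name -> (mean, standard deviation), assumed normal.
params = {
    "electricity_kwh": (100.0, 10.0),
    "grid_factor": (0.5, 0.05),
    "transport_tkm": (20.0, 4.0),
    "transport_factor": (0.1, 0.02),
}


def monte_carlo(n, seed=0):
    """Sample all parameters n times and return the sorted model results."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    results = []
    for _ in range(n):
        sample = {k: rng.gauss(mu, sigma) for k, (mu, sigma) in params.items()}
        results.append(footprint(**sample))
    return sorted(results)


def one_at_a_time_sensitivity(change=0.10):
    """Relative change in the result when each parameter alone rises by `change`."""
    base = {k: mu for k, (mu, _) in params.items()}
    base_result = footprint(**base)
    effects = {}
    for name in base:
        perturbed = dict(base, **{name: base[name] * (1 + change)})
        effects[name] = (footprint(**perturbed) - base_result) / base_result
    return effects


results = monte_carlo(10_000)
mean = statistics.mean(results)
low = results[int(0.025 * len(results))]        # 2.5th percentile
high = results[int(0.975 * len(results)) - 1]   # 97.5th percentile
effects = one_at_a_time_sensitivity()

print(f"mean={mean:.2f}, 95% interval=({low:.2f}, {high:.2f})")
for name, effect in sorted(effects.items(), key=lambda item: -abs(item[1])):
    print(f"{name}: {effect:+.2%}")
```

In this toy model the electricity terms dominate the result, so the sensitivity ranking points to where better primary data would reduce uncertainty most.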

For LCA practitioners, these procedures are often done using professional LCA software tools that provide built-in functions to guide data entry, processing and analysis38,39,40. Commonly used software tools include, for example, SimaPro, GaBi and openLCA, which provide predefined lists of flows such as products, resources, energies, emissions and waste41,42. By contrast, when building LCA databases, professional developers often start by selecting or referencing data packages that include lists of flows tailored to their needs, such as nomenclature, industrial sector, or specific product systems under consideration. They might use customized software tools, often developed by themselves for the specific requirements of their projects. Unlike practitioners, professional developers establish internal guidelines and methodologies to ensure the quality and consistency of their data. Professional developers follow a structured workflow, ensuring that every stage of data production — from collection to validation and integration — is meticulously managed43. Internal controls, such as regular audits, peer reviews and statistical analysis, are implemented to ensure data quality44. This rigorous process ensures that the LCA database produced is reliable, comprehensive and suitable for a wide range of applications.

LCA databases

LCA databases differ in their industry coverage, geographical scope and other characteristics such as methodologies, LCIA methods and access model45 (Supplementary Table 1). Some databases, for example ecoinvent, offer extensive global datasets for multiple industries, making them versatile for a wide range of applications46. Other databases focus on particular sectors in specific countries or regions47,48, such as Agribalyse for the French agriculture sector49,50 and the Cobalt Institute database for cobalt products51,52. The developers of LCA databases play a key role in shaping the focus of databases. Government agencies typically develop databases to support national environmental policies and provide standardized and authoritative data for public use (for example ADEME, the French ecological transition agency), whereas non-governmental organizations often focus on broad applicability by providing comprehensive data for global use53. Commercial developers tend to offer proprietary datasets tailored to industry needs, often emphasizing specialized coverage, frequent updates and customer support54.

The quantity of data also varies across databases. Large databases can have tens of thousands of data entries32, whereas specialized databases might contain only a few data entries tailored to specific materials or industries. These smaller databases are valuable for niche applications, despite not providing the breadth necessary for more general LCAs.

Challenges of LCA databases

Complexity and limited transparency in data sourcing, accessibility and standardization create challenges that can hinder robust assessment and decision-making using LCA databases26,55,56. In this section, we identify key challenges including the lack of traceability of data sources, limitations in accessing information, unbalanced data coverage across sectors, and inconsistencies in nomenclature and interoperability. By examining these drawbacks, we highlight areas in which improvements in transparency and data management can strengthen the credibility and applicability of LCA, ultimately supporting more reliable environmental assessments and policymaking.

Untraceable and inaccessible data sources

The traceability of data sources ensures transparency and reliability of LCA practices. However, LCA databases often have poor traceability due to complex cross-referencing57. Although primary data typically consist of proprietary measured values, secondary databases often lack explicit documentation regarding version-specific assumptions about technological representativeness, geographical applicability and temporal relevance.

According to ISO 14044 requirements, LCI databases should be identified by their names and providers18. However, the architectural opacity in secondary databases can undermine reproducibility, as it is difficult to verify whether the selected databases accurately match the research context58,59,60,61. This lack of transparency prevents stakeholders from verifying the accuracy and relevance of LCA results. For example, a solar panel manufacturer assessing the environmental impact of their panels using incomplete or non-transparent data on the silicon purification process might underestimate the carbon footprint, or their claims might not be verifiable62,63. An inaccurate assessment can make policymakers or investors favour a technology over more effective alternatives.

Moreover, the accessibility of data sources further complicates the transparency problem16,64. Issues such as paywalls, outdated web links, or sources that only exist in physical formats inaccessible to the broader community make it difficult to verify and use the data effectively65. Additionally, data security concerns can further reduce the traceability of LCA data66. For example, primary data from companies often include proprietary or sensitive business information23. To mitigate these issues, systematic improvements are being made in how LCA data are managed and documented67,68. Efforts are underway to enhance the traceability of sources, possibly through standardized documentation practices and improved accessibility measures69. Such efforts aim to ensure that all sources are identifiable and accessible to all stakeholders, reducing disparities in data access and supporting more informed decision-making.

Hidden life cycle inventories

LCIs are fundamental to LCAs as they represent the processes and flows of materials and energy throughout a product life cycle. However, the effectiveness and reliability of LCAs are often compromised by low-quality LCIs used as secondary data. When the details of an LCI are not fully disclosed, users cannot verify the scope, assumptions, methodologies, or quality of the data used. This lack of transparency can then lead to doubts about the accuracy and credibility of the LCA results70,71.

An important issue is the lack of clarity around how LCI data are collected, evaluated and processed. Critical information such as geographical specificity, technological relevance or time-related appropriateness often remains undisclosed72,73. This obscurity prevents users from fully understanding the environmental impacts associated with different life cycle stages.

Further compounding the challenge is the opacity surrounding data valuation and processing choices within LCI systems74. These systems often involve intricate cross-referencing in which processes reference multiple sources, and the sources themselves are cited across different processes (Fig. 3). Such interconnections create a complex web of information that obscures the direct lineage and rationale behind data valuation or processing choices. The complexity of these interconnections hinders the ability of users to understand the embedded assumptions and methodologies.

Fig. 3: Cross-referencing workflow across multiple processes (process 1 to n), each linked to different data sources (source A to N). Traceable, accessible, and reliable or unreliable reflect the overall credibility of the data process. The complexity of cross-referencing can compromise transparency and undermine the reliability and relevance of life cycle assessment results by concealing the lineage of data.

Unbalanced data coverage across sectors

A comprehensive LCA database should provide data for common processes in all economic sectors. However, data availability tends to vary unevenly across regions and sectors (Fig. 4 and Supplementary Fig. 1). This variation can be due to regional characteristics. For example, countries or regions with a large agriculture sector tend to have relatively abundant data for agricultural processes. This disparity might also be caused by the different levels of effectiveness and completeness of the data infrastructure in different countries and regions. For instance, locations with a high Human Development Index generally have more data available than those with a low index.

Fig. 4: Data availability varies across International Standard Industrial Classification (ISIC) sections between locations with high and low Human Development Index (HDI). The sectoral coverage of the ecoinvent database 3.10 was used as a benchmark, focusing on data for transforming activities in the selected ISIC categories. Data with unclear attribution, such as Latin America and the Caribbean and Rest-of-World, were excluded. The data were weighted for different cities based on the Human Development Report 2023/2024 of the United Nations Development Programme194, dividing locations into high HDI (ranked 1–69) and low HDI (ranked 70 and below).

Inconsistent characterization approaches

Substantial variability in the characterization factors used by different LCIA methods can lead to discrepancies in results and affect the conclusions drawn from LCAs75,76. For example, a case study on the European electricity consumption mix showed marked differences in land use impacts using different LCA methods (ReCiPe 2016 and International Reference Life Cycle Data System (ILCD) 2011), due to divergent definitions and modelling approaches77. Whereas ReCiPe 2016 considers land type competition, ILCD 2011 also includes land occupation and its transformation78,79.

Compounding this issue, many databases lack transparency in documenting the provenance and calculation methodologies of these factors, potentially compromising their accuracy and cross-method comparability80. Furthermore, relying on outdated characterization factors, such as those from the Fifth Assessment Report of the Intergovernmental Panel on Climate Change (IPCC AR5) despite the availability of AR6, can produce results that no longer reflect current environmental conditions81. Enhancing consistency between LCIA methods can help to improve result comparability and reduce uncertainty in decision-making based on LCAs.
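The effect of the factor version can be shown with a small worked comparison. The sketch below applies two sets of 100-year global warming potentials to the same inventory. The factor values are, to our understanding, those reported in IPCC AR5 (without climate-carbon feedbacks) and AR6 (fossil methane), and should be checked against the reports before any real use; the inventory amounts are hypothetical.

```python
# GWP100 factors in kg CO2-eq per kg. Values as we understand them from the
# IPCC assessment reports; verify against the reports before reuse.
GWP100 = {
    "AR5": {"CO2": 1.0, "CH4": 28.0, "N2O": 265.0},
    "AR6": {"CO2": 1.0, "CH4": 29.8, "N2O": 273.0},  # CH4 value for fossil methane
}

# Hypothetical greenhouse gas inventory per functional unit (kg).
inventory = {"CO2": 100.0, "CH4": 2.0, "N2O": 0.1}


def climate_score(inventory, factors):
    """Sum of emission amount * GWP100 factor, in kg CO2-eq."""
    return sum(amount * factors[gas] for gas, amount in inventory.items())


score_ar5 = climate_score(inventory, GWP100["AR5"])
score_ar6 = climate_score(inventory, GWP100["AR6"])
relative_change = (score_ar6 - score_ar5) / score_ar5

print(f"AR5: {score_ar5:.1f} kg CO2-eq")
print(f"AR6: {score_ar6:.1f} kg CO2-eq")
print(f"relative change: {relative_change:+.2%}")
```

The size of the shift depends on how much of the inventory consists of non-CO2 gases, which is why recording the factor version with each result matters for comparability.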

Inconsistent data requirements and nomenclature

Establishing standardized data requirements and a unified nomenclature system is paramount for LCA databases82,83. Each LCA database usually follows its own rules and uses diverse nomenclature systems, severely limiting the ability to compare, merge and integrate data across databases84,85.

The impact of this variance is particularly evident in LCAs that incorporate data from multiple databases86,87. Inconsistencies in nomenclature and data requirements can lead to duplicated or omitted inputs during valuation, skewing the outcomes of LCAs and affecting the accuracy and reliability of these assessments (Supplementary Table 2). The Global LCA Data Network (GLAD, a United Nations platform for LCA data sharing) and ecoinvent have recognized this issue and implemented mapping procedures to address the challenge78,88,89. Although mapping represents an important step forward, its complexity and the difficulties in applying it across diverse datasets limit its ability to achieve complete harmonization, leaving discrepancies in LCA outcomes unresolved.
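How inconsistent nomenclature leads to duplicated or omitted inputs, and how a mapping table addresses part of the problem, can be sketched as follows. The flow names and the mapping are hypothetical and do not reproduce the mapping files of GLAD or ecoinvent. Unmapped flows are reported rather than silently dropped, because a silent drop is exactly how an omission would enter the results.

```python
# Hypothetical mapping from database-specific flow names to a shared reference name.
FLOW_MAP = {
    "Carbon dioxide, fossil": "carbon dioxide (fossil)",
    "CO2 (fossil)": "carbon dioxide (fossil)",
    "Methane, fossil": "methane (fossil)",
}


def harmonize(inventory, flow_map):
    """Merge amounts under reference names; return (merged, unmapped names)."""
    merged, unmapped = {}, []
    for name, amount in inventory.items():
        reference = flow_map.get(name)
        if reference is None:
            unmapped.append(name)  # needs manual review or an extended mapping
            continue
        merged[reference] = merged.get(reference, 0.0) + amount
    return merged, unmapped


# Inventory combined from two hypothetical databases with different naming rules.
combined = {
    "Carbon dioxide, fossil": 3.0,  # from database A
    "CO2 (fossil)": 1.5,            # from database B, same substance
    "Methane, fossil": 0.2,         # from database A
    "CH4, fossil": 0.1,             # from database B, not covered by the mapping
}

merged, unmapped = harmonize(combined, FLOW_MAP)
print(merged)
print(unmapped)
```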

Limited cross-tool interoperability

Many LCI databases are designed to operate within a specific software environment. However, the integration of these databases into a single software system is often needed for practical applications32. Without proper integration, the use of multiple LCI databases in a single LCA project can become complex, resource intensive and impractical90.

Identifier inconsistency further exacerbates data integration challenges55. When the same database is accessed through different LCA software, identifiers (unique codes used to specify data entries) might differ because each software system interprets or stores data differently. Reconciling different identifiers for the same data points makes it harder to track and use data consistently across platforms, and can introduce errors and inefficiencies.

Errors and losses in format conversion

Converting LCA data from one format to another is a common requirement for LCA practitioners who use multiple software systems91. The technical difficulties of this conversion can lead to errors and data corruption92. Moreover, different LCA software packages handle data formats differently, which further exacerbates these conversion issues93. These conversion challenges substantially affect the efficiency of LCA94,95, as a considerable amount of time and resources is needed to troubleshoot these issues96,97.

Typical errors during data conversion include the loss of critical data and the misinterpretation or miscategorization of data by other software systems98 (Fig. 5). For example, when ILCD datasets exported from SimaPro are processed in Look@LCI, flows with ‘geographical location global’ (GLO) data might be mischaracterized or lost because of location field incompatibilities99. Moreover, not all data formats support multilingualism. As a result, there is a risk of losing data information or context when transferring data across platforms or regions with different language requirements100,101.

Fig. 5: Errors caused by format conflicts can be categorized into four types. Data in format 1 in software A are converted and exported as format 2 for import into software B. If the import is successful, a data comparison step is conducted to ensure consistency between the original and converted data. However, during this data conversion process, certain errors may arise. If discrepancies are found during the comparison step following a successful import, this might indicate data loss, particularly in output flows (error 1). If the import fails, it might be due to the need for an additional mapping file to align data between different formats (error 2), or because certain processes or units are missing when large volumes of data are imported, which can result in an unsuccessful import (error 3). The process might also fail if software B does not support the specific data format (error 4).
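The data comparison step that follows a successful import can be sketched as a field-by-field check between the original and the converted dataset. The exchange structure used here (a list of dictionaries with flow, direction, amount and location) is a hypothetical simplification and does not correspond to any specific exchange format or software export.

```python
def index_exchanges(exchanges):
    """Index exchanges by (flow, direction) so the two datasets can be aligned."""
    return {(e["flow"], e["direction"]): e for e in exchanges}


def compare_datasets(original, converted, rel_tol=1e-9):
    """Report exchanges that were lost or altered during conversion."""
    before, after = index_exchanges(original), index_exchanges(converted)
    report = {"lost": [], "amount_changed": [], "location_changed": []}
    for key, exchange in before.items():
        other = after.get(key)
        if other is None:
            report["lost"].append(key)  # data loss (error 1 in the figure)
            continue
        if abs(other["amount"] - exchange["amount"]) > rel_tol * abs(exchange["amount"]):
            report["amount_changed"].append(key)
        if other.get("location") != exchange.get("location"):
            report["location_changed"].append(key)
    return report


original = [
    {"flow": "electricity", "direction": "input", "amount": 5.0, "location": "GLO"},
    {"flow": "carbon dioxide", "direction": "output", "amount": 2.0, "location": "GLO"},
    {"flow": "waste heat", "direction": "output", "amount": 1.0, "location": "GLO"},
]
# Hypothetical result of a round trip: one output flow is missing and the
# location of one input was not carried over.
converted = [
    {"flow": "electricity", "direction": "input", "amount": 5.0, "location": None},
    {"flow": "carbon dioxide", "direction": "output", "amount": 2.0, "location": "GLO"},
]

report = compare_datasets(original, converted)
print(report)
```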

Data system for harmonization

A data system is an organized and integrated set of structures, tools and governance mechanisms designed to collect, store, manage and deliver data in a reliable, traceable and accessible manner. As LCA data grow in volume, complexity and relevance, a systematic approach is essential to ensure their harmonization and interoperability. Although product category rules offer methodological guidance for specific product types — such as defining the scope of the assessment, selecting functional units, setting system boundaries, processing data, allocating inputs and outputs, and calculating environmental impacts — a unified, open and shared data system enables these methodological rules to be applied consistently and transparently102 (Fig. 6 and Supplementary Fig. 2). A robust data system is defined by its ability to avoid or minimize issues related to untraceable data, lack of transparency, inconsistent data requirements, errors in data conversion and limited interoperability. A unified data system also means more efficient and cost-effective data management55.

Common rules for data development

Standardizing rules for LCA data development can minimize discrepancies in data collection and reporting practices103,104. These standards should encompass database components, data collection requirements, data quality indicators, uncertainty reporting methods, and guidelines for methodological choices such as allocation procedures43,105. The standards should also systematically incorporate the characterization and management of uncertainties and sensitivities inherent in LCA data, using techniques such as Monte Carlo simulations to quantify variability and support robust decision-making106,107.

Database builders should identify and document material, energy and emission flows associated with each unit process, including their origins, transformations and uses. Data obtained through on-site investigation or specific processes should be accompanied by evidence. Standardized documentation practices should be implemented to enhance traceability, ensuring that all sources are identifiable and accessible to all stakeholders. Ensuring transparency in LCIs is also crucial. Developers should fully disclose the details of LCI data, including the assumptions, methodologies, and quality of the data used. Maintaining a dynamic LCI that can be updated and revised as new data and information emerge ensures that the LCI remains timely and relevant93,108,109.

Encouraging stakeholders to improve LCI fosters a continuous cycle that drives the constant evolution and advancement of LCI methodologies110. An open feedback mechanism in which stakeholders can review, question and suggest improvements enables rigorous peer review to help to identify and correct potential errors111,112. A transparent process aids in knowledge sharing and academic progress, allowing for a broader base of expertise to contribute to the robustness of LCA data113,114. By adhering to these practices, database builders can create a robust and meaningful LCA data system to enhance the traceability, transparency and reliability of LCAs.

Globally unified infrastructure

To address the fragmentation and interoperability issues in LCA practices, a globally unified infrastructure, consisting of common data structures and flow lists that support systematic data organization, is needed115,116. This infrastructure should underpin high-quality collaborative data development to ensure consistency and comparability across different regions and sectors, thereby reducing conversion efforts and minimizing interpretation errors across software platforms115. The ILCD offers one of the earliest comprehensive frameworks for such integration, serving as a foundation for later systems. The TianGong Data System (TIDAS) follows a similar approach: as an open-source, JSON-based system, it integrates methodology, format specifications and data resources, supported by intelligent tools to enable standardized, transparent and automated data management117.

Beyond structure and format, a unified identification system for elementary flows (exchanges between the product system and the environment) and product flows (exchanges between processes within the product system) should be developed and continuously updated118. This unified system ensures that each flow or process is uniquely identifiable across all databases and software to facilitate seamless data integration and improve traceability. Maintaining such a single, open, unified identification system for these flows can greatly enhance the consistency, accuracy and relevance of LCA data119,120.
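One way to obtain identifiers that are the same regardless of which software generates them is to derive them deterministically from a canonical description of the flow. The sketch below uses name-based UUIDs from the Python standard library. The namespace URL and the canonicalization rules are hypothetical, and this is an illustration of the idea rather than a description of how any existing flow list assigns identifiers.

```python
import uuid

# Hypothetical namespace for flow identifiers; any fixed, agreed-upon UUID works.
FLOW_NAMESPACE = uuid.uuid5(uuid.NAMESPACE_URL, "https://example.org/lca-flows")


def canonical_key(name, compartment, unit):
    """Build a canonical string: collapse whitespace, lowercase name and compartment."""
    clean_name = " ".join(name.lower().split())
    clean_compartment = " ".join(compartment.lower().split())
    clean_unit = unit.strip()  # units stay case-sensitive (e.g. MJ vs mJ)
    return "|".join((clean_name, clean_compartment, clean_unit))


def flow_id(name, compartment, unit):
    """Return a deterministic identifier for an elementary or product flow."""
    return uuid.uuid5(FLOW_NAMESPACE, canonical_key(name, compartment, unit))


id_a = flow_id("Carbon dioxide", "air", "kg")
id_b = flow_id("  carbon   DIOXIDE ", "Air", "kg")  # same flow, different spelling
id_c = flow_id("Carbon dioxide", "water", "kg")     # different compartment

print(id_a == id_b)
print(id_a == id_c)
```

Deterministic identifiers only help if the canonicalization rules themselves are shared and versioned, which is why the text calls for a single, open and continuously updated identification system.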

In addition, mechanisms for collaboration involving critical stakeholders, including policymakers, database developers, researchers and corporate users, are needed to offer real-world insights for a global LCA network to build a more open, shared and comprehensive database with practical applicability121,122,123,124. By aligning LCA practices with global sustainability frameworks such as the United Nations Sustainable Development Goals, this infrastructure can play a crucial role in informing evidence-based policymaking and sustainable business strategies worldwide.

Open-source software tools

Open-source software can help to achieve standardization in LCA data125,126 as it usually allows users to examine and verify the underlying algorithms and methodologies127. This transparency helps in building trust in LCA results and enables scientific scrutiny112. Additionally, open-source solutions minimize financial barriers, making LCA resources more accessible. For example, open-access software can benefit small and medium organizations that might have limited resources to purchase proprietary software128.

Moreover, the LCA community can actively collaborate to improve and refine open-source software tools, keeping them up to date with the latest scientific advancements and evolving user needs129,130,131.

Beyond the benefits of open-source tools, there is a critical need for a suite of interoperable tools across the entire LCA landscape, including both open-source and commercial software. The interoperability of these tools is essential to ensure compatibility across existing databases, maintain consistency throughout different stages of LCAs and improve the comparability of results. The adoption of open-source software as standard tools in LCA might help to improve the quality and accessibility of LCAs and drive broader participation in life cycle management.

Collaboration in LCA data development

LCA database development spans multiple sectors and regions, involving complex data collection, verification and maintenance. Increasingly, stakeholders are collaborating across regions, sectors and organizations to share data, expertise and resources.

Building LCA databases can be resource intensive

Building and maintaining LCA databases can be a resource-intensive endeavour, requiring substantial financial investment and time commitment132,133. The creation of comprehensive LCA databases involves extensive data collection, verification and regular updates to ensure alignment with current environmental standards and technological advancements11,134. These activities are costly and time consuming, often requiring years to fully develop and maintain a robust database31.

Given the high costs associated with LCA databases, commercialization provides a source of funding for continuous improvements and integration of new data92. However, it also introduces limitations, particularly related to accessibility and interoperability135,136. One major limitation of commercial LCA databases is the design choices driven by business considerations137. In addition to restricted accessibility owing to paywalls, commercial interests often lead to the development of databases that are not fully interoperable with other systems, hindering the harmonization of LCA methodologies and data across different platforms138,139. This lack of interoperability complicates the comparison and integration of data from various sources140,141. As a result, these business-driven constraints limit the collaborative potential of LCAs and lead to inconsistencies in results, ultimately reducing the effectiveness of LCA in informing decision-making142.

Collaborative efforts

In the early 2000s, the development of LCA databases was primarily an independent endeavour. Databases such as ecoinvent, GaBi and ELCD were created by research groups, companies and government agencies working largely in isolation143,144. Although these efforts produced valuable datasets, the lack of a comprehensive collaborative framework often led to inconsistencies and limited accessibility, making it difficult to integrate or compare data across different sources55,145.

Advancements in information and communications technology have since enabled more collaborative approaches to LCA data development146. These new tools and platforms enable stakeholders to efficiently and effectively work together, resulting in shared LCA databases that are more consistent, accessible and globally applicable147. Collaboration has become crucial for pooling resources, expertise and data that would otherwise be isolated, and creating more comprehensive and interoperable datasets92,148.

Several initiatives illustrate the shift towards collaboration in LCA data development. Globally, GLAD, which is maintained by the United Nations Environment Programme (UNEP), represents a major effort to support data accessibility across various sources149. At the regional scale, the European Commission’s Life Cycle Data Network provides a centralized platform for LCA data exchanges across Europe, setting regional standards to ensure consistent, reliable access to datasets150. At the national level, the LCA Commons led by the US Department of Agriculture brings together research institutions, government agencies and industry stakeholders to develop a region-specific LCA database151. In China, the TianGong Initiative, jointly developed by academia and industry, represents another major collaborative effort to establish a database. Corporate collaboration also plays a role in advancing LCA data development. Initiatives such as Carbon Minds and Départ de Sentier contribute to the creation and dissemination of datasets. Together, these efforts represent progress towards a more integrated and cooperative approach to LCA data development. By continuing to build on these initiatives, the LCA community can enhance the quality, accessibility and global impact of LCA data152.

Emerging methodologies

The successful development and sharing of LCA data depend on overcoming technical barriers, enhancing efficiency, ensuring data security and promoting data sharing. Emerging technologies, such as generative artificial intelligence (AI) and blockchain, might help to improve LCA data management and sharing.

Generative artificial intelligence

By leveraging intelligent agents and generative models, generative AI can automate data development to produce comprehensive datasets with greater efficiency153,154. Generative AI enables experts to focus on LCA methodology and data, and minimizes the time needed to understand every detail of the underlying data system155,156. This automation reduces the time and expertise required to build robust LCA inventories157,158. Furthermore, AI agents can facilitate multilingual knowledge sharing, enabling collaboration between stakeholders who speak different languages159. In addition, generative AI can automatically classify and structure unorganized data, identify inconsistencies and fill in missing information160,161,162. This automated cleaning reduces the time required to prepare datasets for analysis and can improve data quality163,164. Predictive analytics powered by AI can help stakeholders to identify trends and optimize supply chains165,166.

To ensure that AI-driven tools are seamlessly integrated into broader LCA infrastructures rather than functioning as isolated components, efforts are underway to embed advanced AI capabilities into modular and interoperable system architectures. First, a professional assistant based on retrieval-augmented generation technology has been introduced to lower the knowledge barrier and enhance LCA application efficiency. By dynamically retrieving and synthesizing pertinent information from heterogeneous sources, the retrieval-augmented generation-based system accelerates data validation and integration, and facilitates more informed decision-making167. Second, to further improve semantic interoperability, an enhanced retrieval functionality leveraging the common knowledge embedded within large language models can handle cross-language queries, synonym identification and the accurate matching of standardized identifiers used across LCA databases168,169. By ensuring that diverse expressions of similar concepts are cohesively linked, the semantic retrieval approach is expected to improve data access170. Finally, an intelligent AI agent can perform automated data quality assessments by systematically validating field-specific content171. For instance, the agent can verify that entries in the geographic information field conform to the expected formats and accurately reflect location data, ensuring consistency and integrity across the dataset. These capabilities are increasingly being integrated with existing LCA data management frameworks, such as multicomponent processing platforms, to enable contextual reasoning, adaptive learning and automated quality control172.
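As an illustration of the field-level validation described above, the following minimal sketch shows the kind of deterministic rule that an automated quality-control agent might apply to a geographic information field. It is not the implementation of any system cited here. The record layout (`id`, `location`), the `KNOWN_LOCATIONS` allow-list and the accepted code pattern are all assumptions made for the example; a production check would load the complete location list of the target database.

```python
import re
from dataclasses import dataclass

# Hypothetical allow-list for illustration only; a real check would load the
# full country-code table or the location list of the target LCA database.
KNOWN_LOCATIONS = {"CN", "US", "DE", "FR", "GLO", "RER"}

# Assumed format: 2-3 uppercase letters, optionally followed by a subdivision.
CODE_PATTERN = re.compile(r"^[A-Z]{2,3}(-[A-Z0-9]{1,3})?$")


@dataclass
class Issue:
    dataset_id: str
    field: str
    message: str


def check_location(dataset: dict) -> list[Issue]:
    """Return format and consistency issues found in the 'location' field."""
    dataset_id = dataset.get("id", "<unknown>")
    raw = dataset.get("location")
    if raw is None or not str(raw).strip():
        return [Issue(dataset_id, "location", "missing value")]

    code = str(raw).strip()
    if not CODE_PATTERN.match(code):
        # Free text such as "china" fails the format rule.
        return [Issue(dataset_id, "location", f"unexpected format: {code!r}")]
    if code.split("-")[0] not in KNOWN_LOCATIONS:
        # Well-formed but not a location the database recognizes.
        return [Issue(dataset_id, "location", f"unknown location code: {code!r}")]
    return []
```

A language-model agent could sit on top of such rules, for example to propose a corrected code for a free-text entry, while the deterministic check keeps the final validation reproducible.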

Blockchain and privacy-preserving technologies

Ensuring data security is paramount when sharing sensitive LCA data173,174. Blockchain technology provides a decentralized and secure framework for managing and sharing data175. Blockchain’s transparency and immutability ensure that data cannot be altered without leaving a trace, thus maintaining data integrity176,177. In LCA applications, this typically involves uploading processed or validated LCA results onto a blockchain system and generating digital fingerprints to ensure data traceability26. To further enhance transparency, blockchain technology can record data sources, process metadata and version histories directly on-chain, making updates traceable throughout the product life cycle178,179. Additionally, smart contracts enable automated data access control, typically by linking on-chain logic with off-chain data storage, ensuring that only authorized parties can view or modify specific datasets180,181. This level of security builds trust among stakeholders, encouraging them to share data more freely without fear of misuse182,183.
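The idea of a digital fingerprint and a traceable version history can be sketched in a few lines. The example below is illustrative only: an in-memory Python list stands in for the ledger, no actual blockchain platform or smart contract is involved, and the entry fields (`version`, `source`, `data_hash`, `previous_hash`, `entry_hash`) are hypothetical names chosen for the example. Only hashes are stored in the chain, on the assumption that the dataset itself remains in off-chain storage.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """SHA-256 digest of a canonical (key-sorted) JSON form of the record."""
    canonical = json.dumps(
        record, sort_keys=True, separators=(",", ":"), ensure_ascii=False
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def append_version(chain: list[dict], dataset: dict, source: str) -> dict:
    """Append an entry that stores the dataset hash and links to the previous entry."""
    previous_hash = chain[-1]["entry_hash"] if chain else "0" * 64
    entry = {
        "version": len(chain) + 1,
        "source": source,
        "data_hash": fingerprint(dataset),  # the dataset itself stays off-chain
        "previous_hash": previous_hash,
    }
    # The entry hash covers all fields above, including the link backwards.
    entry["entry_hash"] = fingerprint(entry)
    chain.append(entry)
    return entry


def verify(chain: list[dict]) -> bool:
    """Recompute every hash and link; any edited entry breaks the check."""
    previous_hash = "0" * 64
    for entry in chain:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["previous_hash"] != previous_hash:
            return False
        if fingerprint(body) != entry["entry_hash"]:
            return False
        previous_hash = entry["entry_hash"]
    return True
```

Because each entry hash covers the previous entry's hash, changing any recorded source or data hash after the fact causes `verify` to fail, which is the tamper-evidence property referred to above. A holder of the off-chain dataset can prove that it is the registered version by recomputing `fingerprint` and comparing it with `data_hash`.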

Furthermore, when handling sensitive or proprietary information, blockchain can be combined with privacy-enhancing computation techniques to enable data analysis, statistical evaluations and other computations without revealing raw data184,185. Typical privacy-enhancing techniques include homomorphic encryption, federated learning and secure multiparty computation, each offering different mechanisms to protect data confidentiality during processing (Supplementary Table 3). These techniques enable collaborative processing by either performing operations directly on encrypted data, distributing model training across local datasets, or securely computing functions across multiple parties without revealing individual inputs26. Such features ensure that stakeholders can obtain insights from aggregated data without exposing sensitive information, promoting a higher level of trust and willingness to share data.
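To make the third of these mechanisms concrete, the sketch below simulates additive secret sharing, one simple building block of secure multiparty computation, to compute a total across several data holders without any of them disclosing its own figure. It is a single-process illustration under strong assumptions: parties follow the protocol honestly, inputs are non-negative integers whose sum is smaller than `MODULUS`, and no network or authentication layer is modelled. The function names are hypothetical, and this is not the protocol of any system cited here.

```python
import secrets

# All arithmetic is done modulo this value; the true total must be below it.
MODULUS = 2**61 - 1


def make_shares(value: int, n_parties: int) -> list[int]:
    """Split value into n random-looking shares that sum to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_values: list[int]) -> int:
    """Simulate the protocol: only shares and partial sums are ever exchanged."""
    n = len(private_values)
    # Step 1: each party i splits its private value into n shares and would
    # send share j to party j (here kept as row i of a table).
    distributed = [make_shares(value, n) for value in private_values]
    # Step 2: each party j adds up the shares it received and publishes
    # only that partial sum, which on its own reveals nothing about any input.
    partial_sums = [
        sum(distributed[i][j] for i in range(n)) % MODULUS for j in range(n)
    ]
    # Step 3: the published partial sums add up to the true total.
    return sum(partial_sums) % MODULUS
```

For example, several suppliers could each contribute a facility-level emission figure, rounded to an integer unit, and jointly obtain the supply-chain total while every individual figure stays hidden behind random shares.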

Advances in LCA methodology

Methodological advancements play a critical role in enhancing the precision and credibility of LCA data, particularly through spatiotemporal modelling and dynamic inventory integration. For instance, geographic information system-LCA integrates spatial information with environmental impact data, enabling consideration of region-specific resources, energy mixes and emission characteristics186,187. Moreover, dynamic-LCA incorporates temporal dimensions into LCA, bridging the gap between static LCA models and the temporal dynamics, developmental trends and real-world conditions in which systems operate, for long-term policy evaluation and future scenario forecasting188,189. At the same time, comprehensive sustainability assessment requires consideration of not only environmental but also social factors. Social-LCA expands the scope of traditional LCA by quantifying social aspects across the product life cycle190.

These methodological refinements are essential for improving the precision and reliability of LCA results and for enhancing the quality and robustness of sustainability assessments. To support them, LCA databases need to evolve towards hybrid data architectures capable of storing and querying multiscale spatial geometries, high-resolution temporal datasets and provenance-linked metadata13,191. Such capabilities include enabling spatiotemporal joins between inventory records and contextual datasets, implementing temporal versioning of process data, and supporting interoperability with geospatial and real-time data platforms via standardized application programming interfaces and semantic schemas.
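A minimal sketch of two of these capabilities, temporal versioning of process data and a spatiotemporal join with a contextual dataset, is given below. The record types (`ProcessVersion`, `GridFactor`), their fields and the helper functions are hypothetical and chosen only for illustration. Region codes stand in for spatial geometries, so the join is a simple key match rather than the geometric operation (for example, point-in-polygon) that a geospatial platform would perform.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Sequence


@dataclass(frozen=True)
class ProcessVersion:
    """One time-bounded version of a unit process record."""
    process_id: str
    region: str
    valid_from: date            # inclusive
    valid_to: Optional[date]    # exclusive; None means still current
    electricity_kwh: float      # electricity input per unit of output


@dataclass(frozen=True)
class GridFactor:
    """Contextual dataset: grid emission factor for a region and year."""
    region: str
    year: int
    kg_co2e_per_kwh: float


def version_at(
    versions: Sequence[ProcessVersion], process_id: str, on: date
) -> Optional[ProcessVersion]:
    """Temporal versioning: return the version that was valid on the given date."""
    for v in versions:
        in_window = v.valid_from <= on and (v.valid_to is None or on < v.valid_to)
        if v.process_id == process_id and in_window:
            return v
    return None


def electricity_emissions(
    versions: Sequence[ProcessVersion],
    factors: Sequence[GridFactor],
    process_id: str,
    on: date,
) -> Optional[float]:
    """Spatiotemporal join: match the valid version to the factor for its region and year."""
    version = version_at(versions, process_id, on)
    if version is None:
        return None
    factor = next(
        (f for f in factors if f.region == version.region and f.year == on.year),
        None,
    )
    if factor is None:
        return None  # no contextual data for that place and time
    return version.electricity_kwh * factor.kg_co2e_per_kwh
```

Returning `None` when no version or factor matches, rather than falling back silently to another year or region, keeps gaps in spatial or temporal coverage visible to the analyst.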

Summary and future perspectives

In this Review, we have explored the key challenges and opportunities in LCA data and database development. LCA data face some challenges with traceability, format incompatibilities and the lack of interoperability, which hinder effective use and can limit the potential to inform decision-making. Standardizing methodologies, enhancing transparency and promoting open-source tools can help to create a more consistent and accessible LCA data environment. A shift from isolated to collaborative data development can be achieved with new technologies and communication platforms enabling pooling of resources and expertise. Emerging technologies such as generative AI, blockchain and privacy-preserving technologies can facilitate collaboration as well as management, security and sharing of data.

Future LCA data development requires the active participation of all critical stakeholders in the creation of a unified LCA data methodology and infrastructure. The UNEP Life Cycle Initiative, with its experience in leading GLAD, is well positioned to spearhead this effort. By coordinating global efforts, UNEP can help to build more open, shared and comprehensive databases that are accessible and interoperable across regions and sectors.

Collaborative initiatives should adopt a globally unified methodology and infrastructure to build a global LCA network, in which data can be seamlessly exchanged and harmonized. Such a network would help to improve the ability of LCA data to contribute to global sustainability by enabling more informed and consistent decision-making.

A top priority moving forward is to encourage broader participation from diverse stakeholders, particularly the private sector. Although corporate collaborations have already made contributions to the LCA ecosystem, there is a need to further incentivize businesses to share data and collaborate in the development of open, accessible databases192,193. Creating policies and frameworks that encourage data sharing while safeguarding intellectual property and competitive interests will be important to expand participation.

Addressing the technical and institutional barriers that hinder data sharing and integration is equally important. Developing standardized protocols for data exchange, establishing clear guidelines for data quality and transparency, and creating platforms that facilitate seamless collaboration among various entities are critical steps to advance collaborative LCA data development174.

To fully realize these benefits, the LCA community must work together to integrate emerging technologies into existing databases and tools. Developing a future-ready infrastructure that leverages cutting-edge technologies will be crucial for building a more open, secure and efficient data ecosystem. These advancements will empower researchers, corporations, the public and policymakers to make more informed decisions.

Change history

25 November 2025

A Correction to this paper has been published: https://doi.org/10.1038/s44359-025-00127-0

References

Hellweg, S., Benetto, E., Huijbregts, M. A. J., Verones, F. & Wood, R. Life-cycle assessment to guide solutions for the triple planetary crisis. Nat. Rev. Earth Environ. 4, 471–486 (2023).

Granovskii, M., Dincer, I. & Rosen, M. A. Exergetic life cycle assessment of hydrogen production from renewables. J. Power Sources 167, 461–471 (2007).

Hashemi, F., Mogensen, L., Van Der Werf, H. M. G., Cederberg, C. & Knudsen, M. T. Organic food has lower environmental impacts per area unit and similar climate impacts per mass unit compared to conventional. Commun. Earth Environ. 5, 250 (2024).

Moore, D. et al. Offsetting environmental impacts beyond climate change: the circular ecosystem compensation approach. J. Environ. Manage. 329, 117068 (2023).

Horesh, N., Trinko, D. A. & Quinn, J. C. Comparing costs and climate impacts of various electric vehicle charging systems across the United States. Nat. Commun. 15, 4680 (2024).

Bilich, A. et al. Life cycle assessment of solar photovoltaic microgrid systems in off-grid communities. Environ. Sci. Technol. 51, 1043–1052 (2017).

Dias, P. et al. Comprehensive recycling of silicon photovoltaic modules incorporating organic solvent delamination — technical, environmental and economic analyses. Resour. Conserv. Recycl. 165, 105241 (2021).

Bonou, A., Laurent, A. & Olsen, S. I. Life cycle assessment of onshore and offshore wind energy-from theory to application. Appl. Energy 180, 327–337 (2016).

Chandel, S. S. & Agarwal, T. Review of cooling techniques using phase change materials for enhancing efficiency of photovoltaic power systems. Renew. Sustain. Energy Rev. 73, 1342–1351 (2017).

Khoo, H. H. LCA of plastic waste recovery into recycled materials, energy and fuels in Singapore. Resour. Conserv. Recycl. 145, 67–77 (2019).

Olanrewaju, O. I., Enegbuma, W. I. & Donn, M. Challenges in life cycle assessment implementation for construction environmental product declaration development: a mixed approach and global perspective. Sustain. Prod. Consum. 49, 502–528 (2024).

Liu, H., Huang, Y., Yuan, H., Yin, X. & Wu, C. Life cycle assessment of biofuels in China: status and challenges. Renew. Sustain. Energy Rev. 97, 301–322 (2018).

Hellweg, S. & Milà I Canals, L. Emerging approaches, challenges and opportunities in life cycle assessment. Science 344, 1109–1113 (2014).

Reap, J., Roman, F., Duncan, S. & Bras, B. A survey of unresolved problems in life cycle assessment: part 1: goal and scope and inventory analysis. Int. J. Life Cycle Assess. 13, 290–300 (2008).

Corominas, L. L. et al. Life cycle assessment applied to wastewater treatment: state of the art. Water Res. 47, 5480–5492 (2013).

Röck, M. et al. Embodied GHG emissions of buildings – the hidden challenge for effective climate change mitigation. Appl. Energy 258, 114107 (2020).

International Organization for Standardization. ISO 14040:2006 Environmental Management — Life Cycle Assessment — Principles and Framework (ISO, 2006).

International Organization for Standardization. ISO 14044:2006 Environmental Management — Life Cycle Assessment — Requirements and Guidelines (ISO, 2006).

Saavedra-Rubio, K. et al. Stepwise guidance for data collection in the life cycle inventory (LCI) phase: building technology-related LCI blocks. J. Clean. Prod. 366, 132903 (2022).

International Organization for Standardization. ISO 14067:2018 Greenhouse Gases — Carbon Footprint of Products — Requirements and Guidelines for Quantification (ISO, 2018).

Miah, J. H. et al. A framework for increasing the availability of life cycle inventory data based on the role of multinational companies. Int. J. Life Cycle Assess. 23, 1744–1760 (2018).

Kellens, K., Dewulf, W., Overcash, M., Hauschild, M. Z. & Duflou, J. R. Methodology for systematic analysis and improvement of manufacturing unit process life-cycle inventory (UPLCI) — CO2PE! initiative (cooperative effort on process emissions in manufacturing). Part 1: Methodology description. Int. J. Life Cycle Assess. 17, 69–78 (2012).

Baitz, M. et al. LCA’s theory and practice: like ebony and ivory living in perfect harmony? Int. J. Life Cycle Assess. 18, 5–13 (2013).

Silvestre, J. D., Lasvaux, S., Hodková, J., de Brito, J. & Pinheiro, M. D. NativeLCA — a systematic approach for the selection of environmental datasets as generic data: application to construction products in a national context. Int. J. Life Cycle Assess. 20, 731–750 (2015).

European Commission, Joint Research Centre & Institute for Environment and Sustainability. International Reference Life Cycle Data System (ILCD) Handbook: General Guide for Life Cycle Assessment — Detailed Guidance (Publications Office of the European Union, 2010).

Zhang, A., Zhong, R. Y., Farooque, M., Kang, K. & Venkatesh, V. G. Blockchain-based life cycle assessment: an implementation framework and system architecture. Resour. Conserv. Recycl. 152, 104512 (2020).

Testa, F., Nucci, B., Tessitore, S., Iraldo, F. & Daddi, T. Perceptions on LCA implementation: evidence from a survey on adopters and nonadopters in Italy. Int. J. Life Cycle Assess. 21, 1501–1513 (2016).

Velandia Vargas, J. E., Falco, D. G., da Silva Walter, A. C., Cavaliero, C. K. N. & Seabra, J. E. A. Life cycle assessment of electric vehicles and buses in Brazil: effects of local manufacturing, mass reduction, and energy consumption evolution. Int. J. Life Cycle Assess. 24, 1878–1897 (2019).

Corominas, L. et al. The application of life cycle assessment (LCA) to wastewater treatment: a best practice guide and critical review. Water Res. 184, 116058 (2020).

Hospido, A., Vazquez, M. E., Cuevas, A., Feijoo, G. & Moreira, M. T. Environmental assessment of canned tuna manufacture with a life-cycle perspective. Resour. Conserv. Recycl. 47, 56–72 (2006).

Hou, P., Cai, J., Qu, S. & Xu, M. Estimating missing unit process data in life cycle assessment using a similarity-based approach. Environ. Sci. Technol. 52, 5259–5267 (2018).

Miranda Xicotencatl, B. et al. Data implementation matters: effect of software choice and LCI database evolution on a comparative LCA study of permanent magnets. J. Ind. Ecol. 27, 1252–1265 (2023).

Khoo, H. H., Isoni, V. & Sharratt, P. N. LCI data selection criteria for a multidisciplinary research team: LCA applied to solvents and chemicals. Sustain. Prod. Consum. 16, 68–87 (2018).

Roche, L., Muhl, M. & Finkbeiner, M. Cradle-to-gate life cycle assessment of iodine production from caliche ore in Chile. Int. J. Life Cycle Assess. 28, 1132–1141 (2023).

Vigon, B. et al. Review of LCA datasets in three emerging economies: a summary of learnings. Int. J. Life Cycle Assess. 22, 1658–1665 (2017).

Wang, S. et al. Life cycle assessment and life cycle cost of sludge dewatering, conditioned with Fe2+/H2O2, Fe2+/Ca(ClO)2, Fe2+/Na2S2O8, and Fe3+/CaO based on pilot-scale study data. ACS Sustain. Chem. Eng. 11, 7798–7808 (2023).

Bruijn, H. et al. (eds) Handbook on Life Cycle Assessment: Operational Guide to the ISO Standards (Springer, 2002).

Seto, K. E., Panesar, D. K. & Churchill, C. J. Criteria for the evaluation of life cycle assessment software packages and life cycle inventory data with application to concrete. Int. J. Life Cycle Assess. 22, 694–706 (2017).

Viere, T. et al. Teaching life cycle assessment in higher education. Int. J. Life Cycle Assess. 26, 511–527 (2021).

Yeung, J. et al. An open building information modelling based co-simulation architecture to model building energy and environmental life cycle assessment: a case study on two buildings in the United Kingdom and Luxembourg. Renew. Sustain. Energy Rev. 183, 113419 (2023).

Mahmud, M. A. P., Huda, N., Farjana, S. H. & Lang, C. Life-cycle impact assessment of renewable electricity generation systems in the United States. Renew. Energy 151, 1028–1045 (2020).

Pan, W. & Teng, Y. A systematic investigation into the methodological variables of embodied carbon assessment of buildings. Renew. Sustain. Energy Rev. 141, 110840 (2021).

Edelen, A. & Ingwersen, W. W. The creation, management, and use of data quality information for life cycle assessment. Int. J. Life Cycle Assess. 23, 759–772 (2018).

Curran, M. A. & Young, S. B. Critical review: a summary of the current state-of-practice. Int. J. Life Cycle Assess. 19, 1667–1673 (2014).

Li, J., Tian, Y. & Xie, K. Coupling big data and life cycle assessment: a review, recommendations, and prospects. Ecol. Indic. 153, 110455 (2023).

Wernet, G. et al. The ecoinvent database version 3 (part I): overview and methodology. Int. J. Life Cycle Assess. 21, 1218–1230 (2016).

Althaus, H.-J. & Classen, M. Life cycle inventories of metals and methodological aspects of inventorying material resources in ecoinvent. Int. J. Life Cycle Assess. 10, 43–49 (2005).

Martínez-Arce, A., O’Flaherty, V. & Styles, D. State-of-the-art in assessing the environmental performance of anaerobic digestion biorefineries. Resour. Conserv. Recycl. 207, 107660 (2024).

Duval-Dachary, S. et al. Life cycle assessment of bioenergy with carbon capture and storage systems: critical review of life cycle inventories. Renew. Sustain. Energy Rev. 183, 113415 (2023).

Nitschelm, L. et al. Improving estimates of nitrogen emissions for life cycle assessment of cropping systems at the scale of an agricultural territory. Environ. Sci. Technol. 52, 1330–1338 (2018).

Bamana, G., Miller, J. D., Young, S. L. & Dunn, J. B. Addressing the social life cycle inventory analysis data gap: insights from a case study of cobalt mining in the Democratic Republic of the Congo. One Earth 4, 1704–1714 (2021).

Ding, S., Cucurachi, S., Tukker, A. & Ward, H. The environmental benefits and burdens of RFID systems in Li-ion battery supply chains — an ex-ante LCA approach. Resour. Conserv. Recycl. 209, 107829 (2024).

Frischknecht, R. et al. The ecoinvent database: overview and methodological framework (7 pp). Int. J. Life Cycle Assess. 10, 3–9 (2005).

Lasvaux, S., Habert, G., Peuportier, B. & Chevalier, J. Comparison of generic and product-specific life cycle assessment databases: application to construction materials used in building LCA studies. Int. J. Life Cycle Assess. 20, 1473–1490 (2015).

Ingwersen, W. W. et al. A new data architecture for advancing life cycle assessment. Int. J. Life Cycle Assess. 20, 520–526 (2015).

Liu, B. & Rajagopal, D. Life-cycle energy and climate benefits of energy recovery from wastes and biomass residues in the United States. Nat. Energy 4, 700–708 (2019).

Aryan, Y., Dikshit, A. K. & Shinde, A. M. A critical review of the life cycle assessment studies on road pavements and road infrastructures. J. Environ. Manage. 336, 117697 (2023).

Kim, T., Benavides, P. T., Kneifel, J. D., Beers, K. L. & Hawkins, T. R. Cross-database comparisons on the greenhouse gas emissions, water consumption, and fossil-fuel use of plastic resin production and their post-use phase impacts. Resour. Conserv. Recycl. 198, 107168 (2023).

Gavankar, S. & Suh, S. Fusion of conflicting information for improving representativeness of data used in LCAs. Int. J. Life Cycle Assess. 19, 480–490 (2014).

Yang, Z. et al. Life cycle assessment and cost analysis for copper hydrometallurgy industry in China. J. Environ. Manage. 309, 114689 (2022).

Sanjuan-Delmás, D. et al. Environmental assessment of copper production in Europe: an LCA case study from Sweden conducted using two conventional software-database setups. Int. J. Life Cycle Assess. 27, 255–266 (2022).

Maalouf, A., Okoroafor, T., Jehl, Z., Babu, V. & Resalati, S. A comprehensive review on life cycle assessment of commercial and emerging thin-film solar cell systems. Renew. Sustain. Energy Rev. 186, 113652 (2023).

Agostini, A., Colauzzi, M. & Amaducci, S. Innovative agrivoltaic systems to produce sustainable energy: an economic and environmental assessment. Appl. Energy 281, 116102 (2021).

Turner, D. A., Williams, I. D. & Kemp, S. Greenhouse gas emission factors for recycling of source-segregated waste materials. Resour. Conserv. Recycl. 105, 186–197 (2015).

Terlouw, T., Bauer, C., Rosa, L. & Mazzotti, M. Life cycle assessment of carbon dioxide removal technologies: a critical review. Energy Environ. Sci. 14, 1701–1721 (2021).

Six, L. et al. Using the product environmental footprint for supply chain management: lessons learned from a case study on pork. Int. J. Life Cycle Assess. 22, 1354–1372 (2017).

Coulon, R., Camobreco, V., Teulon, H. & Besnainou, J. Data quality and uncertainty in LCI. Int. J. Life Cycle Assess. 2, 178 (1997).

Li, T., Zhang, H., Liu, Z., Ke, Q. & Alting, L. A system boundary identification method for life cycle assessment. Int. J. Life Cycle Assess. 19, 646–660 (2014).

Durucan, S., Korre, A. & Munoz-Melendez, G. Mining life cycle modelling: a cradle-to-gate approach to environmental management in the minerals industry. J. Clean. Prod. 14, 1057–1070 (2006).

Bishop, G., Styles, D. & Lens, P. N. L. Environmental performance comparison of bioplastics and petrochemical plastics: a review of life cycle assessment (LCA) methodological decisions. Resour. Conserv. Recycl. 168, 105451 (2021).

Hertwich, E. et al. Nullius in Verba: advancing data transparency in industrial ecology. J. Ind. Ecol. 22, 6–17 (2018).

Khadem, S. A., Bensebaa, F. & Pelletier, N. Optimized feed-forward neural networks to address CO2-equivalent emissions data gaps — application to emissions prediction for unit processes of fuel life cycle inventories for Canadian provinces. J. Clean. Prod. 332, 130053 (2022).

Nordelöf, A., Grunditz, E., Tillman, A.-M., Thiringer, T. & Alatalo, M. A scalable life cycle inventory of an electrical automotive traction machine — Part I: Design and composition. Int. J. Life Cycle Assess. 23, 55–69 (2018).

Williams, E. D., Weber, C. L. & Hawkins, T. R. Hybrid framework for managing uncertainty in life cycle inventories. J. Ind. Ecol. 13, 928–944 (2009).

Teng, Y., Li, C. Z., Shen, G. Q. P., Yang, Q. & Peng, Z. The impact of life cycle assessment database selection on embodied carbon estimation of buildings. Build. Environ. 243, 110648 (2023).

Safari, K. & AzariJafari, H. Challenges and opportunities for integrating BIM and LCA: methodological choices and framework development. Sustain. Cities Soc. 67, 102728 (2021).

Rybaczewska-Błażejowska, M. & Jezierski, D. Comparison of ReCiPe 2016, ILCD 2011, CML-IA baseline and IMPACT 2002+ LCIA methods: a case study based on the electricity consumption mix in Europe. Int. J. Life Cycle Assess. 29, 1799–1817 (2024).

United Nations Environment Programme. ecoinventEFv3.7-ILCD-EFv3.0.xlsx [data set]. GitHub https://github.com/UNEP-Economy-Division/GLAD-ElementaryFlowResources/blob/master/Mapping/Output/Mapped_files/ecoinventEFv3.7-ILCD-EFv3.0.xlsx (2022).

Huijbregts, M. A. J. et al. ReCiPe2016: a harmonised life cycle impact assessment method at midpoint and endpoint level. Int. J. Life Cycle Assess. 22, 138–147 (2017).

Guo, J. et al. Shedding light on the shadows transparency challenge in background life cycle inventory data. J. Ind. Ecol. 29, 766–776 (2025).

Intergovernmental Panel on Climate Change. Climate Change 2023: Synthesis Report (IPCC, 2023).

Pauliuk, S. Critical appraisal of the circular economy standard BS 8001:2017 and a dashboard of quantitative system indicators for its implementation in organizations. Resour. Conserv. Recycl. 129, 81–92 (2018).

Frischknecht, R. Notions on the design and use of an ideal regional or global LCA database. Int. J. Life Cycle Assess. 11, 40–48 (2006).

Hosseinzadeh-Bandbafha, H., Tabatabaei, M., Aghbashlo, M., Khanali, M. & Demirbas, A. A comprehensive review on the environmental impacts of diesel/biodiesel additives. Energy Convers. Manag. 174, 579–614 (2018).

Hischier, R. et al. Guidelines for consistent reporting of exchanges from/to nature within life cycle inventories (LCI). Int. J. Life Cycle Assess. 6, 192 (2001).

Arshad, F. et al. Life cycle assessment of lithium-ion batteries: a critical review. Resour. Conserv. Recycl. 180, 106164 (2022).

Gallego-Schmid, A. & Tarpani, R. R. Z. Life cycle assessment of wastewater treatment in developing countries: a review. Water Res. 153, 63–79 (2019).

Igos, E., Benetto, E., Meyer, R., Baustert, P. & Othoniel, B. How to treat uncertainties in life cycle assessment studies? Int. J. Life Cycle Assess. 24, 794–807 (2019).

ecoinvent. lcia/3.10/methods_mapped. GitHub https://github.com/ecoinvent/lcia/tree/master/3.10/methods_mapped (2024).

Bergerson, J. A. et al. Life cycle assessment of emerging technologies: evaluation techniques at different stages of market and technical maturity. J. Ind. Ecol. 24, 11–25 (2020).

Wilkinson, M. D. et al. The FAIR guiding principles for scientific data management and stewardship. Sci. Data 3, 160018 (2016).

Recchioni, M., Blengini, G. A., Fazio, S., Mathieux, F. & Pennington, D. Challenges and opportunities for web-shared publication of quality-assured life cycle data: the contributions of the life cycle data network. Int. J. Life Cycle Assess. 20, 895–902 (2015).

Liu, J. et al. New indices to capture the evolution characteristics of urban expansion structure and form. Ecol. Indic. 122, 107302 (2021).

Ohms, P., Andersen, C., Landgren, M. & Birkved, M. Decision support for large-scale remediation strategies by fused urban metabolism and life cycle assessment. Int. J. Life Cycle Assess. 24, 1254–1268 (2019).

Feifel, S., Walk, W. & Wursthorn, S. LCA, how are you doing today? A snapshot from the 5th German LCA workshop. Int. J. Life Cycle Assess. 15, 139–142 (2010).

Wang, X. et al. Emergy analysis of grain production systems on large-scale farms in the North China plain based on LCA. Agric. Syst. 128, 66–78 (2014).

Hoogmartens, R., Van Passel, S., Van Acker, K. & Dubois, M. Bridging the gap between LCA, LCC and CBA as sustainability assessment tools. Environ. Impact Assess. Rev. 48, 27–33 (2014).

Ghose, A. Can LCA be FAIR? Assessing the status quo and opportunities for FAIR data sharing. Int. J. Life Cycle Assess. 29, 733–744 (2024).

Donaldson, A. & PRé Sustainability. How to Export from SimaPro to ILCD Packages (PRé Sustainability, 2023).

Safwat, S. M., Mohamed, N. Y. & El-Seddik, M. M. Performance evaluation and life cycle assessment of electrocoagulation process for manganese removal from wastewater using titanium electrodes. J. Environ. Manage. 328, 116967 (2023).

Zeug, W., Bezama, A. & Thrän, D. A framework for implementing holistic and integrated life cycle sustainability assessment of regional bioeconomy. Int. J. Life Cycle Assess. 26, 1998–2023 (2021).

International Organization for Standardization. ISO 14025:2006 Environmental Labels and Declarations — Type III Environmental Declarations — Principles and Procedures (ISO, 2006).

Sugiyama, H., Fukushima, Y., Hirao, M., Hellweg, S. & Hungerbühler, K. Using standard statistics to consider uncertainty in industry-based life cycle inventory databases. Int. J. Life Cycle Assess. 10, 399–405 (2005).

Hélias, A. & Servien, R. Normalization in LCA: how to ensure consistency? Int. J. Life Cycle Assess. 26, 1117–1122 (2021).

Herrmann, I. T., Hauschild, M. Z., Sohn, M. D. & McKone, T. E. Confronting uncertainty in life cycle assessment used for decision support. J. Ind. Ecol. 18, 366–379 (2014).

Duan, Y., Dai, M., Wang, J. & Wang, Y. Bamboo pulp as a sustainable alternative in China’s pulp industry: economic and environmental assessment. Environ. Impact Assess. Rev. 115, 107966 (2025).

Cai, Z. et al. Life cycle greenhouse gas emissions and mitigation opportunities of high-speed train in China. J. Clean. Prod. 504, 145422 (2025).

Collet, P., Lardon, L., Steyer, J.-P. & Hélias, A. How to take time into account in the inventory step: a selective introduction based on sensitivity analysis. Int. J. Life Cycle Assess. 19, 320–330 (2014).

Steubing, B., Mendoza Beltran, A. & Sacchi, R. Conditions for the broad application of prospective life cycle inventory databases. Int. J. Life Cycle Assess. 28, 1092–1103 (2023).

Mathe, S. Integrating participatory approaches into social life cycle assessment: the SLCA participatory approach. Int. J. Life Cycle Assess. 19, 1506–1514 (2014).

Lahtinen, S. & Yrjölä, M. Managing sustainability transformations: a managerial framing approach. J. Clean. Prod. 223, 815–825 (2019).

Seidel, C. The application of life cycle assessment to public policy development. Int. J. Life Cycle Assess. 21, 337–348 (2016).

Bjørn, A. et al. Mapping and characterization of LCA networks. Int. J. Life Cycle Assess. 18, 812–827 (2013).

Muntwyler, A., Braunschweig, A. & Rosa, F. Collaboration within and beyond the LCA community: success stories, obstacles, and solutions — 79th LCA discussion forum on life cycle assessment, 18 November 2021. Int. J. Life Cycle Assess. 27, 623–626 (2022).

Fritter, M., Lawrence, R., Marcolin, B. & Pelletier, N. A survey of life cycle inventory database implementations and architectures, and recommendations for new database initiatives. Int. J. Life Cycle Assess. 25, 1522–1531 (2020).

Lopes Silva, D. A. et al. Why using different life cycle assessment software tools can generate different results for the same product system? A cause–effect analysis of the problem. Sustain. Prod. Consum. 20, 304–315 (2019).

TianGong LCA. TianGong LCA data system (TIDAS) — introduction. TianGong LCA https://tidas.tiangong.earth/en/docs/intro (2025).

Edelen, A. et al. Critical review of elementary flows in LCA data. Int. J. Life Cycle Assess. 23, 1261–1273 (2018).

Kuczenski, B., Davis, C. B., Rivela, B. & Janowicz, K. Semantic catalogs for life cycle assessment data. J. Clean. Prod. 137, 1109–1117 (2016).

Yang, S. et al. Cement production life cycle inventory dataset for China. Resour. Conserv. Recycl. 197, 107064 (2023).

Jegen, M. Life cycle assessment: from industry to policy to politics. Int. J. Life Cycle Assess. 29, 597–606 (2024).

Rosenbaum, R. K. et al. USEtox — the UNEP-SETAC toxicity model: recommended characterisation factors for human toxicity and freshwater ecotoxicity in life cycle impact assessment. Int. J. Life Cycle Assess. 13, 532–546 (2008).

Sonnemann, G. et al. Process on ‘global guidance for LCA databases’. Int. J. Life Cycle Assess. 16, 95–97 (2011).

Moreno, O. A. V. et al. Implementation of life cycle management practices in a cluster of companies in Bogota, Colombia. Int. J. Life Cycle Assess. 20, 723–730 (2015).

Pauliuk, S., Majeau-Bettez, G., Mutel, C. L., Steubing, B. & Stadler, K. Lifting industrial ecology modeling to a new level of quality and transparency: a call for more transparent publications and a collaborative open source software framework. J. Ind. Ecol. 19, 937–949 (2015).

Stephan, A., Crawford, R. H. & Bontinck, P.-A. A model for streamlining and automating path exchange hybrid life cycle assessment. Int. J. Life Cycle Assess. 24, 237–252 (2019).

Ciroth, A. ICT for environment in life cycle applications openLCA — a new open source software for life cycle assessment. Int. J. Life Cycle Assess. 12, 209–210 (2007).

Testa, F., Tessitore, S., Buttol, P., Iraldo, F. & Cortesi, S. How to overcome barriers limiting LCA adoption? The role of a collaborative and multi-stakeholder approach. Int. J. Life Cycle Assess. 27, 944–958 (2022).

Jørgensen, A., Hauschild, M. Z., Jørgensen, M. S. & Wangel, A. Relevance and feasibility of social life cycle assessment from a company perspective. Int. J. Life Cycle Assess. 14, 204–214 (2009).

Speck, R., Selke, S., Auras, R. & Fitzsimmons, J. Life cycle assessment software: selection can impact results. J. Ind. Ecol. 20, 18–28 (2016).

Moreira, N., de Santa-Eulalia, L. A., Aït-Kadi, D., Wood-Harper, T. & Wang, Y. A conceptual framework to develop green textiles in the aeronautic completion industry: a case study in a large manufacturing company. J. Clean. Prod. 105, 371–388 (2015).

De Wolf, C., Cordella, M., Dodd, N., Byers, B. & Donatello, S. Whole life cycle environmental impact assessment of buildings: developing software tool and database support for the EU framework Level(s). Resour. Conserv. Recycl. 188, 106642 (2023).

Heidari, M. D., Mathis, D., Blanchet, P. & Amor, B. Streamlined life cycle assessment of an innovative bio-based material in construction: a case study of a phase change material panel. Forests 10, 160 (2019).

Jeong, M.-G., Morrison, J. R. & Suh, H.-W. Approximate life cycle assessment via case-based reasoning for eco-design. IEEE Trans. Autom. Sci. Eng. 12, 716–728 (2015).

Finogenova, N., Bach, V., Berger, M. & Finkbeiner, M. Hybrid approach for the evaluation of organizational indirect impacts (AVOID): combining product-related, process-based, and monetary-based methods. Int. J. Life Cycle Assess. 24, 1058–1074 (2019).

Ghose, A., Lissandrini, M., Hansen, E. R. & Weidema, B. P. A core ontology for modeling life cycle sustainability assessment on the semantic web. J. Ind. Ecol. 26, 731–747 (2022).

Turner, I., Smart, A., Adams, E. & Pelletier, N. Building an ILCD/EcoSPOLD2–compliant data-reporting template with application to Canadian agri-food LCI data. Int. J. Life Cycle Assess. 25, 1402–1417 (2020).

Von Greyerz, K., Tidåker, P., Karlsson, J. O. & Röös, E. A large share of climate impacts of beef and dairy can be attributed to ecosystem services other than food production. J. Environ. Manage. 325, 116400 (2023).

Mba Wright, M. et al. Life cycle inventory availability: status and prospects for leveraging new technologies. ACS Sustain. Chem. Eng. 12, 12708–12718 (2024).

Bengtsson, M., Carlson, R., Molander, S. & Steen, B. An approach for handling geographical information in life cycle assessment using a relational database. J. Hazard. Mater. 61, 67–75 (1998).

Meinshausen, I., Müller-Beilschmidt, P. & Viere, T. The EcoSpold 2 format — why a new format? Int. J. Life Cycle Assess. 21, 1231–1235 (2016).

Marsh, E., Allen, S. & Hattam, L. Tackling uncertainty in life cycle assessments for the built environment: a review. Build. Environ. 231, 109941 (2023).

Frischknecht, R. et al. The ecoinvent database: overview and methodological framework. Int. J. Life Cycle Assess. 10, 3–9 (2005).

Spatari, S., Betz, M., Florin, H., Baitz, M. & Faltenbacher, M. Using GaBi 3 to perform life cycle assessment and life cycle engineering. Int. J. Life Cycle Assess. 6, 81–84 (2001).

Steubing, B. & De Koning, D. Making the use of scenarios in LCA easier: the superstructure approach. Int. J. Life Cycle Assess. 26, 2248–2262 (2021).

Vandepaer, L. & Gibon, T. The integration of energy scenarios into LCA: LCM2017 conference workshop, Luxembourg, September 5, 2017. Int. J. Life Cycle Assess. 23, 970–977 (2018).

Toniolo, S., Pierli, G., Bravi, L., Liberatore, L. & Murmura, F. Digital technologies and circularity: trade-offs in the development of life cycle assessment. Int. J. Life Cycle Assess. https://doi.org/10.1007/s11367-025-02436-9 (2025).

Ostojic, S. & Traverso, M. Application of life cycle sustainability assessment in the automotive sector — a systematic literature review. Sustain. Prod. Consum. 47, 105–127 (2024).

Valente, A. et al. Elementary flow mapping across life cycle inventory data systems: a case study for data interoperability under the Global Life Cycle Assessment Data Access (GLAD) initiative. Int. J. Life Cycle Assess. 29, 789–802 (2024).

Mancini, L. et al. Potential of life cycle assessment for supporting the management of critical raw materials. Int. J. Life Cycle Assess. 20, 100–116 (2015).

Bartl, K., Verones, F. & Hellweg, S. Life cycle assessment based evaluation of regional impacts from agricultural production at the Peruvian coast. Environ. Sci. Technol. 46, 9872–9880 (2012).

Dervishaj, A. & Gudmundsson, K. From LCA to circular design: a comparative study of digital tools for the built environment. Resour. Conserv. Recycl. 200, 107291 (2024).

Petricek, T. et al. AI assistants: a framework for semi-automated data wrangling. IEEE Trans. Knowl. Data Eng. 35, 9295–9306 (2023).

Richards, D., Worden, D., Song, X. P. & Lavorel, S. Harnessing generative artificial intelligence to support nature-based solutions. People Nat. 6, 882–893 (2024).

Ghoroghi, A., Rezgui, Y., Petri, I. & Beach, T. Advances in application of machine learning to life cycle assessment: a literature review. Int. J. Life Cycle Assess. 27, 433–456 (2022).

Cornago, S., Ramakrishna, S. & Low, J. S. C. How can transformers and large language models like ChatGPT help LCA practitioners? Resour. Conserv. Recycl. 196, 107062 (2023).

Pigné, Y. et al. A tool to operationalize dynamic LCA, including time differentiation on the complete background database. Int. J. Life Cycle Assess. 25, 267–279 (2020).

Beemsterboer, S., Baumann, H. & Wallbaum, H. Ways to get work done: a review and systematisation of simplification practices in the LCA literature. Int. J. Life Cycle Assess. 25, 2154–2168 (2020).

McArthur, S. D. J. et al. Multi-agent systems for power engineering applications — Part I: Concepts, approaches, and technical challenges. IEEE Trans. Power Syst. 22, 1743–1752 (2007).

Wu, Y., Wang, J., Miao, X., Wang, W. & Yin, J. Differentiable and scalable generative adversarial models for data imputation. IEEE Trans. Knowl. Data Eng. 36, 490–503 (2024).

Chen, J. et al. Zero-shot and few-shot learning with knowledge graphs: a comprehensive survey. Proc. IEEE 111, 653–685 (2023).

Duch, W., Setiono, R. & Zurada, J. M. Computational intelligence methods for rule-based data understanding. Proc. IEEE 92, 771–805 (2004).

Omoyele, O. et al. Increasing the resolution of solar and wind time series for energy system modeling: a review. Renew. Sustain. Energy Rev. 189, 113792 (2024).

Chen, M., Shao, H., Dou, H., Li, W. & Liu, B. Data augmentation and intelligent fault diagnosis of planetary gearbox using ILoFGAN under extremely limited samples. IEEE Trans. Reliab. 72, 1029–1037 (2023).

Ren, Z. J. et al. Data science for advancing environmental science, engineering, and technology. Environ. Sci. Technol. Lett. 10, 963–964 (2023).

Damert, M., Feng, Y., Zhu, Q. & Baumgartner, R. J. Motivating low-carbon initiatives among suppliers: the role of risk and opportunity perception. Resour. Conserv. Recycl. 136, 276–286 (2018).

Tu, Q., Guo, J., Li, N., Qi, J. & Xu, M. Mitigating grand challenges in life cycle inventory modeling through the applications of large language models. Environ. Sci. Technol. 58, 19595–19603 (2024).

Balaji, B. et al. Emission factor recommendation for life cycle assessments with generative AI. Environ. Sci. Technol. 59, 9113–9122 (2025).

Gu, X., Chen, C., Fang, Y., Mahabir, R. & Fan, L. CECA: an intelligent large-language-model-enabled method for accounting embodied carbon in buildings. Build. Environ. 272, 112694 (2025).

Preuss, N., Alshehri, A. S. & You, F. Large language models for life cycle assessments: opportunities, challenges, and risks. J. Clean. Prod. 466, 142824 (2024).

Su, S., Ju, J., Yuan, J., Chang, Y. & Li, Q. Interactive and dynamic insights into environmental impacts of a neighborhood: a tight coupling of multi-agent system and dynamic life cycle assessment. Environ. Impact Assess. Rev. 110, 107708 (2025).

TianGong LCA. TianGong LCA data system (TIDAS) — MCP AI services. TianGong LCA https://tidas.tiangong.earth/en/docs/integration/tidas-to-ai/ (2025).

Panesar, D. K., Seto, K. E. & Churchill, C. J. Impact of the selection of functional unit on the life cycle assessment of green concrete. Int. J. Life Cycle Assess. 22, 1969–1986 (2017).

Ingwersen, W. W., Kahn, E. & Cooper, J. Bridge processes: a solution for LCI datasets independent of background databases. Int. J. Life Cycle Assess. 23, 2266–2270 (2018).

Zhang, P. et al. BC-EdgeFL: a defensive transmission model based on blockchain-assisted reinforced federated learning in IIoT environment. IEEE Trans. Ind. Inform. 18, 3551–3561 (2022).

Lei, Y.-T. et al. A renewable energy microgrids trading management platform based on permissioned blockchain. Energy Econ. 115, 106375 (2022).

Bellaj, B., Ouaddah, A., Bertin, E., Crespi, N. & Mezrioui, A. Drawing the boundaries between blockchain and blockchain-like systems: a comprehensive survey on distributed ledger technologies. Proc. IEEE 112, 247–299 (2024).

Ribeiro da Silva, E., Lohmer, J., Rohla, M. & Angelis, J. Unleashing the circular economy in the electric vehicle battery supply chain: a case study on data sharing and blockchain potential. Resour. Conserv. Recycl. 193, 106969 (2023).

Shou, M. & Domenech, T. Integrating LCA and blockchain technology to promote circular fashion – a case study of leather handbags. J. Clean. Prod. 373, 133557 (2022).

Kirli, D. et al. Smart contracts in energy systems: a systematic review of fundamental approaches and implementations. Renew. Sustain. Energy Rev. 158, 112013 (2022).

Han, D. et al. A blockchain-based auditable access control system for private data in service-centric IoT environments. IEEE Trans. Ind. Inform. 18, 3530–3540 (2022).

Tang, J. & Ding, W. Assessing local economic impact of urban energy transition through optimized resource allocation in renewable energy. Sustain. Cities Soc. 107, 105433 (2024).

Pei, J. A survey on data pricing: from economics to data science. IEEE Trans. Knowl. Data Eng. 34, 4586–4608 (2022).

Zhang, S., Rong, J. & Wang, B. A privacy protection scheme of smart meter for decentralized smart home environment based on consortium blockchain. Int. J. Electr. Power Energy Syst. 121, 106140 (2020).

Mu, C. et al. Energy block-based peer-to-peer contract trading with secure multi-party computation in nanogrid. IEEE Trans. Smart Grid 13, 4759–4772 (2022).

Mutel, C. L., Pfister, S. & Hellweg, S. GIS-based regionalized life cycle assessment: how big is small enough? Methodology and case study of electricity generation. Environ. Sci. Technol. 46, 1096–1103 (2012).

Li, J., Tian, Y., Zhang, Y. & Xie, K. Spatializing environmental footprint by integrating geographic information system into life cycle assessment: a review and practice recommendations. J. Clean. Prod. 323, 129113 (2021).