Abstract

Accurate differential diagnosis of dementia is essential for guiding timely treatment, particularly as anti-amyloid therapies become more widely available and require precise patient characterization. Here, we developed a radiomics-based machine learning (ML) approach to enhance neuroimaging assessments in distinguishing Alzheimer’s disease (AD) from other imaging-evident dementias (OIED). We retrospectively analyzed 1041 individuals from the National Alzheimer’s Coordinating Center with confirmed dementia diagnoses and at least one T1 or T2/FLAIR MRI scan. Using FastSurfer and a Lesion Prediction Algorithm, we extracted volumetric and lesion features, which were then used to train ML models. Model performance was compared to the independent evaluations of seven fellowship-trained neuroradiologists. The classifier achieved an AUROC of 0.79 ± 0.01 for AD and 0.66 ± 0.03 for OIED, performing comparably to expert assessments. Interpretation using SHAP values showed strong agreement with imaging features known to be associated with AD or OIED, respectively. These findings highlight the potential of radiomics to augment neuroimaging workflows.


Introduction

Dementia is a clinical syndrome characterized by progressive cognitive impairment resulting from a variety of underlying causes. These causes frequently overlap, making it challenging to pinpoint the specific pathological diagnosis. Neuroimaging assessments play an important role in the diagnostic workup of dementia1. Due to overlapping imaging features and subtle abnormalities, interobserver variability among neuroradiologists can complicate accurate diagnosis2. Recent advancements in novel anti-amyloid therapies for Alzheimer’s disease (AD) further underscore the importance of accurate radiological assessments, as these interventions rely on precise identification of suitable candidates with specific neurodegenerative etiologies. For instance, current guidelines for lecanemab specify that brain MRI findings indicative of non-AD dementias serve as an exclusion criterion for clinical use3. Similarly, donanemab appropriate use recommendations also exclude individuals with major vascular contributions to cognitive impairment, as well as significant neurological disease other than AD affecting the central nervous system4.

The primary roles of a neuroradiologist in the evaluation of a patient with suspected dementia are to exclude reversible causes of cognitive impairment and otherwise to identify regional brain atrophy or signal characteristics that suggest or support AD or non-AD causes of dementia5,6,7,8. Certain types of dementia have classic, near-pathognomonic MRI features, such as cortical ribbon diffusion restriction in Creutzfeldt-Jakob disease, and the “hot cross bun sign,” which, although seen in several conditions, is classically associated with multiple system atrophy9,10. For other types of dementia, visual rating scales have been proposed for grading the associated MRI findings10, such as the Fazekas scale for white matter disease and atrophy grading for AD11. Such approaches have proven useful in research settings, where visual rating scales allow clinicians to discriminate between different dementia etiologies, including AD, dementia with Lewy bodies, and frontotemporal lobar degeneration (FTLD)12. However, visual ratings may depend on radiologist training and experience and are ultimately subject to rater subjectivity13. Radiomic analysis driven by machine learning (ML) holds promise for addressing such challenges in the differential diagnosis of dementia14, especially in the context of overlapping clinical presentations and subtle imaging signatures10.

AI has proven useful in supporting neuroradiology diagnostics. Several AI models, including those incorporated into commercial products from companies such as RAPID and Viz.ai15, can detect potential intracranial hemorrhage and other acute intracranial pathologies, which could be used to expedite human interpretation and surgical management or to assist in diagnosis. In contrast to these more discrete classification systems, other joint AI-radiologist decision systems may use “heat maps” with indices of suspicion to highlight areas of concern, helping radiologists adjust their search patterns16. Collectively, supporting radiologist interpretation with AI inference has been shown to improve diagnostic accuracy across several tasks17,18,19,20,21. Thus, even in a radiology practice that uses visual rating scales in its day-to-day workflow, we posit that AI-based support could help improve accuracy and consistency.

Several studies have used ML models for imaging-based analyses of neurodegeneration, with the majority focused on assessing cognitive status, such as distinguishing AD from normal cognition22, or on prognostic measures, such as conversion from mild cognitive impairment (MCI) to AD14,23. Prognostic modeling efforts have largely centered on MCI-to-AD conversion using MRI, PET, CSF, and clinical features, with multimodal approaches tending to outperform single-modality approaches14,24. The TADPOLE Challenge prospectively evaluated >90 prognostic modeling algorithms on the ADNI dataset across several outcomes: clinical diagnosis, ADAS-Cog13, and ventricular volume25. The challenge demonstrated the feasibility of forecasting future diagnoses and ventricular changes. Importantly, however, several studies have raised concerns about generalizability beyond tightly controlled cohorts such as ADNI26,27. Methods used for prognostication include established classifiers, such as logistic regression, support vector machines (SVMs), random forests, and convolutional neural networks (CNNs), as well as newer frameworks such as SuStaIn28, an event-based probabilistic model that aims to uncover and model disease-specific temporal trajectories, and hybrid CNN-LSTM deep learning networks that learn temporal features29.

While these advances are significant, they do not specifically address the common radiological challenge of differentiating between often overlapping dementia etiologies in individuals with cognitive impairment30. Relatively few investigations have tackled multi-etiology classification, and those that do typically use modest, homogeneous, single-center cohorts or ignore the practical reality of mixed dementias3. Among studies investigating radiomic approaches for the etiological classification of dementia30, validation against expert interpretation is also rare. Notably, previous work has demonstrated that ML models could classify AD, frontotemporal dementias, and controls with accuracy comparable to that of radiologists31. A recent study by Xue and colleagues also showed that multimodal frameworks integrating structural MRI with demographic and clinical data can significantly improve the assessment of dementia etiologies32. There is also growing interest in leveraging radiomic tools to enhance radiological workflows through assistive frameworks that complement neuroradiologist expertise. In addition, computerized systems could optimize clinical trial recruitment workflows, thereby accelerating the development of novel therapeutics33. Together, these considerations underscore the importance of radiomic techniques for addressing current challenges in the dementia workup.

In this study, we sought to evaluate the potential of radiomic analysis to augment neuroradiologists’ assessment of neuroimaging in the context of AD and other imaging-evident dementia (OIED) etiologies. Using participant data from the National Alzheimer’s Coordinating Center (NACC), we extracted radiomic features from T1-weighted and T2/FLAIR brain MRI scans and used them to train gradient-boosted decision tree classifiers that predict the independent probabilities of AD and OIED etiologies in individuals with prior clinical diagnoses of dementia. This approach accounts for the possibility that imaging features relevant to both AD and OIED may be present in a given subject, and it aligns with common neuroradiological workflows. The use of both T1 and T2/FLAIR sequences is motivated by their complementary information and is supported by prior work demonstrating the value of multimodal imaging: T1-weighted scans are critical for detecting the cortical and subcortical atrophy typical of AD and frontotemporal dementias, while T2/FLAIR imaging is essential for identifying white matter hyperintensities (WMHs) and vascular lesions relevant to vascular and prion-related dementias34. The framework was examined with interpretability methods and benchmarked against expert assessments from neuroradiologists to determine the extent to which the radiomic model could enhance expert evaluations.

Results

Model performance and validation

Age, gender, education, and race did not differ significantly between the testing and training groups [p > 0.05] (Table 1). ROC curves generated from the AD and OIED models using 5-fold cross-validation showed that both models significantly outperformed chance (Fig. 1a) [p < 0.001]. The mean cross-validated area under the receiver operating characteristic curve (AUROC) was 0.82 ± 0.05 (±sd) for the AD model and slightly lower, 0.76 ± 0.02, for the OIED model. The AD model achieved a mean average precision (AP) of 0.95 ± 0.02, whereas the OIED model achieved an AP of 0.37 ± 0.09 (Fig. 1b). The gap in AP between the AD and OIED models may be exacerbated by skewness in the diagnostic distribution of cases: AP depends on class prevalence (a classifier that ranks cases at random has an expected AP approximately equal to the fraction of positive cases), so labels with fewer positive instances tend to yield lower AP. In the test set, the Youden-index threshold yielded the following operating points: AD, sensitivity 0.49, specificity 0.79, F1 0.60; OIED, sensitivity 0.82, specificity 0.31, F1 0.58. Thresholds optimized for ≥95% sensitivity boosted recall but halved specificity, whereas thresholds optimized for ≥95% specificity did the converse, nearly eliminating false positives while sharply reducing true-case detection. Intermediate 80% targets offered a compromise, modestly favoring the chosen metric while preserving a balanced prediction profile (Supplementary Table 7). Using each model’s Youden-index threshold, we calculated mixed-etiology performance for the subset of test cases carrying both AD and OIED labels (support = 11/70), obtaining an accuracy of 0.70, sensitivity of 0.45, specificity of 0.75, precision of 0.25, and F1 of 0.32. Among test-set cases for which post-mortem grades were available (n = 26/70), the model-predicted probability of AD was significantly associated with neurofibrillary degeneration (p < 0.001) (Fig. S1).
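As an illustration of how a Youden-index operating point can be chosen, the minimal sketch below scans every observed score as a candidate threshold and keeps the one that maximizes J = sensitivity + specificity − 1. It assumes that a score at or above the threshold is called positive; the labels and probabilities are invented toy values, not study data. In the study, thresholds were derived during training and cross-validation and then applied to the test set.

```python
from typing import Dict, List


def confusion_counts(y_true: List[int], scores: List[float], threshold: float) -> Dict[str, int]:
    """Count TP/FP/TN/FN, calling a case positive when its score is >= threshold."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for label, score in zip(y_true, scores):
        predicted_positive = score >= threshold
        if predicted_positive and label == 1:
            counts["tp"] += 1
        elif predicted_positive:
            counts["fp"] += 1
        elif label == 1:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


def threshold_metrics(y_true: List[int], scores: List[float], threshold: float) -> Dict[str, float]:
    """Sensitivity, specificity, and F1 at a fixed threshold."""
    c = confusion_counts(y_true, scores, threshold)
    return {
        "sensitivity": c["tp"] / (c["tp"] + c["fn"]),
        "specificity": c["tn"] / (c["tn"] + c["fp"]),
        "f1": 2 * c["tp"] / (2 * c["tp"] + c["fp"] + c["fn"]),
    }


def youden_threshold(y_true: List[int], scores: List[float]) -> float:
    """Return the observed score that maximizes Youden's J = sensitivity + specificity - 1."""
    best_threshold, best_j = min(scores), float("-inf")
    for t in sorted(set(scores)):
        m = threshold_metrics(y_true, scores, t)
        j = m["sensitivity"] + m["specificity"] - 1
        if j > best_j:  # strict '>' keeps the lowest threshold when J ties
            best_threshold, best_j = t, j
    return best_threshold


# Hypothetical toy data: 4 negatives followed by 5 positives.
labels = [0, 0, 0, 0, 1, 1, 1, 1, 1]
probs = [0.1, 0.3, 0.4, 0.75, 0.2, 0.6, 0.8, 0.85, 0.9]
t = youden_threshold(labels, probs)
print(t, threshold_metrics(labels, probs, t))  # 0.8 {'sensitivity': 0.6, 'specificity': 1.0, 'f1': 0.75}
```

Because J weights sensitivity and specificity equally, the selected point need not be "balanced" in the sense of equal sensitivity and specificity; it simply maximizes their sum.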

Cross-validated receiver operating characteristic (ROC) and precision-recall (PR) curves for the training set (n = 971) showing performance for two classification tasks: Alzheimer’s disease (AD, blue) and other imaging-evident dementias (OIED, orange). a The mean ROC curve, with the area under the curve (AUC) for AD as 0.82 ± 0.05 and for OIED as 0.76 ± 0.02. b The mean PR curve, with the average precision (AP) for AD as 0.95 ± 0.02 and for OIED as 0.37 ± 0.09. The shaded regions represent ±1 standard deviation.

Validation against neuroradiologist assessments

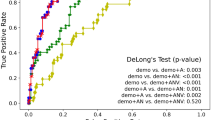

Neuroradiologist interrater agreement on etiological classification was moderate. The mean Spearman correlation coefficient between unique neuroradiologist pairs was 0.46 (95% CI: 0.23, 0.62) for the classification of AD and 0.33 (95% CI: −0.07, 0.62) for OIED. The performance of model-augmented neuroradiologists was estimated by averaging, at equal weights, each neuroradiologist’s confidence score (0–100%) with the model probability (0–100%) for each diagnostic label and case (Fig. 2). Neuroradiologists combined with the model outperformed neuroradiologists acting alone on both diagnostic tasks and for both performance metrics (p < 0.05). Model augmentation improved the mean AUROC and mean AP for the classification of AD by 5.44% and 5.21%, respectively, and for the classification of OIED by 7.64% and 8.25%, respectively. To further explore how varying levels of AI assistance may influence diagnostic performance, we evaluated different weighting ratios for fusing model probabilities with neuroradiologist confidence scores (Fig. S2). As measured by AUROC and AUPR, the combined performance for AD improved monotonically with increasing model weight, whereas for OIED it peaked at 80% model weight before declining slightly.
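The weighted fusion of rater confidence and model probability can be sketched as follows, assuming both inputs are on a 0–100 scale and computing AUROC as the probability that a randomly chosen positive case outranks a randomly chosen negative one (ties count one half). The labels and scores are hypothetical, and AUPR is omitted for brevity; a weight of 0.5 corresponds to the equal-weight averaging described above, and sweeping the weight mirrors the weighting-ratio analysis.

```python
from typing import List


def auroc(y_true: List[int], scores: List[float]) -> float:
    """AUROC as P(score of a random positive > score of a random negative); ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def fuse(rater: List[float], model: List[float], model_weight: float = 0.5) -> List[float]:
    """Weighted mean of rater confidence and model probability, both on a 0-100 scale."""
    if not 0.0 <= model_weight <= 1.0:
        raise ValueError("model_weight must lie in [0, 1]")
    return [(1.0 - model_weight) * r + model_weight * m for r, m in zip(rater, model)]


# Hypothetical toy data: 2 positive and 2 negative cases for one diagnostic label.
labels = [1, 1, 0, 0]
rater_conf = [60, 40, 50, 30]
model_prob = [70, 80, 20, 60]

for w in (0.0, 0.5, 1.0):
    print(w, auroc(labels, fuse(rater_conf, model_prob, w)))
# 0.0 0.75
# 0.5 1.0
# 1.0 1.0
```

In this toy case the rater alone misranks one positive below one negative; averaging with the model corrects that ordering, which is the mechanism by which fusion can raise AUROC.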

Performance comparison between independent neuroradiologists (n = 7) and neuroradiologists aided by the AI model for the classification of AD and OIED, based on confidence scores for n = 70 cases (demographics detailed in Table 1). The green dashed line represents the performance of the AI model alone on the same cases. a AUROC scores for AD (left) and OIED (right). b AUPR scores for AD (left) and OIED (right). Neuroradiologist + AI model confidence scores were computed by taking the arithmetic mean of the independent neuroradiologist scores and the AI model scores for each task. The boxplots represent the distribution of neuroradiologist and neuroradiologist + AI model performance, with whiskers extending to 1.5 times the interquartile range (IQR), boxes representing the first and third quartiles, and the central line denoting the median. The orange points represent each neuroradiologist’s performance, connected by lines to indicate changes in performance when aided by the AI model. Statistical comparisons were conducted using a one-sided Wilcoxon signed-rank test. For AD AUROC, W = 28, p = 0.0078; for OIED AUROC, W = 28, p = 0.0078; for AD AUPR, W = 27, p = 0.016; and for OIED AUPR, W = 28, p = 0.0078. These results indicate a statistically significant improvement (α = 0.05) in performance when neuroradiologists are aided by the AI model across both classification tasks.
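The reported test statistics can be reproduced from first principles. With seven paired differences and no ties or zeros, W (the sum of ranks of positive differences) has a maximum of 1 + 2 + … + 7 = 28, and under the null hypothesis each of the 2^7 = 128 sign patterns is equally likely. The sketch below, a brute-force exact test rather than any particular library's implementation, enumerates those patterns to obtain the one-sided p-value.

```python
from itertools import product
from typing import Sequence


def w_plus(before: Sequence[float], after: Sequence[float]) -> int:
    """Sum of ranks of positive paired differences (after - before).

    Assumes no zero differences and no tied absolute differences, as in the exact test.
    """
    diffs = [a - b for a, b in zip(after, before)]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    return sum(rank for rank, i in enumerate(order, start=1) if diffs[i] > 0)


def exact_p_greater(w: int, n: int) -> float:
    """Exact one-sided p-value P(W+ >= w) under H0 for n paired differences.

    Under H0, each rank 1..n is equally likely to carry a + or - sign,
    so all 2**n sign patterns are equiprobable.
    """
    hits = 0
    for signs in product((False, True), repeat=n):
        if sum(rank for rank, positive in zip(range(1, n + 1), signs) if positive) >= w:
            hits += 1
    return hits / 2 ** n


print(exact_p_greater(28, 7))  # 0.0078125 (= 1/128)
print(exact_p_greater(27, 7))  # 0.015625  (= 2/128)
```

W = 28 means every neuroradiologist improved with model assistance; W = 27 means all but the smallest-magnitude difference were improvements. These values match the p = 0.0078 and p = 0.016 reported above, which also shows that with only seven raters p = 1/128 is the smallest attainable one-sided p-value.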

Model parameter-based feature importance

We derived importance metrics, including feature gain and feature weight, from the trained model parameters (Supplementary Tables 2 and 3). For T1-weighted MRI, parameter-derived feature importance highlights brain regions relevant to AD’s well-defined patterns of neurodegeneration, including the hippocampus, cingulate gyrus, and lateral temporal lobes (Fig. 3a). The model also places high importance on brainstem volumes, although neuroradiologists showed poor interrater agreement for ratings of brainstem atrophy (Table 2, Figs. S4 and S5). On T2-weighted imaging, the AD model showed a widespread pattern affecting much of the cerebrum and basal ganglia. In contrast, the OIED model showed more widespread brain involvement on both T1 and T2 imaging, likely reflecting the more global brain involvement of prion disease (PRD) and vascular dementia (VD) and the heterogeneity of the disease entities (Fig. 3c). WMHs had high feature weights for both AD (Fig. 3b) and OIEDs (Fig. 3d), likely owing to the known relationships between WMHs and both VD and AD. Demographic variables such as age and sex also had high feature importance in both the AD and OIED models (Fig. 3b, d), suggesting that demographic variables are needed alongside imaging features to contextualize volume loss. The influence of demographic variables such as age, race, and sex is more pronounced in AD than in OIEDs, whereas years of education are more important in OIEDs than in AD35.
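The difference between the two parameter-derived metrics can be seen in a small sketch. In the xgboost Python package, `Booster.get_score(importance_type=...)` reports `"weight"` (how many splits use a feature), `"gain"` (the average loss reduction of those splits), and `"total_gain"`. The code below does not call xgboost; it aggregates a hypothetical list of (feature, loss reduction) split records in the same way, to show how a frequently used feature can still rank low by gain. The feature names and split values are invented.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def split_importance(splits: List[Tuple[str, float]]) -> Dict[str, Dict[str, float]]:
    """Aggregate (feature, loss_reduction) split records into weight, gain, and total gain."""
    count: Dict[str, int] = defaultdict(int)
    total: Dict[str, float] = defaultdict(float)
    for feature, loss_reduction in splits:
        count[feature] += 1
        total[feature] += loss_reduction
    return {
        f: {"weight": count[f], "gain": total[f] / count[f], "total_gain": total[f]}
        for f in count
    }


# Hypothetical split records pooled over all trees of a fitted ensemble.
splits = [
    ("Left-Hippocampus", 4.0), ("Left-Hippocampus", 2.0),
    ("age", 1.0), ("age", 0.5), ("age", 0.5),
    ("WMH_total", 6.0),
]
for feature, imp in split_importance(splits).items():
    print(feature, imp)
```

Here `age` has the highest weight (3 splits) but the lowest average gain, while `WMH_total` is used only once but with the largest gain, which is why the two metrics are reported separately.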

SHAP analysis

Regions such as the right entorhinal cortex, left amygdala, right hippocampus, and inferior parietal volumes exhibited SHapley Additive exPlanations (SHAP) values for AD that were negatively correlated with regional volume (Fig. S3), consistent with the patterns of atrophy seen in AD. Variables such as age, right hippocampal volume, and right inferior parietal volume showed positive associations with AD and negative associations with OIEDs (Fig. 3). Left lateral orbitofrontal volume was highly important for OIED predictions, likely owing to the volume loss seen in frontotemporal dementia (FTD). Supporting this observation, subgroup SHAP analysis of individuals with FTD as a contributing etiology (n = 135) demonstrated high feature importance of left lateral orbitofrontal volume (Supplementary Table 4). Likewise, subgroup SHAP analysis of individuals with VD (n = 77) demonstrated high importance of white matter hyperintensities (Supplementary Table 5), whereas the PRD subgroup analysis was largely unremarkable, perhaps owing to the smaller sample size (n = 31) (Supplementary Table 6).
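For tree ensembles, SHAP values are usually computed with TreeSHAP (for example, `shap.TreeExplainer` in the `shap` package), which avoids enumerating feature coalitions. To show what a SHAP value represents, the brute-force sketch below computes exact Shapley values for a hypothetical three-feature scoring function, using a single reference case to supply the values of "absent" features. The model, feature names, and values are invented for illustration and are not the study's classifier.

```python
from itertools import combinations
from math import factorial, isclose
from typing import Callable, Dict, FrozenSet

Features = Dict[str, float]


def exact_shapley(f: Callable[[Features], float], x: Features, baseline: Features) -> Features:
    """Exact Shapley values of f at x; features outside a coalition take baseline values.

    phi_i = sum over coalitions S not containing i of
            |S|! (n - |S| - 1)! / n! * (v(S + {i}) - v(S)).
    """
    names = list(x)
    n = len(names)

    def v(coalition: FrozenSet[str]) -> float:
        return f({k: x[k] if k in coalition else baseline[k] for k in names})

    phi: Features = {}
    for i in names:
        others = [k for k in names if k != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (v(s | {i}) - v(s))
        phi[i] = total
    return phi


def toy_model(z: Features) -> float:
    """Hypothetical score: a smaller hippocampus raises it; age and WMH interact."""
    return -2.0 * z["hippocampus"] + 0.1 * z["age"] + 0.01 * z["age"] * z["wmh"]


x = {"hippocampus": 3.0, "age": 80.0, "wmh": 2.0}
ref = {"hippocampus": 4.0, "age": 70.0, "wmh": 1.0}
phi = exact_shapley(toy_model, x, ref)
# Efficiency property: the attributions sum to f(x) - f(baseline).
assert isclose(sum(phi.values()), toy_model(x) - toy_model(ref))
print(phi)
```

Because the toy score decreases with hippocampal volume, a below-reference volume receives a positive attribution, analogous to the negative relationship between regional volume and AD SHAP values described above. The age-WMH interaction is split between the two interacting features.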

To quantify the degree to which model SHAP values aligned with expert assessments, neuroradiologists independently rated atrophy and pathological changes within specific brain regions, including the temporal and frontal lobes, insula, limbic system, and fusiform gyrus, as well as whole-brain findings (Table 2; Figs. S4 and S5), for cases in the test set (n = 70). Pairwise Spearman correlation coefficients, representing interrater reliability among the neuroradiologists, revealed varying levels of agreement. The greatest interrater agreement was found in regions commonly implicated in dementia pathologies. For example, interrater agreement was high for ratings of atrophy of the hippocampus (R ρ = 0.7, L ρ = 0.6), temporal lobes (R ρ = 0.69, L ρ = 0.66), amygdala (R ρ = 0.7, L ρ = 0.60), and, to a lesser extent, the parietal lobes (R ρ = 0.48, L ρ = 0.50). This coincides with currently accepted imaging markers of AD, which in late disease is characterized by mesial temporal lobe atrophy, particularly in the hippocampus and the entorhinal and perirhinal cortices, and by temporoparietal cortical atrophy. Similarly, ratings of general frontal lobe atrophy (ρ = 0.51) showed high agreement, consistent with frontal lobe atrophy being the most characteristic imaging finding in FTD36. Agreement on the presence of hyperintensities on FLAIR imaging (ρ = 0.55) was also high, in line with accepted imaging findings for VD and PRD37,38; however, other whole-brain findings, including vascular measures such as prior infarcts or hemorrhages, and hemispheric symmetry, had poor interrater agreement. Comparing neuroradiologists’ assessments with model SHAP values for AD and OIED, we found high levels of agreement on key imaging regions (Table 2).
For instance, median neuroradiologist ratings of various temporal lobe structures, including the mesial temporal lobe, middle and inferior temporal gyri, hippocampus, and amygdala, generally showed strong positive associations with AD SHAP values and negative associations with OIED SHAP values (p < 0.001). Additionally, median neuroradiologist ratings of T2/FLAIR hyperintensities were strongly correlated with AD SHAP values (ρ = 0.605, p < 0.001), which is particularly relevant given the high importance of such hyperintensity features in the trained models (Figs. 3 and S3). Frontal lobe atrophy ratings were similarly correlated with frontal lobe SHAP values for OIED (p < 0.05).

Agreement between SHAP values and neuroradiologist assessments was weaker for certain regions, such as the anterior cingulate gyrus, precentral gyrus, fusiform gyrus, and cerebellum, despite high interrater reliability among neuroradiologists for these areas (Table 2). It is unclear whether these discrepancies arise because such regions have poor discriminative capacity for AD and OIED, which would reduce the magnitude of their SHAP values, or whether they reflect true inconsistency between the model and neuroradiologists. By contrast, neuroradiologists themselves had poor interrater reliability for other regions, such as the parahippocampus, parietal lobe, and occipital lobe, so poor agreement between neuroradiologist ratings and SHAP values was expected for these regions.

Discussion

In this study, we demonstrate the potential of a radiomics-driven ML framework to align with and potentially enhance expert neuroimaging assessments for identifying signatures of AD and OIEDs, such as vascular, frontotemporal, and prion diseases. We utilized XGBoost, a lightweight, interpretable classifier, which offers strong predictive performance, ease of implementation, the ability to handle missing data, and rapid inference. By leveraging features from T1-weighted and T2/FLAIR MRI sequences, our models captured neurodegenerative changes relevant to AD and OIED with relatively high classification performance. Interpretability analyses highlighted the model’s ability to identify imaging signatures associated with different dementia types.

The performance metrics reported in this study were lower than those reported in the existing literature39,40,41,42. Unlike many studies that use homogeneous, single-center datasets, we used the multi-institutional NACC cohort, which introduces variability in imaging protocols and populations. We also included individuals with mixed pathologies and various comorbid conditions, reflecting real-world complexity but making classification more challenging. Additionally, our model relied solely on structural MRI and basic demographics, which are routinely available in radiological practice, rather than incorporating advanced modalities such as EEG or fMRI or extensive non-imaging data such as neuropsychological testing. As such, the metrics reported in this study may reflect performance under more realistic conditions. While many studies have explored AI frameworks for neuroimaging and cognitive impairment, most have focused on classifying cognitive status rather than identifying the etiological origins of dementia. In contrast, our study addresses radiologically relevant tasks by predicting the presence of AD and other etiologies in individuals already diagnosed with dementia, and it accounts for co-occurring pathologies.

Additionally, our work has relevance given the increasing demand for recruitment and screening in AD clinical trials33. First, by distinguishing AD from OIEDs, the model can serve as an efficient pre-screening tool to exclude individuals with non-AD etiologies who are unlikely to benefit from amyloid-targeting agents and may be at higher risk of treatment-related complications such as amyloid-related imaging abnormalities (ARIAs)43. This may reduce reliance on more costly or less accessible biomarkers like amyloid PET, streamlining participant selection and lowering screening-related costs. Second, we demonstrate that the model’s quantitative predictions augment neuroradiologist assessments, improving classification performance and suggesting the model’s potential to enhance the accuracy and efficiency of radiological screening workflows, especially where manual reads are limited or inconsistent. Future work may include expansion of the framework for the direct prediction of ARIAs to further enhance its utility in current clinical trial workflows.

Our modeling approach enables users to understand and trust the model’s output. Here, we have shown that SHAP-based analysis provides person-level insights into dementia-related imaging findings that align with radiologist-interpreted neuroimaging features. Automated assessment of these features, such as atrophy, can be challenging because volume loss must be contextualized relative to other brain structures and to age-related changes44. Yet our framework proved flexible enough to handle these complex interactions without modeling them explicitly. Future work may investigate how explicit normative modeling of imaging features compares with our framework and whether it can be integrated into the proposed framework45. Commercial quantitative MRI (qMRI) tools already leverage automated volumetry to deliver age-adjusted z-scores for individual MRI sequences46, helping radiologists recognize atrophy. Our framework extends the utility of such tools by integrating multisequence data (T1 and T2/FLAIR) and interpreting dozens of regional measures in the context of specific dementia etiologies. By integrating interpretable models such as ours into clinical workflows, neuroradiologists could benefit from model-generated dementia probability estimates paired with feature importance maps highlighting prediction-relevant atrophy or white matter lesions, complementing current qMRI tools.

Our study has several limitations. First, as a retrospective analysis of the NACC cohort, the findings are subject to biases inherent in the data and may not fully reflect clinical scenarios. Second, our analysis of potential improvements in radiologist performance is confined to post hoc analyses rather than true prospective studies involving human-computer interaction. Further studies are necessary to confirm whether integrating AI tools into clinical workflows leads to measurable improvements in accuracy and efficiency under real-world conditions. Third, while the study focuses on AD and OIEDs such as vascular, frontotemporal, and prion diseases, it does not account for other causes of dementia. For instance, conditions such as normal pressure hydrocephalus, which can readily be seen on imaging, were excluded owing to sample size limitations. Expanding the framework to include such conditions could broaden the applicability of the model. Additionally, the sample size for certain etiologies remains a limitation, which may affect the model’s ability to generalize to rarer forms of dementia. In the same vein, the model’s generalizability to mixed-pathology cases warrants further study, given its limited performance in predicting mixed-pathology cases within the test set and the relatively high incidence of mixed dementias in certain clinical settings47,48. This limited performance may result from the low incidence of mixed-pathology samples in the NACC training data (approximately 5%), or these modest scores may reflect the inherent difficulty of identifying mixed dementias. Efforts to include larger and more diverse datasets will be critical to address these issues and improve the robustness of the predictions. Finally, our model was developed using T1-weighted and T2/FLAIR MRI sequences, which, while informative, limit its applicability to other imaging modalities.
Incorporating advanced techniques such as diffusion-weighted imaging or susceptibility-weighted imaging could enhance its capacity to identify and differentiate a broader range of neurodegenerative etiologies.

Our study highlights the promise of ML-driven analysis in enhancing radiological assessments of various dementias by providing quantitative insights that complement clinician expertise. The ensemble learning classifier demonstrated robust performance in differentiating AD from OIEDs, leveraging multisequence MRIs to identify key neurodegenerative features. The incorporation of SHAP values to explain model predictions further underscores its relevance, offering transparency. While this work is retrospective, it establishes a strong foundation for future prospective studies to validate its utility in real-world settings.

Methods

Study population

This study involved secondary analysis of de-identified data from the NACC, which aggregates data collected across multiple Alzheimer’s Disease Research Centers (ADRCs) in the United States. All original data collection at the ADRCs was performed in accordance with the Declaration of Helsinki, with informed consent obtained from participants or their legal guardians and approved by the institutional review boards at each participating ADRC. No new informed consent was required for this secondary analysis, as the data were de-identified and posed no risk to participants. Participants were selected from the NACC database (2000–2022) with a clinical diagnosis of dementia and at least one T1-weighted (T1) or T2 Fluid-Attenuated Inversion Recovery (T2/FLAIR) MRI, yielding a total of 1041 individuals who met the inclusion criteria (Table 1). AD was classified as a primary, contributing, or non-contributing cause of cognitive impairment based on clinical diagnoses recorded in the NACC database. OIEDs included vascular pathologies (VD), FTLD and its variants (e.g., corticobasal degeneration, progressive supranuclear palsy, FTLD with or without amyotrophic lateral sclerosis), and dementia due to prion disease (PRD). These etiologies were selected based on evidence in the literature indicating that these pathologies exhibit discernible structural imaging abnormalities30. Conditions lacking consistent structural MRI signatures, such as dementia with Lewy bodies or Parkinson’s disease dementia, were excluded. Additional etiologies, such as normal pressure hydrocephalus (n = 8), were excluded due to insufficient sample sizes. Imaging visits were matched to the closest date of clinical diagnosis, with a median interval of 63 days between imaging and diagnosis dates.
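Matching each imaging visit to the closest clinical diagnosis date can be sketched as a per-subject nearest-date search. The subject IDs and dates below are hypothetical, NACC variable names are not reproduced, and breaking ties toward the earlier-listed diagnosis is an assumption of this sketch.

```python
from datetime import date
from statistics import median
from typing import Dict, List, Tuple


def match_closest(
    scans: List[Tuple[str, date]], diagnoses: List[Tuple[str, date]]
) -> List[Tuple[str, date, date, int]]:
    """Pair each (subject, scan_date) with that subject's closest diagnosis date.

    Returns (subject, scan_date, diagnosis_date, |interval in days|); scans whose
    subject has no diagnosis record are skipped. Ties go to the earlier-listed date.
    """
    by_subject: Dict[str, List[date]] = {}
    for subject, dx_date in diagnoses:
        by_subject.setdefault(subject, []).append(dx_date)
    matched = []
    for subject, scan_date in scans:
        candidates = by_subject.get(subject)
        if not candidates:
            continue
        best = min(candidates, key=lambda d: abs((d - scan_date).days))
        matched.append((subject, scan_date, best, abs((best - scan_date).days)))
    return matched


# Hypothetical subjects and dates, for illustration only.
scans = [("A", date(2015, 3, 1)), ("B", date(2018, 6, 15))]
diagnoses = [("A", date(2014, 1, 10)), ("A", date(2015, 5, 1)), ("B", date(2018, 6, 1))]
pairs = match_closest(scans, diagnoses)
print(pairs)
print("median interval (days):", median(p[3] for p in pairs))
```

Summarizing the matched intervals with a median, as in the text, is robust to the occasional visit with a long gap between scan and diagnosis.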

Imaging processing and modeling

Figure 4 depicts the approach to feature extraction and modeling. For each subject’s imaging visit, when both 3D T1-weighted and T2/FLAIR MRI scans were available, the T2/FLAIR sequence was co-registered to the T1 sequence to ensure anatomical alignment. T1 images were skull-stripped, bias-corrected, and segmented using the FastSurfer tool (v2.2.0)49 for cortical, subcortical, cerebellar, and ventricular segmentation of 120 ROIs, which are documented in Supplementary Table 8. T2/FLAIR images were processed using the Lesion Prediction Algorithm from the Lesion Segmentation Toolbox (LST) version 3.0.0 in MATLAB50,51. Lesion probability masks were thresholded at p = 0.5, consistent with a range of well-validated thresholds identified in prior literature52,53, to generate binary lesion masks. Regional WMH burden was estimated by applying the binarized lesion probability map \(\text{L}(x,y,z)\) to the T1 segmentation mask \(\text{S}(x,y,z)\), accounting for voxel volume \(\text{V}_{\text{voxel}}\), where the WMH volume of region \(r\) is

\[
\text{WMH}_{r} = \text{V}_{\text{voxel}} \sum_{x,y,z} \text{L}(x,y,z)\,\mathbb{1}\left[\text{S}(x,y,z) = r\right]
\]

and \(\mathbb{1}[\cdot]\) denotes the indicator function.

In cases where only a T1-weighted image was available, the T2/FLAIR pipeline was omitted. Conversely, when only a T2/FLAIR image was present, regional WMH estimation was forgone, and only the global WMH volume was estimated. Subject demographics, including age, sex, race, Hispanic ethnicity, Hispanic origin, and years of education, were extracted from the visit closest to the scan date and used as inputs to the ML classifier.
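The regional WMH computation, together with the FLAIR-only fallback to a global volume, can be sketched with NumPy as below. This is a minimal illustration, not the study's pipeline: it assumes the lesion map and segmentation are already co-registered on the same voxel grid, that voxels with probability at or above 0.5 count as lesion, and that labels follow FreeSurfer-style integer codes (the values 2 and 41 for left and right cerebral white matter, and 0 for unlabeled voxels, are used here only as examples).

```python
from typing import Dict, Optional, Tuple

import numpy as np


def wmh_volumes(
    lesion_prob: np.ndarray,
    voxel_volume: float,
    seg: Optional[np.ndarray] = None,
    threshold: float = 0.5,
) -> Tuple[float, Optional[Dict[int, float]]]:
    """Global WMH volume and, when a segmentation is given, WMH volume per label.

    Regional volumes implement WMH_r = V_voxel * sum over voxels of L * [S == r],
    where L is the binarized lesion map (voxels >= threshold, an assumption here).
    """
    lesion = lesion_prob >= threshold
    global_volume = float(lesion.sum()) * voxel_volume
    if seg is None:  # FLAIR-only visit: no T1 segmentation, so no regional estimate
        return global_volume, None
    if seg.shape != lesion_prob.shape:
        raise ValueError("lesion map and segmentation must share the same voxel grid")
    labels, counts = np.unique(seg[lesion], return_counts=True)
    regional = {int(lab): float(cnt) * voxel_volume for lab, cnt in zip(labels, counts)}
    return global_volume, regional


# Hypothetical 2x2x2 volumes with 1 mm^3 voxels.
seg = np.array([[[2, 2], [41, 0]], [[2, 41], [0, 0]]])
prob = np.array([[[0.9, 0.2], [0.6, 0.7]], [[0.5, 0.1], [0.0, 0.3]]])
print(wmh_volumes(prob, 1.0, seg))  # (4.0, {0: 1.0, 2: 2.0, 41: 1.0})
print(wmh_volumes(prob, 1.0))       # (4.0, None)
```

Lesion voxels falling outside any labeled ROI are counted under label 0, so the regional volumes always sum to the global volume.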

We utilized an ensemble ML classifier, XGBoost, to independently train models for binary classifications of AD and OIED. This approach accounted for the possibility that one, both, or neither etiology might be present for any given subject. Hyperparameter tuning was performed using a 5-fold cross-validation procedure, optimizing the AUROC through a Bayesian search process implemented with the Weights & Biases (W&B) package.

Validation

Model performance was evaluated through 5-fold cross-validation using receiver operating characteristic (ROC) and precision-recall (PR) curves. Head-to-head performance comparisons against expert assessments made by seven (n = 7) fellowship-trained neuroradiologists were also conducted on the held-out test set of 70 cases. The neuroradiologists in this study had 12.3 ± 5.7 (mean ± std) years of experience. We also assessed the alignment of model probabilities with post-mortem evidence on the held-out test set, when available. Additionally, we computed confusion matrices and threshold-dependent performance metrics, including sensitivity, specificity, precision, recall, and F1-score, on the test set using class-specific thresholds derived during training and cross-validation. These thresholds were selected based on the Youden index, as well as thresholds achieving 95% and 80% sensitivity or specificity.
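Thresholds that meet a target sensitivity or specificity can be found by scanning candidate thresholds, as in the sketch below. It assumes that scores at or above the threshold are called positive, returns the highest threshold that still meets a sensitivity target and the lowest threshold that meets a specificity target, and uses hypothetical toy data. For brevity the thresholds are derived and evaluated on the same toy data; in the study they were derived during training and cross-validation and then applied to the test set.

```python
from typing import List, Tuple


def sens_spec(y_true: List[int], scores: List[float], t: float) -> Tuple[float, float]:
    """Sensitivity and specificity when scores >= t are called positive."""
    tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= t)
    fn = sum(1 for y, s in zip(y_true, scores) if y == 1 and s < t)
    tn = sum(1 for y, s in zip(y_true, scores) if y == 0 and s < t)
    fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= t)
    return tp / (tp + fn), tn / (tn + fp)


def threshold_for_sensitivity(y_true: List[int], scores: List[float], target: float) -> float:
    """Highest candidate threshold whose sensitivity is still >= target."""
    for t in sorted(set(scores), reverse=True):
        if sens_spec(y_true, scores, t)[0] >= target:
            return t
    raise ValueError("target sensitivity must be <= 1")


def threshold_for_specificity(y_true: List[int], scores: List[float], target: float) -> float:
    """Lowest candidate threshold whose specificity is >= target (inf calls every case negative)."""
    for t in sorted(set(scores)) + [float("inf")]:
        if sens_spec(y_true, scores, t)[1] >= target:
            return t
    raise ValueError("target specificity must be <= 1")


# Hypothetical toy data: 4 negatives followed by 5 positives.
labels = [0, 0, 0, 0, 1, 1, 1, 1, 1]
probs = [0.1, 0.3, 0.4, 0.75, 0.2, 0.6, 0.8, 0.85, 0.9]
for target in (0.95, 0.80):
    t_sens = threshold_for_sensitivity(labels, probs, target)
    t_spec = threshold_for_specificity(labels, probs, target)
    print(target, t_sens, sens_spec(labels, probs, t_sens), t_spec, sens_spec(labels, probs, t_spec))
```

The toy output shows the trade-off described in the Results: the 95% sensitivity threshold keeps every positive but admits most negatives, whereas the 95% specificity threshold removes all false positives at the cost of missed cases.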

Each neuroradiologist independently completed an online REDCap questionnaire for the 70 test cases. The raters were given the available imaging sequences (T1, T2/FLAIR, SWI, and diffusion MRI) and rated regional brain atrophy and pathological changes in specific brain sub-regions as None, Mild, Moderate, or Severe. They also recorded the presence or absence of disease markers such as infarcts and T2/FLAIR hyperintense lesions. For each candidate etiology, they provided a diagnostic confidence score from 0 to 100, where 0 represented absolute exclusion of that etiology and 100 indicated certainty in the diagnosis. All ratings and confidence scores were made independently and without knowledge of model results or performance.

To assess interrater agreement, we computed pairwise Spearman correlation coefficients between the ratings of each pair of experts. The mean pairwise correlation coefficient and its 95% confidence interval were estimated by bootstrapping: clinicians were resampled from the original pool of raters, and pairwise Spearman correlations were recomputed within each resampled group, so that the bootstrap reflected the original sampling design. To facilitate comparison with the model, each expert’s OIED confidence score was defined as their highest confidence score across vascular dementia, FTLD, and prion disease.
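The rater-level bootstrap can be sketched as follows, assuming ordinal ratings are coded as integers (for example None = 0 through Severe = 3) in an array of shape (raters, cases). Raters, not cases, are resampled with replacement, mirroring the description above. How the study handled a rater drawn twice in one bootstrap sample is not stated; this sketch skips such self-pairs, which would otherwise contribute a correlation of exactly 1. Function names and data are hypothetical.

```python
import itertools

import numpy as np
from scipy.stats import spearmanr


def pairwise_spearman(ratings, rater_idx):
    """Spearman rho for every pair of distinct raters in `rater_idx`.

    ratings: array of shape (n_raters, n_cases).
    """
    rhos = []
    for a, b in itertools.combinations(rater_idx, 2):
        if a == b:
            continue  # same rater drawn twice in a bootstrap sample: skipped
        rho = spearmanr(ratings[a], ratings[b])[0]
        if not np.isnan(rho):  # constant ratings give an undefined rho
            rhos.append(rho)
    return rhos


def bootstrap_mean_spearman(ratings, n_boot=1000, seed=0):
    """Mean pairwise Spearman rho and a percentile 95% CI from resampling raters."""
    ratings = np.asarray(ratings, dtype=float)
    n_raters = ratings.shape[0]
    point = float(np.mean(pairwise_spearman(ratings, range(n_raters))))
    rng = np.random.default_rng(seed)
    boot = []
    for _ in range(n_boot):
        # Resample raters with replacement; every case is kept.
        idx = rng.integers(0, n_raters, size=n_raters)
        rhos = pairwise_spearman(ratings, idx)
        if rhos:
            boot.append(np.mean(rhos))
    low, high = np.percentile(boot, [2.5, 97.5])
    return point, (float(low), float(high))


# Hypothetical ordinal ratings (0 = None ... 3 = Severe): 7 raters x 70 cases.
rng = np.random.default_rng(1)
truth = rng.normal(size=70)
ratings = np.clip(np.round(truth + rng.normal(scale=0.7, size=(7, 70)) + 1.5), 0, 3)
print(bootstrap_mean_spearman(ratings, n_boot=200))
```

Spearman correlation handles the many ties in four-level ordinal ratings by assigning average ranks, which is one reason it suits this kind of data.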

We also assessed whether the model could augment expert diagnoses. For each label and case, we computed the arithmetic mean of the model’s predicted probability (0–100%) and each neuroradiologist’s confidence score (0–100%). The model-augmented confidence scores were then compared with the unaugmented expert confidence scores using the same metrics.
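Both the OIED mapping and the model augmentation are simple element-wise operations. The sketch below assumes expert scores are stored per etiology under hypothetical keys (`"VD"`, `"FTLD"`, `"prion"`) and that model outputs are probabilities in [0, 1], rescaled to 0–100 before averaging. The data and function names are illustrative only.

```python
import numpy as np
from sklearn.metrics import roc_auc_score


def oied_confidence(expert_scores):
    """OIED confidence per case: the expert's highest score among vascular
    dementia, FTLD, and prion disease (keys are hypothetical)."""
    stacked = np.column_stack([expert_scores["VD"], expert_scores["FTLD"],
                               expert_scores["prion"]])
    return stacked.max(axis=1)


def augment(model_prob, expert_conf):
    """Arithmetic mean of the model probability rescaled to 0-100 and the
    expert's 0-100 confidence score."""
    return (100.0 * np.asarray(model_prob, dtype=float)
            + np.asarray(expert_conf, dtype=float)) / 2.0


# Hypothetical data for four test cases.
expert = {"VD": [10, 80, 30, 20], "FTLD": [50, 20, 30, 60], "prion": [0, 5, 90, 10]}
model_oied = [0.2, 0.9, 0.6, 0.3]
y_oied = [0, 1, 1, 0]
expert_oied = oied_confidence(expert)
augmented = augment(model_oied, expert_oied)
print("expert AUROC:", roc_auc_score(y_oied, expert_oied))
print("augmented AUROC:", roc_auc_score(y_oied, augmented))
```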

Feature importance analysis

We used two frameworks to evaluate which imaging features most influenced the model’s predictions: importance metrics derived from the trained model parameters (feature weight and feature gain) and SHAP. Feature weight counts how often a feature is used to split the data across all trees in the ensemble, whereas feature gain measures the average improvement in the training objective achieved by splits on that feature. SHAP values, which have been explored extensively in explainable AI54, served in our study as case-specific measures of feature importance. For cases in the held-out test set, we computed the Spearman correlation coefficient between median expert ratings of various imaging findings and the SHAP values of the most analogous feature groups to assess the model’s alignment with expert assessments.
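The alignment analysis can be sketched as follows, assuming a per-case SHAP matrix of shape (cases, features) is already available. For a tree model it could come from `shap.TreeExplainer(model).shap_values(X)`, or from XGBoost's `Booster.predict(dmatrix, pred_contribs=True)`, whose last column is the bias term and would need to be dropped. Feature weight and gain, by contrast, are global and can be read from a trained XGBoost model via `get_booster().get_score(importance_type="weight")` or `importance_type="gain"`. Summing SHAP values within a feature group relies on the additivity of SHAP attributions; the grouping, the feature names, and the use of summation are assumptions for illustration.

```python
import numpy as np
from scipy.stats import spearmanr


def grouped_shap(shap_values, feature_names, groups):
    """Sum per-case SHAP values over the features in each named group."""
    col = {name: i for i, name in enumerate(feature_names)}
    shap_values = np.asarray(shap_values, dtype=float)
    return {g: shap_values[:, [col[f] for f in feats]].sum(axis=1)
            for g, feats in groups.items()}


def expert_alignment(shap_values, feature_names, groups, expert_median):
    """Spearman rho between the median expert rating of each finding and the
    grouped SHAP values of the analogous feature group, across test cases."""
    grouped = grouped_shap(shap_values, feature_names, groups)
    return {g: float(spearmanr(expert_median[g], grouped[g])[0]) for g in groups}


# Hypothetical example: 4 cases, 3 features, 2 feature groups.
features = ["hippocampus_L", "hippocampus_R", "wmh_global"]
groups = {"mtl_atrophy": ["hippocampus_L", "hippocampus_R"], "wmh": ["wmh_global"]}
shap_matrix = [[0.1, 0.2, 0.0], [0.3, 0.1, 0.5], [0.0, 0.0, 0.2], [0.4, 0.4, 0.1]]
# Ratings of 3 raters per case (0 = None ... 3 = Severe); median taken over raters.
ratings = {"mtl_atrophy": np.array([[1, 2, 0, 3], [1, 2, 1, 3], [0, 2, 0, 3]]),
           "wmh": np.array([[0, 3, 2, 1], [0, 3, 2, 1], [1, 3, 2, 1]])}
expert_median = {g: np.median(r, axis=0) for g, r in ratings.items()}
print(expert_alignment(shap_matrix, features, groups, expert_median))
```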

Computational hardware and software

All analyses were conducted using Python (v3.10.14) and MATLAB (R2024b Update 3) on the Shared Computing Cluster, administered by Boston University’s Research Computing Services. Neuroimaging preprocessing and segmentation were performed using LST (v3.0.0) in MATLAB alongside SPM12 (revision 7771) and FSL (v6.0.4). Cortical and subcortical segmentation of T1-weighted images was conducted using FastSurfer (v2.2.0). ML modeling was implemented using the XGBoost library (v2.1.1). Feature importance analyses employed the SHAP package (v0.45.1), and supporting statistical analyses were conducted using pandas (v2.2.2), scikit-learn (v1.5.1), and NumPy (v1.26.4). Hyperparameter optimization and experiment tracking were managed using the W&B package (v0.16.6). Data from expert raters were collected and managed using REDCap (v11.1.3).

Data availability

All data used in this study can be downloaded from NACC at https://naccdata.org/. Python scripts used in this study are made available on GitHub (https://github.com/vkola-lab/npjdementia2025/).

References

Furtner, J. & Prayer, D. Neuroimaging in dementia. Wien. Med. Wochenschr. 171, 274–281 (2021).

Pasquier, F. et al. Inter- and intraobserver reproducibility of cerebral atrophy assessment on MRI scans with hemispheric infarcts. Eur. Neurol. 36, 268–272 (1996).

Cummings, J. et al. Lecanemab: appropriate use recommendations. J. Prev. Alzheimers Dis. 10, 362–377 (2023).

Rabinovici, G. D. et al. Donanemab: appropriate use recommendations. J. Prev. Alzheimers Dis. 12, 100150 (2025).

Harper, L., Barkhof, F., Scheltens, P., Schott, J. M. & Fox, N. C. An algorithmic approach to structural imaging in dementia. J. Neurol. Neurosurg. Psychiatry 85, 692–698 (2013).

Frisoni, G. B., Fox, N. C., Jack, C. R., Scheltens, P. & Thompson, P. M. The clinical use of structural MRI in Alzheimer disease. Nat. Rev. Neurol. 6, 67–77 (2010).

McKeith, I. G. et al. Diagnosis and management of dementia with Lewy bodies. Neurology 89, 88–100 (2017).

Kipps, C. M. et al. Clinical significance of lobar atrophy in frontotemporal dementia: application of an MRI visual rating scale. Dement. Geriatr. Cogn. Disord. 23, 334–342 (2007).

Scheltens, P. et al. Atrophy of medial temporal lobes on MRI in “probable” Alzheimer’s disease and normal ageing: diagnostic value and neuropsychological correlates. J. Neurol. Neurosurg. Psychiatry 55, 967–972 (1992).

Harper, L., Barkhof, F., Fox, N. C. & Schott, J. M. Using visual rating to diagnose dementia: a critical evaluation of MRI atrophy scales. J. Neurol. Neurosurg. Psychiatry 86, 1225–1233 (2015).

Fazekas, F., Chawluk, J. B., Alavi, A., Hurtig, H. I. & Zimmerman, R. A. MR signal abnormalities at 1.5 T in Alzheimer’s dementia and normal aging. Am. J. Roentgenol. 149, 351–356 (1987).

Burton, E. J. et al. Medial temporal lobe atrophy on MRI differentiates Alzheimer’s disease from dementia with Lewy bodies and vascular cognitive impairment: a prospective study with pathological verification of diagnosis. Brain 132, 195–203 (2009).

Harper, L. et al. MRI visual rating scales in the diagnosis of dementia: evaluation in 184 post-mortem confirmed cases. Brain 139, 1211–1225 (2016).

Borchert, R. J. et al. Artificial intelligence for diagnostic and prognostic neuroimaging in dementia: a systematic review. Alzheimers Dement. 19, 5885–5904 (2023).

Bushnaq, S. et al. A comparison of CT perfusion output of RapidAI and Viz.ai Software in the evaluation of acute ischemic stroke. Am. J. Neuroradiol. 45, 863–870 (2024).

Gommers, J. J. J. et al. Influence of AI decision support on radiologists’ performance and visual search in screening mammography. Radiology 316, https://doi.org/10.1148/radiol.243688 (2025).

Yun, T. J. et al. Deep learning based automatic detection algorithm for acute intracranial haemorrhage: a pivotal randomized clinical trial. npj Digit. Med. 6, https://doi.org/10.1038/s41746-023-00798-8 (2023).

Matsoukas, S. et al. Pilot deployment of Viz–Intracranial Hemorrhage for intracranial hemorrhage detection: real-world performance in a stroke code cohort. Stroke 53, https://doi.org/10.1161/strokeaha.122.039711 (2022).

Sohn, B. et al. Deep learning–based software improves clinicians’ detection sensitivity of aneurysms on brain TOF-MRA. Am. J. Neuroradiol. https://doi.org/10.3174/ajnr.A7242 (2021).

Seah, J. C. Y. et al. Effect of a comprehensive deep-learning model on the accuracy of chest x-ray interpretation by radiologists: a retrospective, multireader multicase study. Lancet Digit. Health 3, e496–e506 (2021).

Ahn, J. S. et al. Association of artificial intelligence-aided chest radiograph interpretation with reader performance and efficiency. JAMA Netw. Open 5, https://doi.org/10.1001/jamanetworkopen.2022.29289 (2022).

Qiu, S. et al. Development and validation of an interpretable deep learning framework for Alzheimer’s disease classification. Brain 143, 1920–1933 (2020).

Romano, M. F. et al. Deep learning for risk-based stratification of cognitively impaired individuals. iScience 26, 107522 (2023).

Arya, A. D. et al. A systematic review on machine learning and deep learning techniques in the effective diagnosis of Alzheimer’s disease. Brain Inform. 10, https://doi.org/10.1186/s40708-023-00195-7 (2023).

Marinescu, R. V. et al. TADPOLE Challenge: Accurate Alzheimer’s Disease Prediction Through Crowdsourced Forecasting of Future Data. In Predictive Intelligence in Medicine (eds Rekik, I., Adeli, E. & Park, S.). Lecture Notes in Computer Science, Vol. 11843 (Springer, Cham, 2019).

Mårtensson, G. et al. The reliability of a deep learning model in clinical out-of-distribution MRI data: a multicohort study. Med. Image Anal. 66, https://doi.org/10.1016/j.media.2020.101714 (2020).

Vermeulen, R. J. et al. Limited generalizability and high risk of bias in multivariable models predicting conversion risk from mild cognitive impairment to dementia: a systematic review. Alzheimer’s Dement. 21, https://doi.org/10.1002/alz.70069 (2025).

Young, A. L. et al. Uncovering the heterogeneity and temporal complexity of neurodegenerative diseases with Subtype and Stage Inference. Nat. Commun. 9, https://doi.org/10.1038/s41467-018-05892-0 (2018).

Jomeiri, A., Navin, A. H. & Shamsi, M. Longitudinal MRI analysis using a hybrid DenseNet-BiLSTM method for Alzheimer’s disease prediction. Behav. Brain Res. 463, https://doi.org/10.1016/j.bbr.2024.114900 (2024).

Koikkalainen, J. et al. Differential diagnosis of neurodegenerative diseases using structural MRI data. Neuroimage Clin. 11, 435–449 (2016).

Klöppel, S. et al. Accuracy of dementia diagnosis—a direct comparison between radiologists and a computerized method. Brain 131, 2969–2974 (2008).

Xue, C. et al. AI-based differential diagnosis of dementia etiologies on multimodal data. Nat. Med. 30, 2977–2989 (2024).

Langbaum, J. B. et al. Recommendations to address key recruitment challenges of Alzheimer’s disease clinical trials. Alzheimers Dement. 19, 696–707 (2023).

Chouliaras, L. & O’Brien, J. T. The use of neuroimaging techniques in the early and differential diagnosis of dementia. Mol. Psychiatry 28, 4084–4097 (2023).

Beyer, L. et al. Cognitive reserve hypothesis in frontotemporal dementia: a FDG-PET study. Neuroimage Clin. 29, 102535 (2021).

Meeter, L. H., Kaat, L. D., Rohrer, J. D. & van Swieten, J. C. Imaging and fluid biomarkers in frontotemporal dementia. Nat. Rev. Neurol. 13, 406–419 (2017).

Macfarlane, R. G., Wroe, S. J., Collinge, J., Yousry, T. A. & Jager, H. R. Neuroimaging findings in human prion disease. J. Neurol. Neurosurg. Psychiatry 78, 664–670 (2007).

van Straaten, E. C., Scheltens, P. & Barkhof, F. MRI and CT in the diagnosis of vascular dementia. J. Neurol. Sci. 226, 9–12 (2004).

Möller, C. et al. Alzheimer disease and behavioral variant frontotemporal dementia: automatic classification based on cortical atrophy for single-subject diagnosis. Radiology 279, 838–848 (2016).

Wen, J. et al. Convolutional neural networks for classification of Alzheimer’s disease: overview and reproducible evaluation. Med. Image Anal. 63, https://doi.org/10.1016/j.media.2020.101694 (2020).

Zheng, Y. et al. Machine learning-based framework for differential diagnosis between vascular dementia and Alzheimer’s disease using structural MRI features. Front. Neurol. 10, https://doi.org/10.3389/fneur.2019.01097 (2019).

Castellazzi, G. et al. A machine learning approach for the differential diagnosis of Alzheimer and vascular dementia fed by MRI selected features. Front. Neuroinform. 14, https://doi.org/10.3389/fninf.2020.00025 (2020).

Ramanan, V. K. et al. Antiamyloid monoclonal antibody therapy for Alzheimer disease. Neurology 101, 842–852 (2023).

Fox, N. C., Freeborough, P. A. & Rossor, M. N. Visualisation and quantification of rates of atrophy in Alzheimer’s disease. Lancet 348, 94–97 (1996).

van Loenhoud, A. C. et al. Cognitive reserve and clinical progression in Alzheimer disease. Neurology 93, https://doi.org/10.1212/wnl.0000000000007821 (2019).

Pemberton, H. G. et al. Technical and clinical validation of commercial automated volumetric MRI tools for dementia diagnosis—a systematic review. Neuroradiology 63, 1773–1789 (2021).

Schneider, J. A., Arvanitakis, Z., Bang, W. & Bennett, D. A. Mixed brain pathologies account for most dementia cases in community-dwelling older persons. Neurology 69, 2197–2204 (2007).

Jellinger, K. A. The enigma of mixed dementia. Alzheimer’s Dement. 3, 40–53 (2007).

Henschel, L. et al. FastSurfer—a fast and accurate deep learning based neuroimaging pipeline. NeuroImage 219, https://doi.org/10.1016/j.neuroimage.2020.117012 (2020).

Egger, C. et al. MRI FLAIR lesion segmentation in multiple sclerosis: Does automated segmentation hold up with manual annotation? Neuroimage Clin. 13, 264–270 (2017).

Schmidt, P. Bayesian Inference for Structured Additive Regression Models for Large-Scale Problems with Applications to Medical Imaging (LMU München, 2017).

Waymont, J. M. J., Petsa, C., McNeil, C. J., Murray, A. D. & Waiter, G. D. Validation and comparison of two automated methods for quantifying brain white matter hyperintensities of presumed vascular origin. J. Int. Med. Res. 48, 300060519880053 (2020).

Ribaldi, F. et al. Accuracy and reproducibility of automated white matter hyperintensities segmentation with lesion segmentation tool: a European multi-site 3T study. Magn. Reson. Imaging 76, 108–115 (2021).

Sathyan, A., Weinberg, A. I. & Cohen, K. Interpretable AI for bio-medical applications. Complex Eng. Syst. 2, https://doi.org/10.20517/ces.2022.41 (2022).

Acknowledgements

This project was supported by grants from the National Institute on Aging (NIA) Artificial Intelligence and Technology Collaboratories (P30-AG073104, P30-AG073105), the American Heart Association (20SFRN35460031), Gates Ventures, and the National Institutes of Health (R01-HL159620, R01-AG062109, R01-AG083735, and R01-NS142076). The authors acknowledge grant support from Boston University, CTSI 1UL1TR001430, for the REDCap Survey. Finally, the authors acknowledge grant support from the National Institute of Biomedical Imaging and Bioengineering, 5T32EB001631-21. The NACC database is funded by an NIA grant U24-AG072122. NACC data are contributed by the following NIA-funded ADRCs: P30 AG062429 (PI James Brewer, MD, PhD), P30 AG066468 (PI Oscar Lopez, MD), P30 AG062421 (PI Bradley Hyman, MD, PhD), P30 AG066509 (PI Thomas Grabowski, MD), P30 AG066514 (PI Mary Sano, PhD), P30 AG066530 (PI Helena Chui, MD), P30 AG066507 (PI Marilyn Albert, PhD), P30 AG066444 (PI John Morris, MD), P30 AG066518 (PI Jeffrey Kaye, MD), P30 AG066512 (PI Thomas Wisniewski, MD), P30 AG066462 (PI Scott Small, MD), P30 AG072979 (PI David Wolk, MD), P30 AG072972 (PI Charles DeCarli, MD), P30 AG072976 (PI Andrew Saykin, PsyD), P30 AG072975 (PI David Bennett, MD), P30 AG072978 (PI Neil Kowall, MD), P30 AG072977 (PI Robert Vassar, PhD), P30 AG066519 (PI Frank LaFerla, PhD), P30 AG062677 (PI Ronald Petersen, MD, PhD), P30 AG079280 (PI Eric Reiman, MD), P30 AG062422 (PI Gil Rabinovici, MD), P30 AG066511 (PI Allan Levey, MD, PhD), P30 AG072946 (PI Linda Van Eldik, PhD), P30 AG062715 (PI Sanjay Asthana, MD, FRCP), P30 AG072973 (PI Russell Swerdlow, MD), P30 AG066506 (PI Todd Golde, MD, PhD), P30 AG066508 (PI Stephen Strittmatter, MD, PhD), P30 AG066515 (PI Victor Henderson, MD, MS), P30 AG072947 (PI Suzanne Craft, PhD), P30 AG072931 (PI Henry Paulson, MD, PhD), P30 AG066546 (PI Sudha Seshadri, MD), P20 AG068024 (PI Erik Roberson, MD, PhD), P20 AG068053 (PI Justin Miller, PhD), P20 AG068077 (PI Gary Rosenberg, MD), P20 AG068082 (PI Angela Jefferson, PhD), P30 AG072958 (PI Heather Whitson, MD) and P30 AG072959 (PI James Leverenz, MD).

Author information

Contributions

S.P. designed and developed the ML framework. S.P., O.T.Z., K.K., M.F.R., S.Y., and A.A. performed the analysis. V.C.A.A., C.W.F., A.Z.M., A.B.P., S.R., B.N.S., and J.E.S. are practicing neuroradiologists who reviewed the cases. S.P. and V.B.K. wrote the manuscript. All authors reviewed, edited, and approved the manuscript. V.B.K. conceived, designed, and directed the study.

Ethics declarations

Competing interests

V.B.K. is a co-founder and equity holder of deepPath Inc. and Cognimark, Inc. (formerly CogniScreen). He also serves on the scientific advisory board of Altoida Inc. The remaining authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Puducheri, S., Zhou, O.T., Kapadia, K. et al. Augmenting radiological assessment of imaging evident dementias with radiomic analysis. npj Dement. 1, 27 (2025). https://doi.org/10.1038/s44400-025-00031-1

DOI: https://doi.org/10.1038/s44400-025-00031-1