Abstract

During the COVID-19 pandemic, deaths per case in the United States decreased from 7.46% in April 2020 to 1.76% in April 2021. One mechanism that could explain this decline is a learning effect associated with testing of new treatments by hospitals. Hospitals that participated in clinical trials developed better organizational capabilities to diagnose and treat COVID-19 patients. Simultaneously, hospitals used health information technologies (IT) that integrated health information across healthcare providers to facilitate greater learning and sharing of best practices. Using US county-level data on clinical trial participation, use of health IT, and COVID-19 cases and deaths, we show that hospitals in counties that participated in clinical trials, and those with greater IT capabilities, exhibited a lower rate of COVID-19 deaths. Consistent with the learning effect hypothesis, counties with greater hospital IT capabilities performed relatively better at treating COVID-19 patients several months into the pandemic. Counties with hospitals that participated in COVID-19 clinical trials also learned faster, with the learning effect of clinical trials being moderated by hospital health IT capability. We posit that clinical trials and use of health IT systems can help hospitals to achieve lower mortality rates in the long run by enhancing learning effects.

Introduction

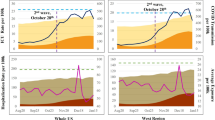

In March of 2020, public health experts recommended restricting social contact in order to ‘flatten the curve’ of COVID-19 transmission. In compliance with this guidance, the US federal government announced, “Fifteen Days to Slow the Spread” (later changed to 30 Days). This guideline, and the state and local rules that followed, greatly reduced social mobility and infection rates, but also economic output1. While social distancing had direct economic costs, there is a strong reason to think that this strategy was effective in increasing the average level of care received by COVID-19 patients. As shown in Fig. 1, US deaths per detected case of COVID decreased from 7.46% in April 2020 to 1.76% in April 2021. If the reduction in death rate can be attributed in part to doctors learning how to better treat COVID-19 patients, then understanding how to accelerate such learning is of utmost importance.

In this research, we investigate the role of clinical trials (CT) and health information technology (IT) in the prevention of deaths due to COVID-19. We focus on CT and health IT because they represent two important tools that hospitals can utilize to develop and deploy better treatments. Hospital participation in CTs enables healthcare providers to learn through experimentation and improve best practices for patient treatment. Furthermore, a robust electronic health records (EHR) system can help doctors to collect, organize, and share health data on patient diagnosis and outcomes, and was vital for syndromic surveillance during the COVID-19 pandemic. Other health IT systems, such as clinical decision support (CDS) systems, can provide best-practice alerts that keep doctors informed on the most cutting-edge modalities for patient care. Similarly, health information exchange (HIE) capabilities allow healthcare providers to share patient health data with other providers, which enables timely diagnosis and treatment.

We utilize US county-level data on hospital participation in CT, hospital IT intensity, COVID-19 cases, and deaths. While our IT and CT measures are collected at the hospital level, the data on COVID-19 cases and deaths are available at the county level. Because almost all deaths (93%) in the US happened in hospitals or other medical facilities (Schumacher and Wedenoja2), we convert our hospital-level measures to county-level measures, on the premise that any effect prevalent at the hospital level should also be exhibited at the county level. Our primary outcome is COVID-19 deaths, measured at the county level as the number of new deaths as a proportion of detected COVID-19 cases. We observe a negative and significant relationship between COVID-19 deaths per capita and health IT intensity. In our baseline regressions, we found that a one-standard-deviation increase in the county-level index of IT intensity was related to 0.18 fewer deaths per thousand residents. This significant relationship holds after including fixed effects for county and time, based on the number of months into the COVID-19 pandemic.

Our preferred model includes these fixed effects and generates an estimate of 0.095 fewer COVID-19 deaths per 1000 residents. Since omitted variables and endogeneity could bias such estimates, we took two approaches to establish the robustness of this result. The first was to include a large number of county-level controls. The second was to instrument for health IT intensity with measures of Internet availability. Both approaches provide qualitatively similar results.

Our next set of results investigates how death rates changed across counties over time. We found that counties with high health IT outperformed low health IT-intensity counties in the long run. However, high health IT counties actually underperformed in the first month of the pandemic, while our empirical results suggest that counties with hospitals that possess high levels of health IT improved faster over time. Further, we observe that hospital participation in CTs resulted in learning through experimentation, which was in turn associated with lower mortality rates over time.

Although high health IT-intensive counties were poor performers initially, these counties outperformed their low-health IT counterparts in the long run, thereby saving lives. The poor performance of high IT counties during the initial months may be attributed, in part, to a higher rate of experimentation with new treatments for COVID-19 patients. This is consistent with changing patient care guidance, in which several initially promising drug candidates (such as Hydroxychloroquine) were rejected as counterproductive. In other words, high health IT counties were able to learn faster as a result of experimentation through CT, and lower their COVID-19 mortality rates faster, compared to low IT counties.

One of the reasons that learning and diffusion of new treatment modalities was important during the COVID-19 pandemic was the rapidly changing state of knowledge on COVID-19. Figure S1 in the Supplement provides a timeline of updates to the Infectious Diseases Society of America’s (IDSA) recommendations for the treatment of COVID-19 patients, with other events related to the spread of the COVID-19 pandemic also included for context. The timeline shows a gradual accumulation of knowledge about best practices for the treatment of COVID-19. For example, prior to FDA approval, drugs such as Hydroxychloroquine and Remdesivir may have been prescribed and used off-label. We note that IDSA recommendations are lagging indicators of clinical best practices, as changes in guidelines were often based on trials undertaken during the crisis that had been running for only 1–2 months. On the other hand, the guidelines are leading indicators for clinicians who are hesitant to change their treatments for COVID-19 patients. Although doctors are not required to follow these recommendations, they are used to update CDS systems (after a lag) and as a point of reference for malpractice cases. The timeline highlights how perceptions have changed about the use of many drugs over time.

Hydroxychloroquine, a drug previously indicated for arthritis and malaria, is the most well-known example of a COVID-19 treatment about which public perceptions changed. On March 19th, 2020, it was called a “game changer” by President Trump, and on March 28th, it was authorized for emergency use by the FDA. In the first version of the IDSA guidelines, Hydroxychloroquine was presented as a promising but unproven treatment, recommended only for use in CT. A large-scale NIH study duly began on April 2, 2020. On June 20, 2020, the NIH ended its study, stating that the drug was very unlikely to be beneficial3. Two months later, in version 3.0 of its recommendations, the IDSA officially recommended against COVID-19 treatment with Hydroxychloroquine.

Similarly, Tocilizumab, a drug previously used for rheumatoid arthritis sufferers, went from being a promising candidate for further investigation in early IDSA reports, to not being recommended in version 3.3 of the IDSA’s report. On the positive side, Remdesivir (an antiviral drug previously investigated for use during the 2014 Ebola epidemic) and a few varieties of corticosteroids, such as Dexamethasone, went from promising possibilities to being strongly recommended for use in specific cases. There were also other non-drug innovations, such as using prone positioning to help patients breathe and delaying the use of ventilators, in updated IDSA guidelines (Tibble et al.)4.

A report on declining COVID-19 death rates confirms the key role of ‘hard-won experience’ at hospitals that have had more time to learn about the disease5. One doctor emphasized learning “how to use steroids and a shift away from unproven drugs and procedures.” Other doctors confirmed the roles played by uncertainty and over-treatment in the initial poor response to the disease. “Unfortunately, a lot of initial discourse was complicated by noise about how this disease was entirely different or entirely new,” said one doctor, and another agreed that “it took time to realize that standard treatments were among the most effective.” Hydroxychloroquine and Tocilizumab were mentioned as innovative treatments that were eventually discarded as counterproductive.

Health IT plays an important role in ensuring the timely and accurate dissemination of information on best practices across healthcare providers. Specifically, hospitals that developed advanced health information sharing capabilities were more likely to share updated information on clinical best practices and treatment regimens for COVID-19 patients, which is especially important when patients are treated by multiple providers across different facilities6,7. Hence, the ability to share and exchange patient health information is a critical measure of hospital health IT capability that is likely to have a beneficial impact on public health outcomes.

A few empirical studies have explored the relationship between hospital participation in CT and their reported health outcomes. Majumdar et al.8 studied the role of hospital participation in CT as part of a large-scale study evaluating whether rapid risk stratification of unstable angina patients suppressed adverse outcomes with early implementation of the American College of Cardiology/American Heart Association guidelines8. They observed that patients treated at hospitals that participated in CT reported significantly lower mortality than patients treated at non-participating hospitals. Specifically, based on a large cohort of US hospitals treating acute coronary syndromes, they found that only 2.6% of patients were ever enrolled in a clinical trial for that condition and, during more than 4 years of observation, almost one-third of hospitals never included a patient in a clinical trial. They also observed that hospitals that participated in CT delivered better quality care, and had significantly lower rates of early mortality compared to hospitals that did not enroll patients in CT. Similarly, Du Bois et al.9 studied the outcomes of 476 patients with ovarian cancer who were treated at 165 German hospitals9. They reported that compared to hospitals that did not participate in trials, participating hospitals reported a 77% increase in guideline-concordant chemotherapy and a 28% reduction in long-term mortality.

While the prior literature has documented the impact of CT on patient health outcomes, research on the impact of learning in relation to organizational experience with CT has been limited. Specifically, our research question can be summarized as follows: What is the cumulative impact of learning from organizational experience with clinical trials on COVID-19 outcomes? In other words, does the duration of time elapsed since a hospital first started treating COVID-19 patients moderate the relationship between its participation in CT and mortality risk? Hence, in this study, we focus on the duration of organizational learning through participation in CT and its impact on COVID-19 mortality risk.

When organizations perform certain tasks repeatedly, they learn, develop, and adapt routines to their needs and environment10. Organizational learning is a process of seeking, selecting, amending, and adapting new routines to improve performance. Organizational learning accumulates over time through repetitive execution11. During the course of CT, healthcare providers contribute to the learning process by observing the effect of new drugs and treatments on patients and sharing lessons learned with each other. Without organizational learning and information exchange, cumulative experience alone cannot guarantee significant performance12.

Hence, we argue that hospitals that participated in CT and developed greater experience in treating COVID-19 cases are more likely to develop relevant capabilities and best practices in dealing with COVID-19 patients, who may suffer from several comorbidities. We therefore hypothesize that:

H1: Organizational experience has a greater effect on COVID-19 mortality rates in counties with high hospital participation in clinical trials, compared to counties with low rates of hospital clinical trial participation.

Although COVID-19 patients may have different medical needs depending on their prior medical history and vulnerabilities, their treatment protocols share many similarities on the basis of their clinical guidelines. Health IT systems can facilitate sharing of best practices related to COVID-19 treatment and procedures within and across healthcare organizations, and thereby, allow providers to benefit from the collective knowledge and experience of others through a knowledge spillover effect. Although the theory of organizational learning was developed to study the role of learning in IT implementation, very little is known about how health IT can contribute toward the dissemination of real-time knowledge in the context of a pandemic outbreak or treatment of other infectious diseases13.

Health IT has the potential to change every stage of the CT process, from reviewing existing research on CT to making patient enrollment more efficient and improving medication adherence. Specifically, hospitals and healthcare providers can use artificial intelligence (AI) to parse EHR data to recruit patients into CT, utilize distributed database technology to decentralize CT across different sites, efficiently search real-world data sources to supplement clinical trial results, and conduct in silico trials by simulating patient populations. In this section, we describe some of the ways in which health IT systems and technologies can improve the effectiveness of CT.

First, efficient searching for and matching of patients for CT remains one of the major challenges in running large-scale CT. Natural language processing (NLP) can help extract and analyze relevant clinical information from a patient’s EHR records, compare it to eligibility criteria for ongoing trials, and recommend matching studies. Extracting information from medical records, including EHRs and lab images, is one of the most sought-after applications of artificial intelligence in healthcare. For example, the digital health firm Deep6 AI uses NLP to extract clinical data, such as symptoms, diagnoses, and treatments, from patient records. Its software can identify patients with conditions not explicitly mentioned in EHR data, and automatically verify inclusion and exclusion criteria, thereby improving the match rate between patients and CT.

Second, health IT systems which adhere to widely accepted interoperability standards also allow hospitals to share patient clinical data across disparate data sources. For example, different hospitals and providers that treat the same patient may not use the same EHR system to enter patient health data. In many CT, researchers still fax requests for patient records to hospitals, who often send the data back as PDFs or images, including pictures of handwritten notes14. Hence, access to interoperable IT infrastructure enables hospitals to share patient data across organizational boundaries, thereby supporting the semantic interoperability requirements necessary for efficient patient matching and clinical trial execution. Advanced health IT systems which meet interoperability criteria and participate in HIEs are more likely to support hospitals in sharing the results of CT and patient health data with other healthcare providers.

Third, IT systems can improve the effectiveness of monitoring and adherence during CT. AI-enabled devices and wearables offer real-time, continuous monitoring of physiological and behavioral changes in patients, potentially reducing the cost, frequency, and difficulty of on-site check-ups. The COVID-19 pandemic has spurred adoption of new technologies which can improve the efficiency and reduce the cost of CT15. Adaptive design, which involves a more flexible approach to conducting CT, has been a key trend as researchers grapple with COVID-19. For example, trials conducted by Sanofi and Regeneron to evaluate an antibody treatment for COVID-19 patients followed an adaptive design. Another IT-driven trend is the use of open online research forums to expedite potential findings and conclusions. For example, AI-based CT company Mendel.ai partnered with DCM Ventures to create a COVID-19 search engine where researchers could pull data to run their respective studies. The COVID-19 driven adoption of telemedicine and remote monitoring solutions has spurred further interest in virtual or decentralized CT. This model presents an opportunity to better accommodate patient participation and improve the focus of CT, thereby increasing their overall effectiveness16. The potential benefits of virtual CT include reduced costs, a wider network of eligible patients, and better patient retention.

Hospitals with low health IT capabilities lack the infrastructure necessary to share patient health information and treatment protocols across healthcare providers, conduct decentralized CT, or monitor patient adherence to clinical protocols, thereby resulting in higher costs and poor patient retention. A recent report by the Clinical Trials Transformation Initiative (CTTI) highlights the role of a robust IT infrastructure in improving the quality and efficiency of CT14. Low health IT hospitals are dependent on learning through trial and error, based on their own experience in dealing with COVID-19 patients, and on CT that may be conducted within their own organization (i.e., an experience effect). However, a lack of robust health IT capabilities precludes them from learning through knowledge sharing with other providers and healthcare organizations.

Hence, we argue that counties whose hospitals combine clinical trial participation with high IT capabilities are likely to experience a significant reduction in COVID-19 mortality risk through learning effects, since such counties are more likely to benefit through two pathways: (a) the spillover effect of knowledge sharing with other healthcare providers, and (b) improved efficacy of patient recruitment and participation in CT, organizational experience, and better monitoring of patient outcomes.

H2: Hospitals in counties with high levels of participation in clinical trials and health IT use exhibit lower COVID-19 mortality risk compared to hospitals in counties with low clinical trial participation and health IT use.

Results

Our research data fall into four categories. Our primary outcome of interest is the number of patient deaths attributed to COVID-19, which is measured monthly at the county level. We measured COVID-19 cases at the same level of resolution, i.e., county-level. Individual measures of hospital health IT comprise our second data set. We also gathered data on CT being conducted in hospitals within each county. Finally, we collected a battery of covariates and instruments for our robustness analysis.

COVID-19 mortality and case data

Our data on COVID-19 cases and deaths was obtained from the data repository curated by the Center for Systems Science and Engineering at Johns Hopkins University (Dong et al.17), which obtained daily data at the county level from numerous resources, including the US Centers for Disease Control and local public health agencies17. Figure 2 plots the distribution of cumulative cases and deaths across all counties in the US as of April 5, 2021. These plots reflect the long-tailed nature of the distribution, which showed that ~50 counties in the United States accounted for a disproportionate share of COVID-19 cases and deaths.

The dependent variable in our panel regressions to test our learning hypothesis (H1 and H2) is the ratio of the number of new deaths in a county to the number of new cases, lagged by 2 weeks. Cases were lagged by 2 weeks, since a fatality attributed to COVID-19 is typically detected as a positive case ~2 weeks before death18,19. Our results are robust to dropping this lag and also for lags of up to 3 weeks. Our primary measure of time was the number of months elapsed since the first 10 COVID-19 cases were detected in a county. Counties with longer time elapsed since initial exposure are likely to exhibit higher cumulative deaths because they were exposed to the pandemic for a longer period.
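The construction of this dependent variable can be illustrated with a minimal sketch. It assumes daily county-level counts keyed by date and a fixed 14-day lag; the function name, the data layout, and the choice to return `None` when no lagged cases exist are illustrative assumptions, not details taken from the study.

```python
from datetime import date, timedelta

def lagged_death_ratio(daily_cases, daily_deaths, start, end, lag_days=14):
    """Ratio of new deaths in [start, end] to new cases in the same window
    shifted back by lag_days. Both inputs map a date to a daily count for
    one county (hypothetical layout). Returns None if no lagged cases exist.
    """
    lag = timedelta(days=lag_days)
    n_days = (end - start).days + 1
    days = [start + timedelta(days=i) for i in range(n_days)]
    deaths = sum(daily_deaths.get(d, 0) for d in days)
    # Cases are counted lag_days earlier than the deaths they are matched to.
    cases = sum(daily_cases.get(d - lag, 0) for d in days)
    return deaths / cases if cases > 0 else None
```

Setting `lag_days=0` or `lag_days=21` gives the kind of variation in the lag that the robustness statement above refers to.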

County-level hospital IT measures

Our data on hospital IT was sourced from the Healthcare Information and Management Systems Society (HIMSS). Specifically, we utilized the Electronic Medical Record Adoption Model (EMRAM) in 2020 to develop our measure of hospital Health IT20. HIMSS data is widely used in the health IT literature to measure IT adoption by hospitals21,22.

The EMRAM is a widely accepted industry standard that is used to measure the maturity of a hospital’s EHR implementation and level of adoption of health IT, with respect to support for patient care, reducing medication errors, and optimizing operational throughput in a paperless environment. It is assessed at the hospital level (for a multi-facility system, each hospital could have a different score). Every hospital is assigned to a “stage” ranging from 0–7, depending on its overall level of hospital IT implementation and adoption. If a hospital is assigned a rating at stage 6 or 7, it is deemed to have a high degree of health IT maturity and considered as an advanced Health IT organization. Hospitals in stage 6 or stage 7 have implemented and actively use advanced CDS systems, technology-enabled medication reconciliation, risk reporting, completely electronic medical record adoption (no paper) and data analytics, and participate in electronic HIE (where patient data is shared with other hospitals). Hence, the EMRAM categories represent different dimensions of hospital digital maturity and influence how hospitals may react to health emergencies, including spread of the COVID-19 pandemic. Additional details about EMRAM and hospital capabilities associated with each stage are available in Table S1 of the Supplement.

Our key treatment variable is a health IT index that measures the IT maturity of all hospitals within a county. To construct this index, we first assigned a binary variable according to whether a hospital was rated at either stage 6 or 7 of the EMRAM model. To convert our hospital health IT metric into a county-level health IT index, we used the normalized weighted average of health IT indices across all hospitals in the county, weighted by their number of beds (based on HIMSS annual hospital survey data). Weighting based on number of hospital beds yields a more accurate measure of the effect of health IT implementation across a county based on the size of hospital(s) located in the county. In other words, if large hospitals in a county deployed advanced health IT systems (i.e., EMRAM stage 6 or 7), then the county-wide, weighted average based on hospital size should be reflected in the measurement of the Health IT index. Hence, our independent variable, “Health IT index,” represents a county-level measure of health IT capability across all hospitals in the county, weighted by hospital size. Although this measure does not include IT use across other healthcare providers (such as nursing homes, physician offices, etc.), it represents a useful starting point to study the impact of health IT, especially since most severe cases of COVID-19 are treated at a hospital at some point during their treatment.
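As a rough illustration of the bed-weighted index described above, the sketch below computes the share of a county's hospital beds located in hospitals rated at EMRAM stage 6 or 7. The function name and input layout are hypothetical, and "normalized" is interpreted here as dividing by total beds; any further standardization across counties is not shown.

```python
def county_health_it_index(hospitals):
    """Bed-weighted average of a binary 'advanced health IT' indicator.

    hospitals: list of (emram_stage, beds) tuples for one county
    (hypothetical layout). A hospital counts as advanced if its EMRAM
    stage is 6 or 7. Returns a value in [0, 1], or None if the county
    has no beds on record.
    """
    total_beds = sum(beds for _, beds in hospitals)
    if total_beds <= 0:
        return None
    advanced_beds = sum(beds for stage, beds in hospitals if stage >= 6)
    return advanced_beds / total_beds
```

For a county with a 300-bed stage 7 hospital, a 100-bed stage 6 hospital, and a 100-bed stage 3 hospital, the index is 400/500 = 0.8, so large advanced hospitals dominate the county score.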

Clinical trials data

Data on CT related to COVID-19 were retrieved from the website clinicaltrials.gov on March 8, 2021. There were 4968 CT in the database related to COVID-19 that had been posted on or before this date. Information in the database is provided by the sponsor or PI of the clinical study. Federal and state laws require many types of CT to be registered with this database, but the data are not strictly comprehensive23.

CT were classified as interventional or non-interventional, based on their description. Interventional CT were further classified into treatments judged to be Good, Bad, or Mixed/Neutral, based on the best judgment of efficacy available as of April 2021. When more than one type of clinical trial was present in the county, we coded it as the best of these varieties. Our two most important references for best practices in COVID-19 treatment were the IDSA’s recommendations (American Journal of Managed Care Staff24) and the continually updated NYT COVID-19 Drug and Treatment feature. In other words, CT were classified into their respective category based on the evidence that IDSA used to develop its recommendations. Using this methodology, the final split of counties based on CT was: Interventional Good (30 counties), Interventional Bad (80 counties), Interventional Mixed/Neutral (38 counties), and Observational (31 counties); in the vast majority of counties (2140 counties), there was no ongoing clinical trial.
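The "best of these varieties" coding rule can be sketched as a simple priority lookup. The ordering below is an assumption made for illustration: the text does not state how Observational trials rank relative to the interventional categories, and the label "No CT" is a placeholder for counties without any trial.

```python
# Assumed ranking, best first. Only the idea of keeping the "best" category
# comes from the text; the position of "Observational" is a guess.
RANKING = [
    "Interventional Good",
    "Interventional Mixed/Neutral",
    "Interventional Bad",
    "Observational",
]

def county_trial_category(trial_categories):
    """Return the highest-ranked category among a county's trials,
    or "No CT" if the county has no registered trial."""
    present = set(trial_categories)
    for category in RANKING:
        if category in present:
            return category
    return "No CT"
```

Under this assumed ordering, a county hosting both a "Bad" and a "Good" interventional trial is coded as Interventional Good.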

Complementary data sources

We utilized the 2018 American Community Survey (ACS) data to include a rich set of demographics (age, gender, race) and economic variables (income, income inequality, employment composition, employment density) as controls in our econometric models. ACS is a nationally representative survey conducted by the US Census Bureau and is sent to over 3.5 million households across the US every year. We complemented this data with Rural-Urban Continuum Codes (RUCC) from the Economic Research Service of the US Department of Agriculture (USDA)25. These codes classify counties into nine categories based on their degree of urbanization and connection to urban centers.

To control for pre-COVID-19 hospital quality, we used data on hospital-level mortality from the Centers for Medicare and Medicaid Services (CMS). To control for mobility in a county, we used data from Google’s COVID-19 community mobility reports (Google COVID-19 Community Mobility Reports)26. Using location data from Android phones, Google calculates the relative change in population mobility across various types of locations in each county against baseline mobility in January 2020 (i.e., pre-COVID). Using this data, we calculated the average change in county-level mobility relative to pre-COVID levels of mobility in the same county during the duration of our study.

To control for user mobility inflows into a county (from other counties), we used data from the University of Maryland Transportation Institute’s COVID-19 impact analysis platform and calculated total inflows into a county during our study period27. This dataset was aggregated from several different mobile location data providers, and was previously used to study the impact of mobility on COVID-19 infections28. We augmented these datasets with data on the availability of airports in a county (from OurAirports.com) and data on trust in science-based information sources at the state level (from the MIT COVID-19 Beliefs, Behaviors and Norms survey)29.

Hence, we utilized a rich set of county-level demographic and hospital-related controls, such as pre-COVID hospital quality (mortality), number of hospitals, population demographics, income, employment composition, mobility, airport availability, rural–urban classification, and trust in science (see the section on “Demographics and County Characteristics” in the Supplement for details). These variables capture many of the same dimensions of county-level hospital capacity and institutional type that other variables, such as full-time equivalent employees and hospital teaching status, measure. Tables 1 and 2 provide summary statistics of our dependent and independent variables.

Clinical trials and learning

Did hospital participation in CT result in a reduction in COVID-19 mortality rates? We hypothesize that an important pathway through which participation in CT may help hospitals prevent COVID-19 fatalities is by enabling hospitals to learn, share, and implement clinical best practices for the treatment of COVID-19 patients. In testing hypothesis H1, we argue that counties with hospitals that participate in CT can improve their treatment and management of COVID-19 cases relatively faster, compared to counties with little or no CT participation.

In Fig. 3, we present model-free evidence for our hypothesis (H1). It shows the number of deaths per thousand cases over time (with cases lagged by 2 weeks, to reflect the typical interval between detection of a case and death), across counties grouped by their participation in CT. Counties are categorized into five groups according to their hospitals’ participation in CT. County averages were weighted by the logarithm of the county’s population. Time was measured as the number of months since the first ten cases were diagnosed in the county.

The trend plots shown in Fig. 3 indicate that counties with hospitals that participated in CT fared worse initially than counties with no clinical trial participation, with respect to death rates per 1000 new COVID-19 cases. However, COVID-19 death rates in counties that participated in any type of clinical trial decreased at a much faster rate than in counties without any CT. Later in the pandemic, counties without any CT performed worse than counties with CT in terms of death rate per 1000 new cases. In other words, mortality rates in counties with hospitals that participated in CT decreased at a faster rate compared to counties with no CT participation.

To account for the possibility that differences in learning rates between counties with varying levels of participation in different types of CT were being driven by systematic differences in covariates, we also present the ordinary least squares (OLS) regression results in Table 3 (Column 1). The estimates are based on OLS estimation of the model specified in equation (1). The negative coefficient of CTc (with a baseline of no clinical trial participation) supports our hypothesis H1 that counties with hospital participation in CT experienced lower COVID-19-related death rates compared to counties with hospitals with no CT participation. Specifically, we observe that the coefficients of CT Interventional Bad (coeff. = −2.563, p < 0.01) and CT Interventional Good (coeff. = −0.764, p < 0.01) are negative and significant in Column 1 of Table 3. These coefficients remain significant in Column 2 after accounting for the direct effect of Health IT. Further VIF analysis, as reported in Table S2 of the Supplement, shows that the models in Columns 1 and 2 of Table 3 do not suffer from multicollinearity.

Hence, our results indicate that counties with hospitals that participated in any kind of clinical trial (whether good or bad) reported lower COVID-19 mortality rates compared to counties with no CT participation. Our results highlight the importance of experimentation and learning effects based on CT participation in terms of their impact on COVID-19 mortality rates.

Health IT and learning

To test hypothesis H2, we first need to understand whether health IT capability is associated with a reduction in COVID-19 mortality. We hypothesize that an important pathway through which health IT can help hospitals reduce COVID-19 fatalities is through the sharing of clinical best practices for the treatment of COVID-19 cases. In this respect, we argue that counties with hospitals that exhibit an advanced level of health IT capability (measured using the EMRAM maturity score) are more likely to exhibit lower COVID-19 mortality rates. The results in column (2) of Table 3 indicate that counties with high levels of hospital health IT (coeff. = −0.528, p < 0.01) reported lower mortality rates due to COVID-19, compared to low health IT counties.

To identify whether the effect of CT on organizational learning is moderated by the effect of high health IT capability, and account for the possibility that differences in the COVID-19 death rates were driven by systematic differences in covariates, we performed an OLS analysis where we interact CT with health IT using the model specified in equation (2).

We present the results of this model in Table 3 (Column 3). The significant effect of the interaction term CT_c × IT_c supports hypothesis H2 with respect to the interaction effect of clinical trial participation and hospital health IT capability. In other words, in counties where hospitals experimented with interventional or observational CT, the effect of health IT on the COVID-19 death rate was much larger than in counties with no CT (base level in the model) or in counties that experimented with “bad” CT (i.e., CT of treatments that were later found to be ineffective).

We also found evidence that faster learning among counties with CT is driven by IT. Specifically, our results in column (3) indicate that the coefficient of CT Interventional Good × High IT is negative and statistically significant (coeff. = −4.312, p < 0.01). In other words, counties with hospitals that participated in interventional CT based on good (or neutral) scientific evidence and greater levels of health IT capability (High IT) also reported lower COVID-19 mortality rates compared to counties that did not participate in CT or had low health IT capabilities. Further, we observe that the coefficient of CT Interventional Bad × High IT is positive but not statistically significant (coeff. = 9.292, p > 0.1). This result suggests that interventional trials based on poor scientific evidence do not have a significant impact on COVID-19 mortality even in the presence of high health IT capability.

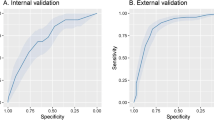

Our robustness analysis involved several additional checks to address potential endogeneity concerns as well as the potential for estimation bias due to confounding factors. We present these results in the Supplement. These included controlling for several demographic and county characteristics in Table S4, controlling for pre-COVID-19 hospital quality in Table S5 (using pre-COVID mortality as a control), and controlling for work from home and mobility changes in Table S6. We also deployed instrumental variable (IV) analysis by instrumenting hospital health IT with measures of broadband Internet availability and download speeds. This approach allowed us to establish causality with respect to the relationship between health IT and COVID-19 mortality rates at the county level. Our two-stage least squares (2SLS) estimation results are provided in Tables S7 and S8 in the Supplement and indicate that our Health IT measure is associated with a reduction in COVID-19 mortality rates.

Learning in action

Previously, we argued that learning how to better treat COVID-19 patients should be reflected in lower hospital mortality rates over time (at the county level). To study whether counties with more health IT-capable hospitals learned faster, we compared the rate at which their death rates decreased, and not just the total number of deaths.

Figure 4 presents model-free evidence with respect to this question. It shows the number of deaths per thousand cases (lagged by 2 weeks, the 14-day incubation period for COVID-19 cases), by county, based on time elapsed since the first 10 cases. Counties are split into two categories (low and high health IT) based on the health IT capability of hospitals in that county. County averages were weighted by the logarithm of the county population. Time was measured as the number of months since the first 10 cases were diagnosed in the county. The results remained qualitatively unchanged when the analysis was conducted without any lag, as well as with lags ranging from 1 to 3 weeks.

As observed, high health IT counties initially fared worse than low health IT counties with respect to death rates per 1000 new COVID-19 cases. However, COVID-19 death rates for high health IT counties decreased at a much faster rate overall compared to low health IT counties. From the first month of the pandemic to the last month of our study period, high health IT counties saw their death rate per thousand new cases decrease from 80.09 (95% CI [74.64, 85.54]) to 20.09 (95% CI [18.76, 21.40]), whereas low health IT counties saw their death rate per thousand new cases decrease from 45.85 (95% CI [42.14, 49.57]) to 24.54 (95% CI [22.39, 26.69]).
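To make the comparison of learning rates concrete, the point estimates quoted above can be converted into absolute and relative declines. The short Python sketch below uses only the four figures reported in the text; the dictionary layout and the helper name `decline` are illustrative choices, and the confidence intervals are ignored.

```python
# Death rates per thousand new cases, first vs. last month of the study period
# (point estimates quoted in the text; confidence intervals are not used here).
rates = {
    "high_it": {"first": 80.09, "last": 20.09},
    "low_it": {"first": 45.85, "last": 24.54},
}

def decline(first, last):
    """Return the absolute drop and the relative drop (in percent)."""
    absolute = first - last
    relative = 100.0 * absolute / first
    return absolute, relative

summary = {group: decline(v["first"], v["last"]) for group, v in rates.items()}

for group, (absolute, relative) in summary.items():
    print(f"{group}: drop of {absolute:.2f} per thousand ({relative:.1f}% decline)")
```

On these figures, the high health IT group falls by about 60 deaths per thousand cases (roughly a 75% decline), while the low health IT group falls by about 21 (roughly a 46% decline).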

Failing faster, clinical trials and long-run performance

A natural question is whether CT are a complement to or substitute for health IT in accelerating learning effects related to COVID-19 treatment and patient care. We were also interested in studying what share of the observed effect of faster learning in high health IT counties is driven by the presence of CT. Figure S3 in the Supplement replicates Fig. 4 but restricts its focus to counties without any COVID-19 CT. This demonstrates a faster rate of learning among high health IT counties with respect to decline in mortality rates over time, although the size of this effect was slightly attenuated. This result provides further evidence that a faster rate of learning in high health IT counties is only partially explained by differences in their participation and learning from CT.

Next, we also studied whether health IT capabilities and CT were complements with respect to organizational learning effects. We found that health IT capabilities and CT are commonly co-located: counties with at least moderate health IT capabilities are more likely to conduct CT. Nevertheless, the two are actually substitutes for learning best practices over time. Our observations are consistent with health IT enabling counties to learn from CT in neighboring counties, providing evidence of a spillover effect as clinicians learn from peer experience.

We study the impact of CT and health IT on learning by making two further distinctions. First, we distinguish between counties with hospitals that pursued CT of drugs that were eventually determined to be unhelpful (such as hydroxychloroquine) versus those counties that implemented CT that were eventually determined to be helpful (such as Regeneron and other monoclonal antibody treatments). Figure 5 provides point estimates which show that counties with hospitals that participated in good clinical trials and exhibited high health IT capabilities performed the best, as evidenced by the bold yellow line, which represents the lowest cumulative deaths per capita.

On the other hand, counties with hospitals that did not participate in CT and had low health IT capabilities performed the worst, supporting hypothesis H2. Figure 5 indicates that counties with high health IT capabilities and different types of CT participation exhibited lower cumulative deaths compared to counties with low IT and little or no clinical trial participation. Second, we distinguish between three types of learning: learning from COVID cases in the same county, learning from COVID cases in other counties, and learning from the severity of the level of COVID cases per capita in the county. To do so, we organized counties according to three different notions of time—months since first 10 cases, calendar month, and cumulative cases over beds in a county—and plotted their mortality rates (Supplementary Fig. S3). We observe that counties with CT fared better than counties with no CT across all scenarios, providing further support for hypothesis H2.

Discussion

We studied the impact of CT and health IT capability on COVID-19 mortality rates across the United States, focusing on county-level data of hospital implementation of IT systems and hospital participation in CT. Specifically, we focused on the role of organizational learning using CT as an important mechanism through which learning effects may be manifested, in terms of their impact on public health outcomes. Our empirical analyses are based on a rich data set of COVID-19 cases and mortality rates across the United States, and the corresponding use of health IT systems by hospitals measured at the county level. Using a battery of controls as well as instrumental variable analysis, we can exclude several non-causal explanations for the relationships of interest.

Our results suggest that adoption and use of health IT reduced COVID-19 mortality rates by improving the rate of organizational learning in counties with greater health IT capabilities. We also showed that high health IT counties can learn much faster than low health IT counties over time, resulting in a significant reduction in mortality rates compared to low IT counties. Our results also indicate that counties with greater hospital participation in CT performed better than counties with little or no participation in CT. Further, we observed that hospital participation in CT resulted in learning through experimentation, which was associated with lower mortality rates over time. Although counties with high health IT hospitals were poor performers initially, these counties outperformed their low health IT counterparts in the long run, thereby saving lives. Our research suggests that health IT systems allow counties to develop greater digital capabilities related to experimentation and knowledge sharing, based on their participation in CT. In turn, these organizational capabilities enable clinicians to determine and improve on best practices for the treatment of COVID-19 patients, gather real-time information on patients’ health status and test results, and share patient information with disease surveillance registries, thereby reducing COVID-19 mortality rates.

Our research is not only one of the first studies to document the impact of health IT capabilities on health outcomes during the COVID-19 pandemic outbreak, but also among the earliest studies to highlight the role of IT-enabled learning and experimentation effects on organizational performance. Specifically, with respect to causal mechanisms, our results show that high health IT counties have a much higher rate of CT which, in turn, contributes to improvements in the rate of learning over time. In other words, information transfer and exchange of knowledge about best practices for treatment of COVID-19 patients, based on learning from CT, can explain the significant reduction in observed mortality rates in high IT counties.

What is the size of this effect? Our estimation results indicate that, after flexibly controlling for when a county was first exposed to COVID-19, and using state-level fixed effects, a one standard deviation increase in the county-level health IT index is associated with 0.063 fewer deaths per thousand residents. For a US population of 331 million, this suggests that a one standard deviation increase in county-level health IT capability across all hospitals would have reduced COVID-19 deaths by 20,853 in the United States through April 5, 2021. This estimate represents a substantial reduction in mortality that might have been achieved if hospitals and counties had invested in relevant health IT capabilities to improve their digital capabilities and support the dissemination of public health information and best practices to clinicians involved in treating COVID-19 patients.
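The national figure follows from scaling the per-thousand estimate to the US population. A minimal Python check of this arithmetic, using only the two numbers given in the text (the variable names are illustrative), is:

```python
# Back-of-the-envelope check of the effect size quoted in the text.
deaths_avoided_per_thousand = 0.063  # per one-SD increase in the health IT index
us_population = 331_000_000          # population figure used in the text

# Convert the per-thousand-residents rate into a national count.
deaths_avoided = deaths_avoided_per_thousand * us_population / 1000
print(round(deaths_avoided))
```

This simple scaling assumes that the estimated association applies uniformly across all counties.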

Our study has several implications for research and practice. While the body of knowledge on best practices for treatment of COVID-19 patients has evolved in the relatively short period of time since the advent of the pandemic, scant attention has been paid to the impact of health IT on the performance of healthcare provider organizations and public health outcomes. Such IT-enabled capabilities provide clinicians with real-time information on patient health data and test results, as well as clinical decision support (CDS) informed by timely knowledge of best practices, which is useful in reducing the spread of pandemic outbreaks, resulting in lower mortality rates.

Our research represents one of the first studies to explore the impact of CT and health IT systems on public health outcomes during the COVID-19 pandemic. Our findings suggest that counties should invest in more resources to improve health IT infrastructure and encourage local hospitals to engage in knowledge dissemination through participation in CT. In this respect, our empirical research suggests the presence of single- and double-loop learning effects, as clinicians not only absorb best practices learned from their peers, but also rely on CT to question conventional wisdom and refine their understanding of best practices for the treatment of COVID-19 patients. These learning effects can be attributed to hospital participation in CT which contributed to a reduction in COVID-19 death rates over time through a process of experimentation with different types of treatment.

Our research is not without limitations. A major limitation is that our data include lagged archival information about hospital health IT that is reported annually, which precludes us from studying the impact of changes in IT use at a more granular level (such as a weekly or monthly time window). Further, our measure of the county-level health IT index is based solely on hospital adoption and use of health IT and does not include the use of health IT in outpatient clinics, nursing homes, and other tertiary care centers, a measure that is not easily available in research databases such as HIMSS or the AHA IT Supplement. Finally, we are limited by the availability of COVID-19 death and case information only at the county level, which smooths out the heterogeneity across many hospitals in populous counties like New York County. If data on COVID-19 or other pandemics become available at the hospital level, such an analysis would further strengthen our arguments. Nevertheless, our research represents one of the first attempts to study the impact of hospital IT capabilities on county-level mortality rates of COVID-19 patients across the United States. Furthermore, it provides a useful lens to explore the role of organizational learning and experimentation through hospital participation in CT and their impact on public health outcomes.

Methods

Model specification

We employ a panel data approach to study the relationship between hospital participation in CT, health IT capabilities, and COVID-19 mortality at the US county level. The dependent variable is the COVID-19 death rate, defined as the ratio of new deaths to new confirmed cases, lagged by 2 weeks to account for the typical interval between diagnosis and mortality. Our key explanatory variables are county-level measures of hospital health IT maturity, based on the HIMSS EMRAM framework, and participation in COVID-19-related CT, coded according to the nature and quality of the intervention. To construct county-level indices, we aggregated hospital-level data using a bed-weighted average. We include county fixed effects, as well as a rich set of demographic, economic, and hospital quality covariates.
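The two variable constructions described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's data pipeline: the hospital records and weekly counts are hypothetical, the function names are our own, and we assume that the 2-week lag pairs deaths in a given week with the cases diagnosed two weeks earlier, which the text does not spell out.

```python
from collections import defaultdict

# Hypothetical hospital records: (county, beds, EMRAM-style IT score).
hospitals = [
    ("A", 100, 6.0),
    ("A", 300, 2.0),
    ("B", 50, 4.0),
]

def bed_weighted_index(records):
    """Aggregate hospital scores to counties, weighting each hospital by beds."""
    weighted_sum = defaultdict(float)
    total_beds = defaultdict(float)
    for county, beds, score in records:
        weighted_sum[county] += beds * score
        total_beds[county] += beds
    return {c: weighted_sum[c] / total_beds[c] for c in weighted_sum}

def lagged_death_rate(new_cases, new_deaths, lag=2):
    """Deaths in week t per thousand cases diagnosed in week t - lag.

    Weeks with no lagged case count (or zero lagged cases) yield None.
    The direction of the lag is an assumption of this sketch.
    """
    rates = []
    for t, deaths in enumerate(new_deaths):
        if t < lag or new_cases[t - lag] == 0:
            rates.append(None)
        else:
            rates.append(1000.0 * deaths / new_cases[t - lag])
    return rates

county_it = bed_weighted_index(hospitals)
weekly_rates = lagged_death_rate([200, 400, 500, 0], [1, 3, 10, 8])
print(county_it)
print(weekly_rates)
```

In this example, the large low-scoring hospital in county A pulls the county index toward its own score, which is the intended effect of bed weighting.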

Our baseline specification is shown in Eq. (1).

where DeathRate_{c,t} denotes the COVID-19 death rate in county c at time t, M_{c,t} is the number of months since the first 10 cases, CT_c indicates clinical trial participation, and X_c is a vector of controls.

To examine the moderating role of health IT, we extend the model to the specification shown in equation (2), which includes the interaction between hospital participation in CT and health IT capability.

where IT_c denotes the IT capability of county c.
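To illustrate how an interaction coefficient of this kind is read, consider a deliberately simplified case with two binary indicators (any CT participation, high health IT) and no controls, time terms, or fixed effects. In such a saturated model, the OLS coefficients equal differences of cell means, so they can be computed without a regression package. The sketch below is not the paper's specification: the data are hypothetical and the names `cell_mean` and `saturated_ols` are our own.

```python
from statistics import mean

# Hypothetical county observations: (ct, high_it, deaths per thousand cases).
observations = [
    (0, 0, 30.0), (0, 0, 34.0),
    (1, 0, 29.0), (1, 0, 31.0),
    (0, 1, 28.0), (0, 1, 30.0),
    (1, 1, 20.0), (1, 1, 22.0),
]

def cell_mean(rows, ct, high_it):
    """Mean outcome for counties with the given CT and IT indicators."""
    return mean(y for c, i, y in rows if c == ct and i == high_it)

def saturated_ols(rows):
    """OLS coefficients of y ~ ct + high_it + ct:high_it for binary regressors.

    With two binary regressors and their interaction, the fitted values are
    the four cell means, so each coefficient is a difference of those means.
    """
    m00 = cell_mean(rows, 0, 0)
    m10 = cell_mean(rows, 1, 0)
    m01 = cell_mean(rows, 0, 1)
    m11 = cell_mean(rows, 1, 1)
    return {
        "intercept": m00,
        "ct": m10 - m00,
        "high_it": m01 - m00,
        "ct_x_high_it": (m11 - m01) - (m10 - m00),
    }

coefficients = saturated_ols(observations)
print(coefficients)
```

A negative interaction coefficient here means that the reduction in death rate associated with CT participation is larger in high health IT counties than in low health IT counties, which is the pattern that a moderation hypothesis predicts.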

To address potential endogeneity and omitted variable bias, we conducted robustness checks including instrumental variable estimation, using broadband Internet availability and download speeds as instruments for hospital health IT capability. Our estimation results using these two models are discussed in the “Results” section.
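The logic of the instrumental variable approach can be illustrated with a stylized example. With a single instrument and a single endogenous regressor, the two-stage least squares estimate reduces to the ratio of two covariances. The Python sketch below uses synthetic data, not data from the study, with one instrument rather than the two used in our analysis and no controls; the variable names are illustrative, and the confounder is constructed to be exactly uncorrelated with the instrument.

```python
def covariance(a, b):
    """Sample covariance of two equal-length sequences."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / (len(a) - 1)

# Synthetic data (assumption): the true effect of health IT on mortality is -0.5.
broadband = [0.0, 0.0, 1.0, 1.0]      # instrument z
confounder = [-1.0, 1.0, -1.0, 1.0]   # unobserved u, uncorrelated with z
health_it = [z + u for z, u in zip(broadband, confounder)]               # x = z + u
mortality = [-0.5 * x + 2.0 * u for x, u in zip(health_it, confounder)]  # y

# Naive OLS slope: biased because u drives both x and y.
ols_estimate = covariance(health_it, mortality) / covariance(health_it, health_it)

# IV (Wald) estimate: uses only the variation in x that is induced by z.
iv_estimate = covariance(broadband, mortality) / covariance(broadband, health_it)

print(f"OLS: {ols_estimate:.2f}, IV: {iv_estimate:.2f}")
```

In this constructed example, the naive slope has the wrong sign, while the instrument recovers the assumed effect. The recovery depends on the instrument being unrelated to the confounder, which holds here by construction.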

Data availability

Data for this study was obtained from a variety of public and proprietary data sources. Please send all data questions or access requests to the corresponding author, who will direct them accordingly.

References

Chetty, R. et al. How did COVID-19 and stabilization policies affect spending and employment? A new real-time economic tracker based on private sector data. NBER 91, 1689–1699 (2020).

Schumacher, P. & Wedenoja, L. Home or Hospital: What Place of Death Can Tell Us about COVID-19 and Public Health. (Rockefeller Institute of Government Report, 2022).

Self, W. H. et al. Effect of hydroxychloroquine on clinical status at 14 days in hospitalized patients with COVID-19: a randomized clinical trial. JAMA 324, 2165–2176 (2020).

Tibble, H. et al. Real-world severe COVID-19 outcomes associated with use of antivirals and neutralising monoclonal antibodies in Scotland. npj Prim. Care Respir. Med. 34, 17 (2024).

Ledford, H. Why do covid death rates seem to be falling? Nature 587, 190–192 (2020).

Ayabakan, S., Bardhan, I., Zheng, Z. & Kirksey, K. The impact of health information sharing on duplicate testing. MIS Q. 41, 1083–1104 (2017).

Janakiraman, R., Park, E., Demirezen, E. & Kumar, S. The effects of health information exchange access on healthcare quality and efficiency: an empirical investigation. Manag. Sci. 69, 791–811 (2021).

Majumdar, S. R. et al. Better outcomes for patients treated at hospitals that participate in clinical trials. Arch. Intern. Med. 168, 657–662 (2008).

Du Bois, A. et al. Consensus statements on the management of ovarian cancer: final document of the 3rd international gynecologic cancer intergroup ovarian cancer consensus conference (GCIG OCCC 2004). Ann. Oncol. 16, viii7–viii12 (2005).

Nelson, R. R. & Winter, S. G. The Schumpeterian tradeoff revisited. Am. Econ. Rev. 72, 114–132 (1982).

Pisano, G. P., Bohmer, R. M. & Edmondson, A. C. Organizational differences in rates of learning: evidence from the adoption of minimally invasive cardiac surgery. Manag. Sci. 47, 752–768 (2001).

Tucker, A. L., Nembhard, I. M. & Edmondson, A. C. Implementing new practices: an empirical study of organizational learning in hospital intensive care units. Manag. Sci. 53, 894–907 (2007).

Argyris, C. Organizational learning and management information systems. Account. Organ. Soc. 2, 113–123 (1977).

Bechtel, J. et al. Improving the quality conduct and efficiency of clinical trials with training: recommendations for preparedness and qualification of investigators and delegates. Contemp. Clin. Trials 89, 105918 (2020).

Abernethy, N. F. et al. Rapid development of a registry to accelerate COVID-19 vaccine clinical trials. npj Digit. Med. 8, 251, https://doi.org/10.1038/s41746-025-01666-3 (2025).

Huckman, R. S. & Zinner, D. Does focus improve operational performance? Lessons from the management of clinical trials. Strateg. Manag. J. 29, 173–193 (2008).

Dong, E., Du, H. & Gardner, L. An interactive web-based dashboard to track COVID-19 in real time. Lancet Infect. Dis. 20, 533–534 (2020).

Flaxman, S. et al. Estimating the effects of non-pharmaceutical interventions on COVID-19 in Europe. Nature 584, 257–261 (2020).

Kraemer, M. U. et al. The effect of human mobility and control measures on the COVID-19 epidemic in China. Science 368, 493–497 (2020).

HIMSS. Electronic Medical Record Adoption Model (EMRAM). https://www.himss.org/what-we-do-solutions/digital-health-transformation/maturity-models/electronic-medical-record-adoption-model-emram (2025).

Kwon, J. & Johnson, M. E. Proactive versus reactive security investments in the healthcare sector. MIS Q. 38, 451–A3 (2014).

Lee, J., McCullough, J. S. & Town, R. J. The impact of health information technology on hospital productivity. RAND J. Econ. 44, 545–568 (2013).

ClinicalTrials.gov. Background information about clinical trial online registry. https://clinicaltrials.gov/ct2/about-site/background (2021).

The American Journal of Managed Care. A timeline of COVID-19 developments in 2020. https://www.ajmc.com/view/a-timeline-of-covid19-developments-in-2020 (2020).

United States Department of Agriculture (USDA). Rural-urban continuum codes documentation. https://www.ers.usda.gov/data-products/rural-urban-continuum-codes/documentation/ (2025).

Google. COVID-19 Community Mobility Reports. https://www.google.com/covid19/mobility/ (accessed 5 April 2021).

Zhang, L. et al. Interactive COVID-19 mobility impact and social distancing analysis platform. Transp. Res. Rec. 2677, 168–180 (2023).

Xiong, C., Hu, S., Yang, M., Luo, W. & Zhang, L. Mobile device data reveal the dynamics in a positive relationship between human mobility and covid-19 infections. Proc. Natl Acad. Sci. USA 117, 27087–27089 (2020).

Collis, A. et al. Global survey on covid-19 beliefs, behaviors, and norms. Nat. Hum. Behav. 6, 1310–1317 (2022).

Acknowledgements

We gratefully acknowledge the guidance and constructive feedback from the editor and review team. I.B. is grateful for the financial support of the Charles and Elizabeth Prothro Regents Chair and the Dean’s Excellence Research Grant at the McCombs School of Business at the University of Texas at Austin.

Author information

Contributions

The authors, C.N., A.C., S.B. and I.B., confirm they have read and approved the manuscript. The authors agree to be held accountable for the work performed therein. C.N. was responsible for the data analysis, while all authors shared the tasks of interpreting the results and writing the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Nicolaides, C., Collis, A., Benzell, S. et al. Learning from COVID-19: clinical trials, health information technology, and patient mortality. npj Health Syst. 2, 37 (2025). https://doi.org/10.1038/s44401-025-00042-3

DOI: https://doi.org/10.1038/s44401-025-00042-3