Abstract

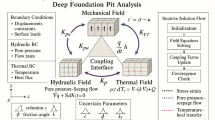

Hydraulic excavators are among the most energy-intensive machines in construction and mining, and their conventional hydraulic systems often operate at fixed pressure and flow settings, which causes significant energy loss. Improving energy efficiency while ensuring safety and adaptability under uncertain operating conditions remains a critical challenge. This study proposes a novel adaptive control framework that integrates Bayesian inference with reinforcement learning (RL) to enhance energy recuperation in hydraulic excavator arms. The framework explicitly models the dynamics of the hydraulic cylinders, pumps, valves, and accumulators, while accounting for uncertainties arising from soil resistance, temperature-dependent viscosity, component wear, and sensor noise. A Bayesian particle filter continuously estimates latent states, such as soil resistance multipliers and accumulator pre-charge offsets, so that a belief-space RL policy can make informed control decisions. The learned policy adjusts pump pressure and valve commands in real time, while a safety-projection layer enforces strict operational constraints: a 5–35 MPa hydraulic pressure range, a 12–28 MPa accumulator window, valve rate limits, and section-level relief protections.
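The safety-projection idea can be illustrated with a minimal sketch that maps a proposed RL action onto an admissible one using the pressure windows stated above. It is not the authors' implementation: applying the 5–35 MPa window to the pump pressure command, the normalized valve command in [-1, 1], the convention that a positive command charges the accumulator, and the names `Action`, `project_action`, `max_valve_rate` and `dt` (with their default values) are all hypothetical assumptions. Section-level relief protection is omitted.

```python
from dataclasses import dataclass

# Pressure windows quoted in the abstract (MPa). Applying the 5-35 MPa window to
# the pump pressure command is an assumption made for this sketch.
P_PUMP_MIN, P_PUMP_MAX = 5.0, 35.0
P_ACC_MIN, P_ACC_MAX = 12.0, 28.0


@dataclass
class Action:
    pump_pressure: float  # commanded pump pressure in MPa
    valve: float          # normalized valve command in [-1, 1]; > 0 charges the accumulator (assumed)


def clip(x: float, lo: float, hi: float) -> float:
    """Clamp x to the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def project_action(proposed: Action, prev_valve: float, acc_pressure: float,
                   max_valve_rate: float = 2.0, dt: float = 0.01) -> Action:
    """Project an RL-proposed action onto a safe action (hypothetical sketch).

    max_valve_rate (command units per second) and dt (seconds) are illustrative values.
    """
    # 1) Keep the pump pressure command inside the hydraulic pressure window.
    pressure = clip(proposed.pump_pressure, P_PUMP_MIN, P_PUMP_MAX)

    # 2) Keep the valve command inside its normalized range.
    target = clip(proposed.valve, -1.0, 1.0)

    # 3) Accumulator window: no further charging at the upper bound,
    #    no further discharging at the lower bound.
    if acc_pressure >= P_ACC_MAX:
        target = min(target, 0.0)
    if acc_pressure <= P_ACC_MIN:
        target = max(target, 0.0)

    # 4) Rate-limit the valve so it moves at most max_valve_rate * dt per step.
    max_step = max_valve_rate * dt
    valve = clip(target, prev_valve - max_step, prev_valve + max_step)

    return Action(pressure, valve)


if __name__ == "__main__":
    # Over-pressure request with a full accumulator: pressure is clamped and charging is blocked.
    safe = project_action(Action(pump_pressure=40.0, valve=0.5), prev_valve=0.0, acc_pressure=28.5)
    print(safe)  # Action(pump_pressure=35.0, valve=0.0)
```

In this sketch the accumulator gating is applied to the target before rate limiting, so a valve that is already open ramps toward closed instead of jumping; this respects the rate limit at the cost of a few steps of continued flow. The ordering used in the study itself is not stated here.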

Data availability

Code and co-simulation assets, together with configuration files, uncertainty trajectories, evaluation logs, and figure scripts, are archived on Zenodo: https://zenodo.org/records/17072083 (DOI: 10.5281/zenodo.17072083) and https://zenodo.org/records/17375877 (DOI: 10.5281/zenodo.17375877).

Funding

This research was funded by the National Natural Science Foundation of China (62303493), the Furong Plan Young Talent Project (2025RC3177), the National Key Research and Development Program (2022YFD2202103), and the Hunan Provincial Natural Science Foundation Project (2024JJ6720).

Author information

Contributions

Peng Hu: conceptualization, methodology, software, experiments, writing – original draft. Tao Wen: system modeling, experiment design, writing – review & editing. Daqing Zhang: industrial input, parameter validation, writing – review & editing. Haifei Chen: data processing, evaluation/visualization, writing – review & editing. Jun Gong: supervision, safety/constraint design, writing – review & editing. All authors approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hu, P., Wen, T., Zhang, D. et al. Bayesian reinforcement learning for adaptive control of energy recuperation in hydraulic excavator arms. Sci Rep (2026). https://doi.org/10.1038/s41598-026-35391-y

DOI: https://doi.org/10.1038/s41598-026-35391-y