Abstract

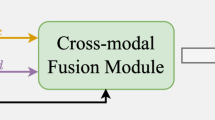

In the last few years, the fusion of multimodal data has been widely studied for applications such as robotics, gesture recognition, and autonomous navigation. High-quality visual sensors are expensive, and consumer-grade sensors produce low-resolution images. To overcome this limitation, researchers have developed methods that combine RGB colour images with non-visual data, such as thermal data, to improve resolution. Fusing multiple modalities to produce visually appealing, high-resolution images often requires dense models with millions of parameters and a heavy computational load, which is commonly attributed to the intricate architecture of the model. We propose LapGSR, a lightweight, multimodal generative model that incorporates Laplacian image pyramids for guided thermal super-resolution. The approach applies a Laplacian pyramid to RGB colour images to extract vital edge information, which is then used to bypass heavy feature-map computation in the higher layers of the model, in tandem with a combined pixel and adversarial loss. LapGSR preserves the spatial and structural details of the image while remaining efficient and compact. The resulting model has significantly fewer parameters than other state-of-the-art models while demonstrating excellent results on two cross-domain datasets, ULB17-VT and VGTSR.
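The Laplacian pyramid decomposition referred to in the abstract can be illustrated with a short sketch. This is not the LapGSR implementation: it assumes NumPy, substitutes 2×2 average pooling and nearest-neighbour upsampling for the Gaussian filtering typically used in such pyramids, and the function names (`downsample`, `upsample`, `build_laplacian_pyramid`, `reconstruct`) are hypothetical. Each band stores the detail lost by one downsampling step, so it is close to zero in flat regions and large near edges.

```python
import numpy as np


def downsample(img):
    """Halve the resolution of an H x W x C array with 2x2 average pooling.

    Assumption: average pooling stands in for Gaussian blur + decimation.
    """
    h, w, c = img.shape
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    return img.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))


def upsample(img):
    """Double the resolution with nearest-neighbour repetition."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)


def build_laplacian_pyramid(img, levels=3):
    """Return (bands, low), where bands[i] holds the detail at scale i
    (finest first) and low is the remaining low-resolution image."""
    current = np.asarray(img, dtype=np.float64)
    bands = []
    for _ in range(levels):
        low = downsample(current)
        # High-frequency band: what upsampling the coarse image cannot recover.
        bands.append(current - upsample(low))
        current = low
    return bands, current


def reconstruct(bands, low):
    """Invert the decomposition by adding the bands back, coarse to fine."""
    current = low
    for band in reversed(bands):
        current = upsample(current) + band
    return current
```

Calling `build_laplacian_pyramid(rgb, levels=3)` on a 32×32×3 array yields bands at 32×32, 16×16, and 8×8 plus a 4×4 low-resolution image, and `reconstruct` recovers the input up to floating-point rounding. The decomposition involves no learned parameters, so the edge information comes at negligible cost compared with computing feature maps at full resolution.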

Data availability

The work utilized publicly available standard datasets, namely ULB17-VT and VGTSR. These two datasets are available at https://github.com/uverma/LapGSR.

Acknowledgements

We thank Mars Rover Manipal, an interdisciplinary student team at Manipal Institute of Technology, Manipal Academy of Higher Education, for the resources needed to conduct our research.

Funding

Open access funding provided by Manipal Academy of Higher Education, Manipal.

Author information

Contributions

Aditya Kasliwal was responsible for conceptualizing the methodology, validating it, running the experiments, and writing the manuscript. I.G. and Aryan Kamani implemented the codebase needed for evaluation and validated the results. P.S. and U.V. contributed to the formal analysis, methodology, and writing of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kasliwal, A., Gakhar, I., Kamani, A. et al. Laplacian reconstructive network for guided thermal super-resolution. Sci Rep (2026). https://doi.org/10.1038/s41598-026-36027-x

DOI: https://doi.org/10.1038/s41598-026-36027-x