Abstract

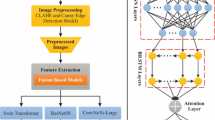

Despite recent advances in deep learning (DL) for sign language recognition (SLR), most existing systems remain limited to monolingual datasets, lack interpretability, and are too computationally intensive for real-time edge deployment. With the growing need for inclusive, real-time communication technologies, efficient and deployable SLR systems are of critical importance. This paper presents TinyMSLR, an explainable, lightweight framework designed for isolated-sign (gloss) classification on resource-constrained devices. TinyMSLR combines a ConvNeXt-Tiny encoder for fine-grained local visual cues with a Swin Transformer encoder for long-range spatio-temporal context, and integrates an adaptive fusion gate to balance the two streams. To further improve accuracy under strict compute and memory budgets, we introduce a dual-teacher knowledge distillation (KD) scheme that transfers complementary spatial and contextual knowledge from high-capacity CNN and Transformer teachers to the compact student model. We evaluate TinyMSLR in a controlled multilingual setting using two public datasets, the German Sign Language (DGS) RWTH-PHOENIX-Weather 2014T corpus and the Mandarin Chinese Sign Language (CSL) dataset, by constructing a shared subset of 20 semantically aligned sign classes and segmenting the continuous RWTH sequences into single-gloss clips. All reported results therefore correspond to isolated-sign recognition rather than continuous, sentence-level multilingual sign language recognition (CSLR). On this benchmark, TinyMSLR achieves 99.28% training accuracy and 99.01% validation accuracy, with an F1-score of 98.96%, while keeping the parameter count under 2.7M. Inference latency is 24 ms on standard CPUs and under 13.5 ms on edge GPUs. Overall, TinyMSLR demonstrates a practical accuracy–efficiency–explainability trade-off that is well aligned with deployment-ready multilingual isolated-sign systems on the edge.
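The abstract does not give the exact form of the adaptive fusion gate or of the dual-teacher KD objective, so the following is only a minimal NumPy sketch under stated assumptions, not the authors' implementation. It assumes a per-feature sigmoid gate computed from the concatenated stream features, and a temperature-softened KL distillation term for each teacher combined with cross-entropy. The names `gated_fusion`, `dual_teacher_kd_loss`, `W`, `b`, `T`, `alpha`, and `beta` are hypothetical.

```python
import numpy as np


def softmax(logits, T=1.0):
    """Numerically stable softmax with temperature T."""
    z = np.asarray(logits, dtype=float) / T
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def gated_fusion(f_cnn, f_swin, W, b):
    """Blend two feature vectors with a sigmoid gate (assumed form).

    The gate g lies in (0, 1) per feature, so the fused feature is a
    convex combination of the CNN and Transformer stream features.
    """
    concat = np.concatenate([f_cnn, f_swin], axis=-1)
    g = 1.0 / (1.0 + np.exp(-(concat @ W + b)))
    return g * f_cnn + (1.0 - g) * f_swin, g


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) along the last axis."""
    return np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=-1)


def dual_teacher_kd_loss(student_logits, cnn_logits, transformer_logits,
                         labels, T=4.0, alpha=0.5, beta=0.5):
    """Cross-entropy plus a weighted two-teacher distillation term.

    alpha weights distillation against cross-entropy; beta weights the
    CNN teacher against the Transformer teacher. All three defaults are
    illustrative assumptions, not values from the paper.
    """
    labels = np.asarray(labels)
    p_student = softmax(student_logits)
    ce = -np.log(p_student[np.arange(len(labels)), labels] + 1e-12)

    p_student_T = softmax(student_logits, T)
    kd_cnn = kl_divergence(softmax(cnn_logits, T), p_student_T)
    kd_tr = kl_divergence(softmax(transformer_logits, T), p_student_T)
    kd = beta * kd_cnn + (1.0 - beta) * kd_tr

    # T**2 keeps the soft-target gradients on a comparable scale.
    return float(np.mean((1.0 - alpha) * ce + alpha * T**2 * kd))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    batch, dim, num_classes = 4, 8, 20  # 20 classes as in the shared subset

    f_cnn = rng.normal(size=(batch, dim))
    f_swin = rng.normal(size=(batch, dim))
    W = 0.1 * rng.normal(size=(2 * dim, dim))
    b = np.zeros(dim)
    fused, gate = gated_fusion(f_cnn, f_swin, W, b)
    print("fused shape:", fused.shape)

    student = rng.normal(size=(batch, num_classes))
    teacher_cnn = rng.normal(size=(batch, num_classes))
    teacher_tr = rng.normal(size=(batch, num_classes))
    labels = rng.integers(0, num_classes, size=batch)
    print("loss:", dual_teacher_kd_loss(student, teacher_cnn, teacher_tr, labels))
```

In this sketch the gate is computed per sample, so the balance between local and contextual features can change from clip to clip. A single learned scalar weight would be a simpler alternative, at the cost of that per-input adaptivity.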

Data availability

The original data presented in the study are openly available. The RWTH-PHOENIX-Weather 2014 Dataset: https://www-i6.informatik.rwth-aachen.de/koller/RWTH-PHOENIX/, accessed on 16 September 2025. The Chinese Sign Language (CSL) Dataset: https://service.tib.eu/ldmservice/dataset/chinese-sign-language, accessed on 16 September 2025.

Funding

This research received no external funding.

Author information

Contributions

Ismail Lamaakal, Chaymae Yahyati: Conceptualization, Methodology, Software, Writing - Original Draft. Ismail Lamaakal, Chaymae Yahyati, Yassine Maleh, Khalid El Makkaoui, Ibrahim Ouahbi: Methodology, Investigation, Writing - Review & Editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Lamaakal, I., Yahyati, C., Maleh, Y. et al. An explainable hybrid CNN–transformer model for sign language recognition on edge devices using adaptive fusion and knowledge distillation. Sci Rep (2026). https://doi.org/10.1038/s41598-026-38478-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-026-38478-8