Abstract

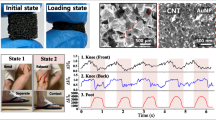

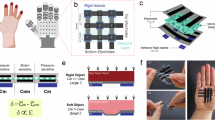

Wearable systems that incorporate soft tactile sensors capable of transmitting spatiotemporal touch patterns may be useful in the development of biomedical robotics. Such systems have been used for tasks such as typing and device operation, but their effectiveness in converting pressure patterns into specific control commands lags behind that of conventional finger-operated electronic devices. Here we describe a tactile oral pad with a touch sensor array made from a carbon nanotube and silicone composite. The pad can be operated with either the tongue or the teeth, and it can detect a range of strains, allowing it to function like a touchscreen. By combining the pad with a recurrent neural network, we show that it can be used for typing, gaming and wheelchair navigation through cooperative control of tongue sliding (pressures below 50 kPa) and teeth clicking (pressures above 500 kPa).
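The abstract separates the two input modes by contact pressure: tongue sliding stays below 50 kPa and teeth clicking exceeds 500 kPa. As a rough illustration only, the sketch below classifies a single frame from a pressure-sensor array with fixed thresholds and estimates the touch location as a pressure-weighted centroid. The names `classify_frame` and `TouchEvent`, the frame layout (a 2D list of taxel pressures in kPa), the `noise_kpa` floor and the "ambiguous" label for the 50 to 500 kPa band are all hypothetical assumptions; the authors' system uses a recurrent neural network, not fixed thresholds.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Pressure bounds quoted in the abstract (kPa). Treating the band in between
# as "ambiguous" is an assumption of this sketch, not the authors' method.
TONGUE_MAX_KPA = 50.0
TEETH_MIN_KPA = 500.0


@dataclass
class TouchEvent:
    mode: str                      # "tongue_slide", "teeth_click" or "ambiguous"
    peak_kpa: float                # largest taxel pressure in the frame
    centroid: Tuple[float, float]  # pressure-weighted (row, col) touch location


def classify_frame(frame: List[List[float]], noise_kpa: float = 1.0) -> Optional[TouchEvent]:
    """Classify one frame of a hypothetical pressure-sensor array (values in kPa)."""
    total = row_sum = col_sum = peak = 0.0
    for r, row in enumerate(frame):
        for c, p in enumerate(row):
            if p <= noise_kpa:     # skip taxels at or below the assumed noise floor
                continue
            total += p
            row_sum += r * p
            col_sum += c * p
            peak = max(peak, p)
    if total == 0.0:
        return None                # no contact detected in this frame
    if peak < TONGUE_MAX_KPA:
        mode = "tongue_slide"
    elif peak > TEETH_MIN_KPA:
        mode = "teeth_click"
    else:
        mode = "ambiguous"
    return TouchEvent(mode, peak, (row_sum / total, col_sum / total))
```

For example, a light two-taxel contact `[[0, 0, 0], [0, 20, 20], [0, 0, 0]]` is labelled `"tongue_slide"` with centroid `(1.0, 1.5)`. Tracking the centroid across successive frames would give a crude slide trajectory; a learned sequence model such as the recurrent network described above can capture richer spatiotemporal patterns than this per-frame rule.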


Data availability

The data that support the plots within this paper and other findings of the study are available from the corresponding authors upon reasonable request.

Code availability

The code is available from the corresponding authors upon reasonable request.


Acknowledgements

This work is supported by the RIE2025 Manufacturing, Trade and Connectivity Programmatic Fund (Award No. M21J9b0085) and the National Research Foundation, Prime Minister’s Office, Singapore, under its Competitive Research Program (Award No. NRF-CRP23-2019-0002) and its Investigatorship Programme (Award No. NRF-NRFI05-2019-0003). We thank H. Zhao for technical assistance and Z. Sheng for technical assistance with the biocompatibility and mechanical damage tests.

Author information


Contributions

X.L., L.Y. and B.H. conceived and designed the project. X.L. supervised the project. D.Y. and X.R. characterized the materials and developed the software. L.Y. conducted the numerical simulations and designed the algorithms. B.H. fabricated the sensors and electrical devices. B.H., L.Y. and D.Y. conducted the experimental validation. B.H. and L.Y. wrote the manuscript. X.L. edited the manuscript. All authors participated in discussions and in the analysis presented in the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Electronics thanks Alejandro Castillo and Lotte Andreasen Struijk for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Figs. 1–12.

Supplementary Video 1

Characteristics of the piezoresistive film.

Supplementary Video 2

Mouse function demonstration.

Supplementary Video 3

Keyboard function demonstration.

Supplementary Video 4

Wheelchair control in a narrow space.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hou, B., Yang, D., Ren, X. et al. A tactile oral pad based on carbon nanotubes for multimodal haptic interaction. Nat Electron 7, 777–787 (2024). https://doi.org/10.1038/s41928-024-01234-9


DOI: https://doi.org/10.1038/s41928-024-01234-9

This article is cited by

- Mechanomedicine. Nature Reviews Bioengineering (2026)
- Flexible Tactile Sensing Systems: Challenges in Theoretical Research Transferring to Practical Applications. Nano-Micro Letters (2026)