Abstract

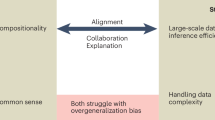

Chomsky’s generative linguistics has made substantial contributions to cognitive science and symbolic artificial intelligence. With the rise of neural language models, however, the compatibility between generative artificial intelligence and generative linguistics has come under debate. Here we outline three ways in which generative artificial intelligence aligns with and supports the core ideas of generative linguistics. In turn, generative linguistics can provide criteria to evaluate and improve neural language models as models of human language and cognition.

Change history

24 November 2025

A Correction to this paper has been published: https://doi.org/10.1038/s43588-025-00937-z

Acknowledgements

E.P. thanks the participants and panelists of the Université du Québec à Montréal (UQÀM)’s Institut des Sciences Cognitives 2024 Summer School Chatting Minds: The Science and Stakes of Large Language Models, where the idea for this piece originally took form. This research was supported by E.P.’s IVADO Professor program.

Author information

Contributions

Both E.P. and M.J. contributed to the conceptualization and writing of this paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Computational Science thanks Spencer Caplan and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editor: Fernando Chirigati, in collaboration with the Nature Computational Science team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Portelance, E., Jasbi, M. On the compatibility of generative AI and generative linguistics. Nat Comput Sci 5, 745–753 (2025). https://doi.org/10.1038/s43588-025-00861-2

DOI: https://doi.org/10.1038/s43588-025-00861-2