Abstract

Oracle bone inscription (OBI) rubbings record the shape, structure, and details of the inscriptions, serving as essential resources for OBI research and digital historical libraries. However, OBIs often suffer from degradation issues such as cracks, erosion, noise, and interference patterns. Existing restoration methods struggle to handle the complex noise in real-world scenarios, frequently leading to glyph distortion or insufficient noise removal. In this paper, we propose a glyph extraction-driven image generation network for high-precision OBI restoration. Our method leverages character glyphs as supplementary information to address complex degradations while preserving the original glyph structures. Additionally, a multi-scale feature extraction strategy is introduced to process noise at varying scales. Comparative evaluations on multiple real-world datasets demonstrate that our method significantly outperforms state-of-the-art approaches, thereby validating its effectiveness for practical OBI image restoration.


Introduction

Oracle bone inscriptions (OBIs) are the earliest known systematic writing in China. They first appeared around the 14th century BCE, during the Shang Dynasty, which began approximately 3600 years ago1,2. These inscriptions were primarily carved on turtle shells and animal bones, and their content mainly concerns divination, royal activities, rituals, warfare, agriculture, and meteorology3. The discovery and study of OBIs have provided invaluable historical records: by deciphering these characters, we can understand the political, economic, cultural, and religious life of ancient Chinese society4,5. To date, more than 150,000 OBI pieces have been unearthed, helping significantly to fill gaps in ancient history.

OBI rubbing is the technique of transferring the inscriptions on oracle bones from the physical objects onto paper6. This technique has facilitated the digitization of OBIs, which both protects the original artifacts and aids research and dissemination7. OBI rubbing images faithfully record the shape, structure, and details of the inscriptions, making them essential materials for OBI studies and for the construction of digital historical libraries. For example, a user-oriented knowledge base of Chinese ancient inscription rubbings has been developed with a focus on cultural communication and art appreciation, reflecting the importance of rubbing images for understanding and learning historical culture8. However, as heritage objects excavated in the modern era, oracle bones are extremely fragile and prone to damage9,10. This leads to the degradation of OBI images, which manifests as follows: (1) cracks or breakage on the surface, caused by material that has become brittle through natural aging; (2) erosion noise caused by environmental factors, such as wind erosion; and (3) damage caused by improper archeological excavation or preservation. In addition, improper rubbing processes can introduce extra noise into OBI images. Several examples are shown in Fig. 1. Such degradation significantly interferes with the display of OBIs in images and poses substantial challenges to vision-based OBI research11,12,13,14.

a Images of whole pieces of oracle bone. b–e Examples of OBI character images with complex degradation of various types and scales. f OBI image with mixed types of noise. g OBI image with varying levels of mixed erosion noise. h OBI image with synthetic Gaussian noise. i OBI image with synthetic salt-and-pepper noise.

Image restoration is a classic computer vision task that aims to recover clear image content from damaged or noisy images. Classic methods include BM3D, which denoises general images by grouping similar patches into 3D arrays and applying collaborative filtering through shrinkage in a 3D transform domain15, and MSPB, which uses wavelet thresholding to despeckle SAR images while preserving edges, enabling real-time remote sensing16. More recent methods adopt domain-specific strategies, such as NSST-based multivariate modeling for CT image denoising17 and DOST-domain guided filtering for real-time SAR restoration, which combines noise suppression with edge preservation18. With the development of deep learning, Convolutional Neural Networks (CNNs) have been applied to image restoration. BM3D-Net combines the traditional BM3D algorithm with CNNs, further enhancing the restoration effects of BM3D through deep learning, particularly for real photographic noise19 and medical image noise20. End-to-end image generation frameworks have also been explored: the U-Net architecture21 and its variants, such as UFormer22 and MICU23, use skip connections to fuse low- and high-level features and have proven effective for image restoration in complex noise environments. Diffusion models have also proven effective for cultural heritage restoration; for example, Hu et al.24 proposed GuidePaint, a mask-free, lossless diffusion model for ancient mural restoration that uses unsupervised learning and similarity-constrained sampling for high-fidelity reconstruction. Notably, some methods remove the need for paired clean reference images during training: Noise2Noise25 learns restoration from pairs of independently corrupted observations, while adversarial network-based methods train a generator network to produce restored images and a discriminator network to distinguish between restored and real images. A considerable number of works26,27,28,29 have applied the GAN architecture to image denoising in various areas and verified its superiority through extensive experiments.

In recent years, the application of deep neural networks to character images has expanded considerably, and recovering clear historical character images through image restoration has become a compelling research topic30,31. Inspired by general image restoration methods, current research on character image restoration mainly focuses on removing synthetic noise. Such methods simulate real-world conditions using synthetic noise generated from probability density functions32, such as Gaussian noise33. Representative studies have restored character images using denoising convolutional neural networks (DnCNN)34,35 and mitigated synthetic noise in historical document images using adversarial autoencoders36. CIDG generates training data for calligraphic character denoising by adding Gaussian and salt-and-pepper noise to clean images33. SinGAN introduced a unified unpaired approach to both denoising and super-resolution of document images37. Yalin et al.38 proposed IDCCW, a restoration method for ancient Chinese character images based on a writing standard model, which uses simulated ancient document noise for adversarial training.

DiffACR39 is a diffusion-based method for automated ancient Chinese character restoration that models the synthesis of eroded images as a form of cold diffusion and extracts prior masks from eroded images. AncientGlyphNet40 combines the Haar wavelet transform, attention-based modules, and a specialized dataset to robustly detect ancient Chinese characters in complex scenes. However, these methods often perform poorly in practical applications, because real-world image degradation is more complex than such synthetic noise41, as exemplified by the erosion noise in OBI images. Moreover, OBI image degradation involves mixed noise categories at different noise levels, which makes the task even more challenging. It is therefore necessary to develop image restoration methods specifically for OBI rubbing images to improve image quality and further assist vision-based OBI research.

To address these issues, we propose an end-to-end image restoration framework tailored for high-precision OBI restoration and built around a glyph extraction strategy. The method uses character glyphs42 as a primary source of supplementary information for OBI image restoration, in order to handle complex real-world noise types and multiple noise levels. Specifically, we introduce a parallel image generation task that captures glyph information and infuses it into the backbone restoration network, which maintains the consistency of OBIs during restoration. At the same time, we incorporate multi-scale feature extraction to better process the various noise levels and types that make up the mixed noise common in OBI images. The framework mainly consists of a glyph extractor and a deep feature extractor. The deep feature extractor comprises novel multi-scale dense blocks (MSdense), each of which uses densely connected multi-scale layers for local feature extraction. In addition, we propose glyph feature blocks (GFBs) to capture glyph information that is infused into the multi-scale feature extractor module, and the glyph extractor is introduced to constrain the learning of the GFBs. Overall, the proposed method enhances the visual clarity and structural integrity of ancient character images and provides a robust technical basis for broader cultural heritage restoration. Compared to previous work, our study introduces glyph extraction specifically to preserve essential glyph structures under complex, real-world degradation, a gap left by existing methods that target synthetic noise. This study is significant not only for improving image quality but also for supporting the digital preservation and in-depth visual analysis of one of the oldest writing systems in human history.
The main contributions of this work are as follows:

- We propose a novel end-to-end OBI image restoration method that effectively handles various types of real-world degradation in oracle bone images while preserving the inherent glyphs of the OBI characters.

- We develop a novel framework for ancient character image restoration based on the infusion of supplementary glyph features. Within this framework, GFBs extract glyph information, and MSdense blocks enhance the corresponding features at multiple scales.

- We compare our method with existing image restoration methods on datasets with different levels of restoration complexity. The results demonstrate the effectiveness of the proposed method, especially in complex OBI restoration scenarios.

The rest of this paper is organized as follows. Section “Methods” introduces the technical challenges of OBI image restoration and the benefits of preserving character glyphs, and then describes the proposed OBI restoration network and its key modules in detail. Section “Results” validates our method on multiple datasets, covering the experimental setup and the comparative results. Finally, section “Discussion” discusses real-world applications and future work and concludes the paper.

Methods

Intuitive discussion

OBI image degradation

OBIs, as historical heritage unearthed since the late 19th century, are prone to damage and erosion, which leads to the degradation of OBI images. Figure 1a shows examples of image degradation on whole pieces of oracle bone. Such degradation affects the visual characteristics of the images and makes it difficult to distinguish the details of the inscriptions, posing significant challenges for archeologists studying OBIs. Figure 1 also presents several representative examples from the OBI image dataset43: Fig. 1b contains cracking noise caused by improper excavation; Fig. 1c contains erosion noise; Fig. 1d shows broken edges due to natural aging; and Fig. 1e shows interference patterns caused by an improper rubbing procedure. Figure 1f, g show mixed noise types and mixed erosion noise with varying levels, respectively.

The texture and shape of these noises closely resemble those of OBI characters. Such real-world noise differs from synthetic noise generated by probability density functions, so OBI restoration methods built on fitting synthetic noise perform poorly. Figure 1h, i show examples of synthetic Gaussian and salt-and-pepper noise used to simulate real-world scenarios; unlike real degradation, these synthetic noises are readily distinguished from OBIs because of their disparate scale and texture. Given these observations, we believe it is necessary to develop image restoration methods specifically for OBI rubbings to improve their image quality.

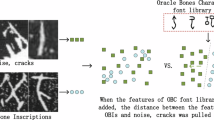

The glyph of characters

A glyph, formed by character strokes, is the primary semantic feature of logographic characters such as OBIs44. Rather than by other common features such as fonts, OBIs are predominantly distinguished by their glyphs45,46. Therefore, in OBI image restoration, glyph information should be properly preserved when reconstructing clean OBIs from complex degradation; otherwise, OBIs will become unrecognizable or be misrecognized. Glyph information captures the structural features of OBIs. For example, Fig. 2b shows several character glyphs, and Fig. 2c shows their corresponding clean OBI images. This motivates us to enhance OBI image restoration by incorporating glyph information into the restoration framework.

Objective Statement

The objective of our task is to reconstruct a clean OBI image \({I}_{OBI}\in {{\mathbb{R}}}^{H\times W}\) from a noisy observed image \({I}_{N}\in {{\mathbb{R}}}^{H\times W}\). We first clarify the OBI image restoration task by modeling real-world complex degradation. Conventional image restoration methods, such as DnCNN47, often presuppose a simple degradation model \({I}_{N}={I}_{OBI}+D\), where D follows a specific noise distribution. These techniques are designed to identify and remove the noise component D from IN. However, the degradation model applicable to real-world character images, such as degraded OBIs, is significantly more intricate and diverges from the synthetic noise model48. It includes degradation unrelated to OBI characters, such as cracking damage and interference patterns. Furthermore, the real-world degraded OBI image IN is characterized by a complex mixture of noise types and levels. Therefore, we model the degraded OBI image IN as follows:

\[{I}_{N}={I}_{OBI}+\sum _{i}f({D}_{i})\]

where Di denotes a noise category, f(⋅) is a noise-level function applied to Di, and the sum of the f(Di) terms represents the mixture of noise categories and levels.
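To make the mixed degradation model concrete, the following NumPy sketch synthesizes \(I_N = I_{OBI} + \sum_i f(D_i)\) on a toy image. It is only an illustration under stated assumptions: the two noise categories (Gaussian noise and bright disk-shaped blobs as a crude stand-in for erosion pits), the level functions f, and all helper names are hypothetical and do not model real OBI rubbings.

```python
import numpy as np

def gaussian_noise(shape, sigma, rng):
    """Hypothetical noise category D_i: zero-mean Gaussian noise with std sigma."""
    return rng.normal(0.0, sigma, size=shape)

def erosion_blobs(shape, n_blobs, radius, rng):
    """Hypothetical noise category D_i: bright disks, a crude stand-in for erosion pits."""
    h, w = shape
    yy, xx = np.mgrid[0:h, 0:w]
    out = np.zeros(shape)
    for _ in range(n_blobs):
        cy, cx = rng.integers(0, h), rng.integers(0, w)
        out[(yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2] = 255.0
    return out

def degrade(clean, components):
    """I_N = I_OBI + sum_i f(D_i), clipped to the 8-bit range [0, 255].
    components is a list of (f, D_i) pairs, where f sets the level of D_i."""
    noisy = clean.astype(np.float64)
    for level_fn, noise in components:
        noisy = noisy + level_fn(noise)
    return np.clip(noisy, 0.0, 255.0)

rng = np.random.default_rng(0)
clean = np.zeros((64, 64))          # toy dark background
clean[20:44, 30:34] = 255.0         # toy bright vertical "stroke"
components = [
    (lambda d: 0.5 * d, gaussian_noise(clean.shape, 20.0, rng)),  # weak Gaussian level
    (lambda d: d, erosion_blobs(clean.shape, 5, 3, rng)),         # full-strength blobs
]
noisy = degrade(clean, components)
```

Because each category carries its own level function, the same code can mix several noise types at different strengths, which is the property the model above is meant to capture.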

Overview of the proposed method

This research aims to solve the problem of visual degradation in OBI images. The primary objective is to accurately remove various types of noise from a degraded OBI image without altering its intrinsic glyph, as shown in Fig. 2a, c. However, owing to the complexity of noise in OBI images, existing methods do not achieve satisfactory restoration results. Figure 2d provides two examples of failed OBI restoration: one shows glyph damage due to excessive denoising, and the other shows residual noise due to insufficient noise removal. The failed areas are highlighted with red boxes. Based on these observations, our research on OBI image restoration focuses on removing different levels of noise from OBI images while preserving their glyphs.

We propose a novel framework for OBI rubbing image restoration, as illustrated in Fig. 3. The framework is informed by the critical role that glyphs play in conveying the semantic information of OBI characters. To this end, we develop a glyph extractor, comprising a glyph generator and a glyph discriminator, that learns and refines glyph features. Furthermore, to ensure that the framework can handle different noise levels, we introduce a multi-scale feature extractor module. It uses receptive fields of various sizes, enabling the framework to mitigate various types and degrees of noise and thereby improving image reconstruction. In addition, an input projector maps the input image into feature space, and an output projector maps the learned features back to a high-quality restored image. The following sections describe the overall architecture of the framework and explain each component in detail.

Input projector

The input projector extracts shallow features from the input OBI images, providing a stable foundation for early visual processing49. It employs a 3 × 3 convolutional layer followed by LeakyReLU, which is well suited to extracting spatial features. For the noisy OBI image IN, the following operation is performed:

\[{F}_{p}={\rm{InputProjector}}({I}_{N})\]

where InputProjector(⋅) denotes the operation performed by the input projector, and Fp denotes the extracted shallow features, with \({F}_{p}\in {{\mathbb{R}}}^{H\times W\times C}\). This operation maps the input OBI image into a higher-dimensional feature space.
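A minimal NumPy sketch of the input projector, assuming a single 3 × 3 convolution with zero "same" padding followed by LeakyReLU with a negative slope of 0.2. The slope, the channel width C = 16, and the helper names are assumptions, not values from the paper. In practice a framework layer such as PyTorch's `torch.nn.Conv2d` would be used; the explicit loop just makes the shape change from H × W to H × W × C visible.

```python
import numpy as np

def conv2d_same(x, weight, bias):
    """Naive 'same'-padded 3x3 convolution (cross-correlation, as in DL frameworks).
    x: (H, W, C_in); weight: (3, 3, C_in, C_out); bias: (C_out,)."""
    h, w, _ = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))       # zero padding keeps H x W
    out = np.zeros((h, w, weight.shape[3]))
    for i in range(3):
        for j in range(3):
            # (H, W, C_in) @ (C_in, C_out) -> (H, W, C_out)
            out += xp[i:i + h, j:j + w, :] @ weight[i, j]
    return out + bias

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def input_projector(image, weight, bias):
    """F_p = LeakyReLU(Conv3x3(I_N)); maps an (H, W) image to (H, W, C) features."""
    return leaky_relu(conv2d_same(image[..., None], weight, bias))

rng = np.random.default_rng(0)
C = 16                                     # hypothetical channel width
weight = rng.normal(0.0, 0.1, size=(3, 3, 1, C))
bias = np.zeros(C)
noisy_image = rng.random((32, 32))         # stands in for I_N
F_p = input_projector(noisy_image, weight, bias)
print(F_p.shape)  # (32, 32, 16)
```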

Glyph extractor

In section “Intuitive discussion”, we emphasized the pivotal role of glyphs in maintaining the semantics of OBI characters, which is essential for optimizing restoration performance. We therefore propose a glyph extractor specifically designed to extract glyph features and infuse them into the backbone network, ensuring that structural information is learned during the reconstruction of clean OBI character images. The glyph extractor reconstructs OBI glyphs through generative adversarial training and comprises two key components, a glyph generator and a glyph discriminator, which together guide the extraction of glyph features.

Glyph generator

The glyph generator, which serves as the backbone for reconstructing the glyph \({I}_{G}^{GT}\), is a U-shaped encoder-decoder architecture assembled from n glyph feature blocks (GFBs). Each GFB consists of several 3 × 3 convolutional layers, batch normalization, and ReLU activations, linked via a residual structure50, as shown in the green block in Fig. 3. The glyph features \({F}_{g}^{i}\) output by the ith GFB are defined as:

\[{F}_{g}^{i}={{\rm{GFB}}}_{i}({F}_{g}^{i-1})\]

When i = 1, \({F}_{g}^{i-1}\) is Fp.
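The following sketch illustrates one plausible reading of a GFB: a conv, batch-normalization, and ReLU stage followed by a conv and batch-normalization stage, joined to the input by an identity shortcut, chained so that \(F_g^i\) is computed from \(F_g^{i-1}\) starting at Fp. The layer count per block, the placement of the activations, and the depth n = 3 are assumptions. Batch normalization is reduced to per-channel normalization over a batch of one without learned parameters, and the down- and up-sampling of the U-shaped encoder-decoder is omitted.

```python
import numpy as np

def conv3x3(x, weight):
    """'Same'-padded 3x3 convolution; x: (H, W, C_in), weight: (3, 3, C_in, C_out)."""
    h, w, _ = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    return sum(xp[i:i + h, j:j + w] @ weight[i, j] for i in range(3) for j in range(3))

def batch_norm(x, eps=1e-5):
    """Per-channel normalization over spatial positions (batch of one, no learned affine)."""
    mean = x.mean(axis=(0, 1), keepdims=True)
    var = x.var(axis=(0, 1), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def relu(x):
    return np.maximum(x, 0.0)

def gfb(f_prev, w1, w2):
    """Hypothetical GFB: conv-BN-ReLU, conv-BN, then an identity shortcut and ReLU."""
    y = relu(batch_norm(conv3x3(f_prev, w1)))
    y = batch_norm(conv3x3(y, w2))
    return relu(y + f_prev)

rng = np.random.default_rng(1)
C = 8                                   # hypothetical channel width
f = rng.random((16, 16, C))             # stands in for F_p, the input when i = 1
features = []                           # F_g^1, ..., F_g^n
for _ in range(3):                      # n = 3 is a hypothetical depth
    w1 = rng.normal(0.0, 0.1, size=(3, 3, C, C))
    w2 = rng.normal(0.0, 0.1, size=(3, 3, C, C))
    f = gfb(f, w1, w2)
    features.append(f)
```

The identity shortcut keeps the channel count fixed across blocks, which is what lets each \(F_g^i\) be added back into the backbone at matching resolution.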

Glyph discriminator

The glyph discriminator aims to distinguish between the generated OBI glyph image \({I}_{G}^{R}\) and the real glyph image \({I}_{G}^{GT}\). The introduction of the glyph discriminator mitigates noise impact from OBI rubbings on glyph generation and enhances the model’s ability to maintain the structural consistency of OBI characters. The discriminator consists of convolutional layers, batch normalization, and ReLU blocks connected by a residual structure, and concludes with a fully connected layer FC.

Multi-scale feature extractor

After the shallow features Fp are obtained from the input projector, they are fed into the multi-scale feature extractor. Its objective is to enhance these features by integrating multi-scale representations of OBI images with the glyph features of OBI characters. To this end, we use a U-shaped encoder-decoder architecture equipped with multiple multi-scale dense blocks (MSdense).

Multi-scale dense blocks

As discussed in section “Intuitive discussion”, restoring real-world images requires a model that can process mixed noise types and various levels of degradation in an OBI rubbing image. In this module, we introduce MSdense blocks specifically designed to handle the complex noise in OBI rubbing images. MSdense blocks use dense connectivity51, in which each layer is directly connected to all previous layers; the blue part in Fig. 3 shows these dense connections. By taking all previous feature maps as input, such multi-scale blocks facilitate feature reuse and multi-scale fusion.
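Dense connectivity can be sketched as below: each layer receives the channel-wise concatenation of the block input and every earlier layer's output, so the channel count grows by a fixed "growth" per layer. The layers here are hypothetical 1 × 1 projections with ReLU standing in for the multi-scale layers, and the sizes are illustrative. Since \(F_{ms}^{i}\in \mathbb{R}^{H\times W\times C}\), the real block presumably fuses the concatenated features back to C channels; that step is not specified and is omitted.

```python
import numpy as np

def dense_block(x, layers):
    """Layer l sees concat(x, out_1, ..., out_{l-1}); the block returns all of them."""
    feats = [x]
    for layer in layers:
        out = layer(np.concatenate(feats, axis=-1))
        feats.append(out)
    return np.concatenate(feats, axis=-1)

def make_layer(c_in, growth, rng):
    """Hypothetical stand-in for a multi-scale layer: a 1x1 convolution
    (channel mixing via matmul) followed by ReLU, producing `growth` channels."""
    w = rng.normal(0.0, 0.1, size=(c_in, growth))
    return lambda t: np.maximum(t @ w, 0.0)

rng = np.random.default_rng(0)
c0, growth, n_layers = 8, 4, 3      # three layers per block, as in MSdense; sizes hypothetical
layers = [make_layer(c0 + l * growth, growth, rng) for l in range(n_layers)]
x = rng.random((16, 16, c0))
y = dense_block(x, layers)          # channels: 8 + 3 * 4 = 20
```

Because the block input is passed through unchanged as the first slice of the output, earlier features are reused directly by later layers rather than recomputed.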

Multi-scale layers

We propose multi-scale layers that construct receptive fields of different sizes to model complex degradations, including both short-range and long-range dependencies. Each MSdense block consists of three multi-scale layers, and concatenation is used to merge the outputs from different scales, namely small, medium, and large. Because directly increasing the kernel size of convolutional layers notably raises the computational burden52, we implement multi-scale dilated convolutional layers within the MSdense blocks. These layers incorporate grouped dilated convolutional layers, each with a distinct dilation rate ω. For the ith MSdense block with input feature map Fi, the resulting feature maps at various scales are defined as follows:

\[{F}_{S}^{i}={\rm{DilatedConv}}({F}_{i},{\omega }_{S}),\quad {F}_{M}^{i}={\rm{DilatedConv}}({F}_{i},{\omega }_{M}),\quad {F}_{L}^{i}={\rm{DilatedConv}}({F}_{i},{\omega }_{L})\]

where DilatedConv(⋅) processes the feature maps, ω ∈ {1, 2, 3}, namely ωS = 1, ωM = 2, and ωL = 3. When i = 1, Fi = Fp. Therefore, the feature map produced after the ith MSdense block is:

where MSdense( ⋅ ) represents the operation performed by the MSdense, and \({F}_{ms}^{i}\in {{\mathbb{R}}}^{H\times W\times C}\).
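The sketch below shows why dilation enlarges the receptive field without adding weights: a 3 × 3 kernel with dilation rate ω spans 3 + 2(ω − 1) pixels, i.e., 3, 5, and 7 for ωS = 1, ωM = 2, and ωL = 3. Feeding an impulse through the three branches and measuring the footprint of each response confirms this. The helper names are hypothetical and the all-ones kernels are for demonstration only; in PyTorch the same effect comes from the `dilation` argument of `torch.nn.Conv2d` (with `groups` for grouped convolutions).

```python
import numpy as np

def dilated_conv3x3(x, weight, dilation):
    """'Same'-padded 3x3 convolution with dilation rate omega.
    x: (H, W, C_in); weight: (3, 3, C_in, C_out)."""
    h, w, _ = x.shape
    d = dilation
    xp = np.pad(x, ((d, d), (d, d), (0, 0)))   # pad by d keeps the H x W size
    return sum(xp[i * d:i * d + h, j * d:j * d + w] @ weight[i, j]
               for i in range(3) for j in range(3))

def effective_kernel(k, dilation):
    """Spatial span of a dilated k x k kernel: k + (k - 1)(dilation - 1)."""
    return k + (k - 1) * (dilation - 1)

def multi_scale_layer(x, weights, dilations=(1, 2, 3)):
    """Concatenate the small-, medium-, and large-scale responses along channels."""
    return np.concatenate([dilated_conv3x3(x, wt, d) for wt, d in zip(weights, dilations)],
                          axis=-1)

x = np.zeros((15, 15, 1))
x[7, 7, 0] = 1.0                          # single-pixel impulse
ones = np.ones((3, 3, 1, 1))              # demonstration kernel, not learned weights
y = multi_scale_layer(x, [ones, ones, ones])
for c, d in enumerate((1, 2, 3)):
    rows = np.nonzero(y[:, :, c].any(axis=1))[0]
    print(d, effective_kernel(3, d), rows.max() - rows.min() + 1)
```

All three branches use nine weights each, yet the largest covers a 7 × 7 window, which is the computational argument for dilation over larger kernels.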

Glyph information infusion

Following each MSdense block, the glyph features extracted by the GFBs are infused into Fms as a supplement to character feature learning, aiming to strengthen the structural consistency of the characters. The glyph information infusion performed after the ith MSdense block is represented as:

where Fi serves as the input to MSdensei+1. In addition, to facilitate effective information transfer across different depths and enhance the restoration of spatial detail in the OBI image, skip connections are employed between MSdense blocks in the encoder and decoder. The process by which the multi-scale feature extractor module generates the intermediate depth features Fi is described as follows:

where MSdensei(⋅) denotes the processing by the ith MSdense block, with i ∈ {0, 1, 2, …, T}.

Output projector

Following multi-scale feature extraction, the derived features \({F}_{ms}^{n}\) are passed to the output projector to reconstruct the OBI characters. The resulting output, \({I}_{OBI}^{R}\in {{\mathbb{R}}}^{H\times W\times {C}_{i}}\), is formulated as follows:

\[{I}_{OBI}^{R}={\rm{OutputProjector}}({F}_{ms}^{n})\]

where OutputProjector(⋅) comprises a sequence of three 3 × 3 convolutional layers designed for precise pixel-level image reconstruction that also promote rapid convergence of the model.

Loss functions

Our framework uses four loss functions to constrain the restoration of OBI rubbing images: OBI pixel loss, OBI feature loss, glyph pixel loss, and glyph discrimination loss. The OBI pixel loss \({{\mathcal{L}}}_{OBI}^{P}\) constrains pixel-level image reconstruction as follows:

where H and W are the height and width of the OBI rubbing image, respectively, with h ∈ {1, …, H} and w ∈ {1, …, W}; \({I}_{OBI}^{GT}\) is the ground-truth noise-free OBI image, and \({I}_{OBI}^{R}\) is the restored OBI image.
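The text describes \({{\mathcal{L}}}_{OBI}^{P}\) as a per-pixel comparison averaged over the H × W grid but does not name the norm. A hedged sketch can write it with either an absolute (L1) or a squared (L2) error; making L1 the default below is an assumption. The same function applies to the glyph pixel loss by passing glyph images instead.

```python
import numpy as np

def pixel_loss(restored, target, norm="l1"):
    """Average per-pixel error over the H x W grid; the norm choice is an assumption."""
    diff = restored.astype(np.float64) - target.astype(np.float64)
    if norm == "l1":
        return float(np.abs(diff).mean())
    if norm == "l2":
        return float((diff ** 2).mean())
    raise ValueError(f"unknown norm: {norm!r}")

restored = np.full((4, 4), 3.0)
target = np.full((4, 4), 1.0)
print(pixel_loss(restored, target), pixel_loss(restored, target, norm="l2"))  # 2.0 4.0
```

L1 penalizes large residuals less harshly than L2, which is often preferred when a few pixels (e.g., residual noise clusters) would otherwise dominate the gradient.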

To complement the pixel-based loss, we also introduce a feature-based loss, \({{\mathcal{L}}}_{OBI}^{F}\), to refine the training of our model. This loss compares feature-level information and captures global discrepancies. We use a VGG16 network VGG(⋅) pre-trained on the ImageNet dataset to extract OBI character features. The perceptual loss for \({I}_{OBI}^{R}\) is defined as:

Additionally, we introduce a glyph pixel loss \({{\mathcal{L}}}_{G}^{P}\) and a glyph discrimination loss \({{\mathcal{L}}}_{G}^{D}\) to enhance the model’s ability to learn glyph features and preserve the structural consistency of the restored OBI images. The ground-truth glyph \({I}_{G}^{GT}\) of an OBI character image is obtained through a skeletonization method9. The glyph pixel loss \({{\mathcal{L}}}_{G}^{P}\) is calculated by comparing the real glyph \({I}_{G}^{GT}\) with the output glyph image \({I}_{G}^{R}\), and is defined as:
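The specific skeletonization method used to obtain glyphs is not detailed here. As an illustrative substitute, the sketch below implements the classic Zhang-Suen thinning algorithm, which iteratively removes boundary pixels in two sub-iterations until a one-pixel-wide skeleton remains. It assumes a binarized glyph with strokes as 1 and background as 0; binarization of the rubbings is not shown. Libraries such as scikit-image also provide `skimage.morphology.skeletonize` for the same purpose.

```python
import numpy as np

def zhang_suen_thinning(binary):
    """Zhang-Suen thinning of a binary image (1 = stroke, 0 = background)."""
    img = np.pad((binary > 0).astype(np.uint8), 1)   # 1-pixel background border
    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            to_delete = []
            for r in range(1, img.shape[0] - 1):
                for c in range(1, img.shape[1] - 1):
                    if img[r, c] != 1:
                        continue
                    # Neighbours P2..P9, clockwise starting from north.
                    p = [img[r - 1, c], img[r - 1, c + 1], img[r, c + 1], img[r + 1, c + 1],
                         img[r + 1, c], img[r + 1, c - 1], img[r, c - 1], img[r - 1, c - 1]]
                    b = sum(p)                                   # stroke neighbours
                    a = sum(p[k] == 0 and p[(k + 1) % 8] == 1    # 0 -> 1 transitions
                            for k in range(8))
                    if not (2 <= b <= 6 and a == 1):
                        continue
                    p2, p4, p6, p8 = p[0], p[2], p[4], p[6]
                    if step == 0 and p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0:
                        to_delete.append((r, c))     # south-east boundary pass
                    elif step == 1 and p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0:
                        to_delete.append((r, c))     # north-west boundary pass
            for r, c in to_delete:
                img[r, c] = 0
            changed = changed or bool(to_delete)
    return img[1:-1, 1:-1]

bar = np.zeros((9, 24), dtype=np.uint8)
bar[2:7, 2:22] = 1                 # a toy 5-pixel-thick horizontal stroke
skel = zhang_suen_thinning(bar)
```

Thinning keeps only the stroke topology, so the resulting glyph target is insensitive to stroke thickness variations introduced by the rubbing process.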

where H and W are the height and width of the glyph image, respectively, with h ∈ {1, …, H} and w ∈ {1, …, W}. The loss function for the glyph discriminator \({{\mathcal{L}}}_{G}^{D}\) adopts a GAN loss53, expressed as:

where the function GD( ⋅ ) represents the prediction of the glyph discriminator. As a result, the overall loss function for the proposed framework, denoted as \({\mathcal{L}}\), is represented as:
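The exact GAN loss formulation and the weights in the overall loss are not given in this section. The sketch below assumes the standard binary cross-entropy formulation on discriminator probabilities (with the common non-saturating generator term) and combines the four losses as a weighted sum; the weight values are hypothetical placeholders, not the paper's settings.

```python
import numpy as np

EPS = 1e-12  # guards against log(0)

def discriminator_loss(d_real, d_fake):
    """BCE loss for the glyph discriminator: real glyphs -> 1, generated glyphs -> 0.
    d_real and d_fake are discriminator probabilities in [0, 1]."""
    d_real, d_fake = np.asarray(d_real, float), np.asarray(d_fake, float)
    return -np.mean(np.log(d_real + EPS) + np.log(1.0 - d_fake + EPS))

def generator_adv_loss(d_fake):
    """Non-saturating adversarial term for the glyph generator: -log GD(I_G^R)."""
    return -np.mean(np.log(np.asarray(d_fake, float) + EPS))

def total_loss(l_obi_p, l_obi_f, l_g_p, l_g_adv, weights=(1.0, 0.1, 1.0, 0.01)):
    """Weighted sum of the four loss terms; the default weights are hypothetical."""
    terms = (l_obi_p, l_obi_f, l_g_p, l_g_adv)
    return sum(w * t for w, t in zip(weights, terms))

print(round(float(discriminator_loss([0.5], [0.5])), 4))  # 1.3863 (= 2 ln 2)
```

A discriminator that outputs 0.5 for everything yields 2 ln 2, the usual equilibrium value, which is a quick sanity check when monitoring training.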

Results

In this section, we evaluate the proposed OBI restoration method through various experiments. We first detail the experimental setup, including datasets with different characteristics that enable a comprehensive comparison of our method against current state-of-the-art techniques. We then outline the comparative methods and the metrics used to assess the restoration results. Quantitative and qualitative evaluations are then presented in sequence. Additionally, we demonstrate the practical utility of the proposed method in real-world applications.

Datasets

To comprehensively evaluate the proposed method on real-world ancient character images, we constructed our own degraded OBI image dataset containing complex real-world degradation. The degraded OBI images were sourced from historical books on Shang and Zhou Bronze Inscription documents43, in which the OBI images were obtained from ink rubbings made with traditional techniques from excavated oracle bone fragments. We employed object detection techniques54 to automatically identify and segment characters from entire oracle bone images, thereby collecting degraded individual OBI character images. Then, drawing on archeological expertise to classify and denoise these images, we generated three sub-datasets of “noisy-clean” image pairs for model training and testing. These datasets are defined as follows:

- DDenoise: A benchmark dataset for OBI character image denoising, created by removing noise from raw OBI character images. It contains 900 OBI images in total; 90% are used for training and the rest for testing.

- DRestore: A benchmark dataset for OBI character image restoration, created by removing noise from raw images and repairing damaged glyphs. It contains 568 OBI images in total, with the same training/testing split as DDenoise.

- DSynthetic: A dataset for synthetic denoising of OBI character images, created by adding synthetic noise to the clean OBI images of DDenoise and DRestore.

These sub-datasets were constructed to enable a comparative analysis of our method against existing methods on OBI character images with real noise; examples are presented in Fig. 4. Additionally, to enable a more thorough evaluation, synthetic noise was added to clean OBI images to form DSynthetic. Specifically, we emulated a denoising scenario by adding random Gaussian noise with noise level σ ∈ [10, 50] to clean character images, as depicted in Fig. 4.
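A sketch of the kind of synthetic corruption used for DSynthetic, assuming σ is the standard deviation of zero-mean Gaussian noise on the 0–255 intensity scale and is drawn uniformly from [10, 50] per image (both interpretations are assumptions), followed by rounding and clipping back to 8-bit.

```python
import numpy as np

def add_gaussian_noise(clean, rng, sigma_range=(10.0, 50.0)):
    """Add zero-mean Gaussian noise whose std sigma is drawn uniformly from sigma_range
    (reading sigma on the 0-255 scale is an assumption), then round and clip to uint8."""
    sigma = rng.uniform(*sigma_range)
    noisy = clean.astype(np.float64) + rng.normal(0.0, sigma, size=clean.shape)
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8), sigma

rng = np.random.default_rng(0)
clean = np.full((32, 32), 200, dtype=np.uint8)   # toy clean image
noisy, sigma = add_gaussian_noise(clean, rng)
```

Sampling σ per image, rather than fixing it, exposes a model to a range of noise levels, which matches the "[10, 50]" range stated above.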

Evaluation metrics

In image processing, the structural similarity index (SSIM) and the peak signal-to-noise ratio (PSNR)55 are prevalent metrics for evaluating image quality, particularly in image restoration. PSNR is widely used to gauge the quality of restored images and videos. It quantifies the ratio between the maximum possible power of a signal and the power of the corrupting noise that affects the fidelity of its representation. For two images x and y, PSNR is defined as follows:

\[{\rm{PSNR}}(x,y)=10\,{\log }_{10}\left(\frac{{{\rm{Max}}}_{I}^{2}}{{\rm{MSE}}(x,y)}\right)\]

where MaxI is the maximum possible pixel value of the image (e.g., 255 for 8-bit images), and MSE(⋅) denotes the mean squared error between two images. In our experiments, x is the ground-truth clean OBI image and y is the restored OBI image.
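PSNR is straightforward to compute from its definition; a NumPy sketch follows, with the ground truth passed as x and the restoration as y (function name hypothetical). An offset of one intensity level everywhere gives MSE = 1 and therefore PSNR = 10 log10(255²) ≈ 48.13 dB for 8-bit images.

```python
import numpy as np

def psnr(x, y, max_i=255.0):
    """PSNR(x, y) = 10 * log10(max_i**2 / MSE(x, y)); x: ground truth, y: restoration."""
    mse = np.mean((x.astype(np.float64) - y.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")   # identical images
    return 10.0 * np.log10(max_i ** 2 / mse)

gt = np.zeros((8, 8), dtype=np.uint8)
restored = gt + 1                     # every pixel off by one intensity level
print(round(psnr(gt, restored), 2))   # 48.13
```

Casting to float64 before subtracting avoids the silent wrap-around that uint8 arithmetic would cause.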

SSIM is another metric for evaluating the similarity between two images. It is designed to assess image quality differently from PSNR, by focusing on changes in perceived structural information rather than mere differences in pixel intensity. SSIM ranges from −1 to 1, with 1 indicating perfect similarity. Given two images x and y, SSIM is defined as:

\[{\rm{SSIM}}(x,y)=\frac{(2{\mu }_{x}{\mu }_{y}+{c}_{1})(2{\sigma }_{xy}+{c}_{2})}{({\mu }_{x}^{2}+{\mu }_{y}^{2}+{c}_{1})({\sigma }_{x}^{2}+{\sigma }_{y}^{2}+{c}_{2})}\]

where μx and μy are the means of x and y, \({\sigma }_{x}^{2}\) and \({\sigma }_{y}^{2}\) are their variances, σxy is their covariance, and c1 and c2 are constants that stabilize the division when the denominator is small. As with PSNR, x and y refer to the ground-truth and restored images in our setting. Higher PSNR and SSIM values indicate that the restored image is closer to the ground truth.
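The SSIM formula can be evaluated directly on whole images, as in the sketch below, using the common constants c1 = (0.01 L)² and c2 = (0.03 L)² with L = 255 (an assumption, since the constants are not stated). Standard implementations, such as `skimage.metrics.structural_similarity` in scikit-image, instead compute SSIM over local windows and average the results, so their values will generally differ from this global version.

```python
import numpy as np

def ssim_global(x, y, max_i=255.0, k1=0.01, k2=0.03):
    """SSIM evaluated once over the whole image (no sliding window).
    c1 = (k1 * L)^2 and c2 = (k2 * L)^2 follow the usual convention."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1, c2 = (k1 * max_i) ** 2, (k2 * max_i) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den

img = np.random.default_rng(0).integers(0, 256, size=(32, 32))
print(ssim_global(img, img))         # identical images give 1
print(ssim_global(img, 255 - img))   # an inverted image gives a negative value
```

The inverted-image case shows why SSIM can fall below zero: the covariance term turns negative when structure is reversed even though intensities stay in range.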

Baseline methods

We selected state-of-the-art character image restoration solutions as comparative methods, including image denoising for Chinese character writing (IDCCW)38, DnCNN47, the deep residual network for historical image denoising (DeepRes)56, InvDN57, the self-supervised denoising approach Noise2Same58, and the generative denoising method SinGAN37. We also include classic denoising algorithms widely used for historical character image restoration, namely BM3D59, Wavelet filtering, and Bilateral filtering60, as well as the diffusion-based method DiffACR39, representative of currently popular denoising approaches. All evaluated methods were either specifically designed for (ancient) character image restoration or are commonly used in general denoising tasks. To ensure a fair comparison, all methods were given identical training conditions and datasets.

Quantitative evaluation

Table 1 presents a quantitative comparison of our method against other image restoration methods on three OBI datasets using two core metrics, PSNR and SSIM. We also report the metrics for the raw OBI images to show how much each method improves on the raw input. The results indicate that the proposed method surpasses all comparative methods on the complex noise datasets DDenoise and DRestore, and also performs well on the synthetic noise dataset DSynthetic. On DDenoise and DRestore, classic model-based restoration methods such as Wavelet filtering and BM3D do not restore images effectively, showing only marginal improvements in PSNR and SSIM over the raw images. This is because real-world noise is often more complex and cannot easily be modeled by probabilistic functions. Deep learning-based approaches achieve competitive results, with SinGAN and InvDN achieving more substantial improvements in OBI restoration. Notably, the strong performance of our method on DDenoise and DRestore validates its efficacy in restoring real-world OBI images with complex degradation.

Most restoration methods perform better on DSynthetic than on the datasets with more complex noise, suggesting that synthetic noise poses less of a challenge than real-world degradation. Notably, SinGAN and our proposed method achieved the highest SSIM and PSNR scores, respectively. Deep learning-based approaches such as DeepRes, IDCCW, and InvDN also demonstrated competitive performance. These results on DSynthetic highlight the effectiveness of our method across diverse OBI restoration contexts, indicating broader applicability. The varied performance of the methods on synthetic versus complex noise datasets also underscores the difficulty of restoring real-world degraded OBI images.

To further assess the robustness and generalization ability of the proposed method, we conducted 5-fold cross-validation on each of the constructed OBI datasets: DDenoise, DRestore, and DSynthetic. For each dataset, the samples were randomly partitioned into five equal subsets. In each fold, four subsets (80% of the data) were used for training and the remaining subset (20%) for testing. This process was repeated five times so that each sample was tested exactly once. Table 2 summarizes the PSNR and SSIM results obtained across the five folds for each dataset. The proposed method achieves consistent performance across folds, indicating that it is stable and generalizes well across different data partitions, which further supports its effectiveness for real-world OBI image restoration.
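The fold construction described above can be sketched in a few lines of standard-library Python. This is a hypothetical helper, not the authors' code; it assumes samples are identified by integer indices and shuffled once with a fixed seed. When the dataset size is not divisible by five, fold sizes differ by at most one.

```python
import random
from typing import List, Tuple


def k_fold_splits(n_samples: int, k: int = 5,
                  seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Shuffle indices once, cut them into k near-equal folds, and return
    one (train_indices, test_indices) pair per fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in fold_sizes:
        folds.append(indices[start:start + size])
        start += size
    splits = []
    for i in range(k):
        test = folds[i]
        # Training set: every sample that is not in the held-out fold.
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits


if __name__ == "__main__":
    for fold, (train, test) in enumerate(k_fold_splits(100)):
        print(f"fold {fold}: {len(train)} train / {len(test)} test")
```

Because every index lands in exactly one test fold, averaging the per-fold PSNR and SSIM gives a summary over the whole dataset.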

Qualitative evaluation

Fig. 5 presents a qualitative comparison of the methods, with the first four images drawn from DDenoise and the next three from DRestore. The restoration results of the proposed method are visually closer to the ground truth, demonstrating the advantage of the glyph extraction-based approach in preserving OBI characters while removing noise. Model-based restoration methods fail to remove real noise in OBI images effectively and only mitigate small-scale noise; DeepRes and Noise2Same behave similarly. This typically occurs because these methods do not account for degradations beyond their preset restoration scenarios, so large noise clusters in OBI images are neither identified nor removed. Some deep learning-based methods, such as InvDN, produce cleaner images, but they sometimes damage glyphs and leave OBI characters incomplete, which seriously hampers the subsequent preservation and recognition of OBIs. These models also sometimes fail to remove parts of mixed noise, because noise at different levels appears at different spatial sizes, which current methods struggle to handle.

Fig. 6 presents a visual comparison of different methods on the DSynthetic dataset. Most methods are effective against synthetic noise; IDCCW, InvDN, SinGAN, and our method in particular all achieve competitive noise reduction. Taken together, these comparisons show that our method handles complex degradation while preserving the inherent glyphs of OBI character images, and that it produces stable results across various OBI image restoration scenarios.

To evaluate restoration quality at the structural level, we further conducted an intensity profile analysis along a randomly selected line crossing key structural regions of the oracle bone character images. The results are shown in Fig. 7. In each comparison, the left subfigure presents the restored patch and the right subfigure shows the corresponding intensity profile curves. The ground truth is plotted as a green solid line, the raw noisy image as a gray dashed line, and the results of the different methods as solid lines in distinct colors, as indicated in the legend.

In each profile plot, the x-axis represents the pixel position along the sampling line and the y-axis represents the pixel intensity; the legend at the top left gives the color coding for each method.
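An intensity profile of this kind can be extracted by sampling the image at evenly spaced points along the chosen line. The NumPy sketch below assumes 2D grayscale arrays, (row, col) endpoints that lie inside the image, and bilinear interpolation. The helper names and the mean-absolute-error summary are illustrative additions, not the procedure used to draw Fig. 7; scikit-image offers a comparable `skimage.measure.profile_line` function.

```python
import numpy as np


def intensity_profile(image: np.ndarray, start: tuple, end: tuple,
                      num: int = 100) -> np.ndarray:
    """Sample `num` intensities along the segment start -> end, given as
    (row, col) pairs, using bilinear interpolation."""
    img = image.astype(np.float64)
    rows = np.linspace(start[0], end[0], num)
    cols = np.linspace(start[1], end[1], num)
    # Integer corners of the interpolation cell, clipped to the image.
    r0 = np.clip(np.floor(rows).astype(int), 0, img.shape[0] - 1)
    c0 = np.clip(np.floor(cols).astype(int), 0, img.shape[1] - 1)
    r1 = np.clip(r0 + 1, 0, img.shape[0] - 1)
    c1 = np.clip(c0 + 1, 0, img.shape[1] - 1)
    dr, dc = rows - r0, cols - c0  # fractional offsets inside the cell
    top = img[r0, c0] * (1 - dc) + img[r0, c1] * dc
    bottom = img[r1, c0] * (1 - dc) + img[r1, c1] * dc
    return top * (1 - dr) + bottom * dr


def profile_mae(reference: np.ndarray, restored: np.ndarray,
                start: tuple, end: tuple, num: int = 100) -> float:
    """Mean absolute gap between two profiles sampled along the same line."""
    ref = intensity_profile(reference, start, end, num)
    out = intensity_profile(restored, start, end, num)
    return float(np.mean(np.abs(ref - out)))
```

A lower `profile_mae` between a restored image and the ground truth corresponds to a curve that tracks the green ground-truth line more closely.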

The intensity profiles reveal how each method reconstructs the original structural transitions. Ideally, the restored curve should closely follow the ground truth curve while smoothing out the high-frequency noise present in the raw image. Fig. 7 shows that conventional methods such as BM3D, Wavelet, and Bilateral Filtering tend to oversmooth intensity variations, losing fine structural details. Deep learning-based methods such as IDCCW, InvDN, and SinGAN preserve edges better but still exhibit slight deviations or artifacts. Our proposed method (shown in red) follows the ground truth profile most closely, especially in maintaining sharp transitions at character boundaries and in suppressing background noise. This close alignment indicates that our method balances noise removal with structure preservation, consistent with the quantitative and visual results above, and further supports its robustness in real-world oracle bone inscription restoration tasks.

Model complexity analysis

To evaluate the computational efficiency of the proposed method, we compared its model complexity against several benchmark and representative approaches. Table 3 reports the number of parameters, the total floating-point operations (FLOPs), and the average inference time per image. Wavelet Filtering60, Bilateral Filtering60, and BM3D59 are traditional methods with no learned parameters and negligible FLOPs, so these counts are not meaningful for them; the remaining methods, including ours, are deep learning-based and are therefore suitable for model complexity comparison.
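Parameter and FLOP counts like those in Table 3 are usually obtained by summing per-layer costs. The sketch below shows the arithmetic for a single 2D convolution, given its output size, together with a simple wall-clock timing loop. The function names are hypothetical and not taken from the paper's code. Tools differ in whether they report multiply-accumulates (MACs) or FLOPs (often taken as 2 × MACs), so counts are only comparable when computed with the same convention; GPU timings additionally require synchronizing the device before reading the clock.

```python
import time
from typing import Callable, Tuple


def conv2d_cost(c_in: int, c_out: int, k: int, h_out: int, w_out: int,
                bias: bool = True) -> Tuple[int, int]:
    """Return (parameters, MACs) of a k x k convolution that maps c_in
    channels to a c_out x h_out x w_out output."""
    params = k * k * c_in * c_out + (c_out if bias else 0)
    # One k*k*c_in dot product per output element.
    macs = k * k * c_in * c_out * h_out * w_out
    return params, macs


def average_inference_time(run: Callable[[], object], warmup: int = 3,
                           repeats: int = 20) -> float:
    """Mean wall-clock seconds per call, measured after a few warm-up calls."""
    for _ in range(warmup):
        run()
    start = time.perf_counter()
    for _ in range(repeats):
        run()
    return (time.perf_counter() - start) / repeats
```

For example, a 3 × 3 convolution from 3 to 64 channels with a 256 × 256 output gives `conv2d_cost(3, 64, 3, 256, 256) == (1792, 113246208)`: 1,792 parameters and about 113 million MACs.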

Table 3 shows that, although our method has slightly more parameters and FLOPs than some of the compared deep learning methods, its inference speed remains highly competitive. Our model is also considerably lighter and faster than generative models such as SinGAN37 and DiffACR39. Combined with the results in Table 1 and Fig. 5, where our method achieves the best overall performance among all compared methods, this indicates that our framework offers a favorable trade-off between restoration performance and computational cost, making it practical for real-world applications.

Ablation study

Validation of the glyph extractor

One of the main contributions of our study is the infusion of glyph information into the backbone network via a glyph extractor, with the aim of improving the restoration of OBI character images. To demonstrate how the glyph extractor and its GFB blocks improve restoration, we conducted an ablation experiment on DDenoise. The baseline is the backbone network without glyph information. Backbone+GlyphG infuses glyph information via a glyph generator, and Backbone+GlyphG+GlyphD uses both a glyph generator and a discriminator to infuse information into the backbone network. The results in Table 4 show that infusing glyph information substantially improves OBI image restoration, and that combining the generator and discriminator, as proposed in this study, yields the best results. We also visualize the generated glyphs in Fig. 8, which contains four sets, each showing the original image (left), the restored image (middle), and the generated glyph (right). These examples show that the OBI images are restored and that their glyphs are effectively extracted.
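The three ablation variants differ in which supervision signals reach the network. As a rough illustration only, the sketch below shows how a training objective could grow from a reconstruction term, to an added glyph term, to an added adversarial term from a glyph discriminator. The L1 losses, the non-saturating GAN term, and the weights `w_glyph` and `w_adv` are hypothetical choices, not the losses used in this paper. The sketch also does not model how the GFB blocks fuse glyph features into the backbone.

```python
import numpy as np


def l1(a: np.ndarray, b: np.ndarray) -> float:
    """Mean absolute error."""
    return float(np.mean(np.abs(np.asarray(a) - np.asarray(b))))


def generator_objective(restored, clean, glyph_pred=None, glyph_target=None,
                        disc_prob=None, w_glyph=1.0, w_adv=0.01, eps=1e-8):
    """Hypothetical objective covering the three ablation variants:
    - baseline:               reconstruction term only
    - Backbone+GlyphG:        + glyph reconstruction term
    - Backbone+GlyphG+GlyphD: + adversarial term from a glyph discriminator
    `disc_prob` holds the discriminator's probabilities that the generated
    glyphs are real."""
    loss = l1(restored, clean)
    if glyph_pred is not None and glyph_target is not None:
        loss += w_glyph * l1(glyph_pred, glyph_target)
    if disc_prob is not None:
        # Non-saturating generator loss: -E[log D(G(x))].
        loss += w_adv * float(-np.mean(np.log(np.asarray(disc_prob) + eps)))
    return loss
```

In this toy form, the adversarial term rewards generated glyphs that the discriminator judges realistic, which is one way a discriminator can add information beyond pixel-wise glyph supervision.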

Validation of the multi-scale feature extractor

As another main contribution of this research, we introduced the multi-scale feature extractor and its MSdense blocks, whose effectiveness we validate on DDenoise. For the baseline backbone, we replaced the MSdense blocks with residually connected CNN layers; Backbone+MSdense denotes the proposed method. As an additional comparison group, Backbone+MSres uses residually connected multi-scale layers. As shown in Table 5, the MSdense blocks outperform the other configurations, demonstrating the effectiveness of the proposed multi-scale feature extractor for OBI image restoration.
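The internal design of MSdense is not detailed in this section. As a hedged illustration of the general idea of a densely connected multi-scale block, the NumPy sketch below assumes 3 × 3 convolutions with increasing dilation rates, dense connections in which each layer sees all earlier outputs, a 1 × 1 fusion layer, and a residual connection. A 3 × 3 kernel with dilation d spans a (2d + 1) × (2d + 1) neighborhood, so later layers respond to larger noise structures. All names, dilation rates, and channel counts are hypothetical, and the weights are random and untrained.

```python
import numpy as np


def dilated_conv3x3(x: np.ndarray, w: np.ndarray, d: int) -> np.ndarray:
    """Zero-padded ('same') 3x3 convolution with dilation d.
    x: (C_in, H, W); w: (C_out, C_in, 3, 3); returns (C_out, H, W)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (d, d), (d, d)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            # Input shifted by ((i - 1) * d, (j - 1) * d) pixels.
            shifted = xp[:, i * d:i * d + h, j * d:j * d + wd]
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], shifted)
    return out


def init_block_weights(channels, growth, dilations, rng, scale=0.1):
    """Random weights: layer k sees channels + k * growth input channels."""
    weights = [scale * rng.normal(size=(growth, channels + k * growth, 3, 3))
               for k in range(len(dilations))]
    fuse = scale * rng.normal(size=(channels, channels + len(dilations) * growth))
    return weights, fuse


def multiscale_dense_block(x, weights, fuse, dilations=(1, 2, 3)):
    """Dense connectivity over layers with growing dilation, then a 1x1
    fusion back to the input width and a residual connection."""
    features = [x]
    for w, d in zip(weights, dilations):
        inp = np.concatenate(features, axis=0)
        features.append(np.maximum(dilated_conv3x3(inp, w, d), 0.0))  # ReLU
    stacked = np.concatenate(features, axis=0)
    return x + np.einsum("oc,chw->ohw", fuse, stacked)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(4, 16, 16))
    weights, fuse = init_block_weights(4, 3, (1, 2, 3), rng)
    print(multiscale_dense_block(x, weights, fuse).shape)
```

Replacing the dense concatenation with a plain residual sum between layers would give a residually linked variant, loosely comparable in spirit to the Backbone+MSres group.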

User study

Our qualitative and quantitative analyses evaluated the performance of our method against existing techniques on various OBI image restoration datasets. To validate the practical applicability of the proposed method for OBI research more comprehensively, we integrated it into an established ancient script research website and made it available to users at https://www.jidagwz.com/guwenzipc/cv. Figure 9 shows the interface of the website's image restoration feature. For this experiment, experts in ancient scripts tested the feature and rated both raw and restored OBI character images along three dimensions: clarity, integrity, and freedom from interference. Each dimension is rated on a scale from 1 to 10, with higher scores indicating better image quality. Five participants rated a total of 126 images; the scores are shown in Fig. 10. The restored images scored markedly higher than the raw images in all three dimensions, especially in clarity and freedom from interference, suggesting that our method can also help human readers recognize OBI characters.

Theoretical and practical implications

In this section, we compared our method against existing methods across multiple datasets and evaluated it in real-world OBI image restoration scenarios, demonstrating its superiority and feasibility. Theoretically, our method contributes to understanding how deep learning can be used effectively to interpret and restore severely degraded historical texts. Its key difference from earlier methods is the use of glyph extraction, which maintains glyph integrity under diverse noise conditions where earlier methods often fail. By integrating glyph extraction with state-of-the-art neural network architectures, our method not only improves the visual quality of ancient inscriptions but also supports more accurate extraction of the information they contain, which is crucial for the study and management of ancient inscriptions. From an application perspective, our research contributes to the broader field of cultural heritage preservation. By improving the readability of ancient inscriptions, our method can help historians, linguists, and other scholars work with inscriptions that were previously difficult to decipher, deepening the understanding of historical contexts and cultural nuances that may have been lost for thousands of years. Moreover, the enhanced images produced by our method are valuable for digital archives, which play a crucial role in preserving endangered scripts for future generations.

Discussion

In this study, we first introduced the significance of oracle bone inscription (OBI) image restoration and discussed the challenges that various types of degradation pose to existing restoration techniques. Motivated by the observation that glyph information plays a critical role in character image restoration, we proposed an end-to-end image restoration framework centered on glyph extraction. This framework effectively handles complex degradations while preserving the structural integrity of OBI glyphs, substantially improving on previous methods that often failed to maintain glyph consistency under diverse noise conditions. We also incorporated a multi-scale feature extraction module to make degradation handling more robust across different noise levels. Extensive comparisons with state-of-the-art restoration techniques on multiple datasets, including quantitative and qualitative evaluations, model complexity analysis, and ablation studies, demonstrate the superior performance of our method. Furthermore, a user study on an ancient script research website into which the method was integrated supports its practical applicability in real-world settings.

This study has several potential real-world applications. First, it supports the digital preservation of ancient scripts, specifically OBIs, by producing clean, structurally intact images suitable for archiving, education, and research, including use in museum digital display systems and online cultural heritage platforms. Second, the proposed framework could be adapted to other ancient scripts, such as bronze inscriptions or Dunhuang manuscripts. Third, given its strong performance on real-world noise, the method may serve as a technical foundation for interactive virtual restoration tools in heritage studies. In future work, we aim to extend this research to unsupervised or weakly supervised settings to reduce the dependency on paired training data. We also plan to integrate multimodal historical context, such as textual annotations and character components, to further improve restoration fidelity. In addition, we will work to improve the scalability of the proposed method by applying it to a wider range of ancient script images and to other cultural heritage domains, such as bronze inscriptions, cuneiform script, and mural restoration.

Data availability

The datasets used during the current study are available from the corresponding author on reasonable request.

Code availability

The code developed in this paper will be publicly accessible at https://github.com/XiaoleiDiao/Oracle-Image-Restoration.

References

Shaughnessy, E. L. Zhouyuan oracle-bone inscriptions: entering the research stage? Early China 11, 146–163 (1985).

Mickel, S. Oracle Bones. Classical Chinese Literature: An Anthology of Translations. Vol. 10, 1 (2000).

Zhen-Tao, X., Stephenson, F. & Yao-Tiao, J. Astronomy on oracle bone inscriptions. Q. J. R. Astronom. Soc. 36, 397 (1995).

Keightley, D. N. The Shang State as seen in the oracle-bone inscriptions. Early China 5, 25–34 (1979).

Anzhu, L. Oracle-bone inscriptions and cultural memory. Front. Art. Res. 2, 63–73 (2020).

Keightley, D. N. Sources of Shang history: the oracle-bone inscriptions of Bronze Age China (Univ of California Press, 1985).

Takashima, K. I. Towards a more rigorous methodology of deciphering oracle-bone inscriptions. T'oung Pao 86, 363–399 (2000).

Qiu, K., Xia, C. & Liu, Q. Development of ancient Chinese inscription rubbings knowledge base in the context of cultural communication and art appreciation. Digit. Transform. Soc. 4, 73–89 (2025).

Shi, D. et al. RCRN: Real-world character image restoration network via skeleton extraction. In Proceedings of the 30th ACM International Conference on Multimedia, 1177–1185 (ACM, 2022).

Liu, G. et al. GCA-PVT-Net: group convolutional attention and PVT dual-branch network for oracle bone drill chisel segmentation. Herit. Sci. 12, 260 (2024).

Feng, G., Jing, X. & Yong-ge, L. Recognition of fuzzy characters on oracle-bone inscriptions. In 2015 IEEE International Conference on Computer and Information Technology; Ubiquitous Computing and Communications; Dependable, Autonomic and Secure Computing; Pervasive Intelligence and Computing, 698–702 (IEEE, 2015).

Xing, J., Liu, G. & Xiong, J. Oracle bone inscription detection: a survey of oracle bone inscription detection based on deep learning algorithm. In Proceedings of the International Conference on Artificial Intelligence, Information Processing and Cloud Computing, 1–8 (Association for Computing Machinery, 2019).

Yuan, J. et al. R-GNN: recurrent graph neural networks for font classification of oracle bone inscriptions. Herit. Sci. 12, 30 (2024).

Fu, X., Zhou, R., Yang, X. & Li, C. Detecting oracle bone inscriptions via pseudo-category labels. Herit. Sci. 12, 107 (2024).

Dabov, K., Foi, A., Katkovnik, V. & Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 16, 2080–2095 (2007).

Singh, P., Shankar, A., Diwakar, M. & Khosravi, M. R. MSPB: intelligent SAR despeckling using wavelet thresholding and bilateral filter for big visual radar data restoration and provisioning quality of experience in real-time remote sensing. Environ. Dev. Sustain. 24, 1–31 (2022).

Diwakar, M. & Singh, P. CT image denoising using multivariate model and its method noise thresholding in non-subsampled shearlet domain. Biomed. Signal Process. Control 57, 101754 (2020).

Singh, P. et al. An algorithmic approach towards remote sensing imagery data restoration using guided filters in real-time applications. Can. J. Remote Sens. 49, 2257323 (2023).

Yang, D. & Sun, J. BM3D-Net: A convolutional neural network for transform-domain collaborative filtering. IEEE Signal Process. Lett. 25, 55–59 (2017).

Alnuaimy, A. N. et al. BM3D denoising algorithms for medical image. In 2024 35th Conference of Open Innovations Association (FRUCT), 135–141 (IEEE, 2024).

Siddique, N., Paheding, S., Elkin, C. P. & Devabhaktuni, V. U-net and its variants for medical image segmentation: a review of theory and applications. IEEE Access 9, 82031–82057 (2021).

Wang, Z., Cun, X., Bao, J. & Liu, J. Uformer: A general u-shaped transformer for image restoration. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), 17683–17693 (IEEE, 2022).

Chen, Y., Xia, R., Yang, K. & Zou, K. MICU: Image super-resolution via multi-level information compensation and U-Net. Expert Syst. Appl. 245, 123111 (2024).

Hu, J., Yu, Y. & Zhou, Q. GuidePaint: lossless image-guided diffusion model for ancient mural image restoration. npj Herit. Sci. 13, 118 (2025).

Lehtinen, J. et al. Noise2Noise: Learning image restoration without clean data. In International Conference on Machine Learning, 2965–2974 (PMLR, 2018).

He, D. et al. De-noising of photoacoustic microscopy images by attentive generative adversarial network. IEEE Trans. Med. Imaging 42, 1349–1362 (2022).

Hu, Y., Wang, Y. & Zhang, J. DEAR-GAN: degradation-aware face restoration with GAN prior. IEEE Trans. Circuits Syst. Video Technol. 33, 4603–4615 (2023).

Cui, J. et al. 3D point-based multi-modal context clusters GAN for low-dose PET image denoising. IEEE Trans. Circuits Syst. Video Technol. (2024).

Li, S., Wang, Y., Higashita, R., Fu, H. & Liu, J. A contrast-aware edge enhancement GAN for unpaired anterior segment OCT image denoising. IEEE Trans. Circuits Syst. Video Technol. (2024).

Chi, Y. et al. ZiNet: Linking Chinese characters spanning three thousand years. In Findings of the Association for Computational Linguistics: ACL 2022, 3061–3070 (ACL, 2022).

Diao, X. et al. RZCR: zero-shot character recognition via radical-based reasoning. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence, 654–662 (IJCAI, 2023).

Guo, S., Yan, Z., Zhang, K., Zuo, W. & Zhang, L. Toward convolutional blind denoising of real photographs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1712–1722 (IEEE, 2019).

Zhang, J., Guo, M. & Fan, J. A novel generative adversarial net for calligraphic tablet images denoising. Multimed. Tools Appl. 79, 119–140 (2020).

Chen, H. et al. Pre-trained image processing transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 12299–12310 (IEEE, 2021).

Chen, J. et al. TransUNet: Rethinking the U-Net architecture design for medical image segmentation through the lens of transformers. Med. Image Anal. 97, 103280 (2024).

Zhang, Y. et al. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), 286–301 (Springer, 2018).

Srivastava, D. & Harit, G. Simultaneous denoising and super resolution of document images. Sādhanā 49, 35 (2024).

Yalin, M., Li, L., Yichun, J. & Guodong, L. Research on denoising method of Chinese ancient character image based on Chinese character writing standard model. Sci. Rep. 12, 19795 (2022).

Li, H. et al. Towards automated Chinese ancient character restoration: A diffusion-based method with a new dataset. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 38, 3073–3081 (AAAI, 2024).

Qi, H. et al. AncientGlyphNet: an advanced deep learning framework for detecting ancient Chinese characters in complex scene. Artif. Intell. Rev. 58, 88 (2025).

Pan, Y., Ren, C., Wu, X., Huang, J. & He, X. Real image denoising via guided residual estimation and noise correction. IEEE Trans. Circuits Syst. Video Technol. 33, 1994–2000 (2022).

Shi, D. et al. CharFormer: a glyph fusion based attentive framework for high-precision character image denoising. In Proceedings of the 30th ACM International Conference on Multimedia, 1147–1155 (ACM, 2022).

Wu, Z. Shang and Zhou Bronze Inscriptions and Image Integration (Shanghai Classics Publishing House, 2012).

Cai, J., Peng, L., Tang, Y., Liu, C. & Li, P. TH-GAN: Generative adversarial network based transfer learning for historical Chinese character recognition. In 2019 International Conference on Document Analysis and Recognition (ICDAR), 178–183 (IEEE, 2019).

Gao, Y. & Wu, J. GAN-based unpaired Chinese character image translation via skeleton transformation and stroke rendering. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 34, 646–653 (AAAI, 2020).

Li, M., Wang, J., Yang, Y., Huang, W. & Du, W. Improving GAN-based calligraphy character generation using graph matching. In 2019 IEEE 19th International Conference on Software Quality, Reliability and Security Companion (QRS-C), 291–295 (IEEE, 2019).

Zhang, K., Zuo, W., Chen, Y., Meng, D. & Zhang, L. Beyond a Gaussian denoiser: residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 26, 3142–3155 (2017).

Kim, D.-W., Ryun Chung, J. & Jung, S.-W. GRDN: Grouped residual dense network for real image denoising and GAN-based real-world noise modeling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (IEEE, 2019).

Xiao, T. et al. Early convolutions help transformers see better. Adv. Neural Inf. Process. Syst. 34, 30392–30400 (2021).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 770–778 (IEEE, 2016).

Huang, G., Liu, Z., Pleiss, G., Van Der Maaten, L. & Weinberger, K. Q. Convolutional networks with dense connectivity. IEEE Trans. Pattern Anal. Mach. Intell. 44, 8704–8716 (2019).

Yu, F. & Koltun, V. Multi-scale context aggregation by dilated convolutions. In Proceedings of the Fourth International Conference on Learning Representations (ICLR, 2016).

Goodfellow, I. et al. Generative adversarial networks. Commun. ACM 63, 139–144 (2020).

Diao, X. et al. Toward zero-shot character recognition: A gold standard dataset with radical-level annotations. In Proceedings of the 31st ACM International Conference on Multimedia, 6869–6877 (ACM, 2023).

Hore, A. & Ziou, D. Image quality metrics: PSNR vs. SSIM. In 2010 20th International Conference on Pattern Recognition, 2366–2369 (IEEE, 2010).

Chen, G., Chen, Q., Zhu, X. & Chen, Y. A study of historical documents denoising. In 2017 10th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), 1–4 (IEEE, 2017).

Liu, Y. et al. Invertible denoising network: a light solution for real noise removal. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 13365–13374 (IEEE, 2021).

Xie, Y., Wang, Z. & Ji, S. Noise2Same: optimizing a self-supervised bound for image denoising. Adv. Neural Inf. Process. Syst. 33, 20320–20330 (2020).

Danielyan, A., Katkovnik, V. & Egiazarian, K. BM3D frames and variational image deblurring. IEEE Trans. Image Process. 21, 1715–1728 (2011).

Huang, Z.-K., Li, Z.-H., Huang, H., Li, Z.-B. & Hou, L.-Y. Comparison of different image denoising algorithms for Chinese calligraphy images. Neurocomputing 188, 102–112 (2016).

Acknowledgements

This research is supported by the National Natural Science Foundation of China (No. 62476111), the Department of Science and Technology of Jilin Province, China (20230201086GX), the “Paleography and Chinese Civilization Inheritance and Development Program” Collaborative Innovation Platform (No. G3829), the National Social Science Foundation of China (No. 23VRC033), and the interdisciplinary cultivation project for young teachers and students at Jilin University, China (No. 2024-JCXK-04).

Author information

Contributions

X.D. developed the research idea and wrote this manuscript; D.S. provided overall guidance and supervision of the study and proposed an optimized protocol; X.D. and W.C. conducted the experiments in this manuscript; D.S. and T.W. assisted in the literature review and helped with the calibration of this article; C.L. and R.Q. provided specialized knowledge of ancient characters and provided the experimental datasets and the ground truth. H.X. revised the manuscript and provided valuable suggestions. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Diao, X., Shi, D., Cao, W. et al. Oracle bone inscription image restoration via glyph extraction. npj Herit. Sci. 13, 321 (2025). https://doi.org/10.1038/s40494-025-01795-8
