Abstract

Figure-ground organisation is a perceptual grouping mechanism for detecting objects and boundaries, essential for an agent interacting with the environment. Current figure-ground segmentation methods rely on classical computer vision or deep learning, requiring extensive computational resources, especially during training. Inspired by the primate visual system, we developed a bio-inspired perception system for the neuromorphic robot iCub. The model uses a hierarchical, biologically plausible architecture and event-driven vision to distinguish foreground objects from the background. Unlike classical approaches, event-driven cameras reduce data redundancy and computation. The system has been qualitatively and quantitatively assessed in simulations and with event-driven cameras on iCub in various scenarios. It successfully segments items in diverse real-world settings, showing comparable results to its frame-based version on simple stimuli and the Berkeley Segmentation dataset. This model enhances hybrid systems, complementing conventional deep learning models by processing only relevant data in Regions of Interest (ROI), enabling low-latency autonomous robotic applications.


Introduction

In all perceptual modalities1,2,3, the organisation into object-like entities dramatically reduces the dimensionality of the input space, transforming high-dimensional raw sensor data into a much smaller space of object-like entities. Additionally, the clear distinction between foreground and background allows an agent to focus on items in the foreground, which are, in most cases, behaviourally more relevant than the background4. Humans can perform figure-ground organisation (seemingly) without effort in a wide range of different conditions. Parsing the visual field by detecting objects and determining their boundaries against their background sets the basis for the correct perception of the world.

The perception and formation of figures are intricately tied to the notion of shared borders, defining what is known as border ownership5. This concept originates from Gestaltism5, along with other principles that govern our perception of the surroundings, such as similarity, continuation, closure, proximity, and figure-ground relationships. In line with this, border-ownership selective cells, discovered in the primate visual cortex in 2000 (ref. 6), mirror the concept of border ownership as included within the Gestalt laws, specifically the continuity principle. These cells actively group visual information, discerning salient regions within a scene where objects may reside.

An artificial agent that is able to perform foreground-background organisation can focus attention solely on relevant parts of the scene, therefore processing only small parts of the visual field and thus significantly decreasing the amount of computation. The resource-efficient nature of this process makes it well-suited for robotic tasks characterised by tight power and latency constraints. A robot operating in an unconstrained environment can exploit the perceptual organisation of its surroundings to establish a foundation for tasks like reaching, tracking, and searching for specific items within the scene. The use of the Gestalt laws of perceptual grouping can improve an agent’s perception of the environment, constrain the detailed visual processing preferentially to regions of the scene containing objects, and thus reduce the overall computational effort.

Bioinspired event-driven cameras produce sensor signals closely resembling those found in mammalian vision and have strong potential to be integrated with bioinspired visual processing. Similar to many mammalian visual systems, event-driven cameras generate “spikes” only when and where a temporal contrast change occurs7. These cameras draw loose inspiration from the magnocellular pathway of primate vision7. This selective event generation leads to a drastic decrease in data compared to frame-based cameras with typical frame rates7. By eliminating redundant data streams, event-driven cameras substantially reduce computational costs, enhance processing speed, and minimise latency7. These advantageous properties make event-based processing particularly well-suited for robotics applications. Most of the latest saliency-based approaches use Convolutional Neural Networks (CNNs)8,9 which do not exploit the event-based sparse computation advantageous for robotic applications10, especially when fully spiking-based methods are employed11,12,13. For instance, Paulun et al.13 presented a notable attempt at recognising moving objects by leveraging the spike-based nature of events, utilising deep unsupervised and supervised learning and employing spike timing dependent plasticity (STDP) mechanisms for classification tasks. Another approach to promoting event-based computation in visual attention was introduced by Thorpe et al. in 199914. They addressed the issue of resource limitations by employing a spike-based architecture for object recognition tasks, achieving response times under one second with an accuracy of 96%.

The integration of figure-ground organisation and event-driven sensing is pivotal in robotic applications, enabling autonomous agents to perceive and interpret their environment efficiently. By incorporating these principles, robots can achieve real-time responsiveness and make effective decisions based on the dynamic visual information they receive. The problem of figure-ground organisation has been defined as crucial. For instance, in ref. 15 it is claimed that autonomous agents should utilise what the environment offers, rather than relying on a predetermined set of features. While many approaches to the segmentation problem using deep learning16 exist, one of the few examples of using figure-ground organisation by a humanoid robot is the embodied approach by Arsenio17. In this approach, the robot segments the visual scene “by demonstration,” by exploiting a human-aided object segmentation algorithm18 in which the robot learns object segmentation from a human that waves an arm on top of the image of objects to be segmented from the background. Mishra et al.19 propose an active segmentation method focused on the fixation region of the robot. In this work19, their strong biological motivation explores the operations carried out in the human visual system during fixation. They, therefore, propose a method focused on segmenting individual parts of the scene rather than the entire visual field. While these methods17,19 introduce an active process within the loop, which is crucial for robotic interaction, they rely on traditional RGB images. As a result, they do not harness sparse computation to effectively minimise latency and processing. All the methods mentioned either employ traditional segmentation approaches or incorporate specific biological techniques to mitigate the problem’s complexity, but they do not fully delve into the comprehensive exploration of a bioinspired approach.

In this work, we integrate the new generation of bioinspired cameras with a biologically plausible network, laying the groundwork for a spiking-based approach on a neuromorphic platform. This fully bioinspired robotic application aims to minimise computational load while utilising bioinspired hardware alongside a biologically plausible algorithm. Our implementation introduces a complete bioinspired architecture for the event-based visual figure-ground organisation for the humanoid robot iCub. In its current state, the integration of bioinspired software and hardware significantly reduces latency by minimising redundant data through events20. Thus, our work paves the way for exploiting event-based robotic applications that make use of figure-ground segmentation.

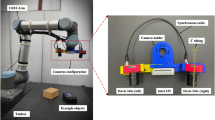

The overall structure of the implementation (see Fig. 1) is inspired by a previous study conducted by Hu et al.21. The proposed system is a follow-up of the event-driven visual attention model22 we developed for the neuromorphic humanoid robot iCub23, furnished with a pair of Asynchronous Time-based Image Sensor (ATIS) GEN2 (ref. 7) event-driven cameras. The model is applied to a variety of test stimuli, from simple circular discs to unconstrained real-world office scenes, as well as to a standard benchmark test set, the Berkeley Segmentation Data Set (BSDS)24,25. We find that performance is comparable to a frame-based implementation. The contributions of this work are as follows:

- Adaptation of the frame-based bioinspired figure-ground organisation model21 to work with biologically inspired cameras, and characterisation of the model as the first attempt towards a fully spiking-based online robotic application.

- Reduction of the computations by leveraging the event cameras’ spatial gradient detection through the use of lightweight multivariate Gaussian filters instead of the Combination of Receptive Fields (CORF) filters used by Hu et al.21 to obtain local edge orientation information.

- Quantitative and qualitative experiments on the BSDS dataset26, and additional online experiments using the neuromorphic iCub.
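To make the second contribution more concrete, the following is a minimal sketch of how a small bank of oriented (anisotropic) Gaussian kernels could estimate local edge orientation from an event frame. The function names, kernel size, number of orientations and the two standard deviations are illustrative assumptions and are not taken from the model described here.

```python
import numpy as np

def oriented_gaussian(size=9, theta=0.0, sigma_long=3.0, sigma_short=1.0):
    """Anisotropic 2-D Gaussian elongated along direction theta (radians).

    All parameter values are hypothetical, chosen only for illustration.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate coordinates: u runs along the edge, v runs across it.
    u = x * np.cos(theta) + y * np.sin(theta)
    v = -x * np.sin(theta) + y * np.cos(theta)
    kernel = np.exp(-0.5 * ((u / sigma_long) ** 2 + (v / sigma_short) ** 2))
    return kernel / kernel.sum()

def orientation_map(event_frame, n_orient=4, size=9):
    """Return (orientation in radians, response strength) for every pixel."""
    thetas = np.arange(n_orient) * np.pi / n_orient
    half = size // 2
    padded = np.pad(event_frame.astype(float), half)
    h, w = event_frame.shape
    responses = np.zeros((n_orient, h, w))
    for i, theta in enumerate(thetas):
        kernel = oriented_gaussian(size, theta)
        # Plain sliding-window correlation, written with shifted views.
        for dy in range(size):
            for dx in range(size):
                responses[i] += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    best = responses.argmax(axis=0)
    return thetas[best], responses.max(axis=0)
```

Because the event frame already marks contrast edges, each kernel only has to measure how well the local events line up with its orientation, which is cheaper than computing an image gradient first.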

A comparison of the frame-based figure-ground segmentation model21 (FBFG) and the event-driven figure-ground segmentation model (EDFG). a Overview of the frame-based figure-ground (FBFG) model. Information flows from left to right. The input is an RGB image. The outputs are the Figure-Ground map and the Grouping map. “S”, “B” and “G” represent simple cells, border ownership cells and grouping cells, respectively. b Overview of the event-driven figure-ground (EDFG) model. The input is the event frame built from events collected over a period of time. The outputs are the Figure-Ground map and the Grouping map. In both schemes, red lines with triangles represent excitatory connections and blue lines with circles represent inhibitory connections. Bidirectional connections represent feedback for the recurrence. The Orientation Matrices show, pixel by pixel, the orientation of the edge. The Figure-Ground Maps show, pixel by pixel, the orientation of an arrow pointing towards the object’s centre. Hence, in accordance with the top-right wheel’s colour-coded legend (Figure Ground map - wheel legend), if the arrow points to the right, the pixel appears red, indicating that the object’s centre lies to the right. This is the case when the pixel lies on the left side of the object’s border. Numbers in parentheses correspond to the equations in Section "Methods".

In computational models of figure-ground segregation in biological information processing, border-ownership selective cells are reciprocally connected with grouping cells whose receptive fields span a range of scales1,27 and that group together multiple border-ownership cells, responding to consistent object-like shapes, or proto-objects. The term “proto-object” serves as a descriptor for areas with potential objects, as introduced by refs. 28,29. The perceptual grouping mechanisms of the visual input are implemented by neuronal populations that represent proto-objects6. The neurons in such populations consolidate input from lower-order cells that encode the geometrical properties of a proto-object through their firing patterns, relying on the concept of “border ownership”: the foreground object “owns” the border, which helps to differentiate the figure from the background. Contextual information from the visual input is necessary to determine which of the two regions adjacent to each border corresponds to the nearer, occluding surface. For example, the movement of the foreground object imposes the movement of the border30,31,32. Both cell types, border-ownership and grouping cells, tile the visual scene. Models also incorporate specific features of visual scenes, for instance, good continuation of contours, proximity bias, closure, and symmetry33. Many of these “rules” are implemented explicitly in the structure of the grouping cell representation of proto-objects described above. A recent study reported the existence of grouping cells in primate area V4 (ref. 34). Their existence has also been inferred from recordings of correlated border-ownership cell responses at millisecond precision35 as well as from theoretical work36,37. Additionally, the number of grouping cells is likely orders of magnitude smaller than that of border-ownership cells1. Thanks to these hierarchical mechanisms, figure-ground organisation, that is, the differentiation of the figure from the background, can be implemented as a perceptual organisation requiring only very limited resources.

Early classical approaches to the figure-ground organisation problem exploited mathematical models of combinatorial optimisation38 to encode figure-ground discrimination, or used quadratic boolean optimisation39. Later studies tried to overcome classical supervised approaches40,41,42 by proposing an unsupervised figure-ground learning method43 using edge-based methods. Figure-ground organisation has also been tackled by converting it into a pixel classification problem44 or by learning the segmentation45 when the object categories are not available during training (that is, zero-shot). All these methods, however, require a large amount of training data and a long period of training. Other approaches avoid extensive training by exploiting the differences in image statistics between foreground and background, either in local contrast46 or in the spectral domain47. These approaches46,47 work in simulation with a frame-based input but do not use a bioinspired approach that could be directly suitable for a bioinspired event-based input.

Of particular interest is a class of models that assume the existence of an explicit neural structure for perceptual grouping1,27,48,49,50. These models hypothesise the existence of neural populations detecting objects in the scene directly in their firing activity. More precisely, what is represented are not objects but rather perceptual precursors to objects which only contain limited information, such as their approximate location and size, but do not require (nor provide) the results of an object-recognition process6. Craft et al.1 proposed a model emulating border-ownership assignment through feedback and feedforward inhibitory connections between Border-ownership cells and Grouping cells. Later, in 2014, drawing on the saliency-based visual attention model by Itti et al.51, Russell et al.27 proposed an updated version of the system with three channels of information (intensity, colour opponency and orientation), introducing the “proto-object” concept. Each channel feeds the Border-ownership and Grouping layers, where the border-ownership cells are emulated with a curved kernel, the von Mises filter, introduced in the same proto-object based visual attention model27. Based on earlier work by Craft et al.1, Hu et al.48 presented a model network of feedforward and feedback excitatory connections with lateral inhibition, integrating local features to form proto-objects.

The proposed model takes inspiration from ref. 21 and expands on our previous event-driven work that uses proto-objects for visual scene segmentation22,52,53. The first event-based adaptation of the frame-based proto-object model27 was proposed by Iacono et al.54, where the humanoid robot iCub detected proto-objects in different static and dynamic scenarios. Subsequently, the model was extended by adding a disparity map to the border-ownership cells52, thereby assigning perceptual saliency to items that the robot could reach and potentially interact with. Both systems served as proofs of concept, running on a GPU and producing a saliency map in ~100 ms in an end-to-end neuromorphic architecture. Building on these works, the system was further modified to interface with a Spiking Neural Network (SNN)53 running on a neuromorphic platform, SpiNNaker, generating spikes in salient areas in ~16 ms, thus significantly reducing the latency compared with the previous approach. The implementation on SpiNNaker successfully bridged the gap between bioinspired hardware and software. All implementations we have proposed so far make use of the proto-object concept in the computation of saliency. The implementation we propose here further refines border ownership relations of proto-objects in the entire scene by adding feedback from the Grouping layer back to the border ownership pyramid, enhancing the distinction between foreground and background. The proposed model adapts the frame-based implementation21 to work with event-driven cameras, serving as the basis for accuracy comparison and for assessing the feasibility of the system towards a spike-based implementation.

Results

Qualitative and quantitative experiments were performed to evaluate the capabilities and limitations of the proposed event-driven figure-ground organisation algorithm in two different scenarios: a common segmentation dataset26 for comparison to both the frame-based implementation and the ground truth segmentation, and a typical office scenario where the robot iCub visually explores the scene.

Berkeley Segmentation Data Set

The EDFG was compared with the original frame-based figure-ground organisation model in qualitative and quantitative experiments conducted on the BSDS dataset26, which includes RGB images along with their annotated human segmentations.

To obtain events from the BSDS dataset, RGB images were converted directly to the event frame required as input to EDFG. To create a video mimicking the microsaccade-like motion that the mammalian eye would perform when observing the scene, a 1-pixel shift of a converted greyscale image in four directions (top, right, bottom, left) has been performed. The event camera simulator ESIM55 generated events (that is at locations of image intensity gradient), which were integrated to form event frames following Equation (1).

Here, \(\nabla \mathscr{L}\) is the gradient of the brightness image, and \(\mathcal{V}\) is the motion field. The brightness change at pixel \(x\) and time \(t_k\) during a given interval of time \(\Delta t\) is \(\Delta \mathscr{L} \cong \frac{\partial \mathscr{L}(x; t_k)}{\partial t}\Delta t\). For every pixel \(x\), \(|\Delta \mathscr{L}| \le C\), that is, the brightness change is bounded by the desired contrast threshold \(C\) of the simulated event camera. Equation (1) is a first-order Taylor expansion and holds under the assumptions of Lambertian surfaces, brightness constancy56, and linearity of local image brightness changes. Typical RGB and resulting artificially generated event frames are shown in Fig. 2 (RGB Input and Event Frame).
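As an illustration of this conversion, the sketch below builds an event frame from a static greyscale image by applying the four 1-pixel shifts and marking pixels whose brightness change reaches the contrast threshold. It is a simplified stand-in for a full simulator such as ESIM, not its implementation: the use of log intensity, the direct frame differencing in place of the linearised relation (commonly written as \(\partial \mathscr{L}/\partial t \simeq -\langle \nabla \mathscr{L}, \mathcal{V}\rangle\)), the function name and the `eps` constant are all assumptions made for this example.

```python
import numpy as np

def event_frame_from_image(gray, contrast_threshold=0.10, eps=1e-3):
    """Accumulate simulated events from four 1-pixel shifts of a static image.

    gray: 2-D array with intensities in [0, 1].
    contrast_threshold: plays the role of C (0.10 is the value reported
    as working well for the BSDS images).
    eps: hypothetical small constant that keeps the logarithm finite.
    """
    log_img = np.log(gray.astype(float) + eps)
    frame = np.zeros_like(log_img)
    # (dy, dx) shifts mimicking the microsaccade: top, right, bottom, left.
    for dy, dx in [(-1, 0), (0, 1), (1, 0), (0, -1)]:
        shifted = np.roll(log_img, shift=(dy, dx), axis=(0, 1))
        delta = shifted - log_img
        # One event wherever the brightness change reaches the threshold.
        frame += np.abs(delta) >= contrast_threshold
    # np.roll wraps around the image, so discard the one-pixel border.
    frame[0, :] = frame[-1, :] = 0
    frame[:, 0] = frame[:, -1] = 0
    return frame
```

Raising `contrast_threshold` makes the simulated sensor less sensitive, so fewer edge pixels produce events and the event frame becomes sparser.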

Comparison of grouping maps between FBFG21 and the proposed EDFG on a random selection of the images from BSDS24. Both models used identical parameters as in Table T1 (in Supplementary Material (T)). The legend on the right for the Grouping Maps shows the detection response from the model, where 0 is the background (blue) and 1 is the foreground (red). Red and blue frames highlight the pictures for the description in the text.

Figure 2 shows a random selection of the qualitative comparison between the FBFG and the EDFG, showing RGB Input, Event Frames, FB and ED Figure-Ground Maps and Grouping Maps. The organisation of the figure-ground maps is defined by the correct assignment of foreground direction (inside the object) and background direction (outside the object) for pixels on the object edge. The Figure-Ground Maps are difficult to read given the details of the cluttered scenes. Overall, both models seem to detect the centre of proto-objects when their size falls within the range of detectable sizes. Event generation decreases the chances of a clean figure-ground map in highly cluttered scenes (#28075 and #159091, red frames in Fig. 2). The models’ varied responses depend strongly on the sparsity of event generation, that is, on the number of events representing the scene in the event frame. This is clear in #12074, #28075, and #35070 (blue frames in Fig. 2), where the response from the grouping corresponds to the portion of the image with a higher density of events. Event generation can be modulated through a threshold parameter regulating the sensitivity of the simulator, for which we empirically found 0.10 to work for these data (see Fig. S1 in Supplementary Material (S)).

The major difference between the FBFG and the EDFG model responses strongly depends on the input given to the model. RGB frames and events, while both representing the scene, convey different information. Whilst RGB frames provide information on colour intensity and are static, events come with inherent motion cues and light intensity changes in time.

Figure 3 shows examples of the comparison between the FBFG and the EDFG Grouping maps on a selection of images with low lighting conditions or high contrast. The figure demonstrates how the responses differ depending on the nature of the input provided to the models. In particular, the EDFG responses obtained by feeding the system with events generated by the event-based simulator55 are more sensitive to small contrast changes over dark backgrounds.

Comparison of grouping maps between FBFG21 and EDFG on a specific selection of images with low lighting conditions or high light contrast from the BSDS dataset24. Both models used identical parameters as in Table T1 (in Supplementary Material (T)). The legend on the right for the Grouping Maps shows the detection response from the model, where 0 is the background (blue) and 1 is the foreground (red).

This is evident in image #59078, where the FBFG focuses on the part with high contrast, whereas the EDFG effectively segregates the door with small contrast changes over a darker background on the left. In image #238011, the EDFG implementation clearly segregates the trees in the night environment compared to the FBFG. The last image, #271031, again provides a good example of how the different nature of the input affects the response, with the FBFG incorrectly segregating the entire background while the EDFG only detects the camel and the horizon. However, it is important to consider that these differences arise solely from the different nature of the input provided to the systems, rather than from the actual High Dynamic Range (HDR) properties of the physical event-based visual sensor.

The human segmentation was converted into pixel-by-pixel angle information to obtain a quantitative comparison with the figure-ground maps of the two models. The RGB images of the BSDS dataset are annotated with foreground/background contours: around each foreground object there is a 2-pixel-wide contour, with the inside contour indicating the border of the foreground object and the outside contour indicating the beginning of the background (see Fig. 4). The angle information was obtained by finding the normal to the line in a 5 × 5 matrix, shifting pixel by pixel across the ground truth segmentation. A subset of random images was selected to produce ground-truth figure-ground maps (see Fig. 4c). Table 1 shows the angle error obtained by comparing, pixel by pixel, the converted ground truth (see Fig. 4c) with the figure-ground maps produced with different sensitivities of the simulator, namely [0.10, 0.15, 0.20, 0.30, 0.40].
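The conversion and the error measure can be sketched as follows. This is only an approximation under stated assumptions: it starts from a binary foreground mask rather than the two-contour annotation, it estimates the inward normal as the direction from each border pixel to the centroid of the foreground pixels inside its 5 × 5 window, and it measures angles in degrees with 0° pointing right and 90° pointing up. The function names are hypothetical.

```python
import numpy as np

def inward_angle_map(mask, win=5):
    """Angle (degrees) pointing from each border pixel into the object.

    Pixels that are not near the object border are set to NaN. Foreground
    touching the image edge is treated as border because of zero padding.
    """
    half = win // 2
    mask = mask.astype(bool)
    padded = np.pad(mask, half)
    h, w = mask.shape
    angles = np.full((h, w), np.nan)
    offsets = np.arange(-half, half + 1)
    oy, ox = np.meshgrid(offsets, offsets, indexing="ij")
    for y in range(h):
        for x in range(w):
            patch = padded[y:y + win, x:x + win]
            # Keep foreground pixels whose window also contains background.
            if not mask[y, x] or patch.all():
                continue
            cy, cx = oy[patch].mean(), ox[patch].mean()
            if cy == 0 and cx == 0:
                continue  # no preferred direction (e.g. a thin line)
            # Image rows grow downwards, so negate cy to make 90° point up.
            angles[y, x] = np.degrees(np.arctan2(-cy, cx)) % 360
    return angles

def mean_angle_error(a, b):
    """Mean absolute angular difference in degrees over pixels valid in both maps."""
    valid = ~np.isnan(a) & ~np.isnan(b)
    diff = np.abs(a[valid] - b[valid]) % 360
    return float(np.minimum(diff, 360 - diff).mean())
```

Taking the smaller of `diff` and `360 - diff` keeps the error circular, so that 350° and 10° count as 20° apart rather than 340°.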

Generation of ground-truth figure-ground maps from the BSDS dataset26. a a reference RGB image, b the edges indicating the outer bound of the foreground object (yellow) and the starting of the background (blue), and (c) the generated figure-ground map indicating the angle towards the object from each edge pixel.

Subsequently, a comparison of the angle information of the ground-truth segmentation maps26 against the frame-based and event-driven figure-ground maps is shown in Fig. 5. The selected images show the best (#135069, #105019 and #118035, green frames in Fig. 5) and worst (#8143, red frames in Fig. 5) results, alongside particular cases. Image #8143 represents a complex cluttered scene where both the FB and the ED Angle Maps fail at segregating the object. In image #134008 (blue frames in Fig. 5), only the FBFG fails to find any edge in the foreground with a complex pattern, while the EDFG clearly detects the pattern because events are generated on contrast changes. Images #12003 and #134008 (blue frames in Fig. 5) show how humans perceive the object as a whole, correctly labelling the entire star as foreground, whilst both models lack the higher cognitive mechanisms needed to perceptually group the patterns into the whole object. Indeed, because of the size range within which the models operate, both the frame-based and the event-based models segment each small item of the star as a proto-object. Figure 6 compares the event-based figure-ground model against the frame-based implementation, and both models against the Ground-Truth, by computing the structural similarity (SSIM) and the Mean Square Error (MSE) on the Angle Maps and the Ground-Truth. The SSIM comparisons in Fig. 6 show similar results for the frame-based and event-based implementations against the Ground-Truth, whilst the MSE is slightly higher in our case. Furthermore, unlike the MSE results, which vary more because the MSE is a global assessment of similarity, the SSIM results show no significant variability across the three comparisons. The SSIM was computed using standard Matlab libraries with the default window size of 11 × 11 pixels. This made it possible to evaluate how closely the event-based implementation aligns with the original frame-based implementation and to assess both models against the ground truth.
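For readers who want to reproduce this kind of comparison outside Matlab, the two metrics can be sketched as below. The MSE follows its usual definition. The SSIM shown here is a simplified single-window (global) variant with the conventional constants k1 = 0.01 and k2 = 0.03; it is not the 11 × 11 windowed computation used for the reported results, so its values will differ from those in Fig. 6. The function names and the `data_range` default are assumptions.

```python
import numpy as np

def mse(a, b):
    """Mean square error between two maps of equal shape."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.mean((a - b) ** 2))

def global_ssim(a, b, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM computed over the whole image.

    Simplification: the standard SSIM averages this quantity over local
    windows; here means, variances and covariance are taken globally.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    luminance = (2 * mu_a * mu_b + c1) / (mu_a ** 2 + mu_b ** 2 + c1)
    structure = (2 * cov + c2) / (var_a + var_b + c2)
    return float(luminance * structure)
```

The MSE sums squared pixel differences and can be dominated by a few large deviations, whereas the SSIM normalises by local statistics, which is consistent with the lower variability reported for the SSIM.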

Comparison of the Ground-Truth segmentation from the BSDS dataset26, frame-based (FB) and event-driven (ED) Angle maps containing the angle information (wheel legend shown at the bottom right) pixel-by-pixel of the edges belonging to the foreground. Red, green and blue frames highlight the pictures for the description in the text.

Structural similarity (SSIM), Mean Square Error (MSE); Mean and standard deviations of three different comparisons. The range for the SSIM is from 0 to 1 with 1 as perfect similarity; and the MSE from 0 to ∞, where 0 indicates that the images are identical. From the left to the right: the event-based (ED) implementation against the frame-based (FB) one21 ‘EDvsFB’, FB against the ground truth (GT) from the Berkeley Dataset26 ‘FBvsGT’, and ED against GT ‘EDvsGT’.

The Neuromorphic iCub in the office

The proposed EDFG is evaluated using real data from the event cameras mounted on the iCub. The robot is in an office and looks at various objects, as well as printouts of a range of objects, examples of which are shown in Fig. 7. The EDFG processes events that come directly from the event-driven cameras mounted on the humanoid robot iCub (104 cm tall, plus ~20 cm for the support). All the stimuli are placed on a desk (72 cm high), while the FBFG is evaluated by feeding the model directly with the corresponding digital images. Events are generated as the iCub performs eye motions through the motorised actuation of the “eye muscles”, mimicking the microsaccadic motion used with the ESIM simulator (see Section "Berkeley Segmentation Data Set"). The eye motion completes 72 positions around a circle, with an update every 5 ms, resulting in a total duration of 360 ms and a pan-tilt angle of 1° per position. Event-based edge maps are generated by integrating all events that fire over a microsaccadic period of 85 ms, which is enough to obtain a clear and defined edge map of the scene as the eye moves in a small circular pattern. The event frame is therefore produced by aggregating asynchronous events over the specified time window.
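The timing of this procedure can be sketched in a few lines. The example generates the 72 eye positions at 5 ms intervals and aggregates events that fall inside an 85 ms window into an event frame. It assumes that the 1° pan-tilt angle is the radius of the circle and that events arrive as `(time_ms, x, y, polarity)` tuples; both are illustrative choices, as are the function names, and no robot API is involved.

```python
import math

def microsaccade_trajectory(n_positions=72, step_ms=5.0, radius_deg=1.0):
    """Return a list of (time_ms, pan_deg, tilt_deg) around a circle.

    radius_deg = 1.0 is an assumed reading of the 1 degree pan-tilt angle.
    """
    trajectory = []
    for i in range(n_positions):
        phase = 2.0 * math.pi * i / n_positions
        trajectory.append((i * step_ms,
                           radius_deg * math.cos(phase),
                           radius_deg * math.sin(phase)))
    return trajectory

def accumulate_events(events, t_start_ms, window_ms, height, width):
    """Count events per pixel inside [t_start_ms, t_start_ms + window_ms)."""
    frame = [[0] * width for _ in range(height)]
    for t, x, y, _polarity in events:
        if t_start_ms <= t < t_start_ms + window_ms:
            frame[y][x] += 1
    return frame

if __name__ == "__main__":
    traj = microsaccade_trajectory()
    total_ms = len(traj) * 5.0
    print(f"{len(traj)} positions, total duration {total_ms:.0f} ms")
```

With the default arguments the last position is commanded at 355 ms and the full circle lasts 72 × 5 ms = 360 ms, matching the duration stated above.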

To start with a simple environment, the EDFG is evaluated on printouts of objects placed in view of the iCub. The results in Fig. 8 qualitatively compare the Figure-Ground and the Grouping Maps from both implementations. Both models identify the correct centre of the object, and therefore segment the foreground, in all but two cases: the TV and the cat examples. In the first case, both models fail at detecting the TV as a whole item. In the second case, the ED Figure-Ground Map fails at detecting the centre of the object on the right side of the cat, due to the complex concatenation of concave and convex curves and the accumulation of events, which hinders the clear detection of those curves.

Comparison of the results between the EDFG model and the FBFG model21. Colour wheel insets in the second and third columns and the colour bar in the third and fourth columns are as in Fig. S2. The event stream comes directly from the robot’s eyes; for this reason, the size and position of the image contents of the RGB Input do not agree exactly with those in the Events panels (ED Figure Ground and Grouping map). The direction of border ownership in the figure-ground maps (third and fourth column) is defined by the colour wheels, top right in each of these panels. The colour scale next to the grouping maps (fifth and sixth column) represents the response from the model where 0 is the background (blue) and 1 is the foreground (red).

To obtain a real-world response from the model, iCub was also placed in front of real objects (mugs, a water bottle, a keyboard, and a computer mouse) with different colours and light reflections in an office scenario. The results in Fig. 9c show a correct figure-ground map for the black bottle and the small blue container. The figure-ground map of the red mug shows an imperfect response, probably due to iCub’s point of view. Both the blue container and the red mug show missing parts due to the sparse collection of events for the event frame. The grouping maps clearly detect the bottle and both mugs. Figure 9g shows a correct result on the top, left and bottom of the keyboard, but the model fails on the right side. The keyboard exceeds the detectable size of the model, and the model cannot segment it properly because it lacks the concept of the entire object (as in the TV case of Fig. 8). The keyboard was flipped to avoid noisy events due to the keys. The mouse is correctly detected in both the figure-ground map and the grouping map.

From the left to the right: Input RGB (a) and (e), Event Frames (b) and (f), Figure-Ground Map (c) and (g), and Grouping Map response (d) and (h), on the real object seen by the iCub in Fig. 7. The colour scale next to the grouping maps (fourth column) represents the response from the model where 0 is the background (blue) and 1 is the foreground (red).

Discussion

This work characterises the first event-driven figure-ground segmentation model taking inspiration from a biologically plausible architecture. The proposed model bridges bioinspired hardware and software taking advantage of the event-based architecture. The model adapts the RGB implementation on Matlab21 to work with the event-driven cameras, towards an online robotic application for the humanoid robot iCub.

The only difference between the EDFG and the FBFG outcomes depends on the nature of the inputs. The response of the proposed model depends strongly on event generation, which inherently carries motion information in real-world dynamic scenarios. Both models fail at detecting big objects as entire items if they fall outside the detectable range of sizes. Cluttered scenes in which the object is camouflaged are, as expected, difficult for both models to segment. Given the nature of events, cluttered objects on a plain background are better detected by the EDFG model. The model performs comparably to the FBFG model, both qualitatively and quantitatively, and both obtain a comparable response against the Ground-Truth. Differences from the Ground-Truth are due to the human superiority in segmenting objects in simple and complex scenarios, which integrates different cognitive mechanisms and cues. The difference in variability between the SSIM and the MSE results can be explained by the different approaches of these metrics: the SSIM captures structural information, whilst the MSE captures gross differences and can therefore be disproportionately affected by outliers. Further experiments on dark backgrounds support the advantage of the EDFG, based on its inherent capability to operate on small contrast changes over dark backgrounds. These results are promising and, given the inherent HDR of event-based cameras57, they warrant further exploration. Additional experiments, specifically targeting the collection of a comprehensive new dataset under various lighting conditions, alongside ground truth data with human subjects, would be needed to investigate this matter properly. Additional work will also be necessary to clarify the direct comparison between the models’ responses and the ground truth. In natural viewing scenarios, the ground truth represents a measurement of overt attention. Therefore, considering the model’s objective to predict overt attention, this comparison stands as the most reliable standard method for evaluating model performance. While this assessment remains approximate, it is regarded as the optimal approach for evaluating the model’s response. Finally, these findings depend on our selection of R0. The Berkeley dataset encompasses such a wide range of sizes that additional optimisation is impractical beyond current achievements. Without a clearly defined target size, attaining an optimal solution remains elusive.

The characterisation qualitatively assesses the model’s performance, showing its capabilities and limitations in both simple and more complex real-world scenarios and demonstrating the feasibility of the online model on the robot in unconstrained environments. Since iCub’s head is equipped with event-based cameras, mounting an additional RGB camera on it would have resulted in misaligned frames with a different visual perspective. The frame-based implementation therefore serves as a baseline, demonstrating the system’s feasibility in real-world scenarios. The model is generally accurate: it correctly detects the angle information and therefore determines the centre of the detected foreground. The model’s smallest error in detecting the correct edge angle pixel-by-pixel is 5.29°, which is reasonable for any robotic visual task. The tendency of the model to focus on closer items is discussed in our previous visual attention implementation52, where depth information alone is not sufficient to detect an object and/or the foreground. This implementation is therefore a step forward in detecting objects and prioritising them if they are within the robot’s space of interoperability. One limitation of the model, as seen in the Berkeley results (see section “Discussion”), is that it has no notion of abstract objects as wholes: what it detects depends on the size range of detectable proto-objects, as in the TV case (fourth row in Fig. 8) or the keyboard in Fig. 9. This problem can be addressed either by enlarging the range of detectable sizes through additional scale-invariance layers, which increases the computational burden, or by choosing the appropriate R0 for the environment. This issue affects computer vision models in general and warrants further detailed investigation.

Overall, the model responds well to the added complexity of the BSDS26 benchmark, supporting its workability for robotic applications in which the robot explores the environment. By taking advantage of event-driven cameras, the event-based approach of the proposed system dramatically reduces the amount of information to be processed. Working only on the event stream, with low latency and power consumption, therefore makes it possible for the model to run online on the robot. The proposed model could take advantage of a fully spiking-based pipeline to widen the range of detectable sizes without affecting the time needed to obtain a response, thanks to the parallel computation of neuronal populations. Indeed, a fully spiking-based model would not only maximise the usage of the event-based architecture but would also avoid the time lost convolving the kernel with the event frame. The same pipeline, built on a neuro-inspired platform such as Speck58,59, Loihi60, SpiNNaker61 or DYNAPs62, would decrease the latency and the power consumption53. This implementation is, therefore, a first attempt towards a fully spiking-based pipeline. Both implementations are offline systems whose code is not optimised for an online robotic application, so a discussion of latencies is beyond the scope of this work. A future online system could take advantage of an implementation on a neuromorphic platform, dramatically reducing the overall latency of the system53. Future work will involve testing the implementation in more complex, dynamic and cluttered real-world scenarios to gain a deeper understanding of the model’s responses, which may require structural modifications. The model can take part in a complete robotic control system, allowing dynamic interactions with the environment with maximal efficacy and minimal latency. This will allow the creation of a real-time implementation in which the robot interacts dynamically with its unconstrained environment.

Methods

Frame-based figure ground organisation model

The frame-based system of figure-ground organisation proposed by Hu et al.21 implements a recurrent network that extracts edges from the input image using CORF operators (each sensitive to a particular orientation), a model of V1 simple cells63. Each simple cell operator is composed of several Centre-Surround cells modelled with Difference of Gaussians (DoG) distributions of different polarity (−1 for centre-off and +1 for centre-on), namely the Centre-OFF and Centre-ON Surround cells. Aligned groups of Centre-Surround cells with opposite polarity compose a sub-unit sensitive to a specific orientation (see Fig. 1a and Eq. (2))63.

where the four-tuple (δi, σi, ρi, ϕi) represents the properties of a pool of afferent model LGN cells, called a sub-unit63. Specifically, δi represents the polarity of the Centre-Surround receptive fields (DoG distribution), σi the standard deviation of the outer Gaussian function of the involved DoG functions, and (ρi, ϕi) the polar coordinates of the sub-unit’s centre with respect to the receptive field’s centre.
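As an illustration of the four-tuple, a Centre-Surround cell can be sketched as a DoG kernel whose sign is set by the polarity δ and whose width is set by the outer standard deviation σ, placed at the Cartesian offset given by (ρ, ϕ). This is a minimal sketch under stated assumptions, not the authors’ implementation: the function names, the kernel size and the inner-to-outer standard deviation ratio of 0.5 are all hypothetical choices made for the example.

```python
import numpy as np

def dog_kernel(size, sigma_outer, polarity=1, inner_ratio=0.5):
    """Centre-surround Difference-of-Gaussians kernel (size should be odd).

    polarity = +1 gives a centre-on cell, -1 a centre-off cell.
    inner_ratio (inner sigma / outer sigma) is an assumed value.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    r2 = x.astype(float) ** 2 + y.astype(float) ** 2

    def unit_gaussian(sigma):
        g = np.exp(-r2 / (2.0 * sigma ** 2))
        return g / g.sum()  # normalise so centre and surround balance

    centre = unit_gaussian(inner_ratio * sigma_outer)
    surround = unit_gaussian(sigma_outer)
    return polarity * (centre - surround)

def subunit_offset(rho, phi):
    """Cartesian offset of a sub-unit centre from polar coordinates (phi in radians)."""
    return rho * np.cos(phi), rho * np.sin(phi)

centre_on = dog_kernel(21, sigma_outer=3.0, polarity=+1)
centre_off = dog_kernel(21, sigma_outer=3.0, polarity=-1)
```

Because both Gaussians are normalised to unit sum, each kernel integrates to zero, so a uniform image region produces no response and only local contrast drives the cell.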

The output of this network stage is a map of edge orientations in the scene (see Orientation Matrix in Fig. 1). The Orientation Matrix is obtained using the edge maps at different orientations from each S cell, where each pixel contains the angle information for each edge in the image. The second layer of the model is the “Border Ownership Pyramid” (see B cells in Fig. 1), fed with the Orientation Matrix. This layer simulates the Border Ownership cells observed in V26 (see Eq. (3)) as von Mises (VM) filters, a curved kernel first introduced in this context by Russell et al.27, at different orientations and different scales to achieve scale invariance.

where R0 represents the distance of the VM filter from the centre and will later define the radius of the opposite orientation of von Mises filters, grouping the information to detect the proto-object defining the range of detectable sizes; and tan−1 takes two arguments and returns values in radians in the range (-π,π).
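To make the role of R0 concrete, the sketch below builds a curved kernel whose response peaks at distance R0 from the centre, in the direction θ. The functional form used here (an exponential of a cosine divided by a modified Bessel function of order zero) is an assumption based on the von Mises filter of Russell et al.27 and may differ in detail from Eq. (3); the concentration parameter `rho`, the kernel extent and the final normalisation are hypothetical choices. The two-argument arctangent is `np.arctan2`, as described in the text.

```python
import numpy as np

def von_mises_kernel(r0, theta, rho=0.5, half_width=None):
    """Curved kernel peaking at radius r0 in direction theta (radians).

    Assumed form: exp(rho * r0 * cos(atan2(-y, x) - theta)) / I0(r - r0).
    """
    if half_width is None:
        half_width = 2 * int(r0)  # assumed extent: twice the radius
    y, x = np.mgrid[-half_width:half_width + 1, -half_width:half_width + 1]
    radius = np.sqrt(x.astype(float) ** 2 + y.astype(float) ** 2)
    angle = np.arctan2(-y, x)  # two-argument arctangent, in radians
    kernel = np.exp(rho * r0 * np.cos(angle - theta)) / np.i0(radius - r0)
    return kernel / kernel.max()  # scale so the peak equals 1

# Two filters with opposite orientations, as used to group a closed contour.
vm_right = von_mises_kernel(r0=8, theta=0.0)
vm_left = von_mises_kernel(r0=8, theta=np.pi)
```

With R0 = 8 the two kernels peak 8 pixels to the right and to the left of the centre respectively, so a pair of opposite filters responds most strongly to contours roughly 16 pixels apart, which is how R0 sets the range of detectable sizes in this sketch.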

The Border Ownership Pyramid is sensitive to closed contours in the input, discerning between dark objects on a light background and vice versa (see Eq. (5) and (4)).

\(\mathscr{B}_{\theta,D}^{k}\), the border ownership activity for a dark object on a light background, is given by:

where \(v_{\theta}(x, y)\) is the VM filter, the factor \(2^{j-k}\) normalises the \(v_{\theta}(x, y)\) operator across scales, * is the convolution operator, and \(\mathscr{S}_{\theta,D}^{k}(x,y)\) is the Simple Cell of a given orientation θ. \(\mathscr{B}_{\theta,L}^{k}\), the border ownership activity for a light object on a dark background, is given by:

The output from this layer is fed into the Grouping cells (G), where the information is pooled to estimate the figure-ground assignment at the pixel level, which determines whether a pixel belongs to the foreground or to the background.

The closed contours corresponding to the edges of the items will be detected by the VM filters, thereby defining the foreground. Each pixel corresponding to the foreground contains information on the orientation and strength of a vector pointing to the centre of the detected proto-object. The G signal is fed back to the B cells (see Eq. (5) and (4)), creating feedback which modulates the activity of the B cells response, creating their Border Ownership sensitivity (see Fig. 1). Recurrent connections allow for integration of local and global object information, resulting in fast scene segmentation.

Event driven figure-ground organisation model

The model we propose builds upon the foundations of the attention model we proposed22 and extends it, drawing inspiration from the model by Hu et al.21.

Frame-based vs. event-based orientation matrix computation

The CORF operator, the S cell, is composed of different aligned Centre-Surround cells computing the edge extraction (see Fig. 1). Every Simple cell exhibits sensitivity to a specific orientation. The integration of information from all the simple cells, each with its unique orientation, forms the Orientation Matrix that is fed into the Border Ownership Pyramid. The Orientation Matrix provides angle information of the edges present in the scene on a pixel-by-pixel basis to the system.
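One simple way to combine per-orientation edge maps into a per-pixel angle map is a winner-take-all rule: each pixel takes the angle of the orientation channel with the strongest response. The sketch below illustrates that idea only. The winner-take-all rule, the threshold and the function name are assumptions for the example, since the text does not specify how the channels are merged.

```python
import numpy as np

def orientation_matrix(edge_maps, angles_deg, threshold=0.0):
    """Merge oriented edge maps into a per-pixel angle map.

    edge_maps:  array of shape (n_orientations, H, W)
    angles_deg: one angle per orientation channel
    Returns an (H, W) array of angles in degrees, NaN where no edge is present.
    """
    edge_maps = np.asarray(edge_maps, dtype=float)
    angles = np.asarray(angles_deg, dtype=float)
    winner = edge_maps.argmax(axis=0)      # strongest channel per pixel
    strength = edge_maps.max(axis=0)
    result = angles[winner]                # fancy indexing returns a new array
    result[strength <= threshold] = np.nan
    return result

# Toy example: two channels (0 and 90 degrees) on a 2x2 image.
maps = np.array([[[1.0, 0.0], [0.0, 0.0]],
                 [[0.0, 2.0], [0.0, 0.0]]])
angle_map = orientation_matrix(maps, [0.0, 90.0])
```

In the toy example the top-left pixel is assigned 0°, the top-right pixel 90°, and the bottom row is marked as having no edge.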

The output of classical frame-based cameras, used by this model21, has a very different spatiotemporal structure from that generated by biological retinae. We therefore use event-driven cameras, whose output is much closer to that of biological eyes. Such cameras produce events only at edges, as a result of relative motion. Leveraging this inherent edge response, the implementation is based on the assumption that the output of event-driven cameras can be treated as the result of edge extraction. Our proposed event-based implementation thus bypasses the computation of edge extraction, effectively reducing the computational load.

No changes occur for static scenes seen by a stationary camera. Therefore, to generate events from a still picture, motion is introduced through small stereotyped circular camera movements, akin to the micro-saccades observed in a large number of vertebrate species64. These movements are produced by moving the cameras (“eyes”) of the iCub robot23. The edge map is then simply the integration of events over a time window, resulting in an event map of the scene (see Fig. S2(b)). The event-based Orientation Matrix is built from oriented edge maps obtained by convolving the event frames with oriented 2D Multivariate DoGs (see Eq. (8) and Eq. (9)) from 0° to 315° with a stride of 22.5° (14 orientations), where μ1 = μ2 = (0,0), Σ1 =\(\left[\begin{array}{cc}0.8&0\\ 0&0.4\end{array}\right]\) and Σ2=\(\left[\begin{array}{cc}0.8&0\\ 0&0.3\end{array}\right]\). These parameters were obtained empirically by characterising the model response against a baseline, an ideal circular input of different sizes (see Fig. S2). Using oriented 2D Multivariate DoGs eases the computation of oriented edges, yielding the Orientation Matrix while avoiding the complex implementation of a bank of oriented Centre-Surround cells, as done in the CORF63 implementation (see section “Frame-based Figure Ground Organisation Model”).
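The oriented filtering step can be sketched as follows, assuming that Eq. (8) and Eq. (9) describe the difference of two zero-mean bivariate normal densities with covariances Σ1 and Σ2, rotated to the desired orientation. That reading, the kernel half-width and the function names are assumptions for illustration, and the plain NumPy convolution stands in for whatever routine the released code uses.

```python
import numpy as np

SIGMA_1 = np.array([[0.8, 0.0], [0.0, 0.4]])  # covariance values from the text
SIGMA_2 = np.array([[0.8, 0.0], [0.0, 0.3]])

def gaussian_2d(points, cov):
    """Zero-mean bivariate normal density evaluated at points of shape (..., 2)."""
    inv = np.linalg.inv(cov)
    norm = 1.0 / (2.0 * np.pi * np.sqrt(np.linalg.det(cov)))
    mahal = np.einsum("...i,ij,...j->...", points, inv, points)
    return norm * np.exp(-0.5 * mahal)

def oriented_dog(theta_deg, half=3):
    """Difference of two rotated bivariate Gaussians (assumed form of the oriented DoG)."""
    t = np.deg2rad(theta_deg)
    rot = np.array([[np.cos(t), -np.sin(t)], [np.sin(t), np.cos(t)]])
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    pts = np.stack([x, y], axis=-1).astype(float)
    return (gaussian_2d(pts, rot @ SIGMA_1 @ rot.T)
            - gaussian_2d(pts, rot @ SIGMA_2 @ rot.T))

def convolve_same(frame, kernel):
    """Zero-padded 2D convolution returning an output the size of the input frame."""
    kh, kw = kernel.shape
    padded = np.pad(frame, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    flipped = kernel[::-1, ::-1]
    out = np.zeros(frame.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + frame.shape[0], j:j + frame.shape[1]]
    return out

def oriented_edge_maps(event_frame, angles_deg):
    """One filtered map per requested orientation, stacked along axis 0."""
    return np.stack([convolve_same(event_frame, oriented_dog(a)) for a in angles_deg])
```

In this sketch a kernel rotated by 180° is identical to the unrotated one, so filtering alone cannot tell opposite edge directions apart; some additional cue, such as event polarity, is needed for that.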

To distinguish between opposite angles (for example, 0° and 180°), events with opposite polarities are split into separate event frames, yielding the two opposite sides of the item’s contour. Such a separation happens naturally when an object moves, as the pixels on one side of the object detect an illumination increase and those on the other side a decrease. The edge maps at different orientations are then used to create the Orientation Matrix, and they provide input to the Border Ownership Pyramid layer.
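The polarity split can be sketched as a simple accumulation of events into two frames over a time window. The event layout `(x, y, t, polarity)` with polarity in {+1, −1}, the fixed time window and the function name are assumptions made for this example and do not describe the data format of the iCub event-driven pipeline.

```python
import numpy as np

def accumulate_events(events, shape, t_start, t_end):
    """Integrate events within [t_start, t_end) into ON and OFF event frames.

    events: iterable of (x, y, t, polarity), polarity > 0 for ON, otherwise OFF
    shape:  (height, width) of the sensor
    """
    on_frame = np.zeros(shape, dtype=float)
    off_frame = np.zeros(shape, dtype=float)
    for x, y, t, polarity in events:
        if t_start <= t < t_end:
            target = on_frame if polarity > 0 else off_frame
            target[y, x] += 1.0  # count events per pixel
    return on_frame, off_frame

# Toy stream: two ON events at pixel (x=1, y=0), one OFF event at (x=2, y=1),
# and one event that falls outside the time window.
stream = [(1, 0, 0.001, +1), (1, 0, 0.002, +1), (2, 1, 0.003, -1), (0, 0, 0.500, +1)]
on_frame, off_frame = accumulate_events(stream, shape=(3, 4), t_start=0.0, t_end=0.1)
```

Each frame can then be filtered separately, so that the leading and trailing sides of a moving contour end up in different orientation channels.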

Using the event-based approach obviates the use of the CORF computations, thus bypassing the Centre-Surround computation required for edge extraction. This results in a reduced computational load and lower system latency. The event frame accumulates events for a period of time dependent on image contrast. If the scene exhibits high contrast changes, this will result in a higher number of events being produced, determining the sparsity or density of the event collection within a frame.

Border ownership and grouping pyramids

We retain the overall architecture of the Hu et al. model21 for the Border Ownership and Grouping cells (see Fig. 1). This implementation of the system preserves all the equations presented in section “Frame-based Figure Ground Organisation Model”. In both cases, feedforward and feedback connectivity produce a figure-ground map and a grouping map, representing the figure-ground assignment based on proto-object detection and on grouping cell activity, respectively. The aim of the foreground-background organisation is not to reject background clutter, but to successfully identify the inside and outside of any object. Our model is implemented in Python for use in online robotic applications.

Parameter choices

The implementation has been developed in Python and MATLAB for offline analysis. See https://github.com/event-driven-robotics/figure-ground-organisation or section “Code availability” for the link to the code.

The first analysis investigates the response to various item sizes using different values [2px, 4px, 8px, 10px, 12px] for the radius R0 of the Grouping cells, which defines the kernel radius and determines the range of object sizes that the model can represent (see Eq. (3)). The response is evaluated qualitatively and quantitatively using simple circle stimuli on a white canvas as the baseline for the model response (see Fig. S2(a)). The same analysis was carried out for the frame-based implementation, with similar results, which allows us to compare our results with those from the Hu et al. study21. In the range of circle sizes presented to the models (from 2 cm to 8 cm in diameter, see Fig. S3), R0=8 is the value that detects the greatest number of circles (red response in the Grouping Maps in Fig. S3) for both the FBFG and EDFG implementations. This value was chosen by favouring the SSIM over the MSE results, prioritising the localisation of responses at specific points across the visual field rather than a global assessment. The concept of an object changes with the detectable sizes, which depend on the radius of the Grouping cells. The parameters used for both implementations are shown in Table T1.
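The two metrics used for this comparison can be sketched as below. The MSE is the standard definition; the SSIM shown here is a simplified single-window (global) variant with the commonly used constants 0.01 and 0.03, whereas the usual SSIM averages the same expression over local sliding windows, so its values will differ from those of a library implementation. The helper `pick_best_r0` and its input dictionary are hypothetical and only illustrate selecting R0 by the highest SSIM.

```python
import numpy as np

def mse(a, b):
    """Mean squared error between two maps of equal shape."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.mean((a - b) ** 2))

def global_ssim(a, b, data_range=1.0):
    """Single-window SSIM computed over the whole image (simplified variant)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_a, mu_b = a.mean(), b.mean()
    var_a, var_b = a.var(), b.var()
    cov = ((a - mu_a) * (b - mu_b)).mean()
    numerator = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    denominator = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return float(numerator / denominator)

def pick_best_r0(ssim_by_r0):
    """Return the R0 whose response has the highest SSIM against the reference."""
    return max(ssim_by_r0, key=ssim_by_r0.get)

# Toy comparison: a reference map and a response with one spurious pixel.
reference = np.zeros((10, 10))
reference[2:5, 2:5] = 1.0
response = reference.copy()
response[9, 9] = 1.0
```

Calling `mse(reference, response)` and `global_ssim(reference, response)` on the toy maps shows how a single spurious pixel moves each metric away from its ideal value (0 for the MSE, 1 for the SSIM).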

Data availability

Link to the data: https://doi.org/10.5281/zenodo.12581712.

Code availability

Link to the code: https://github.com/event-driven-robotics/figure-ground-organisation. Github https://doi.org/10.5281/zenodo.14644515.

References

Craft, E., Schütze, H., Niebur, E. & von der Heydt, R. A neural model of figure–ground organization. J. Neurophysiol. 97, 4310–4326 (2007).

Bregman, A. S. et al. Auditory scene analysis: The perceptual organization of sound. (MIT Press,1994).

Pawluk, D., Kitada, R., Abramowicz, A., Hamilton, C. & Lederman, S. J. Figure/ground segmentation via a haptic glance: attributing initial finger contacts to objects or their supporting surfaces. IEEE Trans. Haptics 4, 2–13 (2010).

Hu, B., Kane-Jackson, R. & Niebur, E. A proto-object based saliency model in three-dimensional space. Vis. Res. 119, 42–49 (2016).

Wagemans, J. et al. A century of gestalt psychology in visual perception: I. perceptual grouping and figure–ground organization. Psychological Bull. 138, 1172 (2012).

Zhou, H., Friedman, H. S. & Von Der Heydt, R. Coding of border ownership in monkey visual cortex. J. Neurosci. 20, 6594–6611 (2000).

Posch, C., Matolin, D. & Wohlgenannt, R. A QVGA 143 dB dynamic range frame-free PWM image sensor with lossless pixel-level video compression and time-domain CDS. In IEEE Journal of Solid-State Circuits, 46, 259–275 (2011).

Guo, Z., Hou, Y., Xiao, R., Li, C. & Li, W. Motion saliency based hierarchical attention network for action recognition. Multimed. Tools Appl. 82, 4533–4550 (2023).

Zong, M., Wang, R., Ma, Y. & Ji, W. Spatial and temporal saliency based four-stream network with multi-task learning for action recognition. Appl. Soft Comput. 132, 109884 (2023).

Monforte, M., Arriandiaga, A., Glover, A. & Bartolozzi, C. Exploiting Event Cameras For Spatio-temporal Prediction Of Fast-changing Trajectories. In 2020 2nd IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), 108–112. IEEE, (2020).

Schnider, Y. et al. Neuromorphic Optical Flow And Real-time Implementation With Event Cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 4128–4137 (2023).

Vitale, A., Renner, A., Nauer, C., Scaramuzza, D. & Sandamirskaya, Y. Event-driven Vision And Control For uavs On A Neuromorphic Chip. In 2021 IEEE International Conference on Robotics and Automation (ICRA), 103–109. IEEE, (2021).

Paulun, L., Wendt, A. & Kasabov, N. A retinotopic spiking neural network system for accurate recognition of moving objects using neucube and dynamic vision sensors. Front. Computational Neurosci. 12, 42 (2018).

VanRullen, R. & Thorpe, S. J. Spatial attention in asynchronous neural networks. Neurocomputing 26, 911–918 (1999).

Eklundh, J.-O., Uhlin, T., Nordlund, P. & Maki, A. Active vision and seeing robots. In Robotics Research: The Seventh International Symposium, pages 416–427. Springer, (1996).

Minaee, S. et al. Image segmentation using deep learning: A survey. IEEE Trans. pattern Anal. Mach. Intell. 44, 3523–3542 (2021).

Arsenio, A. M. Figure/ground segregation from human cues. In 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)(IEEE Cat. No. 04CH37566), 4, 3244–3250. IEEE, (2004).

Comaniciu, D. & Meer, P. Robust analysis of feature spaces: Color image segmentation. In Proceedings of IEEE computer society conference on computer vision and pattern recognition, 750–755. IEEE, (1997).

Mishra, A., Aloimonos, Y. & Fermuller, C. Active segmentation for robotics. In 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, 3133–3139. IEEE, (2009).

Censi, A. & Scaramuzza, D. Low-latency event-based visual odometry. In 2014 IEEE International Conference on Robotics and Automation (ICRA), 703–710. IEEE, (2014).

Hu, B., von der Heydt, R. & Niebur, E. Figure-ground organization in natural scenes: Performance of a recurrent neural model compared with neurons of area v2. eNeuro, 6, 3 (2019).

Iacono, M. et al. Proto-object based saliency for event-driven cameras. In 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 805–812. IEEE, (2019).

Bartolozzi, C. et al. Embedded neuromorphic vision for humanoid robots. In CVPR 2011 workshops, 129–135. IEEE, (2011).

Fowlkes, C. C., Martin, D. R. & Malik, J. Local figure–ground cues are valid for natural images. J. Vis. 7, 2–2 (2007).

Martin, D., Fowlkes, C., Tal, D. & Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proc. 8th Int’l Conf. Computer Vision, 2, 416–423 (2001).

Martin, D., Fowlkes, C., Tal, D. & Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings Eighth IEEE International Conference on Computer Vision. ICCV 2001, 2, 416–423. IEEE, (2001).

Russell, A. F., Mihalaş, S., von der Heydt, R., Niebur, E. & Etienne-Cummings, R. A model of proto-object based saliency. Vis. Res. 94, 1–15 (2014).

Rensink, R. A. The dynamic representation of scenes. Vis. Cognition 7, 17–42 (2000).

Driver, J., Davis, G., Russell, C., Turatto, M. & Freeman, E. Segmentation, attention and phenomenal visual objects. Cognition 80, 61–95 (2001).

Nakayama, K., Shimojo, S. & Silverman, G. H. Stereoscopic depth: its relation to image segmentation, grouping, and the recognition of occluded objects. Perception 18, 55–68 (1989).

Nakayama, K., He, Z. J. & Shimojo, S. Visual Surface Representation: A Critical Link Between Lower-level And Higher-level Vision. In S. Kosslyn and D. Osherson, editors, Visual Cognition: An Invitation to Cognitive Science, volume 2, chapter 1, 1–70. The MIT Press, 2nd edition, (1995).

Rubin, E. et al. Visuell wahrgenommene Figuren. (Gyldendalske, Copenhagen, 1921).

Koffka, K. & Cabral, Á. Princípios de psicologia da Gestalt. (Cultrix São Paulo, 1975).

Franken, T. P. & Reynolds, J. H. Columnar processing of border ownership in primate visual cortex. eLife 10, e72573 (2021).

Martin, A. B. & von der Heydt, R. Spike synchrony reveals emergence of proto-objects in visual cortex. J. Neurosci. 35, 6860–6870 (2015).

Wagatsuma, N., von der Heydt, R. & Niebur, E. Spike synchrony generated by modulatory common input through NMDA-type synapses. J. Neurophysiol. 116, 1418–1433 (2016).

Wagatsuma, N., Hu, B., von der Heydt, R. & Niebur, E. Analysis of spiking synchrony in visual cortex reveals distinct types of top-down modulation signals for spatial and object-based attention. PLoS Computational Biol. 17, e1008829 (2021).

Herault, L. & Horaud, R. Figure-ground discrimination: a combinatorial optimization approach. IEEE Trans. Pattern Anal. Mach. Intell. 15, 899–914 (1993).

Stricker, M. & Leonardis, A. Figure-ground Segmentation Using Tabu Search. In Proceedings of International Symposium on Computer Vision-ISCV, 605–610. IEEE, (1995).

Pham, V.-Q., Takahashi, K. & Naemura, T. Bounding-box Based Segmentation With Single Min-cut Using Distant Pixel Similarity. In 2010 20th international conference on pattern recognition, 4420–4423. IEEE, (2010).

Yang, W., Cai, J., Zheng, J. & Luo, J. User-friendly interactive image segmentation through unified combinatorial user inputs. IEEE Trans. Image Process. 19, 2470–2479 (2010).

Lempitsky, V., Kohli, P., Rother, C. & Sharp, T. Image Segmentation With A Bounding Box Prior. In 2009 IEEE 12th international conference on computer vision, 277–284. IEEE, (2009).

Hsiao, Y.-M. & Chang, L.-W. Unsupervised Figure-ground Segmentation Using Edge Detection And Game-theoretical Graph-cut Approach. In 2015 14th IAPR International Conference on Machine Vision Applications (MVA), 353–356. IEEE, (2015).

Liang, Y., Zhang, M. & Browne, W. N. Figure-ground Image Segmentation Using Genetic Programming And Feature Selection. In 2016 IEEE Congress on Evolutionary Computation (CEC), 3839–3846. IEEE, (2016).

Naha, S. & Wang, Y. Object Figure-ground Segmentation Using Zero-shot Learning. In 2016 23rd International Conference on Pattern Recognition (ICPR), 2842–2847. IEEE, (2016).

Nishimura, H. & Sakai, K. Determination of border-ownership based on the surround context of contrast. Neurocomputing 58, 843–8 (2004).

Ramenahalli, S., Mihalas, S. & Niebur, E. Local spectral anisotropy is a valid cue for figure-ground organization in natural scenes. Vis. Res. 103, 116–126 (2014).

Hu, B. & Niebur, E. A recurrent neural model for proto-object based contour integration and figure-ground segregation. J. computational Neurosci. 43, 227–242 (2017).

Sun, Y. & Fisher, R. Hierarchical selectivity for object-based visual attention. In International Workshop on Biologically Motivated Computer Vision, 427–438. Springer, (2002).

Grossberg, S. & Mingolla, E. Neural Dynamics Of Perceptual Grouping: Textures, Boundaries, And Emergent Segmentations. In The adaptive brain II, 143–210. Elsevier, (1987).

Itti, L., Koch, C. & Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. pattern Anal. Mach. Intell. 20, 1254–1259 (1998).

Ghosh, S. et al. Event-driven proto-object based saliency in 3d space to attract a robot’s attention. Sci. Rep. 12, 7645 (2022).

D’Angelo, G., Perrett, A., Iacono, M., Furber, S. & Bartolozzi, C. Event driven bio-inspired attentive system for the icub humanoid robot on spinnaker. Neuromorphic Comput. Eng. 2, 024008 (2022).

Iacono, M., Weber, S., Glover, A. & Bartolozzi, C. Towards event-driven object detection with off-the-shelf deep learning. In 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 1–9. IEEE, (2018).

Rebecq, H., Gehrig, D. & Scaramuzza, D. Esim: an open event camera simulator. In Conference on Robot Learning, pages 969–982. PMLR, (2018).

Szeliski, R. Computer Vision: Algorithms And Applications. (Springer Nature, 2022).

Jiang, Y. et al. Event-based Low-illumination Image Enhancement. IEEE Transactions on Multimedia, (2023).

Sorbaro, M., Liu, Q., Bortone, M. & Sheik, S. Optimizing the energy consumption of spiking neural networks for neuromorphic applications. Front. Neurosci. 14, 662 (2020).

Liu, Q. et al. Live demonstration: face recognition on an ultra-low power event-driven convolutional neural network ASIC. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, pages 0–0, (2019).

Davies, M. et al. Loihi: A neuromorphic manycore processor with on-chip learning. Ieee Micro 38, 82–99 (2018).

Furber, S. B., Galluppi, F., Temple, S. & Plana, L. A. The spinnaker project. Proc. IEEE 102, 652–665 (2014).

Moradi, S., Qiao, N., Stefanini, F. & Indiveri, G. A scalable multicore architecture with heterogeneous memory structures for dynamic neuromorphic asynchronous processors (dynaps). IEEE Trans. Biomed. circuits Syst. 12, 106–122 (2017).

Azzopardi, G., Rodríguez-Sánchez, A., Piater, J. & Petkov, N. A push-pull corf model of a simple cell with antiphase inhibition improves SNR and contour detection. PLoS One 9, e98424 (2014).

Martinez-Conde, S. & Macknik, S. L. Fixational eye movements across vertebrates: comparative dynamics, physiology, and perception. J. Vis. 8, 28–28 (2008).

Acknowledgements

G.D. acknowledges the financial support from the European Union’s HORIZON-MSCA-2023-PF-01-01 research and innovation programme under the Marie Skłodowska-Curie grant agreement ENDEAVOUR No 101149664.

Author information

Contributions

G.D. (Corresponding Author) conceived the main idea behind the work, developed the theory to build the implementation, and contributed to experiments, result analysis, and the entire manuscript writing. S.V. implemented the model and ran the initial characterisation. M.I. helped with the implementation of the ground truth segmentation conversion experiment. A.G. helped and supervised the entire manuscript, also analysing and discussing the results and suggesting parts of the comparison experiments. E.N. helped and supervised the entire manuscript, also analysing and discussing the results. C.B. conceived the main idea behind the work and supervised the entire work from the start, giving constant feedback and suggestions for all the experiments, and also analysing and discussing the results.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Alejandro Rodriguez, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

D’Angelo, G., Voto, S., Iacono, M. et al. Event-driven figure-ground organisation model for the humanoid robot iCub. Nat Commun 16, 1874 (2025). https://doi.org/10.1038/s41467-025-56904-9

DOI: https://doi.org/10.1038/s41467-025-56904-9