Abstract

How does the motor cortex combine simple movements (such as single finger flexion/extension) into complex movements (such as hand gestures, or playing the piano)? To address this question, motor cortical activity was recorded using intracortical multi-electrode arrays in two male people with tetraplegia as they attempted single, pairwise and higher-order finger movements. Neural activity for simultaneous movements was largely aligned with linear summation of corresponding single finger movement activities, with two violations. First, the neural activity exhibited normalization, preventing a large magnitude with an increasing number of moving fingers. Second, the neural tuning direction of weakly represented fingers changed significantly as a result of the movement of more strongly represented fingers. These deviations from linearity resulted in non-linear methods outperforming linear methods for neural decoding. Simultaneous finger movements are thus represented by the combination of individual finger movements by pseudo-linear summation.

Introduction

Coordinated finger movements are fundamental to many everyday living activities, enabling tasks from the simple, such as picking up an apple, to the complex, such as playing a Chopin nocturne. While the human hand exhibits more than 20 degrees of freedom1, naturally occurring finger movements are structured2,3. Indeed, many complex hand movements can be broken down into simple combinations of flexion/extension or abduction/adduction movements of individual finger joints. The principles that govern how the neural representation of these individual movements is combined to produce complex, multi-finger movements remain largely unknown.

One hypothesis is that neural representation for a movement is equal to a simple combination of the neural representation of its parts (‘compositional coding’). The simplest compositional hypothesis for multiple finger movements is the linear summation of constituent single finger movements (Fig. 1A). This hypothesis has been successful in explaining the motor cortical representation of homologous movements across limbs as the linear summation of a movement-specific and a limb-specific component4,5.

A–C Hypotheses for the neural geometry of multiple finger movements in neural state space, where each axis corresponds to the activity of a neuron. A represents compositional coding where the neural activity for multiple finger movements is equal to the activity for single finger movements combined by linear summation. B Neural activity for multiple finger movements is distinct from single finger movement activities, such that the resulting activity is largely orthogonal to the subspace spanned by single finger movements in the neural state space. C represents a variant of compositional coding in (A), where the single finger movements are combined by pseudo-linear summation. D Research setup. Intracortical neural activity is recorded using two Utah arrays placed in the hand-knob area of the dominant precentral gyrus while the participant attempts finger movements cued using an animated hand in 3D.

An alternative hypothesis is that the neural representation of multiple finger movements is distinct and not predictable in a simple way from the neural representation of constituent single-finger movements. This hypothesis can be geometrically described in the neural state space, with the axes corresponding to the activity of neurons in the circuit. In this model, the neural activity representing single and multiple finger movements may exist in largely orthogonal subspaces (Fig. 1B), allowing a large number of movements to be distinctly represented along unique neural dimensions.

The compositional coding hypothesis in its simplest form predicts that the magnitude of neural activity increases with the number of moving fingers, which may be unrealistic in a biological neural circuit, in which firing rates of individual neurons reach a saturation limit. To address this potential issue, a more sophisticated compositional hypothesis adjusts the initial simple linear summation by adding magnitude normalization (“pseudo-linear” summation, Fig. 1C). This revised compositional hypothesis limits representational capacity as a large number of movements are constrained to a low dimensional space spanned by single finger movements.

While previous studies have presented evidence for overlapping neural representations of multiple finger movements and single finger movements6,7,8,9,10,11, a detailed mathematical characterization of the neural geometry at the level of spiking neural ensembles has not been done. We investigated the hypotheses outlined above using intracortical multi-electrode array recordings from the human premotor cortex as research participants with paralysis attempted single, paired, or higher-order finger movements (Fig. 1D) designed to explore a broad parameter space including both natural gestures and arbitrary combinations of flexion and extension.

Our results were most consistent with the pseudo-linear compositional hypothesis in which the neural activity for multiple-finger movements was aligned with the linear summation of constituent single-finger movement activities, but the magnitude was lower compared to linear summation (consistent with normalization).

Results

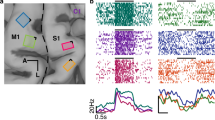

Intracortical neural recordings were obtained from two participants in the BrainGate2 pilot clinical trial (‘T5’ and ‘T11’) as they attempted single finger and multi-finger movements. Both T5 (69 years old) and T11 (36 years old) are right-handed men, with tetraplegia secondary to a high-level spinal cord injury. Both had two 96-channel intracortical Utah arrays placed in the ‘hand knob’ area of the left (dominant) precentral gyrus. All research sessions focused on the fingers of the right hand.

Neural geometry of single-finger movements

We began by characterizing the neural representation of single finger movements. Participant T5 attempted movements of the right hand, starting from a neutral rest position and ending in either attempted flexion or extension of a single finger. The movement for each trial was cued using a 3D animated hand and consisted of preparatory (1.5 s) and move (1 s) periods (Fig. 1D, Supplementary fig. 1). Movements were repeated across three research sessions resulting in a total of ~1000 trials.

Neural activity on single electrodes, measured by the number of threshold crossing events, differed across the movement conditions, confirming tuning for single finger movements (Fig. 2A). Both finger velocity and finger position were represented in the neural activity (Supplementary fig. 2). Neural population activity occupied a low-dimensional space, with five principal components (out of 576 ambient dimensions = concatenation of 192 channels of trial-averaged activity across three sessions) capturing > 90% of the variance (Fig. 2B).

A Peristimulus time histograms of recorded neural activity from 3 example channels during attempted flexion and extension of individual fingers on the contralateral (right) hand (lines show the average for different conditions, shaded regions show standard error). B Cumulative fraction of variance explained by the principal components of the trial and time-averaged neural activity. The top five components captured > 90% of the variance (ambient dimensionality 576 resulting from concatenation of 192 channels across three sessions), suggesting a low dimensional representation. C Schematic description of two metrics for characterizing the geometry of different movements in neural state space, where each axis corresponds to the activity of a particular neuron. Representational dissimilarity matrix (RDM) is estimated by measuring Euclidean distance (dij) between pairs of movements. Relative arrangement of the direction along which each finger modulates the activity in the neural state space is given by the cosine of angle (θ) between lines joining flexion-extension movements of a given finger. D RDM for neural activity during the hold period, averaged across three sessions for participant T5. Black indicates higher similarity. Off-diagonal elements were significantly larger than zero (p < 0.001). Significance level was computed by comparing the observed distances with a null distribution of 1000 distances. These null distribution distances were calculated by permuting neural activity across the two movements. E Similarity of the modulation directions for pairs of fingers. Nearby fingers have similar modulation direction, except for the thumb. F, G Visualization of neural activity using cross validated Targeted Dimensionality Reduction (TDR) followed by PCA. 
TDR directions identified by regressing from the kinematic variables (five dimensions, one for each finger, +1 for flexion/ −1 for extension, and 0 for rest) to trial & time-averaged neural activity in the last 0.4 s of each trial. Colors indicate fingers, line style indicates different movements (flexion/extension) and black dots indicate the ‘go’ cue. Arrows indicate the evolution of neural activity during the course of the trial. Two views of the 3D geometry are shown. Source data are provided in the Source Data file.

The geometry of different finger positions in the neural state space was assessed using a Representational Dissimilarity matrix (RDM12), estimated by measuring the cross-validated Euclidean distance of the population activity between all pairs of movements (flexion/extension across fingers) after trial and time averaging (see Methods, Fig. 2C). The distances between flexion and extension movements were large, resulting in a block diagonal structure (Fig. 2D). This structure is similar to the RDM of the kinematics and muscle activity during finger movements in able-bodied people11,13,14.
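The idea behind a cross-validated distance can be illustrated with a minimal sketch. The exact estimator is described in the Methods and is not reproduced here; the even/odd trial split, the use of the squared distance, the function names, and the simulated data below are all assumptions made for illustration. The difference between condition means is estimated on two independent halves of the trials and the two estimates are multiplied, so trial noise does not inflate the distance between identical conditions.

```python
import random

def mean_vector(trials):
    """Average a list of trials (each a list of per-channel values)."""
    n = len(trials)
    return [sum(channel) / n for channel in zip(*trials)]

def cv_squared_distance(trials_a, trials_b):
    """Cross-validated squared Euclidean distance between two conditions.

    Trials are split into even- and odd-indexed halves. The mean difference
    between conditions is computed in each half, and the dot product of the
    two differences is returned. Noise in the two halves is independent, so
    the estimate is centered on zero when the conditions do not differ
    (individual estimates can be negative).
    """
    diff_1 = [a - b for a, b in zip(mean_vector(trials_a[0::2]),
                                    mean_vector(trials_b[0::2]))]
    diff_2 = [a - b for a, b in zip(mean_vector(trials_a[1::2]),
                                    mean_vector(trials_b[1::2]))]
    return sum(x * y for x, y in zip(diff_1, diff_2))

def simulate(mean, n_trials, rng, noise=1.0):
    """Hypothetical data: Gaussian noise around a fixed mean pattern."""
    return [[m + rng.gauss(0.0, noise) for m in mean] for _ in range(n_trials)]

rng = random.Random(0)
n_channels = 50
flexion_mean = [1.0] * n_channels
extension_mean = [-1.0] * n_channels

trials_flex = simulate(flexion_mean, 40, rng)
trials_flex_repeat = simulate(flexion_mean, 40, rng)
trials_ext = simulate(extension_mean, 40, rng)

# True squared distance is 50 channels * (2.0 ** 2) = 200.
d_different = cv_squared_distance(trials_flex, trials_ext)
# Same underlying pattern, so the estimate should be close to zero.
d_same = cv_squared_distance(trials_flex, trials_flex_repeat)
```

A plain (non-cross-validated) squared distance would be positively biased for `d_same`, because squared noise terms never cancel; that bias is what the split is meant to avoid.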

In addition to the RDM, the angle between the lines joining different movement conditions was used to evaluate the relative arrangement of the directions along which finger movements modulate the activity in the neural state space (Fig. 2E). The cosine of this angle measures the correlation between the corresponding neural activity patterns across neurons. First, within each finger, the neural activity for flexion and extension evolved in opposite directions (Supplementary fig. 3A). Second, within each movement type (flexion or extension), nearby fingers evolved in similar directions (Supplementary fig. 3B, C). Third, the lines joining flexion and extension movements were more aligned for nearby fingers (as expected), but the thumb was anti-correlated to the little finger, which was not expected based on previously published studies15,16.

To intuitively visualize these features, the neural activity was projected into a three-dimensional space identified using five-dimensional cross-validated targeted dimensionality reduction (TDR) followed by PCA (see Methods). For each finger, neural activity corresponding to flexion and extension movements evolved along diametrically opposite directions from the origin, and nearby fingers occupied similar directions. In the top two PCs, thumb flexion overlapped with little finger extension, and thumb extension overlapped with little finger flexion (Fig. 2F), explaining the negative correlation between thumb and little finger directions in Fig. 2E. However, the neural activity for the thumb and little finger was separated in the third PC dimension (Fig. 2G), maintaining the ability to distinguish between them.

This structure was preserved across left and right hands and was not influenced by different palm orientations, with the limb-specific and/or pose-specific component linearly translating the activity in the neural state space (Supplementary Fig. 4; ref. 17).

Neural activity for multi-finger movement is consistent with the sum of its parts

Given the neural representation of single finger movements, how are they combined to represent multi-finger movements? First, we confirmed that attempted multi-finger movements are robustly represented in our neural recordings. For a collection of 38 natural hand movements consisting of gestures from the American Sign Language (ASL) alphabet and some stereotypical single/multiple finger movements (Fig. 3A), a linear decoder achieved 76% classification accuracy (chance 2.6%) using the neural activity recorded from T5 (Fig. 3B). Since most of these gestures have correlated movements for nearby fingers and do not span the complete space of finger movements, we repeated this analysis with a collection of 80 combinatorial finger movements. For these combinatorial movements, each finger was independently cued to be either flexed, extended, or at rest. To ensure that enough repetitions of each combination could be collected within a single research session, the ring and little fingers were linked together (referred to throughout as a single “finger” entity, the “ring-little finger”) to reduce the number of movement conditions to 80. Certain gestures, such as flexion of the thumb, index, and middle finger with extension of the ring/little fingers, require improbable coordination patterns of finger movements and may be difficult to perform, resulting in “non-natural” movements. A linear decoder achieved 40% classification accuracy (chance: 1.3%, Fig. 3C). This shows that various natural and non-natural multi-finger movements are distinctly represented in the hand area of the premotor cortex, permitting a study of their neural geometry.
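The condition count and chance levels quoted above can be checked with a short enumeration. This is a sketch under one assumption that the text does not state explicitly: that the single combination excluded from the 3^4 = 81 possibilities is the one in which every finger group is at rest. The group and state labels are illustrative names only.

```python
from itertools import product

STATES = ("flex", "rest", "extend")
GROUPS = ("thumb", "index", "middle", "ring-little")

# Every assignment of a state to each finger group, dropping the
# all-rest combination (assumed to be the excluded condition).
conditions = [
    combo
    for combo in product(STATES, repeat=len(GROUPS))
    if any(state != "rest" for state in combo)
]

n_conditions = len(conditions)            # 3**4 - 1
chance_combinatorial = 1 / n_conditions   # chance level for the combinatorial set
chance_gestures = 1 / 38                  # chance level for the 38 natural movements
```

With equal trial counts per condition, chance accuracy is simply one over the number of conditions, which gives the roughly 1.3% and 2.6% figures reported for the two movement sets.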

A Neural activity was recorded as T5 attempted 38 hand movements on the right hand, consisting of gestures from the American Sign Language (ASL) alphabet & single finger movements. Each movement was attempted 22 times in trials consisting of 1 s prep, 1 s move, and 2 s hold periods. Copyright © Meta Platforms Technologies, LLC and its affiliates. All rights reserved. B A confusion matrix, where the (i, j)th entry is colored by the percentage of trials where movement j was decoded when movement i was cued. A linear support vector classifier was used for decoding. Classification accuracy was 76%, substantially above the chance accuracy of 2.6%. C The same decoding analysis was applied to a collection of 80 combinatorial gestures, where four finger groups were varied independently (the ring and small fingers constrained to the same movement). Each finger group was independently cued to be flexed, extended, or idle (15 trials were collected per condition, with a 1 s preparatory and a 2 s movement period). Movements are indicated with the inset images, with flexion, idle, and extension indicated with black, gray, and white colors, respectively. The classification accuracy was 40%, substantially above the chance accuracy of 1.3%. Source data are provided in the Source Data file.

Next, we tested the compositional coding hypothesis for multi-finger movements, beginning with the simultaneous pairwise movement of finger groups. Two types of finger groups were considered: thumb versus all other fingers (Fig. 4A, B with participants T5 and T11 respectively) and individual fingers (Fig. 4C with T5). Each finger group was independently cued for flexion/extension or rest. The neural subspace that was most modulated by each pairwise finger group movement was visualized with TDR after trial averaging and removing the condition-invariant signal. Activity for pairwise finger movements evolved in a region of the neural state space that was ‘in-between’ that of the corresponding single finger movements, as expected from linear summation of the constituent movements. Next, we assessed the degree of linearity quantitatively by fitting a linear summation model to a large set of 80 combinatorial finger movements, with four independently varying finger groups. A linear model was fit to all movement conditions using an eight-dimensional one-hot encoding of kinematics, with separate dimensions for each finger group and movement direction. For each finger, we found that the magnitude of the linear model weights was higher for flexion compared to extension, and for both types of movements, the magnitude was higher for thumb and ring-little fingers compared to index and middle fingers (Fig. 4D). The linear model predicted neural activity significantly better than chance (Fig. 4E) and captured 56% of the variance (presented later in Fig. 5E).
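To make the eight-dimensional encoding concrete, the following sketch builds the kinematic vector for a movement and shows the defining property of the linear summation model: the prediction for a combined movement equals the sum of the predictions for its single finger-group parts. The ordering of the eight dimensions, the function names, and the three-channel weight matrix are hypothetical and chosen only for illustration; they are not the fitted values from the study.

```python
GROUPS = ("thumb", "index", "middle", "ring-little")
DIRECTIONS = ("flex", "extend")

def encode(movement):
    """Map {group: 'flex' | 'extend'} to an 8-dim 0/1 vector.

    Finger groups at rest are simply left out of the dictionary, so both of
    their dimensions stay at 0.
    """
    return [1.0 if movement.get(group) == direction else 0.0
            for group in GROUPS for direction in DIRECTIONS]

def predict_linear(weights, u):
    """Linear encoding model: one row of 8 coefficients per channel."""
    return [sum(w * x for w, x in zip(row, u)) for row in weights]

# Hypothetical regression coefficients for three channels.
weights = [
    [0.9, -0.7, 0.2, -0.1, 0.1, -0.2, 0.6, -0.5],
    [0.1, 0.3, 0.4, -0.3, 0.2, 0.1, -0.2, 0.4],
    [-0.5, 0.2, 0.0, 0.1, 0.3, -0.1, 0.7, -0.6],
]

u_both = encode({"thumb": "flex", "index": "extend"})
pred_thumb = predict_linear(weights, encode({"thumb": "flex"}))
pred_index = predict_linear(weights, encode({"index": "extend"}))
pred_both = predict_linear(weights, u_both)

# Under the linear model, the combined prediction is exactly the sum of parts.
pred_sum_of_parts = [t + i for t, i in zip(pred_thumb, pred_index)]
```

Because each finger group contributes at most one active dimension, the number of non-zero entries in the encoding equals the number of moving finger groups, which is why a purely linear model predicts larger activity as more groups move.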

A Participant T5’s neural activity for all movement combinations of two “finger groups” 1. the thumb, and 2. all other fingers linked together. For each movement condition, threshold crossings were trial-averaged and concatenated (over the electrode dimension) across two sessions. Neural activity visualized after subtracting the condition invariant signal (CIS) and projecting out the associated neural dimensions, followed by cross-validated targeted dimensionality reduction (TDR, see Methods). Lines indicate trajectories for each condition which start at rest, move outwards, and settle at a final position representing the target gesture. Trajectories where only one finger group moves are colored, while gray lines correspond to conditions where both groups move in combination (as shown by inset images). Copyright © Meta Platforms Technologies, LLC and its affiliates. All rights reserved. B Same as (A), using data from one session in participant T11 (see Methods). T11 followed the same experimental protocol for the pairwise finger movement task as T5. C Same as (A), using data from five sessions for pairwise combinations of four finger groups (thumb, index, middle, and ring-little combined). D A linear encoding model was fit to predict trial-averaged neural activity recorded from T5 during the hold period (after removing the CIS) using the finger movement kinematics. Kinematics were represented with an eight-dimensional one-hot encoding, with each dimension corresponding to the presence (=1) or the absence of (=0) of a combination of finger and movement type (flexion/extension). Bar heights indicate the magnitude (length) of the vector of regression coefficients corresponding to each finger. Error bars indicate the standard deviation across 100 resamplings of data. Neural activity modulated more with flexion than extension, and thumb, ring & little, index, and middle modulated activity in a decreasing order. 
E Distribution of the cross-validated correlation between observed neural activity during the hold period and predictions from a linear encoding of kinematics (blue) in (D). The linear model predicts the neural geometry better than the null distribution (orange) generated by randomly shuffling the neural activity and the kinematics labels with respect to each other and measuring the correlation with the linear model predictions. Source data are provided in the Source Data file.

A Magnitude of the recorded neural activity (Euclidean norm of the high-dimensional activity) during the hold period after removing the condition invariant signal (red dots) for an example set of two finger-group movements (thumb vs. all other fingers). Distance from the origin corresponds to the magnitude of neural activity, with different movement conditions represented along different directions. Predictions from linearly summing the neural activity of single finger-group movements are indicated in green. The magnitude of the recorded neural activity for two finger-group movements was lower than what would be expected from the sum of the corresponding single finger-group parts. B A comparison of recorded activity magnitude (red) and predictions from a linear model (black) for 80 combinatorial movements of four finger-groups. Each point is a distinct movement combination. Movements are grouped by the number of fingers either flexed or extended (x-axis). A linear model using a one-hot encoding of kinematics (8 dimensions, one dimension for each combination of finger and movement direction) predicts that magnitude will increase as more fingers move, but the recorded activity magnitudes appear relatively constant. The linear model under-predicts the neural activity magnitude for a small number of moving finger-groups, as the linear model is fit to all movement conditions, and there are a large number of conditions with multiple finger-groups moving. Statistical significance of the difference in means between the data and model fit for each group of conditions with a particular number of fingers moving is evaluated with a two-sided t-test. Significance level is indicated by the number of stars (‘*’), with n stars indicating p < 10^(-n). C A linear-nonlinear model (with 10 hidden units) better captures the magnitude of neural activity (compared to (B)). The difference in means was not statistically significant (two-sided t-test). 
D The linear-nonlinear model schematic which applies a tanh(.) non-linearity to map kinematics to predicted neural activity patterns; this nonlinearity should allow the model to capture activity magnitudes more accurately via saturation. B and A denote linear maps from kinematics to hidden unit inputs and from hidden unit activity to the observed neural activity, respectively. E Fraction of variance explained across the 80 combinatorial movements by the linear-nonlinear model with an increasing number of hidden units (red). A low-rank linear model was used for comparison (gray). Linear-nonlinear models outperform the linear model, and performance saturates at a small number of hidden dimensions of ~10. Cross-validated performance was averaged across 100 resamplings of trials used for training and evaluation (shaded regions indicate standard deviation). F Similar analysis as (E), but testing generalization across movements: models were trained on a random subset of 64 movements (out of 80) and tested on the remaining movements. Since the performance varies based on which movements are partitioned into the test set, the performance change was measured compared to the linear-nonlinear model with the highest number of hidden units, and averaged across resamplings. The linear-nonlinear model generalizes better than a linear model to novel movement conditions. G Cumulative distribution of hidden unit activity in the linear-nonlinear model for conditions with different numbers of moving finger-groups across 100 resamplings. Note the greater saturation with a larger number of moving finger-groups. Source data are provided in the Source Data file.

Overall neural activity magnitude is conserved for multi-finger movements

While the linear summation model is simple and intuitive, it makes a rather strong prediction: that the magnitude of neural activity should increase with the number of moving fingers (i.e., the total number of fingers either flexed or extended). We tested this prediction by comparing the magnitude of the neural activity to that predicted by a linear model. We measured the magnitude of the neural activity during the hold period (thereby avoiding potential trial-to-trial variability in reaction time and movement speed), using an unbiased estimate of activity magnitude4,18. For two finger-group movements, the magnitude of neural activity for simultaneous movement was smaller than what would be expected from summing the constituent single finger-group movements (Fig. 5A shows an example of two finger-groups, Supplementary fig. 5 shows others). For combinatorial movements of four finger-groups, neural activity magnitude remained constant as the number of moving fingers increased. The linear model fit to all movement conditions failed to capture this phenomenon and instead predicted that magnitude should increase with the number of moving fingers. This resulted in an under-prediction of neural activity magnitude for single and two-finger group movements (Fig. 5B). In summary, linearly summing the contributions of single finger movements over-predicts the magnitude for simultaneous movements (Fig. 5A), and fitting a linear model to all the movements under-predicts the magnitude for single finger movements while matching the magnitude of simultaneous movements (Fig. 5B). Both observations are consistent with normalization and show the failure of linear summation.

Extensions of the linear model were evaluated to find an accurate but parsimonious description of the observed neural activity. First, we tested if the magnitude normalization can be captured by a linear model on normalized kinematics (kinematics represented using one-hot encoding, normalized by their magnitude). The predictions accurately estimated the magnitude of neural activity for a small number of moving fingers (Supplementary fig. 6A, B). However, when the number of fingers increased, the predictions consistently underestimated the magnitude, resulting in no overall improvement in prediction accuracy. Hence, the observed magnitude normalization does not arise from a simple normalization of kinematics.

Next, we tested a quadratic model, which can incorporate interactions between different finger groups. This model drastically improved the prediction accuracy and captured the magnitude across different numbers of finger groups (Supplementary fig. 6A). However, this model was not parsimonious, as it had four times as many parameters as the linear model.

Finally, we tested a linear-nonlinear model that can achieve normalization of neural activity through saturating nonlinearities in the mapping from kinematics to neural activity. Neural activity (y, during the hold period) is modeled in terms of one-hot encoding of kinematics (u) as: y = A tanh(Bu), where B defines a linear map from kinematics to the hidden units and A defines a linear map from the hidden units to the neural activity (Fig. 5D). The linear-nonlinear model outperformed the linear model when trained on an identical set of movement conditions as test data (Fig. 5E) and could generalize successfully across movements (test movements different from train movements, Fig. 5F). Additionally, the performance of the linear-nonlinear model saturated with ~10 hidden units, which is much smaller than either the number of conditions (80) or the dimensionality of the neural state space (448). We use the term “pseudo-linear” to describe a linear-nonlinear model with a small number of hidden units. Compared to the quadratic model, the pseudo-linear model is more parsimonious with roughly the same number of parameters as the linear model, and explains a similar amount of variance in neural activity (Supplementary Fig. 6A). A pseudo-linear model with rectifying nonlinearity instead of tanh nonlinearity achieved comparable performance with many hidden units, but performed worse with fewer units (Supplementary Fig. 6A, C).
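The way a saturating nonlinearity limits activity magnitude can be seen in a small forward-pass sketch of y = A tanh(Bu). The matrices below are hand-picked, hypothetical values (three hidden units, four channels), not fitted parameters, and the ordering of the eight kinematic dimensions is an assumption. The example compares the model's prediction for a two-group movement with the sum of its predictions for the two single-group movements.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def predict(A, B, u):
    """Linear-nonlinear model: y = A tanh(B u)."""
    hidden = [math.tanh(h) for h in matvec(B, u)]
    return matvec(A, hidden)

def norm(vector):
    return math.sqrt(sum(x * x for x in vector))

# Hypothetical B (3 hidden units x 8 kinematic dimensions). Assumed column
# order: thumb flex/extend, index flex/extend, middle flex/extend,
# ring-little flex/extend.
B = [
    [1.5, -1.5, 1.0, -1.0, 0.5, -0.5, 1.2, -1.2],
    [0.5, -0.5, 1.0, -1.0, 0.8, -0.8, 0.3, -0.3],
    [0.0, 0.0, 0.5, -0.5, 1.0, -1.0, 0.6, -0.6],
]
# Hypothetical A (4 channels x 3 hidden units).
A = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.5, 0.5, 0.0],
]

u_thumb = [1, 0, 0, 0, 0, 0, 0, 0]   # thumb flexion only
u_index = [0, 0, 1, 0, 0, 0, 0, 0]   # index flexion only
u_both = [1, 0, 1, 0, 0, 0, 0, 0]    # thumb and index flexion together

y_thumb = predict(A, B, u_thumb)
y_index = predict(A, B, u_index)
y_both = predict(A, B, u_both)
y_summed = [t + i for t, i in zip(y_thumb, y_index)]

magnitude_both = norm(y_both)       # model prediction for the combination
magnitude_summed = norm(y_summed)   # what linear summation of parts would give
```

In this toy setting the hidden-unit inputs add linearly, but tanh compresses the larger inputs, so `magnitude_both` falls well below `magnitude_summed`. This is the qualitative behavior the text attributes to normalization; the actual size of the effect in the data depends on the fitted A and B.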

The pseudo-linear model captured magnitude normalization, i.e., how neural activity remained at a relatively constant magnitude with an increasing number of moving fingers (Fig. 5C). The model achieved this via saturating nonlinearity, as shown by the greater saturation of hidden unit activity with an increasing number of moving finger groups (Fig. 5G). Overall, the pseudo-linear model can be interpreted as explaining the neural activity by linearly combining the kinematic variables and then non-linearly projecting it onto a low-dimensional manifold described by the hidden unit activities.

Pseudo-linear representation limits linear decodability of fingers with weaker modulation

What is the impact of pseudo-linear compositional representation on the ability to use a linear decoder to identify the finger positions during multi-finger movements? This can be assessed by measuring how the representation of a given finger changes across contexts, i.e., how it changes with particular movement combinations of the other fingers. In the neural state space, the line connecting a pair of multi-finger movements which only differ in the final position of a target finger (i.e., either flexed or extended) but which are identical in the final position of other fingers describes the target finger’s “population tuning”, i.e., how the population activity changes as a function of a finger’s movement in a given context. Changes in the population tuning across contexts measure how a decoder might generalize to multi-finger movement it was not trained on. A linear decoder, for example, might decode the motion of each finger by projecting neural activity along each finger’s population tuning direction – in this case, generalization would be impaired if the population tuning changes.
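The population tuning comparison described above reduces to a difference vector per context and a cosine between contexts. The sketch below uses made-up three-channel activity vectors (all numbers are hypothetical) to contrast a purely linear representation, where the context only shifts activity by a constant offset, with a context-dependent one.

```python
import math

def tuning_vector(activity_flexed, activity_extended):
    """Line joining the extended and flexed states of the target finger."""
    return [f - e for f, e in zip(activity_flexed, activity_extended)]

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) *
                  math.sqrt(sum(b * b for b in v)))

# Context 1: all other fingers at rest (hypothetical activity, 3 channels).
flexed_rest = [2.0, 1.0, 0.0]
extended_rest = [-2.0, -1.0, 0.0]
tuning_rest = tuning_vector(flexed_rest, extended_rest)

# Context 2 under linear summation: the other fingers add a fixed offset,
# so the tuning vector is unchanged.
offset = [0.0, 1.0, 3.0]
flexed_linear = [f + o for f, o in zip(flexed_rest, offset)]
extended_linear = [e + o for e, o in zip(extended_rest, offset)]
tuning_linear = tuning_vector(flexed_linear, extended_linear)

# Context 2 with a hypothetical context-dependent change in tuning.
flexed_nonlinear = [1.0, 2.0, 3.0]
extended_nonlinear = [-0.5, 0.5, 2.5]
tuning_nonlinear = tuning_vector(flexed_nonlinear, extended_nonlinear)

alignment_linear = cosine(tuning_rest, tuning_linear)        # complete alignment
alignment_nonlinear = cosine(tuning_rest, tuning_nonlinear)  # partial alignment
```

A decoder that projects activity onto `tuning_rest` would transfer unchanged to the linear case, but would lose part of its signal in the second case, both because the direction has rotated and because the tuning vector is shorter.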

We analyzed the changes in the population tuning direction and magnitude for each finger across contexts using neural activity during the hold period for combinatorial movements of four fingers (Fig. 6A, Supplementary Movie 1). The variation in tuning direction was characterized by the distribution of angles between the population tuning directions across all pairs of contexts (Fig. 6B). While the population tuning vectors showed a context-dependent variation for all finger-groups, they were more consistently aligned across contexts for the thumb and ring-little finger groups as compared to the middle and index fingers. While a linear model, by definition, cannot describe the changes in tuning direction across contexts, a pseudo-linear model (ten hidden units with tanh nonlinearity) captured the variation across contexts within a finger-group and across finger-groups (Fig. 6B). Similarly, the population tuning magnitude varied across contexts within each finger group, with a lower variation for thumb and ring-little compared to index and middle finger groups (Fig. 6C). Similar to tuning direction, while a linear model cannot capture these variations, a pseudo-linear model was able to capture the changes in population tuning magnitudes (Fig. 6D).

A Visualizing ‘population tuning’ vectors joining the points corresponding to the flexion and extension movements for fixed movement of other fingers. Three principal components capturing the average neural activity for flexion-extension movements across fingers are computed and visualized with a two-dimensional projection that aligns the flexion-extension movements of a particular finger along the y-axis. Colored lines indicate the marginalized (average across conditions) neural activity for flexion (dotted) and extension (solid) movements of fingers. Dots indicate the average neural activity during the hold period while the participant attempted a combinatorial finger movement. Gray (black) dots indicate conditions where the target finger was flexed (extended). Lines join movement pairs from the same context (i.e., other fingers have the same cued movement but the target finger has different cued movements). B Histogram of alignment of population tuning directions (cosine of angle) across pairs of contexts in data (left) and linear-nonlinear model fits (right). Population tuning directions are more aligned for thumb and ring/little finger groups in both data and the linear-nonlinear model. By construction, the linear model exhibits complete alignment of all contexts (all values at 1). C How neural population tuning magnitude for a given finger group is affected by the movement of other fingers. Data are shown from 80 combinatorial movements of four finger groups. Contexts (specific movements of other fingers) are sorted by tuning magnitude. Error-bars indicate standard deviation across 100 resamplings of the data. D Context dependence of population tuning magnitude in a linear-nonlinear model from Fig. 5D. While the linear-nonlinear model captures the variations in tuning magnitude, a linear model has constant tuning magnitude by construction (arrows). Error-bars similar to (C). 
E Performance of a linear decoder (support vector classifier) when classifying between flexion/extension positions of a finger across contexts. Element at position (i,j) corresponds to training the classifier on context j and testing on context i. Mean within-context (diagonal values) accuracy was high for all finger groups, whereas across-context (off-diagonal values) accuracy was low for middle and index fingers compared to the thumb and ring-little group. F Examples of cross-context decoding performance for the thumb, little, and ring finger. Two gesture pairs are shown for each finger. For the first pair, the target finger is either flexed or extended while the other fingers are at rest. For the second pair, the target finger is either flexed or extended while all other fingers have an identical movement. A linear classifier for thumb position trained on isolated movements showed 100% accuracy when tested on the second pair of movements (Thumbs Up vs ASL sign for letter S). This accuracy dropped to nearly chance performance in a similar analysis for the little and ring fingers. Source data are provided in the Source Data file. Copyright © Meta Platforms Technologies, LLC and its affiliates. All rights reserved.

We assessed the impact of these population tuning changes on the generalizability of linear decoding across contexts using two tasks. For combinatorial movements of four finger groups, the within-context decoding performance was high across all fingers (88-92%); across-context performance was much lower for middle and index fingers (58% and 63% respectively) compared to thumb and ring-little fingers (73% and 76% respectively) (Fig. 6E).

These observations were replicated for pairs of natural gestures. Specifically, a linear decoder trained on isolated flexion/extension movements of the thumb generalized to gesture pairs where all other fingers were flexed but the thumb was either extended (‘thumbs up’ gesture) or flexed (American Sign Language for symbol S, Fig. 6F). However, for other gesture pairs, a linear decoder trained on isolated movements of the ring finger or the little finger performed poorly (Fig. 6F), suggesting that the population tuning of the ring and little fingers changes more when they are moved separately than when they are moved together as a finger group. For decoding the continuously varying finger positions over the course of a trial, non-linear decoders such as a recurrent neural network and a temporal convolutional network performed better than linear (Kalman) filters, with a larger difference in performance for index and middle fingers (see Supplementary fig. 7). This suggests the context-dependent changes in population tuning observed during static gestures may be present for continuous finger movements as well.

In summary, the population tuning of weakly-represented fingers changed more based on the movement of other fingers, suggesting that peak performance for intracortical brain-computer interface finger control requires the use of non-linear decoders that jointly decode the movement of all fingers. However, the amount of training data required increases with the complexity of the decoder. For example, a quadratic decoder may provide high decoding accuracy by capturing all pairwise interactions if a large amount of training data is available (Supplementary Fig. 6A).

Discussion

We discovered a pseudo-linear compositional neural code for how individual finger movements are combined to represent multi-finger movements. While the neural activity evolved in a direction consistent with the linear summation of its constituent movements (Fig. 4), the magnitude of neural activity was normalized, i.e., it was independent of the number of moving fingers (Fig. 5). As a consequence, fingers that were weakly represented (such as middle) showed a greater change in their tuning magnitude and direction as a function of the movement of other fingers (Fig. 6). Hence, while the linear model explained a large fraction of the variance in the neural activity, decoding multiple finger movements can be substantially improved with a joint, non-linear decoder (Supplementary fig. 7).

The context-dependent changes in population tuning magnitude and direction across finger groups can be understood by the differences in the strength of neural representation of different finger groups (Fig. 4D) and the impact of magnitude normalization on linear summation. To understand this geometrically, consider the population tuning direction of a finger when it is moved in isolation (red, Fig. 7). When the contribution of other finger movements is linear, the displaced population vector (orange) has the same magnitude and direction as the population tuning vector during isolated movements. However, if the linear contribution is large, the resulting neural activity has a large magnitude, which is not realistic in a biological neural circuit. The observed neural activity will thus differ from the prediction of linearity due to this magnitude constraint. As a result, the observed population tuning vector may have a different magnitude and direction compared to that of isolated movements (blue). Conversely, if the contribution of other fingers is small, the linearly summed neural activity satisfies the constraint on neural activity magnitude, and the population tuning vector preserves the magnitude and direction. In our recordings, the index and middle finger were more weakly represented than the thumb and ring-little fingers.

Points indicate neural activity corresponding to different gestures, with gray (black) indicating the gestures with flexion (extension) of a particular finger (finger 1). The red line joins a pair of gestures when finger 1 moves alone (i.e., other fingers are at rest), and indicates the population tuning vector during isolated movement. The contribution of the movement of other fingers is indicated by displacement along the black arrow. The displaced population tuning vector under linear summation is indicated in orange. This results in preserved population tuning magnitude and direction (red and orange lines are parallel and of the same length). However, the magnitude of neural activity in biological neural circuits is limited (indicated by the black circle). Hence, the experimentally observed neural activity differs from the prediction of linear summation, and the observed population tuning vector (blue) shows a change in magnitude and direction compared to the neural tuning vector for the finger movement in isolation (red). When the relative contribution from other fingers is large (such as for the index and middle fingers), the changes in population tuning magnitude and direction are greater.

The pseudo-linear composition of individual finger movements is consistent with recent work in monkeys19, which showed that linear summation of neural activity for single finger movement explains two-finger movements. However, our analysis of the two finger and four finger-group movements in our participant with paralysis (enabled by the ability of human participants to attempt complex and flexible tasks) revealed a characteristic deviation from a pure linear summation. While the dominant fingers (e.g., thumb) had consistent tuning across different combinations (as predicted with linear summation), the weakly-represented fingers (e.g., middle) showed a larger deviation from the linear model. This is explained with magnitude normalization after linear summation, and is consistent with recent publications. First, prior work in monkeys showed that in a task requiring production of different amounts of force, a linear model needs to be supplemented by a condition-dependent gain to predict EMG activity from neural activity20. Second, there exists a mild degree of non-linear encoding for two finger movements in monkeys, explaining why non-linear decoders have been shown to improve closed loop performance21. These observations are consistent with the widespread observation of normalization as a canonical neural computation in many neural circuits during sensation and decision making22,23.

Multi-finger movements have previously been studied extensively using functional MRI (fMRI) in able-bodied volunteers. A study by Ejaz et al.13 showed that the neural activity for multi-finger movements could not be predicted linearly from single-finger representations, indicating that nonlinear interactions between different fingers need to be taken into account. Subsequently, the neural representation of multi-finger stimulation in the somatosensory cortex and the motor cortex24,25, which may be similar to the neural activity during active movement26, was better explained by a linear-nonlinear model compared to a linear model. The pseudo-linear summation model is potentially a unifying concept that both explains interaction effects16 and offers an explanation of their origin.

In addition to finger movements, pseudo-linear composition may explain the neural activity for simultaneous limb and multi-joint movements as well4,27,28,29,30,31,32,33,34,35,36,37,38. Future work will verify if magnitude normalization can explain the observed suppression of the non-dominant limb for simultaneous bimanual movements and if there exists a unified theory of how the brain represents simultaneous multiple movements.

What circuit mechanisms could explain the mild degree of nonlinearity and magnitude normalization? One hypothesis is that the observed non-linearities could be a consequence of inputs with different strengths processed by a network of neurons with saturating nonlinearities. We tested if a simple recurrent neural network (RNN) with static input drive of unequal magnitudes and saturating unit activations could capture the observed properties. The inputs correspond to different fingers, and the unequal input magnitudes reflect the unequal modulation observed for different fingers. Starting from random unit activation, the RNN was simulated until the activity reached a steady state. This steady state activity captured all the properties observed for neural recordings—it was low dimensional, with a largely constant magnitude that cannot be approximated by a linear model of finger positions, and the population tuning direction for weaker inputs changed more compared to the stronger inputs (Supplementary fig. 7).
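The RNN hypothesis above can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and is not taken from the study: the network size, the weight scaling (`gain`), the per-finger input strengths, and the Euler update rule are all hypothetical choices.

```python
import numpy as np

def build_network(n_units=100, n_inputs=4, gain=0.4, seed=0):
    """Random recurrent weights W and input weights B (hypothetical scaling)."""
    rng = np.random.default_rng(seed)
    W = gain * rng.standard_normal((n_units, n_units)) / np.sqrt(n_units)
    # Unequal input strengths stand in for strongly vs weakly represented fingers.
    strengths = np.array([1.0, 0.4, 0.3, 0.8])[:n_inputs]
    B = rng.standard_normal((n_units, n_inputs)) * strengths
    return W, B

def simulate_rnn(inputs, W, B, n_steps=3000, dt=0.1, seed=1):
    """Euler-integrate dx/dt = -x + tanh(W x + B u) from a random initial state."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 1.0, size=W.shape[0])
    for _ in range(n_steps):
        x = x + dt * (-x + np.tanh(W @ x + B @ inputs))
    return x

W, B = build_network()
single = simulate_rnn(np.array([1.0, 0.0, 0.0, 0.0]), W, B)   # one finger flexed
multi = simulate_rnn(np.array([1.0, 1.0, -1.0, 1.0]), W, B)   # four finger groups moving
print(np.linalg.norm(single), np.linalg.norm(multi))
```

Because each unit's activity is bounded by the tanh nonlinearity, the population magnitude cannot grow in proportion to the number of active inputs, which is the qualitative property of interest.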

An alternative hypothesis arises from the balance of excitatory and inhibitory inputs to the neurons39,40,41,42. Specifically, the preserved magnitude of neural activity might be explained by the simultaneous increase in both excitatory and inhibitory activity as the number of moving fingers increases. However, testing this possibility in human participants is challenging due to experimental limitations.

This work helps to address a fundamental question of how neuronal ensembles represent multiple task-related variables. While a neural representation that linearly composes individual task variables is simple, and a decoder trained to decode a particular task variable in one context generalizes to other contexts, the overall capacity to accurately represent multiple combinations of task variables is limited. An alternative representation using non-linear composition of task variables provides a higher capacity but poorer decoder generalization across contexts. Similar to the pseudo-linear compositional principle described here, several studies have presented evidence of a largely linear composition with a mild non-linearity—a compromise between the two extremes43,44,45,46. However, most of the literature on multi-task computation has been limited to simulated networks47,48, neural recordings in non-human primates44,49, or functional magnetic resonance imaging in humans50. Along with some recent works4,5, we extend the principle of compositionality to neural recordings in the human motor cortex at single neuron resolution, providing further evidence that compositionality may be a general feature of brain representations.

Overall, we show that the compositional coding hypothesis with pseudo-linear summation (Fig. 1B) is strongly supported by the neural geometry of multi-finger movements in the human premotor cortex. This principle may explain the neural structure in other motor and cortical tasks and may aid in a rapid development of future intracortical brain computer interface applications such as multi-finger typing, playing a piano, or whole-body movement.

Methods

Participant information

Research sessions were conducted with volunteer participants enrolled in the BrainGate2 pilot clinical trial (ClinicalTrials.gov Identifier: NCT00912041). The trial is approved by the U.S. Food and Drug Administration under an Investigational Device Exemption (#G090003, Caution: Investigational device. Limited by Federal law to investigational use) and the Institutional Review Boards of Stanford University Medical Center (protocol #52060), Brown University (#0809992560), and Partners HealthCare/Massachusetts General Hospital (#2009P000505).

Participant T5 was a right-handed man who was 69 years old at the time of the study. He was diagnosed with C4 AIS-C spinal cord injury eleven years prior to this study. T5 was able to speak and move his head, and had residual movement of his left bicep as well as trace movement in most muscle groups (See ref.4 for further details). T5 gave informed consent for this research and associated publications.

Participant T11 was a 36-year-old right-handed man with a history of tetraplegia secondary to C4 AIS-A spinal cord injury that occurred 11 years prior. T11 gave informed consent for this research and associated publications.

Both participants had two 96-channel intracortical microelectrode arrays placed chronically into the hand knob area of the left precentral gyrus (PCG).

Neural recordings

For the present study, neural control and task cuing closely followed51 and were controlled by custom software running on the Simulink/xPC real-time platform (The Mathworks, Natick, MA), enabling millisecond-timing precision for all computations. Neural data were collected by the NeuroPort System (Blackrock Microsystems, Salt Lake City, UT) and available to the real-time system with 5 ms latency. Neural signals were analog filtered from 0.3 Hz to 7.5 kHz and digitized at 30 kHz (250 nV resolution). Next, a common average reference filter was applied that subtracted the average signal across the array from every electrode in order to reduce common mode noise. Finally, a digital bandpass filter from 250 to 3000 Hz was applied to each electrode before spike detection. For threshold crossing detection, we used a −4.5 × RMS threshold applied to each electrode over 1 ms bins, where RMS is the electrode-specific root mean square (standard deviation) of the voltage time series recorded on that electrode. The threshold crossing counts were then downsampled to 15 or 20 ms bins. For most analyses, the binned activity was also smoothed with a Gaussian filter (σ = 300 ms).
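The referencing and thresholding steps can be sketched as follows. This is an illustrative offline version, not the real-time implementation: it assumes the band-pass filter has already been applied, it counts threshold-crossing onsets directly into the final bins rather than going through intermediate 1 ms bins, and the function names are hypothetical.

```python
import numpy as np

def common_average_reference(voltage):
    """Subtract the across-electrode mean at every sample.

    voltage: array of shape (n_samples, n_electrodes).
    """
    return voltage - voltage.mean(axis=1, keepdims=True)

def threshold_crossing_counts(voltage, fs=30_000, multiplier=-4.5, bin_ms=20):
    """Count negative-going threshold crossings per electrode in bins of bin_ms."""
    rms = np.sqrt(np.mean(voltage ** 2, axis=0))      # electrode-specific RMS
    below = voltage < multiplier * rms                # samples beyond the threshold
    # A crossing is a sample below threshold whose predecessor was above it.
    onsets = np.vstack([below[:1], below[1:] & ~below[:-1]])
    samples_per_bin = int(fs * bin_ms / 1000)
    n_bins = onsets.shape[0] // samples_per_bin
    trimmed = onsets[: n_bins * samples_per_bin]      # drop an incomplete final bin
    return trimmed.reshape(n_bins, samples_per_bin, -1).sum(axis=1)

# Toy example: two electrodes, one 20 ms bin, with spikes placed by hand.
toy = np.zeros((600, 2))
toy[[100, 300], 0] = -10.0
toy[50, 1] = -10.0
print(threshold_crossing_counts(toy))
```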

For T11 data, neural activity was recorded using the Brown Wireless Device (BWD) described previously52. BWD transmitted data at 20 kHz and 12 bits per sample, which was then upsampled to 30 kHz and 16 bits per sample. The raw activity was then passed through a fourth-order bandpass Butterworth filter (250–7500 Hz). A common average reference filter was applied, threshold crossing counts were computed for 1 ms bins (threshold = −4 × RMS), and the counts were discretized at 15 or 20 ms bins and Z-scored (normalized) for each channel.

While sorted units could be identified using our recordings, this study uses multi-unit activity to simplify the methods, because multi-unit activity has similar neural geometry and neural decoding performance to sorted units53,54,55.

Task design

The finger and hand visualization was developed in Unity Software (Unity Technologies, San Francisco) using a pre-fabricated hand56,57. The fingers and hand were animated by either using the Animation toolbox in Unity to continuously interpolate between specified starting and ending positions (American Sign Language gestures), or specifying the trajectory of joint positions and rotations directly from an external program using Redis (Redis Enterprise, all other tasks, which have a single axis of motion per finger). When specified directly, motion involved only the flexion-extension movements of individual fingers, and joint positions and angles were interpolated between a complete flexion and extension position.

Trial structure

All trials were “open-loop” and consisted of watching an animated hand smoothly transitioning from one gesture to another. The participant was asked to attempt to make those same motions with his own hand, even though paralysis prevented him from doing so. Each trial consisted of distinct phases: a preparatory period, a go cue followed by a movement period, and a hold period (optional). During the preparatory period, participants were shown the final target finger positions but did not move their hands. At the go cue, they were instructed to slowly move their hands to the target positions. During the hold period, they were instructed to hold their hands steady at the target positions. The final target position was shown at the beginning of each trial, either using a separate hand that changed configuration at the beginning of each trial (for natural gestures in Fig. 3B) or using overlay markers that indicate the desired configuration of fingers at the end of each trial (other tasks).

Block structure

The trials within a block followed a structure similar to the 2D cursor control task common in motor neuroscience58. Borrowing the terminology from 2D cursor control tasks, trials alternated between “center-out” and “return” trials. Center-out trials began with the hand at a rest position, which then proceeded to move to a target gesture (equivalent to the “going-out” phase of a center-out cursor control task). During return trials, the hand moved back to the rest posture. Only center-out trials were analyzed.

Single finger movement task (Fig. 2, Supplementary Figs. 2, 3, 4)

A single finger was either fully flexed or extended in each trial. Each trial consisted of a 1.5 s preparatory phase and a 1 s movement phase. Each block consisted of 81 gestures (162 trials total, as center-out and return trials alternate). Center-out single finger movements were also performed across contexts (different hands and palm-poses), either changing contexts across blocks (but fixing the context within each block) or alternating across contexts within a block.

Natural gestures task (Fig. 3A, B)

The sign-language task consisted of 38 total gestures, with some corresponding to signs from American Sign Language and others added to probe single-finger movements, coordinated movements of all fingers (e.g., all finger flexion/extension), or decorrelated movements of all fingers (e.g., Sign Alternating Fingers). Each trial had 1 s preparatory time, 1.5 s move time, and 2 s hold time.

Pairwise finger movement task (Figs. 4, 5A, Supplementary Fig. 5)

Two finger groups were independently cued (each at full flexion/full extension/rest), resulting in 9 unique movements, with one movement being rest. The two finger groups either consisted of thumb vs all other fingers tied together (Fig. 4AB, Fig. 5A), or individual fingers (Fig. 4B, Supplementary fig. 5). Each trial consisted of a 1.5 s preparatory phase and a 1 s movement phase.

Combinatorial four-finger movement task (Figs. 3C, 4, 5, 6, Supplementary Fig. 6)

For combinatorial movements of four finger-groups (the ring and little fingers were constrained to move together), the target position for each finger-group was sampled independently (full flexion/full extension/rest), resulting in 81 unique movements, with one movement being rest. These 81 movements were randomly divided into three groups consisting of 27 movements each. Each block only contained movements from one of these groups, and each movement was repeated three times in a row. The multiple repetitions of each movement were designed to improve behavioral reproducibility since the movements were often non-intuitive and non-natural. Hence, three open-loop blocks with different subsets of target configurations give three trials of all 81 target gestures. Each trial had 1 s preparatory time and 2 s move time (slightly longer move time reduces behavioral variability).
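The block design described above can be sketched as follows. The +1/0/−1 coding of flexion, rest and extension and the shuffling scheme are assumptions made for illustration; the actual randomization procedure is not specified here.

```python
import itertools
import random

FINGER_GROUPS = ["thumb", "index", "middle", "ring-little"]
POSITIONS = {"flexion": 1, "rest": 0, "extension": -1}

def make_blocks(seed=0, n_blocks=3, repeats=3):
    """Enumerate all 3**4 = 81 target configurations and split them into blocks."""
    conditions = list(itertools.product(POSITIONS.values(), repeat=len(FINGER_GROUPS)))
    shuffled = conditions[:]
    random.Random(seed).shuffle(shuffled)
    per_block = len(shuffled) // n_blocks             # 27 movements per block
    blocks = []
    for b in range(n_blocks):
        subset = shuffled[b * per_block:(b + 1) * per_block]
        # Each movement is cued several times in a row within its block.
        blocks.append([c for c in subset for _ in range(repeats)])
    return conditions, blocks

conditions, blocks = make_blocks()
print(len(conditions), [len(b) for b in blocks])
```

Running all three blocks gives three center-out trials for each of the 81 configurations, including the all-rest configuration.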

Neural geometry analysis

We assessed the geometry of the activity elicited by different movement conditions in the multi-dimensional neural state space, where each dimension corresponds to the activity of a particular channel and each movement condition corresponds to a single point in the state space. The geometry was assessed by measuring (i) the angle between lines connecting origin to two movements or equivalently the correlation between corresponding neural activity patterns (Fig. 2E, Fig. 6C, Supplementary fig. 3), (ii) the magnitude of neural activity for individual movement conditions (Fig. 5, Supplementary fig. 5), (iii) distance between pairs of conditions (Fig. 2D, Fig. 6B), and (iv) magnitude of neural activity generated by linear summation of two movement conditions (Fig. 5A). In each case, neural activity during the hold period was used for analyzing the geometry.

These measurements are straightforward for noise-free observations, such as predictions of an encoding model. However, for the recorded neural activity, we only have access to multiple trials of noisy neural activity for each condition.

Notation

Let \({n}_{i}\) be the neural state space representation of recorded activity for trial i. Let n be the noise-free activity, and let the noise \({\epsilon }_{i}\) be additive, zero-mean, and independent across trials, giving \({n}_{i}=n+{\epsilon }_{i}\). The sample mean (trial average) of T trials is given by \(\hat{n}=\frac{\sum_{i=1}^{T}{n}_{i}}{T}=n+\frac{\sum_{i=1}^{T}{\epsilon }_{i}}{T}=n+\hat{\epsilon }\).

Estimating angle between neural activity patterns

Let the neural activity for two movement conditions be denoted by \({n}^{a}\) and \({n}^{b}\), respectively. The angle between these movements after centering on a reference activity μ is given by \(\theta=\cos^{-1}\left(\frac{({n}^{a}-\mu )^{T}({n}^{b}-\mu )}{\|{n}^{a}-\mu \|\,\|{n}^{b}-\mu \|}\right)\). The numerator is estimated using sample averages as \((\hat{n}^{a}-\hat{\mu })^{T}(\hat{n}^{b}-\hat{\mu })\), and the estimation of the denominator is described below. This analysis was done for Supplementary Fig. 3, with the reference vector μ defined as the mean across all movement vectors.

For paired movements on a finger, the angle between lines joining flexion/extension of a given finger is measured as \(\theta={co}{s}^{-1}(\frac{{({n}_{{finger}1,{flexion}}-{n}_{{finger}1,{extension}})}^{T}({n}_{{finger}2,{flexion}}-{n}_{{finger}2,{extension}})}{{||}{n}_{{finger}1,{flexion}}-{n}_{{finger}1,{extension}}{||}.{||}{n}_{{finger}2,{flexion}}-{n}_{{finger}2,{extension}}{||}})\). Similar to the above, the numerator is estimated using sample averages, and the estimation of the denominator is described below. This analysis was performed for Fig. 2E.

Estimating neural activity magnitude

A “naive” estimator of the magnitude (\(\|n\|^{2}\)) using the sample estimate \(\|\hat{n}\|^{2}\) is biased, which can be shown as follows. Expanding the naive estimator gives \(\|\hat{n}\|^{2}=\|n\|^{2}+2\hat{\epsilon }\cdot n+\|\hat{\epsilon }\|^{2}\). Since the expected value of \(\hat{\epsilon }\) is zero, the second term is zero in expectation. However, \(\|\hat{\epsilon }\|^{2} > 0\), so \(E\|\hat{n}\|^{2}=\|n\|^{2}+E\|\hat{\epsilon }\|^{2} > \|n\|^{2}\), which shows that the naive estimate is biased.

An unbiased estimator can be developed to estimate neural magnitudes and distances18,59,60. We use the implementation outlined in (Willett et al. 2020). With \(\hat{n}_{-i}=\frac{\sum_{l=1..T,l\ne i}{n}_{l}}{T-1}\) denoting the empirical mean of all trials except the ith trial, the estimator \(\xi \left(\|n\|^{2}\right)\) is defined as \(\frac{\sum_{i=1}^{T}{n}_{i}\cdot \hat{n}_{-i}}{T}\). Note that \(\hat{n}_{-i}=n+\frac{\sum_{l=1..T,l\ne i}{\epsilon }_{l}}{T-1}=n+\hat{\epsilon }_{-i}\). The expected value of each term in the summation is given by:

\[E\left[{n}_{i}\cdot \hat{n}_{-i}\right]=E\left[(n+{\epsilon }_{i})\cdot (n+\hat{\epsilon }_{-i})\right]=\|n\|^{2}+E\left[n\cdot \hat{\epsilon }_{-i}\right]+E\left[{\epsilon }_{i}\cdot n\right]+E\left[{\epsilon }_{i}\cdot \hat{\epsilon }_{-i}\right] \tag{1}\]

Since the noise is zero mean and independent across trials, the last three terms evaluate to zero, making the summation unbiased.

When a movement condition was cued across multiple sessions, we averaged the session-specific estimates of neural activity magnitude. Note that the unbiased estimator could give negative values, which we interpret as evidence that the true magnitude is near zero.
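A minimal sketch of the leave-one-out magnitude estimator described in this section, compared against the naive estimator. The function names and the synthetic data are illustrative and do not come from the original analysis code.

```python
import numpy as np

def naive_sq_norm(trials):
    """Squared norm of the trial average; biased upward by the noise variance."""
    return float(np.sum(trials.mean(axis=0) ** 2))

def unbiased_sq_norm(trials):
    """Average over trials of n_i . (mean of all trials except i).

    trials: array of shape (T, D) with T >= 2 trials of D-dimensional activity.
    """
    T = trials.shape[0]
    total = trials.sum(axis=0)
    leave_one_out_means = (total - trials) / (T - 1)   # row i excludes trial i
    return float(np.mean(np.sum(trials * leave_one_out_means, axis=1)))

# Synthetic check: a pure-noise condition has true magnitude zero.
rng = np.random.default_rng(0)
noise_only = rng.standard_normal((20, 192))
print(naive_sq_norm(noise_only), unbiased_sq_norm(noise_only))
```

For a pure-noise condition the naive estimate is always positive, whereas the leave-one-out estimate scatters around zero and can be negative, as noted above.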

Estimating neural distances

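Let \({n}^{a}\) and \({n}^{b}\) correspond to the neural activity for conditions a and b. A naive estimator using sample averages, \(\|\hat{n}^{a}-\hat{n}^{b}\|^{2}\), is biased, as explained above. An unbiased estimator can be constructed by expanding the square and plugging in the unbiased estimator for each term in the expansion:

\[\xi \left(\|{n}^{a}-{n}^{b}\|^{2}\right)=\xi \left(\|{n}^{a}\|^{2}\right)+\xi \left(\|{n}^{b}\|^{2}\right)-2\,\hat{n}^{a}\cdot \hat{n}^{b} \tag{2}\]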

If nb is deterministic (not random), the second term simplifies to \({||}{n}^{b}|{|}^{2}\). The representational dissimilarity matrix is constructed by calculating the neural distance between all pairs of movement conditions within a session and averaging across sessions.
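A sketch of a distance estimator built by expanding the square. It assumes that the noise is independent between the two conditions, so that the inner product of the two sample means is an unbiased estimate of the cross term; that choice for the cross term, like the function names, is an assumption of this sketch rather than a confirmed detail of the original implementation.

```python
import numpy as np

def unbiased_sq_norm(trials):
    """Leave-one-out estimate of ||n||^2 from trials of shape (T, D)."""
    T = trials.shape[0]
    leave_one_out_means = (trials.sum(axis=0) - trials) / (T - 1)
    return float(np.mean(np.sum(trials * leave_one_out_means, axis=1)))

def unbiased_sq_distance(trials_a, trials_b):
    """Estimate ||n_a - n_b||^2 as ||n_a||^2 + ||n_b||^2 - 2 n_a . n_b."""
    cross = float(trials_a.mean(axis=0) @ trials_b.mean(axis=0))
    return unbiased_sq_norm(trials_a) + unbiased_sq_norm(trials_b) - 2.0 * cross

def sq_distance_to_fixed(trials_a, n_b):
    """Same estimate when n_b is deterministic, e.g. a model prediction."""
    cross = float(trials_a.mean(axis=0) @ n_b)
    return unbiased_sq_norm(trials_a) + float(n_b @ n_b) - 2.0 * cross
```

The second function corresponds to the case with one random and one deterministic component, which is the form needed when comparing recorded activity against the prediction of an encoding model.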

Estimating the magnitude of neural activity under linear summation

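Similar to the estimator for distances, the magnitude of neural activity when two neural activity patterns are added together (\(\|{n}^{a}+{n}^{b}\|^{2}\)) is given by

\[\xi \left(\|{n}^{a}+{n}^{b}\|^{2}\right)=\xi \left(\|{n}^{a}\|^{2}\right)+\xi \left(\|{n}^{b}\|^{2}\right)+2\,\hat{n}^{a}\cdot \hat{n}^{b} \tag{3}\]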

Encoding models for predicting neural activity from movement kinematics

For the encoding model analysis presented in Figs. 4D, E, 5B-G, 6, and the Supplementary Figs. 6, 7, we use data from the “combinatorial four-finger movement task” outlined above. Trials were split into a disjoint training set (70% of the trials) and testing set (30%) for each session and condition. Neural activity was thresholded, binned, and smoothed (details above), and only channels with high mean activity were kept (mean firing rate > 1.3 Hz). For each condition, neural activity was further trial-averaged, time-averaged (for the last quarter of trial duration corresponding to hold period), and concatenated across sessions, resulting in 960 dimensions. The condition-invariant signal (average of the neural activity for different movements) was calculated from the training data and subtracted from both training and testing data.

Movement kinematics were represented as a one-hot vector with separate dimensions for flexion and extension of each finger group, resulting in an eight-dimensional movement encoding. In addition to splitting the trials, the cross-condition generalization analysis (Fig. 5F) involved splitting the 80 movements into training (80%) and testing (20%) conditions.

Linear encoding model

A linear model approximates the observed neural activity as \({n}_{i}=A{k}_{i}+a\), where ni is the neural activity vector for condition i, ki is the one-hot encoding of kinematics, A, a are the learned encoding matrix and the bias respectively. For the low-rank linear model, A was constrained with low-rank matrices (X, Y): \(A=X{Y}^{T}\). Performance on test data was measured using mean-squared-error, estimated with Eq. 2 (with one random and one deterministic component) and averaged over 100 resamples of data.
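The full-rank linear encoding model can be sketched with ordinary least squares. The fitting routine, function names and synthetic data below are illustrative assumptions; the low-rank variant and the unbiased test-error estimate are not reproduced.

```python
import itertools
import numpy as np

def kinematics_encoding(condition):
    """Map 4 positions in {+1 flexion, -1 extension, 0 rest} to an 8-dim indicator."""
    k = np.zeros(2 * len(condition))
    for g, pos in enumerate(condition):
        if pos == 1:
            k[2 * g] = 1.0          # flexion indicator for finger group g
        elif pos == -1:
            k[2 * g + 1] = 1.0      # extension indicator for finger group g
    return k

def fit_linear_encoding(K, N):
    """Least-squares fit of n_i = A k_i + a.

    K: (L, 8) kinematics, N: (L, D) neural activity. Returns A (D, 8) and a (D,).
    """
    X = np.hstack([K, np.ones((K.shape[0], 1))])      # append a bias column
    W, *_ = np.linalg.lstsq(X, N, rcond=None)
    return W[:-1].T, W[-1]

conditions = list(itertools.product([1, 0, -1], repeat=4))
K = np.array([kinematics_encoding(c) for c in conditions])
rng = np.random.default_rng(0)
N = K @ rng.standard_normal((8, 960)) + 0.1 * rng.standard_normal((81, 960))
A, a = fit_linear_encoding(K, N)
# Under a linear model the flexion-minus-extension vector of a finger group
# is the same in every context: it is A[:, 2g] - A[:, 2g + 1].
thumb_tuning = A[:, 0] - A[:, 1]
print(A.shape, a.shape, np.linalg.norm(thumb_tuning))
```

This makes explicit why a purely linear model predicts identical tuning magnitude and direction across contexts.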

Linear-nonlinear encoding model

The linear model was augmented with saturating nonlinearities (f), modeling the neural activity as \({n}_{i}={Bf}(A{k}_{i}+a)+b\). The nonlinearity was \(f=\tanh (\cdot )\) for all analyses. Analysis with a rectifying non-linearity (\(f(x)=\max (x,0)\)) is presented in Supplementary Fig. 6. The parameters of this model \((A,a,B,b)\) were optimized by minimizing an unbiased estimate of the MSE (Eq. 2) using the Adam optimizer (learning rate = 0.0001, stopped when change in loss <1e-7).
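The forward pass of the linear-nonlinear model can be written in a few lines. This sketch covers prediction only; the Adam-based fitting is omitted, and the hidden dimension and random parameters are hypothetical.

```python
import numpy as np

def relu(x):
    """Rectifying nonlinearity f(x) = max(x, 0)."""
    return np.maximum(x, 0.0)

def linear_nonlinear_predict(K, A, a, B, b, f=np.tanh):
    """Evaluate n_i = B f(A k_i + a) + b for every row k_i of K.

    K: (L, 8), A: (H, 8), a: (H,), B: (D, H), b: (D,). Returns (L, D).
    """
    hidden = f(K @ A.T + a)
    return hidden @ B.T + b

rng = np.random.default_rng(0)
H, D = 16, 960                                   # hidden size is an assumption
A, a = rng.standard_normal((H, 8)), rng.standard_normal(H)
B, b = rng.standard_normal((D, H)), np.zeros(D)
one_finger = np.array([[1, 0, 0, 0, 0, 0, 0, 0]], dtype=float)
four_fingers = np.array([[1, 0, 1, 0, 1, 0, 1, 0]], dtype=float)
for k in (one_finger, four_fingers):
    print(np.linalg.norm(linear_nonlinear_predict(k, A, a, B, b)))
```

With a saturating f, each hidden unit is bounded, so the output magnitude cannot grow without limit as more finger groups become active. This is how the model can express magnitude normalization.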

Discrete decoding of movement kinematics from neural activity

Neural activity was classified into the corresponding movements for natural gestures (Fig. 3B), combinatorial movement of four finger groups (Fig. 3C), or position of a particular finger during simultaneous movements (Fig. 6E, F). To categorize neural activity into discrete movements, threshold crossings were first binned (10 ms bins) and smoothed (by convolving with a Gaussian kernel, σ = 20 ms). The binned threshold crossings were then averaged across time for the entire duration of the movement, yielding a single neural feature vector for each trial. Inactive channels (as defined by <10 Hz activity) were removed from the feature vector, and channels were Z-scored within each block.

The ability to distinguish between different gestures was assessed using a multi-class linear classifier (support vector classifier from the sklearn package61, linear kernel, C = 0.025), which combines the output of multiple binary (one vs. one) classifiers. Data for Fig. 3B consist of 22-24 trials per condition; data for Fig. 3C consist of 14−18 trials per condition when at least one finger is moving and 30 trials for the condition with no fingers moving. Accuracy and confusion matrices were estimated using ten-fold cross-validation.
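A sketch of the gesture classification step using scikit-learn. The classifier settings follow the text (linear kernel, C = 0.025, one-vs-one); the synthetic features, the helper name, and the omission of the channel selection and Z-scoring steps are simplifications made for illustration.

```python
import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

def gesture_decoding_accuracy(features, labels, n_folds=10):
    """Mean cross-validated accuracy of a linear one-vs-one support vector classifier.

    features: (n_trials, n_channels) time-averaged activity; labels: (n_trials,).
    """
    clf = SVC(kernel="linear", C=0.025, decision_function_shape="ovo")
    return float(cross_val_score(clf, features, labels, cv=n_folds).mean())

# Synthetic stand-in for neural features: three well-separated gesture classes.
rng = np.random.default_rng(0)
class_means = 20.0 * np.eye(3, 10)
labels = np.repeat(np.arange(3), 20)
features = class_means[labels] + 0.5 * rng.standard_normal((60, 10))
print(gesture_decoding_accuracy(features, labels))
```

For cross-context analyses, the same classifier would instead be fit on trials from one context and scored on trials from another, rather than being cross-validated within a single set.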

For the analysis in Fig. 6E and Fig. 6F, classifiers were trained on one pair of gestures and evaluated on different pairs. For Fig. 6F, instead of using the mean activity for the entire duration of the trial, the accuracy is reported with a featurization of neural activity corresponding to a concatenation over 30 bins of the trial. Accuracy and confusion matrices were estimated using distinct conditions for training and testing (e.g., in Fig. 6E) or five-fold cross-validation (Fig. 6F).

Visualizing the factor-specific components of neural activity

The high-dimensional neural activity is projected to a two or three-dimensional subspace and visualized to highlight movement-related components using Targeted Dimensionality Reduction (Fig. 2F, G, 4 A–C) and marginalized principal component analysis (Fig. 6A).

Cross-validated targeted dimensionality reduction (TDR)

Targeted dimensionality reduction62 projects the neural activity into a subspace that can be linearly mapped from movement kinematics. The procedure is detailed below.

Let \({n}_{s}\) be the \({N}_{s}\times T\times C\) dimensional neural activity for research session s, with \({N}_{s}\) trials, trial length T, and C channels. Each neural activity pattern is associated with a kinematic movement condition \({m}_{s}\) of K dimensions. The following steps are repeated multiple times:

-

1.

Partition data: For each session and movement condition, the trials are partitioned into training and testing samples (random 50% split for each combination of session and movement condition).

-

2.

Marginalize: The training data are trial averaged for each movement condition; time-averaged for the last section of the trial corresponding to continuously holding a given finger position; and concatenated across sessions. Hence \({\bar{n}}_{{train}}\) is \({SC}\times L\) dimensional, with L being the total number of conditions. The test trials are similarly marginalized, but the temporal dimension is not collapsed, to give \({\bar{n}}_{{test}}\) of dimension SC×T×L.

-

3.

Remove condition-invariant signal: Movement condition-invariant component is estimated from the training data and removed from \({\bar{n}}_{{train}}\) and \({\bar{n}}_{{test}}\). The movement condition-invariant neural activity is estimated by averaging all training neural activity (not averaged over time) and concatenating it across sessions to give ncis of dimensions \(T\times {SC}\). A low (three)-dimensional approximation ncis is computed and \({\bar{n}}_{{train}}\) and \({\bar{n}}_{{test}}\) are projected orthogonal to this space.

-

4.

Linear regression: The movement kinematics are encoded in a \((K+1)\times L\) matrix M, with each column corresponding to a different movement condition, and the first K rows correspond to positions of the fingers, and the last row =1 is for estimating a bias. Finger positions are encoded as +1 for flexion, −1 for extension, and 0 for rest. The number of non-zero elements in each column (excluding the last element corresponding to bias) indicates the number of fingers not at rest. The encoding matrix A of dimensions \({SC}\times K\) is estimated by solving \({\bar{n}}_{{train}}={AM}\) using ordinary least squares. It is important to note that only the static neural activity pattern computed by averaging the activity at the end of a trial is taken into account. This corresponds to the duration for which the participant is holding a cued gesture. The top three left singular vectors from the SVD of A are retained for projecting neural activity (For SVD of \(A={US}{V}^{T}\), the projection matrix corresponds \(P=U(:,1:3)\), the left three columns of U).

-

5.

Alignment (for repetition > 1): The projection direction can vary across repetitions of this procedure. In the absence of any estimation noise, the projection directions can be sign-flipped across repetitions. In the presence of estimation noise, the projection directions can also be permuted or, more generally, rotated across repetitions. While we do not attempt to correct for the rotations, we attempt to correct for permutations and sign-flips by aligning the projections estimated in later repetitions (> 1) to the first repetition by greedily matching the absolute value of the inner product between projection directions.

6.

Projection: The non-time-averaged test data \({\bar{n}}_{{test}}\) are projected onto the three-dimensional space (with projected activity at time t being \({\hat{n}}_{{test}}(:,t,:)={P}^{T}{\bar{n}}_{{test}}(:,t,:)\)) and averaged with previous repetitions.

This method was used in Figs. 2F, G and 4A–C. The entire procedure was repeated 500 times for Fig. 2F, G and 100 times for Fig. 4A–C. Three sessions were used for Fig. 2F, G, five sessions for Fig. 4A, C, and one session for Fig. 4B.
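The steps above (condition-invariant signal removal, regression, alignment, and projection) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the code released with the study: the function names, the array layouts (conditions × time × features, with features = sessions × channels), and the choice to take the rank-three subspace from the right singular vectors of \({n}_{{cis}}\) are all assumptions made for the example.

```python
import numpy as np

def remove_cis(train_ts, n_train, n_test, rank=3):
    """Project time-averaged patterns orthogonal to a low-rank CIS subspace.

    train_ts: (L, T, F) training activity per condition, not averaged over time.
    n_train, n_test: (F, L) time-averaged activity patterns.
    """
    n_cis = train_ts.mean(axis=0)                      # (T, F) condition average
    _, _, vt = np.linalg.svd(n_cis, full_matrices=False)
    basis = vt[:rank].T                                # (F, rank), orthonormal columns
    def project_out(x):
        return x - basis @ (basis.T @ x)
    return project_out(n_train), project_out(n_test), basis

def fit_projection(n_train, M, dims=3):
    """Least-squares fit of n_train = A @ M, then top left singular vectors of A."""
    # lstsq solves M.T @ A.T = n_train.T, so transpose the result to get A.
    A = np.linalg.lstsq(M.T, n_train.T, rcond=None)[0].T   # (F, K+1)
    U, _, _ = np.linalg.svd(A, full_matrices=False)
    return U[:, :dims]                                      # projection matrix P

def align_to_reference(P, P_ref):
    """Greedily match columns of P to P_ref by |inner product|.

    Corrects permutations and sign flips only; rotations are left untouched.
    """
    overlap = P_ref.T @ P              # rows: reference directions, cols: new ones
    score = np.abs(overlap)
    aligned = np.zeros_like(P_ref)
    for _ in range(P_ref.shape[1]):
        i, j = np.unravel_index(np.argmax(score), score.shape)
        sign = 1.0 if overlap[i, j] >= 0 else -1.0
        aligned[:, i] = sign * P[:, j]
        score[i, :] = -1.0             # reference direction i is now taken
        score[:, j] = -1.0             # new direction j is now taken
    return aligned

def project(test_ts, P):
    """Apply P.T at every time point: (L, T, F) -> (L, T, dims)."""
    return test_ts @ P
```

In a repeated cross-validation loop, the first repetition would supply `P_ref`, later repetitions would pass their `P` through `align_to_reference`, and the projected test activity would be averaged across repetitions.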

Marginalized principal component analysis

The visualization of neural activity in Fig. 6A aims to isolate the contribution of each finger group during the combinatorial movements of the four finger groups (thumb, index, middle, and ring-little constrained together). Briefly, we identify a three-dimensional subspace that captures the activity across all single-finger movements and visualize the activity in this subspace after aligning the tuning directions for a particular finger to be vertical.

Neural activity for each trial was binned and smoothed, resulting in T = 130 samples per trial. The analysis in this section used the trial-averaged activity for each movement condition. The trial-averaged activity was concatenated across sessions, resulting in observations of dimension C × S (C = 192 channels, S = 5 sessions). Trial-averaging within a session minimizes noise correlation between channels, and concatenating the channels across sessions is justified if we assume that each session provides a random readout of the same underlying task-related manifold. Let the trial-averaged and session-concatenated activity for movement condition i be represented by the \(T\times {CS}\) dimensional matrix \({n}_{i}\).

First, the condition-invariant signal (CIS) is calculated by averaging the activity across all movement conditions. A rank-3 approximation of the CIS is computed using singular value decomposition, and all \({n}_{i}\) are projected orthogonal to it.

Next, the contribution of each finger movement is computed by marginalizing out the movements of the other fingers. Specifically, the contribution of thumb-flexion is calculated as \({n}_{{thumb}-{flexion}}=\frac{{\sum }_{i:{thumb\; is\; flexed\; in\; condition \, i}}{n}_{i}}{|\{{i :\, thumb\; is\; flexed\; in\; condition \, i}\}|}\) and is of dimensions T×CS. These marginalizations are then concatenated over the flexion and extension movements of all four finger groups to give an 8T×CS dimensional matrix. Projection onto the top three PCs (reducing the matrix to 8T×3) gives the 3D visualization of all finger-movement combinations.
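The marginalization and PCA step can be illustrated with the short NumPy sketch below. It is a sketch under assumptions rather than the study's implementation: the function name `marginalized_pca`, the (conditions × time × features) array layout, the ±1/0 state encoding per finger group, and the mean-centering before the SVD are choices made for this example, and the activity is assumed to have had the condition-invariant signal removed already.

```python
import numpy as np

def marginalized_pca(activity, states, n_components=3):
    """Marginalize each finger-group movement and find the top principal components.

    activity: (L, T, F) trial-averaged activity for L movement conditions.
    states:   (L, G) array with +1 (flexion), -1 (extension), 0 (rest) per finger group.
    Returns the stacked marginals (2*G*T, F), the PCs (F, n_components),
    and the marginals projected onto the PCs (2*G*T, n_components).
    """
    blocks = []
    for g in range(states.shape[1]):
        for direction in (1, -1):                  # flexion first, then extension
            mask = states[:, g] == direction
            # Average over every condition in which group g moves in this direction.
            blocks.append(activity[mask].mean(axis=0))    # (T, F)
    marginals = np.concatenate(blocks, axis=0)            # (2*G*T, F)

    # PCA via SVD of the mean-centered matrix (centering is an assumption here).
    centered = marginals - marginals.mean(axis=0, keepdims=True)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    pcs = vt[:n_components].T                             # (F, n_components)
    return marginals, pcs, centered @ pcs
```

With four finger groups (G = 4), the stacked matrix has 8T rows, matching the 8T×CS matrix described above; individual conditions \({n}_{i}\) can then be projected with `n_i @ pcs`.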

Across the panels in Fig. 6A, this space is rotated to align the flexion-extension movement of each finger with the north-south direction. Dots are generated by projecting the neural activity of each movement combination (\({n}_{i}\)) onto this 3D space and averaging over the last quarter of the trial duration (presumably corresponding to holding a given gesture). Only conditions in which the target finger is not idle are considered, with black dots corresponding to extension and gray dots to flexion; lines join condition pairs in which the other fingers have the same movement.

Recurrent neural network (RNN) simulation

A mechanistic explanation for the linear-nonlinear encoding model was explored using simulations of a randomly connected recurrent neural network in Supplementary Fig. 8. We simulated a recurrently connected network of n = 1000 neurons with a tanh(·) nonlinearity and random connectivity J between units (the connection between neurons i and j was sampled independently as \({J}_{i,j} \sim N(0,1/n)\)). Two inputs \(({i}_{1},{i}_{2})\) were applied to the network and varied between −10 and 10. Each input l was projected onto the neurons using input weight coefficients sampled from an identical distribution (vector \({b}_{l}\) of length 1000), but the inputs had different scales (\({s}_{1}=5\), \({s}_{2}=1\)). In Supplementary Fig. 8, we simulated an RNN with four inputs with different scales (\({s}_{1}=4\), \({s}_{2}=4\), \({s}_{3}=1\), \({s}_{4}=1\)).

The network was simulated for each input combination in a uniformly sampled grid of the inputs, starting from a random initial state until the activity settled to a steady state (magnitude of change in state < 1e-6). Simulations used the following update equation with τ = 1 and discretization step size 0.01:
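The update equation itself does not appear in this text. Purely as an illustration of the simulation procedure, the sketch below assumes a common rate-network form, \(\tau \dot{x}=-x+J\tanh (x)+{\sum }_{l}{s}_{l}{b}_{l}{i}_{l}\), integrated with forward Euler; this form, the standard-normal input weights, the function names, and the `max_steps` safeguard are assumptions and may differ from what was used in the study.

```python
import numpy as np

def make_network(n=1000, n_inputs=2, seed=0):
    """Random connectivity J_ij ~ N(0, 1/n) (variance 1/n) and input weight vectors b_l."""
    rng = np.random.default_rng(seed)
    J = rng.normal(0.0, np.sqrt(1.0 / n), size=(n, n))
    B = rng.normal(size=(n, n_inputs))   # assumed distribution for the input weights
    return J, B

def simulate_steady_state(J, drive, x0, tau=1.0, dt=0.01, tol=1e-6, max_steps=200_000):
    """Forward-Euler integration of the assumed dynamics until the state stops changing.

    drive: total external input to each neuron, e.g. B @ (scales * inputs).
    Returns the final state and a flag saying whether the tolerance was reached.
    """
    x = x0.copy()
    for _ in range(max_steps):
        step = (dt / tau) * (-x + J @ np.tanh(x) + drive)
        x = x + step
        if np.linalg.norm(step) < tol:   # magnitude of change in state
            return x, True
    return x, False
```

A grid of input combinations could then be swept with, for example, `drive = B @ (np.array([5.0, 1.0]) * np.array([i1, i2]))` for each pair `(i1, i2)` between −10 and 10, starting each run from a random `x0`.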

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability

Data needed to reproduce the key findings in this study are available at: https://doi.org/10.6084/m9.figshare.28396166. Source data are provided with this paper.

Code availability

The code needed to reproduce the key findings in this study is available at: https://doi.org/10.6084/m9.figshare.28396166.

References

Rahman, A. & Al-Jumaily, A. Design and development of a bilateral therapeutic hand device for stroke rehabilitation. Int J. Adv. Rob. Syst. 10, 405 (2013).

Santello, M., Flanders, M. & Soechting, J. F. Postural hand synergies for tool use. J. Neurosci. 18, 10105–10115 (1998).

Jarque-Bou, N. J., Scano, A., Atzori, M. & Müller, H. Kinematic synergies of hand grasps: a comprehensive study on a large publicly available dataset. J. Neuroeng. Rehabil. 16, 63 (2019).

Willett, F. R. et al. Hand knob area of premotor cortex represents the whole body in a compositional way. Cell 181, 396–409.e26 (2020).

Guan, C. et al. Decoding and geometry of ten finger movements in human posterior parietal cortex and motor cortex. J. Neural Eng. 20, https://doi.org/10.1088/1741-2552/acd3b1 (2023).

Schieber, M. H. & Hibbard, L. S. How somatotopic is the motor cortex hand area? Science 261, 489–492 (1993).

Sanes, J. N., Donoghue, J. P., Thangaraj, V., Edelman, R. R. & Warach, S. Shared neural substrates controlling hand movements in human motor cortex. Science 268, 1775–1777 (1995).

Indovina, I. & Sanes, J. N. Combined visual attention and finger movement effects on human brain representations. Exp. Brain Res. 140, 265–279 (2001).

Schieber, M. H. Constraints on somatotopic organization in the primary motor cortex. J. Neurophysiol. 86, 2125–2143 (2001).

Yokoi, A. & Diedrichsen, J. Neural organization of hierarchical motor sequence representations in the human neocortex. Neuron 103, 1178–1190.e7 (2019).

Natraj, N., Silversmith, D. B., Chang, E. F. & Ganguly, K. Compartmentalized dynamics within a common multi-area mesoscale manifold represent a repertoire of human hand movements. Neuron 110, 154–174.e12 (2022).

Kriegeskorte, N., Mur, M. & Bandettini, P. Representational similarity analysis—connecting the branches of systems neuroscience. Front Syst. Neurosci. 2, 4 (2008).

Ejaz, N., Hamada, M. & Diedrichsen, J. Hand use predicts the structure of representations in sensorimotor cortex. Nat. Neurosci. 18, 1034–1040 (2015).

Guan, C. et al. Stability of motor representations after paralysis. eLife 11, https://doi.org/10.7554/eLife.74478 (2022).

Schieber, M. H. & Rivlis, G. Partial reconstruction of muscle activity from a pruned network of diverse motor cortex neurons. J. Neurophysiol. 97, 70–82 (2007).

Arbuckle, S. A. et al. Structure of population activity in primary motor cortex for single finger flexion and extension. J. Neurosci. 40, 9210–9223 (2020).

Vargas-Irwin, C. E. et al. Gesture encoding in human left precentral gyrus neuronal ensembles. bioRxiv [Preprint]. https://doi.org/10.1101/2024.08.23.608325 (2024).

Diedrichsen, J. et al. Comparing representational geometries using whitened unbiased-distance-matrix similarity. Neurons, Behavior, Data Analysis, and Theory 5, 1–31 (2021).

Nason, S. R. et al. Real-time linear prediction of simultaneous and independent movements of two finger groups using an intracortical brain-machine interface. Neuron 109, 3164–3177.e8 (2021).

Naufel, S., Glaser, J. I., Kording, K. P., Perreault, E. J. & Miller, L. E. A muscle-activity-dependent gain between motor cortex and EMG. J. Neurophysiol. 121, 61–73 (2019).

Willsey, M. S. et al. Real-time brain-machine interface in non-human primates achieves high-velocity prosthetic finger movements using a shallow feedforward neural network decoder. Nat. Commun. 13, 6899 (2022).

Carandini, M. & Heeger, D. J. Normalization as a canonical neural computation. Nat. Rev. Neurosci. 13, 51–62 (2011).

Louie, K. & Glimcher, P. W. Normalization principles in computational neuroscience. In Oxford Research Encyclopedia of Neuroscience (Oxford University Press, 2019).

Brouwer, G. J., Arnedo, V., Offen, S., Heeger, D. J. & Grant, A. C. Normalization in human somatosensory cortex. J. Neurophysiol. 114, 2588–2599 (2015).

Arbuckle, S. A., Pruszynski, J. A. & Diedrichsen, J. Mapping the integration of sensory information across fingers in human sensorimotor cortex. J. Neurosci. 42, 5173–5185 (2022).

Berlot, E., Prichard, G., O’Reilly, J., Ejaz, N. & Diedrichsen, J. Ipsilateral finger representations in the sensorimotor cortex are driven by active movement processes, not passive sensory input. J. Neurophysiol. 121, 418–426 (2019).

Cisek, P., Crammond, D. J. & Kalaska, J. F. Neural activity in primary motor and dorsal premotor cortex in reaching tasks with the contralateral versus ipsilateral arm. J. Neurophysiol. 89, 922–942 (2003).

Rokni, U., Steinberg, O., Vaadia, E. & Sompolinsky, H. Cortical representation of bimanual movements. J. Neurosci. 23, 11577–11586 (2003).

Diedrichsen, J., Wiestler, T. & Krakauer, J. W. Two distinct ipsilateral cortical representations for individuated finger movements. Cereb. Cortex. 23, 1362–1377 (2013).

Wiestler, T., Waters-Metenier, S. & Diedrichsen, J. Effector-independent motor sequence representations exist in extrinsic and intrinsic reference frames. J. Neurosci. 34, 5054–5064 (2014).

Jin, Y. et al. Electrocorticographic signals comparison in sensorimotor cortex between contralateral and ipsilateral hand movements. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2016, 1544–1547 (2016).

Fujiwara, Y. et al. Neural pattern similarity between contra- and ipsilateral movements in high-frequency band of human electrocorticograms. Neuroimage 147, 302–313 (2017).

Bundy, D. T., Szrama, N., Pahwa, M. & Leuthardt, E. C. Unilateral, 3D arm movement kinematics are encoded in ipsilateral human cortex. J. Neurosci. 38, 10042–10056 (2018).

Ames, K. C. & Churchland, M. M. Motor cortex signals for each arm are mixed across hemispheres and neurons yet partitioned within the population response. eLife 8, https://doi.org/10.7554/eLife.46159 (2019).

Cross, K. P., Heming, E. A., Cook, D. J. & Scott, S. H. Maintained representations of the ipsilateral and contralateral limbs during bimanual control in primary motor cortex. J. Neurosci. 40, 6732–6747 (2020).

Downey, J. E. et al. The motor cortex has independent representations for ipsilateral and contralateral arm movements but correlated representations for grasping. Cereb. Cortex. 30, 5400–5409 (2020).

Dixon, T. C., Merrick, C. M., Wallis, J. D., Ivry, R. B. & Carmena, J. M. Hybrid dedicated and distributed coding in PMd/M1 provides separation and interaction of bilateral arm signals. PLoS Comput. Biol. 17, e1009615 (2021).

Merrick, C. M. et al. Left hemisphere dominance for bilateral kinematic encoding in the human brain. eLife 11, https://doi.org/10.7554/eLife.69977 (2022).

Shadlen, M. N. & Newsome, W. T. Noise, neural codes and cortical organization. Curr. Opin. Neurobiol. 4, 569–579 (1994).

Shadlen, M. N. & Newsome, W. T. The variable discharge of cortical neurons: implications for connectivity, computation, and information coding. J. Neurosci. 18, 3870–3896 (1998).

van Vreeswijk, C. & Sompolinsky, H. Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science 274, 1724–1726 (1996).

Beck, S. & Hallett, M. Surround inhibition in the motor system. Exp. Brain Res. 210, 165–172 (2011).

Saxena, S. & Cunningham, J. P. Towards the neural population doctrine. Curr. Opin. Neurobiol. 55, 103–111 (2019).

Bernardi, S. et al. The geometry of abstraction in the hippocampus and prefrontal cortex. Cell 183, 954–967.e21 (2020).

Chung, S. & Abbott, L. F. Neural population geometry: An approach for understanding biological and artificial neural networks. Curr. Opin. Neurobiol. 70, 137–144 (2021).

Nogueira, R., Rodgers, C. C., Bruno, R. M. & Fusi, S. The geometry of cortical representations of touch in rodents. Nat. Neurosci. 26, 239–250 (2023).

Yang, G. R., Joglekar, M. R., Song, H. F., Newsome, W. T. & Wang, X.-J. Task representations in neural networks trained to perform many cognitive tasks. Nat. Neurosci. 22, 297–306 (2019).

Driscoll, L. N., Shenoy, K. & Sussillo, D. Flexible multitask computation in recurrent networks utilizes shared dynamical motifs. Nat. Neurosci. 27, 1349–1363 (2024).

Xie, Y. et al. Geometry of sequence working memory in macaque prefrontal cortex. Science 375, 632–639 (2022).

Ito, T. & Murray, J. D. Multitask representations in the human cortex transform along a sensory-to-motor hierarchy. Nat. Neurosci. 26, 306–315 (2022).

Gilja, V. et al. Clinical translation of a high-performance neural prosthesis. Nat. Med. 21, 1142–1145 (2015).

Simeral, J. D. et al. Home use of a percutaneous wireless intracortical brain-computer interface by individuals with tetraplegia. IEEE Trans. Biomed. Eng. 68, 2313–2325 (2021).

Chestek, C. A. et al. Long-term stability of neural prosthetic control signals from silicon cortical arrays in rhesus macaque motor cortex. J. Neural Eng. 8, 045005 (2011).

Christie, B. P. et al. Comparison of spike sorting and thresholding of voltage waveforms for intracortical brain-machine interface performance. J. Neural Eng. 12, 016009 (2015).

Trautmann, E. M. et al. Accurate estimation of neural population dynamics without spike sorting. Neuron 103, 292–308.e4 (2019).