Abstract

Higher-order interactions underlie complex phenomena in systems such as biological and artificial neural networks, but their study is challenging due to the scarcity of tractable models. By leveraging a generalisation of the maximum entropy principle, we introduce curved neural networks as a class of models with a limited number of parameters that are particularly well-suited for studying higher-order phenomena. Through exact mean-field descriptions, we show that these curved neural networks implement a self-regulating annealing process that can accelerate memory retrieval, leading to explosive order-disorder phase transitions with multi-stability and hysteresis effects. Moreover, by analytically exploring their memory-retrieval capacity using the replica trick, we demonstrate that these networks can enhance memory capacity and robustness of retrieval over classical associative-memory networks. Overall, the proposed framework provides parsimonious models amenable to analytical study, revealing higher-order phenomena in complex networks.


Introduction

Complex physical, biological, and social systems often exhibit higher-order interdependencies that cannot be reduced to pairwise interactions between their components1,2. Recent studies suggest that higher-order organisation is not the exception but the norm, providing various mechanisms for its emergence3,4,5,6. Modelling studies have revealed that higher-order interactions (HOIs) underlie collective activities such as bistability, hysteresis, and ‘explosive’ phase transitions associated with abrupt discontinuities in order parameters4,7,8,9,10,11.

HOIs are particularly important for the functioning of biological and artificial neural systems. For instance, they shape the collective activity of biological neurons12,13, being directly responsible for their inherent sparsity5,13,14,15 and possibly underlying critical dynamics16,17. HOIs have also been shown to enhance the computational capacity of artificial recurrent neural networks18,19. More specifically, ‘dense associative memories’ with extended memory capacity20,21,22,23 are realised by specific non-linear activation functions, which effectively incorporate HOIs. These non-linear functions are related to attention mechanisms of transformer neural networks24 and the energy landscape of diffusion models25,26, leading to the conjecture that HOIs underlie the success of these state-of-the-art deep learning models.

Despite their importance, existing studies of HOIs face significant computational challenges. Analytically tractable models that incorporate HOIs typically limit interactions to a single order (e.g., \(p\)-spin models22,27,28). Otherwise, attempting to represent diverse HOIs exhaustively results in a combinatorial explosion29. This issue is pervasive, restricting investigations of high-order interaction models (such as contagion9, Ising19, or Kuramoto30 models) to highly homogeneous scenarios3,16 or to models of relatively low order9,11,31. While attempts have been made to model all orders of HOIs and perform theoretical analyses20,21,22,23,32,33,34,35,36,37, it is currently unclear how to construct parsimonious models to address the diverse effects of HOIs in a principled manner.

To address this challenge, here we employ an extension of the maximum entropy principle to capture HOIs through the deformation of the space of statistical models. When applied to neural networks, our approach generalises classical neural network models to yield a family of curved neural networks that effectively incorporate HOIs of all orders. The resulting models have rich connections with the literature on the statistical physics of neural networks21,22,27,34. These features enable the exploration of various aspects of HOIs using techniques including mean-field approximations, quenched disorder analyses, and path integrals.

Our analyses reveal how relatively simple curved neural networks exhibit some of the hallmark characteristics of higher-order phenomena, such as explosive phase transitions, arising both in mean-field models and in more complex transitions to spin-glass states. These phenomena are driven by a self-regulated annealing process, which accelerates memory retrieval through positive feedback between energy and an ‘effective’ temperature—a perspective that can also explain memory-retrieval dynamics in other modern artificial networks. Furthermore, we show—both analytically and experimentally—that this mechanism can lead to an increase in the memory capacity or robustness of memory retrieval in these neural networks. Overall, the core contributions of this work are (i) the development of a parsimonious neural network model based on the maximum entropy principle that captures interactions of all orders, (ii) the discovery of a self-regulated annealing mechanism that can drive explosive phase transitions, and (iii) the demonstration of enhanced memory capacity resulting from this mechanism.

Results

High-order interactions in curved manifolds

The maximum entropy principle (MEP) is a general modelling framework based on the principle of adopting the model with maximal entropy compatible with a given set of observations, under the rationale that one should not assume any structure beyond what is specified by the assumptions or features selected from the data38,39. The traditional formulation of the MEP is based on Shannon’s entropy40, and the resulting models correspond to Boltzmann distributions of the form \(p({{\boldsymbol{x}}})=\exp \left({\sum }_{a}{\theta }_{a}{f}_{a}({{\boldsymbol{x}}})-\varphi \right)\), where x = (x1, …, xn), φ is a normalising potential, and θa are parameters constraining the average value of observables \(\langle \, {f}_{a}({{\boldsymbol{x}}}) \rangle\). While observables are often set to low orders (e.g. fi(x) = xi, fij(x) = xixj, corresponding to first and second order statistics), higher-order interdependencies can be included by considering observables of the type fI(x) = ∏i∈Ixi, where I is a set of indices of order k = ∣I∣. Unfortunately, an exhaustive description of interactions up to order k ≫ 1 becomes unfeasible in practice due to an exponential number of terms (for more details on the MEP, see Supplementary Note 1).

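The MEP can be expanded to include other entropy functionals such as Tsallis’41 and Rényi’s42. Concretely, maximising the Rényi entropy (with the scaling parameter γ ≥ −1)43

\[S_{\gamma}(p)=-\frac{1}{\gamma}\ln \sum_{\boldsymbol{x}} p(\boldsymbol{x})^{1+\gamma} \tag{1}\]

while constraining \(\langle \, {f}_{a}({{\boldsymbol{x}}})\rangle\) (i.e., the expectation of features by p(x)) results in models of the form (see Supplementary Note 1):

\[p_{\gamma}(\boldsymbol{x})=\exp \left(-\varphi_{\gamma}\right)\left[1+\gamma \sum_{a}\theta_{a}f_{a}(\boldsymbol{x})\right]_{+}^{1/\gamma} \tag{2}\]

where φγ is a normalising constant given by

\[\varphi_{\gamma}=\ln \sum_{\boldsymbol{x}}\left[1+\gamma \sum_{a}\theta_{a}f_{a}(\boldsymbol{x})\right]_{+}^{1/\gamma} \tag{3}\]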

Above, the square bracket operator sets negative values to zero, \({\left[x\right]}_{+}=\max \{0,x\}\). We refer to distributions following (2) as the deformed exponential family, which maximises both Rényi and Tsallis entropies44,45. When γ → 0, Rényi’s entropy tends to Shannon’s and (2) to the standard exponential family42.

A fundamental insight explored in this study is that higher-order interdependencies can be efficiently captured by deformed exponential family distributions46,47. Starting from a standard Shannon MEP model with low-order interactions, it can be shown that varying γ in (2) results in a deformation of the statistical manifold which, in turn, enhances the capability of pγ(x) to account for higher-order interdependencies. Indeed, the consequence of deformation can be investigated by rewriting (2) via Taylor expansion of the exponent

\[p_{\gamma}(\boldsymbol{x})=\exp \left(\sum_{k=1}^{\infty}\frac{(-\gamma)^{k-1}}{k}\left(\sum_{a}\theta_{a}f_{a}(\boldsymbol{x})\right)^{k}-\varphi_{\gamma}\right) \tag{4}\]

which is valid for the case 1 + γ∑aθafa(x) > 0 (with the series converging for ∣γ∑aθafa(x)∣ < 1), and otherwise pγ(x) = 0. This shows that the deformed manifold contains interactions of all orders even if fa(x) is restricted to lower orders while establishing a specific dependency structure across the orders, thereby avoiding a combinatorial explosion of the number of required parameters. The deformation resulting from the maximisation of a non-Shannon entropy has been shown to reflect a curvature of the space of possible models in information geometry42,45,48,49. This leads to a particular foliation of the space of possible models50 (an ‘onion-like’ manifold structure, Fig. 1), which has properties that allow one to re-derive the MEP from fundamental geometric properties; for technical details, see Supplementary Note 1.
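As a rough numerical illustration of this expansion, the sketch below compares the exact exponent \(\frac{1}{\gamma}\ln (1+\gamma s)\), with \(s\) standing for ∑aθafa(x), against partial sums of the series \(\sum_{k}(-\gamma)^{k-1}s^{k}/k\). The function names `deformed_log_weight` and `truncated_series` and the chosen values of γ and s are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def deformed_log_weight(s, gamma):
    """Exponent (1/gamma) * ln(1 + gamma*s) of the unnormalised weight.

    s stands for sum_a theta_a f_a(x). Returns -inf where 1 + gamma*s <= 0,
    i.e. where the [.]_+ operator assigns zero probability.
    """
    s = np.asarray(s, dtype=float)
    if gamma == 0.0:
        return s.copy()  # Shannon limit: ordinary exponential family
    base = 1.0 + gamma * s
    out = np.full_like(base, -np.inf)
    ok = base > 0
    out[ok] = np.log1p(gamma * s[ok]) / gamma
    return out

def truncated_series(s, gamma, order):
    """Partial sum over k = 1..order of (-gamma)^(k-1) * s^k / k."""
    s = np.asarray(s, dtype=float)
    k = np.arange(1, order + 1)
    return np.sum((-gamma) ** (k - 1) * s[..., None] ** k / k, axis=-1)

# Illustrative values (assumed): |gamma * s| < 1 so the series converges.
s = np.linspace(-1.0, 1.0, 5)
gamma = -0.3
exact = deformed_log_weight(s, gamma)
for order in (1, 2, 4, 8):
    err = np.max(np.abs(truncated_series(s, gamma, order) - exact))
    print(f"order {order}: max abs error {err:.2e}")
```

Keeping only the first term recovers the standard exponential family, while each further term adds a higher power of the low-order statistics with a coefficient fixed by γ, which is why no extra parameters are needed.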

Illustration of a family of standard MEP models (right) and its deformed counterpart (bottom left). The space of MEP distributions with constraints of different orders constitutes a set of nested sub-manifolds29, giving rise to a hierarchy of sub-families of models of the form \({{{\mathcal{E}}}}_{k}^{\gamma }=\{{p}_{\gamma }^{(k)}({{\boldsymbol{x}}})={e}^{-{\varphi }_{\gamma }}{\left[1-\gamma \beta {E}_{k}({{\boldsymbol{x}}})\right]}_{+}^{1/\gamma }\}\) such that \({{{\mathcal{E}}}}_{1}^{\gamma }\subset {{{\mathcal{E}}}}_{2}^{\gamma }\subset \cdots \subset {{{\mathcal{E}}}}_{n}^{\gamma }\)42. The foliation depends on the curvature γ, and in general \({{{\mathcal{E}}}}_{k}^{\gamma }\ne {{{\mathcal{E}}}}_{k}^{0}\) but rather \({{{\mathcal{E}}}}_{k}^{\gamma }\cap {{{\mathcal{E}}}}_{r}^{0}\ne\) ∅ for k < r. For small values of ∣γ∣, it is possible to neglect higher-order terms in (4), and therefore certain subsets of \({{{\mathcal{E}}}}_{k}^{\gamma }\) effectively approximate \({{{\mathcal{E}}}}_{r}^{0}\).

Curved neural networks

Several well-known neural network models adhere to the MEP, such as Ising-like models51 and Boltzmann machines52. Interestingly, these models can encode patterns in their weights in the form of ‘associative memories’, as in Nakano-Amari-Hopfield networks53,54,55, which makes them amenable to investigation with tools from equilibrium and nonequilibrium statistical physics56,57,58,59. Following the principles laid down in the previous section, we now introduce a family of recurrent neural networks that we call curved neural networks.

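For this purpose, let us consider N binary variables x1, …, xN taking values in {1, −1} following a joint probability distribution

\[p_{\gamma}(\boldsymbol{x})=\exp \left(-\varphi_{\gamma}\right)\left[1-\gamma \beta E(\boldsymbol{x})\right]_{+}^{1/\gamma} \tag{5}\]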

where φγ is a normalising constant. Above, we call E(x) and β the (stochastic) energy function (i.e., Hamiltonian) and the inverse temperature, due to their similarity with the Gibbs distribution in statistical physics when γ → 0. Note that, unlike exponential families, these models do not exhibit energy invariance under constant shifts. However, as demonstrated in Ref. 41, deformed exponential models can be related to energy-invariant models by rescaling their temperature, which can be seen as maximising entropy with respect to escort statistics rather than the original natural statistics.

Neural network models are typically defined by considering pγ(x) as defined in (5) with an energy function of the form

\[E(\boldsymbol{x})=-\sum_{i}H_{i}x_{i}-\frac{1}{N}\sum_{i<j}J_{ij}x_{i}x_{j} \tag{6}\]

where Jij is the coupling strength between neurons xi and xj, and Hi are bias terms. In the limit γ → 0, p0(x) recovers the Ising model. Emulating classical associative memories, the weights Jij can be made to encode a collection of M neural patterns \({{{\boldsymbol{\xi }}}}^{a}=\{{\xi }_{1}^{a},\ldots {\xi }_{N}^{a}\}\), \({\xi }_{i}^{a}=\pm \! 1\) and a = 1, …, M by using the well-known Hebbian rule55,56

\[J_{ij}=J\sum_{a=1}^{M}\xi_{i}^{a}\xi_{j}^{a} \tag{7}\]

where J is a scaling parameter.
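A minimal sketch of this construction is given below, assuming the Hebbian couplings are \(J_{ij}=J\sum_{a}\xi_{i}^{a}\xi_{j}^{a}\) with a zero diagonal and that the energy sums over pairs i < j with a 1/N prefactor and no bias terms. The helper names `hebbian_weights` and `energy`, and the random patterns, are hypothetical and only serve the illustration.

```python
import numpy as np

def hebbian_weights(patterns, J=1.0):
    """Couplings J_ij = J * sum_a xi_i^a xi_j^a, diagonal set to zero.

    patterns: array of shape (M, N) with entries +1 or -1.
    """
    W = J * (patterns.T @ patterns).astype(float)
    np.fill_diagonal(W, 0.0)
    return W

def energy(x, W):
    """E(x) = -(1/N) * sum_{i<j} W_ij x_i x_j for symmetric W, no biases."""
    N = len(x)
    return -(x @ W @ x) / (2.0 * N)  # x W x counts every pair twice

rng = np.random.default_rng(0)
N, M = 100, 3                         # illustrative sizes (assumed)
xi = rng.choice([-1, 1], size=(M, N))
W = hebbian_weights(xi)
random_state = rng.choice([-1, 1], size=N)
print("energy of a stored pattern:", energy(xi[0], W))
print("energy of a random state:  ", energy(random_state, W))
```

For a single stored pattern the energy of that pattern is exactly −J(N − 1)/2, which is the global minimum of the quadratic form.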

Before proceeding with our main analysis, one can gain insights into the effect of the curvature γ from the dynamics of a recurrent neural network that behaves as a sampler of the equilibrium distribution described by (5). For this, we adapt the classic Glauber dynamics to curved neural networks (see Supplementary Note 2) to obtain

where x\i denotes the state of all neurons except xi, \(\Delta E({{\boldsymbol{x}}})=2{x}_{i}({H}_{i}+\frac{1}{N}{\sum }_{j}\,{J}_{ij}{x}_{j})\) is the energy difference associated with detailed balance, and \({\beta }^{{\prime} }({{\boldsymbol{x}}})\) is an effective inverse temperature given by

Again, γ → 0 recovers the classic Glauber dynamics and \({\beta }^{{\prime} }({{\boldsymbol{x}}})=\beta\). Thus, the curvature affects the dynamics through the deformed nonlinear activation function (8) and the state-dependent effective temperature \({\beta }^{{\prime} }({{\boldsymbol{x}}})\) (9), with higher \({\beta }^{{\prime} }({{\boldsymbol{x}}})\) inducing lower degrees of randomness in the transitions. The effect of E(x) on \({\beta }^{{\prime} }({{\boldsymbol{x}}})\) depends then on the sign of γ. A negative γ increases \({\beta }^{{\prime} }({{\boldsymbol{x}}})\) during relaxation, reducing the stochasticity of the dynamics and accelerating convergence to a low-energy state. This, in turn, raises \({\beta }^{{\prime} }\), creating a positive feedback loop between energy and effective temperature. The effect is similar to simulated annealing, but the coupling of the energy and effective inverse temperature lets the annealing scheduling self-regulate to accelerate convergence. In contrast, positive γ decelerates the dynamics through negative feedback. Such accelerating or decelerating dynamics underlie non-trivial complex collective behaviours of the curved neural networks, which will be examined in the subsequent sections.
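The feedback between energy and effective temperature can be made concrete with a short sketch. It assumes that the flip probability is obtained directly from the ratio of the unnormalised weights \([1-\gamma \beta E]_{+}^{1/\gamma}\) of the flipped and current states, that the effective inverse temperature takes the form β/(1 − γβE(x)), and that the current state has positive weight. The function names are hypothetical, and this is one way to write a sampler consistent with (5), not necessarily the exact form used in Supplementary Note 2.

```python
import numpy as np

def energy(x, J, H):
    """E(x) = -sum_i H_i x_i - (1/N) sum_{i<j} J_ij x_i x_j (symmetric J, zero diagonal)."""
    N = len(x)
    return -H @ x - (x @ J @ x) / (2.0 * N)

def effective_beta(x, J, H, beta, gamma):
    """Assumed state-dependent inverse temperature beta / (1 - gamma*beta*E(x))."""
    return beta / (1.0 - gamma * beta * energy(x, J, H))

def flip_probability(x, i, J, H, beta, gamma):
    """Probability of flipping neuron i under heat-bath (Glauber) dynamics."""
    N = len(x)
    dE = 2.0 * x[i] * (H[i] + J[i] @ x / N)   # energy change if x_i is flipped
    if gamma == 0.0:
        log_ratio = -beta * dE                # classical Glauber rule
    else:
        arg = -gamma * effective_beta(x, J, H, beta, gamma) * dE
        if arg <= -1.0:
            return 0.0                        # flipped state has zero weight
        log_ratio = np.log1p(arg) / gamma     # log of weight(flipped)/weight(current)
    return 0.5 * (1.0 + np.tanh(0.5 * log_ratio))  # numerically stable logistic

# Illustrative example (assumed values): one stored pattern, state near it.
N = 50
xi = np.ones(N)
J = np.outer(xi, xi); np.fill_diagonal(J, 0.0)
H = np.zeros(N)
x = xi.copy(); x[:5] = -1.0
for g in (-0.01, 0.0, 0.01):
    print(g, effective_beta(x, J, H, beta=1.0, gamma=g))
```

In this example the energy is negative, so a negative γ yields an effective inverse temperature above β and a positive γ yields one below it, in line with the feedback described above.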

Mean-field behaviour of curved associative-memory networks

As with regular associative memories58, one can solve the behaviour of curved associative-memory networks through mean-field methods in the thermodynamic limit N → ∞ (Supplementary Note 3). Here the energy is extensive, meaning that it scales with the system’s size N. To ensure the deformation parameter remains independent of system properties such as size or temperature, we scale it as follows:

\[\gamma=\frac{\gamma^{\prime}}{N\beta} \tag{10}\]

Under this condition, we calculate the normalising potential φγ by introducing a delta integral and calculating a saddle-point solution, resulting in a set of order parameters m = {m1, …, mM}, \({m}_{a}=\frac{1}{N}{\sum }_{i}{\xi }_{i}^{a}\langle {x}_{i}\rangle\) in the limit of size N → ∞. This calculation assumes 1 − γβE(x) > 0 so that \({[]}_{+}\) operators can be omitted and φγ is differentiable. The solution results in (for Hi = 0):

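where \({\beta }^{{\prime} }\) is given by

\[\beta^{\prime}=\frac{\beta}{1+\frac{1}{2}\gamma^{\prime}J\sum_{a}m_{a}^{2}} \tag{12}\]

and the values of the mean-field variables ma are found from the following self-consistent equations:

\[m_{a}=\frac{1}{N}\sum_{i}\xi_{i}^{a}\tanh \left(\beta^{\prime}J\sum_{b}\xi_{i}^{b}m_{b}\right) \tag{13}\]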

Similarly, using a generating functional approach59, we use the Glauber rule in (8) to derive a dynamical mean-field given by path integral methods (see Supplementary Note 4). This yields

where \({\beta }^{{\prime} }\) is defined as in (12) for each m. Note that in large systems, we recover the classical nonlinear activation function, and the deformation affects the dynamics only through the effective temperature \({\beta }^{{\prime} }\).

Explosive phase transitions

To illustrate these findings, let us focus on a neural network with a single associative pattern (M = 1), which is similar to the Mattis model60 and equivalent to a homogeneous mean-field Ising model61 (with energy \(E({{\boldsymbol{x}}})=-\frac{1}{N}J{\sum }_{i < j}{x}_{i}{x}_{j}\)) under the change of variables xi ← ξixi. Rewriting (13), we find that a one-pattern curved neural network follows a mean-field model given by

\[m=\tanh \left(\beta^{\prime}Jm\right) \tag{15}\]

\[\beta^{\prime}=\frac{\beta}{1+\frac{1}{2}\gamma^{\prime}Jm^{2}} \tag{16}\]

This result generalises the well-known Ising mean-field solution \(m=\tanh \left(\beta Jm\right)\), which is recovered for γ = 0.

By evaluating these equations, one finds that the model exhibits the usual order-disorder phase transition for positive and small negative values of \({\gamma }^{{\prime} }\) (Fig. 2a top). However, for large negative values of \({\gamma }^{{\prime} }\), a different behaviour emerges: an explosive phase transition8 that displays hysteresis due to HOIs (Fig. 2a bottom). The resulting phase diagram (Fig. 2b) closely resembles phase transitions in higher-order contagion models9,11 and higher-order synchronisation observed in Kuramoto models30.

a Phase transitions of the curved neural network with one associative memory, for J = 1 and values of \({\gamma }^{{\prime} }=-\!0.5\) (top, displaying a second-order phase transition) and \({\gamma }^{{\prime} }=-\!1.5\) (bottom, displaying an explosive phase transition). Solid lines represent the stable fixed points, and dotted lines correspond to unstable fixed points. b Phase diagram of the system. The areas indicated by P and M refer to the usual paramagnetic (disordered) and magnetic (ordered) phases, respectively. The area indicated by Exp represents a phase where ordered and disordered states coexist in an explosive phase transition characterised by a hysteresis loop. c Solutions of (15)-(16) for \({\beta }^{{\prime} },m,\beta\) (black line) for \({\gamma }^{{\prime} }=-\!1.2\), and their projections onto the planes m = 0, β = 0 and \({\beta }^{{\prime} }=0\) (grey lines), which give, respectively, the relation between \(\beta\) and \({\beta }^{{\prime} }\), the solutions of the flat model, and the solutions of the deformed model. d Mean-field dynamics of the single-pattern neural network for β = 1.001 (near criticality from the ordered phase) for some values of \({\gamma }^{{\prime} }\) in [ −1.5, 0]. For large negative \({\gamma }^{{\prime} }\) the dynamics ‘explodes’, with m (top) and \({\beta }^{{\prime} }\) (bottom) converging abruptly.

One can intuitively interpret the effect of the deformation parameter \({\gamma }^{{\prime} }\) by noticing that m depends on β and \({\gamma }^{{\prime} }\) only through \({\beta }^{{\prime} }\): for a fixed \({\beta }^{{\prime} }\), m solves the same self-consistent equation as in the flat model. For \({\gamma }^{{\prime} }=0\), this results in the mean-field behaviour of the regular exponential model, which assigns a value of m to each inverse temperature \(\beta={\beta }^{{\prime} }\). In the case of the deformed model, the possible pairs of solutions \((m,{\beta }^{{\prime} })\) are the same, but their mapping to the inverse temperatures β changes. Namely, this deformation can be interpreted as a stretching (or contraction) of the effective temperature, which maps each pair \((m,{\beta }^{{\prime} })\) to an inverse temperature \(\beta={\beta }^{{\prime} }(1+\frac{1}{2}{\gamma }^{{\prime} }J{m}^{2})\) according to (16). Thus, one can obtain the mean-field solutions of the deformed model as mappings of the solutions of the original model. This is illustrated in Fig. 2c, where the solution curve in \(({\beta }^{{\prime} },m,\beta )\) is projected onto the planes β = 0 and \({\beta }^{{\prime} }=0\), yielding the solutions for the flat (\({\gamma }^{{\prime} }=0\)) and the deformed (\({\gamma }^{{\prime} }=-1.2\)) models, respectively.
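The mapping described above lends itself to a short numerical sketch. Assuming the one-pattern equations take the form \(m=\tanh ({\beta }^{{\prime} }Jm)\) and \(\beta={\beta }^{{\prime} }(1+\frac{1}{2}{\gamma }^{{\prime} }J{m}^{2})\), the ordered branch can be traced without root-finding by parametrising it with \(u={\beta }^{{\prime} }Jm\). The function name `ordered_branch` and the grid settings are illustrative assumptions.

```python
import numpy as np

def ordered_branch(gamma_p, J=1.0, u_max=6.0, n=2000):
    """Trace the m > 0 mean-field branch parametrised by u = beta' * J * m.

    Returns arrays (m, beta_eff, beta), where beta_eff is the effective
    inverse temperature beta' and beta is the actual inverse temperature.
    """
    u = np.linspace(1e-3, u_max, n)
    m = np.tanh(u)                       # m = tanh(beta' J m)
    beta_eff = u / (J * m)               # beta' recovered from u and m
    beta = beta_eff * (1.0 + 0.5 * gamma_p * J * m**2)
    return m, beta_eff, beta

for gamma_p in (0.0, -0.5, -1.5):
    m, beta_eff, beta = ordered_branch(gamma_p)
    folds_back = bool(np.any(np.diff(beta) < 0))
    print(f"gamma' = {gamma_p:+.1f}: beta non-monotonic along branch = {folds_back}")
```

When β is not monotonic along the branch, several values of m share the same β, which is the multi-stability behind the hysteresis loop. Expanding around the critical point under the same assumptions (J = 1) suggests that the fold appears once \({\gamma }^{{\prime} }\) drops below −2/3.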

In order to gain a deeper understanding of the explosive nature of this phase transition, we study the dynamics of the single-pattern neural network. By rewriting (14) for M = 1, and under the change of variables mentioned above to remove ξ, the dynamical mean-field equation of the system reduces to

where \({\beta }^{{\prime} }\) is calculated as in (16). Simulations of the dynamical mean-field equations for values of β just above the critical point are depicted in Fig. 2d. Trajectories with strongly negative \({\gamma }^{{\prime} }\) saturate earlier than smaller negative \({\gamma }^{{\prime} }\), confirming accelerated convergence. During this process, the effective inverse temperature \({\beta }^{{\prime} }\) rapidly increases until it saturates, creating a positive feedback loop between \({\beta }^{{\prime} }\) and m that gives rise to the explosive nature of the phase transition. This positive loop occurs only if \({\gamma }^{{\prime} }\) is negative; otherwise, negative feedback simply makes the convergence of m slower.

Overlaps between memory basins of attraction

A key property of associative-memory networks is their ability to retrieve patterns in different contexts. In the case of one-pattern associative-memory networks, the energy function \(E({{\boldsymbol{x}}})=-\frac{J}{N}{\sum }_{i < j}{x}_{i}{\xi }_{i}{\xi }_{j}{x}_{j}\) is a quadratic function with two minima at x = ± ξ, which configure global attractors. Instead, a two-pattern associative-memory network has an energy function with four minima (if sufficiently separated), but their attraction basins can overlap when the patterns are correlated.

To study the degree of the overlap between pairs of patterns, we analyse solutions of (13) for a network with two patterns with correlation \(\langle {\xi }_{i}^{1}{\xi }_{i}^{2}\rangle=C\) (see Supplementary Note 3.3 for details). In this scenario, the system is described by two mean-field patterns:

with w = 3 − 2a = ± 1 for a = 1, 2, and

Figure 3 shows how the hysteresis effect and explosive phase transitions persist in the case of two patterns for C = 0.2 with negative \({\gamma }^{{\prime} }\). This example shows two consecutive, overlapping explosive bifurcations (going from 1 to 2, and then to 4 fixed points), creating a hysteresis involving 7 fixed points within a more compressed parameter range of β than the classical case. Consequently, the memory-retrieval region for the four embedded memories expands. These results illustrate complex hysteresis cycles as well as an increased memory capacity for finite temperatures by negative values of \({\gamma }^{{\prime} }\). This enhanced capability for memory retrieval is further investigated through the replica analyses in the next section.

a Values of φγ for different mean-field values m1, m2, indicating the attractor structure of the network for different values of β with J = 1, C = 0.2 for \({\gamma }^{{\prime} }=0\) (top row) and \({\gamma }^{{\prime} }=-1.2\) (bottom row). b Bifurcations of the order parameters m1, m2. For \({\gamma }^{{\prime} }=0\) we observe an attractor bifurcating into two and then into four. For \({\gamma }^{{\prime} }=-1.2\), we observe the same sequence, but with a coexistence hysteresis regime in which 7 attractors are possible.

Memory retrieval with an extensive number of patterns

Next, we investigate how the deformation related to γ impacts the memory-storage capacity of associative memories. In classical associative networks of N neurons, the energy function is defined as \(E({{\boldsymbol{x}}})=-\frac{J}{N}{\sum }_{a=1}^{M}{\sum }_{i < j}{x}_{i}{\xi }_{i}^{a}{\xi }_{j}^{a}{x}_{j}\) with M = αN. As the number of patterns learned by the network increases, the system transitions to a disordered spin-glass state in the thermodynamic limit. Furthermore, one can analytically solve this model62,63,64,65. For example, the replica trick can be used to determine the memory capacity of the system62 and to identify theoretically the critical value of α at which memory retrieval becomes impossible, leading to a disordered spin-glass phase. Here, we apply a similar approach to reveal how deformed associative memory networks afford an enhanced memory capacity.

Applying the replica trick in conjunction with the methods outlined in previous sections allows us to solve the system (see Supplementary Note 5). This method entails computing a mean-field variable m corresponding to one of the patterns ξ a and averaging over the others. For simplicity, a pattern with all positive unity values ξ a = (1, 1, …, 1) is considered, which is equivalent to any other single pattern up to sign-flip changes of variables. The degree of similarity or overlap of this pattern with other patterns in the system introduces a new order parameter q, which contributes to measuring disorder in the system. After introducing the relevant order parameters and solving under a replica-symmetry assumption, the normalising potential is derived as

where J is a scaling factor, and the order parameters are defined as

with

As in previous cases, the model is governed by an effective temperature

This solution differs from the models in previous sections by the self-dependence of \({\beta }^{{\prime} }\).

To obtain a phase diagram, we solved (21)-(22) numerically for given \(\alpha,{\beta }^{{\prime} }\) at \({\gamma }^{{\prime} }=0\), and rescaled the inverse temperature as in the previous section to obtain the corresponding values of β for each \({\gamma }^{{\prime} }\). Using the resulting order parameters and calculating the free energy for each \(\alpha,\beta,{\gamma }^{{\prime} }\), we constructed the phase diagram of the system (similarly to regular associative memories58,62) characterised by the following distinct phases (Fig. 4):

- A paramagnetic phase (P), corresponding to disordered solutions with m = q = 0, where memory retrieval fails due to the dominance of fluctuations.
- A ferromagnetic phase (F), corresponding to stable memory-retrieval solutions with m > 0 and q > 0.
- A spin-glass phase (SG), exhibiting spurious-retrieval solutions with m = 0 and q > 0.
- A mixed phase (M), where F and SG types of solutions coexist, with the spin-glass solutions being the global minimum of the normalising potential φγ.

Phase diagram of a curved associative memory with an extensive number of encoded patterns M = αN and J = 1 for (a) different T = 1/β at \({\gamma }^{{\prime} }=0\) (black dashed lines), 0.8, − 0.8 (solid lines), and for (b) different \({\gamma }^{{\prime} }\) at β = 2. F indicates the ferromagnetic (i.e., memory retrieval) phase, SG the spin-glass phase (where saturation makes memory retrieval unviable), M a mixed phase, and P the paramagnetic region. In both F and M, ferromagnetic and spin-glass solutions coexist; the two phases are distinguished by whether the memory-retrieval solution (F) or the spin-glass solution (M) is the global minimum of the normalising potential φγ. The dotted lines in (a) near T = 0 indicate the AT lines, below which the replica-symmetric solution is not valid. Increasing \({\gamma }^{{\prime} }\) to larger negative values extends the retrieval phase into larger values of α, indicating an increased memory capacity, while larger positive values reduce the extent of the mixed phase, increasing robustness of memory retrieval.

For \({\gamma }^{{\prime} }=0\) (black dashed lines), the phase transition reflects the behaviour of associative memories near saturation58,62. With negative \({\gamma }^{{\prime} }\) (red lines), we observe an expansion of the ferromagnetic and mixed phases, indicating an enhanced memory-storage capacity by the deformation. Conversely, a positive value of \({\gamma }^{{\prime} }\) (yellow lines) decreases the memory capacity but reduces the extent of the mixed phase. In the mixed phase, retrieved memories (m > 0) are represented at a local—but not global—minimum of the normalising potential φγ in (20), indicating a larger probability of observing spurious patterns. Thus, we expect positive values of \({\gamma }^{{\prime} }\) to result in more robust memory retrieval.

The stability of the replica symmetry solution is given by the condition

which is captured by the dotted lines near zero temperature in Fig. 4a. Note that all solutions in Fig. 4b are stable under the replica symmetry assumption.

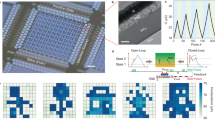

We complement the analysis from the previous section with an experimental study of a system encoding patterns from an image classification benchmark. The patterns are sourced from the CIFAR-100 dataset, which comprises 60,000 32 × 32 colour images66. To adapt the dataset to binary patterns suitable for storage in an associative memory, we processed each RGB channel by assigning a value of 1 to pixels with values greater than the channel’s median value and −1 otherwise (Fig. 5a). The resulting array of N = 32 ⋅ 32 ⋅ 3 binary values for each image was assigned to patterns ξ a. Note that associative memories (as well as our theory above) usually assume that patterns are relatively uncorrelated, and specific methods are required to adapt them to correlated patterns67,68. To simplify the problem, we conducted experiments using a selection of 100 images with covariance values smaller than \(10/\sqrt{N}\) (the standard deviation of the covariance values for uncorrelated patterns is \(1/\sqrt{N}\)). We used a random search to select patterns with low correlations: we randomly picked an image and replaced it if its correlation exceeded the threshold, repeating until all correlations were below it.
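The preprocessing described above can be sketched as follows. The sketch assumes that the median is taken per image and per channel, uses the normalised overlap \(\frac{1}{N}{\sum }_{i}{\xi }_{i}^{a}{\xi }_{i}^{b}\) as a stand-in for the covariance between two patterns, and runs on random arrays instead of CIFAR-100 so that it stays self-contained. The function names `binarise_image` and `select_low_correlation` are hypothetical.

```python
import numpy as np

def binarise_image(img):
    """Map an (H, W, 3) image to a flat +1/-1 pattern using per-channel medians."""
    med = np.median(img, axis=(0, 1), keepdims=True)
    return np.where(img > med, 1, -1).reshape(-1)

def select_low_correlation(patterns, n_select, threshold, rng, max_iter=10_000):
    """Random search for n_select patterns whose pairwise overlaps stay below threshold."""
    n_total, N = patterns.shape
    chosen = list(rng.choice(n_total, size=n_select, replace=False))
    for _ in range(max_iter):
        P = patterns[chosen]
        C = np.abs(P @ P.T) / N            # |overlap| between selected patterns
        np.fill_diagonal(C, 0.0)
        worst = int(np.argmax(C.max(axis=1)))
        if C[worst].max() <= threshold:
            return np.array(chosen)
        # Replace the most correlated pattern with a random unused one.
        candidates = np.setdiff1d(np.arange(n_total), chosen)
        chosen[worst] = int(rng.choice(candidates))
    raise RuntimeError("no admissible subset found within max_iter")

rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(20, 32, 32, 3))   # synthetic stand-in data
patterns = np.stack([binarise_image(im) for im in images])
threshold = 10 / np.sqrt(patterns.shape[1])
chosen = select_low_correlation(patterns, 10, threshold, rng)
print(patterns.shape, chosen)
```

With real images the search may need many replacements before all pairwise overlaps fall below the threshold, which is why the loop is capped by `max_iter`.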

a Examples of CIFAR-100 images (top) and their RGB binarised versions (bottom). Every 32 × 32 × 3 binary RGB pixel value for each image a is assigned to the value of one position of pattern \({\xi }_{i}^{a}\). b, c Mean and variance of pattern retrieval values obtained in experiments, measured by the overlap between the final state of the network and the encoded pattern.

We evaluated the memory retrieval capacity of networks with various degrees of curvature γ by encoding different numbers of memories, as described in (7). As a measure of performance, we evaluated the stability of the network by assigning an initial state x = ξ a and calculating the overlap \(o={\sum }_{i}{x}_{i}{\xi }_{i}^{a}\) after T = 30N Glauber updates for β = 2, J = 1. The process was repeated R = 500 times from different initial conditions (different encoded patterns and different initial states) to estimate the value of m in (21). Experimental outcomes confirm our theoretical results, revealing that memory capacity increases with negative values of \({\gamma }^{{\prime} }\), while positive values reduce the memory capacity (Fig. 5b), but reduce the extent and magnitude of the high variability region in pattern retrieval (Fig. 5c), which is consistent with the reduction of the mixed phase. Note that the resulting memory capacity of the system observed in our experiments (i.e., the value of α at which the transition happens) is diminished due to the presence of correlations among some of the memorised patterns.
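A stability test of this kind can be sketched on synthetic data as below. The sketch assumes Hebbian couplings with zero diagonal, the scaling \(\gamma={\gamma }^{{\prime} }/(N\beta )\), single-neuron updates whose flip probability follows from the ratio of unnormalised weights \([1-\gamma \beta E]_{+}^{1/\gamma}\), and an overlap normalised by N so that it is comparable with m. Random patterns replace the CIFAR-100 patterns, and the function name `retrieval_overlap` is hypothetical.

```python
import numpy as np

def retrieval_overlap(patterns, a, beta, gamma_p, J=1.0, sweeps=30, rng=None):
    """Start at pattern a, run sweeps*N asynchronous updates, return the final overlap."""
    rng = np.random.default_rng() if rng is None else rng
    M, N = patterns.shape
    W = J * (patterns.T @ patterns).astype(float)
    np.fill_diagonal(W, 0.0)
    x = patterns[a].astype(float).copy()
    h = W @ x / N                    # local fields (1/N) sum_j J_ij x_j
    E = -0.5 * x @ h                 # E(x) = -(1/N) sum_{i<j} J_ij x_i x_j
    for _ in range(sweeps * N):
        i = rng.integers(N)
        dE = 2.0 * x[i] * h[i]       # energy change if neuron i flips
        if gamma_p == 0.0:
            log_ratio = -beta * dE   # classical Glauber rule
        else:
            old = 1.0 - gamma_p * E / N           # assumed positive
            new = 1.0 - gamma_p * (E + dE) / N
            if new <= 0.0:
                continue             # flipped state has zero weight
            log_ratio = (N * beta / gamma_p) * (np.log(new) - np.log(old))
        if rng.random() < 0.5 * (1.0 + np.tanh(0.5 * log_ratio)):
            h -= 2.0 * x[i] * W[:, i] / N   # update fields after the flip
            x[i] = -x[i]
            E += dE
    return float(x @ patterns[a]) / N

rng = np.random.default_rng(0)
pats = rng.choice([-1, 1], size=(5, 200))   # illustrative sizes (assumed)
for gamma_p in (-0.8, 0.0, 0.8):
    o = retrieval_overlap(pats, 0, beta=2.0, gamma_p=gamma_p, rng=rng)
    print(f"gamma' = {gamma_p:+.1f}: final overlap = {o:.3f}")
```

Averaging the returned overlap over many patterns and random seeds, for increasing numbers of stored patterns, would give curves of the kind summarised by the mean and variance of retrieval.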

Finally, we investigated transitions near the spin-glass phase boundaries. First, we note that, for J → 0 and α = J−2, the model in (21)-(22) converges to (see Supplementary Note 5)

which at γ = 0 recovers the well-known Sherrington–Kirkpatrick model69 (see Supplementary Note 6). While in the classical case a phase transition occurs from a paramagnetic to a spin-glass phase, the curvature effect of \({\gamma }^{{\prime} }\ne 0\) modifies the nature of this transition. For small values of \({\gamma }^{{\prime} }\), the system exhibits a continuous phase transition akin to the Sherrington–Kirkpatrick spin-glass, where \(\frac{dq}{d\beta }\) shows a cusp (Fig. 6a). However, for \({\gamma }^{{\prime} }=-\!1\) the phase transition becomes second-order, displaying a divergence of \(\frac{dq}{d\beta }\) at the critical point (Fig. 6b). Moreover, increasing the magnitude of negative \({\gamma }^{{\prime} }\) leads to a first-order phase transition with hysteresis (Fig. 6c), resembling the explosive phase transition observed in the single-pattern associative-memory network. This hybrid phase transition combines the typical critical divergence of a second-order phase transition with a genuine discontinuity, similar to ‘type V’ explosive phase transitions8.

Phase transitions for order parameter q for replica-symmetric disordered spin models displaying (a) a cusp phase transition for \({\gamma }^{{\prime} }=-\!0.5\), (b) a second-order phase transition for \({\gamma }^{{\prime} }=-\!1.0\) and (c) an explosive phase transition for \({\gamma }^{{\prime} }=-\!1.2\). d Phase diagram of the explosive spin glass, displaying a paramagnetic (P), spin-glass (SG) and an explosive phase (Exp).

We analytically calculated the properties of these phase transitions (see Supplementary Note 6). By computing the solution at \({\gamma }^{{\prime} }=0\) and rescaling \({\beta }^{{\prime} }\), we determined that the critical point is located at \({\beta }_{c}=1+\frac{1}{2}{\gamma }^{{\prime} }\) (consistent with Fig. 6a–c). The slope of the order parameter around the critical point is, for \({\gamma }^{{\prime} }\le -\!1\), equal to \({(1+{\gamma }^{{\prime} })}^{-1}\), indicating the onset of a second-order phase transition as depicted in Fig. 6b. The resulting phase diagram of the curved Sherrington-Kirkpatrick model is shown in Fig. 6d.

Comparison with other dense associative memory models

Although our primary objective is to develop a parsimonious model of HOIs to explain higher-order phenomena, our framework can also be used to explain the behaviour of modern networks with HOIs, including the recently proposed relativistic Hopfield model32,33,34 and dense associative memories20,21. For this, let us consider the energy \({{\mathcal{F}}}[E]\) of the exponential family distribution \(p({{\boldsymbol{x}}}) \sim {e}^{-\beta {{\mathcal{F}}}[E]}\) given by the nonlinear transformation (denoted by \({{\mathcal{F}}}\)) of the classical energy E(x). The deformed exponential models in this study correspond to \({{\mathcal{F}}}[E]=-\frac{N}{{\gamma }^{{\prime} }}\ln (1-{\gamma }^{{\prime} }E/N)\), while the relativistic model corresponds to \({{\mathcal{F}}}[E]=-\frac{N}{{\gamma }^{{\prime} }}\sqrt{1-{\gamma }^{{\prime} }E/N}\). For the deformed exponential, the term \({{\mathcal{F}}}[E]\) can be expanded as

\[\mathcal{F}[E]=\sum_{k=1}^{\infty}\frac{(\gamma^{\prime})^{k-1}}{k\,N^{k-1}}E^{k}=E+\frac{\gamma^{\prime}}{2N}E^{2}+\frac{(\gamma^{\prime})^{2}}{3N^{2}}E^{3}+\cdots\]

When E depends on the quadratic Mattis magnetisation (i.e., \(E=-{\sum }_{a}\frac{1}{N}{\left({\sum }_{i}{\xi }_{i}^{a}{x}_{i}\right)}^{2}\)), then \({{\mathcal{F}}}[E]\) expands in terms of even-order HOIs of \({\sum }_{i}{\xi }_{i}^{a}{x}_{i}\). For \({\gamma }^{{\prime} } < 0\), all coefficients of \({\sum }_{i}{\xi }_{i}^{a}{x}_{i}\) in the expansion are negative, indicating that embedded memories have deeper energy minima than in the classical case. The same signs appear for each order in the relativistic energy with \({\gamma }^{{\prime} } < 0\). We also note that β in the free energy of both the deformed exponential and relativistic models in the limit of large N appears scaled according to an effective inverse temperature given by \({\beta }^{{\prime} }=\beta {\partial }_{E}{{\mathcal{F}}}[E]\) (e.g., Eq. (11) and Eq. (6.2) in Ref. 34). Moreover, the input in the Glauber dynamics is approximated for large sizes as

The effective inverse temperatures \({\beta }^{{\prime} }=\beta {(1-{\gamma }^{{\prime} }E/N)}^{-1}\) for the deformed exponential model and \({\beta }^{{\prime} }={2}^{-1}\beta {(1-{\gamma }^{{\prime} }E/N)}^{-1/2}\) for the relativistic model are decreasing functions of E when \({\gamma }^{{\prime} } < 0\), resulting in an acceleration of memory retrieval, with lower energy E resulting in higher \({\beta }^{{\prime} }\) (lower temperature). While the relativistic model has been studied for \({\gamma }^{{\prime} } > 0\)32,33,34, we conjecture it may exhibit explosive phase transitions if \({\gamma }^{{\prime} } < 0\). Conversely, a positive \({\gamma }^{{\prime} }\) introduces alternating signs in even-order terms of \({\sum }_{i}{\xi }_{i}^{a}{x}_{i}\), and a shallower energy landscape due to a reduction in \({\beta }^{{\prime} }\). This shallower energy landscape reduces the memory capacity of the deformed exponential networks by expanding the spin-glass phases (Fig. 4), but also enlarges the recall (ferromagnetic) region by mitigating the formation of spurious memories given by overlapping patterns in the mixed phase (in alignment with previous work32 on mitigation of spurious memories in the relativistic model).
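The dependence of the effective inverse temperature on the energy can be checked numerically. The sketch below applies the definition \({\beta }^{{\prime} }=\beta {\partial }_{E}{{\mathcal{F}}}[E]\) to the two energies \({{\mathcal{F}}}[E]\) given in the text, using a central finite difference, and compares the result with the closed forms obtained by differentiating them by hand. The values of N, β and \({\gamma }^{{\prime} }\) and all function names are illustrative assumptions.

```python
import math

# Illustrative values (assumptions, not taken from the article).
N = 100          # system size
BETA = 1.0       # bare inverse temperature
GAMMA_P = -0.5   # deformation parameter gamma' < 0


def f_deformed(e):
    """Deformed-exponential energy F[E] = -(N/gamma') ln(1 - gamma' E / N)."""
    return -(N / GAMMA_P) * math.log(1.0 - GAMMA_P * e / N)


def f_relativistic(e):
    """Relativistic energy F[E] = -(N/gamma') sqrt(1 - gamma' E / N)."""
    return -(N / GAMMA_P) * math.sqrt(1.0 - GAMMA_P * e / N)


def beta_eff_numeric(f, e, h=1e-4):
    """beta' = beta * dF/dE, estimated with a central finite difference."""
    return BETA * (f(e + h) - f(e - h)) / (2.0 * h)


def beta_eff_deformed(e):
    """Closed form from differentiating f_deformed: beta / (1 - gamma' E / N)."""
    return BETA / (1.0 - GAMMA_P * e / N)


def beta_eff_relativistic(e):
    """Closed form from differentiating f_relativistic: (beta/2) / sqrt(1 - gamma' E / N)."""
    return 0.5 * BETA / math.sqrt(1.0 - GAMMA_P * e / N)


energies = [-90.0, -60.0, -30.0, 0.0]  # E increases from left to right
deformed = [beta_eff_deformed(e) for e in energies]
relativistic = [beta_eff_relativistic(e) for e in energies]

for e, bd, br in zip(energies, deformed, relativistic):
    print(f"E = {e:6.1f}   beta'_deformed = {bd:.4f}   beta'_relativistic = {br:.4f}")
```

For \({\gamma }^{{\prime} } < 0\) both columns shrink as E increases, so states closer to a stored pattern (lower E) are sampled at a lower effective temperature.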

This perspective on accelerated memory retrieval by nonlinearity extends to dense associative memories20,21, which achieve supralinear memory capacities through nonlinear pattern encoding. Specifically, their energy function is given by \({{\mathcal{F}}}=-\!{\sum }_{a}F({\sum }_{i}{\xi }_{i}^{a}{x}_{i})\) with F being, e.g., a thresholded power function20, \(F(z)={\left[z\right]}_{\!+}^{p}\), or an exponential nonlinearity21, \(F(z)={e}^{z}\), at zero temperature. These nonlinearities narrow basins of attraction, reducing memory overlap and preventing transitions to the spin-glass phase. The jumps in the Glauber dynamics of such systems are weighted by an accelerating function. Namely, from our perspective, the dynamics of such systems can be described via positive feedback on weights linked to a specific memory, which increase during memory retrieval. This follows from the fact that, relating the linear difference in Mattis terms \(\Delta {\epsilon }_{k}^{a}\equiv 2{\xi }_{k}^{a}{x}_{k}\) with the nonlinear difference \(\Delta {F}_{k}^{a}\equiv F\left({\sum }_{i}{\xi }_{i}^{a}{x}_{i}\right)-F\left({\sum }_{i}{\xi }_{i}^{a}{x}_{i}-\Delta {\epsilon }_{k}^{a}\right)\), the update of the kth neuron is determined by the sign of

Here, we show that the effective weight \({w}_{k}^{a}\equiv \frac{\Delta {F}_{k}^{a}}{\Delta {\epsilon }_{k}^{a}}\) becomes an increasing function of \({\sum }_{i}{\xi }_{i}^{a}{x}_{i}\) when F is the power, exponential, or more generally, a convex function (see Supplementary Note 7). Thus, increasing \({\sum }_{i}{\xi }_{i}^{a}{x}_{i}\) as pattern \({{\boldsymbol{\xi }}}^{a}\) is retrieved strengthens its basin of attraction and ensures positive feedback. Meanwhile, retrieval of \({{\boldsymbol{\xi }}}^{a}\) reduces \({\sum }_{i}{\xi }_{i}^{b}{x}_{i}\) for orthogonal patterns \({{\boldsymbol{\xi }}}^{b}\), lowering their weights and suppressing their recall to minimise interference. This competitive mechanism highlights the higher memory capacity of these models compared to curved neural networks with uniform temperature scaling. Unlike the effective inverse temperature in curved networks, which depends only on the system’s state or energy, the effective weight in updating the kth neuron additionally depends on the neuron’s state \({x}_{k}\), thus no longer representing a global modulation of the energy.
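The monotonicity of the effective weight can be illustrated numerically. The sketch below evaluates \({w}_{k}^{a}=\Delta {F}_{k}^{a}/\Delta {\epsilon }_{k}^{a}\) for the two nonlinearities named in the text, writing z for the overlap \({\sum }_{i}{\xi }_{i}^{a}{x}_{i}\) and taking \(\Delta {\epsilon }_{k}^{a}=\pm 2\), which follows from \({\xi }_{k}^{a}{x}_{k}=\pm 1\). The exponent p = 3, the grid of overlaps and the function names are illustrative assumptions.

```python
import math


def thresholded_power(z, p=3):
    """F(z) = [z]_+^p, the thresholded power nonlinearity (p = 3 is an assumed example)."""
    return max(z, 0.0) ** p


def exponential(z):
    """F(z) = e^z, the exponential nonlinearity."""
    return math.exp(z)


def effective_weight(F, z, delta_eps):
    """w = [F(z) - F(z - delta_eps)] / delta_eps, a secant slope of F ending at z."""
    return (F(z) - F(z - delta_eps)) / delta_eps


OVERLAPS = [2, 4, 6, 8, 10]  # increasing overlap z with pattern a (illustrative grid)

results = {}
for name, F in (("power", thresholded_power), ("exponential", exponential)):
    for delta_eps in (2, -2):  # neuron k aligned (+2) or anti-aligned (-2) with the pattern
        weights = [effective_weight(F, z, delta_eps) for z in OVERLAPS]
        results[(name, delta_eps)] = weights
        print(name, delta_eps, [round(w, 2) for w in weights])
```

Because the weight is the slope of a secant of F, convexity of F is what makes it grow with z for either sign of \(\Delta {\epsilon }_{k}^{a}\).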

Discussion

HOIs play a critical role in enabling emergent collective phenomena in natural and artificial systems. Modelling HOIs is, however, highly non-trivial, often requiring advanced analytic tools (such as simplicial complexes or hypergraphs) that entail an exponential increase in parameters for large systems. In this paper, we addressed this issue by leveraging the maximum entropy principle to effectively capture HOIs in models via a deformation parameter γ, which is associated with the Rényi entropy. Given their close connection with statistical physics, this family of models provides a useful setup to investigate the effect of HOIs on spin systems, including explosive ferromagnetic and spin-glass phase transitions, extending studies on anomalous phase transitions found in other systems2,7,8,9,11, and the capability of networks to store memories.

The observed effects in curved neural networks can be explained via an effective temperature, inducing a positive or negative feedback effect in memory retrieval. As we discussed above, this effect is present in different forms across other dense associative memories20,21,34. A similar argument may apply to diffusion models framed within dense associative memories25,26, where the energy follows a log-sum-exp nonlinearity. Thus, the accelerated mechanism found in this study clarifies memory retrieval in advanced associative networks, providing an important step toward designing extended memory capacities and improved noise scheduling.

Curved neural networks also provide insights into biological neural systems, where evidence suggests the presence of alternating positive and negative HOIs for even and odd orders, respectively. This alternation leads to sparse neuronal activity, which has been shown to be instrumental for enabling extended periods of total silence5,13,14,15,35. Interestingly, such sparse activity patterns may coexist with the accelerated memory retrieval dynamics, as both involve positive even-order HOIs. The attainment of enhanced memory, combined with sparse activity, presents a promising direction for understanding energy-efficient biological neuronal networks35,36. Future work may investigate how curved neural networks might support both energy efficiency and high memory capacities, potentially by adopting a thresholded, supralinear neuronal activation function20,35. Additionally, developing statistical methods for fitting these models to experimental data (i.e., theories for learning) represents an important, yet largely unexplored, research avenue. Together, these research directions offer a compelling path to uncover the principles of efficient information coding in biological neural systems.

Overall, our results demonstrate the benefits of considering the maximum entropy principle, emergent HOIs, and nonlinear network dynamics as theoretically intertwined notions. As showcased here, such an integrated framework reveals how information encoding, retrieval dynamics, and memory capacity in neural networks are mediated by HOIs, providing principled, analytically tractable tools and insights from statistical mechanics and nonlinear dynamics. More generally, the framework presented in this work extends beyond neural networks and contributes to a general theory of HOIs, paving the road toward a principled study of higher-order phenomena in complex networks.

Data availability

The CIFAR-100 dataset used in this study is available at https://www.cs.toronto.edu/~kriz/cifar.html.

Code availability

The code generated in this study is available in the GitHub repository, https://github.com/MiguelAguilera/explosive-neural-networks.

References

Lambiotte, R., Rosvall, M. & Scholtes, I. From networks to optimal higher-order models of complex systems. Nat. Phys. 15, 313–320 (2019).

Battiston, F. et al. The physics of higher-order interactions in complex systems. Nat. Phys. 17, 1093–1098 (2021).

Amari, S.-i., Nakahara, H., Wu, S. & Sakai, Y. Synchronous firing and higher-order interactions in neuron pool. Neural Comput. 15, 127–142 (2003).

Kuehn, C. & Bick, C. A universal route to explosive phenomena. Sci. Adv. 7, eabe3824 (2021).

Shomali, S. R., Rasuli, S. N., Ahmadabadi, M. N. & Shimazaki, H. Uncovering hidden network architecture from spiking activities using an exact statistical input-output relation of neurons. Commun. Biol. 6, 169 (2023).

Thibeault, V., Allard, A. & Desrosiers, P. The low-rank hypothesis of complex systems. Nat. Phys. 20, 294–302 (2024).

Angst, S., Dahmen, S. R., Hinrichsen, H., Hucht, A. & Magiera, M. P. Explosive Ising. J. Stat. Mech.: Theory Exp. 2012, L06002 (2012).

D’Souza, R. M., Gómez-Gardeñes, J., Nagler, J. & Arenas, A. Explosive phenomena in complex networks. Adv. Phys. 68, 123–223 (2019).

Iacopini, I., Petri, G., Barrat, A. & Latora, V. Simplicial models of social contagion. Nat. Commun. 10, 2485 (2019).

Millán, A. P., Torres, J. J. & Bianconi, G. Explosive higher-order Kuramoto dynamics on simplicial complexes. Phys. Rev. Lett. 124, 218301 (2020).

Landry, N. W. & Restrepo, J. G. The effect of heterogeneity on hypergraph contagion models. Chaos 30 (2020).

Montani, F. et al. The impact of high-order interactions on the rate of synchronous discharge and information transmission in somatosensory cortex. Philos. Trans. R. Soc. A: Math. Phys. Eng. Sci. 367, 3297–3310 (2009).

Tkačik, G. et al. Searching for collective behavior in a large network of sensory neurons. PLoS Comput. Biol. 10, e1003408 (2014).

Ohiorhenuan, I. E. et al. Sparse coding and high-order correlations in fine-scale cortical networks. Nature 466, 617–621 (2010).

Shimazaki, H., Sadeghi, K., Ishikawa, T., Ikegaya, Y. & Toyoizumi, T. Simultaneous silence organizes structured higher-order interactions in neural populations. Sci. Rep. 5, 9821 (2015).

Tkačik, G. et al. The simplest maximum entropy model for collective behavior in a neural network. J. Stat. Mech.: Theory Exp. 2013, P03011 (2013).

Tkačik, G. et al. Thermodynamics and signatures of criticality in a network of neurons. Proc. Natl Acad. Sci. 112, 11508–11513 (2015).

Burns, T. F. & Fukai, T. Simplicial Hopfield networks. In: The Eleventh International Conference on Learning Representations (2022).

Bybee, C. et al. Efficient optimization with higher-order Ising machines. Nat. Commun. 14, 6033 (2023).

Krotov, D. & Hopfield, J. J. Dense associative memory for pattern recognition. Adv. Neural Inform. Process. Syst. 29 (2016).

Demircigil, M., Heusel, J., Löwe, M., Upgang, S. & Vermet, F. On a model of associative memory with huge storage capacity. J. Stat. Phys. 168, 288–299 (2017).

Agliari, E. et al. Dense Hebbian neural networks: a replica symmetric picture of unsupervised learning. Phys. A: Stat. Mech. Appl. 627, 129143 (2023).

Lucibello, C. & Mézard, M. Exponential capacity of dense associative memories. Phys. Rev. Lett. 132, 077301 (2024).

Krotov, D. A new frontier for Hopfield networks. Nat. Rev. Phys. 5, 366–367 (2023).

Ambrogioni, L. In search of dispersed memories: Generative diffusion models are associative memory networks. Entropy 26, 381 (2024).

Ambrogioni, L. The statistical thermodynamics of generative diffusion models: Phase transitions, symmetry breaking, and critical instability. Entropy 27, 291 (2025).

Bovier, A. & Niederhauser, B. The spin-glass phase-transition in the Hopfield model with p-spin interactions. Adv. Theor. Math. Phys. 5, 1001–1046 (2001).

Agliari, E., Fachechi, A. & Marullo, C. Nonlinear PDEs approach to statistical mechanics of dense associative memories. J. Math. Phys. 63 (2022).

Amari, S.-i. Information geometry on hierarchy of probability distributions. IEEE Trans. Inf. Theory 47, 1701–1711 (2001).

Skardal, P. S. & Arenas, A. Higher order interactions in complex networks of phase oscillators promote abrupt synchronization switching. Commun. Phys. 3, 218 (2020).

Ganmor, E., Segev, R. & Schneidman, E. Sparse low-order interaction network underlies a highly correlated and learnable neural population code. Proc. Natl Acad. Sci. 108, 9679–9684 (2011).

Barra, A., Beccaria, M. & Fachechi, A. A new mechanical approach to handle generalized Hopfield neural networks. Neural Netw. 106, 205–222 (2018).

Agliari, E., Barra, A. & Notarnicola, M. The relativistic Hopfield network: rigorous results. J. Math. Phys. 60 (2019).

Agliari, E., Alemanno, F., Barra, A. & Fachechi, A. Generalized Guerra’s interpolation schemes for dense associative neural networks. Neural Netw. 128, 254–267 (2020).

Rodríguez-Domínguez, U. & Shimazaki, H. Alternating shrinking higher-order interactions for sparse neural population activity. Preprint at https://arxiv.org/abs/2308.13257 (2023).

Santos, S., Niculae, V., McNamee, D. & Martins, A. F. Hopfield-Fenchel-Young networks: a unified framework for associative memory retrieval. Preprint at https://arxiv.org/abs/2411.08590 (2024).

Hoover, B., Chau, D. H., Strobelt, H., Ram, P. & Krotov, D. Dense associative memory through the lens of random features. Adv. Neural Inform. Process. Syst. 38 (2024).

Jaynes, E. T. Probability Theory: The Logic of Science (Cambridge University Press, 2003).

Cofré, R., Herzog, R., Corcoran, D. & Rosas, F. E. A comparison of the maximum entropy principle across biological spatial scales. Entropy 21, 1009 (2019).

Jaynes, E. T. Information theory and statistical mechanics. Phys. Rev. 106, 620 (1957).

Tsallis, C., Mendes, R. & Plastino, A. R. The role of constraints within generalized nonextensive statistics. Phys. A: Stat. Mech. Appl. 261, 534–554 (1998).

Morales, P. A. & Rosas, F. E. Generalization of the maximum entropy principle for curved statistical manifolds. Phys. Rev. Res. 3, 033216 (2021).

Valverde-Albacete, F. & Peláez-Moreno, C. The case for shifting the Rényi entropy. Entropy 21, 46 (2019).

Umarov, S., Tsallis, C. & Steinberg, S. On a q-central limit theorem consistent with nonextensive statistical mechanics. Milan J. Math. 76, 307–328 (2008).

Wong, T.-K. L. & Zhang, J. Tsallis and Rényi deformations linked via a new λ-duality. IEEE Trans. Inf. Theory 68, 5353–5373 (2022).

Guisande, N. & Montani, F. Rényi entropy-complexity causality space: a novel neurocomputational tool for detecting scale-free features in EEG/iEEG data. Front. Comput. Neurosci. 18, 1342985 (2024).

Jauregui, M., Zunino, L., Lenzi, E. K., Mendes, R. S. & Ribeiro, H. V. Characterization of time series via Rényi complexity–entropy curves. Phys. A: Stat. Mech. Appl. 498, 74–85 (2018).

Wong, T.-K. L. Logarithmic divergences from optimal transport and Rényi geometry. Inf. Geom. 1, 39–78 (2018).

Vigelis, R. F., De Andrade, L. H. & Cavalcante, C. C. Properties of a generalized divergence related to Tsallis generalized divergence. IEEE Trans. Inf. Theory 66, 2891–2897 (2019).

Amari, S.-I. Information Geometry and its Applications Vol. 194 (Springer, 2016).

Roudi, Y., Dunn, B. & Hertz, J. Multi-neuronal activity and functional connectivity in cell assemblies. Curr. Opin. Neurobiol. 32, 38–44 (2015).

Montúfar, G. in Information Geometry and Its Applications: On the Occasion of Shun-ichi Amari’s 80th Birthday, IGAIA IV Liblice, Czech Republic, June 2016, (eds Ay, N., Gibilisco, P. & Matúš, F.) 75–115 (Springer, 2018).

Nakano, K. Associatron-a model of associative memory. IEEE Trans. Syst. Man Cybern. 3, 380–388 (1972).

Amari, S.-I. Learning patterns and pattern sequences by self-organizing nets of threshold elements. IEEE Trans. Comput. 100, 1197–1206 (1972).

Hopfield, J. J. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl Acad. Sci. 79, 2554–2558 (1982).

Amit, D. J. Modeling Brain Function: the World of Attractor Neural Networks (Cambridge University Press, 1989).

Coolen, A. C., Kühn, R. & Sollich, P. Theory of Neural Information Processing Systems (OUP Oxford, 2005).

Coolen, A. In Handbook of Biological Physics (eds Moss, F. & Gielen, S.) Vol. 4, 553–618 (Elsevier, 2001).

Coolen, A. In Handbook of Biological Physics (eds Moss, F. & Gielen, S.) Vol. 4, 619–684 (Elsevier, 2001).

Mattis, D. Solvable spin systems with random interactions. Phys. Lett. A 56, 421–422 (1976).

Kochmański, M., Paszkiewicz, T. & Wolski, S. Curie–Weiss magnet—a simple model of phase transition. Eur. J. Phys. 34, 1555 (2013).

Amit, D. J., Gutfreund, H. & Sompolinsky, H. Storing infinite numbers of patterns in a spin-glass model of neural networks. Phys. Rev. Lett. 55, 1530 (1985).

Bovier, A., Gayrard, V. & Picco, P. Gibbs states of the Hopfield model with extensively many patterns. J. Stat. Phys. 79, 395–414 (1995).

Talagrand, M. Rigorous results for the Hopfield model with many patterns. Probab. Theory Relat. Fields 110, 177–275 (1998).

Shcherbina, M. & Tirozzi, B. The free energy of a class of Hopfield models. J. Stat. Phys. 72, 113–125 (1993).

Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images (University of Toronto, 2009).

Fontanari, J. F. & Theumann, W. On the storage of correlated patterns in Hopfield’s model. J. Phys. 51, 375–386 (1990).

Agliari, E., Barra, A., De Antoni, A. & Galluzzi, A. Parallel retrieval of correlated patterns: from Hopfield networks to Boltzmann machines. Neural Netw. 38, 52–63 (2013).

Sherrington, D. & Kirkpatrick, S. Solvable model of a spin-glass. Phys. Rev. Lett. 35, 1792 (1975).

Acknowledgements

The authors thank Ulises Rodriguez Dominguez for valuable discussions on this manuscript. M.A. is funded by a Junior Leader fellowship from ‘la Caixa’ Foundation (ID 100010434, code LCF/BQ/PI23/11970024), John Templeton Foundation (grant 62828), Basque Government ELKARTEK funding (code KK-2023/00085) and Grant PID2023-146869NA-I00 funded by MICIU/AEI/10.13039/501100011033 and cofunded by the European Union, and supported by the Basque Government through the BERC 2022-2025 program and by the Spanish State Research Agency through BCAM Severo Ochoa excellence accreditation CEX2021-01142-S funded by MICIU/AEI/10.13039/501100011033. P.A.M. acknowledges support by JSPS KAKENHI Grant Number 23K16855, 24K21518. F.R. is supported by the UK ARIA Safeguarded AI programme and the PIBBSS Affiliatership programme. H.S. is supported by JSPS KAKENHI Grant Number JP 20K11709, 21H05246, 24K21518, 25K03085.

Contributions

M.A., P.A.M., F.E.R., and H.S. designed and reviewed the research and wrote the paper. M.A. contributed the analytical and numerical results. P.A.M. contributed part of the analytical results of the replica analysis.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Luca Ambrogioni, and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Aguilera, M., Morales, P.A., Rosas, F.E. et al. Explosive neural networks via higher-order interactions in curved statistical manifolds. Nat Commun 16, 6511 (2025). https://doi.org/10.1038/s41467-025-61475-w

DOI: https://doi.org/10.1038/s41467-025-61475-w