Abstract

Transferring motion instructions from a user enables robots to perform new and unforeseen operations. Robot collectives, in particular, offer greater adaptability to changing tasks and environments. However, effectively transferring motion instructions becomes challenging as the collective’s shape and size evolve. These changes often require additional system constraints to maintain robust motion control, which typically depends on pre-programmed knowledge of new tasks, ultimately limiting the collective’s adaptability. To overcome the above challenges, we propose a physical and computational platform for user-guided control of self-reconfigurable modular robots. This platform consists of an optimization scheme for online processing of user commands, which prevents any modular robot actions that would violate system or environment constraints. The second component consists of Joint-space Joysticks, which match the robot’s morphology, enabling the user to control diverse and dynamically changing modular robot structures through direct physical interaction. We present a platform that enables users to safely control modular, shape-changing robots through a physical interface. We demonstrate the platform’s efficacy and generalizability across a diverse set of modular robot morphologies using two independent robotic systems — Mori3 and Roombots — performing a range of tasks including pick-and-place, human assistance, legged locomotion, and workspace expansion.

Similar content being viewed by others

Introduction

Controlling a fixed shape robot to perform one specific task in a predefined environment does not introduce new challenges for control. Controlling the same robotic system to perform several different tasks becomes more challenging, as the control design now needs to integrate the adaptability of the system to different tasks1,2. Controlling a multi-functional robot across different dynamic environments is very challenging, as adaptation to the environment imposes additional control requirements3.

Collectives of modular robots (MRs) are capable of changing their shape to perform a multitude of different tasks and can adapt to the environment, but are difficult to control. MRs can adapt to new environments and enlarge the task-space, excelling in applications where the environment or the task is unknown beforehand or inconsistent, such as disaster recovery4, space5, adaptive furniture6, structures7,8, tooling9, and education10. Each connection between modules adds mechanical joints, creating structures with increasingly higher degrees of freedom (DoFs) and kinematic redundancies, which further complicate motion planning and control11. Independent modules experience variable loads, which can be difficult to distinguish between internal and external forces, and therefore often rely on compliant transitional states12. Moreover, for every new set of tasks, a new controller or a set of target commands needs to be designed from scratch.

Users can control robots across diverse tasks and unfamiliar environments, leveraging the operator’s inherent intelligence, perception, and planning abilities. Prior works show user preference for physical over graphical interactive control modalities13,14, in the form of tangible user interfaces (TUIs) as devices for remote control15. TUIs have been investigated for robotics, although primarily with a focus on the generation of fixed programs rather than remote control13,14,16,17. TUIs have also been used to develop new MR structures18,19, but these works did not focus on the control of the system itself. Moreover, most TUIs rely on external sensors, rather than functioning as stand-alone interfaces with embedded sensing capabilities17,18,20.

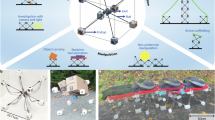

Figure 1 maps the literature on remote control of systems of various complexity, as well as TUIs based on their DoFs, application fields, and controller constraints. Low-DoF systems employ unconstrained remote control, designed for a fixed set of tasks. The limits of such systems are simple to handle and require no sophisticated constraints imposed on the input from the user, as the functionality is bounded and robot morphology is fixed21,22,23,24. Leader/follower configurations increase in system complexity, but impose additional constraints to prevent task failure, such as crashes during exploration25,26 or a patient harm in telesurgury27,28,29,30. The functionality of such systems is still bound to only a certain set of tasks and often requires highly trained professionals as operators. Humanoid robots are not bound to a specific set of tasks31,32,33, but user-guided control with classical methods is challenging due to high DoFs34,35, possible self-collisions, and loss of balance. To address this, human motion retargeting processes human motion via optimization frameworks and prepares commands that best match the action while respecting critical constraints36,37,38,39.

Unconstrained methods are used for fixed-shape low-DoF systems performing a few specific tasks. It becomes increasingly important for high risk operations to autonomously handle system and environment constraints as part of control, as is done in telesurgery, remote exploration, and our method. Highly multi-functional systems require sophisticated joystick solutions to efficiently specify intended motion such as for human motion retargeting, virtual interface, gesture-based methods, or the proposed method (JoJo). Furthermore, our work proposes the first single method for safeguarded and straightforward remote control of a diverse set of MR morphologies, bridging the gap in remote control solutions for systems with variable applications, morphologies, and DoFs.

With a collective of MRs, not only is the functionality of the robot unbounded, but so is the morphology. Classical input devices cannot be efficiently utilized as the set of operator commands changes for each new task and morphology40. Human arm tracking has been used to achieve gesture-based MR target pose setting for single module motion on a plane41,42 and for MR lighting control43. A virtual interface has been proposed that generates a new virtual input device to match MR morphology and enables interaction using hand motion tracking44. However, commanded motion feasibility and safety aspects were not considered, and previously observed user preferences for physical rather than virtual interfaces13,14 may also pose additional challenges in the adoption of such an approach.

If a robot’s shape is fixed, its DoFs can be coupled and abstracted according to tasks and intended purpose. In contrast, with an MR, the shape and function could change depending on the task, and coupled redundancies can limit a robots’ ability to perform a task. The user-guided control methodology, therefore, has to match the high multi-functionality and re-adaptability qualities of MR.

The main contribution of our work is an interactive control method that enables users to harness the benefits of a collective of MRs. This method is made possible by the interaction of its two main components: a constrained optimization, which ensures the feasibility and robustness of the movements under set joint targets, and a physical reconfigurable joint-space joystick (JoJo) that can match any MR morphology and is directly manipulated by a user. Our control method receives raw sensor data from the JoJo, to be incorporated into the control optimization objective function and subjected to system-, environment-, and user-related constraints. The control optimization deals comfortably with high DoFs, associated redundancies, and diverse robotic systems required to operate under various sets of constraints through a Quadratic Programming (QP) based controller formulation.

Results

We propose a control platform that matches the ambitious vision of modular robotics. The proposed method requires little to no prior training to enable users to control diverse and dynamic MR morphologies, performing different types of tasks. The proposed system is shown in Fig. 2a. Since the target robot’s kinematics vary depending on the MR type, JoJo’s physical structure is adapted accordingly, represented by square icons in the figure. The internal components (electronics, sensors, and embedded software) are the same across all JoJo devices. Sensors in the joints of the JoJos relay data into a QP-constrained optimization control problem formulation. The constrained optimization processes the JoJo sensor data given imposed constraints generated from the robot and task description, and relays the resulting control commands to the MR. The constraints can be added or removed as needed mid-operation, allowing for MRs to adapt to new tasks or morphology changes. The control platform can operate in open-loop with MRs that track control commands quickly and accurately, and in closed-loop for MRs with lower tracking performance or significant time delays.

a shows the full framework. Kinematic structures from MRs are combined with set hardware to create JoJos. Sensor data from JoJos and MRs are sent to the constrained optimization framework, which generates final optimal control commands. Components marked with a square are specific to the MR type, while the rest are directly generalizable across systems. b shows the physical JoJo for Mori3 (left) and Roombots (right). Both share the same type of internal hardware components; only the shapes differ to resemble the respective MR. c shows the response of the MR when subject to constraints in the QP. The robot closely follows the target values, except when the targets violate the constraints.

We demonstrate JoJo’s versatility on two independent MRs, Mori345 and Roombots6, with their respective JoJos shown in Fig. 2b resembling each MR’s kinematic structure. Mori3 and Roombots are chain-triangular and a lattice-cubic in shape, which is similar to many MRs and can be generalized46,47,48,49. The JoJo’s electronics, sensors, and embedded software are the same for different types of MRs.

Dynamically assessing constraints allows the optimization framework to only limit motion when the robot is subject to potentially dangerous or undesirable actions. Figure 2c shows the response of a system composed of two Mori3 modules subject to JoJo-based control. JoJos were operated to drive Mori3 over a conservative maximum angle QP constraint at low, medium, and high speeds. The modules follow the control signal when not subject to the imposed constraint, but when the angle reaches the constraint, QP filters the command to not violate the limits. The modules return to following the JoJo commands when the target’s constraints are no longer violated.

In this work, we define unsafe user commands as commands that could result in hardware damage, human injury or discomfort, or system instability. Specifically, we consider three categories: (1) exceeding joint torque limits, (2) causing collisions with humans, and (3) exceeding the balance limits of the legged system. To rigorously evaluate the effectiveness of our approach, we designed specific experiments where unsafe user commands were intentionally introduced. These include excessive joint torque (Supplementary Movie S1), human collision (Supplementary Movie S2), and balance loss in a legged system (Supplementary Movie S3). The goal was to validate the system’s ability to detect and override such commands, ensuring safety during remote operation of diverse MR morphologies.

Object manipulation and grasping

The versatility of MRs allows for the creation of various manipulator structures that can adapt to different requirements and conditions. We show the control of a robotic manipulator composed of Mori3 modules (Fig. 3a–d, Supplementary Movie S1). JoJos are assembled into a structure matching the Mori3-gripper morphology. A user interacts with the JoJos to control the configurations of the three gripper joints (Fig. 3b) to perform pick and place tasks of objects with different shapes and sizes (Fig. 3a, Supplementary Movie S1).

The user is able to remotely manipulate objects of different sizes and shapes (a) for a pick and place task. The optimization mid-layer controller prevents the system (b) from exceeding joint torque limits (c) while following user commands (d). A robotic arm made of Mori3 modules is controlled by a user interacting with JoJos. The arm (e) closely follows the commands, except when subject to constraints, which limit the proximity of the modular arm to the human subject and ground. f shows the physical set-up at different times, which correspond to the vertical dashed lines in the graphs. g and h show the evolution of sensor data and constraints throughout the experiment.

The challenge of this scenario lies in adapting to a wide variety of objects, which is difficult to achieve with a fixed pre-defined motion, autonomous task-space control or without contact and torque sensing. However, it is easily achieved by a user interacting with a tangible interface. The user’s physical interaction with the proposed JoJo system allows for the system control directly in the joint-space, enabling the user to take full advantage of the capabilities of the Mori3 manipulator system.

The objective of the task is to pick up an object and place it in a different location. The system is subject to several constraints, however, here we highlight a torque limit avoidance constraint (Eq. (5)) that can be violated if the gripper raises too high. The plot in Fig. 3c shows the evolution of modeled joint torques for the three active joints of the manipulator. At t = 19.8 s, the base joint torque hits the limit (−0.5 N m), which prevents the MR system from following the user commands (Fig. 3d) that would otherwise cause damage. The JoJo system allows the user to operate Mori3 without damaging the robot or failing to perform the pick and place task.

Human assistance task

People who need assistance with daily tasks have different needs. MRs can be configured into a structure suitable for the specific aid and be controlled remotely by a caretaker. We present an experiment in a human assistance application (Fig. 3e–h, Supplementary Movie S2). An assembly of four Mori3 modules form a robotic arm with a wooden fork attached to the end-effector. Four JoJo modules are connected to form the same structure. The user’s goal is to bring the fork close enough to the participant’s mouth so that they can complete the final movement in the feeding process themselves.

The challenge with this scenario is ensuring that all critical constraints are satisfied at all times, preventing dangerous situations. Several important constraints must be satisfied to ensure the safety and comfort of the participant, the robot, and the environment; including human-robot collision, robot self-collision (Eq. (6)) and robot-ground collision avoidance constraints (Eq. (7)). Additionally, the robot’s joint speeds are constrained to under 30% of their maximum capacity to minimize any risk of discomfort or perceived threat to the participant. Manually adjusting for both the main goal and safety can overload the operator; autonomous QP management of the constraints enables the operator to fully focus only on achieving the main task.

Figure 3g shows the distances between the human, the robot, and the ground, and constraint thresholds for collision avoidance (red dashed lines), for robot-human and robot-ground collision avoidance constraints respectively. When the robot is far from the constraints limits (Fig. 3e), it follows the user’s commands as intended (Fig. 3d). Exceeding the constraint limits (light gray plot regions) prevents the arm from following the user commands that would potentially cause damage.

Legged modular robot stability

The proposed control platform can serve diverse applications, beyond fixed-base MR morphologies. We demonstrate the same method being used to control a free-floating-base tripod structure constructed of four Mori3 modules. A user remotely controls the tripod by manipulating a corresponding JoJo representation of the robot to achieve locomotion, while underlying optimization ensures stability (Fig. 4a–d, Supplementary Movie S3).

a, b A tripod consisting of four Mori3 modules was controlled by a user interacting with a matching JoJo structure. The blue triangles represent the dynamic shape changes of the support polygon, as controlled by the operator. c, d Additional constraints were set, preventing the CoM of the robot from approaching the edges of the support polygon by less than 4 cm. Without the optimization, the robot would topple as a result of following directly the operator commands. Two Roombot modules are remotely controlled (e) to approach an out-of-reach end-effector target (f). The operator brings two JoJos together, which then form a contact constraint and establish a physical connection between modules. The distance between two frames of the newly created contact constraint remains zero (g) while the system joints targets are changed to achieve a far-reaching task (h).

Unlike the fixed-base arm and gripper MR morphologies, a tripod may fall as a result of user actions. A constraint on the position of the center of mass (CoM) is included in the QP problem to maintain the tripod’s static equilibrium (Eq. (8)), restricting the CoM to be ≥4 cm away from the support polygon’s border.

The evolution of the robot’s support polygon throughout the experiment is shown in the central XY plots, along with corresponding side views of the tripod and JoJos (Fig. 4b). Two legs of the tripod are simultaneously bent to approximately the same angle (10 s). Then, the third leg is pulled towards the center of the support polygon (20 s). When the third leg gets too close to the center, the CoM constraint is triggered (24.5 s to 32.2 s), which causes the MR motion to start deviating from JoJo commands to ensure that the MR tripod structure does not fall over.

The distance between a border of the constraint and the robot’s CoM is shown in Fig. 4c, with the corresponding JoJo and Mori3 angles for the pulled leg shown in the plot Fig. 4b. The user commands are closely followed by the robot at all times, except for when they would lead to the CoM constraint being violated and thus the robot falling (light gray plot regions). While the operator creates actions that cause the JoJo platform to tip over, the Mori3 structure instead continues to stand.

Human-guided self-reconfiguration

MRs are collaborative by nature. Self-reconfiguration allows modules to change their own system’s shape to perform tasks that cannot be done by a fixed structure. It is challenging to control systems with changing morphology using a fixed joystick and controller, as the control must adapt to the robot’s evolving structure. Our proposed method allows for MR self-reconfiguration. To showcase this additional area of application of our system and generalization of the proposed control platform we use a different MR platform for this experiment - Roombots6.

An operator interacts with JoJos to bring the faces of two Roombot modules into close proximity (Fig. 4e, Supplementary Movie S4). Once the faces are brought close by the user, an alignment task (Eq. (3)) is added to the QP objective function with a keyboard button click. The Roombots couple, creating a new kinematic tree for the overall system which is reflected in the QP controller formulation as a newly added contact constraint. The corresponding bilateral contact is realized on the JoJo via velcro tape. After the two modules couple, one of them breaks their connection with the ground by opening their corresponding bottom gripper. This second reconfiguration event is reflected on the control side by the removal of the module-ground contact constraint from the QP definition. The two modules now form a longer arm and can be controlled to reach targets that were not feasible with separate individual modules (Fig. 4f).

The QP controller adds a new contact constraint (Eq. (9)) mid-way through the experiment, accounting for the morphological change. Since the hemispheres of the two modules involved in the contact event can no longer collide with each other, the controller removes their collision avoidance constraint from the QP formulation. Figure 4g shows the temporal evolution of the distance between two module faces along three space axes (XYZ). In the first half of the experiment, the two contact frames are not constrained, so the distance varies freely. Once the connection is established successfully, the contact constraint is added (69 s), and the distance between connecting sides remains zero (light gray plot region). The user manipulates JoJo to perform a far-reaching task by changing the configuration of all joints of the system (Fig. 4h).

Discussions

MRs ambition is to change their structure to adapt to any new task. However, they typically require unique controllers for recognizing and executing each task. We enable users to control changing MR structures directly, leveraging humans’ innate ability to understand and perform a wide range of tasks across different situations. While user control allows MRs to adapt to new tasks, it also introduces the risk of operators unintentionally moving the robot into unsafe or damaging configurations. To address this, we introduce a new MR control framework that combines physical joystick representations with a QP optimization framework. This allows users to safely operate arbitrary MR structures without needing a new controller for each configuration.

We validated our framework across four different tasks; object pick and place, human assistance, legged locomotion, and workspace expansion via self-reconfiguration. Our control platform is generalizable and proven on two independent MR platforms - Mori3 and Roombots. For all of the presented experiments, the interface was adaptable between different MR morphologies within seconds. Our work demonstrates the feasibility and functionality of our proposed framework through a proof-of-concept approach, establishing its foundational viability. The results highlight its versatility across tasks and MR systems, paving the way for future studies. Comparing our method to state-of-the-art approaches limited to specific morphologies or platforms is unconstructive. Likewise, multi-morphology methods that ignore system redundancies and constraints risk safety violations, which our approach prevents. To our knowledge, no existing method is suitable for a direct experimental comparison.

The biggest future challenge of this work is in scaling up the number of controllable modules, as large numbers of JoJos can get unwieldy for an operator. Similarly, there is presently a delay when operating the JoJo framework, likely due to the wireless protocol. Increasing the number of JoJos adds to network congestion, which can in turn, add further delays to the system. In the Methods section, there is a further discussion on how to physically scale the number of JoJos. However, significantly increasing the number of modules is largely outside the scope of this work, as simply increasing the number of robots is an independent challenge50,51,52.

For all demonstrations, the user was physically next to the robot. In practice, the user would likely be located at a distance and viewing the action from an external device, such as a camera. The correlation between user perception, delay, and field of view is well studied53,54 and we can anticipate a user’s response to be similar to prior works. Future work will focus on the utilization of the proposed system at a distance, when only partial observation of the scene is available.

This work advances MR control, allowing for new morphologies to be tested and operated quickly. In research, this can enable the rapid testing of new MRs without developing a control stack for each specific task. Similarly, we enable rapidly adaptable user control of new robot morphologies, in a method that is safe for the robot, user, and environment. MRs are best suited for environments with unforeseen challenges and where there is a large overhead to deployment, such as space55. This work enables user control of MR systems, which can potentially replace single-purpose robots with adaptable systems in space and other challenging environments.

Methods

Constrained optimization formulated as a QP problem has proven to facilitate real-time control of high DoF systems in various applications, such as stair climbing56, aircraft manufacturing57, and human-robot interaction (HRI)58. QP with joint velocities as decision variables has been previously explored for MRs59 and one-to-one teleoperation60. However, these works did not consider morphology change mid-operation, joint torque limits or connector strength constraints, which are all of extreme importance for MR control.

The multi-contact formulation of the MR system in QP allows to account for reconfiguration (morphology change events). The acceleration and contact forces being the decision variables of QP, allow to directly account for torque limits and connector strength constraints. As MR systems change morphology with each newly added or removed connection, the stress imposed on the system’s joints and connectors by other modules also changes. This is why the combination of the multi-contact modeling of the system at the control level and acceleration-based QP formulation is of high interest for the MR control research community. To the best of our knowledge, this work is the first to describe the use of acceleration-based multi-contact formulation of QP control in the context of MR systems.

Trajectory optimization under constraints for modular robot interactive control

The QP controller treats joints acceleration and contact forces as decision variables, allowing joint torques and other dynamics limits to be imposed as constraints. The controller uses contact constraints to handle the reconfiguration mid-operation of MR, legged locomotion, interaction with the environment, or closed kinematic chains. These contract constraints can be added to or removed from the QP problem at any time for diverse applications. QP serves as a safeguarding tool that reduces a user load by autonomously ensuring commanded motion feasibility. We are the first to demonstrate mid-operation MR reconfiguration with QP control.

Through physical interaction with a JoJo, a user sets the joint targets for the MR structure incorporated in a vector qjojo. For an arbitrary MR morphology and operation environment, the raw JoJo data cannot be directly sent to the robot as commands because the resulting motion of the robot may be harmful or even unfeasible. To achieve feasible real-time remote control for arbitrary MR morphology and operation environment, a versatile constrained QP optimization61 is employed as an intermediate layer between the inputs given by the user and commanded robot motion.

The QP controller solves for joint accelerations \(\ddot{{{{\bf{q}}}}}\) and contact wrenches \({{{\bf{f}}}}=\{{{{{\bf{f}}}}}_{{{{{\rm{c}}}}}_{j}};j=1,\ldots,N\}\), where N is the total number of contact wrenches defined, which are subject to constraints described throughout this section and summarized in Table 1. The objective function to minimize is the following:

where wjojo is a priority weight assigned to the posture task from JoJo input data:

where kp is a proportional task gain and kv is a derivative gain set to \(2\sqrt{{k}_{p}}\) to attain error convergence to be close to that of a critically damped system, and Sjojo is a task DoF selection matrix. Note that \({\dot{{{{\bf{q}}}}}}_{{{{\rm{jojo}}}}}\) is assumed to be 0, since the goal of teleoperation in our current study is for MRs to match the joint position targets dictated by JoJos, while the velocity mismatches are not meant to be penalized. Velocity target matching and associated challenges could be a topic of further exploration.

The QP objective function can include multiple other tasks on top of Tjojo, with corresponding priority weights ∑wiTi for the system to fulfill while under remote control. Such tasks and corresponding weights are application/environment specific, such as a high weight objective to align connecting links prior to a reconfiguration event to ensure successful docking. If the MR coupling has a low tolerance for alignment error to achieve reconfiguration, below user-JoJo manipulation abilities, Talign (Eq.(3)) can be inserted into the objective function when connectors are close to being aligned, with a weight walign higher than wjojo:

where c1 and c2 are transformations (position and orientation) of two connectors links with respect to the world frame, and \({{{{\bf{J}}}}}_{{{{{\rm{c}}}}}_{1}}\) and \({{{{\bf{J}}}}}_{{{{{\rm{c}}}}}_{2}}\) are Jacobians associated with these frames. Once the reconfiguration is completed, this task can be removed from the objective function. A new fixed contact constraint (Eq. (9)) is added to the problem definition to account for the reconfiguration event, described in detail later in this section.

Given an MR module shape \({{{\mathcal{V}}}}\) (e.g., a mesh), a set of convex collision hulls \({{{\mathcal{C}}}}\) is generated by sampling mesh points. These two structures, combined with a set of contact surfaces \({{{\mathcal{S}}}}\), kinematic trees describing MR structures morphologies \({{{\mathcal{M}}}}\), and robots joint limits, together make up the full robot description \({{{\mathcal{D}}}}\). The robot limits are in terms of position \(\underline{{{{\bf{q}}}}},\overline{{{{\bf{q}}}}}\), velocity \(\underline{\dot{{{{\bf{q}}}}}},\overline{\dot{{{{\bf{q}}}}}}\), acceleration \(\underline{\ddot{{{{\bf{q}}}}}},\overline{\ddot{{{{\bf{q}}}}}}\), jerk \(\underline{\ddddot{{{{\bf{q}}}}}},\overline{\ddddot{{{{\bf{q}}}}}}\) and torque \(\underline{{{{\boldsymbol{\tau }}}}},\overline{{{{\boldsymbol{\tau }}}}}\), where under- and upper scores represent the lower and upper bounds, as constants. Depending on the MR, the bounds may be different depending on the joint type (e.g., two Roombots motors are similar, while the central motor is different and supports less torque). The robot’s innate constraints (Eq. (4)–(6)) are constructed from \({{{\mathcal{D}}}}\) and are always part of the QP problem, solved every Δt seconds for optimal joint accelerations \(\ddot{{{{\bf{q}}}}}\) and contact forces f.

The first of these constraints respects bounds for joint position, velocity, acceleration, and jerk. The constraint also assures that joint speed is reduced to zero when position bounds are approached via linear velocity damper:

where \({\dot{{{{\bf{q}}}}}}_{t-\Delta t}\) is a joint velocity vector computed in the previous algorithm iteration, ξ is a deceleration constant, \(\underline{{{{\bf{d}}}}}={{{\bf{q}}}}-\underline{{{{\bf{q}}}}}\,{{{\rm{and}}}}\,\overline{{{{\bf{d}}}}}=\overline{{{{\bf{q}}}}}-{{{\bf{q}}}}\) are respective joint distances to lower and upper position bounds, and di and ds are interaction and safety distance thresholds of the linear velocity damper.

Other robot innate constraints ensure that joint torque and rotatum bounds are respected:

where \(\underline{{{{\boldsymbol{\tau }}}}}\) and \(\overline{{{{\boldsymbol{\tau }}}}}\) denote joint torque lower and upper bounds, respectively, τ is a vector of robot joint torques, C is a vector of torques due to centrifugal and Coriolis effects and gravity, M is the mass matrix, \(K{\dot{{{{\bf{q}}}}}}_{t-\Delta t}\) is a damping term, and J contains stacked contact Jacobian matrices. This enforces force constraints and bounds the joint torques (\({{{\boldsymbol{\tau }}}}={{{\bf{M}}}}({{{\bf{q}}}})\ddot{{{{\bf{q}}}}}+{{{\bf{C}}}}-{{{{\bf{J}}}}}^{T}{{{\bf{f}}}}\)) to stay within joint torque and its derivative limits.

The last type of robot innate constraint ensures no collision between robot links. In its simplest form, it is expressed as d ≥ dsafe, where d = ∣∣p1 − p2∣∣ is a distance between a pair of closest points p1 and p2 on convex collision hulls \({{{{\mathcal{C}}}}}_{1}\) and \({{{{\mathcal{C}}}}}_{2}\) associated to robot links that must not be closer to one another than a predefined safety distance threshold dsafe (Fig. 5a). These collision avoidance constraints impose inequality conditions on the decision variable of the QP - the robot joints acceleration \(\ddot{{{{\bf{q}}}}}\):

where \({{{{\bf{J}}}}}_{{{{{\rm{p}}}}}_{1}}\) and \({{{{\bf{J}}}}}_{{{{{\rm{p}}}}}_{2}}\) are the robot Jacobian matrices associated with points p1 and p2. The vector n is a unit vector from p2 to p1, defined as \(\frac{{{{{\bf{p}}}}}_{1}-{{{{\bf{p}}}}}_{2}}{d}\). Vectors v1 and v2 are velocities of the two closest points, and \(\dot{d}=({{{{\bf{v}}}}}_{1}-{{{{\bf{v}}}}}_{2}){{{\bf{n}}}}\). To ensure closed-loop control stability, adaptive gains Kp and Kv are used for this type of constraints as described in ref. 62, which also ensures that \(\dot{d}\to 0\) as the collision limit is approached: d → dsafe.

a Collision avoidance (Eq. (6)) demonstrated on two Roombot modules. b Legged morphologies made of Mori3 and corresponding visualization of the CoM constraint elements (Eq. (8)). c Module to module connection modeled as bilateral contact. The contact is associated with a wrench \({{{{\bf{f}}}}}_{{{{{\rm{c}}}}}_{j}}\) (QP decision variable) consisting of moments \({m}_{{{{{\rm{c}}}}}_{j}}^{{{{\rm{X}}}}},{m}_{{{{{\rm{c}}}}}_{j}}^{{{{\rm{Y}}}}},{m}_{{{{{\rm{c}}}}}_{j}}^{{{{\rm{Z}}}}}\) and tension-compression forces \({f}_{{{{{\rm{c}}}}}_{j}}^{X},{f}_{{{{{\rm{c}}}}}_{j}}^{{{{\rm{Y}}}}},{f}_{{{{{\rm{c}}}}}_{j}}^{{{{\rm{Z}}}}}\). The moment around the motor axis \({m}_{{{{{\rm{c}}}}}_{j}}^{{{{\rm{Y}}}}}\) can be bounded as part of joint torque constraints. d Increasing structure inclination introduces more stress in the form of higher wrenches applied to connector links. A bilateral contact constraint is defined between MR and the ground. Constraining these wrenches prevents users from driving the MR system to violate connector strength constraints (illustrated in red).

In addition to the robot's innate constraints, there may be application or environment-related constraints. Such constraints can be activated/deactivated mid-operation according to need. We impose constraints constructed via information about robot-environment collision avoidance, CoM constraints, and robot-environment contacts.

Eq. (7) defines collision avoidance with static objects (e.g., ground):

where \({{{{\bf{J}}}}}_{{{{{\rm{p}}}}}_{x}}\) is the Jacobian associated with a body point px and \({d}_{s}^{e}\) is the robot-environment safety distance threshold. The vector \({{{{\bf{n}}}}}^{e}=\frac{{{{{\bf{p}}}}}_{x}-{{{{\bf{p}}}}}_{e}}{{d}^{e}}\) is a unit vector from px to pe, where pe is the closest point on the environment static object collision convex hull to px and de = ∣∣px − pe∣∣. Vector vx is the velocity of point px and \({\dot{d}}^{e}={{{{\bf{v}}}}}_{x}{{{{\bf{n}}}}}^{e}\). \({K}_{p}^{e}\) and \({K}_{v}^{e}\) are adaptive constraints gains.

In certain applications, MR can be assembled into various legged robot structures. CoM constraints prevent such robots from losing balance and falling, regardless of morphology (e.g., number of legs). Static stability is ensured by imposing inequality conditions on the robot CoM, \({d}^{{{{\rm{CoM}}}}}\le {d}_{s}^{{{{\rm{CoM}}}}}\), where dCoM is the distance of the robot CoM from a plane defined by a normal np and an offset bp, and computed as dCoM = pCoMnp + bp. The CoM constraint plane elements np and bp are computed from robot ground contact locations and form a static stability support polygon \({{{\mathcal{P}}}}\) (Fig. 5b). When imposed on the robot’s joint accelerations, these CoM constraints are written as:

where JCoM is the robot CoM Jacobian, vCoM is CoM velocity, and \({\dot{d}}^{{{{\rm{CoM}}}}}={{{{\bf{v}}}}}^{{{{\rm{CoM}}}}}{{{{\bf{n}}}}}_{p}\). \({K}_{p}^{{{{\rm{CoM}}}}}\) and \({K}_{v}^{{{{\rm{CoM}}}}}\) are adaptive gains as defined in62.

Other examples of application or environment-related constraints include contact constraints. Those impose a robot body point velocity, \({{{{\bf{v}}}}}_{{b}_{x}}^{{r}_{1}}\), to be equal to the velocity of the other robot body point, \({{{{\bf{v}}}}}_{{b}_{y}}^{{r}_{2}}\) (robot-robot contact), or to remain zero (robot-static environment contact). This constraint also forces the error ϵp between two frames to remain the same, effectively fixing point \({{{{\bf{p}}}}}_{{b}_{x}}^{{r}_{1}}\). This is determined upon initial constraint definition, with associated Jacobian \({{{{\bf{J}}}}}_{{b}_{x}}^{{r}_{1}}\), maintaining contact with the environment or another robot:

The connector strength constraint is a very important aspect for any MR platform. Such constraint is already partially handled in the proposed method via joint torque constraints, which limit the torques around the connector motor axis (Fig. 5c). The joint torque constraint is often the dominant element for connector failure, but only covers one out of six contact wrench DoFs. If the remaining five DoFs of the contact wrench are left unconstrained, there is a possibility to drive the system into ca onfiguration where the connector will be mechanically broken due to the excessive force along any of the axes (Fig. 5d).

To prevent a user from driving MRs into such configurations, the principal contact points on each connector are assigned a wrench \({{{{\bf{f}}}}}_{{{{{\rm{c}}}}}_{j}}=({m}_{{{{{\rm{c}}}}}_{j}}^{{{{\rm{X}}}}},{m}_{{{{{\rm{c}}}}}_{j}}^{{{{\rm{Y}}}}},{m}_{{{{{\rm{c}}}}}_{j}}^{{{{\rm{Z}}}}},{f}_{{{{{\rm{c}}}}}_{j}}^{{{{\rm{X}}}}},{f}_{{{{{\rm{c}}}}}_{j}}^{{{{\rm{Y}}}}},{f}_{{{{{\rm{c}}}}}_{j}}^{{{{\rm{Z}}}}})\), composed of three moment elements and three tension-compression force elements, which is part of the QP decision variables and is constrained as:

where \({{{{\bf{l}}}}}_{{{{{\rm{c}}}}}_{j}}\) and \({{{{\bf{u}}}}}_{{{{{\rm{c}}}}}_{j}}\) are lower and upper connector contact wrench bounds dictated by MR connector type.

In our experimental set-up, the initial state of the MR and JoJo is set to the same joint configuration and root position. If there is a mismatch between the JoJo and MR joint configuration when the controller initializes, the MR system will start to move towards qjojo immediately after the start of the controller. The root pose is not directly controlled by the JoJo system and thus any difference in root pose will not have any effect on robot motion. However, adjusting the root pose will affect the visualization of the controller state, which may be inconvenient for the user if it does not match the real robot. This root pose establishment can be addressed through integrating further sensor data, such as estimating robot root posture from IMU data and using it to initialize robot state in the controller.

Depending on whether the MR is position or velocity controlled, the QP solution is numerically integrated either once or twice and the result is sent as motor commands to the MR:

The employed QP formulation, outlined in this section, is implemented in the MC-RTC interface63. An overview of the methodology and experiments is provided in Supplementary Movie S5.

Discussion on the use of QP for self-reconfiguration

Robustness of self-reconfiguration is a difficult challenge in MRs. Couplings must be aligned within a mechanical tolerance, otherwise, the connection fails to establish52,64. Misalignments can be caused by a perception error, caused by physical properties beyond simulation, such as mechanical compliance between connectors, calibrations, or accumulated control errors. In the case of minor perception error, low-level reconfiguration facilitating methods, as in Section VI.A of65, are suitable tools to achieve robust self-reconfiguration. In case of severe perception errors, the proposed JoJo system can be used by users who would need to adapt to discrepancies between JoJo configuration and MR configuration to steer the system into reconfiguration-compatible state.

Details of the physical JoJo design and performance analysis

The kinematics of JoJos replicate that of the target robot, but do not have to match exactly. MR modules are of a fixed general weight, which can be unwieldy to a user to manipulate. The joysticks are rather designed to fit comfortably into user hands, both in terms of size and weight. As JoJos are unactuated and manually coupled, the cost and complexity is significantly less than that of MRs.

For Mori3, the sensors are located at the edges, about the axis of rotation, and the connection between modules. For Roombots, the sensors are located at every rotary joint in the module; within each sphere and between the two spheres. The JoJos are quick to manufacture, adaptable to new housings, and robust in operation. Through this hardware platform concept, any modular robot morphology can be directly controlled. In combination with the optimization framework, MRs can be manipulated by a user, allowing MRs to adapt to perform any new task without a prior high-level controller.

The JoJos share the same hardware elements between MRs: electronics, sensors, and embedded software. The only difference is the housings, which resembles the target robot. Each JoJo module contains rotary hall-effect sensors with embedded magnets to detect the angle between the links. By using hall-effect sensors, the JoJos can be connected at arbitrary angles mid-operation. Inside each module, the hall-effect sensors (AMS AS5048B) communicate with a microcontroller (Microchip PIC16F18323) via I2C, which then routes the data to a wireless module (Espressif ESP8266) routinely sending updates. On start-up, JoJos connect to a local MQTT broker and publish state updates at 50 Hz, which are then used by the QP to update the objective function.

The joint stiffness between JoJos can be adjusted, which is dependent on the kinematic shell. Changing the depth of the pin connecting two JoJos together adjusts the stiffness for the structures of the JoJos, controlling Mori3. Adjusting screws and adding foam for pressure adjust the stiffness of the JoJos representing Roombots. For all experiments, the JoJo joint stiffness was adjusted to a holding torque of 0.15 Nm.

To facilitate quantitative comparison with our work for future similar systems, we have added a table (Table 2) that summarizes the accuracy of the MR system’s tracking of JoJo commands in unconstrained regions. Different remote control systems are subject to various types and lengths of delays, which are affected by various factors such as network quality. The delays affect the leader-follower tracking accuracy. To allow for a quantitative comparison based on the appropriate system time delay category, the added table reports average accuracies for the maximum delay (raw data), data simulating minimal delay (time-aligned). The maximum amount of delay (last row) is calculated by aligning two time series until the error between them is minimized and determining the data time shift required for such alignment.

Discussion on physical JoJos for high DoF systems

Conventional controllers21,22,23,24 cannot be used for MR, due to the high DoFs, variations of the MR morphology, and areas of application. With modularity, the number of DoFs is theoretically unlimited. The controller needs to be adaptable to have the same modularity as the target robot. Increasing the number of modules increases the number of active joints, which can quickly become uncontrollable to an untrained user.

To overcome the difficulties in MR interactive control, we introduce the concept of JoJos, which are physical sensory embodiments of the target robot. Each JoJo module resembles the modules of the target MR and can connect together to mimic the MR morphology. For straightforward interactive control, the shape of JoJo should match the target MR. The JoJo modules measure the angles of the joints between them, which then act as targets for the MR to follow. The modules are robust to physical interaction and inexpensive in comparison to the robot, such that untrained operators can move them with minimal risk.

Potential methods for handling large DoFs

Controlling any high-DoF system is a very difficult task. In this work, we demonstrate one user operating a MR of up to six DoFs. For further system scalability, there are several strategies that can be used if the number of DoFs increases beyond what one user is capable of operating, depending on the purpose of the task. For real-time operation, multiple users can collaborate to operate the system. The proposed control platform can also use pre-disposed joint motions which ease the user’s actions, such as fixing the physical joints across several JoJos to a constant angle.

Currently, a JoJo is significantly cheaper than an MR. Should MRs be mass-produced to reduce cost, one could use a compliant and back-drivable module in place of the JoJo. However, as discussed previously, the module still has to fit comfortably in a user’s hand for manipulation to be effective. This approach will result in additional challenges in terms of complaint control, data management, and additional sensors, but could result in a single adaptive robot acting as both a robot and controller.

Future work could address how an operator can couple DoFs together to improve user manipulation. By configuring multiple joints to move together, an operator can control a higher number of DoFs, albeit the structure formed by the JoJos would no longer perfectly resemble the MR. For example, in the event of a multi-legged robot, the operator could couple the joints of different legs together, such that all coupled legs move synchronously, while the operator only has to control one.

A joint locking mechanism can be envisioned in future iterations of the platform to ensure that joints can be fixed to hold a specific shape in any configuration. If control is for a higher-DoF system to mimic the kinematics of a repeated task, then the task can be done step-wise across several DoFs at a time. In this case, groups of DoFs can be defined and selected by a user, with a graphical interface representing the system could allow switching between control of different DoF groups.

Data availability

The data displayed in the manuscript figures and the system performance table presented in the Methods section are provided in the associated Source Data file. Source data are provided with this paper.

Code availability

The source code for this work is publicly available under the BSD 2-Clause License66.

References

Chew, J. Y., Kawamoto, M., Okuma, T., Yoshida, E. & Kato, N. Adaptive attention-based human machine interface system for teleoperation of industrial vehicle. Sci. Rep. 11, 17284 (2021).

Shao, L., Migimatsu, T., Zhang, Q., Yang, K. & Bohg, J. Concept2robot: Learning manipulation concepts from instructions and human demonstrations. Int. J. Robot. Res. 40, 1419–1434 (2021).

Siva, S. & Zhang, H. Robot perceptual adaptation to environment changes for long-term human teammate following. Int. J. Robot. Res. 41, 706–720 (2022).

Yim, M, Duff, D.G., Roufas, K. Modular reconfigurable robots: an approach to urban search and rescue. In: International Workshop on Human-friendly Welfare Robotics Systems. Taejon, Korea; (2000).

Zykov, V., Mytilinaios, E., Desnoyer, M. & Lipson, H. Evolved and designed self-reproducing modular robotics. IEEE Trans. Robot. 23, 308–319 (2007).

Hauser, S. et al. Roombots extended: Challenges in the next generation of self-reconfigurable modular robots and their application in adaptive and assistive furniture. Robot. Auton. Syst. 127, 103467 (2020).

Swissler, P, Rubenstein, M. FireAnt: A modular robot with full-body continuous docks. In: IEEE International Conference on Robotics and Automation (ICRA). Brisbane, Australia; p. 6812–6817 (2018).

Liang, G, Luo, H, Li, M, Qian, H, Lam, T.L. Freebot: A freeform modular self-reconfigurable robot with arbitrary connection point-design and implementation. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Las Vegas, NV, USA; p. 6506–6513 (2020).

Brodbeck, L. & Iida, F. An extendible reconfigurable robot based on hot melt adhesives. Auton. Robots 39, 87–100 (2015).

Pacheco, M, Fogh, R, Lund, H.H., Christensen, D.J. Fable II: Design of a modular robot for creative learning. In: IEEE International Conference on Robotics and Automation (ICRA). Seattle, WA, USA; p. 6134–6139 (2015).

Kurokawa, H. et al. Distributed self-reconfiguration of M-TRAN III modular robotic system. Int. J. Robot. Res. 27, 373–386 (2008).

Saintyves, B., Spenko, M. & Jaeger, H. M. A self-organizing robotic aggregate using solid and liquid-like collective states. Sci. Robot. 9, eadh4130 (2024).

Bonaiuto, S, Cannavò, A, Piumatti, G, Paravati, G, Lamberti, F. Tele-operation of robot teams: a comparison of gamepad, mobile device and hand tracking-based user interfaces. In: IEEE 41st Annual Computer Software and Applications Conference (COMPSAC). vol. 2; p. 555–560 (2017).

Sapounidis, T. & Demetriadis, S. Tangible versus graphical user interfaces for robot programming: exploring cross-age children’s preferences. Personal. Ubiquit. Comput. 17, 1775–1786 (2013).

Bong, W.K., Chen, W, Bergland, A. Tangible user interface for social interactions for the elderly: a review of literature. Adv. Hum.-Comput. Interact. https://doi.org/10.1155/2018/7249378 (2018).

Lapides, P, Sharlin, E, Costa Sousa, M. Three Dimensional Tangible User Interface for Controlling a Robotic Team. In: Proceedings of the 3rd ACM/IEEE International Conference on Human Robot Interaction. New York, NY, USA: Association for Computing Machinery; p. 343–350 (2008). Available from: https://doi.org/10.1145/1349822.1349867

Cao, Y, Xu, Z, Glenn, T, Huo, K, Ramani, K. Ani-Bot: A Mixed-Reality Modular Robotics System. In: Adjunct Publication of the 30th Annual ACM Symposium on User Interface Software and Technology. New York, NY, USA: Association for Computing Machinery; p. 119–121 (2017). Available from: https://doi.org/10.1145/3131785.3131833

Mutlu, M, Hauser, S, Bernardino, A, Ijspeert, A. Playdough to Roombots: Towards a Novel Tangible User Interface for Self-reconfigurable Modular Robots. In: IEEE International Conference on Robotics and Automation (ICRA). Brisbane, Australia; p. 1970–1977 (2018).

Nielsen, J. & Lund, H. H. Modular robotics as a tool for education and entertainment. Comput. Hum. Behav. 24, 234–248 (2008).

Piper, B, Ratti, C, Ishii, H. Illuminating Clay: A 3-D Tangible Interface for Landscape Analysis. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. New York, NY, USA: Association for Computing Machinery; p. 355–362 (2002). Available from: https://doi.org/10.1145/503376.503439

Chan, P.Y., Enderle, J.D. Automatic door opener. In: IEEE Annual Northeast Bioengineering Conference. Storrs, Connecticut, USA; p. 139–140 (2000).

Pan, G. et al. GeeAir: a universal multimodal remote control device for home appliances. Personal. Ubiquit. Comput. 14, 723–735 (2010).

Lee, K. M., Teng, W. G. & Hou, T. W. Point-n-Press: An intelligent universal remote control system for home appliances. IEEE Transs. Autom. Sci. Eng. 13, 1308–1317 (2016).

Susany, R., Rotar, R. Remote Control Android-based Applications for a Home Automation implemented with Arduino Mega 2560 and ESP 32. Technium 2(2) (2020).

Biesiadecki, J. J., Leger, P. C. & Maimone, M. W. Tradeoffs between directed and autonomous driving on the mars exploration rovers. Int. J. Robot. Res. 26, 91–104 (2007).

Brantner, G. & Khatib, O. Controlling Ocean One: Human–robot collaboration for deep-sea manipulation. J. Field Robot. 38, 28–51 (2021).

Guthart, G.S., Salisbury, J.K. The Intuitive/sup TM/telesurgery system: overview and application. In: IEEE International Conference on Robotics and Automation (ICRA). San Francisco, CA, USA; p. 618–621 (2000).

Marescaux, J. et al. Transatlantic robot-assisted telesurgery. Nature 413, 379–380 (2001).

Suzuki, H. & Wood, R. J. Origami-inspired miniature manipulator for teleoperated microsurgery. Nat. Mach. Intell. 2, 437–446 (2020).

Choi, P.J., Oskouian, R.J., Tubbs, R.S. Telesurgery: past, present, and future. Cureus 10(5) (2018).

Kaneko, K, Harada, K, Kanehiro, F, Miyamori, G, Akachi, K. Humanoid robot HRP-3. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). Nice, France; p. 2471–2478 (2008).

Pandey, A. K. & Gelin, R. A mass-produced sociable humanoid robot: Pepper: The first machine of its kind. IEEE Robot. Autom. Mag. 25, 40–48 (2018).

Shigemi, S, Goswami, A., Vadakkepat, P. ASIMO and humanoid robot research at Honda. Humanoid robotics: A reference. p. 55–90 (2018).

Goodrich, M. A., Crandall, J. W. & Barakova, E. Teleoperation and beyond for assistive humanoid robots. Rev. Hum. fActors Ergon. 9, 175–226 (2013).

Khatib, O., Jorda, M., Park, J., Sentis, L. & Chung, S. Y. Constraint-consistent task-oriented whole-body robot formulation: Task, posture, constraints, multiple contacts, and balance. Int. J. Robot. Res. 41, 1079–1098 (2022).

Nakaoka, S. et al. Learning from observation paradigm: Leg task models for enabling a biped humanoid robot to imitate human dances. Int. J. Robot. Res. 26, 829–844 (2007).

Darvish, K. et al. Whole-body geometric retargeting for humanoid robots. In: IEEE-RAS International Conference on Humanoid Robots (Humanoids). Toronto, Canada; p. 679–686 (2019).

Di Fava, A, Bouyarmane, K, Chappellet, K, Ruffaldi, E, Kheddar, A. Multi-contact motion retargeting from human to humanoid robot. In: IEEE-RAS International Conference on Humanoid Robots (Humanoids). Cancun, Mexico; p. 1081–1086 (2016).

Ayusawa, K. & Yoshida, E. Motion retargeting for humanoid robots based on simultaneous morphing parameter identification and motion optimization. IEEE Trans. Robot. 33, 1343–1357 (2017).

Escalera, J.A., Ferre, M, Aracil, R, Baca, J. ROBMAT: Teleoperation of a modular robot for collaborative manipulation. In: International Conference on Knowledge-Based and Intelligent Information and Engineering Systems (KES). Vietri sul Mare, Italy; p. 1204–1213 (2007).

Özgur, A, Bonardi, S, Vespignani, M, Möckel, R, Ijspeert, A.J. Natural user interface for Roombots. In: IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN). Edinburgh, Scotland; p. 12–17 (2014).

Chen, L., Li, C., Fahmy, A. & Sienz, J. GestureMoRo: an algorithm for autonomous mobile robot teleoperation based on gesture recognition. Sci. Rep. 14, 6199 (2024).

Mutlu, M. et al. Natural user interface for lighting control: Case study on desktop lighting using modular robots. In: IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN). New York City, New York, USA; p. 288–293 (2016).

Huang, J. L., Zhakypov, Z., Sonar, H. & Paik, J. A reconfigurable interactive interface for controlling robotic origami in virtual environments. Int. J. Robot. Res. 37, 629–647 (2018).

Belke, C.H., Holdcroft, K, Sigrist, A, Paik, J. Morphological flexibility in robotic systems through physical polygon meshing. Nat. Mach. Intell. (2023).

Gerbl, M. & Gerstmayr, J. Self-reconfiguration of shape-shifting modular robots with triangular structure. Robot. Auton. Syst. 147, 103930 (2022).

Daudelin, J. et al. An integrated system for perception-driven autonomy with modular robots. Sci. Robot. 3, eaat4983 (2018).

Kvalsund, M.K., Glette, K, Veenstra, F. Centralized and decentralized control in modular robots and their effect on morphology. arXiv preprint arXiv:220613366. (2022).

White, J, Post, M.A., Tyrrell, A. A Novel Connection Mechanism For Dynamically Reconfigurable Modular Robots. In: IEEE International Conference on Informatics in Control, Automation and Robotics (ICINCO): proceedings. Lisbon, Portugal; (2022).

Rubenstein, M., Cornejo, A. & Nagpal, R. Programmable self-assembly in a thousand-robot swarm. Science 345, 795–799 (2014).

Kaspar, C., Ravoo, B. J., van der Wiel, W. G., Wegner, S. V. & Pernice, W. H. The rise of intelligent matter. Nature 594, 345–355 (2021).

Seo, J., Paik, J. & Yim, M. Modular reconfigurable robotics. Annu. Rev. Control, Robot., Auton. Syst. 2, 63–88 (2019).

Sheridan, T. B. Space teleoperation through time delay: Review and prognosis. IEEE Trans. Robot. Autom. 9, 592–606 (1993).

Johnson, S, Rae, I, Mutlu, B., Takayama, L. Can you see me now? How field of view affects collaboration in robotic telepresence. In: Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems. Seoul, Republic of Korea; p. 2397–2406 (2015).

Yim, M. et al. Modular reconfigurable robots in space applications. Auton. Robots 14, 225–237 (2003).

Vaillant, J. et al. Vertical ladder climbing by the HRP-2 humanoid robot. In: IEEE-RAS International Conference on Humanoid Robots (Humanoids). Madrid, Spain; p. 671–676 (2014).

Kheddar, A. et al. Humanoid robots in aircraft manufacturing: The Airbus use cases. IEEE Robot. Autom. Mag. 26, 30–45 (2019).

Bolotnikova, A, Gergondet, P, Tanguy, A, Courtois, S, Kheddar, A. Task-Space Control Interface for SoftBank Humanoid Robots and its Human-Robot Interaction Applications. In: IEEE/SICE International Symposium on System Integration (SII). Iwaki, Fukushima, Japan; p. 560–565 (2021).

Liu, C. & Yim, M. A Quadratic Programming Approach to Manipulation in Real-Time Using Modular Robots. Int. J. Robot. Comput. 3, 121–145 (2021).

Gholami, S, Tassi, F, De Momi, E, Ajoudani, A A reconfigurable interface for ergonomic and dynamic tele-locomanipulation. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); p. 4260–4267 (2021).

Bouyarmane, K., Chappellet, K., Vaillant, J. & Kheddar, A. Quadratic programming for multirobot and task-space force control. IEEE Trans. Robot. 35, 64–77 (2018).

Djeha, M., Tanguy, A. & Kheddar, A. Adaptive-gains enforcing constraints in closed-loop qp control. IEEE Robot. Autom. Lett. 5, 6504–6511 (2020).

CNRS-AIST JRL, CNRS LIRMM.: Interface for simulation and robot control systems. Accessed: (2025-07-31). Available from: https://jrl.cnrs.fr/mc_rtc/.

Støy, K. Reconfigurable Robots. In: Kacprzyk J, Pedrycz W, editors. Handbook of Computational Intelligence. Berlin, Heidelberg: Springer-Verlag; p. 1407–1421 (2015).

Khodr, H., Mutlu, M., Hauser, S., Bernardino, A. & Ijspeert, A. An optimal planning framework to deploy self-reconfigurable modular robots. IEEE Robot. Autom. Lett. 4, 4278–4285 (2019).

Bolotnikova, A. et al. Optimized user-guided motion control of modular robots. https://doi.org/10.5281/zenodo.16633086 (2025).

Acknowledgements

We thank Alexander Sigrist for his initial work exploring sensing modules and excellent discussions. We thank Pierre Gergondet, Mehdi Benallegue and Mohamed Djeha for useful advice on optimization-based control. This research was supported by the EPFL Center for Intelligent Systems (CIS), the European Space Agency’s (ESA) Networking/Partnering Initiative (NPI 646-2018 and NPI 645-2018), and Swiss Space Innovation. This research was supported in part by: • EPFL Center for Intelligent Systems (CIS) AB. •European Space Agency’s (ESA) Networking/Partnering Initiative (NPI 646-2018, NPI 645-2018) KAH, CHB. • Swiss Space Innovation KAH, JP.

Author information

Authors and Affiliations

Contributions

• Conceptualization A.B., K.A.H., C.H.B. and J.P. • Funding acquisition A.B., A.I., J.P. • Investigation A.B., K.A.H., H.C. • Methodology A.B., K.A.H., and C.H.B. • Software A.B. and K.A.H. • Supervision A.I. and J.P. • Visualization A.B. and K.A.H. • Writing - original draft A.B. and K.A.H. • Writing - review & editing A.B., K.A.H., A.I., and J.P.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Consent to publish/participate

The authors affirm that any photos and videos of human likenesses consist strictly of the authors involved in the preparation of this manuscript, who consent to the publication of said videos and images.

Peer review

Peer review information

Nature Communications thanks Chao Liu, Luka Peternel, and Francesco Tassi for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Source data

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Bolotnikova, A., Holdcroft, K., Cerbone, H. et al. Optimized user-guided motion control of modular robots. Nat Commun 16, 8675 (2025). https://doi.org/10.1038/s41467-025-63706-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-025-63706-6