Abstract

Machine learning (ML) has demonstrated its potential in atomistic simulations to bridge the gap between accurate first-principles methods and computationally efficient empirical potentials. This is achieved by learning mappings between a system’s structure and its physical properties. State-of-the-art models for potential energy surfaces typically represent chemical structures through (semi-)local atomic environments. However, this approach neglects long-range interactions (most notably electrostatics) and non-local phenomena such as charge transfer, leading to significant errors in the description of molecules or materials in polar anisotropic environments. To address these challenges, ML frameworks that predict self-consistent charge distributions in atomistic systems using the Charge Equilibration (QEq) method are currently popular. In this approach, atomic charges are derived from an electrostatic energy expression that incorporates environment-dependent atomic electronegativities. Herein, we explore the limits of this concept using the example of the previously reported Kernel Charge Equilibration (kQEq) approach, combined with local short-ranged potentials. To this end, we consider prototypical systems with varying total charge states and applied electric fields. We find that charge equilibration-based models perform well in most situations. However, we also find that some pathologies of conventional QEq carry over to the ML variants in the form of spurious charge transfer and overpolarization in the presence of static electric fields. This indicates a need for new methodological developments.


Introduction

One of the most significant advances in molecular and materials simulation over the past decade has been the introduction of atomistic machine learning interatomic potentials (MLIPs)1,2,3,4. These methods can approach the accuracy of ab initio techniques while scaling to systems containing hundreds of thousands of atoms, opening the door to discoveries previously thought impossible5,6,7. This breakthrough relies generally on the assumption that the total energy of a system can be decomposed into atomic contributions. These contributions are modeled as a function of each atom’s local chemical environment within a defined cutoff radius, using ML techniques such as Neural Networks (NN)8,9 or Gaussian Process Regression (GPR)10,11.

Owing to their inherent locality approximation, however, these models are fundamentally limited by their inability to account for long-range interactions and non-local effects such as charge transfer. This is often unproblematic, since long-range effects are effectively screened in isotropic condensed-phase systems. Such effects can become significant in systems involving polar interfaces or complex ionic interactions, however12. In such cases, local models reach the boundaries of their applicability.

To address these limitations, considerable effort is being devoted to developing methods that incorporate interactions beyond the cutoff of local descriptors. One promising approach involves the use of message-passing neural networks (MPNNs), which extend the effective receptive field of the models by propagating information through a graph representation of the atomistic structure. This enables such models to capture interactions over longer distances, alleviating some of the constraints imposed by the locality assumption9,13,14,15,16. However, message passing is not effective for describing truly long-range interactions, as each additional layer increases the computational cost. Furthermore, the large receptive fields of these MLIPs make parallelization across devices inefficient, precluding their application to very large systems17.

As a consequence of this, models with an explicit physics-based representation of long-range interactions have been of great interest in recent years, including the long-distance equivariant (LODE) framework18,19 and its extensions20, BpopNN21, deep potential long-range (DPLR) models using charges obtained from maximally localized Wannier functions22, or self-consistent field neural networks (SCFNN)23. On a related note, there has also been significant work on developing MLIPs capable of capturing the effects of an external electric field (e.g. for electrochemical or spectroscopic applications), without explicitly predicting charges or directly modeling long-range interactions 24,25,26,27.

For electrostatics in particular, arguably the most widely adopted approach is to build MLIP models that self-consistently predict atomic charges or charge distributions by minimizing a charge-dependent energy expression. This not only enables a physically correct description of long-range interactions, but also automatically provides a means to include external fields and varying total charge states on the same footing. The earliest implementation of this concept in ML was introduced in the CENT models28,29,30, where Goedecker and co-workers combined a classical Charge Equilibration (QEq) method with environment-dependent electronegativities predicted by neural networks (NNs). This methodology was later advanced into the Fourth Generation High-Dimensional Neural Network Potentials (4G-HDNNP), where a local NN potential is combined with a CENT-like model fitted to reproduce Hirshfeld charges31,32. This idea was subsequently also used in other NN potentials33,34, linear Atomic Cluster Expansion models35, and the Kernel Charge Equilibration (kQEq) method. In the latter case, the models were directly trained on observables such as energies, forces or dipole moments36,37.

QEq has thus been found to be highly effective as a building block of long-ranged ML models. Nonetheless, there are some known pathologies of this approach, which have been discussed in the context of classical force fields38. While ML-based charge equilibration models (ML-QEq) are in general much more expressive and accurate than their classical counterparts37, it has not been thoroughly investigated to what extent these pathologies are inherited. This paper aims to answer this question. To this end, we first summarize the known limitations of QEq and analyze to what extent they can be expected to carry over to ML-QEq models. Subsequently, we investigate critical cases numerically by applying ML-QEq methods to systems with varying total charge, cluster fragmentation and applied electric fields.

Results

Classical charge equilibration methods

QEq is widely used to compute partial atomic charges for classical MD simulations, enabling the treatment of long-range electrostatic interactions and polarization effects via electronegativity equalization. As detailed in the methods section, the central idea behind QEq and related approaches is that the charge distribution in a system can be obtained by minimizing a charge-dependent energy function. This function describes the interplay between the Coulomb interaction among the partial charges (favoring charge separation), the electronegativity differences between atoms (favoring flow of negative charge from electropositive to electronegative elements), and the electronic hardness of each atom (limiting the total amount of charge that can be accommodated by each atom).

While QEq yields reasonable charge distributions near equilibrium geometries, it is well known to overestimate charge transfer between dissociated atoms or molecules39,40. This is particularly drastic in the atomized limit, where the off-diagonal elements of the hardness matrix (see Methods section) vanish, so that the atomic charges become determined solely by differences in atomic electronegativities and the sum of the diagonal hardness terms. To eliminate spurious charge transfer in this limit, atomic electronegativities would need to asymptotically vanish, or hardness parameters diverge to infinity. Neither of these is possible within the standard QEq formalism, where both quantities are treated as elemental constants 41.
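The atomized limit can be made concrete with a two-atom sketch. The snippet below is a minimal illustration under stated assumptions, not the implementation used in this work: it considers a neutral pair of atoms with a Gaussian-screened Coulomb interaction, and the parameter values (`CHI`, `ETA`, `gamma`) are hypothetical numbers in atomic units chosen only for illustration. With constant parameters, the transferred charge tends to (χ1 − χ0)/(η0 + η1) instead of vanishing as the atoms are pulled apart.

```python
import math

def screened_coulomb(r, gamma):
    """Interaction of two unit Gaussian charges at distance r (atomic units)."""
    return math.erf(r / (math.sqrt(2.0) * gamma)) / r

def pair_charge(r, chi, eta, gamma=1.0):
    """QEq charge q on atom 0 of a neutral two-atom system (atom 1 carries -q).

    Obtained by minimizing
    E(q) = (chi0 - chi1) q + 0.5 (eta0 + eta1) q^2 - C(r) q^2,
    where C(r) is the screened Coulomb interaction between the two sites.
    """
    c = screened_coulomb(r, gamma)
    return (chi[1] - chi[0]) / (eta[0] + eta[1] - 2.0 * c)

# Hypothetical parameters (atomic units), chosen only for illustration.
CHI = (0.30, 0.10)  # atom 0 is the more electronegative one
ETA = (1.00, 1.00)  # total diagonal hardness of each atom

if __name__ == "__main__":
    for r in (2.0, 5.0, 20.0, 1000.0):
        print(f"r = {r:7.1f}  q0 = {pair_charge(r, CHI, ETA):+.4f}")
    # Residual charge transfer that survives at infinite separation.
    print("atomized limit:", (CHI[1] - CHI[0]) / (ETA[0] + ETA[1]))
```

In this toy model the charge on the more electronegative atom stays negative at every separation, which is the spurious long-range charge transfer discussed above.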

One of the conceptual predecessors to modern environment-dependent ML-QEq models is the Charge Transfer with Polarization Current Equilibration (QTPIE) method39,40. QTPIE addresses key deficiencies of conventional QEq by introducing charge transfer variables that represent polarization currents between atom pairs. The atomic partial charge qi is then obtained as a sum of pairwise charge transfers to and from atom i. Here, unphysical long-range charge transfer is mitigated by damping the polarization current with increasing distance. Interestingly, this atom-pairwise approach was found to be equivalent to a more familiar QEq-like picture that uses overlap integrals Sij to renormalize the atomic electronegativities, making each atom’s effective electronegativity dependent on the local electronic environment just as in ML-QEq methods41.

This analogy raises the question whether ML-QEq methods suffer from the same limitations as classical QEq, or whether they are mitigated by the use of environment-dependent electronegativities. To answer this question, we focus on two situations where (semi)local MLIPs cannot be applied, namely on systems with variable total charge and under applied electric fields, using water clusters as prototypical test systems.

Variable total charges

As a first example, we consider water clusters in different states of (de)protonation with total charges of −1, 0, and +1 e. A local potential with a sufficiently large cutoff could in principle infer the total charge from the number of hydrogen atoms in such clusters. However, this must fail when the clusters are dissociated into their molecular components and separated by a distance larger than the cutoff radius of the local descriptor.

To prepare the dataset of water clusters, the local minima of neutral water clusters containing 3 to 10 molecules, as provided by Xantheas and coworkers42, were used as a starting point. To generate charged configurations, either a proton or a hydroxyl group was removed from each neutral cluster, resulting in positively and negatively charged configurations. Non-equilibrium configurations of these clusters were then generated through molecular dynamics (MD) simulations employing the semiempirical GFN2-xTB method43,44,45. Finally, expanded and contracted clusters were generated by displacing the molecules relative to each cluster’s center of mass by factors ranging from 0.9 to 5.0 (see Fig. 1). For all configurations thus obtained, energies and forces were computed with the hybrid PBE0 functional. A full overview of the dataset is provided in Supplementary Note 1, with RMSE values for validation and test sets in Supplementary Table 1.
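The expansion and contraction step can be sketched as follows. This is a minimal illustration, assuming that each molecule is translated rigidly so that the vector from the cluster's center of mass to the molecule's center of mass is multiplied by the scaling factor; the function names and the `molecule_ids` bookkeeping are hypothetical and not taken from the original workflow.

```python
import numpy as np

def center_of_mass(positions, masses):
    """Mass-weighted mean position of a set of atoms."""
    return (masses[:, None] * positions).sum(axis=0) / masses.sum()

def scale_cluster(positions, masses, molecule_ids, factor):
    """Rigidly shift each molecule away from (or toward) the cluster center.

    positions: (N, 3) array, masses: (N,) array,
    molecule_ids: (N,) labels assigning each atom to a molecule (assumed known),
    factor: scaling of the molecule-to-center distance, e.g. 0.9 to 5.0.
    """
    positions = np.asarray(positions, dtype=float)
    masses = np.asarray(masses, dtype=float)
    molecule_ids = np.asarray(molecule_ids)
    cluster_com = center_of_mass(positions, masses)
    scaled = positions.copy()
    for mol in np.unique(molecule_ids):
        mask = molecule_ids == mol
        mol_com = center_of_mass(positions[mask], masses[mask])
        # The same shift is applied to all atoms of the molecule,
        # so intramolecular geometries are left unchanged.
        scaled[mask] += (factor - 1.0) * (mol_com - cluster_com)
    return scaled
```

Because only rigid translations are applied, bond lengths and angles within each molecule are preserved while intermolecular distances grow or shrink with the factor.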

a Exemplary cluster in the equilibrium and dissociated state. b Learning curves for energies (blue) and forces (red). Mean and standard deviations for three random training sets are shown for each size. RMSEs are calculated on the validation set composed of 2000 randomly selected structures for each net charge state. c Relative distribution of energy errors for a test set composed of 5000 random structures for each net charge state (represented by different color bars), and the corresponding parity plot.

Figure 1 presents learning curves for training an MLIP composed of a short-range Gaussian Approximation Potential (GAP) and a long-ranged kQEq model, with all general conclusions derived below being independent of this particular type of short-ranged MLIP architecture. Full details on architecture and training of these models are provided in the methods section. With sufficient training data, this MLIP can accurately predict energies and forces for all charge states and intermolecular distances, achieving RMSEs of 2 meV/atom and 49 meV/Å. The figure also shows the energy error distributions of the test set in terms of histograms and correlation plots comparing DFT and GAP+kQEq energies. Neutral clusters exhibit lower energy errors relative to charged ones, but also span a smaller energy range. Additionally, neutral clusters constitute the majority of the training set (55% of all structures) and are the only group for which local minima are included. Overall, the performance for all charge states is thus satisfactory.

Notably, this accuracy also translates to an accurate description of long-range interactions. This is shown for the example of dissociation curves for three representative clusters (each corresponding to a different total charge state) in Fig. 2. When comparing the DFT energy with that obtained from the GAP+kQEq model (for comparisons with standalone GAP and kQEq models see Supplementary Fig. 1 and Supplementary Note 2), we can see that the potential energy surface (PES) is accurately described across the entire range of inter-molecular distances, including interactions outside of the descriptor cutoff distance. However, at longer distances (i.e. beyond a two-fold expansion) the ML-curves display small energy fluctuations which are absent at the DFT level. These will be analyzed in more detail below.

a Comparison between DFT (points) and the GAP+kQEq (full lines) energies. b Illustration of equilibrium and dissociated water clusters for each energy curve, where atoms are colored according to their atomic charges. For anionic and cationic clusters the OH− and H3O+ fragments are circled, respectively.

It is worth noting that all models discussed so far have not been trained on reference atomic charges. This contrasts with common practice in the development of long-range MLIPs, where schemes such as Hirshfeld partitioning are often used to generate reference charges31,34,35. On the other hand, models where charges are considered solely as learnable weights in long-range energy expressions are becoming increasingly popular46,47. Indeed, although the partial charges of atoms in molecules are not physical observables, ML charges obtained by fitting potential energy surfaces can nonetheless acquire a meaningful interpretation and reflect the underlying physics of the system. This is somewhat analogous to density representations obtained with Resolution-of-the-Identity (RI) and Density Fitting (DF) techniques in quantum chemistry, which can also differ significantly depending on whether an overlap (density-based) or Coulomb (energy-based) metric is used48. In fact, it has been argued that charge partitioning schemes can be considered a primitive form of RI49.

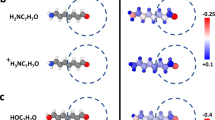

In order to judge the quality of the predicted charges, we therefore choose not to compare them to an (arbitrary) reference partitioning scheme. Instead, we consider how the sums of atomic charges for individual molecules fluctuate during the dissociation of the corresponding clusters. This provides insight into intermolecular charge transfer and polarization, which should be robustly captured by any reasonable partitioning. Figure 3 shows the evolution of the molecular charges for the dissociation of a representative test set cluster at each charge state. Here, we compare PBE0 Hirshfeld charges (top) with the kQEq charges of a model trained on energies and forces (center) and specialized kQEq models trained to reproduce Hirshfeld charges (bottom).
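The molecular charges used in this analysis are simply sums of atomic charges over the atoms of each molecule. A minimal sketch is given below; the `molecule_ids` labels are a hypothetical bookkeeping device that assigns each atom to a molecule and are assumed to be known from the cluster construction.

```python
from collections import defaultdict

def molecular_charges(atomic_charges, molecule_ids):
    """Sum atomic partial charges over the atoms of each molecule."""
    totals = defaultdict(float)
    for charge, mol in zip(atomic_charges, molecule_ids):
        totals[mol] += charge
    return dict(totals)
```

Tracking these sums along a dissociation path shows how much charge is exchanged between molecules, independent of how the charge is distributed within each molecule.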

Charges are shown for each total charge state and DFT-based Hirshfeld partitioning (top), a GAP+kQEq model fitted on energies and forces (center), and kQEq models fitted on Hirshfeld charges (bottom). Different shades of lines represent different molecules in each cluster. Note that a single energy/force model was trained for all charge states, whereas individual models were trained for each charge state when fitting to charges.

At first glance, all methods capture the same basic trends. The total charges are delocalized among all molecules in the equilibrium geometry. Upon dissociation, the charge localizes on one fragment (H3O+ or OH−, respectively), while the H2O molecules become charge neutral. Interestingly, even the DFT reference displays a small deviation from integer charges in this limit (particularly for the anionic cluster), due to the well-known delocalization error50. Because a hybrid functional is used (and because the electronegativity differences between the fragments are relatively small), DFT nevertheless approximately recovers the correct limit of integer charges in this case. Deviations from integer charges are more pronounced in the ML models, reaching up to 0.05 elementary charges. The ML models thus inherit the overdelocalization tendency of the underlying QEq model, which is much more pronounced than for hybrid DFT41. The models that are explicitly fitted on charges are actually slightly worse in this respect, indicating that this is a fundamental property of ML-QEq models and not related to the specific training setup.

Beyond these general trends, there are also notable differences in how the methods approach their asymptotic limits. In the DFT case, the molecular charges monotonically approach the asymptotic values, whereas the ML models show much more variation and even local minima and maxima in some cases. This is related to the fact that QEq-based models can freely transfer charge between molecules whenever this is variationally favorable. The result is an exaggerated response of the charges to small perturbations in the electrostatic potential, which causes the small energy fluctuations upon dissociation seen in Fig. 2.

External fields

One of the key advantages of variational charge equilibration methods is their inherent ability to dynamically adapt the atomic charges in response to external fields. This feature is particularly important for MLIPs intended for applications involving non-isotropic environments, such as interfaces in battery systems or electrocatalysis. Here, local fields can vary significantly and do not tend to cancel out in the course of MD simulations 12.

To assess the ability of QEq-based methods to accurately describe the PES in the presence of an external electric field, we selected a subset of 3030 non-equilibrium neutral water cluster configurations from the dataset described in the previous section. Based on this, a second dataset was generated in which each configuration was subjected to a uniform external electric field. Each field was defined as a randomly oriented unit vector scaled by a randomly selected magnitude between 0.01 and 0.2 V/Å. This range was chosen to reflect realistic conditions at electrode interfaces, where local field strengths can reach up to 0.2 V/Å24,51,52. Higher fields, particularly around 0.35 V/Å, are known to induce water molecule dissociation53 and were therefore avoided in order to remain within the regime of non-destructive field strengths.
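The field sampling can be sketched as follows. This is a minimal illustration under assumptions that the text does not specify: directions are drawn uniformly on the unit sphere (by normalizing Gaussian random vectors) and magnitudes are drawn uniformly from the stated range. The function name and the seed are hypothetical.

```python
import numpy as np

def sample_fields(n, min_strength=0.01, max_strength=0.2, seed=0):
    """Draw n random uniform electric field vectors in V/Å.

    Assumes isotropic directions and uniformly distributed magnitudes.
    """
    rng = np.random.default_rng(seed)
    # Normalized Gaussian vectors are uniformly distributed on the sphere.
    directions = rng.normal(size=(n, 3))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)
    magnitudes = rng.uniform(min_strength, max_strength, size=n)
    return directions * magnitudes[:, None]

if __name__ == "__main__":
    fields = sample_fields(5)
    print(fields)
    print(np.linalg.norm(fields, axis=1))
```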

To explore optimal training strategies in this setting, we constructed training sets following two complementary approaches, depicted in Fig. 4. In the first case, a set of 100 field-perturbed data points was augmented with a varying number of field-free configurations. In the second case, a set of 100 field-free configurations was augmented with a varying number of field-perturbed configurations. This strategy was chosen in order to determine how training data points with and without a field help or hinder the predictive performance of the model for both kinds of data. The results reveal that incorporating field-perturbed structures into the training set is crucial for achieving optimal performance for both field-free and perturbed systems. While adding field-free configurations also initially improves the performance for perturbed systems, this benefit quickly tapers off as more structures are added. In contrast, adding field-perturbed structures is beneficial to both field-free and field-perturbed systems. Nevertheless, an accuracy gap between the field-perturbed and field-free structures remains.

Shown are energy errors for a model trained on an initial set of 100 field-perturbed configurations, which is augmented by N field-free configurations (dashed lines) and a model trained on an initial set of 100 field-free configurations, which is augmented by N field-perturbed configurations (full lines). Color scheme: blue - test set of field-perturbed structures, red - test set of unperturbed structures.

Based on these findings, a final model was trained using a dataset comprising 2000 field-perturbed and 1000 field-free configurations. While overall low errors (ca. 2 meV/atom for energies and 60 meV/Å for forces) are achieved, the errors for field-perturbed cases remain significantly higher than for field-free ones. This increased difficulty points to an inherent limitation in how well QEq-based methods can describe polarization.

As described in the Methods section, the inclusion of an external electric field in the QEq energy expression corresponds to an additive perturbation of the atomic electronegativities. This perturbation depends solely on the atomic positions and the direction and magnitude of the field, leading to a quadratic response of the energy and a linear response of the charges with respect to the field strength. This means that the field response is effectively ML-free in QEq-based MLIPs. Nonetheless, incorporating fields in the training is beneficial, since it leads to an optimal mean-field parameterization for the given range of applied fields.
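The linear charge response and the quadratic energy response can be checked numerically with a small model. The sketch below is a hedged illustration rather than the kQEq implementation: the hardness matrix `A`, the electronegativities `CHI` and the coordinates `Z` are hypothetical values for a three-site toy system, the field is applied along z only, and the sign convention (field energy equal to minus the sum of q_i z_i times the field) is an assumption.

```python
import numpy as np

def solve_qeq(hardness, chi, total_charge=0.0):
    """Minimize chi.q + 0.5 q.A.q subject to sum(q) = total_charge."""
    n = len(chi)
    lhs = np.zeros((n + 1, n + 1))
    lhs[:n, :n] = hardness
    lhs[:n, n] = 1.0   # Lagrange multiplier column
    lhs[n, :n] = 1.0   # total charge constraint row
    rhs = np.append(-np.asarray(chi, dtype=float), total_charge)
    return np.linalg.solve(lhs, rhs)[:n]

def field_response(hardness, chi, z, field):
    """Charges and energy for a uniform field along z (assumed sign convention)."""
    chi_eff = np.asarray(chi, dtype=float) - np.asarray(z, dtype=float) * field
    q = solve_qeq(hardness, chi_eff)
    energy = chi_eff @ q + 0.5 * q @ hardness @ q
    return q, energy

# Hypothetical three-site model (arbitrary units), for illustration only.
A = np.array([[1.0, 0.3, 0.1],
              [0.3, 1.2, 0.3],
              [0.1, 0.3, 1.0]])
CHI = np.array([0.2, -0.1, 0.0])
Z = np.array([-1.0, 0.0, 1.0])

if __name__ == "__main__":
    q0, e0 = field_response(A, CHI, Z, 0.0)
    for field in (0.1, 0.2, 0.3):
        q, e = field_response(A, CHI, Z, field)
        print(field, q - q0, e - e0)
```

Because the field enters only through the right-hand side of a linear system, the charge shift scales linearly with the field strength regardless of how the field-free electronegativities were obtained. This is the sense in which the field response is ML-free.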

Figure 5 illustrates the qualitative disagreement between DFT and kQEq for a water cluster subjected to external electric fields of varying magnitude but fixed direction. As shown, the QEq-based MLIP fails to reproduce the correct shape of the energy response to the field. Figure 5 also shows the difference in atomic charges obtained under −0.2 V/Å and 0.2 V/Å fields. The kQEq model predicts that the external field induces a dipole aligned with the field direction, resulting in a charge redistribution within the cluster. In contrast, changes in DFT-based Hirshfeld charges indicate a polarization of each individual water molecule, but no charge redistribution between the water molecules. This behavior highlights the intrinsic limitations of QEq-based methods in adequately capturing polarization effects. A further example demonstrating this linear charge redistribution in periodic water slabs is provided in Supplementary Fig. 2 and in Supplementary Note 3.

a Energetic response of DFT and machine learning (GAP+kQEq) for a neutral water cluster subjected to external electric fields of varying magnitudes along a fixed direction. Energies are shifted so that the DFT energy is zero at |ϵ| = 0.0. b Difference in atomic charges obtained from Hirshfeld partitioning (DFT) and kQEq under external fields of −0.2 V/Å and 0.2 V/Å.

More crucial than the quantitative disagreement between ML and DFT is the fact that the overpolarization tendency of QEq-based models can lead to pathological behavior under certain conditions. To illustrate this, we performed an MD simulation analogous to the work of Modine and co-workers38 (who used a classical QEq model). Specifically, we applied a constant external electric field of 0.2 V/Å in the z-direction to a water cluster consisting of 10 molecules. To confine the motion of the molecules in the z-direction, we imposed a harmonic constraint represented by planar boundaries at z = ± 30 Å.

As illustrated in Fig. 6, the water cluster begins to split into two distinct fragments around the 150 ps mark: one composed of two molecules moving in the direction of the applied field, and the other composed of eight molecules moving in the opposite direction. The larger subcluster accumulates a net negative charge and migrates against the field, while the smaller, positively charged subcluster moves along the field direction. The magnitudes of the net charges on both subclusters are approximately equal and opposite. Such charge separation is unphysical under realistic conditions, as it would require a potential difference approaching the ionization energy of water (12.6 eV)38, a threshold not reached under the simulated field strength. This behavior is a clear artifact of the QEq formalism, which enforces a linear and unconstrained charge response. The incorporation of environment-dependent ML electronegativities does not mitigate this limitation, indicating that the underlying QEq model remains fundamentally inadequate for accurately capturing polarization and charge transfer under strong external fields in non-metallic systems.

Discussion

Charge equilibration based on QEq-like ML-models is currently the state-of-the-art approach to introduce non-local charge transfer and electrostatic interactions into MLIPs. We find that such models can in principle accurately describe multiple total charge states and naturally incorporate a mechanism to describe the response to external fields. Despite this overall good performance, however, our results also showcase the limitations of ML-QEq models. While potential energy surfaces can be accurately reproduced, delocalization and overpolarization errors (that are known for classical QEq models) can lead to problematic behavior. This manifests in unphysical intermolecular charge transfer (particularly for fragmented systems) and a qualitatively incorrect response of both energies and charges to electric fields. A drastic consequence of this is pathological behavior in cases when a static electric field is applied in an MD simulation. Importantly, these issues are independent of the specific MLIP used herein, and can be traced to fundamental limitations of ML-QEq models.

Individual aspects of these problems can be addressed, e.g. by constraining the total charge of each subfragment or molecule. However, this strategy is not universally applicable. In particular, in reactive simulations (one of the main application areas of MLIPs), predefining individual fragments is not feasible. Nevertheless, QEq has some highly desirable properties, namely its variational nature and the capability to describe non-local effects. This points to a need to develop new ML charge equilibration approaches beyond the QEq paradigm. In the classical force-field domain, the Atom Condensed Kohn Sham (ACKS2) approach has proven to be the most promising successor for QEq, though it requires a complex atom pairwise parameterization54,55. Alternatively, a more complex (non-local) expression for the site energy potentially could also provide an avenue to overcoming the pathologies of QEq. The methods and datasets presented herein can provide a benchmark for such new developments.

Methods

Machine learning interatomic potentials

All ML models described herein are based on the sparse Gaussian Process Regression (GPR) methodology. Specifically, we approximate a target quantity y(x) by a linear combination \(\tilde{y}({\bf{x}})\) of M basis functions,

\[\tilde{y}({\bf{x}})=\sum_{m=1}^{M}{w}_{m}\,k({\bf{x}},{{\bf{x}}}_{m}),\qquad (1)\]

where wm are regression weights that are fitted to match ab initio reference values, k is a kernel function, x is a representation vector (to be defined below), xm are the points of the representative set and M denotes the size of this set. Although this set can generally be chosen at random, in our case we select diverse points using CUR decomposition56, as is routinely done for Gaussian Approximation Potentials (GAP)1. In contrast to the representative set, N > M will denote the size of the total training set.
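Evaluating a model of this form amounts to a weighted sum of kernel values between a new representation vector and the representative points. The following minimal sketch illustrates this; the polynomial dot-product kernel with exponent `zeta` is an assumption made for illustration (it is a common choice for normalized SOAP vectors), and the function names are hypothetical.

```python
import numpy as np

def polynomial_kernel(x, x_rep, zeta=2):
    """Dot-product kernel between x and each row of x_rep, raised to zeta.

    Assumes that all representation vectors are normalized to unit length.
    """
    return (np.asarray(x_rep, dtype=float) @ np.asarray(x, dtype=float)) ** zeta

def predict(x, x_rep, weights, zeta=2):
    """Sparse GPR prediction: sum over m of w_m * k(x, x_m)."""
    return np.asarray(weights, dtype=float) @ polynomial_kernel(x, x_rep, zeta)

if __name__ == "__main__":
    x_rep = np.eye(2)           # two representative points (M = 2)
    weights = [1.0, 2.0]        # hypothetical regression weights
    print(predict([0.6, 0.8], x_rep, weights))
```

The cost of a prediction scales with the size M of the representative set rather than with the size N of the full training set, which is the practical motivation for the sparse formulation.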

The GPR framework is used to create MLIPs that incorporate both short-range (SR) and long-range (LR) interactions. To this end, the total energy of the system Etot is described via a SR part ESR (described by GAP) and a LR part EQEq described by (Kernel) Charge Equilibration (kQEq):

\[{E}_{{\rm{tot}}}={E}_{{\rm{SR}}}+{E}_{{\rm{QEq}}}.\qquad (2)\]

The SR energy of the system is expressed as a sum of atomic energy contributions ϵ(xi) that depend on local environments described by the descriptor xi,

\[{E}_{{\rm{SR}}}=\sum_{i=1}^{{N}_{{\rm{at}}}}\epsilon ({{\bf{x}}}_{i}),\qquad (3)\]

where the summation runs over all Nat atoms in the system.

This local atomic contribution ϵ(xi) is determined using a straightforward GPR model as expressed in Eq. (1):

\[\epsilon ({{\bf{x}}}_{i})=\sum_{m=1}^{M}{w}_{m}\,k({{\bf{x}}}_{i},{{\bf{x}}}_{m}).\qquad (4)\]

The LR part of the energy is described by the QEq framework, where the total energy can be approximated as a second order Taylor expansion of on-site atomic charges qi combined with a screened pairwise Coulomb interaction (assuming Gaussian charge distributions with width αi):

Here, the screening parameter γij is computed from the width parameter, χi is the electronegativity of atom i and Ji is the non-classical contribution to the atomic hardness 37,57.

Self-consistent partial charges can be obtained by minimizing Eq. (5). As this expression is quadratic in the atomic charges qi, the ground-state charge distribution can be computed analytically. Specifically, setting the derivatives of the total energy with respect to the partial charges to zero yields a linear system of equations, which can be solved directly to obtain the self-consistent charges (under the constraint that total system charge, Qtot, is conserved):

with Aij being the element of the hardness matrix A defined as:

With this, ELR can be expressed in a matrix notation as

For a description of the incorporation of periodic boundary conditions, see Supplementary Note 4. In contrast to classical QEq models, where χ is an elemental constant, ML-based QEq models introduce environment-dependent electronegativities30,31. The kQEq model used herein achieves this via GPR, analogously to how the atomic energies are expressed in the SR energy37:

\[\chi ({{\bf{x}}}_{i})=\sum_{m=1}^{M}{w}_{m}^{\chi }\,k({{\bf{x}}}_{i},{{\bf{x}}}_{m}),\qquad (9)\]

where the regression weights \({w}_{m}^{\chi }\) are specific to the electronegativity model. These environment-dependent electronegativities are then used in the QEq scheme instead of constant element-dependent ones. For full details, please refer to our previous work37.
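For orientation, the QEq expressions referred to above as Eqs. (5) to (8) can be written in the following standard form. This is a sketch under an assumed convention, not a verbatim reproduction of the typeset equations: atomic units are used, the charge densities are taken as normalized Gaussians proportional to exp(−|r − ri|²/2αi²), the screening parameter is taken as γij = (αi² + αj²)^(1/2), and λ is a Lagrange multiplier introduced here to enforce the total charge Qtot. Prefactors may differ in other conventions; see ref. 37 for the definitions used with kQEq.

```latex
% Eq. (5): charge-dependent QEq energy (assumed Gaussian convention)
E_{\mathrm{QEq}} = \sum_{i=1}^{N_{\mathrm{at}}}
  \left[ \chi_i q_i + \frac{1}{2}\left( J_i + \frac{1}{\alpha_i\sqrt{\pi}} \right) q_i^2 \right]
  + \sum_{i<j} q_i q_j\,
    \frac{\operatorname{erf}\!\left( \dfrac{r_{ij}}{\sqrt{2}\,\gamma_{ij}} \right)}{r_{ij}},
\qquad \gamma_{ij} = \sqrt{\alpha_i^2 + \alpha_j^2}

% Eq. (6): stationarity conditions with total charge constraint
\begin{pmatrix} \mathbf{A} & \mathbf{1} \\ \mathbf{1}^{\mathsf{T}} & 0 \end{pmatrix}
\begin{pmatrix} \mathbf{q} \\ \lambda \end{pmatrix}
=
\begin{pmatrix} -\boldsymbol{\chi} \\ Q_{\mathrm{tot}} \end{pmatrix}

% Eq. (7): elements of the hardness matrix
A_{ij} =
\begin{cases}
  J_i + \dfrac{1}{\alpha_i\sqrt{\pi}}, & i = j \\[2ex]
  \dfrac{\operatorname{erf}\!\left( \dfrac{r_{ij}}{\sqrt{2}\,\gamma_{ij}} \right)}{r_{ij}}, & i \neq j
\end{cases}

% Eq. (8): long-range energy in matrix notation
E_{\mathrm{LR}} = \frac{1}{2}\,\mathbf{q}^{\mathsf{T}}\mathbf{A}\,\mathbf{q}
  + \boldsymbol{\chi}^{\mathsf{T}}\mathbf{q}
```

With these definitions, setting the derivative of the energy with respect to each qi to zero gives Aq + λ1 = −χ, which together with the charge constraint is the linear system in the second expression. As the interatomic distances grow, the off-diagonal elements decay to zero, which is the atomized limit discussed in the Results section.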

The SR and LR models can in principle be trained separately. This is e.g. the strategy pursued for 4G-HDNNPs, where the QEq model is trained on Hirshfeld charges and kept fixed during the training of the short-ranged NN. However, in order to achieve a comprehensive description of various phenomena, such as polar interfaces, systems with varying total charge, or interactions with applied external fields, a joint training of both models is used herein.

External fields

One of the advantages of variational charge equilibration approaches like QEq is that they allow a straightforward description of the interactions of a system with an external electric field. This can be achieved using the method of finite fields, following the formalism of Chen and Martínez38,58. This leads to a modification of the QEq energy expression due to the field ϵ as

where R is a matrix of atomic coordinates. The summation in this equation runs over the Cartesian directions μ = x, y, z. As can be seen, applying an external field thus perturbs the electronegativities by a scalar Rμϵμ. Minimizing Eq. (10) in the same way as Eq. (5) yields an analogous system of linear equations.
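A field-dependent energy expression consistent with this description can be sketched as follows. The overall sign is an assumption: it is chosen here so that a positive charge is pushed along the field direction (field energy equal to minus the dipole moment times the field), whereas the text only specifies that the perturbation of each electronegativity is Rμϵμ. The Lagrange multiplier λ is introduced here for the total charge constraint.

```latex
% Eq. (10), assumed sign convention: E_field = - mu . epsilon
E_{\mathrm{QEq}}(\boldsymbol{\epsilon})
  = E_{\mathrm{QEq}} - \sum_{i=1}^{N_{\mathrm{at}}} q_i \sum_{\mu = x,y,z} R_{i\mu}\,\epsilon_{\mu}

% Equivalent shift of the electronegativities
\chi_i \;\longrightarrow\; \chi_i - \sum_{\mu = x,y,z} R_{i\mu}\,\epsilon_{\mu}

% Resulting linear system for the charges
\begin{pmatrix} \mathbf{A} & \mathbf{1} \\ \mathbf{1}^{\mathsf{T}} & 0 \end{pmatrix}
\begin{pmatrix} \mathbf{q} \\ \lambda \end{pmatrix}
=
\begin{pmatrix} -\boldsymbol{\chi} + \mathbf{R}\,\boldsymbol{\epsilon} \\ Q_{\mathrm{tot}} \end{pmatrix}
```

Since the field appears only on the right-hand side of the linear system, the charges depend linearly on the field, and inserting them into the quadratic energy expression gives an energy that is quadratic in the field strength.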

Computational settings

Reference data for neutral and charged water clusters was obtained using FHI-Aims59 with the hybrid PBE0 functional60 and tight basis set and integration settings. MD simulations for generating non-equilibrium configurations of charged clusters were performed with the xtb code, employing the semiempirical tight-binding method GFN2-xTB at 300 K43,44,45.

We use the Smooth Overlap of Atomic Positions (SOAP) descriptor61 as a representation of atomic environments, as implemented in QUIP via the quippy Python interface62,63. For the model describing varying total charge states, two SOAP descriptors with parameters \({l}_{\max }=5\), \({n}_{\max }=6\) and rcut = 3 Å or rcut = 5 Å, respectively, were used. Regularization parameters of \(10^{-6}\) eV for energies and \(10^{-3}\) eV/Å for forces were used. The non-classical hardness of both elements was set to 0 Hartree. For clusters with applied fields, two SOAP descriptors with parameters \({l}_{\max }=6\), \({n}_{\max }=8\), rcut = 2.4 Å, and \({l}_{\max }=3\), \({n}_{\max }=8\), rcut = 5 Å were used. The non-classical hardness of both elements was set to 0.1 Hartree. Regularization parameters of 0.001 eV for energies and 0.01 eV/Å for forces were used.

MD of the water cluster under the influence of an external field was performed in the NVT ensemble with a Langevin thermostat, using a time step of 0.5 fs at 300 K. Hookean constraint planes were placed at z = ± 30 Å with k = 0.5 eV/Å². The system was propagated with the Atomic Simulation Environment (ASE)64,65, using the calculator implemented in q-pac.
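The effect of the confining planes can be illustrated with a short stand-alone function. This is not the ASE implementation but a minimal sketch of the assumed behavior: an atom feels no force between the planes and a harmonic restoring force once it moves beyond one of them. The function name is hypothetical; the default values are those quoted above.

```python
def wall_force_z(z, z_wall=30.0, k=0.5):
    """Restoring force along z (eV/Å) from harmonic walls at +/- z_wall (Å).

    k is the spring constant in eV/Å^2. The force is zero between the walls.
    """
    if z > z_wall:
        return -k * (z - z_wall)
    if z < -z_wall:
        return -k * (z + z_wall)
    return 0.0
```

Such walls only keep the fragments inside the simulation region; they do not affect the charge separation itself, which arises from the QEq response to the field.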

Data availability

The final fitted model, together with all data, is publicly available via the MPCDF GitLab at https://gitlab.mpcdf.mpg.de/mvondrak/qpac_data.

Code availability

The code is available via GitLab at https://gitlab.com/jmargraf/qpac/ (commit 6a43ce73d8735c6c6079682f649a8231cc68a4ce).

References

Deringer, V. L. et al. Gaussian process regression for materials and molecules. Chem. Rev. 121, 10073–10141 (2021).

Behler, J. Four generations of high-dimensional neural network potentials. Chem. Rev. 121, 10037–10072 (2021).

Unke, O. T. et al. Machine learning force fields. Chem. Rev. 121, 10142–10186 (2021).

Batatia, I. et al. A foundation model for atomistic materials chemistry. Preprint at https://arxiv.org/abs/2401.00096 (2024).

Deringer, V., Caro, M. & Csányi, G. A general-purpose machine-learning force field for bulk and nanostructured phosphorus. Nat. Commun. 11, 5461 (2020).

Deringer, V. L. et al. Origins of structural and electronic transitions in disordered silicon. Nature 589, 59–64 (2021).

Vandenhaute, S., Cools-Ceuppens, M., DeKeyser, S., Verstraelen, T. & Van Speybroeck, V. Machine learning potentials for metal-organic frameworks using an incremental learning approach. npj Comput. Mater. 9, 19 (2023).

Batatia, I., Kovács, D. P., Simm, G. N. C., Ortner, C. & Csányi, G. MACE: higher order equivariant message passing neural networks for fast and accurate force fields. In Advances in Neural Information Processing Systems (NeurIPS) (2022).

Kovács, D. P., Batatia, I., Arany, E. S. & Csányi, G. Evaluation of the MACE force field architecture: from medicinal chemistry to materials science. J. Chem. Phys. 159, 044118 (2023).

Bartók, A. P., Payne, M. C., Kondor, R. & Csányi, G. Gaussian approximation potentials: the accuracy of quantum mechanics, without the electrons. Phys. Rev. Lett. 104, 136403 (2010).

Klawohn, S. et al. Gaussian approximation potentials: theory, software implementation and application examples. J. Chem. Phys. 159, 174108 (2023).

Staacke, C. G. et al. On the role of long-range electrostatics in machine-learned interatomic potentials for complex battery materials. ACS Appl. Energy Mater. 4, 12562–12569 (2021).

Schütt, K. T., Arbabzadah, F., Chmiela, S., Müller, K. R. & Tkatchenko, A. Quantum-chemical insights from deep tensor neural networks. Nat. Commun. 8, 13890 (2017).

Schütt, K. T., Sauceda, H. E., Kindermans, P.-J., Tkatchenko, A. & Müller, K.-R. SchNet - a deep learning architecture for molecules and materials. J. Chem. Phys. 148, 241722 (2018).

Lubbers, N., Smith, J. S. & Barros, K. Hierarchical modeling of molecular energies using a deep neural network. J. Chem. Phys. 148, 241715 (2018).

Batzner, S. et al. E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials. Nat. Commun. 13, 2453 (2022).

Musaelian, A. et al. Learning local equivariant representations for large-scale atomistic dynamics. Nat. Commun. 14, 579 (2023).

Grisafi, A. & Ceriotti, M. Incorporating long-range physics in atomic-scale machine learning. J. Chem. Phys. 151, 204105 (2019).

Huguenin-Dumittan, K. K., Loche, P., Haoran, N. & Ceriotti, M. Physics-inspired equivariant descriptors of nonbonded interactions. J. Phys. Chem. Lett. 14, 9612–9618 (2023).

Faller, C., Kaltak, M. & Kresse, G. Density-based long-range electrostatic descriptors for machine learning force fields. J. Chem. Phys. 161, 214701 (2024).

Xie, X., Persson, K. A. & Small, D. W. Incorporating electronic information into machine learning potential energy surfaces via approaching the ground-state electronic energy as a function of atom-based electronic populations. J. Chem. Theory Comput. 16, 4256–4270 (2020).

Zhang, L. et al. A deep potential model with long-range electrostatic interactions. J. Chem. Phys. 156, 124107 (2022).

Gao, A. & Remsing, R. C. Self-consistent determination of long-range electrostatics in neural network potentials. Nat. Commun. 13, 1572 (2022).

Joll, K., Schienbein, P., Rosso, K. M. & Blumberger, J. Machine learning the electric field response of condensed phase systems using perturbed neural network potentials. Nat. Commun. 15, 8192 (2024).

Grisafi, A., Bussy, A., Salanne, M. & Vuilleumier, R. Predicting the charge density response in metal electrodes. Phys. Rev. Mater. 7, https://doi.org/10.1103/PhysRevMaterials.7.125403 (2023).

Rossi, M., Rossi, K., Lewis, A. M., Salanne, M. & Grisafi, A. Learning the electrostatic response of the electron density through a symmetry-adapted vector field model. J. Phys. Chem. Lett. 16, 2326–2332 (2025).

Bergmann, N., Bonnet, N., Marzari, N., Reuter, K. & Hörmann, N. G. Machine learning the energetics of electrified solid/liquid interfaces. Preprint at https://arxiv.org/abs/2505.19745 (2025).

Ghasemi, S. A., Hofstetter, A., Saha, S. & Goedecker, S. Interatomic potentials for ionic systems with density functional accuracy based on charge densities obtained by a neural network. Phys. Rev. B 92, 045131 (2015).

Faraji, S. et al. High accuracy and transferability of a neural network potential through charge equilibration for calcium fluoride. Phys. Rev. B 95, 104105 (2017).

Khajehpasha, E. R., Finkler, J. A., Kühne, T. D. & Ghasemi, S. A. CENT2: improved charge equilibration via neural network technique. Phys. Rev. B 105, 144106 (2022).

Ko, T. W., Finkler, J. A., Goedecker, S. & Behler, J. A fourth-generation high-dimensional neural network potential with accurate electrostatics including non-local charge transfer. Nat. Commun. 12, 398 (2021).

Gubler, M., Finkler, J. A., Schäfer, M. R., Behler, J. & Goedecker, S. Accelerating fourth-generation machine learning potentials using quasi-linear scaling particle mesh charge equilibration. J. Chem. Theory Comput. https://doi.org/10.1021/acs.jctc.4c00334 (2024).

Jacobson, L. D. et al. Transferable neural network potential energy surfaces for closed-shell organic molecules: extension to ions. J. Chem. Theory Comput. 18, 2354–2366 (2022).

Shaidu, Y., Pellegrini, F., Küçükbenli, E., Lot, R. & De Gironcoli, S. Incorporating long-range electrostatics in neural network potentials via variational charge equilibration from shortsighted ingredients. npj Comput. Mater. 10, 47 (2024).

Rinaldi, M., Bochkarev, A., Lysogorskiy, Y. & Drautz, R. Charge-constrained atomic cluster expansion. Phys. Rev. Mater. 9, 033802 (2025).

Staacke, C. G. et al. Kernel charge equilibration: efficient and accurate prediction of molecular dipole moments with a machine-learning enhanced electron density model. Mach. Learn. Sci. Technol. 3, 015032 (2022).

Vondrák, M., Reuter, K. & Margraf, J. T. q-pac: a Python package for machine learned charge equilibration models. J. Chem. Phys. 159, 054109 (2023).

Koski, J. P. et al. Water in an external electric field: comparing charge distribution methods using ReaxFF simulations. J. Chem. Theory Comput. 18, 580–594 (2022).

Chen, J. & Martínez, T. J. QTPIE: charge transfer with polarization current equalization. A fluctuating charge model with correct asymptotics. Chem. Phys. Lett. 438, 315–320 (2007).

Gergs, T., Schmidt, F., Mussenbrock, T. & Trieschmann, J. Generalized method for charge-transfer equilibration in reactive molecular dynamics. J. Chem. Theory Comput. 17, 6691–6704 (2021).

Chen, J., Hundertmark, D. & Martínez, T. J. A unified theoretical framework for fluctuating-charge models in atom-space and in bond-space. J. Chem. Phys. 129, 2034 (2008).

Rakshit, A., Bandyopadhyay, P., Heindel, J. P. & Xantheas, S. S. Atlas of putative minima and low-lying energy networks of water clusters n = 3-25. J. Chem. Phys. 151, 214307 (2019).

Bannwarth, C. et al. Extended tight-binding quantum chemistry methods. WIREs Comput. Mol. Sci. 11, e1493 (2021).

Grimme, S., Bannwarth, C. & Shushkov, P. A robust and accurate tight-binding quantum chemical method for structures, vibrational frequencies, and noncovalent interactions of large molecular systems parametrized for all spd-block elements (z = 1-86). J. Chem. Theory Comput. 13, 1989–2009 (2017).

Bannwarth, C., Ehlert, S. & Grimme, S. GFN2-xTB-an accurate and broadly parametrized self-consistent tight-binding quantum chemical method with multipole electrostatics and density-dependent dispersion contributions. J. Chem. Theory Comput. 15, 1652–1671 (2019).

Loche, P. et al. Fast and flexible long-range models for atomistic machine learning. J. Chem. Phys. 162, 142501 (2025).

Zhong, P., Kim, D., King, D. S. & Cheng, B. Machine learning interatomic potential can infer electrical response. Preprint at https://arxiv.org/abs/2504.05169 (2025).

Luenser, A., Schurkus, H. F. & Ochsenfeld, C. Vanishing-overhead linear-scaling random phase approximation by Cholesky decomposition and an attenuated coulomb-metric. J. Chem. Theory Comput. 13, 1647–1655 (2017).

Grimme, S. A simplified Tamm-Dancoff density functional approach for the electronic excitation spectra of very large molecules. J. Chem. Phys. 138, 454 (2013).

Jensen, F. Describing anions by density functional theory: fractional electron affinity. J. Chem. Theory Comput. 6, 2726–2735 (2010).

Ataka, K.-i, Yotsuyanagi, T. & Osawa, M. Potential-dependent reorientation of water molecules at an electrode/electrolyte interface studied by surface-enhanced infrared absorption spectroscopy. J. Phys. Chem. 100, 10664–10672 (1996).

Toney, M. F. et al. Voltage-dependent ordering of water molecules at an electrode-electrolyte interface. Nature 368, 444–446 (1994).

Saitta, A. M., Saija, F. & Giaquinta, P. V. Ab initio molecular dynamics study of dissociation of water under an electric field. Phys. Rev. Lett. 108, 207801 (2012).

Verstraelen, T., Ayers, P. W., Van Speybroeck, V. & Waroquier, M. ACKS2: atom-condensed Kohn-Sham DFT approximated to second order. J. Chem. Phys. 138, 670 (2013).

Gütlein, P., Lang, L., Reuter, K., Blumberger, J. & Oberhofer, H. Toward first-principles-level polarization energies in force fields: a Gaussian basis for the atom-condensed Kohn–Sham method. J. Chem. Theory Comput. 15, 4516–4525 (2019).

Mahoney, M. W. & Drineas, P. CUR matrix decompositions for improved data analysis. Proc. Natl Acad. Sci. 106, 697–702 (2009).

Rappe, A. K. & Goddard, W. A. III. Charge equilibration for molecular dynamics simulations. J. Phys. Chem. 95, 3358–3363 (1991).

Chen, J. & Martínez, T. J. Charge conservation in electronegativity equalization and its implications for the electrostatic properties of fluctuating-charge models. J. Chem. Phys. 131, 044114 (2009).

Blum, V. et al. Ab initio molecular simulations with numeric atom-centered orbitals. Comput. Phys. Commun. 180, 2175–2196 (2009).

Adamo, C. & Barone, V. Toward reliable density functional methods without adjustable parameters: The PBE0 model. J. Chem. Phys. 110, 6158–6170 (1999).

Bartók, A. P., Kondor, R. & Csányi, G. On representing chemical environments. Phys. Rev. B 87, 184115 (2013).

Csányi, G. et al. Expressive programming for computational physics in Fortran 95+, 1–24 (Newsletter of the Computational Physics Group, 2007).

Kermode, J. R. f90wrap: an automated tool for constructing deep Python interfaces to modern Fortran codes. J. Phys. Condens. Matter 32, 305901 (2020).

Larsen, A. H. et al. The atomic simulation environment-a Python library for working with atoms. J. Phys. Condens. Matter 29, 273002 (2017).

Bahn, S. & Jacobsen, K. An object-oriented scripting interface to a legacy electronic structure code. Comput. Sci. Eng. 4, 56–66 (2002).

Acknowledgements

This work used computational resources from the Max Planck Computing and Data Facility (MPCDF). This work was supported by the Max Planck Society.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Contributions

M.V. and J.T.M. wrote the main manuscript. All authors conceived of the project and edited the text. M.V. produced the results and prepared the figures with input from all authors.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vondrák, M., Reuter, K. & Margraf, J.T. Pushing charge equilibration-based machine learning potentials to their limits. npj Comput Mater 11, 288 (2025). https://doi.org/10.1038/s41524-025-01791-3
