Abstract

Ensuring data privacy in machine learning models is critical, especially in distributed settings where model gradients are shared among multiple parties for collaborative learning. Motivated by the increasing success of recovering input data from the gradients of classical models, this study investigates the analogous challenge for variational quantum circuits (VQC) as quantum machine learning models. We highlight the crucial role of the dynamical Lie algebra (DLA) in determining privacy vulnerabilities. While the DLA has been linked to the trainability and simulatability of VQC models, we establish its connection to privacy for the first time. We show that properties conducive to VQC trainability, such as a polynomial-sized DLA, also facilitate extracting detailed snapshots of the input, posing a weak privacy breach. We further investigate conditions for a strong privacy breach, where original input data can be recovered from snapshots by classical or quantum-assisted methods. We establish properties of the encoding map, such as classical simulatability, overlap with DLA basis, and its Fourier frequency characteristics that enable such a privacy breach of VQC models. Our framework thus guides the design of quantum machine learning models, balancing trainability and robust privacy protection.

Similar content being viewed by others

Introduction

In the contemporary technological landscape, data privacy concerns command increasing attention, particularly within the domain of machine learning (ML) models that are trained on sensitive datasets. Privacy concerns are widespread in many different applications, including financial records1,2, healthcare information3,4,5, and location data6, each providing unique considerations. Furthermore, the multi-national adoption of stringent legal frameworks7 has further amplified the urgency to improve data privacy.

The introduction of distributed learning frameworks, such as federated learning8,9,10, not only promises increased computational efficiency but also demonstrates the potential for increased privacy in ML tasks. In federated learning, each user trains a machine learning model, typically a neural network, locally on their device using their confidential data, meaning that they only need to send their model gradients to the central server, which aggregates gradients of all users to calculate the model parameters for the next training step. As the user does not send their confidential data, but rather their training gradients, this was proposed as the first solution to enable collaborative learning while preventing data leakage. However, subsequent works have shown that neural networks are particularly susceptible to gradient inversion-based attacks to recover the original input data11,12,13,14,15. To mitigate the above issue, classical techniques have been proposed to enhance the privacy of distributed learning models, ranging from gradient encryption-based methods16, the addition of artificial noise in the gradients to leverage differential-privacy type techniques10, or strategies involving the use of batch training to perform gradient mixing17. These techniques, although mitigative in nature, are not fully robust since they either still leak some input information, add substantial computational overhead while training the model in the distributed setting, or result in reduced performance of the model.

A natural question that follows is whether quantum machine learning can help mitigate the privacy concerns that their classical counterparts exhibit. Specifically, one is interested in exploring the fundamental question underpinning the privacy of quantum models: Given the gradients of a quantum machine learning model, how difficult is it to reconstruct the original classical data inputs? In search of privacy guarantees with quantum techniques, several quantum distributed learning proposals have been previously introduced18,19,20,21,22,23,24,25,26. Within the field of quantum differential privacy, quantum noise27 and randomized encoding28 have been reported to have a beneficial effect. Previous methods for improving privacy in a federated learning context have ranged from the use of blind quantum computing29, high-frequency encoding circuits30, and hybrid quantum-classical methods that combine pre-trained classical models with quantum neural networks31. In particular, the work of30 considered variational quantum circuits (VQC) as quantum machine learning models and suggested that highly expressive product encoding maps along with an overparameterized hardware efficient ansatz (HEA) would necessitate an exponential amount of resources (in terms of the number of qubits n) for an attacker to learn the input from the gradients. Their work, although the first and sole one to date to theoretically analyze the privacy of a specific VQC model architecture, has certain key drawbacks. The first is that overparameterization of a HEA leads to an untrainable model, since it mixes very quickly to a 2-design32 and thus leads to a barren plateau phenomenon33. The authors enforced the requirement of overparameterization to ensure that there are no spurious local minima in the optimization landscape and that all local minima are exponentially concentrated toward global minima34. However, this requires the HEA to have an exponential depth and thus an exponential number of parameters, which precludes efficient training due to an exponential memory requirement to store and update the parameters. Secondly, the difficulty of inverting gradients to recover data primarily stems from the high expressivity, characterized in this case by an exponentially large number of non-degenerate frequencies of the generator Hamiltonian of the encoding map. Introducing high-frequency terms in the encoding map may not be an exclusive quantum effect, as classical machine learning models could also be enhanced by initially loading the data with these high-frequency feature maps35.

While previous studies have aimed to highlight the benefits of employing VQC models in safeguarding input privacy, none have convincingly addressed what sets VQC models apart from classical neural networks in their potential to provide robust privacy guarantees. A critical aspect missing in a comprehensive examination of the privacy benefits offered by VQC models in a privacy framework tailored for them. Such a framework should avoid dependence on specific privacy-enhancing procedures or architectures and instead focus on exploring the fundamental properties of VQC models that result in input privacy.

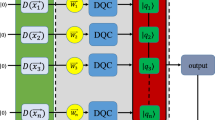

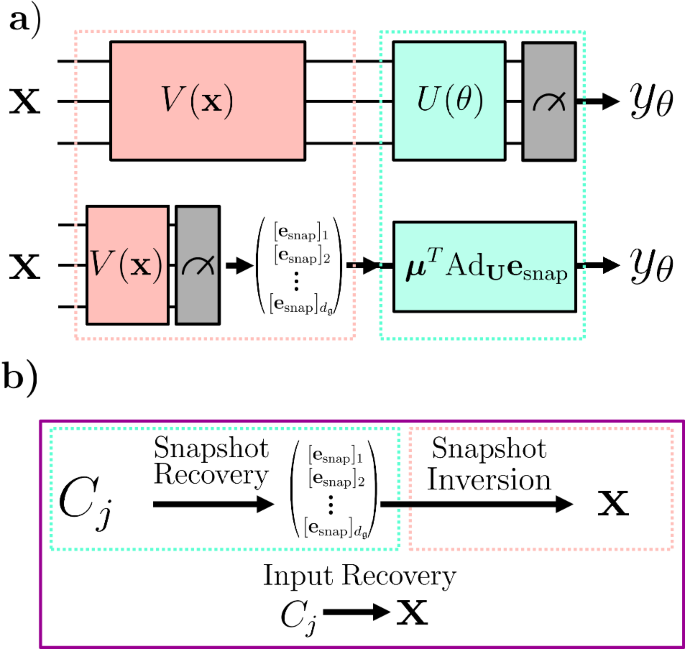

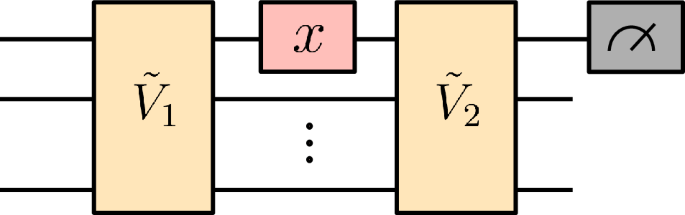

To address the above concerns, we introduce a framework designed to assess the possibility of retrieving classical inputs from the gradients observed in VQC models. We consider VQCs that satisfy the Lie algebra supported ansatz (LASA) property, which has been key in establishing connections with the trainability and classical simulatability of VQCs36,37,38. Our study systematically differentiates the separate prerequisites for input reconstruction across both the variational ansatz and encoding map architectures of these VQC models as summarized in Table 1. Our first result concerns the properties of the variational ansatz and the measurement operator of the VQC. Specifically, we show that when the VQC satisfies the LASA condition, i.e., when the measurement operator is within the dynamical Lie algebra (DLA) of the ansatz, and when the DLA scales polynomially with the number of qubits, it is possible to efficiently extract meaningful snapshots of the input, enabling training and evaluation of VQC models for other learning tasks without having direct access to the original input. We call this the weak privacy breach of the model. Further, we investigate conditions for strong privacy breach, i.e., recoverability of the original input by classical or quantum-assisted polynomial time methods. Fully reconstructing the input data from these snapshots to perform a strong privacy breach presents a further challenge, which we show is dependent on properties of the encoding map, such as the hardness of classically simulating the encoding, the overlap of the DLA basis with encoding circuit generators, and its Fourier frequency characteristics. The two types of privacy breach we introduce are summarized in Fig. 1, while more specific definitions regarding snapshots, recoverability, and invertibility are provided in the input recoverability definitions section.

Weak privacy breach corresponds to attacks where snapshots of the data are retrieved. These can be used as inputs to other models, without explicitly needing the exact data, allowing one to potentially learn characteristics of the data. If these snapshots can then be further inverted to retrieve the input data x explicitly, we say the attack has succeeded in a strong privacy breach.

This investigation presents a comprehensive picture of strategies to extract the key properties of VQCs to provide robust privacy guarantees while ensuring that they are still trainable. We structure our paper in the following manner. Supplementary file Sec I provides the notation used in this work. The results section starts by providing a general framework for studying privacy with VQC. This includes describing the VQC framework, providing Lie theoretic definitions required for this work, and the privacy definitions in terms of input recoverability. The results section then continues with the snapshot recovery and snapshot invertibility subsections that provide a detailed analysis of the snapshot recoverability from the gradients, and snapshot inversion to recover the input, respectively. The method section establishes the connections between privacy and the well-studied trainability of VQCs, and then consequently highlights the future directions of enabling robust privacy with quantum machine learning models.

Results

General Framework

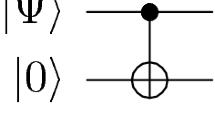

Variational quantum circuits for machine learning

A variational quantum circuit (VQC) is described in the following manner. We consider the d-dimensional input vector \({\bf{x}}\in {\mathcal{X}}\subset {{\mathbb{R}}}^{d}\), which is loaded into the quantum encoding circuit V(x) of n qubits to produce a feature map with the input state mapping,

This operation loads the input vector of dimension d to a Hilbert space \({\mathcal{H}}={({{\mathbb{C}}}^{2})}^{\otimes n}\) of dimension dim(ρ(x)) = 2n. We will explicitly consider the scenario where n = Θ(d), which is a common setting in most existing VQC algorithms, and hence the number of qubits in a given algorithm will be of the same order as the input vector dimension d. The state ρ(x) is then passed through a variational circuit ansatz U(θ) defined as

which is parameterized by a vector of variational parameters θ = [θ1, ⋯ , θD], where D is the total number of variational parameters. Here {H1, ⋯ , HN} are the set of N Hermitian generators of the circuit U. The generator assignment map ν: [D] → [N] is used to assign one the generator Hν(k) to the corresponding variational parameter θk. Under this notation, multiple distinct variational parameters can use the same generator. This is the case for repeated layers of a variational ansatz, where for L repeated layers, one would have D = NL and ν(k) = ((k − 1)mod N) + 1. We note that the above structure is quite general since some common ansatz structures such as the hardware efficient ansatz, the quantum alternating operator ansatz, and Hamiltonian variational ansatz, among others, are all encapsulated in this framework as highlighted in ref. 39.

The parameterized state ρ(x) is passed through a variational circuit denoted by U(θ), followed by the measurement of some observable \({\bf{O}}\in {\mathcal{H}}\). For a given θ, the output of the variational quantum circuit model is expressed as the expectation value of O with the parameterized state,

For the task of optimizing the variational quantum circuits, the model output is fed into the desired cost function Cost(θ, x), which is subsequently minimized to obtain,

where θ* are the final parameter values after optimization. Typical examples of cost functions include cross-entropy loss, and mean-squared error loss, among others40.

The typical optimization procedure involves computing the gradient of the cost function with respect to the parameters θ, which in turn, involves computing the gradient with respect to the model output yθ(x)

Going forward, we will directly deal with the recoverability of input x given Cj, instead of working with specific cost functions. Details of how to reconstruct our results when considering gradients with respect to specific cost functions are covered in the Supplementary file Sec II.

Lie theoretic framework

We review some introductory as well as recent results on Lie theoretic framework for variational quantum circuits which are relevant to our work. For a more detailed review of this topic, we refer the reader to39,41. We provide the Lie theoretic definitions for a periodic ansatz of the form Eq. (2).

Definition 1

(Dynamical Lie Algebra). The dynamical Lie algebra (DLA) \({\mathfrak{g}}\) for an ansatz U(θ) of the form Eq. (2) is defined as the real span of the Lie closure of the generators of U

where the closure is defined under taking all possible nested commutators of S = {iH1, ⋯ , iHN}. In other words, it is the set of elements obtained by taking the commutation between elements of S until no further linearly independent elements are obtained.

Definition 2

(Dynamical Lie Group). The dynamical Lie group \({\mathcal{G}}\) for an ansatz U(θ) of the form of Eq. (2) is determined by the DLA \({\mathfrak{g}}\) such that,

where \({e}^{{\mathfrak{g}}}:= \{{e}^{i{\bf{H}}},i{\bf{H}}\in {\mathfrak{g}}\}\) and is a subgroup of SU(2n). For generators in \({\mathfrak{g}}\), the set of all U(θ) of the form Eq (2) generates a dense subgroup of \({\mathcal{G}}\).

Definition 3

(Adjoint representation). The Lie algebra adjoint representation is the following linear action: \(\forall {\bf{K}},{\bf{H}}\in {\mathfrak{g}}\),

and the Lie group adjoint representation is the following linear action \(\forall {\bf{U}}\in {\mathcal{G}},\forall {\bf{H}}\in {\mathfrak{g}}\),

Definition 4

(DLA basis). The basis of the DLA is denoted as \({\{i{{\bf{B}}}_{\alpha }\}}_{\alpha }\), \(\alpha \in \{1,\cdots \,,\,\text{dim}\,({\mathfrak{g}})\}\), where Bα are Hermitian operators and form an orthonormal basis of \({\mathfrak{g}}\) with respect to the Frobenius inner product.

Any observable O is said to be entirely supported by the DLA whenever \(i{\bf{O}}\in {\mathfrak{g}}\), or in other words

where μα is the coefficient of support of O in the basis Bα.

Definition 5

(Lie Algebra Supported Ansatz36). A Lie Algebra Supported Ansatz (LASA) is a periodic ansatz of the form Eq. (2) of a VQC where the measurement operator O is completely supported by the DLA \({\mathfrak{g}}\) associated with the generators of U(θ), that is,

In addition to its connections to the trainability of a VQC, this condition also implies that \(\forall {\boldsymbol{\theta }},{U}^{\dagger }({\boldsymbol{\theta }})i{\boldsymbol{O}}U({\boldsymbol{\theta }})\in {\mathfrak{g}}\), which enables us to express the evolution of the observable O in terms of elements of \({\mathfrak{g}}\). This is key to some simulation algorithms that are possible for polynomial-sized DLAs37,38.

Input recoverability definitions

In this section, we provide meaningful definitions of what it means to recover the classical input data given access to the gradients \({\{{C}_{j}\}}_{j = 1}^{D}\) of a VQC. Notably, our definitions are motivated in a manner that allows us to consider the encoding and variational portions of a quantum variational model separately.

A useful concept in machine learning is the creation of data snapshots. These snapshots are compact and efficient representations of the input data’s feature map encoding. Essentially, a snapshot retains enough information to substitute for the full feature map encoded data, enabling the training of a machine learning model for a distinct task with the same data but without the need to explicitly know the input data was passed through the feature map. For example, in methods such as \({\mathfrak{g}}\)-sim38, these snapshots are used as input vectors for classical simulators. The simulator can then process these vectors efficiently under certain conditions, recreating the operation of a variational quantum circuit.

It will become useful to classify the process of input data x recovery into two stages; the first concerns recovering snapshots of the quantum state ρ(x) (Eq (1)) from the gradients, which involves only considering the variational part of the circuit.

Definition 6

(Snapshot Recovery). Given the gradients Cj, j ∈ [D] as defined in Eq (5) as well as the parameters θ = [θ1, ⋯ , θD], we consider a VQC to be snapshot recoverable if there exists an efficient \({\mathcal{O}}(poly(d,\frac{1}{\epsilon }))\) classical polynomial time algorithm to recover the vector esnap such that,

for some {Bα} forming a Frobenius-orthonormal basis of the DLA \({\mathfrak{g}}\) corresponding to U(θ) in Eq. (2), and the above holds for any ϵ > 0. We call esnap the snapshot of x.

In other words, esnap is the orthogonal projection of the input state ρ(x) onto the DLA of the ansatz, and thus the elements of esnap are the only components of the input state that contribute to the generation of the model output yθ(x) as defined in Eq. (3). Here, we constitute the retrieval of the snapshot esnap of a quantum state ρ(x) as weak privacy breach, since the snapshot could be used to train the VQC model for other learning tasks involving the same data {x} but without the need to use the actual data. As an example, consider an adversary that has access to the snapshots corresponding to the data of certain customers. Their task is to train the VQC to learn the distinct behavioral patterns of the customers. It becomes apparent that the adversary can easily carry out this task without ever needing the original data input since the entire contribution of the input x in the VQC output decision-making yθ(x) is captured by esnap.

Next, we consider the stronger notion of privacy breach in which the input data x must be fully reconstructed. Assuming that the snapshot has been recovered, the second step we therefore consider is inverting the recovered snapshot esnap to find the original data x, a process that is primarily dependent on the encoding part of the circuit. Within our snapshot inversion definition, we consider two cases that enable different solution strategies: snapshot inversion utilizing purely classical methods and snapshot inversion methods that can utilize quantum samples.

Definition 7

(Classically Snapshot Invertible Model). Given the snapshot esnap as the expectation values of the input state ρ(x), we say that VQC admits classical snapshot invertibility if there exists an efficient \({\mathcal{O}}(\,\text{poly}\,(d,\frac{1}{\epsilon }))\) polynomial time classical randomized algorithm to recover

with probability at least \(p=\frac{2}{3}\), for any user defined ϵ > 0.

Definition 8

(Quantum Assisted Snapshot inversion). Given the snapshot esnap as the expectation values of the input state ρ(x), and the ability to query \(\,\text{poly}\,(d,\frac{1}{\epsilon })\) number of samples from the encoding circuit V to generate snapshots \({{\bf{e}}}_{{\rm{snap}}}^{{\prime} }\) for any given input \({{\bf{x}}}^{{\prime} }\), we say that VQC admits quantum-assisted snapshot invertibility, if there exists an efficient \({\mathcal{O}}(\,\text{poly}\,(d,\frac{1}{\epsilon }))\) polynomial time classical randomized algorithm to recover

with probability at least \(p=\frac{2}{3}\), for any user defined ϵ > 0.

In this work, we specifically focus on input recoverability by considering the conditions under which VQC would admit snapshot recovery followed by snapshot invertibility. Considering these two steps individually allows us to delineate the exact mechanisms that contribute to the overall recovery of the input.

It is important to mention that it may potentially only be possible to recover the inputs of a VQC up to some periodicity, such that there only exists a classical polynomial time algorithm to recover \(\tilde{{\bf{x}}}={\bf{x}}+{\bf{k}}\pi\) up to ϵ-closeness, where \({\bf{k}}\in {\mathbb{Z}}\). As the encodings generated by quantum feature maps inherently contain trigonometric terms, in the most general case it may therefore only be possible to recover x up to some periodicity. However, this can be relaxed if the quantum feature map is assumed to be injective.

Figure 2 shows a diagram that highlights the Lie algebraic simulation method38 along with specifications of the input recovery framework as defined in this work.

a Visualization of the difference between the circuit implementation of a variational quantum model and a Lie algebraic simulation procedure of the same model38. In the Lie algebraic Simulation framework38, input data x is encoded into a quantum circuit using V(x), however, the measurements are then performed on this encoded state and used to form a vector of snapshot expectation values. This vector of snapshot expectation values can then be passed as inputs to a classical simulator that uses the adjoint form of U(θ), which can be performed with resources scaling with the dimension of the DLA formed by the generators of U(θ). b In this work, we assess the ability to recover an input x from gradients Cj. This can be broken into two parts: Firstly, the snapshot esnap must be recovered from the gradients Cj, which corresponds to reversing the Lie algebraic simulation step. Secondly, the recovered snapshot esnap must be inverted to find the original data x, which requires finding the values of x that when input into V(x) will give the same snapshot values esnap. If both snapshot recovery and snapshot inversion can be performed, then it admits efficient input recovery.

Snapshot recovery

This section addresses the weak privacy notion of recovering the snapshots of the input as introduced in Def 6. As the name implies, the goal here is to recover the vector esnap for some Schmidt orthonormal basis \({\{{{\bf{B}}}_{\alpha }\}}_{\alpha \in \text{dim}({\mathfrak{g}})}\) of the DLA corresponding to the VQC ansatz U(θ), given that the attacker is provided the following information,

-

1.

D gradient information updates \({C}_{j}=\frac{\partial {y}_{{\boldsymbol{\theta }}}({\bf{x}})}{\partial {\theta }_{j}},j\in [D]\) as defined in Eq. (5).

-

2.

Ansatz architecture U(θ) presented as an ordered sequence of Hermitian generators \({\{{\theta }_{k},{{\bf{H}}}_{\nu (k)}\}}_{k = 1}^{D}\), where Hν(k) is expressed as a polynomial (in the number of qubits) linear combination of Pauli strings.

-

3.

Measurement operator O, which satisfies the LASA condition according to Def 5 and is expressed as a polynomial (in the number of qubits) linear combination of Pauli strings

Recovering these snapshots will enable an attacker to train the VQC model for other learning tasks that effectively extract the same information from the input states ρ(x) but without the need to use the actual data. The main component of the snapshot recoverability algorithm makes use of the \({\mathfrak{g}}\)-sim37,38 framework, which we briefly review in the following subsection while also clarifying some previously implicit assumptions, to construct a system of linear equations that can be solved to recover esnap as detailed in Algorithm 2.

Review of Lie-algebraic simulation framework

We start by reviewing the \({\mathfrak{g}}\)-sim framework37,38 for classically computing the cost function and gradients of VQCs, when the observable lies in the DLA of the chosen ansatz. Specifically, this framework evolves the expectation values of observables via the adjoint representation. However, a necessary condition for this procedure to be efficient is that the dimension of the DLA (\(\,\text{dim}\,({\mathfrak{g}})\)) is only polynomially growing in the number of qubits.

The first step of \({\mathfrak{g}}\)-sim consists of building an orthonormal basis for the DLA \({\mathfrak{g}}\) given \({(\{{\theta }_{k},{{\bf{H}}}_{\nu (k)}\})}_{k = 1}^{D}\). Algorithm 1 presents a well-known procedure to do this. The procedure simply computes pairwise commutators until no new linearly independent elements are found. Given that all operators are expressed in the Pauli basis, the required orthogonal projectors and norm computations performed by Algorithm 1 can be performed efficiently. If the dimension of DLA is \({\mathcal{O}}(\,\text{poly}\,(n))\), then the iteration complexity, i.e., the number of sets of commutators that we compute, of this procedure is polynomial in n. However, an important caveat is that potentially the elements forming our estimation for the DLA basis could have exponential support on the Pauli basis, which is a result of computing new pairwise commutators at each iteration. Thus, for this overall procedure to be efficient, we effectively require that the nested commutators of the generators Hk do not have exponential support on the Pauli basis.

Definition 9

(Slow Pauli Expansion). A set of Hermitian generators {H1, …, HN} on n-qubits expressed as linear combinations of \({\mathcal{O}}(\,\text{poly}\,(\dim ({\mathfrak{g}})))\) Pauli strings satisfies the slow Pauli expansion condition if ∀ r ∈ [N], [Hr, [ ⋯ , [H2, H1]]] can be expressed as a linear combination of \({\mathcal{O}}(\,\text{poly}\,(\dim ({\mathfrak{g}})))\) Pauli strings.

In general, it is unclear how strong of an assumption this is, which means that the attacks that we present may not be practical for all VQCs that satisfy the polynomial DLA condition, and thus privacy preservation may still be possible. Also, it does not seem to be possible to apply the \({\mathfrak{g}}\)-sim framework without the slow Pauli expansion condition. Lastly, a trivial example of a set of Hermitian generators that satisfies the slow Pauli expansion is those for the quantum compound ansatz discussed in ref. 36.

Algorithm 1

Finding DLA basis

Require: Hermitian circuit generators {H1, …, HN}, all elements are linear combinations of polynomially-many Pauli strings

Ensure: \({{\mathcal{A}}}^{{\prime\prime} {\prime} }=\{{{\bf{B}}}_{1},\ldots ,{{\bf{B}}}_{\dim ({\mathfrak{g}})}\}\) as the basis for the DLA \({\mathfrak{g}}\)

1. Let \({\mathcal{A}}=\{{H}_{1},\ldots ,{H}_{N}\}\), with all elements represented in the Pauli basis.

2. Repeat until breaks

(a) Compute pairwise commutators of elements of \({\mathcal{A}}\) into \({{\mathcal{A}}}^{{\prime} }\)

(b) Orthogonally project \({{\mathcal{A}}}^{{\prime} }\) onto the orthogonal complement of \({\mathcal{A}}\) in \({\mathfrak{g}}\)

(c) Set new \({{\mathcal{A}}}^{{\prime\prime} }\) to be \({\mathcal{A}}\) plus new orthogonal elements. If no new elements, break.

3. Perform Gram–Schmidt on \({\mathcal{A}}\) forming \({{\mathcal{A}}}^{{\prime\prime} {\prime} }\).

4. Return \({{\mathcal{A}}}^{{\prime\prime} {\prime} }\).

Given the orthonormal basis Bα for \({\mathfrak{g}}\), under the LASA condition, we can express \({\bf{O}}={\sum }_{\alpha \in [\text{dim}({\mathfrak{g}})]}{\mu }_{\alpha }{{\bf{B}}}_{\alpha }\), and hence we can write the output as

In addition, given the form of U, we can express AdU as,

We can also compute the structure constants for our basis Bα, which is the collection of \(\dim ({\mathfrak{g}})\times \dim ({\mathfrak{g}})\) matrices for the operators \({\text{ad}}_{i{{\bf{B}}}_{\alpha }}\). As a result of linearity, we also have the matrix for each adiH for \({\bf{H}}\in {\mathfrak{g}}\) in the basis Bα. Then, by performing matrix exponentiation and multiplying \(\dim ({\mathfrak{g}})\times \dim ({\mathfrak{g}})\) we can compute the matrix for AdU.

Using the above, the model output may be written,

where esnap is a vector of expectation values of the initial state, i.e., \({[{{\bf{e}}}_{{\rm{snap}}}]}_{\beta }=\,\text{Tr}\,[{{\bf{B}}}_{\beta }\rho ({\bf{x}})].\)

Similar to the cost function, the circuit gradient can also be computed via \({\mathfrak{g}}\)-sim. Let,

where the adjoint term differentiated with respect to θj can be written as,

The components of χ(j) can be expressed as,

allowing Cj terms to be represented in a simplified manner as

The key feature of this setup is that the matrices and vectors involved have dimension \(\,\text{dim}\,({\mathfrak{g}})\), therefore for a polynomial-sized DLA, the simulation time will scale polynomially and model outputs can be calculated in polynomial time38. Specifically, the matrices for each \({\text{ad}}_{i{{\bf{H}}}_{k}}\) in the basis {Bl} and AdU are polynomial in this case.

This Lie-algebraic simulation technique was introduced in order to show efficient methods of simulating LASA circuits with polynomially sized DLA. In this work, we utilize the framework in order to investigate the snapshot recovery of variational quantum algorithms. Based on the above discussion, the proof of the following theorem is self-evident.

Theorem 1

(Complexity of \({\mathfrak{g}}\)-sim). If ansatz family U(θ) with an observable O satisfies both the LASA condition and Slow Pauli Expansion, then the cost function and its gradients can be simulated with complexity \({\mathcal{O}}(\,\text{poly}\,(\dim ({\mathfrak{g}})))\) using a procedure that at most queries a quantum device a polynomial number of times to compute the \(\dim ({\mathfrak{g}})\)-dimensional snapshot vector esnap.

Snapshot recovery algorithm

Algorithm 2

Snapshot Recovery

Require: Observable O such that \(i{\bf{O}}\in {\mathfrak{g}}\), generators \({\{{{\bf{H}}}_{\nu (k)}\}}_{k = 1}^{D}\), ordered sequence \({(\{{\theta }_{k},{{\bf{H}}}_{\nu (k)}\})}_{k = 1}^{D}\), and gradients \({C}_{j}=\frac{\partial {y}_{{\boldsymbol{\theta }}}({\bf{x}})}{\partial {\theta }_{j}},j\in [D]\) for some unknown classical input x.

Ensure: Snapshot esnap for x

1. Run Algorithm 1 to obtain an orthonormal basis for the DLA \({\{{{\bf{B}}}_{\beta }\}}_{\beta \in [\text{dim}({\mathfrak{g}})]}\)

2. For \(\beta \in [\,\text{dim}\,({\mathfrak{g}})]\), compute the \(\,\text{dim}\,({\mathfrak{g}})\times \,\text{dim}\,({\mathfrak{g}})\) matrix \({\text{ad}}_{i{{\bf{B}}}_{\beta }}\)

3. For k ∈ [D], compute the coefficients of Hν(k) in the basis \({\{{{\bf{B}}}_{\beta }\}}_{\beta \in [\text{dim}({\mathfrak{g}})]}\), which gives us \({\text{ad}}_{i{{\bf{H}}}_{\nu (k)}}\)

4. For k ∈ [D], compute the \(\,\text{dim}\,({\mathfrak{g}})\times \,\text{dim}\,({\mathfrak{g}})\) matrix exponential \({e}^{{\theta }_{k}\text{ad}i{{\bf{H}}}_{\nu (k)}}\)

5. For j ∈ [D] compute the \(\,\text{dim}\,({\mathfrak{g}})\times \,\text{dim}\,({\mathfrak{g}})\) matrix

6. For \(\beta \in [\,\text{dim}\,({\mathfrak{g}})]\), compute the coefficients μβ of O in the basis \({\{{{\bf{B}}}_{\beta }\}}_{\beta \in [\text{dim}({\mathfrak{g}})]}\)

7. For \(j\in [D],\beta \in [\,\text{dim}\,({\mathfrak{g}})]\), compute

and construct \(D\times \,\text{dim}\,({\mathfrak{g}})\) matrix A with \({{\bf{A}}}_{rs}={\chi }_{s}^{(r)}\).

8. Solve the following linear system,

and return y as the snapshot esnap.

With the framework for the \({\mathfrak{g}}\)-sim38 established, we focus on how snapshots esnap of the input data can be recovered using the VQC model gradients Cj, with the process detailed in Algorithm 2. In particular, the form of Eq (21) allows a set-up leading to the recovery the snapshot vector esnap from the gradients \({\{{C}_{j}\}}_{j = 1}^{D}\), but requires the ability to solve the system of D linear equations given by {Cj} with \(\,\text{dim}\,({\mathfrak{g}})\) unknowns \({[{{\bf{e}}}_{{\rm{snap}}}]}_{\beta \in \text{dim}({\mathfrak{g}})}\). The following theorem formalizes the complexity of recovering the snapshots from the gradients.

Theorem 2

(Snapshot Recovery). Given the requirements specified in Algorithm 2, along with the assumption that the number of variational parameters \(D\ge \,\text{dim}\,({\mathfrak{g}})\), where \(\,\text{dim}\,({\mathfrak{g}})\) is the dimension of the DLA \({\mathfrak{g}}\), the VQC model admits snapshot esnap recovery with complexity scaling as \({\mathcal{O}}(\,\text{poly}\,(\dim ({\mathfrak{g}})))\).

Proof

Firstly, we note that given the gradients Cj and parameters θj∈[D], the only unknowns are the components of the vector esnap of length \(\,\text{dim}\,({\mathfrak{g}})\). Therefore, it is necessary to have \(\,\text{dim}\,({\mathfrak{g}})\) equations in total; otherwise, the system of equations would be underdetermined, and it would be impossible to find a unique solution. The number of equations depends on the number of gradients and, therefore, the number of variational parameters in the model; hence, the requirement that \(D\ge \,\text{dim}\,({\mathfrak{g}})\).

Assuming now that we deal with the case where there are \(D\ge \,\text{dim}\,({\mathfrak{g}})\) variational parameters of the VQC model, we can therefore arrive at a determined system of equations. The resulting system of simultaneous equations can be written in a matrix form as,

In order to solve the system of equations highlighted in Eq. (25) to obtain esnap, we first need to compute the coefficients \({\{{\chi }_{\beta }^{(j)}\}}_{j\in [D],\beta \in [\text{dim}({\mathfrak{g}})]}\). This can be done by the \({\mathfrak{g}}\)-sim procedure highlighted in the previous section and in steps 1-7 in Algorithm 2 with complexity \({\mathcal{O}}(\,\text{poly}(\text{dim}\,({\mathfrak{g}})))\). The next step is to solve the system of equations, i.e., step 8 of Algorithm 2, which can solved using Gaussian elimination procedure incurring a complexity \({\mathcal{O}}(\,\text{dim}\,{({\mathfrak{g}})}^{3})\)42. Thus, the overall complexity of recovering the snapshots from the gradients is \({\mathcal{O}}(\,\text{poly}(\text{dim}\,({\mathfrak{g}})))\). This completes the proof.

In the case that the dimension of DLA is exponentially large \(\,\text{dim}\,({\mathfrak{g}})={\mathcal{O}}(\exp (n))\), then performing snapshot recovery by solving the system of equations would require an exponential number of gradients and thus an exponential number of total trainable parameters \(D={\mathcal{O}}(\exp (n))\). However, this would require storing an exponential amount of classical data, as even the variational parameter array θ would contain \({\mathcal{O}}(\exp (n))\) many elements, and hence this model would already breach the privacy definition, which only allows for a polynomial (in n = Θ(d)) time attacker. In addition, the complexity of obtaining the coefficients \({\chi }_{\beta }^{(j)}\) and subsequently solving the system of linear equations would also incur an exponential cost in n. Hence, for the system of simultaneous equations to be determined, it is required that \(\,\text{dim}\,({\mathfrak{g}})={\mathcal{O}}(\,\text{poly}\,(n))\). Under the above requirement, it will also be possible to solve the system of equations in Eq. (25) in polynomial time and retrieve the snapshot vector esnap. Hence, a model is snapshot recoverable if the dimension of the DLA scales polynomially in d.

Snapshot invertibility

We have shown that in the case that the DLA dimension of the VQC is polynomial in the number of qubits n and the slow Pauli expansion condition (Def 9) is satisfied, then it is possible to reverse engineer the snapshot vector esnap from the gradients. As a result, this breaks the weak-privacy criterion. The next step in terms of privacy analysis is to see if a strong privacy breach can also occur. This is true when it is possible to recover the original data x that was used in the encoding step to generate the state ρ(x); the expectation values of this state with respect to the DLA basis elements form the snapshot esnap. Hence, even if the DLA is polynomial and snapshot recovery allows the discovery of esnap, there is still the possibility of achieving some input privacy if esnap cannot be efficiently inverted to find x. The overall privacy of the VQC model, therefore, depends on both the data encoding and the variational ansatz.

One common condition that is necessary for our approaches to snapshot inversion is the ability to compute the expectation values \(\,\text{Tr}\,(\rho ({{\bf{x}}}^{{\prime} }){{\bf{B}}}_{k}),\forall k\in [\,\text{dim}\,({\mathfrak{g}})]\) for some guess input \({{\bf{x}}}^{{\prime} }\). This is the main condition that distinguishes between completely classical snapshot inversion and quantum-assisted snapshot inversion. It is well-known that computing expectation values of specific observables is a weaker condition than ρ(x) being classically simulatable43. Hence, it may be possible to classically perform snapshot inversion even if the state ρ(x) overall is hard to classically simulate. In the quantum-assisted case, it is always possible to calculate \(\,\text{Tr}\,\left.\right(\rho ({{\bf{x}}}^{{\prime} }{{\bf{B}}}_{k})\) values by taking appropriate measurements of the encoding circuit \(V({{\bf{x}}}^{{\prime} })\).

In the first subsection, we present inversion attacks that apply to commonly used feature maps and explicitly make use of knowledge about the locality of the encoding circuit. The common theme among these feature maps is that by restricting to only a subset of the inputs, it is possible to express the ρ(x) or expectations thereof in a simpler way. The second subsection focuses on arbitrary encoding schemes by viewing the problem as black-box optimization. In general, snapshot inversion can be challenging or intractable even if the snapshots can be efficiently recovered and/or the feature map can be classically simulated. Our focus will be on presenting sufficient conditions for performing snapshot inversion, which leads to suggestions for increasing privacy.

Snapshot inversion for local encodings

For efficiency reasons, it is common to encode components of the input vector x in local quantum gates, typically just single-qubit rotations. The majority of the circuit complexity is usually either put into the variational part or via non-parameterized entangling gates in the feature map. In this section, we demonstrate attacks to recover components of x, up to periodicity, given snapshot vectors when the feature map encodes each xj locally. More specifically, we put bounds on the allowed amount of interaction between qubits that are used to encode each xj. In addition, we also require that the number of times the feature map can encode a single xj be sufficiently small. While the conditions will appear strict, we note that they are satisfied for some commonly used encodings, e.g., the Pauli product feature map or Fourier tower map30, which was previously used in a VQC model that demonstrated resilience to input recovery.

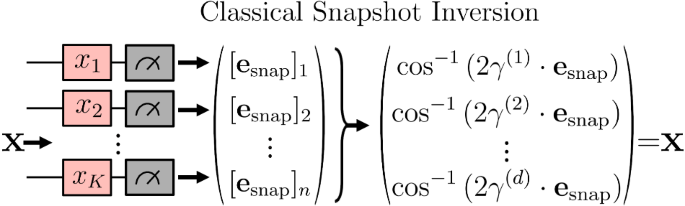

For the Pauli product encoding, we show that a completely classical snapshot inversion attack is possible. An example of a Pauli product encoding is the following:

where \({R}_{{\mathsf{X}}}\) is the parameterized Pauli \({\mathsf{X}}\) rotation gate. The Fourier tower map is similar to Eq. (26) but utilizes a parallel data reuploading scheme, i.e.,

where n = dm, with m being the number of qubits used to encode a single dimension of the input.

1. Pauli Product Encoding: The first attack that we present will specifically target Eq. (26). However, the attack does apply to the Fourier tower map as well. More generally, the procedure applies to any parallel data reuploading schemes of the form:

We explicitly utilize Pauli \({\mathsf{X}}\) rotations, but a similar result holds for \({\mathsf{Y}}\) or \({\mathsf{Z}}\). For a Pauli operator \({\mathsf{P}}\), let \({{\mathsf{P}}}_{j}:= i{{\mathbb{I}}}^{\otimes (j-1)}\otimes {\mathsf{P}}\otimes {{\mathbb{I}}}^{\otimes (n-j)}\).

Algorithm 3

Classical Snapshot Inversion for Pauli Product Encoding

Require: Snapshot vector esnap(x) of dimension \(\dim ({\mathfrak{g}})={\mathcal{O}}(\,\text{poly}\,(n))\) corresponding to a basis \({({{\bf{B}}}_{k})}_{k = 1}^{\dim ({\mathfrak{g}})}\) of DLA \({\mathfrak{g}}\). Each Bk is expressed as a linear combination of \({\mathcal{O}}(\,\text{poly}\,(n))\) Pauli strings. Snapshot inversion is being performed for a VQC model that utilizes a trainable portion of U(θ) with DLA \({\mathfrak{g}}\) and Pauli product encoding Eq. (26). Index j ∈ [d], ϵ < 1

Ensure: An ϵ estimate of the jth component xj of the data input \({\bf{x}}\in {{\mathbb{R}}}^{d}\) up to periodicity, or output FAILURE.

If \(i{{\mathsf{Z}}}_{j}\in {\mathfrak{g}}\) then

α ← 1, β ← 0

\({\bf{W}}\leftarrow {{\mathsf{Y}}}_{j}\)

else if \(i{{\mathsf{Y}}}_{j}\in {\mathfrak{g}}\) then

α ← 1, β ← 0

\({\bf{W}}\leftarrow {{\mathsf{Y}}}_{j}\)

else

1. Determine set of Pauli strings required to span elements \({(i{{\bf{B}}}_{k})}_{k = 1}^{\dim ({\mathfrak{g}})}\) and denote the set \({{\mathcal{P}}}_{{\mathfrak{g}}}\).

2. \({{\mathcal{P}}}_{{\mathfrak{g}}}\leftarrow {{\mathcal{P}}}_{{\mathfrak{g}}}\cup \{{{\mathsf{Z}}}_{j},{{\mathsf{Y}}}_{j}\}\), \(| {{\mathcal{P}}}_{{\mathfrak{g}}}| ={\mathcal{O}}(\,\text{poly}\,(n))\) by assumption. Reduce \({{\mathcal{P}}}_{{\mathfrak{g}}}\) to a basis.

3. Let C be a \(| {{\mathcal{P}}}_{{\mathfrak{g}}}| \times \dim ({\mathfrak{g}})\) matrix whose k-th column corresponds to the components of iBk in the basis \({{\mathcal{P}}}_{{\mathfrak{g}}}\).

4. Let A be a \(| {{\mathcal{P}}}_{{\mathfrak{g}}}| \times 2\) whose first column contains a 1 in the row corresponding to \({{\mathsf{Z}}}_{j}\) and whose second column contains a 1 in the row corresponding to \({{\mathsf{Y}}}_{j}\).

5. Perform a singular value decomposition on \({{\bf{A}}}^{{\mathsf{T}}}{\bf{C}}\), and there are at most two nonzero singular values r1, r2.

if r1 ≠ 1 and r2 ≠ 1 then

return FAILURE

else

1. W ← singular vector with singular value 1.

2. Expand iW in basis \((i{{\mathsf{Z}}}_{j},i{{\mathsf{Y}}}_{j})\) record components as α and β, respectively.

end if

end if

1. Expand iW in basis \({({{\bf{B}}}_{k})}_{k = 1}^{\dim ({\mathfrak{g}})}\), and record components as γk.

2. Compute

3. return \({\tilde{x}}_{j}\).

Theorem 3

Suppose that the polynomial DLA and slow Pauli expansion (Def 9) conditions are satisfied. Also, suppose that we are given a snapshot vector esnap(x) for a VQC with trainable portion U(θ) with DLA \({\mathfrak{g}}\) and Pauli product feature encoding (Eq. (26)) and the corresponding DLA basis elements \({({{\bf{B}}}_{k})}_{k = 1}^{\dim ({\mathfrak{g}})}\). The classical Algorithm 3 outputs an ϵ estimate of xj, up to periodicity, or outputs FAILURE, with time \({\mathcal{O}}(\,\text{poly}\,(n)\log (1/\epsilon ))\).

Proof

We provide the proof in the methods section.

For illustrative purposes, we show in Fig. 3 the snapshot inversion process for the special case where \(i{{\mathsf{Z}}}_{j}\in {\mathfrak{g}}\), i.e.,

for \(i{{\mathsf{Z}}}_{j}=\mathop{\sum }\nolimits_{k = 1}^{\dim ({\mathfrak{g}})}{\gamma }_{k}^{(j)}{{\bf{B}}}_{k}\). The general parallel data reuploading case can be handled by applying the procedure to only one of the rotations that encodes at xj at a time, checking to find one that does not cause the algorithm to return FAILURE.

A product map encoding, whereby each input variable xj is encoded into an individual qubit, and the snapshot used by the model corresponds to single-qubit measurements of the DLA basis elements. In this setting, the snapshot is trivial to invert and find the original data using the relation \({x}_{j}={\cos }^{-1}\left(2{{\mathbf{\gamma }}}^{(j)}\cdot {{\bf{e}}}_{{\rm{snap}}}\right)\).

2. General Pauli Encoding: We now present a more general procedure that applies to feature maps that use serial data reuploading and multi-qubit Paulis. However, we introduce a condition that ensures that each xj is locally encoded. More generally, we focus our discussion on encoding states that may be written as a tensor product of Ω subsystems, i.e., multipartite states.

where \(\dim ({{\mathsf{x}}}_{J})\) is constant. The procedure is highlighted in Algorithm 4 and requires solving a system of polynomial equations.

In addition, the procedure may not be completely classical as quantum assistance may be required to compute certain expectation values of ρJ(x), specifically with respect to the DLA basis elements. For simplicity, the algorithm and the theorem characterizing the runtime ignore potential errors in estimating these expectations. If classical estimation is possible, then we can potentially achieve a \({\mathcal{O}}(\,\text{poly}\,(\log (1/\epsilon )))\) scaling. However, if we must use quantum, then we will incur a \({\mathcal{O}}(1/\epsilon )\) (due to amplitude estimation) dependence, which can be significant. Theorem 4 presents the attack complexity, ignoring these errors.

Algorithm 4

Snapshot Inversion for General Pauli Encodings

Require: Snapshot vector esnap(x) of dimension \(\dim ({\mathfrak{g}})={\mathcal{O}}(\,\text{poly}\,(n))\) corresponding to a basis \({({{\bf{B}}}_{k})}_{k = 1}^{\dim ({\mathfrak{g}})\left.\right)}\) of DLA \({\mathfrak{g}}\). Each Bk is expressed as a linear combination of \({\mathcal{O}}(\,\text{poly}\,(n))\) Pauli strings. Snapshot inversion is being performed for a VQC model that utilizes a trainable portion of U(θ) with DLA \({\mathfrak{g}}\) and separable encoding Eq. (31) with qubit partition \({\mathcal{P}}\). Index j ∈ [d], ϵ < 1

Ensure: An ϵ estimate of the jth component xj of the data input \({\bf{x}}\in {{\mathbb{R}}}^{d}\) up to periodicity

1. Find a ρJ for \(J\in {\mathcal{P}}\) that depends on xj. Let R denote the number of Pauli rotations in the circuit for preparing ρJ that involve xj.

2. For each \(k\in [\dim (g)]\), compute Tr(BkρJ(x)) and \(\,\text{Tr}\,({{\bf{B}}}_{k}{\rho }_{{J}^{c}}({\bf{x}}))\).

3. Determine the set \({{\mathcal{S}}}_{J}=\{k:\,\text{Tr}\,({{\bf{B}}}_{k}{\rho }_{J}({\bf{x}}))\,\ne\, 0\,\& \,\,\text{Tr}\,({{\bf{B}}}_{k}{\rho }_{{J}^{c}}({\bf{x}}))=0\}\), Jc ≔ [n] − J.

if \({{\mathcal{S}}}_{J} < \dim ({{\bf{x}}}_{J})\) then

return FAILURE

else

1. For each \(k\in {{\mathcal{S}}}_{J}\) evaluate Tr(BkρJ(x)) at \(M=2{R}^{\dim ({{\mathsf{x}}}_{J})}+1\) points, \({{\bf{x}}}_{r}\in {\{\frac{2\pi r}{2R+1}:r = -R,\ldots R\}}^{\dim ({{\mathsf{x}}}_{J})}\)

2. For each k, solve a linear system

for α’s.

3. Consider the polynomial system:

with \(k\in {{\mathcal{S}}}_{J}\),

where \({u}_{j}=\cos ({x}_{j}),{v}_{k}=\sin ({x}_{j})\) and Tr, Ur relate to Chebyshev polynomials.

4. Apply Buchberger’s algorithm to obtain a Gröbner basis for the system.

5. Back substitution and univariable root-finding algorithm44 (e.g., Jenkins-Traub45) to obtain \({\tilde{{\bf{x}}}}_{J}\).

6. return \({\tilde{{\bf{x}}}}_{j}\)

end if

Theorem 4

Suppose that the feature encoding state ρ(x) is a multipartite state, specifically, there exists a partition \({\mathcal{P}}\) of qubits [n] such that

where we define \({{\mathsf{x}}}_{J}\subseteq {\bf{x}}\) to be components of x on which ρJ depends. In addition, we have as input an \({\mathcal{O}}(\,\text{poly}\,(n))\)-dimensional snapshot vector esnap with respect to a known basis Bk for the DLA of the VQC.

Suppose that for ρJ(x) the following conditions are satisfied:

-

\(\,\text{dim}\,({{\bf{x}}}_{J})={\mathcal{O}}(1)\),

-

each xk is encoded at most \(R={\mathcal{O}}(\,\text{poly}\,(n))\) times in, potentially multi-qubit, Pauli rotations.

-

and the set \({{\mathcal{S}}}_{J}=\{k:\,\text{Tr}\,({{\bf{B}}}_{k}{\rho }_{J}({\bf{x}}))\ne 0\,\,\& \,\,\,\text{Tr}\,({{\bf{B}}}_{k}{\rho }_{{J}^{c}}({\bf{x}}))=0\}\) has cardinality at least \(\,\text{dim}\,({{\mathsf{x}}}_{J})\), where Jc ≔ [n] − J.

Then the model admits quantum-assisted snapshot inversion for recovering \({{\mathsf{x}}}_{J}\). Furthermore, a classical snapshot inversion can be performed if ∀ k, Tr(BkρJ(x)) can be evaluated classically for all x. Overall, ignoring error in estimating Tr(BkρJ(x)), with the chosen parameters, this leads to a \({\mathcal{O}}(\,\text{poly}\,(n,\log (1/\epsilon )))\) algorithm.

Proof

We provide the proof in the methods section.

In the case a circuit has an encoding structure that leads to a separable state, we have indicated conditions that guarantee snapshot inversion can be performed. If the model is also snapshot recoverable, by having a polynomially sized DLA, then this means the initial data input can be fully recovered from the gradients, and hence the attack constitutes a strong privacy breach.

Snapshot Inversion for Generic Encodings

In the general case, but still \(\dim ({\mathfrak{g}})={\mathcal{O}}(\,\text{poly}\,(n))\), where it is unclear how to make efficient use of our knowledge of the circuit, we attempt to find an x via black-box optimization methods that produces the desired snapshot signature. More specifically, suppose, for simplicity we restrict our search to [−1, 1]d. We start with an initial guess for the input parameters, denoted as \({{\bf{x}}}^{{\prime} }\), and use these to calculate expected snapshot values \(\,\text{Tr}\,[{{\bf{B}}}_{k}\rho ({{\bf{x}}}^{{\prime} })]\). A cost function can then be calculated that compares this to the true snapshot, denoted esnap. As an example, one can use the mean squared error as the cost function,

The goal will be to solve the optimization problem \(\mathop{\min}\nolimits_{{{\bf{x}}}^{{\prime} }\in {[-1,1]}^{d}}f({{\bf{x}}}^{{\prime} })\). For general encoding maps, it appears that we need to treat this as a black-box optimization problem, where we evaluate the complexity in terms of the evaluations of f or, potentially, its gradient. However, in our setting, it is unclear what is the significance of finding approximate local minimum, and thus it seems for privacy breakage, we must resort to an exhaustive grid search. For completeness, we still state results on first-order methods that can produce approximate local minima.

We start by reviewing some of the well-known results for black-box optimization. We recall Lipschitz continuity by,

Definition 10

(L-Lipschitz Continuous Function). A function \(f:{{\mathbb{R}}}^{d}\to {\mathbb{R}}\) is said be L-Lipschitz continuous if there exists a real positive constant L > 0 for which,

If we consider the quantum circuit as a black-box L-Lipschitz function and \({{\bf{x}}}^{{\prime} }\) in some convex, compact set with diameter P (e.g., [−1, 1]d with diameter \(2\sqrt{d}\)). One can roughly upper bound L by the highest frequency component of the multidimensional trig series for f, which can be an exponential in n quantity. In this case, the amount of function evaluations that would be required to find \({{\bf{x}}}^{{\prime} }\) such that \(\Vert{\bf{x}}-{{\bf{x}}}^{{\prime} }{\Vert}_{2}\le \epsilon\) would scale as

which is the complexity of grid search46. Thus if for constant L this is a computationally daunting task, i.e., exponential in d = Θ(n).

As mentioned earlier, it is possible to resort to first-order methods to obtain an effectively dimension-independent algorithm for finding an approximate local minimum. We recall the definition of β-smoothness as,

Definition 11

(β-Smooth Function). A differentiable function \(f:{{\mathbb{R}}}^{d}\to {\mathbb{R}}\) is said be be β-smooth if there exists a real positive constant β > 0 for which

If we have access to gradients of the cost function with respect to each parameter, then using perturbed gradient descent47 would roughly require

function and gradient evaluations for an L-Lipschitz function that is β-smooth to find an approximate local min. With regards to first-order optimization, computing the gradient of f can be expressed in terms of computing certain expectation values of ρ, either via finite-difference approximation or the parameter-shift rule for certain gate sets48.

Regardless of whether recovering an approximate local min reveals any useful information about x, up to periodicity, it is still possible to make such a task challenging for an adversary. In general, the encoding circuit will generate expectation values with trigonometric terms. To demonstrate, we can consider a univariate case of a single trigonomial \(f(x)=\sin (\omega x)\), with frequency ω. This function will be ω-Lipschitz continuous with ω2-Lipchitz continuous gradient. Hence, when considering the scaling of gradient-based approach in Eq (36) we see that the frequency of the trigonometric terms will directly impact the ability to find a solution. Hence, if selecting a frequency that scales exponentially \(\omega ={\mathcal{O}}(\exp (n))\), then snapshot inversion appears to be exponentially difficult with this technique.

Importantly, if the feature map includes high frequency terms, for example the Fourier Tower map of30, then β and L can be \({\mathcal{O}}(\exp (n))\). However, as noted in the snapshot inversion for local encoding part of the results section it is possible to make use of the circuit structure to obtain more efficient attacks. In addition, a poor local minimum may not leak any information about x.

Direct input recovery

Note that it also may be possible to completely skip the snapshot recovery procedure and instead variationally adjust \({{\bf{x}}}^{{\prime} }\) so that the measured gradients of the quantum circuit \({C}_{j}^{{\prime} }\), match the known gradients Cj with respect to the actual input data. This approach requires consideration of the same scaling characteristics explained in Eq. (36), particularly focusing on identifying the highest frequency component in the gradient spectrum. If the highest frequency term in the gradient Cj scales exponentially, \(\omega ={\mathcal{O}}(\exp (n))\), then even gradient descent based methods are not expected to find an approximate local min in polynomial time.

Further privacy insights can be gained from Eq. (21), where a direct relationship between the gradients and the expectation value snapshot is shown, which in general can be written as

This indicates that the highest frequency terms of any esnap component will also correspond to the highest frequency terms in Cj(x), as long as its respective coefficient is non-zero \({\chi }_{t}^{(j)}\ne 0\).

This underscores scenarios where direct input recovery may prove more challenging compared to snapshot inversion, particularly in a VQC model. Consider a subset \({\tilde{{\bf{e}}}}_{{\rm{snap}}}\subseteq {{\bf{e}}}_{{\rm{snap}}}\) where each component has the highest frequency that scales polynomially with n. If there are sufficiently many values in \({\tilde{{\bf{e}}}}_{{\rm{snap}}}\) then recovering the approximate local min to Eq. (34) may be feasible for these components. However, for gradient terms Cj(x) that depend on all values of esnap, including terms outside of \({\tilde{{\bf{e}}}}_{{\rm{snap}}}\) that exhibit exponential frequency scaling, then gradient descent methods may take exponentially long when attempting direct input inversion, even if recovering approximate local minima to the snapshot inversion task can be performed in polynomial time.

Investigations into direct input recovery have been covered in previous work30 where the findings concluded that the gradients generated by Cj(x) would form a loss landscape dependent on the highest frequency ω generated by the encoding circuit, indicating that exponentially scaling frequencies led to models that take exponential time to recover the input using quantum-assisted direct input recovery. The Fourier tower map encoding circuit used in ref. 30 was designed such that ω scales exponentially to provide privacy; this was done by using m qubits in a sub-register per data input xj, with the single qubit rotation gates parameterized by an exponentially scaling amount. The encoding can be defined as

Hence, the gradient contained exponentially scaling highest frequency terms, leading to a model where gradient descent techniques took exponential time. However, if considering the expectation value of the first qubit in a sub-register of this model, we note this corresponds to a frequency ω = 1, and hence the respective expectation value for the first qubit would be snapshot invertible. However, in the case of ref. 30, the DLA was exponentially large, meaning the model was not snapshot recoverable, hence these snapshots could not be found to then be invertible. Hence, from our new insights, we can conclude that the privacy demonstrated in ref. 30 was dependent on having an exponential DLA dimension. However, an exponentially large DLA also led to an untrainable model, limiting the real-world applicability of this previous work. Lastly, recall that Algorithm 3 in the case of poly DLA and slow Pauli expansion is a completely classical snapshot inversion attack for the Fourier tower map. Further, highlighting how snapshot inversion can be easier than direct inversion.

We show that both direct input recovery and snapshot inversion are dependent on frequencies ω generated by the encoding circuit, highlighting that this is a key consideration when constructing VQC models. The introduction of high-frequency components can be used to slow down methods that obtain approximate local minimum to Eq. (34). However, for true privacy breakage, it appears that, in general, we still need to resort to grid search, which becomes exponentially hard with dimension regardless of high-frequency terms. However, for problems with a small amount of input, introducing high-frequency terms can be used to also make grid search harder. The idea of introducing large frequencies is a proxy for the more general condition that our results hint at for privacy, which is that the feature map \(\rho ({{\bf{x}}}^{{\prime} })\) should be untrainable in terms of varying \({{\bf{x}}}^{{\prime} }\).

Notably, cases exist where the same model can have an exponential frequency gradient, but can still contain a certain number of expectation snapshot values with polynomial scaling frequencies. Hence, it is also important to note that merely showing that a model is not directly input recoverable does not guarantee privacy, as one needs to also consider that if the model is snapshot recoverable, and that these snapshots may be invertible if sufficient polynomial scaling frequency terms can be recovered. This duality highlights the complexity of ensuring privacy in quantum computing models and stresses the need for a comprehensive analysis of the frequency spectrum in both model construction and evaluation of privacy safeguards.

Expectation value landscape numerical results

In this section, we provide a numerical investigation of the impact of high-frequency components in the encoding circuit on the landscape of Eq. (34) for snapshot inversion. The idea is to present examples that move beyond the Fourier tower map. We present two cases of encodings that would generally be difficult to simulate classically. By plotting a given expectation value against a univariate x, we can numerically investigate the frequencies produced by both models.

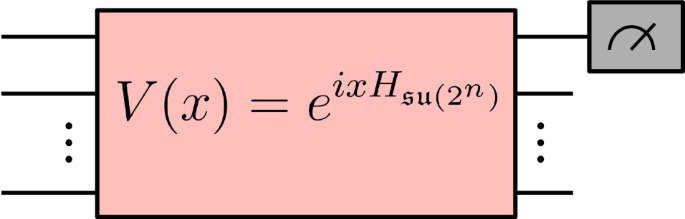

In Fig. 4 we demonstrate a circuit in which x parameterizes a single \({R}_{{\mathsf{X}}}\) rotation gate, but on either side of this is an unknown arbitrary unitary matrix acting on n qubits. This would be classically hard to simulate due to the arbitrary unitary matrices; however, the result effectively corresponds to taking measurements on an unknown basis, and using only a few samples of x it is possible to recreate the graph as a single frequency sinusoidal relationship. This results in the distance between the stationary points being r = π for any value of n. This corresponds to a frequency \(\omega =\frac{r}{\pi }=1\), regardless of the value of n. This circuit, therefore, exhibits constant frequency scaling independent of n and hence could be easy for gradient-based methods to recover an approximate local min.

Encoding circuit diagram showing a single qubit \({R}_{{\mathsf{X}}}\) rotation gate parameterized by the univariate parameter x, but with arbitrary 2n dimensional unitaries applied before and after the x parameterized gate. Despite being hard to simulate analytically, the expectation value ein varies as a simple sinusoidal function in x, regardless of the total number of qubits n.

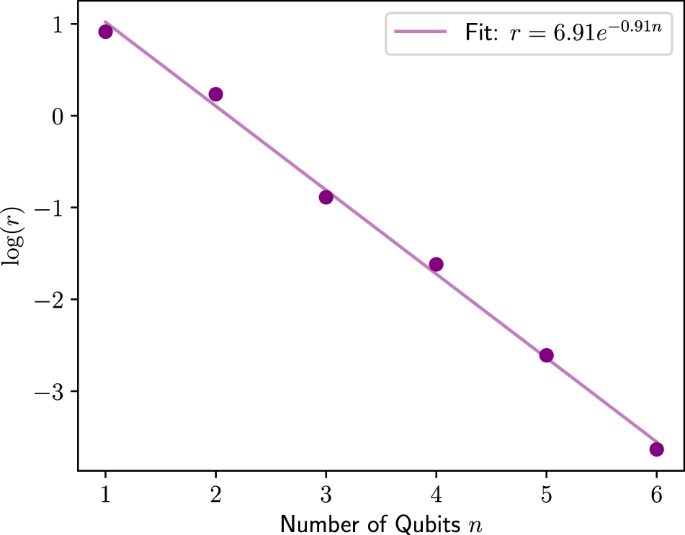

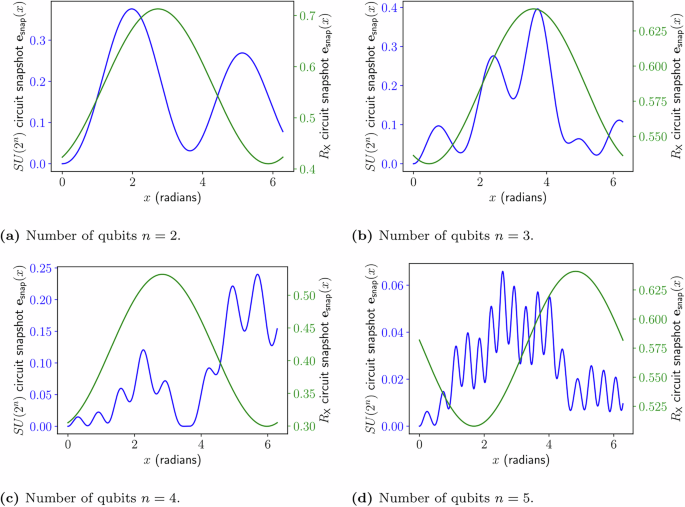

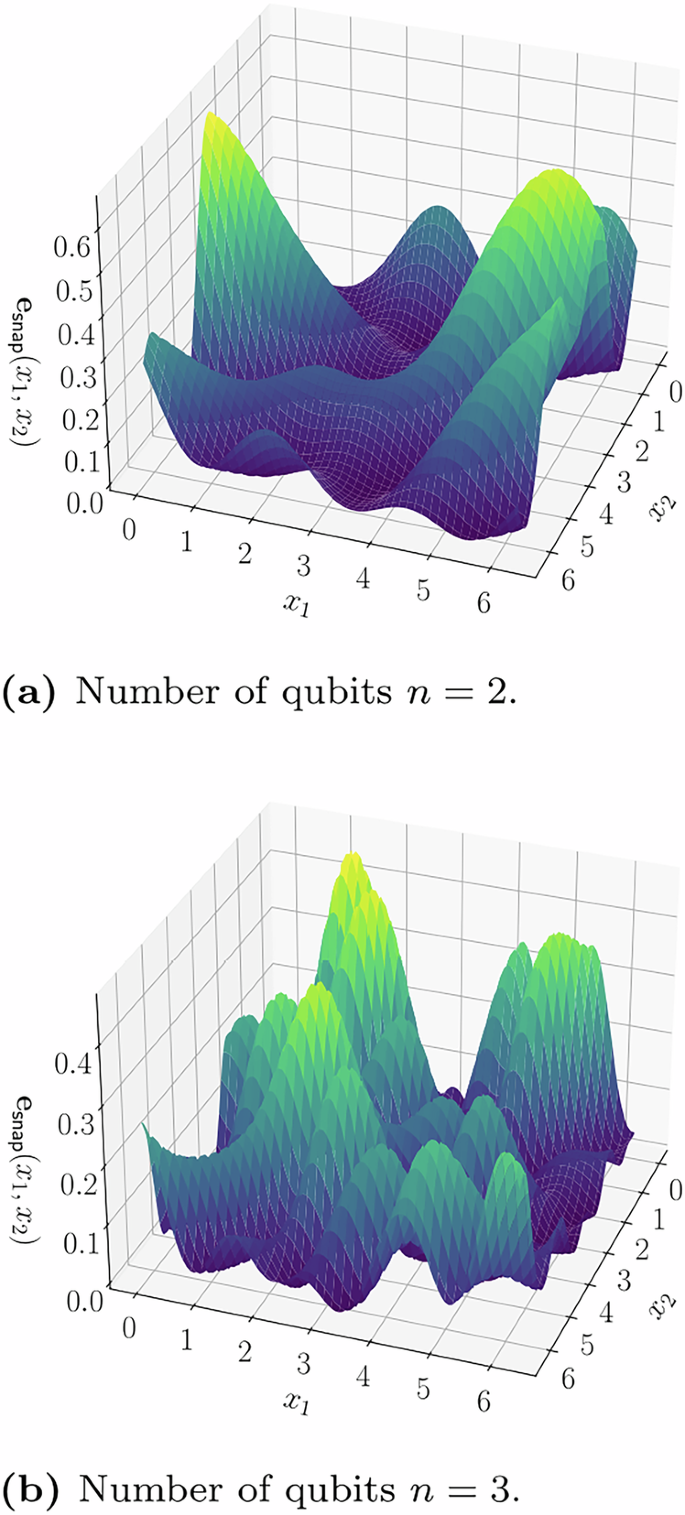

We briefly give an example of a type of circuit that can generate high-frequency expectation values. Figure 5 demonstrates a circuit where x parameterizes an SU(2n) gate. The result when measuring the same expectation value corresponds to the highest frequency term that is exponentially increasing. This is shown in the plot in Fig. 6 in which the distance between stationary points r shrinks exponentially as the number of qubits increases for the SU(2n) parameterized model, which roughly corresponds to an exponentially increasing highest frequency term. A comparison between the expectation value landscape of the two different encoding architectures, is shown in Fig. 7, demonstrating that the single rotation gate parameterization, as shown in Fig. 4, produces a sinusoidal single-frequency distribution, even as the number of qubits is increased; while the SU(2n) gate parameterization, shown in Fig. 5, contains exponentially increasing frequency terms. A visual representation for the multivariate case is also demonstrated in Fig. 8 which shows the expectation value landscape when two input parameters are adjusted, for a model comprised of two different SU(2n) parameterized gates parameterized by the variables x1 and x2 respectively, demonstrating that as more qubits are used, the frequencies of the model increase and hence so does the difficulty of finding a solution using gradient descent techniques.

Plot showing the relationship between the average minimum distance r between stationary points of the expectation value \({\mathsf{Z}}{{\otimes}} {{\mathbb{I}}}^{{{\otimes}} n-1}\) as a function of a univariate x input. The encoding circuit considered is a parameterized SU(2n) gate which is parameterized by a univariate input x as U = eiHx, where H is a randomly generated Hermitian matrix. The average was taken over ten repeated experiments where H was regenerated each time.

Comparison of how the expectation value of the measurement of Z1 varies with x for both the model parameterized using a single \({R}_{{\mathsf{X}}}\) rotation gate as detailed in Fig. 4 and the model parameterized using an SU(2n) gate as detailed in Fig. 5, for varying amounts of qubits. a Landscape with two qubits in the encoding. b Landscape with three qubits in the encoding. c Landscape with four qubits in the encoding. d Landscape with five qubits in the encoding.

Comparison of how the expectation value of the measurement of Z1 varies with x for both the model parameterized using a single \({R}_{{\mathsf{X}}}\) rotation gate as detailed in Fig. 4 and the model parameterized using an SU(2n) gate as detailed in Fig. 5, for varying amounts of qubits. a Landscape with two qubits in the encoding. b Landscape with three qubits in the encoding.

The two example circuits demonstrate encoding circuits that are hard to simulate, and hence, no analytical expression for the expectation values can be easily found. These models do not admit classical snapshot inversion; however, by sampling expectation values, it may be possible to variationally perform quantum-assisted snapshot inversion. Whether numerical snapshot inversion can be performed efficiently will likely be affected by the highest frequency ω inherent in the encoding, which will itself depend on the architecture of the encoding circuit. This suggests that designing encoding circuits such that they contain high-frequency components is beneficial in high-privacy designs. We have shown that SU(2n) parameterized gates can produce high-frequency terms, whereas single-qubit encoding gates will be severely limited in the frequencies they produce.

Discussion

In this research, we conduct a detailed exploration of the privacy safeguards inherent in VQC models regarding the recovery of original input data from observed gradient information. Our primary objective was to develop a systematic framework capable of assessing the vulnerability of these quantum models to a general class of inversion attacks, specifically through introducing the snapshot recovery and snapshot inversion attack techniques, which primarily depend on the variational and encoding architectures, respectively.

Our analysis began by establishing the feasibility of recovering snapshot expectation values from the model gradients under the LASA assumption. We demonstrated that such recovery is viable when the Lie algebra dimension of the variational circuit exhibits polynomial scaling in the number of qubits. This result underscores the importance of algebraic structure in determining the potential for privacy breaches in quantum computational models. Furthermore, due to the fact that a polynomial scaling DLA dimension is commonly required for models to be trainable, our results suggest that a trade-off may exist between privacy and the trainability of VQC models. Assuming one insists on a polynomial-sized DLA, our framework suggests that a weak privacy breach will always be possible for the type of VQC model studied. To ensure the privacy of the model overall, one cannot rely on the variational circuit and needs to instead focus more on the encoding architecture and ensuring snapshot inversion cannot be performed. If snapshot inversion is not possible, then at least strong privacy breaches can be prevented.

We then explored snapshot inversion, where the task is to find the original input from the snapshot expectation values, effectively inverting the encoding procedure. Studying widely used encoding ansatz, such as the local multiqubit Pauli encoding, we found that under the conditions that a fixed subset of the data paramaterizes a constituent state which has sufficient overlap with the DLA, and the number of gates used to encode each dimension of the input x was polynomial, that snapshot inversion was possible in \({\mathcal{O}}\left.\right(\,\text{poly}\,(n,\log (1/\epsilon ))\) time. This shows that a potentially wide range of encoding circuits are vulnerable to strong privacy breaches and brings their usage in privacy-focused models into question. For the most general encoding, which we approached as a black-box optimization problem, we demonstrated that using perturbed gradient descent to find a solution is constrained by the frequency terms within the expectation value Fourier spectrum. In general for exactly finding x it appears that a grid search would be required. Although we cannot provide strictly sufficient conditions due to the possibility of unfavorable local minima with perturbed gradients, we note that gradient descent for snapshot inversion may, in some cases, be easier to perform than for direct input data recovery from the gradients. This simplification arises because gradients can inherit the highest frequency term from the snapshots, potentially leading to scenarios where the gradient term contains exponentially large frequencies. However, there may still be sufficient polynomial frequency snapshots to permit snapshot inversion. This shifts the focus in attack models away from direct input recovery from gradients, a common approach in classical privacy analysis, towards performing snapshot inversion as detailed in this study, as a potentially more efficient attack method.

The dual investigation allowed us to construct a robust evaluative framework that not only facilitates the assessment of existing VQC models for privacy vulnerabilities but also aids in the conceptualization and development of new models where privacy is a critical concern. Our reevaluation of previous studies, such as those cited in ref. 30, through the lens of our new framework, reveals that the privacy mechanisms employed, namely the utilization of high-frequency components and exponentially large DLA, effectively prevent input data recovery via a lack of snapshot recoverability, but at the same time contribute to an untrainable model of limited practical use.

In conclusion, we offer a methodological approach for classifying and analyzing the privacy features of VQC models, presenting conditions for weak and strong privacy breaches for a broad spectrum of possible VQC architectures. Our findings not only enhance the understanding of quantum privacy mechanisms but also offer strategic guidelines for the design of quantum circuits that prioritize security while at the same time maintaining trainability. Looking ahead, this research paves the way for more robust quantum machine learning model designs, where privacy and functionality are balanced. This knowledge offers the potential to deliver effective machine learning models that simultaneously demonstrate a privacy advantage over conventional classical methods.

Methods

We utilize this section to draw the connections between the two key properties of VQC: trainability, i.e., the lack of barren plateaus36,49, and the ability to retain privacy of input. Building upon this connection, we discuss the prospects and future of achieving robust privacy guarantees with VQC models.

Connections between trainability and privacy in VQC

Solely requiring a machine learning model to be private is not sufficient to deploy it for a practical use case of distributed learning, such as federated learning. A key requirement in this collaborative learning scenario is also to ensure that the model remains trainable. A plethora of works have gone into exactly characterizing the trainability of VQC models by analyzing the presence of barren plateaus in the VQC model, starting from the work of McClean et al.33 and culminating in the works of Fontana and Ragone36,49. Especially, the work of Fontana36 provides an exact expression of the variance of the gradient of the model when the VQC is constrained to be in the LASA case, the details of which we also provide in Supplementary file Sec IV for completeness. A key insight into these works suggests that LASA models, with exponentially-sized DLA, may lead to the presence of barren plateaus, drastically deteriorating the trainability of such models36,49.

Within our privacy framework centered around snapshot recoverability, we also show via Theorem 2 that LASA models with an exponential size DLA are not classically snapshot recoverable, although this may lead to untrainable models. We can therefore conclude that a possible condition for protection against classical input recovery using gradients in a VQC model is to choose an ansatz that exhibits an exponentially large dynamical Lie algebra dimension, as this would render snapshot recovery difficult. Through our framework, we can see that previous works30 effectively relied on this property to ensure privacy. Combining the concept of trainability leads to the following corollary on the privacy of VQC models:

Corollary 5

Any trainable VQC on n qubits that satisfies the LASA condition in Def 5, fulfills the slow Pauli expansion condition as highlighted in Def 9, and has a DLA \({\mathfrak{g}}\) whose dimension scales as \({\mathcal{O}}(\,\text{poly}\,(n))\), would admit snapshot recoverability with complexity \({\mathcal{O}}(\,\text{poly}\,(n))\).

Hence, we can conclude that, at least in the LASA case of VQC, the privacy of the model is linked to the DLA dimension, and furthermore, that there is a direct tradeoff between privacy and trainability of the model. As exponentially sized DLA models are expected to be untrainable in the LASA case, this means that for realistic applications, it does not seem feasible to rely on quantum privacy derived from an exponential DLA, precluding snapshot recoverability in the model. This suggests that any privacy enhancement from quantum VQCs should not derive from the variational part of the circuit for LASA-type models that are intended to be trainable. In other words, we expect the majority of trainable VQC models to be vulnerable to weak privacy breaches. The privacy of variational models beyond the LASA case becomes linked to a larger question within the field, notably, whether there exist quantum variational models that are not classically simulatable and do not have barren plateaus50.

It is also worth noting that if one attempted to create a model that is not snapshot recoverable by ensuring that \(D \, < \,{\text{dim}}\,({\mathfrak{g}})\), and hence an underparameterized system of equations, it would effectively lead to an underparameterized model. A model is underparmeterized when there are not enough variational parameters to fully explore the space generated by the DLA of the ansatz, which is a property that may not be desirable for machine learning models51.

Future direction of VQC quantum privacy

Due to the above argument suggesting that achieving privacy via an exponentially large DLA may cause trainability issues in the underlying model, it appears that future improvements in privacy using VQC may primarily focus on preventing the snapshot inversion step, as we highlight in the input recoverability definitions part of the results section. This promotes a focus on the encoding circuit architectures of the VQC in order to prevent the model from admitting snapshot inversion to facilitate input recovery.

We have explicitly shown the necessary condition required to achieve privacy from purely classical attacks. If it is not possible to classically simulate the expectation values of the quantum encoded state with respect to the DLA basis elements of the variational circuit, then it will not be possible to attempt classical analytical or numerical inversion attacks. Any VQC designed where these expectation values cannot be simulated will, therefore, be protected against any purely classical snapshot inversion attempts. This condition can therefore prevent strong privacy breaches, as long as the attacking agent only has access to a classical device.

In the case where the attacker can simulate expectation values of the DLA basis or has access to a quantum device to obtain the expectation values, then numerical classical snapshot inversion or numerical quantum-assisted snapshot inversion can be attempted, respectively. We have shown that in this case, an important factor in preventing these techniques is that the expectation values have exponentially scaling frequency terms, resulting in the attacks requiring solving a system of high-degree polynomial equations. The implication of this is that to achieve a useful privacy benefit in VQC, it may require that the encoding circuit is constructed in such a way that the expectation values of the DLA basis elements of the variational circuit contain frequency terms that scale exponentially. Notably, we find that having high frequency terms in the gradients, as suggested in the encoding circuit of ref. 30, does not necessarily protect against numerical snapshot inversion attacks. This is because the gradients inherit the highest frequency term from all expectation values, but there may be a sufficient number of polynomial frequency expectation values to perform snapshot inversion, even if direct input inversion is not possible.

Unlike the variational case, where a connection between DLA dimension and trainability has been established, the effect that privacy-enhancing quantum encodings would have on the trainability of a model is less clear. If the majority of expectation values used in the model contain exponentially large frequencies, then this potentially restricts the model to certain datasets. In classical machine learning, there have been positive results using trigonometric feature maps to classify high-frequency data in low dimensions35. It remains a question for future research, the types of data that may be trained appropriately using the privacy-preserving high-frequency feature maps proposed. If models of this form are indeed limited in number, then the prospects for achieving input privacy from VQC models appear to be limited. More generally, the prospect for quantum privacy rests on feature maps that are untrainable with regard to adjusting \({{\bf{x}}}^{{\prime} }\) to recover expectation values esnap, while at the same time remaining useful feature maps with respect to the underlying dataset and overall model.

Proof of Theorem 3

Proof

Steps 1–5 in Algorithm 3 can be performed in \({\mathcal{O}}(\,\text{poly}\,(n))\) classical time due to the polynomial DLA and slow Pauli expansion conditions. The purpose of step 5 is to compute the angles between the linear subspaces \({\mathfrak{g}}\) and \({\text{span}}_{{\mathbb{R}}}\{i{{\mathsf{Z}}}_{j},i{{\mathsf{Y}}}_{j}\}\). This is to identify if there is any intersection, i.e., if ∃ α, β such that \(\alpha i{{\mathsf{Z}}}_{j}+\beta i{{\mathsf{Y}}}_{j}\in {\mathfrak{g}}\), which is identified by singular values equal to 1. The algorithm cannot proceed if the intersection is trivially empty, as the snapshot vector does not provide the required measurement to obtain xj efficiently with this scheme. From now on, we suppose that such an element has been found.

We can, without loss of generality, just focus on the one-qubit reduced density matrix for xj. In this case, using Bloch sphere representation:

such that

However, by assumption, \({\gamma }_{k}\in {\mathbb{R}}\) such that

So to recover xj, we only need to solve:

which, after rearranging, allows the recovery of

up to periodicity. By the polynomial DLA and slow Pauli expansion conditions (i.e., all DLA basis elements are expressed as linear combinations of Paulis), we can compute γk in \({\mathcal{O}}(\,\text{poly}\,(n)\log (1/\epsilon ))\) time.

Proof of Theorem 4

Proof

Given that each xk is encoded with multiqubit Pauli rotations, i.e., possible eigenvalues are 1 and −1, it is well known48 that the following holds:

and Tr(BkρJ(x)) is real. The set \({{\mathcal{S}}}_{J}\) is to ensure that we can isolate a subsystem where \(\dim ({{\mathsf{x}}}_{J})\) is constant.