Abstract

Running two separate two-stage randomized trials, we implement and test three variants of a small group instruction intervention aimed at improving mathematics competencies for the 20% lowest achievers in mathematics in grades 2 and 8 in Danish public schools. We calculate immediate impacts on math competencies as well as impacts in the medium term and conduct cost-effectiveness analyses of the different intervention arms. The context is the Scandinavian welfare state with an already high-quality public school system. Nevertheless, we find very large positive and cost-effective impacts of several intervention variants, some of which persist in the medium term. In particular, interventions in grade 2 tend to persist, while eighth-grade interventions tend to fade out in the medium term.

Introduction

It is well known that mathematics skills acquired in school are important for later life outcomes1,2, and hence, targeted interventions aimed at low math achievers have the potential to reduce income and other inequalities. In this study, we implement and test three variations of an individually tailored small-group instruction intervention in mathematics aimed at low-achieving pupils in Danish public schools in grades 2 and 8. The intervention begins by assessing each low-performing pupil’s math competencies and then tailors a 12-week intervention (four 30-min sessions per week) aimed at removing or reducing ‘math holes’, using specially designed learning materials and dialog-based instruction methods3,4. We study the effectiveness of different modes of small-group instruction. We randomly manipulate (a) the group size and (b) whether or not the math teacher receives coaching during the intervention period, using a two-stage randomized design.

Although Danish pupils rank relatively high in mathematics proficiency5, 14.6% still score below what is considered the minimum level of proficiency that children should have acquired by the end of lower secondary school. These children do not “demonstrate the ability and initiative to use mathematics in simple real-life situations”5. The share is higher in all but seven of the countries included in the PISA study, so the problem is present in most countries; for instance, it is 27.1% for 15-year-olds in the United States, 19.2% in the U.K., and 21.1% in Germany, and as high as 68.1% in Brazil.

A low level of mathematics proficiency among school leavers is a problem for several reasons. First, research has shown that early math skills have great predictive power for later-life outcomes1,2, greater than that of reading or attention skills6,7. For instance, high-school math performance is much more important for doing well in college science studies (e.g., biology or chemistry) than performance in high-school science8. Moreover, children with low proficiency in mathematics during the early school years may fall even further behind in large classrooms if teachers teach mainly to the median pupils (e.g., the 60% in the middle), for instance because of resource constraints. There is some evidence of this in the Danish case, where the math skill gap by, e.g., socioeconomic status tends to widen during the school years9.

Second, if math proficiency affects later-life outcomes, it might also affect the development of an entire country over the longer run, e.g., through growth rates. Indeed, cognitive skills, as measured in math and science tests, have strong causal effects on individual earnings, on the distribution of income, and on economic growth10,11. Similarly, performance in mathematics and science that is half a standard deviation higher at the population level has historically been associated with annual GDP growth rates that are 0.87 percentage points higher12.

Perhaps for these reasons, mathematics is gaining increasing importance in educational systems across the world; recent Danish reforms have for example increased the minimum required level of mathematics in academic high schools as well as in vocational schools.

Hence, it is of crucial importance, both to the individual and to society, to improve mathematics proficiency, especially for the pupils with the lowest proficiency in mathematics. One might think that the Scandinavian welfare state, with its universal access to free high-quality public schools, and its egalitarian society and norms, would have solved this problem. However, recent evidence shows that even in the Scandinavian welfare state, educational inequality is not much lower than in, say, the United States13,14.

An additional motivation for the focus on small-group instruction may be found in the school closures during lockdown periods in the COVID-19 pandemic. School closures during the lockdowns have been shown to lead to a lack of academic progress overall and even to the loss of previously acquired skills among low-achieving pupils15. Hence, school closures call for pandemic-robust types of interventions, in particular ones aimed at low-achieving pupils, and small-group instruction is well suited to this, since it reduces the risk of spreading infection.

A relevant issue is when to intervene. Most research indicates that early interventions are better than later interventions, but this finding is often based on comparisons of different types of interventions applied in different environments. We implement the same type of intervention in grades 2 and 8 in Danish schools, enabling a direct comparison of their relative effectiveness. In addition to estimating immediate effects, we also study math results 14–18 months after the intervention is completed to investigate the fade-out of short-run impacts in the medium term. We also study spillover effects to another subject, namely Danish language. Finally, we briefly discuss the cost-effectiveness of the interventions.

The main research question we wish to answer is the following: Does a tailored small-group instruction intervention help low achievers improve in mathematics?

In addition, the following research questions are of interest, although the study is not powered to address all of them fully:

1. Does the timing of the intervention matter for the effect size?
2. Does group size matter for the effect size?
3. Does teacher coaching matter for the effect size?
4. Do effects fade out over time?
5. Are there spillover effects to other subjects?
6. Are there effects on measures of personality and well-being?
7. What intervention variants are more cost-effective?

There are many reasons why some children may fall behind in mathematics; medical/neurological, psychological, sociological, and didactical causes may all contribute to the problem16. In the medical/neurological category, there are children with general cognitive challenges such as intellectual disability, and some children have dyscalculia (mathematics learning disability). The prevalence of intellectual disability in the United States is estimated at 2–3%17, while the prevalence of dyscalculia is estimated at 2–13% depending on how it is measured and defined17,18,19,20. Math ability may also have a genetic component, as documented by Lee et al. (2018)21, who identified 618 lead SNPs (single nucleotide polymorphisms) associated with self-rated math ability.

Among the psychological causes are various difficulties with concentrating and exerting effort, but also mathematics anxiety22,23 and low self-efficacy with respect to mathematics. The prevalence of mathematics anxiety is estimated to lie between 2 and 17% depending on measurement and definition24, and a recent meta-analysis estimates the correlation between math anxiety and math outcomes at −0.28 (95% CI [−0.29, −0.26])25. There also appears to be some covariation between dyscalculia and math anxiety: children with dyscalculia are about twice as likely as other children to suffer from math anxiety, whereas children with math anxiety are not more likely to suffer from dyscalculia than children without math anxiety24. Low self-efficacy with respect to mathematics (belief in one’s own ability to master mathematics) has been shown to be a problem among many children in many countries26. Danish children scored below the OECD average on math self-efficacy, although their math skills were about average in the 2012 PISA study.

The sociological factors concern primarily the learning environment and the fact that some children grow up in less advantaged circumstances, which may affect their ability to learn27,28. For example, Heckman (2006)27 documents very large differences in math test scores between children of parents in the highest and the lowest income quartiles. These gaps open up early and do not disappear during the school years9,28. Although the gaps open early, evidence shows that family-based programs are not always very effective when implemented at scale, particularly for socioeconomically disadvantaged families29,30,31.

Didactical factors reflect inadequate teaching methodologies and variations in teacher quality, which may also affect the way children learn and fall behind. An interesting case study32 demonstrates how differences in teaching methodology can dramatically affect children’s motivation and beliefs, and eventually how teachers categorize them. Reviews of the literature on teacher quality find that, when teacher quality is measured by its effect on student outcomes, the difference between teachers at the top of the quality distribution and those near the bottom can be up to an entire year’s worth of learning per school year33,34. These studies also argue that teacher quality is by far the school attribute with the largest influence on students’ outcomes.

Hence, many factors contribute to some children falling behind in mathematics, and for some children, several of these factors and mechanisms may be at play. This points to the importance of designing and testing interventions that can be tailored to the individual child (and teacher) in order to address the individual-specific nature of the learning (and teaching) problem. This is obviously difficult in the context of ordinary classroom sizes of 20+ pupils. However, small-group instruction in combination with coaching of teachers may address some of these issues.

There are a number of systematic reviews and meta-analyses analyzing the impact of interventions aimed at low achievers and interventions specifically aimed at improving math competencies.

A systematic review and meta-analysis35 of the impacts of different school-based interventions aimed at children with academic difficulties in grades 0–6 did not distinguish between math and reading interventions. Of the included studies, 86% were conducted in the United States. The authors found that small-group teaching interventions and interventions focusing on peer instruction had the largest effect sizes. Small-group instruction (5 or fewer pupils per teacher) had effect sizes (ES) of around 0.35 standard deviations (SD).

A meta-analysis36 of mathematics interventions in grades K–5 found that tutoring interventions, defined as 1-1 or 1-small-group instruction, were the most effective type of intervention (ES = 0.20 SD, k = 22), with 1-small-group instruction being slightly more effective than 1-1 instruction (ES = 0.30 SD, k = 14, versus ES = 0.19 SD, k = 8). Most of the included interventions were from either the United States or the UK.

When it comes to interventions aimed at older pupils (grades 6–12), a systematic review of interventions aimed at pupils with academic difficulties37 found effect sizes in the range 0.2–0.4 SD for interventions whose impact was measured by a mathematics test. Effect sizes were at the upper end of this interval for small-group instruction interventions, although this calculation did not distinguish between math and other subjects. The estimated effect sizes were ATETs (average treatment effects on the treated), which are typically larger than ITT (intention-to-treat) effects when compliance is imperfect.

A systematic review of mathematics interventions aimed at older pupils38 found small effect sizes overall and identified only two studies in the small-group category (ES = 0.36 SD). Once again, most studies were based on interventions conducted in the United States or the UK.

The evidence so far thus indicates that small-group instruction interventions (1-1 or 1-small-group instruction) are among the most effective when it comes to mathematics instruction, but it is inconclusive as to the relative effect sizes of early and later interventions, suggesting that for small-group instruction interventions there may not be much difference. There is also some indication that 1-small-group instruction is marginally more effective than 1-1 instruction (and in any case not less effective), at least for younger pupils. To our knowledge, none of these studies discusses the fade-out of impacts over time or cost-effectiveness.

The effectiveness of teacher coaching, an element of some arms of the intervention analyzed in the present study, is evaluated in a recent meta-analysis39. Under a broad definition, coaching includes coaches or peers observing teachers’ instruction and providing feedback to help them improve. The hypothesized mechanism is thus that coaching improves teachers’ instructional quality, which in turn improves student achievement. The meta-analysis reports an average effect size of 0.18 SD on student achievement.

Hence, the literature points to small-group instruction, possibly in combination with coaching of teachers, as a potentially strong intervention when it comes to improving math competences of low-performing pupils.

Results

The intervention consisted of small-group instruction (one teacher teaching 1 or 3–4 pupils) with or without the coaching of teachers. It was evaluated in two two-stage randomized trials, one in grade 2 and one in grade 8. The intervention arms tested are depicted in Fig. 1.

For more details on the intervention and evaluation design, power calculation, descriptive statistics, balancing tests, compliance etc., we refer the reader to the Methods section.

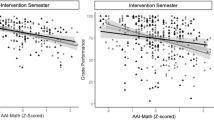

Figure 2 contains the main results. We report intention-to-treat (ITT) effects as well as local average treatment effects (LATE), computed as the ITT divided by the difference in the fraction treated between the treatment and control groups; the LATE corresponds to the average treatment effect on the compliers. We primarily discuss the LATE effects, which correspond more closely to the ATET effects reported in most other studies37. Results are reported separately by grade and intervention arm, and we also report pooled results across intervention arms within each grade.
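The LATE described above is the Wald ratio of two intention-to-treat contrasts: the ITT on the outcome divided by the ITT on treatment receipt (the first stage). The sketch below assumes unadjusted differences in means with a binary assignment indicator and a binary receipt indicator; the function names and the toy data are hypothetical, and the paper's own estimates come from the regression models described in the Methods section.

```python
from statistics import mean


def diff_in_means(values, assigned):
    """Mean of `values` among pupils assigned to treatment minus mean among controls."""
    treat = [v for v, z in zip(values, assigned) if z == 1]
    control = [v for v, z in zip(values, assigned) if z == 0]
    return mean(treat) - mean(control)


def wald_late(outcome, received, assigned):
    """LATE = ITT on the outcome / difference in the fraction actually treated."""
    itt = diff_in_means(outcome, assigned)
    first_stage = diff_in_means(received, assigned)  # share treated: T minus C
    if first_stage == 0:
        raise ValueError("assignment does not shift treatment receipt")
    return itt, first_stage, itt / first_stage


# Hypothetical toy data: 4 pupils assigned to treatment, 4 to control.
assigned = [1, 1, 1, 1, 0, 0, 0, 0]
received = [1, 1, 1, 0, 0, 0, 0, 1]  # one non-complier in each group
outcome = [1.0, 0.8, 0.6, 0.2, 0.1, 0.0, -0.1, 0.4]  # standardized post-test

itt, fs, late = wald_late(outcome, received, assigned)
print(f"ITT={itt:.2f}, first stage={fs:.2f}, LATE={late:.2f}")
# prints: ITT=0.55, first stage=0.50, LATE=1.10
```

Because assignment raises the share actually treated by only 50 percentage points in this toy example, the LATE is twice the ITT; the same logic is why LATE estimates exceed ITT estimates whenever compliance is imperfect.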

The pooled (overall) effect of the intervention is significantly positive in both grades 2 and 8, with LATE effect sizes of 0.47 SD and 0.30 SD, respectively. Effects are thus larger in grade 2 than in grade 8, although a formal test from a joint estimation cannot reject equality (P = 0.26); the experiment was not designed to have sufficient power to detect such differences. ITT effect sizes are smaller, since not everyone in the treatment groups received treatment, and some in the control groups did (see the Methods section and SI for a detailed analysis of compliance and implementation fidelity).
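The P = 0.26 above comes from a joint estimation. As a simplified illustration only, assuming the two grade-specific LATE estimates are independent and approximately normal, equality can be tested with a z-statistic on their difference. The standard errors in the usage line are hypothetical placeholders, since they are not reported in this section.

```python
import math


def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def equality_test(b1, se1, b2, se2):
    """z-test of H0: b1 == b2 for two independent estimates."""
    z = (b1 - b2) / math.sqrt(se1**2 + se2**2)
    return z, two_sided_p(z)


# Pooled LATEs from the text; the standard errors are HYPOTHETICAL.
z, p = equality_test(0.47, 0.12, 0.30, 0.10)
print(f"z = {z:.2f}, two-sided P = {p:.2f}")
```

Because the variance of a difference adds the two variances, a gap of 0.17 SD between grades can easily fail to reach significance when each arm's estimate is itself imprecise.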

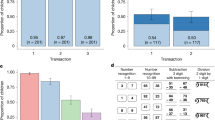

Turning to the effect sizes of the different variants of the intervention, we find for grade 2 that 1-1 with coaching is the most effective intervention, with a large, significantly positive effect (LATE = 0.8 SD); 1-group also has a significant positive effect (LATE = 0.52 SD), while 1-group with coaching has a smaller and insignificant effect. The data on implementation fidelity offer no obvious explanation for this null result for 1-group with coaching; in fact, this was the group that received the most sessions on average (see panel c in Fig. 3). One potential “explanation” is that the control group for 1-group with coaching in grade 2 improved its average test result from pre- to post-test much more than the control groups of the two other treatment arms in grade 2 (see Supplementary Fig. 1). Unfortunately, we do not know why this is the case.

Fig. 3. (a) The table shows compliance with the experimental protocol. (b) Placement of sessions: the figure shows in which lectures the interventions took place and is based on a questionnaire sent to a sample of 16 participating schools. (c) The figure shows the average number of sessions attended by pupils. The planned number of sessions was 48. The figure is based on ~70% of the full treatment samples, as data on the number of sessions held were missing for some pupils.

For grade 8, by contrast, 1-group with coaching is the most effective intervention (LATE = 0.57 SD), while 1-group and 1-1 with coaching have small positive but insignificant effects. As the study was not designed to detect differences in impacts between intervention arms, a formal test cannot reject the hypothesis that the effects are equal (P = 0.17 for grade 2 and P = 0.37 for grade 8). These results are robust to a number of alternative estimation strategies (see Supplementary Table 1), which show that the results change little when a large set of covariates is included, or when the outcome is the difference between the post-test and the pre-test.

An analysis of how the effect size depends on the number of sessions a pupil attends is likely to suffer from endogeneity, because pupils or teachers select how many sessions are attended. Moreover, the number of sessions is a noisy measure, and around 30% of observations are missing. Adding a linear term in the number of sessions to the main model specification yields a coefficient of about 0.02 per session (P = 0.20 in grade 2 and P = 0.03 in grade 8).

Hence, the answer to the main research question is yes: the intervention improves outcomes for low achievers.

Turning to the secondary research questions, effects appear larger in grade 2 than in grade 8, although, as mentioned, the differences are not statistically significant. With respect to the importance of group size and teacher coaching, results are mixed across the two grades, so no clear conclusions can be drawn.

In Fig. 4, we show results for outcomes measured 14–18 months after the end of each intervention. Results for second graders are from the national tests in mathematics conducted in the spring of grade 3 (16–18 months after the end of the intervention), while results for eighth graders are from school-leaving exams in mathematics at the end of compulsory school (grade 9), which corresponds to ~14 months after the end of the intervention.

Fig. 4. For grades 2 and 8 separately, the figure shows the medium-term effect sizes on mathematics outcomes measured 14–18 months after the intervention (national tests for grade 2, school-leaving exams for grade 8) for each of the three treatments as well as the pooled treatment. Vertical error bars are 95% confidence intervals. ITT is the intention-to-treat effect; LATE is the local average treatment effect.

The figure shows that in grade 2, the overall treatment effect is reduced by 31% to 0.32, with some variation across treatment arms, while in grade 8 it is reduced to 0.1 and is no longer statistically significant. In grade 2, the effect of 1-group is still significant and only slightly reduced, while 1-1 with coaching is no longer statistically significant, as its estimated effect size is more than halved. The large difference in fade-out between 1-group and 1-1 with coaching could be a statistical coincidence. It could also be caused by a difference in the intervention: in 1-group, pupils sit together, often with classmates, and perhaps they become better at helping each other, accepting errors, and contributing actively to the learning situation. In 1-1, the pupil sits alone with a teacher and may, upon returning to the classroom, be more likely to revert to former patterns of not participating actively or of waiting for the teacher’s support to make progress. This lack of participation could cause these pupils to fall behind more quickly than other pupils. In grade 8, none of the intervention variants show significant effects in the medium term.
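The fade-out share is simply the fraction of the immediate effect that is gone by the medium-term measurement. A minimal sketch using the rounded pooled LATEs quoted in the text; with these rounded inputs grade 2 comes out at about 32%, so the 31% stated above presumably reflects unrounded estimates.

```python
def fade_out(short_run, medium_run):
    """Share of the short-run effect that has disappeared by the medium run."""
    return (short_run - medium_run) / short_run


# Pooled LATEs as rounded in the text (SD units).
for grade, short, medium in [(2, 0.47, 0.32), (8, 0.30, 0.10)]:
    print(f"grade {grade}: {fade_out(short, medium):.0%} fade-out")
```

The contrast is stark: roughly a third of the grade 2 effect fades, against about two thirds in grade 8.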

We find no significant spillover effects on outcomes in reading, neither for grade 2 nor grade 8 participants, see Supplementary Fig. 2. Neither do we find any significant effects on various measures of personality and well-being in school, see Supplementary Fig. 3.

As for the cost-effectiveness of the different variants of the intervention, we calculated the costs per participant for each intervention arm. Costs consist of teacher salaries, training costs (including the costs of hiring substitute teachers), and coaching costs. In grade 2, 1-group is the cheapest intervention and 1-1 with coaching the most expensive, at more than three times the cost of 1-group. In grade 8, cost differences are smaller due to low compliance rates, especially for 1-group with coaching. Per-pupil costs ranged from $392 (1-group in grade 2) to $1273 (1-1 with coaching in grade 2). For more details on cost calculations, see the Methods section.

Figure 5 shows cost-standardized effect sizes, where costs are standardized with respect to the costs of the cheapest intervention, 1-group in grade 2. The figure demonstrates that 1-1 with coaching, although the most effective intervention before cost standardization, is much more expensive than interventions that treat 3–4 pupils in a group without the additional costs of coaching. Hence, the most cost-effective intervention is 1-group in grade 2. In grade 8, 1-group with coaching is still the only significantly effective intervention, but its cost-standardized effect size is now smaller than that of 1-group in grade 2, which was not the case before cost standardization.
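One natural reading of standardizing costs "with respect to the costs of the cheapest intervention" is to scale each arm's effect size by the ratio of the $392 reference cost to that arm's per-pupil cost, i.e., the effect one would buy for $392. The sketch assumes that reading and uses only the two per-pupil costs and LATEs reported in the text; the other arms' costs are not given here, and whether Fig. 5 uses the LATE or the ITT is not stated in this section.

```python
CHEAPEST_COST = 392  # USD per pupil, 1-group in grade 2 (from the text)


def cost_standardized(effect_size, cost_per_pupil, reference_cost=CHEAPEST_COST):
    """Effect size rescaled to the reference budget (ASSUMED definition)."""
    return effect_size * reference_cost / cost_per_pupil


# (LATE in SD, cost per pupil in USD), both as reported in the text.
arms = {
    "1-group, grade 2": (0.52, 392),
    "1-1 with coaching, grade 2": (0.80, 1273),
}
for name, (es, cost) in arms.items():
    print(f"{name}: {cost_standardized(es, cost):.2f} SD per ${CHEAPEST_COST}")
```

Under this definition the ranking flips: the 0.8 SD effect of 1-1 with coaching shrinks to about 0.25 SD per $392, below the 0.52 SD of 1-group.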

Discussion

We found significant effects of the intervention in the pooled samples within grade, and in some intervention arms within each grade. Overall LATE effect sizes are 0.47 SD and 0.3 SD in grades 2 and 8, respectively. The most effective intervention in each grade has an effect size of 0.8 SD in grade 2 and 0.57 SD in grade 8. According to a recent review of educational interventions based on 747 randomized trials and 1942 effect sizes40, an effect size should be considered large if it is 0.2 or above. Hence, by that categorization, the significant effect sizes reported in this study are large. In fact, according to the reported distribution of effect sizes in educational interventions, an effect size of 0.3 in math corresponds roughly to the 85th percentile, while an effect size of 0.47 is considerably above the 90th percentile40.
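The size categorization above can be written as a simple threshold rule. The 0.20 SD cutoff for "large" is the one stated in the text; the 0.05 SD cutoff separating "small" from "medium" is an assumption taken from Kraft (2020) and is not stated in this section.

```python
def kraft_size_label(effect_size):
    """Label an effect size (in SD units) by Kraft-style benchmarks.

    0.20 for 'large' is from the text; 0.05 for 'medium' is ASSUMED.
    """
    es = abs(effect_size)
    if es >= 0.20:
        return "large"
    if es >= 0.05:
        return "medium"
    return "small"


# Pooled LATEs and the grade 8 medium-term effect quoted in the text.
for es in [0.47, 0.30, 0.10]:
    print(f"{es:.2f} SD -> {kraft_size_label(es)}")
```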

Kraft (2020)40 also proposes a set of guidelines for interpreting effect sizes. We find these guidelines highly relevant and therefore frame the first part of the discussion of effect sizes around them.

First, studies designed to identify causal effects (rather than correlational associations) tend to find smaller effect sizes. The results reported in the present study come from randomized trials specifically designed to estimate causal effects, so by this criterion we would expect smaller effect sizes.

Second, effect sizes depend on what, when, and how outcomes are measured. In particular, one should expect larger effect sizes if outcomes are measured immediately after the end of the intervention, are easy to affect, are proximal to the intervention, and are measured with more precision (less measurement error). In the present study, the primary outcome is the post-test, which is taken on the last day of the intervention. It was developed by members of the research team but designed to test pupils broadly on the curriculum of the relevant grade. All in all, we would therefore expect this to produce larger effect sizes.

Third, the research design affects effect sizes. In particular, targeted interventions and small-scale efficacy trials tend to produce larger effect sizes. In our case, the intervention is targeted, but at a relatively large population of pupils, the 20% lowest achievers. It is not an efficacy trial, as it was designed specifically to be as close to an everyday implementation context as possible (as evidenced by the relatively low degree of compliance and implementation fidelity, see the Methods section). Hence, we would expect this to produce smaller effect sizes. Another point made here is that it matters how the outcome variable is standardized. For better comparability to other studies, we standardize with respect to a baseline outcome in the target population of pupils rather than in the full population of pupils. This tends to lead to larger effect sizes. Indeed, we show in the fourth row of Supplementary Table 1 that effect sizes are considerably smaller when standardized with respect to the full population of pupils in participating schools. Yet another point is that the type of effect matters. We have chosen to focus on the LATE (the treatment effect on compliers) for comparison to other studies, but we also report the somewhat smaller (yet still large by this categorization) ITT.
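Why the choice of denominator matters can be seen in a small simulation. It assumes normally distributed baseline scores and a single global cutoff at the 20th percentile (the study used school-specific thresholds), and the raw gain is a hypothetical number. Because the lowest-achieving 20% is a truncated, less dispersed group, dividing the same raw gain by its standard deviation roughly doubles the effect size.

```python
import random
from statistics import pstdev

random.seed(1)
# Hypothetical baseline scores, in full-population SD units.
population = sorted(random.gauss(0.0, 1.0) for _ in range(50_000))
target = population[: len(population) // 5]  # lowest-achieving 20%

raw_gain = 0.12  # HYPOTHETICAL treatment effect on the raw score scale
sd_full = pstdev(population)
sd_target = pstdev(target)

es_full = raw_gain / sd_full      # standardized on the full population
es_target = raw_gain / sd_target  # standardized on the target population
print(f"SD of target group relative to full population: {sd_target / sd_full:.2f}")
print(f"effect size, full-population SD:   {es_full:.2f}")
print(f"effect size, target-population SD: {es_target:.2f}")
```

The same mechanism is consistent with the pooled LATE of 0.47 SD in grade 2 falling to 0.26 SD when the outcome is standardized on all pupils in participating schools.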

Fourth, costs are obviously also important when interpreting effect sizes. In a schema proposed by Kraft40, again based on empirical studies, a per-participant cost below $500 is considered low, while costs from $500 to $4000 are considered medium. Notably, the most cost-effective intervention in our study (1-group in grade 2) is a low-cost intervention, while the overall most effective intervention (1-1 with coaching in grade 2) is at the lower end of the medium-cost range. Note also that our costs include training costs, some of which need not be incurred each time the intervention is repeated.
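The cost bands can likewise be expressed as a threshold rule and applied to the two per-pupil costs reported earlier. The low and medium bands are from the text; labeling costs above $4000 as "high", and treating exactly $4000 as medium, are assumptions.

```python
def kraft_cost_label(cost_per_pupil_usd):
    """Per-participant cost band: below $500 low, $500 to $4000 medium (from the text).

    The 'high' band above $4000 and the boundary handling are ASSUMED.
    """
    if cost_per_pupil_usd < 500:
        return "low"
    if cost_per_pupil_usd <= 4000:
        return "medium"
    return "high"


for arm, cost in [("1-group, grade 2", 392), ("1-1 with coaching, grade 2", 1273)]:
    print(f"{arm}: ${cost} -> {kraft_cost_label(cost)}")
```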

Fifth and finally, scalability is worth considering. It is well known that effect sizes tend to decrease when programs are implemented at scale. For this reason, we ran this intervention as an effectiveness study rather than an efficacy study. That is, we were not involved in trying to boost fidelity and implementation adherence in general, and we did not monitor the intervention closely during its implementation. Nevertheless, we would probably still expect effects to be smaller if the intervention were implemented more broadly, due to sample selection as well as other issues.

An additional point is that compliance was not perfect; some pupils in the treatment groups did not receive treatment, while some in the control groups did (see panel a in Fig. 3). The LATE estimator takes this into account: given an assumption of monotonicity (no one in the treatment group who decided not to participate in the treatment would have participated if assigned to the control group, and vice versa), the LATE estimator should reflect the effect of participating in the treatment for the compliers. However, the low implementation fidelity (only around 50% of the intended 48 sessions were actually held, see panel c in Fig. 3) implies that a better-implemented intervention could have had a larger effect. On the other hand, this presumably reflects the fact that, as already mentioned, the study was designed as an effectiveness trial rather than an efficacy trial. Hence, the reduction in effects when the intervention is scaled up may be smaller than one might otherwise have surmised.

Fade-out is another relevant issue when it comes to discussing effect sizes. We observe a tendency toward more fade-out in grade 8 than in grade 2. This might be a consequence of dynamic complementarity: early investments in skill formation make later investments and interventions more effective (learning begets learning27). However, the real test of this will have to await school-leaving exam results for the grade 2 pupils, which will be available for research in 2026. By then, we will be able to assess the impact of the intervention on school-leaving grades for those who participated in grades 2 and 8.

Another way to discuss effect sizes is to try to interpret them using different metrics or comparisons. Hill et al. (2008)41 propose three ways to interpret them: (1) in terms of years of additional learning, (2) in terms of policy-relevant performance gaps, and (3) by comparisons to similar interventions.

In terms of years of additional learning, the overall effect in grade 2 (0.47) corresponds to about half a year of additional math learning, while the overall effect in grade 8 (0.3) corresponds to more than a year of additional learning, because typical annual learning gains, measured in SD units, are much smaller in grade 8 than in grade 2. However, looking at the medium-term effects, the grade 2 intervention overall still corresponds to more than a third of a school year of additional learning, while the grade 8 intervention has no significant impact in the medium term.
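The conversion into school years follows the approach of Hill et al. (2008): divide the effect size by the typical annual gain, in SD units, on a comparable test in the relevant grade. The annual gains below are hypothetical placeholders chosen only to be roughly consistent with the conversions stated in the text; the benchmark values actually used in the paper are not given in this section.

```python
def years_of_learning(effect_size, annual_gain_sd):
    """Convert an effect size into school years of typical learning."""
    return effect_size / annual_gain_sd


# HYPOTHETICAL annual math gains in SD units, for illustration only.
ANNUAL_GAIN = {2: 0.9, 8: 0.25}

for label, grade, effect in [
    ("grade 2, immediate", 2, 0.47),
    ("grade 8, immediate", 8, 0.30),
    ("grade 2, medium term", 2, 0.32),
]:
    years = years_of_learning(effect, ANNUAL_GAIN[grade])
    print(f"{label}: {effect:.2f} SD = {years:.2f} school years")
```

The smaller denominator in grade 8 is what turns a numerically smaller effect size into more years of learning.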

Looking at policy-relevant performance gaps, the 20% low achievers had a pre-test gap of ~1.2 SD when standardized on the full population of second and eighth graders in the participating schools (see Supplementary Tables 2, 3). With the main outcome also standardized on the full population of second and eighth graders, the effect sizes in the fourth row of Supplementary Table 1 (0.26 SD and 0.17 SD, respectively) imply that the intervention closes about 21% of this gap for second graders and around 14% for eighth graders. In third- and sixth-grade national tests in mathematics, the achievement gap for children whose parents have no education beyond compulsory schooling is 0.4 SD, while that for children of parents of non-Danish origin is 0.4–0.5 SD9. Hence, the effect sizes obtained here would go quite a long way toward eliminating these gaps, especially in grade 2.
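The gap-closure shares above are the full-population effect sizes divided by the baseline gap. A minimal sketch with the figures from the text; since the 1.2 SD gap is itself approximate, the resulting shares are approximate too.

```python
PRE_TEST_GAP_SD = 1.2  # approximate pre-test gap of the 20% low achievers (from the text)


def share_of_gap_closed(effect_size_full_sd, gap_sd=PRE_TEST_GAP_SD):
    """Fraction of a baseline achievement gap closed by an effect of the given size."""
    return effect_size_full_sd / gap_sd


# Effect sizes standardized on the full population (fourth row of Supplementary Table 1).
for grade, es in [(2, 0.26), (8, 0.17)]:
    print(f"grade {grade}: {share_of_gap_closed(es):.1%} of the gap closed")
```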

In terms of comparisons to similar interventions or to interventions aimed at similar target groups, as already mentioned, the effect size for grade 2 is well above the 90th percentile of effect sizes40. It is also very high compared to the average effect size in a meta-analysis36, and it is at the high end of effect sizes for interventions targeting disadvantaged pupils or low achievers35. For the grade 8 intervention, the results are similar, albeit slightly less impressive in comparison. A meta-analysis of interventions conducted in Denmark by researchers associated with TrygFondens Centre for Child Research42 estimates average effect sizes of around 0.2 and 0.13 in grades 2 and 8, respectively. This comprises targeted as well as untargeted interventions aimed at different types of learning outcomes (mostly language).

In conclusion, the overall effect sizes obtained by the present interventions are impressive irrespective of the chosen metric or comparison. They are even more impressive considering the context in which they were obtained: the Scandinavian welfare state, with high-quality public schools and a strong focus on offering equal opportunities for all children in Denmark. Looking at the full set of analyses, we would therefore definitely recommend scaling up the intervention in grade 2 but would seriously consider scaling up only the 1-group variants, as the 1-1 variant is much more expensive. Since impacts tend to fade out in grade 8, we would be more reluctant to recommend scaling up the intervention for eighth graders. We would also recommend that any scale-up be rolled out with a design that enables further study of the intervention’s impacts. Finally, policymakers should seriously consider including this type of intervention in the toolbox for future pandemics, as its small-group nature makes it more robust to such situations, and it has the potential to prevent socioeconomic achievement gaps from widening during lockdowns.

Some limitations of the study are worth highlighting. First, some of the very large significant effects of specific intervention arms may reflect a winner's curse: an estimate has to be quite large to become significant, so estimates that pass the significance threshold tend to overstate the true effect. For this reason, we have focused mostly on the pooled effects of interventions within grade (as already mentioned, the study was also not designed to detect differences between intervention arms).

Second, one might worry about spillover effects on other subjects, especially as some of the sessions took place during other lessons (see panel b in Fig. 3). As mentioned, we have examined such spillovers for Danish language and did not find any (Supplementary Fig. 2), but that does not rule out negative spillovers on other subjects.

Third, there may be spillover effects on pupils in the control group or on pupils who are not considered low achievers. These could, in principle, be positive as well as negative. Positive spillovers could arise if teachers have more time to teach the remaining pupils in the classroom, or if treated pupils are better able to follow the topics covered when returning to the classroom. Negative spillovers could arise if teachers spend extra time preparing and teaching participants during intervention sessions at the expense of preparing and teaching regular classes. We have not been able to analyze this. In principle, a regression discontinuity design at the school-specific low-achiever threshold could identify the impact of being assigned to the control group relative to being just above the threshold (i.e., not being a low achiever). In practice, the standard errors of this analysis are so large that no conclusion would be possible, almost irrespective of the effect size. Spillover effects on pupils who are not low achievers could, in principle, be analyzed with difference-in-differences designs exploiting data on pupils from other schools. However, since the pre-test was administered only in participating schools, we have no way of identifying low achievers in non-participating schools.

Finally, while we expect the internal validity of our experiment to be excellent, some concerns remain about its external validity. To some extent, this relates to the discussion of scale-up above, but there is an additional concern. Schools were recruited through phone calls from the intervention implementation team at University College Copenhagen. Hence, the implementation team may have been selective in choosing which schools to approach, and the schools themselves may have self-selected into the intervention by agreeing to take part in the experiment.

Methods

Context

The intervention took place in Denmark, one of the Scandinavian countries with an extensive welfare state. Free public schools serve about 85% of pupils in compulsory school, and the rest attend highly subsidized private schools. Danish schools are considered to be of high quality in an international context, and Danish pupils do fairly well in international comparisons such as PISA.

Intervention

The intervention takes inspiration from Math Recovery43 (https://www.mathrecovery.org) and has a similar setup, but with some notable differences. The learning path in the intervention has an adaptive structure, and the intervention is broadly oriented towards all mathematical areas. In addition, pupils were assessed by the intervention teachers rather than by someone external to the learning context. Because of this kinship with an established program, we believe that the intervention has relevance beyond the Danish public school system.

The intervention was a small-group, structured teaching approach targeted at low achievers in mathematics. As shown in Fig. 1, three intervention variants were tested. (The fourth combination, 1-1 without coaching, was not tested alongside the other three variants because it had already been tested in an intervention in 2014, the results of which were never published.) All variants began with an investigative screening test and conversation. These mapped pupils' understanding of mathematics, their deficiencies (math holes), and their emotional perception of mathematics. The teacher decided how many questions were needed to map each pupil's understanding and deficiencies. This was the starting point for a teaching sequence (individual or group based) of 25–35 min sessions four times per week for 12 weeks. Teaching was dialog-based. The pupils' knowledge, abilities, and desire for learning, as revealed by the screening test, were the starting point for the sessions. The math teacher focused on the strengths of each pupil and worked actively to fill the pupils' math holes. The teacher also worked to improve their mathematical vocabulary and understanding, as well as their motivation and desire for learning, which were continually assessed. Teachers used learning material developed specifically for this type of intervention3,4. The math teacher used the screening test to determine the main mathematical areas and sub-areas to be addressed in the intervention. Each session was then based on mapping within the selected sub-area, followed by corresponding teaching to strengthen the pupil's weak areas. The interventions could be oriented towards any mathematical area and did not necessarily have as strong a focus on number learning as Math Recovery. From one session to the next, the teacher had to decide which sub-area the pupil should work on next. The learning path was therefore more adaptive than the more pre-established curriculum structure in Math Recovery.

Teachers participated in a four-day course preparing them to conduct the intervention. The school's mathematics coordinator participated in an additional three-day course on how to coach the teachers conducting the sessions. The coaching course was organized and led by instructors from the implementation team. Its theoretical foundation is systemic coaching, which structures coaching as a co-creative process between the coordinator and the teacher. Systemic coaching shifts the focus from an expert-dominated session to a co-creative session, with the implementation of the TMTM concept at its core. The co-creative process is defined as a mutually investigative process aimed at improving efforts. The course content included an introduction to, and exercises in, the use of structuring models for co-creative systemic coaching, including the use of video recordings of TMTM teaching. The mathematics coordinators committed to following the systemic and co-creative principles in their guidance of mathematics teachers. They coached the teachers three times during the 12-week mathematics initiative, based on the teachers' own video recordings of TMTM teaching and their own mathematics didactic issues. The implementation team coached the coordinator once during the intervention period, using the same theoretical foundation and methodology as the coordinator's coaching of the mathematics teachers.

Teachers were math teachers at the school, but they were supposed to conduct the intervention on top of their normal teaching load. Ideally, therefore, nothing would be taken away from either the control group or other pupils at the school. Schools were allowed to hire additional teachers to conduct the intervention as long as this was funded from their own budget. We have no information on the extent to which this happened.

As mentioned, three intervention variants were tested in each of grades 2 and 8.

1-group involved one teacher instructing 3–4 pupils. Teachers took part in the four-day preparation course but received no additional instruction or coaching.

1-group with coaching also involved one teacher instructing 3–4 pupils. Teachers took part in the four-day preparation course and, in addition, received coaching three times during the intervention period from the math-coordinating teacher at the school. The math coordinator participated in the three-day coaching course and in turn received coaching from one of the researchers in the implementation team.

1-1 with coaching involved one teacher and one pupil and was otherwise similar to 1-group with coaching.

The theory of change for the intervention is depicted graphically in Supplementary Fig. 4.

Data

Data were collected and merged from several sources. First, participating schools provided unique school identification codes for all pupils in grades 2 and 8. Approximately 3–4 weeks before the beginning of the intervention, all pupils in participating schools took a mathematics test, henceforth referred to as the pre-test (see below). This test allowed us to identify, within each school and grade, the 20% of pupils with the lowest scores, henceforth denoted the low achievers. These were the target groups of the intervention. Pupils with psychiatric diagnoses of ADHD or severe psychiatric disorders were excluded from the group. Pupils who did not want to participate, or whose parents did not want them to, were also exempted. In these cases, teachers were allowed to offer the intervention to a pupil assigned to the control group, which justifies the intention-to-treat evaluation approach outlined below.
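The selection of low achievers can be sketched as follows. This is a minimal illustration, not the authors' code. The function `flag_low_achievers`, the tuple layout, and the rounding and tie-breaking rules are assumptions, since the text does not say how the 20% cut-off was rounded or how ties were handled.

```python
from collections import defaultdict


def flag_low_achievers(pupils, share=0.20):
    """Return ids of pupils in the bottom `share` of the pre-test within
    each (school, grade) cell.

    pupils: iterable of (pupil_id, school_id, grade, pretest_score).
    Assumptions (not stated in the paper): the number flagged per cell is
    rounded to the nearest integer, with at least one pupil per cell, and
    ties at the cut-off are broken by pupil_id.
    """
    cells = defaultdict(list)
    for pupil_id, school_id, grade, score in pupils:
        cells[(school_id, grade)].append((score, pupil_id))

    flagged = set()
    for members in cells.values():
        members.sort()  # lowest pre-test scores first; ties ordered by pupil_id
        n_low = max(1, round(share * len(members)))
        flagged.update(pupil_id for _, pupil_id in members[:n_low])
    return flagged
```

Exclusions such as the diagnoses mentioned above would then be removed from the flagged set before randomization.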

The data were subsequently merged with data from administrative registers located on a server at Statistics Denmark, offering secure access for research purposes, including information from the national tests in mathematics and Danish language, school-leaving exam grades, and a large set of conditioning variables.

Immediately after the end of the intervention, all second and eighth graders in the participating schools took the same mathematics test as at the pre-test once again; this is denoted the post-test. The mathematics tests for grades 2 and 8 were developed specifically for use in this intervention, but they were designed to test broadly for proficiency in the curriculum of the respective grades. The test for grade 2 contained 60 questions (37 in numbers & algebra, 15 in geometry, and 8 in measurement). The test for grade 8 contained 68 questions (40 in numbers & algebra, 9 in geometry, 5 in measurement, 2 in trigonometry, and 12 in probability & statistics). Supplementary Fig. 5 shows the raw distributions of pre- and post-tests for all second and eighth graders in the participating schools. There is a weak tendency towards ceiling effects in the post-test for second graders. However, since the pupils participating in the intervention are ex ante low achievers, we do not expect this to bias the estimated effects.

Outcomes

The primary outcome is the post-test score. It was standardized using the mean and standard deviation of the pre-test among the low achievers, i.e., the treatment and control groups combined. This choice was made to enable comparison with most other studies, where standardization is typically made within the target population. To compare effect sizes to the more general population of pupils, we also conducted a robustness check. In this check, the standardization was based on the mean and standard deviation of the pre-test across all pupils in a grade in the participating schools. Secondary outcomes, for which we typically did not have access to pre-treatment values, were standardized using the mean and standard deviation in the pooled control group within a grade.
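A minimal sketch of the standardization follows, using a hypothetical helper `standardize`. Using the sample rather than the population standard deviation is an assumption. Because the post-test is scaled by the pre-test moments of the low achievers, a standardized post-test of zero means "at the low achievers' pre-test mean".

```python
from statistics import mean, stdev


def standardize(scores, reference):
    """Express `scores` in SD units of a reference distribution.

    Primary outcome: scores = post-test, reference = pre-test of all low
    achievers (treatment and control combined).
    Secondary outcomes: reference = the outcome in the pooled control group
    of the grade. Using the sample SD (n - 1 denominator) is an assumption.
    """
    mu = mean(reference)
    sd = stdev(reference)
    return [(x - mu) / sd for x in scores]
```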

The first secondary outcomes are medium-term math outcomes. Second graders are tested in the compulsory national tests in mathematics in the spring semester of grade 3, about 16–18 months after the end of the intervention. Eighth graders take their school-leaving exams at the end of the spring semester of grade 9, about 14 months after the end of the intervention. For them, we use the average of all math tests at the school-leaving exam, oral and written (problem solving and calculation). Other secondary outcomes for second graders were the national test in Danish in the spring term of grade 2 and personality and school well-being measures based on a well-being survey conducted in January–March each year. However, the survey questions change between grades 3 and 4, and the different well-being constructs are only available from grade 4 onwards. Hence, for second graders, we use well-being constructs measured more than 2 years after the end of the intervention. For eighth graders, secondary outcomes were the GPA obtained at the school-leaving exam in Danish language, as well as personality and school well-being measures based on the well-being survey conducted in the spring term of grade 9.

Conditioning variables

We have access to a large set of conditioning variables: parental employment status and education; child age, gender, and ethnicity; and whether any social intervention had been applied (out-of-home placement or in-home intervention). Most importantly, we also have the pre-test.

Evaluation design

The evaluation and its design were preregistered at www.SocialScienceRegistry.org with protocol no. AEARCTR-0002361. (The experiment also included a randomized study of two of these interventions, 1-group and 1-group with coaching, in grade 2 aimed at high achievers in mathematics. The results from this part of the trial will be published in a separate study.) No ethical approval was required: in Denmark, from a legal perspective, only biomedical research has to be notified to the ethics committee of the respective region (https://www.rm.dk/sundhed/faginfo/forskning/de-videnskabsetiske-komiteer/). Therefore, when the study was initiated, no IRB existed at Aarhus University. The researchers sought advice from the ethics group of TrygFonden's Centre for Child Research (https://childresearch.au.dk/om-os/etik/). We followed their guidelines, which are based on the Danish Code of Conduct for Research Integrity and on the respective international declarations, such as the Singapore and Montreal declarations.

The evaluation was designed as two two-stage stratified randomized trials, one for each grade, because not all invited schools wanted to participate in both grades. First, schools were randomly assigned to one of the three treatment arms, stratifying schools by the number of pupils in the relevant grade. Second, the low achievers were randomly assigned to treatment or control groups in a 2:1 ratio, with this randomization stratified within schools. The randomization design is depicted graphically in Supplementary Fig. 6. The sequence of events was as follows:

1. Schools were recruited to participate in the interventions through phone calls from the implementation team at University College Copenhagen.
2. For each grade, schools were randomized into one of the three intervention arms.
3. All children in grades 2 and 8 in participating schools took a baseline math test (the pre-test). Based on this test, the 20% lowest achievers within each school were identified.
4. Within school and grade, the low achievers were randomized into the treatment or control group.
5. The intervention was conducted.
6. All children in grades 2 and 8 in participating schools were tested in math again (the post-test).
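Steps 2–4 can be sketched as a two-stage procedure run separately for each grade. The function names, the seeded `random.Random` generator, and the specific stratification scheme are assumptions. The sketch sorts schools by size and shuffles arm labels within blocks of three, but the paper states only that schools were stratified by the number of pupils in the grade and that pupils were assigned 2:1 within school.

```python
import random

ARMS = ("1-group", "1-group with coaching", "1-1 with coaching")


def assign_arms(pupils_in_grade, rng):
    """Stage 1 (one grade): assign schools to arms, stratified by size.

    pupils_in_grade: dict school_id -> number of pupils in the grade.
    Assumption: schools are sorted by size and arm labels are shuffled
    within consecutive blocks of three schools.
    """
    ordered = sorted(pupils_in_grade, key=pupils_in_grade.get)
    arm_of = {}
    for start in range(0, len(ordered), len(ARMS)):
        block = ordered[start:start + len(ARMS)]
        labels = list(ARMS)
        rng.shuffle(labels)
        arm_of.update(zip(block, labels))  # a short final block gets a random subset
    return arm_of


def assign_pupils(low_achievers, rng):
    """Stage 2: within each school, assign low achievers 2:1 to treatment.

    low_achievers: dict school_id -> list of pupil ids.
    Returns dict pupil_id -> Z (1 = treatment, 0 = control).
    """
    z = {}
    for school_id, pupil_ids in low_achievers.items():
        shuffled = list(pupil_ids)
        rng.shuffle(shuffled)
        n_treat = (2 * len(shuffled) + 1) // 3  # round(2n/3) in integer arithmetic
        for k, pupil_id in enumerate(shuffled):
            z[pupil_id] = 1 if k < n_treat else 0
    return z
```

Seeding the generator (e.g., `random.Random(2017)`) makes the assignment reproducible and auditable, which is the usual reason for fixing it in advance.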

We aimed for a minimum detectable effect size of 0.25 for two reasons. First, the costs of the intervention would require an effect of a certain size to justify using it. Second, we decided to aim for a fairly large effect size, at or above the 75th percentile39. The power calculation assumed 80% power, a 5% significance level, and that the pre-test explains about 50% of the variation in the post-test. Under these assumptions, detecting a minimum effect size of 0.25 would require 255 pupils in the combined treatment and control group within each treatment arm. As shown in Supplementary Fig. 6, we ended up with about 200 pupils per treatment arm in grade 2 and 140–200 in grade 8. This shortfall was partly due to recruitment problems and partly because some schools signed up to participate and subsequently withdrew consent. This enables us to detect effect sizes of about 0.28 for second graders and about 0.33 for the smallest treatment arms among eighth graders. (The minimum detectable effect size falls as the sample size grows, roughly in proportion to one over its square root, so statistical uncertainty increases as the sample size decreases.)
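The link between sample size and minimum detectable effect size (MDES) can be checked with the textbook formula for an individually randomized trial with one covariate, sketched below. The paper does not state its exact formula, so this is an assumption. With equal allocation (`p_treat = 0.5`), R² = 0.5, a two-sided 5% test, and 80% power, the formula reproduces the reported 0.28 for 200 pupils and 0.33 for 140. It also gives about 252 pupils for an MDES of 0.25, close to the reported 255. The sketch ignores degrees-of-freedom corrections for the school fixed effects. Setting `p_treat = 2/3` to mirror the 2:1 allocation gives somewhat larger values.

```python
from math import ceil, sqrt
from statistics import NormalDist


def _z_sum(alpha, power):
    # Two-sided critical value plus the normal quantile for the desired power.
    nd = NormalDist()
    return nd.inv_cdf(1 - alpha / 2) + nd.inv_cdf(power)


def mdes(n, r2=0.5, alpha=0.05, power=0.80, p_treat=0.5):
    """MDES in SD units for n pupils in one arm (treatment and control
    combined); r2 is the share of post-test variance explained by the pre-test."""
    return _z_sum(alpha, power) * sqrt((1 - r2) / (p_treat * (1 - p_treat) * n))


def required_n(target, r2=0.5, alpha=0.05, power=0.80, p_treat=0.5):
    """Smallest n whose MDES is at most `target`."""
    return ceil(_z_sum(alpha, power) ** 2 * (1 - r2)
                / (p_treat * (1 - p_treat) * target ** 2))


print(round(mdes(200), 2), round(mdes(140), 2), required_n(0.25))  # 0.28 0.33 252
```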

Implementation and compliance

As mentioned above, compliance with the experimental assignment was not perfect. Panel a of Fig. 3 details the degree of compliance for each intervention arm. In grade 2, 80–89% of the treatment group actually participated in the intervention, while 2–9% of the control group received treatment. This leads to differences in treatment rates between treatment and control groups of 77–80 percentage points. In grade 8, the share treated ranges from 60 to 91% in the treatment group and from 3 to 21% in the control group. Differences in treatment rates in grade 8 range from 57 to 70 percentage points.

The intervention sessions took place in the early fall semester of 2017 for grade 2 pupils and in the early spring semester of 2018 for grade 8 pupils. Sessions could take place at any time during, or even outside, normal school hours, so pupils in the treatment group could miss some other lessons. In practice, slightly more than half of the sessions took place during what would otherwise have been ordinary math lessons (see Panel b in Fig. 3). Most of the remaining sessions took place during other lessons, which justifies looking for negative spillover effects on outcomes such as Danish language.

Finally, as mentioned, the intended number of sessions was 48 (four per week for 12 weeks). Teachers kept logs of sessions held and pupil attendance, so we have information on pupils' participation in sessions and on canceled sessions. Particularly in grade 8, there were many cancellations due to mock exams, excursions, school holidays, etc. Panel c in Fig. 3 shows the average number of sessions attended by grade and intervention arm. In grade 2, 26–30 sessions were held on average, slightly more than half of those intended. In grade 8, the average number of sessions was 20–23, i.e., slightly less than half of the intended number.

Descriptive statistics

Supplementary Tables 2 and 3 show descriptive statistics for all intervention arms and grades, and pooled across intervention arms within grade, as well as tests for equality of means across treatment and control groups. P values for equality of means were obtained from a regression of the variable in question on a treatment-assignment dummy and school (stratification) fixed effects. There are no more significant differences than would be expected by chance, and notably, there are none in the samples pooled across intervention arms within grade. Low achievers are around 1.2 SD behind the average pupil in their grade on the mathematics pre-test. Roughly 41–45% are boys, and 18–22% are immigrants or descendants of immigrants. Around two-thirds of parents have a qualifying education (vocational or higher), and ~70% of mothers and ~77% of fathers work.

Statistical analysis

Let \(Y_{ij1}\) denote the outcome of interest of pupil \(i\) in school \(j\) (for the main outcome, the post-test result), and let \(Y_{ij0}\) denote the pre-test result. \(\boldsymbol{IA}_j\) is a 3 × 1 vector indicating the intervention arm to which school \(j\) is assigned. \(Z_i\) measures the treatment assignment of pupil \(i\) (1 for the treatment group, 0 for the control group). \(\boldsymbol{X}_i\) is a vector of background characteristics of pupil \(i\), including characteristics of the pupil's parents. The intention-to-treat (ITT) effects of the three intervention arms, \(\boldsymbol{\Delta}_{ITT}\) (1 × 3), are estimated using the regression in Eq. (1).
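From the definitions above, Eq. (1) plausibly takes the following form. The coefficient \(\beta\) on the pre-test and the coefficient vector \(\boldsymbol{\gamma}\) on \(\boldsymbol{X}_i\) are introduced here for illustration, and the exact specification is an assumption:

\[
Y_{ij1} = \boldsymbol{\alpha}_j + \boldsymbol{\Delta}_{ITT}\,\boldsymbol{IA}_j\,Z_i + \beta\,Y_{ij0} + \boldsymbol{X}_i\boldsymbol{\gamma} + \varepsilon_{ij}
\]

Since \(\boldsymbol{IA}_j\) is constant within a school, its main effect is absorbed by the school fixed effects, so only its interaction with \(Z_i\) appears.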

Here, \(\boldsymbol{\alpha}_j\) are school (stratification) fixed effects, and \(\varepsilon_{ij}\) is an idiosyncratic error term. Treatment effects are estimated using OLS. The pooled ITT effect within grades is estimated in a similar fashion, but without interacting treatment assignment with \(\boldsymbol{IA}_j\).
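A minimal sketch of this OLS estimation with NumPy follows. It assumes a specification linear in the pre-test and covariates; the function name `itt_by_arm` and its argument layout are hypothetical. School fixed effects enter as a full set of school dummies in place of a global intercept. Only point estimates are returned, and inference (e.g., robust standard errors) is omitted.

```python
import numpy as np


def itt_by_arm(y_post, y_pre, z, school, arm, X=None):
    """Point estimates of arm-specific ITT effects by OLS.

    Regresses the post-test on Z_i interacted with arm dummies, the pre-test,
    optional covariates X (n x k), and a full set of school dummies (school
    fixed effects, which replace the intercept and absorb the arm main effect).
    Passing the same `arm` label for every pupil yields the pooled ITT effect.
    Returns {arm label: ITT estimate}.
    """
    y_post = np.asarray(y_post, dtype=float)
    z = np.asarray(z, dtype=float)
    school = np.asarray(school)
    arm = np.asarray(arm)
    arms = sorted(set(arm.tolist()))

    columns = [z * (arm == a) for a in arms]        # arm-specific treatment terms
    columns.append(np.asarray(y_pre, dtype=float))  # pre-test control
    if X is not None:
        X = np.asarray(X, dtype=float).reshape(len(y_post), -1)
        columns.extend(X.T)                         # one column per covariate
    columns.extend((school == s).astype(float) for s in sorted(set(school.tolist())))

    design = np.column_stack(columns)
    coef, *_ = np.linalg.lstsq(design, y_post, rcond=None)
    return {a: float(c) for a, c in zip(arms, coef[:len(arms)])}
```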

As already mentioned, compliance was imperfect: differences in treatment rates between the treatment and control groups were between 57 and 80 percentage points. It would therefore be interesting to estimate the treatment effect on those actually treated. However, given that there is non-compliance in both the treatment and the control groups, we instead estimate the local average treatment effect (LATE), i.e., the treatment effect for those complying with the experimental protocol. Let \(D_i\) denote whether pupil \(i\) actually participated in the program. The LATE is then defined as in Eq. (2).
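In its standard unconditional (Wald) form, which matches the description of the denominator below, Eq. (2) can be written as follows. The symbol \(\Delta_{LATE}\) is introduced here for illustration, and the paper's estimates may additionally condition on covariates and school fixed effects:

\[
\Delta_{LATE} = \frac{E[Y_{ij1} \mid Z_i = 1] - E[Y_{ij1} \mid Z_i = 0]}{E[D_i \mid Z_i = 1] - E[D_i \mid Z_i = 0]}
\]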

The LATE is estimated by its empirical counterpart. The denominator is simply the difference in treatment rates between the treatment and control groups. If there were no treated pupils in the control group, the LATE would also equal the treatment effect on the treated.
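The empirical counterpart of the LATE without covariates is the Wald estimator, sketched below with a hypothetical function `wald_late`. The paper's estimates may also condition on the pre-test, background characteristics, and school fixed effects (e.g., via two-stage least squares), which this sketch omits.

```python
from statistics import mean


def wald_late(y, z, d):
    """Wald estimator of the LATE.

    y: outcomes, z: random assignment (1/0), d: actual participation (1/0).
    Numerator: ITT effect on the outcome.
    Denominator: difference in treatment rates.
    """
    treat = [i for i, zi in enumerate(z) if zi == 1]
    control = [i for i, zi in enumerate(z) if zi == 0]
    itt = mean(y[i] for i in treat) - mean(y[i] for i in control)
    take_up_gap = mean(d[i] for i in treat) - mean(d[i] for i in control)
    if take_up_gap == 0:
        raise ValueError("assignment does not shift participation; LATE undefined")
    return itt / take_up_gap
```

For instance, an ITT effect of 0.40 SD combined with an 80-percentage-point difference in treatment rates would imply a LATE of 0.50 SD. These are illustrative numbers, not results from this study.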

In a robustness check, we also estimate the treatment effect in a change-score specification; that is, we estimate the parameters of Eq. (3) using OLS.
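A change-score specification that mirrors Eq. (1) would plausibly read as follows. This is Eq. (1) with the coefficient on the pre-test fixed at one, and this form, including the covariate term \(\boldsymbol{X}_i\boldsymbol{\gamma}\), is an assumption:

\[
Y_{ij1} - Y_{ij0} = \boldsymbol{\alpha}_j + \boldsymbol{\Delta}_{ITT}\,\boldsymbol{IA}_j\,Z_i + \boldsymbol{X}_i\boldsymbol{\gamma} + \varepsilon_{ij}
\]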

Cost calculations

To compare fairly across intervention arms and grades, effect sizes should be compared in relation to the costs of the intervention. In the present case, we could approximate the costs of the intervention, as we know the number of pupils, teachers, and supervisors involved as well as the activities in which they took part. The costs consisted of training costs for teachers and local coaches, teachers' salaries while conducting the intervention, and the costs of coaching during the intervention. The costs per pupil are shown in Supplementary Table 4. Costs are reported in US$ using an exchange rate of 6.42 DKK/US$. Cost differences are mainly driven by four factors: whether coaching is involved, whether the intervention is 1-1 or 1-group, the number of teachers involved (training costs), and the number of sessions actually held.
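The arithmetic behind such a comparison can be sketched as below. The cost components and the exchange rate follow the text. The helper names, the "effect size per US$1,000 spent per pupil" metric, and all input values are illustrative assumptions; the actual per-pupil costs are in Supplementary Table 4.

```python
DKK_PER_USD = 6.42  # exchange rate stated in the text


def cost_per_pupil_usd(training_dkk, salaries_dkk, coaching_dkk, n_pupils):
    """Per-pupil cost in US$ from the three cost components named in the text."""
    total_dkk = training_dkk + salaries_dkk + coaching_dkk
    return total_dkk / n_pupils / DKK_PER_USD


def effect_per_1000_usd(effect_size_sd, cost_usd):
    """Effect size (SD units) per US$1,000 spent per pupil (assumed metric)."""
    return effect_size_sd / (cost_usd / 1000)
```

For example, hypothetical costs totalling DKK 642,000 for 100 pupils give US$1,000 per pupil. An effect of 0.5 SD at that cost then corresponds to 0.5 SD per US$1,000.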

Data availability

The data that support the findings of this study are available from Statistics Denmark, but restrictions apply to the availability of these data, which were used under license for the current study and so are not publicly available. The data are, however, available from the authors upon reasonable request and with the permission of Statistics Denmark.

Code availability

The underlying code for this study is not publicly available but may be made available to qualified researchers on reasonable request from the corresponding author.

References

Cortes, K., Goodman, J. & Nomi, T. Intensive math instruction and educational attainment: long-run impacts of double-dose algebra. J. Hum. Resour. 50, 108–158 (2015).

Lindqvist, E. & Vestman, R. The labor market returns to cognitive and noncognitive ability: evidence from the Swedish enlistment. Am. Econ. J. Appl. Econ. 3, 101–128 (2011).

Lindenskov, L. & Weng, P. Matematikvanskeligheder, Tidlig Intervention (in Danish, translates into: Mathematics Difficulties, Early Intervention) (Dansk Psykologisk Forlag, 2013).

Lindenskov, L., Tonnesen, P. & Weng, P. Matematikvanskeligheder På De Ældste Trin, Kortlægning Og Undervisning (in Danish, translates into: Mathematics difficulties in the lower secondary grades, mapping and teaching) (Dansk Psykologisk Forlag, 2016).

OECD. PISA 2018 Results (Volume I): What Students Know And Can Do, PISA (OECD Publishing, 2019).

Duncan, G. J. et al. School readiness and later achievement. Dev. Psychol. 43, 1428–1446 (2007).

Lubinski, D., Benbow, C. P. & Kell, H. J. Life paths and accomplishments of mathematically precocious males and females four decades later. Psychol. Sci. 25, 2217–2232 (2014).

Sadler, P. M. & Tai, R. H. The two high-school pillars supporting college science. Science 317, 457–458 (2007).

Nandrup, A. B. & Beuchert, L. V. The Danish national tests at a glance. Nationaløkonomisk Tidsskrift https://www.xn--nt-lka.dk/files/2018/article/2018_1_2.pdf (2018).

Hanushek, E. A. & Woessmann, L. The role of cognitive skills in economic development. J. Econ. Lit. 46, 607–668 (2008).

Hanushek, E. A. & Woessmann, L. Do better schools lead to more growth? Cognitive skills, Economic outcomes, and causation. J. Econ. Growth 17, 267–321 (2012).

OECD. The High Cost of Low Educational Performance: The Long-Run Economic Impact Of Improving Educational Outcomes (OECD Publishing, 2010).

Landersø, R. & Heckman, J. J. The Scandinavian fantasy: the sources of intergenerational mobility in Denmark and the US. Scand. J. Econ. 119, 178–230 (2016).

Heckman, J. J. & Landersø, R. Lessons from Denmark about inequality and social mobility. IZA Discussion Paper 14185 (2021).

Engzell, P., Frey, A. & Verhagen, M. D. Learning loss due to school closures during the COVID-19 pandemic. Proc. Natl Acad. Sci. USA 118, e2022376118 (2021).

Engström, A. Specialpedagogik för 2000-talet (in Swedish, translates into: Special pedagogy for the 2000s). Nämnaren 1, 26–31 (2000).

Barbaresi, W. J., Katusic, S. K., Colligan, R. C., Weaver, A. L. & Jacobsen, S. J. Math learning disorder: incidence in a population-based birth cohort, 1976–82, Rochester, Minn. Ambul. Pediatr. 5, 281–289 (2005).

Monei, T. & Pedro, A. A systematic review of interventions for children presenting with dyscalculia in primary schools. Educ. Psychol. Pract. 33, 277–293 (2017).

Cowan, R. & Powell, D. The contributions of domain-general and numerical factors to third-grade arithmetic skills and mathematical learning disability. J. Educ. Psychol. 106, 214–229 (2014).

Butterworth, B., Varma, S. & Laurillard, D. Dyscalculia: from brain to education. Science 332, 1049–1053 (2011).

Lee, J. J. et al. Gene discovery and polygenic prediction from a genome-wide association study of educational attainment in 1.1 million individuals. Nat. Genet. 50, 1112–1121 (2018).

Carey, E. et al. Understanding Mathematics Anxiety: Investigating the Experiences of UK Primary and Secondary School Students (Centre for Neuroscience in Education, University of Cambridge, 2019).

Ma, X. A meta-analysis of the relationship between anxiety towards mathematics and achievement in mathematics. J. Res. Math. Educ. 30, 520–540 (1999).

Devine, A., Hill, F., Carey, E. & Szűcs, D. Cognitive and emotional math problems largely dissociate: prevalence of developmental dyscalculia and mathematics anxiety. J. Educ. Psychol. 110, 431–444 (2018).

Barroso, C. et al. A meta-analysis of the relation between math anxiety and math achievement. Psychol. Bull. 147, 134–168 (2021).

OECD. PISA 2012 Results: Ready to Learn: Students’ Engagement, Drive and Self-Beliefs Vol. III (OECD Publishing, 2013).

Heckman, J. J. Skill formation and the economics of investing in disadvantaged children. Science 312, 1900–1902 (2006).

Heckman, J. J. & Mosso, S. The economics of human development and social mobility. Ann. Rev. Econ. 6, 689–733 (2014).

Pomerantz, E. M., Moorman, E. A. & Litwack, S. D. The how, whom, and why of parents’ involvement in children’s academic lives: more is not always better. Rev. Educ. Res. 77, 373–410 (2007).

Castro, M. et al. Parental involvement on student academic achievement: a meta-analysis. Educ. Res. Rev. 14, 33–46 (2015).

Kalil, A. in Families in an Era of Increasing Inequality: Diverging Destinies (eds Amato, P. R., Booth, A., McHale, S. M. & van Hook, J.) (Springer, 2015).

Lambert, R. Constructing and resisting disability in mathematics classrooms: a case study exploring the impact of different pedagogies. Educ. Stud. Math. 89, 1–18 (2015).

Hanushek, E. A. & Rivkin, S. G. in Handbook of the Economics of Education (eds Hanushek, E. A. & Welch, F.) (Elsevier, 2006).

Hanushek, E. A. The economic value of higher teacher quality. Econ. Educ. Rev. 30, 466–479 (2011).

Dietrichson, J. et al. Targeted school-based interventions for improving reading and mathematics for students with or at risk of academic difficulties in grade K to 6: a systematic review. Campbell Syst. Rev. 17, e1152 (2020).

Pellegrini, M., Lake, C., Neitzel, A. & Slavin, R. E. Effective programs in elementary mathematics: a meta-analysis. AERA Open 7, 1–29 (2021).

Dietrichson, J. et al. Targeted school-based interventions for improving reading and mathematics for students with or at-risk of academic difficulties in grade 7 to 12: a systematic review. Campbell Syst. Rev. 16, e1081 (2020).

Slavin, R. E., Lake, C. & Groff, C. Effective programmes in middle and high school mathematics: a best-evidence synthesis. Rev. Educ. Res. 79, 839–911 (2009).

Kraft, M. A., Blazar, D. & Hogan, D. The effect of teacher coaching on instruction and achievement: a meta-analysis of the causal evidence. Rev. Educ. Res. 88, 547–588 (2018).

Kraft, M. A. Interpreting effect sizes of education interventions. Educ. Res. 49, 241–253 (2020).

Hill, C. J., Bloom, H. S., Black, A. R. & Lipsey, M. W. Empirical benchmarks for interpreting effect sizes in research. Child Dev. Perspect. 2, 172–177 (2008).

Rosholm, M. et al. Are impacts of early interventions in the Scandinavian welfare state consistent with a Heckman curve? A meta-analysis. J. Econ. Surv. 35, 106–140 (2021).

Smith, T. M., Cobb, P., Farran, D. C., Cordray, D. S. & Munter, C. Evaluating Math Recovery: assessing the causal impact of a diagnostic tutoring program on student achievement. Am. Educ. Res. J. 50, 397–428 (2013).

Acknowledgements

M.R. acknowledges financial support from TrygFonden, grant no. 122318. K.R., S.O., J.V.F., P.B.T., S.G.M., and J.H. acknowledge financial support from AP Møller Fonden, grant no. 16-07-0030. Comments and suggestions from seminar participants at TrygFonden’s Centre for Child Research, the Department of Economics at the University of Alicante, and the workshop on Field Experiments held at IAB in Nuremberg, Germany, on November 21-22, 2019, are gratefully acknowledged. We are deeply grateful to schools, teachers, and pupils, who participated in the study.

Author information


Contributions

M.R., S.O., and P.B.T. designed the research; M.R. performed the quantitative research, analyzed the data, and wrote the first draft of the paper; J.V.F. and S.O. developed the math tests; and all authors wrote, read, and approved the paper.


Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

No ethical approval was required or obtained; in Denmark, from a legal perspective, only biomedical research has to be notified to the ethics committee of the respective region (https://www.rm.dk/sundhed/faginfo/forskning/de-videnskabsetiske-komiteer/). Therefore, when the study was initiated, no IRB existed at Aarhus University. The researchers sought advice from the ethics group of TrygFonden’s Centre for Child Research (https://childresearch.au.dk/om-os/etik/). We followed their guidelines, which are based on the Danish Code of Conduct for Research Integrity and on the respective international declarations, such as the Singapore and Montreal declarations.

Informed consent

Informed consent was not required, as changes in teaching methods were at the discretion of the school management. However, all parents received a letter explaining that some pupils would take part in testing a new method of teaching mathematics. The letter also explained that parents who did not want their child to take part in the study should inform the school that they wished to opt out.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Rosholm, M., Tonnesen, P.B., Rasmussen, K. et al. A tailored small group instruction intervention in mathematics benefits low achievers. npj Sci. Learn. 10, 18 (2025). https://doi.org/10.1038/s41539-025-00310-9
