Abstract

Protein language models embed protein sequences for different tasks. However, these are suboptimal at learning the language of protein interactions. We developed an interaction language model (iLM), Sliding Window Interaction Grammar (SWING) that leverages differences in amino-acid properties to generate an interaction vocabulary. SWING successfully predicted both class I and class II peptide–major histocompatibility complex interactions. Furthermore, the class I SWING model could uniquely cross-predict class II interactions, a complex prediction task not attempted by existing methods. Using human class I and II data, SWING accurately predicted murine class II peptide–major histocompatibility interactions involving risk alleles in systemic lupus erythematosus and type 1 diabetes. SWING accurately predicted how variants can disrupt specific protein–protein interactions, based on sequence information alone. SWING outperformed passive uses of protein language model embeddings, demonstrating the value of the unique iLM architecture. Overall, SWING is a generalizable zero-shot iLM that learns the language of protein–protein interactions.

Similar content being viewed by others

Main

Deep learning has revolutionized prediction across contexts1 and generative models have been applied in genomics and proteomics2,3. Protein language models (pLMs) have been used for engineering synthetic protein sequences that function like natural proteins4,5 and in niche contexts of predicting antibody evolution to better understand the immune repertoire6. By extracting information from sequences and/or structures, pLMs have functionally enriched the human proteome in many contexts. Recently, AlphaFold solved the problem of protein structure prediction from multiple sequence alignments7. ESMFold8 used a large language model (LLM) to leverage protein sequences and predict structures. However, pLMs do not easily capture interactions between proteins. This is a limitation of pLMs as proteins perform functions via interactions with other proteins.

pLMs embed sequences of the interactors separately and predict protein–protein interactions (PPIs) from combined embeddings9,10. Alternatively, they concatenate the sequences and embed the product11,12. The individual protein embeddings are aggregated over the contribution of all the amino acids (AA) per position13, without taking into account inherent context specificity or the residue contact points critical for interaction. Fundamentally, residue contact points in an interaction are governed by evolutionary principles and are constrained14,15,16. Sequence length also presents a major obstacle in scaling pLMs to all protein classes. Thus, pLMs do not perform optimally at learning the language of protein interactions.

We present Sliding Window Interaction Grammar (SWING), a length-agonistic interaction language model (iLM) that captures the language of protein–protein or protein–peptide interactions. We applied SWING to multiple tasks, where it outperformed state-of-the-art methods across domains. First, we sought to predict peptide–major histocompatibility complex (MHC) binding. This is important as MHC molecules play a key role in adaptive immunity by presenting a wide array of peptides for T cell receptor recognition. MHC molecules have promiscuous binding interfaces, enabling the presentation of diverse peptides, across MHC alleles. The high number of possible peptide–MHC (pMHC) combinations makes it infeasible to experimentally corroborate them. While computational tools exist to predict pMHC interactions, they rely on allele-specific experimental datasets and do not predict previously unseen alleles well. We hypothesized that an iLM would not be constrained by context specificity and would be generalizable across the sequence diversity of MHC. SWING was adapted for another context to predict the disruptions of PPIs by missense variants. Most variant effect predictors predict pathogenicity, but are not good at predicting disruptions of specific interactions. Predicting which missense mutations can lead to the disruption of specific protein interactions provides a fundamental genotype-to-phenotype link.

Additionally, we have extended the use of deep learning-based models to contexts where the full-length protein sequence is unavailable or unnecessary. Overall, SWING is a biologically inspired, generalizable iLM that can be applied across contexts to learn the language of peptide and protein interactions.

Results

Overview of the SWING framework

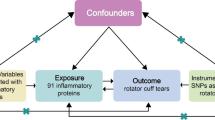

pLMs learn meaningful representations for a multitude of tasks by predicting sections of sequence that are masked from their surrounding context8,17. The models produce residue-level embeddings, which are aggregated to generate protein representations. While these models are extremely versatile at capturing individual sequence and/or structural information, they are suboptimal at capturing interactions. The aggregation of per-residue embeddings to generate protein embeddings does not capture the well-characterized functional importance of interacting residues between two interacting proteins16. We developed SWING, an iLM that preemptively (preembedding) encodes pairwise residue information to generate a language-like representation (Fig. 1a). The language encodes biochemical information and is useful for generating interaction embeddings without aggregation of sequences or embeddings. It does not rely on pLM representations for individual proteins in the interaction nor on modifying existing pLMs for interaction-specific tasks.

a, Schematic highlighting the difference between existing pLMs and the novelty of iLMs. b, Conceptual overview of the SWING vocabulary generation step. c, Abstract overview of the embedding and classification functions of SWING. Overview of distributed memory and distributed bag of words Doc2Vec architectures. In the Doc2Vec models; V is the vocabulary of the interaction language; W is the k-mers embedding matrix; C is the total number of interactions; N is the dimension of the embeddings; W1 is the output layer weight matrix. d, Key conceptual innovations of SWING. Panels a–d created using BioRender.com. AF, AlphaFold.

In protein interactions, highly conserved sub-sequences within proteins facilitate binding between interactors14,16. Additionally, local information distributed within a few sub-sequences dictates protein function18,19. We hypothesized that a local region in the protein, prioritized using the context, should capture a meaningful representation important to an interaction. We call this sub-sequence the sliding window. The sliding window, an n-length peptide, is completely matched with n positions on the target partner sequence, starting from the first position. At each position, a biochemical difference is calculated between the AA pair. This absolute difference after rounding ordinally encodes the AA pair. It is known that biochemical differences between AAs are structurally relevant20. Next, the sliding window is shifted by one position and the steps repeated until the sequence ends (Fig. 1b). We divide the result into overlapping k-sized sub-sequences (k-mers), where each can be thought as a ‘word’ and each interaction as a ‘document’ composed of these words. This matches the input expectation of the embedder model. The iLM samples all possible biochemically informative interprotein residue pairs to capture interaction information to perform prediction tasks.

A Doc2Vec21 model is used to infer the interaction embeddings (Supplementary Note). The embeddings are used as features for downstream supervised learners, for example, XGBoost (Fig. 1c). We demonstrate that Doc2Vec generates meaningful embeddings for the interaction vocabulary, which can then be meaningfully used in a range of downstream prediction tasks. First, we predicted peptide interactions across multiple class I and class II alleles of the MHC. SWING displayed zero-shot and transfer learning capabilities in predicting across or between functionally distinct pMHC interactions. Further, SWING predicted PPI perturbation by missense mutations. Overall, SWING is highly generalizable because biochemical motifs of interacting residues are embedded to capture their semantic features. We demonstrate that SWING can perform a range of interaction tasks, outperforming corresponding state-of-the-art methods (Fig. 1d).

SWING learns the language of pMHC interactions

CD8 and CD4 T cell responses are driven by the recognition of MHC-I and MHC-II bound peptides. pMHC interactions are key for antigen processing and presentation22. Experimental characterization for all pMHC interactions (MHC-I ~107 and MHC-II ~1020)23 is infeasible given the diversity of MHC alleles and their variable binding surfaces. Thus, many methods have been developed to predict pMHC interactions. These include methods that predict pMHC binding from enriched motifs in the peptide sequence or contact points between peptide and MHC24. The MHC sequence modulates the properties of the binding peptides25 leading to methods focusing on learning from experimentally verified allele-specific pMHC properties resulting in poor generalizability to unseen alleles with sparse functional data. In addition to the sequence-level diversity in the MHC, the principles of class I and class II binding are structurally and functionally different26. MHC-I has a deep binding groove that imposes constraints on the residues and lengths (8–12 residues) of binding peptides. MHC-II has shallower grooves forcing the ends of the peptide to extend beyond the binding groove allowing for longer peptides (9–22 residues)27.

The epitope (presented peptide) represents the essential protein locale and is well suited as the described sliding window for SWING. The functional sub-sequence of the MHC along with the peptide window generated the biochemical language (Fig. 2a), which is the input for the embedder and supervised learning. We trained a SWING model on a class I human immunopeptidome. Interactions from a few distinct human leukocyte antigens (HLAs) were chosen for training to capture functional variability and remove redundancy. Gradient boosted trees were used for supervised learning and classification (Methods).

a, Schematic of the pMHC prediction task adaptation of the SWING framework. b, Representation of the SCV evaluation metric. c, Depiction of the cross-prediction evaluation metric. d, Class I allele functional clustering as defined by MHCCluster v.2.0. Orange, alleles in the training set; magenta, alleles in the validation set and blue, distant allele validation set. e, SWING class I model performance plotted across ten replicates of tenfold cross-validation with permutation testing defined by the AUROC. Blue, validation curve; red, permuted mean; green, perfect classifier and gray, random classifier. f, Class I model performance on three unseen functionally close alleles in the validation set as defined by the AUROC. Blue, HLA-A02:02; orange, HLA-B40:02; magenta, HLA-C05:01; green, perfect classifier and gray, random classifier. g, Class I model cross-prediction performance on three unseen functionally distinct alleles in the distant validation set as defined by the AUROC. Blue, HLA-A32:01; orange, HLA-B38:01; magenta, HLA-C03:03; green, perfect classifier and gray, random classifier. h, Class II allele functional clustering as defined by MHCCluster v.2.0. Orange, alleles in the training set and blue, alleles in the validation set. i, Class II model performance plotted across ten replicates of tenfold cross-validation with permutation testing defined by the AUROC. Blue, validation curve; red permuted mean; green, perfect classifier and gray, random classifier. j, Class II model cross-prediction performance on two unseen alleles in the validation set as defined by the AUROC. Blue, DRB1_0102; orange, DRB1_0404; green, perfect classifier and gray, random classifier. Mean AUROC ± 2 × standard deviation. Panels a–c created using BioRender.com.

We assessed model performance using replicates of k-fold cross-validation where subsets of data were iteratively held out from training (Fig. 2b). Second, functionally mutually exclusive train and test datasets were used to evaluate cross-prediction (Fig. 2c). In cross-validation, the train and test sets had an intersection of alleles with nonoverlapping pMHC pairs (Fig. 2b) and cross-prediction sets had no allele overlap (Fig. 2c). We used functionally disparate sets for further robust evaluation (Fig. 2d). SWING was significantly predictive of pMHC-I binding in the cross-validation setup (Fig. 2e, area under the curve (AUC) 0.72, P < 0.001). Additionally, prediction accuracy remains consistent for all unseen alleles (Fig. 2f, AUC = 0.72–0.84). SWING also predicted pMHC interactions for unseen HLA-I alleles functionally different from the training set (Fig. 2g, AUC = 0.63–0.70). SWING’s ability to predict pMHC binding for unseen alleles can help make predictions for rare and understudied MHC alleles.

A SWING model was also trained using class II pMHC interactions. Functionally diverse class II alleles were chosen for training (Fig. 2h) and SWING confidently predicted pMHC–II interactions in a cross-validation setting (Fig. 2i, AUC = 0.90, P < 0.001). Like MHC-I, SWING performed well on unseen functionally different class II alleles (Fig. 2j, AUC = 0.93–0.95). This demonstrates SWING learns the language of class I and class II pMHC interactions using functionally representative alleles for training.

SWING captures pMHC interaction biology

SWING is trained to capture biological information, which drives its predictive power. We selected an optimal training dataset to make our method generalizable13,28,29. We stress tested this assumption by changing the number of MHC molecules. Models were trained on 5 and 12 class I receptors (Supplementary Fig. 1a,d) and cross-prediction loss in performance was observed for the former (Supplementary Fig. 1b, standard cross-validation (SCV)-AUC = 0.81, P < 0.001; Supplementary Fig. 1c, cross-prediction-AUC = 0.51–0.80). Additionally, adding more data did not improve the model performances (Supplementary Fig. 1e, SCV-AUC = 0.72, P < 0.001; Supplementary Fig. 1f, cross-prediction-AUC = 0.68–0.79) showing that the original training set is sufficient.

The biochemical metric used for the interaction language is essential to SWING. Biochemical effects have been shown to be main contributors to protein–protein associations30 and thus polarity was chosen to predict pMHC interactions. SWING was evaluated for any polarity specific bias by changing the metric to hydrophobicity for language generation (Fig. 3a). We observed that the new class I (Fig. 3b, SCV-AUC = 0.72, P < 0.001, cross-prediction-AUC = 0.7–0.81) and class II (Fig. 3c, SCV-AUC = 0.88, P < 0.001, cross-prediction-AUC = 0.88) models accurately predicted interactions for functionally similar and different MHC molecules. This demonstrates SWING captures interaction-specific information independent of a biochemical property.

a, Hydrophobic scale for SWING pMHC prediction. b, Hydrophobicity score class I model SCV performance with permutation testing defined by AUROC (left). Blue, validation curve; red, permuted mean; green, perfect classifier and gray, random classifier. Hydrophobicity score class I model cross-prediction performance on three alleles in the validation set as defined by the AUROC (right). Blue, HLA-A02:02; orange, HLA-B40:02; magenta, HLA-C05:01; green, perfect classifier and gray, random classifier. c, Hydrophobicity score class II model SCV performance with permutation testing defined by AUROC (left). Blue, validation curve; red, permuted mean; green, perfect classifier and gray, random classifier. Hydrophobicity score class II model cross-prediction performance on two alleles in the validation set as defined by the AUROC (right). Blue, DRB1_0102; orange, DRB1_0404; green, perfect classifier and gray, random classifier. d, Sequence length modification for SWING pMHC prediction. e, Full sequence class I model SCV performance with permutation testing defined by AUROC (left). Blue, validation curve; red, permuted mean; green, perfect classifier and gray, random classifier. Full sequence class I model cross-prediction performance on three alleles in the validation set as defined by AUROC (right). Blue, HLA-A02:02; orange, HLA-B40:02; magenta, HLA-C05:01; green, perfect classifier and gray, random classifier. f, Full sequence class II model SCV performance with permutation testing defined by AUROC (left). Blue, validation curve; red, permuted mean; green, perfect classifier and gray, random classifier. Full sequence class II model cross-prediction performance two alleles in the validation set as defined by AUROC (right). Blue, DRB1_0102; orange, DRB1_0404; green, perfect classifier and gray, random classifier. g, Peptide length distribution of the interacting peptides in the class II datasets defined by percentage. Magenta, training set; blue, DRB1_0102 validation set and orange, DRB1_0404 validation set. h, Peptide length truncation in training and test datasets affects the predictive power of the SWING class II model as defined by the AUC for each cutoff size in two class II datasets (left). Blue, DRB1_0102 and orange, DRB1_0404. i, Stratification of the model performance using four truncation cutoffs for cross predictions on DRB1_0102 (left) and DRB1_0404 (right) defined by AUROC. Sea green, full-length peptides; purple, 20-AA truncation; magenta, 16-AA truncation and yellow, 12-AA truncation. Mean AUROC ± 2 × standard deviation. Panels a and d created using BioRender.com.

Peptide binding is mostly dictated by the first 206 AA (ref. 31) of the MHC sequence and correspondingly used for training. In line with expectation, we observed that the use of a longer MHC sequence (Fig. 3d) did not affect model performance for class I (Fig. 3e, SCV-AUC = 0.69, P < 0.001, cross-prediction-AUC = 0.7–0.82) and class II (Fig. 3f, SCV-AUC = 0.90, P < 0.001, cross-prediction-AUC = 0.93–0.95).

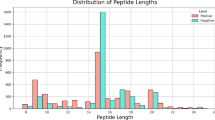

The 9-AA core and the flanking residues of the class II peptides determine specificity32,33 and binding viability34,35. The peptide core is defined by AA motifs36 and we analyzed the effect of removing them and/or the flanking regions on SWING’s performance. We used SWING to predict interactions for peptides truncated to up to 5 AA. The train and test data were identically distributed on peptide length (13–21 AA), with median lengths between 14 and 18 AA (Fig. 3g). The negative set in the training and test sets had a uniform distribution across peptide lengths of 13–21 AA (Supplementary Fig. 2). We reported SWING’s performance on peptides of size 21 to size 5, removing 1 AA at a time from the true sequence (Fig. 3h). There was a sharp drop in performance when peptide length was less than 9 AAs (Fig. 3h). The incremental drop in performance was noticeable in the area under receiver operator characteristic (AUROC) curves (Fig. 3i). This shows that at least 1 AA essential to the interaction is certainly removed below 9 AA and binding information is lost from the sequence. While other approaches use core motifs to train models, SWING automatically learns that the peptide’s core motif is the critical language of pMHC interactions. This confirms that SWING learns biologically important features underlying pMHC interactions.

SWING transfers knowledge across distinct interactions

Few-shot and zero-shot learning reuse previous knowledge from ‘data-rich’ contexts to solve different but related problems in ‘data-sparse’ contexts37,38,39,40. These have been used for context-transfer of models from specific tissues to other tissues for predicting molecular attributes40,41. As discussed earlier, there are structural and functional differences between HLA classes (Fig. 4a) and most methods have class-specific models42,43,44,45. We rationalized that SWING embeddings contain interaction-specific information, which could perform zero-shot predictions between class I and class II pMHC interactions.

a, Structural differences between the class I and II MHC receptors. b, SWING class I model performance for predicting class II pMHC interactions defined by AUROC. Blue, DRB1_0102 and orange, DRB1_0404. c, Comparison of AUROC between SWING class I and II models with NetMHCpan v.4.1, MixMHCpred v.2.0, NetMHCIIpan v.4.2 and MixMHC2pred v.2.0 models for predicting class II pMHC interactions. d, SCV prediction performance of SWING mixed model (trained with class I and II pMHC interactions) with permutation testing defined by AUROC. Blue, validation curve; red, permuted mean; green, perfect classifier and gray, random classifier. e, Prediction performance of the SWING mixed model for predicting class I pMHC interactions represented by AUROC. Blue, HLA-A02:02; orange, HLA-B40:02; magenta, HLA-C05:01; gray, random classifier and green, perfect classifier. f, Prediction performance of the SWING mixed model for predicting class II pMHC interactions represented by AUROC. Blue, DRB1_0102; orange, DRB1_0404; gray, random classifier and green, perfect classifier. g, Evolutionary distance between human and mouse. h, Performance of the SWING human class II model for predicting class II pMHC interactions in mice represented by AUROC. Blue, H-2-IAb; gray, random classifier and green, perfect classifier. i, Performance of the SWING human mixed model for predicting class II pMHC interactions in mice represented by AUROC. Blue, H-2-IAb; gray, random classifier and green, perfect classifier. j, Recall scores for H-2-IEk interacting peptides of different lengths for different SWING models, NetMHCIIpan v.4.2 and MixMHC2pred v.2.0. Blue, SWING class I model; orange, SWING class II model; magenta, SWING mixed-class model; green, MixMHC2pred v.2.0 and gray, NetMHCIIpan v.4.2. k, Recall scores for H-2-IAg7 interacting peptides of different lengths for different SWING models, NetMHCIIpan v.4.2 and MixMHC2pred v.2.0. Blue, SWING class I model; orange, SWING class II model; magenta, SWING mixed model; green, MixMHC2pred v.2.0 and gray, NetMHCIIpan v.4.2. l, AUROC for H-2-IEk (left) and H-2-IAg7 (right) interacting and noninteracting peptides for different SWING models, NetMHCIIpan v.4.2 and MixMHC2pred v.2.0. Blue, SWING class I model; orange, SWING class II model; magenta, SWING mixed model; green, MixMHC2pred v.2.0 and gray, NetMHCIIpan v.4.2. Mean AUROC ± 2 × standard deviation. Panels a and g created using BioRender.com.

The SWING class-I model confidently predicted class II pMHC interactions (Fig. 4b, AUC = 0.74–0.77). We compared SWING models against the performances of NetMHCpan24,42 and MixMHC2pred44, which are comparatively complex methods trained on bigger datasets. Although the SWING class II models, NetMHCpan and MixMHCpred had comparable performance for class II alleles, SWING is the only method without the test alleles in its training set. NetMHCIIpan v.4.2 results show the upper bound as the test peptides were curated from its training set. Notably, the SWING MHC-I model was more predictive for class II MHC interactions than NetMHCpan class I model, which was random for the validation sets (Fig. 4c and Supplementary Table 1). Modifications were made to the NetMHCpan class I model for benchmarking as it is incompatible with class II peptides (Methods) and the MixMHCpred class I model was unadaptable.

Most frameworks provide different models for predicting MHC class I and II peptides. However, class II pMHC datasets are relatively sparse. Unlike other methods, the strength of our approach is that this can be alleviated by a model trained on both classes. Joint profiling of CD4/CD8 T cell epitopes is required to understand disease pathogenesis in complex immunological settings46,47,48. The joint SWING model (trained on class I and class II pMHC interactions) could predict the pMHC interactions for MHC-I and MHC-II molecule sampled data points (Fig. 4d, AUC = 0.70, P < 0.001). The performance was not inflated due to the presence of any one class as it was comparable to predicting class I (Fig. 4e, AUC = 0.70–0.83) and class II pMHC interactions (Fig. 4f, AUC = 0.88, 0.93). SWING learns the shared biology of antigen binding for class I and II MHC as demonstrated by prediction capabilities of the SWING class I model on class II interactions and existence of a confident joint model.

Initially, the evaluation was constrained to human pMHC interactions but a generalizable iLM should also predict interactions in unseen species. Therefore, we adapted the human SWING models to zero-shot predict mouse pMHC interactions. These are biologically distinct contexts, as mouse and humans are evolutionarily distant with different MHC sequences (Fig. 4g). The human SWING MHC-II model could predict the interactions for mouse MHC-II (Fig. 4h, AUC = 0.88). The joint model showed similar performance (Fig. 4i, AUC = 0.85). We observed that SWING is generalizable across sufficiently distinct contexts. This transference is specific to SWING and not observed with widely used tools for pMHC interactions.

SWING enables zero-shot pMHC interaction discovery

pMHC interactions can be associated with immune disorders and their prediction will validate SWING’s practicality in a stringent, biologically relevant setting36,49. We focused on the mouse H-2-IEk allele, a class II MHC implicated in lupus nephritis. We interrogated whether SWING could predict the experimentally verified immunopeptidome of immune-infiltrated kidneys in systemic lupus erythematosus (SLE). This performance was compared with that of MixMHC2pred v.2.0 and NetMHCIIpan v.4.2. SWING models (class I, class II and mixed-class models) performed better than other methods despite the out-of-training alleles (Fig. 4j and Supplementary Table 2). Additionally, since peptides in most training datasets have a median size of 15–17 AA with a range of 13–21 AA, length bias often impedes immunoprofiling specifically for rarer length peptides50. The recall for each peptide size was highest for SWING including longer peptides. NetMHCpan performed randomly and MixMHC2pred was limited to a specific size range. SWING was able to outperform NetMHCIIpan’s variable length setting as well (Methods) (Extended Data Fig. 1a).

In silico methods are also used to identify candidate peptides for novel or rare variants of MHC molecules. We use the H-2-IAg7 allele that is not represented in SWING’s and NetMHCpan training to compare methods for a custom MHC sequence. H-2-IAg7, a class II mouse allele, is orthologous to HLA-DQ8, a high-risk allele for type 1 diabetes (T1D). On an experimentally generated immunopeptidome IAg7 dataset51,52, SWING models made zero-shot predictions with highest recalls (in this case equivalent to accuracy) (Fig. 4k and Supplementary Table 2). MixMHC2pred v.2.0, which has H-2-IAg7 in its training, had worse recall than the class I and mixed-class models for peptide sizes of 12–21. NetMHCIIpan v.4.2 did not identify any epitope even after incorporating various mouse alleles in its training. SWING’s unique framework accurately predicts binders regardless of MHC receptor sequence, species or peptide length.

Ten random peptides were added for each interacting peptide for robust performance evaluation. The SWING mixed model and class II models outperformed MixMHC2pred and NetMHCIIpan across all metrics on the de novo data (Fig. 4l, average AUC = 0.72–0.92, Extended Data Fig. 1b and Supplementary Table 3). The class I model performed comparably to MixMHC2pred and NetMHCIIpan (Fig. 4l, average AUC 0.74; Extended Data Fig. 1b and Supplementary Table 3). Notably, in both H-2-IEk and H-2-IAg7 contexts, no length bias exists toward longer peptides across SWING models (Extended Data Fig. 1c).

We chose three rare and population specific human class II disease alleles that are associated with autoimmune disorders53,54 for demonstrating SWING’s applicability in understanding human immunological disorders. Initially, only peptides with a of size 12–21 were used. The SWING class II and mixed model performance (average AUC = 0.81–0.84) was comparable to the MixMHC2pred v.2.0 and NetMHCIIpan (average AUC = 0.91–0.87) although it was the only method that did not train on these alleles (Extended Data Fig. 2a and Supplementary Table 4). Further, SWING is the only method that functions in the full range of Immune Epitope Database (IEDB) identified peptide sizes (Extended Data Fig. 2b). The class II and mixed-class models can predict peptides of size 22–36 AAs better than NetMHCIIpan (Extended Data Fig. 2c, average AUC = 0.8–0.91). Overall, SWING is essential for predicting pMHC interactions with novel alleles and nonstandard peptides where existing methods fall short (Supplementary Fig. 3).

Protein interaction perturbation by missense variants

Variant effect prediction (VEP) is generally made at an organismal level with reliance on conservation, sequence and structural features55,56,57. Recently, deep learning methods such as EVE58, AlphaMissense (AM) and ESM1b59,60 have been successful at predicting coding variant effects. However, most variants do not affect protein structure but specific PPIs61,62,63. These interaction-specific phenotypes64 are discovered using structural information such as the localization of a variant at the binding interface. Deep learning methods (including AM and ESM1b) can accurately distinguish between interface and noninterface variants (Fig. 5a) and can predict full-length protein interactions (example, D-SCRIPT can predict full-length PPIs with AUC > 0.8). SWING can also perform these tasks with good prediction performance, but in some cases is not as accurate as these state-of-the-art methods.

a, Comparison of interface and noninterface variants scores from VEP tools as −log(P value). One-sided Mann–Whitney U-test was performed. b, Focus of current VEP tools on predicting organismal level pathogenic effect of variants over interaction-specific effect. c, Comparison of predictive performance of VEP tools in predicting interaction-specific effects of the Mendelian variants. d, Interaction language generation procedure for applying the SWING model to predict effect of mutations on PPIs. e, Prediction performance of SWING trained for predicting the interaction perturbation effect by Mendelian missense mutations and their corresponding wild-type interactions. f, Prediction performance of SWING trained for predicting the interaction perturbation effect by population variants and their corresponding wild-type interactions. g, Prediction performance of SWING trained for predicting the interaction perturbation effect by population variants and Mendelian missense mutations (mixed) and their corresponding wild-type interactions. h, Clusters of variant targeted protein sequences for the mixed dataset. i, Prediction performance of SWING on predicting the left-out sequence cluster represented through AUROC. Blue, validation clusters; red, permuted classifier; gray, random classifier and green, perfect classifier. The table provides AUC for each test cluster and the corresponding proximal clusters held out from training. j, Comparative performance of SWING to VEP tools in predicting interaction-specific effects of the Mendelian variants. k, Comparison of the percentage of SWING predicted disruption ExAC variants between rare (MAF < 1%, n = 1,840) and common (MAF > 1%, n = 1,825) variants at a 0.6 cutoff plus standard error of the mean. Two-tailed binomial proportions test P = 0.013. l, Predicted candidate interaction disrupting variants, mechanism of disruption and possible biological implications. Mean AUROC ± 2 × standard deviation. Panels b, d, h, i and l created using BioRender.com.

However, current methods are not predictive of interaction-specific disruptions by variants (Fig. 5b,c). SWING was adapted to predict these variant effects and an informative sub-sequence65 containing the substitution was selected as the sliding window. The interaction partner sequence was the base for the sliding window to generate the interaction language for this context (Fig. 5d).

SWING was first applied to predict interaction effects of Mendelian disease variants63. For each mutation effect in the training dataset, we added the corresponding wild-type interaction and assigned it as ‘nondisrupting’. Using cross-validation, we observed that SWING predicted the effect of Mendelian mutations with high accuracy (Fig. 5e, AUC = 0.87, P < 0.0001). Subsequently, SWING was also predictive of interaction disruption driven by common and rare population variants61 (Fig. 5f, AUC = 0.80, P < 0.0001). Next, we evaluated a source (Mendelian or single-nucleotide variant) agnostic model for predicting interaction disruption. This model predicted interaction disruptions regardless of the variant context (Fig. 5g, AUC = 0.81, P < 0.0001). Additionally, the change in biochemical scale did not affect SWING’s performance (Supplementary Fig. 4a–c). We observed logistic regression (Supplementary Fig. 5a–c) and the neural network (Supplementary Fig. 6a–c) gave comparable performance to the XGBoost Classifier when used with SWING embeddings. Therefore, the embeddings are informative enough that prediction performance becomes classifier independent. We also reported comparable performance for models trained without wild-type interactions (Supplementary Fig. 7a–c).

We checked for potential sequence bias in the model by dividing the dataset into clusters using sequence similarity (Fig. 5h). The cluster assignment was used for the train–test split, and we excluded test clusters and adjacent clusters from the training for fairness. We observed robust predictions for the different clusters (Fig. 5i, AUC = 0.71, P < 0.05) and these are consistent with the aforementioned test cases indicating low sequence bias. Overall, SWING outperformed more complex and parameterized VEP models to predict interaction phenotypes (Fig. 5j).

Variant minor allele frequency (MAF) is inversely correlated with interaction disruption61. We focused on rare and common mutations and showed correlation between SWING scores and allele frequency (Fig. 5k, Supplementary Fig. 8a,b and Supplementary Table 5). The correlation was consistent across allele-frequency bins and SWING score thresholds, demonstrating the model’s ability to capture a fundamental attribute of interaction-disruptive variants.

We highlight two interaction-disruptive variants predicted by SWING (Fig. 5l). The first variant is a common East Asian population variant in the conserved ATP-binding site of PRKCH66 and at the interface of its interaction with BANP. rs2230500 is a ‘risk variant’ for silent lacunar infarction and rheumatoid arthritis66,67,68, associated with higher autophosphorylation and kinase activity68. BANP forms a complex with tumor suppressor TP53 and a BANP mutation modifies the PRKCH interaction to support tumor suppression by altering its phosphorylation state and TP53 binding69. Variant rs12117 potentially disrupts the HPX–GLUD1 interaction70,71. HPX binds heme and transports it to liver plasma with a potential role in oxidative stress protection. GLUD1 catalyzes the oxidative deamination to alpha ketoglutarate and ammonia. AlphaFold3 modeling indicates that mutation on HPX is located away from the interaction interface but still induces a conformational change (Fig. 5l and Supplementary Fig. 9a,b). AM predicted benign labels for both variants. Meanwhile, SWING could even identify the rare instance of interaction disruptions caused by variants away from the interface.

SWING is a generalizable interaction architecture

pLMs perform protein associated tasks but have also been applied to biological interactions and tend to oversimplify complexes as the sum of the interacting molecules. We demonstrate SWING’s superior performance over pLMs in modeling interactions by comparing the performance of different language models on the interaction vocabulary.

We compared the SWING-iLM against models built on the interaction vocabulary; a CNN on the interaction language (CNN-iLM), a BERT72 transformer on the encoded sequences (BERT-iLM) and training XGBoost directly on the interaction language (XGB-iLE). We also compared against other pLMs using different techniques to represent interactions. We tested the traditional methods of concatenating the interacting sequences and embedding the results using Doc2Vec (CEmbed-pLM). We trained two Doc2Vec models for each protein and summed the embeddings (ESum-pLM) to use as features. Finally, we used a protein sequence Doc2Vec model73 (D2V-pLM) and the Evolutionary Scaled Model 2 (ESM2)8 to generate protein embeddings (ESM-PLM) (Fig. 6a).

a, Schematic representation of different types of PPI models. b, Prediction performance in a SCV setting of PPI models trained for predicting the interaction perturbation effect by a mix of population variants and Mendelian missense mutations. Error bars span AUC ± standard deviation. Barplot shows AUC scores of ten replicates of tenfold cross-validation for each model. D2V, Doc2Vec. c, Prediction performance in a cross-prediction setting of mixed allele PPI models trained for predicting binders of a mix of three unseen class I and two unseen class II MHC alleles, averaged for each model. In all parts, gray, random classifier and green, perfect classifier. Shaded regions span ± standard deviation. d, Prediction performance in a cross-prediction setting of mixed allele PPI models trained for predicting binders of two unseen class II MHC alleles, averaged for each model. Shaded regions span ± standard deviation. e, Prediction performance in a cross-prediction setting of mixed allele PPI models trained for predicting binders of three unseen class I MHC alleles, averaged for each model. Shaded regions span ± standard deviation. f, Prediction performance in a cross-prediction setting of class I allele PPI models trained for predicting binders of the unseen DRB1_0102 allele. g, Prediction performance in a cross-prediction setting of class I allele PPI models trained for predicting binders of the unseen DRB1_0404 allele. h, Prediction performance in a cross-prediction setting of mixed allele PPI models trained for predicting binders of the unseen H-2-IEk allele. i, Prediction performance in a cross-prediction setting of mixed allele PPI models trained for predicting binders of the unseen H-2-IAg7 allele. Mean AUROC ± 2 × standard deviation. Panel a created using BioRender.com.

In the interaction disruption by mutations task, SWING-iLM (AUC = 0.81) outperformed CNN-iLM (AUC = 0.74), BERT-iLM (AUC = 0.71), XGB-iLE (AUC = 0.58), CEmbed-pLM (AUC = 0.74), D2V-pLM (AUC = 0.78) and ESM-pLM (AUC = 0.77) (Fig. 6b and Supplementary Fig. 10a). The CNN-iLM performed sufficiently to indicate that the language has some signal but an embedder helps improve performance. ESum-pLM (AUC = 0.85) performed the best of models (slightly better than SWING) for the missense mutation disruption task.

We then compared these models for the pMHC binding prediction task. For models trained on the class I and class II pMHC data, the iLMs were best at predicting the corresponding unseen class I and class II alleles (Fig. 6c, AUC = 0.76–0.87 and Supplementary Fig. 10b). SWING-iLM best predicted class II pMHC interactions (Fig. 6d, AUC = 0.90 and Supplementary Fig. 10c) and XGB-iLE trained on a bigger dataset was best for class I predictions, followed by CNN-iLM (Fig. 6e, AUC = 0.89–0.96 and Supplementary Fig. 10d).

In a cross-class pMHC prediction context, only class I SWING-iLM was able to cross-predict class II pMHC interactions (Fig. 6f,g, AUC = 0.74–0.77 and Supplementary Fig. 10e,f) and only the human SWING-iLM could cross-predict murine class II (H-2-IEk and H-2-IAg7) pMHC interactions (Fig. 6h,i, AUC = 0.81–0.90 and Supplementary Fig. 10g,h). The SWING-iLM architecture was the only model capable of predicting the interactions for cross-class and cross-species pMHC interactions. All other iLM and pLM models performed randomly.

Passively using pLM embeddings does not match SWING-iLM’s performance across contexts. The hardest evaluations that involved interclass and interspecies pMHC predictions were those where SWING-iLM showed the best performance over other models.

Discussion

pLMs can represent proteins well but are suboptimal at capturing interaction nuances. We present SWING, an iLM for converting interacting proteins and peptides into a biochemical language. SWING uses Doc2Vec for generating language embeddings. SWING models can predict pMHC interactions for multiple difficult contexts and perform complex VEP tasks using only sequence information.74.We have focused on predicting protein–peptide interactions, but SWING can be generalized to various macromolecular interactions. In the protein context, further nuance could be captured in PPIs, such as the effect of post-translational modifications on the interaction profile of a protein. Subsequent iterations of SWING should be able to handle more than two input sequences and predict complexes such as T cell receptor–pMHC interactions. Future SWING iterations will be developed to handle different molecular entities such as DNA, RNA and small molecules to profile different kinds of heterogeneous interaction such as transcription factor–DNA complexes to better understand regulatory networks. Although SWING is generalizable across different interaction-specific tasks within a biological domain, it is not a biological interactions foundation model. SWING needs to be customized and trained in a biological context-dependent manner. A main reason is incompleteness inherent in biological networks compared to protein sequences and the specificity of the interaction partners is conditioned strongly on the context. SWING is proficient at predicting peptide–peptide and peptide–protein interactions and other methods such as D-SCRIPT28 may be better at predicting full-length protein interactions.

Overall SWING is a highly versatile iLM that can be adopted across domains for different prediction tasks. It is adept at learning the language of interactions, which transfers well as features across biological contexts within a domain and proves to be an effective tool even in data-sparse contexts.

Methods

SWING framework

Language generation for an interaction has three crucial components: a sliding window, the target protein sequence and a biochemical metric. The sliding window is a sub-sequence from the protein of interest. The length of the sliding window can span from 3 to 36 AA. These ranges include the lengths tested in this study, but can be longer or shorter depending on the context. The target protein sequence is much longer than the sliding window. The sliding window is completely aligned (position for position) to the target protein sequence starting from the first AA on the target protein. At each position the absolute rounded difference in a biochemical metric is taken between the pair of AA at the particular point. Grantham polarity and Miyazawa hydrophobicity scales were used as the two metrics in the study. The information was sourced from expasyProtScale (https://web.expasy.org/protscale/).

where ‘metric’ denotes the value for a particular AA for a specific biochemical metric, i denotes the position on the sliding window and j is the position on the target protein.

The rounded-off biochemicaldifference is the encoding for the pair of AA at a given position on the two sequences. The encodings are concatenated sequentially to generate the interaction language. We add paddings in the end to ensure that the entire length of the target sequence is captured. Finally, the interaction language sequence is divided into k-sized sub-sequences, also called k-mers. The hyperparameter k is either tuned or chosen based on biological context. The k-mers are the input to the embedding architecture for generating the low-dimensional representations for each interaction. The Doc2Vec model is used for generating the interaction level embeddings. We used the Doc2Vec implementation from gensim75 (https://radimrehurek.com/gensim/) v.4.2.0. Two different architectures of the Doc2Vec model were used for the different biological interaction contexts. In the distributed memory (DM) mode, a target biochemical k-mer is predicted using the embeddings of surrounding k-mers (also called the context window) along with the interaction embedding for a particular interaction. In the distributed bag of words, the k-mers in a fixed length context window are predicted from the interaction embedding alone. The size of the context window, w can be adjusted in the embedding model. In both architectures, the k-mer embeddings and interaction embeddings are updated iteratively using stochastic gradient descent and back-propagation. In gensim, the DM hyperparameter is set to 1 for DM architecture and 0 for the distributed bag of words architecture. Besides the context length, other important Doc2Vec hyperparameters are the size of the vectors in the embedding matrix (dim), the minimum frequency of k-mers for inclusion in the model training (min_count) and the initial learning rate (alpha). After training the Doc2Vec model, the document embeddings are used as input features for training a classifier for interaction-specific prediction tasks. We used XGBoost’s XGBClassifier() (https://xgboost.readthedocs.io/en/stable/) function (v.1.6.1) and the sklearn’s LogisticRegression() (https://scikit-learn.org/stable/index.html) implementation as the classifiers. The important hyperparameters in XGBoost are the number of boosting rounds (n_estimators), the maximum tree depth for the base learners (max_depth) and the learning rate for the boosting (learning_rate). The hyperparameters were tuned for the language, Doc2Vec and the classifier using the Bayesian optimization setting in weights and biases (WandB) (https://wandb.ai/site). The selection of the hyperparameters across the different models was selected in a supervised manner based on the performance of the classifier at a particular task. The evaluation setting for the hyperparameter optimization was tenfold cross-validation each for ten replicates of the dataset (generated through changing the order of data points in the dataset). Either the mean of the area under the receiver operating characteristic curve (AUROC) or the F1 score was used for selecting the best model hyperparameters.

Prediction of pMHC interactions using SWING

Mass spectrometry elution datasets were acquired from the publicly available HLA-specific NetMHCpan v.4.1 evaluation sets76, and NetMHCIIpan v.4.2 training sets77 for class I and class II respectively. The MHC sequences were acquired from the MHC Restriction Ontology (https://github.com/IEDB/MRO). The HLA molecules with available mass spectrometry elution datasets were functionally clustered using MHCCluster v.2.0 (ref. 78). For the class I model datasets, a subset of HLAs (HLA*A02:02, HLA*A02:11, HLA*A11:01, HLA*A25:01, HLA*A32:01, HLA*B07:02, HLA*B15:03, HLA*B38:01, HLA*B40:01, HLA*B40:02, HLA*C07:02, HLA*C03:03, HLA*C05:01, HLA*C08:02) were chosen. The subset was carefully curated to represent and test the diverse functional clusters identified through MHCCluster v.2.0. Peptides larger than 12 AA were removed for all class I sets. Similarly for the class II model, a subset of single-allele datasets (DRB1*01:01, DRB1*01:02, DRB1*03:01, DRB1*04:01, DRB1*04:04, DRB1*07:01 and DRB1*15:01) were selected.

We trained an XGBoost model with the pMHC embeddings as the features. For the class I model, eight HLAs were chosen (HLA*A02:11, HLA*A11:01, HLA*A25:01, HLA*B07:02, HLA*B15:03, HLA*B40:02, HLA*C07:02, HLA*C08:02). The HLAs were chosen from functionally distinct clusters such that each HLA group is similarly represented. Six class I (HLA*A02:02, HLA*A32:01, HLA*B40:02, HLA*C03:03, HLA*C05:01) datasets were chosen for validation. For the class II model, the datasets were processed to remove any data that had more than one MHC molecule assigned to the entry. From the MHC molecules that were left, five (DRB1*01:01, DRB1*03:01, DRB1*04:01, DRB1*07:01, DRB1*15:01) were picked from different functional clusters for the training set, and two (DRB*:01:02, DRB1*04:04) were used as the validation. The training subset was chosen such that each DRB1 dataset was equally represented (~30,000 peptides each). The language was generated using the peptide as the sliding window and the first 210 positions of MHC protein sequence as the target sequence. This region includes the class I biologically relevant region (206 AA) of the alpha chains with three AA as padding. For class II, the same cutoff on the beta chain was used to keep the models consistent. We used the Grantham polarity score to capture the difference between the AA pairs at each position of the two sequences. The training k-mers were shuffled for the cross-prediction to prevent the model from overfitting. The Doc2Vec model (min_count =1, dm=0) parameters, dim, w, alpha and epochs, along with the XGBoost Classifier parameters, n_estimators, max_depth and learning rate, were tuned for optimal performance in predicting the interaction-specific task. The final class I model parameters are: min_count=1, dm=0, k=7, dim=583, w=11, alpha=0.02349139979145104, epochs=13, n_estimators=232, max_depth=6, learning_rate=0.9402316101150048. The final class II model parameters are min_count=1, dm=0, k=7, dim=146, w=12, alpha=0.03887032752085429, epochs=13, n_estimators=341, max_depth=9 and learning_rate=0.6534638199102993.

First, the model was evaluated in an SCV setting. Here, tenfold cross-validation was performed for each of the ten replicates of the dataset (generated by the shuffling of the order of the data points in the dataset) and the mean AUROC was reported for each time cross-validation was performed. SCV was also performed for a permuted set where the labels were randomly generated with a 1:10 distribution of binders and nonbinders, and the data shuffled in the same way as previously reported. SCV was also performed for a permuted set where the labels were randomly generated with a 1:10 distribution of binders and nonbinders, and the data shuffled in the same way as previously reported. For visualization, the false positive rates and true positive rates at each threshold were obtained using the roc_curve() function in sklearn. Additionally, we performed interpolation using the numpy interp() function to calculate the mean of the AUROC and the standard deviation of the performance. The mean AUROC was plotted as a dashed line with shading to represent the two standard deviations as the performance bound. For testing the cross-prediction performance of the class I model, first, we chose the HLA alleles closer to the training dataset after the functional clustering. We performed ten bootstraps on the validation dataset, keeping the label distribution intact for each subset of the validation set to mimic the entire dataset. The AUROC was calculated and visualized in the same way as described above. Second, we chose the validation set to comprise of HLA alleles that were further away from the HLA alleles in the training set after the functional clustering. The steps were repeated for this validation set as performed for the preceding validation setting. The exact steps were repeated for testing the performance of the SWING class II model. Due to the minimal number of single-allele class II datasets available, two class II datasets were chosen for the validation.

Optimization of feature selection for MHC model

We optimized the class I MHC model by selecting a subset of MHCs to represent the full functional space. To find the optimal number of datasets, we compared the performance of the class I model on SCV and cross-prediction tasks as described above. Five (HLA*A02:11, HLA*A25:01, HLA*B07:02, HLA*B15:03, HLA*C08:02), eight and twelve (HLA*A02:11, HLA*A11:01, HLA*A25:01, HLA*A68:01, HLA*B07:02, HLA*B15:03, HLA*B35:03, HLA*B40:01, HLA*B58:01, HLA*C07:02, HLA*C08:02, HLA*C12:03) class datasets were compared.

Testing SWING’s performance on customized sequence inputs

We tested the effect of length on the MHC sequence on SWING’s performance. The longer MHC sequences encompass the full chain sequence and are between the range of 265–369. The biochemical metric was the Grantham polarity scale from expasyProtScale. The same evaluation setting was used for evaluating the class I and II models with the longer sequence of the MHC molecules as in the previous section.

The second test was on the effect different biochemical scales have on SWING’s predictive power. We changed the scale from the Grantham polarity scale to the Miyazawa hydrophobicity scale, and the model was evaluated in the same way as described earlier.

Finally, the effect of the peptide length on SWING’s performance was checked. The effect of peptide length was tested on SWING’s performance by truncating the training and test set peptides to different sizes. The peptide sizes in the training set ranged in lengths of 13–21. Peptides larger than the cutoff size were truncated by taking the middle of the peptide. Sixteen different cutoff sizes (20, 19, 18, 17, 16, 15, 14, 13, 12, 11, 10, 9, 8, 7, 6, 5) were used and the cross-prediction was performed as described and the AUC score was reported for two datasets (DRB1_0102, DRB1_0404). Additionally, the AUROC was reported for full, 20, 16 and 12 cutoff size runs.

Testing SWING’s cross-prediction between interactions

SWING’s performance for predicting between biologically different interaction spaces was evaluated. First, the performance of the class I model was tested on two human class II MHC (DRB1*01:02, DRB1*04:04) datasets using the cross-prediction method previously described without the bootstrapping. We compared the SWING class I and class II model performance as defined by AUC score with NetMHCpan v.4.1 (https://services.healthtech.dtu.dk/services/NetMHCpan-4.1/), NetMHCIIpan v.4.2 (https://services.healthtech.dtu.dk/services/NetMHCIIpan-4.2/), MixMHCpred v.2.2 (https://github.com/GfellerLab/MixMHCpred) and MixMHC2pred v.2.0 (http://mixmhc2pred.gfellerlab.org/). As the NetMHCpan v.4.1 method is not traditionally compatible with class II data, the allele names (DRB1_0102, DRB1_0404) were manually added to the allele file and all relevant pseudo sequence files were copied from the NetMHCIIpan v.4.2 model. An empty space was provided for MixMHCpred v.2.2 since it was not applied to the class II data due to incompatibility with class II alleles as well as peptides larger than 14. For the NetMHCpan v.4.1, NetMHCIIpan v.4.2 and MixMHC2pred v.2.0 models the ‘EL_score’, ‘Score’ and the negative of the ‘%Rank_best’ columns were used as the predictions, respectively.

Next, a mixed-class model that consisted of eight class I (HLA*A02:11, HLA*A11:01, HLA*A25:01, HLA*B07:02, HLA*B15:03, HLA*B40:02, HLA*C07:02, HLA*C08:02) and ~10,000 peptides from two class II datasets (DRB1*04:01, DRB1*13:01) was trained. Hyperparameter optimization was performed, and the final mixed-class model parameters were: min_count=1, dm=0, k=7, dim=74, w=12, alpha=0.03887032752085429, epochs=10, n_estimators=269, max_depth=9 and learning_rate=0.6082359422582875. Model performance was evaluated through the SCV and cross-prediction on three class I alleles (HLA-A02:02, HLA-B40:02, HLA-C05:01) and two class II alleles (DRB1_0102, DRB1_0404). Last, a mouse class II allele test set (H-2-IAb) was constructed from the NetMHCIIpan v.4.2 training data and peptides available on IEDB, to test the performance of the class II and mixed-class models. The MHC sequence was retrieved from the UniProt database. The AUROC was reported for each model.

Profiling the IEk immunopeptidome

The protocol to profile the IEk immunopeptidome was adopted from ref. 51 with some modifications79. Kidneys from 20-week-old MRLlpr mice were harvested and minced on a gentleMACS (Miltenyi Biotec) with Collagenase D (Roche) and DNase I (Sigma) in Hank’s buffered saline solution (Gibco)80. Cells were passed through a 70-µm filter to obtain a single cell suspension and red blood cell lysis was performed using RBC lysis buffer (Biolegend). The cells were counted and resuspended at 1 × 108 cells per ml in PBS (Corning). Cells were pelleted and incubated with lysis buffer (40 mM MEGA 8 (Sigma-Aldrich), 40 mM MEGA 9 (Sigma-Aldrich), 1 mM phenylmethylsulfonyl fluoride (ThermoFisher Scientific), 0.2 mM iodoacetamide (Sigma-Aldrich), 20 µg ml−1 leupeptin (Millipore Sigma) and Roche Complete Mini Protease Cocktail (Roche) for 1 h at 4 °C on a rocking platform. The lysate was cleared by centrifugation at 20,000g for 30 min at 4 °C. Anti-MHC class II IEk antibody [14-4-4S] (Abcam) was covalently linked to sepharose G resin (Cytiva) by incubation for 30 min in cold room on rocker. The cleared lysate was transferred to a tube containing sepharose G coupled to antibody and incubated at 4 °C overnight on a rocker. The IEk sepharose lysate was poured in a Poly-Prep Chromatography Column (BioRad) and gradient washes were performed with the following buffers serially: 10 ml of 150 mM NaCl, 20 mM Tris pH 7.4, 10 ml of 400 mM NaCl, 20 mM Tris pH 7.4, 10 ml of 150 mM NaCl, 20 mM Tris pH 7.4 and 10 ml of 20 mM Tris pH 8.0. The peptides were eluted in 10% acetic acid and sent for mass spectrometry to MSBioworks. Peptides (50% per sample) were acidified, then concentrated and desalted using solid-phase extraction with a Waters µHLB C18 plate. Peptides were loaded directly and eluted using 30:70 acetonitrile:water (0.1% trifluoroacetic acid (TFA)). Eluted peptides were lyophilized and reconstituted in 0.1% TFA. Peptides (100%) were analyzed by nano-liquid chromatography with tandem mass spectrometry using a Waters NanoAcquity system interfaced to a ThermoFisher Fusion Lumos mass spectrometer. Peptides were loaded on a trapping column and eluted over a 75-µm analytical column at 350 nl min−1; both columns were packed with Luna C18 resin (Phenomenex). A 2-h gradient was used. The mass spectrometer was operated using a custom data dependent method, with mass spectrometry performed in the Orbitrap at 60,000 full-width at half maximum resolution and sequential tandem mass spectrometry performed using high resolution collision-induced dissociation and electron-transfer/higher-energy collision dissociation in the Orbitrap at 15,000 full-width at half maximum resolution. All mass spectrometry data were acquired from m/z of 300 to 1,600. A 3-s cycle time was used for all steps. Peptides were analyzed using PEAKS software with the following parameters: Enzyme, None; Database, Swissprot Mouse; Fixed modification, None; Variable modifications, Oxidation (M); Acetyl (Protein N terminus); Carbamidomethyl (C); Mass values, Monoisotopic; Peptide Mass Tolerance, 10 ppm; Fragment Mass Tolerance, 0.02 Da; Max Missed Cleavages, N/A; PSM FDR, 1% and Chimeric peptide, TRUE. The IEk antibody was Recombinant Mouse Anti-MHC class II Antibody (14-4-4S) and Abcam (cat. no. ab25681). Twenty-six-week-old female mice were housed in microisolator cages with up to five mice per cage and a 14-h light/10-h dark cycle was used. Temperatures of ~18–23 °C (65–75 °F) with 40–60% humidity were maintained. There was constant access to water. The catalog number of the MRLlpr mice strain is 000485 (https://www.jax.org/strain/000485).

Evaluating the performance of SWING on the de novo datasets

The accuracy of multiple pMHC prediction tools were evaluated using the experimentally generated H-2-IEk dataset. The MHC sequence for the allele was retrieved from UniProt. H-2-IEk binders between the lengths of 9 and 25 AA with standard AAs and no modifications were considered. This subset was evaluated using the three SWING models as well as two benchmarking methods, NetMHCIIpan v.4.2 and MixMHC2pred v.2.0. For the SWING models, we predicted the probability that the peptides are interactors of H-2-IEk. A cutoff threshold was identified using the highest geometric mean of the false positive rate and true positive rate from the SCV of the class I (0.0012), class II (0.008) and mixed-class (0.002) models. Predicted probabilities that were larger than the cutoff threshold were considered binders. For NetMHCIIpan v.4.2, the web server was used. Peptides were split into different groups by size and each group was run through with the H-2-IEk allele as the chosen sequence and default parameters. In a separate analysis, peptide size was set to 15 and NetMHCIIpan predicted on all possible 15-mers for all peptides larger than 15 peptides. Any peptide for which a 15-mer was identified as a binder was given a binder label. For the MixMHC2pred v.2.0, only peptides of length 12–21 were provided due to the limitations imposed on peptide length by the method. Peptides with a rank <30 were considered interactors. Recall was used as the performance metric for all models. A recall was calculated for each peptide length for the range of 9–25 and plotted. Additionally, a total recall score was calculated. Since MixMHC2pred v.2.0 is only able to calculate a score for peptides 12–21, this range was used for the total recall calculation.

Next negatives from the MixMHC2pred v.2.0 rpep folder were added to the binders set in a 1:10 binders to nonbinders by randomly selecting peptides across all lengths (12–25). NetMHCIIpan v.4.2, MixMHC2pred v.2.0 and the SWING models were used to predict the labels and the AUC and AUPR scores were reported. The rank columns were used for both NetMHCIIpan v.4.2 and MixMHC2pred v.2.0. To compare with MixMHC2pred v.2.0 only peptides in the size range 12–21 were considered for all methods. To assess whether there is a length bias in SWING predictions toward longer peptides, the slope of a line across the AUC at each length between 12 and 25 was calculated using linear least-squares regression (scipy.stats.linregress function) and a Bonferroni multiple comparisons correction for each model. The P value and the confidence interval were used to assess whether the difference in AUC across lengths was statistically significant.

We repeated this procedure with a second, previously published dataset for the H-2-IAg7 allele52. Since NetMHCIIpan v.2.0 does not have the H-2-IAg7 allele in the server, the sequence was provided for the beta chain. Additionally, since NetMHCIIpan v.2.0 did not successfully rank any peptides, the secondary 15-mer analysis was not performed.

Evaluating the performance of SWING on human disease alleles

SWING’s performance on human alleles associated with disease was evaluated using peptides downloaded from IEDB. Three class II receptors (DRB1_1454, DRB1_0405, DRB1_1201) that were not in the SWING training set were evaluated. For each allele, all binders of all sizes and assays on IEDB were identified. For the negative set, negatives from the MixMHC2pred v.2.0 rpep folder were added to the binders set in a 1:10 as previously described. The AUC score for SWING class II and mixed model, MixMHC2pred v.2.0, and NetMHCIIpan v.4.2 was reported. For the first comparison, only performance on peptides between 12 and 21 was assessed to accommodate the working range of MixMHC2pred v.2.0. Another comparison of peptide sizes between 22 and 36 was performed to compare across the SWING mixed and class II models and NetMHCIIpan v.4.2.

Using SWING to predict interaction disruption

The dataset for predicting the impact of missense mutations on protein interactions was sourced from two papers studying the impact of population variants on interactions across the allele-frequency spectrum61 and the effect of disease-associated variants on the interactions respectively63. The ENTREZ gene IDs were mapped to the respective UniProt IDs. The UniProt database was used for mapping the proteins to the respective AA sequences. Both mutant and wild-type interactions were included in the dataset. The resulting dataset consisted of 6,306 missense mutations (1,555 Mendelian disease-associated variants and 4,751 population variants) with their corresponding wild types tested across 2,953 PPIs (12,612 total PPIs in the dataset with wild types). With respect to the training procedure, the reported variable was considered to be the disruption of the PPI by the missense mutation tested through a yeast-two-hybrid assay for both the datasets (one is disruption).

For the task of predicting the impact of missense mutations on PPIs, the window is selected from the protein sequence with the mutation. Particularly, L positions on both sides of the mutation affected site are used to select the sliding window where L is a tunable parameter. The language generation task is the same as described above. The string generated from this process is split into k-mers, where k is another tunable parameter. The embeddings generated for the protein-perturbed peptide pair were fed as the features to the classifier to predict the disruption of an interaction. The training datasets had population variants across the allele-frequency spectrum or are associated with Mendelian diseases. The alpha (learning rate), w (window), epochs and dm (training algorithm) of the Doc2Vec model (fixed dim=128 and min_count=1) and n_estimators, max_depth, and learning_rate of the XGBoost Classifier are tuned for optimal performance of the classifier using a Bayesian approach through the framework provided in Weights and Biases (WandB, wandb.ai). For a model trained on both Mendelian disease-associated variants and population variants k = 7 and L = 1 was used. Doc2Vec parameters included an alpha of 0.08711, window size of 6, 52 epochs and used DM (dm=1). XGBoost had 375 estimators, maximum depth of 6 and learning rate of 0.08966.

The SCV was performed on three different models, a SWING model trained on just Mendelian disease-associated variants, model trained with population variants and a model trained on both the datasets. The evaluation framework was the same as described in the sections above.

We constructed a leave-cluster-out framework for a more rigorous evaluation of the model. Each AA in the sequence of the interaction partner protein of the mutated protein was originally encoded with a number between 0 and 20. Subsequently, we performed k-means clustering with k = 10 on the encoded sequences to assign clusters using sklearn. We then applied sklearn’s principal components analysis function to the encodings to visualize them in a two-dimensional space, coloring based on cluster assignment. Sets of two clusters were considered a fold and the two closest positionally adjacent clusters were dropped from the training set for complete exclusivity between the training and testing sets. The AUROC for each test cluster was reported individually. Due to sparse clusters C8 and C9, a fifth fold of proximity dropout was not considered.

AM59, ESM1b60 and EVE58 mutation effect prediction models (LLM tools) were chosen for benchmarking. We checked whether there is a difference in the pathogenicity scores for the interface and the noninterface localized variants. We selected only those mutations whose interaction perturbation profiles were present in the training dataset curated for SWING and the corresponding cocrystal structure for the interaction were present in the Protein Data Bank. Additionally, these were all ClinVar variants and were reported in ref. 59. The final evaluation set was the intersection of the ClinVar variants at the interface of structurally resolved interactions and missense mutations that disrupted the particular interactions. A Mann–Whitney U-test was done to test the difference in the scores of interface versus noninterface localized variants. The P values were transformed to negative of log10 and reported. The LLM tools scores were tested for predictive power on interaction-specific effects by ascribing the scores with each interaction that has been tested for the particular variant. The AUROC score was reported for each method and compared to the SCV AUROC from SWING.

The SWING model that had been trained on Mendelian and population variant interaction effects datasets was used to predict the probability of disruption on Exome Aggregation Consortium (ExAC) variants of different MAFs. Around 2,000 ExAC variants with an interaction partner were chosen at random for a MAF percentage of less than 1% and greater than 1%. These were paired with their wild type for the embeddings. The percentage of candidates over a threshold on the predicted probability of disruption was calculated and compared across the two categories. Significance was calculated through a proportions z-test using statsmodels package propotions_ztest. The threshold for SWING classifying a variant as highly interaction disruptive was 0.5 and the threshold for AM to classify a variant as benign was 0.5. Clinvar pathogenicity annotations (https://www.ncbi.nlm.nih.gov/clinvar/) were used to determine the literature reported pathogenic status of the variant.

Hydrophobicity scale for predicting PPI effect of mutations

The interaction language was encoded using the Miyazawa hydrophobicity from expasyProtScale. We used the same Doc2Vec implementation from gensim75 (https://radimrehurek.com/gensim/) v.4.2.0 and XGBClassifier() from XGBoost (https://xgboost.readthedocs.io/en/stable/) v.1.6.1 implementation as the classifier. The hyperparameters tuned from WandB’s Bayesian optimization setting (https://wandb.ai/site) were found from the mixed model and used for the Mendelian disease-associated and population variants as mentioned in a preceding section (language generation: k = 6, L = 2; Doc2Vec: alpha=0.00417, epochs=82, dim=128, dm=0, window=7; XGBoost: n_estimators=380, max_depth=6, learning_rate=0.04230).

SWING-logistic regression for interaction disruption prediction

We also wanted to show that a simpler model was able to capture signal in predicting the impact of missense mutations on PPIs using the interaction language. We used the same Doc2Vec implementation from gensim75 (https://radimrehurek.com/gensim/) v.4.2.0 but replaced the XGBoost Classifier with sklearn’s LogisticRegression() (https://scikit-learn.org/stable/index.html) v.1.1.1 implementation as the classifier. The hyperparameters used for language generation and Doc2Vec were the same as Fig. 5g (mixed model: k = 7, L = 1, alpha=0.08711, window=6, epochs=52, dm=1, dim =128), but we used WandB’s Bayesian optimization setting (https://wandb.ai/site) to tune LogisticRegression() on C and l1_ratio, fixing solver to saga, max_iter to 10,000 and penalty to Elastic Net. The tuned LogisticRegression() parameters were l1_ratio=0.02384 and C = 0.48407. While this simpler model found a signal, we found XGBoost() performed better at the missense mutation disruption prediction task.

SWING-neural network for interaction disruption prediction

Using the same Doc2Vec implementation from gensim75 v.4.2.0, we implemented sklearn’s MLPClassifier() (https://scikit-learn.org/stable/index.html) v.1.1.1 as our classifier. The hyperparameters used for language generation and Doc2Vec were again the same as Fig. 5g (mixed model: k = 7, L = 1, alpha=0.08711, window=6, epochs=52, dm=1, dim=128), but we used WandB’s Bayesian optimization setting (https://wandb.ai/site) to tune MLPClassifier() on activation, alpha, hidden_layer_sizes and learning_rate with early_stopping=True. The tuned MLPClassifier() parameters were activation=tanh, alpha=1.28499, hidden_layer_sizes = (133,), and learning_rate=invscaling.

SWING trained on only mutant interactions

Using the same Doc2Vec implementation from gensim75 (https://radimrehurek.com/gensim/) v.4.2.0 and XGBClassifier() from XGBoost (https://xgboost.readthedocs.io/en/stable/) v.1.6.1 implementation, a model trained on only mutated interactions was tested in similar ways to Fig. 5. The hyperparameters tuned from WandB’s Bayesian optimization setting (https://wandb.ai/site) were the same as mentioned in a preceding section (language generation: k = 7, L = 2; Doc2Vec: alpha=0.03488, window=3, epochs=7, dim=128, dm=0; XGBoost: n_estimators=208, max_depth=6 and learning_rate=0.05819). We used D-SCRIPT’s human PPIs training dataset to train SWING for predicting full-length PPIs and used the entire sequence of one of the interactors as the sliding window. The AUROC was calculated and reported.

Evaluating SWING and interaction modeling approaches

One-dimensional CNN-iLM

The SWING biochemical encodings were used as the input to the one-dimensional convolutional neural network model. The maximum length of the input was set to 4,500. The model had a single embedding layer over the 11 characters in the SWING interaction language, a one-dimensional convolutional layer and two fully connected layers with the final layer predicting the status of the interaction. The objective function of the model during training was to minimize the cross-entropy loss. The hyperparameters used for the different models trained for different prediction tasks is as follows:

-

(1)

Missense mutation effect on protein interactions: embedding dimension 248, number of kernels 119, epochs 15, kernel size 7, dropout 0.28493, batch size 37 and learning rate 0.00025698.

-

(2)

Mixed-class pMHC interactions: embedding dimension 27, number of kernels 118, epochs 61, kernel size 5, dropout 0.48176, batch size 63 and learning rate 0.00080398.

-

(3)

Class I pMHC interactions: embedding dimension 17, number of kernels 120, epochs 68, kernel size 5, dropout 0.36459, batch size 53 and learning rate 0.00041034.

BERT-iLM

Encoded sequences were split by character using a custom word-level tokenizer from the HuggingFace Tokenizers library. The tokenizer was trained with a vocabulary of 16 tokens consisting of the digits 0–9 and special tokens <s>, <pad>, </s>, <unk>, <mask> and <|endoftext|>. After tokenization, sequences were truncated or padded to a length of 4,500 tokens and saved using the HuggingFace Datasets library with a random 15% of the sequences held out as an evaluation set.

The sequence embeddings were extracted from a custom RoBERTa model built and trained on a masked language modeling task with the HuggingFace Transformers library. The hidden layer and intermediate layer dimensions were 128 and 64, respectively, and the model was built with four hidden layers and four attention heads. The gelu activation function, an initial learning rate of 1 × 10−4, layer dropout probabilities of 0.1 and a mask probability of 0.15 were used. Training was done for 40 epochs and aimed to minimize a cross-entropy loss.

XGBoost on interaction language encodings as features

We trained an XGBoost Classifier model directly on the SWING encodings and used fivefold stratified cross-validation to report the mean AUROC for the different prediction tasks. Based on the interaction task, the models were trained with the following hyperparameters:

-

(1)

Missense mutation effect on protein interactions: learning rate 0.04188, n_estimators 65 and max_depth 4.

-

(2)

Mixed-class pMHC interactions: learning rate 0.23707, n_estimators 66 and max_depth 7.

-

(3)

Class I pMHC interactions: learning rate 0.19707, n_estimators 99 and max_depth 10.

Embeddings for concatenated protein sequences (CEmbed-pLM)

In our CEmbed-pLM, the whole target and interactor sequences are concatenated end to end, then encoded with Grantham scores. The encoding is k-merized and used to train a Doc2Vec model from gensim75 v.4.2.0 to create embedded representations of the PPI. The final embeddings are used to train a XGBClassifier() from XGBoost (https://xgboost.readthedocs.io/en/stable/) v.1.6.1 to predict whether the interaction is maintained or not. The hyperparameters tuned from WandB’s Bayesian optimization setting (https://wandb.ai/site) were the same as mentioned in a preceding section (language generation: k = 4; Doc2Vec: alpha=0.09147, epochs=181, window=4, dim=128, dm=0; XGBoost: n_estimators=93, max_depth=4 and learning_rate=0.09978).

Summed embeddings from two pLMs (ESum-pLM)

Protein sequences in a PPI are embedded separately, then the embedding matrices are summed element-wise together to represent the PPI. In this model, the target and interactor sequences are encoded with Grantham scores, k-merized and used to train two separate Doc2Vec models, one for the target protein sequence and another for the interactor protein sequence. We used the Doc2Vec implementation from gensim75 v.4.2.0, before being summed together to create a final embedded representation. The final embeddings are used to train a XGBClassifier() from XGBboost (https://xgboost.readthedocs.io/en/stable/) v.1.6.1 to predict whether the interaction is maintained or not. For language generation two k-mer sizes were optimized (k1 = 7, k2 = 2) using WandB’s Bayesian optimization (https://wandb.ai/site) on the F1 score. Two sets of Doc2Vec hyperparameters were trained for each model (dm1=0, dm2=1, alpha1=0.06022, alpha2=0.00052, window1=1, window2=4, epochs1=197 and epochs2=121) with fixed dim=128 in WandB. XGBoost parameters n_estimators of 191, max_depth of 7, and learning_rate of 0.057252 were used.

Integrated protein embeddings from a Doc2Vec pLM (D2V-pLM)

We used the protein language Doc2Vec model developed by Yang et al.73. We used the model where k = 5 and w = 7 to infer the embeddings of the proteins. The final embeddings had a dimension of 64. The embeddings for the target and the interactor protein sequences were summed element-wise and the resulting matrix was used as the features for training the XGBClassifier. The XGBClassifier was run with learning_rate of 0.27205, n_estimators 88, and max_depth of 10. We performed a fivefold stratified k-fold cross-validation to report the mean AUROC.

Machine learning model using integrated embeddings from ESM-pLM

We used the ESM2 (ref. 8) model with 36 hidden layers and 3 billion parameters to generate the embeddings for the target and the interactor protein sequences. The embedding dimension was 2,560. The embeddings for the two protein sequences were summed and used as the features for the XGBClassifier. The hyperparameters used during the training were, learning_rate of 0.45903, n_estimators=94 and max_depth=9. We performed a fivefold stratified k-fold cross-validation to report the mean AUROC.

All datasets were read in using python pandas (v.1.2.4), and the arrays and matrices were handled using numpy (v.1.20.1). The plots were generated using matplotlib (v.3.6.3).

Ethics declaration

All animal work was performed as per Institutional Animal Care and Use Committee (IACUC) guidelines under an approved IACUC protocol (nos. 20037102 and 22081781).

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

Data availability