Abstract

Associative learning depends on contingency, the degree to which a stimulus predicts an outcome. Despite its importance, the neural mechanisms linking contingency to behavior remain elusive. In the present study, we examined the dopamine activity in the ventral striatum—a signal implicated in associative learning—in a Pavlovian contingency degradation task in mice. We show that both anticipatory licking and dopamine responses to a conditioned stimulus decreased when additional rewards were delivered uncued, but remained unchanged if additional rewards were cued. These results conflict with contingency-based accounts using a traditional definition of contingency or a new causal learning model (ANCCR), but can be explained by temporal difference (TD) learning models equipped with an appropriate intertrial interval state representation. Recurrent neural networks trained within a TD framework develop state representations akin to our best ‘handcrafted’ model. Our findings suggest that the TD error can be a measure that describes both contingency and dopaminergic activity.

This is a preview of subscription content, access via your institution

Access options

Access Nature and 54 other Nature Portfolio journals

Get Nature+, our best-value online-access subscription

$32.99 / 30 days

cancel any time

Subscribe to this journal

Receive 12 print issues and online access

$259.00 per year

only $21.58 per issue

Buy this article

- Purchase on SpringerLink

- Instant access to full article PDF

Prices may be subject to local taxes which are calculated during checkout

Similar content being viewed by others

Data availability

All behavioral and photometry data are available via figshare at https://doi.org/10.6084/m9.figshare.28216202 (ref. 69). Source data are provided with this paper.

Code availability

The code used to analyze photometry data, do modeling and generate the figures is available via figshare at https://doi.org/10.6084/m9.figshare.28216202 (ref. 69).

References

Rescorla, R. A. Pavlovian conditioning. It’s not what you think it is. Am. Psychol. 43, 151–160 (1988).

Gibbon, J., Berryman, R. & Thompson, R. L. Contingency spaces and measures in classical and instrumental conditioning. J. Exp. Anal. Behav. 21, 585–605 (1974).

Hallam, S. C., Grahame, N. J. & Miller, R. R. Exploring the edges of Pavlovian contingency space: an assessment of contingency theory and its various metrics. Learning Motivation 23, 225–249 (1992).

Cheng, P. W. From covariation to causation: a causal power theory. Psychol. Rev. 104, 367 (1997).

Gallistel, C. R., Craig, A. R. & Shahan, T. A. Contingency, contiguity, and causality in conditioning: applying information theory and Weber’s Law to the assignment of credit problem. Psychol. Rev. 126, 761–773 (2019).

Allan, L. G. Human contingency judgments: rule based or associative? Psychol. Bull. 114, 435–448 (1993).

Bermudez, M. A. & Schultz, W. Responses of amygdala neurons to positive reward-predicting stimuli depend on background reward (contingency) rather than stimulus-reward pairing (contiguity). J. Neurophysiol. 103, 1158–1170 (2010).

Griffiths, T. L. & Tenenbaum, J. B. Structure and strength in causal induction. Cogn. Psychol. 51, 334–384 (2005).

Gershman, S. J. & Ullman, T. D. Causal implicatures from correlational statements. PLoS ONE 18, e0286067 (2023).

Papini, M. R. & Bitterman, M. E. The role of contingency in classical conditioning. Psychol. Rev. 97, 396–403 (1990).

Kamin, L. Selective association and conditioning. In Fundamental Issues in Associative Learning (eds Mackintosh, N. J. & Honig, W. K.) 42–64 (Dalhousie Univ. Press, 1969).

Rescorla, R. A. & Wagner, A. R. A theory of Pavlovian conditioning: variations in the effectiveness of reinforcement and nonreinforcement. In Classical Conditioning II: Current Research and Theory (eds Black, A. H. & Prokasy, W. F.) 64–99 (Appleton Century Crofts, 1972).

Pearce, J. M. & Bouton, M. E. Theories of associative learning in animals. Annu. Rev. Psychol. 52, 111–139 (2001).

Bouton, M. E. Learning and Behavior: A Contemporary Synthesis (Sinauer Associates, Inc., 2007).

Madarasz, T. J. et al. Evaluation of ambiguous associations in the amygdala by learning the structure of the environment. Nat. Neurosci. 19, 965–972 (2016).

Sutton, R. S. & Barto, A. G. Reinforcement Learning: An Introduction vol. 1 (MIT, 1998).

Mnih, V. et al. Human-level control through deep reinforcement learning. Nature 518, 529–533 (2015).

Schultz, W., Dayan, P. & Montague, P. R. A neural substrate of prediction and reward. Science 275, 1593–1599 (1997).

Cohen, J. Y., Haesler, S., Vong, L., Lowell, B. B. & Uchida, N. Neuron-type-specific signals for reward and punishment in the ventral tegmental area. Nature 482, 85–88 (2012).

Amo, R. et al. A gradual temporal shift of dopamine responses mirrors the progression of temporal difference error in machine learning. Nat. Neurosci. 25, 1082–1092 (2022).

Niv, Y. Reinforcement learning in the brain. J. Math. Psychol. 53, 139–154 (2009).

Jeong, H. et al. Mesolimbic dopamine release conveys causal associations. Science 378, eabq6740 (2022).

Garr, E. et al. Mesostriatal dopamine is sensitive to changes in specific cue-reward contingencies. Sci. Adv. 10, eadn4203 (2024).

Durlach, P. J. Role of signals for unconditioned stimulus absence in the sensitivity of autoshaping to contingency. J. Exp. Psychol. Animal Behavior Processes 15, 202–211 (1989).

de Jong, J. W. et al. A neural circuit mechanism for encoding aversive stimuli in the mesolimbic dopamine system. Neuron 101, 133–151.e7 (2019).

Akam, T., Costa, R. & Dayan, P. Simple plans or sophisticated habits? State, transition and learning interactions in the two-step task. PLoS Comput. Biol. 11, e1004648 (2015).

Takahashi, Y. K. et al. Expectancy-related changes in firing of dopamine neurons depend on orbitofrontal cortex. Nat. Neurosci. 14, 1590–1597 (2011).

Starkweather, C. K. & Uchida, N. Dopamine signals as temporal difference errors: recent advances. Curr. Opin. Neurobiol. 67, 95–105 (2021).

Takahashi, Y. K. et al. Dopaminergic prediction errors in the ventral tegmental area reflect a multithreaded predictive model. Nat. Neurosci. 26, 830–839 (2023).

Daw, N. D., Courville, A. C. & Touretzky, D. S. Representation and timing in theories of the dopamine system. Neural Comput. 18, 1637–1677 (2006).

Kobayashi, S. & Schultz, W. Influence of reward delays on responses of dopamine neurons. J. Neurosci. 28, 7837–7846 (2008).

Masset, P. et al. Multi-timescale reinforcement learning in the brain. Preprint at bioRxiv https://doi.org/10.1101/2023.11.12.566754 (2023).

Ludvig, E. A., Sutton, R. S. & Kehoe, E. J. Stimulus representation and the timing of reward-prediction errors in models of the dopamine system. Neural Comput. 20, 3034–3054 (2008).

Starkweather, C. K., Babayan, B. M., Uchida, N. & Gershman, S. J. Dopamine reward prediction errors reflect hidden-state inference across time. Nat. Neurosci. 20, 581–589 (2017).

Starkweather, C. K., Gershman, S. J. & Uchida, N. The medial prefrontal cortex shapes dopamine reward prediction errors under state uncertainty. Neuron 98, 616–629.e6 (2018).

Namboodiri, V. M. K. et al. Single-cell activity tracking reveals that orbitofrontal neurons acquire and maintain a long-term memory to guide behavioral adaptation. Nat. Neurosci. 22, 1110–1121 (2019).

Schultz, W. Dopamine reward prediction-error signalling: a two-component response. Nat. Rev. Neurosci. 17, 183–195 (2016).

Lak, A., Nomoto, K., Keramati, M., Sakagami, M. & Kepecs, A. Midbrain dopamine neurons signal belief in choice accuracy during a perceptual decision. Curr. Biol. 27, 821–832 (2017).

Hennig, J. A. et al. Emergence of belief-like representations through reinforcement learning. PLoS Comput. Biol. 19, e1011067 (2023).

Namboodiri, V. M. K. & Stuber, G. D. The learning of prospective and retrospective cognitive maps within neural circuits. Neuron 109, 3552–3575 (2021).

van Zessen, R. et al. Cue and reward evoked dopamine activity is necessary for maintaining learned Pavlovian associations. J. Neurosci. 41, 5004–5014 (2021).

Kalmbach, A. et al. Dopamine encodes real-time reward availability and transitions between reward availability states on different timescales. Nat. Commun. 13, 3805 (2022).

Namboodiri, V. M. K. How do real animals account for the passage of time during associative learning? Behav. Neurosci. 136, 383–391 (2022).

Naneix, F., Marchand, A. R., Di Scala, G., Pape, J.-R. & Coutureau, E. A role for medial prefrontal dopaminergic innervation in instrumental conditioning. J. Neurosci. 29, 6599–6606 (2009).

Piquet, R., Faugère, A. & Parkes, S. L. A hippocampo-cortical pathway detects changes in the validity of an action as a predictor of reward. Curr. Biol. 34, 24–35.e4 (2024).

Delamater, A. R., Siegel, D. B. & Tu, N. C. Learning about reward identities and time. Behav. Process. 207, 104859 (2023).

Gallistel, C. R. & Gibbon, J. Time, rate, and conditioning. Psychol. Rev. 107, 289–344 (2000).

Willmore, L. et al. Overlapping representations of food and social stimuli in mouse VTA dopamine neurons. Neuron 111, 3541–3553.e8 (2023).

Millidge, B. et al. Reward-bases: dopaminergic mechanisms for adaptive acquisition of multiple reward types. PLoS Comput. Biol. 19, e1012580 (2024).

Pearl, J. Causality (Cambridge Univ. Press, 2009).

Baird, L. C. Advantage updating. Technical report WL-TR-93-1146 (Wright-Patterson Air Force Base, 1993); https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.135.9434&rep=rep1&type=pdf

Dayan, P. & Balleine, B. W. Reward, motivation, and reinforcement learning. Neuron 36, 285–298 (2002).

Schulman, J., Moritz, P., Levine, S., Jordan, M. & Abbeel, P. High-dimensional continuous control using generalized advantage estimation. Preprint at https://arxiv.org/abs/1506.02438 (2018).

Pan, H.-R., Gürtler, N., Neitz, A. & Schölkopf, B. Direct advantage estimation. In Advances in Neural Information Processing Systems Vol. 35 (eds Koyejo, S. et al.) 11869–11880 (Curran Associates, Inc., 2022).

Veitch, V., Wang, Y. & Blei, D. Using embeddings to correct for unobserved confounding in networks. In Advances in Neural Information Processing Systems Vol. 32 (eds Wallach, H. et al.) https://papers.nips.cc/paper_files/paper/2019/file/af1c25e88a9e818f809f6b5d18ca02e2-Paper.pdf (Curran Associates, Inc., 2019).

Rafiee, B. et al. From eye-blinks to state construction: diagnostic benchmarks for online representation learning. Adapt. Behav. 31, 3–19 (2023).

Tomov, M. S., Dorfman, H. M. & Gershman, S. J. Neural computations underlying causal structure learning. J. Neurosci. 38, 7143–7157 (2018).

Dezfouli, A. & Balleine, B. W. Habits, action sequences and reinforcement learning. Eur. J. Neurosci. 35, 1036–1051 (2012).

Langdon, A. J., Sharpe, M. J., Schoenbaum, G. & Niv, Y. Model-based predictions for dopamine. Curr. Opin. Neurobiol. 49, 1–7 (2018).

Bäckman, C. M. et al. Characterization of a mouse strain expressing Cre recombinase from the 3ʹ-untranslated region of the dopamine transporter locus. Genesis 44, 383–390 (2006).

Daigle, T. L. et al. A suite of transgenic driver and reporter mouse lines with enhanced brain cell type targeting and functionality. Cell 174, 465–480.e22 (2018).

Kim, C. K. et al. Simultaneous fast measurement of circuit dynamics at multiple sites across the mammalian brain. Nat. Methods 13, 325–328 (2016).

Sabatini, B. L. The impact of reporter kinetics on the interpretation of data gathered with fluorescent reporters. Preprint at bioRxiv https://doi.org/10.1101/834895 (2019).

Gallego, J. A., Perich, M. G., Chowdhury, R. H., Solla, S. A. & Miller, L. E. Long-term stability of cortical population dynamics underlying consistent behavior. Nat. Neurosci. 23, 260–270 (2020).

Bach, F. R. & Jordan, M. I. Kernel independent component analysis. J. Mach. Learn. Res. 3, 1–48 (2003).

Sussillo, D., Churchland, M. M., Kaufman, M. T. & Shenoy, K. V. A neural network that finds a naturalistic solution for the production of muscle activity. Nat. Neurosci. 18, 1025–1033 (2015).

Floeder, J. R., Jeong, H., Mohebi, A. & Namboodiri, V. M. K. Mesolimbic dopamine ramps reflect environmental timescales. eLife https://doi.org/10.7554/eLife.98666.1 (2024).

Loewinger, G., Cui, E., Lovinger, D. & Pereira, F. A statistical framework for analysis of trial-level temporal dynamics in fiber photometry experiments. eLife https://doi.org/10.7554/elife.95802.1 (2024).

Qian, L. et al. Prospective contingency explains behavior and dopamine signals during associative learning, Qian et al., 2025. figshare https://doi.org/10.6084/m9.figshare.28216202 (2025).

Acknowledgements

We thank H. Wu and N. Martiros for technical assistance on the behavioral code design, M. Watabe-Uchida for discussion and advice on task design, and C. Dulac, F. Engert and all lab members from N.U.’s lab and V.N.M.’s lab for discussion. This work was supported by grants from the National Institutes of Health (grant nos. U19 NS113201 to N.U. and S.J.G. and R01DC017311 to N.U. and V.N.M.), the Simons Collaboration on Global Brain (to N.U.), the Air Force Office of Scientific Research (grant no. FA9550-20-1-0413 to S.J.G. and N.U.), the Human Frontier Science Program (grant no. LT000801/2018 to S.M.) and the Harvard Brain Science Initiative and the Brain and Behavior Research Foundation (NARSAD Young Investigator no. 30035 to S.M.). The funders had no role in study design, data collection and analysis, decision to publish or preparation of the manuscript.

Author information

Authors and Affiliations

Contributions

L.Q., N.U. and V.N.M conceived the conceptual framework and designed the behavioral tasks and recording experiments. L.Q. conducted all experiments and data analysis. S.M. established the multifiber photometry system and supplied the transgenic mice. M.B., N.U. and L.Q. discussed the modeling framework. M.B. constructed all the TD learning models and conducted the analysis. J.H. constructed RNN models. The RNN-modeling results were analyzed by M.B., J.H. and L.Q. The results were discussed and interpreted by L.Q., N.U., M.B., J.H, S.G. and V.N.M. The manuscript was written by M.B., L.Q. and N.U. All the other authors provided feedback.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Neuroscience thanks the anonymous reviewers for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Population Average Behavior per session.

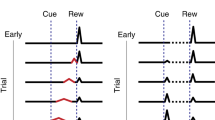

For all panels: Deg group, n = 11; Conditioning, n = 6; Cued Reward n = 12. Error bars are SEM. (a, b, c, d) Bar graphs comparing the average number of licks to Odor A during the first 3 s post-stimulus (a) and during ITI (b), latency to lick (c), and fraction correct (d) in the final sessions of phase 1 and phase 2 for Deg, Cond, and CuedRew groups. Error bars represent SEM. Asterisks denote statistical significance: ns p > 0.05, **p < 0.01, paired two-sided Student’s t-test. (e) Session-wise variation in anticipatory licking for Odor A trials, broken down into early, middle, and late blocks, for all groups. (f, g, h). Line graphs showing the average number of licks to Odor A (colored) during ITI (f), latency to lick after Odor A (g) and fraction correct in Odor A trials (h) for each session in the Conditioning, Degradation, and Cued Reward phase. (i) Anticipatory licking rate in Odor A trials (colored) and in Odor B trials (grey) across multiple phases: Conditioning (Phase I), Degradation (Phase II), Recovery (Phase III), Extinction (Phase IV), and post-Extinction Recovery (Phase V). (j) Anticipatory licking to Odor C develops quickly compared to Odor A, potentially reflecting generalization. (k, l) PSTH showing the average licking response of mice in Deg group (k) and CuedRew group (l) to the various events. The response is time-locked to the odor presentation (time 0). The shaded area indicates the standard error of the mean (SEM).

Extended Data Fig. 2 Dopamine responses are highly correlated across recording sites.

(a) Averaged dopamine axonal responses to Odor A during rewarded trials for both Deg group and CuedRew group, depicted for Phase I session 5 and Phase II session 10 across all recorded sites. (b) Correlation matrix for averaged dopamine responses to Odor A during rewarded trials, comparing across sites from the Deg groups during sessions 5 and 10. Cosine similarity was calculated by averaging z-scored responses across trials within animals, then across animals and then computing the cosine similarity between each recording site. For some olfactory tubercule recording sites there was no discernable signal and were thus excluded from this analysis. Sample size (n) report per site. (c) Population average dopamine responses to Odor A in rewarded trials across sessions 1 to 10 for both Deg and CuedRew groups, detailing the changes in response through Phase I and Phase II.

Extended Data Fig. 3 Population Average Dopamine Response per session.

(a) Predicted reward response in Odor A trials for trials in which the first lick after reward delivery was recording within 200 ms (green) or between 400 and 800 ms (red). There is a biphasic response pattern in the slow licks, suggesting there may be sensory cues associated with reward delivery that act as conditioned stimuli. (b) Three consecutive trials from the same animal in the same session, showing the effect of lick time. The dotted line indicates the first recorded lick after reward delivery. The lick timing has an effect on the height and shape of the response. (c) Mean peak dopamine axonal signal (z-scored) of cue response (orange) and reward response (cyan) in Odor A rewarded trial by sessions for the Deg group (n = 8) across multiple phases: Conditioning (Phase I), Degradation (Phase II), Recovery (Phase III), Extinction (Phase IV), and post-Extinction Recovery (Phase V). Except in extinction, only trials in which the first lick was recorded under 250 ms was included in this analysis. (d) As in panel a, for unpredicted rewards delivered in the degradation condition. (e) Example in three trials from the same session and animal of the response to unpredicted reward. The dotted line indicates the first recorded lick. (f) Reward responses by session and by group. In the degradation group (n = 8), the unpredicted reward elicited greater responses than the reward delivered after Odor A on all sessions (mixed-effect model, p < 0.001, within animal comparison). In the Cued Rew group (n = 5), the reward delivered after Odor C elicited a greater response than the reward delivered after Odor A on the first session of Cued Rew condition (two sided mixed-effect model, p < 0.05, within animal comparison). Mean peak dopamine axonal signal (z-scored) across sessions for four distinct conditions, (g) peak response of early odor B response, (h) area under curve of late odor B response and (i) area under curve for reward omission. (j) Response to Odor C (rewarded) and (k) Odor C (omission), population average per session. In all panels, error bars are SEM.

Extended Data Fig. 4 Effect of discount factor on model estimates.

Top: Influence of discount factor (γ) on relative predicted Odor A response relative to Conditioning (a) or relative to unpredicted reward (b), where reward size = 1 for four models presented in Fig. 3. Bottom left scale showing discount factor converted to step size (0.2 s), other axes use per second discount. Tested range: 0.5–0.975 discount per 0.2 s in 0.025 steps. Dotted line indicates discount factor used in main text. Bottom: Effect size of transition probability in Belief-State model. The Belief-State model (c) used assumes a fixed rate of transition (p) from the Wait state to the Pre-state with each timestep. Varying p around the value fitted to the experimental parameters has minimal effect on prediction (note logarithimic scale, (d) normalized relative to conditioning or (e) to unpredicted reward). If p is assumed extremely high or low than the transition from the Wait state to the Pre-state either happens almost instaneously or not at all, resulting in a single state dominating the ITI and the model behaving like the Cue-Context model.

Extended Data Fig. 5 Microstimuli simulation.

(a) Complete serial compound state spaces assume non-overlapping microstates. (b) Microstimuli state space representation assumes each stimuli produces a sequence of microstimuli that diminish in height (diminishing relative contribution) and grow in width (growing temporal imprecision). (c) High σ and low η microstimuli simulations have low temporal precision – each state substantially overlaps with the previous. (d) With low temporal precision, microstimuli behaves like the Cue-Context model, reproducing the pattern of results qualitatively but not quantitatively. (e) However, at this level there is no expected decrease in the predicted reward response, as the timing in insufficiently accurate. (f) In the opposite situation, with low σ and high η, microstimuli state representations have higher precision in time. (g) However, in this case it suffers from the same issues as the CSC-with-ITI states model, predicting little difference between the Cued Reward and Degradation conditions. (h) Microstimuli help explains why the decrease in predicted reward response is not as predicted by CSC models, in which the reward is perfectly predictable (and thus the reward response following 75% reward probability cues should be 25% the magnitude of an unpredicted reward). (i) From Fig. 2, mean peak dopamine axonal signal for the last session in Phase 1 (Conditioning) and 2 (Degradation and Cued Reward) for both Deg (n = 8) and CuedRew (n = 5) groups. Error bars represent SEM. ***, P < 0.001 in two-sided mixed-effects model with Tukey HSD posthoc. (j) Predicted reward response following Odor A in session 1 (green) versus session 5 (red). (k) Maximum axonal calcium response for predicted reward response following Odor A in session 1 versus session 5 (n = 13). Normalized by subject to session 1. Error bars represent SEM.

Extended Data Fig. 6 Comparison of reward and omission responses between experimental data, Belief-State model and value-RNN predictions.

(a) Plots averaged from one representative simulation of Odor A rewarded trial (n = 4,000 simulated trials) for four distinct conditions using the Belief-State model. Graphs are for the corresponding value function of Odor A rewarded trials, with Pre state, ISI state and Wait state annotated. (b) Z-scored DA axonal signals to reward omission and predicted reward following Odor A quantified from the red shaded area. Line graphs (right) shows mean z-scored response over multiple sessions for each condition. Statistical analysis was performed on data from the first and last session of these conditions. Error bars are SEM. ns, P > 0.05; **, P < 0.01, paired t-test. (c) The predictions of the Belief-State model for reward omission and predicted reward (mean, error bars: SD). (d) The experimental data for reward omission and predicted reward (mean, error bars: SEM). ns, P > 0.05; **, P < 0.01; ***, P < 0.001, Welch’s t-test. (e) The predictions of the Value-RNN models for reward omission and predicted reward (mean, error bars: SD). (f) The experimental data, TD error prediction by Belief-State model and Value-RNN model for uncued reward response in Degradation condition. While the Belief-State model captured the downward trend in response magnitude, none of the three statistical tests showed significant changes.

Extended Data Fig. 7 Methodology for visualizing state space from hidden unit activity.

Illustration for visualizing common state space of RNN models. RNN hidden unit activity was first projected into principal component space, then canonical correlation analysis was used to align between different conditions.

Extended Data Fig. 8 Outcome-specific contingency degradation explained by Belief-State model and Value-RNN model.

(a) Experimental design of Garr et al., two cues predicted either a liquid or food reward. During degradation, every 20 s the liquid reward was delivered with 50% probability. The ITI length was drawn from an exponential distribution with mean of 4 minutes. (b) Belief-State model design. The Belief-State model was extended to include a second series of ISI substates to reflect the two types of rewarded trials. The model was then independently trained on the liquid reward and food reward. (c) The value-RNN model design – as (b) but replacing the Belief-State model with the value-RNN, using a vector-valued RPE as feedback, with each channel reflecting one of the reward types. (d-f) Summary of predicted RPE responses from Belief-State Model and Value-RNN (vRNN). The RPE was calculated as the absolute difference between the liquid RPE and food RPE. Other readout functions (for example weighted sum) produce similar results. Both model predictions match experimental results with degraded (D) cue (d) and degraded reward (e) having a reduced dopamine response versus non-degraded (ND). Furthermore, average RPE during ISI (3 seconds after cue on) and ITI (3 seconds before ITI) capture measured experimental trend. Error bars are SEM.

Supplementary information

Supplementary Video 1

Video 1 State space trajectories. Animation of trajectories in CCA space from RNN presented in Fig. 6e. In sequence, trajectories showing odor A (rewarded), odor A (unrewarded), odor A (rewarded and unrewarded), odor B and then all together for the three conditions. Real-time speed multiples are indicated top right. ITI length is extended from training or actual experiment to demonstrate the return to the original (‘Pre’) state in the conditioning and cued reward conditions but the delayed return in the degradation condition.

Source data

Source Data Fig. 1

Statistical source data.

Source Data Fig. 2

Statistical source data.

Source Data Fig. 3

Statistical source data.

Source Data Fig. 4

Statistical source data.

Source Data Fig. 5

Statistical source data.

Source Data Fig. 6

Statistical source data.

Source Data Fig. 7

Statistical source data.

Source Data Extended Data Fig. 1

Statistical source data.

Source Data Extended Data Fig. 2

Statistical source data.

Source Data Extended Data Fig. 3

Statistical source data.

Source Data Extended Data Fig. 4

Statistical source data.

Source Data Extended Data Fig. 5

Statistical source data.

Source Data Extended Data Fig. 6

Statistical source data.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Qian, L., Burrell, M., Hennig, J.A. et al. Prospective contingency explains behavior and dopamine signals during associative learning. Nat Neurosci 28, 1280–1292 (2025). https://doi.org/10.1038/s41593-025-01915-4

Received:

Accepted:

Published:

Issue date:

DOI: https://doi.org/10.1038/s41593-025-01915-4

This article is cited by

-

What dopamine teaches depends on what the brain believes

Nature Neuroscience (2025)