Abstract

Named entity recognition is a fundamental subtask for knowledge graph construction and question answering in the agricultural diseases and pests field. Although several studies have addressed it, the scarcity of annotated Chinese datasets has restricted the development of agricultural diseases and pests named entity recognition (ADP-NER). To address this issue, we first annotated AgCNER, a large-scale corpus for the Chinese ADP-NER task. It contains 13 categories, 206,992 entities, and 66,553 samples with 3,909,293 characters. Compared with other datasets, AgCNER compares favorably in terms of the number of categories, entities, samples, and characters. Moreover, it is the first publicly available corpus in the agricultural field. In addition, we fine-tuned and released the agricultural language model AgBERT. Finally, comprehensive experiments showed that BiLSTM-CRF achieved an F1-score of 93.58%, which was further improved to 94.14% using BERT. Analyses from multiple aspects verified the rationality of AgCNER and the effectiveness of AgBERT. The annotated corpus and fine-tuned language model are publicly available at https://doi.org/XXX and https://github.com/guojson/AgCNER.git.


Background & Summary

As a basic subtask of information extraction, named entity recognition (NER) aims to extract named entities from unstructured text and plays an important role in many natural language processing tasks such as relation extraction and question answering1. Over the years, researchers worldwide have conducted in-depth NER research in various languages such as Chinese2, English3, and Arabic4, and in various vertical fields such as social media5, biomedicine6, and cybersecurity7. In recent years, NER has also been applied to the field of agricultural diseases and pests to identify related entities such as “小麦 (wheat)” and “玉米大斑病 (corn northern leaf blight)”. It is an important component of downstream tasks such as agricultural knowledge graph construction and intelligent question answering, and it has practical significance and theoretical value for improving the efficiency and accuracy of crop disease and pest diagnosis and control, reducing food losses, and ensuring food security8.

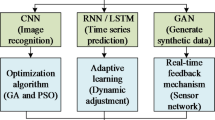

In recent years, various deep learning-based NER methods have been proposed. By the main type of neural network, classic NER models can be divided into those based on CNN-CRF9,10, BiLSTM-CRF11,12, and Transformer13,14,15. By chronological order, NER methods can be divided into traditional statistical machine learning methods16, traditional word embedding-based models12, external feature enhancement models15,17,18,19, and large-scale pre-trained language models20,21. In addition, NER tools such as NLTK22 and spaCy23 have been developed. However, no pre-trained language model oriented to agricultural diseases and pests has been reported. Moreover, the ADP-NER task remains challenging due to the scarcity of publicly available datasets, which are the core foundation for research in this domain. Therefore, it is necessary to establish a comprehensive and unified benchmark corpus to fill this gap in ADP-NER tasks.

To achieve this goal, many efforts have been made to construct agriculture-related NER corpora, as detailed in Table 1. Malarkodi et al.24 constructed the first agriculture-oriented dataset using CRF, which included 19 fine-grained tags and 11,041 entities. Biswas et al.25 introduced WordNet to construct an agricultural dataset and dictionary covering 5 major categories, i.e., grains, fruits, nuts, spices, and vegetables. However, these works mainly focus on English and are not applicable to Chinese, let alone to agricultural diseases and pests. To this end, Li et al.26 collected a dataset for crop diseases and pests with 3 entity categories, i.e., crops, pests and diseases, and pesticides. Zhang et al.27 further added a new type, fertilizer, resulting in a dataset with 4 entity categories.

To address the limited size and low quality of agricultural datasets, Qian et al.28 implemented a BiLSTM-CRF model. However, their dataset contains only 6 categories and 8,873 entities, which is relatively small. Wei et al.29 focused on insufficient extraction of character position, context, and long-distance dependency information, and constructed an agricultural dataset containing 37,243 samples and 29,790 agricultural entities. However, it includes only 4 main types. Moreover, Jiang et al.30 used authoritative agricultural books as data sources and introduced active learning and crowdsourcing to build a dataset with 9 entity types and more than 48,000 entities, but it has not been made public. Besides, Chen et al.31 constructed a relatively complete agricultural knowledge graph, AgriKG, which defines 16 entity categories such as animal, plant, and agricultural product, includes more than 150,000 entities, and supports entity retrieval and question answering.

In addition, some works focused on a single crop. Shen et al.32 labeled 1,581 samples of rice diseases and pests with 4 entity types, i.e., disease, pest, weed, and pesticide, and achieved better recognition results with their proposed JE-DPW model. Zhang et al.33 divided apple-related entities into 21 categories, constructed ApdCNER, a dataset for Chinese apple named entity recognition, and achieved an F1-score of 92.14% on it with their proposed APD-CA model. Several works have also annotated NER datasets for specific crops such as wheat34 and grapes35, but these cannot effectively cover other crops36. More importantly, as marked by ✗ in Table 1, none of the corpora mentioned above is publicly available, which hinders research on the ADP-NER task.

In summary, efforts have been made to collect and annotate agricultural datasets. Nevertheless, constructing an ADP-NER-oriented benchmark dataset faces two main challenges. (1) There is no publicly available dataset for the ADP-NER task, so a dataset must first be self-constructed, which is time-consuming. (2) Although the above works provide some reference for dividing entity categories, they still have shortcomings in entity categories, sample size, and crop coverage. To tackle these issues, based on existing works8,37, we constructed a large-scale dataset named AgCNER for Chinese named entity recognition in the agricultural diseases and pests domain. It contains a total of 206,992 entities and 66,553 samples, with over 3 million characters, covering 13 categories such as Crop, Disease, Pest, Drug, Cultivar, Fertilizer, and Company. The original texts come from the Internet and have a certain degree of diversity and universality. In addition, we trained a series of NER models on AgCNER and fine-tuned a pre-trained language model for ADP-NER tasks, named AgBERT, to dynamically generate high-quality contextual semantic representations with rich domain information. Finally, the domain dataset AgCNER and the fine-tuned language model AgBERT are publicly available on GitHub, the first such release in the agricultural diseases and pests field and even in the broader agricultural domain.

Methods

Entity types

Most general domain-oriented datasets focus on annotating common entity types such as Person, Location, and Organization, while vertical domain-oriented datasets such as Biomedical38, Archaeological39, and Geographic Information40 focus on domain entity types such as 慢性阻塞性肺疾病 (chronic obstructive pulmonary disease), 斧头 (axe), and 通济渠 (Tongji Canal). However, owing to differences across domains, these entity types do not apply to the agricultural diseases and pests field. Since there is currently no unified standard for dividing entity types, we divided the entities of agricultural diseases and pests into 10 types, i.e., Crop, Disease, Pest, Drug, Fertilizer, Pathogens, Period, Part, Cultivar, and Biosystematic, following previous agricultural works24,28,30,31,32. In addition, two general entity types, Organization and Company, were included. Moreover, the category Other was introduced to mark other potential entities. In total, 13 categories were defined, as detailed in Table 2.

Data acquisition

To obtain a sufficient corpus, seven of the most authoritative data sources related to agricultural diseases and pests were considered, as listed in Table 3. Among them, article abstracts and semi-structured data were crawled from China National Knowledge Infrastructure, Wanfang Data, and Baidu Baike, using specific diseases and pests (https://github.com/guojson/AgCNER/blob/main/diseases_and_pests) such as “小麦赤霉病 (wheat scab)” and “稻瘟病 (rice blast)” as keywords. Irrelevant texts were removed under the guidance of experts. Moreover, to ensure diversity, we also obtained related texts from multiple platforms such as the China Agricultural Technology Promotion Information Platform and China Pesticide Information Network. Domain experts were also invited to verify the relevance and accuracy of the samples. After preprocessing such as deduplication and denoising, we obtained 66,553 original samples.
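The deduplication and denoising step can be sketched as below. The concrete cleaning rules (stripping HTML tags left over from crawling, NFKC normalization of full-width characters, and collapsing whitespace) are assumptions for illustration only; the text does not specify which denoising operations were applied.

```python
import re
import unicodedata

# Hypothetical cleaning rules; the actual denoising steps are not specified in the text.
HTML_TAG = re.compile(r"<[^>]+>")
WHITESPACE = re.compile(r"\s+")


def clean(text: str) -> str:
    """Remove HTML remnants, normalize full-width characters, and collapse whitespace."""
    text = HTML_TAG.sub("", text)
    text = unicodedata.normalize("NFKC", text)  # e.g. full-width 'Ａ' -> 'A', U+3000 -> ' '
    return WHITESPACE.sub(" ", text).strip()


def deduplicate(texts):
    """Clean each text and keep the first occurrence of every distinct, non-empty result."""
    seen, kept = set(), []
    for raw in texts:
        cleaned = clean(raw)
        if cleaned and cleaned not in seen:
            seen.add(cleaned)
            kept.append(cleaned)
    return kept
```

Exact matching after normalization is the simplest option; near-duplicate detection would also catch lightly edited copies of the same text, at a higher cost.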

Annotation tool and process

To improve annotation efficiency, we developed a new named entity annotation tool named ChineseNERAnno (https://github.com/guojson/ChineseNERAnno.git) in our previous work37. It is a general NER annotation tool applicable not only to Chinese NER tasks but also to languages that use spaces as separators, such as English, Arabic, and German. Its front end mainly includes the main interface (a) and the operation panel (b), as shown in Fig. 1. The operation panel provides predefined buttons for the entity categories, which are used to mark entities in the text with the corresponding category. The main interface displays the samples to be labeled, and entities are shown with tag pairs such as “<e1></e1>” and in different colors according to their category. The category numbers are aligned with the category names in Table 2; for example, an entity belonging to Disease is labeled with the tag pair “<e2></e2>”. In addition, to ensure the consistency and accuracy of annotation, a data dictionary was built on an SQLite database, which enables semi-automatic annotation through entity matching. The data dictionary can be updated continuously with newly annotated entities. Besides, to avoid confusion with the original data, the annotated corpus is saved as a text file with the suffix “.ann”, whose format is shown in Fig. 1. The final corpus can be converted into “.txt” files through the “导出 (Export)” menu in different label formats, i.e., BIO (B-begin, I-inside, O-outside) as shown in Fig. 2(a), BMES (B-begin, M-middle, E-end, S-single) as shown in Fig. 2(b), or BIOES (B-begin, I-inside, O-outside, E-end, S-single) as shown in Fig. 2(c).
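The export step can be illustrated with a small converter from the inline tag format to character-level BIO, BMES, or BIOES labels. This is a sketch, not the ChineseNERAnno implementation. Only the pairing of “<e2></e2>” with Disease comes from the text; the other entries of the hypothetical CATEGORY_TAGS mapping are placeholders for the numbering in Table 2, and using O for non-entity characters in BMES is also an assumption.

```python
import re

# Hypothetical number-to-tag mapping; only e2 -> DIS (Disease) is stated in the text.
CATEGORY_TAGS = {"1": "CRO", "2": "DIS", "3": "PET"}

# <eK>...</eK> with a matching closing number (\1 back-reference).
ENTITY_PATTERN = re.compile(r"<e(\d+)>(.*?)</e\1>")


def parse_inline(text):
    """Split inline-tagged text into characters and (start, end, tag) entity spans."""
    chars, spans, pos = [], [], 0
    for match in ENTITY_PATTERN.finditer(text):
        chars.extend(text[pos:match.start()])  # untagged characters before the entity
        start = len(chars)
        chars.extend(match.group(2))
        spans.append((start, len(chars), CATEGORY_TAGS[match.group(1)]))
        pos = match.end()
    chars.extend(text[pos:])
    return chars, spans


def to_labels(n_chars, spans, scheme="BIO"):
    """Assign one label per character under the BIO, BMES, or BIOES scheme."""
    labels = ["O"] * n_chars
    for start, end, tag in spans:
        for i in range(start, end):
            if scheme == "BIO":
                prefix = "B" if i == start else "I"
            elif end - start == 1:
                prefix = "S"  # single-character entity
            elif i == start:
                prefix = "B"
            elif i == end - 1:
                prefix = "E"
            else:
                prefix = "M" if scheme == "BMES" else "I"
            labels[i] = f"{prefix}-{tag}"
    return labels


chars, spans = parse_inline("<e1>水稻</e1>易感<e2>稻瘟病</e2>")
for char, label in zip(chars, to_labels(len(chars), spans, "BIOES")):
    print(char, label)  # one "character label" pair per line
```

The last loop prints the character-per-line layout of the exported “.txt” files, with the character and its label separated by a space.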

To ensure the quality of the dataset and to improve the speed and consistency of annotation, the corpus was annotated by 3 annotators under the guidance of domain experts. The basic annotation process, shown in Fig. 2, consists of four parts, i.e., semi-automatic annotation, agreement and consistency between annotators, unlabelled entity discovery, and export.

Notably, both automatic annotation and rigorous manual checking were used throughout the annotation process. Automatic annotation performs poorly at the beginning because the dictionary contains few annotated entities. Therefore, the early stage relies more on manual annotation to expand the vocabulary and correct incorrectly annotated entities. As more annotated entities are added to the vocabulary, however, the advantages of automatic annotation become increasingly prominent. Meanwhile, manual validation is still required to find incorrectly annotated entities and discover new ones.
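Dictionary-based pre-annotation can be sketched with Python's built-in sqlite3 module and greedy forward maximum matching. The table schema and the choice of forward maximum matching are assumptions; the text states only that an SQLite data dictionary supports semi-automatic annotation through entity matching, and every span proposed this way still needs the manual validation described above.

```python
import sqlite3


def open_dictionary(entries):
    """Create an in-memory entity dictionary (hypothetical schema: entity -> tag)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE entity_dict (entity TEXT PRIMARY KEY, tag TEXT NOT NULL)")
    add_entities(conn, entries)
    return conn


def add_entities(conn, entries):
    """Insert or update (entity, tag) pairs, e.g. after a batch of manual annotation."""
    conn.executemany("INSERT OR REPLACE INTO entity_dict (entity, tag) VALUES (?, ?)", entries)
    conn.commit()


def pre_annotate(conn, text):
    """Propose (start, end, tag) spans by greedy forward maximum matching."""
    lookup = dict(conn.execute("SELECT entity, tag FROM entity_dict"))
    max_len = max((len(entity) for entity in lookup), default=0)
    spans, i = [], 0
    while i < len(text):
        # Try the longest candidate first so that '小麦赤霉病' wins over '小麦'.
        for length in range(min(max_len, len(text) - i), 0, -1):
            tag = lookup.get(text[i:i + length])
            if tag is not None:
                spans.append((i, i + length, tag))
                i += length
                break
        else:
            i += 1  # no dictionary entry starts at this position
    return spans
```

Preferring the longest match reflects the observation that agricultural entities are often nested (a crop name inside a disease name); it also means a wrong long entry in the dictionary can mask a correct shorter one, which is one reason manual checking remains necessary.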

Besides, different annotators may understand the entity types differently, resulting in annotation conflicts. Therefore, after a certain number of samples had been labeled, strict manual verification following the principle that the minority obeys the majority was conducted to correct annotation errors and unify the annotators’ understanding. Meanwhile, Fleiss’ Kappa41, denoted as κ, was used to measure inter-rater agreement. The larger κ is, the more consistent the annotations are. It is generally accepted that κ > 0.8 indicates almost perfect agreement among annotators.

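$$\kappa = \frac{P_o - P_e}{1 - P_e}, \qquad P_o = \frac{1}{N n (n-1)} \sum_{i=1}^{N} \sum_{j=1}^{m} n_{ij}\,(n_{ij} - 1), \qquad P_e = \sum_{j=1}^{m} p_j^{2}, \qquad p_j = \frac{1}{N n} \sum_{i=1}^{N} n_{ij}$$

where n, N, and m represent the number of annotators, characters, and entity types, respectively, and $n_{ij}$ is the number of annotators who assigned character i to entity type j. $P_e$ is the sum of squares of the marginal distributions $p_j$ over the entity types j; $P_o$ is the average proportion of agreeing annotation pairs among all annotation pairs.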
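As a sketch, Fleiss’ Kappa can be computed directly from the quantities n, N, m, P_o, and P_e defined above. It assumes every item (here, a character) is labeled by the same n annotators and that the non-entity label O counts as one of the m categories; the text does not say how O characters were handled, so that choice is an assumption.

```python
from collections import Counter


def fleiss_kappa(ratings, categories):
    """Fleiss' kappa for N items, each labeled by the same n annotators.

    ratings[i] holds the n labels given to item i (here, one character);
    categories lists the m possible labels.
    """
    N = len(ratings)
    n = len(ratings[0])
    if n < 2 or any(len(item) != n for item in ratings):
        raise ValueError("every item needs the same number (>= 2) of annotators")
    if any(label not in categories for item in ratings for label in item):
        raise ValueError("every label must appear in categories")
    counts = [Counter(item) for item in ratings]  # counts[i][j] = n_ij
    # P_o: proportion of agreeing annotator pairs, averaged over items.
    p_o = sum(c[j] * (c[j] - 1) for c in counts for j in categories) / (N * n * (n - 1))
    # p_j: overall share of labels assigned to category j; P_e = sum of p_j squared.
    p_e = sum((sum(c[j] for c in counts) / (N * n)) ** 2 for j in categories)
    if p_e == 1:
        raise ValueError("kappa is undefined when every label falls in one category")
    return (p_o - p_e) / (1 - p_e)
```

For example, three annotators who agree on every character give κ = 1, while agreement no better than the label marginals would predict drives κ towards 0 or below.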

Quality control

In summary, we adopted various strategies for quality control. In the data acquisition stage, several authoritative and standardized domain portals were selected as the main data sources, and some samples from Baidu Baike were collected to improve the diversity of the corpus. In the data processing stage, besides data cleaning and noise removal, relevance screening was conducted under the guidance of domain experts. In the data annotation stage, multiple measures, i.e., the annotation tool, semi-automatic recognition, manual validation, agreement and consistency checks between annotators, and model prediction, were used to alleviate annotation conflicts while improving annotation speed. In particular, the BiLSTM-CRF model was used to discover as many unlabelled entities as possible. More detailed guidelines about the annotation process are available on the figshare platform42 at https://doi.org/10.6084/m9.figshare.c.6807873.v1.

Data Records

According to our statistics, approximately 121,700 entities were corrected during manual annotation, including 98,748 real entities and 22,952 pseudo entities with incorrect labels. Moreover, as the number of annotated samples increased, manual validation was needed less frequently, which accelerated annotation.

Finally, a large-scale Chinese named entity recognition corpus for agricultural diseases and pests, named AgCNER, was constructed. For the convenience of users and to support the evaluation of new ADP-NER models, the annotated corpus is publicly available on the figshare platform42 at https://doi.org/10.6084/m9.figshare.c.6807873.v1. It contains a total of 13 categories, 66,553 annotated samples, 206,992 entities, and 3,909,293 characters. As shown in Fig. 3, AgCNER mainly contains four files, i.e., a training set ‘train.txt’ with 47,840 sentences, a development set ‘dev.txt’ with 5,964 sentences, and a test set ‘test.txt’ with 5,975 sentences, split at a ratio of 8:1:1 in BIO format, plus the guidelines about the annotation tool and process. As shown in Fig. 2(a), each character is stored on its own line, followed by a space and its BIO label. Several examples are shown in Table 4. The distribution of each entity type in AgCNER is shown in Fig. 4. Several types such as CRO, PET, and DIS account for a relatively large proportion, suggesting that they receive more attention in the source texts and are likely easier to identify. In contrast, several types such as COM, BIS, and FER account for a relatively low proportion, which tends to lead to lower F1 values.
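A minimal reader for the released BIO files, together with a per-type entity count of the kind plotted in Fig. 4, might look as follows. The blank-line sentence separator is an assumption about the file layout, and the helper names are hypothetical.

```python
from collections import Counter


def read_bio(lines):
    """Parse 'character label' lines into (chars, labels) sentences.

    A blank line is assumed to separate sentences.
    """
    sentences, chars, labels = [], [], []
    for line in lines:
        line = line.rstrip("\n")
        if not line.strip():
            if chars:
                sentences.append((chars, labels))
                chars, labels = [], []
            continue
        char, label = line.rsplit(" ", 1)
        chars.append(char)
        labels.append(label)
    if chars:
        sentences.append((chars, labels))
    return sentences


def bio_spans(labels):
    """Convert BIO labels to (start, end, type) spans; a stray I- label opens a new span."""
    spans, start, etype = [], None, None
    for i, label in enumerate(labels + ["O"]):  # trailing "O" closes an open span
        continues = label.startswith("I-") and label[2:] == etype
        if not continues:
            if etype is not None:
                spans.append((start, i, etype))
            start, etype = (i, label[2:]) if label != "O" else (None, None)
    return spans


def entity_distribution(sentences):
    """Count entities per type across all sentences."""
    return Counter(etype for _, labels in sentences for _, _, etype in bio_spans(labels))
```

With the released data, this would be called as `read_bio(open("train.txt", encoding="utf-8"))`; the UTF-8 encoding is an assumption.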

In addition, we compared AgCNER with the other agricultural corpora listed in Table 1. Most datasets, such as those of Biswas et al.25, Li et al.26, and Zhang et al.27, contain only a few typical categories such as DIS, PET, and DRUG. The categories SYMPTOM and PART were designed by Chen et al.31 and Zhang et al.33, but their datasets are not publicly available. Compared to our previous work37, two new categories, i.e., ORG and COM, were annotated, increasing the number of categories to 13. Besides, AgCNER contains 206,992 entities, more than every dataset listed in Table 1 except that of our previous work37. Even so, our corpus has a larger scale, with 66,553 samples and 3,909,293 characters, indicating richer semantic information and greater practicality.

Technical Validation

In this section, a variety of mainstream NER models were evaluated on AgCNER to promote its widespread use and to provide a reference for future NER research in the agricultural diseases and pests field. All experiments were implemented in PyTorch 1.12.1 and run on an NVIDIA GeForce RTX 3090 GPU with an Intel Core i9-12900K CPU.

Evaluation metrics

In this paper, three commonly used metrics, i.e., Precision (P), Recall (R), and F1-score (F1), were selected as the evaluation indicators. An entity is considered correctly predicted only when both its boundaries and its type are correctly recognized.

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \times P \times R}{P + R}$$

Here, TP is the number of predicted entities that match a ground-truth entity, FP is the number of predicted entities that do not match any ground-truth entity, and FN is the number of ground-truth entities that were not correctly predicted.
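The strict matching criterion described above reduces to set operations on entity tuples. The following is a minimal sketch (not the authors' evaluation script) that micro-averages over all entities and can also be applied per category; representing entities as (sentence_id, start, end, type) tuples is an assumption.

```python
def entity_prf(gold, pred):
    """Strict entity-level precision, recall, and F1.

    gold and pred are sets of (sentence_id, start, end, type) tuples, so an entity
    counts as correct only if its boundaries and its type both match.
    """
    tp = len(gold & pred)
    fp = len(pred - gold)
    fn = len(gold - pred)
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def per_type_prf(gold, pred):
    """Apply entity_prf separately to each entity type, as in a per-category table."""
    types = sorted({entity[-1] for entity in gold | pred})
    return {
        t: entity_prf({e for e in gold if e[-1] == t}, {e for e in pred if e[-1] == t})
        for t in types
    }
```

A prediction with the right type but a boundary that is off by one character counts as both an FP and an FN under this criterion, which is why boundary errors weigh heavily on strict F1.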

Comparison models

To comprehensively evaluate the quality of the constructed dataset, various representative baselines were introduced, which can be roughly divided into four categories, i.e., traditional machine learning models such as HMM and CRF; word embedding-based models such as BiLSTM-CRF and IDCNN-CRF; pre-trained language model (PLM)-based methods such as BERT-CRF, BERT-BiLSTM-CRF, and BERT-IDCNN-CRF; and external feature enhancement-based models such as Lattice-LSTM18, TENER15, Flat-Lattice (FLAT)43, NFLAT44, and HNER45. BiLSTM-CRF, one of the earliest and most widely recognized deep learning-based NER models, uses a bidirectional LSTM to extract contextual semantic features and a CRF as the decoder. In addition, the domain PLM named AgBERT was obtained by fine-tuning the original BERT on an agricultural corpus, and its effectiveness is discussed below.

Hyper-parameters setting

For BiLSTM-CRF and IDCNN-CRF, the embedding size was set to 128 and the batch size to 32, and dropout with a rate of 0.6 was applied to alleviate over-fitting. Adam was used as the optimizer with an initial learning rate (lr) of 1e-3. To improve training, lr was decayed during training with the StepLR strategy, using a multiplicative factor gamma of 0.8 and a step_size of 5. The maximum number of epochs was set to 30 with a patience of 5, i.e., training was terminated if the F1 value on the development set did not improve within 5 epochs. For IDCNN-CRF, the kernel size was set to 3 with a dilation of 1, and the number of filters was 128. For BiLSTM-CRF, the hidden size of the BiLSTM was set to 384. For the PLM-based models, the batch size was set to 50, and AdamW was used as the optimizer together with the ReduceLROnPlateau scheduler to improve fine-tuning performance. The learning rates of the PLM, BiLSTM, IDCNN, Linear, and CRF layers were set to 3e-5, 1e-3, 1e-3, 1e-3, and 1e-3, respectively. Note that when the PLM is not fine-tuned, its parameters are frozen.
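The StepLR schedule and the patience-based early stopping described above can be traced with a few lines of plain Python. In PyTorch, `torch.optim.lr_scheduler.StepLR(optimizer, step_size=5, gamma=0.8)` produces the same learning rates when `scheduler.step()` is called once per epoch; the helper names below are hypothetical, and this sketch only illustrates the rules, not the authors' training loop.

```python
def step_lr(base_lr, epochs_done, step_size=5, gamma=0.8):
    """Learning rate after `epochs_done` epochs under a StepLR-style schedule."""
    return base_lr * gamma ** (epochs_done // step_size)


def early_stopping(dev_f1_per_epoch, max_epochs=30, patience=5):
    """Return (best_epoch, last_epoch_run) for a patience-based stopping rule.

    Training stops once the dev F1 has not improved for `patience` consecutive epochs.
    """
    best_f1, best_epoch, waited, last_epoch = float("-inf"), -1, 0, -1
    for epoch, f1 in enumerate(dev_f1_per_epoch[:max_epochs]):
        last_epoch = epoch
        if f1 > best_f1:
            best_f1, best_epoch, waited = f1, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch, last_epoch


# Learning rates at epochs 0, 5, and 10 with lr = 1e-3, gamma = 0.8, step_size = 5.
print([round(step_lr(1e-3, e), 6) for e in range(0, 15, 5)])
```

The model checkpoint kept for testing would typically be the one from `best_epoch`, not the last epoch run.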

Quality control

As described above, Fleiss’ Kappa was used to evaluate annotation consistency, and κ was computed after every 5,000 annotated samples. As shown in Fig. 5, κ was low after the first round of 5,000 samples because the annotators understood the domain entities and annotation rules differently at this stage, resulting in significant differences in annotation. κ then increased gradually as the number of annotated samples grew, and its growth slowed once the total reached 30,000, indicating that the annotators had gradually reached a common understanding. With κ > 0.8, the annotated dataset can be considered reliable.

To discover potential unlabelled and incorrectly labeled entities in the preliminarily annotated dataset, 5-fold cross-validation was conducted with BiLSTM-CRF. The F1 values after validation and correction are shown in Fig. 6. The gaps between them were relatively small in each fold, and the average F1 value on the preliminary dataset was 92.43%. In total, about 560 new entities were discovered, mainly in the categories PART and OTH, whose diverse forms of expression and blurred boundaries make them harder to label. After the correction, the average F1 value increased by 0.21 percentage points to 92.64%, indicating the effectiveness of the five-fold cross-validation.
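The discovery loop can be sketched as follows: each fold is held out in turn, a model trained on the other folds predicts it, and disagreements between predictions and annotations are flagged for manual review. The callable `train_and_predict` is a hypothetical stand-in for training BiLSTM-CRF, and both the random fold assignment and the flagging rule are assumptions.

```python
import random


def k_fold_ids(n_samples, k=5, seed=0):
    """Randomly assign sample ids to k folds of near-equal size."""
    ids = list(range(n_samples))
    random.Random(seed).shuffle(ids)
    return [sorted(ids[f::k]) for f in range(k)]


def flag_for_review(annotations, train_and_predict, k=5, seed=0):
    """Cross-validate and collect sentences whose predictions disagree with annotations.

    annotations[i] is the set of annotated (start, end, type) spans of sentence i;
    train_and_predict(train_ids, test_ids) must return {test_id: set of predicted spans}.
    """
    folds = k_fold_ids(len(annotations), k, seed)
    flagged = []
    for f, test_ids in enumerate(folds):
        train_ids = [i for g, fold in enumerate(folds) if g != f for i in fold]
        predictions = train_and_predict(train_ids, test_ids)
        for i in test_ids:
            unlabelled = predictions[i] - annotations[i]  # predicted but never annotated
            doubtful = annotations[i] - predictions[i]    # annotated but not predicted
            if unlabelled or doubtful:
                flagged.append((i, sorted(unlabelled), sorted(doubtful)))
    return sorted(flagged)
```

Because every sentence is predicted by a model that never saw it during training, a span the model keeps predicting but annotators never marked is a natural candidate for an unlabelled entity; the final decision still rests with the annotators.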

Division Evaluation for AgCNER Dataset

Reasonable dataset partitioning is a prerequisite for training NER models, as it helps ensure efficient use of the data and improves the generalization of NER models. In this section, ten-fold cross-validation was used: first, the whole dataset was divided evenly into 10 parts; then, two of them were randomly selected as the development set and test set, respectively, and the rest were merged into the training set. The basic model BiLSTM-CRF and BERT-BiLSTM-CRF with frozen BERT weights, denoted as BERTfrozen-BiLSTM-CRF, were selected as benchmark models. The results are shown in Fig. 7 and Table 5: Fig. 7 shows the macro results of the benchmark models on AgCNER, while Table 5 lists their detailed results for each category.
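A minimal sketch of this partitioning procedure follows. How the ten groups were generated is not specified; drawing each group with a different random seed, as in the last line, is an assumption, as is the shuffled round-robin assignment of samples to parts.

```python
import random


def ten_part_split(n_samples, n_parts=10, seed=0):
    """Split sample ids into n_parts near-equal parts, pick two random parts as the
    development and test sets, and merge the remaining parts into the training set."""
    rng = random.Random(seed)
    ids = list(range(n_samples))
    rng.shuffle(ids)
    parts = [ids[k::n_parts] for k in range(n_parts)]
    dev_part, test_part = rng.sample(range(n_parts), 2)
    train = [i for k, part in enumerate(parts) if k not in (dev_part, test_part) for i in part]
    return sorted(train), sorted(parts[dev_part]), sorted(parts[test_part])


# Hypothetical: ten candidate groups obtained by varying the seed.
groups = [ten_part_split(1000, seed=g) for g in range(10)]
```

Each group then yields an 8:1:1 train/dev/test split, and the benchmark models are trained and compared on every group.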

As shown in Fig. 7, different partitions yielded different results. Both BiLSTM-CRF and BERTfrozen-BiLSTM-CRF obtained their highest F1 scores, 93.58% and 94.14% respectively, on the 2nd group. Therefore, the 2nd group was selected as the optimal dataset. Moreover, BERTfrozen-BiLSTM-CRF achieved an average F1-score of 93.61%, which is 0.3% higher than that of BiLSTM-CRF, consistent with the intuition that BERTfrozen-BiLSTM-CRF generally outperforms BiLSTM-CRF across the ten groups.

In Table 5, BiLSTM-CRF and BERTfrozen-BiLSTM-CRF both achieved excellent results on DIS, PET, and CRO, suggesting that these entities are easy to recognize owing to their relatively clear boundaries and sufficient quantity. For example, DIS entities such as ‘小麦纹枯病 (wheat sharp eyespot)’ and PET entities such as ‘大豆食心虫 (soybean pod borer)’ usually contain fixed characters, i.e., ‘病 (disease)’ and ‘虫 (pest)’, which act as delimiters that aid entity detection. However, entities of types such as ORG, COM, and OTH were difficult to recognize because of boundary ambiguity and complex composition. Overall, the experimental results demonstrated the effectiveness of the corpus, and the optimal dataset can serve as a benchmark for named entity recognition in the agricultural diseases and pests domain.

Main results

The experimental results of all models on AgCNER are listed in Table 6, where BERTfrozen denotes the original BERT, whose parameters are not updated during training, and AgBERT denotes the agricultural BERT fine-tuned on the agricultural corpus. HMM performed the worst of all models because its independence assumptions limit its ability to capture complex contextual information and label dependencies. In contrast, CRF significantly improved NER performance because it considers global information and learns more contextual relationships. In addition, its label transition matrix enhances its ability to handle complex dependencies between labels.
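To make the role of the transition matrix concrete, the following is a minimal Viterbi decoding sketch for a linear-chain CRF, written in plain Python rather than taken from the models evaluated here. It assumes emission scores (e.g., from a BiLSTM) and a learned transition matrix are already available, and it omits the start and end transition scores that many CRF implementations add.

```python
def viterbi_decode(emissions, transitions, labels):
    """Return the highest-scoring label sequence under a linear-chain CRF.

    emissions[t][j]: score of label j at position t (e.g. BiLSTM output);
    transitions[i][j]: score of label i being followed by label j.
    Start/end transition scores are omitted for brevity.
    """
    n_labels = len(labels)
    scores = list(emissions[0])  # best path score ending in each label at t = 0
    backpointers = []
    for t in range(1, len(emissions)):
        new_scores, pointers = [], []
        for j in range(n_labels):
            best_i = max(range(n_labels), key=lambda i: scores[i] + transitions[i][j])
            new_scores.append(scores[best_i] + transitions[best_i][j] + emissions[t][j])
            pointers.append(best_i)
        scores = new_scores
        backpointers.append(pointers)
    # Trace back from the best final label.
    path = [max(range(n_labels), key=lambda j: scores[j])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    return [labels[j] for j in reversed(path)]


labels = ["O", "B-DIS", "I-DIS"]
# Illustrative scores: O followed by I-DIS is strongly penalized, everything else is neutral.
transitions = [[0.0, 0.0, -1e4], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
emissions = [[2.0, 1.5, 0.0], [0.0, 0.0, 1.0]]
print(viterbi_decode(emissions, transitions, labels))  # ['B-DIS', 'I-DIS']
```

A greedy per-position argmax over these emissions would output O followed by I-DIS, an invalid BIO sequence; the penalized transition steers the decoder to the valid B-DIS, I-DIS path instead, which is the kind of label dependency an HMM-style per-position model handles less well.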

Moreover, by introducing BiLSTM for feature extraction, BiLSTM-CRF improved the F1-score by 0.41 percentage points over CRF. Its advantages are twofold: (1) no manual feature engineering is needed, which reduces human error; (2) global sequence context features can be modeled effectively. Furthermore, the BERT-based models outperformed the word embedding-based models. Specifically, BERTfrozen-based BiLSTM-CRF and IDCNN-CRF increased their F1 values by 0.56 and 0.3 percentage points, respectively, compared to their word2vec-based counterparts, indicating that the character-level semantic representations generated by the original BERT are richer than those generated by word2vec. With fine-tuning, i.e., AgBERT, the F1 values further increased by 0.2 and 0.79 percentage points, reaching 94.34% and 93.97%, compared with BERTfrozen-based BiLSTM-CRF and IDCNN-CRF, respectively. This shows the effectiveness of AgBERT: fine-tuning helps BERT learn domain knowledge and thus generate high-quality text representations.

TENER achieved a higher F1 value (93.85%) than the above-mentioned models other than the AgBERT-based ones, because it uses a novel attention mechanism that incorporates the direction and relative distance of words, both of which are important for NER. Moreover, besides word-level information, it also considers character-level information within words. Lattice-LSTM uses a character-word lattice structure that dynamically matches words in the lattice and incorporates word information. However, it obtained a relatively low F1 value on AgCNER, i.e., 91.74%, possibly owing to the characteristics of agricultural text and its limited ability to capture long-distance dependencies. To remedy the low inference speed of Lattice-LSTM, FLAT converts the lattice structure into a flat span structure43. The results in Table 6 show that FLAT exhibited excellent parallelization ability and improved F1 by 2.52 percentage points over Lattice-LSTM. NFLAT further improved F1 to 94.66% with InterFormer, a lexical enhancement method based on non-flat lattices that reduces computational and memory costs. Overall, models such as TENER, FLAT, NFLAT, and HNER generally outperform the CRF-based models. This is consistent with existing research showing that external features such as lexicons, flat lattices, subword sequences, and character- or word-level features enrich contextual semantic information and improve the recognition accuracy of NER models. It also shows that these models are suitable for AgCNER.

Detailed results for each category

To further evaluate the predictions at a finer granularity, five classical models, i.e., BiLSTM-CRF, IDCNN-CRF, BERTfrozen-BiLSTM-CRF, AgBERT-BiLSTM-CRF, and HNER, were selected, and their results for each category are detailed in Table 7. The best result on each metric for each entity category is highlighted in bold and in a distinct color: red for the best P, green for the best R, and black for the best F1. All benchmark models generally achieved high F1 values on categories such as CRO, DIS, DRUG, and PET. Taking DIS as an example, the highest F1 was 97.99%, produced by AgBERT-BiLSTM-CRF, while even the lowest was as high as 97.59%. In contrast, the models performed poorly on categories such as OTH and ORG. For example, the lowest F1 value for OTH, 53.33%, was obtained by IDCNN-CRF, while the highest was only 59.76%, obtained by AgBERT-BiLSTM-CRF, showing that these entities are clearly harder to recognize. The reasons may include two aspects: (1) the limited amount of domain data leads to insufficient training; for example, ORG accounts for only 0.34% of all entities, as shown in Fig. 4; (2) the composition of domain named entities such as OTH is complex, often mixing Chinese characters, numbers, and other characters, which can interfere with recognition to a certain extent. Therefore, identifying these entities is extremely challenging. In addition, AgBERT-based BiLSTM-CRF achieved the best F1 and recall values in most categories, such as OTH, CRO, DIS, and CUL, demonstrating the effectiveness of BERT fine-tuning in detail. Future work will explore more advanced models to improve the recognition accuracy of agricultural diseases and pests named entities.

Agricultural pre-trained language models

In recent years, large-scale pre-trained language models (PLMs) such as BERT have been widely used in many NLP tasks because of their strong semantic representation ability. The experimental results in Table 5 and Table 6 also indicate that PLMs improve named entity recognition performance. However, there is no publicly available PLM in the agricultural field. In this section, the domain PLM AgBERT was obtained by fine-tuning the original BERT on the agricultural corpus. To justify the choice of BERT, experiments were conducted with CRF as the decoder and two other PLMs, i.e., ALBERT and RoBERTa, for comparison. As shown in Fig. 8, BERT-CRF outperformed the other models with the best F1 value of 94.26%. In addition, the results in Table 6 show that BERT-BiLSTM-CRF also achieved a higher F1 than BERT-CRF under fine-tuning, suggesting that the character-level semantic representations generated by BERT may be more conducive to improving the performance of NER models.

To further confirm the above inference, taking BERT as an example, 50 sentences were randomly selected from the AgCNER and Resume datasets, respectively, and their sentence-level semantic representations were generated with the pre-trained language models mentioned above by averaging the character representations over the sentence length. t-SNE46 was then used to reduce the 768-dimensional representations to 2 dimensions for visualization in a rectangular coordinate system. As shown in Fig. 9, the closer two points are in this space, the more similar the semantics of the corresponding sentences and the more likely they belong to the same domain. Ideally, data points from the same dataset should form a single spatial cluster. Compared to the original BERT, the fine-tuned AgBERT not only shortened the average distance (0.000434 for AgBERT versus 0.164018 for the original BERT on AgCNER) and the distance variance (0.000001 for AgBERT versus 0.006020 for the original BERT on AgCNER) between the nodes within a cluster and the central node (marked by ⋆), but also separated all points into clusters by domain with clear boundaries. This indicates that the representations generated by AgBERT are more similar within a domain and demonstrates the effectiveness of the fine-tuned BERT in representing domain characters. Therefore, these results not only justified the choice of BERT but also revealed the effectiveness of AgBERT after fine-tuning on the domain corpus.
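The cluster statistics above can be sketched as follows: mean-pool the character vectors of each sentence into one vector, then compute the mean and variance of the Euclidean distances between each point and its cluster centre. Treating the central node ⋆ as the arithmetic centroid, using the population variance, and the helper names are all assumptions; the text does not state how the centre or the variance was computed. The 2-D projection itself could come from, e.g., scikit-learn's `sklearn.manifold.TSNE(n_components=2)`.

```python
import math


def mean_pool(char_vectors):
    """Average a sentence's character vectors into one sentence vector."""
    n = len(char_vectors)
    return [sum(dim) / n for dim in zip(*char_vectors)]


def centroid_distance_stats(points):
    """Centroid of a cluster plus the mean and population variance of the
    Euclidean distances from each point to that centroid."""
    centroid = [sum(dim) / len(points) for dim in zip(*points)]
    distances = [math.dist(p, centroid) for p in points]
    mean = sum(distances) / len(distances)
    variance = sum((d - mean) ** 2 for d in distances) / len(distances)
    return centroid, mean, variance
```

Smaller values of both statistics mean a tighter cluster, which is the sense in which AgBERT's representations of AgCNER sentences are reported as more similar to one another.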

Error analysis

Because manual annotation may also contain errors, we took the predictions of HMM, CRF, BERTFreeze-BiLSTM-CRF (BERT-FZ-BiLSTM-CRF), AgBERT-BiLSTM-CRF, and HNER as examples to analyze the errors that may occur during prediction and annotation. Following47, we divided the errors into boundary errors and entity-type errors. As shown in Fig. 10, boundary errors are the leading cause of prediction errors, and entity-type errors are the secondary cause. The pre-trained models alleviate these problems to some extent owing to their strong domain representation ability. For a more detailed analysis, the confusion matrix on the AgCNER test set is plotted in Fig. 11. We observed that most prediction errors were caused by boundary errors; for example, B-ORG was mislabeled as O and vice versa. The remaining gap between the NER models and human annotators reflects the difficulty of the dataset annotated by the authors and the importance of domain NER tasks.
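The exact rules of the error taxonomy in47 are not given in this section, so the following is a minimal sketch under assumed definitions: a predicted entity with exactly the gold boundaries but the wrong type is an entity-type error; any other wrong predicted entity, and any gold entity that no prediction overlaps (i.e. tagged O), is a boundary error. The function names are hypothetical, and the DIS and PET labels in the example are illustrative placeholders; only B-ORG and O appear in the text. A tag-level confusion matrix such as Fig. 11 can be built from counts of (gold tag, predicted tag) pairs.

```python
from collections import Counter
from typing import Dict, Optional, Sequence, Set, Tuple

Span = Tuple[int, int, str]  # (start, end exclusive, entity type)


def bio_spans(tags: Sequence[str]) -> Set[Span]:
    """Collect entity spans from a BIO tag sequence."""
    spans: Set[Span] = set()
    start: Optional[int] = None
    etype = ""
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel "O" closes a final span
        prefix, _, label = tag.partition("-")
        if start is not None and (prefix != "I" or label != etype):
            spans.add((start, i, etype))
            start = None
        if prefix == "B" or (prefix == "I" and start is None):
            start, etype = i, label  # an orphan I- tag also opens a span
    return spans


def classify_errors(gold_tags: Sequence[str], pred_tags: Sequence[str]) -> Dict[str, int]:
    """Count boundary vs. entity-type errors under the assumed definitions."""
    gold, pred = bio_spans(gold_tags), bio_spans(pred_tags)
    gold_bounds = {(s, e) for s, e, _ in gold}
    counts = {"boundary": 0, "type": 0}
    for s, e, _ in pred - gold:  # wrong predicted entities
        counts["type" if (s, e) in gold_bounds else "boundary"] += 1
    for s, e, _ in gold:  # gold entities that no predicted span overlaps
        if not any(ps < e and s < pe for ps, pe, _ in pred):
            counts["boundary"] += 1
    return counts


def tag_confusion(gold_tags: Sequence[str], pred_tags: Sequence[str]) -> Counter:
    """Count (gold tag, predicted tag) pairs: the cells of a tag-level confusion matrix."""
    return Counter(zip(gold_tags, pred_tags))


gold = ["B-ORG", "I-ORG", "O", "B-DIS", "I-DIS", "O", "B-ORG"]
pred = ["B-ORG", "O", "O", "B-PET", "I-PET", "O", "O"]
print(classify_errors(gold, pred))                # {'boundary': 2, 'type': 1}
print(tag_confusion(gold, pred)[("B-ORG", "O")])  # 1
```

Here the truncated first ORG entity and the missed final ORG entity count as boundary errors, while DIS predicted as PET over the same characters counts as an entity-type error.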

Code availability

All code and the fine-tuned language model AgBERT, together with the outputs, are publicly available at: https://github.com/guojson/AgCNER.git.

References

Baigang, M. & Yi, F. A Review: Development of Named Entity Recognition (NER) Technology for Aeronautical Information Intelligence. Artif. Intell. Rev. 56, 1515–1542, https://doi.org/10.1007/s10462-022-10197-2 (2023).

Liu, P., Guo, Y., Wang, F. & Li, G. Chinese Named Entity Recognition: The State of the Art. Neurocomputing 473, 37–53, https://doi.org/10.1016/j.neucom.2021.10.101 (2022).

Li, J., Sun, A., Han, J. & Li, C. A Survey on Deep Learning for Named Entity Recognition. IEEE Trans. Knowl. Data Eng. 34, 50–70, https://doi.org/10.1109/TKDE.2020.2981314 (2020).

Khalifa, M. & Shaalan, K. Character Convolutions for Arabic Named Entity Recognition with Long Short-Term Memory Networks. Computer Speech & Language 58, 335–346, https://doi.org/10.1016/j.csl.2019.05.003 (2019).

Taufiq, U., Pulungan, R. & Suyanto, Y. Named Entity Recognition and Dependency Parsing for Better Concept Extraction in Summary Obfuscation Detection. Expert Syst. Appl. 217, 119579, https://doi.org/10.1016/j.eswa.2023.119579 (2023).

Fabregat, H., Duque, A., Martinez-Romo, J. & Araujo, L. Negation-Based Transfer Learning for Improving Biomedical Named Entity Recognition and Relation Extraction. J. Biomed. Inform. 104279, https://doi.org/10.1016/j.jbi.2022.104279 (2023).

Wang, X. & Liu, J. A Novel Feature Integration and Entity Boundary Detection for Named Entity Recognition in Cybersecurity. Knowledge-Based Syst. 260, 110114, https://doi.org/10.1016/j.knosys.2022.110114 (2023).

Guo, X. et al. CG-ANER: Enhanced Contextual Embeddings and Glyph Features-Based Agricultural Named Entity Recognition. Comput. Electron. Agric. 194, 106776, https://doi.org/10.1016/j.compag.2022.106776 (2022).

Cao, Y. & Yusup, A. Chinese Electronic Medical Record Named Entity Recognition Based on BERT-WWM-IDCNN-CRF. 2022 9th International Conference on Dependable Systems and Their Applications (DSA). Wulumuqi, China: IEEE, 582–589, https://doi.org/10.1109/DSA56465.2022.00084 (2022).

Meifang, Y. & Bo, Y. Extracting Entities for Enterprise Risks Based on Stroke ELMo and IDCNN-CRF Model. Data Analysis and Knowledge Discovery 6, 86–99, https://doi.org/10.11925/infotech.2096-3467.2021.1308 (2022).

Chang, C. et al. Multi-Information Preprocessing Event Extraction with BiLSTM-CRF Attention for Academic Knowledge Graph Construction. IEEE Trans. Comput. Soc. Syst. https://doi.org/10.1109/TCSS.2022.3183685 (2022).

Huang, Z., Xu, W. & Yu, K. Bidirectional LSTM-CRF Models for Sequence Tagging. arXiv preprint arXiv:1508.01991, https://doi.org/10.48550/arXiv.1508.01991 (2015).

Rouhou, A. C., Dhiaf, M., Kessentini, Y. & Salem, S. B. Transformer-Based Approach for Joint Handwriting and Named Entity Recognition in Historical Document. Pattern Recognit. Lett. 155, 128–134, https://doi.org/10.1016/j.patrec.2021.11.010 (2022).

Wang, X., Xu, X., Huang, D. & Zhang, T. Multi-Task Label-Wise Transformer for Chinese Named Entity Recognition. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 22(4), 1–15, https://doi.org/10.1145/3576025 (2023).

Yan, H., Deng, B., Li, X. & Qiu, X. TENER: Adapting Transformer Encoder for Named Entity Recognition. arXiv preprint arXiv:1911.04474, https://doi.org/10.48550/arXiv.1911.04474 (2019).

Zhou, G. & Su, J. Named Entity Recognition Using an HMM-Based Chunk Tagger. Proceedings of the 40th Annual Meeting on Association for Computational Linguistics. Philadelphia, Pennsylvania: Association for Computational Linguistics, 473–480, https://doi.org/10.3115/1073083.1073163 (2002).

Xuan, Z., Bao, R. & Jiang, S. FGN: Fusion Glyph Network for Chinese Named Entity Recognition. Knowledge Graph and Semantic Computing: Knowledge Graph and Cognitive Intelligence: 5th China Conference, CCKS 2020. Nanchang, China: Springer, 28–40, https://doi.org/10.1007/978-981-16-1964-9_3 (2021).

Zhang, Y. & Yang, J. Chinese NER Using Lattice LSTM. Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Melbourne, Australia: Association for Computational Linguistics, 1554–1564, https://doi.org/10.18653/v1/P18-1144 (2018).

Zhu, Y. & Wang, G. CAN-NER: Convolutional Attention Network for Chinese Named Entity Recognition. Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers). Minneapolis, Minnesota: Association for Computational Linguistics, 3384–3393, https://doi.org/10.18653/v1/N19-1342 (2019).

Li, Z., Li, Q., Zou, X. & Ren, J. Causality Extraction Based on Self-Attentive BiLSTM-CRF with Transferred Embeddings. Neurocomputing 423, 207–219, https://doi.org/10.1016/j.neucom.2020.08.078 (2021).

Yan, R., Jiang, X. & Dang, D. Named Entity Recognition by Using XLNet-BiLSTM-CRF. Neural Process. Lett. 53, 3339–3356, https://doi.org/10.1007/s11063-021-10547-1 (2021).

Bird, S., Klein, E. & Loper, E. Natural Language Processing with Python: Analyzing Text with the Natural Language Toolkit. O’Reilly Media, Inc. (2009).

Honnibal, M. & Montani, I. Natural Language Understanding with Bloom Embeddings, Convolutional Neural Networks and Incremental Parsing. Unpublished software application. https://spacy.io (2017).

Malarkodi, C. S., Lex, E. & Devi, S. L. Named Entity Recognition for the Agricultural Domain. Res. Comput. Sci. 117, 121–132 (2016).

Biswas, P., Sharan, A. & Verma, S. Named Entity Recognition for Agriculture Domain Using WordNet. Int. J. Comput. Math. Sci. 5, 29–36, https://api.semanticscholar.org/CorpusID:53555638 (2016).

Li, X. et al. Recognition of Crops, Diseases and Pesticides Named Entities in Chinese Based on Conditional Random Fields. Transactions of the Chinese Society for Agricultural Machinery 48, 178–185, https://doi.org/10.6041/j.issn.1000-1298.2017.S0.029 (2017).

Jian, Z. et al. Chinese Agricultural Named Entity Recognition Based on Conditional Random Fields. Computer and Modernization, 123–126, https://doi.org/10.3969/j.issn.1006-2475.2018.01.024 (2018).

Qian, Y. et al. Agricultural Text Named Entity Recognition Based on the BiLSTM-CRF Model. Fifth International Conference on Computer Information Science and Artificial Intelligence (CISAI 2022). Chongqing, China: SPIE, 525–530, https://doi.org/10.1117/12.2667761 (2023).

Zijun, W., Ling, S., Xiaochun, H. & Ningjiang, C. Named Entity Recognition of Agricultural Based Entity-Level Masking BERT and BiLSTM-CRF. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE) 38, 195–203, https://doi.org/10.11975/j.issn.1002-6819.2022.15.021 (2022).

Jingchi, J., Changhe, G., Jie, L., Yi, G. & Shanfeng, K. Annotation Scheme and Corpus Construction for Agricultural Knowledge Based on Active Learning and Crowdsourcing. Journal of Chinese Information Processing 37, 33–45, http://jcip.cipsc.org.cn/CN/Y2023/V37/I1/33 (2023).

Chen, Y. et al. AgriKG: An Agricultural Knowledge Graph and Its Applications. Database Systems for Advanced Applications: DASFAA 2019 International Workshops: BDMS, BDQM, and GDMA. Chiang Mai, Thailand: Springer International Publishing AG, 533–537, https://doi.org/10.1007/978-3-030-18590-9_81 (2019).

Liyan, S., Haiyan, J., Bin, H. & Yuancheng, X. A Study on Joint Entity Recognition and Relation Extraction for Rice Diseases Pests Weeds and Drugs. Journal of Nanjing Agricultural University 43, 1151–1161, https://doi.org/10.7685/jnau.201912024 (2020).

Zhang, J. et al. Chinese Named Entity Recognition for Apple Diseases and Pests Based on Character Augmentation. Comput. Electron. Agric. 190, 106464, https://doi.org/10.1016/j.compag.2021.106464 (2021).

Hebing, L., Demeng, Z., Shufeng, X., Xinming, M. & Lei, X. Named Entity Recognition of Wheat Diseases and Pests Fusing ALBERT and Rules. Journal of Frontiers of Computer Science and Technology, 1–12, https://doi.org/10.3778/j.issn.1673-9418.2203129 (2022).

Yan, L. & Li, S. Grape Diseases and Pests Named Entity Recognition Based on BiLSTM-CRF. 2021 IEEE 4th Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC). Chongqing, China: IEEE, 2121–2125, https://doi.org/10.1109/IMCEC51613.2021.9482206 (2021).

Hao, X. et al. CountShoots: Automatic Detection and Counting of Slash Pine New Shoots Using UAV Imagery. Plant Phenomics 5, 65, https://doi.org/10.34133/plantphenomics.0065 (2023).

Guo, X. et al. Chinese Agricultural Diseases and Pests Named Entity Recognition with Multi-Scale Local Context Features and Self-Attention Mechanism. Comput. Electron. Agric. 179, 105830, https://doi.org/10.1016/j.compag.2020.105830 (2020).

Zhu, Z., Li, J., Zhao, Q. & Akhtar, F. A Dictionary-Guided Attention Network for Biomedical Named Entity Recognition in Chinese Electronic Medical Records. Expert Syst. Appl. 120709, https://doi.org/10.1016/j.eswa.2023.120709 (2023).

Brandsen, A., Verberne, S., Lambers, K. & Wansleeben, M. Can BERT Dig It? Named Entity Recognition for Information Retrieval in the Archaeology Domain. Journal on Computing and Cultural Heritage (JOCCH) 15, 1–18, https://doi.org/10.1145/3497842 (2022).

Tao, L. et al. Geographic Named Entity Recognition by Employing Natural Language Processing and an Improved BERT Model. ISPRS Int. J. Geo-Inf. 11, 598, https://doi.org/10.3390/ijgi11120598 (2022).

Laerd Statistics. Fleiss’ Kappa Using SPSS Statistics. Statistical Tutorials and Software Guides (2019). Available at: https://statistics.laerd.com/spss-tuorials/fleiss-kappa-in-spss-statistics.php (Accessed: October, 19, 2019 September 2023).

Yao, X., Hao, X., Liu, R., Li, L. & Guo, X. AgCNER, the First Large-Scale Chinese Named Entity Recognition Dataset for Agricultural Diseases and Pests. figshare https://doi.org/10.6084/m9.figshare.c.6807873.v1 (2023).

Li, X., Yan, H., Qiu, X. & Huang, X. FLAT: Chinese NER Using Flat-Lattice Transformer. Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. Online: Association for Computational Linguistics, 6836–6842, https://doi.org/10.18653/v1/2020.acl-main.611 (2020).

Wu, S., Song, X., Feng, Z. & Wu, X. J. NFLAT: Non-Flat-Lattice Transformer for Chinese Named Entity Recognition. arXiv preprint arXiv:2205.05832, https://doi.org/10.48550/arXiv.2205.05832 (2022).

Zaratiana, U., Holat, P., Tomeh, N. & Charnois, T. Hierarchical Transformer Model for Scientific Named Entity Recognition. arXiv preprint arXiv:2203.14710, https://doi.org/10.48550/arXiv.2203.14710 (2022).

Van der Maaten, L. & Hinton, G. Visualizing Data Using t-SNE. J. Mach. Learn. Res. 9, http://jmlr.org/papers/v9/vandermaaten08a.html (2008).

Sui, D., Tian, Z., Chen, Y., Liu, K. & Zhao, J. A Large-Scale Chinese Multimodal NER Dataset with Speech Clues. Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing. Online: Association for Computational Linguistics, 2807–2818, https://doi.org/10.18653/v1/2021.acl-long.218 (2021).

Sui, D., Chen, Y., Liu, K., Zhao, J. & Liu, S. Leverage Lexical Knowledge for Chinese Named Entity Recognition Via Collaborative Graph Network. Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Hong Kong, China: Association for Computational Linguistics, 3830-3840, https://doi.org/10.18653/v1/D19-1396 (2019).

Acknowledgements

The research was supported by the Major S&T project (Innovation 2030) of China under grant 2021ZD0113702 and Shandong Province Natural Science Foundation Youth Branch under grant ZR2023QF016.

Author information

Contributions

Xiaochuang Yao coordinated and initiated this work and also participated in the collection and annotation of the datasets. Xia Hao assisted in annotating the dataset and provided feedback on the writing of the manuscript. Ruilin Liu assisted in data collection, annotation, and method implementation. Lin Li provided the raw data and guided the annotation as a domain expert. Xuchao Guo conceptualized the study and conducted data collection and annotation, validation, result analysis, and manuscript writing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yao, X., Hao, X., Liu, R. et al. AgCNER, the First Large-Scale Chinese Named Entity Recognition Dataset for Agricultural Diseases and Pests. Sci Data 11, 769 (2024). https://doi.org/10.1038/s41597-024-03578-5

DOI: https://doi.org/10.1038/s41597-024-03578-5
