Abstract

Household occupant behavior and decision-making dynamics substantially impact technology uptake and residential building energy performance. Although significant research underscores the importance of social science in energy studies, few public data with representative samples on household energy decision-making patterns are available. The dataset (UPGRADE-E: Understanding Patterns Guiding Residential Adoption and Decisions about Energy Efficiency) presents 9,919 responses from U.S. residents of single-family and small multifamily homes. Derived from a national-scale internet survey, the dataset contains 391 variables: demographics, building characteristics, home modifications, willingness to adopt new technologies, motivations for making changes, barriers, program participation, trusted information sources, and energy scenarios. Responses were validated via internal consistency checks and comparison with other U.S. national scale datasets. UPGRADE-E advances knowledge of household energy related decision-making, tying demographics, home modifications, and self-reported cognitive drivers together at a scale and breadth that has not been previously achieved. Policymakers and researchers at local, regional, and national levels may leverage this dataset to understand drivers influencing the adoption of key technologies in U.S. homes.

Background & Summary

Residential energy use represents roughly 21% of total energy consumption in the global building sector, and 15% in the United States1,2. Residential energy efficiency programs aim to increase the presence of high-performance heating, ventilation, and air conditioning (HVAC) and water heating technologies to lower energy costs for consumers. Although program and policy mechanisms exist, residential uptake has not been robust, especially for existing homes3,4. When high-performance technologies are adopted, gaps often exist between the projected and actual savings, and projected future scenarios indicate further increases in building energy demand are likely5,6. This discrepancy frequently results in smaller efficiency gains, which can hinder the primary objective of energy programs7. Regardless, residents must actively decide to invest in energy-saving technologies over other upgrade options, yet relatively few studies have provided robust data in this area8,9,10. Recent advancements in the discourse have expanded social-science approaches to better understand the energy efficiency gap, including new data collection methods11,12.

This paper presents a novel dataset, UPGRADE-E: Understanding Patterns Guiding Residential Adoption and Decisions about Energy Efficiency13. The dataset describes residential occupant (homeowner and renter) technology uptake, decision-making, and motivations for energy efficiency improvements in their homes. Additionally, these data provide information related to market dynamics such as barriers to implementing changes, trusted sources of information, program participation, and other factors that impact decision-making. The data were collected via a survey instrument developed by the Pacific Northwest National Laboratory (PNNL) and distributed to 10,000 households throughout the United States. Data were gathered between August and October 2022 as one portion of a multi-part project, described in detail in other studies12,14.

The broad goal of this study is to provide data evaluating how residents make decisions regarding technologies, home upgrades, and other factors that support an efficient, healthy, affordable, and resilient housing stock. Although several building stock characterization datasets describe typical building characteristics and dissemination of energy efficient technologies15,16,17,18, there are few public datasets describing the human dimensions of energy use in buildings19. Outside of the national/regional scale building stock assessments, the data that do exist tend to focus on residential energy use profiles20, occupancy profiles21, or modelled predictions based on assumptions applied to building stock assessments22,23. Data on the motivations, perceptions, and attitudes of occupants regarding home upgrades and modifications are lacking but essential for informing various research areas such as building occupant behavior assessments, energy social science studies, building energy modeling, and pathway analyses9,24,25.

To fill the need for a more comprehensive dataset, the study included questions about demographics, building characteristics, and upgrades and home modifications made, as well as questions probing the motivations, preferences, considerations, barriers, and mentalities surrounding residential decision-making (Fig. 1). This U.S. national-scale dataset represents the largest dataset in the United States to date that considers a broad range of behavior and decision-making variables in the residential space.

Methods

The study utilized a survey data collection instrument to develop the final dataset. This section describes the methodology associated with the collection, pre-processing, and variable assignments for the UPGRADE-E survey instrument, and associated final dataset (Fig. 2).

Data collection

To develop and organize survey questions, the study employed a grounded survey methodology, as outlined by Csutora et al.26. This process involved iterative feedback between qualitative and quantitative perspectives. A two-part review process enhanced the instrument’s validity to ensure high data quality and strengthen the reliability of survey responses27,28. The first review involved peer review from an advisory committee composed of industry experts in housing-related studies, including social science, energy policy, and building science. The second review was conducted by the research team and focused on themes identified during semi-structured interviews of residents in four U.S. states12, along with statistical tests investigating data uniformity. Because the data collection process involved human subjects, the Institutional Review Board (IRB) of Pacific Northwest National Laboratory reviewed and approved the research protocols (PNNL IRB #2022-03), and the research team followed IRB protocols for data collection, cleaning, storage, and dissemination. Informed consent was obtained from all participants prior to accessing the survey instrument (the Supplementary Information file includes the full informed consent and survey questionnaire).

Data collection for this project was overseen by PNNL’s IRB, which approved the data collection, consent, data cleaning, data storage, and data publication protocols. Personally identifiable information (PII) has been removed, and the dataset has been cleared for publication.

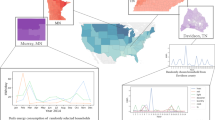

The survey targeted homeowners and renters residing in detached or small attached buildings (four units or fewer) throughout the United States (Fig. 3). Containing 77 questions for homeowners and 87 questions for renters, the final survey concentrated on decision-making processes related to home upgrades, renovations, and appliance purchases. Notably, survey questions expanded beyond just energy efficiency to include general renovations and improvements (e.g. new roofs, bathroom remodels, yard care), addressing a limitation identified in earlier research29. Questions addressed demographics, housing characteristics, the decision-making process regarding residence, beliefs about household and broader consequences, social influences, and policy measures8.

Administered through the Qualtrics platform, the survey was available in English and Spanish, visible on both desktop and mobile devices. Response collection occurred between August and October 2022, until reaching the quota of 10,000 valid responses. Survey participants were not compensated. The questions were primarily multiple-choice, with options for free-text responses where relevant. Using flow logic to guide respondents (e.g. homeowner or renter, made specific changes or not), respondents only answered the questions applicable to their circumstances. The average completion time was approximately 20 minutes. Throughout the survey, Qualtrics and PNNL reviewed responses to check for invalid or spurious responses. Qualtrics features built-in data cleaning algorithms to identify and eliminate invalid responses, including those from bots, duplicate IP addresses, and geographic outliers30.

Exclusion criteria

Survey respondents had to meet all the following criteria to be eligible: (1) be at least 18 years old, (2) be a U.S. resident, (3) live in a single-family or small multifamily (defined as 4 units or fewer) dwelling, (4) be a household decision-maker, and (5) agree to the informed consent statement. The goal of the study was to recruit a sample representing the geographic extent of the U.S. population that was also representative of U.S. demographics. Study sample design was informed by a review of U.S. Census regions and demographics16. Soft quotas for the study were based on U.S. Census regions (2,500 per region), ownership (70:30 owner/renter), and other demographics. Qualtrics screening eliminated responses that were completed too quickly or were probable bots. After obtaining the screened 10,000 responses, all further data evaluation occurred using packages in R via RStudio31.

Eighty-one questionable responses were excluded for one or more of the following reasons: (1) too many reported occupants per household based on an outlier analysis in EnvStats32, (2) no responses in crucial variables (state, ZIP Code, household income), (3) responses indicating respondents were not serious (contradictory responses, slurs, obvious filler words in multiple text fields), and (4) IP addresses that were not within the United States. Antonopoulos et al.14 (Appendix B) summarizes the methods used for assessing data quality and resolving geographic discrepancies, providing a detailed summary of why the final dataset was reduced to 9,919 valid responses.

Data Pre-processing

Raw responses were evaluated in R via tidyverse33 to create the final dataset. The PNNL IRB approved the pseudonymization procedure and protection of potentially identifiable information of survey respondents. Potentially identifying geographic variables (ZIP Code, IP address coordinates) were excluded; U.S. county is the smallest geographic extent in the published data. Each response is identified by a unique, pseudonymous number (PermNum, an integer ranging from 10000 to 19999). Questions with multiple choices were split into multiple variables. For example, for a question where respondents could select any of five answers, five corresponding indicator variables record whether the respondent selected each choice (1 = selected, 0 = not selected).
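The indicator coding described above can be illustrated with a minimal Python sketch. The option labels and the `motive_` prefix are hypothetical and chosen only for illustration; the actual variable names are listed in the data dictionary, and the published pre-processing was done in R.

```python
# Hypothetical answer options for one multi-select question.
# Real variable names are listed in DataDictionary.xlsx.
OPTIONS = ["cost", "comfort", "health", "environment", "resale"]


def to_indicators(selected, options=OPTIONS, prefix="motive_"):
    """Return one 0/1 indicator per option (1 = selected, 0 = not selected)."""
    chosen = set(selected)
    return {f"{prefix}{option}": int(option in chosen) for option in options}


# A respondent who ticked two of the five options.
row = to_indicators(["comfort", "cost"])
print(row)
```

Each multi-select question therefore contributes as many columns as it has answer options, which is one reason the variable count is large relative to the number of survey questions.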

Users are encouraged to read the data dictionary and README file to understand how and why one of three possible blank values (NA, 999, 777) was assigned. For example, homeowners were not asked questions about landlord home modifications, so their responses for these variables are NA. If a respondent skipped a question that was presented to them, the skipped value is 999. For purchase preference variables, respondents first selected all factors that applied and then rated how important (important, somewhat important, not important) each selected factor was in their purchase decisions. For unselected and therefore unrated factors, blanks were assigned 777 (rather than 999 or NA) to indicate that the question was not skipped and that the factor was left unselected, which is distinct from rating it “not important”.
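As a hedged sketch of how the three blank values might be told apart when reading the files, the Python function below maps a raw cell to the reason it is blank. The reason labels (`not_asked`, `skipped`, `not_selected`) and the treatment of an empty string as NA are assumptions made for this example, not part of the published dataset.

```python
# Blank-value codes described in the text. Apply them only to variables that
# the data dictionary says use them, since 777 or 999 could be a legitimate
# value in some other numeric field.
SKIPPED = 999
NOT_SELECTED = 777


def missing_reason(cell):
    """Return why a cell is blank, or None if it holds a real answer."""
    text = str(cell).strip()
    if text in ("", "NA", "None", "nan"):  # empty string as NA is an assumption
        return "not_asked"
    try:
        number = float(text)
    except ValueError:
        return None  # free-text answer
    if number == SKIPPED:
        return "skipped"
    if number == NOT_SELECTED:
        return "not_selected"
    return None


for cell in ["NA", "999", 777, "2"]:
    print(repr(cell), "->", missing_reason(cell))
```

Keeping the three reasons separate, rather than collapsing them into a single missing value, preserves the distinction between a question that was never shown, one that was shown and skipped, and a factor that was shown but not selected.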

New variables were derived from data in the responses (Fig. 2). For example, counties and climate zones were assigned based on the respondent’s location. Additional summary variables describe how many residents lived in the home, whether or not the respondent had made changes to their home, how many of those changes supported higher energy performance of the home, and how many high-performance technologies a respondent was willing to adopt.

Variable assignment

Variable groups reflect the underlying characteristics and purpose of the variables (Fig. 1). Broad categories include information about the respondent, household characteristics, and home energy decision-making factors. The specific datasets under each of these variable groups are described in the Data Records section. Variable names are unique across all datasets and are descriptive of the question content.

Two datasets have multiple derived variables: counts_changes_willingness and geography. In counts_changes_willingness, the binary variable anychange captures whether respondents made any changes to the home structure (1 = changed, 0 = unchanged). Another variable, highperformancecount, is a count variable summarizing the number of home changes that support high-performance goals14. Finally, a third variable, adopt_willing, is a count variable that summarizes the number of high-performance technologies that the respondent would be willing to adopt. These seven high performance technologies are included in this dataset for ease of comparison. The Technical Validation section describes geography variable processing, validation, and creation.
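The three derived variables can be sketched in Python as simple summaries over 0/1 indicator columns. The indicator column names below are hypothetical, and only three willingness columns are shown rather than the seven technologies mentioned above; the published derivation was carried out in R.

```python
# Hypothetical indicator columns; real names are in the data dictionary.
CHANGE_COLS = ["chg_heat_pump", "chg_wall_insulation", "chg_new_roof", "chg_bathroom"]
HIGH_PERF_COLS = ["chg_heat_pump", "chg_wall_insulation"]
WILLING_COLS = ["will_heat_pump", "will_hpwh", "will_air_sealing"]


def derive_counts(row):
    """Summarize one respondent's indicators into the three derived variables."""
    return {
        # 1 if any change was reported, else 0
        "anychange": int(any(row.get(col) == 1 for col in CHANGE_COLS)),
        # number of reported changes that support high-performance goals
        "highperformancecount": sum(row.get(col) == 1 for col in HIGH_PERF_COLS),
        # number of technologies the respondent would be willing to adopt
        "adopt_willing": sum(row.get(col) == 1 for col in WILLING_COLS),
    }


respondent = {
    "chg_heat_pump": 1, "chg_wall_insulation": 0, "chg_new_roof": 1, "chg_bathroom": 0,
    "will_heat_pump": 1, "will_hpwh": 1, "will_air_sealing": 999,  # 999 = skipped
}
summary = derive_counts(respondent)
print(summary)
```

Comparing each cell to 1, rather than summing raw values, keeps blank-value codes such as 999 from inflating the counts.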

Data Records

The UPGRADE-E dataset can be found on the PNNL Data Hub13; it can also be found on Kaggle34.

The data download consists of 18 files (Table 1): 14 data files, a data dictionary, a README file, the survey questions, and an R script allowing a user to combine the files into a single dataset (9,919 observations of 391 variables). All variables of the survey data are described in the file ‘DataDictionary.xlsx’. The ‘data type’ column in the data dictionary states the variable type (numeric, binary, or text). The data dictionary also provides sample data for variables and provides units for variables (if applicable).

Technical Validation

This section explains how we evaluated potential geography conflicts and presents statistical comparisons of collected data with U.S. Census data and the American Housing Survey16.

Geography validation

Responses in the raw data file contained several location-based variables: state where residence is located, ZIP Code of residence, and IP address coordinates. Within the accepted dataset, 18 respondents did not provide their state of residence, and 353 did not provide a ZIP Code. Because reported ZIP Code was sparser than reported state, reported state was prioritized over reported ZIP Code for state of residence (assigned_state in the published dataset) when there were conflicts.

The final geography dataset contains 23 variables. Twelve of these are variables reported directly by the respondent or assigned during the geography review (Table 2): PermNum, five variables related to geography checks we conducted, three state variables, region, and assigned county. After reconciling geography, eleven variables were assigned that depend upon county or ZIP Code in other published datasets26,27,28,29,30,31. The data dictionary explains each variable type (e.g., float, Boolean, integer).

The first stage of the geography review required obtaining valid geospatial datasets to compare to the geographic variables in the raw dataset (TIGER/Line, ZIP Code Tabulation Areas (ZCTAs), county, United States Department of Agriculture (USDA) commuting codes)35,36,37,38. A list of all possible U.S. counties, ZCTAs, and their centroids was created in QGIS39, using the U.S. Census TIGER/Line shapefiles35,36. ZCTAs represent U.S. Census approximations of the geographic extent of U.S. ZIP Codes, which represent mail routes and points to efficiently deliver mail rather than geographic areal extents40. Spatial joins attributed each ZCTA with county, climate, and USDA commuting codes37.

The second stage of the geography review compared raw data to the validating geospatial datasets. A preliminary geography data file was exported from R as a .csv file and imported into QGIS as a tabular file. This raw geography file consisted of PermNum, reported state, reported ZIP Code, and IP address coordinates. Each record from the raw dataset was assigned a ZCTA by one of three methods: (1) by an exact match between ZIP Code and ZCTA, (2) by assigning unmatchable but valid ZIP Codes (e.g., a Post Office Box) to those mapped on the U.S. Postal website38, or (3) by ZCTA of the IP address. State, county, and climate zone variables were assigned based on assigned ZCTA and IP address. The third stage of the geography review and final variable assignment occurred in R. The .dbf file of the geography shapefile from the second stage was imported into R for further variable assignment. The variable geo_state_zip summarizes the data quality of reported_state and respondents’ ZIP Code. The variable assigned_state is the same as reported_state, unless the respondent did not report a state, in which case assigned_state is the IP address state. The variable region is based on assigned_state. Three data quality location variables ending in “chk” provide additional geography comparisons for the data user (Table 2): state_chk (compares reported_state to IP address state), zcta_chk (compares reported_state to ZCTA state), and county_chk (compares ZCTA county to IP address county). The variable geo_review consists of state_chk, zcta_chk, and county_chk pasted together as a single composite variable.
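The assignment rules in this review can be sketched in Python as a short fallback chain. This is an illustration under stated assumptions, not the authors' QGIS and R workflow: the function names, the method labels (`exact`, `usps`, `ip`), the check values, the underscore separator in `geo_review`, and all ZIP Codes below are made up for the example.

```python
def assign_zcta(zip_code, known_zctas, usps_lookup, ip_zcta):
    """Assign a ZCTA by the three methods described above, in order."""
    if zip_code in known_zctas:       # (1) exact ZIP Code to ZCTA match
        return zip_code, "exact"
    if zip_code in usps_lookup:       # (2) valid but unmatchable ZIP, e.g. a PO Box
        return usps_lookup[zip_code], "usps"
    return ip_zcta, "ip"              # (3) fall back to the IP address ZCTA


def assign_state(reported_state, ip_state):
    """Use the reported state unless it is missing, then use the IP state."""
    return reported_state or ip_state


def geo_review(state_chk, zcta_chk, county_chk, sep="_"):
    """Paste the three check variables into one composite value."""
    return sep.join([state_chk, zcta_chk, county_chk])


# Made-up reference data for illustration only.
known_zctas = {"11111"}
usps_lookup = {"22222": "11111"}  # hypothetical PO Box ZIP mapped to a ZCTA

print(assign_zcta("11111", known_zctas, usps_lookup, ip_zcta="33333"))
print(assign_zcta("22222", known_zctas, usps_lookup, ip_zcta="33333"))
print(assign_zcta(None, known_zctas, usps_lookup, ip_zcta="33333"))
print(assign_state(None, "WA"), geo_review("match", "match", "mismatch"))
```

Recording which method produced each ZCTA, as the second element of the returned tuple does here, is one way to let a data user filter on assignment quality.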

Comparison to other datasets

Comparing the UPGRADE-E dataset with other U.S. national-scale datasets shows that it aligns with expected outcomes. Several demographics, home characteristics, and home modification questions can be compared with other existing datasets, such as the American Housing Survey (AHS) and the Residential Energy Consumption Survey15,16, to understand how this dataset is positioned relative to known distributions.

Figure 4 compares the UPGRADE-E dataset with similar data on demographics from the U.S. Census16: gender, age structure, home ownership, and ethnicity. These comparisons show a high level of similarity in the data collected to that of the total U.S. population represented by the Census, suggesting that this is an appropriate sampling by the survey. The largest discrepancy was for occupants owning versus renting their household, with Census data showing a smaller proportion of homeownership.

Figure 5 compares the UPGRADE-E dataset to the AHS for six variables: home size, year built, education, household income, whether the residence is in an urban or rural area, and primary heating fuel16. The 2023 AHS data (n = 133,231) were used to calculate percentages by aggregating the detached single-family home, attached single-family home, and 2–4-unit dwellings in the AHS (n = 102,522) to mirror the dwelling types included in the UPGRADE-E dataset. For some categories, slight differences in the categorical groupings required harmonization between the two sources, e.g. home size, year built, and income categories were summed to match the lowest resolution between the datasets. The distributions of respondents in the two surveys are similar, with a few exceptions. The UPGRADE-E dataset has more respondents reporting completion of an associate degree or trade school, a different household income distribution, and a different mix of heating fuel sources. The higher representation of associate degrees and trade schools in the UPGRADE-E dataset is most likely because the AHS does not include trade school, so the AHS segment represents only those with associate degrees. UPGRADE-E has a greater representation of residents earning less than $60,000 per year, while the AHS has a higher representation of individuals with annual household incomes approaching or above $200,000. Additionally, there are some differences in reported primary home heating fuel between the UPGRADE-E data and the AHS data (n = 102,178 due to some homes without a primary heating system).
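The harmonization step, summing finer categories to the coarsest grouping shared by both sources, can be illustrated with a short Python sketch. The bin labels and percentages below are invented for the example and are not values from UPGRADE-E or the AHS.

```python
def harmonize(shares, mapping):
    """Sum fine-grained category shares into the coarser bins given by mapping."""
    coarse = {}
    for fine_bin, share in shares.items():
        target = mapping[fine_bin]
        coarse[target] = coarse.get(target, 0.0) + share
    return coarse


# Invented year-built shares (percent of respondents) at a fine resolution.
fine_shares = {
    "before 1950": 18.0,
    "1950-1979": 32.0,
    "1980-1999": 27.0,
    "2000 or later": 23.0,
}
# Hypothetical mapping to the coarser bins used by the other source.
to_coarse = {
    "before 1950": "before 1980",
    "1950-1979": "before 1980",
    "1980-1999": "1980 or later",
    "2000 or later": "1980 or later",
}

harmonized = harmonize(fine_shares, to_coarse)
print(harmonized)
```

Once both sources are expressed in the same bins, their shares can be compared category by category.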

In addition to comparison with known datasets for technical validation, it is useful to demonstrate how cross-factor analysis provides an exploratory view of the data and can be used to understand technology adoption from various lenses, along with illustrating how the data can be used by researchers in other capacities. While the high number of variables included in this dataset does not allow a comprehensive demonstration of all possible crosscuts, an example is provided in the usage notes that provides insight and a roadmap for this type of exploration.

Usage Notes

The datasets are publicly available. They can be analysed in any statistical computing or visualization software that enables import of .csv files, such as R, Python, MATLAB, SAS, SPSS, and many others. The research team has used these data to explore technology adoption regionally14, and to compare contractor and household preferences41.

When importing the data, researchers are encouraged to review the README file and the data dictionary that accompany the data subsets. Researchers should take caution in running statistical analyses, making sure to remove data containing blank values (NA, 777, 999) as appropriate, so the software does not erroneously interpret the missing data as a numerical value.
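The effect of leaving blank-value codes in a numeric summary can be shown with a small Python example. The ratings below are invented, and the sketch assumes cells arrive as strings, as they would from Python's `csv` module; the set of codes should be applied only to variables that the data dictionary says use them.

```python
from statistics import mean

# Blank-value codes as they would appear in a .csv cell.
BLANK_CODES = {"NA", "777", "999"}


def valid_numbers(cells):
    """Drop blank-value codes and convert the remaining cells to floats."""
    return [float(cell) for cell in cells if str(cell).strip() not in BLANK_CODES]


# Invented ratings on a 1-3 scale, with three blank values mixed in.
ratings = ["1", "3", "999", "2", "777", "NA"]

naive_mean = mean(float(cell) for cell in ratings if cell != "NA")
clean_mean = mean(valid_numbers(ratings))
print(naive_mean, clean_mean)
```

The naive mean is dominated by the 777 and 999 codes, while the cleaned mean reflects only the three real ratings.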

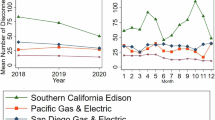

A crosscut of the data considering a combination of geographic, socioeconomic, personal preference, and home modification factors is presented in Fig. 6. For this example, four technologies and upgrades were selected: heat pumps for space heating and cooling, heat pump water heaters, wall insulation, and air sealing of cracks and openings. The figure shows uptake of these technologies by region, by motivation behind the purchase or upgrade decision, and by fuel type used in the home for space heating. Household income is used on the x-axis to demonstrate how upgrades vary by overall household budgets for each category. Similar analyses can be conducted across a wide variety of demographic and home energy decision-making factors.

When looking specifically at technology adoption in relation to heating fuel (Fig. 6), electricity as the primary heating fuel appeared to be a driver for heat pump adoption, which aligns with previous investigations42.
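A crosscut of this kind amounts to a grouped adoption rate. The Python sketch below computes the share of adopters within each combination of grouping variables; the column names (`income_bracket`, `heating_fuel`, `has_heat_pump`) and the rows are hypothetical and do not reproduce Fig. 6.

```python
from collections import defaultdict


def adoption_rate(rows, group_keys, flag):
    """Share of respondents with flag == 1 within each group."""
    totals = defaultdict(int)
    adopters = defaultdict(int)
    for row in rows:
        if row[flag] not in (0, 1):  # ignore blank-value codes such as 999
            continue
        key = tuple(row[name] for name in group_keys)
        totals[key] += 1
        adopters[key] += row[flag]
    return {key: adopters[key] / totals[key] for key in totals}


# Invented respondents for illustration only.
rows = [
    {"income_bracket": "under 60k", "heating_fuel": "electricity", "has_heat_pump": 1},
    {"income_bracket": "under 60k", "heating_fuel": "electricity", "has_heat_pump": 0},
    {"income_bracket": "under 60k", "heating_fuel": "natural gas", "has_heat_pump": 0},
    {"income_bracket": "60k or more", "heating_fuel": "electricity", "has_heat_pump": 1},
    {"income_bracket": "60k or more", "heating_fuel": "electricity", "has_heat_pump": 999},
]

rates = adoption_rate(rows, ["income_bracket", "heating_fuel"], "has_heat_pump")
for group, rate in sorted(rates.items()):
    print(group, rate)
```

Swapping the grouping keys for region or motivation variables yields the other views described above.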

Code availability

Code used to produce derived variables (see Fig. 2) and to process the data as presented in this article is included in the supplemental files.

Each of the data subsets can be accessed independently through the data tables and files and does not require code to process13,34. Dataset users can import the data into their program of choice for statistical analysis or visualization. However, to expedite the process of combining the subsets into one master data file, the script “combine_files.R” is included in the dataset package. This script, written in R, pulls the data subsets into a single environment and merges them by the unique participant identifier (“PermNum”), resulting in a single dataset of 9,919 entries with 391 variables. The script can be accessed directly at PNNL’s GitHub site at this link: https://github.com/pnnl/NEI/blob/master/RED/combine_files.R.
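For users working outside R, the merge on PermNum can be approximated with Python's standard library. This is a minimal sketch and not a port of combine_files.R: the two in-memory tables stand in for the real data files, and their values are invented. Only the PermNum key and the variable names assigned_state and anychange come from the dataset description.

```python
import csv
import io


def read_rows(handle):
    """Read a CSV file handle into a list of dictionaries."""
    return list(csv.DictReader(handle))


def merge_by_permnum(tables):
    """Combine rows from several tables that share the PermNum identifier."""
    merged = {}
    for rows in tables:
        for row in rows:
            merged.setdefault(row["PermNum"], {}).update(row)
    # PermNum values are all five digits, so string order matches numeric order.
    return [merged[key] for key in sorted(merged)]


# Stand-ins for two of the data files; values are invented.
geography = io.StringIO("PermNum,assigned_state\n10001,WA\n10002,TX\n")
counts = io.StringIO("PermNum,anychange\n10002,0\n10001,1\n")

combined = merge_by_permnum([read_rows(geography), read_rows(counts)])
for row in combined:
    print(row)
```

Because variable names are unique across the data files, updating one dictionary per respondent does not overwrite columns from earlier files. With the real files, each `io.StringIO` object would be replaced by an opened .csv file.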

References

U.S. Energy Information Administration. U.S. energy consumption by source and sector, 2023. https://www.eia.gov/energyexplained/us-energy-facts/images/consumption-by-source-and-sector.pdf (2023).

International Energy Agency (IEA). Buildings. https://www.iea.org/energy-system/buildings (2022).

Mayer, A., Carter, E. & Feinberg, E. Partisanship and energy efficiency program participation in the USA. Npj Clim. Action 2, 39 (2023).

Hoicka, C. E., Parker, P. & Andrey, J. Residential energy efficiency retrofits: How program design affects participation and outcomes. Energy Policy 65, 594–607 (2014).

Falchetta, G., Cian, E. D., Pavanello, F. & Wing, I. S. Inequalities in global residential cooling energy use to 2050. Nat. Commun. 15, 7874 (2024).

Wang, C. et al. Impacts of climate change, population growth, and power sector decarbonization on urban building energy use. Nat. Commun. 14, 6434 (2023).

Christensen, P., Francisco, P., Myers, E. & Souza, M. Decomposing the Wedge between Projected and Realized Returns in Energy Efficiency Programs. Rev. Econ. Stat. 105, (2023).

Kastner, I. & Stern, P. C. Examining the decision-making processes behind household energy investments: A review. Energy Res. Soc. Sci. 10, 72–89 (2015).

Sovacool, B. What are we doing here? Analyzing fifteen years of energy scholarship and proposing a social science research agenda. Energy Res. Soc. Sci. 1, 1–29 (2014).

Foulds, C. et al. An agenda for future Social Sciences and Humanities research on energy efficiency: 100 priority research questions. Humanit. Soc. Sci. Commun. 9, 223 (2022).

Wekhof, T. & Houde, S. Using narratives to infer preferences in understanding the energy efficiency gap. Nat. Energy 8, 965–977 (2023).

Biswas, S., Fuentes, T. L., McCord, K. H., Rackley, A. L. S. & Antonopoulos, C. A. Decisions and decision-makers: Mapping the sociotechnical cognition behind home energy upgrades in the United States. Energy Res. Soc. Sci. 109, 103411 (2024).

Fuentes, T. et al. UPGRADE-E: Understanding Patterns Guiding Residential Adoption and Decisions about Energy Efficiency. Pacific Northwest National Laboratory 2 https://doi.org/10.25584/2371918 (2024).

Antonopoulos, C. A., Fuentes, T. L., McCord, K. H., Rackley, A. L. S. & Biswas, S. Regional assessment of household energy decision-making and technology adoption in the United States. Energy Policy 185, 113940 (2024).

U.S. Energy Information Administration. Residential Energy Consumption Survey (RECS). (2022).

U.S. Census Bureau. American Housing Survey (AHS). (2023).

Residential Building Stock Assessment. Northwest Energy Efficiency Alliance (NEEA) https://neea.org/data/residential-building-stock-assessment.

ResStock - NREL. https://resstock.nrel.gov/datasets.

D’Oca, S., Hong, T. & Langevin, J. The human dimensions of energy use in buildings: A review. Renew. Sustain. Energy Rev. 81, 731–742 (2018).

Thorve, S. et al. High resolution synthetic residential energy use profiles for the United States. Sci. Data 10, 76 (2023).

Mitra, D., Steinmetz, N., Chu, Y. & Cetin, K. S. Typical occupancy profiles and behaviors in residential buildings in the United States. Energy Build. 210, 109713 (2020).

Cui, X., Lee, M., Uddin, M. N., Zhang, X. & Zakka, V. G. Analyzing different household energy use patterns using clustering and machine learning. Renew. Sustain. Energy Rev. 212, 115335 (2025).

Chidmi, B., Ettoumi, Y. & Aljohani, E. S. Analyzing the Determinants of U.S. Residential Energy Usage and Spending: A Machine Learning Approach. Energy J. 46, 147–177 (2025).

Faiers, A., Cook, M. & Neame, C. Towards a contemporary approach for understanding consumer behaviour in the context of domestic energy use. Energy Policy 35, 4381–4390 (2007).

Ghofrani, A., Zaidan, E. & Jafari, M. Reshaping energy policy based on social and human dimensions: an analysis of human-building interactions among societies in transition in GCC countries. Humanit. Soc. Sci. Commun. 8, 246 (2021).

Csutora, M., Zsoka, A. & Harangozo, G. The Grounded Survey – An integrative mixed method for scrutinizing household energy behavior. Ecol. Econ. 182, 106907–106907 (2021).

Desimone, L. M. & Le Floch, K. C. Are We Asking the Right Questions? Using Cognitive Interviews to Improve Surveys in Education Research. Educ. Eval. Policy Anal. 26, 1–22 (2004).

Ryan, K., Gannon-Slater, N. & Culbertson, M. J. Improving Survey Methods With Cognitive Interviews in Small- and Medium-Scale Evaluations. Am. J. Eval. 33, 414–430 (2012).

Wilson, C., Crane, L. & Chryssochoidis, G. Why do homeowners renovate energy efficiently? Contrasting perspectives and implications for policy. Energy Res. Soc. Sci. 7, 12–22 (2015).

Qualtrics. How Qualtrics Ensures Data Quality. https://qxm.co1.qualtrics.com/CP/File.php?F=F_esUQdbCZpKsymFM (2022).

RStudio Team. R: A Language and Environment for Statistical Computing. https://www.r-project.org/ (2022).

Millard, S. P. EnvStats: An R Package for Environmental Statistics. https://doi.org/10.1007/978-1-4614-8456-1 (Springer New York, New York, NY, 2013).

Wickham, H. et al. Welcome to the Tidyverse. J. Open Source Softw. 4, 1686 (2019).

Fuentes, T., McCord, K., Kabre, W., Martel, M., & C.A. Antonopoulos. UPGRADE-E: Understanding Patterns. Kaggle. Pacific Northwest National Laboratory. https://www.kaggle.com/datasets/maxmartell/upgrade-e.

US Census Bureau. 2023 TIGER/Line® Shapefiles: Counties (and equivalent). https://www.census.gov/cgi-bin/geo/shapefiles/index.php?year=2023&layergroup=Counties+%28and+equivalent%29 (2023).

US Census Bureau. 2023 TIGER/Line® Shapefiles: ZIP Code Tabulation Areas. https://www.census.gov/cgi-bin/geo/shapefiles/index.php?year=2023&layergroup=ZIP+Code+Tabulation+Areas (2023).

US Department of Agriculture. Rural-Urban Commuting Area Codes. https://www.ers.usda.gov/data-products/rural-urban-commuting-area-codes (2019).

US Postal Service. Cities by ZIP Code™. Look Up a ZIP Code™ https://tools.usps.com/zip-code-lookup.htm?citybyzipcode (2024).

QGIS. QGIS. (2025).

Grubesic, T. H. Zip codes and spatial analysis: Problems and prospects. Socioecon. Plann. Sci. 42, 129–149 (2008).

Casquero-Modrego, N., Antonopoulos, C., Fuentes, T. L., McCord, K. H. & Walker, I. S. Decarbonizing residential buildings in the United States: A comparative analysis of households and construction professionals. Energy Res. Soc. Sci. 120, 103908 (2025).

Davis, L. W. The Economic Determinants of Heat Pump Adoption. Environ. Energy Policy Econ. 5, 162–199 (2024).

ZIP Code to ZCTA Crosswalk – UDS Mapper. https://udsmapper.org/zip-code-to-zcta-crosswalk/.

Acknowledgements

This work was funded by the U.S. Department of Energy Residential Building Integration Program within the Building Technologies Office, via Contract DE-AC05-76RL01830 to the Pacific Northwest National Laboratory. Holly Carr and Amy Royden-Bloom provided helpful counsel throughout development of this manuscript. Contributors to related data analyses include Saurabh Biswas, Adrienne Rackley, Nuria Casquero-Modrego, and Katelyn Stenger. ILLUME Advising, including Liz Kelley, Mallika Jayaraman and Maass contributed to the development and conducting of the semi-structured interviews. The survey was developed with guidance from an advisory committee that included Lauren Bates, KC Boyce, Nuria Casquero-Modrego, Tim Echols, Renee Guillory, Tom Hargreaves, Owen Howlett, Brennan Less, Matthew Lipson, Joshua Loughman, Loren Lutzenhiser, Carlos Eduardo-Martin, Mithra Moezzi, Juliet Shavit, Jill Vohr, Iain Walker, Michelle Moore, Tracey Woods, and Emily Robichaux. Susan Varnum was the PNNL Institutional Review Board representative.

Author information

Contributions

Tracy Fuentes: Conceptualization, Methodology, Validation, Formal analysis, Investigation, Data Curation, Writing – Original Draft, Writing – Review and Editing. Kieren McCord: Conceptualization, Methodology, Validation, Formal analysis, Data Curation, Writing – Original Draft, Writing – Review and Editing, Visualization. Max Martell: Formal analysis, Writing – Review & Editing, Visualization. Chrissi Antonopoulos: Conceptualization, Methodology, Investigation, Resources, Writing – Original Draft, Writing – Review and Editing, Supervision, Project administration, Funding acquisition.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Fuentes, T.L., McCord, K.H., Martell, M.J. et al. A dataset for understanding self-reported patterns influencing residential energy decisions. Sci Data 12, 1273 (2025). https://doi.org/10.1038/s41597-025-05335-8

DOI: https://doi.org/10.1038/s41597-025-05335-8