Abstract

Multimodal devices combining optical cameras and LiDAR are crucial for precise underwater environmental perception, and algorithmic enhancement and optimization of RGB images and laser point clouds is a key focus of underwater computer vision. Such research requires extensive multivariate underwater data for training and evaluation, together with careful organization and labeling of that data. To meet these needs, we present MOUD, a multimodal optical dataset for underwater image detection, segmentation, enhancement, and 3D reconstruction. The dataset comprises over 18,000 original RGB images, 12,000 labeled images, 60 point cloud sets, and several labeled point clouds. The labeled images cover nine target object classes: scallop, starfish, conch, holothuria, seaweed, coral, reef, abalone, and barnacle. All data were collected in our underwater simulation scenes. To ensure accuracy and utility, we employed a state-of-the-art image-laser underwater detector operated with appropriate kinematic parameters. The dataset supports training and evaluation for underwater image enhancement, detection, segmentation, and reconstruction, all of which are vital for precise underwater sensing, and is therefore of significant value for advancing underwater optical exploration technology.


Background & Summary

Underwater environment sensing technology is a critical aspect of ocean exploration and underwater computer vision. Advances in traditional optical detection methods have greatly improved the efficiency and accuracy of perception in non-aquatic environments, such as terrestrial and aerial spaces, and have produced extensive, high-quality optical datasets; adapting optical devices and environmental sensing algorithms to underwater applications, however, remains challenging1,2,3. Successful deployment of these algorithms depends on underwater optical datasets for image processing and 3D reconstruction. Machine vision research has focused on target detection and 3D reconstruction to enhance underwater environmental perception. Numerous publicly accessible underwater 2D/3D datasets exist, including SeaThru4,5, MIMIR-UW6, and AQUALOC7 for underwater reconstruction, as well as TRASHCAN8, JAMSTEC9, RUIE10, UIEB11, and SubPipe12 for underwater target detection and segmentation. However, to our knowledge, no existing work addresses underwater image enhancement13,14, detection15, segmentation16, and reconstruction17,18,19 simultaneously. This paper aims to support all of these tasks within an underwater simulated environment, using a substantial dataset with diverse training samples. The tasks applicable to the 2D datasets are detailed in Table 1, and Table 2 outlines the tasks for the 3D datasets.

Collecting data in real underwater environments20 incurs significant economic, labor, and time costs, so accurately simulating the natural underwater setting in experimental waters is crucial. To this end, we established an underwater simulation laboratory at Dalian Maritime University, China. We used authentic sea sand, reef structures, and high-precision coral models to construct an extensive underwater simulation area, and replicated water bodies with varying color and transparency. From a research standpoint, our MOUD dataset21 serves a range of applications in underwater image enhancement, detection, segmentation, and reconstruction, such as identifying underwater biological species15 and reconstructing 3D scenes of submerged environments17,18,19. In addition, the labeled target categories, their instance segmentation masks, and the assigned category names (particularly for biological targets) benefit multiple stakeholders: the underwater computer vision community22 (for training and evaluation), the aquaculture and fisheries sector23 (for assessing local biological populations), and underwater exploration initiatives24 (for reconstructing aquatic environments in three dimensions).

Our MOUD dataset21 is multivariate, comprising high-resolution RGB images alongside point cloud data, with nine distinct classes of target objects annotated in the RGB images for detection and segmentation. The dataset supports several tasks. For object detection and classification, it allows locating each object, providing a bounding box, and categorizing each box. For semantic segmentation25,26, it offers pixel-level labels that assign a class to every region of the image. For instance segmentation27, it goes beyond assigning one label to all instances of the same class by distinguishing each specimen with its own label and mask.

We also applied strict standards when acquiring data with a state-of-the-art image-laser underwater detector28, including verifying the accuracy of the equipment’s kinematic parameters and the precise alignment of image and point cloud data29. Such a dataset plays a significant role in advancing underwater environment sensing, particularly optical underwater image detection, segmentation, enhancement, and 3D reconstruction.

Methods

Collecting Data

For data acquisition, we used the Insight Micro-1000m Laser&12MP Stills Image-Laser Underwater Probe, shown in Fig. 1(a). Its optical sensing element consists of a laser probe, which enables point cloud reconstruction of underwater scenes, and a 4K optical camera that captures underwater RGB images at a resolution of 4096 × 3008 pixels, as depicted in Fig. 1(b). The probe is mounted on a three-axis linear slider mechanism that allows precise control during data collection at a constant speed of 0.5 m/s underwater. This mechanism keeps the probe at a constant water depth of 2 m while accurately maintaining the detector’s X and Y positions throughout the scanning process.

The optical sensing element of the Image-Laser Underwater Probe consists of two parts: a laser probe for point cloud reconstruction of the underwater scene and an optical 4K camera for underwater RGB image capture. The probe is fixed in the frame and an exposure lamp module is added to simulate different lighting conditions.

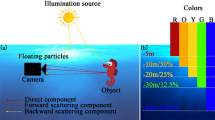

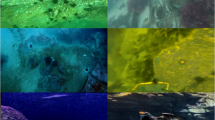

During acquisition, we kept the laser probe and the optical 4K camera at a constant height directly above the acquisition plane while minimizing the influence of external light sources. To this end, data were collected in a dark room, which removes natural light and its temporal fluctuations from the simulated deep-water environment. As illustrated in Fig. 1(c), an exposure lamp module integrated into the image-laser detector replicates three lighting scenarios: high, medium, and low light. In addition, we treated the water with silica micropowder ground from sand and with water-soluble organic dyes to simulate differences in water color and turbidity. Combining the three lighting levels with two clarity levels yields six distinct types of underwater environmental data, as shown in Fig. 2(a), where High Clarity and Low Clarity correspond to turbidities of 2 NTU and 10 NTU, respectively.

The simulation featured nine target objects arranged among the sand and rocks: scallops, starfish, conchs, holothurians, seaweed, coral, reefs, abalones, and barnacles, as depicted in Fig. 3. We chose these target classes for their prevalence in real marine ecosystems and for their technical and commercial significance, including applications in underwater computer vision training and evaluation, biological population surveys in aquaculture and fisheries, and 3D exploration and reconstruction of underwater environments.
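The six environment types are simply the Cartesian product of three lighting levels and two clarity levels. A minimal sketch of how a user might enumerate these conditions together with a label map for the nine classes is shown below; the integer class IDs and the string names are hypothetical conventions chosen for illustration, not the dataset's own encoding.

```python
from itertools import product

# Lighting levels set by the exposure lamp module (from the text).
LIGHT_LEVELS = ("High", "Mid", "Low")
# Water-clarity levels and their target turbidity in NTU (from the text).
CLARITY_NTU = {"High clarity": 2.0, "Low clarity": 10.0}

# The nine annotated target classes. The integer IDs below are a
# hypothetical convention for illustration only.
CLASS_NAMES = ("scallop", "starfish", "conch", "holothuria", "seaweed",
               "coral", "reef", "abalone", "barnacle")
CLASS_TO_ID = {name: idx for idx, name in enumerate(CLASS_NAMES)}


def acquisition_conditions():
    """Return every (light, clarity, turbidity_ntu) combination."""
    return [(light, clarity, ntu)
            for light, (clarity, ntu) in product(LIGHT_LEVELS, CLARITY_NTU.items())]


if __name__ == "__main__":
    for condition in acquisition_conditions():
        print(condition)
```

Keeping the grid explicit makes it easy to stratify train/test splits so that every lighting and clarity combination is represented.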

(a) Data under different light and water conditions. Light is categorized as High, Mid, or Low, as set by the exposure lamp module mounted on the body of the image-laser underwater detector; water is categorized as High clarity or Low clarity, as set by the turbidity from fine sand and the water-soluble organic dyes added to the water. (b) Images acquired by the image-laser underwater detector over the acquisition area partially overlap. According to the overlap rate of two images, we define small overlapping regions (overlap area less than 30%) and large overlapping regions (overlap area more than 30%), and we select images with small overlapping regions that differ substantially in target type for labeling.
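The 30% criterion can be made concrete with a small sketch. It assumes, hypothetically, that each image's ground footprint is an axis-aligned rectangle in slider coordinates (plausible for a probe moved on an X/Y slider at fixed height) and defines the overlap rate as intersection area divided by the smaller footprint's area; the exact definition used for MOUD is not stated. The text also leaves exactly 30% unassigned; here it counts as "large".

```python
from dataclasses import dataclass

SMALL_OVERLAP_THRESHOLD = 0.30  # 30% threshold from the text


@dataclass(frozen=True)
class Footprint:
    """Axis-aligned ground footprint of one image, in metres (assumed model)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def overlap_rate(a: Footprint, b: Footprint) -> float:
    """Intersection area divided by the smaller footprint's area (assumed definition)."""
    w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if w <= 0 or h <= 0:
        return 0.0  # disjoint or merely touching footprints
    return (w * h) / min(a.area, b.area)


def overlap_class(a: Footprint, b: Footprint) -> str:
    """Label an image pair 'small' (< 30%) or 'large' (>= 30%)."""
    return "small" if overlap_rate(a, b) < SMALL_OVERLAP_THRESHOLD else "large"
```

For example, two 1 m × 1 m footprints offset by 0.8 m along X overlap by 20% and would be classed as "small", i.e. candidates for annotation.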

After positioning the objects, we operated the three-axis linear module to raise the underwater image-laser detector and move it across the designated area, capturing RGB images and generating 3D point clouds. We then systematically shifted the scanning area and recorded extensive data to support future related tasks. To facilitate image stitching, we reserved sufficient overlap between the acquisition ranges of neighboring routes, as shown in Fig. 2(b).
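How much overlap a scan produces follows from simple geometry. The sketch below uses the stated 0.5 m/s slider speed and 2 m scanning distance, but the camera's in-water field of view is not given in the text, so `fov_deg` is a hypothetical input; the pinhole footprint formula also ignores refraction at the housing port.

```python
import math

SCAN_SPEED_M_S = 0.5    # constant slider speed stated in the text
NADIR_DISTANCE_M = 2.0  # 2 m scanning distance stated in the text


def footprint_length(distance_m: float, fov_deg: float) -> float:
    """Ground footprint along one image axis for a nadir pinhole camera.

    fov_deg is an assumed in-water field of view (not given in the text).
    """
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


def capture_interval_s(footprint_m: float, overlap: float,
                       speed_m_s: float = SCAN_SPEED_M_S) -> float:
    """Longest trigger interval that still keeps the requested forward overlap."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m * (1.0 - overlap) / speed_m_s


def lane_spacing_m(footprint_m: float, overlap: float) -> float:
    """Spacing between neighbouring routes for the requested side overlap."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m * (1.0 - overlap)
```

With a hypothetical 2 m footprint and 20% forward overlap, images would need to be triggered at least every 3.2 s at 0.5 m/s.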

Object Annotation

Given the complexity of the underwater environment and the difficulty of identifying marine organisms, labelers cannot rely solely on experience and intuition for accurate categorization. We therefore organized the team into two groups. The first group used the image annotation tool Labelme to create initial labels, drawing polygons along the contours of the target objects’ shells. These polygons closely match the shapes of the objects, with distinct colors representing different categories, as illustrated in Fig. 4. The second group thoroughly reviewed the labeled dataset and corrected any omissions or inaccuracies before the refined dataset was used for detection. We annotated only images with small overlapping regions that depict distinct areas; images with large overlaps remain unannotated, although their raw data are still available. Figure 5 shows an example of an underwater simulation scene with a mask carrying category labels for the nine target object types: scallop, starfish, conch, holothuria, seaweed, coral, reef, abalone, and barnacle.
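Because the polygons come from Labelme, a quick per-class audit of an annotation file is straightforward. A minimal sketch, assuming the annotations use Labelme's standard JSON layout (a "shapes" list whose entries carry "label", "points", and "shape_type"); the helper names are hypothetical and not part of MOUD_tools.

```python
import json
from collections import Counter


def polygon_area(points):
    """Shoelace formula for a simple polygon given as [[x, y], ...] in pixels."""
    n = len(points)
    if n < 3:
        return 0.0
    s = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def summarize_labelme(doc):
    """Count polygon instances and total polygon area (px^2) per class label."""
    counts, areas = Counter(), Counter()
    for shape in doc.get("shapes", []):
        if shape.get("shape_type", "polygon") != "polygon":
            continue  # skip rectangles, points, lines, etc.
        label = shape["label"]
        counts[label] += 1
        areas[label] += polygon_area(shape["points"])
    return counts, areas


def load_labelme(path):
    """Read one Labelme JSON file from disk."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

Summing these counts over all files gives a class-frequency table, which is useful for spotting class imbalance before training.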

Point Cloud Data

While capturing underwater images, we simultaneously scanned the seabed with the underwater laser detector to generate point cloud data, as illustrated in Fig. 6. Each image sequence is linked to a corresponding global point cloud. Timestamps of the point clouds and images are aligned through the integrated synchronized triggering of the image-laser underwater detector, ensuring cross-modal data consistency. For tasks that rely on both RGB images and point clouds, the factory pre-alignment of the detector guarantees a maximum cross-modal error of 1 mm. During some point cloud acquisitions we also accounted for variables such as turbidity caused by underwater currents, creating a benchmark for assessing the robustness of downstream tasks. Furthermore, we annotated the point cloud data, as exemplified in Fig. 7, which shows a simulated underwater scene with category labels identifying multiple specimens across four target object categories: coral reef, conch, starfish, and holothuria.
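For tasks that combine both modalities, a common first step is to project laser points into the image and read off the mask class at each pixel. The sketch below assumes a plain pinhole model with hypothetical calibration values (`R`, `t`, `fx`, `fy`, `cx`, `cy`); the real extrinsics and intrinsics would have to come from the detector's factory calibration, and a flat-port underwater housing adds refraction that this simple model ignores.

```python
from typing import Optional, Tuple

IMAGE_W, IMAGE_H = 4096, 3008  # camera resolution stated in the text

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def project_point(p: Vec3, R: Mat3, t: Vec3,
                  fx: float, fy: float, cx: float, cy: float
                  ) -> Optional[Tuple[float, float]]:
    """Project a laser-frame point to pixel coordinates (pinhole, no refraction).

    R and t (hypothetical values) map laser coordinates to camera
    coordinates: X_c = R @ p + t. Returns None if the point lies behind
    the camera or outside the image.
    """
    xc = sum(R[0][k] * p[k] for k in range(3)) + t[0]
    yc = sum(R[1][k] * p[k] for k in range(3)) + t[1]
    zc = sum(R[2][k] * p[k] for k in range(3)) + t[2]
    if zc <= 0:
        return None
    u = fx * xc / zc + cx
    v = fy * yc / zc + cy
    if 0 <= u < IMAGE_W and 0 <= v < IMAGE_H:
        return u, v
    return None


def label_points(points, label_at, **calib):
    """Attach the mask class at each projected pixel, or None if not visible.

    label_at(u, v) is any callable returning the class at integer pixel (u, v).
    """
    labels = []
    for p in points:
        uv = project_point(p, **calib)
        labels.append(label_at(int(uv[0]), int(uv[1])) if uv else None)
    return labels
```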

Data Records

The dataset is available at Zenodo21. It comprises 18,000 RGB images paired with 60 large-scale point clouds, together with the corresponding annotated images. The data are organized into five ZIP archives: “Scene_1.zip”, “Scene_2.zip”, “Scene_3.zip”, “Scene_4.zip”, and “Scene_5.zip”. Together, the “Scene” folders contain 18,000 original RGB images, 12,000 annotated images, and 60 point cloud sets, organized into three folders: RGB images are in the “Images” folders, annotated images in the “Annotation” folders, and point clouds in the “Point Clouds” folders. Each “Images” folder is further divided by light intensity into “High light,” “Mid light,” and “Low light” sub-folders, each containing over 1,200 RGB images, as illustrated in Fig. 8(b). These sub-folders are in turn subdivided into four groups according to the collection sequence and scanning area, labeled, for example, “High light area_1,” “High light area_2,” “High light area_3,” and “High light area_4.”
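When indexing the archives, it helps to recover scene, light level, and area from each image path. A minimal sketch, assuming the nesting implied by the description (`Scene_<k>/Images/<Level> light/<Level> light area_<n>/<file>`); the exact archive root and file names are assumptions, so the pattern may need adjusting to the real layout.

```python
import re
from pathlib import PurePosixPath

# Assumed layout, inferred from the description:
#   Scene_<k>/Images/<Level> light/<Level> light area_<n>/<file>
_SCENE_RE = re.compile(r"^Scene_(\d+)$")
_LIGHT_RE = re.compile(r"^(High|Mid|Low) light$")
_AREA_RE = re.compile(r"^(High|Mid|Low) light area_(\d+)$")


def parse_image_path(rel_path: str):
    """Return (scene, light, area) parsed from a relative image path, or None."""
    parts = PurePosixPath(rel_path).parts
    if len(parts) != 5 or parts[1] != "Images":
        return None
    scene_m = _SCENE_RE.match(parts[0])
    light_m = _LIGHT_RE.match(parts[2])
    area_m = _AREA_RE.match(parts[3])
    if not (scene_m and light_m and area_m):
        return None
    if area_m.group(1) != light_m.group(1):
        return None  # an area folder should sit under its own light level
    return int(scene_m.group(1)), light_m.group(1), int(area_m.group(2))
```

The entries returned by `zipfile.ZipFile(path).namelist()` use forward slashes, so they can be passed to this function directly, after stripping any extra top-level prefix the archive may contain.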

Technical Validation

Labeling Validation

We have designed a three-tier review mechanism to ensure the accuracy of optical image annotation.

First, we used the image annotation tool Labelme to generate polygonal masks along target contours, with boundaries identified automatically by an edge detection algorithm30, and established color-coding rules for the nine preset target types: scallops, starfish, conchs, sea cucumbers (holothurians), seaweeds, corals, reefs, abalones, and barnacles. This produced the initial annotated images.

Second, we formed a review team of marine biologists and computer vision researchers, assigned the initial labeled images to team members, and reviewed them manually using cross-validation. In this stage, we first verified annotation completeness (e.g., whether any target had been omitted), then checked classification accuracy (e.g., the morphological distinction between corals and reefs), and re-labeled any disputed annotations.

Third, we used Cohen’s Kappa to quantify labeling consistency among team members. The final overall Kappa value reached 0.92 (95% confidence interval 0.89-0.94), indicating that the labeling results are reliable.
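Cohen's Kappa compares the observed agreement between two annotators, p_o, with the agreement expected by chance from each annotator's class frequencies, p_e: κ = (p_o - p_e) / (1 - p_e). A minimal pure-Python sketch for one pair of annotators follows; the text does not say how pairwise values were aggregated into the overall 0.92, so that step is left out here.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(labels_a)
    # Observed agreement: fraction of items with identical labels.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement from each rater's marginal class frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one identical class throughout
    return (p_o - p_e) / (1.0 - p_e)
```

For example, if two reviewers label four objects as coral, coral, reef, reef and coral, reef, reef, reef, then p_o = 0.75, p_e = 0.5, and κ = 0.5, well below the reported agreement.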

In addition, for partially occluded target objects, we applied the following rules for more accurate annotation: when the occluded area is less than 20% of the target, the target is annotated separately as an independent individual; when it is greater than 20%, the target boundaries are refined by morphological erosion and the targets are annotated following the occlusion order.
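The occlusion rule and the erosion step can be sketched as follows. The 3 × 3 square structuring element and the handling of exactly 20% (assigned to the erosion branch here) are assumptions, since the text specifies neither; the function names are hypothetical.

```python
OCCLUSION_THRESHOLD = 0.20  # 20% rule from the text


def occlusion_rule(occluded_fraction: float) -> str:
    """Choose the annotation rule for a partially occluded target.

    Exactly 20% is not specified in the text; here it falls into the
    erosion branch (an assumption).
    """
    if occluded_fraction < OCCLUSION_THRESHOLD:
        return "annotate_independently"
    return "erode_and_annotate_in_occlusion_order"


def erode(mask, iterations: int = 1):
    """Binary erosion with an assumed 3x3 square structuring element.

    mask is a list of lists of 0/1. Pixels outside the image count as 0,
    so foreground touching the border is eroded as well.
    """
    if not mask:
        return []
    h, w = len(mask), len(mask[0])
    out = mask
    for _ in range(iterations):
        prev = out
        # A pixel survives only if its whole 3x3 neighbourhood is foreground.
        out = [[1 if all(0 <= y + dy < h and 0 <= x + dx < w and prev[y + dy][x + dx]
                         for dy in (-1, 0, 1) for dx in (-1, 0, 1)) else 0
                for x in range(w)]
               for y in range(h)]
    return out
```

Erosion shrinks each mask by one pixel per iteration, which removes thin slivers where two touching objects' polygons bleed into each other.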

Sensor Uncertainty Analysis

Our image-laser underwater detectors are pre-calibrated, integrated units.

Clarity impact metrics: We use the Modulation Transfer Function (MTF) to evaluate how well the imaging system of the image-laser underwater detector resolves underwater target details. The MTF at 10 lp/mm was measured with a resolution test card under varying turbidity conditions (turbidity range 5-50 NTU).
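At a single spatial frequency such as 10 lp/mm, the MTF is commonly estimated as the modulation (Michelson contrast) of the imaged bar pattern divided by the modulation of the physical test card. The measured MTF values are not reported here, so the intensities in this sketch are hypothetical; in practice the maxima and minima are averaged over several bar periods.

```python
def modulation(i_max: float, i_min: float) -> float:
    """Michelson contrast (I_max - I_min) / (I_max + I_min) of a bar pattern."""
    if i_max + i_min <= 0:
        raise ValueError("intensities must sum to a positive value")
    return (i_max - i_min) / (i_max + i_min)


def mtf(image_max: float, image_min: float,
        target_max: float, target_min: float) -> float:
    """MTF at one spatial frequency: image modulation / target modulation."""
    return modulation(image_max, image_min) / modulation(target_max, target_min)
```

For an ideal black-and-white card (modulation 1.0) imaged with hypothetical peak and trough intensities of 200 and 50, the MTF is 0.6; rising turbidity lowers the peaks and raises the troughs, driving this value down.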

Point cloud uncertainty metrics: The point cloud metrics were tested at a 2 m nadir scanning distance, which is the standard operating range of our image-laser underwater detector in typical tasks such as underwater structure inspection and 3D mapping. At this distance, the point cloud density is 120 points/m², the single-point localization error is < 3.5 mm, and the relative error of distance measurement is < 0.5%.
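The three point cloud figures correspond to simple checks that users can reproduce against their own reference measurements. A minimal sketch; the reference distances and ground-truth coordinates would have to come from an independent survey, and the function names are hypothetical.

```python
import math

MAX_POINT_ERROR_MM = 3.5    # single-point localization bound from the text
MAX_RELATIVE_ERROR = 0.005  # 0.5% relative range error bound from the text


def point_density(num_points: int, area_m2: float) -> float:
    """Points per square metre over a scanned patch."""
    if area_m2 <= 0:
        raise ValueError("area must be positive")
    return num_points / area_m2


def point_error_mm(measured_m, reference_m) -> float:
    """Euclidean distance between a measured and a reference point, in mm."""
    return 1000.0 * math.dist(measured_m, reference_m)


def relative_range_error(measured_m: float, reference_m: float) -> float:
    """|measured - reference| / reference for a single range reading."""
    return abs(measured_m - reference_m) / reference_m


def within_spec(measured_m: float, reference_m: float) -> bool:
    """Check a range reading against the stated < 0.5% relative error."""
    return relative_range_error(measured_m, reference_m) < MAX_RELATIVE_ERROR
```

For instance, 480 points on a 4 m² patch gives the stated 120 points/m², and a 2.008 m reading of a true 2 m range (0.4% error) is within specification.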

Turbidity and Staining Simulation Validation

For turbidity control, we regulated the turbidity of the water with silica micropowder obtained by grinding sand and monitored it in real time with a turbidimeter to simulate underwater environments of different turbidity (2 NTU and 10 NTU). Compared with real sea data (measured turbidity of 1-3 NTU in the open waters of the South Pacific Ocean and 8-12 NTU offshore in the Yellow Sea), the error of both simulated conditions was within ±2 NTU.

For coloring, we used water-soluble organic dyes to regulate the spectral absorption of the water body and measured the attenuation coefficient of the simulated water with a spectrometer at a wavelength of 490 nm: c = 0.11 ± 0.05 m⁻¹ (measured values of 0.09-0.16 m⁻¹ offshore in the Yellow Sea), with a simulation error of < ±0.1 m⁻¹.
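To put the attenuation coefficient in perspective, the usual Beer-Lambert model gives the fraction of a collimated beam that survives a path of length z as T = exp(-c·z). The sketch below applies it to the stated c = 0.11 m⁻¹ and 2 m scanning distance; treating the path as a single straight beam is a simplification that ignores forward-scattered light reaching the sensor.

```python
import math


def transmittance(c_per_m: float, path_m: float) -> float:
    """Beer-Lambert fraction of a collimated beam remaining after path_m metres."""
    if c_per_m < 0 or path_m < 0:
        raise ValueError("attenuation coefficient and path length must be non-negative")
    return math.exp(-c_per_m * path_m)


if __name__ == "__main__":
    # c = 0.11 +/- 0.05 per metre (from the text); 2 m one-way, 4 m round trip.
    for c in (0.06, 0.11, 0.16):
        print(f"c={c:.2f}/m  one-way={transmittance(c, 2.0):.3f}  "
              f"round-trip={transmittance(c, 4.0):.3f}")
```

At the nominal c = 0.11 m⁻¹, roughly 80% of the beam survives the 2 m path one way and about 64% over the 4 m round trip of actively illuminated imaging.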

Usage Notes

We evaluated the data using the YOLOv8 algorithm31, as shown in Fig. 9. Underwater target detection reached mAP@0.5 = 90%, indicating that the present annotation method provides high accuracy and meets the research needs of complex underwater environments. To make it easier for users to download the dataset and run it with the relevant algorithms, we archive each scene as a separate ZIP archive. Similarly, all the code is compressed into a single ZIP archive named “MOUD_tools”. Users can process the original data with our scripts, or read the labeled images directly for training using our JSON file parsing scripts.
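Training a YOLO-family detector requires converting polygon annotations into normalized bounding boxes (one text line `class cx cy w h` per object). A minimal sketch, assuming Labelme-style JSON with "imageWidth", "imageHeight", and "shapes"; the class order below is a hypothetical choice, and this is not the conversion performed by MOUD_tools, whose scripts should be preferred when available.

```python
import json

# Hypothetical class order; the dataset does not prescribe YOLO class IDs.
CLASS_NAMES = ["scallop", "starfish", "conch", "holothuria", "seaweed",
               "coral", "reef", "abalone", "barnacle"]
CLASS_TO_ID = {name: idx for idx, name in enumerate(CLASS_NAMES)}


def labelme_to_yolo(doc):
    """Convert Labelme polygons to YOLO detection lines 'cls cx cy w h'.

    Coordinates are clipped to the image and normalised by the image size
    stored in the JSON. Shapes with unknown labels or no points are skipped.
    """
    w, h = doc["imageWidth"], doc["imageHeight"]
    lines = []
    for shape in doc.get("shapes", []):
        cls = CLASS_TO_ID.get(shape["label"])
        if cls is None or not shape["points"]:
            continue
        xs = [min(max(x, 0.0), w) for x, _ in shape["points"]]
        ys = [min(max(y, 0.0), h) for _, y in shape["points"]]
        x0, x1, y0, y1 = min(xs), max(xs), min(ys), max(ys)
        cx, cy = (x0 + x1) / 2 / w, (y0 + y1) / 2 / h
        bw, bh = (x1 - x0) / w, (y1 - y0) / h
        lines.append(f"{cls} {cx:.6f} {cy:.6f} {bw:.6f} {bh:.6f}")
    return lines


def convert_file(json_path, txt_path):
    """Write the YOLO label file for one Labelme JSON file."""
    with open(json_path, encoding="utf-8") as f:
        doc = json.load(f)
    with open(txt_path, "w", encoding="utf-8") as f:
        f.write("\n".join(labelme_to_yolo(doc)) + "\n")
```

The Ultralytics tooling expects one such text file per image, with the same file stem, in a parallel labels directory; a box counts as a true positive for mAP@0.5 when its IoU with a ground-truth box is at least 0.5.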

Code availability

The dataset is available from the Zenodo repository21 mentioned above. In addition, we have released the annotation tool code along with the dataset, archived as “MOUD_tools” and available online. In our annotation work, the tool ran well under Ubuntu 20.04/22.04. We also provide a JSON file parsing script in “MOUD_tools” to make it easier for users to work with our data.

References

Huy, D. Q. et al. Object perception in underwater environments: a survey on sensors and sensing methodologies. Ocean. Eng. 267, 113202, https://doi.org/10.1016/j.oceaneng.2022.113202 (2023).

Lu, H. et al. Underwater optical image processing: a comprehensive review. Mob. Netw. Appl. 22, 1204–1211, https://doi.org/10.48550/arXiv.1702.03600 (2017).

Shen, Y., Zhao, C., Liu, Y., Wang, S. & Huang, F. Underwater optical imaging: Key technologies and applications review. IEEE Access 9, 85500–85514, https://doi.org/10.1109/ACCESS.2021.3086820 (2021).

Akkaynak, D. & Treibitz, T. Sea-thru: A method for removing water from underwater images. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 1682–1691, https://doi.org/10.5281/zenodo.14936350 (2019).

Levy, D. et al. Seathru-nerf: Neural radiance fields in scattering media. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 56–65, https://doi.org/10.5281/zenodo.13381727 (2023).

Álvarez Tuñón, O. et al. Mimir-uw: A multipurpose synthetic dataset for underwater navigation and inspection. In 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 6141–6148, https://doi.org/10.1109/IROS55552.2023.10341436 (2023).

Ferrera, M., Creuze, V., Moras, J. & Trouvé-Peloux, P. Aqualoc: An underwater dataset for visual-inertial-pressure localization. The Int. J. Robotics Res. 38, 1549–1559, https://doi.org/10.1177/0278364919883346 (2019).

Hong, J., Fulton, M. & Sattar, J. Trashcan: A semantically-segmented dataset towards visual detection of marine debris. arXiv preprint arXiv:2007.08097 https://doi.org/10.17596/0004035 (2020).

Enomoto, T. et al. Observing-system research and ensemble data assimilation at jamstec. Data assimilation for atmospheric, oceanic and hydrologic applications (Vol. II) 509–526, https://doi.org/10.17596/0004035 (2013).

Liu, R., Fan, X., Zhu, M., Hou, M. & Luo, Z. Real-world underwater enhancement: challenges, benchmarks, and solutions. IEEE Transactions on Circuits and Systems for Video Technology https://doi.org/10.1109/TCSVT.2019.2963772 (2020).

Li, C. et al. An underwater image enhancement benchmark dataset and beyond. IEEE transactions on image processing 29, 4376–4389, https://doi.org/10.1016/j.patcog.2019.107038 (2019).

Álvarez-Tuñón, O. et al. SubPipe: A Submarine Pipeline Inspection Dataset for Segmentation and Visual-inertial Localization. OCEANS 2024 - Singapore https://doi.org/10.1109/OCEANS51537.2024.10682150 (2024).

Deluxni, N., Sudhakaran, P., Ndiaye, M. F. et al. A review on image enhancement and restoration techniques for underwater optical imaging applications. IEEE Access https://doi.org/10.1109/ACCESS.2023.3322153 (2023).

Raveendran, S., Patil, M. D. & Birajdar, G. K. Underwater image enhancement: a comprehensive review, recent trends, challenges and applications. Artif. Intell. Rev. 54, 5413–5467, https://doi.org/10.1007/s10462-021-10025-z (2021).

Lei, F., Tang, F. & Li, S. Underwater target detection algorithm based on improved yolov5. J. Mar. Sci. Eng. 10, 310, https://doi.org/10.3390/jmse10030310 (2022).

Islam, M. J. et al. Semantic segmentation of underwater imagery: Dataset and benchmark. In 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 1769–1776, https://doi.org/10.1109/IROS45743.2020.9340821227 (2020).

Beall, C., Lawrence, B. J., Ila, V. & Dellaert, F. 3d reconstruction of underwater structures. In 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems 4418–4423, https://doi.org/10.1109/IROS.2010.5649213 (2010).

Hu, K. et al. Overview of underwater 3d reconstruction technology based on optical images. J. Mar. Sci. Eng. 11, 949, https://doi.org/10.3390/jmse11050949 (2023).

Maccarone, A. et al. Submerged single-photon lidar imaging sensor used for real-time 3d scene reconstruction in scattering underwater environments. Opt. Express 31, 16690–16708, https://doi.org/10.1364/OE.487129 (2023).

Igbinenikaro, O. P., Adekoya, O. O. & Etukudoh, E. A. Emerging underwater survey technologies: a review and future outlook. Open Access Res. J. Sci. Technol. 10, 071–084, https://doi.org/10.53022/oarjst.2024.10.2.0052 (2024).

Chu, X. A multimodal optical dataset for underwater image enhancement, detection, segmentation, and reconstruction. Version v2. Zenodo https://doi.org/10.5281/zenodo.14865039 (2025).

González-Sabbagh, S. P. & Robles-Kelly, A. A survey on underwater computer vision. ACM Comput. Surv. 5, 1–39, https://doi.org/10.1145/3578516 (2023).

Lim, L. W. K. Implementation of artificial intelligence in aquaculture and fisheries: deep learning, machine vision, big data, internet of things, robots and beyond. J. Comput. Cogn. Eng. 3, 112–118, https://doi.org/10.47852/bonviewJCCE3202803 (2024).

Fu, C. et al. Rethinking general underwater object detection: Datasets, challenges, and solutions. Neurocomputing 517, 243–256, https://doi.org/10.1016/j.neucom.2022.10.039 (2023).

Thisanke, H. et al. Semantic segmentation using vision transformers: A survey. Eng. Appl. Artif. Intell. 126, 106669, https://doi.org/10.48550/arXiv.2305.03273 (2023).

Luo, Z., Yang, W., Yuan, Y., Gou, R. & Li, X. Semantic segmentation of agricultural images: A survey. Inf. Process. Agric. https://doi.org/10.1016/j.inpa.2023.02.001 (2023).

Wang, X., Girdhar, R., Yu, S. X. & Misra, I. Cut and learn for unsupervised object detection and instance segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 3124–3134, https://doi.org/10.48550/arXiv.2301.11320 (2023).

Jung, K., Hitchcox, T. & Forbes, J. R. Performance evaluation of 3d keypoint detectors and descriptors on coloured point clouds in subsea environments. In 2023 IEEE International Conference on Robotics and Automation (ICRA), 1105–1111, https://doi.org/10.48550/arXiv.2209.12881 (2023).

Zhang, J.-H. et al. An accurate calibration method for multilayer refractive imaging in underwater 3-d scanning. IEEE/ASME Transactions on Mechatronics https://doi.org/10.1109/TMECH.2024.3405545 (2024).

Kirillov, A. et al. Segment anything. arXiv preprint arXiv:2304.02643 https://doi.org/10.48550/arXiv.2304.02643 (2023).

Qu, S., Cui, C., Duan, J., Lu, Y. & Pang, Z. Underwater small target detection under yolov8-la model. Sci. Reports 14, 16108, https://doi.org/10.1038/s41598-024-66950-w (2024).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No.62301107). The authors express their gratitude to all collaborators at Magics Lab, Dalian Maritime University, for their contributions.

Author information


Contributions

The project was conceived by Siyuan Liu, who coordinated with Xuanhe Chu. The data classification was drafted by Xuanhe Chu, Dehua Zou, Junwen Tian, Shijian Zhou, Han Chen, and Qi Gao, with additional input from Siyuan Liu, Yancheng Liu, and Xianping Fu. Xuanhe Chu designed the simulated underwater scene, and Xuanhe Chu, Dehua Zou, Junwen Tian, Shijian Zhou, Jianda Wang, Jingchun Zhou, and Qi Gao were responsible for constructing it. Xuanhe Chu and Junwen Tian led the data acquisition. Junwen Tian and Shijian Zhou commissioned the equipment and processed the dataset. Dehua Zou led the dataset categorisation and labelling. Xuanhe Chu wrote the initial outline of the manuscript, and all authors were involved in its subsequent refinement and provided editorial feedback.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Chu, X., Chen, H., Zou, D. et al. A Multimodal Optical Dataset for Underwater Image Enhancement, Detection, Segmentation, and Reconstruction. Sci Data 12, 1554 (2025). https://doi.org/10.1038/s41597-025-05797-w


DOI: https://doi.org/10.1038/s41597-025-05797-w