Abstract

Retinal fundus photographs are now widely used in developing artificial intelligence (AI) systems for the detection of various fundus diseases. However, the application of AI algorithms in intraocular tumors remains limited due to the scarcity of large, publicly available, and diverse multi-disease fundus image datasets. Ultra-wide-field (UWF) fundus images, with a broad imaging range, offer unique advantages for early detection of intraocular tumors. In this study, we developed a comprehensive dataset containing 2,031 UWF fundus images, encompassing five distinct types of intraocular tumors and normal images. These images were classified by three expert annotators to ensure accurate labeling. This dataset aims to provide a robust, multi-disease, real-world data source to facilitate the development and validation of AI models for the early diagnosis and classification of intraocular tumors.

Background & Summary

Intraocular tumors can be categorized as either benign or malignant1. Benign tumors include choroidal hemangioma (CH)2, retinal capillary hemangioma (RCH)3, and choroidal osteoma (CO)4, among others, while malignant tumors encompass retinoblastoma (RB)5, uveal melanoma (UM)6, and intraocular metastases7. Both benign and malignant tumors can cause visual impairment, and malignant tumors pose a substantial risk of mortality because of their potential for local invasion and metastasis4,6,8. Because intraocular tumors often have an insidious onset, treatment is frequently delayed: most patients seek medical care only after experiencing significant visual impairment. Early and accurate diagnosis of intraocular tumors is therefore critical for preserving vision and preventing potentially life-threatening complications.

Fundus photography, a non-invasive imaging modality, is widely regarded as an effective tool for the screening, diagnosis, and monitoring of fundus diseases9,10. Compared with the 30° to 45° field of view of conventional color fundus photography, emerging ultra-wide-field (UWF) fundus imaging systems can capture a 200° panoramic retinal image11. This wide field of view is particularly important for detecting and monitoring intraocular tumors, which frequently arise in or extend into the peripheral fundus12. UWF imaging therefore facilitates the identification of early-stage lesions and enables more accurate monitoring of tumor progression.

Recent advances in artificial intelligence (AI) have shown great promise in automating the screening and diagnosis of fundus diseases, including diabetic retinopathy13, age-related macular degeneration14, glaucoma15, and retinopathy of prematurity (ROP)16. AI algorithms trained on large datasets of fundus photographs have achieved high sensitivity and specificity and have helped ease the heavy screening burden. However, the application of AI algorithms to intraocular tumors remains limited, primarily because cases are scarce and large, publicly available fundus image datasets are lacking17. Most existing fundus image datasets for intraocular tumors are non-public, typically focus on a single disease type, and predominantly use traditional fundus photography18,19,20,21. We recently published a dataset for the intelligent diagnosis of ROP16; however, such datasets cannot support the automatic diagnosis of multiple types of intraocular tumors. These limitations significantly hinder the development and validation of AI models for intraocular tumors.

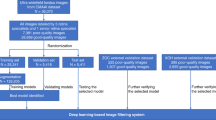

To address these challenges, this study provides a more comprehensive dataset of UWF fundus images that covers multiple intraocular tumors alongside normal fundi. A brief overview of the study is presented in Fig. 1. The dataset is intended to support the training and validation of AI algorithms, with the aim of improving diagnostic accuracy and ultimately contributing to better patient outcomes in the management of intraocular tumors.

Accurate diagnosis of intraocular tumors in clinical practice typically requires integrating multimodal information, including patient history, ultrasonography, OCT, CT, MRI, and histopathological findings, in addition to fundus imaging. In this study, we focused exclusively on UWF fundus images to construct a foundational, image-based dataset intended for developing AI tools for automated lesion screening and early referral. Therefore, clinical metadata and additional imaging modalities were not included in the current version, which we acknowledge as a limitation. In addition, the dataset does not yet include tumor-mimicking conditions (such as retinal detachment, choroidal nevi, or Coats’ disease), which may resemble intraocular tumors in fundus photographs. Incorporating such cases, along with multimodal clinical data, will be a critical step in future work to improve the specificity of models trained on the dataset, support differential diagnosis, and improve clinical applicability.

Methods

Data collection

A total of 2,031 UWF fundus images were collected at Shenzhen Eye Hospital (SZEH) between 2019 and 2024. All images were captured with the Optomap Daytona scanning laser ophthalmoscope (SLO), an ultra-wide-field fundus camera. During image quality control, obstructed, blurry, or out-of-focus fundus images were excluded. All selected images were exported in JPG format. The dataset consists of 677 UWF fundus images from patients with intraocular tumors and 1,354 UWF fundus images from healthy participants, collected from 332 eyes of 218 individuals, all of whom were Asian. Because intraocular tumors are progressive and require ongoing treatment and follow-up, a single patient may have multiple fundus images of the same eye captured at different stages of the disease. The dataset therefore includes multiple images of the same eye, reflecting tumor changes or treatment responses over time. Data collection was approved by the Ethics Committee of Shenzhen Eye Hospital (2024KYPJ108), and informed consent was waived owing to the retrospective design and data anonymization.

Image categorization

All UWF fundus images were divided into six categories: normal images and five types of intraocular tumors, namely choroidal hemangioma (CH), retinal capillary hemangioma (RCH), choroidal osteoma (CO), retinoblastoma (RB), and uveal melanoma (UM). Representative fundus images of each category are shown in Fig. 2. The images were classified by three experienced annotators from SZEH: each UWF image was independently classified by two junior annotators, and when their classifications disagreed, a senior annotator reviewed and reclassified the image to ensure accuracy. Table 1 presents the distribution of images across the six categories. We used t-distributed stochastic neighbor embedding (t-SNE) to visualize the distributions of the different categories in the dataset, as described previously16 (Fig. 3).
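The two-reader labeling protocol with senior adjudication reduces to a simple decision rule. The Python sketch below is illustrative only. The function names (`adjudicate`, `disagreement_rate`) and the short category codes are hypothetical and are not part of the released dataset or of any tooling described in this study.

```python
from typing import Optional

# Hypothetical short codes for the six categories (Normal plus five tumor types).
CATEGORIES = ("Normal", "CH", "RCH", "CO", "RB", "UM")


def adjudicate(junior_a: str, junior_b: str, senior: Optional[str] = None) -> str:
    """Return the final label for one image.

    If the two junior annotators agree, their shared label is final.
    Otherwise the senior annotator's label is required and becomes final.
    """
    for label in (junior_a, junior_b, senior):
        if label is not None and label not in CATEGORIES:
            raise ValueError(f"unknown category: {label!r}")
    if junior_a == junior_b:
        return junior_a
    if senior is None:
        raise ValueError("junior annotators disagree; senior review is required")
    return senior


def disagreement_rate(pairs) -> float:
    """Fraction of images on which the two junior annotators disagreed."""
    pairs = list(pairs)
    return sum(a != b for a, b in pairs) / len(pairs)


# Example: the juniors disagree, so the senior's reading decides.
# adjudicate("CH", "UM", senior="UM")  -> "UM"
```

The senior label is accepted even if it matches neither junior label, mirroring "reviewed and reclassified" rather than a forced choice between the two junior readings.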

Data Records

The dataset, titled “UWF Fundus Images of Intraocular Tumors”, is publicly available on Figshare22. It is provided as a zipped file containing six subfolders, each corresponding to a specific category: Normal, Choroidal Hemangioma (CH), Retinal Capillary Hemangioma (RCH), Choroidal Osteoma (CO), Retinoblastoma (RB), and Uveal Melanoma (UM). Each subfolder includes all the fundus images belonging to its respective category. The dataset is designed to facilitate the development of AI algorithms aimed at automating the detection of intraocular tumors.
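The folder layout can be consumed with a few lines of Python. The sketch below indexes images by subfolder name and draws a stratified 8:1:1 split similar in spirit to the one described under Technical Validation. It assumes only that the unzipped archive contains one subfolder per category holding `.jpg` files. Folder names are read from disk rather than assumed. The function names are hypothetical, and the per-class rounding rule (leftover images go to the test set) is our own choice.

```python
import random
from collections import defaultdict
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg"}  # images were exported in JPG format


def index_dataset(root):
    """Return a sorted list of (image_path, label) pairs, one label per subfolder."""
    items = []
    for class_dir in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        for img in sorted(class_dir.iterdir()):
            if img.suffix.lower() in IMAGE_SUFFIXES:
                items.append((img, class_dir.name))
    return items


def stratified_split(items, ratios=(0.8, 0.1, 0.1), seed=0):
    """Split (path, label) pairs into train/val/test, preserving class proportions."""
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append((path, label))
    rng = random.Random(seed)
    train, val, test = [], [], []
    for label in sorted(by_label):
        group = by_label[label]
        rng.shuffle(group)
        n_train = round(len(group) * ratios[0])
        n_val = round(len(group) * ratios[1])
        train += group[:n_train]
        val += group[n_train:n_train + n_val]
        test += group[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Example (hypothetical path to the unzipped archive):
# items = index_dataset("uwf_intraocular_tumors")
# train, val, test = stratified_split(items, seed=42)
```

This sketch splits at the image level. Because the dataset contains multiple images of the same eye (see Data collection), images of one eye can end up in different subsets.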

Technical Validation

To evaluate the dataset’s utility for training and testing AI models, we developed four deep learning models to automatically identify intraocular tumors from UWF fundus images. The dataset was randomly divided into training, validation, and test sets at an 8:1:1 ratio using stratified sampling, which preserves the proportion of each of the six categories across all subsets, as detailed in Table 1. The models were implemented in the PyTorch framework and trained on an NVIDIA V100 GPU. Before training, all input images were resized to 224 × 224 pixels. To improve generalization and reduce overfitting, we applied data augmentation, including random horizontal and vertical flips, random rotations, and color jitter. For this six-class classification task, we used the standard cross-entropy loss, defined as:

\[L=-\mathop{\sum }\limits_{i=1}^{6}{y}_{i}\log \left({\hat{y}}_{i}\right)\]

where \({y}_{i}\) is the binary indicator (1 if class i is the correct classification and 0 otherwise) and \({\hat{y}}_{i}\) is the predicted probability for class i. The models were optimized with stochastic gradient descent (SGD). The initial learning rate was set to 0.001 and adjusted by a schedule that combined a linear warmup phase with cosine annealing to ensure stable convergence. Training ran for 100 epochs with a batch size of 256. Four architectures (ResNet5023, ResNet10124, ConvNeXt-T25, and ViT-B26) were used for AI model development and validation (Fig. 4). Our evaluation protocol consisted of training each model on the training set, selecting the checkpoint with the highest accuracy on the validation set, and reporting its performance on the unseen test set to ensure an unbiased assessment. Performance was evaluated using accuracy, area under the receiver operating characteristic curve (AUC), precision, sensitivity, F1 score, specificity, and kappa (Table 2). Confusion matrices were used to illustrate the correspondence between the models’ predictions and the actual classifications, facilitating performance evaluation and error analysis. To further understand the classification performance of the models, we employed t-SNE to visualize the high-dimensional feature embeddings learned by each model on the test set. To assess robustness, we conducted five independent runs for each model, each with a different random seed, and calculated the mean, standard deviation (SD), and 95% confidence interval (CI) of every performance metric across these runs.
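The learning-rate schedule and the run-level summary described above can be sketched without a deep learning framework. In the Python sketch below, the warmup length (5 epochs), the final learning rate (0), and the use of a Student's t interval for the 95% CI are assumptions, since the text does not specify them. The function names are hypothetical. In PyTorch, a comparable schedule can be assembled from `torch.optim.lr_scheduler.LinearLR`, `CosineAnnealingLR`, and `SequentialLR`.

```python
import math
import statistics

# Two-sided 95% critical values of Student's t, keyed by degrees of freedom (n - 1).
T_975 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571}


def lr_at_epoch(epoch, base_lr=1e-3, total_epochs=100, warmup_epochs=5, min_lr=0.0):
    """Learning rate for a 0-indexed epoch: linear warmup, then cosine annealing."""
    if epoch < warmup_epochs:
        # Ramp linearly from base_lr / warmup_epochs up to base_lr.
        return base_lr * (epoch + 1) / warmup_epochs
    # Fraction of the post-warmup phase completed, in [0, 1).
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


def summarize_runs(values):
    """Mean, sample SD and t-based 95% CI of one metric over 2 to 6 independent runs."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    half_width = T_975[n - 1] * sd / math.sqrt(n)
    return mean, sd, (mean - half_width, mean + half_width)


# Example: summarize_runs([a1, a2, a3, a4, a5]) for the five seeds' test accuracies.
```

With only five runs, the t critical value (about 2.776 for four degrees of freedom) gives a noticeably wider interval than the normal-approximation value of 1.96.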
The t-SNE visualizations were generated from the final-layer feature embeddings of each model, extracted just before the classification head. They offer qualitative insight into how the models organize the data in their learned feature space, complementing the quantitative metrics. Visual inspection of the t-SNE plots (Fig. 4c) reveals several key findings. First, all four models effectively separate the Normal and RB classes from the other tumor types, indicating that the features of these two categories are distinct enough to be learned reliably. Among the four models, ViT-B produces the most compact clusters for these classes, with data points more tightly grouped, suggesting more consistent feature extraction. Second, for the more challenging and visually similar tumor types (CO, UM, CH, and RCH), the plots for ResNet50, ResNet101, and ConvNeXt-T show considerable overlap between clusters. This overlap is consistent with the off-diagonal errors in their respective confusion matrices (Fig. 4a). ViT-B, in contrast, shows better inter-class separability even among these difficult classes, with more clearly defined boundaries between clusters.
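Every metric in Table 2 except AUC can be derived from a confusion matrix such as those in Fig. 4a. The Python sketch below assumes one-vs-rest definitions for each class and uses a simple unweighted (macro) average across classes. The text does not state how per-class values were aggregated, so the averaging choice is an assumption, and the function names are hypothetical.

```python
def per_class_metrics(cm):
    """One-vs-rest metrics for each class of a square confusion matrix.

    cm[i][j] counts test images whose true class is i and predicted class is j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                          # class c missed
        fp = sum(cm[r][c] for r in range(k)) - tp     # other classes called c
        tn = total - tp - fn - fp
        sens = tp / (tp + fn) if tp + fn else 0.0
        spec = tn / (tn + fp) if tn + fp else 0.0
        prec = tp / (tp + fp) if tp + fp else 0.0
        f1 = 2 * prec * sens / (prec + sens) if prec + sens else 0.0
        results.append({"sensitivity": sens, "specificity": spec,
                        "precision": prec, "f1": f1})
    return results


def macro_average(results, key):
    """Unweighted mean of one metric over all classes."""
    return sum(r[key] for r in results) / len(results)


def accuracy(cm):
    """Fraction of images on the diagonal (correctly classified)."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(sum(row) for row in cm)


def cohen_kappa(cm):
    """Cohen's kappa: agreement between predictions and labels beyond chance."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    observed = accuracy(cm)
    # Chance agreement from the product of row (true) and column (predicted) marginals.
    expected = sum(sum(cm[i]) * sum(cm[r][i] for r in range(k))
                   for i in range(k)) / total ** 2
    return (observed - expected) / (1 - expected)
```

Because specificity is computed one-vs-rest, each class's true negatives include every image of the other five classes. This tends to keep specificity high even when sensitivity varies widely across classes.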

The results (Table 2) show that, although all models achieved high specificity (all > 94%), their performance varied considerably in other key metrics such as accuracy, sensitivity, and F1 score. The ViT-B model demonstrated the strongest and most consistent overall performance, achieving the highest mean accuracy (91.46%), AUC (96.87%), F1 score (81.42%), and kappa (84.14%). This suggests that the Vision Transformer architecture is particularly effective at learning the discriminative features required to classify a diverse set of intraocular tumors from UWF fundus images. To test whether the observed advantage of ViT-B was statistically significant, we used the DeLong test to compare the AUC of ViT-B with that of each of the other three models. The advantage of ViT-B was statistically significant in all comparisons: ViT-B vs. ResNet50 (p = 0.00793), ViT-B vs. ResNet101 (p < 0.00001), and ViT-B vs. ConvNeXt-T (p = 0.0003). This statistical evidence supports the conclusion that ViT-B provides a significant improvement in diagnostic performance for this task.
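The DeLong test compares two correlated AUCs measured on the same test images. The text does not say how the six-class outputs were reduced to the binary setting that the test requires. The stdlib-only Python sketch below therefore assumes a one-vs-rest comparison for a single class, with each model's predicted probability for that class as its score. The function names are hypothetical. For R users, the pROC package's `roc.test` provides a DeLong option.

```python
import math


def _psi(x, y):
    """Kernel of the Mann-Whitney statistic: 1 if x > y, 0.5 on ties, else 0."""
    if x > y:
        return 1.0
    return 0.5 if x == y else 0.0


def _placements(pos, neg):
    """DeLong structural components V10 (per positive) and V01 (per negative)."""
    v10 = [sum(_psi(x, y) for y in neg) / len(neg) for x in pos]
    v01 = [sum(_psi(x, y) for x in pos) / len(pos) for y in neg]
    return v10, v01


def _cov(a, b):
    """Sample covariance with an n - 1 denominator."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)


def delong_test(labels, scores_a, scores_b):
    """Two-sided DeLong test for the difference between two correlated AUCs.

    labels: 1 for the positive class, 0 otherwise; scores_a and scores_b are the
    two models' scores for the positive class on the same images, in the same order.
    Returns (auc_a, auc_b, z, p_value).
    """
    def split(scores):
        pos = [s for s, t in zip(scores, labels) if t == 1]
        neg = [s for s, t in zip(scores, labels) if t == 0]
        return pos, neg

    v10_a, v01_a = _placements(*split(scores_a))
    v10_b, v01_b = _placements(*split(scores_b))
    auc_a = sum(v10_a) / len(v10_a)
    auc_b = sum(v10_b) / len(v10_b)
    m, n = len(v10_a), len(v01_a)  # numbers of positives and negatives
    var = ((_cov(v10_a, v10_a) + _cov(v10_b, v10_b) - 2 * _cov(v10_a, v10_b)) / m
           + (_cov(v01_a, v01_a) + _cov(v01_b, v01_b) - 2 * _cov(v01_a, v01_b)) / n)
    if var <= 0:
        raise ValueError("variance of the AUC difference is zero")
    z = (auc_a - auc_b) / math.sqrt(var)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return auc_a, auc_b, z, p_value


# Example (hypothetical variables): delong_test(is_um, p_um_vit, p_um_resnet50)
```

The covariance terms are what distinguish DeLong from comparing two independent AUCs: because both models score the same images, their errors are correlated, and ignoring that correlation would misstate the variance of the difference.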

External Validation

To further evaluate the generalizability of AI models developed with this dataset, we used an external validation dataset from the Eye, Ear, Nose, and Throat (EENT) Hospital of Fudan University27. This external dataset included 70 UWF fundus images: 16 of RB, 27 of UM, 16 of CH, and 11 of RCH. The models’ strong performance on this external dataset (Table 3 and Fig. 5) further supports their applicability in real-world clinical settings and the usability of the dataset presented in this study. Taken together, these results demonstrate the technical quality of the dataset and its potential for developing AI-driven diagnostic tools for intraocular tumors.

Code availability

No novel code was used in the construction of the dataset.

References

Oyemade, K. A. et al. Population-based incidence of intraocular tumours in Olmsted County, Minnesota. The British journal of ophthalmology 107, 1369–1376, https://doi.org/10.1136/bjophthalmol-2021-320682 (2023).

Si, Y. et al. Comparison of the therapeutic effects of photodynamic therapy, transpupillary thermotherapy, and their combination on circumscribed choroidal haemangioma. Photodiagnosis and photodynamic therapy 48, 104250, https://doi.org/10.1016/j.pdpdt.2024.104250 (2024).

Zhang, X. et al. Vitreoretinal Surgery for Retinal Capillary Hemangiomas With Retinal Detachment. Asia-Pacific journal of ophthalmology (Philadelphia, Pa.) 12, 623–625, https://doi.org/10.1097/apo.0000000000000588 (2023).

Zhang, L., Ran, Q. B., Lei, C. Y. & Zhang, M. X. Clinical features and therapeutic management of choroidal osteoma: A systematic review. Survey of ophthalmology 68, 1084–1092, https://doi.org/10.1016/j.survophthal.2023.06.002 (2023).

Zhou, M. et al. Recent progress in retinoblastoma: Pathogenesis, presentation, diagnosis and management. Asia-Pacific journal of ophthalmology (Philadelphia, Pa.) 13, 100058, https://doi.org/10.1016/j.apjo.2024.100058 (2024).

Carvajal, R. D. et al. Advances in the clinical management of uveal melanoma. Nature reviews. Clinical oncology 20, 99–115, https://doi.org/10.1038/s41571-022-00714-1 (2023).

Konstantinidis, L. & Damato, B. Intraocular Metastases–A Review. Asia-Pacific journal of ophthalmology (Philadelphia, Pa.) 6, 208–214, https://doi.org/10.22608/apo.201712 (2017).

Vempuluru, V. S., Maniar, A. & Kaliki, S. Global retinoblastoma studies: A review. Clinical & experimental ophthalmology 52, 334–354, https://doi.org/10.1111/ceo.14357 (2024).

Iqbal, S., Khan, T. M., Naveed, K., Naqvi, S. S. & Nawaz, S. J. Recent trends and advances in fundus image analysis: A review. Computers in biology and medicine 151, 106277, https://doi.org/10.1016/j.compbiomed.2022.106277 (2022).

Begum, T. et al. Diagnostic Accuracy of Detecting Diabetic Retinopathy by Using Digital Fundus Photographs in the Peripheral Health Facilities of Bangladesh: Validation Study. JMIR public health and surveillance 7, e23538, https://doi.org/10.2196/23538 (2021).

Midena, E. et al. Ultra-wide-field fundus photography compared to ophthalmoscopy in diagnosing and classifying major retinal diseases. Scientific reports 12, 19287, https://doi.org/10.1038/s41598-022-23170-4 (2022).

Callaway, N. F. & Mruthyunjaya, P. Widefield imaging of retinal and choroidal tumors. International journal of retina and vitreous 5, 49, https://doi.org/10.1186/s40942-019-0196-5 (2019).

Rajesh, A. E., Davidson, O. Q., Lee, C. S. & Lee, A. Y. Artificial Intelligence and Diabetic Retinopathy: AI Framework, Prospective Studies, Head-to-head Validation, and Cost-effectiveness. Diabetes care 46, 1728–1739, https://doi.org/10.2337/dci23-0032 (2023).

Wei, W., Anantharanjit, R., Patel, R. P. & Cordeiro, M. F. Detection of macular atrophy in age-related macular degeneration aided by artificial intelligence. Expert review of molecular diagnostics 23, 485–494, https://doi.org/10.1080/14737159.2023.2208751 (2023).

Coan, L. J. et al. Automatic detection of glaucoma via fundus imaging and artificial intelligence: A review. Survey of ophthalmology 68, 17–41, https://doi.org/10.1016/j.survophthal.2022.08.005 (2023).

Zhao, X. et al. A fundus image dataset for intelligent retinopathy of prematurity system. Scientific data 11, 543, https://doi.org/10.1038/s41597-024-03362-5 (2024).

Koseoglu, N. D., Corrêa, Z. M. & Liu, T. Y. A. Artificial intelligence for ocular oncology. Current opinion in ophthalmology 34, 437–440, https://doi.org/10.1097/icu.0000000000000982 (2023).

Chandrabhatla, A. S., Horgan, T. M., Cotton, C. C., Ambati, N. K. & Shildkrot, Y. E. Clinical Applications of Machine Learning in the Management of Intraocular Cancers: A Narrative Review. Investigative ophthalmology & visual science 64, 29, https://doi.org/10.1167/iovs.64.10.29 (2023).

Zhang, R. et al. Automatic retinoblastoma screening and surveillance using deep learning. British journal of cancer 129, 466–474, https://doi.org/10.1038/s41416-023-02320-z (2023).

Rahdar, A. et al. Semi-supervised segmentation of retinoblastoma tumors in fundus images. Scientific reports 13, 13010, https://doi.org/10.1038/s41598-023-39909-6 (2023).

Hoffmann, L. et al. Using Deep Learning to Distinguish Highly Malignant Uveal Melanoma from Benign Choroidal Nevi. Journal of clinical medicine 13, https://doi.org/10.3390/jcm13144141 (2024).

Sun, J. et al. An ultra-wide-field fundus image dataset for intelligent diagnosis of intraocular tumors. figshare https://doi.org/10.6084/m9.figshare.27986258 (2025).

Zhang, Y. et al. Deep Learning-based Automatic Diagnosis of Breast Cancer on MRI Using Mask R-CNN for Detection Followed by ResNet50 for Classification. Academic radiology 30(Suppl 2), S161–s171, https://doi.org/10.1016/j.acra.2022.12.038 (2023).

Lee, J. et al. Immune response and mesenchymal transition of papillary thyroid carcinoma reflected in ultrasonography features assessed by radiologists and deep learning. Journal of advanced research 62, 219–228, https://doi.org/10.1016/j.jare.2023.09.043 (2024).

Chen, H. et al. Nondestructive testing of runny salted egg yolk based on improved ConvNeXt-T. Journal of food science 89, 3369–3383, https://doi.org/10.1111/1750-3841.17010 (2024).

Wang, Z. et al. HCEs-Net: Hepatic cystic echinococcosis classification ensemble model based on tree-structured Parzen estimator and snap-shot approach. Medical physics 50, 4244–4254, https://doi.org/10.1002/mp.16444 (2023).

Tan, W. et al. Fairer AI in ophthalmology via implicit fairness learning for mitigating sexism and ageism. Nature communications 15, 4750, https://doi.org/10.1038/s41467-024-48972-0 (2024).

Acknowledgements

This work was supported by National Natural Science Foundation of China (82271103, 82401315), Sanming Project of Medicine in Shenzhen (SZSM202311018), Shenzhen Medical Research Fund (C2301005, A2403020), Shenzhen Key Medical Discipline Construction Fund (SZXK038), Shenzhen Fund for Guangdong Provincial High Level Clinical Key Specialties (SZGSP014), Shenzhen Science and Technology Program (JCYJ20240813152703005), Science and Technology Development Fund of Macao (0009/2025/ITP1) and grant from Macao Polytechnic University (RP/FCA-08/2024).

Author information

Contributions

J.S., X.Z., S.C.: equal contribution; J.S., X.Z.: data collection, writing; S.C.: data quality control, image classification; Y.Z.: methodology; G.Z., Y.S., H.R.: conceptualization, reviewing; G.Z., X.Z., Y.S.: funding acquisition; all authors contributed to writing the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Sun, J., Zhao, X., Chen, S. et al. An ultra-wide-field fundus image dataset for intelligent diagnosis of intraocular tumors. Sci Data 12, 1521 (2025). https://doi.org/10.1038/s41597-025-05864-2


DOI: https://doi.org/10.1038/s41597-025-05864-2