Abstract

Endoscopic spine surgery (ESS) is a minimally invasive procedure used for spinal nerve decompression, herniated disc removal, and spinal fusion. Despite its many advantages, its steep learning curve poses a challenge to widespread adoption. The development of artificial intelligence (AI) systems is crucial for enhancing the precision and safety of ESS. The automatic segmentation of surgical instruments is a key step towards realizing intelligent surgical assistance systems. As such, this paper has created the Spine Endoscopic Atlas (SEA) dataset, a comprehensive collection of annotated images encompassing all instruments commonly used in spinal endoscopic surgery. In total, SEA contains 48,510 images and 10,662 instrument segmentations derived from real-world ESS. This dataset is specifically designed to train deep learning models for precise instrument segmentation. Through validation of five models, we demonstrate the dataset’s value in improving segmentation accuracy under complex conditions, providing a foundation for future AI advancements in ESS.

Similar content being viewed by others

Background & Summary

Endoscopic spine surgery (ESS) is a minimally invasive surgical technique commonly applied to procedures including decompression of spinal nerves, removal of herniated discs, and spinal fusion1. This advanced surgical approach allows surgeons to operate with minimal disruption to surrounding tissues, resulting in less postoperative pain, faster recovery, reduced scarring, and shorter hospital stays compared to traditional open surgery2,3,4. However, the steep learning curve represents a significant obstacle to the widespread adoption of these techniques by surgeons5,6. The technical intricate nature of the surgical procedure, along with the limited operational space, requires surgeons to possess a high degree of precision and control when manipulating surgical instruments. Young surgeons often lack surgical experience, which can lead to various issues when managing patients with diverse pathological presentations. In particular, situations such as epidural hematoma or significant bleeding also pose serious risks to patient safety7. In recent years, the rapid advancement of artificial intelligence (AI) has driven innovation and development in disease diagnosis and surgical assistance systems based on endoscopic data8,9,10,11,12. Furthermore, the implementation of computer-assisted interventions can enhance navigation during surgery, automate image interpretation, and enable the operation of robotic-assisted tools13. Therefore, developing intelligent systems capable of real-time decision-making during surgery is a key direction for the future of endoscopic spinal surgery, holding great potential for enhancing the intelligence, safety and efficiency of procedures.

The automatic segmentation of surgical instrument boundaries is the first step in realizing the intelligent surgical assistance system for ESS. It is also a crucial prerequisite for efficient tracking and posture estimation of instruments during surgery. However, during the surgeries, surgeons often need to frequently switch instruments and adjust the position of the access port in real-time, which can lead to deformations in the instruments’ appearance within the field of view. Moreover, unlike laparoscopic surgery, ESS is performed in a fluid-irrigated environment. The diversity of surgical instruments and the uncertainties in the surgical environment (such as blood and bubble interference, tissue occlusion, and overexposure) pose greater challenges to the tracking and rapid adaptation capabilities of computer-assisted systems. Currently, deep learning algorithms represent the most advanced technologies for automatic instrument boundary segmentation in medical imaging14,15,16,17,18,19. These algorithms, such as UNet, CaraNet, CENet, nnU-Net and YOLOv11x, share key strengths in medical instrument segmentation by effectively capturing global information, offering versatility in training, and excelling in the precise segmentation of both large and small objects. Their combined advancements in context awareness and feature extraction make them particularly well-suited for the complex challenges of instrument segmentation in medical imaging17,20,21,22,23,24.

Large-scale, high-quality datasets are the cornerstone for developing successful deep learning models. Balu A et al.25 released a simulated minimally invasive spinal surgery video dataset (SOSpine), which includes corresponding surgical outcomes and annotated surgical instruments. This dataset was used to train neurosurgery residents through a validated minimally invasive spine surgery cadaveric dura repair simulator. However, there still lacks specialized and publicly accessible image datasets in the field of ESS. Therefore, we have created the first image dataset (Spine Endoscopic Atlas, SEA) of endoscopic surgical instruments based on real surgical procedures. The new dataset comprises a total of 48,510 images from two centers, covering both cervical and lumbar endoscopic surgeries involving both small and large channel procedures. We have segmented part of the surgical instrument boundaries and categorized them meticulously. A large amount of unsegmented image data provides abundant training material for semi-supervised learning. By using a small set of segmented and annotated images as supervision signals, the model can leverage the unsegmented images to automatically learn structural features, significantly reducing the annotation workload26,27,28. Moreover, by combining segmented and unsegmented data, the model can learn more diverse features, enhancing its ability to recognize surgical instruments under various conditions29. This utilization of unsegmented data helps prevent the model from overfitting to the limited annotated data, thereby improving its generalization performance when encountering new data. We believe that publishing this dataset will greatly assist researchers in enhancing existing algorithms, integrating data from multiple centers, and improving the intelligence of ESS more efficiently.

Method

Ethics statement

Shenzhen Nanshan People’s Hospital’s ethics committee (Ethics ID: ky-2024-101601) had granted a waiver of informed consent for this retrospective study. As all data were strictly anonymized after video export, with no involvement of any identifiable or sensitive patient information, the committee approved the use of the anonymized data for research purposes. Additionally, the approval allows for the open publication of the dataset.

Data collection

This study retrospectively collected endoscopic surgery videos of the cervical and lumbar spine from a total of 119 patients. All of the operations were performed by senior surgeons at two medical centers between January 1, 2022 and December 31, 2023. The video recordings were captured using standard endoscopic equipment from STORZ (IMAGE1 S) and L’CARE company, with all videos recorded in 1080 P/720 P at a frame rate of 60 frames per second. The endoscopes are from Joimax and Spinendos companies, respectively. To ensure the originality and completeness of the data, no form of preprocessing was performed before data storage. The video data were not subjected to denoising, cropping, color adjustments, or any other modifications, thus preserving all potentially important details during the surgery to provide the most authentic foundational data for subsequent image analysis and model training. All videos and images were automatically anonymized at the time of export from the recording devices and during the process of frame extraction. This procedure ensured that the final dataset contained no personally identifiable information and that individual participants could not be traced from the stored data.

Data processing, annotation and quality assessment

Image data

We created the SEA dataset using the collected video data. An expert with extensive experience in image processing selected segments from each video where the instruments appeared frequently. One frame per second was extracted from these segments, with priority given to selecting images that presented segmentation challenges. Data was excluded if no instruments appeared in the field of view. Each sample was stored as an independent entry in the corresponding folder.

The display of surgical instruments may vary under different perspectives. Specifically, the shape, size, contours, and visible parts of the instruments in the image may change due to variations in the endoscopic field of view. Thus, the images were preliminarily classified based on the size of the surgical working channel. Images where the instrument boundaries were continuous and clear were categorized as “Normal scenario”, while images where the instruments were obscured by issues like blood, bubbles, or tissue were classified as “Difficult scenario”. The six main instruments appearing in the dataset were further classified into designated folders. After classification, two experts reviewed the dataset repeatedly. It is worth noting that, despite efforts to minimize subjective errors through re-evaluation of the dataset, certain errors caused by lighting conditions (such as overexposure or underexposure) may still persist.

Segmentation of images

The segmentation process was performed using LabelMe (v5.0.2) and 3D Slicer (v5.0.2). Prior to the commencement of the annotation process, multiple preparatory meetings were conducted to thoroughly explain the annotation standards applicable to various scenarios. These sessions included detailed guidelines as well as hands-on practice with representative examples. Furthermore, feedback was provided to ensure the annotators achieved a consistent understanding of the criteria. In the initial stages of annotation, two annotators independently labeled a set of 200 images from the same dataset, followed by a consistency analysis of the annotation results. These annotations were then reviewed by two junior reviewers. Any annotations that did not meet the standards were returned for re-labeling until they were approved. Afterward, formal annotation began, with the annotators dividing the remaining tasks between them. Throughout the process, the two junior reviewers continued to assess the annotations, and when uncertain data was encountered, a senior reviewer conducted a final review to determine if any annotations needed to be returned for revision. The specific process flow can be seen in Fig. 1. It is particularly noteworthy that for instruments partially obscured by factors such as blood, tissue, or lighting issues, clinicians were still required to outline the full contours of the instruments as accurately as possible. For cases where most parts were unclear, the segmentation focused on the visible parts. Figure 2 illustrates examples of segmentation results for various types of surgical instruments.

Data statistics

Tables 1, 2 show the overview of data record and image dimensions in the dataset. The dataset comprises a total of 4,8510 images. These images come in three different dimension and are stored in both JPG and PNG formats. The total size of these image files is 9.99 GB (gigabyte). The segmentation mask files are stored in NRRD format only. The total size of all segmentation files is 0.08 GB.

Figure 3a shows the proportional relationship between the number of images in two different scenarios, with a clear bias towards images from difficult scenarios in the dataset. Regarding the issue of data imbalance, we did not employ any specific measure. If a model performs well only in normal scenarios, it may fall short in practical applications. By increasing the number of images from difficult scenarios, the model can gain more training data in these complex conditions, thereby enhancing its robustness and generalization capability.

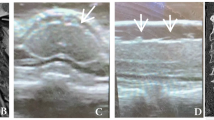

In a fluid-irrigated environment, instrument visibility issue may cause significant challenge. Figure 4 illustrates different types of challenging situations. When an instrument occupies less than 20% of the entire field of view, it is considered to have a small proportion. The dataset does not provide individual annotations for each specific difficulty, as these challenges often do not exist in isolation but rather arise from a combination of multiple factors occurring simultaneously, such as limited visibility, obstructed instrument operation, and difficulty in controlling bleeding.

Figure 3b reveals the distribution differences of various types of surgical instruments in the dataset. The number of images for grasping forceps and bipolar was higher, at 4,918 and 2,933 images, respectively. The number of images for drill and punch is slightly lower, which is related to their use only in specific steps during surgeries. The dataset contains only 407 images of scissors and 241 images of dissectors, indicating that these instruments are relatively less frequently used during surgeries.

Data description

The dataset is available at the Figshare repository30. The entire folder structure is shown in Fig. 5. In general, the image dataset is divided into two main folders: “classified” and “unclassified”. To increase the diversity of recording equipment and sample variety, data from two medical centers have been included. Within the “classified” folder, the data is further divided into “big channel” and “small channel” subfolders based on the size of the working channel (diameter = 6.0 mm and 3.7 mm). Additionally, the data is categorized according to the surgical region, with separate folders for the “cervical” and “lumbar” areas. Each patient is treated as an individual sample stored in a separate folder. Samples from medical center 1 and 2 are labeled as “P + Patient ID” and “T + Patient ID”, respectively. Inside each sample folder, images of instruments are meticulously classified and stored under the “Normal scenario” and “Difficult scenario” subfolders. Within the respective scenario folders, both the images and their corresponding annotation files are organized under instrument-specific subfolders (e.g., “bipolar”, “grasping forceps”, “drill”, “dissector”, “punch”, “scissor”). The naming convention for both the image and annotation files follows this format: “P/T + Patient ID + video index + frame index” (e.g., P7-2-0204). All unclassified data comes from medical center 1, providing researchers with a rich source of raw data that aids in the development of more precise algorithms for automatically identifying and classifying complex medical images and instrument features.

Technical Validation

we performed a consistency analysis on 200 samples annotated by two annotators in the early stages, as shown in Table 3. In Stage 1, we conducted an initial consistency assessment of the annotation results from the first 100 cases completed by the two annotators. The results were then reviewed by two junior reviewers, who returned them for revision as needed until all cases were approved. Upon completion, the process advanced to Stage 2, where the annotators annotated an additional 100 new cases. A second consistency assessment was then carried out on these results. The evaluation metrics included the Dice coefficient (DC) and Intersection over Union (IoU) values. The results showed that in Stage 1, the DC was 0.8743, and the IoU was 0.8021. In Stage 2, both metrics improved significantly, with the DC reaching 0.9550 and the IoU increasing to 0.9166, indicating enhanced consistency and reliability of the annotations. This two-stage validation approach not only helped identify and correct early inconsistencies in the annotation process but also facilitated continuous improvement in annotation quality. By implementing iterative reviews and quantitative assessments, we ensured that the final dataset maintained high standards of accuracy and consistency, which is critical for downstream tasks such as model training, evaluation, and clinical applicability.

To compare the performance of different deep learning algorithms in instrument segmentation within SEA, we trained and tested five deep learning models: UNet, CaraNet, CENet, nnU-Net and YOLOv11x. The selection of each model was based on its suitability for medical image segmentation and its proven performance in related tasks.

CENet

CENet is designed to capture both local and global features by integrating context encoding with convolutional operations20,31, making it highly effective for tasks where precise segmentation of instruments is required in complex environments. The context module encodes rich global information, which is particularly important for handling scenarios with occlusions, bubbles, or low contrast, as highlighted in the dataset.

U-Net

U-Net is one of the most widely used architectures for medical image segmentation due to its encoder-decoder structure with skip connections21,32. It excels at preserving spatial information during down-sampling, which is crucial for accurate segmentation of surgical instruments, even when only a small portion of the instrument is visible.

CaraNet

CaraNet is an innovative medical image segmentation model that significantly enhances segmentation accuracy and detail capture by incorporating a reversed attention mechanism and context information enhancement module22. The model excels at capturing image details and edges, making it suitable for medical image segmentation tasks in complex backgrounds.

nnU-Net

nnU-Net is an adaptive framework based on the U-Net architecture23,33, specifically designed for medical image segmentation tasks. Compared to traditional U-Net models, nnU-Net not only provides the model architecture but also includes a complete automated pipeline for preprocessing, training, inference, and post-processing, thereby simplifying the adaptation to different datasets.

YOLOv11x

YOLOv11x is the latest real-time object detection model in the YOLO series24, combining advanced architectural design and feature extraction capabilities. Compared to YOLOv8, YOLOv11 achieves higher mean accuracy on the COCO dataset and improves computational efficiency.

Validation procedure

The SEA was divided into a training set (80%) and two testing sets (20%), with the testing set further split into local (10%) and external (10%) testing. The training set was exclusively derived from Medical Center 1, whereas data from Medical Center 2 was reserved for external validation and excluded from the training phase. Each model was trained and tested according to this distribution. For training, we used Adam optimizer with a learning rate scheduler to adjust the learning rate dynamically. Cross-entropy loss combined with dice loss was used as the loss function to handle class imbalance, as some instrument categories (such as dissector and scissor) had fewer samples.

Performance metrics

The dice coefficient and intersection over union (IoU) are used to evaluate the performance of the five deep learning models in the task of instrument segmentation in SEA. Both metrics are used to measure the similarity between the predicted results and the ground truth labels, with higher values indicating better predictive performance. The dice coefficient is more effective in handling class imbalance issues, while IoU more intuitively reflects the proportion of the overlap area relative to the union.

Results

On the local test set, nnU-Net performed the best, with a dice coefficient of 0.9753 and an IoU of 0.9531, slightly higher than the other models. On the external test set, nnU-Net’s dice coefficient and IoU still remained at relatively high levels, 0.9376 and 0.8966, demonstrating better generalization ability. While U-Net, CaraNet and YOLOv11x showed a significant decline in performance. The specific results can be found in Table 4, while Figs. 6, 7 illustrate the automatic segmentation quality of different models.

Overall, the model’s performance declines on external test set, especially with the U-Net model, revealing that when the training data comes from a single center or has a concentrated distribution, the model’s performance significantly deteriorates when facing data from different sources. It is obvious that the new environment poses a challenge to the model’s generalization ability. Consequently, the SEA demonstrates significant value in improving models and advancing the intelligent development of minimally invasive spinal surgery in the future.

Usage Notes

This study introduces the first comprehensive instrument dataset for endoscopic spine surgery (ESS), designed to accelerate the development of AI-driven solutions in minimally invasive spinal interventions. The dataset is uniquely positioned to support a spectrum of downstream tasks critical to intelligent surgical systems, including real-time tool tracking, context-aware navigation, procedural action recognition, safety landmark detection, and 3D pose estimation of instruments. These capabilities address fundamental challenges in ESS, such as maintaining spatial awareness in confined anatomical spaces and mitigating risks associated with instrument-tissue interactions.

The clinical significance of this resource extends beyond conventional instrument segmentation. By providing high-fidelity annotations across diverse surgical scenarios (cervical/lumbar procedures, variable channel sizes), the dataset enables systematic investigation of AI’s role in enhancing surgical precision—particularly in reducing positional errors during decompression—and improving safety through early detection of hazardous instrument trajectories. Furthermore, the inclusion of temporal and environmental variability (fluid artifacts, tissue occlusion patterns) facilitates the development of robust models capable of adaptive performance under realistic surgical conditions.

Future efforts will be implemented in phases to expand the dataset’s clinical and technical impact. We plan to collaborate with at least three tertiary hospitals to incorporate their ESS surgical videos and clinical data, covering diverse regions (North and South China), surgical teams (senior experts and residents), and more variety of surgical instruments (such as suturing devices, ultrasonic bone cutters, etc.), thereby enhancing the dataset’s diversity and representativeness. Building upon existing annotations, we will introduce additional surgical phase labels (dural incision, nerve decompression) to support the development of surgical risk prediction models. These efforts will position the dataset as a critical resource for advancing intelligent ESS systems, enhancing surgical precision, safety, and efficiency worldwide.

Data availability

The dataset generated and analyzed during the current study has been deposited in the Figshare repository and is publicly available at https://doi.org/10.6084/m9.figshare.27109312.

Code availability

The dataset can be used without any additional code. All tools in the experiments are publicly accessible and available for use. The code for reading and converting segmentation masks is available and can be accessed within the second-level folder of the dataset30. And the code for models used in technical validation can be found at the following links respectively:

CENet: https://github.com/Guzaiwang/CE-Net

CaraNet: https://github.com/AngeLouCN/CaraNet

U-Net: https://github.com/Xzp1702/UNet-Wsj

References

Mayer, H. M. & Brock, M. Percutaneous endoscopic discectomy: surgical technique and preliminary results compared to microsurgical discectomy. Journal of neurosurgery 78, 216–225, https://doi.org/10.3171/jns.1993.78.2.0216 (1993).

Liu, Y. et al. Interlaminar Endoscopic Lumbar Discectomy Versus Microscopic Lumbar Discectomy: A Preliminary Analysis of L5-S1 Lumbar Disc Herniation Outcomes in Prospective Randomized Controlled Trials. Neurospine 20, 1457–1468, https://doi.org/10.14245/ns.2346674.337 (2023).

Ruetten, S., Komp, M., Merk, H. & Godolias, G. Full-endoscopic interlaminar and transforaminal lumbar discectomy versus conventional microsurgical technique: a prospective, randomized, controlled study. Spine 33, 931–939, https://doi.org/10.1097/BRS.0b013e31816c8af7 (2008).

Ruetten, S., Komp, M., Merk, H. & Godolias, G. Recurrent lumbar disc herniation after conventional discectomy: a prospective, randomized study comparing full-endoscopic interlaminar and transforaminal versus microsurgical revision. Journal of spinal disorders & techniques 22, 122–129, https://doi.org/10.1097/BSD.0b013e318175ddb4 (2009).

Lewandrowski, K. U. et al. Difficulties, Challenges, and the Learning Curve of Avoiding Complications in Lumbar Endoscopic Spine Surgery. International journal of spine surgery 15, S21–s37, https://doi.org/10.14444/8161 (2021).

Saravi, B. et al. Artificial intelligence-based analysis of associations between learning curve and clinical outcomes in endoscopic and microsurgical lumbar decompression surgery. European spine journal: official publication of the European Spine Society, the European Spinal Deformity Society, and the European Section of the Cervical Spine Research Society, https://doi.org/10.1007/s00586-023-08084-7 (2023).

Zhou, C. et al. Unique Complications of Percutaneous Endoscopic Lumbar Discectomy and Percutaneous Endoscopic Interlaminar Discectomy. Pain physician 21, E105–e112 (2018).

Xu, H. et al. Artificial Intelligence-Assisted Colonoscopy for Colorectal Cancer Screening: A Multicenter Randomized Controlled Trial. Clinical gastroenterology and hepatology: the official clinical practice journal of the American Gastroenterological Association 21, 337–346.e333, https://doi.org/10.1016/j.cgh.2022.07.006 (2023).

Hsiao, Y. J. et al. Application of artificial intelligence-driven endoscopic screening and diagnosis of gastric cancer. World journal of gastroenterology 27, 2979–2993, https://doi.org/10.3748/wjg.v27.i22.2979 (2021).

Sumiyama, K., Futakuchi, T., Kamba, S., Matsui, H. & Tamai, N. Artificial intelligence in endoscopy: Present and future perspectives. Digestive endoscopy: official journal of the Japan Gastroenterological Endoscopy Society 33, 218–230, https://doi.org/10.1111/den.13837 (2021).

Wang, B. et al. Development of Artificial Intelligence for Parathyroid Recognition During Endoscopic Thyroid Surgery. The Laryngoscope 132, 2516–2523, https://doi.org/10.1002/lary.30173 (2022).

Esmaeili, N. et al. Contact Endoscopy - Narrow Band Imaging (CE-NBI) data set for laryngeal lesion assessment. Scientific data 10, 733, https://doi.org/10.1038/s41597-023-02629-7 (2023).

Chadebecq, F., Lovat, L. B. & Stoyanov, D. Artificial intelligence and automation in endoscopy and surgery. Nature reviews. Gastroenterology & hepatology 20, 171–182, https://doi.org/10.1038/s41575-022-00701-y (2023).

Yang, L., Gu, Y., Bian, G. & Liu, Y. An attention-guided network for surgical instrument segmentation from endoscopic images. Computers in biology and medicine 151, 106216, https://doi.org/10.1016/j.compbiomed.2022.106216 (2022).

Colleoni, E., Psychogyios, D., Van Amsterdam, B., Vasconcelos, F. & Stoyanov, D. SSIS-Seg: Simulation-Supervised Image Synthesis for Surgical Instrument Segmentation. IEEE transactions on medical imaging 41, 3074–3086, https://doi.org/10.1109/tmi.2022.3178549 (2022).

Chen, J., Li, M., Han, H., Zhao, Z. & Chen, X. SurgNet: Self-Supervised Pretraining With Semantic Consistency for Vessel and Instrument Segmentation in Surgical Images. IEEE transactions on medical imaging 43, 1513–1525, https://doi.org/10.1109/tmi.2023.3341948 (2024).

Rueckert, T., Rueckert, D. & Palm, C. Methods and datasets for segmentation of minimally invasive surgical instruments in endoscopic images and videos: A review of the state of the art. Computers in biology and medicine 169, 107929, https://doi.org/10.1016/j.compbiomed.2024.107929 (2024).

Zhao, Z. et al. Anchor-guided online meta adaptation for fast one-Shot instrument segmentation from robotic surgical videos. Medical image analysis 74, 102240, https://doi.org/10.1016/j.media.2021.102240 (2021).

Jha, D. et al. in 2021 IEEE EMBS International Conference on Biomedical and Health Informatics (BHI). 1–4.

Gu, Z. et al. CE-Net: Context Encoder Network for 2D Medical Image Segmentation. IEEE transactions on medical imaging 38, 2281–2292, https://doi.org/10.1109/tmi.2019.2903562 (2019).

Shvets, A. A., Rakhlin, A., Kalinin, A. A. & Iglovikov, V. I. in 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA). 624–628.

Lou, A., Guan, S. & Loew, M. CaraNet: context axial reverse attention network for segmentation of small medical objects. Journal of medical imaging (Bellingham, Wash.) 10, 014005, https://doi.org/10.1117/1.Jmi.10.1.014005 (2023).

Isensee, F. et al. in Medical Image Computing and Computer Assisted Intervention – MICCAI 2024. (eds M. G., Linguraru et al.) 488–498 (Springer Nature Switzerland).

Khanam, R. & Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements.

Balu, A. et al. Simulated outcomes for durotomy repair in minimally invasive spine surgery. Scientific data 11, 62, https://doi.org/10.1038/s41597-023-02744-5 (2024).

Cheplygina, V., de Bruijne, M. & Pluim, J. P. W. Not-so-supervised: A survey of semi-supervised, multi-instance, and transfer learning in medical image analysis. Medical image analysis 54, 280–296, https://doi.org/10.1016/j.media.2019.03.009 (2019).

Huynh, T., Nibali, A. & He, Z. Semi-supervised learning for medical image classification using imbalanced training data. Computer methods and programs in biomedicine 216, 106628, https://doi.org/10.1016/j.cmpb.2022.106628 (2022).

Wu, Y. et al. Mutual consistency learning for semi-supervised medical image segmentation. Medical image analysis 81, 102530, https://doi.org/10.1016/j.media.2022.102530 (2022).

Chaitanya, K. et al. Semi-supervised task-driven data augmentation for medical image segmentation. Medical image analysis 68, 101934, https://doi.org/10.1016/j.media.2020.101934 (2021).

Xu, Z. P. et al. Spine endoscopic atlas: an open-source dataset for surgical instrument segmentation. figshare https://doi.org/10.6084/m9.figshare.27109312 (2025).

Zhou, Q. et al. Contextual ensemble network for semantic segmentation. Pattern Recognition 122, 108290, https://doi.org/10.1016/j.patcog.2021.108290 (2022).

Ronneberger, O., Fischer, P. & Brox, T. in Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. (eds Navab, N., Hornegger, J, Wells, W. M. & Frangi, A. F.) 234–241 (Springer International Publishing).

Isensee, F., Jaeger, P. F., Kohl, S. A. A., Petersen, J. & Maier-Hein, K. H. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nature Methods 18, 203–211, https://doi.org/10.1038/s41592-020-01008-z (2021).

Acknowledgements

This work is funded by the Nanshan District Health Science and Technology Project (Grant No. NS2023002; NS2023044); the Nanshan District Health Science and Technology Major Project (Grant No. NSZD2023023; NSZD2023026); the Medical Scientific Research Foundation of Guangdong Province of China (Grant No. A2023195) and the Science, Technology, and Innovation Commission of Shenzhen Municipality (Grant No. JCYJ20230807115918039).

Author information

Authors and Affiliations

Contributions

Conceptualization and design: Xiang Liao, Guoxin Fan and Yongxian Huang; Acquisition and classification of data: Zhipeng Xu, Hong Wang, Shangjie Wu and Jax Luo; Data analysis and/or interpretation: Zhipeng Xu, Jianjin Zhang and Yanhong Chen, Guanghui Yue and Zhouyang Hu; Drafting of manuscript and/or critical revision: Zhipeng Xu, Guoxin Fan and Xiang Liao; Approval of final version of manuscript: Zhipeng Xu, Xiang Liao, Guoxin Fan and Jax Luo.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Xu, Z., Wang, H., Huang, Y. et al. Spine endoscopic atlas: an open-source dataset for surgical instrument segmentation. Sci Data 12, 1611 (2025). https://doi.org/10.1038/s41597-025-05897-7

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41597-025-05897-7