Abstract

Diabetic retinopathy (DR) is a frequent vascular complication of diabetes mellitus and remains a leading cause of vision loss worldwide. Microaneurysm (MA) is usually the first symptom of DR that leads to blood leakage in the retina. Periodic detection of MAs will facilitate early detection of DR and reduction of vision injury. In this study, we proposed a novel model for the detection of MAs in fluorescein fundus angiography (FFA) images based on the improved FC-DenseNet, MAs-FC-DenseNet. FFA images were pre-processed by the Histogram Stretching and Gaussian Filtering algorithm to improve the quality of FFA images. Then, MA regions were detected by the improved FC-DenseNet. MAs-FC-DenseNet was compared against other FC-DenseNet models (FC-DenseNet56 and FC-DenseNet67) or the end-to-end models (DeeplabV3+ and PSPNet) to evaluate the detection performance of MAs. The result suggested that MAs-FC-DenseNet had higher values of evaluation metrics than other models, including pixel accuracy (PA), mean pixel accuracy (MPA), precision (Pre), recall (Re), F1-score (F1), and mean intersection over union (MIoU). Moreover, MA detection performance for MAs-FC-DenseNet was very close to the ground truth. Taken together, MAs-FC-DenseNet is a reliable model for rapid and accurate detection of MAs, which would be used for mass screening of DR patients.

Similar content being viewed by others

Introduction

Retinal microaneurysms (MAs) are defined as the small swelling of tiny blood vessels, which mainly locate in the inner nuclear layer and deep capillary layer1. MAs often occur as the early clinical signs of retinal diseases, such as diabetic retinopathy (DR) and retinal vein occlusions. The number and turnover of retinal MAs are considered as the indicators to assess the presence, severity, and progression risk of retinopathy. Thus, periodic detection of MAs is required for the early diagnosis of retinopathy. MAs can be identified by several imaging technologies, including color fundus photography, fundus fluorescein angiography (FFA), and optical coherence tomography angiography (OCTA). Clinically, FFA is well-recognized as an important standard to visualize retinal vasculature and is routinely used to describe the subtle vascular alterations2.

FFA is highly sensitive and demonstrates MAs as the hyperfluorescent dots in the early phase. It is an important imaging modality, which can capture images after the intravenous injection of fluorescein dye3. With the increasing amount of FFA images that require for analysis, manual detection and quantification of MAs have become the labor-intensive and time-consuming jobs4. In addition, manual detection of MAs is subjective and error-prone, which may cause poor reproducibility5. Thus, an automated detection method is urgently required for the accurate detection of MAs in FFA images.

Recently, the development of MA detection methods have become a hot topic in the field of ophthalmic study. Some models based on the neural network have been used for MA detection. CNN and ResNet could obtain higher-level features from the upper layer and give up the features of the lower layer, but these model may loss parts of small-size targets6,7. DeeplabV3+and PSPNet use spatial pyramid pooling module to further extract contextual information and improve the detection accuracy of small-size targets, but they have misdetection and missed detection problems for MA detection8,9. Some improvements on neural network have been used for MA detection. Mazlan et al. proposed a detection method for using H-maxima and thresholding technique10. Sarhan et al. proposed a two-stage deep learning approach for MA segmentation using the multiple scales of the input with selective sampling and embedding triplet loss11. Kou et al. proposed an architecture for U-Net obtained by combining the deep residual model and recurrent convolutional operations into U-Net12. Reguant et al. proposed an unsupervised method for DR detection based on CNN13. González-Gonzalo et al. proposed a deep visualization method based on the unsupervised selective inpainting14. However, MAs usually have low contrast and tiny size. The pixels of MAs are often similar as the pixels of blood vessels. Thus, it still required to develop novel methods to further improve the detection accuracy of MAs.

In this study, we proposed a novel model for detecting MAs in FFA images based on the improved FC-DenseNet, MAs-FC-DenseNet. This model showed advantage over other FC-DenseNet models (FC-DenseNet56 and FC-DenseNet67) or the end-to-end models (DeeplabV3+and PSPNet) for MA detection. MAs-FC-DenseNet may become a promising method for rapid and accurate detection of MAs during mass screening of DR patients.

Materials and methods

Proposed MA detection model, MAs-FC-DenseNet

Normal FFA image and FFA image with MAs are shown in Fig. 1. MAs are the small white round spots in FFA image as shown in Fig. 1B.

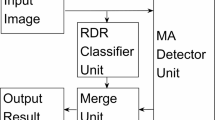

Figure 2 shows the flowchart of MAs-FC-DenseNet model, including the pre-processing of FFA images by the Histogram Stretching and Gaussian Filtering and MA detection by the improved FC-DenseNet.

Pre-processing of FFA images by the histogram stretching and Gaussian filtering

FFA images were pre-processed to improve their contrast and reduce image noises. The contrast between MAs and the background was enhanced by the Histogram stretching. Histogram stretching could stretch the values of pixel from 0 to 25515,16,17. The noises of FFA images were then reduced by the Gaussian Filtering. Gaussian Filtering could remove the surrounding noises in the non-uniform retinal images18,19,20.

Histogram Stretching is expressed as

where Inew is the new transformed image; Imax and Imin are the largest and smallest possible grey level value in the original image, respectively. Gmax and Gmin are the largest and smallest possible grey level value in the transformed image, respectively.

Gaussian Filtering is expressed as

where σ2 is the variance of Gaussian Filtering; l is the size of the filter kernel.

MA detection by the improved FC-DenseNet

At this step, MAs were detected by the improved Fully Convolutional DenseNet (FC-DenseNet)21. FC-DenseNet contains the down sampling path for extracting sparse semantic features and the up sampling path for restoring original resolution. The down sampling path consists of dense block (DB) layer and transition down (TD) layer22. The up sampling path consists of DB layer and transition up (TU) layer. DB layer is composed of batch normalization (BN)23, ReLU24, 3 × 3 convolution, and dropout with probability p = 0.2. TD layer is composed of BN, ReLU, 1 × 1 convolution, dropout with probability p = 0.2 and 2 × 2 maximum pooling. TU layer includes 3 × 3 transposed convolution with stride 2.

As shown in Fig. 2, the feature maps from the down sampling path were concatenated with the corresponding feature maps in the up sampling path. The connectivity pattern in the up sampling and the down sampling paths were different. In the down sampling path, the input to a dense block was concatenated with its output, leading to a linear growth of the number of feature maps, whereas in the up sampling path, it was not.

Due to the closeness of MAs to the vessels and low number of pixels belonging to MAs, it is difficult to accurately detect MAs. Here, we replaced the cross entropy loss of FC-DenseNet with the focal loss25. The focal loss could decrease the weight of the background and increase the weight of MAs. Thus, this model could increase the detection preformation of MA regions.

Here, the focal loss function is expressed as

where pt is probability of correct prediction for different categories. αt and γ ≥ 0 are adjustable hyperparameters, which can be used to control the sharing weight of different samples to the total loss.

Datasets

FFA image cohort was constructed with the collaboration of the Affiliated Eye Hospital of Nanjing Medical University, the first Affiliated Hospital of Soochow University, and Huai'An First People's Hospital. The dataset contains 1200 FFA images (768 × 868 pixels) from 1200 eyes of DR patients (age range 31–81 years old) who underwent FFA in three hospitals from August 2015 to December 2019. Each hospital provided 400 images. The operations were performed by the experienced clinicians using the Heidelberg Retina Angiograph (Heidelberg Engineering, Heidelberg, Germany). The dataset did not include the blurry or overexposed FFA images caused by the environmental factors or equipment materials. This study was approved by the Ethics Committee of the Affiliated Eye Hospital, Nanjing Medical University. The procedures adhered to the tenets of the Declaration of Helsinki. Written informed consents were obtained from all participants. Subsets of 960, 120 and 120 FFA images were randomly selected for training, validation and testing, respectively. Each FFA image was individually labeled by 3 experienced clinicians with more than 10-year clinical working experiences. Due to the limited human energy and occasional blurred images, some artificial deviations would inevitably occur during MA label. For these images, thorough rounds of discussion and adjudication were needed until full consensus was reached.

Evaluation metrics

Six metrics, including pixel accuracy (PA), mean pixel accuracy (MPA), Precision (Pre), Recall (Re), F1-score (F1), and mean intersection over union (MIoU) were calculated to estimate the detection performance of MAs26,27,28,29.

TP, FP, and FN denote the true positive region, false positive region, and false negative region, respectively. k indicates the labeling results of different classes, where k = 0 expressed as background class and k = 1 as MAs class. pij is the number of pixels of class i predicted to belong to class j, among \(i,j \in \left[ {0,1} \right]\). PA is the overall pixel accuracy. MPA is the average pixel accuracy of MAs and background. Pre and Re are the proportion of real MAs in the samples predicted as MAs and the proportion of correct predictions in all MAs, respectively. F1-score (F1) is a balanced metric and determined by the precision and recall. Mean intersection over union (MIoU) is an accuracy assessment metric applied to measure the similarity between ground truth and prediction.

Implementation

The hardware configuration used for the experiment were shown below: Ubuntu 16.04.4, 2GPUs, GPU NVIDIA Tesla P100 PCIE, and 1 GPU memory (16 GB). Software environment was Deep-learning framework Tensorflow1.8.0 and programming language python 3.6.

Results

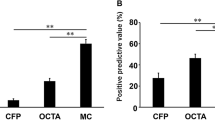

The original FFA images, the detection results by MAs-FC-DenseNet, and the ground truth by the clinicians were shown in Fig. 3. To evaluate the detection performance for MAs, three comparison experiments were conducted. Experiment 1 was an ablation experiment. In experiment 2, MAs-FC-DenseNet was compared against other FC-DenseNet models including FC-DenseNet56 and FC-DenseNet67 to compare MA detection performance. In experiment 3, MAs-FC-DenseNet was compared against other end-to-end models, including DeeplabV3+ and PSPNet, to compare MA detection performance.

Ablation experiment

Figure 4 and Table 1 showed the comparison result of MA detection performance in the ablation studies, including FC-DenseNet103 with pre-processing (pre-processing + FC-DenseNet103), the improved FC-DenseNet103 without pre-processing (FC-DenseNet103 + Focal loss), and the proposed MAs-FC-DenseNet.

As shown in Fig. 4 and Table 1, the models of FC-DenseNet103 with pre-processing or improved FC-DenseNet103 without pre-processing led to some missed and false detection of MAs. By contrast, MAs-FC-DenseNet reduced the missed and false detection regions and significantly enhanced the values of PA, MPA, Pre, Re, F1, and MIoU.

Evaluation of MA detection performance of MAs-FC-DenseNet by comparing against other FC-DenseNet models

MAs-FC-DenseNet was compared against other FC-DenseNet models, including FC-DenseNet56 and FC-DenseNet67, to evaluate the detection performance of MAs.

As shown in Fig. 5 and Table 2, some MA regions were missed and falsely detected in FC-DenseNet56 and FC-DenseNet67 model. MA detection result of proposed MAs-FC-DenseNet achieved greater values of PA, MPA, Pre, Re, F1, and MIoU, which were 99.97% (0.01↑), 94.19% (2.82↑), 88.40% (5.67↑), 89.70% (8.76↑), 88.98% (7.86↑), and 90.14% (5.80↑), respectively.

Evaluation of MA detection performance of MAs-FC-DenseNet by comparing against other end-to-end models

MAs-FC-DenseNet was compared against other end-to-end models, including DeeplabV3+ and PSPNet, to evaluate the detection performance of MAs.

As shown in Fig. 6 and Table 3, the DeeplabV3+ model could not distinguish the boundaries of MAs and normal regions, which led to some false detection of MAs. As for PSPNet model, some MA regions were missed. MA detection result of proposed MAs-FC-DenseNet was very close to the ground truth. Moreover, MAs-FC-DenseNet had grater values of PA, MPA, Pre, Re, F1, and MIoU than that of other models.

Discussion

Microaneurysm (MA) is recognized as the first symptom of DR that leads to retinal blood injury. Detection of MAs within FFA images facilitates early DR detection and prevents vision loss30. However, MA is extremely small and its contrast to the surrounding background is very subtle, which make MA detection challenging. In this study, we proposed a novel model, MAs-FC-DenseNet, for the detection of MAs in FFA images. FFA images were pre-processed to enhance image quality by the Histogram Stretching and Gaussian Filtering. Improved FC-DenseNet model was then used to detect the deep features of MAs and enhance the detection accuracy of MAs.

Six metrics were used to evaluate the accuracy for MA detection, including pixel accuracy (PA), mean pixel accuracy (MPA), Precision (Pre), Recall (Re), F1-score (F1), and mean intersection over union (MIoU). The values of these metrics of MAs-FC-DenseNet were significantly greater than that of other deep learning network models, including DeeplabV3+ and PSPNet, which could reach to 99.97%, 94.19%, 88.40%, 89.70%, 88.98%, and 90.14%, respectively. Moreover, the detection results of MAs-FC-DenseNet were very close to the ground truth. Thus, MAs-FC-DenseNet is a suitable model for diabetic retinopathy screening based on MA detection result.

DR is a complex, progressive, and heterogenous ocular disease associated with diabetes duration. It is generally recognized as a vascular disease like other diabetes-related diseases. Signs of DR contain the lesions such as MAs, hemorrhages, and yellowish or bright spots such as hard and soft exudates31. This model was designed based on the feature of MAs, but the impacts of other lesions on MA detection were not considered. In addition, DR can be classified as mild non-proliferative DR (NPDR), moderate NPDR, severe NPDR, and proliferative DR (PDR) according to disease severity32. Our proposed model did not consider the severity DR. The degree of DR severity should be considered as a contributing factor for MA detection in the future study.

Taken together, this study proposed a two-step model, MAs-FC-DenseNet, for the detection of MAs in FFA images, including pre-processing of FFA images by the Histogram Stretching and Gaussian Filtering and detection of MAs by the improved FC-DenseNet. This model will become a promising method for early diagnosis of diabetic retinopathy with a competitive accuracy. In the future, this model should be improved to embrace more feature-learning capacities, as well as some knowledge regarding retinal geometry and other characters.

References

Couturier, A. et al. Capillary plexus anomalies in diabetic retinopathy on optical coherence tomography angiography. Retina 35, 2384–2391 (2015).

Wu, B. et al. Automatic detection of microaneurysms in retinal fundus images. Comput. Med. Imaging Graph. 55, 106–112 (2017).

Spaide, R. F., Klancnik, J. M. Jr. & Cooney, M. J. Retinal vascular layers imaged by fluorescein angiography and optical coherence tomography angiography. JAMA Ophthalmol. 133(1), 45–50 (2015).

Bhaskaranand, M. et al. Automated diabetic retinopathy screening and monitoring using retinal fundus image analysis. J. Diabetes Sci. Technol. 10, 254–261 (2016).

Dai, L. et al. Clinical report guided retinal microaneurysm detection with multi-sieving deep learning. IEEE Trans. Med. Imaging. 37, 1149–1161 (2018).

Szegedy, C., et al. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 1–9 (2015).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 770–778 (2016).

Li, T. et al. Diagnostic assessment of deep learning algorithms for diabetic retinopathy screening. Inf. Sci. 501, 511–522 (2019).

Shaaban, A. M., Salem, N. M. & Al-atabany, W. I. A semantic-based scene segmentation using convolutional neural networks. AEU-Int. J. Electron. Commun. 125, 9 (2020).

Mazlan, N., Yazid, H., Arof, H. & Isa, H. M. Automated microaneurysms detection and classification using multilevel thresholding and multilayer perceptron. J. Med. Biol. Eng. 40, 292–306 (2020).

Sarhan, M. H., Albarqouni, S., Yigitsoy, M., Navab, N. & Abouzar, E. Microaneurysms segmentation and diabetic retinopathy detection by learning discriminative representations. IET Image Process. 14, 4571–4578 (2020).

Kou, C., Li, W., Liang, W., Yu, Z. & Hao, J. Microaneurysms segmentation with a U-Net based on recurrent residual convolutional neural network. J. Med. Imaging. 6, 025008 (2019).

Reguant, R., Brunak, S. & Saha, S. Understanding inherent image features in CNN-based assessment of diabetic retinopathy. Sci. Rep. 11, 1–12 (2021).

González-Gonzalo, C., Liefers, B., van Ginneken, B. & Sánchez, C. I. Iterative augmentation of visual evidence for weakly-supervised lesion localization in deep interpretability frameworks: Application to color fundus images. IEEE Trans. Med. Imaging. 39, 3499–3511 (2020).

Luo, W. L., Duan, S. Q. & Zheng, J. W. Underwater image restoration and enhancement based on a fusion algorithm with color balance, contrast optimization, and histogram stretching. IEEE Access. 9, 31792–31804 (2021).

Langarizadeh, M. et al. Improvement of digital mammogram images using histogram equalization, histogram stretching and median filter. J. Med. Eng. Technol. 35, 103–108 (2011).

Kaur, H. & Sohi, N. A study for applications of histogram in image enhancement. Int. J. Engin. Sci. 6, 59–63 (2017).

Nasor, M. & Obaid, W. Segmentation of osteosarcoma in MRI images by K-means clustering, Chan-Vese segmentation, and iterative Gaussian filtering. IET Image Process. 15, 1310–1318 (2021).

Mustafa, W. A., Yazid, H. & Yaacob, S. B. Illumination correction of retinal images using superimpose low pass and Gaussian filtering. In 2015 2nd International Conference on Biomedical Engineering (ICoBE) 1–4 (IEEE, 2015).

Mehrotra, A., Tripathi, S., Singh, K. K., & Khandelwal, P. Blood vessel extraction for retinal images using morphological operator and KCN clustering. In 2014 IEEE International Advance Computing Conference (IACC) 1142–1146 (IEEE, 2014).

Guo, X. J., Chen, Z. H. & Wang, C. Y. Fully convolutional DenseNet with adversarial training for semantic segmentation of high-resolution remote sensing images. J. Appl. Remote Sens. 15, 12 (2021).

Brahimi, S., Ben Aoun, N., Benoit, A., Lambert, P. & Ben Amar, C. Semantic segmentation using reinforced fully convolutional densenet with multiscale kernel. Multimed. Tools Appl. 78, 22077–22098 (2019).

Lee, S. & Lee, C. Revisiting spatial dropout for regularizing convolutional neural networks. Multimed. Tools Appl. 79, 34195–34207 (2020).

Soltani, A. & Nasri, S. Improved algorithm for multiple sclerosis diagnosis in MRI using convolutional neural network. IET Image Process. 14, 4507–4512 (2020).

Romdhane, T. F., Alhichri, H., Ouni, R. & Atri, M. Electrocardiogram heartbeat classification based on a deep convolutional neural network and focal loss. Comput. Biol. Med. 123, 13 (2020).

Deng, H. B., Xu, T. Y., Zhou, Y. C. & Miao, T. Depth density achieves a better result for semantic segmentation with the Kinect system. Sensors. 20, 14 (2020).

Zhu, X. L., Cheng, Z. Y., Wang, S., Chen, X. J. & Lu, G. Q. Coronary angiography image segmentation based on PSPNet. Comput. Methods Programs Biomed. 200, 8 (2021).

Chicco, D. & Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genomics 21, 1–13 (2020).

Li, Z. & Xia, Y. Deep reinforcement learning for weakly-supervised lymph node segmentation in CT images. IEEE J. Biomed. Health Inform. 25, 774–783 (2021).

Bilal, A., Sun, G. & Mazhar, S. Survey on recent developments in automatic detection of diabetic retinopathy. J. Fr. Ophthamol. 44, 420–440 (2021).

Lu, J. et al. Association of time in range, as assessed by continuous glucose monitoring, with diabetic retinopathy in type 2 diabetes. Diabetes Care 41(11), 2370–2376 (2018).

Heng, L. Z. et al. Diabetic retinopathy: pathogenesis, clinical grading, management and future developments. Diabet. Med. 30(6), 640–650 (2013).

Acknowledgements

This study was generously supported by the grants from the National Natural Science Foundation of China (Grant Nos. 81970809 and 81570859), grant from the Medical Science and Technology Development Project Fund of Nanjing (Grant No ZKX1705), and grant from innovation team Project Fund of Jiangsu Province (No. CXTDB2017010).

Author information

Authors and Affiliations

Contributions

B.Y. and Q.J. were responsible for the conceptualization, data collection. Z.-HW. and X.-K.L. were responsible for the experiment design and manuscript writing; M.-D.Y. and J.L. conducted the data collection and data entry; B.Y. and Z.-H.W. were responsible for overall supervision and manuscript revision.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, Z., Li, X., Yao, M. et al. A new detection model of microaneurysms based on improved FC-DenseNet. Sci Rep 12, 950 (2022). https://doi.org/10.1038/s41598-021-04750-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-021-04750-2

This article is cited by

-

Convolutional block attention gate-based Unet framework for microaneurysm segmentation using retinal fundus images

BMC Medical Imaging (2025)

-

Predicting malignancy in breast lesions: enhancing accuracy with fine-tuned convolutional neural network models

BMC Medical Imaging (2024)

-

Scheme evaluation method of coal gangue sorting robot system with time-varying multi-scenario based on deep learning

Scientific Reports (2024)

-

Dual spin max pooling convolutional neural network for solar cell crack detection

Scientific Reports (2023)