Abstract

Bimodal cochlear implant (CI) listeners have difficulty utilizing spatial cues to segregate competing speech, possibly due to tonotopic mismatch between the acoustic input frequency and electrode place of stimulation. The present study investigated the effects of tonotopic mismatch in the context of residual acoustic hearing in the non-CI ear or residual hearing in both ears. Speech reception thresholds (SRTs) were measured with two co-located or spatially separated speech maskers in normal-hearing adults listening to acoustic simulations of CIs; low-frequency acoustic information was available in the non-CI ear (bimodal listening) or in both ears. Bimodal SRTs were significantly better with tonotopically matched than mismatched electric hearing for both co-located and spatially separated speech maskers. When there was no tonotopic mismatch, residual acoustic hearing in both ears provided a significant benefit when maskers were spatially separated, but not when they were co-located. The simulation data suggest that hearing preservation in the implanted ear for bimodal CI listeners may significantly benefit utilization of spatial cues to segregate competing speech, especially when the residual acoustic hearing is comparable across the two ears. Also, the benefits of bilateral residual acoustic hearing may be best ascertained for spatially separated maskers.


Introduction

For many cochlear implant (CI) users, relaxed candidacy criteria, modified electrode designs, and improved surgical techniques have preserved residual acoustic hearing in non-implanted and/or implanted ears. Residual acoustic hearing provides detailed low-frequency information that can greatly benefit CI users under challenging listening conditions. For listeners with one CI, combined acoustic and electric hearing can be categorized into three listening conditions: (1) Bimodal: electric stimulation in one ear and acoustic stimulation in the other ear; (2) EAS: electric stimulation and acoustic stimulation in the same ear; and (3) BiEAS: electric stimulation in one ear and acoustic stimulation in both ears.

Bimodal listening has been shown to significantly improve speech and music perception relative to performance with the CI alone1,2,3,4,5,6,7,8,9,10,11,12,13. However, some CI users do not experience significant benefits with Bimodal listening14,15,16 and others even experience interference11,13,17,18. Performance with EAS has been shown to be better than that with the CI alone, with even greater benefits observed for BiEAS19. The data from these previous studies indicate that the benefits of combined acoustic and electric hearing can be highly variable, possibly due to differences among individual CI users’ ability to combine acoustic and electric stimulation patterns within or across ears20.

In addition to providing useful low-frequency temporal fine-structure information, residual acoustic hearing in the non-implanted ear may provide some amount of binaural hearing in combination with the implanted ear. This low-frequency binaural hearing may be useful for sound localization and segregation of sound sources in space. However, for bimodal CI users, residual hearing in the non-implanted ear seems to have limited benefit for sound localization21 and segregation of spatially separated target and masker speech22. Dorman et al.21 found that sound localization scores were near chance level for bimodal CI users. Similarly, recent studies found little, no, or even negative spatial release from masking (SRM, defined as the performance difference in recognition of target speech between spatially separated and co-located maskers) for Bimodal CI listeners when head shadow effects were minimized by using symmetrically placed maskers22,23.

Normal-hearing (NH) listeners can use various spatial cues, such as inter-aural time differences (ITDs), inter-aural level differences (ILDs), and/or some other binaural process (e.g., inter-aural coherence)24,25 to better detect or recognize a target in the presence of competing sounds. For segregation of spatially separated speech, there are several benefits when listening with two ears over a single ear: head shadow (where the target-to-masker ratio, or TMR, is better in one ear than the other), binaural summation (where the redundant binaural representation allows for better segregation of the target), and binaural squelch (where the addition of the ear with the poorer TMR improves performance over the better ear alone)26. In general, bimodal hearing may not preserve important ITDs and/or ILDs due to timing differences (e.g., different compression time constants) between the acoustic hearing ear and the CI ear27,28,29,30. For many Bimodal CI patients, residual hearing in the contralateral ear provides only limited information due to the severity of the underlying hearing loss. Because ILDs are the dominant cue for spatial perception in CI users, Bimodal listeners without high-frequency audibility in the non-implanted ear cannot benefit from ILD cues in the higher frequency region. Firszt et al.31 reported limited bimodal benefit for spatial perception in Bimodal listeners with sloping or severe-to-profound hearing loss in the high-frequency region (i.e., the vast majority of Bimodal CI patients), compared to Bimodal listeners who have broadband audibility in the non-implanted ear.

Unlike Bimodal CI patients, bilateral CI patients seem able to benefit from some spatial cues for sound localization21, possibly due to the availability of high-frequency ILDs. While bilateral CI users may be able to use ILD cues for sound localization, the bilateral benefit for segregation of spatially separated target and masker speech is limited. This is especially true when head shadow effects are minimized by using symmetrically placed maskers22,23. The lack of SRM in these studies may be partly driven by inter-aural frequency mismatch due to differences in insertion depth and uneven nerve survival across ears. Indeed, recent bilateral CI simulation studies have shown that inter-aural tonotopic mismatch may negatively affect utilization of spatial cues32,33,34. Thomas et al.34 found that minimizing the inter-aural mismatch may significantly increase SRM, possibly because inter-aural frequency matching may improve inter-aural coherence.

Similarly, Bimodal CI users’ difficulties in utilizing spatial cues may be partly due to the tonotopic mismatch between the acoustic input frequency and the electrode place of stimulation in the cochlea. In clinical fitting of Bimodal CI users, the lowest acoustic input frequency is typically much lower than the characteristic frequency associated with the most apical electrode position35, resulting in some degree of tonotopic mismatch in the CI ear. Simulation studies have shown that tonotopic mismatch negatively affects the integration of acoustic and electric stimulation, regardless of whether residual acoustic and electric hearing were combined within an ear or across ears36,37. However, little is known about the effects of tonotopic mismatch on SRM for Bimodal listening.

One approach to reduce tonotopic mismatch in the CI is to adjust the input acoustic range to match the characteristic frequencies associated with the electrode positions. For most bimodal CI users, this would involve increasing the lowest acoustic input frequency, truncating all information below the adjusted input frequency. The loss of low-frequency speech information in the CI ear with a tonotopically matched frequency allocation may be less deleterious for Bimodal CI users, since low-frequency speech information would be available with the contralateral acoustic hearing. Fowler et al.38 evaluated the effects of adjusting the low cutoff frequency (LCF) of the CI for CI-only and Bimodal CI listeners. They found that for the CI-only group, increasing the LCF reduced speech performance both in quiet and in noise. Similar results were observed for Bimodal listeners with limited acoustic hearing in the non-implanted ear (thresholds > 60 dB HL at 250 and 500 Hz). For Bimodal listeners with better hearing in the non-implanted ear (thresholds < 60 dB HL at 250 and 500 Hz), word recognition in quiet improved as the LCF was increased. Increasing the LCF likely reduced the tonotopic mismatch in the CI ear due to the relatively shallow electrode insertion depth.

While contralateral acoustic hearing may provide only limited benefit for perception of spatial cues in Bimodal CI users, Dorman et al.21 reported significant improvements in localization for BiEAS users. The availability of residual hearing in both ears may allow the listeners to use ITD cues, thus resulting in improved sound localization. Gifford et al.39,40 showed significant speech recognition and subjective perceptual benefits for BiEAS compared to the Bimodal listening condition. They also found that limiting the CI bandwidth (i.e., increasing the LCF to the CI) yielded significant improvement for speech recognition in noise and subjective estimates of listening difficulty. These data suggest that tonotopic mismatch may significantly limit the benefit of BiEAS listening, consistent with previous simulation studies showing that EAS is highly sensitive to tonotopic mismatch36. Tonotopic matching within the CI appears to be important for utilization of acoustic hearing in the ipsilateral ear.

Taken together, these previous studies suggest that tonotopic matching is important for Bimodal and BiEAS listening. However, most previous studies evaluated Bimodal and/or BiEAS speech performance in quiet, noise, or speech babble. The benefits of tonotopic matching for Bimodal and BiEAS listening in the segregation of competing speech are not well studied. The benefits of tonotopic matching may also depend on the target-masker spatial configuration. For example, Thomas et al.34 found limited benefit for tonotopic matching in simulations of bilateral CIs when maskers were co-located with the target speech, but a large benefit when maskers were spatially separated from the target. While tonotopic matching may be beneficial for Bimodal CI simulations when maskers are co-located with the target37, the benefit is less clear when the target and maskers are spatially separated. Similarly, the benefits of bilateral residual hearing for utilization of spatial cues to segregate competing speech have not been fully explored. Some studies have found BiEAS advantages over bimodal listening for spatialized noise41,42,43,44, but little is known regarding BiEAS advantages for competing speech, especially when target and maskers are spatially separated. Also, it is unclear how much residual hearing is needed in the implanted ear to be beneficial in conjunction with the contralateral residual hearing.

In the present study, segregation of co-located or spatially separated target and masker speech was measured in NH adults listening to acoustic simulations of Bimodal and BiEAS CI signal processing in which the frequency allocation for the simulated CI was tonotopically matched or mismatched. The first aim of the study was to evaluate bimodal perception of target speech with co-located or spatially separated maskers. We expected that speech reception thresholds (SRTs) would be higher (poorer) with spatially separated than with co-located maskers due to poorer integration of acoustic and electric hearing across ears36. The second aim of the study was to evaluate the effects of tonotopic matching in electric hearing on segregation of competing speech for Bimodal listening. We expected limited benefit of tonotopic matching for co-located target and masker speech, but a larger benefit when target and masker speech were spatially separated, consistent with previous bilateral CI simulation data29. The third aim of the study was to evaluate the effects of bilateral residual acoustic hearing (i.e., simulations of BiEAS) on segregation of competing speech. We expected limited advantage for BiEAS over Bimodal listening for co-located target and masker speech, but a larger BiEAS advantage for spatially separated target and masker speech, as the bilateral residual hearing may provide useful low-frequency binaural cues21,44.

Results

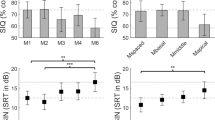

Figure 1 shows boxplots of SRTs with co-located or spatially separated target and masker speech for the different listening conditions. Mean SRTs are shown at the top of Table 1. With the co-located maskers, SRTs were generally comparable across listening conditions except for the Bimodal clinical condition. With the spatially separated maskers, SRTs were highest (poorest) with the Bimodal clinical condition; SRTs decreased (improved) with the matched frequency allocations and the addition of ipsilateral residual acoustic hearing. A two-way repeated-measures analysis of variance (RM ANOVA) was performed on the SRT data, with listening condition (Bimodal clinical, Bimodal adapted, Bimodal match, BiEAS low, BiEAS high) and masker configuration (co-located with or spatially separated from the target) as factors; complete results are shown at the bottom of Table 1. Results showed a significant effect of listening condition [F(4, 44) = 63.2, p < 0.001], but not of masker configuration [F(1, 11) = 1.5, p = 0.250]; there was a significant interaction [F(4, 44) = 21.2, p < 0.001]. With the co-located maskers, post-hoc Bonferroni pairwise comparisons showed that SRTs were significantly poorer with the Bimodal clinical condition than with the other listening conditions (p < 0.001), with no significant difference among the remaining listening conditions. With the spatially separated maskers, SRTs were significantly higher for the Bimodal clinical and Bimodal match conditions than for the Bimodal adapted, BiEAS low, and BiEAS high conditions (p < 0.01 for all comparisons), and significantly lower for the BiEAS high condition than for the remaining conditions (p < 0.001). SRTs were significantly higher with the spatially separated than with the co-located maskers for the Bimodal match condition (p < 0.001), and significantly lower with the spatially separated than with the co-located maskers for the BiEAS high condition (p < 0.001).

Figure 1. Boxplots of speech reception thresholds (SRTs) with co-located or spatially separated maskers for the five listening conditions. The boxes show the 25th and 75th percentiles, the error bars show the 10th and 90th percentiles, the circles show outliers, the black line shows the median, and the red line shows the mean.

SRM was calculated as the difference in SRTs between the co-located and spatially separated maskers. Figure 2 shows boxplots of SRM for the different listening conditions. Mean SRM values are shown at the top of Table 2. Negative mean SRM (i.e., poorer performance with spatially separated than with co-located maskers) was observed for all listening conditions except for the BiEAS high condition. An RM ANOVA performed on the SRM data showed a significant effect for listening condition [F(4,44) = 27.2, p < 0.001]. Post-hoc Bonferroni pairwise comparisons showed that SRM was significantly higher (better) for the BiEAS high condition than for the other conditions (p < 0.01), and significantly higher for the BiEAS low condition than for the Bimodal match condition (p < 0.05).
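The SRM definition above reduces to a per-participant subtraction. The minimal Python sketch below assumes SRTs are stored as one value per participant for each masker configuration; the three listeners and their SRTs are invented for illustration and are not study data. Because every participant contributes both SRTs, the mean SRM equals the difference of the mean SRTs, so group-level SRM can also be checked against the mean SRTs in Table 1, up to rounding.

```python
import numpy as np


def spatial_release_from_masking(srt_colocated, srt_separated):
    """Per-participant SRM in dB: SRT(co-located) minus SRT(spatially separated).

    Positive SRM means the spatially separated maskers yielded a lower
    (better) SRT; negative SRM means spatial separation hurt performance.
    """
    co = np.asarray(srt_colocated, dtype=float)
    sep = np.asarray(srt_separated, dtype=float)
    if co.shape != sep.shape:
        raise ValueError("need one co-located and one separated SRT per participant")
    return co - sep


# Hypothetical SRTs (dB TMR) for three listeners in one condition, for illustration only.
srm = spatial_release_from_masking([5.0, 6.5, 4.0], [-1.0, 0.5, -0.5])
mean_srm = srm.mean()
```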

Discussion

Little SRM was observed for the simulated Bimodal listening conditions, consistent with data reported in Bimodal CI simulations45 and real Bimodal CI users22,23. Tonotopic matching improved SRTs with co-located maskers, but less so with spatially separated maskers, resulting in reduced SRM. This finding is not consistent with previous data reported with simulated bilateral CIs34. SRM greatly improved for BiEAS listening when the amount of residual acoustic hearing was similar across ears, suggesting a substantial benefit for SRM with even a small amount of bilateral residual hearing (e.g., < 600 Hz). Gifford and Stecker44 found that the benefit of bilateral residual acoustic hearing was significantly correlated with BiEAS sensitivity to ILDs and ITDs, suggesting that SRM with BiEAS listening may be driven by perception of low-frequency spatial cues (mainly ITDs). The present data with the spatially separated maskers are consistent with previous studies that found significant SRM with spatialized noise for BiEAS CI simulations42,43. Note that the mean SRM with spatialized noise in those studies was larger than the mean SRM observed here with two-talker speech maskers (5.5 dB), possibly due to the increased susceptibility to informational masking with CI signal processing46,47. Combined with the spatial perception data from Dorman et al.21 and Gifford and Stecker44, the present data show the advantage of preserving residual acoustic hearing in both ears to support segregation of spatially separated target and masker speech.

For both the Bimodal adapted and Bimodal clinical conditions, the acoustic-to-electric frequency allocation maximized the speech information delivered to the simulated CI. As with clinical fitting of Bimodal CI users, the Bimodal clinical condition introduced both an intra-aural and an inter-aural tonotopic mismatch. The Bimodal adapted condition represented long-term perceptual adaptation to this mismatch (effectively, tonotopic matching while providing the maximum speech information to the simulated CI). As such, it also represented the upper limit of Bimodal performance, assuming complete adaptation. Previous studies have shown that Bimodal CI users can partially adapt to tonotopic mismatch, but adaptation is generally incomplete48. The present Bimodal adapted condition likely overestimated the degree of adaptation for real Bimodal CI users.

If complete adaptation to the clinical frequency allocation is not possible49, the frequency allocation can be adjusted to match the electrode locations in the cochlea (i.e., the Bimodal match condition). In this case, the combined acoustic and electric hearing across ears would be tonotopically matched, with little spectral overlap between the stimulation modes. Interestingly, with co-located maskers, there was no significant difference in SRTs between the Bimodal match and Bimodal adapted conditions, despite the very different CI acoustic input frequency ranges. The data suggest that the loss of speech information in the CI with a tonotopically matched frequency allocation may have only a limited impact on speech performance for co-located maskers, because the lost speech information in electric hearing would be represented by the residual acoustic hearing. The similarity between the Bimodal match and adapted conditions also suggests that spectral overlap may not be an issue when there is no tonotopic mismatch in electric hearing, consistent with previous studies36,44. Compared to the Bimodal clinical condition, in which there was a tonotopic mismatch, SRTs were significantly lower (better) with the Bimodal match and adapted conditions.

With co-located or spatially separated maskers, mean SRTs were significantly lower for the Bimodal match than for the Bimodal clinical condition. However, the mean improvement in SRTs with the spatially separated maskers in the Bimodal match condition was only 1.8 dB, less than half of that observed with the co-located maskers (3.9 dB). Accordingly, the mean SRM was poorer for the Bimodal match (− 2.6 dB) than for the Bimodal clinical condition (− 0.6 dB). This result differs from Thomas et al.34, who reported that SRM was similar for tonotopically matched or mismatched bilateral CI simulations. The differences in speech bandwidth between residual acoustic hearing and the CI may partly explain these different outcomes.

With the co-located maskers, mean SRTs improved from 9.3 dB with the Bimodal clinical to 5.5 dB with the Bimodal adapted condition, an improvement of 3.8 dB. Similarly, with the spatially separated maskers, mean SRTs improved from 9.8 dB with the Bimodal clinical to 5.8 dB with the Bimodal adapted condition, a similar improvement of 4.0 dB. Again, the acoustic input for the Bimodal clinical and adapted conditions maximized the speech information, with tonotopic mismatch for the clinical condition but no tonotopic mismatch for the adapted condition. Little SRM was observed for either condition, and Bimodal SRM was not affected by tonotopic mismatch. This suggests that Bimodal CI users may not benefit much from spatial cues even if they are able to completely adapt to tonotopic mismatch. The present Bimodal simulation data are generally consistent with previous data from Bimodal CI simulations45 and real Bimodal CI users22,23.
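The SRT differences quoted in this and the preceding paragraph follow directly from the rounded mean SRTs. In the short Python check below, the co-located Bimodal match SRT is not stated in the text, so it is inferred (an assumption) from the reported 3.9 dB improvement over the Bimodal clinical condition; the SRM it implies agrees with the reported −2.6 dB.

```python
# Mean SRTs in dB as reported in the Discussion (rounded to 0.1 dB).
SRT_COLOCATED = {"Bimodal clinical": 9.3, "Bimodal adapted": 5.5}
SRT_SEPARATED = {"Bimodal clinical": 9.8, "Bimodal adapted": 5.8, "Bimodal match": 8.0}
MATCH_GAIN_COLOCATED = 3.9  # reported clinical-to-match improvement, co-located maskers


def improvement(before_db, after_db):
    """SRT improvement in dB (positive = lower, i.e., better, SRT), rounded to 0.1 dB."""
    return round(before_db - after_db, 1)


# Benefit of (simulated) complete adaptation, per masker configuration.
adapt_co = improvement(SRT_COLOCATED["Bimodal clinical"], SRT_COLOCATED["Bimodal adapted"])
adapt_sep = improvement(SRT_SEPARATED["Bimodal clinical"], SRT_SEPARATED["Bimodal adapted"])

# Benefit of tonotopic matching with spatially separated maskers.
match_sep = improvement(SRT_SEPARATED["Bimodal clinical"], SRT_SEPARATED["Bimodal match"])

# Inferred co-located Bimodal match SRT, and the SRM it implies.
match_co = round(SRT_COLOCATED["Bimodal clinical"] - MATCH_GAIN_COLOCATED, 1)
srm_match = round(match_co - SRT_SEPARATED["Bimodal match"], 1)

print(adapt_co, adapt_sep, match_sep, match_co, srm_match)  # prints: 3.8 4.0 1.8 5.4 -2.6
```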

When there was no tonotopic mismatch, adding ipsilateral residual acoustic hearing provided no significant advantage for Bimodal SRTs when target and masker speech were co-located. This finding is not consistent with Fu et al.36, who found significantly better integration efficiency for vowel recognition in quiet when tonotopically matched electric hearing was combined with residual hearing in the ipsilateral rather than the contralateral ear. Differences in the speech tests and listening conditions may partly explain this discrepancy in results. A very different pattern was observed for spatially separated speech maskers. Mean SRTs improved from 8.0 dB with no ipsilateral residual hearing (Bimodal match) to 5.3 dB with ipsilateral residual hearing up to 300 Hz (BiEAS low) and to − 0.5 dB with ipsilateral residual hearing up to 600 Hz (BiEAS high). This finding is consistent with Fu et al.36, despite differences in speech tests and listening conditions. The improvement was likely driven by the better spatial perception with BiEAS listening21,44. In the present study, bilateral acoustic hearing up to 300 Hz was not tested, so it is difficult to know how the bandwidth and/or asymmetry in residual acoustic hearing may affect segregation of spatially separated target and masker speech with BiEAS listening.

There are limitations to the current study that should be noted. A critical consideration is that the tonotopic map outlined by Greenwood50 was based on threshold detection in NH listeners. Hearing loss and subsequent amplification by hearing aids may substantially distort Greenwood’s50 tonotopic map. In the present study, there was no simulation of hearing loss for the residual acoustic hearing; instead, the residual acoustic hearing was simulated by simply band-pass filtering the acoustic input to 100–300 Hz or 100–600 Hz. This would not capture the degree of distortion in the residual acoustic hearing that is likely experienced by real Bimodal CI users. As such, the present simulation of residual acoustic hearing is probably a “best-case scenario” that may overestimate the contribution of residual hearing.

Another limitation concerns the sharp filter cutoffs in the simulated CI and residual acoustic hearing, which minimized interactions between acoustic and electric hearing within the CI ear. However, acoustic and electric stimulation may have significant overlap due to channel interaction. Gifford et al.39 found that greater overlap between the acoustic component and the CI allocation provided greater EAS benefit for speech performance than the clinically recommended settings, in which overlap was minimized. In the present study, the Bimodal match condition (in which there was no frequency overlap between acoustic and electric hearing) produced much poorer SRM than did the Bimodal clinical or adapted conditions. While these listening conditions were different from the EAS condition in Gifford et al.39, both results suggest that some degree of frequency overlap between acoustic and electric hearing may benefit combined acoustic and electric hearing. Further studies are needed to better understand potential tradeoffs between tonotopic mismatch and frequency overlap between acoustic and electric hearing, within and across ears.

Conclusions

The present simulation data suggest that hearing preservation in the implanted ear may significantly benefit Bimodal CI listeners’ utilization of spatial cues when segregating competing speech, especially when the amount of residual acoustic hearing is comparable across ears. The benefits of residual acoustic hearing in the implanted ear may be best ascertained in Bimodal CI listeners when target and masker speech are spatially separated. The present simulation data with co-located or spatially separated competing speech maskers add to previous studies with real Bimodal and BiEAS CI users, which were typically tested in quiet, noise, or speech babble. Bilateral low-frequency residual acoustic hearing may increase release from informational masking in CI users.

Materials and methods

In compliance with ethical standards for human subjects, written informed consent was obtained from all participants or their legal guardians before proceeding with any of the study procedures. The study and its consent procedure were approved by the Institutional Review Board of the University of California, Los Angeles (UCLA IRB#19-000722 and IRB#18-001604) and this research was conducted in accordance with the principles of the Declaration of Helsinki and its later amendments.

Participants

Twelve NH adults (4 males and 8 females; mean age = 35.1 years, age range: 21–64 years) participated in the study. All participants had pure tone thresholds < 25 dB HL at all audiometric frequencies between 250 and 8000 Hz. All were native speakers of American English.

Test materials

The matrix-style test materials were drawn from the Sung Speech Corpus11,12 and consisted of a total of 50 words from five categories (Name, Verb, Number, Color, and Object), each of which contained 10 monosyllabic words. Target sentences were generated by always selecting the Name “John” (the target sentence cue word) and then randomly selecting from the 10 words in each of the remaining categories. All 50 words for the target sentence were produced by a male talker, and the mean fundamental frequency (F0) across all 50 words was 106 Hz. Similarly, two different masker sentences were generated by randomly selecting words from each of the categories. For each masker sentence, words were randomly selected to be different from the target sentence as well as from the other masker sentence. Thus, during each test trial, the target and masker sentences were composed of different words. All 50 words for the two masker sentences were produced by two different male talkers (mean F0s: 97 Hz and 128 Hz). A more detailed description of the test materials can be found in previous related studies33,37. The duration of the words used to generate the target and masker sentences varied slightly across categories and talkers. Therefore, after the target and masker sentences were generated, the duration of each masker sentence was normalized in real time to match that of the target, without affecting pitch, using SoundTouch software (https://gitlab.com/soundtouch/soundtouch).
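The sentence-generation rules above can be expressed as a short sampling routine. This is a minimal Python sketch, not the authors' software: the vocabulary is a hypothetical placeholder (only “John” comes from the text), and `make_trial` is an illustrative name. Within each category, the target and the two maskers draw without replacement, so the three sentences never share a word.

```python
import random

CATEGORIES = ["Name", "Verb", "Number", "Color", "Object"]

# Hypothetical stand-in vocabulary: 10 placeholder words per category.
# Only the target cue word "John" comes from the text.
WORDS = {cat: [f"{cat.lower()}_{i}" for i in range(10)] for cat in CATEGORIES}
WORDS["Name"][0] = "John"


def make_trial(rng):
    """Return one target sentence and two masker sentences as dicts of words."""
    # Target: Name is always "John"; the other categories are drawn at random.
    target = {"Name": "John"}
    for cat in CATEGORIES[1:]:
        target[cat] = rng.choice(WORDS[cat])

    maskers = []
    for _ in range(2):
        masker = {}
        for cat in CATEGORIES:
            # Exclude words already used by the target or by an earlier masker.
            used = {target[cat]} | {m[cat] for m in maskers}
            masker[cat] = rng.choice([w for w in WORDS[cat] if w not in used])
        maskers.append(masker)
    return target, maskers


rng = random.Random(2024)
target, maskers = make_trial(rng)
print(" ".join(target[c] for c in CATEGORIES))
```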

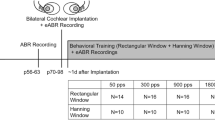

Bimodal and BiEAS CI simulation signal processing

All target and masker stimuli were generated in real time and delivered to circumaural headphones (Sennheiser HDA 200) via an audio interface (Edirol UA-25EX) connected to a mixer (Mackie 402). Non-individualized head-related transfer functions (HRTFs) were used to create a virtual auditory space for headphone presentation of the stimuli51. The target sentence always originated directly in front of the listener (0° azimuth), and the two masker sentences were either co-located with the target (0°) or spatially separated from the target (± 90°).
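The level setting and spatial rendering described in this and the next paragraph can be sketched as follows. This illustrative Python sketch is not the authors' implementation: each masker is scaled so that the long-term RMS ratio between the target and that masker equals the TMR (leaving the target level fixed), and each source is convolved with a left/right head-related impulse response (HRIR) pair and summed per ear. `toy_hrir` is a hypothetical pure delay-and-gain stand-in for measured HRTFs, with an assumed maximum ITD of about 0.66 ms and an assumed 6 dB far-ear attenuation.

```python
import numpy as np


def rms(x):
    return float(np.sqrt(np.mean(np.square(x))))


def scale_maskers(target, maskers, tmr_db):
    """Scale each masker so 20*log10(rms(target)/rms(masker)) == tmr_db.

    All maskers end up at the same long-term RMS; the target is unchanged,
    mirroring a fixed target level with masker levels varied together.
    """
    masker_rms = rms(target) * 10.0 ** (-tmr_db / 20.0)
    return [m * (masker_rms / rms(m)) for m in maskers]


def toy_hrir(azimuth_deg, fs, max_itd_s=0.00066, far_ear_gain=0.5):
    """Hypothetical delay-and-gain HRIR pair (left, right); NOT a measured HRTF."""
    n = int(round(max_itd_s * fs)) + 1
    near = np.zeros(n)
    near[0] = 1.0
    if azimuth_deg == 0:
        return near, near.copy()
    far = np.zeros(n)
    far[-1] = far_ear_gain  # delayed and attenuated at the far ear
    # Positive azimuth = source on the right, so the right ear is the near ear.
    return (far, near) if azimuth_deg > 0 else (near, far)


def spatialize(sources, azimuths, fs):
    """Convolve each source with its HRIR pair and sum into a (2, n) array."""
    pairs = [toy_hrir(az, fs) for az in azimuths]
    n = max(len(s) + len(h[0]) - 1 for s, h in zip(sources, pairs))
    out = np.zeros((2, n))
    for s, (h_left, h_right) in zip(sources, pairs):
        for ear, h in enumerate((h_left, h_right)):
            y = np.convolve(s, h)
            out[ear, : len(y)] += y
    return out


fs = 16000
rng = np.random.default_rng(0)
target = rng.standard_normal(fs)
maskers = scale_maskers(target, [rng.standard_normal(fs), rng.standard_normal(fs)], tmr_db=-3.0)
co_located = spatialize([target, *maskers], [0, 0, 0], fs)
separated = spatialize([target, *maskers], [0, -90, 90], fs)
```

With all three sources at 0°, the two ears receive identical signals; with maskers at ±90°, each masker reaches one ear earlier and louder than the other.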

In each test trial, the target and masker sentences were first generated according to the specified target-to-masker ratio (TMR). The TMR was calculated from the long-term root-mean-square (RMS) level of the target sentence relative to that of each masker sentence; the masker sentences were equalized in long-term RMS level before the TMR calculation. The target and masker sentences were then processed by the HRTFs and mixed independently into the left and right channels. The mixed target and masker sentences in the left channel were bandpass filtered (100–600 Hz, with a slope of − 240 dB/octave) to simulate residual acoustic hearing in the non-implanted ear. The mixed target and masker sentences in the right channel were processed by a 16-channel sine-wave vocoder to simulate electric hearing with the CI. For the sine-wave vocoder, the signal was first processed through a high-pass pre-emphasis filter with a cutoff of 1200 Hz and a slope of − 6 dB/octave. The input frequency range was then divided into 16 frequency analysis bands according to the experimental acoustic-to-electric frequency allocation, using 4th-order Butterworth filters distributed according to Greenwood’s frequency-place formula50. The temporal envelope from each analysis band was extracted using half-wave rectification and low-pass filtering (cutoff frequency = 160 Hz). Next, the extracted envelopes were used to modulate the amplitude of sine-wave carriers. The distribution of the sine-wave carriers assumed a 20-mm electrode array with 16 electrodes linearly spaced in terms of cochlear place. The simulated insertion depth was fixed at 24 mm relative to the base. The frequency allocation used in the vocoder for the CI ear was manipulated to simulate three distinct speech processors:

-

Bimodal clinical: The input frequency range was 200–8000 Hz, while the output frequency range was upshifted to 610–11,837 Hz based on the expected spiral ganglion characteristic frequency associated with the simulated insertion depth (24 mm from the base) and electrode length (20 mm) according to Greenwood’s function50. This introduced an intra-aural tonotopic mismatch within the CI ear, as well as an inter-aural low-frequency mismatch between the contralateral acoustic hearing ear and the CI ear.

-

Bimodal adapted: Both the input acoustic frequency range and output frequency range were 200–8000 Hz. This bimodal condition was used to simulate long-term adaptation to tonotopic mismatch. All speech information was presented to the CI ear. Here, there was no intra-aural tonotopic mismatch within the CI ear and no inter-aural mismatch between the acoustic ear and the CI ear. Performance in this condition represented the upper limit for traditional Bimodal listening.

-

Bimodal match: Both the input acoustic frequency range and output frequency range were 610–11,837 Hz. The input frequency range was tonotopically matched to the expected spiral ganglion characteristic frequency associated with the simulated insertion depth (24 mm from the base) and electrode length (20 mm), according to Greenwood’s function50. Low-frequency speech information below 610 Hz was truncated in the CI ear, but was preserved in the contralateral acoustic ear. Here, there was no intra-aural tonotopic mismatch within the CI ear and no inter-aural mismatch between the acoustic ear and the CI ear. There was also no frequency overlap between acoustic and electric hearing.
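The frequency-to-place relationships behind these three processors can be checked numerically. The Python sketch below assumes Greenwood's standard human parameters (F = 165.4 × (10^(0.06x) − 0.88), with x in mm from the apex of a 35-mm cochlea); the text does not list these constants, but they reproduce the 610 Hz and 11,837 Hz limits given above for a 24-mm insertion and a 20-mm array. Spacing the analysis band edges equally in cochlear place, and expressing mismatch as the place shift (in mm) at each end of the range, are illustrative choices rather than necessarily the authors' exact procedure.

```python
import numpy as np

# Assumed Greenwood (human) constants: F = A * (10**(a * x) - k), x in mm from apex.
A, a, k = 165.4, 0.06, 0.88
COCHLEA_MM = 35.0


def place_to_freq(x_mm):
    return A * (10.0 ** (a * np.asarray(x_mm, dtype=float)) - k)


def freq_to_place(f_hz):
    return np.log10(np.asarray(f_hz, dtype=float) / A + k) / a


def electrode_freqs(insertion_mm=24.0, array_mm=20.0, n_electrodes=16):
    """Characteristic frequencies at 16 electrodes spaced linearly in place."""
    apical = COCHLEA_MM - insertion_mm  # 11 mm from the apex
    return place_to_freq(np.linspace(apical, apical + array_mm, n_electrodes))


def band_edges(lo_hz, hi_hz, n_bands=16):
    """n_bands + 1 analysis band edges, equally spaced in cochlear place."""
    x = np.linspace(freq_to_place(lo_hz), freq_to_place(hi_hz), n_bands + 1)
    return place_to_freq(x)


def place_shift_mm(input_hz, output_hz):
    """How far (mm) toward the base a given input frequency is delivered."""
    return float(freq_to_place(output_hz) - freq_to_place(input_hz))


PROCESSORS = {  # (input range, output range) in Hz, as listed above
    "Bimodal clinical": ((200, 8000), (610, 11837)),
    "Bimodal adapted": ((200, 8000), (200, 8000)),
    "Bimodal match": ((610, 11837), (610, 11837)),
}

electrodes = electrode_freqs()
print(f"Electrodes: {electrodes[0]:.0f} to {electrodes[-1]:.0f} Hz")
shifts = {}
for name, ((in_lo, in_hi), (out_lo, out_hi)) in PROCESSORS.items():
    shifts[name] = (place_shift_mm(in_lo, out_lo), place_shift_mm(in_hi, out_hi))
    print(f"{name}: {shifts[name][0]:.1f} mm shift at low end, {shifts[name][1]:.1f} mm at high end")
```

Under these assumptions, the Bimodal clinical allocation delivers the lowest input frequency roughly 5.7 mm and the highest roughly 2.8 mm basal to their characteristic places, whereas the adapted and matched allocations have zero shift by construction.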

The mixed target and masker sentences in the right channel were also bandpass filtered to simulate residual acoustic hearing in the implanted ear. The amount of residual hearing in the implanted ear was manipulated to simulate two additional speech processors. For both, the electric-hearing parameters were the same as in the Bimodal match condition.

-

BiEAS low: The simulated residual hearing in the non-implanted ear was 100–600 Hz. The simulated residual hearing in the CI ear was 100–300 Hz.

-

BiEAS high: The simulated residual hearing in the non-implanted and implanted ears was 100–600 Hz.
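The CI-ear processing described earlier in this section (pre-emphasis, 16 Greenwood-spaced analysis bands, envelope extraction, and sine-wave carriers) can be sketched in Python with SciPy; for the two BiEAS processors it would be run with the Bimodal match allocation (610–11,837 Hz in and out). Several details are assumptions not given in the text: a 44.1-kHz sampling rate, a first-order Butterworth high-pass for the 6 dB/octave pre-emphasis, reading “4th-order” as the overall order of each band-pass (SciPy's `butter` doubles the design order for band-pass filters), a 4th-order envelope low-pass, standard human Greenwood constants, and matching the output RMS to the input.

```python
import numpy as np
from scipy.signal import butter, sosfilt

# Assumed Greenwood (human) constants; x in mm from the apex.
GW_A, GW_a, GW_k = 165.4, 0.06, 0.88


def _place(f_hz):
    return np.log10(np.asarray(f_hz, dtype=float) / GW_A + GW_k) / GW_a


def _freq(x_mm):
    return GW_A * (10.0 ** (GW_a * np.asarray(x_mm, dtype=float)) - GW_k)


def sine_vocoder(x, fs, in_range, out_range, n_channels=16, env_cutoff_hz=160.0):
    """Sketch of a 16-channel sine-wave vocoder simulating the CI ear."""
    x = np.asarray(x, dtype=float)
    # Pre-emphasis: first-order high-pass at 1200 Hz (about 6 dB/octave below cutoff).
    pre = sosfilt(butter(1, 1200.0, btype="highpass", fs=fs, output="sos"), x)

    # Analysis band edges spaced equally in cochlear place over the input range.
    edges = _freq(np.linspace(_place(in_range[0]), _place(in_range[1]), n_channels + 1))
    # Carrier frequencies at 16 linearly spaced places over the output range.
    carriers = _freq(np.linspace(_place(out_range[0]), _place(out_range[1]), n_channels))

    env_lp = butter(4, env_cutoff_hz, btype="lowpass", fs=fs, output="sos")
    t = np.arange(len(x)) / fs
    out = np.zeros_like(x)
    for lo, hi, fc in zip(edges[:-1], edges[1:], carriers):
        # Design order 2 gives a 4th-order band-pass in SciPy.
        band = sosfilt(butter(2, [lo, hi], btype="bandpass", fs=fs, output="sos"), pre)
        # Envelope: half-wave rectification, then 160-Hz low-pass.
        env = sosfilt(env_lp, np.maximum(band, 0.0))
        env = np.maximum(env, 0.0)  # clip small negative filter ringing
        out += env * np.sin(2.0 * np.pi * fc * t)

    # Match the long-term RMS of the output to that of the input.
    in_rms, out_rms = np.sqrt(np.mean(x**2)), np.sqrt(np.mean(out**2))
    return out * (in_rms / out_rms) if out_rms > 0 else out


fs = 44100
rng = np.random.default_rng(1)
speech_like = rng.standard_normal(fs // 2)  # stand-in for the right-ear mixture
clinical = sine_vocoder(speech_like, fs, (200, 8000), (610, 11837))
matched = sine_vocoder(speech_like, fs, (610, 11837), (610, 11837))
```

The sampling rate must exceed twice the highest carrier (11,837 Hz), which is one reason 44.1 kHz is assumed here.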

Figure 3 illustrates the input and output frequency bands for the left and right ears for the five listening conditions.

Illustration of the speech processing conditions for the left (L) and right (R) ears. The green areas represent the range of simulated residual acoustic hearing. The circles represent the simulated electrode locations (output frequency range). The red circles represent a typical electrode insertion, and the black circles represent a deep insertion. The analysis bands (input frequency range) are shown to the right of each processing condition. The black analysis bands represent the clinical acoustic-to-electric frequency allocation, and the red analysis bands represent a tonotopically matched allocation relative to a typical electrode insertion depth.

Test procedures

Testing was performed in a sound-attenuating booth. SRTs were measured using an adaptive (1-up/1-down) procedure that converges on the TMR that yields 50% correct identification of both keywords. The procedure was similar to a coordinate response matrix test52. Participants were instructed to listen to the target sentence (cued by the name “John”). They then clicked on one of the 10 response choices for each of the Number and Color categories; the remaining categories were greyed out and could not be selected. The target level was fixed at 65 dBA. The TMR was adjusted from trial to trial according to the correctness of the response, by changing the level of each male masker by the same amount. If the participant correctly identified both the target Number and Color keywords, the TMR was reduced; otherwise, the TMR was increased. The step size was 4 dB until the first two reversals in TMR and 2 dB thereafter. The SRT was calculated as the mean TMR across the last six reversals. The 10 test conditions (5 listening conditions × 2 masker spatial configurations) were randomized within a test block, and 2–3 blocks were tested for each participant; SRTs for each condition were averaged across blocks. Participants received no practice or preview before testing, and no feedback was provided during testing. All testing was completed in a single session, with short breaks between test blocks.
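The adaptive track can be sketched as a 1-up/1-down staircase on TMR. This is a minimal illustration, not the software used in the study. This section does not give the starting TMR or the stopping rule, so both are hypothetical parameters here; the stopping rule is a fixed total number of reversals. A reversal is taken to be the TMR on the trial at which the track changes direction. The `respond` callback stands in for the listener.

```python
from typing import Callable, List


def run_staircase(
    respond: Callable[[float], bool],
    start_tmr_db: float = 10.0,    # hypothetical starting TMR
    initial_step_db: float = 4.0,  # step size until the first two reversals
    final_step_db: float = 2.0,    # step size thereafter
    n_initial_reversals: int = 2,
    n_reversals_total: int = 8,    # hypothetical stopping rule
    n_average: int = 6,            # SRT = mean TMR of the last six reversals
) -> float:
    """1-up/1-down track on TMR (dB).

    respond(tmr) returns True when both keywords (Number and Color) were correct.
    """
    tmr = start_tmr_db
    reversals: List[float] = []
    last_direction = 0  # -1 = TMR decreased, +1 = increased, 0 = no move yet
    while len(reversals) < n_reversals_total:
        direction = -1 if respond(tmr) else +1  # correct -> harder (lower TMR)
        if last_direction != 0 and direction != last_direction:
            reversals.append(tmr)  # the track changed direction at this TMR
        last_direction = direction
        step = initial_step_db if len(reversals) < n_initial_reversals else final_step_db
        tmr += direction * step
    return sum(reversals[-n_average:]) / n_average


# Usage with a hypothetical deterministic listener whose threshold is -3 dB TMR.
srt = run_staircase(lambda tmr: tmr >= -3.0)
print(srt)  # prints: -3.0
```

Because each correct response lowers the TMR and each error raises it, the track oscillates around the point where correct and incorrect responses are equally likely. That point is the 50% threshold described above.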

Data analysis

SRT data were analyzed using a two-way RM ANOVA, with listening condition and masker spatial configuration as within-subject factors. SRM data were analyzed using a one-way RM ANOVA, with listening condition as the within-subject factor. Significance was defined as p < 0.05. Bonferroni correction was applied to post-hoc pairwise comparisons. RM ANOVAs were performed using SPSS (Version 20.0; IBM Corp., Armonk, NY). All figures were generated using SigmaPlot (Version 14).
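The one-way SRM analysis can be illustrated without SPSS. The sketch below computes three things. The first is SRM, under the usual definition of co-located SRT minus spatially separated SRT; this definition is assumed here because it is not restated in this section. The second is the F statistic of a one-way repeated-measures ANOVA. The third is Bonferroni-adjusted p-values. The sketch has several limitations. It applies no sphericity correction and returns F with its degrees of freedom rather than a p-value. It also does not cover the two-way SRT analysis. The numbers in the usage line are toy values, not study data.

```python
from typing import List, Sequence, Tuple


def srm_db(srt_colocated: float, srt_separated: float) -> float:
    """Spatial release from masking (dB); positive when separation lowers the SRT."""
    return srt_colocated - srt_separated


def rm_anova_oneway(data: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """One-way repeated-measures ANOVA; rows = subjects, columns = conditions.

    Returns (F, df_condition, df_error), with no sphericity correction.
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)  # removed from error
    ss_error = ss_total - ss_cond - ss_subj
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    return (ss_cond / df_cond) / (ss_error / df_error), df_cond, df_error


def bonferroni(p_values: Sequence[float]) -> List[float]:
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Toy example: 3 subjects x 3 conditions.
f, d1, d2 = rm_anova_oneway([[1.0, 2.0, 6.0], [2.0, 4.0, 7.0], [3.0, 3.0, 8.0]])
print(f"F({d1}, {d2}) = {f:.1f}")  # prints: F(2, 4) = 63.0
```

Partitioning out the between-subject sum of squares is what distinguishes the repeated-measures error term from the ordinary one-way ANOVA error term.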

Data availability

The raw de-identified data are included as Supplementary material (Supplementary Table S1.xlsx).

References

Tyler, R. S. et al. Patients utilizing a hearing aid and a cochlear implant: Speech perception and localization. Ear Hear. 23, 98–105 (2002).

Kong, Y. Y., Stickney, G. S. & Zeng, F. G. Speech and melody recognition in binaurally combined acoustic and electric hearing. J. Acoust. Soc. Am. 117, 1351–1361 (2005).

Dorman, M. F., Gifford, R. H., Spahr, A. J. & McKarns, S. A. The benefits of combining acoustic and electric stimulation for the recognition of speech, voice and melodies. Audiol. Neurootol. 13, 105–112 (2008).

Looi, V., McDermott, H., McKay, C. & Hickson, F. The effect of cochlear implantation on music perception by adults with usable pre-operative acoustic hearing. Int. J. Audiol. 47, 257–268 (2008).

Brown, C. A. & Bacon, S. P. Achieving electric-acoustic benefit with a modulated tone. Ear Hear. 30, 489–493 (2009).

Dorman, M. F. & Gifford, R. H. Combining acoustic and electric stimulation in the service of speech recognition. Int. J. Audiol. 49, 912–919 (2010).

Zhang, T., Dorman, M. F. & Spahr, A. J. Information from the voice fundamental frequency (F0) region accounts for the majority of the benefit when acoustic stimulation is added to electric stimulation. Ear Hear. 31, 63–69 (2010).

Zhang, T., Dorman, M. F. & Spahr, A. J. Frequency overlap between electric and acoustic stimulation and speech-perception benefit in patients with combined electric and acoustic stimulation. Ear Hear. 31, 195–201 (2010).

Yoon, Y. S., Li, Y. & Fu, Q. J. Speech recognition and acoustic features in combined electric and acoustic stimulation. J. Speech Lang. Hear. Res. 55, 105–124 (2012).

Yoon, Y. S., Shin, Y. R., Gho, J. S. & Fu, Q. J. Bimodal benefit depends on the performance difference between a cochlear implant and a hearing aid. Cochlear Implants Int. 16, 159–167 (2015).

Crew, J. D., Galvin, J. J. 3rd., Landsberger, D. M. & Fu, Q. J. Contributions of electric and acoustic hearing to bimodal speech and music perception. PLoS ONE 10, e0120279 (2015).

Crew, J. D., Galvin, J. J. 3rd. & Fu, Q. J. Perception of sung speech in bimodal cochlear implant users. Trends Hear. 20, 2331216516669329 (2016).

Liu, Y. W. et al. Factors affecting bimodal benefit in pediatric Mandarin-speaking Chinese cochlear implant users. Ear Hear. 40, 1316–1327 (2019).

Kiefer, J. et al. Combined electric and acoustic stimulation of the auditory system: Results of a clinical study. Audiol. Neurootol. 10, 134–144 (2005).

Li, Y., Zhang, G., Galvin, J. J. 3rd. & Fu, Q. J. Mandarin speech perception in combined electric and acoustic stimulation. PLoS ONE 9, e112471 (2014).

Plant, K., van Hoesel, R., McDermott, H., Dawson, P. & Cowan, R. Influence of contralateral acoustic hearing on adult bimodal outcomes after cochlear implantation. Int. J. Audiol. 55, 472–482 (2016).

Litovsky, R. Y., Johnstone, P. M. & Godar, S. P. Benefits of bilateral cochlear implants and/or hearing aids in children. Int. J. Audiol. 45, S78–S91 (2006).

Mok, M., Grayden, D., Dowell, R. C. & Lawrence, D. Speech perception for adults who use hearing aids in conjunction with cochlear implants in opposite ears. J. Speech Lang. Hear. Res. 49, 338–351 (2006).

Gantz, B. J. et al. Multicenter clinical trial of the Nucleus Hybrid S8 cochlear implant: Final outcomes. Laryngoscope 126, 962–973 (2016).

Yang, H. I. & Zeng, F. G. Reduced acoustic and electric integration in concurrent-vowel recognition. Sci. Rep. 3, 1419 (2013).

Dorman, M. F., Loiselle, L. H., Cook, S. J., Yost, W. A. & Gifford, R. H. Sound source localization by normal-hearing listeners, hearing-impaired listeners and cochlear implant listeners. Audiol. Neurootol. 21, 127–131 (2016).

Willis, S. et al. Bilateral and bimodal cochlear implant listeners can segregate competing speech using talker sex cues, but not spatial cues. JASA Express Lett. 1, 014401 (2021).

D’Onofrio, K., Richards, V. & Gifford, R. Spatial release from informational and energetic masking in bimodal and bilateral cochlear implant users. J. Speech Lang. Hear. Res. 63, 3816–3833 (2020).

Faller, C. & Merimaa, J. Source localization in complex listening situations: Selection of binaural cues based on interaural coherence. J. Acoust. Soc. Am. 116, 3075–3089 (2004).

Dietz, M., Ewert, S. D. & Hohmann, V. Auditory model-based direction estimation of concurrent speakers from binaural signals. Speech Commun. 53, 592–605 (2011).

Zurek, P. Binaural advantages and directional effects in speech intelligibility. In Acoustical Factors Affecting Hearing Aid Performance (eds Studebaker, G. A. & Hochberg, I.) 255–276 (Allyn and Bacon, 1993).

Aronoff, J. M. et al. The use of interaural time and level difference cues by bilateral cochlear implant users. J. Acoust. Soc. Am. 127, 87–92 (2010).

Hu, H., Dietz, M., Williges, B. & Ewert, S. D. Better-ear glimpsing with symmetrically-placed interferers in bilateral cochlear implant users. J. Acoust. Soc. Am. 143, 2128–2141 (2018).

Potts, W. B., Ramanna, L., Perry, T. & Long, C. J. Improving localization and speech reception in noise for bilateral cochlear implant recipients. Trends Hear. 23, 2331216519831492 (2019).

Bakal, T. A., Milvae, K. D., Chen, C. & Goupell, M. J. Head shadow, summation, and squelch in bilateral cochlear-implant users with linked automatic gain controls. Trends Hear. 25, 23312165211018148 (2021).

Firszt, J. B., Reeder, R. M., Holden, L. K., Dwyer, N. Y. & Asymmetric Hearing Study Team. Results in adult cochlear implant recipients with varied asymmetric hearing: A prospective longitudinal study of speech recognition, localization, and participant report. Ear Hear. 39, 845–862 (2018).

Goupell, M. J., Stoelb, C. A., Kan, A. & Litovsky, R. Y. The effect of simulated interaural frequency mismatch on speech understanding and spatial release from masking. Ear Hear. 39, 895–905 (2018).

Xu, K., Willis, S., Gopen, Q. & Fu, Q. J. Effects of spectral resolution and frequency mismatch on speech understanding and spatial release from masking in simulated bilateral cochlear implants. Ear Hear. 41, 1362–1371 (2020).

Thomas, M., Willis, S., Galvin, J. J. & Fu, Q. J. Effects of tonotopic matching and spatial cues on segregation of competing speech in simulations of bilateral cochlear implants. PLoS ONE 17, e0270759 (2022).

Landsberger, D. M., Svrakic, M., Roland, J. T. Jr. & Svirsky, M. The relationship between insertion angles, default frequency allocations, and spiral ganglion place pitch in cochlear implants. Ear Hear. 36, e207–e213 (2015).

Fu, Q. J., Galvin, J. J. & Wang, X. Integration of acoustic and electric hearing is better in the same ear than across ears. Sci. Rep. 7, 12500 (2017).

Willis, S., Moore, B. C. J., Galvin, J. J. & Fu, Q. J. Effects of noise on integration of acoustic and electric hearing within and across ears. PLoS ONE 15, e0240752 (2020).

Fowler, J. R., Eggleston, J. L., Reavis, K. M., McMillan, G. P. & Reiss, L. A. Effects of removing low-frequency electric information on speech perception with bimodal hearing. J. Speech Lang. Hear. Res. 59, 99–109 (2016).

Gifford, R. H. et al. Combined electric and acoustic stimulation with hearing preservation: Effect of cochlear implant low-frequency cutoff on speech understanding and perceived listening difficulty. Ear Hear. 38, 539–553 (2017).

Gifford, R. H., Sunderhaus, L. W., Dawant, B. M., Labadie, R. F. & Noble, J. H. Cochlear implant spectral bandwidth for optimizing electric and acoustic stimulation (EAS). Hear. Res. 426, 108584 (2022).

Rader, T., Fastl, H. & Baumann, U. Speech perception with combined electric-acoustic stimulation and bilateral cochlear implants in a multisource noise field. Ear Hear. 34, 324–332 (2013).

Williges, B., Dietz, M., Hohmann, V. & Jürgens, T. Spatial release from masking in simulated cochlear implant users with and without access to low-frequency acoustic hearing. Trends Hear. 19, 2331216515616940 (2015).

Williges, B. et al. Spatial speech-in-noise performance in bimodal and single-sided deaf cochlear implant users. Trends Hear. 23, 2331216519858311 (2019).

Gifford, R. H. & Stecker, G. C. Binaural cue sensitivity in cochlear implant recipients with acoustic hearing preservation. Hear. Res. 390, 107929 (2020).

Schoof, T., Green, T., Faulkner, A. & Rosen, S. Advantages from bilateral hearing in speech perception in noise with simulated cochlear implants and residual acoustic hearing. J. Acoust. Soc. Am. 133, 1017–1030 (2013).

Nelson, P. B., Jin, S. H., Carney, A. E. & Nelson, D. A. Understanding speech in modulated interference: Cochlear implant users and normal-hearing listeners. J. Acoust. Soc. Am. 113, 961–968 (2003).

Fu, Q. J. & Nogaki, G. Noise susceptibility of cochlear implant users: the role of spectral resolution and smearing. J. Assoc. Res. Otolaryngol. 6, 19–27 (2005).

Reiss, L. A. J. et al. Pitch adaptation patterns in bimodal cochlear implant users: over time and after experience. Ear Hear. 36, e23–e34 (2015).

Sagi, E., Fu, Q. J., Galvin, J. J. 3rd. & Svirsky, M. A. A model of incomplete adaptation to a severely shifted frequency-to-electrode mapping by cochlear implant users. J. Assoc. Res. Otolaryngol. 11, 69–78 (2010).

Greenwood, D. D. A cochlear frequency-position function for several species–29 years later. J. Acoust. Soc. Am. 87, 2592–2605 (1990).

Wightman, F. L. & Kistler, D. J. Headphone simulation of free-field listening. II: Psychophysical validation. J. Acoust. Soc. Am. 85, 868–878 (1989).

Brungart, D. S., Simpson, B. D., Ericson, M. A. & Scott, K. R. Informational and energetic masking effects in the perception of multiple simultaneous talkers. J. Acoust. Soc. Am. 110, 2527–2538 (2001).

Acknowledgements

We thank all subjects for their participation. This work was partially supported by NIDCD-R01-DC016883 and NIDCD-R01-DC017738.

Author information

Contributions

Q.F. designed the experiments. M.T., J.G., and Q.F. analyzed the data and wrote the main manuscript text. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Thomas, M., Galvin, J.J. & Fu, QJ. Importance of ipsilateral residual hearing for spatial hearing by bimodal cochlear implant users. Sci Rep 13, 4960 (2023). https://doi.org/10.1038/s41598-023-32135-0
