Abstract

For common diseases such as lung cancer, patients often use the internet to obtain medical information. With advances in artificial intelligence and large language models such as ChatGPT, both patients and health professionals now use these tools to obtain medical information. The aim of this study was to evaluate the readability of ChatGPT-generated responses with different readability scales in the context of lung cancer. The most common questions in the lung cancer section of Medscape® were reviewed, and questions on the definition, etiology, risk factors, diagnosis, treatment, and prognosis of lung cancer (both NSCLC and SCLC) were selected. A set of 80 questions was submitted to ChatGPT 10 times each via the OpenAI API. ChatGPT's responses were tested using various readability formulas. The mean Flesch Reading Ease, Flesch-Kincaid Grade Level, Gunning FOG Scale, SMOG Index, Automated Readability Index, Coleman-Liau Index, Linsear Write Formula, Dale-Chall Readability Score, and Spache Readability Formula scores are at a moderate level (mean and standard deviation: 40.52 ± 9.81, 12.56 ± 1.66, 13.63 ± 1.54, 14.61 ± 1.45, 15.04 ± 1.97, 14.24 ± 1.90, 11.96 ± 2.55, 10.03 ± 0.63 and 5.93 ± 0.50, respectively). The readability levels of the answers generated by ChatGPT are "college" level and above, and the answers are difficult to read. Perhaps in the near future, ChatGPT can be programmed to produce responses that are appropriate for people of different educational and age groups.

Introduction

Lung cancer is one of the most common malignant tumors, with high morbidity and mortality1. The five-year survival rate for individuals diagnosed with lung cancer is typically reported to be between 10 and 20%2,3. As in many diseases, the internet is a popular platform to access information on lung cancer today4. Patients increasingly search the internet for answers to health-related questions. While search engines are often used for this purpose, artificial intelligence tools such as GPT (Generative Pre-trained Transformer) are also increasingly being used as the technology develops4.

Large language models (LLMs) are algorithms that can detect and analyze natural language and generate unique responses; they are a recent development in artificial intelligence and neural networks5. OpenAI (San Francisco, CA) developed ChatGPT, one of the most well-known LLMs today6. After its debut in November 2022, it had added, on average, 25 million users by February 2023. ChatGPT's generative powers set it apart from other AI solutions7. ChatGPT is a promising technology that has the potential to revolutionize the healthcare industry, including pharmacy, by offering practitioners, students, and researchers the most up-to-date medical information and support in a conversational, interactive manner8.

ChatGPT's ability to provide accurate and fast answers to complex health questions has attracted the interest of many researchers, and many studies are planned to examine the potential of ChatGPT on medical-related topics. Previous research has shown that ChatGPT can be successful in medical exams9,10. Some researchers have mentioned the advantages of ChatGPT in medical article writing6,11,12. ChatGPT also provides diagnosis and treatment recommendations to patients and healthcare professionals regarding medical issues13,14,15. Therefore, ChatGPT is being used, researched, and tested by more and more people in this field.

Undoubtedly, the accuracy and reliability of ChatGPT's answers to health-related questions are extremely important, and several studies have addressed this topic14,16,17. Nevertheless, the readability and comprehensibility of the responses generated by ChatGPT are equally significant factors to consider. The aim of this study was to evaluate the readability of ChatGPT-generated responses with different readability scales in the context of lung cancer.

Material and methods

This article does not contain any studies with human or animal subjects, and ethical approval is not applicable for this article.

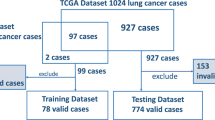

For this study, the most common questions in the lung cancer section of Medscape® (WebMD LLC, US) were reviewed, and 80 questions on the definition, etiology, risk factors, diagnosis, treatment, and prognosis of lung cancer (both NSCLC and SCLC) were selected. Medscape® is a leading online global destination for physicians and healthcare professionals worldwide, offering the latest medical news and expert perspectives; essential point-of-care drug and disease information; and relevant professional education and CME.

A Python script written specifically for this study was used to send the questions to ChatGPT and receive the answers. The answers were obtained through the English version of the ChatGPT API, using the "gpt-3.5-turbo" model provided by OpenAI®. Each question was submitted to ChatGPT 10 times, yielding 10 answers per question. The Python script was executed in a single run on October 1, 2023. The 800 answers obtained for the 80 questions were exported to a file (Supplementary Material 1) and analyzed for readability.
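The study's script is not reproduced in the article, so the following is only a minimal sketch of the collection loop described above. The function names `collect_responses` and `ask_chatgpt` are hypothetical, and `ask_chatgpt` assumes the current `openai` Python client (version 1.0 or later), which may differ from the library version used in October 2023.

```python
from typing import Callable, Dict, List


def collect_responses(
    questions: List[str],
    ask: Callable[[str], str],
    repeats: int = 10,
) -> List[Dict[str, object]]:
    """Ask every question `repeats` times and return one row per answer."""
    rows = []
    for question_id, question in enumerate(questions, start=1):
        for iteration in range(1, repeats + 1):
            rows.append(
                {
                    "question_id": question_id,
                    "iteration": iteration,
                    "question": question,
                    "answer": ask(question),
                }
            )
    return rows


def ask_chatgpt(question: str, model: str = "gpt-3.5-turbo") -> str:
    """Assumed wiring to the OpenAI chat completions endpoint (not the author's code)."""
    from openai import OpenAI  # imported lazily so the loop above runs offline

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": question}],
    )
    return response.choices[0].message.content
```

With 80 questions and `repeats=10`, `collect_responses(questions, ask_chatgpt)` would return 800 rows, matching the number of answers reported in the study.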

Readability formulas

Flesch Reading Ease (FRE) formula

Rudolph Flesch developed the Flesch Reading Ease (FRE) formula in 1948. The FRE score ranges from 1 to 100, where 100 is the highest level of readability. A score of 60 is considered standard for publications targeting a general audience, and a score of 70 or more is considered easy for the average adult to read18.
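As an illustration, the commonly published form of the FRE formula can be sketched as follows. The function name and the count-based signature are assumptions made for this example; library implementations such as textstat may count sentences and syllables differently.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Textbook FRE: higher scores mean easier text."""
    average_sentence_length = words / sentences
    average_syllables_per_word = syllables / words
    return 206.835 - 1.015 * average_sentence_length - 84.6 * average_syllables_per_word
```

For a hypothetical passage of 100 words, 5 sentences, and 150 syllables, this gives a score of about 59.6, just under the "standard" threshold of 60.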

Flesch-Kincaid grade level (FKGL)

The Flesch Reading Grade Level formula was built upon the FRE by Kincaid et al. in 1975 for the US Navy to assign a grade level to written material. It is commonly referred to as the Flesch–Kincaid Grade Level (FKGL). Both FRE and FKGL calculate readability from two variables: average sentence length (based on the number of words) and average word length (based on the number of syllables)19.
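The same two variables can be combined into a grade level. The sketch below uses the commonly published FKGL coefficients; the function name and signature are illustrative assumptions rather than the study's code.

```python
def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Textbook FKGL: the result approximates a US school grade."""
    average_sentence_length = words / sentences
    average_syllables_per_word = syllables / words
    return 0.39 * average_sentence_length + 11.8 * average_syllables_per_word - 15.59
```

A hypothetical passage of 100 words, 5 sentences, and 150 syllables scores about 9.9, that is, roughly tenth grade.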

Fog scale (Gunning FOG formula)

The Gunning Fog Index is a readability formula that estimates the years of formal education required to understand a piece of text on the first reading20. It is based on the average number of words per sentence and the percentage of complex words in the text. The formula calculates the grade level at which the text is written, with a higher grade level indicating more complex and difficult-to-understand text21.
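A minimal sketch of the commonly published Gunning Fog formula is given below. Here "complex words" are assumed to be words of three or more syllables, and the function name is hypothetical.

```python
def gunning_fog(words: int, sentences: int, complex_words: int) -> float:
    """Textbook Gunning Fog index from raw counts."""
    average_sentence_length = words / sentences
    percent_complex = 100 * complex_words / words
    return 0.4 * (average_sentence_length + percent_complex)
```

For example, 100 words in 5 sentences with 10 complex words gives an index of 12, roughly a high school senior level.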

SMOG index

The Simplified Measure of Gobbledygook (SMOG) index is a readability formula used to assess the readability of a piece of text. It estimates the years of education required to understand the text on the first reading22. The SMOG index takes into account the number of polysyllabic words in a sample of text and uses a formula to calculate the grade level at which the text is written21.
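The commonly published SMOG formula can be sketched as follows, assuming polysyllabic words are those with three or more syllables. The factor of 30 normalizes the count to a 30-sentence sample; the function name is illustrative only.

```python
import math


def smog_index(polysyllables: int, sentences: int) -> float:
    """Textbook SMOG grade from the polysyllabic word count."""
    return 1.0430 * math.sqrt(polysyllables * 30 / sentences) + 3.1291
```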

Automated readability index (ARI)

The Automated Readability Index (ARI) is a readability formula used to assess the readability of a piece of text. It estimates the years of education required to understand the text on the first reading. The Automated Readability Index (ARI) considers the mean number of characters per word and the mean number of words per sentence within a given text sample. By employing a specific formula, the ARI determines the grade level at which the text is composed23.
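The calculation described above can be sketched with the commonly published ARI coefficients. The function name and count-based signature are assumptions for this example.

```python
def automated_readability_index(characters: int, words: int, sentences: int) -> float:
    """Textbook ARI from character, word, and sentence counts."""
    characters_per_word = characters / words
    words_per_sentence = words / sentences
    return 4.71 * characters_per_word + 0.5 * words_per_sentence - 21.43
```

Because it relies on character counts rather than syllables, the ARI is simple to compute automatically.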

Coleman-Liau index

The Coleman-Liau Index is a readability formula used to assess the readability of a piece of text. It estimates the years of education required to understand the text on the first reading. The Coleman-Liau Index is a metric that considers the mean number of characters per word and the mean number of sentences per 100 words within a given text sample. By employing a specific formula, this index determines the grade level at which the text is composed24.
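A sketch of the commonly published Coleman-Liau formula follows. Both inputs are normalized per 100 words, as described above; the function name is hypothetical.

```python
def coleman_liau_index(letters: int, words: int, sentences: int) -> float:
    """Textbook Coleman-Liau index."""
    letters_per_100_words = 100 * letters / words
    sentences_per_100_words = 100 * sentences / words
    return 0.0588 * letters_per_100_words - 0.296 * sentences_per_100_words - 15.8
```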

Linsear write formula

The Linsear Write Formula is a readability formula used to assess the readability of a piece of text. The metric provides an estimation of the number of years of formal education necessary to comprehend the content upon initial perusal. The Linsear Write Formula considers the presence of both simple and complex words within a given text sample, employing a specific formula to determine the grade level at which the text is written25.
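The usual description of the Linsear Write procedure can be sketched as below, assuming "easy" words have one or two syllables and "hard" words have three or more, counted over a sample of about 100 words. The function name is an assumption, and library implementations may differ in detail.

```python
def linsear_write(easy_words: int, hard_words: int, sentences: int) -> float:
    """Textbook Linsear Write grade: hard words are weighted three times."""
    provisional = (easy_words + 3 * hard_words) / sentences
    if provisional > 20:
        return provisional / 2
    return provisional / 2 - 1
```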

Dale-Chall readability score

The Dale-Chall Readability Score is a widely used formula for assessing the readability of a text. The text's grade level is determined by analyzing the frequency of complex vocabulary employed within it. This method has been utilized in numerous research endeavors to assess the comprehensibility of diverse forms of literature, encompassing materials designed for patient education, survey inquiries, and internet health-related content26.
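A sketch of the commonly published (new) Dale-Chall formula is shown below. "Difficult words" are those absent from the Dale-Chall list of familiar words; that list is not included here, so the function takes the count as input. The function name is hypothetical.

```python
def dale_chall_score(words: int, sentences: int, difficult_words: int) -> float:
    """Textbook Dale-Chall score with the standard adjustment above 5% difficult words."""
    percent_difficult = 100 * difficult_words / words
    score = 0.1579 * percent_difficult + 0.0496 * (words / sentences)
    if percent_difficult > 5:
        score += 3.6365
    return score
```

Unlike most of the other formulas, the result is not a grade directly; scores of 9.0 to 9.9 are conventionally read as college level, which is consistent with how the mean of 10.03 is interpreted in this study.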

Spache readability formula

The Spache Readability Formula is a widely employed tool for evaluating the readability of written material, with a specific focus on children's literature. The text's grade level is estimated from the average sentence length and the proportion of words that do not appear on a list of familiar words. The formula was introduced by George Spache in 1953 and is also known as the Spache formula27.
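For illustration, the commonly cited revised Spache formula can be sketched as follows. The coefficients are those usually quoted for the revised version and should be treated as an assumption here; the familiar-word list is not included, so the unfamiliar-word count is an input, and the function name is hypothetical.

```python
def spache_grade(words: int, sentences: int, unfamiliar_words: int) -> float:
    """Revised Spache grade level (coefficients as commonly cited)."""
    average_sentence_length = words / sentences
    percent_unfamiliar = 100 * unfamiliar_words / words
    return 0.121 * average_sentence_length + 0.082 * percent_unfamiliar + 0.659
```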

Statistical analysis

We used custom code written in Python (v3.9.18) to obtain the responses from ChatGPT. Communication with ChatGPT was set up through the English version of the ChatGPT API (premium version) using the "gpt-3.5-turbo" model provided by OpenAI®. The "textstat 0.7.3" Python library was used to calculate the readability formulas. Data analysis was performed in Python (v3.9.18) using the Pandas (v1.4.4) and Numpy (v1.24.3) libraries. The results were presented using descriptive statistical methods (mean, standard deviation, minimum, and maximum).
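The analysis pipeline is not published in the article, so the following is only a sketch of how the scoring and summary steps might look. `score_text` calls textstat functions that exist in version 0.7.3; `describe` uses the standard library `statistics` module in place of Pandas to keep the example dependency-free, and it assumes the sample standard deviation (the Pandas default), which the article does not state. Both function names are hypothetical.

```python
import statistics
from typing import Dict, Iterable


def score_text(text: str) -> Dict[str, float]:
    """Score one response with the nine formulas used in the study."""
    import textstat  # imported lazily; requires `pip install textstat`

    return {
        "FRE": textstat.flesch_reading_ease(text),
        "FKGL": textstat.flesch_kincaid_grade(text),
        "Gunning FOG": textstat.gunning_fog(text),
        "SMOG": textstat.smog_index(text),
        "ARI": textstat.automated_readability_index(text),
        "Coleman-Liau": textstat.coleman_liau_index(text),
        "Linsear Write": textstat.linsear_write_formula(text),
        "Dale-Chall": textstat.dale_chall_readability_score(text),
        "Spache": textstat.spache_readability(text),
    }


def describe(values: Iterable[float]) -> Dict[str, float]:
    """Mean, sample standard deviation, minimum, and maximum of a score column."""
    data = list(values)
    return {
        "mean": statistics.mean(data),
        "sd": statistics.stdev(data),  # assumption: sample SD (n - 1)
        "min": min(data),
        "max": max(data),
    }
```

Applying `describe` to the FRE scores of all 800 responses would produce a row like those summarized in the descriptive statistics of the study.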

Results

The 80 questions (with 10 iterations) on diagnosis, treatment, prognosis, and risk factors of lung cancer (both SCLC and NSCLC) were asked to ChatGPT with a Python script specific to this study. It took approximately 4 h and 7 min to obtain a total of 800 responses. The mean response time for each question was 18.52 ± 5.53 s. The fastest response was 4.26 s, while the slowest response was 97.80 s.
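The reported total duration is consistent with the mean response time. As a quick check, assuming the run time is dominated by 800 sequential API calls:

```python
n_responses = 80 * 10          # 80 questions, 10 iterations each
mean_seconds = 18.52           # mean response time reported above

total_seconds = round(n_responses * mean_seconds)
hours, remainder = divmod(total_seconds, 3600)
minutes, seconds = divmod(remainder, 60)

print(f"{hours} h {minutes} min {seconds} s")
```

The result, 4 h 6 min 56 s, rounds to the reported 4 h and 7 min.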

The shortest response given by ChatGPT was to the question "How frequently is tobacco smoking the cause of non-small cell lung cancer?". The response to this question contained 4 sentences, 33 words, and 328 characters. The longest response was to the question "How is lung cancer diagnosed?" and contained 23 sentences, 250 words, and 2579 characters. The mean response length was 12.95 ± 3.76 sentences, 144.25 ± 35.73 words, and 1428.76 ± 380.58 characters.

Across all the responses given by ChatGPT, the mean Flesch Reading Ease, Flesch-Kincaid Grade Level, Gunning FOG Scale, SMOG Index, Automated Readability Index, Coleman-Liau Index, Linsear Write Formula, Dale-Chall Readability Score, and Spache Readability Formula scores are at a high level (mean and standard deviation: 40.52 ± 9.81, 12.56 ± 1.66, 13.63 ± 1.54, 14.61 ± 1.45, 15.04 ± 1.97, 14.24 ± 1.90, 11.96 ± 2.55, 10.03 ± 0.63 and 5.93 ± 0.50, respectively). Descriptive statistics on the readability levels of all responses can be seen in Table 1. Sample responses with the highest and lowest FRE scores are given in Table 2.

Discussion

Today, many people, whether they are patients or not, receive information from sources other than face-to-face meetings with physicians and health professionals. With the development of technology and especially the widespread use of the internet, studies have shown that a significant proportion of patients use the internet for health-related purposes, including seeking information about their conditions, treatment options, and medications28,29,30. In addition, developments in artificial intelligence have given patients and even health professionals a new source of health information31,32.

ChatGPT is one of the most exciting technologies in today's technology world. The use and potential in healthcare of this technology, which can produce answers by understanding the commands (questions) it is given, are being investigated more and more every day. Large language models, which belong to the natural language processing branch of artificial intelligence, can analyze and make sense of questions asked in natural spoken language and produce original answers very quickly. In our study, the API script using the "gpt-3.5-turbo" model answered the questions relatively quickly (mean response time was 18.52 ± 5.53 s). It is possible that improvements in processor, storage, and internet connection speeds could reduce this time even further.

The most important feature of artificial intelligence and natural language models is that they produce original responses using natural language. Although it is the nature of the system to be authentic, the authenticity of the responses produced by ChatGPT has been investigated in many studies in the literature33,34.

The fast and unique responsiveness of ChatGPT will be useless if it cannot produce accurate and reliable answers. Especially in the field of health, ChatGPT is expected to be much more reliable. Providing false, incomplete, or misleading information through ChatGPT and similar artificial intelligence applications will significantly affect the health of patients. For example, if patients are not given accurate information about lung cancer, there may be a delay in diagnosis, and the patient may miss the chance of an early diagnosis. Moreover, inaccurate information in treatment protocols may affect the decisions of healthcare professionals who are supported by artificial intelligence applications such as ChatGPT while creating diagnosis and treatment strategies. For this reason, many studies have investigated how accurately ChatGPT can produce answers to health-related questions35,36,37,38. Many studies have been published on how successful ChatGPT can be in exams for medical students, physicians, and health professionals10,39,40,41. Although it has been suggested that ChatGPT can be successful in medical exams, there are some studies in the literature that argue the opposite42.

ChatGPT's ability to produce fast and original answers that are also accurate and reliable is, of course, a great achievement. ChatGPT and many other artificial intelligence tools are used by people of very different ages and education levels. Because these tools require no additional cost other than an internet connection and provide more natural responses, they are accessible to many people. For example, a smoker may want to investigate etiological issues related to lung cancer, or a person whose radiology report shows a nodule or mass may want to find out the stage of his or her cancer before consulting a physician. In addition, medical students and other health sciences students, healthcare professionals, physicians, and those who provide professional healthcare services also benefit from this service offered by artificial intelligence. As a result, the user group varies widely in education level and age. Therefore, in a disease with a high mortality rate, such as lung cancer, it is extremely important that ChatGPT provides answers that are not only correct but also readable and understandable. To address this aspect of ChatGPT, we investigated several readability scores accepted in the literature.

The most commonly used formulas for readability testing are Flesch Reading Ease (FRE) and Flesch-Kincaid Reading Grade Level (FKGL). According to the FRE score, the most comprehensible response produced by ChatGPT was at the "standard" level, while the most incomprehensible response was at the "very confusing" level (69.52 and 6.95, respectively). In FKGL, the lowest score was 7.1 and the highest score was 18.7 ("professional" level and "college graduate" level, respectively). A study of urology patients found that the readability level of ChatGPT responses was similarly low according to the FRE and FKGL formulas (median 18, 15.8; IQR 21, 3, respectively)4. These results show that the FRE score was very variable in the study and that the ChatGPT responses were very difficult to read. In a study of radiology reports, the FRE and FKGL scores also indicated text that is difficult to read, although both were below the values in our study (38.0 ± 11.8 vs. 40.52 ± 9.81, 10.4 ± 1.9 vs. 12.56 ± 1.66, respectively)43. Similar to the literature, the average FRE and FKGL scores found in our study indicate that the responses generated by ChatGPT are very difficult to read and can only be understood by university graduates.
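The FRE labels used above follow the conventional Flesch interpretation bands. A minimal sketch of that mapping is given below; the function name is hypothetical, and the label wording ("standard", "very confusing") follows this article, whereas other sources phrase some bands differently.

```python
def fre_label(score: float) -> str:
    """Map an FRE score to its conventional difficulty band."""
    bands = [
        (90, "very easy"),
        (80, "easy"),
        (70, "fairly easy"),
        (60, "standard"),
        (50, "fairly difficult"),
        (30, "difficult"),
    ]
    for lower_bound, label in bands:
        if score >= lower_bound:
            return label
    return "very confusing"
```

Under this mapping, the highest score in the study (69.52) falls in the "standard" band, the lowest (6.95) in "very confusing", and the mean (40.52) in "difficult".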

The responses were found to be at the "college freshman" level according to the Gunning fog index and at the "college student" level according to the automated readability index (ARI) (13.63 ± 1.54 and 15.04 ± 1.97, respectively). According to the ARI, the answers can only be understood by those aged 18–22 and older (maximum level). According to two other readability formulas, the Coleman-Liau index and the Dale-Chall index, the responses given by ChatGPT were found to be at the "college" level (not easy to read, difficult) (14.24 ± 1.90 and 10.03 ± 0.63, respectively). In the SMOG index, which is frequently used in the field of health, the average readability level is 14.61 ± 1.45, indicating that the texts produced by ChatGPT are quite difficult to read. In another study on urology patients, the readability scores of the texts produced by ChatGPT were evaluated, and the mean SMOG index was found to be 8.7 ± 2.1 (8th or 9th grade). In the same study, the mean FRE and FKGL scores of the summary texts produced by ChatGPT also indicated text that is difficult to read (56.0 ± 13.7 and 10.0 ± 2.4, respectively)44.

Conclusions

This study has shown that the readability levels of the responses generated by ChatGPT are "college" level and above and that the responses are difficult to read. Of course, the fact that the subject we tested belongs to a specialized field such as medicine also contributes to this result. However, because people of different age groups and educational levels use ChatGPT to get information about lung cancer, the readability of the answers should be considered alongside their reliability: answers written at a high reading level may be misunderstood or not understood at all. Perhaps in the near future, ChatGPT can be programmed to produce responses that are appropriate for people of different educational and age groups. It is also clear that there is a need for more extensive and advanced research on a wider range of medical topics.

Data availability

The data that support the findings of this study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding author.

References

Howlader, N. et al. The Effect of Advances in Lung-Cancer Treatment on Population Mortality. N. Engl. J. Med. [Internet] 383(7), 640–649. https://doi.org/10.1056/NEJMoa1916623 (2020).

Siegel, R. L., Miller, K. D. & Jemal, A. Cancer statistics, 2020. CA A Cancer J. Clin. [Internet] 70(1), 7–30. https://doi.org/10.3322/caac.21590 (2020).

Sung, H. et al. Global cancer statistics 2020: GLOBOCAN Estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA A Cancer J. Clin. [Internet] 71(3), 209–249. https://doi.org/10.3322/caac.21660 (2021).

Cocci, A. et al. Quality of information and appropriateness of ChatGPT outputs for urology patients. Prostate Cancer Prostatic Dis. [Internet] https://www.nature.com/articles/s41391-023-00705-y (2023).

Luitse, D. & Denkena, W. The great transformer: Examining the role of large language models in the political economy of AI. Big Data Soc. [Internet] 8(2), 205395172110477. https://doi.org/10.1177/20539517211047734 (2021).

Buholayka, M., Zouabi, R. & Tadinada, A. Is ChatGPT ready to write scientific case reports independently? A comparative evaluation between human and artificial intelligence. Cureus https://doi.org/10.7759/cureus.39386 (2023).

Liu, Y. et al. Generative artificial intelligence and its applications in materials science: Current situation and future perspectives. J. Materiomics [Internet] 9(4), 798–816 (2023).

Arif, T. B., Munaf, U. & Ul-Haque, I. The future of medical education and research: Is ChatGPT a blessing or blight in disguise?. Med. Educ. Online [Internet] 28(1), 2181052. https://doi.org/10.1080/10872981.2023.2181052 (2023).

Gilson, A. et al. How does ChatGPT perform on the united states medical licensing examination? The implications of large language models for medical education and knowledge assessment. JMIR Med. Educ. [Internet] 9, e45312 (2023).

Gencer, A. & Aydin, S. Can ChatGPT pass the thoracic surgery exam?. Am. J. Med. Sci. [Internet] 366(4), 291–295 (2023).

Biswas, S. ChatGPT and the future of medical writing. Radiology [Internet] 307(2), e223312. https://doi.org/10.1148/radiol.223312 (2023).

Mondal, H., Mondal, S. & Podder, I. Using ChatGPT for writing articles for patients’ education for dermatological diseases: A pilot study. Indian Dermatol Online J. [Internet] 14(4), 482. https://doi.org/10.4103/idoj.idoj_72_23 (2023).

Schulte, B. Capacity of ChatGPT to identify guideline-based treatments for advanced solid tumors. Cureus [Internet] https://www.cureus.com/articles/149231-capacity-of-chatgpt-to-identify-guideline-based-treatments-for-advanced-solid-tumors (2023).

Walker, H. L. et al. Reliability of medical information provided by ChatGPT: Assessment against clinical guidelines and patient information quality instrument. J. Med. Internet Res. [Internet] 25, e47479 (2023).

Hamed, E., Sharif, A., Eid, A., Alfehaidi, A. & Alberry, M. Advancing artificial intelligence for clinical knowledge retrieval: A case study using ChatGPT-4 and link retrieval plug-in to analyze diabetic ketoacidosis guidelines. Cureus https://doi.org/10.7759/cureus.41916 (2023).

Almazyad, M. et al. Enhancing expert panel discussions in pediatric palliative care: Innovative scenario development and summarization with ChatGPT-4. Cureus https://doi.org/10.7759/cureus.38249 (2023).

Rahsepar, A. A. et al. How AI responds to common lung cancer questions: ChatGPT versus google bard. Radiology [Internet] 307(5), e230922. https://doi.org/10.1148/radiol.230922 (2023).

Flesch, R. A new readability yardstick. J. Appl. Psychol. [Internet] 32(3), 221–233. https://doi.org/10.1037/h0057532 (1948).

Jindal, P. & MacDermid, J. Assessing reading levels of health information: Uses and limitations of flesch formula. Educ. Health [Internet] 30(1), 84. https://doi.org/10.4103/1357-6283.210517 (2017).

Athilingam, P., Jenkins, B. & Redding, B. A. Reading level and suitability of congestive heart failure (CHF) Education in a mobile app (CHF Info App): Descriptive design study. JMIR Aging [Internet] 2(1), e12134 (2019).

Arora, A., Lam, A. S., Karami, Z., Do, L. G. & Harris, M. F. How readable are Australian paediatric oral health education materials?. BMC Oral Health [Internet] 14(1), 111. https://doi.org/10.1186/1472-6831-14-111 (2014).

Hamnes, B., Van Eijk-Hustings, Y. & Primdahl, J. Readability of patient information and consent documents in rheumatological studies. BMC Med Ethics https://doi.org/10.1186/s12910-016-0126-0 (2016).

Mc Carthy, A. & Taylor, C. SUFE and the internet: Are healthcare information websites accessible to parents?. bmjpo 4(1), e000782 (2020).

Azer, S. A., AlOlayan, T. I., AlGhamdi, M. A. & AlSanea, M. A. Inflammatory bowel disease: An evaluation of health information on the internet. WJG 23(9), 1676 (2017).

Lambert, K., Mullan, J., Mansfield, K., Koukomous, A. & Mesiti, L. Evaluation of the quality and health literacy demand of online renal diet information. J. Hum. Nutr. Diet [Internet] 30(5), 634–645. https://doi.org/10.1111/jhn.12466 (2017).

Koo, K. & Yap, R. L. How readable is BPH treatment information on the internet? Assessing barriers to literacy in prostate health. Am. J. Mens Health [Internet] 11(2), 300–307. https://doi.org/10.1177/1557988316680935 (2017).

Begeny, J. C. & Greene, D. J. Can readability formulas be used to successfully gauge difficulty of reading materials?. Psychol. Schools [Internet] 51(2), 198–215. https://doi.org/10.1002/pits.21740 (2014).

Wong, D. K. K. & Cheung, M. K. Online health information seeking and ehealth literacy among patients attending a primary care clinic in hong kong: A cross-sectional survey. J. Med. Internet Res. [Internet] 21(3), e10831 (2019).

Potemkowski, A. et al. Internet usage by polish patients with multiple sclerosis: A multicenter questionnaire study. Interact J. Med. Res. [Internet]. 8(1), e11146 (2019).

Duymus, T. M. et al. Internet and social media usage of orthopaedic patients: A questionnaire-based survey. WJO [Internet] 8(2), 178 (2017).

Boillat, T., Nawaz, F. A. & Rivas, H. Readiness to embrace artificial intelligence among medical doctors and students: Questionnaire-based study. JMIR Med. Educ. [Internet] 8(2), e34973 (2022).

Fritsch, S. J. et al. Attitudes and perception of artificial intelligence in healthcare: A cross-sectional survey among patients. Digital Health [Internet] 8, 205520762211167. https://doi.org/10.1177/20552076221116772 (2022).

Bhattacharya, K. et al. ChatGPT in surgical practice—a new kid on the block. Indian J. Surg. https://doi.org/10.1007/s12262-023-03727-x (2023).

Elkhatat, A. M. Evaluating the authenticity of ChatGPT responses: A study on text-matching capabilities. Int. J. Educ. Integr. 19(1), 15. https://doi.org/10.1007/s40979-023-00137-0 (2023).

Yeo, Y. H. et al. Assessing the performance of ChatGPT in answering questions regarding cirrhosis and hepatocellular carcinoma [Internet]. Gastroenterology https://doi.org/10.1101/2023.02.06.23285449 (2023).

Kusunose, K., Kashima, S. & Sata, M. Evaluation of the accuracy of ChatGPT in answering clinical questions on the Japanese society of hypertension guidelines. Circ. J. [Internet] 87(7), 1030–1033 (2023).

Suppadungsuk, S. et al. Examining the validity of ChatGPT in identifying relevant nephrology literature: Findings and implications. JCM [Internet] 12(17), 5550 (2023).

Samaan, J. S. et al. Assessing the accuracy of responses by the language model ChatGPT to questions regarding bariatric surgery. Obes. Surg. [Internet] 33(6), 1790–1796. https://doi.org/10.1007/s11695-023-06603-5 (2023).

AlessandriBonetti, M., Giorgino, R., Gallo Afflitto, G., De Lorenzi, F. & Egro, F. M. How does ChatGPT perform on the Italian residency admission national exam compared to 15,869 medical graduates?. Ann. Biomed. Eng. https://doi.org/10.1007/s10439-023-03318-7 (2023).

Wang, X. et al. ChatGPT Performs on the Chinese national medical licensing examination. J. Med. Syst. 47(1), 86. https://doi.org/10.1007/s10916-023-01961-0 (2023).

Kung, T. H. et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. Plos Digit Health 2(2), e0000198. https://doi.org/10.1371/journal.pdig.0000198 (2023).

Weng, T. L., Wang, Y. M., Chang, S., Chen, T. J. & Hwang, S. J. ChatGPT failed Taiwan’s family medicine board exam. J. Chinese Med. Assoc. 86(8), 762–766. https://doi.org/10.1097/JCMA.0000000000000946 (2023).

Li, H. et al. Decoding radiology reports: Potential application of OpenAI ChatGPT to enhance patient understanding of diagnostic reports. Clin. Imag. 101, 137–141 (2023).

Eppler, M. B. et al. Bridging the gap between urological research and patient understanding: The role of large language models in automated generation of layperson’s summaries. Urol. Pract. [Internet] 10(5), 436–443. https://doi.org/10.1097/UPJ.0000000000000428 (2023).

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Contributions

The author confirms sole responsibility for the following: study conception and design, data collection, analysis and interpretation of results, and manuscript preparation.

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gencer, A. Readability analysis of ChatGPT's responses on lung cancer. Sci Rep 14, 17234 (2024). https://doi.org/10.1038/s41598-024-67293-2

DOI: https://doi.org/10.1038/s41598-024-67293-2
