Abstract

We introduce the theory of thermodynamic relativity, a unified theoretical framework for describing both entropies and velocities, and their respective physical disciplines of thermodynamics and kinematics, which share a surprisingly identical description with relativity. This is the first study to generalize relativity in a thermodynamic context, leading naturally to anisotropic and nonlinear adaptations of relativity; thermodynamic relativity constitutes a new path of generalization, as compared to the “traditional” passage from special to general theory based on curved spacetime. We show that entropy and velocity are characterized by three identical postulates, which provide the basis of a broader framework of relativity: (1) no privileged reference frame with zero value; (2) existence of an invariant and fixed value for all reference frames; and (3) existence of stationarity. The postulates lead to a unique way of addition for entropies and for velocities, called kappa-addition. We develop a systematic method of constructing a generalized framework of the theory of relativity, based on the kappa-addition formulation, which is fully consistent with both thermodynamics and kinematics. We call this novel and unified theoretical framework for simultaneously describing entropy and velocity “thermodynamic relativity”. From the generality of the kappa-addition formulation, we focus on the cases corresponding to linear adaptations of special relativity. Then, we show how the developed thermodynamic relativity leads to the addition of entropies in nonextensive thermodynamics and the addition of velocities in Einstein’s isotropic special relativity, as in two extreme cases, while intermediate cases correspond to a possible anisotropic adaptation of relativity. 
Using thermodynamic relativity for velocities, we start from the kappa-addition of velocities and construct the basic formulations of linear anisotropic special relativity, e.g., the asymmetric Lorentz transformation, the nondiagonal metric, and the energy-momentum-velocity relationships. Then, we discuss the physical consequences of the possible anisotropy in known relativistic effects, such as (i) matter-antimatter asymmetry, (ii) time dilation, and (iii) the Doppler effect, and show how these might be used to detect and quantify a potential anisotropy.


Introduction

The development of thermodynamics was driven by our worldly experience with gasses, which are coupled through short-range, collisional interactions, and generally, reside in thermal equilibrium distributions. In contrast, space plasmas, from the solar wind and planetary magnetospheres to the outer heliosphere and beyond to interstellar and galactic plasmas, are quite different as particles have correlations and interact through longer-range electromagnetic interactions. Thus, space plasmas provide a natural laboratory for directly observing plasma particle distributions and for the experimental ground truth in the development of a new and broader paradigm of thermodynamics. The journey to improve our understanding of the physical underpinnings of space thermodynamics has led to discovering new fundamental physics, including the concept of entropy defect1, the generalization of the zeroth law of thermodynamics2, and in this study, the development of thermodynamic relativity under a unified theoretical framework for describing both entropies and velocities. This is a novel theory, which should not be confused with some relativistic adaptation of thermodynamics, such as, the description of particle distributions and their thermodynamics in relativistic regimes of kinetic energies. Instead, it is a unification of two fundamental physical disciplines, those of thermodynamics and kinematics, which share a relativity description that is surprisingly identical. Velocity and entropy are basic physical variables of kinematics and thermodynamics, respectively. In an abstract description, velocity measures the change in position of a body through a motion, while entropy measures the corresponding change of information or order/disorder of this body. Thus, they appear to describe entirely different physical contexts. However, we show here that they share the same postulates and mathematical framework that was thought to be characteristic only of special relativity. 
In addition, another common property is stationarity for both the velocity and entropy, which we upgrade here to a new, formal postulate. Finally, as shown in this study, the common postulates provide the basis that leads to a common mathematical formalism and a unified relativity for velocities and entropies.

It has been nearly 60 years since the first observations of magnetospheric electrons, whose velocities unexpectedly deviated from the classical kinetic description of a Maxwellian distribution3,4,5. Since then, numerous observations of space plasma throughout the heliosphere have followed and repeatedly verified this specific non-Maxwellian behaviour6,7,8,9,10,11. Various empirical models of these distributions have been suggested, some simpler, some more mathematically complex, but all originating from the perspectives of generalized expressions, rather than physical first principles6,12,13.

Empirical kappa distributions have been found to well describe the velocities of a plethora of space plasma particles, generalizing the classical Maxwellian distribution14, by means of a parameter kappa, κ, that provides a measure of the shape of these distributions, in addition to the standard parameterization of temperature. The classical, Maxwellian distribution is included as the special kappa value of κ→∞.

Various names were given to the particle populations described by these distributions, with the most frequent being suprathermal and nonthermal. The term suprathermal was used to describe the non-Maxwellian distribution tails; it was thought that the core of the distribution was still Maxwellian, with a suprathermal tail that was enhanced above the Maxwellian (e.g. Refs.15,16,17,18,19). Later, it was realized that both the Maxwellian core and the non-Maxwellian suprathermal tail are actually part of the same distribution, the kappa distribution (e.g. Refs.9,10). The nonthermal characterization came from the fact that the Maxwellian distribution describes particles in thermal equilibrium, thus non-Maxwellian distributions were thought to imply nonthermal particle populations. Now we understand that this was not an accurate characterization: how can a statistical distribution of particle velocities be nonthermal and simultaneously be parameterized by temperature? Temperature is the key parameter of the zeroth law of thermodynamics that equalizes the inward and outward flow of heat when the particle system resides in thermal equilibrium. It was clear that a drastic change of classical statistical mechanics and thermodynamics was needed.

The first theoretical efforts came from the connection of kappa distributions with nonextensive statistical mechanics (e.g. Refs.6,20,21,22). This statistical framework is constructed on the basis of a generalized entropic formulation, called q-entropy23,24, which includes the classical Boltzmann25 – Gibbs26 (BG) formulation as a special case, q→1. It is also called kappa entropy, as it is the entropy associated with kappa distributions; indeed, the maximization of the q-entropy, under the constraints of the canonical ensemble leads to the kappa distribution, where the kappa and q parameters are trivially equated through q = 1 + 1/κ6,27. In addition, Livadiotis and McComas6,28 showed that this path connects the theory and formalism of kappa distributions with nonextensive statistical mechanics under consistent and equivalent kinetic and thermodynamic definitions of temperature.
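The relation between the q-entropy and its BG limit can be illustrated numerically. The sketch below assumes the standard Tsallis form of the q-entropy for a discrete probability distribution, in units where the Boltzmann constant is 1, together with q = 1 + 1/κ as stated above; the function names and the sample probabilities are illustrative and are not taken from the cited references.

```python
import math


def tsallis_entropy(probs, kappa):
    """q-entropy (k_B = 1) with q = 1 + 1/kappa: S = (1 - sum p^q) / (q - 1)."""
    q = 1.0 + 1.0 / kappa
    return (1.0 - sum(p ** q for p in probs)) / (q - 1.0)


def bg_entropy(probs):
    """Boltzmann-Gibbs entropy (k_B = 1): S = -sum p ln p."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


# Illustrative three-state distribution (an assumption, not data from the paper).
probs = [0.5, 0.3, 0.2]

s_bg = bg_entropy(probs)
s_large_kappa = tsallis_entropy(probs, kappa=1e6)  # close to the limit q -> 1
s_small_kappa = tsallis_entropy(probs, kappa=2.0)  # q = 1.5, far from the BG case

print(f"BG entropy:             {s_bg:.6f}")
print(f"q-entropy, kappa = 1e6: {s_large_kappa:.6f}")
print(f"q-entropy, kappa = 2:   {s_small_kappa:.6f}")
```

For large κ the two values agree to several digits, which is the q→1 (κ→∞) limit described in the text; for small κ the q-entropy of the same distribution is noticeably lower.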

The maximization of entropy leads to the canonical stationary distribution (i.e., Gibbs' path26), but this does not mean that it counts as an origin of this distribution. In fact, this is simply a self-consistent derivation, as one can always find a specific entropy formulation that leads to a certain distribution function when maximized29,30,31. The kappa distribution emerges within the framework of statistical mechanics by maximizing the Tsallis entropy under the constraints of the canonical ensemble. Nevertheless, this entropy maximization cannot be considered to be the origin of kappa distributions; both the entropic and distribution functions can be equivalently derived from each other. Therefore, the question still remains:

What is the thermodynamic origin of both the kappa distributions and their entropy? Or, equivalently: Are the kappa distributions and their entropy consistent with thermodynamics?

A misconception of the thermodynamic origin concerns the existence of mechanisms that can generate kappa distributions in plasmas. Some examples are: superstatistics32,33,34,35,36,37, shock waves38,39, turbulence40,41,42, colloidal particles43, interaction with pickup ions44,45, pump acceleration mechanism46, polytropic behavior47,48; Debye shielding and magnetic coupling49,50,51; (see also Ref.10, Ch. 5, 6, 8, 10, 15, 16). While there are a variety of such mechanisms that can occur in space plasmas, thermodynamics ultimately determines if a particular distribution is allowed. Therefore, none of these mechanisms can explain whether kappa distributions (and their associated entropy) are consistent with thermodynamics.

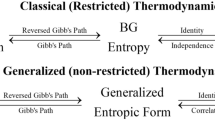

The origin of a particle distribution and its associated entropy, which are capable of physically describing particle systems, must be based on first principles of thermodynamics1,2. In order to derive the most generalized formulation that consistently represents entropy, we focus on the possible ways that the entropy of a system partitions into the entropies of the system’s constituents (e.g., individual or groups of particles)1,2,52,53,54,55,56,57,58,59,60,61. The entropy partitioning has been approached in two ways, restricted and unrestricted1. The restricted way describes classical thermodynamics. According to this, the entropy is restricted to be an additive quantity, and systems can reach the classical thermal equilibrium, that is, a special stationary state interwoven with the following equivalent properties: (i) entropy is restricted to be additive, (ii) the formulation of entropy is given by the BG statistical framework25,26, and (iii) the velocity distribution that maximizes this entropy within the constraints of the canonical ensemble is expressed by the Maxwell-Boltzmann formulation14. In contrast, the unrestricted way describes generalized thermodynamics. According to this, the entropy is not restricted by any addition rule, and systems can reach generalized thermal equilibrium, that is, any stationary state interwoven with the following properties: (i) kappa-addition of entropies; although entropy can be initially assumed to be unrestricted, the consistence of math naturally leads to a certain rule of addition, which stands as the most generalized way of entropy partitioning, that is, the kappa-addition of entropies58,60; (ii) the formulation of entropy is given by a general framework of nonextensive statistical mechanics such as the Tsallis entropy23,54, and (iii) the velocity distribution that maximizes this entropy within the constraints of the canonical ensemble is given by the formulation of kappa distributions6,29,30,61. 
(Further details on classical/restricted vs. generalized/non-restricted thermodynamics can be found in Sect. 2 of Ref.1.)

Therefore, the possible ways of entropy partitioning have a fundamental role in thermodynamics. Let the entropy of a system, composed of two parts A and B, be given as a function of their entropies, SA and SB, respectively. This property of composability is due to the fact that entropy is the only macroscopic thermodynamic quantity that is well-defined for both stationary and non-stationary states, in contrast to temperature and related thermal variables, which are well-defined only at stationary states. Therefore, the entropy of the composed system, denoted by \({\text{A}}{ \oplus _\kappa }{\text{B}}\), is \({S_{{\text{A}}{ \oplus _\kappa }{\text{B}}}}=f({S_{\text{A}}},{S_{\text{B}}})\). The symbol \({ \oplus _\kappa }\) refers to the κ-addition, the mathematical formulation of the generalized partitioning of entropies, which recovers the classical case of standard addition, \({S_{{\text{A}}{ \oplus _\kappa }{\text{B}}}}(\kappa \to \infty )={S_{\text{A}}}+{S_{\text{B}}}\) (recall that classical thermodynamics, which forces the partitioning of entropies to be additive, leads to the BG entropy and Maxwellian distribution1,52). Our search shifted to the most generalized way of entropy partitioning, that is, the most generalized expression of a system’s entropy as a function of the entropies of the system’s constituents. We developed this expression through the concept of entropy defect1.

Briefly, the entropy defect leads to the most generalized way of entropy partitioning that is consistent with the laws of thermodynamics. Entropy is a physical quantity that quantifies the disorder of a system. When a particle system resides in classical thermodynamic equilibrium, the entropy has a very simple way of being shared among the particles: it sums their entropies. However, when a particle system resides in the generalized thermodynamic equilibrium, such as space plasmas, this summation rule does not hold, as there is an additional term that reduces the total entropy, so that the total becomes less than the sum of the individual entropies. Particles in space plasmas move self-consistently with electromagnetic fields (e.g., Debye shielding49,50,51, frozen in magnetic field49), which interact in ways that bind these particles together and produce correlations among particles. The existence of particle correlations adds order to the whole system, and thus, decreases its total disorder, or entropy. This concept is analogous to the mass defect that arises when nuclear particle systems are assembled: the total mass is less than the sum of the assembled masses because of the mass-energy spent in the fields, which bind the particles together (Fig. 1).

Fig. 1. Schematic diagram of mass and entropy defects. Analogous to the mass defect (MD) that quantifies the missing mass (binding energy) associated with assembling subatomic particles, the entropy defect (SD) quantifies the missing entropy (correlation order) associated with assembling space plasma particles. (Taken from Ref.2.)

The entropy defect describes how the entropy of the system partitions into the entropies of the system’s constituents1,2,45,52,53,54. Take two, originally independent, constituents A and B with entropies SA and SB, respectively, which are assembled into a composed system of entropy \({S_{{\text{A}} \oplus {\text{B}}}}\), with additional correlations developed between the two constituents. Then, the order induced by the developed correlations causes the system’s combined entropy to decrease, and thus become less than the simple sum of entropies of the constituents, \({S_{{\text{A}} \oplus {\text{B}}}} - ({S_{\text{A}}}+{S_{\text{B}}})<0\). The missing entropy defines the entropy defect \({S_{\text{D}}} \equiv ({S_{\text{A}}}+{S_{\text{B}}}) - {S_{{\text{A}} \oplus {\text{B}}}}\). Note that the setup of the two subsystems A and B is a thermodynamically closed system. As such, the entropy of the system before its composition, that is, the sum of the entropies of the two independent systems, SA + SB, equals the entropy after the composition plus the entropy defect that measures the entropy spent on the additional correlations developed once the total system is composed, \({S_{{\text{A}} \oplus {\text{B}}}}+{S_{\text{D}}}\), i.e., \({S_{{\text{A}} \oplus {\text{B}}}}={S_{\text{A}}}+{S_{\text{B}}} - {S_{\text{D}}}\). Moreover, the exact expression of the entropy defect was shown to be \({S_{\text{D}}}=\tfrac{1}{\kappa } \cdot {S_{\text{A}}} \cdot {S_{\text{B}}}\)1,2, with 1/κ measuring the magnitude of the interconnectedness (i.e., correlations) among the system’s constituents, which causes the defect. The total entropy of the system is determined by a nonlinear expression of the constituent entropies, formulating a kappa-dependent addition rule for entropy partitioning, simply called kappa-addition:

\({S_{{\text{A}} \oplus {\text{B}}}}={S_{\text{A}}}+{S_{\text{B}}} - \tfrac{1}{\kappa } \cdot {S_{\text{A}}} \cdot {S_{\text{B}}}\) (1)

The entropy partitioning consistent with this addition rule leads to the specific formula of q- or kappa entropy54,55,56,57,58,59. The partitioning in Eq. (1) follows the simple entropy defect, while in its most generalized version, the entropy defect can be expressed through an arbitrary positive and monotonically increasing function H (see the full description of H in the next section):

\(H({S_{{\text{A}} \oplus {\text{B}}}})=H({S_{\text{A}}})+H({S_{\text{B}}}) - \tfrac{1}{\kappa }H({S_{\text{A}}}) \cdot H({S_{\text{B}}})\) (2)

We showed1,2, that the entropy defect can be determined from three basic axioms, which must hold for any partitioning function H: (1) Separability \({S_{\text{D}}}({S_{\text{A}}},{S_{\text{B}}}) \propto g({S_{\text{A}}}) \cdot h({S_{\text{B}}})\); (2) Symmetry, \({S_{\text{D}}}({S_{\text{A}}},{S_{\text{B}}})={S_{\text{D}}}({S_{\text{B}}},{S_{\text{A}}}) \propto g({S_{\text{A}}}) \cdot g({S_{\text{B}}})\); and (3) Upper boundedness, i.e., the existence of an upper limit of any entropy value, \(S<{S_{\hbox{max} }}\), where the upper limit is, in general a function of κ, i.e., \({S_{\hbox{max} }}={S_{\hbox{max} }}(\kappa )\); e.g., the simple case of \(H(S)=S\) gives \({S_{\hbox{max} }}=\kappa\). Also, the composition of entropies under the addition of Eq. (2) can lead to a stationary state; namely, if SA and SB are the entropies of a stationary state, the total system is also residing in a stationary state with entropy SA⊕B58,60.
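The simple entropy defect and its upper bound can be checked with a few lines of arithmetic. The following is a minimal sketch for the simple case H(S) = S, using the relations \({S_{{\text{A}} \oplus {\text{B}}}}={S_{\text{A}}}+{S_{\text{B}}} - {S_{\text{D}}}\) and \({S_{\text{D}}}=\tfrac{1}{\kappa }{S_{\text{A}}}{S_{\text{B}}}\) given above; the function names and the numerical values are illustrative assumptions.

```python
def entropy_defect(s_a, s_b, kappa):
    """Simple entropy defect S_D = S_A * S_B / kappa."""
    return s_a * s_b / kappa


def kappa_add(s_a, s_b, kappa):
    """Kappa-addition for H(S) = S: S_A + S_B - S_A * S_B / kappa."""
    return s_a + s_b - entropy_defect(s_a, s_b, kappa)


# Illustrative values (assumptions): both entropies lie below S_max = kappa.
kappa = 5.0
s_a, s_b = 1.2, 2.0

defect = entropy_defect(s_a, s_b, kappa)   # entropy spent on correlations
total = kappa_add(s_a, s_b, kappa)         # entropy of the composed system
at_limit = kappa_add(s_a, kappa, kappa)    # adding S_max gives back S_max
classical = kappa_add(s_a, s_b, 1e12)      # kappa -> infinity: plain sum

print(f"defect    = {defect:.4f}")
print(f"total     = {total:.4f} (plain sum: {s_a + s_b:.4f})")
print(f"at_limit  = {at_limit:.4f}")
print(f"classical = {classical:.4f}")
```

In this sketch the composed entropy stays below both the plain sum and κ, adding S_max = κ to any entropy returns κ, and a very large κ reproduces standard addition.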

The addition rule of entropies, which is implied by the formulation of the entropy defect, has a specific algebra2. This includes the transitive and symmetric properties, which are fundamental for the zeroth law of thermodynamics. The zeroth law of thermodynamics is naturally a transitive thermodynamic property of systems (a relation R on a set X is transitive if, for all elements A, B, C in X, whenever R relates A to B and B to C, then R also relates A to C). In addition, the zeroth law of thermodynamics is also a symmetric property, i.e., if A is in a stationary state (generalized thermal equilibrium) with B, then B is in the same stationary state with A. The transitive and symmetric properties of the zeroth law of thermodynamics raise the question of how systems are connected. Indeed, the way that C is connected to A and B provides all the information on how A and B are connected. If, for instance, C is in a stationary state with A and with B, then A and B are together in a stationary state.

The journey through understanding generalized thermodynamics led us to realize that the physical framework of entropic values also extends beyond the context of thermodynamics. Indeed, we note the following characteristics of any arbitrary entropy value S: (1) a non-standard addition rule and connection between observers; (2) existence of an upper limit Smax, so that S < Smax for any entropy S; and (3) existence of stationarity for any entropy S (still less than Smax). We cannot say strongly enough: these three characteristics are identical to the two traditional postulates of special relativity for velocities plus the trivial assumption of the existence of inertial frames.

In this study we use these parallel conditions to develop a unified relativity framework for both thermodynamics and kinematics, which we call, thermodynamic relativity. The purpose of this paper is to shed light on the relativity of both the entropies and velocities, examine their similarities, and finally, construct a unified framework of thermodynamic relativity. First, we apply thermodynamic relativity to entropies and show the consequences in statistical mechanics. Next, we apply thermodynamic relativity to velocities, show that this produces a naturally derived anisotropic special theory of relativity, and discuss some of the important consequences of this relativistic kinematics. For simplicity, here we consider linear motion and the 1-dimensional velocity, determining the effects of relativity in spacetime that involves one spatial dimension, the one of motion; for this, the 1D-velocity reduces to the characterization of speed, neglecting the sign where it is not necessary.

The paper is organized as follows. Section "Entropy defect – general formulation" provides the general formulation of entropy partitioning, as determined by the entropy defect. Section "Postulates of the relativity of entropy" develops the postulates of the relativity of entropy: (1) principle of relativity for entropy; (2) existence of a fixed and invariant entropy, that is, an upper limit of entropy values; and (3) existence of stationary entropy. Section "Relativity of velocity" revisits the relativity of velocities and restates and discusses its postulates: (1) all frames of reference are equivalent; (2) existence of a fixed and invariant speed, that is, an upper limit of speeds; and (3) existence of stationary velocity. Section "Relativity of entropy and velocity – a unified framework of thermodynamics and kinematics" unifies the two relativities in one framework, thermodynamic relativity for entropies and velocities. This is shown to be a naturally derived anisotropic version of special relativity. We study the two extreme cases, that is, the isotropy (Einstein’s special relativity) and maximum anisotropy (nonextensive thermodynamics), and show how these can be included in a single unified description. Section "Formulation of thermodynamic relativity for velocity" focuses on the kinematics, i.e., the anisotropy in the speed of light, the velocity addition, the asymmetric matrix of the Lorentz transformation, the non-diagonal norm, the energy-momentum and energy-velocity equations. Section "Physical consequences of thermodynamic relativity" examines the physical consequences of, and possibility for measuring, the anisotropy within the unified framework of thermodynamic relativity, focusing on the (i) matter-antimatter asymmetry, (ii) time dilation, and (iii) Doppler effect. Finally, Section "Discussion and conclusions" summarizes and discusses the conclusions. 
The supplementary material covers aspects of the formulation of the relativity framework for entropies and velocities.

Entropy defect – general formulation

The entropy partitioning formulates the addition rule that includes the entropy defect, as shown in Eq. (1). In general, this is expressed in terms of a function of the involved entropies, i.e., H(SA), H(SB), H(SA⨁B). Then, the entropy partitioning is formulated through a partitioning function H; rewriting Eq. (2), we have:

\(H({S_{{\text{A}} \oplus {\text{B}}}})=H({S_{\text{A}}})+H({S_{\text{B}}}) - \tfrac{1}{\kappa }H({S_{\text{A}}}) \cdot H({S_{\text{B}}})\) (3)

This is the most general formulation of the entropy partitioning consistent with thermodynamics, as shown by Refs.58,60 for stationary systems, and then shown in general, even for non-stationary systems, through the path of the entropy defect by Refs.1,2.

The partitioning function H = H(S) is not uniquely determined. The properties characterizing this function are: (i) H ≥ 0, where the zero holds at S = 0 (see property (ii) below); the non-negativity comes from the requirement that H equals S in the classical limit (see property (v) below); (ii) H(0) = 0, because adding zero entropy must produce zero change in the total entropy; indeed, setting \({S_{\text{B}}}=0\) in Eq. (2), requiring that \(H({S_{{\text{A}} \oplus {\text{B}}}})=H({S_{\text{A}}})\), we obtain \(H(0) \cdot [1 - \tfrac{1}{\kappa }H({S_{\text{A}}})]=0\) for any \({S_{\text{A}}}\), thus H(0) = 0; (iii) \(H^{\prime}(0)=1\), because \(H^{\prime}(0)\) always appears in a ratio with kappa, thus its value is arbitrary and can be absorbed into the kappa; indeed, for small entropies, we have \(H(S)=H(0)+H^{\prime}(0) \cdot S+O({S^2})=H^{\prime}(0) \cdot S+O({S^2})\), and thus the H-partitioning in Eq. (3) leads to \({S_{\text{D}}} \equiv {S_{\text{A}}}+{S_{\text{B}}} - {S_{{\text{A}} \oplus {\text{B}}}}=H^{\prime}(0) \cdot \tfrac{1}{\kappa }{S_{\text{A}}}{S_{\text{B}}}+O(S_{{\text{A}}}^{2}{S_{\text{B}}})+O({S_{\text{A}}}S_{{\text{B}}}^{2})\), where the leading, second-order term should be identical to \({S_{\text{D}}}=\tfrac{1}{\kappa }{S_{\text{A}}}{S_{\text{B}}}\), hence, \(H^{\prime}(0)=1\); (iv) H is monotonically increasing, because of (iii) and because its inverse H−1(S) must be defined; (v) if H is kappa dependent, then at the classical limit where κ→∞, it must reduce to the identity function H(S) = S52,58; and (vi) the produced entropy defect SD must be positive, i.e., \({S_{\text{D}}} \equiv {S_{\text{A}}}+{S_{\text{B}}} - {S_{{\text{A}} \oplus {\text{B}}}}>0\).
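The listed properties can be checked numerically for a concrete partitioning function. The sketch below uses H(S) = κ·sinh(S/κ), which is a purely hypothetical choice made here for illustration and does not come from the source; it was picked only because it has H(0) = 0, H′(0) = 1, is monotonically increasing, and reduces to H(S) = S as κ→∞. The code also checks the product form \(1 - \tfrac{1}{\kappa }H({S_{{\text{A}} \oplus {\text{B}}}})=[1 - \tfrac{1}{\kappa }H({S_{\text{A}}})] \cdot [1 - \tfrac{1}{\kappa }H({S_{\text{B}}})]\), which is an algebraic rearrangement of the H-partitioning.

```python
import math

KAPPA = 5.0  # illustrative value (assumption)


def H(s, kappa=KAPPA):
    """Hypothetical partitioning function: H(S) = kappa * sinh(S / kappa)."""
    return kappa * math.sinh(s / kappa)


def H_inv(h, kappa=KAPPA):
    """Inverse of the hypothetical H: S = kappa * asinh(h / kappa)."""
    return kappa * math.asinh(h / kappa)


def kappa_add_general(s_a, s_b, kappa=KAPPA):
    """H-partitioning: H(S_AB) = H(S_A) + H(S_B) - H(S_A) * H(S_B) / kappa."""
    h_a, h_b = H(s_a, kappa), H(s_b, kappa)
    return H_inv(h_a + h_b - h_a * h_b / kappa, kappa)


s_a, s_b = 1.0, 1.5
s_ab = kappa_add_general(s_a, s_b)
defect = s_a + s_b - s_ab        # property (vi): should be positive
upper_limit = H_inv(KAPPA)       # entropy at which H reaches kappa

# Product form of the same rule.
lhs = 1.0 - H(s_ab) / KAPPA
rhs = (1.0 - H(s_a) / KAPPA) * (1.0 - H(s_b) / KAPPA)

# Small-entropy check: the defect should approach S_A * S_B / kappa.
small = 1e-3
small_defect = 2 * small - kappa_add_general(small, small)

print(f"S_AB = {s_ab:.4f}, defect = {defect:.4f}, upper limit = {upper_limit:.4f}")
print(f"product form residual = {abs(lhs - rhs):.2e}")
print(f"small-entropy defect ratio = {small_defect / (small * small / KAPPA):.6f}")
```

Any other function satisfying properties (i) to (vi) could be substituted for the two helper functions; only `H` and `H_inv` would change.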

For any function H following these properties, the entropy defect leads to the whole structure of thermodynamics1, deriving the entropy45,53, its statistical equation54, its thermodynamic properties2, the stationary state characterized by the canonical distribution function1,52,61, and the connection of entropy and temperature, which are given by the thermodynamic definitions of temperature and kappa53.

Postulates of the relativity of entropy

The H-partitioning of entropies, and the corresponding kappa-addition, follows three fundamental postulates that lead to the relativity of entropy: (1) no privileged reference frame with zero entropic value (principle of relativity for entropic values); (2) existence of an upper limit of entropic values; and (3) existence of stationarity of entropic values. The first two postulates follow the classical paradigm, while the third was considered a trivial condition in Einstein’s special relativity for velocities, but is clearly necessary for entropies.

First postulate: principle of relativity for entropy

We present the relative nature of entropy through the following three arguments: (1) Measurements of entropy always come via differences; (2) The definition of entropy as an absolute measure is simply by construction; (3) The definition of entropy as a relative measure has already been expressed and studied (see below the Kullback–Leibler definition). Namely:

(1) The entropy of a system is only ever obtained as an entropy difference, that is, the entropy of the system measured from a different reference state or frame; here, the thermodynamic reference frame is the stationary state of the observer, from which the entropy of some other body is measured. For instance, consider the exoentropic chemical reaction, A→B, where an amount of entropy SB, A is released (entropy decreases, SB, A < 0); then, the reverse reaction B→A is endoentropic, where an amount of entropy SA, B is absorbed (entropy increases, SA, B > 0). Now, if the entropies of A and B are measured from a different entropic level, let this be O (that is, the observer), then we have that SB, A = SB, O – SA, O.

(2) The entropy is a relative physical quantity in that it can be measured only with respect to another reference frame. Thus, seeking an absolute value of entropy requires an arbitrary definition of entropic zero. Specifically, the third law of thermodynamics states that the entropy at zero temperature is a well-defined constant62 that can be set to zero63,64. However, like the false assumption of the existence of aether, absolute vacuum was taken as the absolute reference frame for measuring entropy. This frame is characterized by the least possible entropy, which is set as the absolute zero of entropy. The vacuum energy that characterizes the hypothetical aether is the zero-point energy – that is, the energy of the system at the temperature of absolute zero, and thus, at zero entropy. The classical perception of the aether is that it is immobile, i.e., the preferred reference frame of zero speed, but also, of zero entropy. Einstein, however, cleared up this misconception, as the absolute vacuum is characterized not just by immobility but also by nonexistence65. In particular, he did not interpret the zero metric as a realistic solution of his field equations, but rather as a mathematical possibility that has no physical significance.

(3) In the perspective of relativity, the entropy of a system can only be determined from a reference frame in which the entropy is measured. The expression of entropy of a system through its probability distribution (e.g., the classical BG26 or other generalized formulations such as the q-entropy23,24, which is equivalent to kappa entropy29,52,54) constitutes an absolute definition. Instead, the relative entropy is expressed as a combination of the probability distributions of the two different systems. The most rigorous expression of entropy is through the information measure, also called “surprisal”. Information quantifies the amount of surprise through a specific function of the probability distribution, called the information measure, while the entropy is defined as the expectation of the information measure, or expected surprise66,67. Therefore, entropy measures the average amount of information needed to represent an event drawn from a probability distribution for a random variable. In its relative determination, the entropy of a system measured from a reference frame is expressed by the expected surprise of that system as measured from that reference frame (also called the Kullback–Leibler entropy difference)68,69. When measuring your own relative entropy, it would turn out to be zero: indeed, there is no expected surprise to be measured in your own system. (For the quantitative expression of relative entropy, see Ref.69 and Supplementary, Section A.)
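The statement that an observer measures zero relative entropy for its own system can be illustrated with the standard discrete Kullback–Leibler divergence. This is a sketch under the assumption that the textbook form D(p‖q) = Σ p ln(p/q) is an adequate stand-in; the exact expression used in the Supplementary is not reproduced here, and the two probability distributions are made-up examples.

```python
import math


def relative_entropy(p, q):
    """Standard Kullback-Leibler divergence D(p || q) = sum p_i * ln(p_i / q_i)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


# Illustrative distributions (assumptions): the observer's own system and another system.
observer = [0.5, 0.3, 0.2]
other = [0.2, 0.3, 0.5]

own_measure = relative_entropy(observer, observer)  # observer measuring itself
cross_measure = relative_entropy(other, observer)   # other system seen from observer

print(f"own system:   {own_measure:.6f}")
print(f"other system: {cross_measure:.6f}")
```

The first value is exactly zero because every ratio p/q equals one, while the second is positive, reflecting a nonzero expected surprise when a different system is measured from the observer's frame.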

For a system A with entropy SA, it is implied that this is measured from some reference frame O (e.g., a laboratory), thus we note it as SA, O. The entropy of another system B, measured from O is SB, O. The connection of the two systems A and B leads to the measurement of entropy of B from A, SB, A, and the measurement of its “inverse”, the measurement of entropy of A from B, SA, B. The mathematical properties of (i) commutativity between SB, A and SA, B, and (ii) associativity between SA, O, SB, O, and SA, B, reflect the physical properties of symmetry and transitivity, respectively, which characterize the zeroth law of thermodynamics2, and the corresponding relationships are determined by the kappa addition shown in Eq. (3), i.e., (i) \({S_{{\text{B,A}}}}{ \oplus _\kappa }{S_{{\text{A,B}}}}=0\) with \({S_{{\text{A,B}}}}={\bar {S}_{{\text{B,A}}}}\), and (ii) \({S_{{\text{A,O}}}}={S_{{\text{A,B}}}}{ \oplus _\kappa }{S_{{\text{B,O}}}}\) (Supplementary, Section A).

The connection between the entropies measured in different reference frames is described by the kappa addition. Given the function H(S), we can conclude that the kappa addition forms a mathematical group on the set of entropies. This is shown through the following algebra, which generalizes the steps and properties developed in Ref.2: (i) Closure: For any two entropic values, \({S_{\text{A}}}\) and \({S_{\text{B}}}\), belonging to the set of possible entropies, ΩS, their addition belongs also to ΩS, \({S_{\text{A}}},{S_{\text{B}}} \in {\Omega _S} \Rightarrow {S_{{\text{A}} \oplus {\text{B}}}} \in {\Omega _S}\). (ii) Identity: if SB = 0, then for any \({S_{\text{A}}} \in {\Omega _S}\), \({S_{{\text{A}} \oplus {\text{B}}}}={S_{\text{A}}}\). (iii) Inverse: for any SA, there exists its inverse element with entropy \({\bar {S}_{\text{A}}}\), for which \({S_{\text{A}}}{ \oplus _\kappa }{\bar {S}_{\text{A}}}=0\), hence, we find that \(H({\bar {S}_{\text{A}}})= - H({S_{\text{A}}})/[1 - \tfrac{1}{\kappa }H({S_{\text{A}}})]\). This defines the κ-subtraction of two elements B and A, that is, the κ-addition with the inverse of subtrahend, \({S_{\text{B}}}{ \oplus _\kappa }{\bar {S}_{\text{A}}}\). The entropy of B measured by the reference frame of A, \({S_{{\text{B,A}}}}\), is determined by their subtraction, \({S_{{\text{B,A}}}}={S_{\text{B}}}{ \oplus _\kappa }{\bar {S}_{\text{A}}}\). (iv) Associativity: The entropy of B measured by A, \({S_{{\text{B,A}}}}\), can be expressed by the κ-addition of the entropy of B measured by C, \({S_{{\text{B,C}}}}\), and the entropy of C measured by A, \({S_{{\text{C,A}}}}\), i.e., \({S_{{\text{B,A}}}}={S_{{\text{B,C}}}}{ \oplus _\kappa }{S_{{\text{C,A}}}}\), or \(H({S_{{\text{B,A}}}})=H({S_{{\text{B,C}}}})+H({S_{{\text{C,A}}}}) - \tfrac{1}{\kappa }H({S_{{\text{B,C}}}}) \cdot H({S_{{\text{C,A}}}})\). 
(v) Commutativity: Additionally, the group is abelian, since the addition function is symmetric, \({S_{\text{A}}}{ \oplus _\kappa }{S_{\text{B}}}={S_{\text{B}}}{ \oplus _\kappa }{S_{\text{A}}}\).
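The group properties listed above can be checked numerically. The following is a minimal sketch, assuming the simplest partitioning function H(S) = S (the standard entropy defect) and an arbitrary illustrative value of κ; the function names are hypothetical and are not part of the original formulation.

```python
import math

KAPPA = 5.0  # illustrative value only (assumption, not taken from the text)

def kappa_add(s_a, s_b, kappa=KAPPA):
    """kappa-addition for H(S) = S: S_A + S_B - S_A*S_B/kappa."""
    return s_a + s_b - s_a * s_b / kappa

def kappa_inverse(s, kappa=KAPPA):
    """Inverse element S_bar, defined by kappa_add(S, S_bar) = 0."""
    return -s / (1.0 - s / kappa)

def relative_entropy(s_b, s_a, kappa=KAPPA):
    """Entropy of B measured from A: S_B (+) inverse(S_A)."""
    return kappa_add(s_b, kappa_inverse(s_a, kappa), kappa)

# Entropies of A, B, C measured from a common frame O (arbitrary test values)
s_a, s_b, s_c = 1.0, 2.0, 3.0

# (ii) identity, (iii) inverse, (v) commutativity
assert math.isclose(kappa_add(s_a, 0.0), s_a)
assert math.isclose(kappa_add(s_a, kappa_inverse(s_a)), 0.0, abs_tol=1e-12)
assert math.isclose(kappa_add(s_a, s_b), kappa_add(s_b, s_a))

# (iv) transitivity: S_BA = S_BC (+) S_CA
s_ba = relative_entropy(s_b, s_a)
s_bc = relative_entropy(s_b, s_c)
s_ca = relative_entropy(s_c, s_a)
assert math.isclose(s_ba, kappa_add(s_bc, s_ca))

# symmetry: S_AB (+) S_BA = 0
s_ab = relative_entropy(s_a, s_b)
assert math.isclose(kappa_add(s_ab, s_ba), 0.0, abs_tol=1e-12)
```

Relative entropies such as `s_bc` may come out negative here, which simply means that B has less entropy than C as seen from O.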

In summary, the first postulate states: There is no absolute reference frame in which entropy is zero. On the contrary, entropy is a relative quantity, connected with the properties of symmetry and transitivity, broadly defined under a general addition rule that forms a mathematical group on the set of allowable entropic values.

Second postulate: existence of a fixed and invariant entropy, upper limit of entropy values

Adding two systems A and B together into a composed system \({\text{A}} \oplus {\text{B}}\) requires the total entropy of the composed system to be at least as large as the entropy of either component, \({S_{{\text{A}} \oplus {\text{B}}}} \geqslant {S_{\text{A}}}\) (see the strict proof consistent with thermodynamics in Refs.1,2); then, \(H({S_{{\text{A}} \oplus {\text{B}}}}) \geqslant H({S_{\text{A}}})\) (H: monotonically increasing function). Since the kappa addition gives \(H({S_{{\text{A}} \oplus {\text{B}}}}) - H({S_{\text{A}}})=H({S_{\text{B}}}) \cdot [1 - \tfrac{1}{\kappa }H({S_{\text{A}}})]\) with \(H({S_{\text{B}}}) \geqslant 0\), this leads to \(1 - \tfrac{1}{\kappa }H({S_{\text{A}}}) \geqslant 0\), defining an upper limit cS of entropy values S (dropping the A and B subscripts):

The upper limit constitutes an invariant (constant for all observers) and fixed (constant for all times) value. It is invariant because it remains the same, independent of the reference frame or the observer: If cS equals the entropy of B measured from C, \({S_{{\text{B,C}}}}={c_S}\), then, applying \({S_{{\text{B,A}}}}={S_{{\text{B,C}}}}{ \oplus _\kappa }{S_{{\text{C,A}}}}\), we find \({S_{{\text{B,A}}}}={c_S}\), i.e., cS also equals the entropy of B measured from A, a result that is independent of the entropy difference between the observing frames C and A, i.e., \({S_{{\text{C,A}}}}\) or \({S_{{\text{A,C}}}}={\bar {S}_{{\text{C,A}}}}\). It is also fixed, because it determines a fixed point in the difference equation that describes the entropy evolution: The entropy of the system at the ith iteration (discrete time), \({S_i}\), changes to \({S_{i+1}}\) when adding an entropy σ, according to \(H({S_{i+1}})=H({S_i})+H(\sigma ) - \tfrac{1}{\kappa }H({S_i}) \cdot H(\sigma )\). Then, the entropic value \({S_i}={c_S}\) is a fixed point (i.e., \({S_{i+1}}={S_i}\)).
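Both properties of the upper limit can be illustrated with a short numerical sketch. It assumes the identity partitioning function H(S) = S, for which the upper limit is cS = κ, and uses arbitrary illustrative values; the helper names are hypothetical.

```python
import math

KAPPA = 5.0  # illustrative value; with H(S) = S the upper limit is c_S = kappa

def kappa_add(s_a, s_b, kappa=KAPPA):
    """kappa-addition for H(S) = S."""
    return s_a + s_b - s_a * s_b / kappa

def iterate_entropy(s0, sigma, steps, kappa=KAPPA):
    """Repeatedly kappa-add the increment sigma; return the whole trajectory."""
    path = [s0]
    for _ in range(steps):
        path.append(kappa_add(path[-1], sigma, kappa))
    return path

# Invariance: if S_BC = c_S, then S_BA = S_BC (+) S_CA = c_S for any S_CA
for s_ca in (-3.0, 0.5, 4.0):
    assert math.isclose(kappa_add(KAPPA, s_ca), KAPPA)

# Fixed point: a system starting below c_S approaches it monotonically
# but does not reach it in a finite number of steps
path = iterate_entropy(s0=1.0, sigma=0.5, steps=50)
assert all(a < b < KAPPA for a, b in zip(path, path[1:]))
```

In this sketch the distance to the limit shrinks by the constant factor 1 − σ/κ at every step, which is why the limit is approached but never attained.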

The upper limit also recovers the entropic units of kappa. The nonlinear entropic relations typically have the entropies appear as unitless, that is, each entropic value is silently divided by the Boltzmann constant kB. For example, we consider the case of the simple entropy defect with the identity partitioning function, H(S) = S; then, the entropy partitioning is \({S_{{\text{A}} \oplus {\text{B}}}}={S_{\text{A}}}+{S_{\text{B}}} - \tfrac{1}{\kappa } \cdot {S_{\text{A}}} \cdot {S_{\text{B}}}\), corresponding to the relationship between the entropy with some finite kappa S=S(κ) and the extensive entropy S∞=S(κ→∞), that is, \({S_\infty }=\ln {(1 - \tfrac{1}{\kappa }S)^{ - \kappa }}\) (see Eq. (5) below); we observe that entropy appears always as a ratio of its value divided by kappa, S/κ. Indeed, if we set \(\chi \equiv S/\kappa\), then there is no need for any assumption in regards to the units, e.g., \({\chi _{{\text{A}} \oplus {\text{B}}}}=\varphi ({\chi _{\text{A}}},{\chi _{\text{B}}}) \equiv {\chi _{\text{A}}}+{\chi _{\text{B}}} - {\chi _{\text{A}}}{\chi _{\text{B}}}\), \({\chi _\infty }= - \ln (1 - \chi )\). Consequently, the value of kappa can be set to have entropy units, i.e., kB. Furthermore, more general H functions can be written as \(H(S)=S \cdot g(S/\kappa )\), so that the functional \(1 - \tfrac{1}{\kappa }H(S)\) that appears in the H-partitioning (i.e., see Eq. (6) below) involves only the ratio S/κ, i.e., \(\tfrac{1}{\kappa }H({S_{{\text{A}} \oplus {\text{B}}}})=\varphi \left( {\tfrac{1}{\kappa }H({S_{\text{A}}}),\tfrac{1}{\kappa }H({S_{\text{B}}})} \right)\). Also, the upper limit is given by \(\tfrac{1}{\kappa }H({c_S})=1\) or \({c_S} \propto \kappa\), meaning that both cS and κ have the same units as kB.

Therefore, the second postulate states: There exists a nonzero, finite entropy value, which remains fixed (i.e., constant for all times) and invariant (i.e., constant for all observers), thus, it has the same value in all stationary frames of reference, and constitutes the upper limit of any entropy.

Third postulate: existence of stationary entropies

Once the two systems A and B are connected, energy and entropy are allowed to flow and be exchanged, leading to a state of the composed system where the total entropy is expressed as a function of the individual original entropies SA and SB (a property called composability). Specifically, this function is formulated by the H-partitioning of Eq. (3), or the κ-addition of entropies. It has been shown that the H-partitioning constitutes the most general formalism that corresponds to stationarity58,60. Namely, for variations of the constituents’ entropies, \({S_{\text{A}}}\) and \({S_{\text{B}}}\), in a way that the total entropy remains invariant, i.e., \({S_{{\text{A}} \oplus {\text{B}}}}=const.\) or \(d{S_{{\text{A}} \oplus {\text{B}}}}=0\), then, the total entropy is given by the kappa addition as in Eq. (3), \(\tfrac{1}{\kappa }H({S_{{\text{A}} \oplus {\text{B}}}})=\varphi \left[ {\tfrac{1}{\kappa }H({S_{\text{A}}}),\tfrac{1}{\kappa }H({S_{\text{B}}})} \right]\).

Once the composed system resides in a stationary state, a temperature can be thermodynamically defined. The thermodynamic definition of temperature comes from the relationship between entropy and internal energy U, \(1/T \equiv \partial {S_\infty }/\partial U\), where the involved entropic quantity S∞ behaves exactly as the entropy S in the classical case of thermal equilibrium, κ→∞ (Ref.52). The extensive measure of entropy, denoted by S∞, is a mathematical quantity that becomes physically meaningful for systems residing in stationary states. This is because it serves as the connecting link between the actual entropy S and the temperature T, which can only be meaningful in stationary states (that is, generalized thermal equilibrium). The extensive measure S∞ has the units of S and coincides with the classical BG entropy at the limit of κ→∞,

$${S_\infty }= - \kappa \cdot \ln [1 - \tfrac{1}{\kappa }H(S)]. \tag{5}$$

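This relationship can be derived as follows: The H-partitioning can be written in the product form:

$$1 - \tfrac{1}{\kappa }H({S_{{\text{A}} \oplus {\text{B}}}})=[1 - \tfrac{1}{\kappa }H({S_{\text{A}}})] \cdot [1 - \tfrac{1}{\kappa }H({S_{\text{B}}})]. \tag{6}$$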

We observe that the logarithm of the quantity \(1 - \tfrac{1}{\kappa }H\) behaves extensively, i.e., the relationship \(\ln [1 - \tfrac{1}{\kappa }H({S_{{\text{A}} \oplus {\text{B}}}})]\)\(=\ln [1 - \tfrac{1}{\kappa }H({S_{\text{A}}})]+\ln [1 - \tfrac{1}{\kappa }H({S_{\text{B}}})]\) is extensive. Then, the quantity \(A \cdot \ln [1 - \tfrac{1}{\kappa }H(S)]\) is extensive, and the constant A is taken as \(A= - \kappa\), so that this quantity coincides with S at the limit of κ→∞ (recall that at this limit H is the identity function). Hence, we conclude with Eq. (5).

The relationship can be also shown through infinitesimal variations. In particular, we derive the change of the system’s entropy dS, once an originally independent amount of entropy \(d{S_\infty }\) is added to its initial entropy S, i.e., \(S+dS=S{ \oplus _\kappa }d{S_\infty }\). Setting \({S_{\text{A}}} \to S\), \({S_{\text{B}}} \to d{S_\infty }\), then \({S_{{\text{A}} \oplus {\text{B}}}} \to S+dS\), in Eq. (6), and considering \(H(S+dS)=H(S)+H^{\prime}(S)dS\), and \(H^{\prime}(0)=1\), we find \(d{S_\infty }=\{ H^{\prime}(S)/[1 - \tfrac{1}{\kappa }H(S)]\} \cdot dS\), leading to the extensive measure of entropy \({S_\infty }\) given by Eq. (5).
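The two derivations above can be cross-checked numerically. The sketch below assumes the identity partitioning function H(S) = S, so that the extensive measure reads S∞ = −κ·ln(1 − S/κ) and the differential relation reduces to dS∞/dS = 1/(1 − S/κ); the numerical values and function names are illustrative assumptions.

```python
import math

def kappa_add(s_a, s_b, kappa):
    """kappa-addition for H(S) = S."""
    return s_a + s_b - s_a * s_b / kappa

def extensive_measure(s, kappa):
    """S_inf = -kappa * ln(1 - S/kappa), i.e. the extensive measure for H(S) = S."""
    return -kappa * math.log1p(-s / kappa)

kappa = 5.0
s_a, s_b = 1.0, 2.0

# Additivity: S_inf(A (+) B) = S_inf(A) + S_inf(B)
lhs = extensive_measure(kappa_add(s_a, s_b, kappa), kappa)
rhs = extensive_measure(s_a, kappa) + extensive_measure(s_b, kappa)
assert math.isclose(lhs, rhs)

# Differential form: dS_inf/dS = H'(S) / (1 - H(S)/kappa) = 1 / (1 - S/kappa)
h = 1e-6
numeric = (extensive_measure(s_a + h, kappa) - extensive_measure(s_a - h, kappa)) / (2 * h)
assert math.isclose(numeric, 1.0 / (1.0 - s_a / kappa), rel_tol=1e-6)

# Classical limit: S_inf -> S as kappa -> infinity
assert math.isclose(extensive_measure(s_a, 1e6), s_a, rel_tol=1e-5)
```

The central finite difference is used only as an independent check of the analytic derivative; any other numerical differentiation scheme would serve equally well.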

The extensive measure \({S_\infty }\) depends on temperature, but not on kappa. In fact, it is given by the Sackur-Tetrode equation, \({S_\infty }=\tfrac{1}{2}d \cdot \ln T+const.\) Then, the equation that thermodynamically determines the temperature is the same for any kappa, and thus the same as in the case of κ→∞, i.e., \(1/T=\partial {S_\infty }/\partial U\), or

Finally, stationarity is interwoven with the zeroth law of thermodynamics. If a system A is stationary for a reference frame O, it will be stationary for any other system O΄ which is also stationary with O. This comes from the zeroth law of thermodynamics, which is associated with the properties of transitivity and symmetry. The properties of symmetry and transitivity connect the entropies S of systems, both for stationary and nonstationary states. Then, the zeroth law of thermodynamics can be stated in terms of entropy difference: “If a body C measures the entropies of two other bodies, A and B, \({S_{{\text{A,C}}}}\) and \({S_{{\text{B,C}}}}\), then, their combined entropy, \({S_{{\text{A,B}}}}\) and \({S_{{\text{B,A}}}}\), is measured as the connected A and B entropy, where the H-partitioning is involved in all the entropy measurements”2. In particular, the law’s transitivity states that \({S_{{\text{A}},{\text{O}}}}={S_{{{\text{A,O}^{\prime}}}}} \oplus {S_{{{\text{O}^{\prime},\text{O}}}}}\); the corresponding extensive measures replace the kappa addition with the standard sum, i.e., \({S_\infty }_{{{\text{A}},{\text{O}}}}={S_\infty }_{{{{\text{A,O}^{\prime}}}}}+{S_\infty }_{{{{\text{O}^{\prime},\text{O}}}}}\). Then, the stationarity of O΄ (from O) leads to \(\partial {S_\infty }_{{{\text{A}},{\text{O}}}}=\partial {S_\infty }_{{{{\text{A,O}^{\prime}}}}}\), while the conservation of energy leads to a similar equation for the internal energy, \(\partial {U_{{\text{A}},{\text{O}}}}=\partial {U_{{{\text{A,O}^{\prime}}}}}\). Therefore, the thermodynamic definition of temperature of A is equivalent for all stationary observers (O or O΄),

On the other hand, the law’s symmetry between two connected systems A and B states that \({S_{{\text{A}},{\text{B}}}} \oplus {S_{{\text{B,A}}}}=0\), or equivalently, \({S_\infty }_{{{\text{A}},{\text{B}}}}+{S_\infty }_{{{\text{B,A}}}}=0\), thus \(\partial {S_\infty }_{{{\text{A}},{\text{B}}}}= - \partial {S_\infty }_{{{\text{B,A}}}}\). Also, the conservation of energy requires \(\partial {U_{\text{A}}}= - \partial {U_{\text{B}}}\), hence,

Finally, the third postulate states: If a system is stationary for a reference frame O, it will be stationary for all reference frames that are stationary for O.

Relativity of velocity

Here we revisit and discuss the postulates of special relativity70: (1) First postulate (principle of relativity): The laws of physics take the same form in all inertial frames of reference; (2) Second postulate (invariance of c): the speed of light in free space has the same value in all inertial frames of reference; and (3) Third postulate, which has been added to express the necessity of the existence of stationarity. We show the surprising result that they are parallel and essentially identical to those of thermodynamics.

First postulate: all frames of reference are equivalent

Inertial reference frames are systems with a constant bulk velocity. Observers define systems in different reference frames, which here are considered to be inertial. The term inertial here is identical to “stationary” but referring to the velocity space. Thus, throughout the paper we can characterize these frames as stationary, and examine entropy and velocity in a unified framework.

The observation of a system by an observer in another system requires the connection between those two systems and the mutual exchange of information. The underlying assumption of this postulate is that observers can connect and exchange information. Once a connection is made between two reference systems, a rule of addition between the respective stationary velocities applies. The addition rule follows the properties of commutativity and associativity, that is, symmetry and transitivity, respectively, which are required for expressing the connection among reference frames. Given the addition rule \({V_{{\text{A}} \oplus {\text{B}}}}=f({V_{\text{A}}},{V_{\text{B}}})\), the velocity of B measured from A, \({V_{{\text{B,A}}}}\), and its inverse, the velocity of A measured from B, \({V_{{\text{A,B}}}}\), are connected with \(0=f({V_{{\text{A,B}}}},{V_{{\text{B,A}}}})\) (symmetry), while \({V_{{\text{A,B}}}}\) is connected with the velocities of A and B measured from O with \({V_{{\text{A,O}}}}=f({V_{{\text{A,B}}}},{V_{{\text{B,O}}}})\) (transitivity).

Consider two originally independent systems A and B, with velocities VA and VB, respectively, as measured by a third system O. The addition of velocities requires the exchange of information through some connection, eventually reaching a stationary state, where the velocity of the whole system, \({V_{{\text{A}} \oplus {\text{B}}}}\), is also stationary. For now, we do not focus on any particular addition rule, i.e., this may be Galilean, relativistic, or even more broadly defined. In general, the addition rule provides the relationship \({V_{{\text{A}} \oplus {\text{B}}}}=f({V_{\text{A}}},{V_{\text{B}}})\), where the 2-D symmetric function f(x,y) forms a mathematical group on the set of velocities: (i) Closure: For any two velocities VA and VB belonging to the set of possible velocities ΩV, their addition VA⨁B belongs also to ΩV; in fact ΩV is bounded, since their measure, the speed, is given by 0 ≤ V ≤ c, where c denotes the upper limit of speed values, the speed of light in vacuum, i.e., \(0 \leqslant f({V_{\text{A}}},{V_{\text{B}}}) \leqslant c\). (ii) Identity: if VB = 0, then for any VA, VA⨁B = VA, or \(f({V_{\text{A}}},0)={V_{\text{A}}}\). (iii) Inverse: for any VA, there exists its inverse element with velocity \({\bar {V}_{\text{A}}}\), for which \(f({V_{\text{A}}},{\bar {V}_{\text{A}}})=0\). (iv) Associativity: The velocity of A measured by B, \({V_{{\text{A,B}}}}\), is expressed by the addition function of the velocity of A measured by C, \({V_{{\text{A,C}}}}\), and the velocity of C measured by B, \({V_{{\text{C,B}}}}\), i.e., \({V_{{\text{A,B}}}}=f({V_{{\text{A,C}}}},{V_{{\text{C,B}}}})\). (v) Commutativity: The group is abelian, since the addition function is symmetric, \(f({V_{\text{A}}},{V_{\text{B}}})=f({V_{\text{B}}},{V_{\text{A}}})\). (vi) Finally, the existence of a fixed and invariant speed c requires \(f({V_{\text{A}}},c)=c\) for any VA.
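Properties (i) to (vi) hold for any admissible addition function f. As one concrete instance, the sketch below checks them for the addition rule of Einstein’s special relativity, which is an assumed choice made here only for illustration; units with c = 1 and the test velocities are likewise arbitrary.

```python
import math

C = 1.0  # speed of light in units with c = 1 (assumption for illustration)

def add_velocities(v_a, v_b, c=C):
    """Einstein's velocity addition, used here as one example of f(V_A, V_B)."""
    return (v_a + v_b) / (1.0 + v_a * v_b / c**2)

def inverse_velocity(v):
    """Inverse element for this particular rule: f(V, -V) = 0."""
    return -v

v_a, v_b, v_c = 0.3, 0.5, -0.8

# (i) closure: the result stays within the allowed range of speeds
assert abs(add_velocities(v_a, v_b)) <= C
# (ii) identity and (iii) inverse
assert add_velocities(v_a, 0.0) == v_a
assert add_velocities(v_a, inverse_velocity(v_a)) == 0.0
# (iv) associativity
left = add_velocities(add_velocities(v_a, v_b), v_c)
right = add_velocities(v_a, add_velocities(v_b, v_c))
assert math.isclose(left, right)
# (v) commutativity
assert add_velocities(v_a, v_b) == add_velocities(v_b, v_a)
# (vi) fixed and invariant speed: f(V_A, c) = c
assert math.isclose(add_velocities(v_a, C), C)
```

For this rule the inverse element is simply −V; the text that follows stresses that a more general addition rule need not share this feature.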

We note two key things in this development. First, the symmetry of the general addition rule, \(0=f({V_{{\text{A,B}}}},{V_{{\text{B,A}}}})\), does not necessarily lead to \({V_{{\text{B,A}}}}= - {V_{{\text{A,B}}}}\), as it does in the case of Einstein’s special relativity (indeed, if \((V+u)/(1+Vu/{c^2})=0\), then V = –u). Still, they are mutually inverse values, \({V_{{\text{A,B}}}}={\bar {V}_{{\text{B,A}}}}\) or \({V_{{\text{B,A}}}}={\bar {V}_{{\text{A,B}}}}\), but with the associated inverse element definition as given above. Second, both the addition of entropies (H-partitioning) and the addition of velocities form a mathematical group on their allowable set of values71.

Therefore, the first postulate is as follows: There is no absolute reference frame in which speed is zero. On the contrary, the velocities are connected with the properties of symmetry and transitivity, broadly defined under a general addition rule that forms a mathematical group on the set of allowable speeds.

Second postulate: existence of a fixed and invariant speed, upper limit of speed values

Einstein’s second postulate of relativity is that the speed of light is fixed (i.e., constant for all times) and invariant (i.e., constant for all observers). Throughout this paper, we denote this speed by c; however, the essence of this postulate is the existence of a nonzero, finite speed, fixed and invariant for all times and observers, and not specifically that c is the speed of light in a vacuum. This is because neither the nature of light nor the value of the particular fixed speed is involved in the formalism of relativity. Specifically, in the speed addition rule, there is no requirement that the involved fixed speed c refers to the speed of light, but only that this speed c is fixed and invariant. Indeed, adding u = c to an arbitrary speed V results in the same speed c. Nevertheless, such a fixed and invariant speed, if it exists, is also the maximum speed; this is a consequence, and not a requirement, of the postulate.

Therefore, the only requirement of the postulate is the existence of a nonzero, finite speed, c, fixed and invariant in time and among all observers. Below, we explain these terms; (all the involved velocities are considered in the same direction, for simplicity).

Fixed velocity (constant for all times). A fixed velocity, V*, is a stable fixed-point solution of the velocity addition rule. To explain the terms, let the addition rule between two arbitrary velocities, VA and VB, be \({V_{{\text{A}} \oplus {\text{B}}}}=f({V_{\text{A}}},{V_{\text{B}}})\); now consider the case of sequential additions of the speed fluctuation δui added to the velocity Vi, with i numbering the iterated addition, \({V_{i+1}}=f({V_i},\delta {u_i})\). The term “fixed-point” of the velocity Vi means \({V_*}=f({V_*},\delta {u_i})\), independently of the value of \(\delta {u_i}\). Then, the term “stable” characterizes the type of stability of the fixed point. A stable fixed point “attracts” the iterated velocity, leading eventually to smaller deviations: \(\left| {{V_{i+1}} - {V_*}} \right|<\left| {{V_i} - {V_*}} \right|\). Therefore, the stable fixed point can be approached but not reached, i.e., there is no finite iteration step i = j, for which \(\left| {{V_j} - {V_*}} \right|=0\). On the other hand, the stability allows the existence of elements with velocity equal to the fixed point at all the iterations (eternally), i.e., if for some i = j, there is \(\left| {{V_j} - {V_*}} \right|=0\), the same holds for all i’s, from i = 0 and beyond. For instance, the addition rule in Einstein’s special relativity gives the iterated velocity values \({V_{i+1}}=f({V_i},\delta {u_i})=({V_i}+\delta {u_i})/(1+{V_i}\delta {u_i}/{c^2})\), thus, the stable fixed velocity is given by \({V_*}=f({V_*},\delta {u_i})=({V_*}+\delta {u_i})/(1+{V_*}\delta {u_i}/{c^2})\), leading to \({V_*}=c\); namely, the speed of light c involved in the relativity addition rule provides the fixed velocity.

Invariant velocity (constant for all observers). To understand the invariance of velocities, we need first to set how different velocities can be connected (third postulate). The velocity can be measured by different observers, whose connection is subject to a rule of addition of velocities. In particular, the velocity of A measured by B, \({V_{{\text{A,B}}}}\), is a function of the velocity of A measured by C, \({V_{{\text{A,C}}}}\), and the velocity of C measured by B, \({V_{{\text{C,B}}}}\), i.e., \({V_{{\text{A,B}}}}=f({V_{{\text{A,C}}}},{V_{{\text{C,B}}}})\). Once we realize that the velocity connection is determined by an addition rule, the invariant velocity can be determined in a way similar to the fixed velocity. Namely, the velocity of A is the same for any observer, B or C, \({V_{{\text{A,B}}}}={V_{{\text{A,C}}}}\); setting this as \({V_{{\text{A,B}}}}={V_{{\text{A,C}}}} \equiv {V_*}\), we can find \({V_*}\) from \({V_*}=f({V_*},{V_{{\text{C,B}}}})\), which leads to the value of \({V_*}\), independently of the value of \({V_{{\text{C,B}}}}\). Again, Einstein’s relativity leads to \({V_*}=c\), thus, as expected, the speed c involved in the relativity addition rule is invariant for all the observers.
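The notions of fixed and invariant velocity can be made concrete with a small iteration. The sketch below assumes Einstein’s addition rule in units with c = 1 and a constant increment δu; these choices, as well as the function names, are illustrative assumptions.

```python
import math

C = 1.0  # units with c = 1 (assumption for illustration)

def add_velocities(v, u, c=C):
    """Einstein's addition rule f(V, u)."""
    return (v + u) / (1.0 + v * u / c**2)

def iterate_velocity(v0, du, steps, c=C):
    """Sequential additions V_{i+1} = f(V_i, du); returns the whole trajectory."""
    path = [v0]
    for _ in range(steps):
        path.append(add_velocities(path[-1], du, c))
    return path

# Fixed and invariant: V* = c satisfies V* = f(V*, u) for any u with |u| < c,
# whether u is read as an increment in time or as the relative velocity V_CB
for u in (-0.5, 0.01, 0.5, 0.9):
    assert math.isclose(add_velocities(C, u), C)

# Stability: iterates starting below c approach c but stay below it
path = iterate_velocity(v0=0.0, du=0.1, steps=40)
assert all(a < b < C for a, b in zip(path, path[1:]))
```

A body that starts exactly at V = c remains there at every iteration, whereas one that starts below c only approaches it, in line with the distinction drawn above.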

The velocity provides information for both speed and direction, and thus, the fixed and invariant velocity allows for different fixed and invariant speed values in the positive and negative directions, giving insights for the anisotropic adaptation of relativity; (to be examined in Section "Anisotropic relativity"). The reasoning behind the existence of a fixed and invariant speed is the existence of a speed limit, and in particular, an upper limit, which can be approached but not reached. (The fixed and invariant speed constitutes an upper and not a lower limit, because the zero speed always exists as a possibility.) Thus, a body with speed V less than the fixed and invariant speed c, may approach, but never reach this limit speed, V < c; on the other hand, natural elements with this speed (e.g., photons in vacuum), will remain with this speed, eternally, V = c.

Therefore, we restate the second postulate as follows: There exists a nonzero, finite speed, which remains fixed (i.e., constant for all times) and invariant (i.e., constant for all observers), thus it has the same value in all inertial frames of reference and constitutes the upper limit of any speed.

Third postulate: existence of stationary velocities

Stationarity is considered a trivial condition in Einstein’s special relativity and was not included explicitly as a postulate. However, it is a requirement that restricts the generality of the addition rule that can apply to velocities.

If a system is stationary according to an inertial observer, it will be stationary for all inertial observers. In particular, if the velocity of A measured from observer B is stationary, i.e., \({V_{{\text{A,B}}}}\) = constant, then it will be stationary for any other stationary observer, e.g., an observer C with stationary velocity as measured from B, \({V_{{\text{C,B}}}}\) = constant, i.e., C will also observe a stationary velocity for A, \({V_{{\text{A,C}}}}\) = constant. This property of the existence of stationarity for all observers leads to the H-partitioning or kappa addition described by Eq. (3).

Indeed, the generalized partitioning in Eq. (3) characterizes any physical quantity with the properties of entropy defect, i.e., symmetry, separability, and boundedness1,2, or the existence of stationary entropy values58,60; therefore, it applies to both entropy and velocity. Then, the most general addition function f is described through the H-partitioning, that is,

$$H({V_{{\text{A}} \oplus {\text{B}}}})=H({V_{\text{A}}})+H({V_{\text{B}}}) - \tfrac{1}{\kappa }H({V_{\text{A}}}) \cdot H({V_{\text{B}}}), \tag{10}$$

where the partitioning function H falls under the properties discussed in Section "Entropy defect – general formulation".

The question that arises now is whether the velocity addition of Einstein’s special relativity can be carried out simply through the kappa addition of Eq. (10). Indeed, by selecting the partitioning function

$$H(V)=V/(1+\tfrac{1}{{2\kappa }}V), \tag{11}$$

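and substituting in Eq. (10), we end up with (see Supplementary, Section B.1):

$${V_{{\text{A}} \oplus {\text{B}}}}=\frac{{{V_{\text{A}}}+{V_{\text{B}}}}}{{1+\tfrac{1}{{4{\kappa ^2}}}{V_{\text{A}}}{V_{\text{B}}}}}. \tag{12}$$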

Then, we derive kappa as a function of the corresponding invariant and fixed speed c. This can be found by setting \({V_{{\text{A}} \oplus {\text{B}}}}={V_{\text{A}}}=c\) in the addition rule of Eq. (12), leading to

$$\kappa =\tfrac{1}{2}c.$$

Therefore, Eq. (10) includes the standard velocity addition of Einstein’s special relativity.
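This reduction can be verified numerically. The sketch below assumes the partitioning function H(V) = V/(1 + V/(2κ)) with κ = c/2, applies the kappa addition in H-space, and maps back with the inverse function; the helper names and test values are hypothetical.

```python
import math

def H(v, kappa):
    """Partitioning function for velocities: H(V) = V / (1 + V/(2*kappa))."""
    return v / (1.0 + v / (2.0 * kappa))

def H_inv(h, kappa):
    """Inverse of H: V = h / (1 - h/(2*kappa))."""
    return h / (1.0 - h / (2.0 * kappa))

def kappa_add_velocity(v_a, v_b, kappa):
    """Add in H-space with the kappa rule, then map back to a velocity."""
    h_a, h_b = H(v_a, kappa), H(v_b, kappa)
    h_ab = h_a + h_b - h_a * h_b / kappa
    return H_inv(h_ab, kappa)

def einstein_add(v_a, v_b, c):
    """Standard relativistic velocity addition, for comparison."""
    return (v_a + v_b) / (1.0 + v_a * v_b / c**2)

c = 1.0          # units with c = 1 (assumption for illustration)
kappa = c / 2.0  # relation between kappa and the invariant speed

for v_a, v_b in [(0.6, 0.7), (0.1, -0.4), (0.9, 0.9)]:
    assert math.isclose(kappa_add_velocity(v_a, v_b, kappa), einstein_add(v_a, v_b, c))

# The invariant speed is 2*kappa for any kappa: f(2*kappa, V_B) = 2*kappa
for k in (0.5, 2.0):
    assert math.isclose(kappa_add_velocity(2.0 * k, 0.3 * k, k), 2.0 * k)
```

The last check shows that the value of κ only sets the scale of the invariant speed, so identifying that speed with c fixes κ = c/2.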

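We can derive the corresponding extensive measure of velocity, V∞, following the formulation given by Eq. (5), and apply the specific partitioning function H given by Eq. (11). We find,

$${V_\infty }= - \kappa \cdot \ln [1 - \tfrac{1}{\kappa }H(V)]=\tfrac{1}{2}c \cdot \ln \frac{{1+V/c}}{{1 - V/c}}=c \cdot {\tanh ^{ - 1}}(V/c).$$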

We note that this is the so-called rapidity, a commonly used additive measure of velocity (e.g., Refs.72,73), but here we showed how it can be naturally derived within the context of thermodynamic relativity.

The connection of rapidity with kinetic energy is identical with the relationship between extensive entropy and internal energy, \(\partial {S_\infty }/\partial U=1/T\), as shown in Eqs. (7,8), i.e.,

where the standard energy momentum relativity equations have been used, \(E=\gamma {E_0}\), \(pc=\beta \gamma {E_0}\). (We mention again that, for simplicity, the paper takes the velocity and momentum as 1-dimensional, i.e., along the direction of motion.) This similarity between the extensive measures of entropy and velocity and their relationship with energy was inspiring for the development of thermodynamic relativity.
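The analogy between \(\partial {S_\infty }/\partial U=1/T\) and the kinematic relation \(\partial {V_\infty }/\partial E=1/p\) can be checked numerically. The sketch below assumes units with c = 1 and a unit rest energy E0, takes κ = c/2 with H(V) = V/(1 + V/(2κ)), and uses a finite difference in place of the analytic derivative; all names and values are illustrative assumptions.

```python
import math

C = 1.0          # units with c = 1 (assumption)
KAPPA = C / 2.0  # kappa corresponding to the invariant speed c
E0 = 1.0         # rest energy in arbitrary units (assumption)

def H(v):
    """Partitioning function for velocities."""
    return v / (1.0 + v / (2.0 * KAPPA))

def rapidity(v):
    """Extensive measure of velocity: V_inf = -kappa * ln(1 - H(V)/kappa)."""
    return -KAPPA * math.log(1.0 - H(v) / KAPPA)

def energy(v):
    """E = gamma * E0."""
    return E0 / math.sqrt(1.0 - (v / C) ** 2)

def momentum(v):
    """p = beta * gamma * E0 / c."""
    return (v / C) * energy(v) / C

v = 0.6
# The extensive measure coincides with the usual rapidity c * artanh(V/c)
assert math.isclose(rapidity(v), C * math.atanh(v / C))

# It is additive under relativistic velocity addition
u = 0.3
v_sum = (v + u) / (1.0 + v * u / C**2)
assert math.isclose(rapidity(v_sum), rapidity(v) + rapidity(u))

# Finite-difference check of dV_inf/dE = 1/p
h = 1e-6
d_rapidity = rapidity(v + h) - rapidity(v - h)
d_energy = energy(v + h) - energy(v - h)
assert math.isclose(d_rapidity / d_energy, 1.0 / momentum(v), rel_tol=1e-6)
```

In this reading the momentum p plays the role that the temperature T plays for entropy, which is the correspondence the text draws on.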

Following the symmetry and transitivity properties of both entropies and velocities, we arrive at equations similar to Eqs. (8,9). Namely, between two connected systems A and B, we have \({V_{{\text{A}},{\text{B}}}} \oplus {V_{{\text{B,A}}}}=0\), or equivalently, \({V_\infty }_{{{\text{A}},{\text{B}}}}+{V_\infty }_{{{\text{B,A}}}}=0\), thus \(\partial {V_\infty }_{{{\text{A}},{\text{B}}}}= - \partial {V_\infty }_{{{\text{B,A}}}}\). Also, the conservation of energy requires \({E_{\text{A}}}+{E_{\text{B}}}=const.\), or \(\partial {E_{\text{A}}}= - \partial {E_{\text{B}}}\), hence,

with a common momentum absolute value, given by \(p=\left| {{p_{{\text{A}},{\text{B}}}}} \right|=\left| {{p_{{\text{B,A}}}}} \right|\).

We note that variations of the particle number N and/or the volume V are not part of the thermal equilibrium approach; of course, once N and/or V are not fixed, they are involved in the first law of thermodynamics, i.e., \(dU=TdS+\mu dN - Pd{\text{V}}\), where μ and P stand for the chemical potential and pressure, respectively. In the same way, variations of positions are not involved in the stationarity approach of relativistic kinematics, but once the potential energy Φ is taken into account, the positions are involved in the energy equation, i.e., \(dE=pdV+d\Phi (x)\). Thus, in order to describe the nature of kinematic and thermodynamic stationary states, there is no need for the size (N,V) and positional (x) variables to vary. Since we describe the common physical framework of entropy and velocity, we eliminate all of the other factors from the analysis and the energy variation and simply compare the two equations: \(dU=TdS\) with \(dE=pdV\), or \({\left( {\partial {S_\infty }/\partial U} \right)_{size}}=1/T\) with \({\left( {\partial {V_\infty }/\partial E} \right)_{size}}=1/p\).

Moreover, we must delineate the role of the third postulate compared to the first two: (1) The third postulate does not require the existence of an immobile reference frame; however, it also does not state whether such a frame actually exists or not; indeed, the classical perception of aether is to be immobile, i.e., the reference frame of zero velocity and entropy. It is the first postulate that states that this privileged frame does not exist. (2) The third postulate allows for the existence of a fixed and invariant speed; however, this does not mean that such a velocity necessarily exists. The second postulate states that this exists.

Therefore, we state the third postulate as follows: If a system is stationary for a reference frame O, it will be stationary for all reference frames being stationary for O.

Relativity of entropy and velocity – a unified framework of thermodynamics and kinematics

Motivation

It is remarkable that the relativity concepts for entropy and velocity are based on three identical postulates: (1) no privileged reference frame with zero value; (2) existence of an invariant and fixed value for all frames; and (3) existence of stationarity. Hereafter, we use the term reference frame to encompass both the kinematic reference frame of measuring another system’s motion and the thermodynamic reference frame of measuring another system’s entropy.

Any comparison of observations between reference frames requires a connection, through which information can be exchanged. We have shown that the H-partitioning via the kappa addition supplies the connection, which is characterized by the properties of symmetry and transitivity that underlie the zeroth law of thermodynamics. Thus, the postulates and the H-partitioning (derived from the postulates) are identical for both entropies and velocities, and hereafter we use a common formulation, expressed in terms of the variable x = S or V.

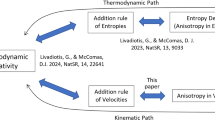

The kappa addition for entropies and velocities is given by the H-partitioning, shown in Eq. (3) and Eq. (10), for any well-defined function H, described by the properties set in Section "Entropy defect – general formulation"; in both cases the kappa addition forms a mathematical group on their allowable set of values. Table 1 summarizes the characteristics of H-partitioning. Given a well-defined partitioning function H, we can formulate a kappa-addition rule that applies to entropies or velocities, and then construct generalized schemes of nonlinear relativity. In this paper, we develop an anisotropic version of linear relativity (i.e., corresponding to a linear Lorentz transformation) as just one example.

The partitioning functions for entropies and velocities are \(H(x)=x\) and \(H(x)=x/(1+\tfrac{1}{{2\kappa }}x)\), respectively; both cases can be written using a parameter, a, under the scheme \({H_a}(x)=x/(1+\tfrac{a}{\kappa }x)\), where \(a=0\) characterizes entropies with the addition rule of the standard entropy defect (nonextensive thermodynamics), while \(a=1/2\) characterizes velocities with the relativistic addition rule (Einstein’s special relativity).
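The one-parameter family Ha can be explored with a few lines of code. The sketch below implements the kappa addition for a general a and checks that a = 0 and a = 1/2 reproduce the entropy-defect rule and the relativistic velocity rule (with invariant speed 2κ), respectively; the function names and the numerical values are assumptions made for illustration.

```python
import math

def H_a(x, a, kappa):
    """Partitioning function H_a(x) = x / (1 + a*x/kappa)."""
    return x / (1.0 + a * x / kappa)

def H_a_inv(h, a, kappa):
    """Inverse of H_a: x = h / (1 - a*h/kappa)."""
    return h / (1.0 - a * h / kappa)

def kappa_add(x_a, x_b, a, kappa):
    """kappa-addition of x_a and x_b under the partitioning function H_a."""
    h_a, h_b = H_a(x_a, a, kappa), H_a(x_b, a, kappa)
    return H_a_inv(h_a + h_b - h_a * h_b / kappa, a, kappa)

kappa = 5.0
x_a, x_b = 1.0, 2.0

# a = 0: standard entropy defect, x_A + x_B - x_A*x_B/kappa
assert math.isclose(kappa_add(x_a, x_b, 0.0, kappa), x_a + x_b - x_a * x_b / kappa)

# a = 1/2: relativistic addition with invariant speed c = 2*kappa
c = 2.0 * kappa
assert math.isclose(kappa_add(x_a, x_b, 0.5, kappa), (x_a + x_b) / (1.0 + x_a * x_b / c**2))

# Any a in between gives an intermediate rule that keeps identity and commutativity
a = 0.25
assert math.isclose(kappa_add(x_a, 0.0, a, kappa), x_a)
assert math.isclose(kappa_add(x_a, x_b, a, kappa), kappa_add(x_b, x_a, a, kappa))
```

Keeping a as an explicit argument makes it easy to scan intermediate values between the two limiting cases.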

We note that one may check that if we chose, instead of \(H(x)=x\), its inverse (in terms of κ-addition), i.e., \(H(x)=\bar {x}= - x/(1 - \tfrac{1}{\kappa }x)\), we would end up with the same addition rule, because \({S_{{\text{A}} \oplus {\text{B}}}}={S_{\text{A}}}{ \oplus _\kappa }{S_{\text{B}}}\) and \({\bar {S}_{{\text{A}} \oplus {\text{B}}}}={\bar {S}_{\text{A}}}{ \oplus _\kappa }{\bar {S}_{\text{B}}}\) are identical; this choice is not permissible (since H < 0), but it is interesting that its functional form is similar to \({H_a}(x)\). We also recall that the double inverse returns the identity, i.e., \(\bar {\bar {x}}=x\); this is true as long as the operations are characterized by the same kappa. It is interesting to consider the case where the second inverse operation may act with a different kappa.

The choice of focusing on the particular partitioning function \(H(x)={H_a}(x)\) has one additional important motivation. When it comes to relativity for velocities, x = V, the partitioning leads to the proportionality \(1 - \tfrac{1}{\kappa }H(V^{\prime}) \propto [1 - \tfrac{1}{\kappa }H(V)]\) (Table 1, #4), where V and V΄ denote the velocities of a body measured in two different inertial reference frames. Then, the corresponding Lorentz transformation of spacetime coordinates is linear only when the relationship between the velocities is a rational linear function (at its greatest complexity), i.e., \(V^{\prime}=({a_1}V+{a_2})/({a_3}V+{a_4})\). This is true only when H is also a rational linear function. Also considering the properties of the partitioning function H (Section "Entropy defect – general formulation"), we end up with \({H_a}(x)\) as the most general partitioning function consistent with a linear Lorentz transformation.

Anisotropic relativity

We consider the case where the kappa is different in positive and negative entropies. Recall that negative entropy, \(\bar {S}\), is defined as the quantity that when added to an entropy, returns zero, \(S{ \oplus _\kappa }\bar {S}=0\); thus, if \({S_{{\text{B,A}}}}\) is the entropy of B measured by A, then its inverse, \({S_{{\text{A,B}}}}\), is the entropy of A measured by B.

Since there is no absolute entropic zero, and entropy is relative rather than absolute, there are no meaningful positive or negative absolute entropic values. Instead, there are positive or negative relative entropic values; namely, \({S_{{\text{A,B}}}}>0\) means that the entropy of A is larger than that of B, as measured by any other reference frame O, \({S_{{\text{A,O}}}}>{S_{{\text{B,O}}}}\). On the other hand, its inverse would be \({S_{{\text{B,A}}}}<0\), i.e., again \({S_{{\text{A,O}}}}>{S_{{\text{B,O}}}}\), for any O. We define the “positive direction” to be the one corresponding to ΔS > 0, e.g., in the previous example, the observation of A from B that corresponds to \({S_{{\text{A,B}}}}>0\); similarly, we call the “negative direction” its inverse, corresponding to ΔS < 0, e.g., the observation of B from A that corresponds to \({S_{{\text{B,A}}}}<0\).

The concepts of positive and negative directions are the same for entropy and velocity, but they are perhaps more easily understood for velocities than for entropies. For both velocities and entropies, there is no “absolute direction” in the universe to be assigned as the positive direction, or its inverse as the negative direction, independent of observers. Rather, the concept of direction is itself relativistic, not absolute. When the distance of a body B, as measured from a reference frame A, increases, this defines the positive direction of velocity with respect to A. At the same time, the distance of A increases with respect to the reference frame of B. The inverse direction defines the negative direction. In the negative direction with respect to A, the distance of B should decrease with a negative relative velocity, \({\bar {V}_{{\text{B,A}}}}<0\); similarly, in the negative direction with respect to B, the distance of A should decrease with negative relative velocity, \({\bar {V}_{{\text{A,B}}}}<0\). When the velocity \({V_{{\text{B,A}}}}\) is positive (or negative), the velocity of B is larger (or smaller) than the velocity of A, as measured from any observer O. The same characteristics identify the positive and negative directions for the entropic values. In particular, the entropy of B as measured from A can be positive, assigning the positive direction, \({S_{{\text{B,A}}}}>0\), or negative, \({\bar {S}_{{\text{B,A}}}}<0\), assigning the inverse, negative direction. When the entropy \({S_{{\text{B,A}}}}\) is positive (negative), the entropy of B is larger (smaller) than the entropy of A, as measured from any observer O. (See Fig. 2).

Next, we ask: what is the upper limit of the entropic values in each of the two directions? Given \(H(x)\) and setting \(0=H(0)=H(x)+H(\bar {x}) - \tfrac{1}{\kappa }H(x)H(\bar {x})\), we find

$$H(\bar {x})= - \frac{{H(x)}}{{1 - \tfrac{1}{\kappa }H(x)}},$$

from which we can extract the expression of the inverse, \(\bar {x}(x)={H^{ - 1}}\{ - H(x)/[1 - \tfrac{1}{\kappa }H(x)]\}\). Then, we use this relation to find the upper limit of \(\left| {\bar {x}} \right|\), given the upper limit of \(x \leqslant {H^{ - 1}}(\kappa )\). We examine three cases, (1) Relativity for entropy, (2) Relativity for velocity, and (3) Relativity for both entropy and velocity.

(1) Relativity for entropy, x = S (nonextensive thermodynamics).

The partitioning function is \(H(x)=x\), thus \(H(x)=x \leqslant \kappa\) or \(x \leqslant \kappa\) is the upper limit in the positive direction. However, \(H(\bar {x})=\bar {x}= - x/(1 - \tfrac{1}{\kappa }x)\), leading to \(\left| {\bar {x}} \right|=x/(1 - \tfrac{1}{\kappa }x) \leqslant +\infty\); namely, there is no upper limit in the negative direction.

(2) Relativity for velocity, x = V (Einstein’s special relativity).

The partitioning function is \(H(x)=x/(1+\tfrac{1}{{2\kappa }}x)\), thus, \(H(x)=x/(1+\tfrac{1}{{2\kappa }}x) \leqslant \kappa\) or \(x \leqslant 2\kappa\) provides the upper limit in the positive direction. Also, \(H(\bar {x})=\bar {x}/(1+\tfrac{1}{{2\kappa }}\bar {x})= - x/(1 - \tfrac{1}{{2\kappa }}x)\), hence, \(\bar {x}= - x\), or \(\left| {\bar {x}} \right|=x \leqslant 2\kappa\) provides the upper limit in the negative direction; namely, the upper limit in both directions is the same; Einstein’s special relativity is isotropic74.

(3) Relativity for entropy and velocity, x = S or V (a possible description of anisotropic relativity).

We start with the partitioning function \({H_a}(x)\), that is,

$${H_a}(x)=x/(1+\tfrac{a}{\kappa }x),$$(17)

which recovers the previous two cases for a = 0 and a = 1/2, respectively. Then, \({H_a}(x)=x/(1+\tfrac{a}{\kappa }x) \leqslant \kappa\) or \(x \leqslant \tfrac{1}{{1 - a}}\kappa\) is the upper limit in the positive direction, while \({H_a}(\bar {x})=\bar {x}/(1+\tfrac{a}{\kappa }\bar {x})= - x/(1+\tfrac{{a - 1}}{\kappa }x)\) gives \(\bar {x}= - x/(1 - \tfrac{{1 - 2a}}{\kappa }x)\), so that \(\left| {\bar {x}} \right|=x/(1 - \tfrac{{1 - 2a}}{\kappa }x) \leqslant \tfrac{\kappa }{a}\) is the upper limit in the negative direction. Therefore, there are two kappa values, characterizing the upper limits of entropy and velocity in the positive and negative directions. Let κ1 and κ2 be these upper limits, respectively, i.e., for \(0<x\): \(x<{\kappa _1}\), and for \(\bar {x}<0\): \(\left| {\bar {x}} \right|<{\kappa _2}\). Then, the limits are equal to:

$$x<{\kappa _1} \equiv \tfrac{1}{{1 - a}}\kappa \, \text{and} \,\left| {\bar {x}} \right|<{\kappa _2} \equiv \tfrac{1}{a}\kappa.$$(18)
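The three cases above can be checked numerically. The following is a minimal sketch, assuming only Eq. (17) and the zero condition \(H(x)+H(\bar {x}) - \tfrac{1}{\kappa }H(x)H(\bar {x})=0\); the function names (`H_a`, `H_a_inv`, `inverse_value`, `directional_limits`) are hypothetical and chosen here for illustration.

```python
import math


def H_a(x, kappa, a):
    """Partitioning function of Eq. (17): H_a(x) = x / (1 + a*x/kappa)."""
    return x / (1.0 + a * x / kappa)


def H_a_inv(h, kappa, a):
    """Inverse of Eq. (17): solves h = x / (1 + a*x/kappa) for x."""
    return h / (1.0 - a * h / kappa)


def inverse_value(x, kappa, a):
    """Return x_bar such that H(x) + H(x_bar) - H(x)*H(x_bar)/kappa = 0."""
    h = H_a(x, kappa, a)
    h_bar = -h / (1.0 - h / kappa)
    return H_a_inv(h_bar, kappa, a)


def directional_limits(kappa, a):
    """Upper limits (kappa1, kappa2) of Eq. (18); kappa2 is infinite for a = 0."""
    kappa1 = kappa / (1.0 - a)
    kappa2 = math.inf if a == 0 else kappa / a
    return kappa1, kappa2


if __name__ == "__main__":
    kappa = 1.0  # illustrative value, not taken from the text
    for a in (0.0, 0.25, 0.5):
        k1, k2 = directional_limits(kappa, a)
        x = 0.5 * k1  # a value halfway to the positive-direction limit
        print(a, k1, k2, inverse_value(x, kappa, a))
```

For a = 1/2 the inverse reduces to \(\bar {x}= - x\) (isotropic case), while for a = 0 the magnitude of the inverse grows without bound as x approaches κ.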

The measurable value of the upper limit should be given by the mean of the two directional limits. This departs from Einstein’s synchronization convention, which assumes that the one-way speed is equal to the two-way speed; however, none of the experimental predictions of the theory depends on this convention (e.g., Ref.75). The inverse kappa measures the correlations76,77, and thus, the mean value of the upper limits is given by the harmonic mean, i.e.,

$$\frac{1}{{{\kappa _{\rm O}}}} \equiv \tfrac{1}{2}\left( {\frac{1}{{{\kappa _1}}}+\frac{1}{{{\kappa _2}}}} \right)=\frac{1}{{2\kappa }}\, \text{or} \,{\kappa _{\rm O}}=2\kappa.$$(19)

The anisotropy is materialized by the nonzero difference of these two upper limits, i.e.,

while the product of the two limits gives

$${\kappa _1}{\kappa _2}=\frac{{{\kappa ^2}}}{{a(1 - a)}}.$$

As shown in Table 1, the parameter κ is included in the development of this systematic formalism. Hereafter, the original parameterization in terms of κ and a can be substituted by the directional kappa parameters κ1 and κ2, using Eq. (19) (to solve for κ) and Eq. (20) (to solve for a). For instance, the particular partitioning function \({H_a}(x)\) in Eq. (17) is now expressed as

$${H_a}(x)=x/(1+\tfrac{1}{{{\kappa _2}}}x),$$(22a)

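while the extensive measure \(1 - \tfrac{1}{\kappa }H(x)={e^{ - \tfrac{1}{\kappa } \cdot {x_\infty }}}\) depends on both directional kappa values,

$$1 - \tfrac{1}{\kappa }{H_a}(x)=\frac{{1 - \tfrac{1}{{{\kappa _1}}}x}}{{1+\tfrac{1}{{{\kappa _2}}}x}}.$$(22b)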

We recall that entropy S and velocity V can be denoted by a common symbol, x, and we use this symbol throughout this section. The limiting symbols \(\{ {\kappa _1},{\kappa _2},{\kappa _{\rm O}}\}\) refer to both entropy and velocity, unless we focus specifically on the relativity of velocity and use \(\{ {\kappa _1},{\kappa _2},{\kappa _{\rm O}}\} \to \{ {c_1},{c_2},{c_{\rm O}}\}\).
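The change of parameterization from (κ, a) to (κ1, κ2) can be illustrated with a short sketch. It assumes Eq. (18) for the directional limits; the inverse map, κ = κ1κ2/(κ1 + κ2) and a = κ1/(κ1 + κ2), is obtained here by solving Eq. (18) and is not quoted from the text. All function names are hypothetical.

```python
import math


def to_directional(kappa, a):
    """(kappa, a) -> (kappa1, kappa2) following Eq. (18); requires 0 < a < 1."""
    return kappa / (1.0 - a), kappa / a


def from_directional(kappa1, kappa2):
    """(kappa1, kappa2) -> (kappa, a), found by inverting Eq. (18) (assumption)."""
    kappa = kappa1 * kappa2 / (kappa1 + kappa2)
    a = kappa1 / (kappa1 + kappa2)
    return kappa, a


def harmonic_mean_limit(kappa1, kappa2):
    """Harmonic mean of the two directional upper limits."""
    return 2.0 / (1.0 / kappa1 + 1.0 / kappa2)


def H_directional(x, kappa2):
    """Partitioning function written with kappa2 only: x / (1 + x/kappa2)."""
    return x / (1.0 + x / kappa2)


def extensive_measure(x, kappa1, kappa2):
    """1 - H(x)/kappa written with both directional limits."""
    return (1.0 - x / kappa1) / (1.0 + x / kappa2)


if __name__ == "__main__":
    k1, k2 = 2.0, 6.0  # illustrative limits, not taken from the text
    kappa, a = from_directional(k1, k2)
    x = 1.0
    # Both expressions of the extensive measure should agree.
    print(1.0 - H_directional(x, k2) / kappa, extensive_measure(x, k1, k2))
    print(harmonic_mean_limit(k1, k2), 2.0 * kappa)
```

In this parameterization, the harmonic mean of the two limits equals 2κ, which coincides with the isotropic limit of case (2) when κ1 = κ2.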

Anisotropic kappa addition

The kappa addition describes equivalently both entropies and velocities, for any partitioning function H. Here, we apply the specific partitioning function \({H_a}(x)\) (Eq. (17)) in the kappa addition (Table 1), i.e., \({H_a}({x_{{\text{A}} \oplus {\text{B}}}})={H_a}({x_{\text{A}}})+{H_a}({x_{\text{B}}}) - \tfrac{1}{\kappa }{H_a}({x_{\text{A}}}){H_a}({x_{\text{B}}})\), which after some algebra leads to the addition rule (see Supplementary, Section B.1):

$${x_{{\text{A}} \oplus {\text{B}}}}=\frac{{{x_{\text{A}}}+{x_{\text{B}}} - \tfrac{{1 - 2a}}{\kappa }{x_{\text{A}}}{x_{\text{B}}}}}{{1+\tfrac{{a(1 - a)}}{{{\kappa ^2}}}{x_{\text{A}}}{x_{\text{B}}}}},$$

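or, in terms of the directional upper limits:

$${x_{{\text{A}} \oplus {\text{B}}}}=\frac{{{x_{\text{A}}}+{x_{\text{B}}} - (\tfrac{1}{{{\kappa _1}}} - \tfrac{1}{{{\kappa _2}}}){x_{\text{A}}}{x_{\text{B}}}}}{{1+\tfrac{1}{{{\kappa _1}{\kappa _2}}}{x_{\text{A}}}{x_{\text{B}}}}}.$$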

Notice the two limiting cases of standard entropy defect (a = 0, maximum anisotropy) for κ2→∞, and special relativity (a = 1/2, zero anisotropy), for κ1 = κ2.
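The anisotropic kappa addition and its two limiting cases can be sketched numerically. The sketch below builds the addition directly from the partitioning function H(x) = x/(1 + x/κ2) and the kappa addition rule, and compares it with a closed form derived here from those two ingredients; the relation 1/κ = 1/κ1 + 1/κ2 follows from Eq. (18). Function names are hypothetical.

```python
import math


def H(x, kappa2):
    """Partitioning function H(x) = x / (1 + x/kappa2)."""
    return x / (1.0 + x / kappa2)


def H_inv(h, kappa2):
    """Inverse of H: solves h = x / (1 + x/kappa2) for x."""
    return h / (1.0 - h / kappa2)


def kappa_add(x_a, x_b, kappa1, kappa2):
    """Kappa addition via H(x_AB) = H(x_A) + H(x_B) - H(x_A)*H(x_B)/kappa."""
    kappa = 1.0 / (1.0 / kappa1 + 1.0 / kappa2)  # from Eq. (18)
    h_a, h_b = H(x_a, kappa2), H(x_b, kappa2)
    return H_inv(h_a + h_b - h_a * h_b / kappa, kappa2)


def kappa_add_closed(x_a, x_b, kappa1, kappa2):
    """Closed form derived for this sketch from the H-based rule above."""
    num = x_a + x_b - (1.0 / kappa1 - 1.0 / kappa2) * x_a * x_b
    den = 1.0 + x_a * x_b / (kappa1 * kappa2)
    return num / den


if __name__ == "__main__":
    # Isotropic case (kappa1 = kappa2): Einstein's velocity addition.
    print(kappa_add(0.5, 0.5, 1.0, 1.0))
    # Maximum anisotropy (kappa2 -> infinity): standard entropy defect.
    print(kappa_add(0.5, 0.5, 1.0, math.inf))
    # Intermediate anisotropy: both forms should agree.
    print(kappa_add(0.3, 0.4, 2.0, 6.0), kappa_add_closed(0.3, 0.4, 2.0, 6.0))
```

Adding any value to the positive-direction limit κ1 returns κ1 again, which is the invariance required by the second postulate.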

The entropies or velocities may be expressed as normalized to the average upper limit, \(\chi =S/{\kappa _{\rm O}}\) or \(\chi =V/{c_{\rm O}}\); then, the kappa addition is written as

with

Regular and anomalous anisotropy - example of constant “acceleration”

We discuss the notion of regular (κ1 < κ2) and anomalous (κ1 > κ2) anisotropy. As an example, we examine the case of a constant rate of increase of \(x=S,V\), where both entropy and velocity are covered by the common symbol x. For simplicity, we call the rate of change “acceleration”, \(dx/dt=\alpha ={\text{const}}.\) (for both entropy and velocity), using the symbol α to distinguish it from the anisotropy parameter a of Eq. (17).

For a continuous addition of entropy, \(\Delta x=\Delta \sigma\), or velocity, \(\Delta x=\Delta u\), in a time-scale of Δt, we construct the difference equation \({x_n}=f({x_{n - 1}})\) that connects the entropy or velocity \({x_n}\) of the nth iteration with the previous one \({x_{n - 1}}\) of the (n–1)th iteration (e.g., Refs.35,78),

In the classical case of unrestricted addition, the respective equation for the value of x is trivial: \({x_n}={x_{n - 1}}+\Delta x\), which is solved by \({x_n}={x_0}+\Delta x \cdot n\). We also assume no initial entropy or velocity, for simplicity, i.e., \({x_n}=\Delta x \cdot n\). Time is measured by the number of iterations, i.e., \(t=n \cdot \Delta t\), while the constant rate is \(\alpha =\Delta x/\Delta t\); hence, \({x_n}=\alpha \cdot t\). In the negative direction, the equation is \({\bar {x}_n}= - \alpha \cdot t\), and since \(\bar {x}= - x\), the absolute value behaves as in the positive direction, \(\left| {{{\bar {x}}_n}} \right|=\alpha \cdot t\). Clearly, there is no upper limit on the values of x in either direction; namely, entropy and velocity are unbounded in the classical case, \({S_{t \to \infty }} \to \infty\) and \({V_{t \to \infty }} \to \infty\).

Next, we repeat the previous steps for the restricted addition of entropies or velocities, given by Eqs. (28a), (28b). In order to solve this difference equation, it is easier to use the corresponding H-partitioning function, Eqs. (22,23), and the formalism of the kappa addition (Table 1). We obtain the recursive relation:

$$1 - \tfrac{1}{\kappa }H({x_n})=[1 - \tfrac{1}{\kappa }H({x_{n - 1}})] \cdot [1 - \tfrac{1}{\kappa }H(\Delta x)].$$

Again, we assume zero initial values, and given \(H({x_0}=0)=0\) (properties of function H, Sect. 2), we find that the solution is given by \(1 - \tfrac{1}{\kappa }H({x_n})={[1 - \tfrac{1}{\kappa }H(\Delta x)]^{\,n\,}}\). The partitioning function is \(H({x_i})={x_i}/(1+\tfrac{1}{{{\kappa _2}}}{x_i})\), and \(H(\Delta x) \cong \Delta x\) for small Δx. Hence, in the limit of many iterations, we have \({(1 - \tfrac{1}{\kappa }\Delta x)^n}={(1 - \tfrac{1}{\kappa } \cdot \tfrac{{{x_\infty }}}{n})^n}\xrightarrow{{n\, \to \infty }}{e^{ - \tfrac{1}{\kappa } \cdot {x_\infty }}}\), where we expressed Δx through the extensive measure of x, \({x_\infty }=\Delta x \cdot n\) (that is, \({x_n}(\kappa \to \infty )\)). Again, we introduce continuous time \(t=n \cdot \Delta t\), so that \({x_\infty }=\Delta x \cdot n=(\Delta x/\Delta t) \cdot t=\alpha \cdot t\), and we write \({x_n}={x_t}\); hence, we have \(1 - \tfrac{1}{\kappa }H({x_n}) \cong {e^{ - \tfrac{1}{\kappa } \cdot {x_\infty }}}\), or \(1 - \tfrac{1}{\kappa }H({x_t})={e^{ - \tfrac{1}{\kappa } \cdot \alpha \cdot t}}\), where the equations become exact in the infinitesimal limits (\(\Delta x \to dx\), \(\Delta t \to dt\), with \(\alpha =dx/dt\)). Substituting from Eq. (22b), \(1 - \tfrac{1}{\kappa }H({x_t})=(1 - \tfrac{1}{{{\kappa _1}}}{x_t})/(1+\tfrac{1}{{{\kappa _2}}}{x_t})\), we solve in terms of \({x_t}\) and its inverse, \({\bar {x}_t}= - {x_t}/[1 - (\tfrac{1}{{{\kappa _1}}} - \tfrac{1}{{{\kappa _2}}}){x_t}]\), i.e.,

$${x_t}=\frac{{1 - {e^{ - \tfrac{1}{\kappa }\alpha t}}}}{{\tfrac{1}{{{\kappa _1}}}+\tfrac{1}{{{\kappa _2}}}{e^{ - \tfrac{1}{\kappa }\alpha t}}}}\, \text{and} \,{\bar {x}_t}= - \frac{{1 - {e^{ - \tfrac{1}{\kappa }\alpha t}}}}{{\tfrac{1}{{{\kappa _1}}}{e^{ - \tfrac{1}{\kappa }\alpha t}}+\tfrac{1}{{{\kappa _2}}}}},$$

with limits \({x_{t \to \infty }} \to {\kappa _1}\) and \(\left| {{{\bar {x}}_{t \to \infty }}} \right| \to {\kappa _2}\).
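The constant-“acceleration” example can be reproduced with a short numerical sketch. It iterates the kappa addition with the partitioning function H(x) = x/(1 + x/κ2) and compares the result with the continuous solution of \(1 - \tfrac{1}{\kappa }H({x_t})={e^{ - \tfrac{1}{\kappa } \cdot \alpha \cdot t}}\), solved for \({x_t}\) here; it uses 1/κ = 1/κ1 + 1/κ2, which follows from Eq. (18). The function names and the numerical values are hypothetical.

```python
import math


def H(x, kappa2):
    """Partitioning function H(x) = x / (1 + x/kappa2)."""
    return x / (1.0 + x / kappa2)


def H_inv(h, kappa2):
    """Inverse of H: solves h = x / (1 + x/kappa2) for x."""
    return h / (1.0 - h / kappa2)


def iterate(alpha, t, n, kappa1, kappa2):
    """Add n equal increments dx = alpha*t/n with the kappa addition, from x_0 = 0."""
    kappa = 1.0 / (1.0 / kappa1 + 1.0 / kappa2)  # from Eq. (18)
    dx = alpha * t / n
    x = 0.0
    for _ in range(n):
        h_x, h_dx = H(x, kappa2), H(dx, kappa2)
        x = H_inv(h_x + h_dx - h_x * h_dx / kappa, kappa2)
    return x


def closed_form(alpha, t, kappa1, kappa2):
    """Continuous-time values (x_t, x_bar_t) in the positive and negative directions."""
    kappa = 1.0 / (1.0 / kappa1 + 1.0 / kappa2)
    decay = math.exp(-alpha * t / kappa)
    x_t = (1.0 - decay) / (1.0 / kappa1 + decay / kappa2)
    # Inverse value, using the relation between x_t and x_bar_t quoted in the text.
    x_bar_t = -x_t / (1.0 - (1.0 / kappa1 - 1.0 / kappa2) * x_t)
    return x_t, x_bar_t


if __name__ == "__main__":
    k1, k2, alpha = 2.0, 6.0, 1.0  # regular anisotropy, kappa1 < kappa2
    for t in (0.5, 1.0, 5.0, 50.0):
        print(t, iterate(alpha, t, 20000, k1, k2), closed_form(alpha, t, k1, k2))
```

For large t the two returned values approach κ1 and −κ2, respectively, while for small t both directions grow at the classical rate α·t.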

Figure 3 plots the difference equations in Eq. (29), showing the isotropic case (b) and the two extreme cases of anisotropy: regular anisotropy, which is aligned with the concept of entropy defect (a), and the opposite extreme of anomalous anisotropy, which does not align with the entropy defect (c). The entropy defect, which leads to a negative change in the system’s entropy, is not due to the specific physical quantity involved, e.g., the thermodynamic meaning of entropy, but due to the “cause-effect” principle2. According to this principle, the insertion of some entropy Sin into the system provokes a negative feedback of −SD (the entropy defect), and thus the total change of the system’s entropy is \(\Delta S={S_{{\text{in}}}} - {S_{\text{D}}} \geqslant 0\), that is, non-negative, because \({S_{\text{D}}} \leqslant {S_{{\text{in}}}}\), i.e., the effect, SD, is no larger than the cause, Sin. The cause-effect principle applies to both entropy and velocity, and thus, thermodynamic relativity has regular anisotropy, κ1 ≤ κ2, while the anomalous case of κ2 < κ1 violates the “cause-effect” principle. Therefore, the two physically accepted extrema are: (i) κ1 = κ2 (e.g., the case of Einstein’s special relativity for velocities) and (ii) κ1 < ∞ with κ2 → ∞ (e.g., the case of the standard defect for entropies).

Fig. 3. Entropy or velocity, xt = St, Vt, increasing with time, t = n∙Δt, at a constant rate α = Δx/Δt, plotted for finite limits κ1 and κ2 of the positive (\({x_t}\)) and negative (\({\bar {x}_t}\)) directions, respectively, and for the cases: (a) κ1 < κ2 (regular anisotropy), (b) κ1 = κ2 (isotropy), and (c) κ1 > κ2 (anomalous anisotropy); n counts the iterations, where each iteration has a time scale of Δt. Also shown are the cases of classical physics, where the limits are taken to infinity, κ1 → ∞, κ2 → ∞, and the values of entropy or velocity increase without bound.

In the classical understanding, entropy and velocity are allowed to increase indefinitely toward infinity. Einstein’s special relativity restricts velocities to increase only up to the speed of light, isotropically, i.e., for both the positive and negative directions. On the other hand, thermodynamics with the simple entropy defect allows entropy to increase up to a limit only in the positive direction, while it is unrestricted in the negative direction. The developed thermodynamic relativity naturally merges the two restrictions into a generalized conception of anisotropic relativity, where the positive and negative directions are characterized by different limits.

Comparison between the extreme cases and a natural generalization

The relativity of velocities and the relativity of entropies are based on identical frameworks, with no mathematical differences other than the quantities themselves. They are characterized by the same three postulates discussed in Sections "Postulates of the relativity of entropy" and "Relativity of velocity" for entropies and velocities, respectively. Both frameworks are characterized by the H-partitioning, that is, the composition of the total value as a function of the constituents’ values (entropies or velocities), which is expressed by the kappa addition. The partitioning characterizing Einstein’s special relativity, H(x) = x/[1 + x/(2κ)], is isotropic; namely, it considers equal, finite, fixed, and invariant upper limits for the positive and negative directions, κ1 = κ2 < ∞. At the other extreme, the partitioning with entropy defect (nonextensive thermodynamics), H(x) = x, is the most anisotropic case possible: while it has a finite, fixed, and invariant upper limit in the positive direction, κ1 < ∞, it has an infinite upper limit in the negative direction, κ2 → ∞. The general case that includes these two extrema has a partitioning function that holds for any value of κ2 (finite or not), H(x) = x/(1 + x/κ2), as shown in Eq. (22). While other H-partitioning functions (following the properties presented in Section "Entropy defect – general formulation") may be suitable for constructing a relativity framework, the obvious generalization is the consideration of any finite upper limit in the negative direction. Table 2 summarizes the basic characteristics of relativity for entropies and velocities, for the disciplines of Einstein’s special relativity and nonextensive thermodynamics, and for their anisotropic generalization, thermodynamic relativity.

Formulation of thermodynamic relativity for velocity

Scheme of the derivations