Abstract

Droughts and floods are examples of extreme weather events that can result from changes in ocean temperature. Ocean temperature is a key component of the global open sea system. Currently, real-time sea surface temperature (SST) forecasts are generated by numerical models based on physics principles and influenced by boundary and initial conditions. These models generally perform better over large areas than at specific locations. To address this and improve prediction accuracy, particularly in high-precision areas, the Coati Optimization Algorithm-based Deep Convolutional Forest (COA-DCF) method is proposed. This optimization approach is utilized to train the Deep Convolutional Forest (DCF) classifier, which then applies the prediction strategy. The COA-DCF method forecasts ocean surface temperature anomalies by considering key variables such as SST, Sea Surface Height (SSH), soil moisture, and wind speed, using historical data ranging from 1 to 10 days across six different locations. The proposed method achieves improved accuracy with low Root Mean Square Error (RMSE) and Mean Absolute Error (MAE) values, and a high Pearson’s correlation coefficient (r) of 0.493, 0.487, and 0.4733, respectively, thereby enhancing the overall performance of the deep learning model.

Similar content being viewed by others

Introduction

Satellite remote sensing data sets have greatly contributed to ocean studies. However, due to the challenge of electromagnetic radiation penetrating water, most of these datasets are limited to the ocean’s surface7. Despite advancements in deep ocean data mining, driven by large-scale in-situ observation programs like the Argo buoy11, existing subsurface observational datasets still need enhancement to meet the demands of research into the fundamental dynamics of the oceans40. Further investigation into the ocean’s depths is essential, as most significant oceanographic events occur below the surface2,5,14,25,39. Moreover, the majority of the additional heat added to Earth’s climate system in recent decades has been absorbed by the oceans49, making the study of internal ocean heating a crucial aspect of addressing global warming6,34.

Recent research9,16,17,31 has shown that the warming of the upper ocean reflects recent increases in sea surface temperatures (SST). Changes in the ocean system significantly influence atmospheric circulation and global climate24. Temperature differences between land and water can lead to air-sea interactions, potentially causing severe weather and climate phenomena, such as storm swells44 and super typhoons27. Additionally, changes in ocean temperature affect monsoon circulation and precipitation, influencing both interannual and interdecadal climatic variations in the region18. Model-based studies suggest that the El Niño-Southern Oscillation (ENSO) and La Niña may be linked to upper ocean warming19. However, due to a lack of subsurface data, only a few studies have explored this area. Since air-sea interactions have less impact on subsurface temperatures compared to SST, interannual variability in subsurface temperatures is often more pronounced. Therefore, investigating the ocean’s thermal structure is crucial, as it plays a significant role in climatic patterns. Although satellites cannot directly observe deep ocean layers, information about the subsurface can be inferred from surface measurements15,19,22. Surface satellite remote sensing data can be used to estimate subsurface conditions through statistical modeling10,21,28,29,30,46.

Changing environmental factors, such as cyclone intensity, are accounted for by incorporating both oceanic and atmospheric features into calculations of general environmental variables. However, tropical cyclones (TC) exhibit distinct characteristics at different stages of their development. Consequently, various changes are reported due to the interaction of oceanic factors, distributional characteristics, and atmospheric variables. These fluctuations impact heat transfer between the ocean and atmosphere, influencing sea surface temperatures (SST). Research has revealed that the roles of the North Atlantic Oscillation (NAO) and the Arctic Oscillation (AO) are more complex than previously thought.

Additionally, multidecadal variability in SST is evident in the Pacific Decadal Oscillation (PDO) in the North Pacific Ocean and the Atlantic Multidecadal Oscillation (AMO) in the North Atlantic Ocean, significantly affecting Arctic sea ice melting. The Arctic ecosystem is particularly vulnerable to these changes. Sea ice is essential for controlling the energy transfer between the ocean and atmosphere, and its melting modifies the structure of the upper Arctic Ocean. As a result of the interaction of ice, sea, and air, anomalies in Arctic surface temperatures arise. These alterations alter the temperature differential between the polar and mid-latitude regions and weaken the westerly winds in the mid-latitudes41,47.

To increase prediction accuracy, this study uses the Deep Convolutional Forest (COA-DCF) technique, which is based on the Convolutional Optimisation Algorithm and targets both atmospheric and oceanic components. Key factors that serve as forecast inputs for the model are wind velocity, soil moisture, rainfall index, and sea surface temperature (SST). First, technical indicators such as the trend detection index (TDI), simple moving average (SMA), and commodity channel index (CCI) are extracted from these input values. These indicators help to smooth out short-term oscillations and reveal trends based on data from the ocean and atmosphere. In order to increase the dimensionality of the data and improve the prediction performance of the model, the extracted features are subjected to data augmentation.

Oversampling is used to do this, adding new, artificial data points to the dataset to make it more complete. The Deep Convolutional Forest (DCF), which combines the advantages of deep learning and decision trees to handle complicated interactions within the data, is one of the deep learning classifiers that receives the enriched, high-dimensional dataset. With the use of the COA-DCF optimisation technique, the classifier is adjusted to produce predictions based on the supplemented dataset that are more accurate and guarantee that the model is well calibrated. This method efficiently analyses and forecasts results from complicated atmospheric and marine data by fusing deep learning with sophisticated data processing techniques.

The key contributions of this research are as follows:

-

1.

A COA-DCF model is introduced, capable of capturing the spatial and temporal dependencies of sea surface temperature (SST), enabling a more accurate and comprehensive prediction of SST fields.

-

2.

The proposed method has been shown to be an effective approach in predicting root mean square error (RMSE), correlation coefficient (r), and mean absolute error (MAE) for SST fields within a specific region, using time series satellite data.

-

3.

This study also presents a straightforward yet efficient averaging technique within the COA-DCF model, demonstrating superior performance over the SVR, LSTM, AdaBoost, and ArDHO models in predicting sea surface temperature.

The structure of the paper is as follows: Sect. 2 provides a foundational overview of the research. Section 3 details the proposed COA-DCF method for predicting subsurface temperature fields. Section 4 discusses the research findings, and the final section addresses the implications and potential future research directions.

Literature review

In the past few years, air-sea interactions at intermediate latitudes have received more attention, which is indicative of a general increase in research on the North Pacific Oscillation (NPO). Climate change significantly impacts the local weather and ecosystem in East Asia and North America, thereby exerting a substantial influence on the NPO45,47. Global climate is influenced by ocean-atmospheric circulation patterns, which are influenced by ocean thermal conditions, mostly represented by water temperature4,37.

In order to find latent relationships between variations in the intensity of tropical cyclones and their spatial distribution, Wang et al. created a 3D convolutional neural network (CNN) model41. This model does not require specific cyclone settings; instead, it relies only on observable environmental patterns to extract deep hybrid characteristics for forecasting strength fluctuations from TC (tropical cyclone) imagery. The precision of the model was enhanced by the use of data augmentation approaches, but at the expense of a reduced model lifetime.

Sarkar et al. used an LSTM classifier to improve numerical prediction outputs33. This method showed that the learning-based LSTM performed better in feature extraction from the sample space than other approaches by successfully using various statistical measures based on correlation values. Test findings suggest that this approach has potential as a forecasting tool32.

To replicate rainfall properties, Im et al. created a regional climate model with higher resolution13. By incorporating physical models into conventional systems, performance was enhanced, though at the cost of increased computation time. Using time series data, pattern recognition, and correlation measures, He et al. presented an updated version of the particle swarm optimisation (PSO) technique12. Although it produced an inaccurate forecast, the use of a support vector machine (SVM) to identify the most pertinent patterns from the gathered data improved convergence, especially for long-term series.

To address issues of portability, robustness, and biases in feature selection, Wolf et al. created a machine-learning ensemble model43. Using seasonal trends and temporal correlations, this model was able to accurately predict sea surface temperature (SST) features with a low computational resource requirement.

Xiao et al. first introduced the LSTM-AdaBoost method for predicting sea surface temperature (SST)46. By incorporating a deep learning classifier into the algorithm, this approach significantly reduced prediction error and improved results. Polynomial regression was used to model the time series data; however, long-term and spatial-temporal forecasting were not addressed.

Zhang et al. employed a gated recurrent neural network (RNN) with a Gated Recurrent Unit (GRU) layer to capture the temporal patterns of SST50. The predictions were made using a fully connected layer. This method was highly reliable and effectively captured the SST trend, though it was occasionally affected by weather fluctuations.

Ye et al. proposed partial least squares regression (PLSR) to forecast sea ice concentration (SIC) variability in key regions49. This statistical model accurately predicted sea variation and achieved a high absolute value. Despite its utility in predictions, it exhibited poor predictability and contributed to systematic inaccuracies, prompting the development of a backpropagation neural network (BPNN)-based method for SST prediction.

In response, we developed a COA-DCF model to forecast SST using historical data. Performance is evaluated through root mean square error (RMSE), Pearson’s correlation coefficient (r), and mean absolute error (MAE). However, the research should have incorporated high-resolution data. The SST predictions were refined using a feedback connection network, achieving the best performance in prediction accuracy. Additionally, a two-stacked BPNN was developed to further enhance forecast precision.

Proposed COA-DCF method for atmospheric and oceanic prediction

The study of the linkages between the atmospheric and oceanic systems has provided considerable impetus to the recent surge in the application of machine learning to predict climate change. This project is one of the most sophisticated and difficult problems in science and technology today. This paper presents a research project that uses the Deep Convolutional Forest (DCF), which is based on COA, to develop a prediction model that is useful for evaluating oceanic and atmospheric data. Technical indicators are first extracted from the input parameters to provide features like the Trend Detection Index (TDI), Commodity Channel Index (CCI), and Simple Moving Average (SMA). After extracting these features, an enhanced dataset is created by applying oversampling to the data.

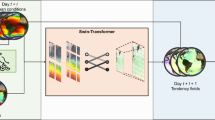

The COA algorithm is then used to train the DCF classifier, which is then used to generate predictions. Figure 1 depicts the suggested COA-DCF approach.

COA methodology

The COA technique is based on coatis’ behaviour modelling. The metaheuristic COA algorithm treats coatis as members of the population8. Coati locations in the search space influence choice variable values. Thus, coatis in the COA may solve the situation. Equation (1) describes the COA implementation’s random initialization of the coatis’ search space location.

The mentioned equation pertains to the determination of the position of the\(\:{\:i}^{th}\) coati within the search space, wherein \(\:{s}_{ij}\) denotes the value of the \(\:{\:j}^{th}\) decision variable. The variables P and Q represent the number of coatis and decision variables, respectively. Additionally, \(\:r\)and is a random real number that falls within the interval of [0, 1], while \(\:{l}_{j}\) and \(\:{u}_{j}\) correspond to the lower and upper bounds of the\(\:{\:j}^{th}\) decision variable. The population matrix \(\:M\) is utilized as a mathematical representation in Eq. (2) of the coati population in the COA.

The allocation of potential solutions across decision variables results in the computation of distinct values for the problem’s objective function. The aforementioned values are exhibited through the utilization of Eq. (3).

The vector \(\:f\) represents the objective function obtained, while \(\:{f}_{i}\) denotes the objective function value obtained from the \(\:{\:i}^{th}\) coati.

In metaheuristic algorithms, like the COA algorithm, the value of the objective function is used to assess the quality of a potential solution. Therefore, the member of the population that leads to the identification of the ideal value for the objective function is known as the optimal member of the population. The potential solutions are updated while the algorithm iterations continue on, which results in the optimal member of the population being updated simultaneously with each iteration.

Exploration phase (hunting and attacking strategy)

In the initial stage of improving the coatis’ population within the exploration area, their behaviour is modeled after their approach to hunting iguanas. This model represents a pack of coatis scaling a tree to get to an iguana and frighten it. Certain coatis display their hunting instincts by congregating under the tree and biding their time until the iguana descends to the ground. The coatis then launch an ardent chase to get the iguana down on the ground. This method demonstrates the COA’s capacity for comprehensive exploration across the problem-solving space by enabling coatis to move to different areas within the search space. The optimal population member is thought to be positioned where the iguana is according to the COA design. Additionally, it’s thought that half of the coatis ascend the tree, and the other half waits below for the iguana to descend. Equation (4) describes the mathematical simulation of the coatis climbing out of the tree.

Upon descending, the iguana is placed at a stochastic location within the exploration domain. Coatis on the ground then move within the simulated search space according to Eqs. (5) and (6), which determine their new positions based on random assignments.

If the new position of each coati results in an improved value for the objective function, the update is considered acceptable. If not, the coati will remain in its previous position. This update condition applies to values of \(\:i\) ranging from 1 to P and is simulated using Eq. (7).

In this context, \(\:{S}_{i}^{x1}\)denotes the newly calculated position of the the ith coati, with \(\:{s}_{ij}^{x1}\) representing its \(\:{j}^{th}\) dimension and \(\:{f}_{i}^{x1}\) representing the corresponding objective function value. The variable \(\:rand\) is a random real number within the interval [0,1]. The term “Iguana” refers to the position of the best member in the search set {1,2}. The position of the iguana on the ground, \(\:{I}_{j}^{G}\), is randomly generated, where \(\:{I}_{j}^{G}\), represents as \(\:{I}_{j}^{G}\) for its jth dimension. Additionally, J is an integer randomly selected from the set {1,2}, and \(\:{f}_{{I}^{G}}\) represents the objective function value at the iguana’s position.

Exploitation phase (the procedure of fleeing out of the way of a predator)

The mathematical modeling of the second phase of updating the coatis’ positions in the search space is inspired by their natural behaviour in response to predator encounters and their evasion tactics. When a predator attacks a coati, the animal quickly moves away from its current location. These strategic movements result in the coati remaining close to its original position, demonstrating the coati’s ability to perform a local search effectively, as per the COA.

The acceptability of the newly computed position depends on whether it improves the objective function’s value. This criterion is simulated using Eq. (8).

In the second phase of COA, the new position \(\:{S}_{i}^{x2}\) for the ith coati is computed based on its \(\:{j}^{th}\) dimension \(\:{s}_{ij}^{x1}\) and objective function value \(\:{f}_{i}^{x2}\). This calculation incorporates a random number (\(\:rand\)) and the iteration counter (\(\:t\)). Additionally, the local lower bound (\(\:{l}_{j}\)) and local upper bound (\(\:{u}_{j}\)) of the jth decision variable decision variable, as well as the overall lower bound (\(\:{l}_{j}\)) and upper bound (\(\:{u}_{j}\)) for the same variable, are considered.

Deep Convolutional Forest for parameter prediction

Predictions for atmospheric and oceanic parameters are then produced by feeding the augmented data into the Deep Convolutional Forest (DCF) classifier. In atmospheric prediction, deep learning classifiers have shown to be especially successful. To generate its predictions, the DCF classifier makes use of the updated data. The recommended COA algorithm is utilised for the classifier’s training, though. Researchers frequently use data augmentation to get around the requirement for a lot of training samples for deep learning classifiers. To satisfy the needs of the classifier, this procedure produces several data samples.

A popular method in research to fulfil the demand for a high number of training samples for deep learning classifiers is data augmentation. This approach guarantees that the classifier has a varied and sufficient collection of samples, which improves its learning and predicting skills by producing several versions of the original data.

As shown in Fig. 2, the DCF model uses a cascade mechanism that was influenced by deep forest and neural network designs38. According to this method, each level processes and sends its output to the level after it, which creates its own results and sends them on. Based on the processed data, each level generates a probability. These probabilities are merged with feature maps to provide input for the subsequent step. Calculating the average probability from the last level and choosing the maximum average as the forecast outcome yields the final classification.

The main factor affecting the model’s difficulty level is its accuracy. When an accuracy plateau is reached, the Dynamic Cascade Forward (DCF) algorithm ends its iterative process, in contrast to deep neural networks that have a set number of hidden layers. This happens when the accuracy of the validation data increases, creating new levels until a suitable degree of precision is attained. It is possible to apply the DCF methodology to datasets of different sizes, even small-scale datasets. The system’s capacity to modify its complexity by stopping the training process when acceptable precision levels are reached accounts for this adaptability.

The convolutional layer, classification layer, and pooling layer are the three fundamental parts of each level of the DCF model. Four primary classifiers comprise the classification layer: two highly randomised trees and two randomised forests. The pooling layer is essential for lowering overfitting in the suggested model, whereas the convolutional layer manages feature extraction.

The reason DCF works so well is that it combines the best aspects of bagging and boosting. These methods each have a different use in terms of lowering bias and variation. In bagging, a group of ineffective learners are trained concurrently, and the final model score is calculated by averaging their performances. Boosting, on the other hand, educates weak learners in a sequential manner, with each learner trying to outperform its predecessor.

The DCF model uses an aggregate of all the forests’ classification layer outputs to represent bagging. The basic classifier used by DCF is Random Forest; the average of the model is obtained by summing the predictions made by each decision tree separately. DCF also includes boosting by consistently adding new stages, each of which fixes the flaws of the one before it. With this strategy, DCF can take advantage of the advantages of both boosting and bagging.

Convolution operation

In the convolutional layer of the neural network, convolution is performed on an input matrix, followed by the application of the Rectified Linear Unit (ReLU) activation function to extract hidden features from the textual input. Let \(\:X\in\:{\mathbb{S}}^{n\times\:m}\) be the input matrix, where \(\:n\) represents the number of samples and \(\:m\) is the dimensionality of each sample vector. A filter \(\:X\in\:{\mathbb{S}}^{m\times\:d}\) is then applied to the input, resulting in a feature vector \(\:V\) with a dimension of \(\:n-d+1\), commonly referred to as a feature map. Here, \(\:d\) refers to the size of the kernel’s area of interest. Equations (9–11) illustrate the process for deriving the feature vector, assuming \(\:d\) equals 2.

The feature vector \(\:V\) of length \(\:(n\:-\:2\:+\:1)\) is obtained by applying the convolution operator ⊛. Each element in the resulting vector is computed using the following formula:

The ReLU activation function is applied to the feature vector \(\:V\). As described in Eqs. (12–14), ReLU processes each value \(\:{V}_{i}\) by returning the value itself if it is positive. If the value is negative, ReLU returns zero, effectively selecting the maximum value between \(\:{V}_{i}\) and zero.

The convolutional layer’s output is transformed into a set of feature maps by applying several filters. This is because each filter generates only one feature map \(\:{\widehat{V}}_{i}\) of length \(\:(n\:-\:2\:+\:1)\) that contains only positive values.

Pooling operation

In deep learning, pooling is a popular method for lowering the dimensionality of feature maps. The input is divided into non-overlapping sections, and for each region, a summary statistic—like the maximum or average value—is computed. By doing this, the model’s parameter count is lowered, overfitting is avoided, and significant data features are preserved. The DCF model’s pooling layer uses pooling to downsize sample feature maps, which lowers the possibility of overfitting, according to Akhtar et al. (2020)1.

By choosing a subset of features from a wider set, pooling makes the model simpler by combining the filter outputs and lightening the computational load on later processing steps. By addressing model complexity and lowering data noise, it aids in the mitigation of overfitting. An early termination mechanism included in the DCF model stops training as soon as performance starts to decline.

There are three ways that pooling can be applied: average, min, and max. Since max-pooling chooses the biggest value from each region to represent the feature map’s primary feature, it is typically recommended. In max-pooling, the output dimension matches the input dimension, and each element \(\:{\stackrel{\sim}{V}}_{i}\:\)represents the maximum value of its corresponding feature map, which can be computed using Eq. (16).

Ultimately, the features extracted from the convolutional and pooling layers are passed through the classification layer.

Fitness function

The optimal solution is determined using a fitness evaluation, where the goal is to achieve the minimum value. The fitness function is calculated using a specific expression.

The fitness measure is represented by \(\:F\), while \(\:Z\) denotes denotes the target output. The total number of training samples is specified by \(\:\omega\:\), and \(\:{\stackrel{\sim}{V}}_{i}\) represents the classification result from the deep learning classifier.

These steps are repeated iteratively until the optimal solution is obtained. Algorithm 1 provides the pseudo-code for the developed COA-DCF.

Dataset description

These observational SST datasets are provided by NOAA in the USA and are collected using the AVHRR infrared satellite. Among NOAA’s products, the OISST version 2 dataset is notable for its large sample size and near-surface readings. This dataset is particularly well-suited for short-term modeling projections as it accounts for diurnal variations in SST. The dataset features a grid resolution of 0.25° by 0.25°, allowing for detailed weekly and monthly evaluations (https://www.esrl.noaa.gov/psd/data/gridded/data.noaa.oisst.v2.html).

Acquisition of input atmospheric and oceanic parameters

The deep learning classifier is utilized for the prediction of atmospheric and oceanic parameters. For the prediction process, this study incorporates a comprehensive set of meteorological and oceanic variables, including soil moisture, wind velocity and direction, sea level height (SLH), and sea surface temperature (SST).

Sea Surface temperature (SST) SST is a key climate and weather parameter derived from microwave radiometers on satellites, widely recognized as a reliable indicator of global productivity, pollution, and climate change. This measurement is typically obtained using the infrared bands of optical satellites. SST serves as a crucial climatic variable for monitoring continuous climate variations and understanding the broader climate system.

Annual India Rainfall Index (AIRI) AIRI consists of annual rainfall data organized in a time series format. It provides evidence of climate variability in India, offering insights into the region’s changing climate patterns.

Wind Velocity/Speed Wind velocity at two meters above the ground level represents wind speed at pedestrian height, directly influencing thermal comfort through air ventilation. In the atmospheric context, wind velocity is calculated using the Cartesian coordinate system on a spherical surface. The minimum wind speed that initiates particle movement is referred to as the threshold velocity.

Sea Level Height (SLH) SLH is a critical global ocean climate parameter obtained using tidal gauges. It represents the sea surface height measured relative to an ellipsoid reference point. Although SLH is not well-observed in the Arctic region, it plays a vital role in understanding the global ocean circulation patterns.

Soil moisture Soil moisture refers to the amount of water retained within the soil, primarily influenced by soil properties, precipitation, and temperature. It is the key variable controlling the exchange of water and heat energy between the atmosphere and the ground surface, largely through processes like plant transpiration and evaporation.

where, \(D\) indicates dataset, \(P\) denotes atmospheric and oceanic data, \(P_{i}\) denotes \(i^{th}\) data, and \(n\) indicates total number of data.

Extraction of technical indicators

To extract technical indicators such as CCI (Commodity Channel Index), SMA (Simple Moving Average), and TDI (Trend Detection Index), the relevant input data \(P_{i}\) is selected from the dataset and processed in the technical indicators extraction phase55. Technical indicators are heuristic or pattern-based signals derived from price, open interest, or trade volume of an asset. These indicators are crucial for predicting soil and atmospheric moisture, as they use historical data to forecast future parameters. Below is an explanation of the technical indicators derived from the atmospheric and oceanic parameters:

CCI: Commodity channel index is the term used to describe the data's cyclical turns. This indicator is described as follows:

where, \(f_{3}\) specifies CCI indicator with the dimension of \(\left[ {1 \times 1} \right]\).

SMA: It is calculated by taking into account the average statistics for a specific time frame. It's calculated as

where, \(B_{a}\) indicates close price on the day \(a\), \(m\) signifies input window length, and \(f_{6}\) represents SMA with the size of \(\left[ {1 \times 1} \right]\).

TDI: It is employed to determine the beginning and end of a trend. It can be used in conjunction with other indicators or as a standalone indication. It is depicted as \(f_{7}\) having the dimensions of \(\left[ {1 \times 1} \right]\). In order to produce the augmentation result, the technical indicators that were collected from the data are sent to the data augmentation phase. On the other hand, the technical indicators that were taken out of the data are described as \(f\) having a dimension of \(\left[ {1 \times 7} \right]\) such that \(f = \left\{ {f_{1} ,...,f_{7} } \right\}\), respectively.

Data augmentation by oversampling method

Each technical feature undergoes data augmentation once technical indicators have been extracted from the data, which increases the dimensionality of the data. Therefore, in order to produce a feature result with big sized dimensions, each feature is fed independently to the data augmentation module. By adding data at random, it is a significant technique used to enhance diversity and volume. By employing the training data’s minimum and maximum feature index values as the threshold values for creating data samples, the oversampling approach produces the augmented output.

Every technical characteristic with a dimension \(\left[ {U \times V} \right]\) is permitted to proceed to the data augmentation phase, whereupon the oversampling model procedure generates an augmented result as \(A\) with the dimension of \(\left[ {M \times V} \right]\) such that \(M > U\). Nevertheless, after the data augmentation process, the RSI indicator with size \(\left[ {1 \times 1} \right]\) produces an enhanced result with dimensions of \(\left[ {50,000 \times 1} \right]\) created by the oversampling technique.

As a result, the outcome with size \(\left[ {1 \times 1} \right]\) of is produced by the TRIX indication with size of \(\left[ {50,000 \times 1} \right]\). Likewise, the technical indicators—CCI, Williams %R, ATR, SMA, and TDI—each possessing a dimension of \(\left[ {1 \times 1} \right]\) that produces an enlarged outcome with the dimension of \(\left[ {50,000 \times 1} \right]\) for each feature, correspondingly. As a result, the enhanced result's entire dimension is provided as \(\left[ {50,000 \times 7} \right]\), each. The augmented data is fed into the classifier as input to create the prediction mechanism, and its size is larger than that of the original training set.

Experimental setup and result analysis

For each location’s SST forecast trial, datasets for the COA-DCF, SVR, AdaBoost, ArDHO, and LSTM models were prepared with a maximum input sequence length of 40 observations. The trial interval was determined, with 70% of the new data used for training and the remaining 30% reserved for testing. During training, 5% of the training data was further partitioned for validation.

Comparisons were made among the LSTM, SVR, AdaBoost, ArDHO, and COA-DCF models using stacking generalization to demonstrate that averaging their predictions is the most effective strategy. The COA-DCF model was constructed using the following procedures:

-

1.

Base Learners Training: The training dataset was divided into three distinct sets, each containing an equal number of unique examples.

-

2.

Training and Validation: Each set was used once as the validation set, while the other two sets served as training data. This process was repeated until each set had been used for validation.

-

3.

Prediction Generation: After training, predictions were generated using the trained base learners for the remaining set.

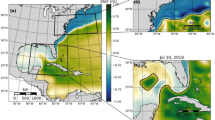

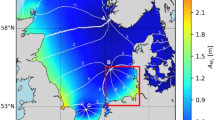

Referring to Fig. 3, the predictions were integrated, and the target SST was forecasted at a higher level.

Estimated temperature based on sea surface temperature in six locations (L1, L2, L3, L4, L5, L6) (https://skepticalscience.com/print.php?n=4180).

Depending on the resolution of satellite observations, images display the specifics of sea-surface temperature distributions. The resolution in the infrared is much higher (1 km) than it is in the microwave (about 25 km). Various data plots can be created by using the data range provided and latitude and longitude information. (https://psl.noaa.gov/mddb2/makePlot.html?variableID=2701)

Figure 4 shows the root-mean-square error (RMSE) of predictions generated using the five methods: LSTM, SVR, AdaBoost, ArDHO, and COA-DCF, across various prediction horizons. When comparing performance across six different locations and the full forecast range, COA-DCF outperforms LSTM, SVR, AdaBoost, and ArDHO. However, there is no clear superiority between LSTM and SVR at any of the six tested locations or across the forecast ranges.

To further quantify and compare the accuracy of the predicted SSTs, Pearson’s correlation coefficient (r) was calculated, and the results are displayed in Fig. 5. The ‘r’ values are all statistically significant, as shown in the table. The results indicate that the observed and predicted SSTs exhibit a linear relationship, which weakens as the forecast horizon extends from 1 to 10 days.

In all six location and prediction horizon comparisons, SVR and LSTM demonstrated lower r values compared to BPNN. Additionally, the r values for ArDHO were either lower than or comparable to those for COA-DCF across all prediction horizons from L1 to L6.

Table 1; Fig. 6 present the mean absolute error (MAE) for predictions generated using the five methods: LSTM, SVR, AdaBoost, ArDHO, and COA-DCF, across all prediction horizons. COA-DCF consistently outperforms LSTM, SVR, AdaBoost, and ArDHO across all prediction horizons and six locations.

Currently, mathematical models based on physics-based hypotheses, subject to boundary and initial conditions, are capable of generating real-time SST estimates over larger geographic areas compared to models tailored to specific locations.

The results indicate the proposed COA-DCF model outperforms the other models for 10 days successively and in all 6 locations. The performance is evaluated on two metrics- the errors and the correlation coefficient. The performance not degrading for 10 consecutive days is an indication that the methodology systematically discovers the optimum parameter in the search space at all intermediate steps. In meteorology this is a significant development because the deterministic function that governs the time series in unknown. Neither the numerical nor the statistical models are characterized to determine this equation. The prediction is based on modelling the regression curve to the best possible estimation. In such a process the models tend to deviate from the time series in a spatial or temporal region of prediction and then come back to the trajectory in another region. No model systematically predicts better than the others. This is one reason why Multi Model Ensemble (MME) (Krishnamurthy and others, 2001) works in numerical modelling domain56. The system dynamics is captured by one model at one time or location and by another model at an entirely different time or space. The errors of individual models are neutralised by such ensemble.

The present study indicates that statistical models of the tune described above are capable of making better predictions at all temporal and spatial domains. Consequently the need for ensemble forecasting can be done away with thus saving computational time and cost.

Conclusion

Surface water temperature is a critical indicator of global ocean health and can significantly influence or exacerbate droughts, floods, and other severe weather conditions, as well as impact oceanic systems and global warming. To generate accurate daily SST predictions, it is recommended to use the back-propagation neural network model, a machine learning technique that leverages an ensemble of diverse predictors. This ensemble approach uses averaging to mitigate the weaknesses of individual predictors while capitalizing on their strengths, thus providing state-of-the-art performance.

We train and evaluate the proposed COA-DCF method using daily SST data from the AVHRR sensor satellite at six locations. Across nearly all forecast ranges, from one to ten days, and using various metrics such as RMSE, correlation coefficient (r), and MAE, COA-DCF consistently outperforms LSTM, SVR, AdaBoost, and ArDHO. This location-specific SST forecasting method has the potential to enhance maritime activity planning and safety.

With advances in high-performance computing, researchers will soon be able to explore both extended SST forecasts and spatiotemporal SST predictions over larger regions. Additionally, the proposed technique could be applied to forecast other important marine, atmospheric, and environmental variables, including sea surface height (SSH), sea surface velocity (SSV), and sea surface wave (SSW).

Data availability

The data used for analysis were downloaded from the following website and may be available upon request: https://www.esrl.noaa.gov/psd/data/gridded/data.noaa.oisst.v2.html. Additional data supporting the findings of this study are available upon request from the corresponding author.

References

Akhtar, N. & Ragavendran, U. Interpretation of intelligence in CNN-pooling processes: a methodological survey. Neural Comput. Appl. 32(3), 879–898 (2020).

Balmaseda, M. A., Trenberth, K. E. & Källén, E. Distinctive climate signals in reanalysis of global ocean heat content. Geophys. Res. Lett. 40, 1754–1759 (2013).

Ballabrera-Poy, J., Mourre, B., Garcia-Ladona, E., Turiel, A. & Font, J. Linear and non-linear T–S models for the eastern North Atlantic from Argo data: role of surface salinity observations. Deep Sea Res. Part. I. 56, 1605–1614 (2009).

Bao, S. et al. Salinity profile estimation in the Pacific Ocean from satellite surface salinity observations. J. Atmos. Ocean. Tech. 36, 53–68 (2019).

Bindoff, N. L. et al. Observations: Oceanic Climate Change and Sea Level. Climate Change 2007: The Physical Science Basis. Contribution of Working Group I, 385–428 (Cambridge University Press, 2007).

Chen, X. & Tung, K. K. Varying planetary heat sink led to global-warming slowdown and acceleration. Science. 345, 897–903 (2014).

Cheng, H., Sun, L. & Li, J. Neural network approach to retrieving ocean subsurface temperatures from surface parameters observed by satellites. Water. 13(3), 388 (2021).

Dehghani, M., Montazeri, Z., Trojovská, E. & Trojovský, P. Coati optimization algorithm: a new bio-inspired metaheuristic algorithm for solving optimization problems. Knowl. Based Syst. 259, 110011 (2023).

Drijfhout, S. S. et al. Surface warming hiatus caused by increased heat uptake across multiple ocean basins. Geophys. Res. Lett. 41, 7868–7874 (2014).

Fox, D. N. The modular ocean data assimilation system. Oceanography. 15, 22–28 (2002).

Guinehut, S., Dhomps, A. L., Larnicol, G. & Le Traon, P. Y. High resolution 3-D temperature and salinity fields derived from in situ and satellite observations. Ocean. Sci. 8, 845–857 (2012).

He, Q. et al. Improved particle swarm optimization for sea surface temperature prediction. Energies. 13(6), 1369 (2020).

Im, E. S. & Eltahir, E. A. Simulation of the diurnal variation of rainfall over the western maritime continent using a regional climate model. Clim. Dyn. 51(1), 73–88 (2018).

Klemas, V. & Yan, X. H. Subsurface and deeper ocean remote sensing from satellites: an overview and new results. Prog Oceanogr. 122, 1–9 (2014).

Levin, L. A. & Le, B. N. The deep ocean under climate change. Science. 350, 766–768 (2015).

Levitus, S. et al. Global ocean heat content 1955–2008 in light of recently revealed instrumentation problems. Geophys. Res. Lett. 36, 1–5 (2009).

Levitus, S. et al. World ocean heat content and thermosteric sea level change (0–2000 m), 1955–2010. Geophys. Res. Lett. 39, 1–5 (2012).

Li, J., Sun, L., Yang, Y. & Cheng, H. Accurate evaluation of sea surface temperature cooling Induced by typhoons based on satellite remote sensing observations. Water. 12, 1413 (2020).

Li, J., Sun, L., Yang, Y., Yan, H. & Liu, S. Upper ocean responses to binary typhoons in the nearshore and offshore areas of northern south China sea: a comparison study. J. Coast Res. 99, 115–125 (2020).

Liu, S. et al. Basin-wide responses of the South China Sea environment to Super Typhoon Mangkhut. Sci. Total Environ. 731(2018).

Liu, L., Peng, S. & Huang, R. Reconstruction of ocean’s interior from observed sea surface information. J. Geophys. Res. Oceans. 122, 1042–1056 (2017).

Lowe, J. A., Gregory, J. M. & Flather, R. A. Changes in the occurrence of storm surges around the United Kingdom under a future climate scenario using a dynamic storm surge model driven by the Hadley Centre climate models. Clim. Dyn. 18, 179–188 (2001).

Lu, W., Su, H., Yang, X. & Yan, X. H. Subsurface temperature estimation from remote sensing data using a clustering-neural network method. Remote Sens. Environ. 229, 213–222 (2019).

Lyman, J. M. et al. Robust warming of the global upper ocean. Nature. 465, 334–337 (2010).

Meehl, G. A., Arblaster, J. M., Fasullo, J. T., Hu, A. & Trenberth, K. E. Model-based evidence of deep-ocean heat uptake during surface-temperature hiatus periods. Nat. Clim. Chang. 1, 360–364 (2011).

Meijers, A. J. S., Bindoff, N. L. & Rintoul, S. R. Estimating the four-dimensional structure of the Southern Ocean using satellite altimetry. J. Atmos. Ocean. Tech. 28, 548–568 (2011).

Mel, R. & Lionello, P. Storm surge ensemble prediction for the city of Venice. Weather Forecast. 29, 1044–1057 (2014).

Mel, R., Sterl, A. & Lionello, P. High resolution climate projection of storm surge at the venetian coast. Nat. Hazard. Earth Syst. 13, 1135–1142 (2013).

Nardelli, B. B. & Santoleri, R. Methods for the reconstruction of vertical profiles from surface data: multivariate analyses, residual GEM, and variable temporal signals in the NPO. J. Atmos. Ocean. Tech. 22, 1762–1781 (2005).

Nardelli, B. B., Cavalieri, O., Rio, M. H. & Santoleri, R. Subsurface geostrophic velocities inference from altimeter data: application to the Sicily Channel (Mediterranean Sea). J. Geophys. Res. Oceans. 111(2006).

Raj, S., Tripathi, K. C. & Bharti, R. K. Scope and status of artificial neural network based prediction models in meteorology: from backpropagation to recurrent networks. In International Conference on Computational Intelligence, Communication Technology and Networking (CICTN), Ghaziabad, India, 300–308 https://doi.org/10.1109/CICTN57981.2023.10141160 (2023).

Raj, S., Tripathi, S. & Tripathi, K. C. ArDHO-deep RNN: autoregressive deer hunting optimization based deep recurrent neural network in investigating atmospheric and oceanic parameters. Multimedia Tools Appl. 81(6), 7561–7588 (2022).

Sarkar, P. P., Janardhan, P. & Roy, P. Prediction of sea surface temperatures using deep learning neural networks. SN Appl. Sci. 2(8), 1–14 (2020).

Song, Y. T. & Colberg, F. Deep ocean warming assessed from altimeters, gravity recovery and climate experiment, in situ measurements, and a non-boussinesq ocean general circulation model. J. Geophys. Res., 116(2011).

Su, H., Huang, L., Li, W., Yang, X. & Yan, X. H. Retrieving ocean subsurface temperature using a satellite-based geographically weighted regression model. J. Geophys. Res. Oceans. 123, 5180–5193 (2018).

Su, H., Li, W. & Yan, X. H. Retrieving temperature anomaly in the global subsurface and deeper ocean from satellite observations. J. Geophys. Res. Oceans. 123, 399–410 (2018).

Su, H., Wu, X., Yan, X. H. & Kidwell, A. Estimation of subsurface temperature anomaly in the Indian Ocean during recent global surface warming hiatus from satellite measurements: a support vector machine approach. Remote Sens. Environ. 160, 63–71 (2015).

Utkin, L. V., Meldo, A. A. & Konstantinov, A. V. Deep Forest as a framework for a new class of machine-learning models. Natl. Sci. Rev. 6(2), 186–187 (2019).

Vinogradova, N. et al. Satellite salinity observing system: recent discoveries and the way forward. Front. Mar. Sci. 6, 243 (2019).

Wang, X. D., Han, G. J., Li, W. & Qi, Y. Q. Reconstruction of ocean temperature profile using satellite observations. J. Trop. Oceanogr. 30, 10–17 (2011).

Wang, H., Wang, G., Chen, D. & Zhang, R. Reconstruction of three-dimensional Pacific temperature with Argo and satellite observations. Atmos. -Ocean. 50, 116–128 (2012).

Wang, X., Wang, W. & Yan, B. Tropical cyclone intensity change prediction based on surrounding environmental conditions with deep learning. Water. 12(10), 2685 (2020).

Wolf, S., O’Donncha, F. & Chen, B. Statistical and machine learning ensemble modelling to forecast sea surface temperature. J. Mar. Syst. 208, 103347 (2020).

Woth, K., Weisse, R. & Von, S. H. Climate change and North Sea storm surge extremes: an ensemble study of storm surge extremes expected in a changed climate projected by four different regional climate models. Ocean. Dynam. 56, 3–15 (2006).

Wu, X., Yan, X. H., Jo, Y. H. & Liu, W. T. Estimation of subsurface temperature anomaly in the North Atlantic using a self-organizing map neural network. J. Atmos. Ocean. Tech. 29, 1675–1688 (2012).

Xiao, C. et al. Short and mid-term sea surface temperature prediction using time-series satellite data and LSTM-AdaBoost combination approach. Remote Sens. Environ. 233, 111358 (2019).

Yan, H. et al. A dynamical-statistical approach to retrieve the ocean interior structure from surface data: SQG-mEOF-R. J. Geophys. Res. Oceans. 125, 1–15 (2020).

Yan, X. H. et al. The global warming hiatus: slowdown or redistribution? Earth’s Future. 4, 472–482 (2016).

Ye, X. & Wu, Z. Seasonal prediction of Arctic summer sea ice concentration from a partial least squares regression model. Atmosphere. 12(2), 230 (2021).

Zhang, Z. et al. Monthly and quarterly sea surface temperature prediction based on gated recurrent unit neural network. J. Mar. Sci. Eng. 8(4), 249 (2020).

The SST dataset will be extracted from. https://www.ncei.noaa.gov/pub/data/cmb/ersst/v5/netcdf/?C=D;O=D (Accessed Mar 2021).

SLH data acquired from. https://data.gov.au/dataset/ds-marlin-800eeed8-2bb3-4ba0-b5a4-ab89ab756406/distribution/dist-marlin-800eeed8-2bb3-4ba0-b5a4-ab89ab756406- (Accessed Mar 2021).

Soil moisture dataset. https://www.kaggle.com/amirmohammdjalili/soil-moisture-dataset (Accessed Mar 2021).

Wind speed dataset. https://developer.nrel.gov/docs/wind/wind-toolkit/india-wind-download/ (Accessed Mar 2021).

Shynkevich, Y., McGinnity, T. M., Coleman, S. A., Belatreche, A. & Li, Y. Forecasting price movements using technical indicators: investigating the impact of varying input window length. Neurocomputing 264, 71–88 (2017).

Krishnamurti, T. N. et al. Improved weather and seasonal climate forecasts from multimodel superensemble. Science. 285(5433), 1548–1550 https://doi.org/10.1126/science.285.5433.1548 (1999).

Acknowledgements

We would like to thank the Uttarakhand Technical University Administration, Dehradun, India, for their support of this research.

Funding

This article and the related work were not supported by any funding agency.

Author information

Authors and Affiliations

Contributions

Author Contributions: Conceptualization, Data Curation, Formal Analysis, Methodology: Sundeep Raj. Supervision, Validation, Visualization, Writing: Sundeep Raj, Rajendra Bharti, K.C. Tripathi.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Raj, S., Bharti, R.K. & Tripathi, K.C. Coati optimization algorithm based Deep Convolutional Forest method for prediction of atmospheric and oceanic parameters. Sci Rep 14, 22160 (2024). https://doi.org/10.1038/s41598-024-73811-z

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-73811-z

Keywords

This article is cited by

-

Multi-strategies improved coati optimization algorithm and performance analysis

Knowledge and Information Systems (2025)