Abstract

Procedural coding is a taxing task for clinicians. However, recent advances in natural language processing offer a promising avenue for developing applications that assist clinicians and thereby alleviate their administrative burden. This study aims to create an application capable of predicting procedure codes from clinicians’ operative notes in order to streamline their workflow and enhance efficiency. In a secondary use scenario, we downstreamed an existing German medical BERT model (medBERT.de) and a natively trained one (surgeryBERT.at) on already coded surgery notes, modelling the coding procedure as a multi-label classification task. For a comparative analysis of the achievable coding performance, we additionally leveraged the non-contextual model fastText, a convolutional neural network, a support vector machine and logistic regression. About 350,000 notes were used for model adaptation. Considering the top five suggested procedure codes, medBERT.de, surgeryBERT.at, fastText, the convolutional neural network, the support vector machine and logistic regression achieved a mean average precision of 0.880, 0.867, 0.870, 0.851, 0.870 and 0.805, respectively. Support vector machines performed better for surgery reports with a sequence length greater than 512 tokens, achieving a mean average precision of 0.872 compared with 0.840 for fastText, 0.837 for medBERT.de and 0.820 for surgeryBERT.at. A prototypical front-end application for coding support was additionally implemented. The problem of predicting procedure codes from a given operative report can be successfully modelled as a multi-label classification task with promising performance. Support vector machines, as a classical machine learning method, outperformed the non-contextual fastText approach. FastText, with less demanding hardware requirements, reached a performance similar to that of the BERT-based models and proved more suitable for explaining predictions efficiently.


Introduction

Repetitive administrative tasks in the healthcare sector represent a burden for clinicians, notably when it comes to procedural coding in surgery. Providing automated support for these administrative processes can allow clinicians to focus more on practical duties and reduce the risk of administrative overload1. A majority of surgical procedures are elective, and their codes can be derived from an array of existing operative reports. Ideally, such a support system would offer surgeons coding recommendations once they have completed the detailed natural language formulation of the surgical report.

Modern model-based natural language processing (NLP) systems usually rely on vast amounts of annotated training data, so that a specific component in the pipeline can be adapted to a given task with acceptable performance. Based on an application-oriented investigation, we address the following points and argue that, particularly in clinical environments, the extensive accumulation of textual data from routine documentation over the past decades makes this scenario especially promising for modern NLP applications. We support our statements with a state-of-the-art implementation that demonstrates the potential of reusing textual data sources in a secondary use scenario.

Good-quality NLP-training data

The generation of manually annotated textual resources is a time-consuming process, yet essential for training and evaluating NLP models. The most prominent annotation frameworks2 in the biomedical and clinical domain are brat3 and INCEpTION4. We argue that, for a digital process that clinicians have performed repeatedly, enough annotated textual resources have accumulated over the past decades in the databases underlying hospital information systems (HIS). These can be used as a silver standard for data-driven NLP model tuning. This holds true both for language model generation, usually with transformer-based architectures, and for domain-specific downstream tasks, for which sufficient annotated resources thus become available. For a task like multi-label classification, texts must be reliably labelled by the surgeons who performed the surgery, which is rarely the case in research settings.

Complex linguistic problems

Clinical narratives pose specific challenges for machine-based processing, as demonstrated by the following example5: “3. St.p. TE eines exulz. sek.knot.SSM (C43.5) li Lab. majus. Level IV, 2,42 mm Tumordurchm.” (3. Status post total excision of an ulcerated secondary node of superficial spreading melanoma (C43.5) on the left labium majus. Level IV, tumor diameter 2.42 mm.). In general, clinical routine documentation is idiosyncratic: it contains spelling mistakes, typing errors, clinical expert jargon, non-standardised numeric expressions, and an above-average use of acronyms and abbreviations. Additionally, German dialects vary considerably from region to region, which is often reflected in the written language. We hypothesize that transformer-based architectures, which serve as the core building block of large language models, can overcome the complexity of clinical language, specifically because their attention mechanism and contextualized embeddings enable them to resolve, e.g., synonymous and homonymous term expressions.

Limited hardware resources

The generation of language models, or the downstreaming of a language model to a specific problem domain, is a resource-intensive task that is normally performed on GPUs, TPUs, or clusters of them. The required hardware resources often exceed what is available within a clinical information system environment. Furthermore, cloud-based compute resources are not an option due to ethical regulations surrounding the use of clinical data. These constraints usually call for on-premises solutions with a limited amount of hardware resources. We aim to demonstrate the generation, deployment and downstreaming of a language model for a specific problem domain of interest within such an environment. Additionally, we compare its computational demands with those of a shallow neural network, which reduces hardware costs to a CPU or single-GPU level.

NLP

NLP has developed significantly with the recent advent of transformer-based methods. Automated clinical coding has been an important research topic in clinical NLP6. Machine learning methods such as logistic regression (LR), support vector machines (SVM) and recurrent neural networks (RNN), applied to clinical texts transformed into different word embeddings, have shown promising results in the classification of clinical texts7. However, some of these methods do not take context into consideration8. Convolutional neural networks (CNN)9, long short-term memory networks (LSTM)10 and conditional random fields (CRF)8 account for the sequential patterns of words in a sentence and yield good results in the classification of clinical notes.

With respect to assigning labels to text, Tal Baumel et al. experimented with an SVM-based one-vs-all model, a continuous bag-of-words (CBOW) model, a CNN, and a bi-directional gated recurrent unit (GRU) model with a hierarchical attention (HA) mechanism (HA-GRU) on the MIMIC II (v2.6) and MIMIC III (v1.4) datasets to assign ICD-9 codes to clinical notes. Their results showed that HA-GRU required fewer training samples11. Scheurwegs et al. employed unsupervised and semi-supervised techniques to extract multi-word expressions that generalize medical meanings, together with concepts derived from expert-created dictionaries; these representations outperformed a bag-of-words approach in clinical code assignment. ICD and procedural codes associated with patient stays were considered as labels12.

Fei Li et al. also assigned ICD codes to clinical text, using a multi-filter residual CNN (MultiResCNN) on the MIMIC dataset. Their innovation was to use a multi-filter CNN layer to capture text patterns of different lengths and a residual convolutional layer to enlarge the receptive field. Their method outperformed convolutional attention for multi-label classification (CAML)13. In this context, HA-based neural networks14 and CAML15 not only achieved acceptable performance in multi-label classification but also provided information on the words most relevant to the assigned codes. All these methods use some form of word embeddings with different pre-processing techniques, on which the performance of the machine learning methods largely depends. Recently developed BERT-based models are pre-trained on large corpora, capture contextual information and are designed to be downstreamed to specific tasks16,17. Marco Bombieri et al. evaluated the pre-trained model SurgicBERTa for procedural surgical language on several downstream tasks (procedural sentence detection, procedural knowledge extraction, ontological information discovery and surgical terminology acquisition) to determine whether the downstreamed models carry surgical knowledge18.

Extending the work stated above, our study compares transformer-based contextualized models like BERT with shallow, less hardware-demanding non-contextualized representation schemes as used by fastText and CNNs. We further contrast them with classical approaches such as SVM and LR using a TF-IDF representation scheme for assigning surgical procedure codes to surgical notes. The process we identified, in which years of coding efforts have accumulated in the HIS, is the billing-related coding of surgical procedures. Through a targeted ETL (extract, transform, load) workflow, we extracted data to construct a data structure of surgical reports, each associated with a set of procedure codes. The task is then framed as a multi-label classification problem. Our objective in this investigation is to assess the achievable coding performance when assisting the user with a set of recommended codes, in order to facilitate the coding process and reduce the overall workload.
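To make the multi-label framing concrete, the following minimal Python sketch (assuming scikit-learn is available) turns each report's set of procedure codes into a multi-hot target vector with one column per code. The records and code names are hypothetical placeholders, not real MEL codes or KAGes data.

```python
from sklearn.preprocessing import MultiLabelBinarizer

# Hypothetical toy records: (operative note, set of assigned procedure codes).
records = [
    ("Keilförmige Excision an beiden Nagelwällen ...", {"CODE_A"}),
    ("Laparoskopische Cholezystektomie ...", {"CODE_B", "CODE_C"}),
    ("Wundrevision und Naht ...", {"CODE_C"}),
]

texts = [text for text, _ in records]
mlb = MultiLabelBinarizer()
# Y has one row per note and one 0/1 column per distinct code (multi-hot encoding).
Y = mlb.fit_transform([codes for _, codes in records])

print(list(mlb.classes_))  # ['CODE_A', 'CODE_B', 'CODE_C']
print(Y.tolist())          # [[1, 0, 0], [0, 1, 1], [0, 0, 1]]
```

Each column can then be learned jointly (one network with a sigmoid output per code) or independently (one binary classifier per code), which are the two settings compared in this study.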

Results

Multi-label classification

Table 1 shows the results of our multi-label classification approach. We report macro-averaged precision, recall and F1-measure to give all classes equal importance, together with Mean Average Precision (MAP) for ranked retrieval results (see Section Training and testing for a brief overview). MAP was calculated on the top five class probabilities, given that \(\sim\) 99% of the data set is coded with five or fewer class labels (see Section Descriptive analysis). This data-driven decision was supported by the fact that, from a cognitive load perspective, five items of information are an acceptable workload for fast visual perception when supporting the manual coding process with a machine learning-based pre-selection19. We therefore aimed to shrink the space of possible classes from 1059 to 5, allowing users to select from these five codes, and to represent the correct codes as accurately as possible. This reduces the candidate set to less than 0.5% of the initial overall coding space (5 of 1059 codes).
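The MAP computation on the top five suggestions can be sketched as follows. This assumes one common formulation of average precision at cut-off k, where precision is accumulated at each rank that holds a correct code and normalised by min(number of gold codes, k); the exact normalisation behind Table 1 is not specified here, so treat it as an illustrative assumption. Scores and gold labels are toy values.

```python
import numpy as np


def average_precision_at_k(scores, gold, k=5):
    """AP@k for one note: scores holds one score per code, gold the set of correct code indices."""
    if not gold:
        return 0.0
    ranked = np.argsort(scores)[::-1][:k]   # indices of the k highest-scoring codes
    hits, precision_sum = 0, 0.0
    for rank, code in enumerate(ranked, start=1):
        if int(code) in gold:
            hits += 1
            precision_sum += hits / rank     # precision at this cut-off
    return precision_sum / min(len(gold), k)


def map_at_k(score_matrix, gold_sets, k=5):
    """Mean of AP@k over all notes."""
    return float(np.mean([average_precision_at_k(s, g, k)
                          for s, g in zip(score_matrix, gold_sets)]))


# Two toy notes, six codes.
scores = np.array([[0.9, 0.1, 0.8, 0.05, 0.3, 0.2],
                   [0.1, 0.7, 0.2, 0.6, 0.0, 0.4]])
gold = [{0, 2}, {3}]
print(map_at_k(scores, gold))  # 0.75: note 1 ranks both codes on top, note 2 at rank 2
```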

Table 1 shows approximately equal performance of fastText and the transformer-based architectures. A language model built natively from scratch (surgeryBERT.at) gave no advantage over a pre-existing, reusable one (medBERT.de). Interestingly, fastText, which is much less hardware-demanding and has a far simpler underlying model architecture, performs about as well as the BERT-based models. Although SVM performed as well as fastText, it is computationally expensive and difficult to explain, as it was modelled in a one-vs-all classification setup. We therefore decided to use fastText for our prototypical front-end implementation, which is described in more detail in the next section.

In addition, Table 2 shows the results when the predicted codes are limited to those with a probability greater than 0.5. Precision and F1-measure increase, with a slight decline in sensitivity (recall) and MAP. Further, we evaluated a naïve model that always predicts the five most frequent procedure codes of the training set; its precision, recall, F1-measure and MAP are 0.037, 0.17, 0.0578 and 0.120, respectively. These results indicate that the trained models learned the multi-label classification task rather than merely reproducing the underlying code distribution.
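A minimal sketch of such a most-frequent-codes baseline, assuming scikit-learn for the macro-averaged metrics. The codes are hypothetical, and k is set to 2 only to keep the toy example small; the evaluation above used k = 5.

```python
from collections import Counter

from sklearn.metrics import precision_recall_fscore_support
from sklearn.preprocessing import MultiLabelBinarizer


def most_frequent_codes(train_label_sets, k=5):
    """Naive baseline: the k codes assigned most often in the training set."""
    counts = Counter(code for codes in train_label_sets for code in codes)
    return [code for code, _ in counts.most_common(k)]


# Toy example with hypothetical codes.
train = [{"A", "B"}, {"A"}, {"C"}, {"A", "C"}]
test = [{"A"}, {"B"}]
baseline = most_frequent_codes(train, k=2)   # identical suggestion for every note

mlb = MultiLabelBinarizer().fit(train + test)
Y_true = mlb.transform(test)
Y_pred = mlb.transform([baseline] * len(test))
p, r, f1, _ = precision_recall_fscore_support(Y_true, Y_pred, average="macro",
                                              zero_division=0)
print(baseline, round(p, 3), round(r, 3))
```

Because the baseline ignores the report text entirely, it only captures the code distribution, which is why its low scores serve as a floor for the trained models.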

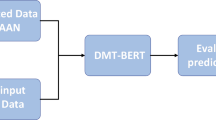

XAI component placement

When deploying machine learning models, an intuitive and interactive user interface (UI) is crucial for communicating insights and results effectively to users, specifically in a clinical deployment setting. We used Streamlit, a Python library20, to build our web-based coding support front-end. The prototype enables the user to input the surgery report and visualizes the top five codes suggested by the pre-trained classifier (see Section Multi-label classification). Code suggestions are explained using Local Interpretable Model-agnostic Explanations (LIME)21 (see Section Explainable AI), where textual features responsible for a code are highlighted in corresponding colors. The prediction probabilities are visualized as bar charts, where each bar’s length represents the standardized probability of the corresponding outcome.
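LIME's text explainer expects a classifier function that maps a list of (perturbed) texts to an array of class probabilities. The sketch below shows one way to adapt a single-text predictor to that interface; the predictor toy_predict and the code names are hypothetical stand-ins for the trained fastText model used in the prototype.

```python
import numpy as np


def make_classifier_fn(predict_one, class_names):
    """Adapt a single-text predictor (text -> {code: probability}) to the batch
    interface LIME expects: list of n strings -> array of shape (n, n_classes)."""
    column = {name: i for i, name in enumerate(class_names)}

    def classifier_fn(texts):
        out = np.zeros((len(texts), len(class_names)))
        for row, text in enumerate(texts):
            for code, prob in predict_one(text).items():
                out[row, column[code]] = prob
        return out

    return classifier_fn


# Hypothetical stand-in for the trained model.
def toy_predict(text):
    return {"CODE_NAIL": 0.9 if "Nagel" in text else 0.1,
            "CODE_SUTURE": 0.7 if "Naht" in text else 0.2}


class_names = ["CODE_NAIL", "CODE_SUTURE"]
classifier_fn = make_classifier_fn(toy_predict, class_names)
print(classifier_fn(["Nagel verschmälert", "Adaptierende Naht"]))
```

With the lime package, such a function could be passed as LimeTextExplainer(class_names=class_names).explain_instance(report, classifier_fn, labels=(0,), num_features=10), and the explanation's as_html() output could be embedded in a Streamlit page via streamlit.components.v1.html. These calls illustrate the general wiring and are not taken from the prototype's source code.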

Figure 1 shows a user-entered surgery report, followed by the top five codes suggested by the classifier, sorted by their prediction probabilities. By default, the code with the highest prediction probability is explained using LIME. Explanations for other predicted labels can be displayed by selecting them from the scroll-down bar. Physicians can assign codes from the set of suggested codes or from the full reference set via the drop-down selection bar. By default, labels with a probability greater than 0.5 are pre-selected as suitable labels.
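The suggestion logic of the front-end (top five codes sorted by probability, with codes above 0.5 pre-selected) can be sketched as below. It assumes the classifier returns a dictionary from code to probability and that pre-selection is drawn from the five suggested codes; both are assumptions for illustration.

```python
def suggest_codes(probabilities, k=5, threshold=0.5):
    """Return the k most probable (code, probability) pairs and the codes
    among them that are pre-selected because their probability exceeds threshold."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)[:k]
    preselected = [code for code, prob in ranked if prob > threshold]
    return ranked, preselected


# Hypothetical classifier output for one report.
probs = {"A": 0.91, "B": 0.62, "C": 0.30, "D": 0.05, "E": 0.20, "F": 0.01}
ranked, preselected = suggest_codes(probs)
print(ranked)       # [('A', 0.91), ('B', 0.62), ('C', 0.3), ('E', 0.2), ('D', 0.05)]
print(preselected)  # ['A', 'B']
```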

Error analysis and system limitation

Regarding technical coding performance, we conducted a quantitative error analysis, as we were specifically interested in the extent to which the BERT-based models’ limitation to sequences of 512 tokens affects classification performance compared with fastText, which has no such limitation. As Table 3 shows, fastText classifies narratives with more than 512 tokens slightly better than the transformer-based architectures.

This is plausible, as the shallow neural network architecture in this case generates one summarizing embedding vector of the entire surgery report, which is fed into the last layer for model downstreaming. There is thus no cut-off after 512 tokens, which is a clear limitation of the transformer-based architectures currently in use. This limitation could be overcome by introducing a sliding window over sequence positions beyond 512 tokens, which, however, complicates the training process, as the classification results of overlapping windows have to be merged into a final result. Table 4 presents the performance of the models on narratives with more than 512 tokens when only codes with probabilities greater than 0.5 are predicted. In this evaluation scenario, fastText performs slightly worse than the BERT-based models.
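The sliding-window workaround mentioned above could look roughly like this: split the token sequence into overlapping windows, classify each window, and merge the per-window code probabilities. The window size of 510 (leaving room for the [CLS] and [SEP] tokens), the stride and the max/mean aggregation are assumptions, not settings evaluated in this study.

```python
import numpy as np


def sliding_windows(token_ids, window=510, stride=255):
    """Split a token sequence into overlapping windows of at most `window` tokens.
    Assumes stride <= window, so the last window always reaches the end."""
    if len(token_ids) <= window:
        return [token_ids]
    starts = range(0, len(token_ids) - window + stride, stride)
    return [token_ids[s:s + window] for s in starts]


def merge_window_probs(window_probs, how="max"):
    """Combine per-window code probabilities into one vector per report."""
    probs = np.asarray(window_probs)
    return probs.max(axis=0) if how == "max" else probs.mean(axis=0)


# Toy run with a tiny window so the overlap is visible.
windows = sliding_windows(list(range(10)), window=4, stride=2)
print(windows)  # [[0, 1, 2, 3], [2, 3, 4, 5], [4, 5, 6, 7], [6, 7, 8, 9]]
print(merge_window_probs([[0.1, 0.9], [0.7, 0.2]]))  # [0.7 0.9]
```

Max-pooling keeps a code if any window supports it strongly, whereas averaging penalises codes mentioned only in one part of a long report; which behaves better for procedure coding would have to be evaluated.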

Discussion

In this study, we applied BERT-based models, fastText, CNNs, SVM and LR to multi-label text classification. We used precision, recall, F1-measure and mean average precision as performance metrics. Regarding the investigation questions stated in Section Introduction, we discuss the limitations and strengths of each method in the following contexts:

Good-quality NLP-training data: The secondary use of annotated clinical narratives seems to be a promising approach to obtaining a sufficient amount of training data for supervised machine learning approaches. Generating manually annotated data sets of hundreds of thousands of documents is otherwise not feasible in a reasonable amount of time. Curating already annotated narratives that have accumulated over the years in the HIS for data-driven machine learning approaches is therefore reasonable. This observation is in line with a systematic literature review of automated clinical coding approaches covering studies in MEDLINE and other relevant databases published before March 200922. In about 51% of all studies, reference standards fell into the category of “regular practice”, that is, they reused existing codes at the quality level of clinical routine documentation for evaluation. The other two categories were (i) classical “gold standards”, manually annotated and reviewed by at least two annotators, and (ii) “trained standard” codes manually assigned by a single expert. The quantity and quality of training data affect model performance. BERT, CNNs and fastText are effective in handling large, well-annotated datasets due to their ability to capture semantic relationships, whereas SVM and LR with TF-IDF rely on term frequencies and lack the ability to capture contextual relationships. Notably, fastText offers a middle ground, as it uses sub-word information to handle word semantics.

Complex linguistic problems: The idiosyncratic nature of clinical narratives can be overcome via an end-to-end approach, as long as enough annotated resources are available for the downstreaming process. Such narratives require models that can capture contextual meaning. In this investigation, we used about 350,000 manually annotated surgery notes from clinical routine documentation. While the model can capture the complexity of clinical language, the more crucial factor is the quality and consistency of the codes manually assigned to the notes. The coding quality of clinical routine documentation can be seen as an upper bound for what the supportive coding system can reach. More challenging are systems that try to assign specific codes of a terminology like SNOMED CT within the narratives, together with relations between the information entities. Post-coordination of SNOMED CT codes in clinical narratives is still a challenging task in supporting the FAIR principles of data handling in a clinical setting. BERT-based models are suitable for capturing contextual meaning, and CNNs can handle complex patterns at lower computational cost. SVM and LR, using TF-IDF as a representation scheme, struggle with these tasks, as they treat words independently of their local context. FastText improves over TF-IDF by using sub-word information to handle word morphology, and the overall generated embedding vector approximates, to some extent, the context of the content at a specific granularity level. Although SVM performed slightly better than fastText in our experiments, our parameterized SVM does not account for the context of each label in relation to word sequences in a surgical report. SVM was computationally expensive in our one-vs-all multi-label text classification setting, also at inference time, which increased the processing time for explainability and interpretability when using LIME.

Limited hardware resources: Clinical information systems typically have no GPU clusters available to build large-scale language models from scratch. NLP systems typically rely on language models that can be reused and are openly available to the community. Language models specific to the clinical domain are still lacking. BERT-based models as well as CNNs are computationally intensive, especially when dealing with large data and label sets. They require considerable memory and processing power for training as well as for inference and therefore typically exploit tailored GPU hardware architectures. SVM struggles with scalability when using one-vs-all for multi-label classification, especially when the label set is as large as in our case (1059 procedure codes). In this experiment, fastText provides a balance between performance and efficiency. The investigation has shown that, depending on the task, low-resource models like fastText can reach a performance similar to that of demanding transformer architectures and of an SVM. We therefore chose the less demanding model architecture, with its shorter adaptation time and comparable classification performance. Nevertheless, the deployment and investigation of large language models on premises within an HIS network to support specific processes of clinical routine documentation is needed in the future, also for languages other than English.
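To illustrate the sub-word information that fastText exploits, the following sketch extracts character n-grams in the style of the fastText subword model, where each word is wrapped in the boundary symbols < and > and represented by its n-grams (n = 3 to 6 by default for fastText's unsupervised embeddings). The real library additionally keeps the whole word as a feature and hashes n-grams into buckets, which is omitted here. Morphological variants such as Nagelwall and Nagelwällen share several n-grams and therefore obtain related vectors, although a TF-IDF vocabulary treats them as unrelated terms.

```python
def char_ngrams(word, minn=3, maxn=6):
    """Character n-grams of a word wrapped in the boundary symbols '<' and '>'."""
    token = f"<{word}>"
    return [token[i:i + n]
            for n in range(minn, maxn + 1)
            for i in range(len(token) - n + 1)]


print(char_ngrams("HWI", 3, 3))  # ['<HW', 'HWI', 'WI>']
shared = set(char_ngrams("Nagelwall", 3, 3)) & set(char_ngrams("Nagelwällen", 3, 3))
print(sorted(shared))            # ['<Na', 'Nag', 'age', 'elw', 'gel']
```

Note that the abbreviation HWI yields the same n-grams regardless of whether it stands for Harnwegsinfekt or Hinterwandsinfarkt, which is the homonym limitation of non-contextual representations.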

In conclusion, SVM based on a TF-IDF representation scheme delivered the best classification results, whereas fastText stands out for its efficiency, adaptable explainability and classification performance, making it a more practical option in resource-scarce settings. Future investigations will focus on the technical question of whether large language models can be prompted and adapted on premises to reach a coding performance similar to that shown in this experimental setup. From a user perspective, as a next step we are interested in a qualitative evaluation of the coding support tool to gain more insight into usability aspects.

The contribution of this work lies in the comprehensive comparative evaluation of a diverse set of machine learning models, ranging from traditional classifiers like SVM and LR through shallow neural architectures like CNNs and fastText to BERT-based architectures, for multi-label clinical text classification in German, leveraging codes from routine documentation in a secondary use scenario for model adaptation. This study additionally contrasts classical machine learning methods based on a TF-IDF representation scheme (SVM, LR) with methods leveraging non-contextual (CNN, fastText) and contextual (BERT) embeddings. Furthermore, this work addresses large-scale multi-label clinical text classification by highlighting fastText as a still viable alternative for resource-constrained environments. While fastText is often overlooked in favour of more complex architectures like BERT, this study provides evidence that shallow neural network architectures as used by fastText, with its efficient sub-word embeddings, offer a competitive balance between performance and computational resource consumption, in combination with explainability and interpretability wrappers, which are crucial for practical applications where model transparency is paramount.

Moreover, this investigation offers a deeper understanding of how models scale with data complexity and hardware constraints when supporting an identified coding process in clinical routine documentation. By comparing performance not only in terms of standard metrics like precision, recall and F1-score but also in terms of the ranking of procedure codes and computational demands, this study advances the current discourse on model efficiency and the real-world applicability of multi-label classification in the healthcare sector.

Materials and methods

The Styrian hospital holding (Steiermärkische Krankenanstaltengesellschaft M.B.H. (KAGes)) is a healthcare provider in Styria, one of the nine provinces of Austria, and accounts for 90% of in-patient occupancy in the region. The HIS of KAGes contains longitudinal electronic medical records of the Styrian population, comprising about 2.1 million records. KAGes administers 20 hospitals and three nursing homes in Styria, Austria. For this study, we focused on the operative notes documented for surgeries performed at the University Hospital Graz. The University Hospital Graz, operated by KAGes, has on average approximately 2 million out-patient cases, 90,000 in-patient cases and 30,000 surgeries per year.

Operative notes

Following each surgery, surgeons document an operative note and are expected to identify the appropriate standardized procedure codes for administrative purposes. An example of a surgical report is provided below:

German:

“Es besteht eine massive Entzündung der linken Großzehe am lateralen und am medialen Nagelwall, die durch Vorbehandlung etwas besser geworden ist, dort ist aber der Nagel beidseits eingewachsen.. An beiden Nagelwällen wird eine keilförmige Excision bis weit nach proximal durchgeführt, dadurch der Nagel verschmälert und das Granulationsgewebe entfernt. Auskratzen des Nagelbettes, um keinen Rest der Matrix übrig zu lassen. Adaptierende Nähte. Operation unter lokaler Blutsperre. Anschließend Betaisodona-Salbenverband.”

English:

“There is a massive inflammation of the left big toe on the lateral and medial nail wall, which has improved somewhat with pre-treatment, but the nail has grown in on both sides. A wedge-shaped excision is carried out on both nail walls as far as proximal, thus narrowing the nail and removing the granulation tissue. Curettage of the nail bed to leave no residual matrix. Adapting sutures. Surgery under a local tourniquet. Followed by a Betaisodona (povidone-iodine) ointment dressing.”

MEL codes

The “Medizinische Einzelleistungen” (individual medical services, MEL) catalogue is an Austrian standardized set of codes used to identify and classify healthcare procedures performed by healthcare professionals and organizations in Austria. The MEL code is integrated into the Austrian healthcare system, facilitating the exchange of information between healthcare providers, insurers and government agencies. MEL codes are administered by Austrian health authorities to maintain consistency and accuracy in medical billing and reimbursement processes. From 1 January 2022, the service catalogue forms the binding basis for nationwide service documentation in Austria. It includes inpatient and outpatient procedures: 872 inpatient procedures (e.g. major operations), 856 procedures to be recorded for both outpatient and inpatient stays (e.g. CT, MR, minor operations) and 349 procedures to be recorded only for outpatient visits (e.g. ECG, sonogram, outpatient examinations). An excerpt of descriptions from the catalogue is shown in Table 5.

Descriptive analysis

In KAGes, each patient is allocated a unique patient ID, each separate visit a case ID, and each report a document ID. Each case is linked with specific document types, including operative notes, discharge summaries for hospitalizations, physician reports for outpatient visits and, if applicable, nursing reports. We identified 585,193 surgical reports documented in the database until January 18, 2023, stemming from 504,006 cases involving 319,254 patients. Some documents could not be retrieved because they were not available in XML format. Consequently, we retained 568,639 surgical reports from 493,087 cases and 314,841 patients. Among the retrieved documents, we discarded empty ones, resulting in 566,657 surgical reports from 491,618 cases and 314,186 patients. Next, we isolated the surgical procedure codes linked to cases with surgical text in operative notes, disregarding reports without an assigned surgical procedure code. Consequently, 339,777 documents from 234,408 patients remained. The vast majority (99.27%) of surgical reports were associated with fewer than six procedure codes, leading us to exclude operative notes with more than five surgical codes. The resulting dataset comprises 334,229 de-identified operative notes from 231,258 patients, encompassing 1059 unique surgical procedure codes. The average length of the surgical reports is 218 words. A detailed flow diagram of the data extraction, transformation and loading process is presented in Fig. 2.
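The report-level filtering steps described above can be summarised in a small sketch. The Report record and its fields are hypothetical; in the actual ETL workflow the codes are linked at case level and retrieved from the HIS database, which is not modelled here.

```python
from dataclasses import dataclass, field


@dataclass
class Report:
    """Hypothetical record: one operative note with the codes of its case."""
    doc_id: str
    text: str
    codes: set = field(default_factory=set)


def filter_reports(reports, max_codes=5):
    """Keep non-empty reports with between 1 and max_codes procedure codes."""
    kept = []
    for report in reports:
        if not report.text.strip():
            continue                  # empty document
        if not report.codes:
            continue                  # no surgical procedure code assigned
        if len(report.codes) > max_codes:
            continue                  # rare reports with more than five codes
        kept.append(report)
    return kept


reports = [
    Report("d1", "Keilförmige Excision an beiden Nagelwällen.", {"A"}),
    Report("d2", "   ", {"A"}),
    Report("d3", "Adaptierende Nähte.", set()),
    Report("d4", "Kombinierter Eingriff.", {"A", "B", "C", "D", "E", "F"}),
    Report("d5", "Laparoskopische Cholezystektomie.", {"B", "C"}),
]
print([r.doc_id for r in filter_reports(reports)])  # ['d1', 'd5']
```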

Figure 3 presents the distribution of the number of surgical procedural codes across different categories within the procedural catalogue, as well as the number of operative notes documented at specialized departments within the University Hospital Graz.

Training and testing

The dataset was partitioned into training, validation and test sets using an 80%/10%/10% split. For downstreaming the chosen German BERT model and training a native BERT model, we truncated each text at the technical limit of 512 tokens, which is the upper boundary for the chosen sequence-based models. In contrast, for fastText, CNN, SVM and LR we used the full length of the texts. BERT-based models, fastText and CNN were trained as multi-label classifiers with one model instance per architecture, whereas SVM and LR were trained on a TF-IDF representation in a one-vs-all setting to solve the multi-label assignment task. For the systematic evaluation of the multi-label classification approach, which aims to support the user in the coding process through a pre-selection of the top five codes, we applied the well-established measures precision (P), recall (R) and F1-measure (F1)23. Additionally, to account for the ranking of the top five results, we applied the mean average precision (MAP) for ranked retrieval results. Label Ranking Average Precision (LRAP)24 was monitored on the evaluation set at every epoch of model downstreaming, together with the current training loss.
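A minimal scikit-learn sketch of the classical setup described above: an 80/10/10 split, TF-IDF features with one binary classifier per code (LinearSVC or LogisticRegression wrapped in OneVsRestClassifier), and macro-averaged P/R/F1 plus LRAP via label_ranking_average_precision_score. The toy data and default hyperparameters are assumptions, and the model is scored on its own training data purely to show the API; the study evaluated on the held-out test split.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import label_ranking_average_precision_score, precision_recall_fscore_support
from sklearn.model_selection import train_test_split
from sklearn.multiclass import OneVsRestClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MultiLabelBinarizer
from sklearn.svm import LinearSVC


def split_80_10_10(items, seed=0):
    """80% training, then the remaining 20% halved into validation and test."""
    train, rest = train_test_split(items, test_size=0.2, random_state=seed)
    val, test = train_test_split(rest, test_size=0.5, random_state=seed)
    return train, val, test


def tfidf_one_vs_rest(kind="svm"):
    """TF-IDF features followed by one independent binary classifier per code."""
    base = LinearSVC() if kind == "svm" else LogisticRegression(max_iter=1000)
    return make_pipeline(TfidfVectorizer(), OneVsRestClassifier(base))


# Toy data with hypothetical codes; the real experiment used 1059 MEL codes.
texts = ["nagel excision nagelwall", "nagel keil excision",
         "galle laparoskopie cholezystektomie", "galle cholezystektomie",
         "wunde naht", "wunde naht revision", "nagel naht", "galle naht"]
labels = [{"A"}, {"A"}, {"B"}, {"B"}, {"C"}, {"C"}, {"A", "C"}, {"B", "C"}]
Y = MultiLabelBinarizer().fit_transform(labels)

model = tfidf_one_vs_rest("svm").fit(texts, Y)
scores = model.decision_function(texts)      # one score per (note, code)
Y_pred = model.predict(texts)                # 0/1 indicator matrix
p, r, f1, _ = precision_recall_fscore_support(Y, Y_pred, average="macro", zero_division=0)
lrap = label_ranking_average_precision_score(Y, scores)
print(f"macro P={p:.2f} R={r:.2f} F1={f1:.2f} LRAP={lrap:.2f}")
```

The one-vs-rest wrapper fits one classifier per code, which illustrates why training and inference cost grow with the size of the label set.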

Neural network architectures

Besides our main research interest, namely which performance levels can be reached via a model-based approach, two main architecture types were inspected and evaluated with respect to the aforementioned research questions: a shallow neural network architecture, for which we chose fastText and a CNN as baseline reference models, and a deep, stacked, sequence-model-based architecture, specifically transformer-based architectures, which represent the current state of the art for various NLP applications in the clinical domain.

Shallow neural network

FastText. FastText25,26 is a powerful NLP tool that generates word embeddings, i.e. dense vector representations of the words in a text corpus, and was used to pre-train skip-gram embeddings27 at both sub-word and word level on the training set. The resulting vector representation does not distinguish between homonyms (e.g. HWI “Harnwegsinfekt” (urinary tract infection) versus “Hinterwandsinfarkt” (posterior wall infarct)), but it is context-aware in the sense that, in our approach, each document is modelled as one n-dimensional vector. FastText is known for its efficiency, its speed in training and inference, and its ability to classify text into predefined categories. FastText also provides pre-trained models, saving the time and resources otherwise needed to train custom embeddings. It is language-agnostic, suitable for various languages and open source. Notably, model training can be performed directly on CPUs without the high computational costs of GPUs, which makes fastText a suitable architectural candidate for deployment settings with low hardware resources.
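For supervised training, fastText reads one example per line, with each label prefixed by __label__ and followed by the text. A minimal sketch of converting a coded report into that format is shown below; the function name is hypothetical, and it assumes that codes contain no whitespace.

```python
def to_fasttext_line(text, codes):
    """Format one coded report as a fastText supervised training line:
    '__label__<code>' tokens first, then the report text on a single line."""
    labels = " ".join(f"__label__{code}" for code in sorted(codes))
    one_line = " ".join(text.split())   # newlines would otherwise split the example
    return f"{labels} {one_line}"


print(to_fasttext_line("Adaptierende Nähte.\nOperation unter lokaler Blutsperre.", {"B", "A"}))
# __label__A __label__B Adaptierende Nähte. Operation unter lokaler Blutsperre.
```

With the official fasttext Python package, a file of such lines could be passed to fasttext.train_supervised(input="train.txt", loss="ova"), where loss="ova" selects independent one-vs-all outputs suitable for multi-label prediction, and model.predict(text, k=5) returns the five highest-scoring labels with their probabilities. The file name and settings are illustrative, not the configuration used in this study.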

Convolutional Neural Network (CNN). Unlike fastText, which provides shallow, sub-word-level representations, CNNs can learn deeper hierarchical patterns, including phrase structures and important context-dependent relationships within the text28. CNNs are effective at capturing local dependencies within the input text, which makes them well suited for short- to medium-length text sequences. While CNNs are not explicitly designed to model long-range dependencies, their ability to combine learned n-grams via pooling layers allows them to generalize well across various classification tasks. However, CNNs still fall short of more advanced models like transformers in handling complex sentence-level or document-level relationships. Despite this, CNNs are computationally less intensive than transformer-based models, providing a balance between performance and efficiency.
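The core operation of a text CNN for this task can be sketched in NumPy: filters of several widths slide over the token embeddings, max-over-time pooling keeps the strongest activation per filter, and a sigmoid output layer yields an independent probability per code. This is a simplified forward pass with random weights (no convolution biases, no training loop), assuming a Kim-style architecture; it is not the exact CNN configuration used in this study.

```python
import numpy as np


def text_cnn_forward(embeddings, filter_banks, w_out, b_out):
    """embeddings: (seq_len, dim) token vectors; filter_banks: list of arrays shaped
    (n_filters, width, dim). Requires seq_len >= the widest filter.
    Returns one independent sigmoid probability per code (multi-label output)."""
    pooled = []
    for filters in filter_banks:
        width = filters.shape[1]
        # all runs of `width` consecutive token vectors: (n_positions, width, dim)
        windows = np.stack([embeddings[i:i + width]
                            for i in range(len(embeddings) - width + 1)])
        # ReLU activation of every filter at every position: (n_positions, n_filters)
        acts = np.maximum(np.einsum("pwd,fwd->pf", windows, filters), 0.0)
        pooled.append(acts.max(axis=0))      # max-over-time pooling
    features = np.concatenate(pooled)        # one value per filter
    logits = features @ w_out + b_out
    return 1.0 / (1.0 + np.exp(-logits))


rng = np.random.default_rng(0)
emb = rng.normal(size=(7, 8))                                          # 7 tokens, 8-dim
banks = [rng.normal(scale=0.1, size=(4, w, 8)) for w in (2, 3, 4)]    # 4 filters per width
w_out = rng.normal(scale=0.1, size=(12, 5))                            # 12 features -> 5 codes
probs = text_cnn_forward(emb, banks, w_out, np.zeros(5))
print(probs.shape)  # (5,)
```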

Transformer architecture - BERT

Bidirectional Encoder Representations from Transformers (BERT). Transformers are a state-of-the-art NLP model architecture that uses a self-attention mechanism29 to process textual information efficiently. The original transformer has an encoder-decoder architecture, whereas BERT uses only the encoder stack; since self-attention by itself ignores word order, order information is added through positional embeddings. In contrast to fastText, the resulting contextualized embedding vectors can distinguish homonyms at the token level (e.g. HWI “Harnwegsinfekt” (urinary tract infection) versus “Hinterwandsinfarkt” (posterior wall infarct) receive different embedding representations). Transformers employ multi-head attention, applying the self-attention mechanism multiple times in parallel. Typically, models are pre-trained on large datasets and fine-tuned for specific tasks, which supports transfer learning and yields competitive performance across various NLP tasks. Attention weights additionally provide a degree of interpretability, and transformers have been explored in various experiments in the clinical domain. In this work, we conducted two types of experiments with transformer architectures: i) building a language model from scratch for the language domain under scrutiny, followed by the downstream process for the problem domain; and ii) reusing an existing language model in a transfer learning process for the multi-label classification task. We used approximately 350,000 documents to train a domain-specific language model (surgeryBERT.at) for 20 epochs (see Annex Fig. 1) on a TITAN V GPU. The resulting language model was then used in a downstream process for 50 epochs with an 80:10:10 training/evaluation/test split; the learning statistics are depicted in Annex Fig. 2. The best model, which yielded an LRAP score (see section Training and testing) of 0.85, was chosen for performance evaluation on the held-out test set.
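
The token-level contextualization can be illustrated with a single self-attention head in plain Python. This is a simplified sketch under stated assumptions: the learned query, key, and value projections are replaced by identities, there are no positional embeddings, and the three-dimensional toy vectors are hypothetical. The point is that the same input vector for HWI yields different output vectors depending on the surrounding words.

```python
import math


def softmax(scores):
    m = max(scores)                       # subtract max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X):
    """Single-head scaled dot-product self-attention.

    Simplification (assumption): queries, keys and values are the inputs
    themselves, i.e. the learned projections W_Q, W_K, W_V are identities.
    """
    d = len(X[0])
    outputs = []
    for q in X:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in X])
        outputs.append([sum(w * v[c] for w, v in zip(weights, X)) for c in range(d)])
    return outputs


# Hypothetical static input embeddings; "HWI" has the same input vector in both notes.
hwi = [1.0, 1.0, 0.0]
urology_word = [0.0, 0.0, 2.0]      # e.g. "Dysurie"
cardiology_word = [2.0, 0.0, 0.0]   # e.g. "ST-Hebung"

hwi_in_urology = self_attention([hwi, urology_word])[0]
hwi_in_cardiology = self_attention([hwi, cardiology_word])[0]
print(hwi_in_urology)      # third component > 0: pulled towards the urology context
print(hwi_in_cardiology)   # third component == 0: pulled towards the cardiology context
```

A multi-head layer runs several such heads in parallel, each with its own learned projections, and concatenates their outputs.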
For the second experiment, we used the language model medBERT.de30, at the time of writing the most comprehensive German language model with respect to medical language resources. Its training data include about 370k German electronic health records, which makes it particularly valuable for the task described here. Applying the same downstream setting as described above, we selected the model with the best LRAP score (0.86) to obtain the final results on the test set. The downstream training statistics are shown in Annex Fig. 3.
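
The LRAP criterion used for model selection can be sketched as follows, assuming the standard definition (also implemented by scikit-learn's label_ranking_average_precision_score): for every relevant label, count how many relevant labels are ranked at or above it, divide by its rank, and average over relevant labels and then over documents. The toy scores are hypothetical.

```python
def label_ranking_average_precision(y_true, y_score):
    """Label ranking average precision for multi-label predictions.

    y_true: per-document 0/1 label indicators; y_score: per-document label scores.
    """
    per_sample = []
    for truth, scores in zip(y_true, y_score):
        relevant = [j for j, t in enumerate(truth) if t]
        if not relevant or len(relevant) == len(truth):
            per_sample.append(1.0)   # degenerate case, scored as 1 by convention
            continue
        precisions = []
        for j in relevant:
            rank = sum(1 for s in scores if s >= scores[j])                   # rank of label j
            relevant_at_rank = sum(1 for k in relevant if scores[k] >= scores[j])
            precisions.append(relevant_at_rank / rank)
        per_sample.append(sum(precisions) / len(relevant))
    return sum(per_sample) / len(per_sample)


y_true = [[1, 0, 0], [0, 0, 1]]
y_score = [[0.75, 0.5, 1.0], [1.0, 0.2, 0.1]]
print(label_ranking_average_precision(y_true, y_score))   # approx. 0.4167 (= 5/12)
```

An LRAP of 1.0 means that, for every note, all assigned procedure codes are ranked above all other codes.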

Classical models

Support Vector Machine (SVM). In our study, we used the term frequency-inverse document frequency (TF-IDF)31 representation of text data to capture the importance of terms, and SVMs were able to leverage this high-dimensional feature space effectively32. SVMs are useful when there is a clear margin of separation between classes, which makes them suitable for tasks with well-defined boundaries. However, the TF-IDF bag-of-words representation treats words independently of each other and ignores word order, which limits the ability to capture contextual relationships between words, unlike contextual models such as BERT. Furthermore, while SVMs usually offer strong performance in classification tasks, their computational cost can increase significantly with the number of labels, especially when a one-vs-all approach is used. Nevertheless, their ability to generalize well to unseen data made them a valuable part of our multi-label classification investigation. For this task, we found SVMs to be both computationally and resource intensive.
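
As an illustration of the TF-IDF weighting, the following plain-Python sketch uses one common variant (raw counts times ln(N/df), then L2 normalization); the tokenization, idf smoothing, and normalization used in the study may differ, and the three short notes are hypothetical. Terms occurring in every note, such as "Bericht", receive weight zero, while rarer procedure terms are weighted up.

```python
import math
from collections import Counter


def tfidf(documents):
    """TF-IDF with raw term counts, idf = ln(N / df), and L2 normalization.

    One common variant; libraries differ in idf smoothing and normalization.
    Returns one sparse {term: weight} dictionary per document.
    """
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    df = Counter(term for tokens in tokenized for term in set(tokens))   # document frequency
    vectors = []
    for tokens in tokenized:
        weights = {t: c * math.log(n_docs / df[t]) for t, c in Counter(tokens).items()}
        norm = math.sqrt(sum(w * w for w in weights.values()))
        vectors.append({t: w / norm for t, w in weights.items()} if norm else weights)
    return vectors


notes = [
    "Bericht laparoskopische Appendektomie",
    "Bericht laparoskopische Cholezystektomie",
    "Bericht offene Appendektomie",
]
vectors = tfidf(notes)
for vec in vectors:
    print({t: round(w, 3) for t, w in vec.items()})
```

The linear SVM (one binary classifier per procedure code in the one-vs-all setting) is then trained on such sparse vectors.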

Logistic Regression (LR). Logistic Regression33 is a classical and interpretable machine learning algorithm widely used for binary and multi-class classification. In our study, we applied logistic regression in a one-vs-all framework to handle multi-label classification. Logistic regression applies the logistic function to a weighted linear combination of the input features, mapping it to a probability score for class membership. As with the SVM, we used TF-IDF to represent the text data, transforming it into high-dimensional feature vectors before fitting the logistic regression model. The advantage of logistic regression lies in its simplicity and ease of interpretation, as the learned coefficients provide direct insight into the relationship between the input features and the output class labels. While logistic regression is computationally efficient, especially in high-dimensional settings, its performance is limited when the data are not linearly separable or when complex relationships between features need to be modelled. Unlike deep learning models such as CNN or BERT, logistic regression does not inherently capture semantic or contextual information from text. However, it serves as a robust baseline method for classification tasks due to its straightforward implementation and lower computational cost34.
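
The one-vs-all logistic regression described above can be sketched without external libraries: one binary model per label, trained by batch gradient descent on the log-loss, with a label predicted whenever its probability reaches 0.5. Feature names, label names, learning rate, and epoch count are hypothetical choices for illustration; a production setup would typically use a regularized library implementation instead.

```python
import math
from collections import defaultdict


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dot(weights, features):
    return sum(weights.get(f, 0.0) * v for f, v in features.items())


def train_binary(X, y, lr=0.5, epochs=500):
    """Batch gradient descent on the mean log-loss for one binary problem."""
    w, b, n = {}, 0.0, len(X)
    for _ in range(epochs):
        grad_w, grad_b = defaultdict(float), 0.0
        for x, target in zip(X, y):
            err = sigmoid(b + dot(w, x)) - target   # d(log-loss)/dz
            grad_b += err
            for f, v in x.items():
                grad_w[f] += err * v
        b -= lr * grad_b / n
        for f, g in grad_w.items():
            w[f] = w.get(f, 0.0) - lr * g / n
    return w, b


def fit_one_vs_rest(X, label_sets):
    """One independent binary logistic regression per label (one-vs-all)."""
    labels = sorted(set().union(*label_sets))
    return {lab: train_binary(X, [1.0 if lab in s else 0.0 for s in label_sets])
            for lab in labels}


def predict(models, x, threshold=0.5):
    probs = {lab: sigmoid(b + dot(w, x)) for lab, (w, b) in models.items()}
    return {lab for lab, p in probs.items() if p >= threshold}, probs


# Hypothetical sparse (e.g. TF-IDF) features and procedure labels.
X = [{"appendektomie": 1.0},
     {"cholezystektomie": 1.0},
     {"appendektomie": 1.0, "drainage": 1.0},
     {"cholezystektomie": 1.0, "drainage": 1.0}]
Y = [{"appendectomy"}, {"cholecystectomy"},
     {"appendectomy", "drain_placement"}, {"cholecystectomy", "drain_placement"}]

models = fit_one_vs_rest(X, Y)
labels, probs = predict(models, {"appendektomie": 1.0, "drainage": 1.0})
print(sorted(labels))   # ['appendectomy', 'drain_placement']
```

Because each label has its own model, the learned coefficients can be inspected per procedure code, which is the interpretability advantage mentioned above.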

Explainable AI

Local Interpretable Model-agnostic Explanations (LIME). LIME35 is an explainable AI (XAI) technique that offers comprehensible justifications for the predictions of machine learning models. It emphasizes local interpretability, explaining the model’s prediction for a particular input instance. Because it is model-agnostic, LIME can be used with any machine learning model, including decision trees and neural networks. It works by probing the model with perturbed versions of the input (for text, copies with some words removed) and fitting a simple, interpretable surrogate model, typically a weighted linear model, to the model’s outputs on these perturbations. The simplicity of LIME’s explanations fosters trust and offers insight into model decision-making, which is particularly relevant in healthcare, for example when applying NLP-based decision functions36,37,38.
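
The LIME procedure can be sketched from scratch as follows. This is a simplified illustration, not the lime package (whose LimeTextExplainer samples perturbations randomly): for a short note, every combination of kept and removed words is enumerated, each perturbation is weighted by an exponential kernel on its cosine distance to the original note, and a weighted ridge regression serves as the interpretable surrogate. The black-box classifier, kernel width, and ridge strength are hypothetical.

```python
import itertools
import math


def black_box(tokens):
    """Hypothetical classifier: P(appendectomy code | note tokens)."""
    z = -2.0 + 3.0 * ("appendix" in tokens) + 1.0 * ("laparoscopic" in tokens)
    return 1.0 / (1.0 + math.exp(-z))


def solve(A, b):
    """Gauss-Jordan elimination with partial pivoting for a small dense system A x = b."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def explain(text, predict, kernel_width=0.75, ridge=1e-3):
    """LIME-style local explanation: perturb by dropping words, weight each
    perturbation by proximity to the original, fit a weighted linear surrogate."""
    words = text.split()
    d = len(words)
    rows, targets, weights = [], [], []
    # Enumerate every keep/drop mask (feasible for short texts; LIME samples masks).
    for mask in itertools.product([0, 1], repeat=d):
        kept = [w for w, m in zip(words, mask) if m]
        # Cosine distance to the all-ones mask; the empty mask gets distance 1.
        distance = 1.0 - math.sqrt(sum(mask) / d)
        rows.append([1.0] + [float(m) for m in mask])   # intercept + word indicators
        targets.append(predict(kept))
        weights.append(math.exp(-distance ** 2 / kernel_width ** 2))
    p = d + 1
    # Weighted ridge normal equations: (X^T W X + ridge * I) beta = X^T W y
    A = [[sum(wt * r[i] * r[j] for wt, r in zip(weights, rows)) + (ridge if i == j else 0.0)
          for j in range(p)] for i in range(p)]
    rhs = [sum(wt * r[i] * y for wt, r, y in zip(weights, rows, targets)) for i in range(p)]
    beta = solve(A, rhs)
    return sorted(zip(words, beta[1:]), key=lambda pair: pair[1], reverse=True)


note = "laparoscopic removal of the inflamed appendix"
ranking = explain(note, black_box)
for word, contribution in ranking:
    print(f"{word:>12s} {contribution:+.3f}")
```

The surrogate's coefficients are read as local word contributions: here "appendix" dominates, "laparoscopic" contributes a little, and the remaining words contribute close to nothing.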

Shapley values. Shapley values are a concept from cooperative game theory that fairly allocates the overall outcome of a game among its players according to their contributions. In machine learning, features take the role of players, so Shapley values quantify the importance of each feature for a model’s output, providing a more refined understanding than traditional feature-importance methods. Shapley values can generate explanations for individual predictions, assess the impact of individual features on fairness and bias in AI models, aid in model debugging, and provide both global and local interpretability, with consistency guaranteed by their axiomatic definition. They can be applied to various types of machine learning models, including neural networks and decision trees.
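
For a handful of features, Shapley values can be computed exactly by averaging each feature's marginal contribution over all coalitions S of the other features, weighted by |S|!(n-|S|-1)!/n!. The sketch below does this for a hypothetical three-word model with one interaction; "absent" features are set to a baseline of zero, which is one of several possible conventions. The cost grows exponentially with the number of features, which is why practical tools such as Kernel SHAP approximate these values.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values: weighted average of each player's marginal contribution
    over all coalitions of the other players (exponential cost, small n only)."""
    n = len(players)
    phi = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = set(coalition)
                total += weight * (value(s | {p}) - value(s))
        phi[p] = total
    return phi


def model(x):
    """Hypothetical model score with an interaction between two words."""
    return 2.0 * x["appendix"] + 1.0 * x["drain"] + 3.0 * x["appendix"] * x["laparoscopic"]


instance = {"appendix": 1.0, "drain": 1.0, "laparoscopic": 1.0}
baseline = {f: 0.0 for f in instance}   # "feature absent" reference (an assumption)


def value(coalition):
    # Features in the coalition take their observed value, the rest the baseline.
    return model({f: instance[f] if f in coalition else baseline[f] for f in instance})


phi = shapley_values(list(instance), value)
print(phi)   # approx. {'appendix': 3.5, 'drain': 1.0, 'laparoscopic': 1.5}
```

The interaction term (worth 3 when both "appendix" and "laparoscopic" are present) is split equally between the two words, and the values sum to the difference between the full prediction and the baseline prediction (6.0).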

Saliency analysis. Saliency analysis is a technique used to identify the features or inputs that most strongly affect a model’s output. It can be used to create interpretable explanations, visualize saliency maps, gather human feedback, detect bias, and evaluate the robustness and trustworthiness of AI systems. It can also help reveal reliance on biased features or data during decision-making, so understanding saliency can help improve the performance and fairness of AI systems. More formally, the saliency of an input is defined as the norm of the gradient of the loss function with respect to that input, an approach successfully applied in the general39,40,41 and clinical NLP domains42 for explainable ML systems43.
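
Gradient-based saliency, as defined above, can be illustrated with a toy model in plain Python. The model, its two-dimensional token embeddings, and its parameters are hypothetical, and the gradient of the loss with respect to each token embedding is approximated by central finite differences rather than the automatic differentiation a deep learning framework would use. Each token's saliency is the L2 norm of that gradient.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical two-dimensional token embeddings and model parameters.
EMBEDDINGS = {"the": [0.0, 0.0], "inflamed": [0.5, 0.5], "appendix": [2.0, 0.0]}
W = [1.0, 0.0]   # per-token evidence direction
G = [1.0, 0.0]   # per-token gating direction


def loss(token_vectors, target=1.0):
    """Log-loss of a toy model z = sum_t sigmoid(G . e_t) * (W . e_t)."""
    z = sum(sigmoid(sum(g * x for g, x in zip(G, e))) * sum(w * x for w, x in zip(W, e))
            for e in token_vectors)
    p = sigmoid(z)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def saliency(tokens, h=1e-5):
    """Saliency of each token = L2 norm of dLoss/d(embedding), estimated by
    central differences. Assumes distinct tokens (the result dict is keyed by token)."""
    vectors = [list(EMBEDDINGS[t]) for t in tokens]
    scores = {}
    for i, token in enumerate(tokens):
        grad = []
        for c in range(len(vectors[i])):
            original = vectors[i][c]
            vectors[i][c] = original + h
            up = loss(vectors)
            vectors[i][c] = original - h
            down = loss(vectors)
            vectors[i][c] = original           # restore the embedding
            grad.append((up - down) / (2 * h))
        scores[token] = math.sqrt(sum(g * g for g in grad))
    return scores


print(saliency(["the", "inflamed", "appendix"]))   # "appendix" has the largest saliency
```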

Chen44 demonstrated the effectiveness of SHAP for multi-label classification, but also noted that SHAP can be computationally intensive and is not guaranteed to consistently produce visually appealing results. Saranya and Subhashini45 reviewed different XAI models and found that Grad-CAM++ is more reliable than SHAP for multi-label classification. Among the available XAI methods, we considered LIME preferable for this task because of its simplicity and ease of use, which have made it a popular choice for interpreting text classification models46,47. Furthermore, a comparison of Kernel SHAP, LIME, and Shapley sampling values by Lundberg and Lee48 concluded that LIME is the most computationally efficient method while maintaining a high degree of precision. Hence, for multi-label clinical text classification, we chose LIME over other XAI methods given its simplicity and effectiveness.

Data availability

The datasets generated and/or analyzed during the current study are not publicly available, in accordance with the study approved by the IRB of the Medical University of Graz (30-496 ex 17/18). The datasets reside in a secure on-premises data lake with restricted access rights. For further details about the datasets, please contact the corresponding author.

References

Kroth, P. J. et al. Association of electronic health record design and use factors with clinician stress and burnout. JAMA Netw. Open 2, e199609–e199609 (2019).

Neves, M. & Ševa, J. An extensive review of tools for manual annotation of documents. Brief. Bioinf. 22, 146–163 (2021).

Stenetorp, P. et al. brat: A web-based tool for NLP-assisted text annotation. In Proceedings of the Demonstrations at the 13th Conference of the European Chapter of the Association for Computational Linguistics, 102–107 (Association for Computational Linguistics, Stroudsburg, PA, United States, 2012).

Klie, J.-C., Bugert, M., Boullosa, B., de Castilho, R. E. & Gurevych, I. The INCEpTION platform: Machine-assisted and knowledge-oriented interactive annotation. In Proceedings of the 27th International Conference on Computational Linguistics: System Demonstrations, 5–9 (Association for Computational Linguistics, Santa Fe, New Mexico, USA, 2018).

Kreuzthaler, M. & Schulz, S. Detection of sentence boundaries and abbreviations in clinical narratives. BMC Med. Inf. Decision Making 15, 1–13 (2015).

Dong, H. et al. Automated clinical coding: What, why, and where we are? npj Digital Med. 5, 159 (2022).

Wu, S. et al. Deep learning in clinical natural language processing: A methodical review. J. Am. Med. Inf. Assoc. 27, 457–470 (2020).

Jasmir, J., Nurmaini, S., Malik, R. F. & Tutuko, B. Bigram feature extraction and conditional random fields model to improve text classification clinical trial document. TELKOMNIKA (Telecommun. Comput. Electron. Control) 19, 886–892 (2021).

Kim, Y. Convolutional neural networks for sentence classification. arXiv:1408.5882 (2014).

Sari, W. K., Rini, D. P. & Malik, R. F. Text classification using long short-term memory with GloVe. Jurnal Ilmiah Teknik Elektro Komputer dan Informatika (JITEKI) 5, 85–100 (2019).

Baumel, T., Nassour-Kassis, J., Cohen, R., Elhadad, M. & Elhadad, N. Multi-label classification of patient notes a case study on ICD code assignment. arXiv:1709.09587 (2017).

Scheurwegs, E., Luyckx, K., Luyten, L., Goethals, B. & Daelemans, W. Assigning clinical codes with data-driven concept representation on Dutch clinical free text. J. Biomed. Inf. 69, 118–127. https://doi.org/10.1016/j.jbi.2017.04.007 (2017).

Li, F. & Yu, H. ICD coding from clinical text using multi-filter residual convolutional neural network. Proc. AAAI Conf. Artif. Intell. 34, 8180–8187 (2020).

Jin, Y. et al. Hierarchical attention neural network for event types to improve event detection. Sensors (Basel) 22, 4202 (2022).

Mullenbach, J., Wiegreffe, S., Duke, J., Sun, J. & Eisenstein, J. Explainable prediction of medical codes from clinical text. In Walker, M., Ji, H. & Stent, A. (eds.) Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), 1101–1111, https://doi.org/10.18653/v1/N18-1100 (Association for Computational Linguistics, 2018).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv:1810.04805 (2019).

Rasmy, L., Xiang, Y., Xie, Z., Tao, C. & Zhi, D. Med-BERT: Pretrained contextualized embeddings on large-scale structured electronic health records for disease prediction. NPJ Digit Med. 4, 86 (2021).

Bombieri, M., Rospocher, M., Ponzetto, S. P. & Fiorini, P. SurgicBERTa: A pre-trained language model for procedural surgical language. Int. J. Data Sci. Anal. https://doi.org/10.1007/s41060-023-00433-5 (2023).

Miller, G. Human memory and the storage of information. IRE Trans. Inf. Theory 2, 129–137 (1956).

Raghavendra, S. Introduction to Streamlit. Beginner’s Guide to Streamlit with Python: Build Web-Based Data and Machine Learning Applications, 1–15 (Apress, Berkeley, CA, 2023).

Visani, G., Bagli, E., Chesani, F., Poluzzi, A. & Capuzzo, D. Statistical stability indices for LIME: Obtaining reliable explanations for machine learning models. J. Oper. Res. Soc. 73, 91–101 (2022).

Stanfill, M. H., Williams, M., Fenton, S. H., Jenders, R. A. & Hersh, W. R. A systematic literature review of automated clinical coding and classification systems. J. Am. Med. Inf. Assoc. 17, 646–651 (2010).

Schütze, H., Manning, C. D. & Raghavan, P. Introduction to information retrieval Vol. 39 (Cambridge University Press, Cambridge, 2008).

Tsoumakas, G., Katakis, I. & Vlahavas, I. Mining multi-label data. Data Mining and Knowledge Discovery Handbook, 667–685 (Springer, Boston, MA, 2010).

Joulin, A., Grave, E., Bojanowski, P. & Mikolov, T. Bag of tricks for efficient text classification. arXiv:1607.01759 (2016).

Joulin, A. et al. FastText.zip: Compressing text classification models. arXiv:1612.03651 (2016).

Bojanowski, P., Grave, E., Joulin, A. & Mikolov, T. Enriching word vectors with subword information. arXiv:1607.04606 (2016).

Kim, Y. Convolutional neural networks for sentence classification. In Moschitti, A., Pang, B. & Daelemans, W. (eds.) Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), 1746–1751, https://doi.org/10.3115/v1/D14-1181 (Association for Computational Linguistics, Doha, Qatar, 2014).

Vaswani, A. et al. Attention is all you need. Advances in Neural Information Processing Systems. arXiv:1706.03762 (2017).

Bressem, K. K. et al. MedBERT.de: A comprehensive German BERT model for the medical domain. arXiv:2303.08179 (2023).

Sammut, C. & Webb, G. I. (eds) TF-IDF, 986–987 (Springer US, Boston, MA, 2010).

Joachims, T. Text categorization with support vector machines: Learning with many relevant features, 137–142 (Springer, Berlin, Heidelberg, 1998).

Cox, D. R. Two further applications of a model for binary regression. Biometrika 45, 562–565. https://doi.org/10.1093/biomet/45.3-4.562 (1958).

Bishop, C. M. Pattern recognition and machine learning (Springer, New York, 2006).

Ribeiro, M. T., Singh, S. & Guestrin, C. “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 1135–1144 (2016).

Abdelhalim, N., Abdelhalim, I. & Batista-Navarro, R. T. Training models on oversampled data and a novel multi-class annotation scheme for dementia detection. In Proceedings of the 5th Clinical Natural Language Processing Workshop, 118–124 (2023).

Dhrangadhariya, A., Otálora, S., Atzori, M. & Müller, H. Classification of noisy free-text prostate cancer pathology reports using natural language processing. In International Conference on Pattern Recognition, 154–166 (Springer, 2021).

Dolk, A., Davidsen, H., Dalianis, H. & Vakili, T. Evaluation of LIME and SHAP in explaining automatic ICD-10 classifications of Swedish gastrointestinal discharge summaries. In Scandinavian Conference on Health Informatics, 166–173 (2022).

Li, J., Chen, X., Hovy, E. & Jurafsky, D. Visualizing and understanding neural models in NLP. arXiv:1506.01066 (2015).

Arras, L., Montavon, G., Müller, K.-R. & Samek, W. Explaining recurrent neural network predictions in sentiment analysis. arXiv:1706.07206 (2017).

Chae, J., Gao, S., Ramanathan, A., Steed, C. & Tourassi, G. D. Visualization for classification in deep neural networks. In Workshop on Visual Analytics for Deep Learning. https://www.osti.gov/biblio/1407764 (2017).

Gehrmann, S. et al. Comparing Rule-based and Deep Learning Models for Patient Phenotyping. arXiv:1703.08705 (2017).

Mullenbach, J., Wiegreffe, S., Duke, J., Sun, J. & Eisenstein, J. Explainable prediction of medical codes from clinical text. arXiv:1802.05695 (2018).

Chen, S. Interpretation of multi-label classification models using Shapley values. arXiv:2104.10505 (2021).

Saranya, A. & Subhashini, R. A systematic review of explainable artificial intelligence models and applications: Recent developments and future trends. Decis. Anal. J. 7. https://doi.org/10.1016/j.dajour.2023.100230 (2023).

Radečić, D. LIME vs. SHAP: Which is better for explaining machine learning models? https://towardsdatascience.com/lime-vs-shap-which-is-better-for-explaining-machine-learning-models-d68d8290bb16 (2020).

Li, S. Explain NLP models with LIME & SHAP. https://towardsdatascience.com/explain-nlp-models-with-lime-shap-5c5a9f84d59b (2019).

Lundberg, S. M. & Lee, S.-I. A unified approach to interpreting model predictions. Adv. Neural. Inf. Process. Syst. arXiv:1705.07874 (2017).

Author information

Authors and Affiliations

Contributions

S.V., M.K. and A.A. conducted the experiment(s), analyzed the results and wrote the main manuscript. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Veeranki, S.P.K., Abdulnazar, A., Kramer, D. et al. Multi-label text classification via secondary use of large clinical real-world data sets. Sci Rep 14, 26972 (2024). https://doi.org/10.1038/s41598-024-76424-8


DOI: https://doi.org/10.1038/s41598-024-76424-8
