Abstract

Remote sensing (RS) images contain a wealth of information with expansive potential for applications in image segmentation. However, Convolutional Neural Networks (CNN) face challenges in fully harnessing the global contextual information. Leveraging the formidable capabilities of global information modeling with Swin-Transformer, a novel RS images segmentation model with CNN (GLE-Net) was introduced. This integration gives rise to a revamped encoder structure. The subbranch initiates the process by extracting features at varying scales within the RS images using the Multiscale Feature Fusion Module (MFM), acquiring rich semantic information, discerning localized finer features, and adeptly handling occlusions. Subsequently, Feature Compression Module (FCM) is introduced in main branch to downsize the feature map, effectively reducing information loss while preserving finer details, enhancing segmentation accuracy for smaller targets. Finally, we integrate local features and global features through Spatial Information Enhancement Module (SIEM) for comprehensive feature modeling, augmenting the segmentation capabilities of model. We performed experiments on public datasets provided by ISPRS, yielding notably remarkable experimental outcomes. This underscores the substantial potential of our model in the realm of RS image segmentation within the context of scientific research.

Similar content being viewed by others

Introduction

In recent years, the remarkable development of RS technology has provided strong data support for Earth observation and environmental monitoring1. Increased spatial and spectral resolution of remote sensing images provides access to monitoring natural disasters, expansion of cities, and environmental changes, more accurately. And providing important support for scientific research and resource management2. Alongside the advancement of deep learning, we can identify interesting content in RS images. For example, we can recognize each pixel in remote sensing images through semantic segmentation and apply the results to city planning3, agricultural production4, and other aspects.

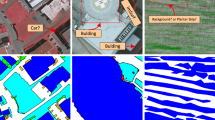

RS images, as shown in Figure 1, contain extensive surface object information (buildings, vegetation, roads, etc.). And at the same time, they are subject to various kinds of interference (cloud cover, atmospheric interference, etc.), which cause the edges of land objects blurred in the images. And different objects may have similar features, which are difficult to distinguish in the segmentation process. Conventional machine learning techniques or deep learning approaches, such as Support Vector Machine (SVM)5 and Convolutional Neural Network (CNN)6, mainly utilize local features to process RS images. However, local features ignore contextual information, with poor recognition of occlusions and robustness of the model. Therefore, different features and global context information need to be fully utilized during method construction to improve the segmentation effect7,8.

Initially, semantic segmentation based on CNN mainly relied on Fully Convolutional Network (FCN)9. Substitute the fully connected layer within the network with a 1\(\times\)1 convolutional layer, enabling the network to process input images of varying sizes and generate corresponding segmentation results. Pixel-level classification results are achieved, establishing the groundwork for semantic information in remote sensing image segmentation. Then, in response to the requirements for detail and contextual information in segmentation tasks, U-Net10 introduced skip connections to splice features generated by the encoder and decoder. To a certain extent, the ability to utilize contextual information of model has been improved, effectively blends deep and shallow features, and raises the precision of semantic segmentation. Furthermore, Deeplab11 introduced void convolution12 and void space pyramid pooling. Cavity convolution extends the sensory field while maintaining the resolution to better capture contextual data and extract features across multiple scales. Also applying the principle of pyramid pooling is PSPNet13, enhancing segmentation accuracy of the model by integrating multi-scale features to capture diverse depths of feature information. Santosh Kumar Tripathy14,15 achieved excellent results by designing different multi-scale feature extraction methods to handle changes in target scales in different scenarios. Ding16 et al. proposed ACNet, which introduced two asymmetric convolution operations, allowing the network to obtain different information in different directions and enhancing expressive ability of network. Saurabh Arora17 proposed a new encoder-decoder model based on multi-modal feature extraction for automatic segmentation of tooth regions, which performs well on panoramic X-ray images. Yan18 performs cluster detection by grouping targets with similar distances and semantic similarities, and uses target density to generate a Gaussian probability map for training a probability prediction model.

The development of attention mechanisms allows models to focus more important features in specific regions19. These developments have improved the ability to restore image details and enhance the precision of semantic segmentation. Woo and colleagues20introduced the spatial channel attention mechanism, a lightweight hybrid module designed to augment feature information in both channel and spatial dimensions within any foundational network, thereby enhancing the model’s performance. Li21 et al. suggested a novel attention mechanism with Segmentation network called SCAttNet, which visualizes the features learned by the attention module on the basic of adaptive feature refinement, strengthens the connection between different features, and enhances the segmentation accuracy of the employed model. Fu22 et al. proposed DANet,introducing spatial and channel attention mechanisms aimed at enhancing the segmentation performance in scene segmentation tasks. Zhou23,24,25 proposed the ECA-MobileNetV3(large)+Seg-Net and Liu26,Xuo27 proposed other remote sensing image processing methods, aiming to balance both the accuracy of remote sensing images and the computational efficiency for tasks like object detection. Cheng28 developed the ”Advanced Fusion Block” (AFB), which achieves paired cross-fusion of low-resolution features, reconstructed features, and lighting features.

Although CNN has good results in RS image segmentation with the addition of attention mechanisms. However, owing to the inherent limitation of convolution operation, each convolution mainly prioritizes or emphasizes local information, and it is difficult to combine contextual information and global information for modeling29. Success of Transformer provides a new idea to solve this problem. With the integration of the multi-head self-attention mechanism, Transformer model adeptly captures comprehensive global contextual information. Chen30 et al. developed a dual-path Transformer architecture to acquire features across various scales and achieved good experimental results. Henaff31 et al. proposed Swin-Transformer to achieve good research progress in medical image segmentation. The common point of these researches is decreasing the computational overhead of the Transformer by different methods, so that the Transformer can be applied to high-resolution image segmentation. However, this reduces the capacity of model to gather features.

Inspired by above studies, we propose GLE-Net, which combines CNN32 and Transformer33 to fuse both global and local information to perform segmentation on RS images. For main branch, we use Swin-Transformer and FCM modules to acquire comprehensive global contextual information while mitigating the omission of nuanced features. For sub-branch, we employ the MFM module to capture features from RS images across varying scales. Finally, through the SIEM module, the fusion of feature information from primary and auxiliary branches optimally utilizes global and local data, enhancing the segmentation accuracy of feature targets in RS images. The primary contributions of this paper are outlined as follows:

-

(1)

We propose a new semantic segmentation network GLE-Net. Through CNN and Swin-Transformer, global and local contextual information are extracted respectively to achieve fusion of features across multiple scales.

-

(2)

We propose a Multi-scale Feature-extraction Module (MFM) for extracting local features with different scales of RS images, processing small-scale features that are easy to be neglected, reducing information degradation, and enhancing the role of small-scale features in model.

-

(3)

We introduce a Spatial Information Enhancement Module (SIEM) designed to integrate CNN and Swin-Transformer features. And a Feature Compression Module (FCM) is added to main branch to minimize information loss and enhance the capability to distinguish high similarity features of model.

Related work

CNN applied to RS image segmentation

For the last few years, semantic segmentation of RS images based on CNNs has garnered considerable interest and has been extensively investigated by numerous researchers, driven by some important competitions34. Researchers have posited numerous innovative approaches to enhancing the effectiveness of CNNs for semantic segmentation of RS images. SegNet35 achieved notable performance by down-sampling feature resolution during encoding and subsequently reinstating it through the fusion of diverse levels of semantic and spatial information during decoding. HRNet36 was proposed, which introduces a multi-branch parallel convolutional structure for generating feature maps at various scales and devises a module that dynamically adjusts spatial pooling to integrate greater local contextual information. FactSeg37 proposed a symmetric two-branch decoder for multiscale feature fusion via jump connections to enhance the precision of segmenting small-scale targets.

Dilated convolution38 varied the convolution operation by introducing intervals (called dilatation rate) in convolution kernel, which effectively enlarges the convolution kernel’s effective perceptual field, augmenting the capacity to comprehend global information. ICNet39 introduced dilatation convolution to improve the capture of contextual information within the image while preserving high-resolution details. PSPNet13 introduced a pyramid-pooling strategy aimed at acquiring contextual information at multiple scales, thereby enhancing the perception of both global and local features.

In addition, the attention mechanisms are also widely used in this area of research. For example, Li40 et al. combined the attention mechanism with pyramid networks to enhance multi-scale feature learning. GCNet41 combined the ideas of non-local networks and SENet42 to synthesize the advantages of both. Non-local networks are able to capture global contextual information, while SENet is able to dynamically adjust the weights between channels to enhance feature representation. Xing’s research43 employed a parallel-serial graph attention module and a multi-head graph attention mechanism to focus on important points or features and assist in their fusion.

Overall, approaches based on CNN have greatly contributed to the advancement of semantic segmentation of RS images. However, the attributes involving considerable feature scale variations and notable similarity present fresh challenges, necessitating more refined spatial features and comprehensive global information. Addressing this, various approaches have combined multi-scale features and attention mechanisms to grasp distant relationships and elevate feature enhancement. Although yielding promising results, further exploration is required to augment the extraction of global information.

Application of transformer in visual processing

Transformer44 introduced the Self-Attention mechanism , which facilitates the model to dynamically allocate attention to various locations of information while processing sequential data. The model was mainly used Within the domain of computational linguistics, especially in machine translation tasks with great success. In the realm of image processing, researchers gradually transitioned to applying the Transformer. DETR11 applied the Transformer to the task of target detection by segmenting the image into small chunks and encoding them into sequences, and then utilized the Transformer model for target detection, achieving end-to-end target detection while making a significant breakthrough in efficiency. Vision Transformer (ViT)45 split an image into fixed-size chunks, encoding spatial information from the image into a serialized format, subsequently leveraging the modeling capabilities inherent in the Transformer architecture for tasks associated with image classification. ViT46 is applied to the task of semantic segmentation of an image, and by modifying the original ViT model to adapt to the segmentation task, and achieved good segmentation results. CLIP47 combined text and image as inputs, and trained on large-scale text and image datasets, which facilitated the model in comprehending the correlation between images and textual information. CoTNet48 combined the ideas of convolution and Transformer, and was applied to target detection and instance segmentation tasks, achieving efficient performance. Liu32 et al. introduced an innovative Transformer-based architecture designed for image categorization, called Swin-Transformer, which achieved excellent data efficiency by introducing a block-based visual perception strategy. Hu49 et al. leveraged the Swin-Transformer as the foundational architecture for devising a semantic segmentation model tailored for medical images. This model employed a U-shaped encoder-decoder framework integrated with Swin-Transformer-based dual encoders, allowing for the extraction of feature representations across diverse semantic scales.

Transformer is mainly designed for sequence modeling and is less adept at handling spatial information in images, especially for capturing local correlations between pixels, compared to convolutional neural networks (CNNs). Moreover, for semantic segmentation tasks, there is a high sensitivity to positional information, and Transformer does not have a mechanism to handle positional information. To solve this problem, many researchers have combined CNN and Transformer to utilize the advantages of both for better performance on semantic segmentation tasks.

Method

Overall framework of GLE-Net

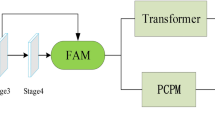

As illustrated in Fig. 2, our proposition involves a two-branch network divided into four stages, aiming to extract features of diverse depths. Each stage is equipped with multiple Swin-Transformer blocks dedicated to extracting the global contextual information inherent in RS images. Each stage relates to FCM, we add extended convolutional processing for extracting fine features, avoiding the omission of a large amount of key information during feature compression, and improving the segmentation ability for small targets of the model. On the sub-branch, we use the residual network to perform preliminary feature extraction on the image at first. Following this, the MFM module is utilized to extract features across multiple scales, effectively encompassing the entirety of local contextual information present in remote sensing imagery.

Finally, the SEIM module integrates information extracted by the CNN for local details and that extracted by the Swin-Transformer block for global context. This fusion establishes a correlation between local and global information, enhancing segmentation capabilities.

MFM module

Global information of the image does not only come from the main branch. In the auxiliary branch we introduce the MFM module for extracting the RS image features across various scales in preparation for the subsequent feature fusion. Fig. 3 illustrates the MFM module.

In this structure, we introduce two branches for different feature extraction and channel information enhancement. For the first branch we design hollow convolution of multiple coefficients. The input feature F of size is reduced by a convolution firstly. Then it goes through three expansion convolutions with coefficients 6, 12, and 18 respectively. Feature information under different receptive fields is extracted by varying the size of the rate, where i =1,2,3. features with different scales are spliced to obtain after one convolution. After another convolution and matrix multiplication with the original feature F, we obtain \(F_1\). In this process, we can well extract the feature information under different sensory fields.

where \(\oplus\) denotes element-level summation and \(\otimes\) denotes matrix multiplication.

On another branch, we were inspired by the CBAM attention mechanism and improve it. The input feature F is undergoes both average pooling and maximum pooling operations, resulting in two\(\ 1 \times 1 \times C\) feature maps, respectively. Subsequently, it proceeds through a densely connected layer and undergoes Sigmoid activation before being matrix-multiplied with the original input feature F, resulting in the acquisition of feature \(F_2\). The specific process is publicized as follows:

where \(\otimes\) represents matrix multiplication, and \(\sigma\) represents the Sigmoid activation.

Finally, the multiscale features and the results processed by channel attention mechanism are fused by a summation operation. The first branch can generate effective feature representations in channel dimension, and the second branch generates feature maps across varying scales, complementing the first branch to articulate comprehensive spatial and semantic information simultaneously, encompassing rich spatial details.

SIEM module

Swin-Transformer mainly relies on self-attention mechanism to deal with spatial information and does not establish spatial relationships between pixels. To mitigate the impact of feature occlusion within RS images, we introduce the SEIM module to augment the exploitation of spatial information in the model, as depicted in Fig. 4. The SIEM module handles inputs from both the primary and auxiliary branches. To reconstruct the features from the primary branch, we initially employ \(\ 3 \times 3\) convolutions. Subsequently, for the preservation of detailed information and mitigation of overfitting, we perform separate operations along the width and height directions.

This includes employing average pooling along the width and maximum pooling along the height to retain crucial information within the feature map and reduce the impact of noise in the image. After pooling, the feature sizes are\(\ H \times 1 \times C\),\(\ 1 \times W \times C\). Then by matrix multiplication, the size is restored to\(\ H \times W \times C\). The output feature of the MFM module is passed through a soft pooling, which better preserves the fine features and retains the local spatial information. The output F of the SIEM module is obtained by splicing with the output to establish the connection between pixel spaces. The process is shown in the following equation :

where \(\oplus\) denotes element-level summation and \(\otimes\) denotes matrix multiplication.

FCM module

In traditional research, each stage is connected to each other by a maximum pooling layer, which effectively diminishes the width and height dimensions of the input features, thereby reducing the model’s computational demands and parameter count. However, the simple maximum pooling process loses much detail and structural information. To further preserve detailed information, expand the receptive field, and enhance the model’s capacity to segment densely-targeted images, we devised a feature compression module leveraging average pooling and dilation convolution, outlined in Fig. 5.

The output features from Swin-Transformer go into two branches. First through \(\ 1 \times 1\) convolution kernel, then through \(\ 3 \times 3\), rate = 2 expansion convolution for feature extraction; and finally through a\(\ 1 \times 1\) convolution kernel. Without increasing the parameters, the receptive field is increased to retain more detailed information, better capture subtle features, provide global information, and reduce the risk of overfitting the model.

The other branch passes through average pooling, in contrast to maximum pooling, this method maintains intricate details, refines small-scale features, and fortifies the stability of model. The features after average pooling are upscaled by\(\ 1 \times 1\) convolution and summed with the result of the previous branch. The public announcement is as follows:

where \(\oplus\) denotes the addition of elements.

Experiment

Dataset

Vaihingen dataset: The Vaihingen dataset comprises 33 genuine photographic images collected across a wide area using sophisticated airborne sensors. These images have been categorized into eleven unique labels specifically for semantic segmentation purposes. Following previous studies50,51, eleven images were chosen for training purposes, while five images were reserved for testing. All images were resized to dimensions of \(\ 512 \times 512\) .

Potsdam dataset: the Potsdam dataset consists of 38 patches, all sized at \(\ 6000 \times 6000\) pixels, extracted from a very high-resolution TOP mosaic with a Ground Sampling Distance of 5 cm. This dataset showcases complex buildings and a dense concentration of subsidence structures. Labeled with six distinct categories intended for semantic segmentation research. In line with previous research52, our experiments involved testing using 14 images, while 24 images were trained. Similar to previous approaches, these original images were resized to 512 \(\times\) 512 for analysis and processing.

Experimental details

Our network architecture is implemented using the PyTorch framework for model construction. The model is trained using an SGD optimizer, leveraging a momentum of 0.9 and a weight decay of 1e−4. The initial learning rate is set to 0.01, employing a decay strategy based on the ”Poly” method. Tests are conducted on RTX 3090, utilizing a batch size of 16 and a maximum calendar element of 100 for streamlined and efficient processing.

To assess the model’s performance, we employ various benchmarks as assessment standards. The Overall Accuracy (OA) scrutinizes general classification capability, while Mean Intersection Union (MIoU) evaluates the precision of segmentation. Additionally, Mean F1 score serves to evaluate the performance across distinct categories.These indicators depend on a confusion matrix, including True Positive (TP), False Positive (FP), True Negative (TN), and False Negative (FN), computed as follows:

Ablation experiment

To appraise the effectiveness of the proposed network architecture and its integral modules, we conduct ablation studies on the Vaihingen dataset. This empirical investigation involves systematically modifying components while employing the Swin-Transformer (ST) as the foundational baseline model.

Effect of MFM: Table 1 indicates that incorporating MFM within the ST framework yields improvements in segmentation results: a 2.50\(\%\) increase in MIoU, 2.11\(\%\) in MeanF1, and 1.05\(\%\) in OA. Notably, the ”Tree” category displays the most significant accuracy enhancement, with a 3.04\(\%\) increase in IoU, followed by a 1.7\(\%\) increase for the ”Car” category. For better visual comparison, Fig. 6 illustrates the segmentation outcomes. The first and second rows demonstrate how MFM mitigates segmentation errors due to light variations and skylights on rooftops. In the third row, plants growing atop a ”building”, resembling ”low vegetation”, remain distinguishable post SIEM application. These experiments underscore that leveraging MFM to integrate global context information effectively heightens segmentation accuracy, particularly for similar objects.

Effect of SIEM: According to Table 1, employing SIEM independently results in improvements of 2.09\(\%\), 2.74\(\%\), and 2.04\(\%\) in MIoU, MeanF1, and OA, respectively, validating its efficacy in our network. In remote sensing images, discerning semantic features of ”car” and ”low vegetation” amid occlusion by ”trees” presents challenges for extraction and recognition. Upon integrating SIEM, the model demonstrates a 1.24\(\%\) enhancement for ”car”, 0.69\(\%\) for ”low vegetation”, and 2.46\(\%\) for ”tree” categories. For instance, in the first row of Fig. 6, tall ”trees” obstruct a significant portion of the ”cars”, leading the model to struggle in delineating car boundaries and potentially merging two cars into one (boundary fusion phenomenon). Similarly, in the third row, the visual similarity between ”Tree” and ”Low Vegetation” results in the ”Tree” being embedded within the ”Low Vegetation”, causing difficulty in accurately delineating the ”Tree” area. This reduction in the negative impact of object occlusion is evident in the visualization results displayed in Fig. 6 , showcasing SIEM’s effectiveness.

Effectiveness of FCM: According to the findings in Table 1, employing FCM within the ST framework results in a 2.34\(\%\) increase in MIoU, a 3.09\(\%\) increase in MeanF1, and a negligible decrease of 1.98\(\%\) in OA. Notably, the most substantial impact of the FCM model is observed in the ”Car” category, exhibiting a notable 3.26\(\%\) increase in IoU. The comparison results are visually depicted in Fig. 6, FCM effectively delineates its semantic region, highlighting its efficacy in enhancing segmentation accuracy for small-scale features. For example, ”Car” and ”Building” features are successfully extracted and segmented the second and third rows.

The combined effects of modules within the ST framework are explored, as detailed in Table 1. Introducing both MFM and SIEM leads to improvements of 2.76\(\%\) in MIoU, a slight decrease of 1.93\(\%\) in MeanF1, and an increase of 3.17\(\%\) in OA. Integrating both MFM and FCM results in a 2.39\(\%\) increase in MIoU, a decrease of 1.27\(\%\) in MeanF1, and an increase of 3.24\(\%\) in OA. Incorporating both SIEM and FCM leads to a 3.54\(\%\) increase in MIoU, a marginal 2.01\(\%\) increase in MeanF1, and a 2.67\(\%\) increase in OA. The comprehensive ST model incorporating all three essential modules (MFM, SIEM, and FCM) showcases a substantial increase of 3.68\(\%\) in MIoU, 2.17\(\%\) in OA, and 3.35\(\%\) in MeanF1 compared to the base ST model.

Comparison

We compare the proposed GLE-Net with a number of existing methods, including FCN9, UNet10, Deeplab V3+53, PSPNet13, TransUNet54 and Swin-UNet49. The initial set of four comparative approaches leverage traditional CNNs, contrasting with the subsequent two methodologies grounded in the Transformer architecture.

Results for Vaihingen dataset: Table 2 displays the quantitative outcomes of various semantic segmentation methods applied to the Vaihingen dataset. Our proposed GLE-Net outperforms other methods across MIoU, MeanF1, and OA metrics. Deeplab V3+ integrates an Atrous Spatial Pyramid Pooling (ASPP) module alongside a meticulously engineered decoder structure, achieving commendable results among CNN-based models. UNet, while integrating spatial information through skip connections, slightly lags behind Deeplab V3+ in segmentation performance. Notably, GLE-Net demonstrates significant enhancements over Deeplab V3+, improving MIoU by 5.25\(\%\), Mean.F1 by 0.52\(\%\), and OA by 0.41\(\%\).

On the other hand, Swin-UNet, employing a pure Swin-Transformer structure, falls short compared to other methods. Despite the superior global modeling capabilities of Swin-Transformer blocks, their stacking in Swin-UNet doesn’t suffice for RS images. This underscores the feasibility and efficacy of combining CNN and Transformer architectures to yield improved results. GLE-Net, compared to Trans-UNet, exhibits greater effectiveness with a remarkable increase of 6.28\(\%\), 0.94\(\%\), and 2.2\(\%\) in MIoU, Mean.F1, and OA, respectively. Figure 7 represents the prediction results of various segmentation experiments detailed in Table 2. Observations reveal that GLE-Net effectively mitigates segmentation errors, especially for ground objects exhibiting high visual resemblance. Notably, GLE-Net showcases accurate identification.As shown in Fig. 7, the model distinguishes ”building” from ”impervious surface” despite material similarity observed in other methods. Furthermore, GLE-Net demonstrates consistent performance in effectively delineating dense and small-scale ground objects, as seen in the second row example.

Results on the Potsdam dataset: Table 3 displays the segmentation outcomes of various methods on the Potsdam dataset, showcasing the effectiveness of our proposed GLE-Net. GLE-Net achieves notable scores: 87.33\(\%\) on MIoU, 85.55\(\%\) on Mean.F1, and 86.04\(\%\) on OA, surpassing other methods. Generally, the Potsdam dataset exhibits higher segmentation accuracy compared to the Vaihingen dataset due to differences in sizes and data types. It’s noteworthy that TransUNet surpasses traditional CNN models in Table 3, due to a hybrid structure, highlighting limitations in global dependency description by CNN-based models. However, Swin-UNet, despite its global modeling capabilities, remains inferior, emphasizing the importance of spatial information extraction for RS images. In comparison to Swin-UNet, GLE-Net improves MIoU by 0.09\(\%\), Mean.F1 by 2.51\(\%\), and OA by 2.52\(\%\). Notably, across all categories, GLE-Net consistently achieves enhanced segmentation accuracy compared to other comparative methods.

Figure 8 visualizes the segmentation results. In the first row, similarities in colors between ”Low vegetation” and ”Impervious surface” pose challenges in distinction. Despite this, GLE-Net effectively leverages global context and local features to maintain relatively accurate inference. The third row demonstrates GLE-Net’s accurate differentiation of ”impervious surfaces” amidst crowded ”buildings” and ”low vegetation”, outperforming other methods. Furthermore, our model excels in identifying the ”tree” region within ”low vegetation” and delineating elongated ”impervious surfaces” sandwiched between various land covers.

Conclusion

This paper introduces GLE-Net, a novel hybrid model that merges CNN and Swin-Transformer architectures for the semantic segmentation of images captured through remote sensing. GLE-Net leverages the merits of both CNN and Swin-Transformer, enabling the learning of local and global features. It incorporates SIEM, a MFM module within CNNs, facilitating multiscale feature learning for effective fusion of deep and shallow features. Additionally, GLE-Net introduces the FCM module in the Swin-Transformer phase to enhance fine feature retention and reduce feature size. The efficacy of our approach is validated through extensive comparative tests and ablation experiments conducted on ISPRS Vaihingen and Potsdam high-resolution remote sensing datasets. As future work, we aim to further refine our network structure and delve deeper into exploring the potential and applications of CNN and Swin-Transformer in the realm of RS.

Data availability

Publicly accessible data have been used by the authors. The dataset can be downloaded from the following website: https://www.isprs.org/education/benchmarks/UrbanSemLab/default.aspx

References

Bi, H., Xu, F., Wei, Z., Xue, Y. & Xu, Z. An active deep learning approach for minimally supervised polsar image classification. IEEE Trans. Geosci. Remote Sens. 57(11), 9378–9395 (2019).

Yao, H., Qin, R. & Chen, X. Unmanned aerial vehicle for remote sensing applications-A review. Remote Sens. 11(12), 1443 (2019).

Li, R., Zheng, S., Duan, C., Wang, L. & Zhang, C. Land cover classification from remote sensing images based on multi-scale fully convolutional network. Geo-spatial Inform. Sci. 25(2), 278–294 (2022).

Ding, L., Zhang, J. & Bruzzone, L. Semantic segmentation of large-size VHR remote sensing images using a two-stage multiscale training architecture. IEEE Trans. Geosci. Remote Sens. 58(8), 5367–5376 (2020).

Pal, M. & Mather, P. M. Support vector machines for classification in remote sensing. Int. J. Remote Sens. 26(5), 1007–1011 (2005).

Cao, X., Yao, J., Xu, Z. & Meng, D. Hyperspectral image classification with convolutional neural network and active learning. IEEE Trans. Geosci. Remote Sens. 58(7), 4604–4616 (2020).

Krähenbühl, P. & Koltun, V. Efficient inference in fully connected CRFS with Gaussian edge potentials. Adv. Neural Inform. Process. Syst. 24, 109–117 (2011).

Ding, L., Tang, H. & Bruzzone, L. Lanet: Local attention embedding to improve the semantic segmentation of remote sensing images. IEEE Trans. Geosci. Remote Sens. 59(1), 426–435 (2020).

Long, J., Shelhamer, E. & Darrell, T. Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3431–3440

Ronneberger, O., Fischer, P. & Brox, T. U-net: Convolutional networks for biomedical image segmentation. In: Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18, pp. 234–241. Springer

Carion, N., Massa, F., Synnaeve, G., Usunier, N., Kirillov, A. & Zagoruyko, S. End-to-end object detection with transformers. In: European Conference on Computer Vision, pp. 213–229. Springer

Chen, L.-C., Papandreou, G., Kokkinos, I., Murphy, K. & Yuille, A. L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFS. IEEE Trans. Pattern Anal. Mach. Intell. 40(4), 834–848 (2017).

Zhao, H., Shi, J., Qi, X., Wang, X., & Jia, J. (2017). Pyramid scene parsing network. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 2881-2890).

Tripathy, S. K., Kostha, H. & Srivastava, R. Ts-mda: Two-stream multiscale deep architecture for crowd behavior prediction. Multimedia Syst. 29(1), 15–31 (2023).

Tripathy, S. K., Sudhamsh, R., Srivastava, S. & Srivastava, R. Must-pos: Multiscale spatial-temporal 3d Atrous-net and PCA guided OC-SVM for crowd panic detection. J. Intell. Fuzzy Syst. 42(4), 3501–3516 (2022).

Ding, X., Guo, Y., Ding, G. & Han, J. Acnet: Strengthening the kernel skeletons for powerful cnn via asymmetric convolution blocks. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 1911–1920

Arora, S., Tripathy, S. K., Gupta, R. & Srivastava, R. Exploiting multimodal CNN architecture for automated teeth segmentation on dental panoramic X-ray images. Proc. Inst. Mech. Eng. 237(3), 395–405 (2023).

Yan, P. et al. Clustered remote sensing target distribution detection aided by density-based spatial analysis. Int. J. Appl. Earth Obs. Geoinf. 132, 104019 (2024).

Xu, K., Ba, J., Kiros, R., Cho, K., Courville, A., Salakhudinov, R., Zemel, R. & Bengio, Y. Show, attend and tell: Neural image caption generation with visual attention. In: International Conference on Machine Learning, pp. 2048–2057. PMLR

Woo, S., Park, J., Lee, J.-Y. & Kweon, I.S. Cbam: Convolutional block attention module. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 3–19

Li, H., Qiu, K., Chen, L., Mei, X., Hong, L., Tao, C. Scattnet: Semantic segmentation network with spatial and channel attention mechanism for high-resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 18(5), 905–909 (2020)

Fu, J., Liu, J., Tian, H., Li, Y., Bao, Y., Fang, Z. & Lu, H. Dual attention network for scene segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3146–3154

Zhou, G., Liu, W., Zhu, Q., Lu, Y. & Liu, Y. Eca-mobilenetv3 (large)+ Segnet model for binary sugarcane classification of remotely sensed images. IEEE Trans. Geosci. Remote Sens. 60, 1–15 (2022).

Zhou, G. & Liu, X. Orthorectification model for extra-length linear array imagery. IEEE Trans. Geosci. Remote Sens. 60, 1–10 (2022).

Zhou, G. et al. Orthorectification of fisheye image under equidistant projection model. Remote Sens. 14(17), 4175 (2022).

Liu, K. et al. On image transformation for partial discharge source identification in vehicle cable terminals of high‐speed trains. High Voltage (2024).

Xu, H., Li, Q. & Chen, J. Highlight removal from a single grayscale image using attentive GAN. Appl. Artif. Intell. 36(1), 1988441 (2022).

Cheng, D., Chen, L., Lv, C., Guo, L. & Kou, Q. Light-guided and cross-fusion u-net for anti-illumination image super-resolution. IEEE Trans. Circuits Syst. Video Technol. 32(12), 8436–8449 (2022).

Zeiler, M.D. & Fergus, R. Visualizing and understanding convolutional networks. In: Computer Vision-ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part I 13, pp. 818–833. Springer

Chen, J., Shen, D., Chen, W. & Yang, D. Hiddencut: Simple data augmentation for natural language understanding with better generalization. arXiv preprint arXiv:2106.00149 (2021).

Henaff, O. Data-efficient image recognition with contrastive predictive coding. In: International Conference on Machine Learning, pp. 4182–4192. PMLR

Zhou, L., Zhang, C. & Wu, M. D-linknet: Linknet with pretrained encoder and dilated convolution for high resolution satellite imagery road extraction. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 182–186

Liu, Z., Lin, Y., Cao, Y., Hu, H., Wei, Y., Zhang, Z., Lin, S. & Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 10012–10022

He, X. et al. Swin transformer embedding UNET for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 60, 1–15 (2022).

Badrinarayanan, V., Kendall, A. & Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39(12), 2481–2495 (2017).

Sun, K. et al. High-resolution representations for labeling pixels and regions. arXiv preprint arXiv:1904.04514 (2019).

Ma, A., Wang, J., Zhong, Y. & Zheng, Z. Factseg: Foreground activation-driven small object semantic segmentation in large-scale remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 60, 1–16 (2021).

Yu, F. Multi-scale context aggregation by dilated convolutions. arXiv preprint arXiv:1511.07122 (2015).

Zhao, H., Qi, X., Shen, X., Shi, J. & Jia, J: Icnet for real-time semantic segmentation on high-resolution images. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 405–420

Li, R., Wang, L., Zhang, C., Duan, C. & Zheng, S. A2-FPN for semantic segmentation of fine-resolution remotely sensed images. Int. J. Remote Sens. 43(3), 1131–1155 (2022).

Cao, Y., Xu, J., Lin, S., Wei, F. & Hu, H. Gcnet: Non-local networks meet squeeze-excitation networks and beyond. In: Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, pp. 0–0

Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7132–7141

Xing, J., Yuan, H., Hamzaoui, R., Liu, H. & Hou, J. Gqe-net: A graph-based quality enhancement network for point cloud color attribute. IEEE Trans. Image Process. 32, 6303–6317 (2023).

Vaswani, A. Attention is all you need. Advances in Neural Information Processing Systems (2017).

Dosovitskiy, A. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020).

Strudel, R., Garcia, R., Laptev, I. & Schmid, C: Segmenter: Transformer for semantic segmentation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 7262–7272

Radford, A., Kim, J.W., Hallacy, C., Ramesh, A., Goh, G., Agarwal, S., Sastry, G., Askell, A., Mishkin, P. & Clark, J: Learning transferable visual models from natural language supervision. In: International Conference on Machine Learning, pp. 8748–8763. PMLR

Dai, Z., Liu, H., Le, Q. V. & Tan, M. Coatnet: Marrying convolution and attention for all data sizes. Adv. Neural Inform. Process. Syst 34, 3965–3977 (2021).

Cao, H., Wang, Y., Chen, J., Jiang, D., Zhang, X., Tian, Q. & Wang, M: Swin-unet: Unet-like pure transformer for medical image segmentation. In: European Conference on Computer Vision, pp. 205–218. Springer

Volpi, M. & Tuia, D. Dense semantic labeling of subdecimeter resolution images with convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 55(2), 881–893 (2016).

Liu, Y., Minh Nguyen, D., Deligiannis, N., Ding, W. & Munteanu, A. Hourglass-shapenetwork based semantic segmentation for high resolution Aerial imagery. Remote Sens. 9(6), 522 (2017).

Mou, L., Hua, Y. & Zhu, X. X. Relation matters: Relational context-aware fully convolutional network for semantic segmentation of high-resolution aerial images. IEEE Trans. Geosci. Remote Sens. 58(11), 7557–7569 (2020).

Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F. & Adam, H: Encoder-decoder with atrous separable convolution for semantic image segmentation. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 801–818

Chen, J. et al. Transunet: Transformers make strong encoders for medical image segmentation. arXiv preprint arXiv:2102.04306 (2021).

Funding

This work was supported by Chongqing Technology Innovation and Application Development Project:No.2023TIAD-GPX0007, National Key Research and Development Program: SQ2024YFE0200856, SQ2024YFB4700221, Youth Project of Science and Technology Research Program of Chongqing Municipal Education Commission: KJQN202103407, Sichuan Science and Technology Program 2023JDRC0033 and Luzhou Science and Technology Program 2021-JYJ-92, cooperative projects between universities in Chongqing and the Chinese Academy of Sciences, grant number Grant HZ2021015, Young Project of Science and Technology Research Program of Chongqing Education Commission of China, grant number KJQN202001513 and KJQN202101501.

Author information

Authors and Affiliations

Contributions

Conceptualization, J.Y. and G.C.; methodology, J.Y.; software, J.L.; validation, H.J., H.Z. and J.L.; formal analysis, H.J.; investigation, J.L.,D.M; resources, G.C.; data curation, J.Y.; writing-original draft preparation, J.Y; writing-review and editing, G.C.,D.M; visualization, J.L.; supervision, J.L.; project administration, G.C.; funding acquisition, J.L, H.Z, G.C, H.J. All authors have read and agreed to the published version of the manuscript

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yang, J., Chen, G., Huang, J. et al. GLE-net: global-local information enhancement for semantic segmentation of remote sensing images. Sci Rep 14, 25282 (2024). https://doi.org/10.1038/s41598-024-76622-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-76622-4

Keywords

This article is cited by

-

Enhancing cross view geo localization through global local quadrant interaction network

Scientific Reports (2025)