Abstract

Reliable traffic flow data is not only crucial for traffic management and planning, but also the foundation for many intelligent applications. However, the phenomenon of missing traffic flow data often occurs, so we propose an imputation model for missing traffic flow data to overcome the randomness and instability bands of traffic flow. First, k-means clustering is used to classify road segments with traffic flow belonging to the same pattern into a group to utilize the spatial characteristics of roads fully. Then, the LSTM networks optimized with an attention mechanism are used as the base learner to extract the temporal dependence of the traffic flow. Finally, the AdaBoost algorithm is used to integrate all the LSTM-attention networks into a reinforced learner to impute the missing data. To validate the effectiveness of the proposed model, we use the PeMS dataset for validation, we impute the data with missing data rate from 10 to 60% under three missing modes, and we use multiple baseline models for comparison, which confirms that our proposed model improves the stability and accuracy of imputing the missing data of the traffic flow with different scenarios.

Similar content being viewed by others

Introduction

The continuous progress of technology and the economy has led to the rising traffic flow, which has brought about serious traffic problems. Traffic congestion, inefficient travel and frequent traffic accidents have brought great challenges to the development of cities. Intelligent Transportation System (ITS) is seen as an effective solution to traffic problems, and the key to building smart cities. Traffic flow data processing and analysis work, such as traffic flow prediction, traffic operation state analysis and road network capacity analysis, are not only the basis for ITS development, but also provide accurate information support for smart city-related technologies, such as lane-level navigation, autonomous driving and smart internet-connected vehicles.

Currently, sensors are widely used to collect traffic flow data. Due to bad weather, detector failures, data transmission interruptions and transmission errors, the collected traffic flow data generally have different degrees of data missing. Accurate and reliable traffic flow data is crucial for traffic flow data processing and analysis, especially traffic flow prediction which has received extensive attention from scholars today. The quality of traffic flow data determines the effectiveness of prediction, and in today’s critical period of rapid development of advanced technologies, such as lane-level navigation and automated driving technology, accurate traffic flow prediction can better provide real-time and future traffic information, which facilitates people to make reasonable decisions and improves the safety of traveling.

Many scholars have already adopted advanced deep learning time series models, such as Long Short-Term Memory (LSTM)1,2 network, Gated Recurrent Unit (GRU)3,4, and Bidirectional LSTM (BiLSTM)5,6 network for traffic flow prediction, and some scholars have also added Graph Neural Network (GNN)7, Convolutional Neural Network (CNN)8 to extract spatial correlation of traffic flow. However, missing data on traffic flow reduces data quality, thereby limiting the learning ability of traffic flow prediction models and making it difficult to further improve prediction accuracy. Therefore, inputting missing data in traffic flow is an important task.

Currently, scholars mainly use advanced deep learning models to impute data for missing traffic flow data. Some scholars9,10 used an adversarial game employing generators and discriminators in generative adversarial networks (GAN) to generate traffic flow data with high accuracy, but these models do not consider the spatial correlation of traffic flow. Some scholars11,12 also used Graph Convolutional Neural (GCN) networks to extract the spatio-temporal correlation of traffic flow based on the distance and connectivity of roads, so as to impute the missing data, but these models are more complicated in the preliminary preparation, and they cannot identify the key parts of a large amount of input data. With the increasing traffic flow, coupled with the influence of nonlinearity, volatility and randomness of traffic flow, it brings great challenges to the imputation of missing data.

Aiming at the problems and challenges faced by the current imputation model for missing traffic flow data, we propose a model for the imputation of missing traffic flow data that can handle different traffic flow environments. The model overcomes the problem of imputing missing data under massive and randomly fluctuating traffic flow. We first use k-means clustering to distinguish different traffic patterns in the road network, extract traffic flow belonging to the same traffic flow pattern as input, and use LSTM networks optimized through the attention mechanism as the basic learners. The AdaBoost algorithm’s weighted set becomes a stronger learner, fully learning the spatiotemporal correlation of data, focusing on important information, and further improving the quality of data.

The contributions of this paper are mainly as follows:

-

1.

k-means clustering is used to divide the road sections with traffic flow belonging to the same pattern into a group in order to make full use of the spatial correlation existing in the road to impute the missing traffic flow data.

-

2.

LSTM-attention network is proposed to extract the time dependence of traffic flow. The optimization of the attention mechanism strengthens the LSTM’s focus on the key information, reduces the interference of useless information, and improves the ability of the LSTM to extract the time dependence.

-

3.

The LSTM-attention networks are set as the base learner and integrate all LSTM attention networks into one reinforcement learner using the Adaptive Boost (AdaBoost) algorithm to impute missing data and reduce estimation error.

-

4.

Imputation validation of data with missing data rate of 10% ~ 60% under three missing modes, and multiple baseline models are used for comparison, which confirms that our proposed model improves the stability and accuracy of imputing missing data of traffic flow.

The remaining parts of this article are as follows: Section “Related work” provides for related work, section “Methodology” introduces the principles and framework related to the model, section “Experiment” introduces the details and results of the experiment, and section “Conclusion” draws conclusions.

Related work

Earlier studies on the imputation of missing traffic flow data mainly included statistical, interpolation and prediction methods. Statistical-based missing data imputation methods mainly include Probabilistic Principal Component Analysis (PPCA)13,14, Markov Chain Monte Carlo (MCMC)15,16, and Bayesian Principal Component Analysis (BPCA)17,18, but statistical-based data imputation methods usually need to make assumptions on probability distribution, which performs better in less fluctuating traffic flow data, but is easier to fail to meet the set assumptions when traffic flow fluctuates more complex cases. Interpolation methods mainly include Historical Averages19, Weighted Average20, K-Nearest Neighbor (KNN)21,22, etc. These methods rely heavily on temporal estimation of neighboring historical data. When historical data is missing severely, the imputation effect will be seriously affected. Prediction-based imputation of missing data mainly uses Autoregressive Integrated Moving Average (ARIMA)23,24, Support Vector Machine (SVM)25,26, etc., when the data is severely missing, these methods cannot maintain stable performance.

In response to the more complex phenomenon of missing traffic flow data, some scholars started using tensors to impute missing data. Tensors extract spatiotemporal features based on the multidimensional relationships and low-rank nature of traffic flow, thus exhibiting better performance compared to early imputation models. Liu et al.27 applied the tensor to capture information from high-dimensional data to repair traffic flow data, and achieved good results in high missing rates. Chen et al.28 used Gaussian regular polynomial decomposition for feature extraction based on the temporal regularized tensor decomposition method of the Bayesian network. The experiment demonstrated that the algorithm achieved high data quality. Chen et al.29 defined a new truncation kernel paradigm that introduces a parameter to determine how to truncate, which can better extract hidden information from data. Although tensor-based methods improve the accuracy of data imputation, tensor algorithms often decompose input features into multiple dimensions, overly considering the combination of these features with other useless features, and being unable to determine the importance of information30. In recent years, many neural network models with super strong learning abilities, parallel processing abilities, generalization abilities, and feature extraction abilities have emerged, making further breakthroughs in the missing data imputation in traffic flow. Autoencoder is an algorithm that excels in denoising and dimensionality reduction, and some scholars31,32,33 used autoencoder to impute missing data in traffic flow and convert it into clean data after denoising lost or damaged data, achieving good imputation results. However, these methods typically assume that the noise is uniformly distributed and discontinuous, and the results can be poor when the missing data is unevenly distributed34.

GAN, GCN, and LSTM provide new perspectives for data imputation. Zhang et al.35 proposed a GAN with conditional cyclic constraints, while Yang et al.36 used a bidirectional GAN with added an attention mechanism, both of which obtained high-quality traffic flow data. However, when the volume of traffic data is huge or the data quality is extremely unstable, the error of these models will be greatly reduced30. Cui et al.37 proposed a traffic missing value imputation model based on bidirectional LSTM architecture. Saroj et al.38 used LSTM and Recurrent Neural Network (RNN) to perform traffic data imputation tasks and achieved certain results. In order to deeply analyze spatial information while exploiting the temporal dependence, some scholars39,40,41 started analyzing the non-Euclidean structure of roads through GCN, and spatial correlation of data based on the structure of roads. These methods greatly improve the effectiveness of models, but the models are computationally huge and complicated to prepare for the preliminaries. Taking the whole surrounding traffic flow as an input. Different traffic flow patterns are not considered. Yang et al.42 used LSTM as a temporal regularizer to capture temporal features and Graph Laplacian (GL) as a spatial regularizer to increase the performance of imputation. Li et al.43 used LSTM to learn temporal dependence and SVR to learn spatial dependence. However, these models also suffer from the problem that they do not consider different traffic flow patterns and take all the surrounding traffic flow as inputs, which results in the model failing to recognize traffic flow that is truly spatially correlated.

Therefore, to overcome the shortcomings of existing models, further improve the performance of traffic flow missing data imputation models, and improve imputation accuracy. We propose a data imputation model that can distinguish traffic flow patterns, has high efficiency, simple preparation work, high accuracy, and fully utilizes the spatiotemporal correlation of traffic flow, for processing missing traffic flow data in different situations or traffic flow environments.

Methodology

In this section, we focus on the principles of k-means clustering, LSTM, Attention mechanism and AdaBoost algorithms and finally describe the framework of the proposed model.

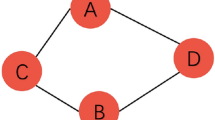

k-means clustering

K-means clustering is an unsupervised learning algorithm that measures the similarity between different samples based on distance44. The use of k-means clustering to determine the pattern of traffic flow can not only handle situations with large amounts of data and high-dimensional inputs, but also has high efficiency and good results in algorithm operation. The centroid is used to represent the center of a cluster, and traffic flow is divided into different clusters. The closer the samples in the same cluster are, while the farther the samples in different clusters are, the higher the spatial similarity of traffic flow. K-means clustering determines the pattern of traffic flow in the following steps:

-

(1)

Randomly select k samples from the traffic flow dataset \(x = \{ x^{1} ,x^{2} ,x^{3} ,...,x^{n} \}\) as the initial cluster centers \(x^{i} (i = 1,2,...,k)\).

-

(2)

Calculate the Euclidean distance from the remaining n-k samples \(x^{l} (l = 1,2,...,n - k)\) to k cluster centers.

$$d(p,q) = \sqrt {\sum\limits_{h = 1}^{n} {(a_{pm} - a_{qm} )^{2} } }$$(1)In the formula, the dimensions of data \(p = (a_{p1} ,a_{p2} ,...,a_{pm} )\) and data \(q = (a_{q1} ,a_{q2} ,...,a_{qm} )\) are both m.

-

(3)

Identify which clustering center \(x^{i}\) is closest based on the Euclidean distance \(d(p,q)\) of the sample \(x^{l}\) and classify it into clusters with the same center.

-

(4)

Updated cluster centers.

$$x^{c} = \sum\limits_{i = 1}^{k} {\frac{{x^{i} }}{{c_{k} }}}$$(2)In the formula, \(c_{k}\) is the total number of data in the k-th cluster center.

-

(5)

Repeat steps (2) to (4) until the required squared error is reached or the maximum number of iterations t is reached, then stop.

$$e = \sum\limits_{i = 1}^{k} {\sum\limits_{b = 1}^{{c_{k} }} {\left| {x^{b} - x^{c} } \right|} }$$(3)In the formula, \(x^{b}\) is the object contained in the final k-th cluster.

-

(6)

Use the contour coefficient S to calculate the clustering effect.

$$s(n) = \frac{{c^{i} - d^{i} }}{{\max \{ c^{i} ,d^{i} \} }}$$(4)$$s = \frac{1}{n}\sum\limits_{i = 1}^{n} {s(n)}$$(5)In the formula, \(s(n)\) is the contour coefficient of a single sample, \(c^{i}\) is the average distance from sample x to other samples within the cluster, and \(d^{i}\) is the average distance from sample x to samples within other clusters.

LSTM

The structure of LSTM is shown in Fig. 1, which overcomes the shortcomings of RNN such as modal aliasing and endpoint effects by introducing a memory cell, input gate, output gate, and forget gate45. The use of LSTM in our model allows us to fully analyze the temporal characteristics of the traffic flow, which greatly improves the model’s ability to learn long-term dependencies, thus mining the correlations between the traffic flow data, and impute the missing traffic flow data imputation. The formula of LSTM is as follows46:

-

(1)

Select which information needs to be deleted through the forget gate. Output a 0–1 vector based on \(h_{t - 1}\) and \(x_{t}\) information to analyze which information to save or delete in the memory cell.

$$f_{t} = \sigma (w_{f} \cdot [h_{t - 1} ,x_{t} ] + b_{f} )$$(6)In the formula, \(f_{t}\) is the output information of the forget gate, \(\sigma\) is the sigmoid activation function, \(w_{f}\) is the weight of the forget gate, \(h_{t - 1}\) is the hidden state of the neuron at the moment t-1, \(x_{t}\) is the input information at the moment t, and \(b_{f}\) is the bias of the forget gate.

-

(2)

Determine which new information to add to the memory cell through the input gate. The updated information is decided through \(h_{t - 1}\) and \(x_{t}\) information.

$$i_{t} = \sigma (w_{i} \cdot [h_{t - 1} ,x_{t} ] + b_{i} )$$(7)$$\overline{c}_{t} = \tanh (w_{c} \cdot [h_{t - 1} ,x_{t} ] + b_{f} )$$(8)In the formula, \(i_{t}\) denotes the output information of the input gate,\(w_{i}\) is the weight of the input gate, and \(b_{i}\) is the bias of the input gate.

-

(3)

Updates the old memory cell and obtains new ones. The information in the forgotten old memory cell is selected through the forget gate, and the new information is obtained from the candidate memory cell information through the input gate.

$$c_{t} = f_{t} \odot c_{t - 1} + i_{t} \odot \overline{c}_{t}$$(9)\(\overline{c}_{t}\) denotes candidate information of the memory cell, \(c_{t}\) denotes new information, and \(\odot\) denotes point-by-point multiplication operation.

-

(4)

The output gate selects which features of the memory cell to output. The judgment condition is obtained by the \(h_{t - 1}\) and \(x_{t}\) information. Obtain the output of LSTM.

$$o_{t} = \sigma (w_{o} [h_{t - 1} ,x_{t} ] + b_{o} )$$(10)$$h_{t} = o_{t} \odot \tanh (c_{t} )$$(11)In the formula, \(o_{t}\) is the output information of the output gate, \(w_{o}\) is the weight of the output gate, and \(b_{o}\) is the bias of the output gate.

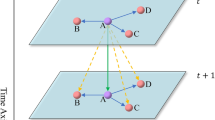

Based on LSTM, we have constructed an LSTM model for inputting missing traffic flow data, whose structure is shown in Fig. 2, and consists of an input layer, an LSTM hidden layer, a fully-connected layer, and an output layer. The Adam gradient descent algorithm is used, and the maximum number of iterations is 1000.

Attention mechanism

The attention mechanism is an algorithm based on the way the human brain allocates attention. Attention mechanism algorithm is adopted to allocate different weights based on the contribution of different information in the traffic flow data, strengthen the attention to important information, solve the problem of long-distance dependence of input information, improve the interpretability of the imputation model of the missing data of the traffic flow, so as to better cope with the complex and massive input data. The structure of the attention mechanism is shown in Fig. 3.

The formula of the attention mechanism is as follows47:

In the formula, \(w\) is the weight matrix, \(b\) is the bias, \(\alpha_{t}\) is the importance score, \(v_{t}\) and is the final output.

AdaBoost

AdaBoost is an ensemble learning algorithm proposed by Yoav FXreund and Robert Schapire48. The core idea of the AdaBoost algorithm is to synthesize the judgments of multiple experts to obtain the final result for a complex task. Usually, the judgment effect is better than that of any individual expert. AdaBoost, based on Boosting49, uses multiple base learners for iterative training, allocating different weights according to the size of the training error to increase attention to samples with larger errors. At the same time, considering the results of multiple base learners, the weighted set becomes a strong learner, greatly improving the accuracy and generalization ability of the base learners, and avoiding overfitting.

Data imputation based on AdaBoost

LSTM has a strong ability to learn time-dependent imputation of missing data; the attention mechanism can better cope with long traffic flows and large amounts of data. Therefore, we use the LSTM optimized with the attention mechanism to impute the missing traffic flow data. Although LSTM-attention has a good ability to learn time-dependence, a single LSTM-attention network is not always stable for data imputation when coping with traffic flow data with stochasticity and instability, and there may be a large error in the imputed data, and the imputation ability of the model is not enough to satisfy the requirements. Therefore, in order to ensure the high stability of the model in different scenarios and reduce data imputation error, we propose to use the AdaBoost algorithm to upgrade the data imputation effect of the LSTM-attention networks. Adopting LSTM-attention networks as the base learners, the AdaBoost algorithm trains multiple base learners, adjusts the weights according to the error, and increases the attention to the samples with larger imputation error and the base learners with higher accuracy rates, as follows:

-

(1)

LSTM-attention-AdaBoost is to initialize the weights.

$$\alpha_{k} (i) = \frac{1}{N}$$(15)In the formula: N is the number of samples, \(i\) is the sample number, and k is the base learner number.

-

(2)

Calculate the error of the base learner under this sample.

$$\varepsilon_{k} = \frac{1}{N}\sum\limits_{i = 1}^{N} {\varepsilon_{i} }$$(16)In the formula: \(\varepsilon_{i}\) is the error of sample.

-

(3)

Update the weights of each sample and base learner:

$${\text{Confidence level}}\;\beta_{k} = \frac{{\varepsilon_{k} }}{{(1 - \varepsilon_{k} )}}$$(17)$${\text{Sample weights}}:\;\alpha_{k} (i) = \frac{{\alpha_{t - 1} (i)\beta_{k} }}{{Q_{k} }}$$(18)$${\text{Base learner weights}}:\;w_{k} = \ln \left( {\frac{1}{{\beta_{k} }}} \right)$$(19)In the formula, Q is the normalization factor.

-

(4)

Repeat the iteration until the maximum number of iterations is reached, and then stop.

-

(5)

Form a strong learner through weighted sets:

$$S(x) = \sum\limits_{k = 1}^{K} {\omega_{k} s_{k} (x)}$$(20)In the formula, \(s_{k} (x)\) is the result of the base learner, and K is the total number of base learners.

We have established a traffic flow missing data imputation model based on the attention mechanism, LSTM and AdaBoost principles, which combines the LSTM-attention model and AdaBoost algorithm. The structure is shown in Fig. 4.

Modeling framework

The use of k-means clustering can effectively distinguish different traffic flow patterns in the road network and extract traffic flow belonging to the same traffic flow pattern as inputs, which avoids the interference of useless information and adequately extracts data with high spatial relevance; the LSTM-attention algorithm can adequately learn the spatio-temporal relevance of data with a focus on the important information, and the AdaBoost can weight the integrating the results of multiple base learners to improve the stability and accuracy of missing data imputation, so we propose a combined model for missing data imputation for traffic flow to overcome the randomness and volatility of traffic flow. The framework of this model is shown in Fig. 5, and the specific steps are as follows:

-

(1)

The KNN algorithm is used to fill in the missing data in the road network in the first four days and calculate the average traffic volume in the first four days.

-

(2)

Different traffic flow patterns in the road network are effectively distinguished by k-means clustering, and traffic flow belonging to the same traffic flow pattern as the traffic flow that needs data imputation is extracted as input.

-

(3)

LSTM-attention networks are used as the base learner to impute the missing data to fully learn the spatio-temporal correlation of traffic flow and increase attention to important information. AdaBoost algorithm trains multiple base learners and adjusts the weights according to the error to increase the attention to the samples with larger imputation error and the base learners with higher accuracy.

-

(4)

Root Mean Square Error, Mean Absolute Error, and Mean Absolute Percentage Error are used to evaluate the results of data imputation.

Experiment

In this section, we first introduced the detailed information of the detectors used in the experiment and the patterns of data missing. Then, we introduced the evaluation indexes and baseline models of the experiments. Finally, we conducted experiments under different data missing rates and patterns and visualized the results of the experiments.

Data

In order to evaluate the effectiveness of our proposed traffic flow data imputation model, we used the real-world PeMS traffic flow dataset for our experiments50. Specifically, we select three detectors from PeMS that are far away from each other for data imputation, the details of the detectors are shown in Table 1, and selected 20 detectors that are close to these three detectors respectively to analyze the spatial correlation of the traffic flow. The time granularity of the traffic flow data is 5 min, and the time range is from August 14, 2023 to August 18, 2023, and we use the traffic flow data of the first four days as the training set, and the traffic flow data of the fifth day as the test set. The detector is located as shown in Fig. 6.

To simulate various situations of missing traffic flow data in reality, we divide data missing into three patterns: random missing (RM), cluster missing (CM), and hybrid missing (HM). Random missing values are randomly dispersed and independent points, mainly caused by equipment instability or environmental chaos; Cluster missing values are often independent points generated due to system shutdown or detector failure; and hybrid missing includes random missing and aggregated missing modes51. Taking the missing rate of 50% as an example, the specific manifestations of the three missing modes are shown in Fig. 7. The legend on the right indicates that the colors on the graph gradually change with the size of traffic flow, and the colors on the graph gradually change with the size of traffic flow. The black part has zero traffic flow, the blue part has a smaller traffic flow, and the red part has a larger traffic flow. The black area represents the missing part of the traffic flow data. As shown in Fig. 7a, the missing traffic flow of RM mode is composed of many irregular points; In Fig. 7b, the missing traffic flow in CM mode is displayed in bars; In Fig. 7c, the missing traffic flow in HM mode is a mixture of bars and irregular points. Meanwhile, we also validated the effectiveness of the model using data with overall missing rates of 10%, 20%, 30%, 40%, 50%, and 60%.

Evaluating indicator

The experiment used root mean square error (RMSE), mean absolute error (MAE), and mean absolute percentage error (MAPE) to evaluate the effectiveness of our proposed model. Their formulas are as follows:

In the formula: \(f_{i}\) is real traffic flow data, \(\widehat{f}_{i}\) is predicted data, and n is the number of samples.

Baseline model

We used advanced models proposed in various literature as baseline models to evaluate the performance of our proposed model and strictly followed the parameter settings in the literature. We have considered the following models:

-

(1)

Historical Average (HA)19: Estimating missing data for the day based on the average of the previous four days of historical data.

-

(2)

K-nearest neighbor (KNN)21: Estimate missing data by calculating the average of adjacent data.

-

(3)

Support Vector Machine (SVM)25: Using SVM-based nonlinear mapping to impute missing data.

-

(4)

Low-rank tensor completion (LRTC-TNN)29: A truncated kernel criterion based on tensor complementarity is defined to impute missing data.

-

(5)

Stacked Denoising Autoencoder (SDAE)32: Considers damaged or missing traffic data as damage vectors for data imputation considering both time and space factors.

-

(6)

Bidirectional Long Short-Term Memory Network (BLSTM-I)37: A bi-directional LSTM extracts the temporal dependence in both directions and estimates the missing data.

-

(7)

Dynamic Graph Convolution Network (MDGCN)40: a model based on GCN and LSTM can dynamically impute missing data of traffic flow.

K-means clustering results

Select the traffic flow with missing data imputation and the traffic flow data of the 20 nearest detectors where the traffic flow is located. The KNN method is first used to complete the missing traffic flow data in the road network for the previous 4 days and Eq. (20) is used to calculate the daily average trend of traffic flow for each detector. Then the daily average trend of traffic flow is identified using K-means clustering, the optimal number of clusters is determined by contour coefficients, and data belonging to the same mode are used as inputs to extract the spatio-temporal correlation between traffic flow.

In Eq. \(T_{l}^{p}\) is the traffic flow data of the lth detector on the pth day, in this paper l is from 0 to 20 and p is from 1 to 4; d is the number of days of the calculation, in this paper d is 4; and a is the daily average trend of the traffic flow.

The optimal number of clusters for the region where detector A detector B and detector C are located is determined by the profile coefficient as shown in Table 2, the highest profile coefficient of the region where detector A belongs is 0.67, so the optimal number of clusters for the traffic flow in the region where detector A belongs is 2. The highest profile coefficient of the region where detector B belongs is 0.69, so the optimal number of clusters for the traffic flow in the region where detector B belongs is 2. The highest profile coefficient of detector C is 0.60, so the optimal number of clusters for traffic flow in detector C is 3. Figure 8 shows the K-mean clustering results for the area where detector A is located. From the figure, it can be seen that the traffic flow pattern to which detector A belongs is cluster 2. Therefore, the input for imputing the data of detector A contains not only the data of detector A, but also cluster 2 which belongs to the same traffic pattern as that of detector A. The K-mean clustering results are shown in Fig. 9 for the area where detector B is located. Figure 9 shows the K-mean clustering results for the region where detector B is located. As can be seen from the figure, the traffic pattern to which detector B belongs is cluster 2, so the input for imputing the data of detector B contains not only the data of detector B, but also the cluster 2 that belongs to the uniform pattern of traffic flow with detector B. Figure 10 shows the K-mean clustering results for the area where detector C is located. As can be seen from the figure, detector C belongs to cluster 1, so the imputed input of data for detector C contains not only the data of detector C, but also cluster 1, which belongs to the same traffic pattern as detector C. Traffic flow is divided into different patterns, thereby avoiding interference from irrelevant information and the problem of excessive information, and better extracting the spatial correlation of data.

The performance of imputation

To test the missing data imputation performance of our proposed model, we used the baseline models and our proposed model to impute missing data with different modes (RM/CM/HM) and missing rates (10% ~ 60%) on detector A, detector B and detector C, and use three evaluation indexes (RMSE/MAE/MAPE).

-

(1)

The experimental results in RM missing mode: Fig. 11 shows the visualization of data imputation results for detector A in RM missing mode; Fig. 12 shows the visualization of data imputation results for detector B in RM missing mode; Fig. 13 shows the visualization of data imputation results for detector C in RM missing mode. By comparing the three figures, we can see that the performance of HA and KNN is generally poor compared to other models, and the performance fluctuates greatly and is extremely unstable under different detectors, modes, and missing rates. This may be due to the relatively simple principles of these two models, while the performance of LRTC-TNN, SDAE, and BISTM-I is moderate. MDGCN performs the best in the baseline models. Our proposed model achieves satisfactory results in detector A and the evaluation indexes RMSE, MAE, and MAPE are lower than all baseline models. In detector B, our proposed model performs better than the baseline models in imputing 10% to 50% of missing data, while in 60% of missing data, our proposed model results on par with MDGCN. In detector C, our proposed model is comparable to MDGCN in 10% missing data and performs better than the baseline models in 20% to 60% missing data imputation. In summary, our proposed traffic flow missing data imputation model has achieved significant advantages in overall results and has good performance.

-

(2)

Experimental results in CM missing mode: Fig. 14 shows the visualization of the data imputation results of detector A in CM missing mode; Fig. 15 shows the visualization of the data imputation results of detector B in CM missing mode; Fig. 16 shows the visualization of the data imputation results of detector C in CM missing mode. By comparing the three figures, we find that the performance of HA and KNN still fluctuates a lot and there is no obvious pattern, which may be due to the fact that the results of HA are more affected by the historical traffic flow, while the results of KNN are affected by the more serious missing neighboring data. In contrast, the overall trend of the evaluation indexes of the other models increases with the increase of the missing rate. In the experiment under CM missing mode, the three evaluation indexes of our proposed model have outstanding advantages, which once again strongly proves the high accuracy of our proposed mode in missing data imputation.

-

(3)

Experimental results in HM missing mode: Fig. 17 shows the visualization of data imputation results for detector A in HM missing mode; Fig. 18 shows the visualization of data imputation results for detector B in HM missing mode; Fig. 19 shows the visualization of data imputation results for detector C in HM missing mode. Through these three figures, we have obtained a conclusion similar to the first two missing modes. LRTC-TNN, SDAE and BISTM-I perform moderately but better than HA and KNN, which may be due to, LRTC-TNN’s full utilization of the low-rank nature of the traffic flow, SDAE’s consideration of corrupted data and BISTM’s superb temporal-dependent extraction capability. MDGCN performs the best among the baseline models, possibly because it takes into account the spatial characteristics of the road network using GCN. However, our model can distinguish traffic flow patterns with high efficiency and simple preparation, and can fully utilize the spatio-temporal correlation of traffic flow to cope with the imputation of missing data of traffic flow in various road environments, and thus not only performs the best experimentally in the HM missing mode, but also has high stability.

-

(4)

Comprehensive comparison: Tables 3, 4 and 5 show the data imputation results of detectors A, B and C in RM missing mode, and Tables 6, 7 and 8 show the data imputation results of detectors A, B and C in CM missing mode, and Tables 9, 10 and 11 shows the data imputation results of detectors A, B and C in HM missing mode. In order to observe the stability of the predictions of different models, we conducted five experiments for each model and calculated the mean and standard deviation of each evaluation index as the final results. In addition, according to the calculation principle of HA, KNN and SVM models, their three evaluation indexes are fixed in the same experiments, so there is no need to calculate their mean and standard deviation. Comparing the tables, we can find that in the overall results, the three evaluation indexes of the experiments under RM missing mode are the best compared to CM missing mode and HM missing mode. The imputing under HM missing mode is moderate, while the three evaluation indexes for imputing missing data under CM missing mode are slightly worse. This indicates that imputing missing data in CM missing mode is more challenging than the other two modes. Compared to the baseline model, our proposed model not only has good data repair accuracy in RM missing mode and HM missing mode, but still maintains strong stability in CM missing mode. In addition, we can find that although HA, KNN and SVM have fixed prediction results, they have poorer prediction results because their principles are simpler and cannot be applied to more complex data. In contrast, our proposed model not only has better prediction results compared to other baseline models, with a small mean value of error, but also has a small standard deviation, indicating that the prediction results are also more stable.

Sensitivity analysis

In our proposed model, several important parameters play a key role in the imputation of missing traffic flow data, and thus these parameters need to be tuned. The key parameters we tuned are the number of base learners LSTM-attention networks, the number of layers of LSTM hidden layers and the number of neurons per hidden layer of LSTM. We investigated the data imputation performance of these parameters under a 30% missing rate.

Figure 20 shows the three evaluation indexes (MAPE/RMSE/MAE) for imputing the missing data under different numbers of LSTM-attention networks (5/10/15/20) for detectors A, B and C. In the figure, all three evaluation indexes show a trend of first decreasing and then increasing. When the LSTM attention count is 10, the evaluation indexes reach their lowest points, indicating that the input data of the model has the highest accuracy at this time. Too many LSTM-attention networks will lead to heavy computational cost and overload of data, and too little LSTM-attention will lead to insufficient information, therefore, the number of LSTM-attention networks is set to 10.

Figure 21 shows three evaluation indexes (MAPE/RMSE/MAE) for imputing missing data under different LSTM hidden layer numbers (1/2/3/4) for detectors A, B and C. Fewer LSTM hidden layers are more conducive to achieving better model performance. As the number of hidden layers in LSTM increases, MAPE, RMSE, and MAE gradually increase. When the number of hidden layers in LSTM is 1, the evaluation index results are the best. Therefore, the number of hidden layers in LSTM is set to 1.

Figure 22 shows three evaluation indexes (MAPE/RMSE/MAE) for imputing missing data under different LSTM neuron counts (6/12/18/24) for detectors A, B and C. The evaluation indexes MAPE, RMSE, and MAE of detector A, B and C decrease first and then increase with the increase of the number of neurons. However, the optimal value for the number of LSTM neurons in detector A is 12, the optimal value for the number of LSTM neurons in detector B is 18, and the optimal value for the number of LSTM neurons in Detector C is 18. To ensure strong performance of the model, we set the number of LSTM neurons to 18.

Ablation experiment

To verify the importance of each component or module of our proposed model, we conducted an ablation experiment. The experiment was conducted using data with a 50% missing rate, and was investigated by deleting or replacing one component at a time. The ablation experiment is mainly studied using the following models:

-

(1)

Model 1: This model removes the module of extracting spatio-temporal correlation based on k-means clustering. The importance of the module for extracting spatio-temporal correlation based on k-means clustering is investigated.

-

(2)

Model 2: This model removes the attention mechanism component. The importance of the attentional mechanism component is examined.

-

(3)

Model 3: This model deletes the AdaBoost component. The importance of the AdaBoost component is studied.

-

(4)

Model 4: This model uses SVM instead of LSTM to study the importance of LSTM for the model.

-

(5)

Model 5: This model is the model proposed in this paper.

Figure 23 shows the results of the ablation experiment, and it can be seen that in detectors A, B and C, Model 5 has a significant decrease in the evaluation indexes RMSE, MAE and MAPE compared to Model 1, indicating that the module of extracting spatio-temporal correlation based on k-means clustering can help improve the final results; Model 5 has some decrease in the evaluation indexes RMSE, MAE and MAPE compared to Model 2, indicating that the Attention mechanism can focus on and learn the important information of traffic flow; Compared with Model 3, the evaluation indexes of Model 5 have different degrees of decreases, with the most decreases in the detector C which has a larger amount of data, which indicates that AdaBoost can repair the data well in the case of larger traffic flow; Compared with Model 4, all three evaluation indexes of Model 5 show that the data imputation accuracy has been greatly improved, indicating that the LSTM in our model has good time-dependent learning ability. In conclusion, each component or module in our model is meaningful to improve the imputation accuracy of traffic flow data.

Conclusion

The imputation of missing data in traffic flow is a crucial step in the development of ITS. In order to overcome the shortcomings of traffic flow missing data imputation models, we propose a model that can distinguish traffic flow patterns, is efficient, accurate, and fully utilizes the spatiotemporal correlation of traffic flow to cope with traffic flow missing data imputation in different situations or traffic flow environments. Specifically, we first use k-means clustering to distinguish different traffic patterns in the road network, extract traffic flow belonging to the same traffic flow pattern as input, and use attention mechanism optimized LSTM networks as the base learners. The AdaBoost algorithm weighted set becomes a stronger learner with stronger performance, fully learning the spatiotemporal correlation of data, focusing on important information, and overcoming the randomness and volatility of traffic flow. A large number of experiments were conducted using multiple baseline models in real-world traffic flow datasets, imputing data for various missing rates (10% ~ 60%) and missing modes (RM/CM/HM). The experimental results show that our proposed model has good data imputation performance, high repair accuracy, and robustness. In addition, we conducted sensitivity analysis and an ablation experiment on the model to determine the values of key parameters and the contribution of each component of the model.

In the future, we can also introduce a residual optimization structure that can dynamically iterate into the proposed model, and consider factors related to weather, traffic conditions, and environment in the model to achieve accurate restoration of missing traffic data.

Data availability

The datasets used and analysed during the current study available from the corresponding author on reasonable request.

References

Luo, Y., Zheng, J., Wang, X., Tao, Y. & Jiang, X. GT-LSTM: A spatio-temporal ensemble network for traffic flow prediction. Neural Networks 171, 251–262 (2024).

Tian, Y., Zhang, K., Li, J., Lin, X. & Yang, B. LSTM-based traffic flow prediction with missing data. Neurocomputing 318, 297–305 (2018).

Hu, N. et al. Multi-range bidirectional mask graph convolution based GRU networks for traffic prediction. Journal of Systems Architecture 133, 102775 (2022).

Sun, P., Boukerche, A. & Tao, Y. SSGRU: A novel hybrid stacked GRU-based traffic volume prediction approach in a road network. Computer Communications 160, 502–511 (2020).

Ounoughi, C. & Yahia, S. B. Sequence to sequence hybrid Bi-LSTM model for traffic speed prediction. Expert Systems with Applications 236, 121325 (2024).

Naheliya, B., Redhu, P. & Kumar, K. MFOA-Bi-LSTM: An optimized bidirectional long short-term memory model for short-term traffic flow prediction. Physica A: Statistical Mechanics and its Applications 634, 129448 (2024).

Chen, F., Sun, X., Wang, Y., Xu, Z. & Ma, W. Adaptive graph neural network for traffic flow prediction considering time variation. Expert Systems with Applications 255, 124430 (2024).

Méndez, M., Merayo, M. G. & Núñez, M. Long-term traffic flow forecasting using a hybrid CNN-BiLSTM model. Engineering Applications of Artificial Intelligence 121, 106041 (2023).

Zong, X., Qi, Y., Yan, H. & Ye, Q. An intelligent deep learning framework for traffic flow imputation and short-term prediction based on dynamic features. Knowledge-Based Systems 300, 112178 (2024).

Fang, J., He, H., Xu, M. & Chen, H. MDTGAN: Multi domain generative adversarial transfer learning network for traffic data imputation. Expert Systems with Applications 255, 124478 (2024).

Chen, Y. & Chen, X. M. A novel reinforced dynamic graph convolutional network model with data imputation for network-wide traffic flow prediction. Transportation Research Part C: Emerging Technologies 143, 103820 (2022).

Xu, D., Peng, H., Tang, Y. & Guo, H. Hierarchical spatio-temporal graph convolutional neural networks for traffic data imputation. Information Fusion 106, 102292 (2024).

Qu, L., Li, L., Zhang, Y. & Hu, J. PPCA-based missing data imputation for traffic flow volume: A systematical approach. IEEE Transactions on Intelligent Transportation Systems 10(3), 512–522 (2009).

Li, Y., Li, Z., Li, L., Zhang, Y., & Jin, M. Comparison on PPCA, KPPCA and MPPCA based missing data imputing for traffic flow. In ICTIS 2013: Improving Multimodal Transportation Systems-Information, Safety, and Integration, 1151–1156 (2013).

Farhan, J. & Fwa, T. F. Airport pavement missing data management and imputation with stochastic multiple imputation model. Transportation research record 2336(1), 43–54 (2013).

Li, Y., Li, Z. & Li, L. Missing traffic data: comparison of imputation methods. IET Intelligent Transport Systems 8(1), 51–57 (2014).

Chiou, J. M., Zhang, Y. C., Chen, W. H. & Chang, C. W. A functional data approach to missing value imputation and outlier detection for traffic flow data. Transportmetrica B: Transport Dynamics 2(2), 106–129 (2014).

Li, H., Wang, Y., & Li, M. A BPCA based missing value imputation and its impact on traffic incident prediction. In 18th COTA International Conference of Transportation Professionals, 1782–1791 (American Society of Civil Engineers, Reston, 2018).

Chang, G., & Ge, T. Comparison of missing data imputation methods for traffic flow. In Proceedings 2011 International Conference on Transportation, Mechanical, and Electrical Engineering, 639–642 (IEEE, 2011).

Yin, W., Murray-Tuite, P. & Rakha, H. Imputing erroneous data of single-station loop detectors for nonincident conditions: Comparison between temporal and spatial methods. Journal of Intelligent Transportation Systems 16(3), 159–176 (2012).

Liu, Z., Sharma, S. & Datla, S. Imputation of missing traffic data during holiday periods. Transportation Planning and Technology 31(5), 525–544 (2008).

Cai, P. et al. A spatiotemporal correlative k-nearest neighbor model for short-term traffic multistep forecasting. Transportation Research Part C: Emerging Technologies 62, 21–34 (2016).

Zhong, M., Sharma, S. & Lingras, P. Genetically designed models for accurate imputation of missing traffic counts. Transportation Research Record 1879(1), 71–79 (2004).

Elshenawy, M., El-Darieby, M., & Abdulhai, B. Automatic imputation of missing highway traffic volume data. In 2018 IEEE International Conference on Pervasive Computing and Communications Workshops 373–378 (IEEE, 2018).

Yang, B., Janssens, D., Ruan, D., Bellemans, T., & Wets, G. A data imputation method with support vector machines for activity-based transportation models. In Computational Intelligence for Traffic and Mobility, 159–171 (2013).

Aydilek, I. B. & Arslan, A. A hybrid method for imputation of missing values using optimized fuzzy c-means with support vector regression and a genetic algorithm. Information Sciences 233, 25–35 (2013).

Liu, J., Musialski, P., Wonka, P. & Ye, J. Tensor completion for estimating missing values in visual data. IEEE Transactions on Pattern Analysis and Machine Intelligence 35(1), 208–220 (2012).

Chen, X., He, Z. & Sun, L. A Bayesian tensor decomposition approach for spatiotemporal traffic data imputation. Transportation Research Part C: Emerging Technologies 98, 73–84 (2019).

Chen, X., Yang, J. & Sun, L. A nonconvex low-rank tensor completion model for spatiotemporal traffic data imputation. Transportation Research Part C: Emerging Technologies 117, 102673 (2020).

Zhang, T., Zhang, J., Qi, X. & Chen, C. A review of research on imputation methods for missing traffic data. Modern Transportation and Metallurgical Materials 04, 69–81 (2023).

Duan, Y., Lv, Y., Liu, Y. L. & Wang, F. Y. An efficient realization of deep learning for traffic data imputation. Transportation research part C: emerging technologies 72, 168–181 (2016).

Ku, W. C., Jagadeesh, G. R., Prakash, A., & Srikanthan, T. A clustering-based approach for data-driven imputation of missing traffic data. In 2016 IEEE forum on Integrated and Sustainable Transportation Systems, 1–6 (IEEE, 2016).

Boquet, G., Morell, A., Serrano, J. & Vicario, J. L. A variational autoencoder solution for road traffic forecasting systems: Missing data imputation, dimension reduction, model selection and anomaly detection. Transportation Research Part C: Emerging Technologies 115, 102622 (2020).

Li, J., Li, R., Xu, L. & Liu, J. Self-supervised generative adversarial learning with conditional cyclical constraints towards missing traffic data imputation. Knowledge-Based Systems 284, 111233 (2024).

Zhang, B., Miao, R. & Chen, Z. Spatial-temporal traffic data imputation based on dynamic multi-level generative adversarial networks for urban governance. Applied Soft Computing 151, 111128 (2024).

Yang, B., Kang, Y., Yuan, Y., Huang, X. & Li, H. ST-LBAGAN: Spatio-temporal learnable bidirectional attention generative adversarial networks for missing traffic data imputation. Knowledge-Based Systems 215, 106705 (2021).

Cui, Z., Ke, R., Pu, Z. & Wang, Y. Stacked bidirectional and unidirectional LSTM recurrent neural network for forecasting network-wide traffic state with missing values. Transportation Research Part C: Emerging Technologies 118, 102674 (2020).

Saroj, A. J., Guin, A. & Hunter, M. Deep LSTM recurrent neural networks for arterial traffic volume data imputation. Journal of big data analytics in transportation 3(2), 95–108 (2021).

Wang, Y. et al. Attention-based message passing and dynamic graph convolution for spatiotemporal data imputation. Scientific Reports 13(1), 6887 (2023).

Huang, X., Ye, Y., Ding, W., Yang, X. & Xiong, L. Multi-mode dynamic residual graph convolution network for traffic flow prediction. Information Sciences 609, 548–564 (2022).

Kong, X. et al. Dynamic graph convolutional recurrent imputation network for spatiotemporal traffic missing data. Knowledge-Based Systems 261, 110188 (2023).

Yang, J. M., Peng, Z. R. & Lin, L. Real-time spatiotemporal prediction and imputation of traffic status based on LSTM and Graph Laplacian regularized matrix factorization. Transportation Research Part C: Emerging Technologies 129, 103228 (2021).

Li, L., Zhang, J., Wang, Y. & Ran, B. Missing value imputation for traffic-related time series data based on a multi-view learning method. IEEE Transactions on Intelligent Transportation Systems 20(8), 2933–2943 (2018).

Ay, M. et al. FC-Kmeans: Fixed-centered K-means algorithm. Expert Systems with Applications 211, 118656 (2023).

Cai, S., Gao, H., Zhang, J. & Peng, M. A self-attention-LSTM method for dam deformation prediction based on CEEMDAN optimization. Applied Soft Computing 159, 111615 (2024).

Liu, S., Kong, Z., Huang, T., Du, Y. & Xiang, W. An ADMM-LSTM framework for short-term load forecasting. Neural Networks 173, 106150 (2024).

Kong, X., Du, X., Xue, G. & Xu, Z. Multi-step short-term solar radiation prediction based on empirical mode decomposition and gated recurrent unit optimized via an attention mechanism. Energy 282, 128825 (2023).

Freund, Y. & Schapire, R. E. A decision-theoretic generalization of on-line learning and an application to boosting. Journal of computer and system sciences 55(1), 119–139 (1997).

Kim, Y. et al. Self-supervised representation learning anomaly detection methodology based on boosting algorithms enhanced by data augmentation using StyleGAN for manufacturing imbalanced data. Computers in Industry 153, 104024 (2023).

Caltrans performance measurement system (PeMS) (2023).

Li, J., Li, R. & Xu, L. Multi-stage deep residual collaboration learning framework for complex spatial–temporal traffic data imputation. Applied Soft Computing 147, 110814 (2023).

Acknowledgements

This study was supported by the Natural Science Foundation of Shandong Province (ZR2021MF109) and the Humanities and Social Sciences Research Project of the Ministry of Education of China (21YJC630110).

Author information

Authors and Affiliations

Contributions

Q.S: Conceptualisation, Methodology, Supervision, Writing – review & editing. Y.T: Conceptualisation, Methodology, Data processing, Software, Validation, Visualisation, Writing – original draft. L.Y: Writing – review & editing.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Shang, Q., Tang, Y. & Yin, L. A hybrid model for missing traffic flow data imputation based on clustering and attention mechanism optimizing LSTM and AdaBoost. Sci Rep 14, 26473 (2024). https://doi.org/10.1038/s41598-024-77748-1

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-77748-1