Abstract

Glioma refers to a highly prevalent type of brain tumor that is strongly associated with a high mortality rate. During the treatment process of the disease, it is particularly important to accurately perform segmentation of the glioma from Magnetic Resonance Imaging (MRI). However, existing methods used for glioma segmentation usually rely solely on either local or global features and perform poorly in terms of capturing and exploiting critical information from tumor volume features. Herein, we propose a local and global dual transformer with an attentional supervision U-shape network called DTASUnet, which is purposed for glioma segmentation. First, we built a pyramid hierarchical encoder based on 3D shift local and global transformers to effectively extract the features and relationships of different tumor regions. We also designed a 3D channel and spatial attention supervision module to guide the network, allowing it to capture key information in volumetric features more accurately during the training process. In the BraTS 2018 validation set, the average Dice scores of DTASUnet for the tumor core (TC), whole tumor (WT), and enhancing tumor (ET) regions were 0.845, 0.905, and 0.808, respectively. These results demonstrate that DTASUnet has utility in assisting clinicians with determining the location of gliomas to facilitate more efficient and accurate brain surgery and diagnosis.

Similar content being viewed by others

Introduction

Gliomas are benign or malignant brain tumors arising from nerve-supporting tissues or glial cells, which damage the nervous system and are characterized by a high mortality rate and a low treatment success rate1. Because Magnetic Resonance Imaging (MRI) has the advantages of being non-radioactive, harmless to human health, an excellent soft tissue contrast, and able to offer a high spatial resolution, it is often used for the detection of segmented gliomas and their subregions, which is critical for the diagnosis and treatment of the disease2,3. However, the manual segmentation of tumors based on MRI is still performed by experts in most clinical centers. With an unbalanced distribution of voxels in different regions of the tumor and blurred boundaries existing between the tumor and normal tissues, manual segmentation may lead to misdiagnosis and missed cases4,5,6. It is therefore important to design models that can automatically segment gliomas and their subregions based on multimodal MRI.

Deep learning neural networks are widely used for medical image processing, offering the advantage of fully automating the learning of complex feature representations from raw data without requiring experts to manually edit feature extractors by relying only on their expertise7,8,9,10. With continuous innovation being made in the designs of neural networks, the attention mechanism has become a top priority in the field of deep learning, which can be divided into two categories: ‘channel and spatial attention’ and ‘Transformer’. Channel attention and spatial attention11,12 are used to assign weights to feature maps in the channel and spatial directions, highlighting useful features and suppressing redundant information by correcting the feature maps, which is highly suitable for dealing with MRI feature maps containing rich structural information13,14,15. The Transformer attention mechanism, which has achieved great success in the field of natural language processing (NLP), has been demonstrated to be highly flexible and powerful in representation extraction16. Drawing on its success in NLP, researchers have extended and successfully applied the Transformer to the field of computer vision. The mechanism decomposes an image into one-dimensional sequences and achieves global information exchange by performing Self-Attention computation on all one-dimensional sequences, thereby handling remote dependencies more effectively17,18.

Brain MR images have high spatial resolution and contain rich structural information, while gliomas have complex shapes and relatively large variations in their distribution ranges, so ensuring the accurate segmentation of gliomas has been a longstanding and difficult problem. Considering the advantages of the attention model in modeling high-resolution radiological images, we built a pyramid hierarchical encoder consisting of 3D local and global dual transformer modules to efficiently extract the local features of different tumor regions and construct global dependencies. Meanwhile, to capture and utilize key information in the tumor volume features, we designed the 3D channel and spatial attention supervision module to guide the network, allowing it to capture and utilize key information in the volume features more accurately during the training process and gradually learn to produce finer feature representation. Our contributions to this work can be summarized as follows.

-

1.

We propose a local/global dual transformer with attentional supervision U-network for performing brain tumor segmentation (DTASUnet), which efficiently learns the spatial continuity of 3D multi-contrast MRI and segments gliomas with a high degree of accuracy.

-

2.

We designed a pyramidal hierarchical encoder based on the proposed 3D shift local transformer blocks and 3D global transformer blocks to validate the potential of a transformer encoder for performing feature extraction in glioma segmentation.

-

3.

We also designed a 3D channel and spatial attention supervision module to expand channel attention and spatial attention into the 3D format and combined them with supervised loss, which guides the network to capture and utilize key information more accurately and removes redundant information during the training process.

-

4.

Comprehensive experiments conducted on the BraTS 2018 and BraTS 2020 datasets demonstrated the effectiveness of the proposed model, showing it to be competitive with other state-of-the-art methods.

The remainder of the paper is structured as follows: Sect. 2 is a review of the relevant techniques for medical image segmentation; Sect. 3 discusses the implementation of DTASUnet; Sect. 4 provides details of the experiments conducted to verify the validity of the proposed model and compare it to state-of-the-art techniques for the segmentation of the multimodal BraTS dataset; finally, Sect. 5 discusses and concludes the paper.

Related work

In this section, we briefly review two groups of related work. The first group deals with U-Net and its various improved versions, while the second group focuses on the application of the Transformer model in medical image segmentation.

U-Net variants

Given the challenging nature of medical image segmentation, multi-scale feature fusion is crucial for advanced networks. U-Net19 employs a symmetric coder-decoder architecture with skip connections, which uses a symmetric U-shape structure for feature extraction using convolutional operations in the encoder part while using transposed convolution for up-sampling in the decoder part; this recovers the original image size and achieves finer segmentation results. Subsequent research has focused extensively on improved versions of U-Net-based applications for medical image segmentation. For example, the DeepLab network20 employs atrous convolution to expand the receptive field of the convolution kernel to capture a wider range of contextual information. UNet++21 and UNet+++22 are improvements to U-Net, which increase the segmentation accuracy by adding cross-layer skip connections and improving the up-sampling module. 3D U-Net23 uses a 3D convolution instead of U-Net’s 2D convolution; additionally, the whole case is used for training, enabling the full extraction of information between different slices of the case and retaining spatial continuity. nnUNet24 uses many sophisticated and complex technical methods to improve segmentation accuracy based on UNet, and achieved the champion result in the BraTS2020 challenge. Increasingly more 3D segmentation algorithms have appeared since. Li et al.25 cascaded 3D U-Net and 3D Unet++, which can segment enhancing tumors more accurately. Wang et al.26 designed the spatially-expanded feature pyramid module and combined it with the residual module of U-Net. Zhang et al.7 designed a cross-modal transition and fusion module to learn rich feature representations. Raza et al.27 proposed a 3D deep residual U-Net, which utilizes both shallow and deep features for prediction. Oktay et al.28 proposed an attention gate mechanism and combined it with U-Net to enable the model to better focus on the target region. Akbar et al.29 combined 3D U-Net and residual attention to significantly improve the segmentation results for gliomas.

Application of the transformer model in medical image segmentation

Several recent studies have shown that the Transformer model exhibits excellent performance on medical image segmentation tasks. Chen et al.30 proposed TransUnet, which is a model that combines the Transformer model with U-Net. Swin-Unet31 uses a hierarchical shift window with Swin Transformer32 as an encoder to extract contextual features and uses a symmetric architecture as a decoder. VT-Unet33 uses parallel 3D Self-attention and Cross-Self-Attention strategies to capture information from details, which in turn, enables the fine segmentation of tumor edges to be performed. The bottom of the U-shaped network of TransBTS18 uses a transformer module to extract global contextual information from low-resolution feature maps. BiTr-Unet34 differs from TransBTS in that this model is deeper, has more transformer layers, and adds two additional transformer layers in the skip-connect section to capture global features. Hatamizadeh et al.35 proposed a structure called UNETR, which employs a Transformer model-based encoder and connects the features extracted by the encoder directly to a 3D convolution-based decoder. Qu et al.36 fused the Transformer model with convolution, using the former to complement the remote dependencies lost by the convolution operation.

Methods

This section focuses on the design and implementation of DTASUnet. In Sect. 3.1, we briefly outline the overall architecture of DTASUnet. Then, we discuss the shift local and global dual transformer encoder of DTASUnet in detail in Sect. 3.2 and provide an in-depth analysis of the 3D channel and spatial attention supervision module in Sect. 3.3.

Overview of DTASUnet

As shown in Fig. 1, the proposed DTASUnet is built on standard encoder-decoder U-shaped architecture. The input to the network is a 4-channel 3D multimodal MRI; the shape is \(\:X\in\:{R}^{H\times\:W\times\:D\times\:C}\), where \(\:H\times\:W\) is the spatial resolution of the MRI scan, D is the depth (number of slices), and C is the number of channels (number of MRI modalities). We first divided the input image into non-overlapping 3D patches by the use of the 3D patch partition and then fed patches into the dual transformer encoder with a pyramidal hierarchical architecture to fully capture the local and global information and establish the short-term and long-term dependencies. Because the pyramid architecture encoder has richer feature expression capabilities in learning contextual information when compared to the encoder with no change in the size of the feature map, DTASUnet uses 3D patch merging to compress and fuse the feature information extracted by the transformer to build a hierarchical network. Meanwhile, the 3D channel and spatial attention supervision module (3D CSAS) in the decoder reweights the important information of the extracted features to compensate for the spatial and semantic information lost by the encoder during the 3D patch information fusion compression process, thereby optimizing and aggregating feature information at different scales. In the last layer of the decoder, the feature mapping passes through the residual block to output a segmentation mask consisting of three sub-regions corresponding to ET, WT, and TC, which is used to provide pixel-level glioma segmentation.

The encoder of the 3D shift local and global dual transformer

Compared to the traditional transformer input scan, which uses a 2D transverse plane to divide the patch18,31, the input of DTASUnet consists of 3D MRI scans composed of four contrasts; T1, T2, T1ce, T2 FLAIR; which add richness to the spatial semantic information contained within the patch, thus enabling it to better learn the information and the relationship between different slices of the four modalities relationships to segment gliomas more accurately. In DTASUnet, we first use the 3D patch partition to map the case X input to DTASUnet into a high-dimensional tensor \(\:X\in\:\:{R}^{\frac{H}{{P}_{h}}\times\:\frac{W}{{P}_{w}}\times\:\frac{D}{{P}_{d}}\times\:C}\), where \(\:({P}_{h},{P}_{w},{P}_{d})\) is the size of each non-overlapping patch, \(\:\frac{H}{{P}_{h}}\times\:\frac{W}{{P}_{w}}\times\:\frac{D}{{P}_{d}}\) is the number of patches, and C is the dimension of the patch after stretching it into a vector. In this work, \(\:{P}_{h}\), \(\:{P}_{w}\), and \(\:{P}_{d}\) are all 2, and C is 48.

Our 3D patch partition uses a 3D convolution with a convolution kernel and stride of 2 to divide the input MRI scans of the four contrasts into non-overlapping 3D patches, which allows the encoder to learn the positional information of each patch. The input channel of this convolution is 4 (corresponding to four different contrasts of brain MRI images), and the output channel is C. For instance, an input image of \(\:(H,\:W,\:D,\:4)\) undergoes this convolution, resulting in an output feature map of \(\:(\frac{H}{2},\:\frac{W}{2},\frac{D}{2},\:C)\). After 3D convolution, we merge the first three dimensions of the feature map into one dimension while keeping the fourth dimension unchanged. The feature map is transformed into \(\:(\frac{H}{2}\times\:\:\frac{W}{2}\times\:\frac{D}{2},\:C)\), where C is the dimension of the 3D patches after being stretched into a one-dimensional vector, and \(\:\frac{H}{2}\times\:\:\frac{W}{2}\times\:\frac{D}{2}\) is the number of patches. We then send the patch vectors with dimension C into the Transformer block to learn feature representation.

As shown in Fig. 2, the transformer encoder consists of 8 layers of transformer blocks; the first 4 layers use the 3D shift local transformer block to extract local feature information within the different blocks, while the last 4 layers use the global transformer block to learn the global dependency on the compressed feature map. The composition of the transformer block can be described as follows:

Here, MSA is the Multihead Self-Attention mechanism and MLP is the Multilayer Perceptron. \(\:\widehat{X}\) and \(\:X\) are the outputs of MSA and MLP, respectively. \(\:l\) is the number of layers in the transformer and LN is layer normalization. U-Net and its variants have demonstrated that encoders with pyramid architecture have richer feature representations when learning contextual information. We extended patch merging32 into the 3D form so that the MRI volume feature mapping could be varied step by step from low-level, high-resolution to high-level, low-resolution. A pyramid hierarchical backbone composed of transformers was constructed.

DTASUnet adopts 3D patch merging to fuse and compress the feature information extracted from the transformer block. After the patch vectors undergo local shift Transformer calculations in the first two layers to calculate their correlation, the output feature representation size is \(\:(\frac{H}{2}\times\:\:\frac{W}{2}\times\:\frac{D}{2},\:C)\). In the 3D patch merging, all 3D patches are divided into 8 parts and spliced together in the channel direction. At this time, the size of the volumetric feature block is downsampled by a factor of 2, while the number of channels (the dimension of the patch vector) is increased to 8, the size of feature representation changes from \(\:(\frac{H}{2}\times\:\:\frac{W}{2}\times\:\frac{D}{2},\:C)\) to \(\left( {\frac{H}{4} \times \frac{W}{4} \times \frac{D}{4},8C} \right)\). We then used the linear layer in the channel direction to reduce the length of the patch’s word vectors by a factor of 4, the size of feature representation changes from \(\left( {\frac{H}{4} \times \frac{W}{4} \times \frac{D}{4},8C} \right)\) to \(\left( {\frac{H}{4} \times \frac{W}{4} \times \frac{D}{4},2C} \right)\). In this way, the image patches undergo data fusion and compression. The number of patches is reduced by half, and the vector dimension corresponding to each patch is doubled. In the next two 3D patch merging, we will perform similar operations, resulting in a hierarchical change in the size of the 3D transformer encoder’s feature map.

As shown in Fig. 3, to fully extract local information and establish short-term dependencies using transformers on low-level, high-resolution feature maps, and as inspired by the work of the Swin transformer and Swin-Unet31,32, DTASUnet uses the 3D local transformer containing different local information in the first four transformer blocks of the encoder. Patches with spatial location information are first divided into different 3D windows to compute Self-Attention (SA) so that the focus of the proposed model is first concentrated on the local region of the case to achieve short-range contextual modeling, which helps to prevent the wrong evaluation of the attention scores among different patches due to the excessive number of patches. To calculate Local Self-Attention (LSA), a window of size \(\:({B}_{h},{B}_{w},{B}_{d})\) is used to divide the patches into \(\:T=\frac{H}{{P}_{h}\times\:{B}_{h}}\times\:\frac{W}{{P}_{w}\times\:{B}_{w}}\times\:\frac{D}{{P}_{d}\times\:{B}_{d}}\) regions. Each window calculates Self-Attention independently without any interaction of information between the windows. After each window computes Self-Attention, it then stacks all the windows into \(\:X\in\:\:{R}^{\frac{H}{{P}_{h}}\times\:\frac{W}{{P}_{w}}\times\:\frac{D}{{P}_{d}}\times\:C}\), which is the size of the transformer block input feature. This process can be described as follows:

Window division with different patch information in the 3D shift local transformer, green squares are windows, gray squares are patches. We used SimpleITK 3.4.0 and Microsoft Powerpoint 2021 to draw this image. We used ITK-SNAP 3.4.0 (URL: https://sourceforge.net/projects/itk-snap/files/itk-snap/3.4.0/) and Microsoft Powerpoint 2021 (URL: https://www.microsoft.com/en-us/microsoft-365/powerpoint) to draw this image.

After layer norm and MLP processing, 3D shift local transformer block extracted feature maps can be obtained. In the next transformer block, the windows on the MRI scan are translated by 1/2 window, where each window contains new local information. The next local transformer block repeats the above process in all new windows, each of which performs the Self-Attention computation independently. This strategy can effectively avoid a large amount of local information being lost without Self-Attention interaction occurring between different windows. DTASUnet outputs an intermediate feature map \(X \in R^{{\frac{H}{{4P_{h} }} \times \frac{W}{{4P_{w} }} \times \frac{D}{{4P_{d} }} \times 4C}}\) after the 4-layer transformer’s local context modeling. At this point, X represents the low-resolution, high-level feature map, which has been downsampled by a factor of 4 compared to the input feature map. In the next four transformer blocks, the windowing strategy is no longer used, but global Self-Attention operations are performed on entire 3D high-level, low-resolution feature maps. This can fully compute the global correlation between the already compressed and fused patch, capture the long-range dependencies, and enable global modeling.

3D Channel and spatial attention supervision module

As shown in Fig. 4, we extend channel attention and spatial attention11,12 to a 3D form and use the loss function to supervise the features processed by 3D channel attention and spatial attention, 3D Transposed CNN and 3D Residual CNN and thus construct a 3D channel and spatial attention supervision module (3D CSAS). The 3D CSAS module is responsible for enhancing useful information and removing redundant information to adaptively supervise the correction of the attentional feature responses. The inputs to the 3D CSAS are the intermediate feature maps \(F \in R^{{H \times W \times D \times C}}\) extracted by the 3D transformer encoder at different levels. 3D CSAS first uses the 3D channel spatial attentional ordering to infer the intermediate feature maps F with the 1-dimensional channel weights \(\:{M}_{C}\left(F\right)\in\:{R}^{1\times\:1\times\:1\times\:C}\) and 3-dimensional spatial weights \(\:{M}_{S}\left({F}^{{\prime\:}}\right)\in\:{R}^{H\times\:W\times\:D\times\:1}\). It then assigns weights to the feature map F in the channel and spatial directions based on the learned weights. The channel attention module can adaptively adjust the feature weights of different channels so that the network focuses on the most representative channels and learns information between the MRI scans of different modalities to improve the segmentation capability of the network. Meanwhile, the spatial attention module adjusts the feature weights of different locations in the same channel so that the network focuses on the most representative spatial locations of gliomas and improves the network’s adaptive ability to different tumors. This 3D channel spatial attention weighting correction strategy can be described as follows:

Here, \(\:\otimes\:\) represents the element-by-element multiplication of the two arrays, and before the multiplication, the broadcast mechanism is used so that \(\:{M}_{C}\left(F\right)\), \(\:{M}_{S}\left({F}^{{\prime\:}}\right)\in\:{R}^{H\times\:W\times\:D\times\:C}\). \(\:{F}^{{\prime\:}{\prime\:}}\) is the feature map after the correction of the 3D channel spatial attention. Next, we introduce the method of obtaining the two sets of weights of \(\:{M}_{C}\left(F\right)\) and \(\:{M}_{S}\left({F}^{{\prime\:}}\right)\). We first use 3DMaxPooling and 3DAvgPooling of the spatial side to obtain the spatial features \(\:{F}_{avg}^{C}\),\(\:{F}_{max}^{C}\in\:{R}^{1\times\:1\times\:1\times\:C}\). Then, \(\:{F}_{max}^{C}\), \(\:{F}_{avg}^{C}\) are learned by the shared MLP layer. The fused feature undergoes Sigmoid to obtain the channel weight \(\:{M}_{C}\left(F\right)\), which is given by the following equation

After assigning the channel weights \(\:{M}_{C}\left(F\right)\) to the intermediate feature maps F to obtain the channel direction modified feature maps \(\:{F}^{{\prime\:}}\in\:{R}^{H\times\:W\times\:D\times\:C}\), we use 3DMaxPooling and 3DAvgPooling in the channel direction on \(\:{F}^{{\prime\:}}\) to obtain \(\:{F}_{avg}^{S}\),\(\:\:{F}_{max}^{S}\in\:{R}^{H\times\:W\times\:D\times\:1}\). We then fuse \(\:{F}_{avg}^{S}\), \(\:{F}_{max}^{S}\) through 3D convolutional learning with a shared convolutional kernel of \(\:(\text{7,7},7)\). The spatial weight \(\:{M}_{S}\left({F}^{{\prime\:}}\right)\) can be obtained after scoring by the Sigmoid function, and the formula is shown as follows.

After assigning the spatial weights \(\:{M}_{S}\left({F}^{{\prime\:}}\right)\) to the feature map \(\:{F}^{{\prime\:}}\) to obtain \(\:{F}^{{\prime\:}{\prime\:}}\), we then use the supervised loss to guide the proposed network to learn the hierarchical features. After decoding the feature mapping \(\:{F}^{{\prime\:}{\prime\:}}\) by the 3D transposed convolution block and the 3D residual convolution block, we upsample the output feature map of the 3D residual convolution block to match the ground truth to compute the loss and supervise the optimization of the feature representations extracted by the encoder. As shown in Eq. 9, the total loss of the DTASUnet is the weighted sum of the output loss and the supervision loss, where λ is the weight of the supervision loss:

Experiments and discussion

Datasets

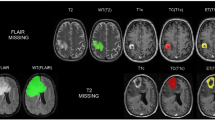

As shown in Fig. 5, the BraTS dataset contains three types of gliomas: peripheral edema (ED), non-enhancing tumor (NET), and enhancing tumor (ET). The task of the segmentation challenge is to accurately segment the three tumor regions: whole tumor (WT), tumor core (TC), and enhancing tumor (ET) using the information from the four modalities. The WT includes the ED, NET, and ET regions, while the TC includes the NET and ET regions. The BraTS 2018 dataset contains 285 patients in the training set and 66 cases in the validation set. The BraTS 2020 dataset contains 369 cases in the training set and 125 cases in the validation set, rendering it a more challenging set to process compared to BraTS 2018. The data for each patient contained T1, T1 CE, T2, and T2 FLAIR 4-contrast MRI images in addition to ground truth, whereas the validation set did not contain ground truth. All datasets were aligned, cranially stripped, and voxel-resampled to 1 × 1 × 1 mm³. The training set ground truth was drawn by experts.

The first and second columns show the brain MRI images of glioma patients with four different contrasts: T1, T1 CE, T2, and T2 FLAIR. The third column displays the corresponding 2D gold standards: the whole tumor region (WT, green + red + yellow), the tumor core region (TC, red + yellow), and the enhancing tumor region (ET, yellow).

Data processing

In this study, to address the imbalance in the voxel distribution across different gliomas and background regions, we first removed the redundant black background regions to avoid an excess of background information interfering with the learning process of the proposed network. Additionally, in the MRI images, the intensity of even the same type of tissue scans may be significantly different between different images due to the use of different acquisition devices. To solve the two problems of noise effects and intensity inhomogeneity, we first removed the highest 1% and lowest 1% of voxels from each scan, and then performed intensity min-max normalization on each scan, as shown in Eq. (10).

where \(\:{z}^{{\prime\:}}\) is the normalized image, \(\:z\) is the input image, and \(\:{z}_{max}\) and \(\:{z}_{min}\) denote the intensity maximum and minimum values, respectively.

Implementation detail

The proposed network is implemented by the deep learning framework PyTorch using a single Nvidia Tesla V100 (16G). Table 1 lists the details of the hyperparameter settings. The batch size of the proposed network is set to 1, Adam is used as the optimizer of the network with an initial learning rate of 1e-4, the learning rate decay strategy is cosine annealing37, and training is performed using the Dice loss function defined in Eq. (11). where C is the total number of segmentation categories, N is the total number of voxels, g is the ground truth, p is the predicted value, and ε is a very small value used to prevent the denominator from being 0. After experimental analysis, the weight λ of the supervised loss was determined to be 0.7.

Evaluation metrics

We used the Dice coefficient and sensitivity as evaluation metrics to evaluate the segmentation results of DTASUnet. In the field of medical image segmentation, the Dice coefficient is the difference between the predicted area of the tumor and ground truth, while sensitivity is used to evaluate the ability of the model to segment the region of interest. The Dice coefficient and sensitivity can be defined as follows:

where TP is true positive, FN is false negative, and FP is false positive. The larger the values of the Dice coefficient and sensitivity, the closer the glioma segmentation map of the proposed network is to the real glioma region; their maximum value is 1.

Ablation experiment of DTASUnet

In this section, because the validation set of BraTS does not provide ground truth, we randomly divided the BraTS 2020 training set into five parts; four for training and one for validation; at a cross-validation ratio of 0.2, which is used to evaluate the effectiveness of the dual transformer encoder and the 3D CSAS module proposed in this study.

Analysis of the dual transformer encoder on the BraTS 2020 datasets

In this section, we analyze the composition of the transformer encoder for DTASUnet. If the encoder uses the local transformer without the shift window, the degradation network performs poor segmentation of the ET region, as shown in the red circle in the second row of the example given in Fig. 6, where the yellow ET region has been incorrectly segmented into the WT region. If all the encoders use the shift local transformer, when compared to the model without the window shift strategy, more local information can be extracted. As shown in Table 2, the Dice score increases from 0.844 to 0.869, while sensitivity increases from 0.860 to 0.868, which verifies the effectiveness of the window shift strategy of DTASUnet. If the encoder uses only the global transformer, although a sufficient quantity of global information can be extracted, local feature extraction is insufficient, and a large number of details conducive to tumor segmentation is lost during the training process. When only using the global transformer, the Dice score is improved by 0.9% when compared to using only the local transformer with a fixed window, but is 1.6% lower when compared to using only the shifted window with a local transformer. If the local information is not sufficiently extracted, although allowing better segmentation results to occur in the WT and TC regions, the segmentation results in the ET region with a smaller range are insufficiently poor. For the dual transformer, which combines the shift local transformer and global transformer, the local and global information of gliomas can be fully extracted (with Dice and sensitivity scores of 0.882 and 0.880, respectively), and the most difficult to segment the ET region has a Dice score of 0.836 and a sensitivity score of 0.842. As shown in Fig. 6, by combining the local and global transformers, the details of the three tumor regions are closer to ground truth compared to the degraded network.

Analysis of the 3D CSAS module on the BraTS 2020 datasets

In this section, we analyze the effectiveness of the 3D channel spatial attention supervised module (3D CSAS). By analyzing Table 3, compared to the degraded network that uses residual convolution blocks instead of CSAS modules, by using 3D channel spatial attention (3D CSA), the feature maps can be weighted in both the channel and spatial regions, useful information can be extracted, and redundant information can be removed, which improves the Dice score of the ET region by 2%. If only the supervised module is used, compared to only 3D CSA, although the Dice score of the WT and TC increased by 0.5% and 1.8% and the sensitivity increased by 0.5% and 1.1%, the Dice and sensitivity scores of the ET region were decreased by 1% and 1.2%, respectively. It can be seen that the use of supervised loss can strengthen the WT and TC regions but suppresses the segmentation results in the ET region, which is because the neural network tends to segment the tumor into the WT and TC regions with a higher number of voxels. 3D CSAS combines 3D CSA and the supervised block, which can retain the advantages of both, as shown in Fig. 7. In the circles of the first row of cases, only DTASUnet using 3D CSAS involves no splicing in the notch of the red tumor region. In the circles of the second row of cases, the degraded networks had all incorrectly segmented many normal brain tissues into the category of glioma, which is effectively suppressed while using 3D CSAS. Compared to the network using only supervised blocks, although 3D CSAS reduced the average sensitivity score by 0.1%, it improved the Dice score of the ET region by 2.4%, the sensitivity score of the ET region by 2.3%, and the average Dice score of all gliomas by 0.6%. In cases where the WT and TC region segmentation results are already high and sufficient for clinical research applications, it is necessary to significantly improve the segmentation accuracy of the more difficult-to-segment ET region. Both quantitative and qualitative analyses have demonstrated that 3D CSAS is a well-suited module for brain glioma segmentation.

Comparison with state-of-the-art methods

In this section, we validate the effectiveness of DTASUnet in performing multimodal MRI brain tumor image segmentation using the validation sets BraTS 2018 and BraTS 2020. We trained the BraTS 2018 and BraTS 2020 training sets separately, segmented the corresponding validation sets without ground truth, and then uploaded the segmentation results to the CBIBA platform to conduct evaluation and comparison with other previously published and high-performing works that also use this evaluation method.

Comparison using the BraTS 2018 validation set

This section compares the segmentation results of DTASUnet with those produced by other high-performing published works on the BraTS 2018 validation set. In the BraTS 2018 validation set, the Dice scores of DTASUnet in the ET, WT, and TC regions were 0.808, 0.905, and 0.845, while the sensitivity scores were 0.820, 0.916, and 0.837, respectively. Table 4 shows the results of the comparison of DTASUnet with the other state-of-the-art methods on the BraTS 2018 validation set. A brief description of these works is given below: Ben et al.38 used class weighting and overlapping patch techniques to address the data distribution imbalance. Kong et al.14 proposed a dual-attention, fully convolutional network that supervises the network segmentation simultaneously with attention gates and hybrid loss. Zhang et al.39 improved the inception module with a grid aggregation strategy. Tong et al.40 designed a multipath feature extraction block for modal input data, which better identifies specific tumor regions. Liu et al.41 proposed a context-aware network that obtains discriminative high-dimensional features from convolutional space and feature interaction maps and selectively aggregates features using an attention-conditional random field. Akbar et al.29 combined 3D U-Net and residual attention to achieve excellent segmentation results for both the BraTS 2018 and BraTS 2020 validation sets. Jiang et al.42 designed the network to focus on learning edge features to improve its performance. For DTASUnet, we used a local and global dual transformer to extract global and local features while optimizing the supervised feature representation using the 3D CSAS module. Compared to the other networks listed in Table 4, DTASUnet improved the Dice score by 7.7–1.3% and the sensitivity score by 7.6–0.1% for the BraTS 2018 validation set. It can therefore be said that DTASUnet has reached state-of-the-art performance in terms of conducting segmentation using the BraTS 2018 validation set.

Comparison of the BraTS 2020 validation set

In the more challenging BraTS 2020 validation set, DTASUnet gave Dice scores of 0.906, 0.844, and 0.790, and sensitivity scores cs of 0.912, 0.832, and 0.788 for WT, TC, and ET, respectively. As shown in Table 5, Ali et al.43 assembled a collection of 2D U-Net and 3D U-Net to segment gliomas. Qamar et al.44 captured information at different scales by superimposing the decomposition of the 3D weighted convolutional layers in the initial block of residuals. Wang et al.18 combined convolution and a transformer to perform glioma segmentation and proposed a 3D automated segmentation network that possessed the biased induction capability of convolution and the global modeling capability of a transformer. Liew et al.13 designed a form of asymmetric channel spatial attention to add spatial context information, which is crucial in medical image segmentation tasks. Jia et al.45 designed networks capable of performing simultaneous segmentation with super-resolution using super-resolution reconstruction branching to improve 3D U-Net segmentation, which was used to enhance the robustness of the network. Raza et al.27 combined deep residual networks with the U-Net model and used the residual network as an encoder to overcome the gradient vanishing problem together with the decoder of the Unet model, which substantially improved the Dice score of the ET region. Compared to the state-of-the-art networks listed in Table 5, DTASUnet achieves an average Dice score improvement of 5.8% to -0.6% and improves the sensitivity score by 6.4–1.5% for the BraTS 2020 validation set. nnUNet24 is indeed a remarkable achievement, achieving the best segmentation results in BraTS2020 competitions. It utilizes a series of sophisticated and complex technical means to improve segmentation accuracy, such as undergoing elaborate data preprocessing and post-processing procedures. In contrast, our work primarily focuses on the innovation level of the network model, appearing relatively simplistic in data preprocessing and post-processing procedures, which is indeed an area where we need to improve.From Table 5 we can also get that DTASUnet al.so achieves excellent results in terms of the Dice and sensitivity scores for ET without compromising the accuracy of WT and TC segmentation. This further demonstrates that DTASUnet not only achieves excellent segmentation results on the BraTS 2018 validation set, but also accurately predicts the location of gliomas in the more difficult-to-segment BraTS 2020 validation set.

Research on the generalization and clinical application of the model

In this section, we use the BTCV2021 (Beyond the Cranial Vault) and FETA2021 (Fetal Brain Annotation) datasets to verify the generalization and clinical application capabilities of the proposed model. The BTCV dataset consists of abdominal CT scans from 30 subjects. Thirteen organs were annotated by interpreters under the supervision of clinical radiologists at Vanderbilt University Medical Center. These organs include the spleen, left kidney, right kidney, gallbladder, liver, esophagus, stomach, inferior vena cava (IVC), splenic vein (PSV), pancreas, left adrenal gland, and right adrenal gland. The FETA dataset consists of 80 T2-weighted single-shot fast spin echo infant brain MRI scans from the University Children’s Hospital Zurich, with 64 scans used for training and 16 scans for validating the network performance. Detailed annotations were provided for seven specific tissues: External Cerebrospinal Fluid (ESF), Grey Matter (GM), White Matter (WM), Ventricles, Cerebellum, Deep Grey Matter (DGM), Brainstem.

By comparing the data in Tables 6 and 7, it can be found that DTASUnet achieves better segmentation results on the two clinical datasets FETA2021 and BTCV2021 compared to the networks 3D U-Net, V-Net and TransBTS. Our model achieved good average Dice scores of 0.820 and 0.848 on the BTCV and FETA clinical datasets for segmentation. At the same time, we invited doctors to observe the segmentation masks and real labels, and received their recognition. By observing Fig. 8, it can also be found that the segmentation mask of the proposed model is the closest to the ground truth. This suggests that DTASUnet can not only achieve excellent results in the BraTS challenge but also deliver outstanding segmentation outcomes on abdominal CT scans and infant brain MRI datasets.This demonstrates the robustness and clinical applicability of the proposed model.

Discussion on the interpretability and resource consumption of the model

The interpretability of deep learning has always been a difficult problem to solve. However, we know that for clinicians and end users, understanding how models make decisions is the key to building trust. As shown in Fig. 9, we use Class Activation Mapping (CAM) to initially increase the interpretability of the model. The heatmap generated by CAM shows the attention of the model to the ET, TC, and WT regions during brain tumor segmentation through color coding. The closer the area is to red, the more important the model considers these parts to be for segmenting the corresponding tumor. CAM can help clinicians and end-users understand which parts of the MRI image are more important for tumor segmentation as perceived by the model. At the same time, we also asked medical experts to observe the heatmap, real labels, and corresponding brain MRI, and gained their trust in the proposed model.

Our model uses local and global dual Transformer and attention supervision mechanism, so the resource consumption is relatively large. As shown in Table 8, we calculated and compared the time required to validate a single case on the BTCV dataset for 3D U-Net, V-Net, TransBTS, and our DTASUnet, as well as their respective average Dice scores. Compared to 3D UNET, VNET, and TransBTS, the validation time for DTASUnet has increased by 0.221 to 1.383 s. However, the sacrifice of resources has led to significant performance improvements, with the proposed model achieving a 3.4–1.6% increase in dice score on the BTCV dataset. While this increase in computational costs may not be significant in some areas, such as tumor diagnosis, improvements are needed for real-time applications or resource-constrained environments.

To further reduce the resource usage of the model, a feasible solution is to use more powerful devices to run the model. In addition, we will continue to explore optimization methods for the model, including lightweighting the model and adopting data preprocessing methods that can reduce computational burden. Ranjbarzadeh, R. et al.47 have outlined the tumor’s scope in advance and selected two patch images of different sizes for training. They achieved excellent brain tumor segmentation results while maintaining a lightweight network. In future work, we will try to apply this data processing strategy to the model to achieve higher segmentation accuracy while further reducing the overall computational complexity.

Conclusion

In this paper, we propose a novel U-shaped network, DTASUnet, to perform the accurate segmentation of brain gliomas. Its encoder consists of dual types of 3D transformers; local and global; and the feature maps are corrected by channel spatial attention-supervised loss blocks. Our 3D automatic segmentation network uses a pyramidal hierarchical architecture, which means that we can efficiently capture different features of the tumor region through multi-level feature extraction. A series of experimental results obtained using the BraTS 2018 and BraTS 2020 datasets show that our proposed method reaches state-of-the-art performance in brain glioma segmentation. The average Dice scores of DTASUnet were 0.853 and 0.847 on the BraTS 2018 and BraTS 2020 validation sets, respectively. Ablation studies also show that our proposed mechanisms and modules help to improve the performance of the network. The performance on the BTCV and FETA datasets proves the generalization ability of the proposed model. In the future, we intend to conduct further research in developing the window division approach of the local transformer and further expand our work on other medical segmentation datasets to further improve the segmentation accuracy and generalizability of DTASUnet. Overall, our study provides a more accurate solution for brain tumor segmentation that may help improve brain tumor diagnosis and treatment in clinical practice.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request. The brain tumor image dataset used is public (URL: https://ipp.cbica.upenn.edu/).

References

Wang, H., Xu, T., Huang, Q., Jin, W. & Chen, J. Immunotherapy for malignant glioma: current status and future directions. Trends Pharmacol. Sci. 41, 123–138. https://doi.org/10.1016/j.tips.2019.12.003 (2020).

Nan, Y. et al. Data harmonisation for information fusion in digital healthcare: a state-of-the-art systematic review, meta-analysis and future research directions. Inf. Fusion. 82, 99–122. https://doi.org/10.1016/j.inffus.2022.01.001 (2022).

Mohan, G. & Subashini, M. M. MRI based medical image analysis: Survey on brain tumor grade classification. Biomed. Signal. Process. Control. 39, 139–161. https://doi.org/10.1016/j.bspc.2017.07.007 (2018).

Pemberton, H. G. et al. Multi-class glioma segmentation on real-world data with missing MRI sequences: comparison of three deep learning algorithms. Sci. Rep. 13, 18911. https://doi.org/10.1038/s41598-023-44794-0 (2023).

Menze, B. H. et al. The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Trans. Med. Imaging. 34, 1993–2024. https://doi.org/10.1109/TMI.2014.2377694 (2015).

Greene, D. J. et al. Behavioral interventions for reducing head motion during MRI scans in children. Neuroimage. 171, 234–245. https://doi.org/10.1016/j.neuroimage.2018.01.023 (2018).

Zhang, D. et al. Cross-modality deep feature learning for brain tumor segmentation. Pattern Recognit. 110, 107562. https://doi.org/10.1016/j.patcog.2020.107562 (2021).

Lu, S. et al. Mutually aided uncertainty incorporated dual consistency regularization with pseudo label for semi-supervised medical image segmentation. Neurocomputing. 548, 126411. https://doi.org/10.1016/j.neucom.2023.126411 (2023).

Ayadi, W., Elhamzi, W. & Atri, M. A deep conventional neural network model for glioma tumor segmentation. Int. J. Imaging Syst. Technol. 33, 1593–1605. https://doi.org/10.1002/ima.22892 (2023).

Roy, S. & Maji, P. Tumor delineation from 3-D MR brain images, Signal, Image and Video Processing 17 3433–3441. (2023). https://doi.org/10.1007/s11760-023-02565-4

Woo, S., Park, J., Lee, J. Y. & Kweon, I. S. CBAM: Convolutional Block attention Module, in: Computer Vision – ECCV 2018, Springer, 3–19. https://doi.org/10.1007/978-3-030-01234-2_1. (2018).

Roy, A. G., Navab, N. & Wachinger, C. Concurrent Spatial and Channel ‘Squeeze & Excitation’ in fully Convolutional Networks, in: Medical Image Computing and Computer Assisted Intervention – MICCAI 2018, Springer, 421–429. https://doi.org/10.1007/978-3-030-00928-1_48. (2018).

Liew, A., Lee, C. C., Lan, B. L. & Tan, M. CASPIANET++: a multidimensional Channel-spatial asymmetric attention network with Noisy Student Curriculum Learning paradigm for brain tumor segmentation. Comput. Biol. Med. 136, 104690. https://doi.org/10.1016/j.compbiomed.2021.104690 (2021).

Kong, D., Liu, X., Wang, Y., Li, D. & Xue, J. 3D hierarchical dual-attention fully convolutional networks with hybrid losses for diverse glioma segmentation. Knowledge-Based Syst. 237, 107692. https://doi.org/10.1016/j.knosys.2021.107692 (2022).

Rehman, M. U., Ryu, J., Nizami, I. F. & Chong, K. T. RAAGR2-Net: a brain tumor segmentation network using parallel processing of multiple spatial frames. Comput. Biol. Med. 152, 106426. https://doi.org/10.1016/j.compbiomed.2022.106426 (2023).

Vaswani, A. et al. Atten. is all you need, 30 (2017).

Liu, J., Zheng, J. & Jiao, G. Transition net: 2D backbone to segment 3D brain tumor. Biomed. Signal. Process. Control. 75, 103622. https://doi.org/10.1016/j.bspc.2022.103622 (2022).

Wang, W. et al. Transbts: multimodal brain tumor segmentation using transformer, in: Medical Image Computing and Computer Assisted Intervention–MICCAI 2021, Springer, 109–119. https://doi.org/10.1007/978-3-030-87193-2_11. (2021).

Ronneberger, O., Fischer, P. & Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation, in: Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015, Springer, 234–241. https://doi.org/10.1007/978-3-319-24574-4_28. (2015).

Chen, L. C., Papandreou, G., Kokkinos, I., Murphy, K. & Yuille, A. L. DeepLab: semantic image segmentation with Deep Convolutional nets, atrous Convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 40, 834–848. https://doi.org/10.1109/TPAMI.2017.2699184 (2018).

Zhou, Z., Siddiquee, M. M. R., Tajbakhsh, N. & Liang, J. UNet++: redesigning skip connections to exploit Multiscale features in image segmentation. IEEE Trans. Med. Imaging. 39, 1856–1867. https://doi.org/10.1109/TMI.2019.2959609 (2020).

Huang, H. et al. UNet 3+: A Full-Scale Connected UNet for Medical Image Segmentation, in: ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1055–1059. (2020). https://doi.org/10.1109/ICASSP40776.2020.9053405

Çiçek, Ö., Abdulkadir, A., Lienkamp, S. S., Brox, T. & Ronneberger, O. 3D U-Net: learning dense volumetric segmentation from sparse annotation, in: Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016, Springer, 424–432. https://doi.org/10.1007/978-3-319-46723-8_49. (2016).

Isensee, F., Jäger, P. F., Full, P. M., Vollmuth, P. & Maier-Hein, K. H. Nnu-net for Brain Tumor Segmentation, in: Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries, Springer International Publishing, 118–132. https://doi.org/10.1007/978-3-030-72087-2_11. (2021).

Li, P. et al. Automatic brain tumor segmentation from Multiparametric MRI based on cascaded 3D U-Net and 3D U-Net++, Biomed. Signal. Process. Control. 78, 103979. https://doi.org/10.1016/j.bspc.2022.103979 (2022).

Wang, J. et al. DFP-ResUNet:Convolutional Neural Network with a dilated Convolutional feature pyramid for Multimodal Brain Tumor Segmentation. Comput. Meth Programs Biomed. 208, 106208. https://doi.org/10.1016/j.cmpb.2021.106208 (2021).

Raza, R., Ijaz Bajwa, U., Mehmood, Y., Waqas Anwar, M. & Hassan Jamal, M. dResU-Net: 3D deep residual U-Net based brain tumor segmentation from multimodal MRI, Biomed. Signal. Process. Control. 79, 103861. https://doi.org/10.1016/j.bspc.2022.103861 (2023).

Oktay, O. et al. Attention u-net: learning where to look for the pancreas, https://doi.org/10.48550/arXiv.1804.03999, (2018). arXiv preprint arXiv:1804.03999.

Akbar, A. S., Fatichah, C. & Suciati, N. Single level UNet3D with multipath residual attention block for brain tumor segmentation. J. King Saud Univ. -Comput Inf. Sci. 34, 3247–3258. https://doi.org/10.1016/j.jksuci.2022.03.022 (2022).

Chen, J. et al. Transunet: Transformers make strong encoders for medical image segmentation, (2021). https://doi.org/10.48550/arXiv.2102.04306, arXiv preprint arXiv:2102.04306.

Cao, H. et al. Swin-Unet: Unet-Like Pure Transformer for Medical Image Segmentation, in: Computer Vision – ECCV 2022pp. 205–218 (Springer, 2023). https://doi.org/10.1007/978-3-319-46723-8_49

Liu, Z. et al. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows, in: 2021 IEEE/CVF International Conference on Computer Vision (ICCV), pp. 9992–10002. (2021). https://doi.org/10.1109/ICCV48922.2021.00986

Peiris, H., Hayat, M., Chen, Z., Egan, G. & Harandi, M. A robust volumetric transformer for accurate 3d tumor segmentation, in: Medical Image Computing and Computer Assisted Intervention, Springer, 162–172. https://doi.org/10.1007/978-3-031-16443-9_16. (2022).

Jia, Q. & Shu, H. Bitr-unet: a cnn-transformer combined network for mri brain tumor segmentation, in: International MICCAI Brainlesion Workshop, Springer, 3–14. https://doi.org/10.1007/978-3-031-09002-8_1. (2021).

Hatamizadeh, A. et al. UNETR: Transformers for 3D Medical Image Segmentation, in: 2022 IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 1748–1758. (2022). https://doi.org/10.1109/WACV51458.2022.00181

Qu, T. et al. Transformer guided progressive fusion network for 3D pancreas and pancreatic mass segmentation, Med. Image Anal. 86, 102801. https://doi.org/10.1016/j.media.2023.102801 (2023).

Loshchilov, I. & Hutter, F. SGDR: Stochastic Gradient Descent with Warm Restarts, (2016). https://doi.org/10.48550/arXiv.1608.03983, arXiv preprint arXiv:1608.03983.

Ben Naceur, M., Akil, M., Saouli, R. & Kachouri, R. Fully automatic brain tumor segmentation with deep learning-based selective attention using overlapping patches and multi-class weighted cross-entropy. Med. Image Anal. 63, 101692. https://doi.org/10.1016/j.media.2020.101692 (2020).

Zhang, Y. et al. MSMANet: a multi-scale mesh aggregation network for brain tumor segmentation. Appl. Soft Comput. 110, 107733. https://doi.org/10.1016/j.asoc.2021.107733 (2021).

Tong, J. & Wang, C. A dual tri-path CNN system for brain tumor segmentation, Biomed. Signal. Process. Control. 81, 104411. https://doi.org/10.1016/j.bspc.2022.104411 (2023).

Liu, Z. et al. CANet: Context Aware Network for Brain Glioma Segmentation. IEEE Trans. Med. Imaging. 40, 1763–1777. https://doi.org/10.1109/tmi.2021.3065918 (2021).

Jiang, M., Zhai, F. & Kong, J. A novel deep learning model DDU-net using edge features to enhance brain tumor segmentation on MR images. Artif. Intell. Med. 121, 102180. https://doi.org/10.1016/j.artmed.2021.102180 (2021).

Ali, M. J., Akram, M. T., Saleem, H., Raza, B. & Shahid, A. R. Glioma segmentation using ensemble of 2D/3D U-Nets and survival prediction using multiple features Fusion, in: International MICCAI Brainlesion Workshop, Springer, 189–199. https://doi.org/10.1007/978-3-030-72087-2_17. (2021).

Qamar, S., Ahmad, P. & Shen, L. HI-Net: Hyperdense inception 3D UNet for Brain Tumor Segmentation, in: International MICCAI Brainlesion Workshop, Springer, 50–57. https://doi.org/10.1007/978-3-030-72087-2_5. (2021).

Jia, Z., Zhu, H., Zhu, J. & Ma, P. Two-branch network for brain tumor segmentation using attention mechanism and super-resolution reconstruction. Comput. Biol. Med. 157, 106751. https://doi.org/10.1016/j.compbiomed.2023.106751 (2023).

Milletari, F., Navab, N. & Ahmadi, S. A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation, in: 2016 Fourth International Conference on 3D Vision (3DV), 2016, pp. 565–571. https://doi.org/10.1109/3DV.2016.79

Ranjbarzadeh, R. et al. Brain tumor segmentation based on deep learning and an attention mechanism using MRI multi-modalities brain images, 11 1–17. (2021). https://doi.org/10.1038/s41598-021-90428-8

Funding

This work is supported by Natural Science Foundation of Shandong Province (ZR2020LZL002 and YDZX2022010) and National Natural Science Foundation of China (12005123).

Author information

Authors and Affiliations

Contributions

Writing—original draft: B.M. ; Conceptualization: G.Y. and B.M. ; Validation: G.Y., Y.W., Q.C., Q.S. and B.L. ; Methodology: B.M., G.Y. and Z.M. ; Resources: G.Y.;Software: G.Y., B.M.and Y.W. ; Data curation: Q.S.,Q.C. and Z.M.; Supervision: Q.S.,B.L. and Z.M. ; Writing—review and editing: G.Y., Q.C. and Y.W.All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ma, B., Sun, Q., Ma, Z. et al. DTASUnet: a local and global dual transformer with the attention supervision U-network for brain tumor segmentation. Sci Rep 14, 28379 (2024). https://doi.org/10.1038/s41598-024-78067-1

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-78067-1

Keywords

This article is cited by

-

Deep Learning for Brain Tumor Analysis: A Systematic Review of the BraTS Dataset

Archives of Computational Methods in Engineering (2026)

-

ResSAXU-Net for multimodal brain tumor segmentation from brain MRI

Scientific Reports (2025)

-

SwinCLNet: a robust framework for brain tumor segmentation via shifted window attention and cross-scale fusion

Scientific Reports (2025)