Abstract

Controlled release of a desired drug from porous polymeric biomaterials was analyzed via computational method. The method is based on simulation of mass transfer and utilization of artificial intelligence (AI). This study explores the efficacy of three regression models, i.e., Kernel Ridge Regression (KRR), Gaussian Process Regression (GPR), and Gradient Boosting (GB) in determining the concentration of a chemical substance (C) based on coordinates (r, z). Leveraging Firefly Optimization (FFA) for hyperparameter optimization, the models are fine-tuned to maximize their predictive performance. The findings unveil notable disparities in the performance metrics of the models, with GB showcasing the most impressive R2 score of 0.9977, indicative of a remarkable alignment with the data. GPR closely trails with an R2 score of 0.88754, while KRR falls short with an R2 score of 0.76134. Additionally, GB manifests the most modest Mean Squared Error (MSE) and Root Mean Squared Error (RMSE) among the trio of models, further cementing its supremacy in predictive precision. These outcomes accentuate the significance of judiciously selecting regression methodologies and optimization approaches for adeptly modeling intricate spatial datasets.

Similar content being viewed by others

Introduction

In the pharmaceutical industry there are two major problems, i.e., batch manufacture and poor solubility of medicines. The current batch mode of operation in pharmaceutical industry is time consuming and wasteful. Therefore, the pharmaceutical industry is shifting from batch to continuous paradigm to keep globally competitive1,2,3. Some unit operations based on continuous processing have been developed for solid-dosage oral formulations, however there are still more underpinning research required to develop integrated continuous model of operation for pharmaceutical industry4,5.

The other challenge for pharmaceutical sector is poor solubility of medicines in water which makes patients take more dose to achieve therapeutic effects. This in turn would cause some side effects for patients when taking high drug dosage6,7. Some new techniques have been developed for enhancing drug efficacy with lower taken dosage of medicines. One of the strategies for efficiency enhancement of medicines is controlled release of drugs which can propose better performance. With use of controlled release systems, the drug can reach the desired site with enough dosage so that the side effects of medicines can be minimized accordingly. Controlled release (CR) of medicines can be done by design of new formulations. In some cases, polymeric materials with biodegradable properties are used as carrier to reach the drug to the desired tissue8,9,10. Understanding the drug release from the polymeric carrier is of fundamental importance and some computational techniques can be employed for studying the release phenomena.

Mass transfer and chemical reactions should be considered for modeling CR of medicines. These models can be integrated to machine learning method to build hybrid approach for modeling of CR of drugs. The advent of Machine Learning (ML) has instigated a metamorphosis within the domain of predictive modeling. ML algorithms have equipped researchers and professionals to confront intricate relationships within datasets, culminating in the development of more precise and intricate models. ML algorithms demonstrate prowess in discerning patterns and extracting invaluable insights from data. They possess the capability to manage substantial data volumes and adeptly navigate through intricate and multi-faceted datasets11,12.

Previous studies have explored the use of ML models along with computational simulation of mass transfer and chemical reactions in estimation of drug concentrations within biomaterials. Alshahrani et al.13 proposed a method based on mass transfer and ML to predict the concentration of drug at different locations within a polymeric matrix. In their study, Decision Tree regression showed the best performance for correlation of dataset with R2 greater than 0.99. Alqarni et al.14 developed Decision Tree, Random Forest, and Extra Tree in estimating drug concentration within polymeric matrix via mass transfer and ML models. Although several ML models have been developed and validated for drug release analysis, more models need to be explored for this field in order to build a holistic analysis of the drug release from biomaterial matrices and to find out the appropriateness of model for each case study.

In this investigation, the evaluation of three commonly utilized regression models, specifically kernel ridge regression (KRR), Gaussian Process Regression (GPR), and Gradient Boosting (GB), is performed in modeling the concentration parameter from release of drug from a polymeric matrix. Gaussian Process Regression offers a flexible framework for regression tasks, capable of capturing complex data relationships without assuming a specific functional form. Its ability to quantify uncertainty makes it particularly valuable in scenarios where reliable predictions and confidence estimates are crucial15. Kernel Ridge Regression embodies a versatile technique amalgamating the advantages of kernel functions and regularization, thereby proving efficacious in capturing elaborate patterns within regression endeavors16. Gradient Boosting Regression stands as a prevalent ensemble method tailored for regression assignments. It constructs a prognostic model incrementally, refining accuracy by mitigating the errors of antecedent models in a stepwise manner. Employing gradient descent on a predefined loss function, feeble learners (commonly shallow decision trees) are tailored to the residual errors, progressively honing the predictive model and attaining superior accuracy in forecasts17. We used an integrated approach in this study for modeling CR of medicines from a porous biomaterial carrier. Mass transfer and chemical reactions are considered for modeling the content of drug inside the carrier. The results are utilized as inputs for building machine learning models. The developed models are trained and compared to find out the best one for correlating the concentration distribution dataset.

Data collection

The dataset under analysis in this study is made of over 15,000 points, containing two input (independent) variables: r quantified in meters, z likewise in meters. Also, the concentration (C) of drug denoted in mol/m3 is the single dependent variable (target output). Indeed, models with two inputs and one output are built using mass transfer dataset. The data are concentration distribution of drug (C) inside a polymeric biomaterial which encapsulates the drug for controlled release13. The geometry of biomaterial (fibrin) and its surrounding fluid is considered, and diffusional mass transfer is assumed for numerical simulations14 . Once the simulations have been successfully conducted, the concentration of drug on each node of geometry was extracted and used for ML modeling18.

The diffusional mass transfer model was used for the matrix and the fluid which may be written as19:

where C is the drug concentration (mol/m3), t is time (s), D is drug diffusivity (m2/s), and R is chemical reaction term.

By numerical solution of the diffusion equation, C as a function of r and z can be obtained entire the systems’ geometry. r and z are the coordinates of cylindrical geometry. More details regarding the model, equations, and numerical simulations can be found elsewhere18,19.

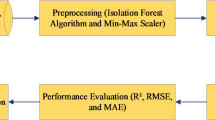

Methodology

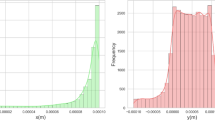

Pre-processing

In the context of this study, pre-processing of the data plays a crucial role in preparing it for further analysis. Two key techniques employed for data preparation are normalization and outlier removal, with the use of the Z-score method. Normalization is a fundamental pre-processing step aimed at scaling the features of the dataset to a standard range, facilitating fair comparison between different variables. The Z-score, also known as standardization, is a commonly utilized method for normalization. It involves transforming each data point to have a mean of zero and a standard deviation of one. Mathematically, the Z-score \(\:{z}_{i}\) of a data point \(\:{x}_{i}\) is calculated as20,21:

Here, \(\:{\upmu\:}\) represents the mean and \(\:{\upsigma\:}\) signifies the standard deviation of the dataset.

Through the implementation of the Z-score normalization method, variables characterized by disparate scales and units are standardized onto a uniform scale, thereby averting the undue influence of any specific feature owing to its magnitude disparity. Moreover, the Z-score method also serves as a robust technique for outlier removal. Data points that fall beyond a certain threshold, typically defined as a specified number of standard deviations from the mean, are considered outliers and can be removed from the dataset. This process helps in improving the robustness of subsequent analyses by mitigating the influence of anomalous observations that could skew the results.

Firefly optimization (FFA)

The Firefly Optimization (FFA) algorithm is a bio-inspired optimization method derived from the luminous conduct of fireflies. In this approach, solutions generated randomly are likened to fireflies, with their luminosity determined by their efficacy in the designated objective function22. The algorithm entails guiding fireflies towards one another across all dimensions, where the brightness of their light signifies the value of the objective function. Additionally, the algorithm incorporates sporadic movements of fireflies to explore the search space comprehensively23. Iterations of the algorithm persist until convergence is achieved, with the rate of convergence modifiable through parameter adjustments like the quantity of fireflies and the extent of the domain. The working steps of FFA algorithm are shown visually in Fig. 1.

In this study, we used FFA for hyper-parameter tuning of regression models. The objective function serves as a critical metric in optimizing the hyperparameters of models, particularly in the context of regression tasks where the R2 score is commonly employed. The aim of the function is to assess the model’s effectiveness by assessing its alignment with the provided dataset. Specifically, when optimizing hyperparameters, this function computes the R2 score, which gauges the extent to which the variance in the output is explained by the inputs. Maximizing the R2 score through hyperparameter tuning ensures that the model achieves the best possible fitting.

The choice of the Firefly Optimization Algorithm (FFA) for hyperparameter optimization in this research was influenced by several significant factors. Although other metaheuristic algorithms such as Genetic Algorithm (GA) and Particle Swarm Optimization (PSO) are widely used, FFA presents unique benefits that suit the intricacy of the specific problem under investigation. FFA is well-suited for tasks involving multimodal search spaces due to its robust exploration-exploitation balance, which enhances the algorithm’s ability to avoid local minima while still efficiently converging to optimal solutions. This feature is critical in tuning machine learning models, where the search space of hyperparameters can be highly non-linear and complex. Additionally, although Bayesian Optimization (BO) is considered reliable for hyperparameter tuning, it can become computationally expensive when dealing with high-dimensional spaces and large datasets. FFA’s iterative nature and parallel search capabilities provide a more scalable solution for large datasets like those used in this study. Furthermore, FFA has demonstrated efficiency in handling continuous optimization problems and offers flexibility in fine-tuning its parameters, such as light absorption and attractiveness, which further improved the performance of the regression models in this context.

Gradient boosting regression (GB)

GB utilizes the concept of boosting, where each subsequent model strives to address the mispredictions. The methodology operates by iteratively training new models on the residuals of the former models, progressively diminishing the discrepancies and enhancing the overall predictive precision. Gradient Boosting Regression employs gradient descent optimization to minimize a loss function, like mean squared error, and determine the optimal parameters for each model. Moreover, the algorithm integrates regularization methods, such as shrinkage and feature subsampling, to avert overfitting and enhance the generalization capability17. Mathematically, the training portion can be assigned as \(\:\{\left({x}_{i},{y}_{i}\right){\}}_{i=1}^{N}\), where \(\:{x}_{i}\) stands for the inputs and \(\:{y}_{i}\) is the corresponding output. GB tries to approximate the true relationship between the input features and target values by iteratively minimizing a loss function \(\:L\left(y,F\left(x\right)\right)\), where \(\:F\left(x\right)\) represents the ensemble model’s prediction. The ensemble model is constructed as a weighted sum of weak learners, typically regression trees, denoted as \(\:F\left(x\right)={\sum\:}_{k=1}^{K}{f}_{k}\left(x\right)\), where \(\:{f}_{k}\left(x\right)\) denotes the prediction of the k-th weak learner. Central to the GB algorithm is the iterative optimization of the ensemble model’s parameters by sequentially fitting weak learners to the residuals of the previous iterations. Specifically, at each iteration k, a weak learner \(\:{f}_{k}\left(x\right)\) is trained to approximate the negative gradient of the loss function with respect to the ensemble model’s prediction, denoted as \(\:-\frac{\partial\:L\left(y,F\left(x\right)\right)}{\partial\:F\left(x\right)}\). Subsequently, the contribution of the newly added weak learner is scaled by a learning rate \(\:{\upnu\:}\), and the ensemble model is updated accordingly24. This iterative process of gradient descent coupled with the ensemble learning framework enables GB to systematically refine the model’s predictive capabilities, gradually reducing the residual errors and enhancing the overall predictive accuracy. Furthermore, GB boasts a remarkable resilience to overfitting, thanks to its judicious control over model complexity through hyperparameters.

Gaussian process regression (GPR)

Gaussian process regression represents a non-parametric Bayesian method employed in regression tasks. It encompasses the creation of distributions over functions, enabling the modeling, investigation, and utilization of unfamiliar functions25. Fundamentally, GPR embodies the concept of representing functions as derived from a Gaussian process, where every limited set of function values adheres to a collective Gaussian distribution. This pivotal characteristic endows GPR with a remarkable flexibility, enabling it to capture intricate patterns while quantifying the inherent uncertainty in predictions26. Formally, let us denote a function f(x) as a member of a Gaussian process with covariance k(x, x’) and mean m(x). Here, x represents the input space, and x’ denotes another point in the input space. The mean function m(x) encapsulates our prior beliefs about the function’s behavior, while the covariance function k(x, x’) governs the smoothness and correlations between different points in the input space. Typically, popular choices for the covariance function include the squared exponential or Matérn kernels, each offering distinct characteristics suitable for different data scenarios. Central to GPR is the inference of the posterior distribution over functions given observed data. Leveraging Bayes’ theorem, GPR seamlessly combines prior knowledge with actual set to yield a posterior distribution that encapsulates updated beliefs about the underlying function. This posterior distribution not only furnishes point estimates but crucially quantifies uncertainty through its variance, thereby offering a comprehensive understanding of the fitting. Moreover, GPR extends beyond mere point predictions, offering a rich tapestry of insights into the underlying data.

Kernel ridge regression (KRR)

The kernel ridge regression technique serves as a predictive strategy employed in scenarios where numerous predictors exhibit nonlinear associations with the target variable. This methodology encompasses the transformation of predictor (input / independent) variables into a high-dimensional realm through the application of a kernel function. The subsequent estimation of the predictive regression model entails the utilization of a shrinkage estimator to mitigate the risk of overfitting. The versatility of this approach extends to the incorporation of lags from the dependent variable or other individual variables as predictive factors, a practice often sought after in macroeconomic and financial contexts. Through Monte Carlo simulations and practical applications, it has been demonstrated that kernel ridge regression yields more precise prognostications compared to conventional linear methodologies like principal component regression, particularly in situations characterized by a multitude of predictors. Mathematically, KRR is a regression method combining kernel methods with ridge regression. The objective function of KRR minimizes the regularized empirical risk27,28:

Here, \(\:y={\left[{y}_{1},{y}_{2},\dots\:,{y}_{N}\right]}^{T}\) represents the target values, K indicates the Gram matrix with elements \(\:{K}_{ij}=k\left({x}_{i},{x}_{j}\right)\) generated by the kernel function \(\:k\left(x,{x}^{{\prime\:}}\right)\), and \(\:{\upalpha\:}={\left[{{\upalpha\:}}_{1},{{\upalpha\:}}_{2},\dots\:,{{\upalpha\:}}_{N}\right]}^{T}\)is the vector of coefficients. The parameter \(\:{\uplambda\:}\) controls the regularization. The prediction function f(x) is expressed as29:

KRR’s computational efficiency is notable as its complexity depends solely on the size of the dataset through the Gram matrix K, making it suitable for large-scale datasets. In essence, KRR efficiently captures complex data relationships while mitigating overfitting, making it a robust tool for regression tasks.

Results and discussion

The fitting accuracy for the models, i.e., KRR, GPR, and GB was evaluated on a dataset of mass transfer. The main and only response in this work is concentration of drug inside the biomaterial which is calculated via mass transfer modeling and ML techniques. Indeed, mass transfer modeling data is used for training as well as validation of ML models. Table 1 summarizes the hyperparameters utilized for each model alongside their corresponding performance metrics in Tables 2 and 3.

The Gradient Boosting model stands out as the top performer, achieving an impressive R2 of 0.9977, indicating its exceptional ability to capture the variability in the data and make accurate predictions. It also exhibits the MSE and RMSE among the models, demonstrating its superior predictive capability. This fact is confirmed by Fig. 2 which is observed and actual values comparison using this model. Gaussian Process Regression (GPR) follows closely behind, with a commendable R2 score of 0.88754, revealing its performance in capturing complex data associations. Although Kernel Ridge Regression (KRR) performs slightly lower in terms of R2 score compared to the other models, it still provides reliable predictions with an R2 score of 0.76134. Figures 3 and 4 are observed and actual values comparison using these two models. Considering the comprehensive data and statistics, the GB model emerged as the optimal choice in this research. Figure 5 illustrates the three-dimensional representation of concentration utilizing this model. Furthermore, partial dependency visualizations are depicted in Figs. 6 and 7. Lastly, Fig. 8 showcases permutation-based feature importances via GB model. Permutation-based feature importance evaluates a feature’s significance by shuffling its values and observing the impact on the model’s performance30. A significant drop in accuracy indicates that the feature is important, while a small change suggests it is less important. This method is versatile and can be used with any machine learning model14. The change in drug concentration is obviously seen which is due to the mass transfer mainly by diffusion inside the biomaterial. Diffusional mass transfer along with the chemical reaction reduces the solute content in the carrier13.

Conclusion

In summary, we conducted a comprehensive assessment of the efficacy of Gaussian Process Regression (GPR), Kernel Ridge Regression (KRR), and Gradient Boosting (GB) models in forecasting drug concentrations based on spatial coordinates for release from a biomaterial matrix. Numerical simulation data was used as the source for training and validation of ML models. By leveraging Firefly Algorithms (FFA) for hyperparameter optimization, our analysis unveiled discernible disparities in model performance, with GB showcasing superior predictive precision, trailed by GPR and KRR. These results highlight the importance of meticulous selection of regression methodologies and optimization approaches for proficiently modeling intricate spatial datasets. Future endeavors could delve into exploring alternative regression algorithms and optimization techniques to bolster predictive capabilities and propel advancements in spatial data analysis.

Data availability

The data supporting this study are available when reasonably requested from the corresponding author.

References

Hyer, A. et al. Continuous Manufacturing of active Pharmaceutical ingredients: current trends and perspectives. Adv. Synthesis Catal. 366 (3), 357–389 (2024).

Sundarkumar, V. et al. Developing a Modular Continuous Drug Product Manufacturing System with Real Time Quality Assurance for Producing Pharmaceutical Mini-Tablets (Journal of Pharmaceutical Sciences, 2023).

Velez-Silva, N. L., Drennen, J. K. & Anderson, C. A. Continuous manufacturing of pharmaceutical products: a density-insensitive near infrared method for the in-line monitoring of continuous powder streams. Int. J. Pharm. 650, 123699 (2024).

Bhalode, P., Chen, Y. & Ierapetritou, M. Hybrid modelling strategies for continuous Pharmaceutical Manufacturing within Digital Twin Framework, in Computer Aided Chemical Engineering, Y. Yamashita and M. Kano, Editors. Elsevier. 2125–2130. (2022).

Jelsch, M. et al. Model predictive control in pharmaceutical continuous manufacturing: a review from a user’s perspective. Eur. J. Pharm. Biopharm. 159, 137–142 (2021).

Aslam, M. et al. Study the solubility of pharmaceutical ingredients and their eutectic mixtures: an in-depth density functional theory and molecular dynamics simulations approaches. J. Mol. Liq. 397, 124070 (2024).

Elder, D. P., Holm, R. & Diego, H. L. Use of pharmaceutical salts and cocrystals to address the issue of poor solubility. Int. J. Pharm. 453 (1), 88–100 (2013).

Lim, J. V., Nepacina, M. R. & Hsu, Y. C. The Study of Designing a Controlled drug Release Using oxaliplatin-loaded Hydrogel for Ovarian cancer Treatmentp. 105326 (Journal of the Taiwan Institute of Chemical Engineers, 2024).

Liu, J., Cabral, H. & Mi, P. Nanocarriers address intracellular barriers for efficient drug delivery, overcoming drug resistance, subcellular targeting and controlled release. Adv. Drug Deliv. Rev. 207, 115239 (2024).

Shi, Z., Hu, Y. & Li, X. Polymer mechanochemistry in drug delivery: from controlled release to precise activation. J. Controlled Release. 365, 259–273 (2024).

Sugiyama, M. Introduction to Statistical Machine Learning (Morgan Kaufmann, 2015).

Candanedo, I. S. et al. Machine learning predictive model for industry 4.0. in Knowledge Management in Organizations: 13th International Conference, KMO Žilina, Slovakia, August 6–10, 2018, Proceedings 13. 2018. Springer. (2018).

Alshahrani, S. M., Alotaibi, H. F. & Alqarni, M. Modeling and validation of drug release kinetics using hybrid method for prediction of drug efficiency and novel formulations. Front. Chem. 12, 1–12 (2024).

Alqarni, M. et al. Model development using hybrid method for prediction of drug release from biomaterial matrix. Chemometr. Intell. Lab. Syst. 253, 105216 (2024).

Rasmussen, C. E. & Williams, C. K. Gaussian Processes for Machine LearningVol. 1 (Springer, 2006).

Vovk, V. Kernel Ridge Regression, in Empirical Inferencep. 105–116 (Springer, 2013).

He, Z. et al. Gradient boosting machine: a survey. arXiv preprint arXiv:1908.06951, (2019).

Maxwell, D. J. et al. Development of rationally designed affinity-based drug delivery systems. Acta Biomater. 1 (1), 101–113 (2005).

COMSOL Multiphysics Version 3.5a: COMSOL Reaction Engineering Lab Model Library. (2008).

Henderi, H., Wahyuningsih, T. & Rahwanto, E. Comparison of Min-Max normalization and Z-Score normalization in the K-nearest neighbor (kNN) algorithm to test the accuracy of types of breast Cancer. Int. J. Inf. Inform. Syst. 4 (1), 13–20 (2021).

Aggarwal, V. et al. Detection of spatial outlier by using improved Z-score test. in. 3rd International Conference on Trends in Electronics and Informatics (ICOEI). 2019. IEEE. (2019).

Yang, X. S., Hosseini, S. S. S. & Gandomi, A. H. Firefly algorithm for solving non-convex economic dispatch problems with valve loading effect. Appl. Soft Comput. 12 (3), 1180–1186 (2012).

Yang, X. S. Cuckoo Search and Firefly Algorithm: Theory and Applications 516 (Springer, 2013).

Zhu, F. et al. A multi-layer nesting and integration approach for predicting groundwater levels in agriculturally intensive areas using data-driven models. J. Hydrol. 643, 132038 (2024).

Schulz, E., Speekenbrink, M. & Krause, A. A tutorial on gaussian process regression: modelling, exploring, and exploiting functions. J. Math. Psychol. 85, 1–16 (2018).

Sun, Z. et al. Application of Gaussian Process Regression Model in Industry. in. 2nd International Conference on Robotics, Automation and Artificial Intelligence (RAAI). IEEE. (2022).

Zhdanov, F. & Kalnishkan, Y. An identity for kernel ridge regression. Theor. Comput. Sci. 473, 157–178 (2013).

Welling, M. Kernel ridge regression. Max Welling’s classnotes in machine learning, 1–3. (2013).

Zhang, Y., Duchi, J. & Wainwright, M. Divide and conquer kernel ridge regression. In Conference on learning theory. PMLR. (2013).

Radivojac, P. et al. Feature selection filters based on the permutation test. in Machine Learning: ECML : 15th European Conference on Machine Learning, Pisa, Italy, September 20–24, 2004. Proc. 15. 2004. Springer. (2004).

Acknowledgements

The authors extend their appreciation to Prince Sattam bin Abdulaziz University for funding this research work through the project number (PSAU/2024/R/1445). The authors extend their appreciation to Princess Nourah Bint Abdulrahman University, Riyadh, Saudi Arabia for funding this work under researcher supporting project number PNURSP2024R205. The authors extend their appreciation to the Deanship of Research and Graduate Studies at King Khalid University for funding this work through large group Research Project under grant number (RGP2/24/45).

Funding

This work was supported by Princess Nourah Bint Abdulrahman University researchers supporting project number (PNURSP2024R205), Princess Nourah Bint Abdulrahman University, Riyadh, Saudi Arabia. The authors extend their appreciation to the Deanship of Research and Graduate Studies at King Khalid University for funding this work through large group Research Project under grant number (RGP2/24/45). This study is supported via funding from Prince Sattam bin Abdulaziz University project number (PSAU/2024/R/1445).

Author information

Authors and Affiliations

Contributions

Saad M Alshahrani: Methodology, Funding, Formal analysis, Investigation, Writing, Hadil Faris Alotaibi: Conceptualization, Formal analysis, Validation, Writing, Funding, M. Yasmin Begum: Conceptualization, Formal analysis, Investigation, Validation, Writing, Visualization, Funding.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Alshahrani, S.M., Alotaibi, H.F. & Begum, M.Y. Computational analysis of controlled drug release from porous polymeric carrier with the aid of Mass transfer and Artificial Intelligence modeling. Sci Rep 14, 28422 (2024). https://doi.org/10.1038/s41598-024-79749-6

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-79749-6

Keywords

This article is cited by

-

Chemometric and computational modeling of polysaccharide coated drugs for colonic drug delivery

Scientific Reports (2025)