Abstract

Time series analysis predicts the future based on existing historical data and has a wide range of applications in finance, economics, meteorology, biology, engineering, and other fields. Although the combination of decomposition techniques and machine learning algorithms can effectively solve the problem of predicting nonstationary sequences, this kind of decomposition-integration-prediction strategy of the prediction method has serious defects. After the decomposition of the division of the training set and the test set, the information of the test set in the process of decomposition of the information leakage ultimately shows a high accuracy of the prediction of the illusionary. This paper proposes three improvement strategies for this type of “information leakage” problem: sliding window decomposition (SW-EMD), single training and multiple decomposition (STMP-EMD), and multiple training and multiple decomposition (MTMP-EMD). They are combined with a bidirectional multiscale temporal convolutional network (MSBTCN), bidirectional long- and short-term memory network (BiLSTM), and attention mechanism (DMAttention), which introduces a dependency matrix based on cosine similarity to be applied to water quality prediction. The experimental results show that the model achieves good performance in the prediction of three water quality indicators (pH, DO and KMnO4), and the accuracies of the three models proposed in this paper are improved by 1.958% and 0.853% in terms of the RMSE and MAPE, respectively, compared with those of the mainstream LSTM models. The key contributions of this study include the following: (1) three methods are proposed to improve the class EMD decomposition, which can effectively solve the problem of “information leakage” that exists in the current models via class EMD decomposition; (2) the CEEMDAN-MSBTCN-BiLSTM-DMAttention model structure is innovated by combining improved class EMD decomposition methods; and (3) the three improved decomposition methods proposed in this paper can effectively solve the problem of “information leakage” and optimize the prediction model at the same time. This study provides an effective experimental method for water quality prediction and can effectively address the problem of “overfitting” models via class EMD decompositions during model training and testing.

Similar content being viewed by others

Introduction

Background

Time series forecasting has been a hot topic of academic interest and is important in business decision making and financial markets, including natural science research. Mainstream time series models tend to focus on parametric models provided by expertise in the application area, such as autoregression (AR)1, exponential smoothing2 or structural time series models3. However, most models that study linear time series do not handle nonlinear time series well4. On the other hand, machine learning implements stochastic dependencies between the past and the future inferred from observations and can be used to address time series through supervised learning, multistep prediction, and other methods5. In recent years, deep learning architectures capable of adapting to diverse time series datasets in different domains have been proposed by scholars and are gradually becoming an important part of novel time series forecasting models6.

Integrated learning methods have been widely used in various research areas, such as pattern classification, regression and time series forecasting, by strategically combining multiple learning algorithms to obtain better prediction performance. For time series data, divide-and-rule7 is a common concept in time series forecasting. Compared with mainstream time‒frequency analysis methods, EMD can effectively avoid the problem of setting functions and parameters and can decompose complex time series into multiple IMFs and residual series8. The IMF therein represents local signals with time‒frequency characteristics on different time scales, which is used to reveal the spectral volatility of local signals; the residuals map the long-term trend of the overall signal. Moreover, deep learning has a better effect on the prediction of nonlinear, dynamic, multivariate and complex time series9, which are gradually becoming an important part of novel time series forecasting models.

In previous time series prediction problems, when mainstream decomposition and deep learning methods were used, some of the information or features in the dataset used for testing were exposed to the model in the process of model training and testing, which caused the model to present “illusion” of very high prediction accuracy on the test set, a problem known as “information leakage”. This problem is called “information leakage”. The existence of “information leakage” leads to unreliable training and testing results, and the generalization ability of the model cannot be correctly evaluated. In the context of time series forecasting, which essentially involves the use of historical data to guess and estimate future data, models need to have some generalizability. However, the existence of “information leakage” means that the information in the test set becomes “known”, causing the model to lose its ability to generalize to unknown data. In addition, the number of components is heavily dependent on the sequence distribution and length. The impact of “information leakage” is profound. This causes the model to overfit specific samples and patterns in the training set instead of learning generalized laws. This means that the model may perform well on the test set but not in real applications. If “information leakage” occurs, the performance evaluation of the models in the test set will be invalid because the test set is not significantly representative of the unknown data. This may lead to a misleading understanding of the functionality and limitations of the model. At present, scholars have recognized the problem of future “unknown” data involved in the decomposition process25, and some scholars have adopted stepwise decomposition and other methods to prevent it27; however, excessive computational effort is still needed to improve the method, and there is no wide-scale research or methodological improvement to address this problem28.

In this paper, an improved completely ensemble empirical modal decomposition with adaptive noise (CEEMDAN) method is proposed for water quality prediction, and three different improved CEEMDAN decomposition methods are used to address the “information leakage” problem. Moreover, unlike mainstream temporal convolutional networks (TCNs) and attention, this paper uses a bidirectional multiscale improved TCN model MSBTCN10 and introduces the cosine similarity-based dependency matrix attention mechanism DMAttention11. On the basis of the above, by using three improved CEEMDAN-MSBTCN-BiLSTM-DMAttention model structures, this paper effectively captures the dynamic data in the time series model and achieves better prediction results while ensuring the effectiveness of the model.

Related work

Currently, forecasting via a combination of mainstream decomposition and deep learning methods is an important part of current time series forecasting, and in this paper, three different types of methods are investigated: machine learning-based, deep learning, and empirical modal decomposition methods.

Supervised machine learning is commonly used in time series forecasting problems. Kane et al.12 used random forest and ARIMA for time series forecasting of avian influenza and compared them to determine their effectiveness. Masini et al.13 investigated the problem of supervised machine learning on time series forecasting from the problems of supervised machine learning for the LASSO, feed forward and recurrent versions of shallow and deep neural networks and other methods on financial and economic time series problems. Some newer machine learning algorithms have also been applied to time series forecasting, with Uddin et al.14 using models such as K nearest neighbor regression (KNN), the extreme gradient boosting algorithm (XGBoost), and support vector machines (SVMs) to forecast time series. However, mainstream machine learning models may suffer from the shortcomings of a small applicability range and high requirements on data features, whereas deep learning approaches can specifically address the high dimensionality of data and temporal challenges.

Commonly used deep learning time series prediction models include artificial neural networks (ANNs)15,16, recurrent neural networks17, and convolutional neural networks (CNNs). These sequence models can effectively extract temporal features and predict them18. Among them, ANNs have been widely used in time series prediction in the past decade, but they often fall into the dilemma of local minima19. In contrast, Hewage et al.20 used a temporal convolutional network (TCN) to better capture temporal features, capturing long-range dependencies in time series and combining them with long short-term memory (LSTM) for prediction. By combining these methods with long short-term memory networks (LSTMs), bidirectional long short-term memory neural network layers (BiLSTM), etc., in addition to attentional mechanisms21, long-range temporal dependencies can be effectively captured22, which further enhances the model’s ability to solve nonlinear time series prediction problems.

Empirical mode decomposition (EMD)23 is also widely used in nonlinear time series data processing and can improve the prediction accuracy and reduce the effect of noise. However, EMD has problems such as mode mixing and matrix alignment. Therefore, some scholars have introduced improved EMD methods, such as variational mode decomposition (VMD)24 and complete ensemble empirical mode decomposition (CEEMDAN)25. However, many problems cannot be solved by the current mainstream improvement methods in EMD. Qian et al.26 argued that several decomposition methods that are currently widely used raise the problems of large computational volumes and the use of future data in model training, resulting in “inflated” prediction results. Weng et al.27 proposed the use of multiple predictions to avoid the problem of “inflated” prediction results caused by the use of future data in model training, whereas some scholars have adopted other improved algorithms, where each decomposition adds one unit of new data to the training set for redecomposition and prediction, which may lead to excessive computational burden28. Gao R et al.29 combined the empirical wavelet transform (EWT) with RVFL and proposed a forward decomposition mechanism to realize EWT, which effectively avoids the data leakage problem in the prediction process. Wang Z et al.30 captured the complex multiscale relationships among NSP, SSP, and SSV data by extracting multiple frequency-aligned oscillatory patterns, which helps in multivariate forecasting of ship prices at each time scale.

In larger-scale experiments, Agnihotri J et al.31 used the Noah land surface model with multiphysics (MP; Noah-MP) options and the Routing Application for Parallel Computing Ion of Discharge (RAPID) over the Mississippi River Basin. Khalil M A et al.32 measured nine resistivity lines via Wenner and dipole‒dipole arrays on the basis of the high resolution, accuracy and data coverage of electrical resistivity tomography (ERT). For the mass transport problem in fractured porous media, Khoei A R et al.33 used the extended finite element method (X–FEM) to discretize the discontinuity of a medium to resolve the issue of discontinuity in the field variables and the Newmark integration scheme to discretize the governing equations in the time domain. Zare N et al.34 employed two quantitative methodologies, the CDRI and the STDRM, to assess urban flood resilience in District 6 of Tehran over a specific time frame. The selection of the fewest features possible without impairing the accuracy of the results in feature subset selection (FSS) is a multiobjective optimization issue35. Larijani A et al.35 used the modified JAYA (MJAYA) algorithm to optimize the C and gamma parameters of the support vector machine (SVM) classifier.

At present, many scholars have widely explored and adopted different methods, and all three methods have been widely used in time series prediction problems. However, methods for solving the problem of “information leakage” have not been widely used. This paper discusses the problem of exposing a dataset to a test set.

Main contributions

The main contributions of this paper are as follows: (1) Three methods are proposed to improve the class EMD decomposition, which can effectively solve the “information leakage” problem that exists in current models via class EMD decomposition, where model training will obtain future data information and features in advance. (2) The proposed CEEMDAN-MSBTCN-BiLSTM-DMAttention method, which uses the improved EMD decomposition method, is a good solution to the “information leakage” problem that exists in current models via class EMD decomposition. The MSBTCN-BiLSTM-DMAttention model using the improved class EMD decomposition method, combined with the ablation study, proves that its model structure is effective while realizing better water quality prediction, which is suitable for water quality prediction tasks. (3) The three improved decomposition methods, STMP-CEEMDAN, MTMP-CEEMDAN and SW-CEEMDAN, proposed in this paper are useful in the prediction of the three indicators of pH, DO, and KMnO4, and the RMSE and MAPE results are smaller, which can effectively solve the problem of “information leakage” and optimize the prediction model at the same time.

Section plan

In this work, water quality prediction was chosen as an improved application of the CEEMDAN decomposition method, and the hydrogen ion concentration index (pH), dissolved oxygen (DO), and potassium permanganate (KMnO4) were used as the main indicators for water quality detection and analysis. pH, DO, and KMnO4 can visually reflect the changes in water quality and the problems of aquatic organisms, the water environment, and the chemical properties of water bodies and can be used to analyze the degree of pollution and the status of the water body through the detection and prediction of these three indicators. By detecting and predicting these three indicators, it is useful to analyze the degree of pollution and water quality status of water bodies. The specific section of this paper is as follows:

“Methods” section analyzes the resolution of the “information leakage” problem and presents three improved EMD methods: SW-EMD, STMP-EMD, and MTMP-EMD, as well as the CEEMDAN method and its integration with BiLSTM for comparison with the improved methods.

“Models” section presents the models used in this paper, including the MSBTCN, BiLSTM, and DMAttention, highlighting their structure and contribution to improving model performance. This section presents the structure of the final improved model.

“Experiment” section specifically presents the experimental analysis, introduces the dataset, evaluation metrics and parameter settings, analyzes the model prediction effects in conjunction with the comparative models, and further analyzes the model validity by carrying out an ablation study.

Based on the above research process, “Conclusion” section summarizes the conclusions of this paper.

Methods

EMD methods are signal decomposition methods that are used to decompose a complex signal into multiple components. However, signal decomposition has the problem of exposing some information or features of the model training and testing process to the model, which is called “information leakage”. This problem is called “information leakage”, which is a common problem in signal decomposition and can lead to unreliable training and testing results and the inability to correctly assess the generalization ability of the model.

Models that use information or features of future data in model training and thus obtain information and features of future data in advance may overfit specific samples and patterns in the training set and are not realistically learning generalized laws. Focusing on noise or specific features in the training set results in a model that performs better on the test set but performs poorly in real applications. In particular, the essence of time series forecasting is to use historical data to guess and estimate future data, and its model needs to have a certain generalization ability, while the existence of “information leakage” means that the information in the test set becomes “known”, resulting in the model losing the ability to generalize to unknown data. The existence of “information leakage” means that the information in the test set becomes “known”, which causes the model to lose the ability to generalize to unknown data, and the performance evaluation of the model in the test set will not even be valid and will not be able to accurately reflect its performance in the real scene.

For general deep learning models, there is a risk of “information leakage” caused by utilizing the information or features of the test data in the process of data preprocessing, feature engineering and hyperparameter tuning, so it is possible to avoid “information leakage” by cross validation, dividing the dataset in advance, and utilizing the independence of feature engineering and hyperparameters to avoid “information leakage”. Therefore, “information leakage” can be avoided by cross validation, prior division of datasets, feature engineering and hyperparameter independence. However, when scholars use signal decomposition and other related methods, they need to use future data to decompose historical data, which will inevitably make the information and features of test data pass into the model, thus causing “information leakage”.

The key to avoiding “information leakage” is to ensure that all the data and features used for training are available before the prediction moment, i.e., no future information is used, during the data preprocessing and model training phases. Scholars have conducted research to improve the decomposition method. On the one hand, some scholars have used step-by-step decomposition and step-by-step prediction, where a new unit of data is added at each decomposition training, but the computational burden is heavy; on the other hand, some scholars have not explored more ways to solve “information leakage” and the limitations of the methods used. In this paper, to address the problems of class EMD signal decomposition methods, the combination of multiple trainings and multiple predictions is used to improve the model, and three solutions are proposed, namely, SW-EMD decomposition by sliding window decomposition, STMP-EMD decomposition with single training and multiple decomposition predictions of the training set, and MTMP-EMD decomposition with multiple trainings and multiple decomposition predictions. While drawing on the research of past scholars, a sliding window form of multiple prediction multiple decomposition is used in this paper to ensure that the number of computations is within the appropriate range. The three methods proposed in this paper enable the model not to access future data during the training and validation phases and accurately reflect the performance during model evaluation and validation, solving the problem of “information leakage”.

Improved EMD decomposition

SW-EMD

In this work, it is assumed that the time series data used for model training and testing are D and the length is T + k, the first T data are used for model training, the last k data are used for model testing, and the first M data are used to predict the last N data. The SW-EMD method constructs the samples by sliding decomposition, which first selects two windows of data and decomposes them separately, and the two windows slide N data points each time and repeat the cycle until all the training samples are obtained. until all the training samples are obtained. The specific steps are as follows:

-

(1)

Decompose the data of D[1:M] to obtain the set of components X, decompose D[N + 1:M + N] to obtain the set of components Y, and then construct a sample (x, y) for each component separately, where x is the first M data and y is the last N data;

-

(2)

Decompose D[N + 1:M + N] and D[M + N + 1:M + 2 N], respectively, with N + 1: M + N as x and M + N + 1:M + 2 N as y. Again, generate a sample for all the components;

-

(3)

Repeat the above steps with both windows sliding N data at a time until the end of the training set to obtain all the training samples.

For formal prediction, the nearest M data are selected each time for decomposition, and then the prediction is superimposed. The prediction algorithm is shown in Table 1.

For formal prediction, the nearest M data are selected for decomposition each time, followed by prediction overlay. The SW-EMD decomposition is computationally intensive and has the problem that the number of IMFs decomposed per T data points needs to be consistent; otherwise, the trained model cannot be used.

The specific working principle of SW-EMD decomposition is shown in Fig. 1:

SW-EMD aims to address the “information leakage” problem in EMD decomposition by using a sliding window approach. This method ensures that the model does not use future data information during training, thus avoiding leakage.

STMP-EMD

STMP-EMD decomposition is a class EMD method that uses single training and multiple predictions for the decomposed dataset to avoid “information leakage”. As in “SW-EMD” section, it is assumed that the first T data are decomposed for training, and the first M data are used to predict the next N data points for prediction. The specific steps are as follows:

-

(1)

First, decompose the first T data and train a model for each component;

-

(2)

When predicting, first use the decomposition data of D[T-M + 1:T] to directly obtain the predicted value of D[T + 1:T + N]; then, decompose the data D[N + 1:T + N] and use D[T-N + 1:T + M-N] to obtain the predicted value of D[T + N + 1:T + M];

-

(3)

Cyclic prediction until the end of prediction.

The prediction algorithm of this method is shown in Table 2.

As shown in Table 2, the computational effort is lower than that of SW-EMD because this method trains only once and does not perform multiple training and sliding decompositions. At the same time, it is the same as SW-EMD decomposition: the number of IMFs decomposed from each M dataset must be consistent.

The specific working principle of STMP-EMD decomposition is shown in Fig. 2:

STMP-EMD uses single training and multiple predictions on the decomposed dataset to avoid “information leakage”. Compared with SW-EMD, STMP-EMD reduces the computational effort because it trains only once and does not perform multiple training and sliding decompositions. However, it also requires that the number of IMFs decomposed from each M dataset be consistent.

MTMP-EMD

MTMP-EMD decomposition is a class EMD method that uses multiple training steps and multiple predictions for the decomposed dataset. First, the first T data decomposition is trained, and for prediction, the first M decomposed component data are used to predict the latter N component data. Then, the components are superimposed to obtain the predicted value of the latter N data in this paper, and the cycle is repeated. The assumptions are consistent with those in “SW-EMD” section, and the specific steps are as follows:

-

(1)

Decompose the first T data to obtain multiple components and then train a model for each component;

-

(2)

After the model is trained, this paper uses the data of D[T-M + 1:T] to obtain the N predicted outputs of each component model and then superposes them to obtain the predicted values of D[T + 1:T + N];

-

(3)

Decompose the data of D[N + 1:T + N] again to obtain multiple components and then train a model for each component. The M data of D[T-M + N + 1:T + N] are then utilized to obtain N predictions for each component model, which are then superimposed to obtain N predictions for D[T + 1 + N: T + 2 N + 1];

-

(4)

Repeat the above 123 steps, slide N data in the decomposition window each time, decompose and train the model for the data in the window, and finally make the prediction until all the predicted values of D[T + 1:T + k] are obtained;

-

(5)

Calculate the evaluation index via the predicted and real values of D[T + k].

The prediction algorithm of the method is shown in Table 3.

As shown in Table 3, compared with “SW-EMD” section and “STMP-EMD” section, the above method does not need to ensure the same number of IMFs in each decomposition and ensures that there is no “information leakage”, but its algorithm is very computationally intensive.

The specific working principle of STMP-EMD decomposition is shown in Fig. 3:

MTMP-EMD does not require that the number of IMFs decomposed from each M dataset be consistent, but it is computationally intensive. MTMP-EMD uses multiple training and multiple predictions on the decomposed dataset to address “information leakage”. It decomposes the data multiple times, trains a model for each component each time, and superimposes the predictions.

This paper summarizes the advantages and disadvantages of these three methods as follows:

-

(1)

SW-EMD decomposition and STMP-EMD decomposition can solve the problem of “information leakage”, but these models require that the number of IMFs decomposed from each M dataset must be consistent; otherwise, the training will fail, and the trained model cannot be used;

-

(2)

MTMP-EMD does not need to ensure that the number of IMFs decomposed from each M dataset is consistent, but its model structure is the most complex, requiring the most steps and times of training and prediction and the largest amount of computation. For more complex models, the method may have higher equipment and environment requirements.

To address the large computational effort of the first two methods, the number of decompositions can be assumed in advance, and each time, the decomposition is carried out in accordance with the number of decompositions, this means that the model results are not necessarily optimal. Moreover, there is an extreme case: the number of decompositions at a certain time may not reach the set minimum value.

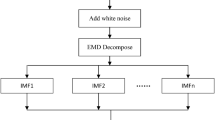

Improving inspections

EMD decomposition can capture patterns and trends on different time scales and reduce the complexity of time series while being able to improve the model prediction accuracy. In this paper, CEEMDAN is studied as an example, which is an improved model derived and innovated from EMD. The significant drawback of the EMD model is the mode mixing problem of the decomposition process when the local signal is an incomplete white noise process or when there are anomalous events in the signal. The CEEMDAN method is a special form of EMD-based mode decomposition technique that obtains the IMFs of each order after adding a set of adaptive white noise of the same size and opposite sign to calculate the overall average, which not only overcomes the problem of incomplete decomposition but also has a reconstruction error close to 0, effectively reducing the computational scale. However, although CEEMDAN denoising can effectively improve the prediction accuracy and reduce the influence of noise, this type of EMD considers future data in the training set when performing empirical decomposition, resulting in “information leakage”.

In this work, CEEMDAN and the three improved CEEMDANs are combined with the BiLSTM model, and CEEMDAN-BiLSTM is established for comparison with STMP-CEEMDAN-BiLSTM, MTMP-CEEMDAN-BiLSTM, SW-CEEMDAN-BiLSTM, and the water quality prediction index pH. The RMSE and MAPE of the results visualize the prediction effect of the model to test the influence of “information leakage” on the prediction accuracy of the model before and after processing. The model results and comparison results are shown in Table 4:

As shown in Table 4, the CEEMDAN-BiLSTM with the “information leakage” problem achieved very high prediction accuracies for the three water quality indicators of pH, KMnO4, and DO, whereas the three STMP-BiLSTM, MTMP-CEEMDAN-BiLSTM, and SW-CEEMDAN-BiLSTM models, which have already dealt with “information leakage”, achieved much lower prediction effects. CEEMDAN-BiLSTM, MTMP-CEEMDAN-BiLSTM, and SW-CEEMDAN-BiLSTM, which have already dealt with the problem of “information leakage”, yield much lower prediction effects. There are two main reasons for analyzing the “information leakage” of mainstream decomposition methods:

-

(1)

When using CEEMDAN to decompose a time series, the whole time series needs to be input into the CEEMDAN model, and future information may be exposed. For example, to consider the information of the entire time series, the signal length used in performing CEEMDAN may contain future time windows, which in turn generates the corresponding subsequence. In this process, if the future data are also included in the input sequence, the generated subsequence containing future information will be incorrectly input into the Bi LSTM model for training and prediction, which will lead to “information leakage”.

-

(2)

In BiLSTM, past information affects the current prediction result, and past information may contain future information. For example, when BiLSTM uses the past time window for prediction, the past time window may contain future information, i.e., the model uses information during training that it cannot actually know during prediction, which affects the generalization ability of the model. Because the model has access to future data during training, the model can perform well during prediction but often produces biased and inaccurate results in real-world applications.

Combined with the test results, the decomposition process with the problem of “information leakage” can improve the prediction accuracy of the model to a certain extent, but the credibility of the model is limited by “information leakage”. The three improvement methods proposed in this paper can effectively address the “information leakage” problem and make the model results more credible.

Compute duration analysis

To further explore the three improved CEEMDANs for solving the “information leakage” problem, this paper also counts the computation time of these three methods and the mainstream CEEMDANs under Python 3.88 and CPU memory. The computation time of each method is shown in Table 5.

In this work, MTMP-CEEMDAN-BiLSTM is found to take a longer computational time, and its multiple training and multiple prediction structure makes the model structure more complex and takes more time, whereas STMP-CEEMDAN-BiLSTM with single training and multiple prediction structures has the shortest computation time. On this basis, this paper improves the model prediction accuracy by combining the improved CEEMDAN with the MSBTCN, attention mechanism and BiLSTM as a way to ensure model credibility while improving the model prediction accuracy.

Models

In this paper, three models with improved methods, TCN, LSTM and attention, are used as prediction models. The TCN is able to learn translational invariance via a CNN and introduces an inflated convolution, which makes it effective in capturing long-range dependencies. LSTM networks are suitable for analyzing long-term trends and patterns in time series data, whereas forget gates allow the network to process long sequences when it selectively retains or forgets previous information, making them more powerful for dealing with long-range dependencies. The attention mechanism pays more attention to important time points while ignoring unimportant parts when dealing with sequences and is suitable for dealing with unimportant events in time series, which is very important for trends and periodicity in time series data. Moreover, the attention mechanism enables the model to adjust the weight of attention according to different parts of the input series, improving the flexibility and adaptability of the model. On the basis of three improved CEEMDANs, this paper combines TCN, LSTM and attention to effectively improve model prediction.

MSBTCN

The structure of the traditional TCN module is shown in Fig. 4. Owing to the use of inflated causal convolution, the traditional TCN can only transport information from the past to the future. Considering the variability and complexity of water quality indicator data changes, the unidirectional transportation TCN obviously cannot meet the comprehensive extraction requirements of water quality indicator features. To avoid losing features as the sensory field increases, this paper captures the bidirectional depth dependence between residues by further using the MSBTCN model, which possesses bidirectional multiscale TCNs based on the BTCN model consisting of forward TCNs and backward TCNs, to classify the residue features in a more comprehensive way.

The MSBTCN is an improved multiscale time convolutional network based on the BTCN model consisting of a forward TCN and a backward TCN, which not only has the advantage that the BTCN can fully capture the bidirectional depth dependency between residues but also effectively solves the problem that the inflated convolutional architecture of the BTCN is prone to losing the hidden layer feature information. The main steps of the MSBTCN include the following steps:

-

(1)

X denotes the water quality data sequence, X={x1,x2…,xL}, and \(\:\overleftarrow{X}\) denotes the inverse sequence of X, \(\:\overleftarrow{X}\)= {xL,xL−1…,xt}. First, the forward and reverse sequences are input into the TCN model to obtain Eq. (1):

$$\hat{y}_{t} = \text{Re} {\text{LU}}\left( {{\text{BatchNorm}}\left( {\overrightarrow {{TCN}} \left( {x_{1} ,x_{2} ,...,x_{t} } \right) \oplus \overleftarrow {{TCN}} \left( {x_{L} ,x_{{L - 1}} ,...,x_{t} } \right)} \right)} \right)$$(1)where \(\:\oplus\:\) denotes the matrix addition operation, \(\:\overrightarrow{TCN}\) and \(\:\overleftarrow{TCN}\) are sequences of forward and reverse TCNs, respectively, ReLU is the activation function, and BatchNorm is a layer of neural network layers. The output result \(\:{\widehat{y}}_{t}\) represents the output at moment t.

-

(2)

The DCov1 one-dimensional convolutional layer is utilized to further optimize the function; thus, the forward and backward TCN models are obtained via two-way depth interaction, and the network architecture is obtained as follows:

$$\:\text{B}\text{T}\text{C}\text{N}={\text{D}\text{C}\text{o}\text{v}}_{1}\left(\overrightarrow{TCN}+\overleftarrow{TCN}\right)$$(2)BTCN denotes a bidirectional TCN. In a bidirectional TCN, \(\:{\widehat{y}}_{q-1}\) is the output of the previous layer. W and b are the weight matrix and bias term of the fully connected layer, so the output \(\:{\widehat{y}}_{q}\) of the residual block of the Qth layer is Eq. (3):

$$\:{\widehat{y}}_{q}=\text{R}\text{e}\text{L}\text{U}\left(f\left(W\times\:{\widehat{y}}_{q-1}+b\right)+{\widehat{y}}_{q-1}\right)$$(3) -

(3)

Since the BTCN uses an inflated convolutional architecture, the sensory field of the network expands as the number of BTCN layers increases, whereas the hidden layer in the middle of the network loses much important feature information. Therefore, in this paper, the MSBTCN model with a bidirectional multiscale TCN is used to utilize the residual features more comprehensively for classification. The result of multiple scales in the BTCN is shown in Eq. (4)

$$\:{\widehat{y}}_{i}=\text{B}\text{T}\text{C}\text{N}\left(Scale=s\right)$$(4)where \(\:{\widehat{y}}_{i}\) represents the output of the BTCN on the s scale. As shown in Fig. 5, the output \(\:\widehat{y}\) of the unidirectional MSTCN with n residuals is as follows:

$$\hat{y} = {\text{Concatenate}}\left( {\hat{y}_{1} ,\hat{y}_{2} , \ldots ,\hat{y}_{n} } \right)$$(5)The improved MSBTCN not only extracts bidirectional multiscale features but also better preserves the key information of the intermediate residual blocks.

BiLSTM

In this paper, bidirectional long short-term memory (BiLSTM) is chosen to model temporal dependencies. BiLSTM is an improvement of the LSTM neural network, consisting of a pre-LSTM and a backward LSTM, which combines forward and backward data features at the current timestep for prediction. Related studies have shown that BiLSTM has significant performance improvement over LSTM in time series prediction. Considering that the change in water quality indicator content in Lake Taihu is a dynamic process, the monitored water quality data are time series data. Therefore, in this paper, the BiLSTM neural network is used to learn bidirectional sequence features from time series data composed of feature information extracted from the MSBTCN to fully exploit the long-term dependent features of limited sample data.

DMAttention

The DMAttention used in this paper is a dependency matrix attention mechanism based on cosine similarity. DMAttention can assign higher weights to feature vectors with higher similarity at arbitrary time intervals, highlighting important moments and enhancing feature representations. For multivariate time series forecasting. The structure of DMAttention is shown in Fig. 6:

As in Fig. 6, the dependency matrix H is constructed first so that \(A \cdot A^{T}\) denotes the inner product operation of the eigenvectors, \(\:{x}_{ij}\) denotes the dependency between moment i and moment j, and the computation is shown in Eq. (6):

The Softmax operation is performed on \(A \cdot A^{T}\) to obtain the weight matrix B, which reflects the dependency between the moments. Perform matrix multiplication of AT and B with transposition to obtain the weighted sum of the features at each moment, multiply the scale factor γ, which varies with iteration, and perform a jump join to obtain the output matrix C, as shown in Eq. (7):

Thus, for dynamic water quality data, DMAttention can highlight important moments and enhance the feature representation to obtain better results than the fully connected attention-based mechanism.

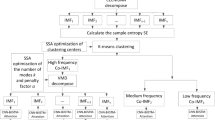

Improved CEEMDAN-MSBTCN-BiLSTM-DMAttention

This study combines three water quality indicators, namely, the hydrogen ion concentration index (pH), dissolved oxygen (DO) and potassium permanganate (KMnO4), obtained from the monitoring station of Taihu Lake in Jiangsu Province. First, in the data processing stage, linear interpolation is used to fill in the missing values and normalize the data to facilitate the subsequent decomposition process. In the time series data decomposition stage, this paper proposes an improved CEEMDAN for mainstream EMD decomposition with the problem of “information leakage” by three methods: multiple training and multiple prediction, single training and multiple prediction, and a sliding window, which are applied to decompose water quality time series indicators. On the basis of the above, this paper combines the MSBTCN, utilizes BiLSTM for prediction and introduces DMAttention to capture bidirectional long-term correlation effectively. Once the decomposition is performed, the resulting IMFs and residual series are used as input features for the deep learning models. In the model evaluation stage, this paper compares and analyzes the four mainstream models, namely, the LSTM, BiLSTM-DMAttention, MSBTCN-BiLSTM-DMAttention, CEEMDAN-MSBTCN-BiLSTM-DMAttention, and three improved CEEMDAN-MSBTCN-BiLSTM-DMAttention models, for comparative analysis. The RMSE and MAPE are selected as model evaluation indices, and an analysis of the degree of optimization of the model evaluation indices reveals that the three improved CEEMDAN-MSBTCN-BiLSTM-DMAttention models proposed in this article have better utility. The flow chart of the specific process is shown in Fig. 7.

Experiment

Dataset

The dataset used in this study was obtained from all Jiangsu Province Lake Tai monitoring stations recorded at four-hour intervals from January 1, 2022, to December 31, 2022, by the Jiangsu Provincial Department of Ecology and Environment on the Chinese government website. hydrogen ion concentration index (pH), dissolved oxygen (DO), and potassium permanganate (KMnO4). There were a total of 11,357 samples, each of which contained pH, DO, and KMnO4. The data at moment t + 1 are used in this paper to predict the data at moment t. Data normalization is performed before model training to avoid the effect of magnitude.

Evaluation metrics and parameter settings

In this work, the root mean square error (RMSE) and MAPE (MAPE), which are commonly used in the fields of regression and prediction to provide effective information in assessing the performance of a model, are used as evaluation metrics. In general, the RMSE is more sensitive to larger errors, whereas the MAPE is more interpretable and eliminates quantiles. In this work, these two evaluation metrics are combined to analyze the model results comprehensively, as shown in Eq. (8)-Eq. (9):

where \(\:{\widehat{y}}_{i}\) represents the predicted value and where \(\:{y}_{i}\) represents the true value. To further visualize and compare the prediction effects of the three improved models, this paper supplements the Spearman rank-order correlation coefficient (SROCC) to contrast with the above metrics. SROCC is capable of handling nonlinear relationships in ratings, as shown in (Eq. (10)):

where \(\:{V}_{i}\) and \(\:{P}_{i}\) denote the ranked positions of \(\:{\widehat{y}}_{i}\) and \(\:{y}_{i}\) in the sequence of predicted and true values. To facilitate readers in understanding all the sliding decomposition window sizes of the models and decomposition methods in this paper, the relevant parameter settings of this paper for the sliding window sizes, the training set test set, the period and the optimizer are shown in Table 6.

Baselines

To better test the effects of the models in this paper, three other models are established in addition to the three improved CEEMDAN methods at the same time.

-

(1)

BiLSTM-DMAttention: Based on the improved CEEMDAN-MSBTCN-BiLSTM-DMAttention in this paper, the CEEMDAN decomposition and MSBTCN model are not added.

-

(2)

MSBTCN-BiLSTM-DMAttention: This is based on (1), with the addition of an improved bidirectional multiscale TCN.

-

(3)

CEEMDAN-MSBTCN-BiLSTM-DMAttention: On the basis of (2), to better observe the enhancement effect of the improved CEEMDAN in this paper, this paper also introduces a model based on the decomposition of the ordinary CEEMDAN method.

Results

Model performance

The final model results of this paper are shown in Table 7, where the BiLSTM-DMAttention and MSBTCN-BiLSTM-DMAttention models perform relatively well on most of the metrics with low RMSE and MAPE values, especially for pH, KMnO4 and DO prediction. This indicates that, compared with LSTM, the incorporation of the improved TCN model and attention mechanism has been effective in predicting water quality. On the other hand, the three improved CEEMDAN methods also yield excellent results in terms of the RMSE and MAPE while solving the “information leakage” problem.

On the basis of the above model results, this paper further calculates the SROCC of the three improved model prediction results, which are shown in Table 8. The results show that the three improved CEEMDAN methods also basically yield better results in terms of the SROCC.

Ablation study

In this paper, the effectiveness of the model is further analyzed by performing an ablation study, and the results are shown in Fig. 8. The comparison reveals that for BiLSTM-DMAttention, for each additional module, there is a significant improvement in the model performance for both DO and KMnO4, although the model performance improvement for pH is not significant. When modeling from BiLSTM-DMAttention, MSBTCN-BiLSTM-DMAttention to the three improved CEEMDAN decomposition methods, MSBTCN-BiLSTM-DMAttention, the reduction in the RMSE is significant, which indicates that the model in this paper is effective; on the other hand, this paper found that MSBTCN-BiLSTM-DMAttention can significantly improve the performance of the model.

Analysis

In this paper, the improved CEEMDAN decomposition method solves the “information leakage” problem and can effectively improve model performance. The results of the three proposed improved methods SW-CEEMDAN, STMP-CEEMDAN and MTMP-CEEMDAN combined with MSBTCN-BiLSTM-DMAttention are 0.499%, 1.939%, and 1.77% and 0.394%, 1.381% and 1.197% higher than the RMSE results of BiLSTM for the three metrics of pH, DO, and KMnO4, respectively, and the RMSE results of BiLSTM for the three metrics of KMnO4. results in 0.394%, 1.381%, and 1.197%, respectively. The accuracy improvements of the three models proposed in this paper are 1.958% and 0.853% in terms of the RMSE and MAPE, respectively.

All three types of EMD methods proposed in this paper can solve the problem of using future values in signal decomposition; however, both SW-EMD decomposition and STMP-EMD need to make an assumption on the number of decompositions of component IMFs, i.e., decompose them according to the number of such assumed decompositions. However, the problem is that the number of decompositions at a certain time may not reach the set minimum value. According to the results of this study, the modeling results of the MTMP-CEEMDAN decomposition method are superior to those of the SW-CEEMDAN and STMP-CEEMDAN decomposition methods.

Conclusion

This paper discusses the problem of “information leakage” in model training via class EMD methods. Taking CEEMDAN as an example, three improved CEEMDAN-MSBTCN-BiLSTM-DMAttention models are proposed to effectively solve the problem of “information leakage” in class EMD. “information leakage” problem. Moreover, to better capture the temporal features, a bidirectional multiscale improved TCN, i.e., the MSBTCN, is used, and DMAttention, an attention mechanism based on the cosine similarity dependency matrix, is introduced. Previously, many scholars have attempted to overcome the problems of incomplete EMD, large reconstruction error, and large computational scale in hybrid approaches that use a combination of empirical decomposition and deep learning. Many scholars have proposed improved EMDs such as VMD and CEEMDAN, but all of them ignore the problem of “information leakage”. Although some scholars have identified the problem of “information leakage” and proposed improvement ideas, they cannot fully explore the advantages and disadvantages of these methods. Therefore, this paper introduces three improvement ideas in a more comprehensive way: SW-EMD decomposition by sliding window decomposition, single training STD-EMD and multiple decomposition prediction on the training set, and MTMP-EMD decomposition of multiple training and multiple prediction. The experimental results show that the model proposed in this paper is able to solve the problem and improve the existing methods, and ultimately, the following conclusions are drawn. (1) Three methods are proposed to improve CEEMDAN, which can effectively solve the problem of “information leakage” that exists in the current models via EMD, which will obtain future data information and features in advance during model training. (2) The proposed improved CEEMDAN-MSBTCN-BiLSTM-DMAttention model can effectively solve the problem of “information leakage” by eliminating the “information leakage”. The DMAttention model, through the ablation study, can prove that its model structure is effective, and at the same time, it can obtain better water quality prediction results, which is suitable for the task of water quality prediction. (3) The three improvement methods proposed in this paper can solve the “information leakage” problem, but the SW-EMD decomposition and STMP-EMD decomposition need to be analyzed for each decomposition of the IMF component, which is the most suitable for the task of water quality prediction. assumptions for each decomposition of IMFs, whereas the MTMP-EMD decomposition does not need assumptions; thus, it can obtain better results, but the computational volume is larger. (4) Compared with the BiLSTM-DMAttention model, the three models proposed in this paper improve the accuracy of the RMSE and MAPE by 1.958% and 0.853%, respectively.

Data availability

Data and code associated with this study have been deposited at https://github.com/riguangliunian/Water-quality-prediction.

References

Rajaee, T. & Boroumand, A. Forecasting of chlorophyll-a concentrations in South San Francisco Bay using five different models. Appl. Ocean Res. 53, 208–217 (2015).

Gardner, E. S. Jr Exponential smoothing: The state of the art. J. Forecast. 4(1), 1–28 (1985).

Harvey, A. C. Forecasting, structural time series models and the Kalman filter. (1990).

De Gooijer, J. G. & Hyndman, R. J. 25 years of time series forecasting. Int. J. Forecast. 22(3), 443–473 (2006).

Bontempi, G., Ben Taieb, S. & Le Borgne, Y. A. Machine learning strategies for time series forecasting. Business Intelligence: Second European Summer School, eBISS 2012, Brussels, Belgium, July 15–21, Tutorial Lectures 2, 2013: 62–77. (2012).

Lim, B. & Zohren, S. Time-series forecasting with deep learning: A survey. Philos. Trans. R. Soc. A. 379(2194), 20200209 (2021).

TEXTO, L. TH Cormen, CE Leiserson, RL Rivest e C. Stein, Introduction toAlgorithms. (2001).

Huang, N. E. et al. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 454(1971), 903–995. (1998).

Hu, Z. et al. A water quality prediction method based on the deep LSTM network considering correlation in smart mariculture. Sensors 19(6), 1420 (2019).

Yuan, L., Ma, Y. & Liu, Y. Ensemble deep learning models for protein secondary structure prediction using bidirectional temporal convolution and bidirectional long short-term memory. Front. Bioeng. Biotechnol. 11, 1051268 (2023).

Li, W. & Jiang, X. Prediction of air pollutant concentrations based on TCN-BiLSTM-DMAttention with STL decomposition. Sci. Rep. 13(1), 4665 (2023).

Kane, M. J. et al. Comparison of ARIMA and Random Forest time series models for prediction of avian influenza H5N1 outbreaks. BMC Bioinform. 15(1), 1–9 (2014).

Masini, R. P., Medeiros, M. C. & Mendes, E. F. Machine learning advances for time series forecasting. J. Econ. Surv. 37(1), 76–111 (2023).

Uddin, M. G. et al. Performance analysis of the water quality index model for predicting water state using machine learning techniques. Process Saf. Environ. Prot. 169, 808–828 (2023).

Zhang, G. P. Time series forecasting using a hybrid ARIMA and neural network model. Neurocomputing 50, 159–175 (2003).

Bengio, Y., Simard, P. & Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 5(2), 157–166 (1994).

Baek, S. S., Pyo, J. & Chun, J. A. Prediction of water level and water quality using a CNN-LSTM combined deep learning approach. Water 12(12), 3399 (2020).

Hong, W. C. Chaotic particle swarm optimization algorithm in a support vector regression electric load forecasting model. Energy. Conv. Manag. 50(1), 105–117 (2009).

Li, W. et al. Prediction of dissolved oxygen in a fishery pond based on gated recurrent unit (GRU). Inform. Process. Agric. 8(1), 185–193 (2021).

Hewage, P. et al. Temporal convolutional neural (TCN) network for an effective weather forecasting using time-series data from the local weather station. Soft. Comput. 24, 16453–16482 (2020).

Bi, J. et al. Multi-indicator water quality prediction with attention-assisted bidirectional LSTM and encoder-decoder. Inf. Sci. 625, 65–80 (2023).

Yang, Y. et al. A study on water quality prediction by a hybrid CNN-LSTM model with attention mechanism. Environ. Sci. Pollut. Res. 28(39), 55129–55139 (2021).

Qiu, X. et al. Empirical mode decomposition based ensemble deep learning for load demand time series forecasting. Appl. Soft Comput. 54, 246–255 (2017).

Humphrey, W., Dalke, A. & Schulten, K. VMD: Visual molecular dynamics. J. Mol. Graph. 14(1), 33–38 (1996).

Torres, M. E. et al. A complete ensemble empirical mode decomposition with adaptive noise. In 2011 IEEE international conference on acoustics, speech and signal processing (ICASSP) 4144–4147 (IEEE, 2011).

Qian, Z. et al. A review and discussion of decomposition-based hybrid models for wind energy forecasting applications. Appl. Energy. 235, 939–953 (2019).

Weng, X., Lin, X. & Zhao, S. Stock price prediction model based on empirical mode decomposition and investor sentiment using long short-term memory networks. Computer Applications 42(S2), (2022).

Furlaneto, D. C. et al. Bias effect on predicting market trends with EMD. Expert Syst. Appl. 82, 19–26 (2017).

Gao, R. et al. Walk-forward empirical wavelet random vector functional link for time series forecasting. Appl. Soft Comput. 108, 107450 (2021).

Wang, Z. et al. Monthly ship price forecasting based on multivariate variational mode decomposition. Eng. Appl. Artif. Intell. 125, 106698 (2023).

Agnihotri, J. et al. Higher frozen soil permeability represented in a hydrological model improves spring streamflow prediction from river basin to continental scales. Water Resour. Res. 59(4): e2022WR033075. (2023).

Khalil, M. A. et al. Mapping a hazardous abandoned gypsum mine using self-potential, electrical resistivity tomography, and frequency domain electromagnetic methods. J. Appl. Geophys. 205, 104771 (2022).

Khoei, A. R. et al. An X–FEM technique for numerical simulation of variable-density flow in fractured porous media. MethodsX 10, 102137 (2023).

Zare, N. & Maknoon, R. Urban flood resilience assessment & stormwater management (case study: District 6 of Tehran). Int. J. Disaster Risk Reduct. 102, 104280 (2024).

Larijani, A. & Dehghani, F. An efficient optimization approach for designing machine models based on combined algorithm. FinTech 3(1), 40–54 (2023).

Funding

The research is supported by Hubei Province Emergency Capacity and Safety Production Special Fund (SJZX20230906), and by Humanities and Social Science Foundation of Ministry of Education of China (23YJC910004), and by Open-funding Project of State Key Laboratory of Intelligent Manufacturing Equipment and Technology (IMETKF2023027).

Author information

Authors and Affiliations

Contributions

Conceptualization, X.Y.; methodology, J.L.; formal analysis, X.J.; data curation, X.Y.; supervision, X.J.; writing—original draft preparation, J.L.; writing—review and editing, X.Y.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Yang, X., Li, J. & Jiang, X. Research on information leakage in time series prediction based on empirical mode decomposition. Sci Rep 14, 28362 (2024). https://doi.org/10.1038/s41598-024-80018-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-80018-9