Abstract

Cervical cancer is one of the deadliest cancers and poses a significant threat to women’s health. Early detection and treatment are the most common means of preventing it. Using pathological image analysis to automatically interpret cervical cells on pathological slides is a prominent research area in digital medicine. Under The Bethesda System, a positive cervical cytology interpretation requires further classification of the precancerous lesion. However, the clinical definitions of the different lesion categories are complex and often have fuzzy boundaries. In addition, pathologists can derive different judgment criteria from The Bethesda System, which leads to potential confusion during data labeling. The resulting noisy labels are a great challenge for supervised learning. To address this problem, we propose a cervical cell image classification network based on label credibility correction. Firstly, a contrastive learning network extracts discriminative features from cell images so that intra-class sample features become more similar. Subsequently, these features are fed into an unsupervised clustering method, which yields unsupervised class labels. The unsupervised labels are then matched to the true labels to separate confusable samples from typical samples. Label credibility analysis is carried out by comparing the similarity between the cluster samples and the statistical feature centers of each class, and the labels are grouped accordingly. Finally, a multi-class cervical cell image network is trained using the synergistic grouping method. To enhance the stability of the classification model, momentum is incorporated into the synergistic grouping loss. Experimental validation is conducted on a dataset of approximately 60,000 cells from multiple hospitals. The method achieves an accuracy of 0.9241 on the 2-class task and 0.8598 on the 5-class task, outperforming existing classification networks for cervical cell images.

Introduction

Cervical cancer is one of the common malignant tumors that pose a significant threat to women’s health. It is particularly severe in underdeveloped and developing countries1. There has been a recent trend of cervical cancer occurring at a younger age. Early detection and treatment can effectively reduce the incidence and mortality of cervical cancer2. Traditional cervical cancer screening involves sample collection, staining, and slide preparation, followed by direct observation of cell morphology under a microscope by pathologists to reach an interpretation3,4. Reviewing cervical cells is challenging and requires experienced pathologists for accurate diagnosis. However, due to variations in the proficiency of pathologists and severe disparities in the allocation of pathology resources, there are still many obstacles in cervical cancer screening, especially in resource-limited developing countries. Many patients cannot be screened in time at the precancerous stage or are misdiagnosed, which makes later-stage treatment more difficult5. Therefore, using computer-aided diagnosis techniques to improve the accuracy and efficiency of pathologists’ review holds significant research value6,7.

Pathological image analysis technology is a hot topic in the field of digital medicine. Computer-aided analysis models have become important tools for pathologists to diagnose diseases; they help reduce the subjectivity of doctors’ interpretation8 and improve diagnostic efficiency. Pathological image analysis technology has shown excellent performance in many cell analysis tasks, such as cell detection9, cell segmentation, nuclei segmentation10 and classification11,12. In recent years, more and more researchers have applied machine learning13,14 and deep learning techniques to cervical cell image analysis. Deep learning networks are initialized with a random state and optimize their parameters through gradient-based learning with back-propagation, so that effective target features are extracted15. Cancer lesion and cancer cell detection are the main steps in pathological image analysis16,17. Researchers have used YOLO18, faster R-CNN19, dense-cascade R-CNN20, and improved MACD R-CNN21 for cervical cell lesion detection, achieving superior performance. In order to learn more effective cell structure features, Chen et al.22 decomposed detection into two sub-tasks and constructed TDCC-Net to improve model performance. Wei et al.23 integrated image-level classification into the cell detection network to filter out false positive cells. In addition, cell and nucleus segmentation can be used for cell/nucleus morphological feature calculation and cell counting, which are important steps in cervical cell pathological image analysis24,25.

End-to-end learning of classification tasks using deep learning methods is also a major research field, which can improve the learning efficiency of models14,26. Deep learning networks have strict requirements for data volume, and a small amount of training data can easily lead to overfitting. Unfortunately, medical image data is often difficult to obtain and annotate. Transfer learning methods can mitigate the problem of insufficient data27. The current mainstream method is to pre-train on the ImageNet dataset and then fine-tune on pathological image datasets28,29. Yaman et al.30 used feature pyramid methods to extract deep cell features for classification. Abukhalil et al.31 utilized a convolutional neural network (CNN) to extract feature vectors, and then used a recursive neural network for classification to determine cervical lesions. In addition, the interpretation of whole slide images (WSIs) is the main objective of pathological image analysis, which can provide valuable references for pathologists to diagnose. The pixel count of a WSI is too large for current computer technology to process a whole slide image at once. Therefore, WSIs are usually divided into image patches, and these patches are used as data samples for classification32. Alternatively, positive cells are detected in the image patches and used to represent the WSI for classification33. Cao et al.34 proposed attention-based feature pyramid networks for detecting lesion cells in image patches and ultimately achieving WSI classification. Wei et al.23 designed a novel inline connection network, constructed a lightweight model called You Only Look Cytopathology Once (YOLCO) with additional spatial information supervision, and utilized a transformer to classify multi-scale and multi-task features.

The above-mentioned classification methods did not consider the issue of confusable samples. Pathological cell classification is a major task in cervical cytology diagnosis35. According to The Bethesda System diagnostic criteria36, there are no clear classification boundaries among cervical cell categories. Even experienced doctors may reach different interpretations, which is allowed in clinical practice. However, in computer-assisted analysis tasks, supervised classification based on labeled samples is highly sensitive to samples with divergent interpretations. Different labels assigned to similar samples can greatly affect the performance of the classification model. Lin et al.37 used a synergistic grouping method to mitigate the impact of noisy samples on the network, but they did not specifically address the treatment of confusable samples. Unsupervised learning does not require samples to have fixed labels and can be used to remove the influence of confusable samples on the classification network. Therefore, this paper proposes a method for separating confusable samples based on unsupervised learning, which is applied to cervical cell classification.

The main contributions of this paper are as follows.

a. Utilize contrastive learning for discriminative feature extraction from cervical cell pathological images. Unlike supervised learning, contrastive learning helps ensure that samples from the same class are more similar in feature space, while samples from different classes are positioned farther apart. This approach minimizes the interference from noisy samples and yields more representative features.

b. Propose a label credibility correction method for separating cell samples. This module employs clustering methods to obtain unsupervised learning labels for samples, and maps them back to the true labels to separate confusable samples, thereby constructing a high-quality labeled dataset. This process promotes the stability of the subsequent supervised learning network.

c. Introduce a weight-learnable loss with momentum. The momentum encoder takes into account the direction and magnitude of the gradient transformation from the previous iteration, applying it to learnable loss weights. Compared to traditional loss calculations, this approach can enhance the performance and convergence speed of the classification network.

Cervical cytological pathological images analysis

TBS for reporting cervical cytology

The Bethesda System (TBS) is a commonly used standard for cervical cytology screening36. It was established in 1988 by 50 cytopathologists in Bethesda, Maryland, USA. They abolished the Papanicolaou classification system that had been used since 1943 and began adopting descriptive diagnostic reporting. TBS categorizes cervical precancerous lesions into four descriptive interpretations: Low-grade squamous intraepithelial lesion (LSIL), Atypical squamous cells of undetermined significance (ASC-US), High-grade squamous intraepithelial lesion (HSIL), and Atypical squamous cells-cannot exclude HSIL (ASC-H).

LSIL cells are characterized by morphological features including enlarged cell size, abundant and mature cytoplasm, clear boundaries, and nuclei approximately three times larger than those of normal intermediate cells. The nuclear-cytoplasmic ratio is mildly increased, and the distribution of nuclear chromatin is uniform. LSIL cells often exhibit bi-nucleation or multi-nucleation. Figure 1b shows an LSIL cell. The ASC-US interpretation applies to cellular changes suggesting LSIL. The cell nuclei in ASC-US are approximately 2.5 to 3 times larger than the nuclei of normal intermediate squamous cells. There is a mild increase in the nuclear-cytoplasmic ratio, and the nuclei stain deeply with a slightly irregular distribution of chromatin. Figure 1c illustrates an example of ASC-US cells. HSIL cells are smaller than LSIL cells and have lower cytoplasmic maturity. The size of HSIL cells varies, with a significantly increased nuclear-cytoplasmic ratio. The nuclei stain darker with a uniform distribution of chromatin. The nuclear membrane exhibits irregular contours with distinct notches. Figure 1d depicts an example of HSIL cells. The ASC-H interpretation applies to cellular changes suggesting HSIL. The cells usually appear individually or in small clusters of fewer than 10 cells. The nuclei of ASC-H cells are approximately 1.5 to 2.5 times larger than those of normal cells, with a nuclear-cytoplasmic ratio approaching that of HSIL. Figure 1e shows an example of ASC-H cells. Moreover, atrophic cells, inflammatory cells and squamous metaplasia belong to the category of Negative for Intraepithelial Lesion or Malignancy (NILM) and are selectively reported.

According to the TBS description, there is no clear boundary between the interpretations of ASC-US and LSIL, and pathologists can easily confuse these two categories during diagnosis. Such disagreement is allowed within a certain range of interpretation. Similarly, there is ambiguity between ASC-H and HSIL. However, for computer-assisted analysis, this confusion introduces intra-class noisy labels, which are a highly destabilizing factor, especially for supervised classification. The robustness of the classification model is therefore greatly challenged.

Synergistic grouping loss for cervical cell images classification

To address the issue of confusion between different categories of cervical cells, researchers have proposed the synergistic grouping loss (SGL)37. SGL is specifically designed for multi-class tasks with potential confusion, especially when the distances between different categories of samples in cervical cell interpretation are anisotropic. For example, in Fig. 1, the cells within \(\{\)ASC-US, LSIL\(\}\) resemble each other, as do the cells within \(\{\)ASC-H, HSIL\(\}\). According to TBS, an ASC-US interpretation suggests the risk of LSIL, while an ASC-H interpretation suggests the risk of HSIL. Therefore, grouping ASC-US with LSIL and ASC-H with HSIL is clinically acceptable. Figure 2 illustrates the schematic diagram of cervical cell categories. The SGL method decomposes the multi-class problem into multiple sub-classification tasks. The bottom layer is the 5-class task defined by TBS. The middle layer groups ASC-US and LSIL as suggesting low-grade lesion (SLGL) and ASC-H and HSIL as suggesting high-grade lesion (SHGL), thus constructing a 3-class task. ASC-US, LSIL, ASC-H and HSIL all belong to abnormal cells in cervical cell diagnosis and are contrasted against normal cells, forming a 2-class task.

SGL calculates three loss functions in one network: \(\hbox {Loss}_2\), \(\hbox {Loss}_3\) and \(\hbox {Loss}_5\). In the 3-class task, no penalty is imposed if ASC-H is classified as HSIL, but a penalty is applied if ASC-H is classified as LSIL. Classifying ASC-H as NILM is incorrect in all three tasks and therefore incurs a still higher penalty. SGL is defined as the sum of \(\hbox {Loss}_2\), \(\hbox {Loss}_3\) and \(\hbox {Loss}_5\). Through these three synergistic tasks, the tolerance of cervical cell interpretation to inter-class confusable samples can be improved, thus optimizing the network performance.
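The graded penalty described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that each task uses a cross-entropy loss and that the 3-class and 2-class probabilities are obtained by summing the 5-class Softmax outputs within each group; the original SGL implementation may instead use separate outputs per task. The function names are hypothetical.

```python
import math

# Five TBS classes and their grouping into the 3-class and 2-class tasks.
CLASSES_5 = ["NILM", "ASC-US", "LSIL", "ASC-H", "HSIL"]
GROUP_3 = {"NILM": "NILM", "ASC-US": "SLGL", "LSIL": "SLGL",
           "ASC-H": "SHGL", "HSIL": "SHGL"}
GROUP_2 = {c: ("normal" if c == "NILM" else "abnormal") for c in CLASSES_5}


def group_probability(probs5, grouping, true_class):
    """Probability mass assigned to the group that contains the true class.

    Assumption: group probabilities are sums of 5-class probabilities.
    """
    target = grouping[true_class]
    return sum(p for c, p in zip(CLASSES_5, probs5) if grouping[c] == target)


def synergistic_grouping_loss(probs5, true_class):
    """Unweighted sum Loss_2 + Loss_3 + Loss_5 for one sample."""
    loss5 = -math.log(probs5[CLASSES_5.index(true_class)])
    loss3 = -math.log(group_probability(probs5, GROUP_3, true_class))
    loss2 = -math.log(group_probability(probs5, GROUP_2, true_class))
    return loss2 + loss3 + loss5


# An ASC-H sample predicted mostly as HSIL, LSIL or NILM, respectively.
toward_hsil = [0.02, 0.02, 0.02, 0.04, 0.90]
toward_lsil = [0.02, 0.02, 0.90, 0.04, 0.02]
toward_nilm = [0.90, 0.02, 0.02, 0.04, 0.02]
for name, probs in [("HSIL", toward_hsil), ("LSIL", toward_lsil), ("NILM", toward_nilm)]:
    print(name, round(synergistic_grouping_loss(probs, "ASC-H"), 3))
```

Under these assumptions the total loss grows as the prediction moves from the same group (HSIL), to a different abnormal group (LSIL), to the normal class (NILM), which mirrors the tolerance that SGL is meant to provide.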

Cervical cell images classification method

Classification framework

In this study, we propose a cell images classification network based on label credibility correction (LCC-Net). Our method divides the data into typical samples, confusable samples and noisy samples. Figure 3 shows the flowchart of proposed method. Throughout the classification process, the focus of this study is on confusable samples.

Firstly, we extract discriminative image features using self-supervised learning after image augmentation. Then, the features extracted by Encoder1 and Encoder2 are concatenated as the input of the clustering method. The resulting feature clusters are mapped to the true labels of the samples to obtain typical samples and confusable samples. Finally, we train the classifier on the separated samples with SGL. Based on SGL, we input the features of typical samples and confusable samples into a multilayer perceptron (MLP) and use Softmax to obtain the classification results. We calculate the 2-class, 3-class and 5-class task losses separately and combine them to obtain the final classification loss.

Discriminative features extraction based on self-supervised learning

Self-supervised learning does not need manually annotated category labels; it directly uses the data itself as supervision information to learn sample representations. Contrastive learning is a discriminative learning method primarily used for self-supervised tasks. Its core idea is to pull the representations of similar samples closer together and push those of dissimilar samples farther apart. The feature representations produced by contrastive learning are therefore better suited for clustering. Since contrastive learning does not use sample labels, an image augmentation task is needed to generate positive and negative samples.

We perform data augmentation on several aspects, namely color channel, texture distribution and contrast, to generate positive sample pairs \(\langle {{{\varvec{x}}}, {{\varvec{x}}}{'}} \rangle\), where \({{\varvec{x}}}\) represents the original samples and \({{\varvec{x}}}{'}\) represents the corresponding positive sample group. It is worth mentioning that we did not use geometric stretching, such as affine transformations, in order to minimize morphological changes of the cells. We use individual discrimination to generate negative samples, meaning that for any given image in the dataset, all other images except its own augmentations are considered negative samples. Subsequently, we use CNNs as the feature encoders (Encoder1 and Encoder2) to extract features \({{\varvec{f}}}\) and \({{\varvec{f}}}{'}\) from the two sets of images. Then, the features \(\langle {{{\varvec{f}}}, {{\varvec{f}}}{'}} \rangle\) are separately input into an MLP to further obtain the classification features of the images. A cosine similarity function, Sim(\(\cdot\)), expresses the similarity between the features of two samples. The contrastive learning loss, \(\hbox {Loss}_{c}\), adopts the commonly used InfoNCE loss, which in its standard form is defined as follows,

\[\hbox {Loss}_{c} = -\log \frac{\exp \left( \hbox {Sim}(f_{x}, f_{x^{+}})/\tau \right) }{\exp \left( \hbox {Sim}(f_{x}, f_{x^{+}})/\tau \right) + \sum _{x^{-}} \exp \left( \hbox {Sim}(f_{x}, f_{x^{-}})/\tau \right) }\]

where \(f_{x}\) denotes the feature of sample \(x\), \(x^{+} \in x'\) represents the positive samples, \(x^{-}\) represents the negative samples, and \(\tau\) is the temperature coefficient.
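As a minimal sketch of this loss, the snippet below evaluates the standard InfoNCE form for a single anchor feature with one positive and a list of negatives, using cosine similarity and a temperature \(\tau\). The function names and the toy feature vectors are assumptions made for illustration; in practice the loss would be computed over mini-batches of encoder outputs.

```python
import math


def cosine_sim(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, tau=0.05):
    """Standard InfoNCE loss for one anchor, one positive and several negatives."""
    pos = math.exp(cosine_sim(anchor, positive) / tau)
    neg = sum(math.exp(cosine_sim(anchor, n) / tau) for n in negatives)
    return -math.log(pos / (pos + neg))


# Toy 2-D features: the loss is small when the positive is aligned with the
# anchor and large when a negative is the closest feature instead.
anchor = [1.0, 0.0]
print(info_nce(anchor, [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]]))
print(info_nce(anchor, [0.0, 1.0], [[0.9, 0.1]]))
```

With a small temperature such as 0.05, differences in cosine similarity are amplified, so a well-aligned positive drives the loss close to zero.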

Cervical cell samples separation with label credibility correction (LCC)

Due to the fuzzy boundaries between different categories of cervical cells, the diagnostic criteria of pathologists cannot be completely unified, so some noisy labels are inevitably present in the sample set. Unsupervised learning methods are well suited to partitioning similar data into the same cluster. In this section, we separate the samples into three groups {typical, confusable, noisy} with k-means. Figure 4 illustrates an example of the sample separation process with k=5, where k is the number of categories.

In the feature extraction module, Encoder1 and Encoder2 respectively output the features \(\langle {{{\varvec{f}}}, {{\varvec{f}}}{'}} \rangle\) of the positive sample pairs \(\langle {{{\varvec{x}}}, {{\varvec{x}}}{'}} \rangle\), where \({{\varvec{f}}}\) and \({{\varvec{f}}}{'}\) are CNN features. We then concatenate \({{\varvec{f}}}\) and \({{\varvec{f}}}{'}\) as the feature of sample \({{\varvec{x}}}\), resulting in a feature set \(\{f_{x(1)}, f_{x(2)}, f_{x(3)},..., f_{x(n-1)}, f_{x(n)}\}\), where n is the number of samples. k-means is applied to obtain unsupervised labels for the samples, {[\(f_{x(1)},\mathrm{ulabel_{\textit{l}}}\)], [\(f_{x(2)},\mathrm{ulabel_{\textit{l}}}\)], [\(f_{x(3)},\mathrm{ulabel_{\textit{l}}}\)], ... , [\(f_{x(n-1)},\mathrm{ulabel_{\textit{l}}}\)], [\(f_{x(n)},\mathrm{ulabel_{\textit{l}}}\)]}, \(l \in \{0,1,2,...,k-1\}\), where k is the number of clusters. Additionally, each sample has a true label annotated by a pathologist. Based on the true labels, we manually selected 1000 typical samples from each category to pre-build the feature centers \(\{f_{t(0)}, f_{t(1)}, f_{t(2)},..., f_{t(k-2)}, f_{t(k-1)}\}\) of the categories. Then, the distances between the unsupervised cluster centers \(\{f_{u(0)}, f_{u(1)}, f_{u(2)}, ... , f_{u(k-2)}, f_{u(k-1)}\}\) and \(\{f_{t(0)}, f_{t(1)}, f_{t(2)}, ... , f_{t(k-2)}, f_{t(k-1)}\}\) are calculated, which allows us to map each unsupervised cluster to its corresponding true class. For example, if the distance D(\(f_{u(0)}\), \(f_{t(1)}\)) between \(f_{u(0)}\) and \(f_{t(1)}\) is smaller than every distance D(\(f_{u(0)}\), \(f_{t(i)}\)), i\(\in\) {0, 1, 2, ..., k-1} and i \(\ne\) 1, the unsupervised label ulabel=0 is mapped to tlabel=1. Samples with ulabel=0 and tlabel=1 are then considered typical samples, whereas samples with ulabel=0 and tlabel \(\ne\) 1 are considered confusable samples. Therefore, by using k-means to separate confusable data, we obtain a sample set with more similar intra-class features and more credible labels.
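The mapping and separation steps can be sketched as follows. The sketch assumes that D is the Euclidean distance (the distance measure is not specified here) and that each cluster is mapped independently to its nearest class center, so two clusters could in principle map to the same class. The names `map_clusters` and `separate` are hypothetical.

```python
import math


def euclidean(u, v):
    """Assumed distance D between two feature centers."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def map_clusters(cluster_centers, class_centers):
    """Map each unsupervised label to the true label with the nearest center.

    cluster_centers: {ulabel: f_u}, class_centers: {tlabel: f_t}.
    """
    return {
        ulabel: min(class_centers, key=lambda t: euclidean(f_u, class_centers[t]))
        for ulabel, f_u in cluster_centers.items()
    }


def separate(samples, mapping):
    """Split sample indices into typical and confusable sets.

    samples: list of (ulabel, tlabel) pairs, one per sample.
    """
    typical, confusable = [], []
    for i, (ulabel, tlabel) in enumerate(samples):
        (typical if mapping[ulabel] == tlabel else confusable).append(i)
    return typical, confusable


# Toy example with k=2: cluster 0 lies next to the center of true class 1.
cluster_centers = {0: [0.9, 1.1], 1: [5.0, 5.2]}
class_centers = {0: [5.0, 5.0], 1: [1.0, 1.0]}
mapping = map_clusters(cluster_centers, class_centers)
print(mapping)
print(separate([(0, 1), (0, 0), (1, 0)], mapping))
```

In the toy example, ulabel=0 is mapped to tlabel=1, so a sample with ulabel=0 and tlabel=1 is typical, while a sample with ulabel=0 and tlabel=0 is confusable.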

The private dataset in this paper focuses on the 5-class task of cervical cell precancerous lesions. To further mitigate the impact of confusable samples, we introduce SGL for cervical cell image classification. In SGL, whether a sample is confusable is not absolute. For example, in the 3-class task, tlabel = {HSIL, ASC-H} belong to the same class. Assume that ulabel = 1 is mapped to tlabel = HSIL, ulabel = 2 is mapped to tlabel = ASC-H, and ulabel = 0 is mapped to tlabel = NILM. If an HSIL sample is then clustered into ulabel = 2, it is considered a confusable sample in the 5-class task, but a typical sample in the 3-class and 2-class tasks. In other words, whether a sample is confusable depends on the classification group. Similarly, if an HSIL sample is clustered into ulabel = 0, which is mapped to tlabel = NILM, it is incorrect in the 2-class, 3-class and 5-class tasks and is therefore considered a noisy sample. At this point, we have obtained the grouped labels of the cervical cell samples. Typical samples and confusable samples are fed into the MLP for learning, and the loss is computed according to the corresponding group. Noisy samples are not involved in the subsequent classification training.
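The group-dependent rule above can be written down as a small sketch. It takes the TBS class that a sample's cluster was mapped to and the sample's true label, and reports the status of the sample in each task. The handling of cases not spelled out in the text (for example an HSIL sample falling into an LSIL cluster, treated here as confusable in the 5-class and 3-class tasks and typical in the 2-class task) is an assumption that extends the same logic; the function name is hypothetical.

```python
GROUP_3 = {"NILM": "NILM", "ASC-US": "SLGL", "LSIL": "SLGL",
           "ASC-H": "SHGL", "HSIL": "SHGL"}
GROUP_2 = {"NILM": "normal", "ASC-US": "abnormal", "LSIL": "abnormal",
           "ASC-H": "abnormal", "HSIL": "abnormal"}


def credibility(mapped_label, true_label):
    """Status of one sample in the 5-class, 3-class and 2-class tasks.

    mapped_label: TBS class assigned to the sample's k-means cluster.
    true_label:   TBS class annotated by the pathologist.
    """
    agree2 = GROUP_2[mapped_label] == GROUP_2[true_label]
    if not agree2:
        # Disagreement even at the normal/abnormal level: excluded from training.
        return {5: "noisy", 3: "noisy", 2: "noisy"}
    agree3 = GROUP_3[mapped_label] == GROUP_3[true_label]
    agree5 = mapped_label == true_label
    return {
        5: "typical" if agree5 else "confusable",
        3: "typical" if agree3 else "confusable",
        2: "typical",
    }


print(credibility("ASC-H", "HSIL"))  # HSIL sample in the ASC-H cluster
print(credibility("NILM", "HSIL"))   # HSIL sample in the NILM cluster
```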

Weighted synergistic grouping loss for images classification (WSGL)

SGL is the sum of multiple classification task losses. In this paper, we construct three classification tasks: 2-class, 3-class and 5-class. The SGL, L = \(\hbox {loss}_2\) + \(\hbox {loss}_3\) + \(\hbox {loss}_5\), improves the tolerance to confusable samples and ultimately achieves multi-class classification of cell images.

For a multi-task synergistic method, simply adding up these losses does not reflect the contribution of each task. The performance of the classifier strongly depends on the weight of each task, and manually tuning these weights is costly and difficult. Therefore, we introduce a learnable weight for each loss,

\[L = \alpha \,\hbox {loss}_2 + \beta \,\hbox {loss}_3 + \gamma \,\hbox {loss}_5\]

in which \(\alpha\), \(\beta\) and \(\gamma\) are learned independently during network training. We use homoscedastic uncertainty38 to learn the loss weights,

with observation noise scalars \(\sigma _{i}, i \in \{1,2,3\}\), and \(\alpha = \frac{1}{\sigma _1^2}, \beta = \frac{1}{\sigma _2^2}, \gamma = \frac{1}{\sigma _3^2}\). Moreover, we initialize the loss weights as \(\alpha\)=\(\beta\)=\(\gamma\)=1, and the sum of the three weights is kept equal to 3 in every training iteration. The formulas are shown as follows,

in which r is a ratio coefficient and e represents the iteration number.
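A minimal sketch of the stated constraints is given below. It only covers what is described in the text: each weight is the inverse squared noise scalar, the weights start at 1, and their sum is kept at 3. Rescaling the raw weights to enforce the sum is an assumption, and the role of the ratio coefficient r and the iteration number e is not modelled here.

```python
def loss_weights(sigmas):
    """Return (alpha, beta, gamma) from the noise scalars (sigma_1, sigma_2, sigma_3).

    Raw weights are 1 / sigma_i^2; they are rescaled (an assumed normalisation)
    so that alpha + beta + gamma == 3.
    """
    raw = [1.0 / (s * s) for s in sigmas]
    scale = 3.0 / sum(raw)
    return [w * scale for w in raw]


def weighted_loss(losses, weights):
    """alpha * loss_2 + beta * loss_3 + gamma * loss_5."""
    return sum(w * l for w, l in zip(weights, losses))


# With sigma_1 = sigma_2 = sigma_3 = 1 the weights equal their initial value 1.
print(loss_weights([1.0, 1.0, 1.0]))
# A task with smaller observation noise receives a larger weight.
weights = loss_weights([0.5, 1.0, 2.0])
print(weights, sum(weights))
print(weighted_loss([0.2, 0.4, 0.9], weights))
```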

During the experiments, we observed that simultaneous changes of the network parameters and the loss weights have a significant impact on the convergence speed of the model. The deviations of the network parameters and loss weights are larger when the training set contains confusable samples. It is therefore desirable to limit the magnitude of weight changes during training. Based on this consideration, we propose an improved approach that uses momentum39 to control the magnitude of weight changes. In this approach, the loss weights of each iteration are computed by combining the result of the current iteration with that of the previous iteration, scaled by momentum coefficients,

where m represents the momentum coefficient, which decreases as the iteration number increases. This means that the rate of change of the weights \(\alpha\), \(\beta\) and \(\gamma\) becomes smaller as training proceeds. Momentum updates are commonly used to correct the direction and magnitude of the gradient during model training. This study investigates their application to correcting the magnitude of weight changes in a multi-task loss.
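One way to read this description is sketched below. It assumes that the new weights are a convex combination of the previous weights and the freshly computed ones, with the momentum coefficient m applied to the current result so that a decreasing m shrinks the change per iteration. Both this form and the decay schedule (`m0`, `decay`) are hypothetical choices for illustration, not the exact update used in this work.

```python
def momentum_coefficient(epoch, m0=0.9, decay=0.05):
    """Hypothetical schedule: m decreases as the iteration number grows."""
    return m0 / (1.0 + decay * epoch)


def momentum_update(previous, current, m):
    """Blend previous and current loss weights (assumed convex combination).

    A smaller m keeps the weights closer to their previous values.
    """
    return [(1.0 - m) * p + m * c for p, c in zip(previous, current)]


weights = [1.0, 1.0, 1.0]          # alpha, beta, gamma at initialisation
proposed = [1.5, 1.0, 0.5]         # weights computed in the current iteration
for epoch in (0, 10, 50):
    m = momentum_coefficient(epoch)
    print(epoch, round(m, 3), momentum_update(weights, proposed, m))
```

A convenient property of this assumed form is that if both weight vectors sum to 3, the blended weights also sum to 3.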

Training strategy and ethic

Unlike traditional cell classification frameworks, the training process of our method is divided into three stages, corresponding to the three innovations discussed in the methods section. In the first stage, the traditional supervised learning network is replaced by contrastive learning to extract more representative sample features. Encoder 1 and Encoder 2 share the same network structure but do not share parameters. After the contrastive learning network is trained, its parameters are frozen and the encoders are used as feature extractors. In the second stage, clustering methods are used to obtain a high-quality labeled dataset for subsequent supervised learning. In the third stage, a supervised classification network based on synergistic grouping is constructed. In this stage, we introduce learnable weights to balance each group’s contribution to the loss. Additionally, momentum encoders are employed to accelerate network learning. The testing process differs from the training process in that it does not require clustering to optimize the dataset, and therefore consists of two stages. Firstly, features are extracted using Encoder 1 and Encoder 2. Secondly, the concatenated features from the two encoders are input into the classifier for the final prediction. Figure 5 shows the flowchart of the test process.

In addition, MobileNetv3 was used as the backbone network of the contrastive learning encoders, and InfoNCE was used as the contrastive loss. The unsupervised clustering method is k-means, and we manually select 1,000 typical samples per category to pre-build the feature center of each category, which is also used as the initial point of k-means. Five pathologists jointly selected cell images from the labeled dataset, and a cell image was included in the manually selected typical samples only when all pathologists agreed on its label. Moreover, we generate 5 groups of positive samples through rotation by 90 degrees, vertical flipping, channel transformation (RGB2BGR), random erasing with \(8\times 8\) regions and contrast stretching. \(\tau\) is set to 0.05 in the InfoNCE loss function.
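The five augmentations can be sketched on a toy image stored as nested lists of RGB tuples. This is an illustration only: the erased region is filled with zeros and contrast stretching is a global min-max rescaling to [0, 255], both of which are assumptions, and a real pipeline would operate on image tensors.

```python
import random


def rotate90(img):
    """Rotate an H x W image (rows of RGB tuples) by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def vertical_flip(img):
    """Flip the image top to bottom."""
    return [list(row) for row in img[::-1]]


def rgb2bgr(img):
    """Swap the red and blue channels."""
    return [[px[::-1] for px in row] for row in img]


def random_erase(img, size=8, rng=random):
    """Set one randomly placed size x size region to black (assumed fill value)."""
    h, w = len(img), len(img[0])
    top, left = rng.randrange(h - size + 1), rng.randrange(w - size + 1)
    out = [list(row) for row in img]
    for y in range(top, top + size):
        for x in range(left, left + size):
            out[y][x] = (0, 0, 0)
    return out


def contrast_stretch(img):
    """Linearly rescale all intensities to the full [0, 255] range."""
    values = [v for row in img for px in row for v in px]
    lo, hi = min(values), max(values)
    if hi == lo:
        return [list(row) for row in img]
    scale = 255.0 / (hi - lo)
    return [[tuple(round((v - lo) * scale) for v in px) for px in row] for row in img]


def five_views(img, rng=random):
    """One positive sample per augmentation listed above."""
    return [rotate90(img), vertical_flip(img), rgb2bgr(img),
            random_erase(img, rng=rng), contrast_stretch(img)]


toy = [[(x + 1, y + 1, 50) for x in range(16)] for y in range(16)]
print(len(five_views(toy, rng=random.Random(0))))
```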

The experimental environment is set up using PyTorch 1.10.0 library and Python 3.7 to build the network. Initial learning rate is set to 0.005, and batch size is set to 256. A total of 100 epochs were trained. Training process is run on a workstation with single GPU, specifically the RTX 3090.

All methods of this work were carried out in accordance with relevant guidelines and regulations. The ethical reviews were approved by the Biological and Medical Ethics Committee of Northeastern University (No.NEU-EC-2024B001S, Date: Jan. 8, 2024) and the Ethics Committee in Clinical Research of the First Affiliated Hospital of Wenzhou Medical University (No.KY2024-R068, Date: Mar. 20, 2024). Due to the retrospective nature of the study, the Biological and Medical Ethics Committee of Northeastern University and the Ethics Committee in Clinical Research of the First Affiliated Hospital of Wenzhou Medical University waived the need to obtain informed consent.

Experiments and results

Dataset

Two datasets were used for the experiments in this paper. The first is a private dataset of cervical cell images obtained from 3A hospitals in China. The WSIs were acquired using the same scanner at 20\(\times\) magnification. Cell labels were jointly annotated by 5 doctors with rich experience in cell pathology. All positive cells were annotated by three pathologists and then reviewed by a pathologist with over ten years of diagnostic experience. In cases with disputed annotations, consensus was reached through consultation led by another pathology expert.

A total of 58,326 cells were used in the experiments, covering five categories: HSIL, ASC-H, LSIL, ASC-US and NILM. Table 1 presents the private dataset of cervical cell images. The original dataset is significantly imbalanced across categories; ASC-US and NILM in particular have a large number of samples. This paper therefore uses the quantity of HSIL data as the standard, and for each of the other categories a fixed number of 12000 samples is selected randomly in order to balance the classification dataset. Our private dataset is divided into 5 categories, which can be combined into 3 or 2 categories according to the TBS standard. This enables us to conduct experiments on the 2-class, 3-class and 5-class tasks. Normal and NILM denote the same concept, since NILM corresponds to normal cells according to the TBS standard.
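The balancing step can be sketched as random subsampling with a per-class cap. The function name, the seed and the toy class sizes below are hypothetical; only the cap of 12000 samples comes from the text.

```python
import random


def balance_classes(indices_by_class, cap=12000, seed=0):
    """Randomly keep at most `cap` sample indices per class.

    Classes with no more than `cap` samples are kept unchanged.
    """
    rng = random.Random(seed)
    balanced = {}
    for label, indices in indices_by_class.items():
        if len(indices) > cap:
            balanced[label] = rng.sample(indices, cap)
        else:
            balanced[label] = list(indices)
    return balanced


# Toy example with a small cap so that the effect is visible.
toy = {"HSIL": list(range(5)), "NILM": list(range(100, 140))}
print({label: len(idx) for label, idx in balance_classes(toy, cap=12).items()})
```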

The other dataset is Herlev, with 7 categories, namely {carcinoma, light, moderate, severe, columnar, intermediate, superficiel}. These can also be grouped into 3 categories {carcinoma, dysplastic, normal} and 2 categories {abnormal, normal}. The Herlev public dataset is shown in Table 2.

The Herlev dataset is small, so we augmented it using methods such as flipping and mirroring to increase its size by 5 times. The total amount of data after augmentation is 4585.

Experimental setup and evaluation criteria

This paper uses the private cervical cytology dataset and the Herlev dataset to conduct ablation and comparative experiments that evaluate the performance of the proposed method. The ablation experiments specifically validate the effectiveness of the Label Credibility Correction Module (LCCM) and the Weighted Synergistic Grouping Loss (WSGL). The comparative experiments include comparison with existing methods, 5-fold cross-validation and replacement of the loss function. Additionally, we employ McNemar’s test to check whether the differences between our proposed method and existing methods are significant.

The performance of the classification method was evaluated using sensitivity (SEN), specificity (SPE), accuracy (ACC) and AUC score. The AUC score is the area under the ROC curve, which summarizes the relationship between the true positive rate and the false positive rate in a single value.
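For reference, these criteria can be computed for the 2-class task as in the sketch below, where label 1 denotes abnormal and 0 denotes normal. The AUC is computed in its rank-based form, that is, the fraction of (positive, negative) pairs in which the positive sample receives the higher score, which equals the area under the ROC curve. The function names and toy data are illustrative assumptions.

```python
def binary_metrics(y_true, y_pred):
    """Return (SEN, SPE, ACC) for binary labels, with 1 = abnormal, 0 = normal."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    sen = tp / (tp + fn)          # true positive rate
    spe = tn / (tn + fp)          # true negative rate
    acc = (tp + tn) / len(y_true)
    return sen, spe, acc


def auc_score(y_true, scores):
    """Area under the ROC curve via pairwise comparison (ties count as 0.5)."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.7, 0.4, 0.1, 0.2, 0.3, 0.6]
print(binary_metrics(y_true, y_pred))
print(auc_score(y_true, scores))
```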

Ablation experiment

We design ablation experiments on both the private dataset and the Herlev dataset to evaluate the effectiveness of the proposed Label Credibility Correction Module (LCCM) and Weighted Synergistic Grouping Loss (WSGL). As clinical diagnosis is more concerned with determining whether a case is normal or abnormal, we computed SEN, SPE, ACC and AUC for the 2-class task, as shown in Table 3.

As shown in Table 3, the performance of the classifiers declined without LCCM; in particular, SPE decreased by 0.0845 on the private dataset and by 0.0459 on the Herlev dataset. Classification accuracy also decreased by 0.0508 and 0.0379, respectively. This indicates that LCCM effectively mitigates the effect of confusable data and improves the ability to distinguish mislabeled samples. Similarly, without WSGL, classification performance on both datasets decreased, with a reduction in ACC of 0.0282 and 0.0278, respectively. Therefore, both the proposed LCCM and WSGL contribute to improved classification accuracy and effectively alleviate the issue of label ambiguity in the clinical interpretation of cervical cells.

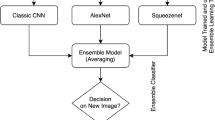

Comparative experiments

We compare the performance of our method with existing studies. Yolov818 and R-CNN19 were proposed as object detectors. We input patch-level images into both detectors to detect cells and calculate the accuracy of cell-level classification. Since patch-level images are not available for the Herlev public dataset, we only set up these validation experiments on our private dataset. MobileNetv340 is a lightweight classification network. MSE-Net26 considers both the mean and standard deviation of confidence scores and effectively integrates three standard classifiers. Alquran et al.24, Basak et al.14 and Yaman et al.30 use CNNs to extract features, which are then input into machine learning classifiers after feature selection (named FFC-Net24, WGO-Net14 and PSP-Net30 in this paper). PSP-Net proposes a pyramid-based feature extraction model. The input for all the above classifiers is cell images. Table 4 shows the accuracy of the multiple classification groups on the private dataset and the Herlev public dataset. The training/validation split ratio for the experiments is 8:2.

As shown in Table 4, Yolov8 and R-CNN may perform well in terms of detection capability. However, because they need to balance the bounding-box regression loss and the target classification loss during training, the classification ability of these detectors is not good enough. MobileNetv3 and MSE-Net, as deep learning classifiers, significantly improve the classification performance compared to the detectors. Feature engineering plays a crucial role in classification tasks, and well-designed features help subsequent classifiers perform better. Accordingly, FFC-Net, WGO-Net and PSP-Net employ effective feature selection methods, such as PCA, WGO and NCA, to reduce feature redundancy and improve classification performance. Our method uses MobileNetv3 as the backbone network for feature extraction. Compared with MobileNetv3, LCC-Net improves the classification accuracy on both datasets. On the private dataset, the improvement is most evident for the 5-class task, with an increase in accuracy of 0.0566. The highest classification accuracy for the 2-class task reached 0.9241. On the Herlev dataset, the classification accuracy improves on all tasks.

In addition, we performed 5-fold cross-validation to further evaluate the performance of the different classification methods. Because the Herlev dataset is small, we used only the private dataset for cross-validation. Table 5 displays the 2-class task accuracy of R-CNN, MSE-Net, PSP-Net and our method under cross-validation.
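As an illustration of the protocol described above, the following is a minimal sketch of how 5-fold train/validation indices can be generated. The function name `k_fold_indices`, the fixed seed and the toy sample count are assumptions made for this example; the paper does not state how its folds were drawn.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # fixed seed keeps the folds reproducible
    # Spread the remainder so that fold sizes differ by at most one sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size

# Toy usage: 23 hypothetical samples split into 5 folds.
folds = list(k_fold_indices(n_samples=23, k=5))
```

Each sample appears in exactly one validation fold, so averaging the per-fold accuracies uses every sample for validation once.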

From Table 5, it can be observed that PSP-Net and LCC-Net achieve higher classification accuracy than the other deep learning detectors and classifiers. This further demonstrates the significant contribution of feature engineering to classification performance. Compared with existing studies, our proposed method is more effective on the multi-class task.

To further validate the learnable loss function proposed in this paper, we compared WSGLoss with Cross-Entropy Loss (CELoss) and SGL (SGLoss) [37]. This experiment computed the classification accuracy for each class, as shown in Table 6.

From Table 6, it can be observed that our method significantly improves the performance on ASC-H, ASC-US and NILM, with a notable increase of 0.0879 in accuracy for ASC-US compared to CELoss. HSIL and LSIL are easier categories to classify and show less improvement. SGLoss obtained the higher accuracy for HSIL classification. Our method achieves an average classification accuracy of 0.8598 in the 5-class task, which is an improvement of 0.0429 compared to CELoss and 0.0204 compared to SGLoss. Therefore, our proposed learnable weighted loss approach demonstrates better performance in cervical cell image classification.
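For readers who want to reproduce a per-class comparison of this kind, the sketch below computes the accuracy for each class from true and predicted labels. The labels are toy values invented for the example, and the helper name `per_class_accuracy` is hypothetical. The section does not state whether the reported average is the overall accuracy or the mean of the per-class values, so both are computed here.

```python
from collections import defaultdict

def per_class_accuracy(y_true, y_pred):
    """Return {class: fraction of that class's samples predicted correctly}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        correct[t] += int(t == p)
    return {c: correct[c] / total[c] for c in total}

# Toy labels, invented for illustration only.
y_true = ["HSIL", "HSIL", "ASC-US", "ASC-US", "ASC-US", "NILM"]
y_pred = ["HSIL", "ASC-H", "ASC-US", "NILM", "ASC-US", "NILM"]

acc = per_class_accuracy(y_true, y_pred)
# Overall accuracy: fraction of all samples predicted correctly.
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
# Mean of the per-class accuracies (each class weighted equally).
macro = sum(acc.values()) / len(acc)
```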

McNemar’s test

McNemar’s test [41] is used to compare the statistical differences between classifiers in binary classification. It is based on a cross-tabulation of misclassifications: the observed frequencies of the cases on which the two classifiers disagree are computed and tested using the binomial distribution. To reject the null hypothesis that the two models are similar, the p-value of the test should be smaller than 0.05.
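The test described above can be sketched in a few lines. This is a minimal exact (binomial) version using only the standard library; the function name `mcnemar_exact` and the toy predictions are assumptions for illustration, and the paper does not state which implementation or variant it used.

```python
from math import comb

def mcnemar_exact(y_true, pred_a, pred_b):
    """Two-sided exact McNemar test; returns (b, c, p_value)."""
    # b: model A correct and model B wrong; c: A wrong and B correct.
    b = sum(a == t and p != t for t, a, p in zip(y_true, pred_a, pred_b))
    c = sum(a != t and p == t for t, a, p in zip(y_true, pred_a, pred_b))
    n = b + c
    if n == 0:
        return b, c, 1.0  # no discordant pairs, so no evidence of a difference
    # Under the null hypothesis each discordant pair favours either model
    # with probability 0.5, so min(b, c) follows Binomial(n, 0.5).
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return b, c, min(1.0, 2 * tail)

# Toy example: A is right on all 12 samples, B is wrong on the first 10.
y_true = [1] * 12
pred_a = [1] * 12
pred_b = [0] * 10 + [1] * 2
b, c, p_value = mcnemar_exact(y_true, pred_a, pred_b)
# Here b = 10, c = 0 and p_value = 2 / 1024, which is below 0.05.
```

Only the discordant pairs enter the statistic; samples that both models classify correctly, or both misclassify, carry no information about which model is better.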

As shown in Table 7, we calculated the p-values between the proposed method and the existing studies, and all results were below 0.05. Therefore, the null hypothesis can be rejected, indicating a significant difference between our proposed method and the existing classification methods.

Discussions

Confusable samples are prone to occur in classification tasks because of the specificity of the classification objectives and the regularity of the annotations. Moreover, the sample distances among different categories are often imbalanced. In our experiments, the proposed confusable sample separation module and the SGL with learnable weights showed promising performance in cervical cytology classification.

Clustering analysis of cervical cell

In cervical cell image analysis, the major problem faced in classification is sample confusion. The TBS standard determines the type of precancerous lesion based on cytological morphology. Because this interpretation is subjective, diagnoses can differ with the experience and standards of individual doctors. To illustrate the confusion among all samples, we used k-means to cluster all 58,326 samples and mapped the unsupervised clusters to the true labels. Figure 6 shows the confusion matrix between the unsupervised clusters and the true labels.

In Fig. 6, the matrix columns represent the number of samples in each k-means cluster, while the rows represent the true labels annotated by pathologists. The confusion matrix shows a high level of confusion between HSIL and ASC-H as well as between LSIL and ASC-US, which confirms the known difficulty in clinical interpretation. Additionally, 835 cases of ASC-US are clustered into NILM. The diagnostic criteria of these two categories overlap, making it difficult for doctors to reach a unified standard for diagnosis. This also poses significant challenges for computer analysis.
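The cross-tabulation behind a figure of this kind can be sketched as follows. The section does not specify how clusters were mapped to true labels, so the majority-vote mapping below is an assumption, and the labels and cluster ids are toy values invented for the example. Note that a majority vote may map two clusters to the same label; a one-to-one assignment would be an alternative.

```python
from collections import Counter

def contingency(true_labels, cluster_ids):
    """Count samples for each (true label, cluster id) pair."""
    return Counter(zip(true_labels, cluster_ids))

def majority_mapping(true_labels, cluster_ids):
    """Map each cluster id to the true label that occurs most often in it."""
    per_cluster = {}
    for label, cid in zip(true_labels, cluster_ids):
        per_cluster.setdefault(cid, Counter())[label] += 1
    return {cid: counts.most_common(1)[0][0] for cid, counts in per_cluster.items()}

# Toy data, invented for illustration only.
true_labels = ["HSIL", "HSIL", "ASC-H", "LSIL", "LSIL", "ASC-US", "NILM", "NILM", "ASC-US"]
cluster_ids = [0, 0, 0, 1, 1, 1, 2, 2, 2]

table = contingency(true_labels, cluster_ids)
mapping = majority_mapping(true_labels, cluster_ids)
# table[("ASC-US", 2)] counts ASC-US samples that fall in the cluster mapped to NILM.
```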

According to the confusion matrix and the feature distances, we separated typical samples, confusable samples and noise samples for classification training. Figure 7a shows the t-SNE distribution of all cervical cell features and Fig. 7b shows the t-SNE distribution of typical cervical cell features; the visualized features are extracted using the contrastive learning network. To keep Fig. 7 readable, 1000 samples were randomly selected from each category for visualization.

From Fig. 7a, it can be directly observed that the five categories {HSIL, ASC-H, LSIL, ASC-US, NILM} occupy different regions of the sample space. Furthermore, the distributions of {HSIL, ASC-H} are relatively close and the feature spaces of {LSIL, ASC-US} are also similar, which is consistent with the TBS interpretation standard. However, the samples of ASC-US and NILM are also close in distance, which significantly affects cell classification and clinical interpretation. The green dots in Fig. 7a represent LSIL cells, and we selected three LSIL samples {Cell-1, Cell-2, Cell-3} for analysis. Cell-1 is located within the LSIL cluster and is a typical sample. Cell-2 is located within the ASC-US cluster; its label is incorrect for the 5-class task but correct for the 2-class and 3-class tasks, making it a confusable sample. Cell-3 is within the NILM cluster and is incorrectly labeled for all classification tasks, making it a noise sample. The original annotated dataset contains both confusable samples and noise samples, which degrades the performance of classifiers. In Fig. 7b, typical samples exhibit a more concentrated intra-class feature distribution, indicating that a CNN can learn better network parameters for typical cell classification. Additionally, we employed the synergistic grouping method to incorporate confusable samples into network learning, aiming to improve the network’s generalization ability.
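The three-way distinction illustrated by Cell-1, Cell-2 and Cell-3 can be written as a small rule. This is a sketch under stated assumptions: the 3-class and 2-class groupings are inferred from the discussion of {HSIL, ASC-H}, {LSIL, ASC-US} and NILM rather than quoted from it, `cluster_label` stands for the true label to which a sample's cluster has been mapped, and a sample that agrees only at the 2-class level is treated as confusable, which the text does not state explicitly. The feature-distance criterion mentioned above is not modeled here.

```python
# Assumed groupings, inferred from the discussion rather than quoted from it.
THREE_CLASS = {"HSIL": "high", "ASC-H": "high",
               "LSIL": "low", "ASC-US": "low",
               "NILM": "negative"}
TWO_CLASS = {label: ("negative" if label == "NILM" else "positive")
             for label in THREE_CLASS}

def sample_type(true_label, cluster_label):
    """Classify a sample as 'typical', 'confusable' or 'noise'."""
    if true_label == cluster_label:
        return "typical"     # label agrees at the 5-class level
    if TWO_CLASS[true_label] == TWO_CLASS[cluster_label]:
        return "confusable"  # wrong at 5-class, right at a coarser level
    return "noise"           # label disagrees at every level

# The three LSIL cells discussed above.
cell_1 = sample_type("LSIL", "LSIL")
cell_2 = sample_type("LSIL", "ASC-US")
cell_3 = sample_type("LSIL", "NILM")
```

Because the assumed 3-class groups are nested inside the 2-class groups, agreement at the 3-class level implies agreement at the 2-class level, so a single 2-class check covers both coarser tasks.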

Grouping loss for cell classification

For a CNN, fast convergence during training is an important optimization goal. The size and distribution of the training sample set directly affect the robustness of training. We propose a trainable loss weighting method and use momentum to constrain the magnitude of the weight changes, aiming to improve the learning efficiency of the network. In the comparative experiments (Table 6), our proposed WSGLoss improves the average 5-class classification accuracy by 0.0204 compared to SGLoss. Figure 8 shows the curves of training set accuracy, training loss and weight change for the two losses.

In Fig. 8, the first row shows the training accuracy during network learning. It can be seen that the accuracy for the 2-class, 3-class and 5-class tasks all reach above 0.95. The second row depicts the loss curves; with WSGLoss, the weighted sum of the three sub-classification losses is smaller than the 5-class task loss. Furthermore, WSGLoss decreases faster during network training, which validates that our proposed momentum-based trainable weights can improve the convergence speed of the classification network. The third row displays the trend of the weights, where {\(\alpha , \beta , \gamma\)} respectively represent the weights of the sub-classification losses for {2-class, 3-class, 5-class}. As the number of training iterations increases, \(\alpha\) tends to increase, while \(\beta\) and \(\gamma\) decrease. Therefore, the 2-class task contributes more during network learning.
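The exact WSGLoss update rule is not restated in this section, so the following is only a minimal sketch of the general idea of using momentum to limit how far loss weights move in one step. It uses an exponential moving average, which is a swapped-in technique and not necessarily the authors' formulation; the function names, the momentum value of 0.9 and all numbers are assumptions for illustration.

```python
def momentum_update(weights, proposed, momentum=0.9):
    """Blend the previous weights with newly proposed ones.

    Each weight moves by only (1 - momentum) of the proposed change,
    which bounds the size of a single update.
    """
    return [momentum * w + (1.0 - momentum) * p for w, p in zip(weights, proposed)]

def weighted_loss(losses, weights):
    """Weighted sum of the 2-class, 3-class and 5-class sub-losses."""
    return sum(w * l for w, l in zip(weights, losses))

# Toy values: (alpha, beta, gamma) start equal; a hypothetical learning step
# proposes shifting weight toward the 2-class loss.
weights = [1 / 3, 1 / 3, 1 / 3]
proposed = [0.6, 0.3, 0.1]
new_weights = momentum_update(weights, proposed, momentum=0.9)

# Combine hypothetical sub-losses with the smoothed weights.
loss = weighted_loss([0.2, 0.5, 0.9], new_weights)
```

With a momentum of 0.9, \(\alpha\) moves from 1/3 to 0.36 rather than jumping to 0.6, so the weights drift gradually over many iterations.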

K-means analysis

K-means is a commonly used unsupervised learning method, but its results can be unstable from run to run. In addition to the choice of k, “Init” and “Random state” are two independent yet related parameters. “Init” determines how the cluster centers are initialized; its options include random initial points, distributed initial points and specified initial points. The distributed method selects initial center points that are as far apart as possible. “Random state” controls the seed of the random number generator, which influences all random operations within the k-means algorithm. Its primary purpose is to ensure the reproducibility of results. To validate the stability of the k-means outcomes, we conducted 3 groups of experiments. The validation data came from the publicly available CIFAR-10 dataset, from which we selected 4 categories for better visualization of the clustering results. The results are shown in Fig. 9.

In Fig. 9, each row shows results obtained with the same “Init” parameter. The clustering results from experiments (a) and (b) were consistent, indicating that the “Random state” parameter can ensure the stability of k-means. Experiments (d) and (e) corroborate this finding. In contrast, (c) and (f) used random seed points without “Random state”, resulting in varied outcomes. As shown in experiments (g), (h) and (i), when the “Init” parameter is set to specified initial points, “Random state” no longer has any effect. This is because the initial center points have already been explicitly defined, so k-means applies no random operations to choose them. In this study, we employed specified initial points to ensure the stability of k-means clustering.
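The behavior described above can be reproduced with a small, self-contained k-means. This is a plain-Python sketch of Lloyd's algorithm on 2-D toy points, not the implementation used in the study; the function `kmeans`, its `init` and `seed` arguments and the data are assumptions for illustration. The parameter names in the text resemble the `init` and `random_state` arguments of scikit-learn's `KMeans`, but the paper does not name its library in this section.

```python
import random

def sq_dist(p, q):
    """Squared Euclidean distance between two 2-D points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2

def kmeans(points, k, init="random", seed=None, n_iter=20):
    """Lloyd's k-means; `init` is 'random' or a list of k initial centers."""
    if init == "random":
        centers = random.Random(seed).sample(points, k)  # depends on the seed
    else:
        centers = [tuple(c) for c in init]               # seed is never used
    for _ in range(n_iter):
        # Assignment step: index of the nearest center for every point.
        labels = [min(range(k), key=lambda j: sq_dist(p, centers[j])) for p in points]
        # Update step: move each center to the mean of its assigned points.
        new_centers = []
        for j in range(k):
            members = [p for p, l in zip(points, labels) if l == j]
            if members:
                new_centers.append((sum(p[0] for p in members) / len(members),
                                    sum(p[1] for p in members) / len(members)))
            else:
                new_centers.append(centers[j])  # keep the center of an empty cluster
        if new_centers == centers:
            break  # converged
        centers = new_centers
    return labels, centers

# Toy data: two well-separated groups of three points each.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
fixed = [(0, 0), (10, 10)]

# Specified initial centers: the seed has no influence on the result.
run_a = kmeans(points, 2, init=fixed, seed=1)
run_b = kmeans(points, 2, init=fixed, seed=2)

# Random initialization: reproducible only when the seed is fixed.
run_c = kmeans(points, 2, init="random", seed=7)
run_d = kmeans(points, 2, init="random", seed=7)
```

With specified centers, no random draw takes place, so `run_a` and `run_b` are identical regardless of the seed. With random initialization, identical results are guaranteed only when the same seed is reused, as in `run_c` and `run_d`.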

Limitations and future works

The main limitation of our method is that it cannot be trained end-to-end. We will attempt to address this issue in our next study. Moreover, clinical validation is the most direct and effective way to test a method. Although we invited experienced pathologists to meticulously evaluate our study and conducted clinical testing on a small dataset, this does not fully capture the value of the study.

In future work, we will apply our research extensively to aid clinical diagnosis, with a view to obtaining a more complete assessment and meaningful directions for improvement. In addition, the idea behind our method could be applied to the classification of other multi-class and subtype cell images, such as thyroid cells, and of histopathological images, such as breast cancer [42] and gliomas [43]. We will validate this next, thus expanding the application of our method to a wider range of classification research fields.

Conclusions

In this paper, we proposed a novel unsupervised-based method for label credibility correction in cervical cell image classification. The method leverages contrastive learning to learn more effective features for cervical cell images. It then analyzes the characteristics of confusable categories in cervical cell diagnosis based on TBS and uses clustering to obtain unsupervised labels. Next, cell samples are separated based on their calibration labels. Finally, we incorporate momentum-based learnable weights into SGL to enhance the robustness of the classification network. In addition, we set up ablation experiments and comparative experiments to validate the effectiveness of our proposed method. Experiments demonstrate that our method achieves superior classification performance on cervical cell datasets.

Data Availability

The data presented in this study are available on request from the corresponding author.

References

Arbyn, M. et al. Estimates of incidence and mortality of cervical cancer in 2018: a worldwide analysis. Lancet Glob. Health 8(2), e191–e203 (2020).

Varalakshmi, P. & Swetha, R. A comparative analysis of machine and deep learning models for cervical cancer classification. In 2021 International Conference on System, Computation, Automation and Networking (ICSCAN) pp. 1-6 (2021).

Mustafa, W. A., Halim, A., Jamlos, M. A. & Idrus, S. Z. S. A Review: Pap smear analysis based on image processing approach. J. Phys. Conf. Ser. 1529(2), 022080 (2021).

Mustafa, W. A., Halim, A. & Ab Rahman, K. S. A narrative review: Classification of pap smear cell image for cervical cancer diagnosis. Oncologie (Tech Science Press) 22(2) (2020).

Rahaman, M. M. et al. A survey for cervical cytopathology image analysis using deep learning. IEEE Access 8, 61687–61710 (2020).

Jiang, H. et al. Deep learning for computational cytology: A survey. Med. Image Anal. 84, 102691 (2022).

Idlahcen, F., Idri, A. & Goceri, E. Exploring data mining and machine learning in gynecologic oncology. Artif. Intell. Rev. 57, 20 (2024).

Vaiyapuri, T. et al. Modified metaheuristics with stacked sparse denoising autoencoder model for cervical cancer classification. Comput. Electr. Eng. 103, 108292 (2022).

Mahmood, T., Arsalan, M., Owais, M., Lee, M. B. & Park, K. R. Artificial intelligence-based mitosis detection in breast cancer histopathology images using faster R-CNN and deep CNNs. J. Clin. Med. 9(3), 749 (2020).

Goceri, E. Nuclei segmentation using attention aware and adversarial networks. Neurocomputing 579 (2024).

Allehaibi, K. H. S., Nugroho, L. E., Lazuardi, L., Prabuwono, A. S. & Mantoro, T. Segmentation and classification of cervical cells using deep learning. IEEE Access 7, 116925–116941 (2019).

Deng, J., Lu, Y. & Ke, J. An accurate neural network for cytologic whole-slide image analysis. In: Proc. Australasian Computer Science Week Multiconference. pp. 1-7 (2020).

Xiao, F., Wen, J. & Pedrycz, W. Generalized divergence-based decision making method with an application to pattern classification. IEEE Trans. Knowl. Data Eng. 35(7), 6941–6956 (2022).

Win, K. P., Kitjaidure, Y., Hamamoto, K. & Myo Aung, T. Computer-assisted screening for cervical cancer using digital image processing of pap smear images. Appl. Sci. 10(5), 1800 (2020).

Diniz, D. N. et al. A deep learning ensemble method to assist cytopathologists in pap test image classification. J. Imaging 7(7), 111 (2021).

Liang, Y. et al. Comparison detector for cervical cell/clumps detection in the limited data scenario. Neurocomputing 437, 195–205 (2021).

Sompawong, N., Mopan, J., Pooprasert, P., Himakhun, W., Suwannarurk, K., Ngamvirojcharoen, J., & Tantibundhit, C. Automated pap smear cervical cancer screening using deep learning. In: 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). pp. 7044-7048 (2019).

Xiang, Y. et al. A novel automation-assisted cervical cancer reading method based on convolutional neural network. Biocybern. Biomed. Eng. 40(2), 611–623 (2020).

Li, X. & Li, Q. Detection and classification of cervical exfoliated cells based on faster R-CNN. In 2019 IEEE 11th international conference on advanced infocomm technology (ICAIT). pp. 52-57 (2019).

Yi, L., Lei, Y., Fan, Z., Zhou, Y., Chen, D. & Liu, R. Automatic detection of cervical cells using dense-cascade R-CNN. In Pattern Recognition and Computer Vision: Third Chinese Conference, PRCV 2020, Nanjing, China, October 16-18, 2020, Proceedings, Part II 3 (pp. 602-613). Springer International Publishing (2020).

Ma, B., Zhang, J., Cao, F. & He, Y. MACD R-CNN: An abnormal cell nucleus detection method. IEEE Access 8, 166658–166669 (2020).

Chen, T., Zheng, W., Ying, H., Tan, X., Li, K., Li, X. & Wu, J. A task decomposing and cell comparing method for cervical lesion cell detection. IEEE Trans. Med. Imaging 41(9), 2432–2442 (2022).

Wei, Z., Cheng, S., Hu, J., Chen, L., Zeng, S. & Liu, X. An efficient cervical whole slide image analysis framework based on multi-scale semantic and spatial deep features. Preprint at arXiv:2106.15113 (2021).

Amorim, J. G. A., Matias, A. V., Cerentini, A., Macarini, L. A. B., Onofre, A. S., Onofre, F. B. & von Wangenheim, A. Comparative analysis of deep learning approaches for AgNOR-stained cytology samples interpretation. Preprint at arXiv:2210.10641 (2022).

Wang, P. et al. Automatic cell nuclei segmentation and classification of cervical Pap smear images. Biomed. Signal Process. Control 48, 93–103 (2019).

Pramanik, R., Banerjee, B. & Sarkar, R. MSENet: Mean and standard deviation based ensemble network for cervical cancer detection. Eng. Appl. Artif. Intell. 123, 106336 (2023).

Pramanik, R., Biswas, M., Sen, S., de Souza Júnior, L. A. & Papa, J. P. A fuzzy distance-based ensemble of deep models for cervical cancer detection. Comput. Methods Programs Biomed. 219, 106776 (2022).

Manna, A., Kundu, R., Kaplun, D., Sinitca, A. & Sarkar, R. A fuzzy rank-based ensemble of CNN models for classification of cervical cytology. Sci. Rep. 11(1), 14538 (2021).

Wang, P., Wang, J., Li, Y., Li, L. & Zhang, H. Adaptive pruning of transfer learned deep convolutional neural network for classification of cervical pap smear images. IEEE Access 8, 50674–50683 (2020).

Yaman, O. & Tuncer, T. Exemplar pyramid deep feature extraction based cervical cancer image classification model using pap-smear images. Biomed. Signal Process. Control 73, 103428 (2022).

AbuKhalil, T., Alqaralleh, B. A. & Al-Omari, A. H. Optimal deep learning based inception model for cervical cancer diagnosis. Comput. Mater. Contin 72, 57–71 (2022).

Gupta, M., Das, C., Roy, A., Gupta, P., Pillai, G. R. & Patole, K. Region of interest identification for cervical cancer images. In 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI). pp. 1293-1296E (2020).

Cheng, S. et al. Robust whole slide image analysis for cervical cancer screening using deep learning. Nat. Commun. 12(1), 5639 (2021).

Cao, L. et al. A novel attention-guided convolutional network for the detection of abnormal cervical cells in cervical cancer screening. Med. Image Anal. 73, 102197 (2021).

Singh, S. K. & Goyal, A. A stack autoencoders based deep neural network approach for cervical cell classification in pap-smear images. Recent Adv. Comput. Sci. Commun. (Formerly: Recent Patents on Computer Science) 14(1), 62–70 (2021).

Nayar, R. & Wilbur, D. C. The Bethesda system for reporting cervical cytology: a historical perspective. Acta Cytol. 61(4–5), 359–372 (2017).

Lin, H. et al. Dual-path network with synergistic grouping loss and evidence driven risk stratification for whole slide cervical image analysis. Med. Image Anal. 69, 101955 (2021).

Kendall, A., Gal, Y. & Cipolla, R. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In Proc. IEEE conference on computer vision and pattern recognition. pp. 7482-7491 (2018).

He, K., Fan, H., Wu, Y., Xie, S. & Girshick, R. Momentum contrast for unsupervised visual representation learning. In Proc. IEEE/CVF conference on computer vision and pattern recognition. pp. 9729-9738 (2020).

Howard, A., Sandler, M., Chu, G., Chen, L. C., Chen, B., Tan, M., ... & Adam, H. Searching for mobilenetv3. In Proc. IEEE/CVF international conference on computer vision. pp. 1314-1324 (2021).

Pembury Smith, M. Q. & Ruxton, G. D. Effective use of the McNemar test. Behav. Ecol. Sociobiol. 74, 1–9 (2020).

Nakach, F. Z., Idri, A. & Goceri, E. A comprehensive investigation of multimodal deep learning fusion strategies for breast cancer classification. Artif. Intell. Rev. 57, 327 (2024).

Goceri, E. Vision transformer based classification of gliomas from histopathological images. Expert Syst. Appl. 241 (2024).

Acknowledgements

This research was financially supported by Clinical Research Foundation of Zhejiang Medical Association, China (No.2021ZYC-A163). Shu Jin received this award.

Author information

Contributions

Conceptualization, W.P., S.J. and H.J.; methodology, W.P. and H.J.; validation, W.P. and Y.Q.; formal analysis, W.P., Y.M.; investigation, W.P., S.J. and Y.M.; resources, W.P. and S.J.; data curation, W.P. and Y.M.; writing-original draft preparation, W.P. and H.J.; writing-review and editing, W.P. and S.J.; visualization, W.P. and Y.Q.; project administration, H.J.; funding acquisition, S.J. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Pang, W., Qiu, Y., Jin, S. et al. Label credibility correction based on cell morphological differences for cervical cells classification. Sci Rep 15, 2 (2025). https://doi.org/10.1038/s41598-024-84899-8
