Abstract

The use of naturally available materials such as metakaolin (MK) can greatly reduce the utilization of emission intensive materials like cement in the construction sector. This would reduce the stress on depleting natural resources and foster a sustainable construction industry. However, the laboratory determination of 28 day compressive strength (C-S) of MK-based mortar is associated with several time and resource constraints. Thus, this study was conducted to develop reliable empirical prediction models to assess CS of MK-based mortar from its mixture proportion using machine learning algorithms like gene expression programming (GEP), extreme gradient boosting (XGB), multi expression programming (MEP), bagging regressor (BR), and AdaBoost etc. A comprehensive dataset compiled from published literature having five input parameters including water-to-binder ratio, mortar age, and maximum aggregate diameter etc. was used for this purpose. The developed models were validated by means of error metrics, residual assessment, and external validation checks which revealed that XGB is the most accurate algorithm having testing \(\:{\text{R}}^{2}\) of 0.998 followed by BR having \(\:{\text{R}}^{2}\) values equal to 0.946 while MEP had the lowest testing \(\:{\text{R}}^{2}\) of 0.893. However, MEP and GEP algorithms expressed their output in the form of empirical equations which other black-box algorithms couldn’t produce. Moreover, interpretable machine learning approaches including shapely additive explanatory analysis (SHAP), individual conditional expectation (ICE), and partial dependence plots (PDP) were conducted on the XGB model which highlighted that water-to-binder ratio and sample age are some of the most significant variables to predict the C-S of MK-based cement mortars. Finally, a graphical user interface (GUI) was made for implementation of findings of this study in the civil engineering industry.

Similar content being viewed by others

Introduction

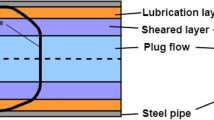

Mortars hold paramount importance in masonry construction since they are used to join bricks/stones in a masonry structure. Generally, mortars are made up of binding materials, aggregates, admixtures, and water, etc. Cement is one of the most significant contributors of \(\:{\text{C}\text{O}}_{2}\) emissions from the construction industry, being the most widely used binder worldwide1. It has been reported that more than 7% of \(\:{\text{C}\text{O}}_{2}\) emissions worldwide originate directly from the cement industry2. The current annual production is 4,000 million tons, with projections indicating a rise to 6,000 million tons by 20603. The release of greenhouse gas \(\:{\text{C}\text{O}}_{2}\) in the atmosphere by virtue of cement production results in climate change and global warming. This leads to melting of icecaps, increased global temperatures, and brings about extreme weather changes. Thus, efforts are being made to discover new materials to replace cement in the mortars to reduce the negative effects of cement production on the environment and to make the mortars more durable, cost-effective, and eco-friendly4,5. The use of alternative materials to replace cement in concrete and mortar will help to lessen the utilization of depleting natural resources such as limestone and gypsum since these the production of cement calls for huge amounts of these precious natural resources. This will also lead to a decrease in carbon emissions within the construction sector and reduce the adverse effects of human development on the natural environment. Moreover, the increased utilization of natural materials in cement mortars will help reduce the costs of construction. In this context, metakaolin (MK) is a promising ingredient that may be added to mortars in place of cement to enhance their cementitious qualities. It is an aluminosilicate that is thermally activated and occurs naturally as a white mineral. It is produced by kaolin clay heating to elevated temperatures between 650 oC and 800oC6,7. As a pozzolanic material, MK combines with calcium hydroxide Ca(OH)2 in water forming compounds having cementitious qualities8. Figure 1 illustrates how the use of MK in place of cement improves the rheological properties, reduces the permeability and increases the consistency of mortars9. Although there has been much research conducted about the utilization of MK and similar materials as a replacement of cement in mortar mixes, there is no explicit method available for optimal design of MK-based cement mortars. This is because the fresh and hardened mortar materials mechanical properties containing mineral admixtures like MK, fly ash, or limestone powder etc. exhibit a highly non-linear behaviour related to their mixture constituents. Due to this non-linear behaviour, it is particularly difficult to develop an empirical formula for predicting mechanical properties using traditional statistical and mathematical models10. In addition to MK, several other materials have been used to make sustainable mortars. For instance, Elfadaly et al.11 developed high-performance geopolymer mortar incorporating pumice powder and rice straw ash as sustainable filler materials. The authors replaced rice husk ash and pumice powder with blast furnace slag at 0%, 15%, 25%, and 30% by volume and revealed that compression strength generally decreases with an increase in the pumice and rice husk ash content.

Accurately determining various mechanical characteristics is necessary for effective utilization of MK-based cement mortars in the construction industry. Out of these mechanical properties, compressive strength (C-S) is one of the most important properties since it is an indicator of overall mortar quality and durability. The C-S test is one of the most common ways for investigating mortar durability, performance, and reliability12,13. However, it has been highlighted time and again that an exhaustive experimental scheme is inevitable to get an idea about the relationship of C-S with the mixture components of admixture containing mortars. The mortar samples casted using different types and sizes of aggregates, variable water-to-cement ratios, and different dosages of superplasticizer (SP) etc. must be tested for compression strength to get a clear idea about the relationship of different mixture components with the C-S. Despite the importance of such an inclusive experimental program, it has several limitations. First of all, a combination of several loading conditions and other physical conditions is necessary for getting a good idea about the relationship of mixture composition and other variables with CS14. In addition, these types of experiments must be carried out in controlled environments to ensure minimum distractions and interferences on the testing procedures. However, ensuring these would result in wastage of significant time and precious resources15. The underlying complexities of the testing procedures and chances of human errors in conduction of such comprehensive experiments further adds to the problem. Furthermore, the most commonly used tests to access mechanical properties of mortars such as C-S are destructive in nature meaning that the testing sample gets destroyed during the experiment providing another way of generating construction waste. In conclusion, the conventional testing methodologies have severe limitations. In this regard, scientists have come up with the innovative solution to use machine learning (ML) techniques for forecasting different properties of construction materials to overcome the limitations highlighted above16. The use of these ML methodologies for this purpose will save time, effort, and cost which is otherwise required in case of laboratory testing17. In Sect. 1.2, a thorough review of previously conducted research on utilization of contemporary ML techniques for prediction of different mortar and concrete properties is given.

Recent decades have seen several attempts to create reliable empirical predictive models to forecast different mechanical properties of cementitious composites using ML techniques to overcome limitations of traditional testing techniques. ML is an area of Artificial Intelligence (AI) that utilized statistics and mathematics to enable machines (computers) to identify patterns and correlations in data. Predictions for new data are then made using the knowledge gained from the data, without the need for direct human involvement. Recently, ML algorithms, with their capability of modelling complex relations between various inputs and outputs, have become the most promising way to predict complex properties of different materials18,19. In civil engineering, several machine learning techniques, including as random forests (RF), XGB, GEP, and artificial neural networks (ANN), have been extensively used to forecast different soil compaction and categorization characteristics20,21,22,23, load carrying capacity of composite concrete columns24,25,26,27, mechanical properties of innovative concrete composites28,29, slope failure and landslide susceptibility etc30,31,32,33. These studies help accurately estimate material attributes, including compressive strength, which necessitates labour- and resource-intensive laboratory studies otherwise. When carefully trained, ML models can predict outcomes far more efficiently, saving a great deal of money, labour, expertise, and time in the lab. The engineering community has seen a substantial rise in the use of ML for the resolution of structural and civil engineering issues34. One major benefit of ML models is their capacity to automatically analyze and discover relationships between many parameters that arise in complicated engineering situations, such as predicting corrosion, assessing the impact of fiber additions, and predicting strength. ML has the potential to revolutionize the civil engineering industry by providing a powerful tool for analyzing and understanding material properties and construction processes by replacing traditional practices and increasing efficiency, accuracy and efficiency. By identifying hidden patterns and trends in large datasets, ML algorithms provide valuable insights that enhance decision-making and promote efficiency and sustainability. A significant application of ML in civil engineering is creating predictive models to estimate the compressive strength of concrete and mortar. Proven effective in optimizing material mixtures, these models minimize the need for extensive lab testing. Additionally, ML in civil engineering supports data-driven decision-making, fostering more sustainable and eco-friendly construction methods. ML is a powerful tool for advancing environmental sustainability in civil engineering through improved material selection and waste reduction. In the field of concrete technology, ML provides a powerful advantage, allowing the development of predictive models that accurately anticipate concrete properties given specified input parameter.

Literature search

The effectiveness of ML algorithms like ANN, RF, decision tree (DT), artificial neuro fuzzy inference systems (ANFIS) etc. to predict different mechanical properties of construction materials has been thoroughly reported in the literature. For example, Handousa et al.35 utilized two algorithms including ANN and Gaussian Process Regression (GPR) to predict compression strength of concrete-filled double-skin steel tubular columns due to their widespread uses in the construction industry. The authors used experimental data from their own laboratory experimentation and highlighted that ANN outperformed its counterpart to predict CS by achieving lower MAE and higher correlation with actual values. Also, the authors employed shapely additive analysis which revealed that the thickness of the external plate and compressive strength of concrete are some of the most predominant factors for determining the output. Also, Abdellatief et al.36 utilized ML for determining the strength in compression and porosity of innovative foam glass for practical applications. The authors used various algorithms including s gradient boosting (GB), random forest (RF), Gaussian process regression (GPR), and linear regression (LR) etc. in a comparative manner and concluded that GPR model was the most accurate with R-values of 0.91 and 0.82 for porosity and compressive strength respectively. Regarding the utilization of ML to determine properties of sustainable mortar, Faraj et al.37 utilized ANN, linear and non-linear regression, and M5P-tree algorithms to predict tensile strain capacity of cement mortars having fibre reinforcement which are commonly known as engineered cementitious composites (ECC). The authors collected 115 experimental results of tensile strain capacity of ECC from punished literature and used them to train the algorithms for tensile strain capacity prediction. The authors assessed the accuracy of developed predictive models using various error evaluation metrices and highlighted that ANN predicted tensile strain capacity with highest accuracy (\(\:{\text{R}}^{2}=0.98\)). Also, Joseph Mwiti Marangu38 utilized a support vector machine (SVM) and ANN algorithms for the purpose of predicting compressive strength of cement mortars having calcined clay as a replacement of cement in various ratios ranging from 30 to 50%. The study utilized laboratory prepared samples to calculate the C-S of cement mortar at the age of 2,7, and 28 days and utilized the resulting data to build SVM and ANN models. The results revealed that ANN predicted mortar strength with greater accuracy having testing \(\:{\text{R}}^{2}\) of 0.95 compared to SVM \(\:{\text{R}}^{2}\) of 0.88. Similarly, Çalışkan et al.39 developed cement mortar by blending fly ash and nano calcite with cement. The samples were cured and tested for C-S after 1,3,7, 28, and 90 days and the data were used to develop predictive models for C-S using SVM and extreme learning machine (ELM) algorithms. The authors compared actual and predicted results using mean squared error (MSE) and \(\:{\text{R}}^{2}\) and concluded that SVM predicted C-S with greater accuracy having \(\:{\text{R}}^{2}\) and MSE values equal to 0.982 and 3.882 compared to ELM values of 0.91 and 20.39. In the same way, Ozcan et al.40 made use of RF, SVM, and AdaBoost techniques on a dataset of more than 250 specimens of cement mortar made with waste rubber tire and blast furnace slag. The ML models were developed for prediction of a single output parameter i.e., C-S by using four input features including age of specimen, cement content, and the quantities of slag and rubber tire waste. The comparison of performance of ML algorithms revealed that AdaBoost predicted C-S of cement mortar with highest accuracy (\(\:{\text{R}}^{2}=0.9831\)). Moreover, in a recently conducted study by Zhiqiang Chen41, the flexural strength and compressive strength of eco-friendly cement mortar made by replacing cement with industrial by-products were predicted using XGB and bagging regressor. The author casted mortar samples by replacing cement with fly ash and rice husk ash in various ratios ranging from 20 to 40%. The study considered four input features containing cement content, specimen age, rice husk ash content, and fly ash content to predict flexural and C-S. The contrast of both algorithms showed that XGB proved to be more accurate than bagging regressor having \(\:{\text{R}}^{2}\) value equal to 0.98 for C-S and 0.94 for flexural strength. In another research conducted by Wang et al.42, the C-S prediction of cement mortar using dynamic elasticity data was investigated. The authors developed a predictive model based on back propagation neural network (BPNN) which is further based on the Bayesian theorem which helped to overcome the problem of overfitting significantly. The authors gathered a dataset of almost 160 experimental results of cement mortar compressive strength having slag as a replacement of cement. The error evaluation of BPNN model revealed that there exists a very strong correlation between actual and anticipated C-S values having \(\:{\text{R}}^{2}\) equal to 0.95. A summary of previous studies regarding different properties prediction of cement mortars is given in (Table 1).

Research significance

As discussed in Sect. 1, the use of alternative cementitious materials like MK in place of cement in concrete and mortar is crucial to foster a sustainable civil engineering industry. Since cement is responsible for the most emissions from the construction industry, the reduction in its usage will reduce the associated \(\:{\text{C}\text{O}}_{2}\) emissions from the industry and consequently reduce the ill effects of human development on nature. It will also help conserve the natural environment by reducing the utilization of limited natural resources like gypsum and limestone etc. Also, MK utilization in mortar will serve as a feasible avenue for utilization of huge amounts of MK available. As evident from the literature summary in (Table 1), much research has been done regarding prediction of strength properties of cement mortars having mineral admixtures like fly ash, marble powder etc. in various percentages in the mortar mix. However, the subject of C-S prediction of mortar containing MK as a replacement of cement is comparatively unexplored. It is largely attributed to the non-linear behaviour of C-S of MK containing mortar in relation to its mixture composition. Also, the experimental determination of strength of MK blended mortar requires extensive time and resources. To bridge this gap in the literature, this study intends to utilize novel ML algorithms including boosting-based techniques (XGB and AdaBoost), bagging regressor, and evolutionary techniques (GEP and MEP) to predict C-S of MK-based cement mortars in a comparative manner. The use of MEP and GEP for prediction purpose of C-S of MK-based cement mortar is attributed to the fact that these two are grey-box models because since they give an empirical equation as their output in contrast to other famous techniques like ANN and XGB which don’t possess the ability to do so. The equations yielded by MEP and GEP will help the professionals to effectively use the developed models and impart transparency to the underlying algorithm methodology53. Similarly, the rationale behind using algorithms like GBR, AdaBoost, and XGB stems from their superior accuracy and easy implementation. These algorithms do not need to specify any optimal model architecture before model development54. Moreover, a particular novelty of this study is that it gives a comparison of various bagging, boosting, and evolutionary techniques to predict C-S of MK-based cement mortar. This will help to identify the type of ML techniques most feasible for utilization in civil and materials industry for prediction of different material properties. Furthermore, this study utilizes several cutting-edge interpretable ML approaches like SHAP, partial dependence plots (PDP) analysis, and individual conditional expectation (ICE) to reveal the most important variables which contribute to the strength of MK-based cement mortar. The investigation of relationships between different inputs and output by means of the explanatory analysis techniques will provide insights into the individual and combined effect of different inputs on the outcome. This will help to optimize the mixture composition of MK-based cement mortars to cater for specific site conditions. In addition, this study depicts a practical of the utilization of transformative power of ML in the field of civil engineering by means of a graphical user interface (GUI). This GUI will enable the professionals and stakeholders to effectively determine C-S of MK-based mortars based on its mixture composition without conducting extensive experimentation.

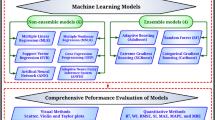

Research methodology

Figure 2 shows the complete step-by-step methodology followed in this study. These steps include (i) Collection of the data, (ii) Analysis of data, (iii) Data splitting, (iv) ML model development, (v) Assessment of error, (vi) explanatory analysis, and (vii) GUI development. Based on the extensive review of literature, it was identified that there is a lack of accurate empirical predictive models for MK-based cement mortars despite its benefit as compared to normal cement mortar. Thus, this study will address the problem of predicting C-S of MK-based mortars based on different ML techniques. For this purpose, a dataset containing all influential variables to predict C-S was collected from the literature which was then processed and analysed using different statistical techniques to check for outliers and investigate the correlation and distribution of the data. After data analysis and representation, the model development process will start which encompass tunning the hyperparameters of algorithms to check which set of parameters will yield the highest accuracy. The algorithms will be trained using these parameters and then the trained algorithms will be validated by error evaluation and scatter plots etc. After training of algorithms and error evaluation, their performance will be compared to point out the most important algorithm which will then be used to conduct further explanatory analyses techniques like SHAP, PDP and ICE etc. Furthermore, the findings of the algorithms will be deployed by means of a GUI to enable the professionals to use the models that are developed in this study.

Prediction methods

Gene expression programming (GEP)

Ferreira in the year 2001 first proposed the GEP algorithm, which combines aspects of genetic algorithms (GA) and genetic programming (GP)55. In GEP, the concept of “survival of the fittest,” inspired by Darwinian principles, plays a central role. The process initiates with the generation of random initial chromosome population, which is then decoded into mathematical expressions. Fitness evaluations determine the optimal individuals, and genetic operators drive the continuous generation of offspring. Continuous iteration refines the solution, ultimately leading to the optimal outcome, as depicted in (Fig. 3). Figure 3b,c illustrate the process through the many steps required to create a mathematical equation for GEP. There are three key parts of GEP namely: (i) Chromosome structure, (ii) Genetic operators, and (iii) Fitness evaluation. Each of the above is explained below:

Chromosome structure

Chromosomes being composed of interconnected genes by a function named linking function, with each gene featuring a head and a tail. The head is made of a set of functions such as logical and mathematical operations and terminations such as variables or constants. On the other hand, only terminations are present in the tail. Expression trees (ETs), which provide explicit expressions and are essential to the decoding of chromosomes56.

Genetic operators

Chromosomes facilitate the emergence of the next generation through genetic operators, including mutation, transposition, and inversion57. Notably, mutation excels as the most efficient operator, offering substantial modification potential. The presence of mutation significantly enhances the efficiency of the individual adaptation process, leading to effective solutions across various problem domains.

Fitness evaluation

The performance of chromosomes is assessed using fitness. The more evolutions there are, the more fitness there is in the population. The final fitness value is reached when the fitness value does not change after a certain number of evolutions.

Multi expression programming (MEP)

MEP is a subclass of GP that is excellent at offering several solutions, including straightforward linear solutions that fit inside a single chromosome, thereby improving the accuracy of the model58. The Binary Tournament is a process used to select two potential parents and recombine them to create new offspring. The genetic evolution of offspring involves both crossover and mutation, resulting in the production of novel offspring. These features allow MEP to search through a wide range of possibilities and identify the best responses. This system allows flexibility in chromosomal rearrangement during the evaluation phase by ensuring that individuals who cannot reproduce are not included in subsequent generations and eventually discarded. The first step in the MEP process is to develop the random population of computer codes. Subsequent steps continue until the initial condition is satisfied in order to formulate the most viable answers to the question. A set (or sets) of strings of formulas are used to describe the MEP genes. The size of the code (the length of the chromosome) is determined by the set of genes and is held the same throughout the process. MEP’s superiority lies in its ability to create reliable models across different contexts. The optimization of the MEP algorithm involves the adjustment of parameters such as crossover probability, code length, sub-population size and mathematical functions. The selection of optimal values will improve the model accuracy but may enhance computational requirements59. The length of the resultant equation in MEP is exactly proportional to the length of the code. The architecture of MEP model is shown in (Fig. 4).

Extreme gradient boosting (XGB)

XGB is recently created gradient boosting tree-based model60. It is similar to gradient boosting methodology (GBM) but more accurate and efficient. XGB, unlike GBDT, employs a more regularized model formulation to control model complexity. XGB additionally supports column subsampling for features, a sparse technique for missing data, and multithreaded parallel computation. To optimize and enhance the XGB model, it is necessary to determine multiple parameters through data learning. XGB excels at identifying relationships within data. It employs a sequence of decision trees to progressively refine predictions. This collaborative approach results in a robust and accurate model. XGB improves its predictions over time through a process of recurrent training. It starts with the creation of a first model based on the training data. It then generates further models, each of which is specialised in correcting the errors that were missed by the previous model. This cycle is repeated until the performance of the algorithm is better than a pre-defined target or until a pre-defined maximum number of models has been reached. Figure 5 presents the model architecture to reach valid output61. The XGB algorithm is concisely summarized by the stages listed below:

-

i.

Assume training and testing datasets and divide accordingly in ratios such as 2/3 and 1/3 or 3/4 and 1/4 etc. of the total dataset. Any general dataset D is represented by Eq. (1).

Then a differentiable loss function is adopted for the evaluation of performance of model. This loss function is defined as follows in Eq. (2):

Where predictors and predictands sets are represented by \(\:{\text{x}}_{\text{i}}\) and \(\:{\text{y}}_{\text{i}}\) respectively and \(\:\text{i}\) is any value assumed from 1 up to any number \(\:\text{n}\) which is the overall number of the datapoints. The predictions are illustrated by the symbol \(\:{\upgamma\:}\) as shown in the equation of loss function. Different loss functions can be considered including mean squared error (MSE). Different hyperparameters including maximum depth of trees for base learners, number of trees, and learning rate of boosting either using default or assumed parameters or using GridsearchCV which is a library for automatic searching of best parameters for a dataset in python62.

Adaptive gradient boosting (AdaBoost)

AdaBoost is well-known as a highly powerful boosting technique. In fact, its significance goes beyond boosting, since it is ranked among the top ten data mining algorithms. AdaBoost uses the training set to create a weak learner and modifies the distribution of data for subsequent rounds of training of the weak learner depending on the performance of the prediction. It should be noted that in the following step, more attention is given to training samples with low prediction accuracy in the previous step. Lastly, the weak learners are combined with different weights to form a strong learner63. The graphical illustration of the adopted methodology is represented in (Fig. 5b). Following methodology is adopted for AdaBoost predictions. Let a training dataset is consisted of 1 up to m labels (\(\:{\text{x}}_{1\:\text{t}\text{o}\:\text{m}}\:,\:{\text{y}}_{1\:\text{t}\text{o}\:\text{m}}\)) depicted as (\(\:{\text{x}}_{1}\:,\:{\text{y}}_{1}\)),…, (\(\:{\text{x}}_{\text{m}}\:,\:{\text{y}}_{\text{m}}\)) for easy understanding where \(\:{\text{x}}_{\text{i}}\:\)belongs to µ domain and\(\:\:{\text{y}}_{\text{i}}\) belongs to a range from −1 to +1. A distribution \(\:{\text{D}}_{\text{t}}\) is calculated for each round (t = 1 to T) of m training instances, as illustrated below in Eq. (3).

Next, to identify a weak hypothesis, a weak learning method is used \(\:{\text{h}}_{\text{t}}:\:{\upmu\:}\:\to\:\{-1,\:+1\}\) which aims for minimizing the weighted error \(\:{\text{ϵ}}_{\text{t}}\) which is relative to \(\:{\text{D}}_{\text{t}}\) as shown in the subsequent Eqs. 4 and 5 respectively:

\(\:{\text{ϵ}}_{\text{t}}=\text{P}{\text{r}}_{\text{i}}\sim{\text{D}}_{\text{t}}[{\text{h}}_{\text{t}}\left({\text{x}}_{\text{i}}\right)\ne\:{\text{y}}_{\text{i}}\)] (4)

and

Where \(\:{\text{Z}}_{\text{t}}\) is factor for scaling selected such that the distribution is ultimately formed by \(\:{\text{D}}_{\text{t}+1}\). A weighted sum of weak hypotheses is finally calculated to determine the sign by the combined hypothesis (H) to arrive at final prediction. This final prediction is represented by Eq. 6.

Bagging regressor (BR)

The ensemble machine learning approach known as “bagging regressor”, which is a contraction of “bootstrap aggregating”, expands the predictive model with more data as it is trained, increasing the accuracy and stability of ML models by integrating multiple base learners. Regression algorithms, such as DTs, are commonly used as the basic learner in Bagging Regressor applications64. By using a subset of the training data to train the base learners, bagging decreases variance and overfitting. Each element of the bagging technique has the same probability of being found in the newly created data set. There is little effect on predictive ability by adding more data points to the training set. Also, the divergence could be substantially reduced by adjusting the forecast in line with the intended outcome. All these data sets are the basis for the development of new models, which are regularly updated. The ensemble models provide predictions, each of which is averaged. It is possible to derive regressive forecasts by taking the average of forecasts from many models65. An improved and more widely applicable model is produced by this averaging procedure, which reduces the effect of over-fitting and outliers in individual base learners. Figure 6 illustrates how Bagging regressor combines training and testing data to obtain final predictions.

Cement mortar database

Experimental data collection

The establishment of a reliable database is a fundamental prerequisite for the development of ML-based predictive models. However, in the context of cement mortar, the data collection process is inherently complex due to complex mixture composition of mortar typically comprising water, binders (such as cement), aggregates, and both natural and synthetic admixtures. Consequently, the careful selection of input features is of critical importance during data collection. Moreover, proper consideration must be given to parameters such as quantity and type of cement as these characteristics can significantly influence C-S of the mortar. Another challenge lies in the acquisition of sufficient data to effectively train ML algorithms. Traditionally, cement mortars are prepared in laboratories, cast into molds, cured under controlled conditions, and tested for C-S using a compression testing machine. This experimental process is resource-intensive, time-consuming, and requires continuous oversight, which limits the feasibility of producing large numbers of specimens. As a result, most studies in the existing literature report compressive strength values up to only 28 or 90 days of curing. Data pertaining to long-term strength development is notably scarce, posing a significant limitation to the construction of a robust and generalizable database. Furthermore, during the collection of data, it is significant to check that the input parameter values cover a wide range of possible values because the accuracy of the developed ML model largely varies on the reliability of the database.

To address these constraints, an extensive database of 249 experimental results of cement mortar C-S containing MK have been aggregated from 8 different studies published in the literature whose summary is given in (Table 2). Although there were some smaller databases already available in the literature, the authors compiled a new and extensive database due to the following reasons: (1) the already existing datasets considered different set of parameters than used in current study, (2) the input variable values were not distributed evenly over a large range in existing datasets. It is an essential factor to take into account because the number of experimental instances in a dataset al.one aren’t sufficient to ensure the reliability of the database, the distribution of each input and output parameter(s) also plays an important role. Thus, the mortars having maximum diameter of aggregate greater than 6 mm and C-S results for ages more than 90 days were not included in the database since such type of experimental results were less than 5% of the total collected dataset and their inclusion would have reduced the reliability of the collected dataset and in turn the accuracy of developed ML algorithms. In regard to the input features, the collected database features only those experimental results containing the following six input parameters: (i) age of specimen, (ii) water-to-binder ratio, (iii) metakaolin percentage in relation to total binder content, (iv) maximum diameter of aggregate, (v) superplasticizer percentage, and (vi) sand-to-total binder content ratio. This is due the fact that these inputs have been recognized as the most important features to predict the mortar C-S by previous related studies39,41,44,66,67,68. It is worth mentioning that in the collected database of experimental studies, OPC was used as the main binding agent and MK was added to the mortar mix in different percentages. The water-to-cement ratio was different for experimental values collected from each study, but the C-S of mortar was measured by casting cubic specimens of 50 cm x 50 cm x 50 cm, curing them at different ages (1,3,7,14,28,60,90), and crushing them using a compression testing machine to measure the C-S according ASTM C 109.

Outlier detection and removal

Regression analysis commonly involves removing outliers from the database prior to the development of regression-based models. Outliers represent anomalies in the data and are defined as are those points which deviate significantly from other data77. In regression analysis involving extensive data, outliers pose a significant challenge, and special care must be taken to detect and remove them from the data78. Therefore, the database comprising 249 experimental instances, was analysed for potential outliers using a descriptive statistical method outlined in79. Outlier detection was performed through hypothesis testing, where a p-test was applied at a 5% significance level. In this context, the null hypothesis assumes that the data values follow a normal distribution, meaning they fall within the expected range of variation. The alternative hypothesis, on the other hand, suggests that certain values particularly those significantly higher or lower than the rest may not belong to the same distribution and could be considered statistical outliers. Identifying and addressing such outliers is important to ensure the reliability and accuracy of the dataset used for model development. The result of this analysis in the form of bivariate boxplots for each variable involved in the study is given in (Fig. 7). The boxplots in Fig. 7 is a useful way to represent the summary of statistical characteristics of the data. The 25–75% range is represented by different coloured bars while the inter quartile range (IQR) is represented as black lines and the outliers are shown as black dots in (Fig. 6). After the identification of outliers by means of box plots as given in (Fig. 7), the strength value was capped at 75 MPa to remove the outliers from the database. Thus, a total of 234 data instances were left to be used for model development. This remaining dataset will be used from now onwards in the paper for statistical analysis and for development of the algorithms. The statistical summary of the remaining data finalized for model development is given in (Table 3). The symbols of the input and output variable(s) along with their units of measurement is given in (Table 3). Moreover, different statistical parameters like average value, maximum value, and standard deviation etc. for each variable is also given in the table.

Data description, correlation, and partitioning

Figure 8 depicts a histogram representation of the data. The graphic illustrates how the dependent and independent variables are dispersed over a broad range. The age ranges from 1 day up to 90 days encompassing values like 7,14, 26, 56, 60 within the range. Also, W/B ranges from 0.36 to 0.6 which is a considerably wide range for W/B ratio. In the same way, binder-to-sand ratio varies from 0.33 to 0.50. The range of maximum diameter of aggregate is from 0.6 to 4.75 which is also a very large range of values. It is because different experimental results collected from variable studies used different sized aggregate to make cement mortars. Furthermore, the percentage of MK in relation to total binder content has range from 0 to 30% which means that the dataset contains experimental results for MK-based cement mortars in which percentage of MK varies from 0 to 30%. This type of data distribution makes sure that the developed predictive models can be used for wide range of input configurations to predict the desired outcome80. The strength of mortar in the same way varies from 6.06 MPa to more than 70 MPa. This wider range of output variable also allows the models trained using this data to be implemented practically in a wide variety of conditions to predict C-S of Mk-based cement mortars ranging from 6 MPa to more than 70 MPa.

In addition to investigating the distribution of the data, the examination of inter-dependency between different features (both input and output) is crucial before starting the formal training process of the algorithms. This is due to the fact during developing ML-based predictive models, the dependency of input variables upon each has a significant role in addition to the correlation between inputs and outputs. If the variables are correlated more with each other rather than with the output, there is a risk of encountering the problem of “multi-collinearity”. This term simply implies that the inputs are correlated greatly with each other rather than with the output and the performance of resulting ML algorithms can be affected if this problem is present in the data81. Thus, to investigate the inter-dependency between all possible feature combinations involved in this study, the Pearson’s correlation matrix or commonly referred to as heat map is given in (Fig. 9). The values written in Fig. 9 are the correlation which range between −1 and 1 and the strength of relationship between two variables is directly related to the value of R with 0 indicating no correlation at all and 1 showing a 100% perfectly linear correlation. Whereas the direction of relationship (positive or negative) between two variables is depicted by the sign of R. Correlation values above 0.8 often indicate a fair correlation among considered two variables, whereas values above 0.9 typically indicate a superior correlation82. Thus, to make a ML model resistant to multi-collinearity, it is important that the R value among any two variables does not exceed 0.881. Figure 8 clearly shows that the correlation of all variable combinations is below 0.8, confirming that multi-collinearity will not arise to impact the model’s performance. It is evident that most of the features have a minimal correlation between them. Only maximum aggregate dia and age has a negative 0.44 R value between them.

The final step after data description and data correlation analysis is the partitioning of the data where the data is split into separate sets to be used to train and test the algorithms. The major portion of the data is utilized in training while the relatively small testing set remains unexposed to the algorithm during training phase, and it is used to check the performance of the trained algorithms. The dataset was divided randomly into 80% for training and 20% for testing, with the training set used to build the algorithms and the testing set for performance assessment. The data splitting was done in this particular ratio to make sure that the algorithms have significant amounts of data to be trained properly and at the same time reserving enough data for the algorithms to be tested83.

Model development & authentication

The first step in the ML robust model’s development is finding a set of variables which will result in the highest accuracy of the algorithms. This process is named as hyperparameter tunning. This step is crucial because every algorithm needs specific parameters which must be finalized through rigorous testing. For this purpose, a thoroughly crafted methodology was developed in this study. The hyperparameter selection process of each algorithm is given under.

MEP model development

MEP algorithm implementation for developing an equation to predict C-S of MK-based cement mortars was done with the help of MEPX 2021.05.18.0. software which enabled to efficiently execute MEP methodology by changing different hyperparameters. The MEP parameters tunned in MEPX 2021.05.18.0. software which affected the performance and accuracy of the algorithm included number of generations, probability of crossover, length of code etc. Same as for GEP model, the preliminary values for the parameters were selected after considering previous studies84 and then extensive trials were performed varying the value of each hyperparameter across a wide range until the optimal values were found for each parameter. These optimal values which yielded the highest model accuracy are tabulated in (Table 4). Each of these parameters signify a key function of the MEP algorithm. The choice of the optimum value for each of these variables is of great importance to ensure the development of a robust model. For instance, crossover probability is used to show the proportion of parents that will go under the process of genetic crossover. It is selected as 0.8 in this study indicating that 80% of the total parents present in the population would go under crossover. Similarly, population size and number of populations deal with the total amount of random chromosomes generated initially and hence these two parameters control the computational power and time required by the algorithm in addition to controlling the accuracy of the resulting equation. As a general rule, increasing these values result in an increase in the accuracy, but selecting abnormally larger values will lead to overfitting of the algorithm85. Furthermore, code length needs to be carefully determined since it affects the length and complexity of the resulting mathematical statement. Table 4 shows the MEP parameters which result in highest accuracy in current study. The functions used for development of MEP equation again include only simplest and easily calculated arithmetic functions to keep the final expression simple as in GEP algorithm.

GEP model development

To develop the MK-based predictive model using GEP algorithm, a software package called GeneXpro Tools (version 5.0) was utilized. Prior research suggestions were used to choose the initial set of these parameters86 and these were changed across a wide range until a set of parameters resulting in highest accuracy was reached. In this context, the most important GEP parameters involved in this study include gene and chromosomes, head size, and linking function etc. The chromosomes numbers and size of dead influences the complexity of the algorithm. Raising the values of these variables might result in a rise in accuracy of the algorithm but it also calls for more computational power and beyond a certain point, increasing these values can cause overfitting87. In the same way, gene number means the number of sub-expressions made by the algorithm which will be joined by linking function to make the equation. Thus, these parameters have their own importance and their optimizing the value of each parameter is significant for convergence of GEP for optimal solution. Therefore, the number of genes, head size, and chromosomes were wisely chosen such that overfitting in the algorithm is not observed and also maintaining the accuracy of the resulting equation. In short, the GEP development process involved varying different hyperparameters of GEP and monitoring their effect on the accuracy of the results. The parameters which resulted in highest accuracy is given in (Table 4). For current study, the number of genes were chosen as 5 which is an indicator that the resultant will have 5 sub-expressions which will be joined by the already selected linkage function to form the complete equation. Table 4 further shows that the logarithmic and square root functions, as well as comparatively basic functions are selected to be included in the final equation to maintain the equation’s simplicity and compactness.

XGB, adaboost, and BR model development

Although the hyperparameter tunning of GEP and MEP was done manually which involved extensive testing using a range of values, the hyperparameter tunning of XGB, BR, and AdaBoost was done in a much efficient way. In particular, a widely used approach known as grid search was utilized for this purpose which is available in scikit-learn library of python in the form of GridSearchCV function88. It is noteworthy that not only the hyperparameter optimization, but the loading of data, splitting, model training and then testing it on unseen data were done using python programming language. The data was split into 80:20 ratio for training and testing of algorithms respectively by employing test_train_split function in Anaconda. The gird search approach was utilized for parameter tunning since it is most efficient and most used approach to tune the parameters of these ML models89. This approach basically involves checking every possible combination of all the parameters involved in model development. While keeping all other parameters constant, only one parameter is varied across a range to find its optimal value. This process is repeated for each parameter which results in finding the optimal value of each parameter. Thus, the same methodology was used to find the optimal values of hyperparameters of XGB, AdaBoost, and BR algorithms whose result is given in (Table 5). These are the parameters which resulted in the highest accuracy of the respective algorithms.

For XGB, AdaBoost, and BR algorithms, there is one common parameter called n-estimators which specifies the total DTs number (base learners) made by the model for prediction purposes. However, XGB and AdaBoost also has a parameter known as maximum depth which is used to control the depth of each DT formed in the search space of the algorithm. These parameters are important to tune carefully since the accuracy of algorithms like XGB and BR etc. depends greatly on these parameters. Moreover, XGB and AdaBoost has an additional parameter known as learning rate. In simple words, this learning rate is a shrinking factor applied to the predictions of each new tree added by the algorithm to reduce the rate of fitting of algorithms because by virtue of the sequential adding of trees for reduction of the residual, XGB and AdaBoost can fit quickly to the training set90. However, if this fitting is left unchecked, it can also lead to overfitting91. Thus, this shrinking factor makes sure that algorithm fitting is done slowly to reduce any potential overfitting. It is recommended in the literature to keep its value between 0.01 and 0.1 so that the new trees addition is not performed swiftly92. Hence, 0.01 learning rate was chosen for both XGB and AdaBoost algorithms in current study as shown in (Table 5).

Model authentication criteria

After the training of algorithms using the selected hyperparameters, the next step is to validate their findings and access their accuracy using some error evaluation metrices. To make sure that model predictions meet the requirements for being categorized as acceptable models, this error evaluation will be carried out throughout both the training and testing stages of the algorithms. Table 6 provides a summary of error evaluation criteria used to evaluate the ML models. Each of the employed metric is used to measure a specific quality of the ML algorithm as given in the table. Also, the mathematic formulas to calculate these error metrices along with the criteria to be classified as acceptable is given in the table. The formulae utilize “n” to denote the total number of data points, and “x” and “y” to represent the actual and model-generated values, respectively.

Results and discussion

GEP result

Owing to its grey-box nature, the GEP algorithm provided an empirical equation to predict the output unlike other black-box algorithms. However, the equation wasn’t directly given as an output, rather several sub-expression trees were generated by the algorithm which were combined by addition function to get the final expression given by Eq. (7). In particular, five sub-expression trees indicated by \(\:{T}_{1},\:{T}_{2},\dots\:,\:{T}_{5}\) were generated by the algorithm since the number of genes were selected as five. The sub-expression trees were decoded in five parts, and the sub-expressions were summed to create the complete equation. The accuracy of GEP equation has been visualized by means of two separate scatter plots for training and testing datasets in (Fig. 10). It should be noted that in the training scatter plot, some points are lying beyond the error lines indicating that at some points, the error between predicted values and original values is more than 20%. However, the points going beyond error lines are comparatively lower in the testing data plot. Moreover, the result of performance evaluation of GEP is also displayed in Table 7 which shows the computed values for error metrices for both phases of training and testing sets. The \(\:{R}^{2}\) value of the GEP is 0.83 for the training session and 0.84 for the test session, both of which are greater than 0.8. MAEs for training and test were 3.33 MPa and 2.87 MPa respectivelyS which shows that GEP equation generated predictions having an error of 3 MPa on average. The RMSE value is 3.8 for testing indicating that the algorithm didn’t yield any abnormally larger errors in the testing phase. The PI value, which combines RRMSE and R to indicate overall model performance is 0.064 for training set and 0.050 for testing which are very near to zero. Furthermore, the OF value of GEP is 0.0587 which again indicates that the GEP developed equation can be utilized practically for forecasting of C-S of MK-based cement mortars.

MEP result

Equation (8) provides the output of the MEP algorithm as an empirical equation. It is worth mentioning that this equation wasn’t directly yielded by MEP algorithm. Rather, the algorithm gave a python code which was used to get Eq. (8). Also, the predictive abilities of this equation to forecast C-S for both datasets of training and testing has been given in scatter plots shown in Fig. 10b from which it is evident that the MEP predictions lie closer to the best fit line. Also, fewer points lie beyond the ± 20% error lines indicating that there are very few MEP forecasted values deviate more than 20% from the C-S actual values. Also, the validation of MEP model has been done by error metrices in (Table 7). Notice the \(\:{R}^{2}\) value of MEP in training phase (0.836) and in testing phase (0.8975) from the table. Both of these values are greater than 0.8 which is the lowest recommended value for a good model. After \(\:{R}^{2}\), it can be seen that MAE value of MEP is 3.20 MPa in training and 2.86 MPa in testing set which shows the minimal difference between predicted and the actual values. The trend is same for RMSE values too. Regarding a20-index, it is 0.9144 for training and 0.8936 for testing indicating the robustness of MEP. Furthermore, the overall performance indicators; PI and OF are also substantially lesser than 0.2. The OF value of MEP is 0.055 which is significantly lesser closer to zero indicating its robustness.

AdaBoost result

The AdaBoost algorithm failed to provide an equation for output prediction. However, the scatter plots for AdaBoost training and testing data is shown in (Fig. 10c). From the plots, it is explanatory that compared to MEP and GEP, very few data points lie out of the 20% error lines. It shows that most of the AdaBoost predictions are accurate and have less than 20% deviation from the real-life C-S values. The result of AdaBoost error evaluation is shown in Table 7 from which it is evident that the \(\:{R}^{2}\) values of AdaBoost for both data partitions is greater than 0.8. The \(\:{R}^{2}\) value is 0.876 for training while it is 0.861 for testing phase. Also, the MAE of AdaBoost is 3.510 MPa in training and 3.45 MPa in testing which is slightly larger than the those of MEP and GEP. Additionally, the a20-index value of AdaBoost is 0.91 in both training and testing phases indicating its robustness. Moreover, the training set PI value of AdaBoost is 0.053 and the testing set PI value is 0.051 both of which are less than those of MEP indicating its accuracy over the evolutionary algorithm models. Furthermore, the indicator of model’s overall efficiency; the OF value of AdaBoost is 0.0521 which is substantially less than 0.2 and very close to zero. Also, it is less than GEP and MEP which shows its superior accuracy in comparison to GEP and MEP. Therefore, the AdaBoost model can be used practically for C-S prediction of MK-based cement mortars with more than 86% accuracy on unseen data. The model exhibited a good accuracy overall (greater than 0.8) and acceptable performance indicator values. However, it is still lower accuracy than other black box models including XGB and BR which may be due to the model being sensitive to noisy data or being unable to accurately pick the complex relationship between inputs and output.

BR result

The scatter plots and in Fig. 10 depict the actual versus predicted values by BR algorithm for both data distributions from which it is evident that almost all of the points lie above the linear line. Some values deviate from the ideal fit line but are still lying within the ± 20% error range which shows the excellent accuracy of BR algorithm. Also, a significant improvement in prediction accuracy offered by BR algorithm is evident from its training a20-index value which is perfectly equal to 1 while it is 0.98 for testing data. It has been shown in Table 7 along with error metrices of other algorithms. These error metrices given in Table 5 verify that the predicted values of BR do not vary greatly from the actual experimentally determined values. The \(\:{R}^{2}\) of BR training is 0.98 while it is 0.94 for testing. Another important thing to mention is the minimal MAE value of BR in training stage (0.8 MPa) and in testing phase (1.73 MPa). Both values are considerable lesser than the MAE values of other algorithms like MEP and GEP. The same is true for RMSE values of BR. Regarding the performance indicators of BR algorithm, the PI values are remarkably lesser than 0.2 for both BR training and testing. The PI value is 0.017 for training and 0.031 for testing. Similarly, the OF value is 0.022 which is the second lowest after XGB algorithm. It shows that BR proved to be very accurate for peak strength prediction of C-S of MK-based cement mortars.

XGB result

The XGB algorithm too like BR and AdaBoost didn’t yield an empirical equation as an output due to its black-box nature. Thus, its results are interpreted and shown in the form of scatter plots in (Fig. 10e) and the results of error evaluation of XGB are also given in (Table 7). The superior accuracy of the XGB model is shown from the scatter plots where all predictions are lying seamlessly on the line. No point deviate from the ideal fit line and all points lie within the ± 20% error range. Due to this reason, the a20-index of XGB model is perfectly 1 for both data splits. The \(\:{R}^{2}\) value of XGB is equal to 0.998 in both phases of training and testing showing that XGB has more than 99% accurate to predict the output. The RMSE and average deviation of XGB are also significantly lesser than the values of all other algorithms. The training MAE of XGB is at the minimal value of 0.30 MPa while it is 0.257 MPa for testing set. These values are significantly lesser than all other algorithms. Moreover, the PI value of XGB training is 0.005 while it is around 0.042 for testing sets which shows the overall efficiency of XGB. These values are again significantly lesser than PI values of its counterparts. Finally, XGB OF is 0.0467 which in addition to being considerably less than 0.2 is less than the OF values of all other algorithms. Therefore, it can be concluded that the performance of XGB surpasses that of all other algorithms used in the current study.

Residual comparison of models

Based on the validation criteria defined in Sect. 6.4, Table 7 proves that the developed are acceptable and the values of all error metrices lie within the literature’s range. However, to draw a comparison between the algorithms based on their errors made for both training and testing data (the combined dataset), Fig. 11 shows the residual distribution plots for the employed algorithms. From Fig. 11a, notice the residual distribution of GEP algorithm which shows that most of the GEP residuals (226 out of 234) lie within the range of -10 to 10. It means that while using the GEP-based equation, the maximum difference between actual and anticipated outcome will be 10 MPa most of the times. While, from Fig. 11b which gives residual distribution for MEP, it can be seen that 222 out of 234 residuals lie in the range −10 to 10. This indicates slightly more residuals beyond the range −10 to 10 for MEP algorithm. However, this range reduces significantly for the AdaBoost algorithm in which more than 95% of the residuals (232) residuals lie in the narrowest range of −2 to 2. For BR algorithm, in the range −4 to 4, 220 out of 234 residuals lie and there is no residual lying beyond the range −8 to 8. Finally, for XGB algorithm, all of the residuals lie in the range −1.5 to 15. It means that at no point the XGB algorithm will give an error more than 1.5 MPa from the actual values. In particular, 225 residuals are lying in the smallest range of −1.0 to 1.0. It again indicates that XGB has the smallest errors and has the superior accuracy compared to its rivals.

External validation of models

To further validate the model’s accuracy, this study utilizes several external validation metrices in addition to the model validation by error metrices, scatter plots, and residual plots etc. The summary of the results of external validation is given in (Table 8). Moreover, the acceptable ranges of the external utilized checks for models to be classified as valid are also given in (Table 8). The external validation values for all algorithms were calculated using the whole dataset without any partition to confirm that the models are overall valid according to the external checks. The parameters \(\:{P}^{{\prime\:}}\) and P depict the slope of regression lines passing through the origin and both of these values must lie within 0.85 to 1.15 for a model to be valid. Also, \(\:{R}_{o}^{2}\) shows the correlation between real life and anticipated values. It is recommended that its value must be close to 1 for a good model. Similarly, \(\:{R}_{m}\) considers the combined effect of \(\:{R}_{o}^{2}\) and \(\:{R}^{2}\). From Table 8, it can be seen that overall, all models qualify the checks and the values of all metrices lie within the suggested range. The XGB algorithm, however, exhibited the highest values of all external validation metrices. The correlation (R value) is the highest for XGB (0.999) followed by BR, AdaBoost, MEP, and lastly GEP. Similarly, \(\:{R}_{m}\) which measures the difference between \(\:{R}^{2}\) and \(\:{R}_{o}^{2}\) is also highest for the XGB model. The \(\:{R}_{m}\)value of XGB is 0.899 followed by 0.895 for BR, 0.787 for AdaBoost, 0.574 for MEP, and it is 0.533 for GEP which is marginally larger than the lowest recommended value (0.5). The trend is same for almost all other external checks too. Therefore, based on the values from Table 8, it can be inferred that all of the ML algorithms presented in this study are efficient and robust since they passed all external validation checks in addition to their validation through error evaluation, scatter plots, and residual analysis.

Best model selection

The current study’s findings demonstrate that the algorithms exhibit acceptable performance based on residual comparison and error evaluation, with XGB algorithm emerging as the most effective among those employed. However, a more robust method for model comparison is the Taylor plot, which evaluates models simultaneously based on their standard deviations and correlation coefficients (R values)101. In a Taylor plot, proximity to the point of actual data indicates greater reliability of the algorithm’s predictions and vice versa. The standard deviation of predictions for each algorithm has been calculated, and the resulting data points are plotted on the taylor plot as shown in (Fig. 12). Notably, the XGB algorithm, depicted by a blue square, is positioned closest to the actual data, underscoring its superior performance and indicating that the outcomes generated by XGB are nearly equivalent to the experimental C-S values. Adjacent to the XGB point, the BR algorithm is positioned closest to the actual data, indicating it ranks as the second-best performer following XGB. In contrast, the other algorithms like MEP, GEP, and AdaBoost exhibit performance levels that are quite similar, as evidenced by the aggregation of their points in close proximity to one another. Specifically, the MEP point is positioned directly above that of AdaBoost, highlighting their nearly identical performance, while the GEP point is slightly lower, suggesting a marginally lesser standard deviation when compared to MEP and AdaBoost. Consequently, based on the analysis derived from the taylor plot, the order of algorithm accuracy is established as follows: XGB > BR > AdaBoost > MEP > GEP. Given that the XGB model consistently demonstrates the highest level of accuracy, it is concluded that this algorithm will be utilized for subsequent explanatory analyses.

Comparison with existing models

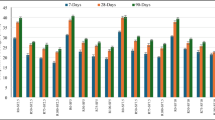

In addition to drawing a comparison between algorithms employed in current study, it is also important to compare their accuracy with the accuracy of already existing models to predict the C-S of cement mortars having diverse types of secondary cementitious materials. Thus, a comparison between models in this study and already existing models described in Table 1 has been made on the basis of \(\:{\text{R}}^{2}\) value and the result are shown in the form of a bar graph in (Fig. 13). From Fig. 13, it can be seen that the perfect \(\:{\text{R}}^{2}\) value of 1 has been achieved by the study of Gayathri et al.48 followed closely by the XGB model presented in this study. XGB model depicted accuracy higher than all previous studies conducted previously except that of Gayathri et al.48 and all ML algorithms investigated in current study with \(\:{\text{R}}^{2}\) value of 0.998. The XGB model was closely followed by Asteris et al.47 having \(\:{\text{R}}^{2}\) equal to 0.996 and 0.990 depicted by Nakkeeran et al.51. After that, the study conducted by Chen41 which predicted flexural strength of cement mortar having RHA had 0.980 \(\:{\text{R}}^{2}\) value. Next to it is the accuracy of Kurt et al.52 with 0.98 and Ali et al.45. However, the least \(\:{\text{R}}^{2}\) value (0.846) was exhibited by the GEP model developed in current study while the accuracy of all other algorithms lies in between the XGB and GEP models. In addition to the superior accuracy of XGB model as compared to previous studies, the model presented in this study also offers the significant advantage that it has been made available in the form of a GUI which can be used to practically implement the findings of the XGB model. This feature was not available in the previously conducted studies. Another advantage is that this study presents explanatory techniques on the developed XGB model which further helps to validate its findings and reinforce its contribution.

Interpretable machine learning

In order to provide transparency to the prediction-making process of the algorithms, post-hoc interpretable techniques are widely used which impart interpretability and transparency characteristics to the black-box ML models. The application of various interpretable techniques on ML models is also crucial since they enhance the understanding of black-box models and enable non-technical users and different stakeholders to understand the predictions made by the algorithms. Thus, this study employs three types of techniques known as SHAP (Shapely Additive Explanatory Analysis), ICE (Individual Conditional Expectation), and PDP (Partial Dependence Plots) analyses on the XGB model since it is consistently highlighted in Sect. 7.6 to 7.9 that XGB is the most effective technique employed in current study. SHAP was selected for its ability to deliver global and local interpretability for sophisticated machine learning models. Based on cooperative game theory, SHAP provides a robust foundation for determining the effect of each input feature on the model’s predictions. Moreover, SHAP values are consistent and readily visualized, making them accessible to non-technical stakeholders. PDPs further elucidate the relationship between a feature and the predicted outcome, while ICE plots illustrate how the prediction changes for an individual instance as a feature varies, providing granular insights into the model’s behaviour. All of these models were used to get a clear understanding of effect of different variables on prediction of output.

Shapely analysis

Taking its inspiration from the coalition game theory, the shapely analysis was introduced by Lundberg and Lee20 as a method to interpret black-box ML models. At its core, it assumes the input features as players and the predictions of the model are assumed to be payouts and thus calculates the contribution (significance) of each player (input) in payouts (predictions). The TreeSHAP version is widely used for interpretation of ML models built on trees (like XGB and AdaBoost etc.). Thus, the TreeSHAP version was employed in Anaconda using python to interpret the trained XGB-based predictive model for C-S of MK-based cement mortars. There are two sub-types of SHAP analysis known as global interpretation and local interpretation. SHAP global interpretation talks about the essence of the inputs as a whole while SHAP local interpretation dissects each individual prediction to give information about how the algorithm reached this particular prediction. The SHAP global interpretation is widely represented by means of a SHAP summary plot (given in Fig. 14). This plot serves two important purposes; it ranks the features based on their SHAP values and also tells which features are positively or negatively correlated with the outcome. A row of dots is present in front of each input on the beeswarm plot with the dots indicating the SHAP values for the particular input variable. The dots range in colour from blue (representing lower input values) to red (representing higher input values). In the summary plot, from top to bottom, the features are arranged in order of decreasing significance in predicting the outcome. Thus, the most influential variables are the ones present at the top and vice versa. Moreover, if there are more red dots on the lower side (negative side) of SHAP plot, it indicates an increase in variable values lead to a decrease in its corresponding SHAP value while the blue plots present on lower side of the plots shows that decreasing variable values causes a decrease in the SHAP values too. From Fig. 14, it can be seen that the feature age is present on the top indicating that it is the most crucial factor to predict the C-S of MK-based cement mortars. After age, W/B is highlighted as the second most essential variable followed by B/S ratio. However, the contribution aggregate diameter and MK/B ratio is the least in anticipation of the outcome. For age, it can be seen that most of the blue dots lie on the negative side on the SHAP values showing that for lower age values, the corresponding SHAP values are also lower and vice versa. It is also true for features MK/B and diameter in which the red dots lie on the positive side of the SHAP values. However, for W/B ratio, the blue dots lie on the positive side while red dots are lying on the negative side showing that increase in W/B ratio decreases its corresponding SHAP values. For B/S, the red dots are clustered near to the zero line while some blue dots lie on both sides of the SHAP values.

In addition to the SHAP global interpretation to know which variables are more significant in prediction of outcome, the SHAP local interpretation to get insight into how the algorithm generates individual predictions is also important. The most common ways to represent the SHAP local interpretation of a prediction is by using a graph known as force plot which helps to depict the local interpretation of a prediction in a concise and compact manner as shown in (Fig. 15a) for the predicted C-S value of 38.94 MPa. The centre point of the force plot indicates the base value, which is the average of all SHAP values from the predictions. From Fig. 15, it can be seen that the base value in our case is around 41.5 MPa. Each input feature which helps to predict the outcome is represented by an arrow in the force plot with the length and direction of the arrow indicating magnitude and the effect (positive or negative) on the output respectively. The colour coding of the arrows in force plot is done in the same way as in summary plot with blue indicating lower input values and red indicating higher input values. The contribution or significance of each variable is proportional to its size and the predicted value is located at the intersection of blue and red arrows as shown in (Fig. 15a). Notice that the blue and red arrows meet at 38.94 MPa which is the predicted C-S value of MK-based cement mortar. The arrow sizes for features age and W/B are the largest indicating their greater influence compared to other variables. The age value for this particular instance was 1 and MK/B ratio was 0 since they are represented by blue coloured arrows (indicating lower input values). However, dia, B/S, and W/B are represented by red colour since their values are on the higher side for this particular data instance. Thus, by means of this force plot, the user can get insight into the individual predictions knowing what input parameter values were used and what outcome did they yielded.

After carrying out SHAP local and global interpretations by means of beeswarm and force plots respectively, it is also beneficial to check the interdependence between the SHAP values of parameters with change in variable values of another input variable. This can be done by using SHAP partial dependence plots which are given in (Fig. 15b). The five plots in Fig. 15b are for the five input features considered in this study. The x-axis contains the input values of a feature, with the left y-axis displaying the corresponding SHAP values. To check the interdependency, the variable values of another parameter are given along the right-hand side of the plots along y-axis. First of all, it can be seen that as W/B ratio varies from 0.4 to 0.6, the SHAP values of age feature changes from −10 to 10. This shows that the W/B values can greatly impact the SHAP values of age feature. It is because these two features were classified as the most significant ones through the SHAP summary plot. Also, notice that increasing MK/B values from 0 to 30 causes SHAP values of diameter to 0.450 to almost 0.575 in a discontinuous manner. Moving on, the SHAP values of MK/B changes slightly from 12 to close to 20 when W/B is changed across its range of 0.4 to 0.6. Moreover, when diameter is varied from 1 to 4.5 mm, the W/B SHAP values change consistently from 0.450 to almost 0.60. Finally, the last sub-plot in (Fig. 15b) is between B/S and W/B from which it is evident that increasing W/B ratio from 0.45 to 0.55 causes the SHAP values of B/S to go from 2 to 4. This comparatively larger change in SHAP values of B/S with a minimal change in W/B is due to the reason that W/B and B/S are some of the most contributing factors to C-S of MK-based cement mortars according to the SHAP global interpretation. In addition, a comparison of the SHAP results with the existing literature demonstrates that the feature contributions identified using the SHAP method are consistent with the known behaviour of metakaolin-based materials. This theoretical validation ensures that the XGB model accounts for the most crucial elements influencing the strength of MK-based mortars, enhancing the credibility of its predictions. The experimental findings indicate, for instance, that the influence of metakaolin on the strength at later ages (7–28 days) was more significant than at the early ages which is consistent with results from SHAP analysis102. Similarly, it has been identified by previous studies regarding strength prediction of different cementitious materials that age and water-to-cement ratio are some of the most important parameters for predicting the output103,104. It is again in accordance with the SHAP results.

ICE analysis

The ICE plots between C-S of cement mortars having MK and the five input variables used in current study are given in (Fig. 16). The plots for five input features are labelled from (i) to (v) as shown in the figure. These plots are developed by keeping all inputs constant and varying only one input across its range. Then, the change in output values with varying input features values is plotted in the form of a curve known as ICE plot. These plots provide an interactive visual way to investigate the relationship of each input feature with the predicted output. Figure 16i the ICE plot for age and it can be seen that the predicted outcome (C-S) generally increased with increase in age. The C-S value showed a consistent increase from almost 20 MPa to more than 50 MPa when age of the specimen was varied from 1 to 90 days. This increase of almost 30 MPa in C-S value over the range of age explains why it was identified as the most significant input feature by the SHAP summary plot. In Fig. 16ii, the plot of MK/B is shown from which it can be seen that C-S slightly increases up to a particular point with increase in MK/B ratio, after which it decreases minimally but there is not a significant change in output value with change in MK/B ratio. It is because MK/B is the least contributing variable to C-S of MK-based cement mortar according to SHAP analysis. In particular, when MK/B ratio was changed from 0 to 15, C-S increased slightly from 39.5 MPa to 43 MPa, after which it dropped again to almost 41 MPa when MK/B further reached 30. Similarly, the relationship between C-S and B/S ratio is depicted by the curve in Fig. 16iii. It is evident from the plot that when B/S ratio was changed from 0.325 to 0.350, the C-S dropped from 52.5 MPa to less than 37.5 MPa. After that, the C-S remain unaffected by increase in B/S ratio up to 0.425. However, when B/S was increased beyond 0.425, the C-S increased slightly to almost 40.5 MPa from less than 37.5 MPa. The relationship between aggregate diameter and predicted outcome is given by Fig. 16iv from which it can be seen that the C-S varies across a narrow range of 37 MPa to 45 MPa when diameter values were changed from less than 1 to more than 5 mm. After a slight initial decrease, the C-S increases from 37 MPa to 45 MPa when diameter value was increased from 1 to 2 mm. After that, the C-S decreased to 41 MPa with diameter reaching 6 mm. This comparatively lesser deviation of C-S with change in diameter values is owed to lesser contribution of diameter in predicting C-S as identified previously. Finally, the effect of change in W/B on the C-S can be seen from Fig. 16v which shows that there is a decreasing relationship between the two variables. The C-S decreased from 57.5 MPa to a value of 37 MPa when W/B was changed from 0.35 to 0.60. This large change is C-S is due to the fact that the strength of cement mortars is dependent on the W/B ratio to a higher degree. It has also been previously identified in the SHAP summary plot where W/B ratio was the second most influential input to predict the outcome (C-S).

PDP analysis

PDP analysis is used to portray the relationship between a specified number of inputs and the output (prediction). This relationship can be linear, intricate, or monotonic etc. It was first introduced by Friedman and since then, has become a really popular method to check the global interpretation of ML models105. It can be used to check how changing a particular variable, or changing two variables simultaneously can affect the output. If the relationship between only one variable and output is investigated, the resulting plots will be known as 1-D PDP plots while if the simultaneous relationship between two inputs and outcome is being monitored, the PDP plots would be called 2-D plots. Since the individual relationship between inputs and outcome has already been covered by means of ICE analysis, this section will focus only on 2-D plots to depict the combined effect of two inputs on the predicted C-S of MK-based mortars.