Abstract

As an emerging healthcare technology, artificial intelligence (AI) health assistants have garnered significant attention. However, the acceptance and intention of ordinary users to adopt AI health assistants require further exploration. This study aims to identify factors influencing users’ intentions to use AI health assistants and enhance understanding of the acceptance mechanisms for this technology. We extended the unified theory of acceptance and use of technology (UTAUT) with two additional variables, perceived trust (PT) and perceived risk (PR). We collected 373 valid responses from ordinary Chinese users recruited online and analyzed the data using covariance-based structural equation modeling (CB-SEM). The results indicate that the original UTAUT structure is robust: performance expectancy (PE), effort expectancy (EE), and social influence (SI) significantly and positively affect behavioral intention (BI), while facilitating conditions (FC) do not show a significant impact. Additionally, perceived trust is closely related to performance expectancy, effort expectancy, and behavioral intention, and it negatively impacts perceived risk. Perceived risk, in turn, adversely affects behavioral intention. Our findings provide valuable practical insights for developers and operators of AI health assistants.

Introduction

An AI virtual assistant is an advanced software solution capable of interpreting a user’s natural language instructions and executing tasks or providing services accordingly1. These systems leverage artificial intelligence algorithms and integrate automatic speech recognition (ASR) with natural language understanding (NLU) technologies to fulfill their functions2. AI virtual assistants have been extensively incorporated into various smart devices, including smartphones, smart speakers, smart wearables, smart TVs, and smart cars. All these devices can respond to user commands articulated in natural language and perform related tasks or requests1. Previous studies indicate that approximately 85% of smartphones currently available are equipped with virtual assistant capabilities3. Furthermore, a total of 44% of users engage with virtual assistants across different devices such as Google Assistant, Cortana, Alexa, and Siri3. With the growing interest in AI virtual assistants and their increasing usage frequency4, the scope of their application is also expanding. They serve diverse roles in various contexts such as office administration support, after-sales assistance and social media marketing, as well as offering a wide range of services from meeting information briefings to online shopping2. This technology is making significant advancements in mental health care5, customer service enhancement6, personalized education initiatives7, vehicle transportation solutions8, and healthcare delivery improvements9.

Chatbots and virtual assistants powered by artificial intelligence are increasingly recognized as pivotal in the evolution of healthcare10. Their emergence is transforming the landscape of healthcare delivery and is being rapidly integrated into real-world applications11. In recent years, research focused on the development and evaluation of virtual health assistants has proliferated, encompassing a diverse array of application scenarios. For instance, there are robots designed to facilitate self-management of chronic pain12, medical chatbots that assist with self-diagnosis13, chatbots capable of predicting heart disease through intelligent voice recognition14, and robots aimed at promoting fertility awareness and pre-pregnancy health for women of childbearing age15. Additionally, there exist virtual assistants dedicated to physical activity and dietary management16, as well as Woebot—a mental health chatbot that provides support for adolescents dealing with depression and anxiety disorders17.

As a significant branch of artificial intelligence, AI health assistants are integral to personalized healthy lifestyle recommendation systems and fall within the domain of decision support18. Their primary function is to assist users and patients in adopting healthier lifestyles while supporting the management of chronic diseases associated with unhealthy habits through tailored interactions19. The application of AI health assistants in healthcare primarily focuses on health management and is anticipated to contribute to reductions in hospitalization rates, outpatient visits, and treatment requirements20. A patient’s overall health status is influenced by various factors including a nutritionally balanced diet, regular physical activity, effective emotional and psychological stress management, as well as preventive measures for disease treatment21,22. AI health assistants that utilize advanced algorithms can meticulously assess and categorize users’ health issues while providing comprehensive responses to specific inquiries23,24. Users may pose questions related to their health or seek information about diseases via voice or text communication. Leveraging natural language understanding and machine learning techniques enables AI health assistants to accurately interpret and analyze user commands25. In response to specific user requests, these systems can execute a variety of tasks such as offering personalized health advice, reminding users about timely medication intake or scheduling medical appointments26. Today, numerous patients and prospective patients can utilize AI health online platforms to proactively investigate their own health status or obtain information about diseases27. This development has the potential to mitigate some of the limitations associated with telemedicine, such as prolonged waiting times for a definitive response from specialists and the additional costs incurred when consulting a physician online28. 
AI health assistants not only contribute to the dissemination of fundamental health knowledge but also enhance early disease diagnosis, facilitate the design of personalized treatment plans, and improve follow-up efficiency20. Consequently, these advancements support individuals in maintaining optimal health.

Despite the numerous advantages that AI health assistants offer to contemporary healthcare systems, their extensive application and implementation are accompanied by several challenges29. These challenges include privacy protection, cybersecurity, data ownership and sharing, medical ethics, and the risk of system failure6,22,30,31,32. Ethical concerns are particularly pronounced given the nature of healthcare delivery. The utilization of AI technology may pose threats to patient safety and privacy22,32, potentially leading to a crisis of trust and various risks. Such issues could serve as significant barriers to user acceptance of AI health assistants33,34.

User acceptance refers to the behavioral intent or willingness of an individual to utilize, purchase, or experiment with a system or service35. It is essential for the successful promotion of any system36. Given the significant potential of AI health assistants, ensuring high user acceptance is critical. Low levels of acceptance may hinder the popularity of AI health assistants, result in wasted medical resources and an oversupply of AI devices, and even stifle technological innovation, ultimately impacting patient interests35,37. Technology acceptance serves as a key indicator of users’ willingness to adopt AI health assistants38. Numerous studies have examined the acceptance of AI systems in the medical and health fields35. However, most of these investigations have primarily focused on medical professionals, technical aspects such as algorithm explainability, or doctor-patient interaction scenarios39,40,41,42. While these studies have made significant contributions to this area, research concerning the acceptance of AI health assistants by the general public in health consultation contexts remains limited. This gap in perspective may hinder researchers’ understanding of the psychological mechanisms that non-professional users experience when interacting with AI health assistants. Consequently, this study delineates its theoretical boundaries within the context of health consultation and decision-making scenarios for ordinary users, aiming to explore their acceptance and usage behaviors regarding AI health assistants.

Users’ perceived trust is fundamentally influenced by their assessment of the system’s effectiveness, integrity, and capability43. In the context of adopting AI health assistants, users’ perceived trust plays a pivotal role: it not only influences whether users are willing to engage with the system for the first time but also determines their ongoing reliance on and utilization of the system for health management purposes29. Conversely, when users perceive a higher level of risk, their trust in the system diminishes correspondingly33. This apprehension and uncertainty regarding potential risks directly impact users’ evaluations of trust in AI assistants44, which subsequently affects their behavioral intentions toward usage33. Therefore, this study aims to elucidate the behavioral intentions associated with user adoption of AI health assistants by integrating perceptions of both trust and risk.

In general, the objectives of this study are as follows: (1) to examine users’ acceptance of AI health assistants and their intention to utilize these technologies; (2) to analyze how perceived trust and perceived risk influence users’ decisions regarding the use of AI health assistants; and (3) to explore the interrelationships among these factors. To achieve these aims, we extend the UTAUT with two core variables, perceived trust and perceived risk, thereby developing a new research model for predicting individuals’ behavioral intentions when engaging with an AI health virtual assistant. The study is organized as follows: the first section serves as an introduction, outlining the background and objectives of the research; the second section presents a literature review that establishes the theoretical framework; the third section elaborates on the extended UTAUT model and related hypotheses in detail; the fourth section summarizes the research methodologies employed; the fifth section reports on the results obtained from data analysis; the sixth section discusses the implications of the research findings and interprets these results; finally, the seventh section provides a conclusion that encapsulates our findings, highlights limitations of the study, and suggests directions for future research. Through this structured approach, this study aims to gain a comprehensive understanding of the key factors and underlying mechanisms influencing users’ acceptance of AI health assistants.

Related research

UTAUT: the unified theory of acceptance and use of technology

The acceptance and utilization of information systems (IS) and information technology (IT) innovations have emerged as pivotal issues in both academic research and practical applications45. Understanding the factors that drive users to accept or reject new technologies has become an essential task throughout any IS/IT lifecycle46,47. To investigate this subject, prior research has introduced a range of theoretical models, including the Theory of Reasoned Action (TRA)48, the Theory of Planned Behavior (TPB)49, the Technology Acceptance Model (TAM)36, and Innovation Diffusion Theory (IDT)50, among others. Venkatesh et al.51 proposed the UTAUT, which aims to establish a comprehensive framework for explaining and predicting technology acceptance behaviors52. This model was developed by integrating eight prominent theories and models: the TRA, the TPB, the TAM, a combined TPB/TAM, the Motivational Model, the Model of PC Utilization, Social Cognitive Theory (SCT) and the IDT53. By synthesizing key concepts from these influential frameworks, UTAUT offers a more holistic approach to analyzing technology acceptance dynamics54.

The UTAUT model is robust as it identifies four key constructs—performance expectancy, effort expectancy, social influence, and facilitating conditions—that collectively impact behavioral intention and actual behavior. Compared to earlier models of behavioral intention variables, the UTAUT framework demonstrates greater explanatory power (Venkatesh et al., 2003). Specifically, UTAUT posits that these four core constructs (PE, EE, SI, FC) serve as direct determinants of both behavioral intention and ultimate behavior. In this context, PE refers to the extent to which an individual believes that utilizing the system will enhance their job performance. EE denotes the perceived ease associated with using the system. SI reflects how important an individual perceives others’ opinions regarding their use of the new system. Lastly, FC indicates the degree to which individuals believe that a supportive organizational and technical infrastructure exists for effective system utilization (Venkatesh et al., 2003). Furthermore, UTAUT takes into account moderating variables such as gender, age, voluntariness, and experience in its analysis.

As a widely recognized theoretical model, the extensive application of UTAUT across various fields demonstrates its significant applicability. It has been employed to investigate numerous technology acceptance scenarios, including digital libraries55, the Internet of Things56, artificial intelligence40,57, e-health technologies58, e-government initiatives59, bike-sharing systems60, and educational chatbots61. These studies collectively affirm that UTAUT can effectively elucidate and predict users’ acceptance and willingness to adopt new technologies, particularly in contexts involving technological interaction53. Furthermore, by integrating domain-specific variables or extending the model itself, UTAUT can further enhance its explanatory power to meet the diverse needs of different technologies and environments35. Consequently, this study aims to utilize UTAUT as a theoretical framework to explore the key factors influencing user acceptance of AI health assistants while also verifying its applicability and effectiveness within the realm of AI-based healthcare.

Research on the acceptance of AI systems

Acceptance research on AI systems has focused on customer service62, education7, consumer goods63, and other areas. Previous studies have employed several models to assess user acceptance of AI systems, including the TAM, the UTAUT, and the artificially intelligent device use acceptance (AIDUA) model64. The AIDUA framework encompasses three stages of acceptance generation: primary appraisal, secondary appraisal and outcome stage. It also identifies six antecedents: social influence, hedonic motivation, anthropomorphism, performance expectancy, effort expectancy and emotion. Gursoy et al.64 established that both social influence and hedonic motivation exhibit a positive correlation with performance expectancy within the context of AI devices. Furthermore, anthropomorphism was found to be positively correlated with effort expectancy. Additionally, Li65 explored college students’ actual use of AI systems by incorporating attitude and learning motivation into the original TAM constructs (perceived usefulness, perceived ease of use, behavioral intention, and actual usage). The study found that the perceived usefulness and ease of use of AI systems positively influence students’ attitudes, behavioral intentions, and actual usage. However, college students’ attitudes toward AI systems have minimal impact on their learning motivation to achieve goals and subjective norms. Xiong et al.33 subsequently extended the UTAUT model by incorporating users’ perceptions of trust and risk to better understand user acceptance of AI virtual assistants. Their findings indicated that performance expectancy, effort expectancy, social influence, and facilitating conditions were positively correlated with the behavioral intention to utilize an AI virtual assistant. Furthermore, trust exhibited a positive effect while perceived risk had a negative effect on both attitudes and behavioral intentions regarding the use of AI virtual assistants.

In the field of healthcare, research on the acceptance of AI systems has predominantly concentrated on medical professionals. Alhashmi et al.39 conducted a survey among employees in the health and IT sectors, revealing that management, organizational structure, operational processes, and IT infrastructure significantly enhance both the perceived ease of use and perceived usefulness of AI initiatives. Lin et al.40 examined the attitudes, intentions, and associated influencing factors regarding AI-applied learning among medical staff. The study identified supervisor norms and perceived ease of use as critical determinants of behavioral intent. Similarly, So et al.41 reached a comparable conclusion that there exists a significant relationship between perceived usefulness and subjective norms concerning AI acceptance; these two factors directly influence medical personnel’s attitudes toward embracing AI technologies. Fan et al.66 employed the UTAUT framework to investigate healthcare professionals’ adoption of AI-based Medical Diagnostic Support Systems (AIMDSS). The findings indicated that both initial trust and performance expectancy exert substantial effects on behavioral intent when utilizing AIMDSS; however, effort expectancy and social influence did not demonstrate significant impacts on behavioral intention. In addition, a more comprehensive overview of this research is provided in Table 1. This table presents a partial summary of the findings from studies examining the acceptance of AI in healthcare, including details such as researcher, object, theoretical framework, methodology, country, and key outcomes.

In addition, perceived trust and perceived risk have consistently been significant factors in acceptance studies of AI systems. Numerous prior studies have regarded these elements as extended constructs4,68,70. Research indicates that trust, privacy concerns, and risk expectations collectively predict the intention, willingness, and usage behavior of AI across various industries35. Users express apprehension regarding how AI may exploit their financial, health-related, and other personal information71, as sharing such data raises substantial privacy concerns72. Both Liu and Tao73 and Guo et al.74 found that a loss of privacy adversely affects trust levels; thus, consumers with heightened privacy concerns are less inclined to place their trust in these services. Furthermore, Prakash and Das68 confirmed that users’ perceptions of threats and risks significantly influence their resistance to the adoption of AI systems. Choung et al.75 expanded upon the TAM by demonstrating that trust positively predicts perceived usefulness. Liu and Tao73 also established that trust directly influences behavioral intentions toward utilizing smart healthcare services. In Miltgen et al.’s study76, trust emerged as the strongest predictor of behavioral intent when employing AI for iris scanning applications. Consequently, confidence in both AI technologies and their providers serves as a crucial driver for the acceptance of AI solutions35. However, it is worth noting that previous acceptance studies have often analyzed perceived trust and perceived risk as independent variables. For instance, researchers have separately examined the inhibitory effect of privacy risk on adoption intention or discussed the promoting function of technical trust in isolation. Research exploring the interaction between these two factors within the context of AI health assistants remains limited. Therefore, this study aims to investigate the influence of perceived trust on perceived risk. Furthermore, it is essential to confirm how perceived trust affects performance expectancy and effort expectancy in AI health assistants.

Proposed models and assumptions

Given the strong comprehensiveness, excellent predictive power, and high scalability of the UTAUT45, along with its significant applicability to complex technologies such as artificial intelligence33,57, this study aims to expand upon and conduct an in-depth exploration based on this model. The literature review indicates that UTAUT integrates several classical technology acceptance theories (including the TAM, the IDT, the TPB, etc.), effectively encompassing the key factors influencing users’ technology acceptance. In particular, for the emerging technology of AI health assistants, UTAUT can elucidate user behavior from multiple dimensions, such as performance expectancy, effort expectancy, and social influence. Most prior studies examining the acceptance of AI systems and their products have employed the UTAUT40,57,77, further validating its applicability and effectiveness within this domain. Additionally, the flexibility and scalability of the UTAUT enable researchers to incorporate new variables tailored to specific research needs in order to better align with distinct research contexts.

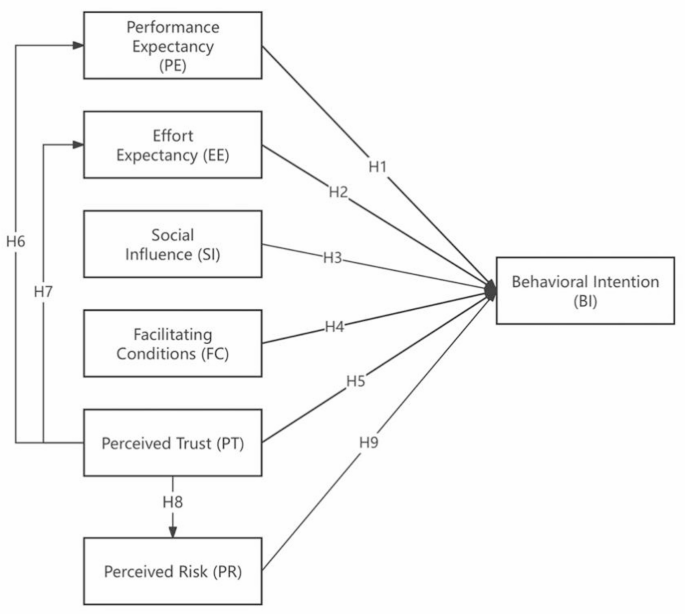

This research model is based on the UTAUT framework, which encompasses performance expectancy, effort expectancy, social influence, and facilitating conditions. To enhance the UTAUT model, two additional constructs—perceived trust and perceived risk—have been incorporated. User acceptance of the AI health assistant was assessed through behavioral intention. In this context, behavioral intention serves as the dependent variable, while performance expectancy, effort expectancy, social influence, facilitating conditions, perceived trust, and perceived risk are treated as independent variables. The proposed research model along with its hypotheses is illustrated in Fig. 1.

Performance expectancy

Performance expectancy is defined as “the degree to which an individual believes that the use of the system will contribute to his or her improved job performance”51. This concept also reflects the extent to which the application of technology can benefit users in performing specific activities52. Numerous studies have indicated that within the UTAUT framework, performance expectancy positively influences individuals’ willingness to adopt technology45,51,78. Previous studies on AI systems have also confirmed the significant impact of performance expectancy on the intention to use79,80. In particular, within the context of AI health assistants, performance expectancy pertains to the capability of AI health systems to deliver services that enhance users’ task execution efficiency. Consequently, this study posits that users who expect AI health assistants to give them more effective access to health information and to improve their consultation efficiency will be more willing to utilize the application. Based on this premise, we propose the following hypothesis:

H1

Performance expectancy positively affects behavioral intentions to use the AI health assistant.

Effort expectancy

Effort expectancy refers to the ease of use associated with a particular system51 and is also linked to how effortlessly a user can engage with the technology52. Effort expectancy has demonstrated positive effects in studies concerning AI acceptance33,40,81. Additionally, some research indicates that while the impact of effort expectancy may be modest, it remains positive82. Consequently, within the context of AI health assistants, we hypothesize that effort expectancy will exert a favorable influence on behavioral intention.

H2

Effort expectancy positively affects behavioral intentions to use the AI health assistant.

Social influence

Social influence is defined as an individual’s perception of the expectations others have regarding their adoption of a particular technology, as well as the degree to which these external opinions affect the individual’s acceptance and utilization of that technology51. Prior research has demonstrated that users are more inclined to engage with a system when it is endorsed by significant individuals in their social circles51,83. In other words, stronger social influence correlates with a heightened willingness to adopt new technologies. Previous studies have shown that this is also the case in the acceptance of AI systems84,85. Consequently, this study hypothesizes that users’ perceptions of social influence will positively impact their willingness to utilize the AI health assistant.

H3

Social influence positively affects behavioral intentions to use the AI health assistant.

Facilitating conditions

Facilitating conditions refer to the extent to which individuals perceive that their organization and technical infrastructure can support the utilization of a technology51. This concept encompasses external factors and objective circumstances within the user’s environment that influence the ease of implementing a behavior86. Facilitating conditions comprise two dimensions: technology-facilitated conditions and resource-facilitated conditions87. In this study, we define facilitating conditions as encompassing both computer facilities and technical support available during the use of AI health systems. However, it is worth noting that, in the original UTAUT proposed by Venkatesh et al.51, FC is defined as a variable that directly affects actual use (AU) rather than behavioral intention. The original theory holds that even with a strong intention to use, users’ actual use can still be hindered if necessary resources or technical support (such as device compatibility and knowledge reserves) are lacking. Therefore, FC has a direct effect on AU51. In the context of this study, however, the adoption of AI technology in China’s healthcare is currently in the early promotion stage. We therefore expect that users’ perception of the availability of technical support may have a preemptive impact on their adoption decisions. For instance, when users realize they can obtain official training resources or device compatibility guarantees, this may directly prompt them to form a usage intention rather than only exerting a positive effect at the actual use stage. Additionally, previous studies on AI systems have also shown a significant positive correlation between FC and BI80,88,89. Based on this understanding, we propose the following hypothesis:

H4

Facilitating conditions positively affect behavioral intentions to use the AI health assistant.

Perceived trust

Perceived trust is defined as a user’s belief that an agent can assist them in achieving their goals under uncertain circumstances90. Technological trust plays a crucial role in predicting user behavioral intentions across various online systems, including mobile payments91, information systems92, online shopping93, and e-commerce94. Most studies indicate that trust has a significant positive effect on performance expectancy95,96, the ease of use of the system97, and the intention to use the technology97. However, some research on AI systems has suggested that the relationship between trust and performance expectancy may not always be significant33. This indicates that further exploration is needed regarding the interplay between trust and these variables within the context of AI health assistants. Moreover, users may encounter heightened psychological risks when confronted with new technologies, manifesting as fear, hesitation, and other negative emotions43. Consequently, in the realm of AI assistants, trust is closely associated with perceived risk. Trust can enhance users’ confidence in technology while alleviating their concerns about adopting new innovations97. Previous studies have confirmed a negative correlation between trust and perceived risk within Internet technology contexts98,99. On this basis, we propose the following hypotheses:

H5

Perceived trust positively affects behavioral intent to use the AI health assistant.

H6

Perceived trust positively affects the performance expectancy of using the AI health assistant.

H7

Perceived trust positively affects the effort expectancy of using the AI health assistant.

H8

Perceived trust negatively affects the perceived risk of using the AI health assistant.

Perceived risk

Perceived risk is defined as the uncertainty and expectation of negative outcomes that may arise when the intended goal is not achieved100. In services powered by AI technology, perceived risk encompasses various dimensions, including personal risk, psychological risk, economic risk, privacy risk, and technical risk—each associated with potential loss97,101. This study specifically examines the perceived risks related to AI health systems with a focus on privacy and security. Perceived risk can evoke negative emotions and subsequently influence users’ behavioral intentions102, a phenomenon particularly pronounced in the context of healthcare systems103. When individuals experience discomfort due to uncertainty and ambiguity, they are more inclined to refrain from using such systems in order to avoid confronting the aforementioned risks104. Based on this analysis, we propose the following hypothesis:

H9

Perceived risk negatively affects behavioral intent to use the AI health assistant.
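
Taken together, H1 to H9 imply the structural relations sketched below. This is a notational summary rather than the published model specification: the coefficient symbols β1 to β9 (indexed by hypothesis number) and the disturbance terms ζ are assumptions introduced here for illustration, and the measurement model that links questionnaire items to each construct is omitted.

```latex
\begin{aligned}
\mathrm{PE} &= \beta_{6}\,\mathrm{PT} + \zeta_{\mathrm{PE}} && \text{(H6)} \\
\mathrm{EE} &= \beta_{7}\,\mathrm{PT} + \zeta_{\mathrm{EE}} && \text{(H7)} \\
\mathrm{PR} &= \beta_{8}\,\mathrm{PT} + \zeta_{\mathrm{PR}} && \text{(H8)} \\
\mathrm{BI} &= \beta_{1}\,\mathrm{PE} + \beta_{2}\,\mathrm{EE} + \beta_{3}\,\mathrm{SI}
             + \beta_{4}\,\mathrm{FC} + \beta_{5}\,\mathrm{PT} + \beta_{9}\,\mathrm{PR}
             + \zeta_{\mathrm{BI}} && \text{(H1--H5, H9)}
\end{aligned}
```

Under the hypotheses, β1 through β7 are expected to be positive, whereas β8 and β9 are expected to be negative.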

Method design

Materials

The IFLY Healthcare application (app) served as the experimental material for this study. Developed by IFlytek, the app is an AI-powered health management tool designed to function as a personal AI health assistant for users. This app integrates various features, including disease self-examination, report interpretation, drug inquiries, medical information searches, and health file management. It primarily targets ordinary Chinese users and offers comprehensive health consultation services. Since its official launch in October 2023, the app has been downloaded over 12 million times and boasts a positive rating of 98.8% along with an active recommendation rate of 42%.

Questionnaire development

The questionnaire is structured into three distinct sections. The first section gathers demographic information, including variables such as gender, age, education level, and experience with the IFLY Healthcare app. The second section comprises items designed to assess the four constructs of the UTAUT, specifically performance expectancy, effort expectancy, social influence, and facilitating conditions. Additionally, this section includes items that evaluate BI regarding the use of AI health assistants. The measurement items for each construct were adapted from prior research demonstrating their reliability and validity51,52. All items are formulated to gauge respondents’ perceptions concerning specific statements, such as “I think the app can provide useful health consultation services.” The third section encompasses items aimed at measuring participants’ perceived trust and perceived risk associated with using this technology. All measures utilized in this section were adapted from previous studies33. Perceived trust is assessed across four dimensions: reliability, accuracy, worthiness, and overall trust. Perceived risk is evaluated in terms of privacy, information security, and overall risk. All items were measured using a seven-point Likert scale (where “1” indicates strong disagreement and “7” indicates strong agreement). The supplementary material presents the structure, items, and sources of the measurements utilized in this study. In structural equation modeling (SEM), it is recommended that each construct be measured by no fewer than three items105. This study designed a total of 23 items, with each construct containing between 3 and 4 items. This approach was adopted to mitigate participant fatigue and boredom resulting from an excessive number of items, factors that could adversely affect response quality and completion rates106. Consequently, we aimed to simplify the questionnaire as much as possible while preserving its clarity.

Pilot study

After determining the structure and candidate items, we invited five experts with backgrounds in AI and healthcare research to discuss the questionnaire and clarify the objectives and scope of the study. To ensure the face validity of the survey instrument, we incorporated their suggestions and comments into a structured questionnaire, which was subsequently confirmed as a pilot version. The experts indicated that there were no apparent issues with either the construction or presentation of the items. However, they recommended that participants watch a tutorial video on using the IFLY Healthcare app prior to completing the questionnaire. Following this recommendation, participants were asked to utilize a specific feature within the app before filling out an evaluation questionnaire. Consequently, we refined both our experimental procedures and protocols. Pilot studies are essential for verifying item comprehension and experimental methodologies prior to data collection. We randomly selected 35 participants who had experience using AI systems on social platforms such as Weibo and WeChat. All participants completed the survey; most reported that the items were easy to understand, and completion took between one and five minutes. Four participants expressed uncertainty regarding what was meant by “I have the resources necessary to use the app.” In response to this feedback, we revised certain wording to enhance clarity by adding explanations related to hardware and software.

Participants

We recruited 400 Chinese users online. Prior to conducting the survey, all participants provided informed consent by signing informed consent forms. A total of 400 responses were collected. The data underwent a screening process, resulting in the removal of 27 samples deemed invalid. The criteria for screening included completion times of less than one minute (n = 9), response repetition rates exceeding 85% (n = 15), missing data (n = 0), and outliers identified through SPSS analysis (n = 3). Consequently, the total number of valid samples was reduced to 373. This sample size exceeds ten times the number of items, thereby ensuring reliable results from CB-SEM analysis107,108. The demographic results indicate that among the participants there were 114 men and 259 women (see Table 2). The majority of participants were aged between 19 and 39 years old (80.7%) and held a bachelor’s degree (82.04%). Additionally, a significant portion had experience using the IFLY Healthcare app (62.73%).
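The screening rules and the sample-size rule of thumb described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' procedure: the definition of "repetition rate" (the share of items carrying the respondent's most frequent answer), the field names `seconds` and `answers`, and the example responses are all assumptions, and the SPSS outlier step is not reproduced.

```python
from collections import Counter

N_ITEMS = 23            # questionnaire items reported in the paper
MIN_SECONDS = 60        # completion times under one minute are rejected
MAX_REPEAT_RATE = 0.85  # repetition rates above 85% are rejected


def repetition_rate(answers):
    """Share of items carrying the respondent's most frequent answer (assumed definition)."""
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / len(answers)


def is_valid(response):
    """Apply the time, missing-data, and repetition screens to one response."""
    answers = response["answers"]
    if response["seconds"] < MIN_SECONDS:
        return False
    if len(answers) != N_ITEMS or any(a is None for a in answers):
        return False
    return repetition_rate(answers) <= MAX_REPEAT_RATE


def meets_ten_per_item_rule(n_valid, n_items=N_ITEMS):
    """Rule of thumb cited in the text: at least ten respondents per item."""
    return n_valid >= 10 * n_items


# Hypothetical responses, invented for illustration only.
responses = [
    {"seconds": 45, "answers": [4] * 23},                             # too fast
    {"seconds": 180, "answers": [7] * 22 + [6]},                      # 22 of 23 answers identical
    {"seconds": 200, "answers": [1, 2, 3, 4, 5, 6, 7] * 3 + [4, 5]},  # kept
]
kept = [r for r in responses if is_valid(r)]
print(len(kept))                     # 1
print(meets_ten_per_item_rule(373))  # True, since 373 >= 10 * 23 = 230
```

With 23 items, the ten-per-item rule requires at least 230 valid responses, so the 373 retained responses clear it comfortably.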

Procedure

1. Researchers disseminated recruitment advertisements via online social platforms, including Weibo, WeChat, Xiaohongshu, and relevant forums. Participants who expressed interest reviewed the experiment’s introduction and signed an informed consent form.

2. Upon signing the consent form, participants accessed the introduction page by clicking on a link or scanning a QR code. They then read the experimental descriptions and viewed a 2-minute tutorial video demonstrating how to use the IFLY Healthcare app.

3. Participants were instructed to install and access the IFLY Healthcare app. After completing their health management profile with basic information, they engaged in at least two back-and-forth conversations with the AI health assistant for a maximum duration of 10 min.

4. Following either the completion of these interactions or after 10 min had elapsed, participants submitted screenshots to the lab assistant for verification. Once confirmed, participants clicked on a link to complete an online questionnaire.

5. After receiving the submitted questionnaires, the lab assistant verified whether any data was missing and issued a payment of 5 yuan upon confirmation that all data was complete.

Results

Measurement evaluation

SEM offers a versatile approach for constructing models based on latent variables, which are connected to observable variables through measurement models109,110. This methodology facilitates the analysis of correlations among underlying variables while accounting for measurement errors, thereby enabling the examination of relationships between mental constructs111. Two widely utilized SEM methods are Covariance-Based SEM and Partial Least Squares SEM. While both methodologies are effective in developing and analyzing structural relationships, CB-SEM imposes stricter requirements on data quality compared to PLS-SEM, which is more accommodating112. CB-SEM rigorously tests the theoretical validity of complex path relationships among multiple variables by assessing the fit between the model’s covariance matrix and the sample data. Furthermore, CB-SEM’s precise estimation capabilities for factor loadings and measurement errors facilitate an accurate evaluation of construct validity113. Research also suggests that CB-SEM models are particularly well-suited for factor-based frameworks, whereas composite-based models yield superior results within PLS-SEM contexts112. Consequently, this study adopted CB-SEM for its analytical framework. In this study, two software packages were employed for data analysis: the Statistical Package for the Social Sciences (SPSS) and the Analysis of Moment Structures (AMOS). Prior to analysis, sample cleaning and screening were conducted to ensure that all data was complete with no missing values and that any invalid data points were removed. It is essential to assess the normality of the data before proceeding with further analyses. In this research, the Jarque-Bera test (which evaluates skewness and kurtosis) was utilized to determine whether the dataset followed a normal distribution. According to Tabachnick et al.114, a normal range for skewness-kurtosis values is considered to be less than 2.58. 
Following this guideline, all items in the dataset had skewness and kurtosis values below 2.58 and were therefore treated as normally distributed.
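As a rough illustration of the normality screen, the sketch below computes skewness, excess kurtosis, and the Jarque-Bera statistic for one item using only the Python standard library. It assumes the 2.58 cut-off is applied to the absolute skewness and kurtosis values, as the text states; the paper does not say whether raw or standardized values were used. The function names and the example item are hypothetical.

```python
THRESHOLD = 2.58  # cut-off quoted in the text


def _central_moment(values, order):
    """Population central moment of the given order."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** order for v in values) / len(values)


def skewness(values):
    """Third standardized moment (undefined for constant items, where variance is 0)."""
    return _central_moment(values, 3) / _central_moment(values, 2) ** 1.5


def excess_kurtosis(values):
    """Fourth standardized moment minus 3, so a normal distribution scores 0."""
    return _central_moment(values, 4) / _central_moment(values, 2) ** 2 - 3.0


def jarque_bera(values):
    """Jarque-Bera statistic: n/6 * (S^2 + K^2/4), with S skewness and K excess kurtosis."""
    s, k = skewness(values), excess_kurtosis(values)
    return len(values) / 6.0 * (s ** 2 + k ** 2 / 4.0)


def within_threshold(values, threshold=THRESHOLD):
    """True when both |skewness| and |excess kurtosis| fall below the cut-off."""
    return abs(skewness(values)) < threshold and abs(excess_kurtosis(values)) < threshold


# Hypothetical item: one response at each point of the seven-point scale.
item = [1, 2, 3, 4, 5, 6, 7]
print(skewness(item))          # 0.0 (symmetric)
print(excess_kurtosis(item))   # -1.25 (flatter than a normal distribution)
print(within_threshold(item))  # True
```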

We subsequently evaluated the convergent validity, discriminant validity, and model fit of the proposed structure. According to established guidelines, a factor loading of 0.70 or higher indicates good indicator reliability; Composite Reliability (CR) and Cronbach’s alpha values exceeding 0.70 signify adequate internal consistency reliability; an Average Variance Extracted (AVE) value of 0.50 or greater indicates sufficient convergent validity; and the square root of each AVE should surpass the corresponding inter-construct correlations to demonstrate adequate discriminant validity107,115,116. As presented in Table 3, Cronbach’s alpha ranges from 0.838 to 0.897, with all values exceeding 0.8. Both CR and AVE meet the recommended thresholds of 0.7 and 0.5, respectively, for all constructs, and the standardized factor loadings of all measurement items exceed 0.7, indicating good indicator reliability. Furthermore, as illustrated in Table 4, the square root of the AVE for each construct is greater than its estimated correlation with other constructs. This finding suggests that each construct is more closely related to its own measurements than to those of other constructs in this study, which supports discriminant validity. In addition, the heterotrait-monotrait (HTMT) ratio of correlations supplements and reconfirms the discriminant validity. The results in Table 5 show that all HTMT values between constructs are less than 0.90, indicating good discriminant validity117.
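For readers unfamiliar with these criteria, the sketch below shows how CR, AVE, and the square-root-of-AVE comparison are commonly computed from standardized loadings. It uses the standard textbook formulas, CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ² / n, which we assume match the AMOS-based calculations; the loadings and the correlation are hypothetical values, not those of Tables 3 and 4.

```python
import math


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: squared sum of loadings over itself plus the summed error variances."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_variance)


def fornell_larcker_ok(ave_by_construct, correlations):
    """True when every inter-construct correlation is below both constructs' sqrt(AVE)."""
    for (a, b), r in correlations.items():
        limit = min(math.sqrt(ave_by_construct[a]), math.sqrt(ave_by_construct[b]))
        if abs(r) >= limit:
            return False
    return True


# Hypothetical loadings and correlation, for illustration only.
loadings = {"PE": [0.80, 0.80, 0.80], "EE": [0.75, 0.85, 0.80, 0.78]}
ave = {name: average_variance_extracted(ls) for name, ls in loadings.items()}

print(round(ave["PE"], 3))                             # 0.64
print(round(composite_reliability(loadings["PE"]), 3)) # 0.842
print(fornell_larcker_ok(ave, {("PE", "EE"): 0.62}))   # True
```

In this example both constructs clear the 0.50 AVE and 0.70 CR thresholds, and the assumed correlation of 0.62 stays below the smaller square root of AVE (about 0.80).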

The fit indices encompass several key metrics, including χ2/df, Root Mean Square Error of Approximation (RMSEA), Standardized Root Mean Square Residual (SRMR), Tucker-Lewis Index (TLI), Normed Fit Index (NFI), Non-Normed Fit Index (NNFI), Comparative Fit Index (CFI), Goodness of Fit Index (GFI), and Adjusted Goodness of Fit Index (AGFI). According to established criteria, a model structure is deemed acceptable when χ2/df falls between 1 and 3, RMSEA and SRMR are below 0.08, and TLI, NFI, NNFI, AGFI, CFI, and GFI all exceed 0.90 107. The model presented in this study demonstrated a good fit based on these fit index estimates, as shown in Table 6.
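The acceptance criteria listed above translate directly into a small checker. The sketch below is illustrative only: the dictionary keys are hypothetical names, the χ2/df bounds are treated as inclusive by assumption, and the example values are invented rather than taken from Table 6.

```python
def check_fit(indices):
    """Return the names of the fit indices that miss the criteria stated in the text."""
    failures = []
    for name, value in indices.items():
        if name == "chi2_df":
            ok = 1 <= value <= 3          # chi-square / df between 1 and 3
        elif name in ("RMSEA", "SRMR"):
            ok = value < 0.08             # error-type indices below 0.08
        elif name in ("TLI", "NFI", "NNFI", "AGFI", "CFI", "GFI"):
            ok = value > 0.90             # incremental and absolute fit above 0.90
        else:
            raise KeyError(f"unknown fit index: {name}")
        if not ok:
            failures.append(name)
    return failures


# Hypothetical values for illustration; these are NOT the Table 6 results.
example = {
    "chi2_df": 2.1, "RMSEA": 0.055, "SRMR": 0.041,
    "TLI": 0.95, "NFI": 0.93, "NNFI": 0.95,
    "AGFI": 0.91, "CFI": 0.96, "GFI": 0.92,
}
print(check_fit(example))                     # []
print(check_fit({**example, "RMSEA": 0.09}))  # ['RMSEA']
```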

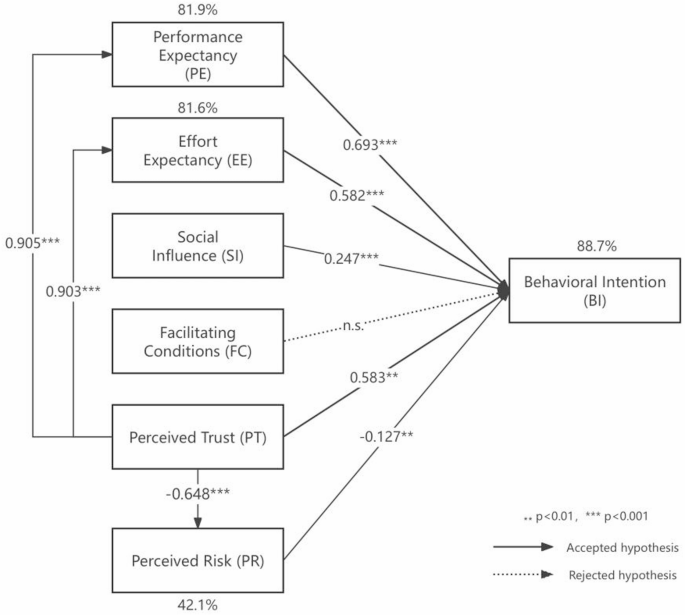

Path analysis and hypothesis testing

The path analysis supported eight of the nine hypotheses; only H4 was rejected. As illustrated in Table 7 and Fig. 2, the relationships among PE, EE, SI, and BI in the original UTAUT remain significant; specifically, H1, H2, and H3 are all supported. PE, EE, and SI demonstrated significant positive effects on BI, with coefficients of β = 0.693 (p < 0.001), β = 0.582 (p < 0.001), and β = 0.247 (p < 0.001), respectively. However, FC did not have a significant effect on BI (β = 0.01, p > 0.05), leading to the rejection of H4. Furthermore, PT significantly positively influenced BI as well as both PE and EE, with coefficients of β = 0.583 (p < 0.01), β = 0.905 (p < 0.001), and β = 0.903 (p < 0.001), respectively. Conversely, PT negatively affected PR with a coefficient of β = −0.648 (p < 0.001); thus, hypotheses H5, H6, H7, and H8 are accepted. PR exhibited a significant negative impact on BI with a coefficient of β = −0.127 (p < 0.01); therefore, H9 is supported. The combined influence of PE, EE, SI, PT, and PR accounted for 88.7% of the variance in BI. Moreover, PT explained 81.9% of the variance in PE, 81.6% in EE, and 42.1% in PR.
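As a worked illustration, the path from PT through PR to BI can be read as an indirect effect under the usual product-of-coefficients convention. This figure is our own arithmetic from the two standardized coefficients reported above; the paper does not report it, and no significance test (for example, a bootstrap) is implied.

```latex
\beta_{\mathrm{PT} \to \mathrm{PR} \to \mathrm{BI}}
  = \beta_{\mathrm{PT} \to \mathrm{PR}} \times \beta_{\mathrm{PR} \to \mathrm{BI}}
  = (-0.648) \times (-0.127)
  \approx 0.082
```

Because both paths are negative, their product is positive: under this reading, higher trust is associated with a small additional increase in behavioral intention through reduced perceived risk.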

Discussion

This study extends the two constructs of perceived trust and perceived risk based on the UTAUT to investigate ordinary users’ behavioral intentions towards AI health assistants. The findings confirm the relationship between the original UTAUT constructs—namely, performance expectancy, effort expectancy, social influence, facilitating conditions, and behavioral intentions—and the two extended factors. Overall, the foundational structure of UTAUT demonstrates considerable robustness; specifically, performance expectancy, effort expectancy, and social influence exert positive and significant effects on users’ behavioral intention to utilize this technology. However, the impact of facilitating conditions is not statistically significant. Furthermore, perceived trust is found to be closely associated with performance expectancy, effort expectancy, and usage intention while exhibiting a significant negative effect on perceived risk. Conversely, perceived risk negatively influences usage behavior intention. Path analysis results indicate that eight hypotheses were supported while one hypothesis was rejected.

Performance expectancy and effort expectancy

The results indicated that PE and EE significantly and positively influenced BI in the context of AI health assistants, with coefficients β = 0.693 (p < 0.001) for PE and β = 0.582 (p < 0.001) for EE; notably, PE exerted a more substantial impact on BI than EE. This suggests that higher user expectations regarding the performance achievable through AI health assistants correlate with an increased likelihood of intending to utilize the system. Similarly, lower user expectations concerning the effort required to engage with the system also enhance their intention to use it. These findings align with the majority of prior research, including studies on artificial intelligence-based clinical diagnostic decision support systems68, artificial intelligence virtual assistants33, and service delivery artificial intelligence systems64. However, they stand in contrast to the findings related to organizational decision-making artificial intelligence systems118. Specifically, when users perceive that their health management objectives—such as enhancing health knowledge, better managing diseases, or improving lifestyle—can be effectively attained through the utilization of AI health assistants, they are likely to exhibit a greater willingness to experiment with and persist in using these systems. This positive performance expectancy may bolster users’ confidence in viewing engagement with AI health assistants as a worthwhile investment119. Furthermore, if users perceive that utilizing an AI health assistant will not require excessive time and energy, or if the system features a user-friendly interface that facilitates ease of operation—thereby alleviating the burden during usage—this may further motivate them to engage with the system. A lower anticipated effort implies that users believe they can derive benefits without expending significant resources (such as time and energy), which in turn enhances their willingness to adopt it120.

Furthermore, it is worth noting that due to the random sampling method adopted in this study, the proportion of females is relatively high, and the majority of participants have received higher education. Therefore, the research results can also be interpreted from the perspectives of gender and educational background. Regarding gender, the strong predictive power of PE on BI may partly stem from the specific demands of women for technical efficacy in health management scenarios. Previous studies have shown that women attach great importance to the accuracy of information and the reliability of results in medical decision-making121. This tendency may lead them to evaluate more strictly whether AI health assistants can effectively enhance the efficiency of health management, thereby strengthening the correlation between performance expectancy and the intention to adopt the technology. In terms of educational background, users with higher education tend to have a relatively higher utilization rate of AI-assisted tools in professional settings. Therefore, they have a relatively stronger ability to understand operational goals and evaluate the functions of AI systems122. This cognitive difference may amplify the validity of performance expectancy measurement indicators among the highly educated group, thereby explaining their higher standardized path coefficients. At the same time, a high level of education may moderate the effect of effort expectancy by reducing the perception of technological complexity. When users have sufficient technical literacy, even if the system has a certain learning curve, they can quickly overcome usage barriers through their existing knowledge. As a result, the influence of effort expectancy remains significant but is weaker than that of performance expectancy.

Social influence and facilitating conditions

The findings also indicated that SI had a significant positive effect on BI (β = 0.247, p < 0.001), consistent with previous research40,64. Specifically, when users perceive favorable attitudes and recommendations regarding the use of AI health assistants from individuals in their social circles (such as family members, friends, colleagues, or other network associates), they are more likely to express an intention to utilize the system. This phenomenon may be attributed to the intrinsic nature of humans as social beings: we often shape our behaviors based on the actions and opinions of others123. In particular, if an individual is surrounded by groups that maintain a positive perspective on AI health assistants and these individuals demonstrate the advantages of using such systems in their daily lives (such as enhancing the efficiency of health consultations or streamlining medical procedures), the individual is more inclined to regard the utilization of AI health assistants as beneficial. In addition, social support networks can provide individuals with valuable resources in terms of information and practical assistance, thereby further mitigating the perceived risks associated with adopting a new system124. For instance, by engaging in discussions about the effective utilization of AI health assistants, users can acquire essential tips and advice that not only enhance their confidence but also foster greater acceptance of the system and an increased willingness to utilize it. Alternatively, social influence may operate by fostering a sense of belonging and social identity125. When individuals observe that those around them are using a specific application or technology, they may perceive this behavior as a social norm and feel compelled to participate themselves in order to avoid marginalization or to gain group approval.
Furthermore, regarding gender, the relatively high proportion of female participants may have enhanced the path effect of social influence. This phenomenon could be attributed to a characteristic prevalent among women: they tend to rely more on social network support when making health-related decisions126. Specifically, women’s adoption of healthcare technologies is often embedded in denser social relationship networks (such as the role of family health managers, interactions in mother and baby communities, etc.), which makes them more susceptible to the influence of medical professionals’ recommendations or the experiences of friends and relatives when evaluating AI health assistants. Additionally, the high concentration of educational attainment may also influence how social influence operates. Individuals with higher education levels typically possess stronger information screening capabilities; thus, their responses to social influence may prioritize authoritative professional sources (e.g., conclusions drawn from medical journals or expert consensus). Consequently, it is possible that the measurement of social influence in this study primarily captures the mechanism through which professional opinions are adopted.

Moreover, unexpectedly and contrary to previous research33,51, FC had no significant effect on BI (β = 0.01, p > 0.05). Nevertheless, our findings are consistent with the observation that when PE and EE are included in the model, FC appears to be an insignificant factor in predicting usage intent127. Furthermore, the establishment of facilitating conditions seems to exert a more direct influence on actual system use than on behavioral intention51. In this study, despite the presence of various supportive environments or resources designed to facilitate user engagement with AI health assistants (such as technical support, usage tutorials, and device compatibility), these factors do not significantly enhance users’ intentions to utilize these systems. This situation may indicate that users are influenced by various factors when deciding whether to utilize AI health assistants, including personal familiarity with the technology, social influence, performance expectancy, and effort expectancy. Even in the presence of favorable facilitating conditions, users may remain hesitant to try or continue using the system if they lack confidence in their own skills or do not perceive adequate social support and positive social influence128. This also suggests that users’ perceptions of the value of an AI health assistant may not be directly shaped by external supportive conditions. For instance, if users believe that the system provides significant health benefits and is user-friendly, they may exhibit a strong inclination to use it even in the absence of additional supportive measures. Finally, this phenomenon may be attributed to the distinctive characteristics of the technological ecosystem and the developmental stage of AI health assistants. As a relatively nascent field of technological application, AI health assistants require further enhancements regarding hardware compatibility.
For instance, users may still experience challenges such as failed device logins or data synchronization issues during actual operation. These deficiencies can undermine the effectiveness of theoretical facilitating conditions, making it challenging for infrastructure support to translate into user-perceived convenience. In terms of the service support system, there exists a disparity between the maturity of supporting services provided by AI health assistants and user expectations. Although companies offer online tutorials and customer service channels, the response speed and problem-solving efficiency of these services may not yet align with those found in mature medical IT systems. This is particularly critical when addressing sensitive matters such as privacy data breaches or uncertainties surrounding diagnostic results; in these instances, the professionalism and authority of support services may fall short. Such limitations in service capabilities could lead users to perceive facilitating conditions as “formal guarantees” rather than “substantial support,” thereby diminishing their overall influence.

Perceived trust and perceived risk

This study confirmed that PT significantly positively affected BI, PE, and EE in the context of AI health assistants (β = 0.583, p < 0.01; β = 0.905, p < 0.001; β = 0.903, p < 0.001), and negatively affected PR (β = −0.648, p < 0.001). This is similar to most studies based on UTAUT33,66,68,129. The results suggest that a higher degree of trust in AI health assistant systems correlates with more favorable expectations regarding their performance and the effort they require, thereby enhancing users’ inclination to utilize these systems while simultaneously diminishing the perceived risks associated with usage. Specifically, when users exhibit trust towards AI health assistants, they are more likely to believe that such systems can effectively assist them in achieving their health management objectives (such as providing valuable health information or optimizing medication planning), which further elevates their expectations concerning system performance. Moreover, trust may also mitigate psychological barriers related to the effort necessary for utilizing these systems; this is because trust encourages users to invest time and energy into learning how to operate the system effectively while integrating it into their daily routines130. Therefore, a high level of trust not only enhances users’ confidence in the system’s effectiveness but may also mitigate the hesitancy and apprehension typically associated with trying new technologies. Furthermore, this trust directly influences users’ intentions to engage with the system. A trusted system is more likely to garner user favor as individuals perceive it as a reliable option for long-term use. Concurrently, as trust levels rise, users’ perceived risks (such as concerns regarding privacy breaches and data security issues) tend to diminish correspondingly.
This indicates that trust can not only foster positive attitudes toward AI health assistants but also alleviate concerns about potential negative outcomes, thereby further encouraging user engagement. Furthermore, and more importantly, the transparency of AI health systems is a fundamental element in fostering user trust131. When users can comprehend how the system generates diagnostic suggestions, understand the data upon which health plans are based, and are aware of the limitations regarding their personal information usage, the “black box” perception associated with technical operations will be significantly diminished. Simultaneously, this transparency mitigates perceived risks through a dual mechanism: on one hand, visualizing decision-making logic (such as illustrating the weight of key symptoms or outlining health trend analysis pathways) enables users to verify the rationality of the system’s outputs. This verification process alleviates concerns about potential algorithmic misjudgments. On the other hand, clear disclosure of data policies (including details on information collection scope, storage duration, and third-party sharing regulations) empowers users to establish a sense of control based on informed consent while diminishing fears related to privacy breaches. This transparent operation not only directly enhances users’ perceptions of technological reliability but also fosters an environment where users regard the system as a collaborative partner rather than an enigmatic external technology by establishing a “traceable and explainable” service framework. When transparency is combined with technical efficacy and robust privacy protection to create a synergistic effect, users’ risk assessments tend toward objective facts rather than subjective speculation. Consequently, driven by trust in these systems, they are more likely to actively embrace and consistently utilize AI health assistants.

In addition, our findings indicate that PR has a significant negative impact on BI (β = − 0.127, p < 0.01). Specifically, when users perceive that utilizing AI health assistants may entail certain risks or uncertainties, their willingness to engage with the system is markedly diminished. These risks can encompass various dimensions, including privacy protection, information security, and technical reliability. If users harbor concerns regarding the potential for improper access to or leakage of their personal health information—or if they express skepticism about the technical performance and accuracy of AI health assistants—such apprehensions may create psychological barriers that deter them from adopting the system. Furthermore, fears related to possible operational errors, misdiagnoses, or an overreliance on AI recommendations at the expense of professional medical advice may also contribute to user hesitancy in employing AI health assistants132. It is essential to recognize that perceived risk not only influences users’ initial willingness to experiment with these technologies but may also impede their transition from short-term trials to sustained usage120. A heightened perception of risk necessitates a greater psychological burden for users; this stands in contrast to their inherent need for security and stability133. Consequently, it is crucial to address and mitigate users’ concerns regarding potential risks in order to enhance their intentions toward using these systems.

In terms of gender, the preponderance of female participants may have strengthened the effect of perceived trust on the assessment of technical efficacy. That is, women may exhibit a stronger need for systemic trust in the adoption of health technologies (such as the demand for data transparency), which may explain the predictive power of perceived trust on performance expectancy and effort expectancy. Specifically, women’s trust in AI health systems may be more dependent on the empathetic design of the interaction interface (such as the humanized expression of health advice) and the verification of technical reliability (such as clinical effectiveness certification). This dual anchoring mechanism means that once trust is established, it may simultaneously enhance users’ positive expectations of technical efficacy and ease of use. However, it should be noted that, despite women’s sensitivity to privacy leaks, the negative effect of perceived risk on usage intention may be partially suppressed in this sample, because a high education level may strengthen risk compensation through technical competence, such as installing privacy protection software and regularly checking permission settings. For example, even with privacy concerns, highly educated women may still maintain their willingness to use the system due to the security guarantees provided by the trust mechanism (such as explanations of encryption technology). This may explain the relatively small coefficient of perceived risk. Additionally, in terms of educational background, the trust that highly educated groups (especially those with STEM backgrounds) place in AI technology may be more inclined towards cognitive trust rather than emotional trust134,135, which means that the perceived trust variable in the model essentially captures users’ rational recognition of the underlying logic of the technology.
This mechanism may explain the significant influence of perceived trust on performance expectancy because when users can deconstruct the decision tree of AI diagnosis through professional knowledge, technical trust will directly translate into a definite judgment of expected efficacy.

Conclusions, limitations and future work

By developing and testing an extended UTAUT model, our study enhances the understanding of general users’ acceptance and behavioral intentions regarding the use of AI health assistants. This research underscores the importance of comprehending individual behavioral intentions by taking into account both the potential benefits and adverse effects associated with AI-based health advisory systems. By integrating UTAUT with perceived trust and perceived risk, a more nuanced discussion on the advantages and disadvantages of AI health assistants can be fostered, thereby aiding in the interpretation and prediction of individuals’ intentions and behaviors when utilizing AI technologies, as well as informing the development of AI health assistants.

This study focuses on addressing two key limitations in existing research at the theoretical level. On the one hand, most existing studies on the adoption of medical AI systems have concentrated on the perspectives of professionals such as doctors to explore the impact of technical features like algorithm explainability on doctor-patient collaboration. However, the unique cognitive mechanisms of ordinary users as direct users remain to be explored. On the other hand, previous studies often analyzed perceived trust and perceived risk as independent variables, while the impact of perceived trust on perceived risk and on the expected perception of system functionality still needs to be verified in AI health assistants. Therefore, based on the UTAUT, this study constructed the influence path of perceived trust on perceived risk and empirically found that perceived trust not only directly enhances the intention to use but also has an indirect promoting effect by weakening perceived risk. At the same time, the study also revealed the reinforcing effect of perceived trust on performance expectancy and effort expectancy, indicating that users’ confidence in AI health systems positively reshapes their assessment of technical utility. This mechanism helps to expand the role map of cognitive variables in the traditional UTAUT model. Overall, these findings can provide a new perspective for understanding ordinary people’s adoption of healthcare AI systems.

The findings of this research have the potential to inform the development of more effective strategies and provide valuable insights for developers and related industries aimed at enhancing existing systems or launching new ones. System developers and industry investors must investigate the factors influencing the acceptance of AI health assistants, as well as their operational mechanisms. This exploration will not only promote the effective utilization of technology but also ensure that the capacity of AI health assistants to enhance public health is maximized while safeguarding user privacy and security. By addressing these critical issues, public trust in AI health assistants can be bolstered, thereby increasing their acceptance and utilization rates. For instance, this study identified that social influence exerts a significant positive effect on users’ behavioral intentions regarding system usage. In the context of AI health assistants, social influence is not only reflected in the dissemination of technological practicality but is also deeply embedded in the process of shaping users’ social identity and sense of group belonging. Developers can consider strengthening this mechanism by building a “health decision-making community”. For example, they could embed a database of real user cases in the system, showcase successful chronic disease management cases certified by medical experts, and design a group-matching function based on health profiles so that users can form interactive alliances with groups having similar health backgrounds. At the same time, a “family health data sharing” module can be developed, allowing users to synchronize AI recommendations to family members’ devices under the premise of privacy authorization, thereby enhancing the credibility of the technology through natural dissemination within the intimate relationship network.
In marketing strategies, regional health improvement reports can be released jointly with medical institutions, using visualized data to present the actual intervention effects of AI health assistants on specific groups (such as patients with hypertension). By combining endorsement from authoritative institutions with social proof, individual usage behavior can be transformed into collective health action. This three-dimensional construction of social influence can spread technology adoption from individual cognition to the social network level, ultimately forming a chain effect of “personal health management - family health collaboration - community health demonstration” that systematically enhances users’ willingness to adopt and their continued use.

Additionally, the conclusions regarding perceived trust and perceived risk indicate that fostering the application and development of AI health assistants requires a concerted effort to build and enhance users’ trust. Developers should prioritize improving system transparency, ensuring robust data security and privacy protection, and providing clear user guides and support services. These actions are essential for earning users’ trust, thereby increasing their willingness to use these technologies while alleviating concerns about potential risks. Developers can enhance system transparency and privacy protection through a layered strategy. Technically, explainable artificial intelligence (XAI) can serve as the core of the system. For instance, developers could build real-time visual interfaces that render decision-making processes such as symptom analysis and health assessment as flowcharts or heat maps, and add a “decision traceability” feature that lets users review the sources of medical knowledge and the confidence level of the algorithms. The design should also cater to different users, providing non-professionals with brief explanations (such as “Blood pressure warnings are based on the analysis of data fluctuations over the past 30 days”) while retaining an entry point for professionals to adjust algorithm parameters. In terms of privacy protection, blockchain technology can be used to establish a data sovereignty mechanism: distributed storage separates biometric features from identity information, smart contracts enforce user-customized data access rules (such as only allowing diabetes algorithms to read blood sugar data), and diagnostic data are stored on a consortium chain to ensure auditability and immutability. Transparency also requires institutional support. When users add new devices, the system should automatically generate data usage instructions and request re-authorization. 
Regular algorithm update reports should also be released to form a trust loop of “technically traceable - data controllable - process traceable”.
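The idea of user-customized data access rules can be illustrated with a minimal sketch. It is written in plain Python rather than as an actual smart contract, and the class, function, algorithm, and data category names (`AccessPolicy`, `read_data`, `diabetes_algorithm`, `blood_sugar`) are hypothetical names chosen for illustration. The sketch assumes a deny-by-default policy, which the study does not specify.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AccessPolicy:
    """User-defined rules stating which algorithm may read which data category."""

    # Hypothetical structure: algorithm name -> set of permitted data categories.
    rules: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, algorithm: str, category: str) -> None:
        """Allow one algorithm to read one data category."""
        self.rules.setdefault(algorithm, set()).add(category)

    def revoke(self, algorithm: str, category: str) -> None:
        """Withdraw a previously granted permission (no-op if absent)."""
        self.rules.get(algorithm, set()).discard(category)

    def is_allowed(self, algorithm: str, category: str) -> bool:
        # Assumption: deny by default, so anything not granted is refused.
        return category in self.rules.get(algorithm, set())


def read_data(policy: AccessPolicy, store: dict, algorithm: str, category: str):
    """Return the requested data only if the user's policy permits it."""
    if not policy.is_allowed(algorithm, category):
        raise PermissionError(f"{algorithm} may not read {category}")
    return store[category]


policy = AccessPolicy()
policy.grant("diabetes_algorithm", "blood_sugar")
print(policy.is_allowed("diabetes_algorithm", "blood_sugar"))  # True
print(policy.is_allowed("diabetes_algorithm", "heart_rate"))   # False
```

In a blockchain deployment the same check would run inside the contract, so that every grant, revocation, and read attempt leaves an auditable record.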

Finally, the finding on facilitating conditions suggests several directions for developers. In terms of system compatibility, they can consider collaborating with mainstream smart device manufacturers to promote the standardization of data interfaces, for example by accessing the open API of the national medical data center to achieve cross-device data synchronization. An automatic detection module could initiate adaptation optimization when it identifies a user’s device and use visual indicators (such as “certified adaptation” prompts) to let users perceive the improvement in compatibility. The service support system could adopt a three-level response: routine problems are handled by AI, privacy issues are transferred to professional teams, and diagnostic doubts are referred directly to experts at medical institutions. A “risk-sharing” function could also be added: when data synchronization fails, the system not only fixes the problem but also provides compensation such as free remote consultations, turning service commitments into support for trust. In addition, in terms of user capability development, a dynamic training system can be built. For example, customized content can be pushed based on users’ operating habits (such as how frequently they use each function). Users with lower digital skills can be offered virtual tutorials with simulated consultations, while users with more expertise can be given access to the algorithm flow diagram function to deepen their technical understanding. Personalized benefit reports could also be developed, using specific data to present the benefits of health management.
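The three-level service response described above amounts to a simple routing rule. The sketch below is one possible reading of it; the category labels and handler names are hypothetical, and raising an error for an unrecognized category is an assumption made here because the study does not say how such cases should be handled.

```python
from enum import Enum


class Handler(Enum):
    """The three response levels named in the text."""

    AI_ASSISTANT = "ai_assistant"      # routine problems
    PRIVACY_TEAM = "privacy_team"      # privacy issues
    MEDICAL_EXPERT = "medical_expert"  # diagnostic doubts


# Hypothetical category labels mapped to the level that handles them.
ROUTING = {
    "routine": Handler.AI_ASSISTANT,
    "privacy": Handler.PRIVACY_TEAM,
    "diagnostic": Handler.MEDICAL_EXPERT,
}


def route_request(category: str) -> Handler:
    """Return the handler responsible for a support request category."""
    try:
        return ROUTING[category]
    except KeyError:
        # Assumption: unknown categories are rejected rather than guessed.
        raise ValueError(f"unknown request category: {category!r}") from None


print(route_request("privacy").value)  # privacy_team
```

Keeping the mapping in a single table makes the escalation policy easy to audit and to extend if further levels are added.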

Although this study has drawn several meaningful conclusions, certain limitations should be acknowledged. First, regarding demographic characteristics, the random sampling method resulted in an unbalanced proportion of men and women in the sample. This imbalance may introduce bias related to gender differences in the evaluation of results33. Furthermore, approximately 80% of participants held a bachelor’s degree or higher, a proportion significantly greater than that in the overall population60. The age distribution was also limited, as only four participants were aged 18 years or under. Collectively, these factors constrain our ability to analyze comprehensively how results differ across levels of the demographic variables and what moderating effects those variables have. In addition, this study classifies user experience into two broad categories: “used” and “not used.” This simple classification does not adequately capture the nuanced differences in user experience across stages of use. Future research should consider more detailed classification criteria, such as segmenting usage duration into intervals of less than one month, 1 to 3 months, 3 to 6 months, and so forth. Such an approach would enable a more precise assessment of how usage experience influences acceptance. Furthermore, key variables that were not thoroughly examined in this study, such as financial status and occupational background, may also significantly affect the acceptance of AI health assistants. Finally, in terms of the theoretical framework, although the UTAUT effectively supports the exploration of basic adoption mechanisms, this study does not incorporate the elements specific to personal consumption scenarios that are included in UTAUT2 (such as Price Value and Habit). 
As AI health assistants gradually enter the commercial stage, future research could integrate UTAUT2, focusing in particular on the moderating effects of Habit and Price Value in paid service scenarios. Future research could also refine personal habits into the interaction effect between technology use inertia and health management inertia. Moreover, when the industry reaches maturity, a dynamic adoption model could be established to track longitudinal changes in the weights of the variables as users transition from free trials to paid subscriptions. These extensions would not only enhance theoretical explanatory power but also provide differentiated evidence to support product strategies at different development stages.
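The finer classification of usage experience suggested above could be coded as follows. This is a minimal sketch: the first three intervals come from the text, while the open-ended final interval, the label strings, and the treatment of boundary values (each interval includes its lower bound) are assumptions made for illustration.

```python
import bisect

# Interval boundaries in months; the text names <1, 1 to 3, and 3 to 6 months.
BOUNDS = [1, 3, 6]
# Assumption: everything from 6 months upward falls into one open-ended bin.
LABELS = ["<1 month", "1-3 months", "3-6 months", ">=6 months"]


def usage_segment(months: float) -> str:
    """Map a usage duration in months to its experience segment."""
    if months < 0:
        raise ValueError("usage duration cannot be negative")
    # bisect_right places a value equal to a boundary in the higher bin.
    return LABELS[bisect.bisect_right(BOUNDS, months)]


print(usage_segment(0.5))  # <1 month
print(usage_segment(3))    # 3-6 months
```

Coding duration this way would allow usage experience to enter the model as an ordered grouping variable rather than a binary one.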

Data availability

The datasets generated and/or analysed during the current study are not publicly available due to the protection of participants’ privacy but are available from the corresponding author on reasonable request.

References

Dousay, T. A. & Hall, C. Alexa, tell me about using a virtual assistant in the classroom. In Proceedings of EdMedia: World Conference on Educational Media and Technology 1413–1419. https://www.learntechlib.org/primary/p/184359/ (Association for the Advancement of Computing in Education (AACE), 2018).

Song, Y. W. User Acceptance of an Artificial Intelligence (AI) Virtual Assistant: An Extension of the Technology Acceptance Model. Doctoral Dissertation (2019).

Arora, S., Athavale, V. A. & Himanshu Maggu, A. Artificial intelligence and virtual assistant—working model. In Mobile Radio Communications and 5G Networks. Lecture Notes in Networks and Systems, vol 140 (eds Marriwala, N. et al.) https://doi.org/10.1007/978-981-15-7130-5_12 (Springer, 2021).

Zhang, S., Meng, Z., Chen, B., Yang, X. & Zhao, X. Motivation, social emotion, and the acceptance of artificial intelligence virtual assistants—Trust-based mediating effects. Front. Psychol. 12, 95. https://doi.org/10.3389/fpsyg.2021.728495 (2021).

Doraiswamy, P. M. et al. Empowering 8 billion minds: enabling better mental health for all via the ethical adoption of technologies. NAM Perspect. https://doi.org/10.31478/201910b (2019).

Murphy, K. et al. Artificial intelligence for good health: a scoping review of the ethics literature. BMC Med. Ethics. 22, 14. https://doi.org/10.1186/s12910-021-00577-8 (2021).

Kashive, N., Powale, L. & Kashive, K. Understanding user perception toward artificial intelligence (AI) enabled e-learning. Int. J. Inform. Learn. Technol. 38, 1–19. https://doi.org/10.1108/IJILT-05-2020-0090 (2021).

Kaye, S. A., Lewis, I., Forward, S. & Delhomme, P. A priori acceptance of highly automated cars in Australia, France, and Sweden: A theoretically-informed investigation guided by the TPB and UTAUT. Accid. Anal. Prev. 137, 105441. https://doi.org/10.1016/j.aap.2020.105441 (2020).

Becker, D. Possibilities to improve online mental health treatment: recommendations for future research and developments. In Advances in Information and Communication Networks. FICC 2018. Advances in Intelligent Systems and Computing, vol. 886 (eds Arai, K. et al.) https://doi.org/10.1007/978-3-030-03402-3_8 (Springer, 2019).

Hirani, R. et al. Artificial intelligence and healthcare: a journey through history, present innovations, and future possibilities. Life 14, 557 (2024).

Anchitaalagammai, J. V. et al. Predictive health assistant: AI-driven disease projection tool. In 2024 11th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India 1–6. https://doi.org/10.1109/ICRITO61523.2024.10522266 (2024).

Hauser-Ulrich, S., Künzli, H., Meier-Peterhans, D. & Kowatsch, T. A smartphone-based health care chatbot to promote self-management of chronic pain (SELMA): pilot randomized controlled trial. JMIR Mhealth Uhealth. 8, e15806. https://doi.org/10.2196/15806 (2020).

Divya, S., Indumathi, V., Ishwarya, S., Priyasankari, M. & Devi, S. K. A self-diagnosis medical chatbot using artificial intelligence. J. Web Dev. Web Designing. 3, 1–7 (2018).

Muneer, S. et al. Explainable AI-driven chatbot system for heart disease prediction using machine learning. Int. J. Adv. Comput. Sci. Appl. 15, 1 (2024).

Maeda, E. et al. Promoting fertility awareness and preconception health using a chatbot: a randomized controlled trial. Reprod. Biomed. Online. 41, 1133–1143. https://doi.org/10.1016/j.rbmo.2020.09.006 (2020).

Davis, C. R., Murphy, K. J., Curtis, R. G. & Maher, C. A. A process evaluation examining the performance, adherence, and acceptability of a physical activity and diet artificial intelligence virtual health assistant. Int. J. Environ. Res. Public Health. 17 (23), 9137. https://doi.org/10.3390/ijerph17239137 (2020).

Fitzpatrick, K. K., Darcy, A. & Vierhile, M. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): A randomized controlled trial. JMIR Ment. Health. 4, e19. https://doi.org/10.2196/mental.7785 (2017).

Donadello, I. & Dragoni, M. AI-enabled persuasive personal health assistant. Social Netw. Anal. Min. 12, 106. https://doi.org/10.1007/s13278-022-00935-3 (2022).

Chatterjee, A., Prinz, A., Gerdes, M. & Martinez, S. Digital interventions on healthy lifestyle management: systematic review. J. Med. Internet Res. 23, e26931. https://doi.org/10.2196/26931 (2021).

Davis, C. R., Murphy, K. J., Curtis, R. G. & Maher, C. A. A process evaluation examining the performance, adherence, and acceptability of a physical activity and diet artificial intelligence virtual health assistant. Int. J. Environ. Res. Public Health. 17 (23), 9137. https://doi.org/10.1016/B978-0-12-818438-7.00002-2 (2020).

Melnyk, B. M. et al. Interventions to improve mental health, well-being, physical health, and lifestyle behaviors in physicians and nurses: a systematic review. Am. J. Health Promotion. 34, 929–941 (2020).

Lee, D. & Yoon, S. N. Application of artificial intelligence-based technologies in the healthcare industry: opportunities and challenges. Int. J. Environ. Res. Public Health. 18, 1 (2021).

Gao, X., He, P., Zhou, Y. & Qin, X. Artificial intelligence applications in smart healthcare: a survey. Future Internet. 16, 1 (2024).

Laker, B. & Currell, E. ChatGPT: a novel AI assistant for healthcare messaging—a commentary on its potential in addressing patient queries and reducing clinician burnout. BMJ Lead. 8, 147. https://doi.org/10.1136/leader-2023-000844 (2024).

Dragoni, M., Rospocher, M., Bailoni, T., Maimone, R. & Eccher, C. Semantic technologies for healthy lifestyle monitoring. In The Semantic Web–ISWC 2018. ISWC 2018. Lecture Notes in Computer Science, vol 11137 (eds Vrandečić, D. et al.) https://doi.org/10.1007/978-3-030-00668-6_19 (Springer, 2018).

Babel, A., Taneja, R., Mondello Malvestiti, F., Monaco, A. & Donde, S. Artificial intelligence solutions to increase medication adherence in patients with non-communicable diseases. Front. Digit. Health. 3, 69. https://doi.org/10.3389/fdgth.2021.669869 (2021).

Bickmore, T. W. et al. Patient and consumer safety risks when using conversational assistants for medical information: an observational study of Siri, Alexa, and Google assistant. J. Med. Internet Res. 20, e11510. https://doi.org/10.2196/11510 (2018).

Bulla, C., Parushetti, C., Teli, A., Aski, S. & Koppad, S. A review of AI based medical assistant chatbot. Res. Appl. Web Dev. Des. 3 (2), 1–14. https://doi.org/10.5281/zenodo.3902215 (2020).

Shevtsova, D. et al. Trust in and acceptance of artificial intelligence applications in medicine: mixed methods study. JMIR Hum. Factors. 11, e47031. https://doi.org/10.2196/47031 (2024).

Gerke, S., Minssen, T. & Cohen, G. Ethical and legal challenges of artificial intelligence-driven healthcare. In Artificial Intelligence in Healthcare 295–336 (Academic Press, 2020).

Hlávka, J. P. Security, privacy, and information-sharing aspects of healthcare artificial intelligence. In Artificial Intelligence in Healthcare 235–270 (Academic Press, 2020).

Rigby, M. J. Ethical dimensions of using artificial intelligence in health care. AMA J. Ethics. 21, 121–124. https://doi.org/10.1001/amajethics.2019.121 (2019).

Xiong, Y., Shi, Y., Pu, Q. & Liu, N. More trust or more risk? User acceptance of artificial intelligence virtual assistant. Hum. Factors Ergon. Manuf. Serv. Ind. 34, 190–205. https://doi.org/10.1002/hfm.21020 (2024).