Abstract

This study designs a deep learning method to locate and classify the tibio-femoral knee joint space width (JSW) in both anterior-posterior (AP) and lateral views, and evaluates how well such an approach can locate and classify radiographic tibio-femoral knee joint osteoarthritis (OA) from AP and lateral view digital knee X-ray images. We use 4334 data points (knee X-ray images) for this study. We fine-tuned DenseNet 201 with transfer learning to extract the features used to detect and classify tibio-femoral knee joint osteoarthritis in both views, and we compared the proposed model with other classifiers. The proposed model localizes the tibio-femoral knee JSW with an accuracy of 98.12% (lateral view) and 99.32% (AP view). The classification accuracy is 92.42% for the lateral view and 98.57% for the AP view. To our knowledge, this is the first automated deep learning approach to classify tibio-femoral osteoarthritis on both the AP view and the lateral view. The proposed approach was trained on the femur and tibial bone regions from both AP view and lateral view digital X-ray images, and it performs better at locating and classifying tibio-femoral knee joint osteoarthritis than the existing approaches. 
The proposed approach will help clinicians and medical experts analyze the progression of tibio-femoral knee OA in different views. It performs better in the AP view than in the lateral view. Compared with other existing architectures, the proposed model achieves better results after fine-tuning.

Introduction

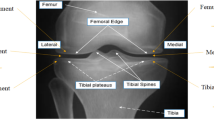

Researchers use digital radiography in the assessment of knee osteoarthritis (OA) because it is economical and fast. OA is a physical disorder that targets knee joint cartilage and leads to physical instability and functional limitations. It is an important contributor to disability in older people1,2. Clinicians analyze the knee radiographs of the symmetric joints to predict knee OA at an early stage. Marginal osteophytes and subchondral cysts in the tibiofemoral joint are signs of osteoarthritis. Both research studies and clinical practice in OA have mainly focused on the tibiofemoral (TF) joint. Experts frequently use anterior-posterior (AP) view digital X-ray images to evaluate tibiofemoral knee joint OA1. Tibiofemoral osteoarthritis (TFOA) is defined as the degeneration of articular cartilage in the lateral and/or medial compartment combined with the development of subchondral sclerosis and osteophytes. Sex and age are significantly associated with TFOA3. We performed the analysis to locate and classify osteoarthritis, which is helpful during the assessment of OA.

Some people feel no pain but have radiological evidence of OA, and vice versa. Failure to diagnose OA until its later stages often leads to surgery. Early detection of OA is the only available option to reduce its progression, and early diagnosis of OA is possible with recent imaging technologies. Prediction of OA at a very early stage is restricted by factors such as pain and functional limitations. Plain radiographic imaging is a cost-effective method to diagnose knee osteoarthritis4,5. The diagnostic accuracy of OA depends mainly on the experience of clinicians, so computer-assisted machine learning methods are needed to locate and classify osteoarthritis more accurately. Several studies have attempted to automatically locate and classify the OA region from ordinary X-ray images6,7,8,9,10. These studies identify two main stages in the diagnosis of OA: (1) detection of knee OA and (2) classification of knee OA. Steno et al.11 describe an approach to detecting small objects with a Faster RCNN architecture. Digital X-ray images are still important in modern healthcare management, and most medical consultants prefer digital X-ray images for the initial assessment of osteoarthritis. Researchers have used various methodologies to support clinicians in the evaluation of osteoarthritis12,13. Faster RCNN (Fast RCNN + Region Proposal Network), an object detection approach proposed by Ren et al.14, classifies objects and identifies their exact location. Furthermore, the performance of the system increases when convolutional features are shared with the detector module15. Chen et al.16 propose the DFPN architecture to create new anchor boxes and enhance the accuracy of region of interest (ROI) localization. 
In this work, we present the first fully automated method to locate, classify, and compare knee joint osteoarthritis from both anterior-posterior view and lateral view digital knee joint X-ray images using a deep learning approach.

Materials and methods

Data

In this study, we obtained digital X-ray images of patients (868 females and 292 males) from the KGS scan center in Madurai. Of the 4462 knee images (digital X-ray images) collected, we used 4334 for final processing. We converted the knee joint digital images into a standard JPEG format for further processing. The experimental protocol for the study was approved by the Code of Ethics Committee (CEC) of Kalasalingam Academy of Research and Education (Reference: KARE/CEC/MoM/2024–2025/01 dated 20.07.2024). All procedures performed in this study involving human participants adhered to the ethical standards of the institution and were conducted in compliance with the principles outlined in the 1964 Helsinki Declaration. This ensures that the research upholds the highest ethical standards, safeguarding the rights, dignity, and well-being of all participants involved. For this study, we used 70% of the data for training, 10% for validation, and 20% for testing the proposed model. The dataset split is shown in Table 1.
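As an illustration of the 70/10/20 partition described above, the following is a minimal sketch of a shuffled index split. It is not the authors' procedure (the actual counts are those reported in Table 1); the function name `split_indices`, the fixed seed, and the rounding rule are assumptions made for this example.

```python
import random


def split_indices(n_items, train_frac=0.70, val_frac=0.10, seed=0):
    """Shuffle item indices and split them into train/validation/test lists.

    The test set receives whatever remains after the train and validation
    portions are taken (about 20% with the default fractions).
    """
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # hypothetical fixed seed for repeatability
    n_train = round(n_items * train_frac)
    n_val = round(n_items * val_frac)
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


# 4334 is the number of images in the final dataset.
train_idx, val_idx, test_idx = split_indices(4334)
print(len(train_idx), len(val_idx), len(test_idx))
```

In practice one would usually split by patient rather than by image so that images of the same knee do not land in both training and test sets; that refinement is omitted here for brevity.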

Two radiology experts excluded certain images (post-surgical evaluation, trauma, infection, and poor quality) from the initial dataset (4462) to obtain the final set of 4334 knee joint images used for the analysis. Medical experts manually located the knee joint osteoarthritis region of interest (ROI). For each knee joint, the knee joint osteoarthritis was labeled in both AP and lateral views, totaling 4334 annotations. The data is split into 1525 knee joint X-ray images for training the detection model, 434 knee joint X-ray images for testing, and 208 knee joint X-ray images for validating the model. With the help of medical experts, we obtained knee joint osteoarthritis labels for the AP view (Grade 1, Grade 2, Grade 3, and Grade 4) and the lateral view (Grade 1, Grade 2, Grade 3, and Grade 4). Figure 1 shows the X-ray image of a patient’s knee joint with ROI selection on both the AP view and the lateral view.

DICOM images are resized and converted to JPEG format. Our proposal includes two steps: (1) locating the tibio-femoral knee joint space width in both the AP view and the lateral view; and (2) classifying the ROI regions. Together, these two steps make up a fully automated system that can estimate knee joint osteoarthritis with respect to both AP and lateral views. Figure 2 depicts the flowchart of the proposed work. The grading classification is shown in Table 2.

Tibio-femoral knee JSW localization and classification (AP view and lateral view)

We use a deep learning model (Faster RCNN architecture + modified DenseNet 201 with transfer learning) to extract features and locate the ROI on both AP view and lateral view knee joint X-ray images. The Faster RCNN architecture uses a region proposal network (RPN), a convolutional network that recognizes objects by simultaneously predicting object bounds and objectness scores at every position; Faster RCNN then uses these proposals for detection17. The Region Proposal Network14 shares the full-image convolutional features with the detection network to automatically localize the region of interest (ROI) in digital knee joint X-ray images. Each branch comprises convolution, max pooling, batch normalization, and ReLU layers. The outputs from the various channels are combined, and the resulting feature maps are passed to the top convolutional layers. The RPN was trained extensively to provide better region proposals.

We employ the DenseNet 201 architecture as the backbone to locate knee joint OA on both views. The flow chart of the proposed tibio-femoral knee joint space width detection and classification architecture is shown in Fig. 2. We provide image bounding boxes as training labels and employ the Jaccard index3, also known as intersection over union (IoU), as the loss function that assesses the quality of the detection. During training, the proposed model produced several detection results and selected the detection with the highest confidence. To evaluate the performance of the detection model, we determine the percentage of knees that attained IoU > 0.75. An anchor receives a positive label when its IoU with any ground-truth box is greater than 0.75, or when it has the largest IoU with a ground-truth bounding box. The key benefit of this approach is that it locates the tibio-femoral knee joint osteoarthritis in both AP and lateral views, which improves the accuracy of knee joint detection in both views. We also apply dataset augmentation focused on tibio-femoral JSW detection in both the AP view and the lateral view. Locating the JSW of the tibio-femoral region in both views increases the number of matching anchors. We use the stochastic gradient descent optimizer to train the proposed system. The initial learning rate was set to 1e-3, and the proposed architecture was trained for 10 epochs.
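The IoU measure and the anchor-labelling rule described above can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' Matlab implementation: the `(x1, y1, x2, y2)` box format, the function names, and the toy boxes are assumptions; only the 0.75 threshold comes from the text.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def positive_anchors(anchors, gt_boxes, threshold=0.75):
    """Return the sorted indices of anchors that receive a positive label."""
    positive = set()
    for gt in gt_boxes:
        scores = [iou(anchor, gt) for anchor in anchors]
        # Rule 1: IoU with this ground-truth box exceeds the threshold.
        positive.update(i for i, s in enumerate(scores) if s > threshold)
        # Rule 2: the anchor with the largest (non-zero) IoU for this box.
        if scores and max(scores) > 0:
            positive.add(scores.index(max(scores)))
    return sorted(positive)


# Hypothetical anchors and ground-truth boxes, in pixel coordinates.
anchors = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
gt_boxes = [(0, 0, 10, 10), (2, 2, 12, 12)]
print(positive_anchors(anchors, gt_boxes))
```

Rule 2 guarantees that every annotated joint is matched by at least one anchor even when no anchor clears the threshold, which matters when each image contains only one or two annotated regions.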

The architecture of the DenseNet 201 + transfer learning model is as follows. The DenseNet 201 model is a CNN model consisting of 201 layers. Figure 3a (AP view) and b (lateral view) represent the proposed architecture for knee joint OA detection and classification. The final layer of the original DenseNet 201 model is a fully connected (FC) layer with 1000 classes. We fine-tune the DenseNet 201 model by replacing this FC layer with a set of FC layers: an FC layer with 2048 out-features, followed by ReLU and dropout with a probability of 0.5, and finally an FC layer with four out-features for four-class classification (Grade 1/Grade 2/Grade 3/Grade 4).
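The depth of the backbone and the size of the replacement head can be checked with simple arithmetic. The sketch below assumes the standard DenseNet-201 block configuration (6, 12, 48, 32) and a 1920-dimensional pooled feature vector feeding the head, as in the standard DenseNet-201; neither value is stated in this section, and the head is assumed to end in four outputs, one per grade.

```python
# Standard DenseNet-201 configuration: dense layers in each of the four blocks.
BLOCK_CONFIG = (6, 12, 48, 32)

# Assumption: pooled feature size of the standard DenseNet-201 backbone.
BACKBONE_FEATURES = 1920


def densenet_depth(block_config):
    """Count weighted layers.

    Each dense layer holds two convolutions (1x1 and 3x3). The remaining five
    layers are the stem convolution, three transition convolutions, and the
    final fully connected layer.
    """
    return 5 + 2 * sum(block_config)


def linear_params(in_features, out_features):
    """Weights plus biases of one fully connected layer."""
    return in_features * out_features + out_features


# Replacement head: FC(2048) -> ReLU -> Dropout(0.5) -> FC(4).
# ReLU and dropout have no trainable parameters, so only the FC layers count.
head = [("fc1", BACKBONE_FEATURES, 2048), ("fc2", 2048, 4)]

print("depth:", densenet_depth(BLOCK_CONFIG))
for name, n_in, n_out in head:
    print(name, linear_params(n_in, n_out))
```

Almost all of the head's trainable parameters sit in the first FC layer, which is one reason dropout is applied directly after it.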

Our proposed approach is based on a single-input CNN architecture (DenseNet 201 with transfer learning). Fully connected layers are stacked on top of the convolutional layers to predict the JSW area of the tibio-femoral regions. This study employs the DenseNet-201 [5 + (6 + 12 + 48 + 32) × 2 = 201] architecture. The goal of the DenseNet architecture is to connect all layers densely. The output of the proposed model is a vector of tibio-femoral knee joint osteoarthritis classifications, and we choose the prediction with the highest confidence score as the final decision. A tibio-femoral JSW region of 224 × 224 pixels serves as the input to the model. We used Matlab 2020a as the software framework, with 8 GB of memory for each experimental analysis. We trained the proposed model using a stochastic gradient descent optimizer with a learning rate of 3e-3. We trained the model for the first 15 epochs without any data augmentation to prevent bias problems in early epochs. In the subsequent epochs, we applied data augmentation such as horizontal flips, rotations, and translations to the training data. To address the unbalanced dataset18, we employed a technique similar to oversampling, which selects each sample in a batch from the training set with the same probability for each class. To avoid over-representing classes with more samples, the validation set was balanced using the same procedure; it was built once and remained unchanged during all epochs. We find that random initialization significantly affected the validation score during training. After choosing the model with the highest validation score, we test it on the test set.
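The class-balanced batch selection described above can be illustrated with a short sketch. It assumes that "the same probability for each of the classes" means a uniform draw over classes followed by a uniform draw within the chosen class; the function name `balanced_batch` and the toy label counts are hypothetical.

```python
import random
from collections import defaultdict


def balanced_batch(labels, batch_size, rng):
    """Draw sample indices so every class is equally likely for each batch slot."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    classes = sorted(by_class)
    batch = []
    for _ in range(batch_size):
        chosen_class = rng.choice(classes)                # uniform over classes
        batch.append(rng.choice(by_class[chosen_class]))  # uniform within class
    return batch


# Hypothetical, deliberately unbalanced grade labels (Grade 1 to Grade 4).
toy_labels = [1] * 80 + [2] * 10 + [3] * 6 + [4] * 4
batch = balanced_batch(toy_labels, batch_size=32, rng=random.Random(0))
print(sorted(toy_labels[i] for i in batch))
```

Because minority-class images are drawn repeatedly, this behaves like oversampling with replacement; pairing it with augmentation reduces the risk of the model memorizing the few minority examples.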

Results

Tibio-femoral knee JSW detection (AP view and lateral view)

We used a system configured with an AMD E1-7010 APU with 4.0 GB of RAM and a 1.5 GHz AMD CPU. We selected Matlab R2022b with the Parallel Computing Toolbox, Image Processing Toolbox, and Deep Learning Toolbox for processing. To assess the performance of our proposed detection system, we employ the IoU measure, which divides the intersection area of the predicted bounding box and the annotated bounding box by the union area of the two boxes. At IoU > 0.75, our proposed architecture identifies 98.78% of the knees as having osteoarthritis (femur and tibia). The overall detection accuracy is 96.12% for the lateral view and 98.74% for the AP view. The results of tibio-femoral knee JSW detection in the AP view and lateral view are shown in Fig. 4a and b, respectively. Figure 5a–f displays the accuracy and loss curves of the validation and training datasets of the three CNN models for both the AP view and the lateral view. Our proposed system successfully localizes the tibio-femoral JSW in both the AP view and the lateral view.
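The thresholded detection rate used above (the share of knees whose predicted box reaches IoU > 0.75 with the annotation) reduces to a simple count. The sketch below assumes one IoU score per image, taken from the highest-confidence detection; the scores shown are made up for illustration and are not results from this study.

```python
def detection_rate(iou_scores, threshold=0.75):
    """Fraction of images whose predicted box has IoU above the threshold."""
    if not iou_scores:
        raise ValueError("at least one IoU score is required")
    hits = sum(1 for score in iou_scores if score > threshold)
    return hits / len(iou_scores)


# Hypothetical per-image IoU values.
example_scores = [0.91, 0.80, 0.74, 0.88]
print(detection_rate(example_scores))
```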

Tibio-femoral knee JSW classification (AP view and lateral view)

The tibio-femoral JSW classification accuracy on the test data set is 98.57% for the AP view and 92.42% for the lateral view. The quadratic kappa coefficient between the medical experts and the proposed model for tibio-femoral knee JSW is 0.98 for the AP view and 0.91 for the lateral view. We design a deep learning system to classify knee joint osteoarthritis in the AP view and the lateral view and evaluate the effectiveness of the proposed approach in both views. Researchers hold that OA cannot be diagnosed from clinical symptoms alone.
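For readers unfamiliar with the quadratic kappa coefficient, the following is a minimal pure-Python sketch of Cohen's kappa with quadratic weights. It assumes grades are encoded as integers 0 to 3 (Grade 1 to Grade 4); the rating lists are invented for illustration and are not the study's data.

```python
def quadratic_weighted_kappa(rater_a, rater_b, n_classes):
    """Cohen's kappa with quadratic weights for ordinal labels 0..n_classes-1."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters need the same non-zero number of ratings")
    n = len(rater_a)
    observed = [[0] * n_classes for _ in range(n_classes)]
    for a, b in zip(rater_a, rater_b):
        observed[a][b] += 1
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(observed[i][j] for i in range(n_classes)) for j in range(n_classes)]
    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            # Disagreements are penalized by the squared distance between grades.
            weight = (i - j) ** 2 / (n_classes - 1) ** 2
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * row_totals[i] * col_totals[j] / n
    if weighted_expected == 0:
        return 1.0  # both raters used a single identical grade throughout
    return 1.0 - weighted_observed / weighted_expected


# Hypothetical expert and model grades (0 = Grade 1, ..., 3 = Grade 4).
expert = [0, 1, 2, 3, 0, 1, 2, 3]
model = [0, 1, 2, 3, 0, 1, 2, 2]
print(round(quadratic_weighted_kappa(expert, model, 4), 4))
```

The quadratic weighting means that confusing Grade 1 with Grade 4 costs nine times as much as confusing adjacent grades, which suits an ordinal severity scale.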

Figure 5. Accuracy and loss curves for tibio-femoral knee joint OA classification: (a) AP view, DenseNet 201; (b) lateral view, DenseNet 201; (c) AP view, ResNet 50; (d) lateral view, ResNet 50; (e) AP view, Inception v3; (f) lateral view, Inception v3.

Figure 7. Confusion matrices for tibio-femoral knee joint osteoarthritis classification: (a) AP view, DenseNet 201; (b) lateral view, DenseNet 201; (c) AP view, ResNet 50; (d) lateral view, ResNet 50; (e) AP view, Inception v3; (f) lateral view, Inception v3.

To the best of our knowledge, this is the first approach to classify and compare tibio-femoral knee joint osteoarthritis from both the AP view and the lateral view. Figure 6a and b display the classification results of the tibio-femoral knee JSW classification for the AP view and the lateral view. We hope that the comparative analysis will be helpful in analyzing the progression of tibiofemoral OA on both views. The classification accuracy of the proposed system is higher than that of the existing models for the AP view, but for the lateral view it is lower than that of some existing models. The improvements to the anchor box design and feature map have strengthened the region proposals, resulting in greater object localization and classification accuracy. The proposed system locates and classifies tibio-femoral osteoarthritis in both AP view and lateral view knee joint digital X-ray images, and its classification accuracy is higher in the AP view than in the lateral view. Figure 7a–f displays the confusion matrices of the 3 CNN models for both AP view and lateral view knee joint X-ray images. The performance of the proposed approach is higher than that of the existing models (Table 5). Performance comparisons of the proposed work with existing approaches are shown in Fig. 8.

Discussion

We have designed an automated deep learning model to assess the severity of tibio-femoral knee joint OA on both the AP view and the lateral view. The difference between the proposed model’s classification outcomes (AP view and lateral view) and the expert’s assessment is nearly the same as the variance between different medical experts (expert 1 and expert 2). There is only minimal agreement between the medical experts and the proposed approach at grades 3 and 4. We found minimal misclassification variation between our proposed approach and the expert’s assessment, especially in the initial stages of OA in the AP view (grades 1 and 2) and in the severe grades (grades 3 and 4) in the lateral view. We think that this variation is due to the subjectivity of those tibio-femoral knee joint osteoarthritis classifications (lateral view), which is supported by an increase in variation in those classifications among medical experts. The proposed work obtained a tibio-femoral JSW classification accuracy of 98.507% (AP view) and 92.42% (lateral view). The misclassification difference between the proposed approach and the expert’s evaluation can indicate either an error on the part of the learning architecture or an inherent subjectivity bias in the evaluation.

No prior algorithms properly contextualized tibio-femoral knee joint osteoarthritis clinically with respect to the lateral view, because they were based solely on the knee joint space width area. Moreover, the proposed approach accurately localizes and classifies the tibio-femoral knee joint osteoarthritis in both views (AP and lateral). Table 3 displays the multi-classification performance for the four classes in both the AP view and the lateral view. Compared with the existing works, the proposed work showed better classification accuracy.

The outcomes showed that, in terms of multi-classification accuracy, our proposed model using DenseNet 201 outperforms the current techniques indicated in Table 4. Table 4 displays the results of DenseNet 201 and two other deep learning models, Inception v3 and ResNet 50, applied to the real-time dataset for the multi-class classification task; bold font highlights the highest results. The proposed approach obtained an accuracy of 98.507% (AP view) and 92.42% (lateral view). The classification accuracies of DenseNet 201, ResNet 50, and Inception v3 in the AP view and the lateral view are 98.50%:92.42%, 89.74%:84.44%, and 85.71%:81.16%, respectively. The fine-tuned DenseNet 201 model obtained the highest accuracy, precision, specificity, sensitivity, and F1-score in both views. Inception v3 had the lowest specificity and F1-score, at 95.21%:93.01% and 84.25%:79.5% (Table 5).

The multi-classification outcomes are detailed in Table 3. We divided the dataset into four groups: Grade 1, Grade 2, Grade 3, and Grade 4. After the 3 CNN models (DenseNet 201, Inception v3, and ResNet 50) were implemented, the real-time dataset was used to compute the evaluation metrics (accuracy, precision, sensitivity, specificity, and F1-score). Precision, recall, F1-score, accuracy, and specificity for DenseNet 201 in Grade 4 for the AP and lateral views are 98.51%:92.42%, 98.75%:92.77%, 99.5%:97.69%, 98.75%:91.75%, and 98.75%:92.0%. The maximum accuracy attained by ResNet 50, Inception v3, and DenseNet 201 for Grade 4 OA in both views was 97.44%:92.22%, 88.57%:88.41%, and 100%:95.45%, respectively. For Grade 4, the highest recall rate attained by ResNet 50, Inception v3, and DenseNet 201 in both views was 91%:81%, 83%:71%, and 100%:91%, respectively. DenseNet 201 attained a high F1-score of 87%.
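The per-grade metrics listed above are conventionally derived from a confusion matrix by treating each grade as one-vs-rest. The following sketch shows that computation under the assumption that rows are true grades and columns are predicted grades; the matrix values are hypothetical and are not taken from Table 3 or Fig. 7.

```python
def per_class_metrics(cm):
    """One-vs-rest metrics for each class of a square confusion matrix.

    cm[i][j] is the number of samples with true class i predicted as class j.
    """
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for c in range(n_classes):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp
        fp = sum(cm[r][c] for r in range(n_classes)) - tp
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0  # also called sensitivity
        specificity = tn / (tn + fp) if tn + fp else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append({
            "accuracy": (tp + tn) / total,
            "precision": precision,
            "recall": recall,
            "specificity": specificity,
            "f1": f1,
        })
    return results


# Hypothetical 4-grade confusion matrix (rows: true grade, columns: predicted).
toy_cm = [
    [10, 0, 0, 0],
    [1, 9, 0, 0],
    [0, 0, 8, 2],
    [0, 0, 1, 9],
]
for grade, metrics in enumerate(per_class_metrics(toy_cm), start=1):
    print("Grade", grade, {k: round(v, 3) for k, v in metrics.items()})
```

Note that the one-vs-rest accuracy of a single grade counts true negatives and is therefore usually higher than the overall multi-class accuracy, which is the sum of the diagonal divided by the total.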

For the Grade 3 OA cases, accuracy, precision, recall, and F1-score in both AP and lateral views are 100%: 95.45%, 100%: 83%, 100%: 91%, and 100%: 87% (DenseNet 201), respectively. Inception v3 and DenseNet 201 architectures obtained a maximum accuracy of 94.29%:88.41% and 100%:95.45%. ResNet 50 and DenseNet 201 attained a maximum recall rate with respect to AP views and lateral views of 100%, 84%, and 90%, respectively. DenseNet 201 offers a maximum precision rate and F1 rate in both AP and Lateral views of 100%:95% and 100%:92%, respectively.

For Grade 2 OA cases, DenseNet 201 achieved a maximum accuracy rate of 98.51% (AP view) and 96.97% (lateral view). ResNet 50 and DenseNet 201 obtained a maximum precision rate in the AP and lateral views of 88%:88% and 95%:95%, respectively. DenseNet 201 achieved the highest recall rate and F1-score of 100% (AP view):95% (lateral view) and 98% (AP view):95% (lateral view), respectively. For Grade 1 OA cases, DenseNet 201 achieved a maximum accuracy of 98.51% and 96.97% in the AP view and the lateral view, respectively. The ResNet 50 model obtained the maximum precision rate of 100% in both the AP view and the lateral view. Inception v3 and DenseNet 201 obtained a maximum recall rate of 92%, 94% (AP view), and 94% (lateral view), respectively. ResNet 50 and DenseNet 201 attained a maximum F1-score of 94%:97% (AP view) and 94%:94% (lateral view), respectively.

Figure 5a–f shows the accuracy and loss for each epoch of the training and validation datasets. For tibio-femoral knee osteoarthritis classification in the AP view, the training and validation accuracy of the DenseNet 201 model were over 95% at epoch 100, and the proposed model’s training and validation loss were both 0.5. The Inception v3 model demonstrated over 90% training accuracy and 80–85% validation accuracy; its training losses were below 0.5, and its validation losses were close to 2. The ResNet 50 model’s validation accuracy varied from 80 to 90%, whereas its training accuracy exceeded 90%; its validation losses were close to 2, but its training losses were less than 0.5. For tibio-femoral knee osteoarthritis classification in the lateral view, the training and validation accuracy of the DenseNet 201 model were over 70% at epoch 100, and the proposed model’s training and validation loss were 0.5. The Inception v3 model demonstrated over 80% training accuracy and 80–85% validation accuracy; its training losses were below 0.5, and its validation losses were close to 2. The ResNet 50 model’s validation accuracy varied from 80 to 90%, whereas its training accuracy exceeded 90%; its validation loss was more than 2, but its training loss was less than 0.5. Figure 7a–f displays the confusion matrices for the 3 CNN models (both AP view and lateral view) on the four-grade (Grade 1–4) dataset. The accuracy of the DenseNet 201 model is 98.507% (AP view):92.42% (lateral view); the accuracy of ResNet 50 is 87.5% (AP view):84.44% (lateral view); and the accuracy of the Inception v3 model is 85.71% (AP view):81.33% (lateral view).

The proposed model effectively localizes the region of interest (ROI) so that its severity can be analyzed. This localization allows medical experts to analyze the progression of knee osteoarthritis from both views. The use of a lateral view for the assessment of tibio-femoral knee joint OA has some drawbacks. First, it is very difficult to differentiate the lateral and medial compartments in the lateral view position, because of the sizes and shapes of the tibial plateaus and femoral condyles (lateral and medial). Second, the knee joint space can frequently appear noticeably narrowed on the lateral view due to knee flexion (30 degrees to 50 degrees), yet still exhibit significant joint space remaining in the same compartment on the AP view. This is because the viewing position determines most of the information available for analyzing tibio-femoral knee joint osteoarthritis. The AP view contains more information about tibiofemoral arthritis than the lateral view. The conclusion of this analysis is specific to the method used, and another approach might produce better results.

Conclusion

This study proposed a deep learning model for tibio-femoral knee osteoarthritis diagnosis. The main objective of the proposed model was to analyze the tibio-femoral knee osteoarthritis classification accuracy in both the AP view and the lateral view. The proposed system provided the first results for automatic localization and classification of tibio-femoral knee joint osteoarthritis from both the AP view and the lateral view. The proposed model performs multi-class classification of tibio-femoral knee osteoarthritis in order to evaluate the severity of OA. The experimental findings show the efficiency and viability of the proposed system in locating and classifying tibio-femoral osteoarthritis in both views. We hope that the outcomes open the way for effective field implementations. The proposed model could help physicians analyze the progression of KOA from different perspectives. An enhanced region proposal network with Faster RCNN improves the overall object localization and classification accuracy. A real-time dataset was used to assess the proposed model. Pre-processing of the real-time dataset consisted of scaling and normalization. DenseNet 201 was applied to the real-time dataset and compared with two other deep learning methods, ResNet 50 and Inception v3. The sensitivity, specificity, accuracy, precision, and F1-score of the DenseNet 201 model were 98.75%, 99.5%, 98.51%, 98.75%, and 98.75% for the AP view and 92.77%, 97.69%, 92.42%, 91.75%, and 92.0% for the lateral view, respectively. To our knowledge, no previous research has examined ROI detection of tibio-femoral knee joint osteoarthritis with respect to the lateral view. The proposed work will help analyze the progression of disorders from different perspectives. 
Based on our findings, additional investigations using the proposed approach to analyze knee joint osteoarthritis appear to be useful, and we plan to extend this work to locate, classify, and compare tibio-femoral knee joint osteoarthritis in different radiographic positions of the knee joint. The new method may improve the objectivity and reliability of tibio-femoral knee joint osteoarthritis assessment, which will greatly help arthritis research. In conclusion, the proposed approach may offer a precise and reliable marker of the severity of knee joint OA for use in both research and clinical analysis. We accept that the localization accuracy of the proposed model may vary for other approaches to tibio-femoral osteoarthritis diagnosis. The evaluation of the proposed model demonstrated that the results with fine-tuning are superior to those of other existing architectures. Nevertheless, our proposed model has a drawback: the time required for the training and testing phases. This drawback comes from the design of the model; therefore, in future work, we will simplify our model to shorten these phases.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author (MPR) on reasonable request.

Change history

03 September 2025

A Correction to this paper has been published: https://doi.org/10.1038/s41598-025-17772-x

References

Conaghan, P. G. et al. Impact and therapy of osteoarthritis: the arthritis care OA Nation 2012 survey. Clin. Rheumatol. https://doi.org/10.1007/s10067-014-2692-1 (2015).

Kellgren, J. H. & Lawrence, J. S. Radiological assessment of osteo-arthrosis. Ann. Rheum. Dis. https://doi.org/10.1136/ard.16.4.494 (1957).

Lin, J., Li, R., Kang, X. & Li, H. Risk factors for radiographic tibiofemoral knee osteoarthritis: the Wuchuan osteoarthritis study. Int. J. Rheumatol. https://doi.org/10.1155/2010/385826 (2010).

Demehri, S., Hafezi-Nejad, N. & Carrino, J. A. Conventional and novel imaging modalities in osteoarthritis: current state of the evidence. J. Curr. Opin. Rheumatol. https://doi.org/10.1097/BOR.0000000000000163 (2015).

Cibere, J. Do we need radiographs to diagnose osteoarthritis? Best Pract. Res. Clin. Rheumatol. https://doi.org/10.1016/j.berh.2005.08.001 (2006).

Antony, J., McGuinness, K., O’Connor, N. E. & Moran, K. Quantifying radiographic knee osteoarthritis severity using deep convolutional neural networks. In Proceedings of 23rd International Conference on Pattern Recognition. https://doi.org/10.1109/ICPR.2016.7899799 (2016).

Podsiadlo, P., Cicuttini, F. M., Wolski, M., Stachowiak, G. W. & Wluka, A. E. Trabecular bone texture detected by plain radiography is associated with an increased risk of knee replacement in patients with osteoarthritis: A 6-year prospective follow-up study. Osteoarthr. Cartil. https://doi.org/10.1016/j.joca.2013.10.017 (2014).

Shamir, L. et al. Early detection of radiographic knee osteoarthritis using computer-aided analysis. Osteoarthr. Cartil. https://doi.org/10.1016/j.joca.2009.04.010 (2009).

Stachowiak, G. W., Wolski, M., Woloszynski, T. & Podsiadlo, P. Detection and prediction of osteoarthritis in knee and hand joints based on the x-ray image analysis. J. Biosurface Biotribology. https://doi.org/10.1016/j.bsbt.2016.11.004 (2016).

Thomson, J., O’Neill, T., Felson, D. & Cootes, T. Automated shape and texture analysis for detection of osteoarthritis from radiographs of the knee. Int. Conf. Med. Image Comput. Computer-Assisted Intervention. https://doi.org/10.1007/978-3-319-24571-3_16 (2015).

Steno, P., Alsadoon, A., Prasad, P. W., Al-Dala’in, T. & Alsadoon, O. H. A novel enhanced region proposal network and modified loss function: threat object detection in secure screening using deep learning. J. Supercomputing. https://doi.org/10.1007/s11227-020-03418-4 (2020).

Shafizadegan, Z., Karimi, M. T., Shafizadegan, F. & Rezaeian, Z. S. Evaluation of ground reaction forces in patients with various severities of knee osteoarthritis. J. Mech. Med. Biology. https://doi.org/10.1142/S0219519416500032 (2016).

Segal, N. A. et al. Diagnostic performance of 3d standing Ct imaging for detection of knee osteoarthritis features. Phys. Sports Med. https://doi.org/10.1080/00913847.2015.1074854 (2015).

Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. https://doi.org/10.1109/TPAMI.2016.2577031 (2017).

Girshick, R., Donahue, J., Darrell, T. & Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. https://doi.org/10.1109/CVPR.2014.81 (2014).

Chen, Y. P., Li, Y. & Wang, G. An enhanced region proposal network for object detection using deep learning method. Plos One. https://doi.org/10.1371/journal.pone.0203897 (2018).

Zhao, Z. Q., Zheng, P., Xu, S. T. & Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Networks Learn. Syst. https://doi.org/10.1109/TNNLS.2018 (2019).

Cicuttini, F. M., Baker, J., Hart, D. J. & Spector, T. D. Association of pain with radiological changes in different compartments and views of the knee joint. Osteoarthr. Cartil. 4(2), 143–147 (1996).

Saleem, M., Farid, M. S., Saleem, S. & Khan, M. H. X-ray image analysis for automated knee osteoarthritis detection. J. Signal. Image Video Process. 14, 1079–1087. https://doi.org/10.1007/s11760-020-01645-z (2020).

Subramoniam, M. & Rajini, V. Local binary pattern approach to the classification of osteoarthritis in knee x-ray images. Asian J. Sci. Res. 6, 805–811. https://doi.org/10.3923/ajsr.2013.805.811 (2013).

Navale, D. I., Ravindra Hegadi, R. S. & Mendgudli, N. Block based texture analysis approach for knee osteoarthritis identification using SVM. In IEEE International WIE Conference on Electrical and Computer Engineering (WIECON-ECE), IEEE, Dhaka, Bangladesh, 338–341 https://doi.org/10.1109/WIECONECE.2015.7443932 (2015).

Antony, J., McGuinness, K., Moran, K. & O’Connor, N. E. Automatic detection of knee joints and quantification of knee osteoarthritis severity using convolutional neural networks. In Proceedings of the International Conference on Machine Learning and Data Mining in Pattern Recognition, 376–390. (Springer, 2017).

Tiulpin, A., Thevenot, J., Rahtu, E., Lehenkari, P. & Saarakkala, S. Automatic knee osteoarthritis diagnosis from plain radiographs: a deep learning-based approach. Sci. Rep. 8, 1727. https://doi.org/10.1038/s41598-018-20132-7 (2018).

Suresha, S., Kidzinski, L., Halilaj, E., Gold, G. E. & Delp, S. L. Automated staging of knee osteoarthritis severity using deep neural networks. Osteoarthr. Cartil. 26, S441. https://doi.org/10.1016/j.joca.2018.02.845 (2018).

Mengko, T. L., Wachjudi, G., Suksmono, A. & Danudirdjo, D. Automated detection of unimpaired joint space for knee osteoarthritis assessment. In Proceedings of 7th International Workshop on Enterprise Networking and Computing in Healthcare Industry. HEALTHCOM, Korea, 400–403. https://doi.org/10.1109/HEALTH.2005.1500491 (2005).

Liu, B., Luo, J. & Huang, H. Toward automatic quantification of knee osteoarthritis severity using improved faster R-CNN. Int. J. Comput. Assist. Radiol. Surg. 15, 457–466. https://doi.org/10.1007/s11548-019-02096-9 (2020).

Abd El-Ghany, S., Elmogy, M. & Abd El-Aziz, A. A. A fully automatic fine tuned deep learning model for knee osteoarthritis detection and progression analysis. Egypt. Inf. J. 24, 229–240. https://doi.org/10.1016/j.eij.2023.03.005 (2023).

Schiratti, J. B. et al. A deep learning method for predicting knee osteoarthritis radiographic progression from MRI. Arthritis Res. Ther. 23(1), 262 (2021).

Wang, Y., Wang, X., Gao, T., Du, L. & Liu, W. An automatic knee osteoarthritis diagnosis method based on deep learning: data from the osteoarthritis initiative. J. Healthc. Eng. 5586529 (2021).

Kondal, S. et al. Automatic grading of knee osteoarthritis on the Kellgren-Lawrence scale from radiographs using convolutional neural networks. In Advances in Deep Learning, Artificial Intelligence and Robotics. Lecture Notes in Networks and Systems 249, 163–173 (2022).

Kokkotis, C. et al. Explainable machine learning for knee osteoarthritis diagnosis based on a novel fuzzy feature selection methodology. Phys. Eng. Sci. Med. 45(1), 219–229 (2022).

Tolpadi, A. A., Lee, J., Pedoia, V. & Majumdar, S. Deep learning predicts total knee replacement from magnetic resonance images. Sci. Rep. 10(1), 6371 (2020).

Chen, P., Gao, L., Shi, X., Allen, K. & Yang, L. Fully automatic knee osteoarthritis severity grading using deep neural networks with a novel ordinal loss. Comput. Med. Imaging Graph. 75, 84–92. https://doi.org/10.1016/j.compmedimag.2019.06.002 (2019).

Abdullah, S. S. & Rajasekaran, M. P. Automatic detection and classification of knee osteoarthritis using deep learning approach. Radiol. Med. 127(4), 398–406. https://doi.org/10.1007/s11547-022-01476-7 (2022).

Acknowledgements

The authors thank Dr. Azeem (Orthopedic Surgeon) and Dr. Nizam (Orthopedic Surgeon) for their outstanding support and guidance in classifying the datasets. The authors also thank Dr. K. G. Srinivasan, M.D. (Radiology), KGS Scan Centre, and the Department of Biomedical Engineering, Kalasalingam Academy of Research and Education, Tamil Nadu, India, for allowing us to use the computational facilities available in the centre.

Author information

Contributions

Conception and design: SSA, MPR. Analysis and interpretation of the data: SSA, MPR, MJH, WKW, PKN. Drafting of the article: SSA, MPR, MJH, WKW, PKN. Critical revision of the article: SSA, MPR, MJH, WKW, PKN. Final approval of the article: MPR. Provision of study materials or patients: SSA. Statistical expertise: SSA, MPR. Administrative, technical support: SSA, MPR, MJH, WKW, PKN. Collection and assembly of data: SSA.

Ethics declarations

Consent to participate

A waiver for informed consent of the patients was provided by the Code of Ethics committee (CEC) constituted by the Kalasalingam Academy of Research and Education due to the retrospective and observational nature of our study.

Ethical approval

The experimental protocol for the study was approved by the Code of Ethics Committee (CEC) of Kalasalingam Academy of Research and Education (Reference: KARE/CEC/MoM/2024–2025/01 dated 20.07.2024). All procedures performed in this study involving human participants adhered to the ethical standards of the institution and were conducted in compliance with the principles outlined in the 1964 Helsinki Declaration. This ensures that the research upholds the highest ethical standards, safeguarding the rights, dignity, and well-being of all participants involved.

Informed consent

The Code of Ethics Committee (CEC) of Kalasalingam Academy of Research and Education waived the need for informed consent for this study, which uses a dataset of knee X-ray images (Reference: KARE/CEC/MoM/2024–2025/01 dated 20.07.2024). This decision is based on the fact that the study uses anonymized images of subjects and involves neither direct interaction with patients nor their identifying details.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this Article was revised: In the original version of this Article, Wai Kit Wong was omitted as a corresponding author. Correspondence and requests for materials should also be addressed to wkwong@mmu.edu.my.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Abdullah, S.S., Rajasekaran, M.P., Hossen, M.J. et al. Deep learning based classification of tibio-femoral knee osteoarthritis from lateral view knee joint X-ray images. Sci Rep 15, 21305 (2025). https://doi.org/10.1038/s41598-025-04869-6

DOI: https://doi.org/10.1038/s41598-025-04869-6